#SFT Solutions


Text

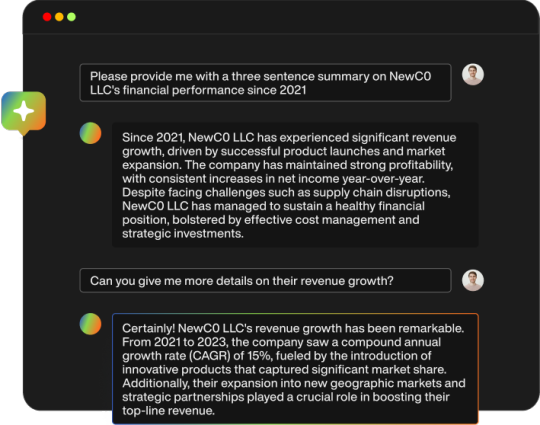

Bridging the AI-Human Gap: How Reinforcement Learning from Human Feedback (RLHF) is Revolutionizing Smarter Machines

Imagine training a brilliant student who aces every exam but still struggles to navigate real-world conversations. This is the paradox of traditional artificial intelligence: models can process data at lightning speed, yet often fail to align with human intuition, ethics, or nuance. The solution? Reinforcement Learning from Human Feedback (RLHF).

What is RLHF? (And Why Should You Care?)

Reinforcement Learning from Human Feedback (RLHF) is a hybrid training method where AI models learn not just from raw data, but from human-guided feedback. Think of it like teaching a child: instead of memorizing textbooks, the child learns by trying, making mistakes, and adapting based on a teacher’s corrections. Here’s how it works in practice:

Initial Training: An AI model learns from a dataset (e.g., customer service logs).

Human Feedback Loop: Humans evaluate the model’s outputs, ranking responses as “helpful,” “irrelevant,” or “harmful.”

Iterative Refinement: The model adjusts its behavior to prioritize human-preferred outcomes.
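The feedback loop above is usually implemented by turning human rankings into pairwise comparisons and training a reward model on them, which then guides the model's updates. The following is a minimal sketch of only the reward-modeling step, assuming a Bradley-Terry style pairwise loss; the "reward model" here is a hypothetical table with one learnable score per response label rather than a neural network, and the labels are illustrative.

```python
import math
from itertools import combinations

def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x))
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def ranking_to_pairs(ranking):
    # A best-first ranking yields every (preferred, rejected) pair
    return list(combinations(ranking, 2))

def pairwise_loss(r_preferred: float, r_rejected: float) -> float:
    # Bradley-Terry style loss: -log sigmoid(r_preferred - r_rejected)
    return softplus(-(r_preferred - r_rejected))

def fit_scores(rankings, steps=200, lr=0.1):
    # Toy "reward model": one learnable score per response label,
    # updated by gradient descent on the pairwise loss
    pairs = [pair for ranking in rankings for pair in ranking_to_pairs(ranking)]
    scores = {label: 0.0 for pair in pairs for label in pair}
    for _ in range(steps):
        for preferred, rejected in pairs:
            # Negative gradient of the loss w.r.t. the margin: 1 - sigmoid(margin)
            step = lr * (1.0 - sigmoid(scores[preferred] - scores[rejected]))
            scores[preferred] += step
            scores[rejected] -= step
    return scores

# Hypothetical labeler ranking, best first
scores = fit_scores([["helpful", "irrelevant", "harmful"]])
print(sorted(scores, key=scores.get, reverse=True))
```

After training, sorting labels by score recovers the human ranking. A real pipeline would then optimize the model against this learned reward, typically with a KL penalty that keeps it close to the original model.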

Why it matters:

Can reduce harmful or biased outputs by incorporating human judgment.

Creates systems that adapt to cultural, linguistic, and situational nuances.

Builds trust with end-users through relatable, context-aware interactions.

RLHF in Action: Real-World Wins

1. Smarter Chatbots That Actually Solve Problems

Generic chatbots often frustrate users with scripted replies. RLHF changes this. For example, a healthcare company used RLHF to train a support bot using feedback from doctors and patients. The result? A 50% drop in escalations to human agents, as the bot learned to prioritize empathetic, medically accurate responses.

2. Content Moderation Without the Blind Spots

Social platforms struggle to balance free speech and safety. RLHF-trained models can flag harmful content more accurately by learning from moderators' nuanced decisions. One platform reduced false positives by 30% after integrating human feedback on context (e.g., distinguishing satire from hate speech).

3. Personalized Recommendations That Feel Human

Streaming services using RLHF don't just suggest content based on your watch history; they adapt to your mood.

The Hidden Challenges of RLHF (And How to Solve Them)

While RLHF is powerful, it's not plug-and-play. Common pitfalls include:

Feedback Bias: If human evaluators lack diversity, models inherit their blind spots.

Scalability: Collecting high-quality feedback at scale is resource-intensive.

Overfitting: Models may become too tailored to specific groups, losing global applicability.

The Fix? Partner with experts who specialize in RLHF infrastructure. Companies like Apex Data Sciences design custom feedback pipelines, source diverse human evaluators, and balance precision with scalability.

Conclusion: Ready to Humanize Your AI?

RLHF isn't just a technical upgrade; it's a philosophical shift. It acknowledges that the "perfect" AI isn't the one with the highest accuracy score, but the one that resonates with the people it serves. If you're building AI systems that need to understand as well as compute, explore how Apex Data Sciences' RLHF services can help. Their end-to-end solutions ensure your models learn not just from data, but from the human experiences that data represents.

#RLHF#RLHF Services#AI#Reinforcement Learning from Human Feedback#Content Moderation#Supervised Fine-Tuning#SFT#SFT Solutions#RLHF & SFT Solutions

0 notes

Text

i saw a post earlier that really stood out to me and i want other people to have an understanding too because it's really important.

as an adult, you should know that using "minors dni" or "age in bio" is not the correct way to keep minors off your blog. you are essentially building a wall out of toilet paper and then crying about it when someone breaks through. instead, use bricks.

if those are the boundaries you want to set, you need to take the necessary steps to ensure they are respected when it comes to who sees your content. this is especially important when you are writing nsft content about a fandom that is mostly teenagers and some young adults, like mha or jjk. a good solution is to post only sft content on your blog to promote your writing, and put your nsft content behind a wall that requires age verification, like an ID of some kind. the downside is that you will not be as popular as before, because the minors you were trying to keep out are no longer interacting with your page. this brings me to my final point: some of you don't actually care at all, and are pretending that you do.

some of you care more about the amount of notes and fame that you get, and you KNOW that it's mostly minors reading your content, but you want to seem like a saint, and so you PRETEND to care about it to keep up a good image. that is a problem.

The answer is simple. Build a brick wall; take the necessary steps to keep minors out. In this situation, you do not get to cry about your boundaries being broken when you don't do anything to stop them from being crossed. You are responsible.

#bnha smut#mha smut#mha x reader#jjk smut#jjk x reader#bakugou smut#todoroki smut#nanami smut#toji smut#sukuna smut#nonsense

82 notes


Text

Post-Training Pioneers: The Tulu 3 Project’s Contributions to LLM Development

The intricacies of large language models (LLMs) are explored in depth in this conversation between Nathan Lambert and the host, with a particular focus on the Tulu 3 project and its post-training techniques. The project, led by the Allen Institute for AI (Ai2), seeks to improve on Meta's Llama 3.1 models through post-training, prioritizing transparency and open-sourcing in the process. Its multifaceted approach navigates the complexities of LLM development, shedding light on both the challenges and the opportunities inherent in this pursuit.

The methodology underlying the Tulu 3 project is characterized by a layered strategy, commencing with instruction tuning via Supervised Fine-Tuning (SFT). This phase involves the judicious integration of capability-focused datasets, designed to augment the model's performance without compromising its versatility across a range of downstream tasks. Furthermore, the incorporation of general chat data enhances the model's broader language understanding, underscoring the project's commitment to holistic development.
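One simple way to combine capability-focused datasets with general chat data is to sample each training example's source in proportion to a mixing weight. The sketch below assumes that approach; the dataset names, prompts, and weights are hypothetical and do not reflect Tulu 3's actual mixture.

```python
import random

def mix_datasets(datasets, weights, n_samples, seed=0):
    """Sample an SFT training mix, drawing each example's source in proportion to weights."""
    rng = random.Random(seed)
    names = list(datasets)
    source_weights = [weights[name] for name in names]
    mix = []
    for _ in range(n_samples):
        source = rng.choices(names, weights=source_weights, k=1)[0]
        mix.append((source, rng.choice(datasets[source])))
    return mix

# Hypothetical capability-focused sets plus general chat data
datasets = {
    "math": ["Solve 2x + 3 = 7.", "What is 15% of 80?"],
    "code": ["Write a function that reverses a string."],
    "general_chat": ["Suggest a name for a bakery.", "Summarize this paragraph."],
}
weights = {"math": 0.3, "code": 0.3, "general_chat": 0.4}
for source, prompt in mix_datasets(datasets, weights, n_samples=5):
    print(source, "|", prompt)
```

Raising a weight increases how often that capability appears in training; a weight of zero excludes the dataset entirely.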

Preference tuning, carried out through both Direct Preference Optimization (DPO) and Reinforcement Learning (RL), forms the next layer of the project's methodology. Notably, using LLMs as judges, a choice driven by budget constraints, introduces a distinct bias profile that differs from that of human judges. This divergence raises important questions about fairness in downstream applications and highlights the need for nuanced evaluation methods.
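For reference, DPO skips the separate reward model: it trains the policy directly on (chosen, rejected) response pairs, using log-probability ratios against a frozen reference model as an implicit reward. A minimal per-pair sketch of the standard DPO loss follows, assuming the summed sequence log-probabilities have already been computed; `beta = 0.1` is an illustrative default, not a value taken from Tulu 3.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair, given summed log-probabilities of each response."""
    # Implicit rewards: beta * log(pi_theta(y | x) / pi_ref(y | x))
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    margin = chosen_reward - rejected_reward
    # -log sigmoid(margin), computed as a numerically stable softplus(-margin)
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))

# Policy identical to the reference: the loss equals log(2)
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
# Policy has shifted probability toward the chosen response: the loss drops below log(2)
print(dpo_loss(-10.0, -17.0, -12.0, -15.0))
```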

The Tulu 3 project's emphasis on transparency and open-sourcing embodies a paradigmatic shift in AI research, one that prioritizes communal learning and collaborative advancement. By making its data and methods openly accessible, the project catalyzes a broader conversation about the future of LLM development, encouraging the coalescence of diverse perspectives and expertise. This inclusive approach is poised to democratize access to cutting-edge AI technologies, potentially mitigating the disparities between open and closed research environments.

The project's findings and methodologies also underscore the escalating complexity of post-training techniques, which now extend far beyond the realm of simple SFT and DPO. This evolution necessitates a concomitant shift in how researchers approach LLM development, with a heightened emphasis on adaptability, innovation, and interdisciplinary collaboration. Future research directions, including the fine-tuning of instruct models for specialized applications and the systematic exploration of preference tuning's iterative potential, promise to further enrich the landscape of LLM research.

The Tulu 3 project serves as a beacon for the transformative potential of open, collaborative AI research, illuminating pathways for innovation that prioritize both technical excellence and societal responsibility. As the AI community continues to navigate the intricacies of LLM development, the lessons gleaned from this project will remain integral to the pursuit of more sophisticated, equitable, and transparent AI solutions.

Nathan Lambert: Post-training Techniques for Large Language Models (The Cognitive Revolution, November 2024)


Tuesday, November 26, 2024

#artificial intelligence#ai research#large language models#llm development#post-training techniques#natural language processing#nlp#open source ai#transparency in ai#ai innovation#language model fine-tuning#reinforcement learning#deep learning#ai collaboration#accessible ai#interview#ai assisted writing#machine art#Youtube

3 notes


Text

Outdoor SS Letter Sign Board Project in Dhaka BD

Elevate your brand's visibility in Dhaka, Bangladesh with our outdoor SS letter sign board projects. Crafted for durability and style, our signage solutions captivate audiences. Explore customizable options tailored to your business needs, and contact us for high-quality outdoor SS letter sign boards that make a lasting impression.

adkey Limited (LED Sign BD) is a leading signboard and advertising agency and a provider of digital LED electronic billboards, signboards, and nameplates in Dhaka, Bangladesh. Our products and services include:

LED sign boards for indoor and outdoor use, with LED sign board prices for Bangladesh

Neon signs and neon sign boards (priced per sft)

Acrylic letter signage, acrylic LED sign boards, and acrylic letters with LED light

SS letters, SS top letters, and acrylic neon letters

LED profile boxes and aluminum profile boxes

Shop signs, vertical signboards, and nameplates

LED display boards, digital signboards, LED billboards, outdoor display banners, and balloon displays

LED lighting: LED bulbs, tube lights, flood lights, down lights, backup lights, special decorative lights, indoor smart lighting, and outdoor LED lights, including Philips and Walton LED lights

Related questions: What is the meaning of LED light? What is an LED light used for? What is the price of a 10 watt LED light in Bangladesh? What is the full name of LED? How do you make an LED sign board?

Two Year Free Services with Materials Warranty. Contact us for more information: Cell: 01787-664525 To Visit Our Web Page: https://adkey.com.bd/ https://adkey.com.bd/shop/ https://adkey.com.bd/blog/ https://adkey.com.bd/portfolio/ https://adkey.com.bd/about-us/ https://adkey.com.bd/contact-us/ https://adkey.com.bd/signage-types/ https://adkey.com.bd/services/ https://www.facebook.com/adkeyLimited/ https://www.facebook.com/profile.php?id=100085257639369 https://www.facebook.com/neonsignbd https://medium.com/@ledsignsbd https://medium.com/@shopsignbd

#acrylic_module_light #neon_signage #shop_sign_bd #led_sign_bd #neon_sign_bd #nameplate_bd #led_sign_board #neon_sign_board #led_display_board #aluminum_profile_box #led_light #neon_light #shop_sign_board #lighting_sign_board #billboard_bd #profile_box_bd #ss_top_letter #letters #acrylic #acrylic_sign_board_price_in_bangladesh #backlit_sign_board_bd #bell_sign_bd #dhaka_sign_bd #sign_makers_bd #ss_sign_board_bd #moving_display_bd #aluminum_profile_box_bd #led_signage #led_sign_board_price_in_bd #neon_sign_board_price_in_bd #digital_sign_board_price_in_bd #name_plate_design_for_home #nameplate_price_in_bangladesh

2 notes


Text

Discover Your Dream Home – Luxury Villas for Sale in Dundigal Hyderabad

Are you searching for Luxury villas for sale in Dundigal Hyderabad? These stunning homes offer an unmatched blend of sophistication, modern architecture, and premium amenities, making them the best villas in Hyderabad for sale. Whether you are looking for a comfortable residence or a high-value investment, these premium luxury villas at Dundigal Hyderabad provide the perfect solution.

Elegant Designs & Spacious Layouts

These Luxury villas for sale in Hyderabad are thoughtfully designed to maximize space, comfort, and elegance. The available sizes include:

167 Sq. Yds (East - 2360 Sft & West - 2284 Sft)

183 Sq. Yds (East - 2660 Sft & West - 2565 Sft)

192 Sq. Yds (East - 2888 Sft & West - 2702 Sft)

200 Sq. Yds (East - 2958 Sft & West - 2876 Sft)

Each villa is crafted with high-end finishes, contemporary interiors, and spacious living areas to enhance your lifestyle.

World-Class Amenities for a Luxurious Lifestyle

These Villas for sale in Dundigal Hyderabad come with a range of premium amenities, including:

A state-of-the-art clubhouse with wellness and fitness facilities

Landscaped gardens and serene green spaces

Swimming pool for relaxation and leisure

Dedicated sports courts for tennis, badminton, and basketball

Cycling and jogging tracks for health enthusiasts

Exclusive play zones for children

Prime Location with Seamless Connectivity

Located in one of Hyderabad’s most promising neighborhoods, these Dundigal Hyderabad luxury villas for sale provide excellent connectivity to key destinations:

Oakridge International School – 18 minutes away

Arundhathi Hospital – 8 minutes away

IT SEZ Electronic Park – 5 minutes away

ORR Dundigal – Just 500 meters away

Why Choose These Luxury Villas?

Strategic Location – Easy access to business districts, hospitals, and schools.

Modern Infrastructure – High-end amenities and thoughtfully planned designs.

Strong Investment Potential – The demand for Luxury villas for sale in Hyderabad continues to grow, ensuring high returns.

Gated Community – Ensuring safety, security, and an exclusive lifestyle.

Premium Living Experience – Designed for elegance, comfort, and functionality.

Conclusion

These Luxury villas for sale in Dundigal Hyderabad offer a unique opportunity to own a dream home in a rapidly developing area. If you are looking for the best villas in Hyderabad for sale, now is the time to invest. Book your site visit today and step into a world of elegance and luxury!

0 notes

Text

DeepSeek-R1: A New Era in AI Reasoning

DeepSeek, a Chinese AI lab known for a steady stream of innovations, has made a strong impression on the world of artificial intelligence. Fresh off the success of its free and open-source model DeepSeek-V3, the lab has now released DeepSeek-R1, a powerful reasoning LLM. The model performs extremely well, but what truly sets DeepSeek-R1 apart in the AI landscape is its low cost and accessibility.

What is DeepSeek-R1?

DeepSeek-R1 is a next-generation AI model built specifically for complex reasoning tasks. It uses a mixture-of-experts architecture and shows human-like problem-solving abilities, with performance comparable to OpenAI's o1 model in mathematics, coding, and general knowledge, among other areas. A key highlight is its development approach. Conventional pipelines begin with supervised fine-tuning before any reinforcement learning; DeepSeek's precursor model, DeepSeek-R1-Zero, was instead trained with RL alone, applied directly to the base model. This removes the need for extensive labeled data and allowed the model to develop abilities such as the following:

Self-verification: The ability to check its own output for correctness.

Reflection: Learning from and correcting its own mistakes.

Chain-of-thought (CoT) reasoning: Solving multi-step problems logically and efficiently.

This proof of concept shows that reinforcement learning alone, without a supervised fine-tuning stage, can be enough to develop reasoning capabilities in an LLM.
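DeepSeek's R1 report describes training R1-Zero with GRPO (Group Relative Policy Optimization), using rule-based rewards for answer accuracy and output format instead of a learned reward model. The sketch below shows those two ingredients under stated assumptions: the `<think>`/`<answer>` tags and the 1-point reward values are illustrative, and the policy-gradient update that consumes the advantages is omitted.

```python
import re
import statistics

def rule_based_reward(completion: str, reference_answer: str) -> float:
    """Illustrative reward: +1 for using the think/answer format, +1 for a correct answer."""
    reward = 0.0
    if re.search(r"<think>.+?</think>", completion, flags=re.DOTALL):
        reward += 1.0  # format reward
    match = re.search(r"<answer>(.*?)</answer>", completion, flags=re.DOTALL)
    if match and match.group(1).strip() == reference_answer:
        reward += 1.0  # accuracy reward, checked by a simple rule rather than a learned model
    return reward

def group_relative_advantages(rewards, eps=1e-6):
    """GRPO-style advantage: each sample's reward normalized against its own group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

# A group of sampled completions for the prompt "What is 2 + 3?"
group = [
    "<think>2 + 3 = 5</think><answer>5</answer>",
    "<think>2 + 3 = 6</think><answer>6</answer>",
    "<answer>5</answer>",
    "The answer is 5.",
]
rewards = [rule_based_reward(c, "5") for c in group]
print(rewards)
print([round(a, 2) for a in group_relative_advantages(rewards)])
```

Completions that beat their group's average get a positive advantage and are reinforced; those below average are discouraged, with no separate critic network needed.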

Performance Benchmarks

DeepSeek-R1 performs strongly across multiple benchmarks, matching and at times exceeding competing models:

1. Mathematics

AIME 2024: Scored 79.8% (Pass@1), on par with OpenAI o1.

MATH-500: Reached 97.3% accuracy, setting a new standard for mathematical problem solving.

2. Coding

Codeforces: Ranked in the 96.3rd percentile of human participants, reflecting expert-level coding ability.

3. General Knowledge

MMLU: 90.8% accuracy, demonstrating broad general knowledge.

GPQA Diamond: 71.5%, showing strong performance on complex question answering.

4. Writing and Question-Answering

AlpacaEval 2.0: 87.6% length-controlled win rate, indicating a sophisticated ability to understand and answer questions.
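Pass@1 is the probability that a single sampled answer is correct. A common way to estimate pass@k from n samples per problem, c of them correct, is the unbiased estimator below; for k = 1 it reduces to c / n. Whether DeepSeek's reported figures were computed exactly this way is an assumption here, and the sample counts in the example are hypothetical.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: n samples drawn, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_pass_at_k(results, k=1):
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# Hypothetical: 3 problems, 16 samples each, with 16, 8, and 0 correct
print(benchmark_pass_at_k([(16, 16), (16, 8), (16, 0)], k=1))
```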

Use Cases of DeepSeek-R1

DeepSeek-R1 has many uses across sectors and fields, including:

1. Education and Tutoring: With its strong reasoning skills, DeepSeek-R1 can power educational sites and tutoring software, helping students work through tough mathematical and logical problems for a better learning process.

2. Software Development: Its strong performance on coding benchmarks makes the model a robust assistant for code generation, debugging, and optimization, saving developers time and boosting productivity.

3. Research and Academia: DeepSeek-R1 shines in long-context understanding and question answering, making it helpful for researchers and academics in analysis, hypothesis testing, and literature review.

4. Model Development: DeepSeek-R1 can generate high-quality reasoning data for training smaller distilled models. These distilled models retain strong reasoning capabilities while being far less computationally intensive, opening opportunities for smaller organizations with limited resources.
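Distilled models are typically produced by fine-tuning a smaller model with SFT on reasoning traces generated by the larger one. The sketch below shows one assumed way to build such a dataset, keeping only traces whose final answer passes a check (rejection sampling); `teacher_generate` and `is_correct` are hypothetical callables, stubbed here with a lookup table instead of a real model.

```python
def build_distillation_set(problems, teacher_generate, is_correct, max_tries=4):
    """Collect SFT examples from a teacher, keeping only traces that reach a verified answer."""
    dataset = []
    for problem in problems:
        for _ in range(max_tries):
            trace = teacher_generate(problem["question"])
            if is_correct(trace, problem["answer"]):
                dataset.append({"prompt": problem["question"], "completion": trace})
                break  # keep at most one accepted trace per problem in this sketch
    return dataset

# Stand-in teacher (hypothetical): a lookup table instead of a real reasoning model
canned = {
    "2+3": "<think>2 plus 3 is 5</think><answer>5</answer>",
    "4*2": "<think>4 times 2 is 9</think><answer>9</answer>",
}

def fake_teacher(question):
    return canned[question]

def check(trace, answer):
    return trace.endswith(f"<answer>{answer}</answer>")

problems = [{"question": "2+3", "answer": "5"}, {"question": "4*2", "answer": "8"}]
print(build_distillation_set(problems, fake_teacher, check))
```

The incorrect "4*2" trace is filtered out, so the smaller model is only trained to imitate reasoning that reached a verified answer.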

Revolutionary Training Pipeline

One of DeepSeek's innovations is a structured, efficient training pipeline that includes:

1. Two RL stages: These focus on discovering improved reasoning patterns and aligning the model's outputs with human preferences.

2. Two SFT stages: These seed the model's reasoning and non-reasoning capabilities, making it versatile and well-rounded.

This approach lets DeepSeek-R1 compete with, and on some reasoning tasks outperform, existing models while remaining cost-effective.

Open Source: Democratizing AI

As a commitment to collaboration and transparency, DeepSeek has made DeepSeek-R1 open source, so researchers and developers can inspect, modify, or deploy the model for their needs. DeepSeek also offers an API, which makes it easier to integrate the model into applications.

Why DeepSeek-R1 is a Game-Changer

DeepSeek-R1 is more than just an AI model; it’s a step forward in the development of AI reasoning. It offers performance, cost-effectiveness, and scalability to change the world and democratize access to advanced AI tools. As a coding assistant for developers, a reliable tutoring tool for educators, or a powerful analytical tool for researchers, DeepSeek-R1 is for everyone. DeepSeek-R1, with its pioneering approach and remarkable results, has set a new standard for AI innovation in the pursuit of a more intelligent and accessible future.

0 notes

Text

This is outside of my normal stuff but I need to rant. I was watching 3 body problem (yes, I'm a geek and gamer too, I'm also very smart. As I said, I'm a jack of all trades but back to the rant) now in episode 7, we find out that the aliens have interdimensional space folding technology.

Now I know a lot of you aren't going to understand what that means so I'll explain it. Space folding tech (SFT) basically takes elements that are interdimensional and brings them into 3 dimensional space, or takes 3 dimensional objects and makes them bigger. Basic shit for someone who can open thresholds (don't ask!).

Now the problem I have is that they are dealing with a three body star system (basically the alien home world is stuck between three stars each affecting the planet in a different way) while having the technology to fold space. Which means they have three possible solutions to their problem. We start with the hardest first.

Solution 1) Resonance stabilisation of the solar system. If you have the ability to fold space you can do this. Basically what you do is you create a combined and shared electromagnetic resonance between the solar bodies, forcing them all into a stabilised orbital system with each other. Again, if you have space fold tech you have the ability to unlock dimensions, which means you have the capability to create high level resonance fields.

Solution 2) Use the space folding tech to remove the two other suns. You can shrink and expand 3 dimensional matter, so shrink the suns or just remove them from this reality through the interdimensional folding system.

Solution 3) The easiest of them all. Create an electromagnetic shield that strengthens when it is exposed to high degrees of solar energy. You know, like how our own planet does with its own electromagnetic bubble. So what they could do is create a secondary shield (again, not that hard and no I'm not going to explain how to do it. I'm just going to say it would be dangerous if I do) and cover the planet in that to increase the planet's overall solar radiation defense.

Okay, rant over. Yes, I'm that smart. Yes, all options are a valid solution to the problem and are capable of being implemented by a species with that technology level. No, I'm not a scientist. I like being a craftsman but I am a very bored person with many, many interests. But now some of you might understand why I post what I post.

Fyi ladies, I know my way around your cunts probably better then you do, but it also means I know where every pleasure spot is and trust me, l use that knowledge to the highest levels. Bambi legging is my favourite thing to do after all. 😉😉

1 note


Text

AMD OLMo 1B Language Models Performance In Benchmarks

The First AMD 1B Language Models Are Introduced: AMD OLMo.

Introduction

Recent discussions have focused on the fast development of artificial intelligence technology, notably large language models (LLMs). From ChatGPT to GPT-4 and Llama, these language models have excelled in natural language processing, creation, interpretation, and reasoning. In keeping with AMD’s history of sharing code and models to foster community progress, we are thrilled to present AMD OLMo, the first set of completely open 1 billion parameter language models.

Why Build Your Own Language Models

Pre-training and fine-tuning your own LLM lets you incorporate domain-specific knowledge and better align the model with particular use cases. With this approach, businesses can tailor the model's architecture and training procedure to their needs, striking a balance between scalability and specialization that off-the-shelf models may not offer. As demand for personalized AI solutions keeps rising, the ability to pre-train LLMs opens up new possibilities for innovation and product differentiation across sectors.

The AMD OLMo series consists of 1 billion parameter language models trained in-house from scratch on a cluster of AMD Instinct MI250 GPUs, using 1.3 trillion tokens. In keeping with the goal of promoting accessible AI research, AMD has released the checkpoints for the first set of AMD OLMo models and open-sourced all of its training details.

This project enables a broad community of academics, developers, and consumers to investigate, use, and train cutting-edge large language models. By showcasing AMD Instinct GPUs' capabilities in demanding AI workloads, AMD aims to demonstrate its ability to run large-scale multi-node LM training jobs with trillions of tokens and to achieve better reasoning and instruction-following performance than other fully open LMs of a similar size.

Furthermore, the community can use the AMD Ryzen AI Software to run these models on AMD Ryzen AI PCs with Neural Processing Units (NPUs), enabling easy local access with better privacy, efficient AI inference, and lower power consumption.

Unveiling AMD OLMo Language Models

AMD OLMo is a set of 1 billion parameter language models pre-trained on 1.3 trillion tokens using 16 nodes, each with four (4) AMD Instinct MI250 GPUs. AMD is releasing three (3) checkpoints corresponding to the different training phases, along with comprehensive reproduction instructions:

AMD OLMo 1B: Pre-trained on 1.3 trillion tokens from a subset of Dolma v1.7.

AMD OLMo 1B SFT: Supervised fine-tuning (SFT) of AMD OLMo 1B, first on the Tulu V2 dataset and then, in a second phase, on the OpenHermes-2.5, WebInstructSub, and Code-Feedback datasets.

AMD OLMo 1B SFT DPO: Aligned with human preferences via Direct Preference Optimization (DPO) on the UltraFeedback dataset.

With a few significant exceptions, AMD OLMo 1B follows the model architecture and training configuration of the fully open-source 1 billion parameter version of OLMo. AMD pre-trains on fewer than half the tokens used for OLMo-1B (effectively halving the compute budget while maintaining comparable performance) and, to improve general reasoning, instruction-following, and chat capabilities, adds post-training consisting of two-phase SFT and DPO alignment (OLMo-1B does not include any post-training steps).
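The link between fewer tokens and a smaller compute budget follows from a widely used rule of thumb: training compute for a dense transformer is roughly 6 × parameters × training tokens, so at a fixed model size compute scales linearly with tokens. A minimal sketch, assuming the nominal 1 billion parameters (the exact parameter count is not given in this post):

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    # Rule-of-thumb estimate for dense transformers: ~6 FLOPs per parameter per token
    return 6.0 * n_params * n_tokens

n_params = 1e9        # nominal "1 billion" parameters (assumption; exact count not stated here)
tokens_amd = 1.3e12   # AMD OLMo 1B pre-training tokens, from the text
print(f"{training_flops(n_params, tokens_amd):.2e} FLOPs")

# At a fixed model size, compute scales linearly with tokens,
# so training on half the tokens needs half the compute
ratio = training_flops(n_params, tokens_amd / 2) / training_flops(n_params, tokens_amd)
print(ratio)
```

This is only an approximation; it ignores attention-specific costs and hardware efficiency, but it captures why halving the tokens roughly halves the pre-training budget.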

For the two-phase SFT, AMD assembled a data mix of diverse, high-quality, publicly available instruction datasets. Overall, this training recipe produces a series of models that outperform comparable fully open-source models trained on publicly available data across a range of benchmarks.

AMD OLMo

The AMD OLMo models are decoder-only transformer language models trained with next-token prediction. The model card lists the main model architecture and training hyperparameters.
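Next-token prediction means the model learns to assign high probability to each token given only the tokens before it, and training minimizes the average cross-entropy of those predictions. The sketch below computes that objective for one sequence; `predict_next` is a hypothetical stand-in for the transformer, so this illustrates the loss, not the model.

```python
import math

def next_token_loss(token_ids, predict_next):
    """Average cross-entropy of predicting token t+1 from tokens[0..t] (causal, decoder-only)."""
    losses = []
    for t in range(len(token_ids) - 1):
        probs = predict_next(token_ids[: t + 1])  # the model sees only the prefix
        losses.append(-math.log(probs[token_ids[t + 1]]))
    return sum(losses) / len(losses)

VOCAB_SIZE = 4  # toy vocabulary

def uniform_model(prefix):
    # Hypothetical stand-in for a transformer: equal probability for every token
    return [1.0 / VOCAB_SIZE] * VOCAB_SIZE

# A uniform model scores about log(4), the worst case for a 4-token vocabulary
print(next_token_loss([0, 1, 2, 3], uniform_model))
```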

Data and Training Recipe

As shown in Figure 1, AMD trained the AMD OLMo series of models in three stages.

Stage 1: Pre-training

The pre-training stage trained the model on a large corpus of general-purpose text via next-token prediction, teaching it language structure and broad world knowledge. AMD selected 1.3 trillion tokens from the openly available Dolma v1.7 dataset.

Stage 2: Supervised Fine-tuning (SFT)

To give the model the ability to follow instructions, AMD then fine-tuned the pre-trained model on instruction datasets, in two phases:

Phase 1: The model is first fine-tuned on the TuluV2 dataset, a publicly available, high-quality instruction dataset of 0.66 billion tokens.

Phase 2: The model is then fine-tuned on a considerably larger instruction dataset, OpenHermes 2.5, to significantly improve its instruction-following capabilities. The Code-Feedback and WebInstructSub datasets are also used in this phase to improve performance in coding, science, and mathematical problem solving. Together, these datasets total around 7 billion tokens.

AMD ran several fine-tuning experiments with different dataset orderings across the two phases and found the sequence above most beneficial: a relatively small but high-quality dataset in Phase 1 lays a solid foundation, and a larger, more varied dataset combination in Phase 2 further extends the model's capabilities.
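A common SFT practice, assumed here rather than confirmed for AMD OLMo, is to compute the loss only on response tokens so the model learns to answer instructions rather than reproduce prompts. A minimal sketch with hypothetical token IDs; for clarity it ignores the one-position shift between inputs and targets that a causal LM applies.

```python
import math

def build_sft_example(prompt_ids, response_ids):
    # Prompt positions get a None label, so only response tokens are trained on
    input_ids = list(prompt_ids) + list(response_ids)
    labels = [None] * len(prompt_ids) + list(response_ids)
    return input_ids, labels

def masked_nll(target_probs, labels):
    # target_probs[i]: model probability of labels[i]; masked (None) positions are skipped
    terms = [-math.log(p) for p, label in zip(target_probs, labels) if label is not None]
    return sum(terms) / len(terms)

input_ids, labels = build_sft_example([101, 102, 103], [7, 8])
print(labels)  # [None, None, None, 7, 8]
print(masked_nll([0.01, 0.02, 0.03, 0.5, 0.5], labels))  # only the two response terms count
```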

Stage 3: Alignment

Finally, AMD used the UltraFeedback dataset, a large-scale, fine-grained, and diverse preference dataset, to further fine-tune the SFT model with Direct Preference Optimization (DPO). This improves model alignment and produces outputs more in line with human preferences and values.

Results

AMD compares the AMD OLMo models with other fully open-source models of comparable scale that have published their training code, model weights, and data. The pre-trained baselines used for comparison are TinyLLaMA-v1.1 (1.1B), MobiLLaMA-1B (1.2B), OLMo-1B-hf (1.2B), OLMo-1B-0724-hf (1.2B), and OpenELM-1_1B (1.1B).

To evaluate general reasoning ability, the pre-trained models are compared on a range of established benchmarks covering responsible AI, multi-task comprehension, and common sense reasoning, using the Language Model Evaluation Harness. Of the 11 benchmarks, GSM8k is evaluated in an 8-shot setting, BBH in a 3-shot setting, and the rest zero-shot.
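In an n-shot setting, n solved examples are placed before the test question so the model can infer the expected format. The Language Model Evaluation Harness builds these prompts itself; the sketch below only illustrates the idea with a hypothetical `Question:`/`Answer:` template, not the harness's actual GSM8k or BBH format.

```python
def build_k_shot_prompt(solved_examples, question, k):
    """Prefix k solved examples to the test question; k = 0 gives a zero-shot prompt."""
    shots = [f"Question: {q}\nAnswer: {a}" for q, a in solved_examples[:k]]
    return "\n\n".join(shots + [f"Question: {question}\nAnswer:"])

# Hypothetical solved examples; a real 8-shot GSM8k setup would use eight
examples = [("What is 3 + 4?", "7"), ("What is 10 - 6?", "4")]
print(build_k_shot_prompt(examples, "What is 5 * 6?", k=2))
```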

Results for AMD OLMo 1B:

Despite using less than half the pre-training compute budget, AMD OLMo 1B's average score on general reasoning tasks (48.77%) beats all other baseline models and is comparable to the latest OLMo-0724-hf model (49.3%).

Accuracy improvements on ARC-Easy (+6.36%), ARC-Challenge (+1.02%), and SciQ (+0.50%) benchmarks compared to the next best models.

To assess chat capabilities, AMD used TinyLlama-1.1B-Chat-v1.0, MobiLlama-1B-Chat, and OpenELM-1_1B-Instruct, the instruction-tuned chat counterparts of the pre-trained baselines. AlpacaEval was used to assess instruction-following skills and MT-Bench to assess multi-turn conversation skills, alongside the Language Model Evaluation Harness for common sense reasoning, multi-task comprehension, and responsible AI benchmarks.

Compared with previous instruction-tuned baselines, the fine-tuned and aligned models show the following:

Two-phase SFT improved the model's accuracy over the pre-trained checkpoint on almost all benchmarks, on average, including MMLU (+5.09%) and GSM8k (+15.32%).

AMD OLMo 1B SFT scores 18.2% on GSM8k, significantly better (+15.39%) than the next-best baseline model, TinyLlama-1.1B-Chat-v1.0, at 2.81%.
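The +15.39% GSM8k figure is an absolute difference in percentage points (18.2 minus 2.81), not a relative improvement. The small calculation below, using only the two scores quoted in this section, shows both readings; the helper names are illustrative.

```python
def point_gain(new: float, old: float) -> float:
    """Absolute difference between two accuracy scores, in percentage points."""
    return new - old

def relative_gain(new: float, old: float) -> float:
    """Relative improvement of `new` over `old`, as a percentage of `old`."""
    return (new - old) / old * 100.0

sft_gsm8k = 18.2         # AMD OLMo 1B SFT, GSM8k accuracy (%)
tinyllama_gsm8k = 2.81   # TinyLlama-1.1B-Chat-v1.0, GSM8k accuracy (%)

print(f"{point_gain(sft_gsm8k, tinyllama_gsm8k):.2f} points")        # 15.39 points
print(f"{relative_gain(sft_gsm8k, tinyllama_gsm8k):.0f}% relative")  # 548% relative
```

Reporting the absolute gap is the more conservative choice when the baseline score is this low, since the relative figure inflates quickly.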

The SFT model's average accuracy across standard benchmarks is at least 2.65% higher than that of the baseline chat models, and alignment (DPO) improves it by a further 0.46%.

The SFT model also outperforms the next-best model on the chat benchmarks AlpacaEval 2 (+2.29%) and MT-Bench (+0.97%).

Alignment training enables the AMD OLMo 1B SFT DPO model to perform on par with the other chat baselines on responsible AI evaluation benchmarks.

AMD OLMo models can also run inference on AMD Ryzen AI PCs equipped with Neural Processing Units (NPUs). AMD Ryzen AI Software makes it simple for developers to run generative AI models locally. Deploying such models locally on edge devices maximizes energy efficiency, protects data privacy, and enables a variety of AI applications, offering a secure and sustainable solution.

Conclusion

AMD OLMo models are trained with an end-to-end pipeline that runs on AMD Instinct GPUs and consists of a pre-training stage with 1.3 trillion tokens (half the pre-training compute budget of OLMo-1B), a two-phase supervised fine-tuning stage, and a DPO-based human preference alignment stage. The resulting models match or outperform other fully open models of similar size in general reasoning and chat capabilities, and perform on par with them on responsible AI benchmarks.

AMD also deployed the language model on AMD Ryzen AI PCs with NPUs, which can help enable a wide range of edge use cases. The main goal of publicly releasing the data, weights, training recipes, and code is to help developers reproduce this work and innovate further. AMD remains committed to delivering a steady stream of new AI models to the open-source community and looks forward to the advances that will come from these joint efforts.

Read more on Govindhtech.com

#AMDOLMo#LanguageModels#OLMo1B#OLMo#1BLanguageModels#AI#LLM#AMDRyzenAI#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

The SFT-1442 Bleached Knot Natural Silk Top Toupee: Elevating Men's Hair Solutions

3M™ Fully Automatic Water Softener SFT 200Fe - Reduces Water Hardness & Iron by 90%

Upgrade your home with the 3M™ Fully Automatic Water Softener SFT 200Fe, which reduces water hardness and iron content by 90%, protecting your skin and appliances. With 28L of high-performance food-grade resin and an automatic regeneration feature, this system is designed for hassle-free operation. For expert installation and support, trust Apex Water Solutions for the best water-softening solutions in Bangalore.

#water filters#water softeners#sand filters#water heaters#carbon filters#iron filter#solar water heaters#hard water solutions#iron filters#hard water softeners

Buy Ready-to-Move Villas in Kollur, Hyderabad

Looking to upgrade your lifestyle instantly? Our Ready-to-Move Villas in Kollur offer the perfect solution. Nestled in a vibrant community, these villas are designed for those seeking both comfort and convenience. Each home boasts modern architecture, spacious interiors, and access to a 20,000 SFT clubhouse packed with world-class amenities, ensuring every day feels like a vacation. Don’t miss your chance to own a piece of paradise in Kollur. Move in today and start living the dream!

#realestate#luxury house#luxury living#villas for sale in hyderabad#villas for sales#duplex homes in hyderabad#new homes in hyderabad

Recombinant UBE2D1 Protein

Catalog number: B2017676
Lot number: Batch Dependent
Expiration Date: Batch dependent
Amount: 50 µg
Molecular Weight or Concentration: 18.77 kDa
Supplied as: SOLUTION
Applications: a molecular tool for various biochemical applications
Storage: -80°C
Keywords: Ubiquitin conjugating enzyme E2 D1, E2(17)KB1, SFT, UBC4/5, UBCH5, UBCH5A
Grade: Biotechnology grade. All…

Google Introduces Gemma 2: Elevating AI Performance, Speed and Accessibility for Developers

New Post has been published on https://thedigitalinsider.com/google-introduces-gemma-2-elevating-ai-performance-speed-and-accessibility-for-developers/

Google has unveiled Gemma 2, the latest iteration of its open-source lightweight language models, available in 9 billion (9B) and 27 billion (27B) parameter sizes. This new version promises enhanced performance and faster inference compared to its predecessor, the Gemma model. Gemma 2, derived from Google’s Gemini models, is designed to be more accessible for researchers and developers, offering substantial improvements in speed and efficiency. Unlike the multimodal and multilingual Gemini models, Gemma 2 focuses solely on language processing. In this article, we’ll delve into the standout features and advancements of Gemma 2, comparing it with its predecessors and competitors in the field, highlighting its use cases and challenges.

Building Gemma 2

Like their predecessor, the Gemma 2 models are based on a decoder-only transformer architecture. The 27B variant is trained on 13 trillion tokens of mainly English data, while the 9B model uses 8 trillion tokens, and the 2.6B model is trained on 2 trillion tokens. These tokens come from a variety of sources, including web documents, code, and scientific articles. The models use the same tokenizer as Gemma 1 and Gemini, ensuring consistency in data processing.
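"Decoder-only" means each token can attend only to itself and to earlier tokens, which is what lets the model generate text left to right. The toy single-head attention below illustrates that causal mask in plain Python; it leaves out the learned projections, multiple heads, and every other detail of the actual Gemma 2 architecture, and the function name is hypothetical.

```python
import math
from typing import List

Vector = List[float]

def causal_self_attention(queries: List[Vector], keys: List[Vector],
                          values: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention with a causal mask."""
    dim = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        # Causal mask: position i may only look at positions 0..i.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(dim)
                  for j in range(i + 1)]
        # Numerically stable softmax over the visible positions only.
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Weighted sum of the visible value vectors.
        outputs.append([sum(w * values[j][d] for j, w in enumerate(weights))
                        for d in range(len(values[0]))])
    return outputs
```

Because position i never reads positions after i, appending new tokens leaves the outputs for earlier positions unchanged, which is what makes step-by-step generation possible.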

The smaller Gemma 2 models (2.6B and 9B) are pre-trained using a method called knowledge distillation, in which a model learns from the output probabilities of a larger, pre-trained model instead of from the next token alone; the 27B model is trained from scratch. After initial training, the models are fine-tuned through a process called instruction tuning. This starts with supervised fine-tuning (SFT) on a mix of synthetic and human-generated English text-only prompt-response pairs, followed by reinforcement learning from human feedback (RLHF) to improve overall performance.
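Distillation replaces the usual one-hot next-token target with the teacher model's full probability distribution over the vocabulary. A minimal per-token sketch follows, assuming plain Python lists of logits over a toy vocabulary; the helper names are hypothetical and this is not Gemma 2 training code.

```python
import math
from typing import List

def log_softmax(logits: List[float]) -> List[float]:
    """Log-probabilities from logits, shifted by the max for numerical stability."""
    top = max(logits)
    log_total = top + math.log(sum(math.exp(x - top) for x in logits))
    return [x - log_total for x in logits]

def softmax(logits: List[float]) -> List[float]:
    """Probabilities from logits."""
    return [math.exp(lp) for lp in log_softmax(logits)]

def distillation_loss(student_logits: List[float],
                      teacher_logits: List[float]) -> float:
    """Cross-entropy of the student against the teacher's soft targets.

    Minimizing this also minimizes KL(teacher || student), because the
    teacher's entropy does not depend on the student.
    """
    teacher_probs = softmax(teacher_logits)
    student_logp = log_softmax(student_logits)
    return -sum(p * lq for p, lq in zip(teacher_probs, student_logp))
```

Compared with a one-hot target, the soft targets also tell the student how plausible the other tokens are, which is the extra signal distillation provides.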

Gemma 2: Enhanced Performance and Efficiency Across Diverse Hardware

Gemma 2 not only outperforms Gemma 1 in performance but also competes effectively with models twice its size. It’s designed to operate efficiently across various hardware setups, including laptops, desktops, IoT devices, and mobile platforms. Specifically optimized for single GPUs and TPUs, Gemma 2 enhances the efficiency of its predecessor, especially on resource-constrained devices. For example, the 27B model excels at running inference on a single NVIDIA H100 Tensor Core GPU or TPU host, making it a cost-effective option for developers who need high performance without investing heavily in hardware.
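A rough back-of-the-envelope check shows why a single accelerator can hold the 27B model: in a 16-bit format such as bfloat16, each parameter takes 2 bytes. The sketch below counts weights only, ignoring the KV cache and activations, and assumes the 80 GB H100 variant and a nominal 27 billion parameters; both are simplifying assumptions rather than figures from the article.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the model weights, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9

H100_MEMORY_GB = 80    # assumption: the 80 GB H100 variant
NUM_PARAMS = 27e9      # assumption: nominal 27B parameter count

for precision, nbytes in [("float32", 4), ("bfloat16", 2), ("int8", 1)]:
    need = weight_memory_gb(NUM_PARAMS, nbytes)
    verdict = "fits" if need <= H100_MEMORY_GB else "does not fit"
    print(f"{precision}: {need:.0f} GB of weights, {verdict} in {H100_MEMORY_GB} GB")
```

In practice the KV cache for long contexts and larger batches also consumes memory, so the real headroom is smaller than this estimate suggests.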

Additionally, Gemma 2 offers developers enhanced tuning capabilities across a wide range of platforms and tools. Whether using cloud-based solutions like Google Cloud or popular platforms like Axolotl, Gemma 2 provides extensive fine-tuning options. Integration with platforms such as Hugging Face, NVIDIA TensorRT-LLM, and Google’s JAX and Keras allows researchers and developers to achieve optimal performance and efficient deployment across diverse hardware configurations.

Gemma 2 vs. Llama 3 70B

When comparing Gemma 2 to Llama 3 70B, both models stand out in the open-source language model category. Google researchers claim that Gemma 2 27B delivers performance comparable to Llama 3 70B despite being much smaller. Additionally, Gemma 2 9B consistently outperforms Llama 3 8B on various benchmarks covering language understanding, coding, and math problem solving.

One notable advantage of Gemma 2 over Meta’s Llama 3 is its handling of Indic languages. Gemma 2 excels due to its tokenizer, which is specifically designed for these languages and includes a large vocabulary of 256k tokens to capture linguistic nuances. On the other hand, Llama 3, despite supporting many languages, struggles with tokenization for Indic scripts due to limited vocabulary and training data. This gives Gemma 2 an edge in tasks involving Indic languages, making it a better choice for developers and researchers working in these areas.
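Why vocabulary coverage matters can be seen with a toy greedy longest-match tokenizer: when a script's common subwords are missing from the vocabulary, text falls back to many short pieces, making sequences longer and costlier to process. This is a simplified illustration with a made-up two-entry vocabulary, not Gemma 2's actual tokenizer, and the function name is hypothetical.

```python
from typing import List, Set

def greedy_tokenize(text: str, vocab: Set[str]) -> List[str]:
    """Split text by always taking the longest vocabulary entry at the current position.

    Characters not covered by any entry fall back to single-character tokens
    (real tokenizers typically fall back to bytes instead).
    """
    max_len = max((len(v) for v in vocab), default=1)
    tokens, i = [], 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a match is found.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab or size == 1:
                tokens.append(piece)
                i += size
                break
    return tokens

word = "नमस्ते"  # Hindi greeting
print(len(greedy_tokenize(word, set())))            # no coverage: one token per code point
print(len(greedy_tokenize(word, {"नमस्ते", "नम"})))  # whole word in vocabulary: 1 token
```

The same text costs several times more tokens without coverage, which affects both context usage and how well the model learns that script.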

Use Cases

Based on the specific characteristics of the Gemma 2 model and its benchmark performance, we have identified some practical use cases for the model.

Multilingual Assistants: Gemma 2’s specialized tokenizer for various languages, especially Indic languages, makes it an effective tool for developing multilingual assistants tailored to these language users. Whether seeking information in Hindi, creating educational materials in Urdu, marketing content in Arabic, or research articles in Bengali, Gemma 2 empowers creators with effective language generation tools. A real-world example of this use case is Navarasa, a multilingual assistant built on Gemma that supports nine Indian languages. Users can effortlessly produce content that resonates with regional audiences while adhering to specific linguistic norms and nuances.

Educational Tools: With its capability to solve math problems and understand complex language queries, Gemma 2 can be used to create intelligent tutoring systems and educational apps that provide personalized learning experiences.

Coding and Code Assistance: Gemma 2’s proficiency in computer coding benchmarks indicates its potential as a powerful tool for code generation, bug detection, and automated code reviews. Its ability to perform well on resource-constrained devices allows developers to integrate it seamlessly into their development environments.

Retrieval Augmented Generation (RAG): Gemma 2’s strong performance on text-based inference benchmarks makes it well-suited for developing RAG systems across various domains. It supports healthcare applications by synthesizing clinical information, assists legal AI systems in providing legal advice, enables the development of intelligent chatbots for customer support, and facilitates the creation of personalized education tools.
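The retrieval half of a RAG system can be sketched in a few lines: score stored passages against the query, then place the best matches in the prompt the model receives. This minimal sketch uses simple word overlap in place of the embedding search a production system would use; the passages, prompt wording, and function names are hypothetical, and the call to Gemma 2 itself is left out.

```python
import re
from typing import List, Set

def words(text: str) -> Set[str]:
    """Lowercase word set used for naive overlap scoring."""
    return set(re.findall(r"\w+", text.lower()))

def retrieve(query: str, passages: List[str], k: int = 2) -> List[str]:
    """Return the k passages sharing the most words with the query."""
    q = words(query)
    ranked = sorted(passages, key=lambda p: len(q & words(p)), reverse=True)
    return ranked[:k]

def build_rag_prompt(query: str, passages: List[str], k: int = 2) -> str:
    """Ground the model's answer in retrieved context rather than memory alone."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return (f"Answer using only the context below.\n\nContext:\n{context}\n\n"
            f"Question: {query}\nAnswer:")

# Hypothetical knowledge base for a customer-support chatbot.
docs = [
    "Refunds are issued within 14 days of a returned order.",
    "Our support line is open Monday to Friday.",
    "Orders ship from the central warehouse.",
]
print(build_rag_prompt("How many days until refunds are issued?", docs, k=1))
```

Swapping the overlap score for vector similarity changes only `retrieve`; the prompt-building step stays the same.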

Limitations and Challenges

While Gemma 2 showcases notable advancements, it also faces limitations and challenges primarily related to the quality and diversity of its training data. Despite its tokenizer supporting various languages, Gemma 2 lacks specific training for multilingual capabilities and requires fine-tuning to effectively handle other languages. The model performs well with clear, structured prompts but struggles with open-ended or complex tasks and subtle language nuances like sarcasm or figurative expressions. Its factual accuracy isn’t always reliable, potentially producing outdated or incorrect information, and it may lack common sense reasoning in certain contexts. While efforts have been made to address hallucinations, especially in sensitive areas like medical or CBRN scenarios, there’s still a risk of generating inaccurate information in less refined domains such as finance. Moreover, despite controls to prevent unethical content generation like hate speech or cybersecurity threats, there are ongoing risks of misuse in other domains. Lastly, Gemma 2 is solely text-based and does not support multimodal data processing.

The Bottom Line

Gemma 2 introduces notable advancements in open-source language models, enhancing performance and inference speed compared to its predecessor. It is well-suited for various hardware setups, making it accessible without significant hardware investments. However, challenges persist in handling nuanced language tasks and ensuring accuracy in complex scenarios. While beneficial for applications like legal advice and educational tools, developers should be mindful of its limitations in multilingual capabilities and potential issues with factual accuracy in sensitive contexts. Despite these considerations, Gemma 2 remains a valuable option for developers seeking reliable language processing solutions.

#Accessibility#Advice#ai#AI systems#applications#apps#architecture#Article#Articles#Artificial Intelligence#benchmarks#billion#bug#Building#Capture#chatbots#Cloud#code#code generation#coding#computer#content#creators#cybersecurity#cybersecurity threats#data#data processing#decoder#deployment#detection

SAP SuccessFactors

SAP SuccessFactors is a cloud-based human capital management (HCM) suite that supports core HR and payroll management, talent acquisition, HR analytics, and overall employee lifecycle processes. For more details, visit our official website.

Top 5 Budget-Friendly Air Coolers to Beat the Summer Heat!

Summer heat can be unbearable, making it essential to have a reliable cooling solution at home or office. Air coolers offer a budget-friendly alternative to air conditioning, providing efficient cooling without breaking the bank. In this article, we’ll explore the top 5 budget-friendly air coolers that can help you stay comfortable during the hottest months of the year.

Understanding the Importance of Budget-Friendly Cooling Solutions

In a world where energy costs continue to rise, investing in cost-effective cooling solutions becomes paramount. Budget-friendly air coolers not only provide relief from the heat but also help conserve energy and reduce utility bills.

Factors to Consider When Choosing an Air Cooler

Before diving into our top picks, it’s essential to understand the key factors to consider when selecting an air cooler. Factors such as cooling capacity, energy efficiency, size, and additional features play a crucial role in determining the effectiveness and suitability of an air cooler for your needs.

Cooling Capacity

The cooling capacity of an air cooler determines its effectiveness in cooling a room. Look for models with higher airflow and larger water tank capacity for better cooling performance.

Energy Efficiency

Opt for air coolers that are energy-efficient to keep electricity bills in check. Energy-efficient models consume less power while providing optimal cooling, making them cost-effective in the long run.
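Power ratings translate into running costs through a simple calculation: watts multiplied by hours of use, divided by 1000, gives kilowatt-hours (kWh), the unit on electricity bills. The sketch below applies it to two ratings listed in this article (136 W for the Eco 150S and 270 W for the ULTRASLIM 460SH); the 8 hours of daily use and the price per kWh are assumptions for illustration only.

```python
def daily_kwh(power_watts: float, hours_per_day: float) -> float:
    """Energy used per day in kilowatt-hours."""
    return power_watts * hours_per_day / 1000.0

def monthly_cost(power_watts: float, hours_per_day: float,
                 price_per_kwh: float, days: int = 30) -> float:
    """Approximate monthly electricity cost at a flat tariff."""
    return daily_kwh(power_watts, hours_per_day) * days * price_per_kwh

HOURS = 8.0   # assumption: 8 hours of use per day
TARIFF = 7.0  # assumption: hypothetical flat price per kWh, in local currency

for model, watts in [("Eco 150S", 136), ("ULTRASLIM 460SH", 270)]:
    print(f"{model}: {daily_kwh(watts, HOURS):.2f} kWh/day, "
          f"about {monthly_cost(watts, HOURS, TARIFF):.0f} per month")
```

Plugging in your own tariff and usage hours gives a quick way to compare models before buying.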

Size and Portability

Consider the size and portability of the air cooler, especially if you plan to move it between rooms frequently. Compact and lightweight models are ideal for smaller spaces and easy transportation.

Additional Features

Look for additional features such as adjustable fan speeds, oscillation, timer settings, and remote control for added convenience and customization.

Top 5 Budget-Friendly Air Coolers

1. Desert Coolers – ECO 150S (With Stand)

Overview

The Desert Coolers – Eco 150 features a classic Nagpur cooler design crafted from galvanized steel, designed to be installed outside the room or house via the window. With a powder-coated body for enhanced durability, a seamless water tank, and an auto-swing air diverter, this cooler is the perfect choice for cooling areas up to 150 square feet.

Features:

Cooling Capacity (sq ft): 150
Model No.: Eco 150S
Body Size (H x W x D): 36″ x 21″ x 18″
Blade Size: 11″, 3-leaf
Fan Speed (RPM): 2800
Tank Capacity (liters): 35
Power Consumption (watts): 136
Weight (kg): 19.3
Air Displacement (approx. CFM): 1250
Sound Level (dB): 69

2. Duct Coolers – Wave Duct 190

Overview

The Ramcoolers Wave Duct 190 duct cooler boasts a cooling capacity of 200 square feet, making it ideal for medium-sized rooms or spaces. Its durable GI body with powder coating ensures longevity and resilience against wear and tear, and its joint-less bottom water tank enhances reliability and minimizes the risk of leaks, providing efficient, hassle-free cooling.

Features:

Cooling Capacity: 200 sq ft
Body Size with Trolley (inches): 49 x 17 x 19
Blade Size and Type: 11″, 3-leaf
RPM: 2350
Speed Control: 3-speed
Tank Capacity (liters): 35
Power Rating (watts): 115
Weight with Trolley (kg): 20
Air Throw (CFM): 900
Noise Level (dB): 66
Float Ball: Available

3. Tower Coolers – METAL ULTRACOOL 200N

Overview

Introducing the Tower Cooler, designed to cool areas up to 200 square feet with ease. Crafted with a galvanized steel body and powder coating, it offers durability and longevity. Its joint-less water tank ensures leak-free operation, while the auto-swing air diverter distributes cool air evenly. Featuring breathe-easy woodwool cooling pads, this cooler delivers efficient and refreshing cooling for your space.

Features:

Cooling Capacity: 200 sq ft
Body Size with Trolley (inches): 49 x 18 x 17
Blade Size and Type: 11″, 4-leaf
RPM: 2350
Speed Control: 3-speed
Tank Capacity (liters): 35
Power Rating (watts): 130
Weight with Trolley (kg): 18.1
Air Throw (CFM): 1000
Noise Level (dB): 67

4. Slim Coolers – METAL ULTRASLIM 460SH

Overview

Introducing our Slim Cooler, designed to efficiently cool areas up to 450 square feet. Crafted with a galvanized steel body and powder coating, it ensures durability and longevity. The joint-less water tank minimizes the risk of leaks, while the auto air swing diverter distributes cool air evenly. Equipped with honeycomb cooling pads, this cooler delivers superior cooling performance. Plus, it comes with a movable trolley as standard, offering convenience and flexibility in placement.

Features:

Cooling Capacity: 450 sq ft
Body Size with Trolley (inches): 54 x 29 x 17
Blade Size and Type: 20″, semi-exhaust
RPM: 1350
Speed Control: 3-speed
Tank Capacity (liters): 45
Power Rating (watts): 270
Weight with Trolley (kg): 30
Air Throw (CFM): 3000
Noise Level (dB): 68

5. Room Coolers – Metal Cool 120S (With Trolley)

Overview

Introducing our Portable Room Cooler, perfect for cooling areas up to 120 square feet. Crafted with a galvanized steel body and powder coating, it ensures durability and reliability. The joint-less water tank minimizes leaks, while the auto-swing air diverter evenly distributes cool air. Equipped with breathe easy woodwool cooling pads, this cooler delivers efficient and refreshing cooling. Plus, it includes a movable trolley as standard, offering convenience and ease of transportation.

Features:

Cooling Capacity: 120 sq ft
Body Size without Trolley (inches): 32.5 x 18 x 16
Blade Size and Type: 12″, 3-leaf
RPM: 2400
Speed Control: Variable
Tank Capacity (liters): 35
Power Rating (watts): 138
Weight without Trolley (kg): 16
Air Throw (CFM): 925
Noise Level (dB): 68

How to Maximize the Effectiveness of Your Budget-Friendly Air Cooler

To make the most out of your budget-friendly air cooler, follow these tips:

Place the air cooler in a well-ventilated area for optimal airflow.

Keep windows and doors closed to prevent hot air from entering the room.

Regularly clean and maintain the air cooler to ensure efficient operation.

Conclusion

With the right budget-friendly air cooler, you can beat the summer heat without breaking the bank. Consider your specific cooling needs and preferences before choosing the perfect model for your home or office. Whether you opt for portability, energy efficiency, or powerful cooling performance, there’s a budget-friendly air cooler out there to keep you cool and comfortable all summer long.

FAQs

Q. How do air coolers work?

Ans. Air coolers use the process of evaporation to cool the air. Water is pumped over cooling pads, and as warm air passes through the pads, it is cooled by evaporation and then circulated back into the room.

Q. Are air coolers energy-efficient?

Ans. Compared to traditional air conditioners, air coolers are generally more energy-efficient since they do not require compressors to operate. However, energy efficiency varies depending on the model and usage.

Q. Can air coolers be used in humid climates?

Ans. Air coolers are most effective in dry or arid climates where humidity levels are low. In humid climates, the cooling effect may be less noticeable, but air coolers can still provide some relief by increasing airflow and ventilation.
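The humidity effect follows from how direct evaporative coolers are commonly rated: the air leaving the pads can at best approach the wet-bulb temperature, and the pad's saturation effectiveness describes how close it gets. The sketch below uses that standard relation; the 0.8 effectiveness and the example temperatures are assumed values, not specifications of the coolers above.

```python
def outlet_temperature(dry_bulb_c: float, wet_bulb_c: float,
                       effectiveness: float = 0.8) -> float:
    """Supply-air temperature of a direct evaporative cooler, in °C.

    effectiveness is the pad's saturation efficiency (0 to 1); 0.8 is an
    assumed value. The wet-bulb temperature is the theoretical lower limit.
    """
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    return dry_bulb_c - effectiveness * (dry_bulb_c - wet_bulb_c)

# Hypothetical conditions: hot and dry vs. warm and humid.
print(f"Dry: {outlet_temperature(40.0, 22.0):.1f} °C")    # 25.6 °C
print(f"Humid: {outlet_temperature(35.0, 30.0):.1f} °C")  # 31.0 °C
```

With the same cooler, the hot, dry air is cooled by about 14 °C but the humid air by only about 4 °C, because humid air has a much smaller gap between its dry-bulb and wet-bulb temperatures.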

Q. Do air coolers require a lot of maintenance?

Ans. Air coolers require regular maintenance to ensure optimal performance. This includes cleaning the water tank, replacing cooling pads, and occasionally disinfecting the unit to prevent mold and bacteria buildup.

Q. Can air coolers be used outdoors?

Ans. Some portable air coolers are designed for outdoor use, but their effectiveness may be limited in open spaces with high humidity levels. It’s best to use air coolers in enclosed or partially enclosed areas for optimal cooling results.

>>> Contact us today for any queries!

Canik Mete SFT Holster - A convenient choice for safe gun carry

Enhance Your Canik Mete SFT Experience with Custom Holsters

The Canik Mete SFT is not just another firearm; it's a testament to innovation and performance. Engineered for precision and boasting a sleek design, this pistol is a favorite among shooters for its reliability and versatility. However, to truly optimize your Mete SFT, consider pairing it with a custom Canik Mete SFT holster. Craft Holsters offers a range of options designed to cater to every shooter's preference and style.

Discover the Perfect Canik Mete SFT Holster Selection

Craft Holsters takes pride in its diverse lineup of Canik Mete SFT holsters, ensuring there's something for everyone. Whether you prefer concealed carry with an IWB holster or prioritize quick draw and comfort with an OWB holster, we've got you covered. Our selection also includes options like the light-bearing holster for low-light conditions and the drop leg holster for tactical situations. With a focus on quality, comfort, and functionality, Craft Holsters provides best-in-class solutions for your Canik Mete SFT. To learn more and discover the best options available on the market, visit the Canik Mete SFT section of the Craft Holsters website.
