#Nvidia hardware

Text

Nintendo Switch 2: Possible official reveal on January 16, 2025

Nintendo appears to be preparing to unveil its long-awaited console, unofficially dubbed the Nintendo Switch 2, according to leaker Nate the Hate and the site VGC. The reveal would reportedly be split into two events: one focused on hardware (January 16, 2025) and another dedicated to games (in late February or early March). What we know so far…

#Assassin’s Creed Mirage#consolă nouă#Final Fantasy 7 Remake#hardware Nvidia#jocuri noi#Joy-Con#mario kart#new console#new games#Nintendo presentation#Nintendo Switch 2#Nvidia hardware#performanță PS4#prezentare Nintendo#PS4 performance#ray tracing

0 notes

Text

bill gates is a rabid dog and he must be beaten to death with a stick

#trying to install windows on my old turbofucked laptop#windows won't even start the installer! linux will do it just fine though#Genuinely not sure how we ended up here I thought Windows was the hardware compatibility team#linux#that laptop ran fine for six years with Ubuntu and later Arch and it only made me want to destroy Nvidia a few times#Microsoft hate tag

107 notes

Text

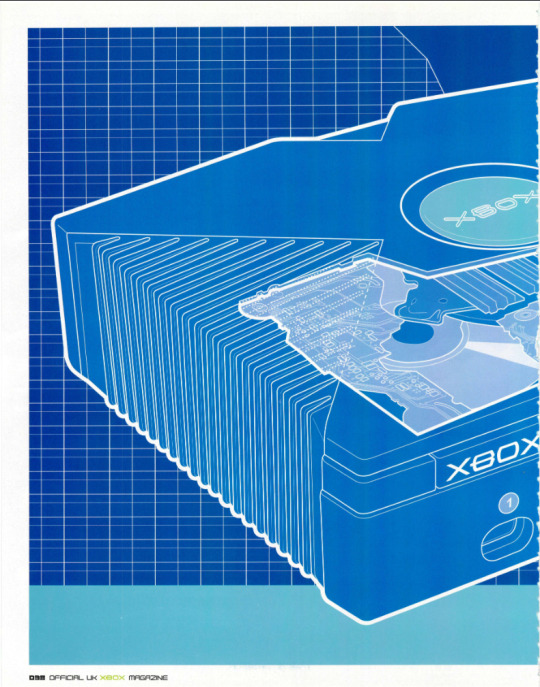

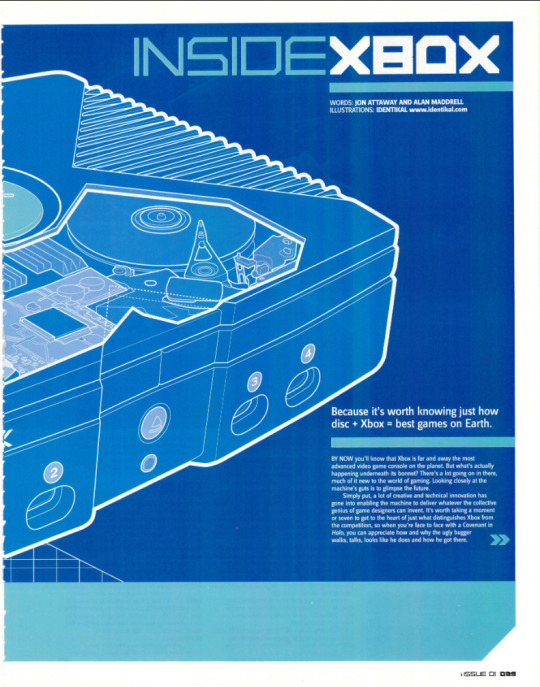

"Because it's worth knowing just how disc + Xbox = best games on Earth!"

Official Xbox Magazine (UK), No. 01, November 2001, pp. 38–45

#Microsoft#Microsoft Xbox#Xbox#OG Xbox#Xbox Live#NVIDIA#NVIDIA Graphics#NV2A#Hardware#Hardware Specs

127 notes

Text

I might be stupid but the switch 2 prices are probably just Slightly affected by tariffs

#vic.txt#nvidia chips r unaffected i believe but like every other hardware piece is#sorry we talked abt this during compsci and it’s tech in general rn

7 notes

Text

UK 1998

9 notes

Text

Kai-Fu Lee has declared war on Nvidia and the entire US AI ecosystem.

🔹 Lee emphasizes the need to focus on reducing the cost of inference, which is crucial for making AI applications more accessible to businesses. He highlights that the current pricing model for services like GPT-4 — $4.40 per million tokens — is prohibitively expensive compared to traditional search queries. This high cost hampers the widespread adoption of AI applications in business, necessitating a shift in how AI models are developed and priced. By lowering inference costs, companies can enhance the practicality and demand for AI solutions.

🔹 Another critical direction Lee advocates is the transition from universal models to “expert models,” which are tailored to specific industries using targeted data. He argues that businesses do not benefit from generic models trained on vast amounts of unlabeled data, as these often lack the precision needed for specific applications. Instead, creating specialized neural networks that cater to particular sectors can deliver comparable intelligence with reduced computational demands. This expert model approach aligns with Lee’s vision of a more efficient and cost-effective AI ecosystem.

🔹 Lee’s startup, 01.AI, is already implementing these concepts successfully. Its Yi-Lightning model has achieved impressive performance, ranking sixth globally while being extremely cost-effective at just $0.14 per million tokens. This model was trained with far fewer resources than competitors, illustrating that high costs and extensive data are not always necessary for effective AI training. Additionally, Lee points out that China’s engineering expertise and lower costs can enhance data collection and processing, positioning the country to not just catch up to the U.S. in AI but potentially surpass it in the near future. He envisions a future where AI becomes integral to business operations, fundamentally changing how industries function and reducing the reliance on traditional devices like smartphones.
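The price gap Lee describes comes down to simple arithmetic. The minimal Python sketch below uses only the two per-million-token prices quoted above; the helper name `cost_usd` and the one-billion-token workload are illustrative assumptions, not figures from Lee, and real pricing often differs between input and output tokens.

```python
# Per-million-token prices as quoted in the post (USD). Assumption: a single
# flat rate per model; real providers often price input and output separately.
PRICES_PER_MILLION = {
    "GPT-4": 4.40,
    "Yi-Lightning": 0.14,
}


def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost of processing `tokens` tokens at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    workload = 1_000_000_000  # hypothetical workload: one billion tokens
    for model, price in PRICES_PER_MILLION.items():
        print(f"{model}: ${cost_usd(workload, price):,.2f}")
    ratio = PRICES_PER_MILLION["GPT-4"] / PRICES_PER_MILLION["Yi-Lightning"]
    print(f"Price ratio: {ratio:.1f}x")
```

Run as a script, it prints $4,400.00 versus $140.00 for the same hypothetical billion tokens, a price ratio of about 31.4x.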

#artificial intelligence#technology#coding#ai#tech news#tech world#technews#open ai#ai hardware#ai model#KAI FU LEE#nvidia#US#usa#china#AI ECOSYSTEM#the tech empire

2 notes

Video

youtube

Do you REALLY need a good GPU for Blender?

#youtube#curtisholt#kreativstudionuding#kreative bücher und mehr#jochens freelancer wellen#blender 3d skills#opensuse#linux#leap 15.4#open source#hardware#nvidia#rtx 2080 ti

17 notes

Text

Powering the Future: The Success of NVIDIA and Its AI Chips

NVIDIA has posted an impressive 30% rise in quarterly earnings, thanks to growing demand for its artificial intelligence (AI) chips. These chips are revolutionizing sectors such as autonomous vehicles, medical research, and supercomputing. With more than 10 million vehicles equipped with its technology and a 70% penetration rate among major research facilities, NVIDIA is leading the AI revolution. Its comprehensive approach to hardware and software is accelerating innovation and promises an exciting AI-driven future.

https://www.eleconomista.com.mx/mercados/Ganancias-trimestrales-de-Nvidia-superan-los-pronosticos-20240221-0078.html

4 notes

Note

What do you think of Nvidia's new DLSS Frame Generation technology, which uses AI to create frames in between the ones rendered by the GPU, producing a smoother frame rate and more FPS?

As a chronic screenshotist, I hate it. I can't find the post now, but I talked about how smeary recent games are thanks to upscaling. Games with dynamic resolutions and heavy upscaling always leave smudgy, smeary, ugly artifacts all over the screen, where the renderer is filling in missing information.

I see it in a lot of Unreal Engine games, but I'm pretty sure I saw it in the PS4 version of Street Fighter 6, too. It makes games look worse than they should.

I've also played Fortnite through things like GeForce Now, where you can (or used to be able to) crank the settings up to max, and even with everything on Epic Quality, surfaces can still be smeary, grainy, and covered in artifact trails.

So when you tell me that not only is the rendering engine going to be making a blurry mess out of my game because of resolution scaling, but it's also going to be motion smoothing whole entire new frames on top of that?

Gross. No thank you.

I feel like photo mode is a way to "solve" this, because photo mode deliberately takes you out of the action to render screenshots at much, much higher settings than you normally get during gameplay. But that creates a different problem: that's not how photography always works.

Photography is about taking 300 shots and only using the five best ones.

Photo mode suggests you are setting up for a specific screenshot on purpose when that's just not how it always goes.

If interframe generation makes this worse, or harder? I don't want it. I don't care what the benefits claim to be. We're in a constant war to tell our parents to turn motion smoothing off on new TVs, so why would we want to bring that into games?
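Why generated in-between frames can smear is easiest to see in a toy example. The Python sketch below is not how DLSS Frame Generation works (Nvidia describes its approach as combining motion data with a neural network); it only contrasts a naive per-pixel blend, which produces a ghosted double image, with an idealized shift along a known motion vector. The 1-D "frames", the function names `naive_blend` and `motion_shift`, and the single global motion vector are all hypothetical simplifications.

```python
def naive_blend(frame_a, frame_b, t=0.5):
    """Toy in-between frame: per-pixel linear blend, no motion estimation."""
    return [(1 - t) * a + t * b for a, b in zip(frame_a, frame_b)]


def motion_shift(frame_a, motion, t=0.5):
    """Toy in-between frame: move every pixel of frame_a along one known
    motion vector (in pixels), scaled by t. Pixels pushed off the edge are dropped."""
    shift = round(motion * t)
    out = [0.0] * len(frame_a)
    for i, value in enumerate(frame_a):
        j = i + shift
        if 0 <= j < len(out):
            out[j] = value
    return out


if __name__ == "__main__":
    # A single bright pixel that moves 2 pixels to the right between frames.
    frame_a = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
    frame_b = [0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
    print("naive blend: ", naive_blend(frame_a, frame_b))
    print("motion shift:", motion_shift(frame_a, motion=2))
```

The naive blend leaves two half-bright copies of the pixel (a ghost), while the motion-shifted frame puts one full-bright pixel at the midpoint. Real scenes have many objects moving in different directions, plus areas that are covered or revealed between frames, so motion has to be estimated per pixel; mistakes in that estimate are one plausible source of the smearing and trails described in the answer.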

18 notes

Text

Clean installing nvidia drivers in the desperate hope that I'll be able to play alan wake at a reasonable fps on lowest settings

#ive not got a bad pc i got an nvidia gtx 960#intel i7 processor#12 gb ram#like that should be fine shouldnt it??#tbh i dont actually know anything about pc hardware but it should be above spec for alan wake remastered#so im hoping its just playing poorly cuz i havent been updating softwares#otherwise idk what to do next lol#anyone who knows pcs pls feel free to chime in even if its just telling me to buy better hardware#lmao

3 notes

Text

"windows 11 upgrade ready!" "your computer is eligible for windows 11!" "download windows 11 now!"

#prev you don't even have to these days#even the “hardcore” distributions like arch have subdistros (or “flavors”) that are GUI-focused and user-friendly :)#I use EndeavourOS which is one of these :)#linux has been a back-end tech industry standard for years and since gamers and creators have been turning to it in the last few years#companies have also been making their hardware with linux specifically in mind#so problems like nvidia driver setup and such aren't really an issue anymore#TIP: pick from the 10-15 or so most popular ones and you'll have more beginner-friendly support more quickly

49K notes

Text

Boost Your AI Projects with Datacenter-Hosted GPUs!

Struggling with slow training times and hardware limits? Step into the future with NeuralRack.ai — where high-performance AI computing meets flexibility.

Whether you’re working on deep learning, large-scale ML models, or just need GPU power for a few hours, NeuralRack's datacenter-hosted GPUs are built to scale with you. ⚡

🎯 Why NeuralRack.ai?
✔️ Lightning-fast NVIDIA GPUs
✔️ Secure & reliable infrastructure
✔️ No long-term commitment
✔️ 24/7 support for seamless training
✔️ Affordable pricing for individuals & enterprises

Ready to optimize your budget? Explore the plans 👉 Check Pricing

From research to production — train smarter, faster, and better with NeuralRack. Let your models run wild, not your wallet. 🔥

#AIComputing #MachineLearning #GPURental #DeepLearning #CloudGPUs #NeuralRack #AIInfrastructure #DataScienceTools #HighPerformanceComputing #AIDevelopment

#gpu#nvidia gpu#gpucomputing#nvidia#amd#hardware#artists on tumblr#artificial intelligence#rtx#rtx 5090#rtx4060#rtx4090

1 note

Text

youtube

#photography#photographers on tumblr#laptop#artists on tumblr#technically#tech#music#design#movies#technology#dell laptop#laptop repair#gaming laptop#acer laptop#computer#smartphone#computing#fujitsu#lenovo#monitor#nvidia#xiaomi#smartwatch#hp#3d printer#printers and scanners#hardware#room#cyber#printer ink

0 notes

Text

Windows 11 and New Hardware 2025 - CPUs, NVIDIA RTX 40 and 50 Series, Prices

Windows 11 and the New Hardware of 2025: CPUs, NVIDIA 40 and 50 Series Graphics Cards, Prices and News! Windows 11 continues to evolve in 2025, bringing increasingly advanced support for the latest-generation hardware. With the arrival of new CPUs and the powerful NVIDIA GeForce RTX 40 and 50 Series graphics cards, Microsoft's operating system is gearing up to make the most of the technologies…

#CPU AMD#CPU Intel#Gaming#Hardware 2025#NVIDIA RTX 40#NVIDIA RTX 50#Prezzi GPU#Schede Video#tecnologia#Windows 11

0 notes

Text

Power problems for the GeForce RTX 5090: The 12VHPWR connector continues to cause trouble

The NVIDIA GeForce RTX 5090 series delivers top-tier performance, but also major power-delivery challenges. Its use of the controversial 12VHPWR connector, which also caused problems on the RTX 4090 series, has reignited debate about the connector's reliability and safety. 🔥 A new case of a melted connector: An RTX 5090 user recently reported damage to the 12VHPWR connector, with the incident being attributed…

#12VHPWR#alimentare PC#bam#computer hardware failure#conector topit#diagnoza#gaming hardware#GPU overheating#GPU power cables#GPU thermal issues#graphics card warranty#high-power GPUs#neamt#NVIDIA GeForce RTX 5090#overheating GPU#PC gaming safety#probleme alimentare RTX 5090#PSU compatibil#roman#RTX 5090 power consumption#RTX 5090 power issues#RTX 5090 vs RTX 4090

0 notes