#NVIDIA DGX Platform


Quantum Machines and Nvidia use machine learning to get closer to an error-corrected quantum computer

About a year and a half ago, quantum control startup Quantum Machines and Nvidia announced a deep partnership that would bring together Nvidia’s DGX Quantum computing platform and Quantum Machines’ advanced quantum control hardware. We didn’t hear much about the results of this partnership for a while, but it’s now starting to bear fruit and […] © 2024 TechCrunch. All rights reserved. For…


NVIDIA BlueField 3 DPU For Optimized Kubernetes Performance

The NVIDIA BlueField-3 DPU networking platform is an advanced infrastructure computing platform that powers the world’s data centers.

Transform the Data Center With NVIDIA BlueField

The NVIDIA BlueField networking platform drives unprecedented innovation in modern data centers and supercomputing clusters. With powerful compute and software-defined hardware accelerators for networking, storage, and security, BlueField provides a secure, accelerated infrastructure for every application in any environment, ushering in a new era of accelerated computing and artificial intelligence (AI).

BlueField in the News

NVIDIA and F5 Use NVIDIA BlueField 3 DPUs to Boost Sovereign AI Clouds

By offloading data workloads, NVIDIA BlueField 3 DPUs work with F5 BIG-IP Next for Kubernetes to increase AI efficiency and fortify security.

Arrival of NVIDIA GB200 NVL72 Platforms with NVIDIA BlueField 3 DPUs

The most compute-intensive applications may benefit from data processing improvements made possible by flagship, rack-scale solutions driven by NVIDIA BlueField 3 networking technologies and the Grace Blackwell accelerator.

NVIDIA’s New DGX SuperPOD Architecture, Built with DGX GB200 Systems and NVIDIA BlueField-3 DPUs

With NVIDIA BlueField-3 DPUs, the DGX GB200 systems at the core of the Blackwell-powered DGX SuperPOD architecture provide high-performance storage access and next-generation networking.

Examine NVIDIA’s BlueField Networking Platform Portfolio

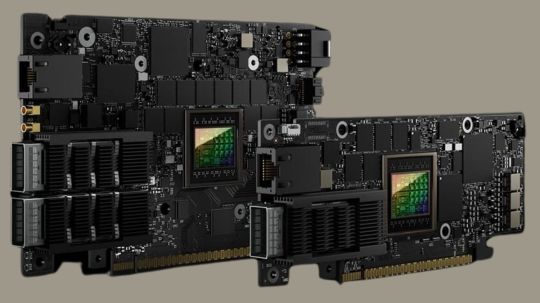

NVIDIA BlueField-3 DPU

The 400 Gb/s NVIDIA BlueField-3 DPU infrastructure computing platform runs software-defined networking, storage, and cybersecurity at line rate. BlueField-3 combines powerful processing, fast networking, and flexible programmability to provide software-defined, hardware-accelerated solutions for the most demanding applications. BlueField-3 is redefining the art of the possible for accelerated AI, hybrid cloud, high-performance computing, and 5G wireless networks.

NVIDIA BlueField-3 SuperNIC

The NVIDIA BlueField-3 SuperNIC is a network accelerator designed specifically to boost hyperscale AI workloads. Built for network-intensive, massively parallel computing, it maximizes AI workload efficiency by providing up to 400 Gb/s of remote direct memory access (RDMA) over Converged Ethernet (RoCE) connectivity between GPU servers. By enabling secure, multi-tenant data center environments with predictable, isolated performance across jobs and tenants, the BlueField-3 SuperNIC is ushering in a new age of AI cloud computing.

NVIDIA BlueField-2 DPU

In every host, the NVIDIA BlueField-2 DPU offers cutting-edge efficiency, security, and acceleration. For applications including software-defined networking, storage, security, and management, BlueField-2 combines the capabilities of the NVIDIA ConnectX-6 Dx with programmable Arm cores and hardware offloads. With BlueField-2, enterprises can efficiently build and run virtualized, containerized, and bare-metal infrastructures at scale, thanks to its enhanced performance, security, and lower total cost of ownership for cloud computing platforms.

NVIDIA DOCA

Use the NVIDIA DOCA software development kit to quickly create apps and services for the NVIDIA BlueField-3 DPU networking platform, unlocking data center innovation.

Networking in the AI Era

NVIDIA Ethernet SuperNICs are a new generation of network accelerators created specifically to boost network-intensive, distributed AI computing workloads.

Install and Run NVIDIA AI Clouds Securely

NVIDIA AI systems are powered by NVIDIA BlueField-3 DPUs.

Does Your Data Center Network Need to Be Updated?

When new servers or applications are added to the infrastructure, data center networks are often upgraded. There are additional factors to take into account, too, even if an upgrade is required due to new server and application architecture. Discover the three questions to ask when determining if your network needs to be updated.

Secure Next-Generation Apps Using the BlueField-2 DPU on the VMware Cloud Foundation

The next-generation VMware Cloud Foundation’s integration of the NVIDIA BlueField-2 DPU provides a robust enterprise cloud platform with the highest levels of security, operational efficiency, and return on investment. It is a secure, GPU- and DPU-accelerated architecture for the modern enterprise private cloud built on VMware. The accelerators deliver security, reduced TCO, improved speed, and new capabilities that were previously out of reach.

Learn about DPU-Based Hardware Acceleration from a Software Point of View

Although data processing units (DPUs) increase data center efficiency, their widespread adoption has been hampered by low-level programming requirements. This barrier is eliminated by NVIDIA’s DOCA software framework, which abstracts the programming of BlueField DPUs. Listen to Bob Wheeler, an analyst at the Linley Group, discuss how DOCA and CUDA will be used to enable users to program future integrated DPU+GPU technology.

Use the Cloud-Native Architecture from NVIDIA for Secure, Bare-Metal Supercomputing

Supercomputers are now widely used in commerce due to high-performance computing (HPC) and artificial intelligence. They now serve as the main data processing tools for studies, scientific breakthroughs, and even the creation of new products. There are two objectives when developing a supercomputer architecture: minimizing the factors that degrade performance and, where feasible, accelerating application performance.

Explore the Benefits of BlueField

Peak AI Workload Efficiency

With configurable congestion management, direct data placement, GPUDirect RDMA, and robust RoCE networking, BlueField creates a fast and efficient network architecture for AI.

Security From the Perimeter to the Server

BlueField facilitates a zero-trust, security-everywhere architecture that extends security beyond the boundaries of the data center to each server’s edge.

Storage of Data for Growing Workloads

BlueField offers high-performance storage access with latencies for remote storage that are competitive with direct-attached storage, thanks to NVMe over Fabrics (NVMe-oF), GPUDirect Storage, encryption, elastic storage, data integrity, decompression, and deduplication.

Cloud Networking with High Performance

With up to 400 Gb/s of Ethernet and InfiniBand connectivity for both conventional and modern workloads, BlueField is a powerful cloud infrastructure processor that frees up host CPU cores to run applications rather than infrastructure tasks.

F5 and NVIDIA Turbocharge Sovereign AI Cloud Efficiency and Security

NVIDIA BlueField 3 DPUs use F5 BIG-IP Next for Kubernetes to improve AI security and efficiency.

NVIDIA and F5 are combining NVIDIA BlueField 3 DPUs with the F5 BIG-IP Next for Kubernetes for application delivery and security in order to increase AI efficiency and security in sovereign cloud settings.

The partnership seeks to expedite the release of AI applications while assisting governments and businesses in managing sensitive data. IDC predicts a $250 billion sovereign cloud industry by 2027. By 2027, ABI Research expects the foundation model market to reach $30 billion.

Sovereign clouds are designed to adhere to stringent localization and data privacy standards. They are essential for government organizations and sectors that handle sensitive data, such as financial services and telecommunications.

By providing a safe and compliant AI networking infrastructure, F5 BIG-IP Next for Kubernetes installed on NVIDIA BlueField 3 DPUs enables companies to embrace cutting-edge AI capabilities without sacrificing data privacy.

By offloading tasks such as load balancing, routing, and security to the BlueField-3 DPU, F5 BIG-IP Next for Kubernetes efficiently routes AI requests to LLM instances while using less energy. This maximizes the use of GPU resources while ensuring scalable AI performance.
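The offload itself happens in DPU hardware and F5 software that this post does not detail. Purely to illustrate the load-balancing idea, here is a minimal Python sketch that sends each request to the LLM instance with the fewest requests in flight. The names `LLMInstance` and `LeastLoadedRouter` and the instance labels are hypothetical; they are not part of any F5 or NVIDIA API.

```python
from dataclasses import dataclass


@dataclass
class LLMInstance:
    """Hypothetical stand-in for one LLM serving endpoint."""
    name: str
    in_flight: int = 0  # requests currently being processed


class LeastLoadedRouter:
    """Toy least-connections balancer; not an F5 or NVIDIA API."""

    def __init__(self, instances):
        if not instances:
            raise ValueError("at least one instance is required")
        self.instances = list(instances)

    def route(self, prompt: str) -> LLMInstance:
        # Pick the instance with the fewest in-flight requests;
        # min() returns the first candidate on ties.
        target = min(self.instances, key=lambda inst: inst.in_flight)
        target.in_flight += 1
        return target

    def complete(self, instance: LLMInstance) -> None:
        # Call once the response has been returned to the client.
        if instance.in_flight > 0:
            instance.in_flight -= 1


router = LeastLoadedRouter([LLMInstance("llm-a"), LLMInstance("llm-b")])
first = router.route("Summarize this report")
second = router.route("Translate this sentence")
router.complete(first)
third = router.route("Classify this ticket")
print(first.name, second.name, third.name)
```

A production balancer would also weigh health checks, token counts, and GPU memory pressure; least-connections is used here only because it is easy to follow.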

Through more effective AI workload management, the partnership will also benefit NVIDIA NIM microservices, which speed up the deployment of foundation models.

NVIDIA BlueField-3 DPU Price

NVIDIA and F5’s integrated solutions offer increased security and efficiency, which are critical for companies moving to cloud-native infrastructures. These developments enable enterprises in highly regulated areas to safely and securely grow AI systems while adhering to the strictest data protection regulations.

Pricing for the NVIDIA BlueField-3 DPU varies by model and features. BlueField-3 B3210E E-Series FHHL models with 100GbE connectivity cost $2,027, while high-performance models like the B3140L with 400GbE cost $2,874. Higher-end variants like the BlueField-3 B3220 P-Series cost about $3,053. These prices sometimes reflect discounts from the original retail price, which can be considerably higher depending on the seller and customizations.


Nvidia Showcases AI Innovations - D.C. Summit

Join the newsletter: https://avocode.digital/newsletter/

Introduction to Nvidia's AI Innovation

Nvidia continues to push the boundaries of artificial intelligence with the recent unveiling of its latest software and services at the D.C. AI Summit. Held in the heart of Washington D.C., the summit served as a prestigious platform to showcase Nvidia's advancements in the field of AI. At the event, industry leaders, innovators, and tech enthusiasts gathered to explore the latest trends and future directions of AI technology. This article covers Nvidia's journey to the forefront of AI innovation and the significant highlights of the summit.

Nvidia: A Pioneer in AI Technology

Nvidia has long been recognized as a leader in the realm of artificial intelligence. With their pioneering developments in graphics processing units (GPUs) and parallel computing, Nvidia has revolutionized the way we understand and implement AI technologies. The company's commitment to leveraging AI's potential was clearly evident at the D.C. Summit, where they demonstrated substantial advancements in AI software and services.

Core Areas of Innovation

At the summit, Nvidia focused on several core areas:

- **AI Software Development**: Nvidia is developing cutting-edge software that enhances AI model performance and efficiency.
- **Cloud Services**: They are expanding cloud-based AI services to facilitate easy scalability and accessibility.
- **Deep Learning Technologies**: Nvidia continues to advance deep learning applications across various sectors.
- **AI-Powered Graphics**: With a legacy in GPU technology, Nvidia integrates AI capabilities for improved graphics rendering and visualization.

Highlights from the D.C. AI Summit

The D.C. AI Summit was a kaleidoscope of cutting-edge technological innovation, portraying a vivid image of the future shaped by AI. Nvidia's prominent presence revealed key aspects of their agenda and showcased several advancements set to inspire and drive transformative AI solutions.

AI Software and Platforms

Nvidia's latest AI software platforms, unveiled at the summit, underline the company's dedication to making AI more accessible and powerful:

- **Nvidia AI Enterprise**: A comprehensive suite of AI and data analytics software optimized for use on the cloud. This software helps organizations deploy and manage AI workloads with ease and efficiency.
- **Omniverse Platform**: Discussed as the 'metaverse for engineering', the Omniverse platform facilitates collaborative 3D design and real-time simulation across industries.

Advanced AI Hardware

The summit also highlighted Nvidia's latest advancements in hardware specifically designed for AI applications:

- **Nvidia Grace Processor**: This new data center CPU offers unprecedented performance for AI and high-performance computing tasks. It represents Nvidia’s push to optimize AI-centric workloads.
- **DGX Systems**: Nvidia's DGX systems have been updated, providing the horsepower needed for training and deploying large AI models.

The Summit Environment

The event promised not just a preview of futuristic technology but also a deep dive into discussions, collaborations, and thought leadership in AI. The summit environment was dynamic and forward-thinking, perfectly encapsulating the spirit of innovation.

Networking and Collaboration

Professionals from diverse fields came together to discuss AI industry trends and opportunities for collaboration. Key sessions included vibrant discussions on:

- **Cross-Industry AI Adoption**: Exploring how different sectors like healthcare, automotive, and finance are adopting and benefiting from AI.
- **AI Ethical Considerations**: Addressing ethical challenges and responsible AI practices to ensure beneficial and impartial AI development.

The Future of AI with Nvidia

Nvidia's strategic vision doesn't just stop with current achievements; it is also future-oriented. Their efforts are continuously molded by their dedication to innovation and their readiness to embrace new challenges. As AI technology evolves, Nvidia aims to lead the charge, pushing forward with:

- **Sustainable AI Practices**: Developing efficient, environmentally-conscious AI solutions.
- **Open AI Frameworks**: Encouraging collaboration and knowledge sharing within the AI community.
- **AI for All**: Making AI tools and capabilities accessible to smaller businesses and individual developers, democratizing AI technology.

Conclusion

Nvidia's showcase at the D.C. AI Summit was a testament to their pioneering spirit and unwavering commitment to advancing AI technology. With groundbreaking software and hardware solutions, Nvidia continues to lead the charge in making AI a pivotal part of modern life. The insights and innovations shared at the summit not only highlight Nvidia's current achievements but also hint at an inspiring future, driven by AI possibilities. The success of the summit underscores the importance of collaboration and innovation in fostering an AI-driven world. As the AI landscape continues to evolve, Nvidia remains at the helm, setting benchmarks and paving the way for sustainable, ethical, and impactful AI developments.


Generative AI Playgrounds: Pioneering the Next Generation of Intelligent Solution

New Post has been published on https://thedigitalinsider.com/generative-ai-playgrounds-pioneering-the-next-generation-of-intelligent-solution/


Generative AI has gained significant traction due to its ability to create content that mimics human creativity. Despite its vast potential, with applications ranging from generating text and images to composing music and writing code, interacting with these rapidly evolving technologies remains daunting. The complexity of generative AI models and the technical expertise required often create barriers for individuals and small businesses who could benefit from it. To address this challenge, generative AI playgrounds are emerging as essential tools for democratizing access to these technologies.

What Is a Generative AI Playground?

Generative AI playgrounds are intuitive platforms that facilitate interaction with generative models. They enable users to experiment and refine their ideas without requiring extensive technical knowledge. These environments provide developers, researchers, and creatives with an accessible space to explore AI capabilities, supporting activities such as rapid prototyping, experimentation and customization. The main goal of these playgrounds is to democratize access to advanced AI technologies, making it easier for users to innovate and experiment. Some of the leading generative AI playgrounds are:

Hugging Face: Hugging Face is a leading generative AI playground, especially renowned for its natural language processing (NLP) capabilities. It offers a comprehensive library of pre-trained AI models, datasets, and tools, making it easier to create and deploy AI applications. A key feature of Hugging Face is its transformers library, which includes a broad range of pre-trained models for tasks such as text classification, translation, summarization, and question-answering. Additionally, it provides a dataset library for training and evaluation, a model hub for discovering and sharing models, and an inference API for integrating models into real-time applications.

OpenAI’s Playground: The OpenAI Playground is a web-based tool that provides a user-friendly interface for experimenting with various OpenAI models, including GPT-4 and GPT-3.5 Turbo. It features three distinct modes to serve different needs: Chat Mode, which is ideal for building chatbot applications and includes fine-tuning controls; Assistant Mode, which equips developers with advanced development tools such as functions, a code interpreter, retrieval, and file handling for development tasks; and Completion Mode, which supports legacy models by allowing users to input text and view how the model completes it, with features like “Show probabilities” to visualize response likelihoods.

NVIDIA AI Playground: The NVIDIA AI Playground allows researchers and developers to interact with NVIDIA’s generative AI models directly from their browsers. Utilizing NVIDIA DGX Cloud, TensorRT, and Triton inference server, the platform offers optimized models that enhance throughput, reduce latency, and improve compute efficiency. Users can access inference APIs for their applications and research and run these models on local workstations with RTX GPUs. This setup enables high-performance experimentation and practical implementation of AI models in a streamlined fashion.

GitHub’s Models: GitHub has recently introduced GitHub Models, a playground aimed at increasing accessibility to generative AI models. With GitHub Models, users can explore, test, and compare models such as Meta’s Llama 3.1, OpenAI’s GPT-4o, Cohere’s Command, and Mistral AI’s Mistral Large 2 directly within the GitHub web interface. Integrated into GitHub Codespaces and Visual Studio Code, this tool streamlines the transition from AI application development to production. Unlike Microsoft Azure, which necessitates a predefined workflow and is available only to subscribers, GitHub Models offers immediate access, eliminating these barriers and providing a more seamless experience.

Amazon’s PartyRock: This generative AI playground, built on Amazon Bedrock, provides access to Amazon’s foundation AI models for building AI-driven applications. It offers a hands-on, user-friendly experience for exploring and learning about generative AI. Users can create a PartyRock app in three ways: start with a prompt by describing the desired app, which PartyRock will assemble; remix an existing app by modifying samples or apps from other users through the “Remix” option; or build from scratch with an empty app, allowing for complete customization of the layout and widgets.

The Potential of Generative AI Playgrounds

Generative AI playgrounds offer several key benefits that make them valuable tools for a wide range of users:

Accessibility: They lower the barrier to entry for working with complex generative AI models. This makes generative AI accessible to non-experts, small businesses, and individuals who might otherwise find it difficult to engage with these technologies.

Innovation: By providing user-friendly interfaces and pre-built models, these playgrounds encourage creativity and innovation, allowing users to quickly prototype and test new ideas.

Customization: Users can readily adapt generative AI models to their specific needs, experimenting with fine-tuning and modifications to create customized solutions that serve their unique requirements.

Integration: Many platforms facilitate integration with other tools and systems, making it easier to incorporate AI capabilities into existing workflows and applications.

Educational Value: These platforms serve as educational tools, helping users learn about AI technologies and how they work through hands-on experience and experimentation.

The Challenges of Generative AI Playgrounds

Despite the potential, generative AI platforms face several challenges:

The primary challenge is the technical complexity of generative AI models. While playgrounds aim to simplify interaction, advanced generative AI models require substantial computational resources and a deep understanding of their workings, especially for building custom applications. High-performance computing resources and optimized algorithms are essential to improve the responsiveness and usability of these platforms.

Handling private data on these platforms also poses a challenge. Robust encryption, anonymization, and strict data governance are necessary to ensure privacy and security on these playgrounds, making them trustworthy.

For generative AI playgrounds to be truly useful, they must seamlessly integrate with existing workflows and tools. Ensuring compatibility with various software, APIs, and hardware can be complex, requiring ongoing collaboration with technology providers and adherence to new AI standards.

The rapid pace of AI advancements means these playgrounds must continuously evolve. They need to incorporate the latest models and features, anticipate future trends, and adapt quickly. Staying current and agile is crucial in this fast-moving field.

The Bottom Line

Generative AI playgrounds are paving the way for broader access to advanced AI technologies. Intuitive platforms like Hugging Face, OpenAI’s Playground, NVIDIA AI Playground, GitHub Models, and Amazon’s PartyRock enable users to explore and experiment with AI models without needing deep technical expertise. However, the road ahead is not without hurdles. Ensuring these platforms handle complex models efficiently, protect user data, integrate well with existing tools, and keep up with rapid technological changes will be crucial. As these playgrounds continue to develop, their ability to balance user-friendliness with technical depth will determine their impact on innovation and accessibility.


Nvidia HGX vs DGX: Key Differences in AI Supercomputing Solutions

Nvidia HGX vs DGX: What are the differences?

Nvidia is comfortably riding the AI wave. And for at least the next few years, it will likely not be dethroned as the AI hardware market leader. With its extremely popular enterprise solutions powered by the H100 and H200 “Hopper” lineup of GPUs (and now B100 and B200 “Blackwell” GPUs), Nvidia is the go-to manufacturer of high-performance computing (HPC) hardware.

Nvidia DGX is an integrated AI HPC solution targeted toward enterprise customers needing immensely powerful workstation and server solutions for deep learning, generative AI, and data analytics. Nvidia HGX is based on the same underlying GPU technology. However, HGX is a customizable enterprise solution for businesses that want more control and flexibility over their AI HPC systems. But how do these two platforms differ from each other?

Nvidia DGX: The Original Supercomputing Platform

It should surprise no one that Nvidia’s primary focus isn’t on its GeForce lineup of gaming GPUs anymore. Sure, the company enjoys the lion’s share of the gaming GPU market, but its recent resounding success is driven by enterprise and data center offerings and AI-focused workstation GPUs.

Overview of DGX

The Nvidia DGX platform integrates up to 8 Tensor Core GPUs with Nvidia’s AI software to power accelerated computing and next-gen AI applications. It’s essentially a rack-mount chassis containing 4 or 8 GPUs connected via NVLink, high-end x86 CPUs, and a bunch of Nvidia’s high-speed networking hardware. A single DGX B200 system is capable of 72 petaFLOPS of training and 144 petaFLOPS of inference performance.
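As a rough back-of-the-envelope check, the system-level figures quoted above can be divided by the GPU count to get a per-GPU number. The sketch below assumes the 8-GPU configuration and an even split across GPUs. The numeric precision behind each figure is not stated in this post, so the results are only indicative.

```python
# Figures quoted in the text for a single DGX B200 system.
GPUS_PER_SYSTEM = 8  # assumption: the 8-GPU configuration
TRAINING_PFLOPS = 72.0
INFERENCE_PFLOPS = 144.0


def per_gpu(system_pflops: float, gpus: int = GPUS_PER_SYSTEM) -> float:
    """Evenly divide a system-level petaFLOPS figure across its GPUs."""
    return system_pflops / gpus


training_per_gpu = per_gpu(TRAINING_PFLOPS)
inference_per_gpu = per_gpu(INFERENCE_PFLOPS)
inference_to_training = INFERENCE_PFLOPS / TRAINING_PFLOPS

print(f"training:  {training_per_gpu:.1f} petaFLOPS per GPU")
print(f"inference: {inference_per_gpu:.1f} petaFLOPS per GPU")
print(f"inference/training ratio: {inference_to_training:.1f}x")
```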

Key Features of DGX

AI Software Integration: DGX systems come pre-installed with Nvidia’s AI software stack, making them ready for immediate deployment.

High Performance: With up to 8 Tensor Core GPUs, DGX systems provide top-tier computational power for AI and HPC tasks.

Scalability: Solutions like the DGX SuperPOD integrate multiple DGX systems to form extensive data center configurations.

Current Offerings

The company currently offers both Hopper-based (DGX H100) and Blackwell-based (DGX B200) systems optimized for AI workloads. Customers can go a step further with solutions like the DGX SuperPOD (with DGX GB200 systems) that integrates 36 liquid-cooled Nvidia GB200 Grace Blackwell Superchips, comprising 36 Nvidia Grace CPUs and 72 Blackwell GPUs. This monstrous setup includes multiple racks connected through Nvidia Quantum InfiniBand, allowing companies to scale to thousands of GB200 Superchips.

Legacy and Evolution

Nvidia has been selling DGX systems for quite some time now, from the DGX-1 dating back to 2016 to modern DGX B200-based systems. From the Pascal and Volta generations to the Ampere, Hopper, and Blackwell generations, Nvidia’s enterprise HPC business has pioneered numerous innovations and helped give rise to its customizable platform, Nvidia HGX.

Nvidia HGX: For Businesses That Need More

Build Your Own Supercomputer

For OEMs looking for custom supercomputing solutions, Nvidia HGX offers the same peak performance as its Hopper and Blackwell-based DGX systems but allows OEMs to tweak it as needed. For instance, customers can modify the CPUs, RAM, storage, and networking configuration as they please. Nvidia HGX is actually the same baseboard used in Nvidia DGX systems, built to Nvidia’s own standard.

Key Features of HGX

Customization: OEMs have the freedom to modify components such as CPUs, RAM, and storage to suit specific requirements.

Flexibility: HGX allows for a modular approach to building AI and HPC solutions, giving enterprises the ability to scale and adapt.

Performance: Nvidia offers HGX in x4 and x8 GPU configurations, with the latest Blackwell-based baseboards only available in the x8 configuration. An HGX B200 system can deliver up to 144 petaFLOPS of performance.

Applications and Use Cases

HGX is designed for enterprises that need high-performance computing solutions but also want the flexibility to customize their systems. It’s ideal for businesses that require scalable AI infrastructure tailored to specific needs, from deep learning and data analytics to large-scale simulations.

Nvidia DGX vs. HGX: Summary

Simplicity vs. Flexibility

While Nvidia DGX represents Nvidia’s line of standardized, unified, and integrated supercomputing solutions, Nvidia HGX unlocks greater customization and flexibility for OEMs to offer more to enterprise customers.

Rapid Deployment vs. Custom Solutions

With Nvidia DGX, the company leans more into cluster solutions that integrate multiple DGX systems into huge and, in the case of the DGX SuperPOD, multi-million-dollar data center solutions. Nvidia HGX, on the other hand, is another way of selling HPC hardware to OEMs at a greater profit margin.

Unified vs. Modular

Nvidia DGX brings rapid deployment and a seamless, hassle-free setup for bigger enterprises. Nvidia HGX provides modular solutions and greater access to the wider industry.

FAQs

What is the primary difference between Nvidia DGX and HGX?

The primary difference lies in customization. DGX offers a standardized, integrated solution ready for deployment, while HGX provides a customizable platform that OEMs can adapt to specific needs.

Which platform is better for rapid deployment?

Nvidia DGX is better suited for rapid deployment as it comes pre-integrated with Nvidia’s AI software stack and requires minimal setup.

Can HGX be used for scalable AI infrastructure?

Yes, Nvidia HGX is designed for scalable AI infrastructure, offering flexibility to customize and expand as per business requirements.

Are DGX and HGX systems compatible with all AI software?

Both DGX and HGX systems are compatible with Nvidia’s AI software stack, which supports a wide range of AI applications and frameworks.

Final Thoughts

Choosing between Nvidia DGX and HGX ultimately depends on your enterprise’s needs. If you require a turnkey solution with rapid deployment, DGX is your go-to. However, if customization and scalability are your top priorities, HGX offers the flexibility to tailor your HPC system to your specific requirements.

Muhammad Hussnain


Opera collaborates with Google Cloud to power its browser AI with Gemini Models

Opera, the browser innovator, has announced a collaboration with Google Cloud to integrate Gemini models into its Aria browser AI. Aria is powered by Opera's multi-LLM Composer AI engine, which allows the Norwegian company to curate the best experiences for its users based on their needs.

Opera's Aria browser AI is unique as it doesn't just utilize one provider or LLM. Opera's Composer AI engine processes the user's intent and can decide which model to use for which task. Google's Gemini model is a modern, powerful, and user-friendly LLM that is the company's most capable model yet. Thanks to this integration, Opera will now be able to provide its users with the most current information, at high performance.

"Our companies have been cooperating for more than 20 years. We are excited to be announcing the deepening of this collaboration into the field of generative AI to further power our suite of browser AI services," said Per Wetterdal, EVP Partnerships at Opera.

"We're happy to elevate our long standing cooperation with Opera by powering its AI innovation within the browser space," said Eva Fors, Managing Director, Google Cloud Nordic Region.

Opera has been tapping into the potential of browser AI for more than a year now. Currently, all of its flagship browsers and its gaming browser, Opera GX, provide access to the new browser AI. Opera also recently opened a green energy-powered AI data cluster in Iceland with NVIDIA DGX supercomputing in order to be able to quickly expand its AI program and host the computing it requires in its own facility. To stay at the forefront of innovation, the company also recently announced its AI Feature Drops program, which allows early adopters to test its newest, experimental AI innovations in the Opera One Developer version of the browser.
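The post does not describe how the Composer AI engine decides which model handles a request. As a loose illustration of the general idea of intent-based model selection, the sketch below maps a request to a model label with naive keyword matching. The intents, keywords, model labels, and function names are all hypothetical placeholders, not Opera's actual configuration or API.

```python
# Hypothetical intent-to-model table; the model labels are placeholders,
# not Opera's real configuration.
MODEL_FOR_INTENT = {
    "image": "image-generation-model",
    "speech": "text-to-audio-model",
    "chat": "general-purpose-llm",
}

# Naive keyword matching stands in for real intent detection.
INTENT_KEYWORDS = {
    "image": ("draw", "picture", "image"),
    "speech": ("read aloud", "say this", "speak"),
}


def detect_intent(request: str) -> str:
    """Return the first intent whose keywords appear in the request."""
    text = request.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return "chat"  # default when no keyword matches


def choose_model(request: str) -> str:
    """Map a user request to the label of the model that should serve it."""
    return MODEL_FOR_INTENT[detect_intent(request)]


print(choose_model("Draw a picture of a fjord"))
print(choose_model("Please read aloud the answer"))
print(choose_model("What is a DPU?"))
```

A real engine would most likely use a classifier or an LLM to infer intent; the lookup table only shows where such a decision slots into the request path.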
Image generation and voice output in Aria powered by Google Cloud

The newest AI Feature Drop is a result of the collaboration with Google Cloud: as of today, Aria, in Opera One Developer, provides free image generation capabilities by utilizing the Imagen 2 model on Vertex AI. Starting with this feature drop, Opera's AI will be able to read out responses in a conversational fashion. This is thanks to Google's ground-breaking text-to-audio model.

"We believe the future of AI will be open, so we're providing access to the best of Google's infrastructure, AI products, platforms and foundation models to empower organizations to chart their course with generative AI," added Fors.

0 notes

Text

NVIDIA Q1 2025 Earnings Report

NVIDIA has reported its financial results for the first quarter of Fiscal 2025, showcasing impressive revenue growth and a strong market position. The announcement underscores the company's leadership in the technology sector and its continuous innovation across various domains.

Financial Highlights

Record Revenue Growth

NVIDIA reported revenue of $8.29 billion for Q1 Fiscal 2025, a significant increase compared to the previous year. This growth was driven by strong demand across all market segments, particularly in data centers and gaming.

- Data Centers: Revenue from data centers surged by 35%, reaching $3.75 billion. The rise is attributed to the growing adoption of AI and cloud computing solutions.
- Gaming: The gaming segment generated $2.75 billion, marking a 25% increase year-over-year, fueled by high demand for NVIDIA's GeForce GPUs.

Net Income and Earnings Per Share

NVIDIA's net income for the quarter was $2.05 billion, translating to earnings per share (EPS) of $1.32. This performance reflects the company's efficient cost management and strategic investments in high-growth areas.

Key Drivers of Growth

AI and Machine Learning

NVIDIA's advancements in AI and machine learning have significantly contributed to its financial success. The company's GPUs are widely used in AI applications, enhancing their performance and efficiency.

- AI Innovations: NVIDIA's AI platforms, including the NVIDIA DGX systems and the NVIDIA AI Enterprise software suite, have seen increased adoption across various industries.
- Partnerships: Strategic partnerships with leading tech companies have bolstered NVIDIA's position in the AI market, facilitating broader deployment of its technologies.

Gaming and Graphics

The gaming industry remains a core driver of NVIDIA's revenue growth. The introduction of the GeForce RTX 40 Series has been particularly successful, capturing the attention of both gamers and professionals.

- GeForce RTX 40 Series: This new line of GPUs offers enhanced performance and graphics capabilities, driving higher sales and market penetration.
- Esports and Streaming: The rise of esports and game streaming platforms has also contributed to the increased demand for high-performance GPUs.

Strategic Initiatives

Expanding into New Markets

NVIDIA is actively exploring new markets to sustain its growth trajectory. The company's initiatives in automotive technology and edge computing are expected to yield significant results.

- Automotive Technology: NVIDIA's DRIVE platform continues to gain traction in the autonomous vehicle industry, with new partnerships and collaborations expanding its reach.
- Edge Computing: Investments in edge computing technologies are positioning NVIDIA to capitalize on the growing need for decentralized computing power.

Sustainability Efforts

NVIDIA is committed to sustainability and has implemented several initiatives to reduce its environmental impact.

- Green Computing: The company is focusing on developing energy-efficient GPUs and promoting green computing practices across the industry.
- Sustainable Operations: NVIDIA's facilities and operations are increasingly adopting renewable energy sources and sustainable practices.

Market Outlook and Future Prospects

Positive Market Sentiment

The market outlook for NVIDIA remains positive, with analysts forecasting continued growth in the coming quarters. The company's strong financial performance and strategic initiatives position it well for future success.

- Analyst Predictions: Industry analysts predict a continued upward trend in NVIDIA's revenue, driven by its leadership in AI and gaming.
- Investment in R&D: NVIDIA's commitment to research and development ensures it remains at the forefront of technological innovation.

Overview

NVIDIA's first-quarter financial results for Fiscal 2025 highlight its strong market position and impressive revenue growth. With a focus on AI, gaming, and sustainability, NVIDIA is well-positioned to continue its success and drive innovation in the tech industry.

NVIDIA Financial Highlights for Q1 Fiscal 2025

| Metric | Q1 Fiscal 2025 | Year-over-Year Change |
| --- | --- | --- |
| Revenue | $8.29 billion | +30% |
| Data Center Revenue | $3.75 billion | +35% |
| Gaming Revenue | $2.75 billion | +25% |
| Net Income | $2.05 billion | +28% |
| Earnings Per Share | $1.32 | +26% |

Key Financial Metrics

| Metric | Q1 Fiscal 2025 | Q1 Fiscal 2024 | Year-over-Year Change |
| --- | --- | --- | --- |
| Gross Margin | 64.8% | 63.5% | +1.3 percentage points |
| Operating Expenses | $1.95 billion | $1.70 billion | +14.7% |
| Operating Income | $3.70 billion | $2.90 billion | +27.6% |
| Net Income | $2.05 billion | $1.60 billion | +28.1% |
| Earnings Per Share (EPS) | $1.32 | $1.05 | +25.7% |

NVIDIA's AI and Machine Learning Platforms Performance

| Platform | Q1 Fiscal 2025 Revenue | Year-over-Year Change |
| --- | --- | --- |
| NVIDIA DGX Systems | $1.20 billion | +40% |
| NVIDIA AI Enterprise | $0.80 billion | +35% |
| Partnership Revenue (AI/ML) | $1.00 billion | +38% |
| Total AI/ML Revenue | $3.00 billion | +37.5% |

NVIDIA's consistent growth and strategic initiatives underscore its position as a leader in the technology sector, poised for continued success in the years to come.

Sources: THX News & NVIDIA.
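The year-over-year column of the Key Financial Metrics table can be rechecked from the two quarterly columns. The short Python sketch below does that arithmetic using only the figures quoted in this post. The function name `yoy_change` and the dictionary layout are illustrative choices, not anything from NVIDIA or THX News.

```python
# Q1 FY2025 and Q1 FY2024 values copied from the Key Financial Metrics table.
metrics = {
    "Operating Expenses ($B)": (1.95, 1.70),
    "Operating Income ($B)": (3.70, 2.90),
    "Net Income ($B)": (2.05, 1.60),
    "EPS ($)": (1.32, 1.05),
}


def yoy_change(current, prior):
    """Relative year-over-year change, expressed in percent."""
    return (current - prior) / prior * 100


for name, (current, prior) in metrics.items():
    print(f"{name}: {yoy_change(current, prior):+.1f}%")

# Gross margin is already a percentage, so its change is a simple
# difference in percentage points rather than a relative change.
gross_margin_delta = 64.8 - 63.5
print(f"Gross Margin: {gross_margin_delta:+.1f} percentage points")
```

The relative changes computed this way agree with the table to one decimal place, and the gross margin line shows why that row is better read as a change of 1.3 percentage points than as a 1.3% increase.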

#datacentergrowth#dividendincrease#generativeAIdemand#NVIDIAfinancialresults#NVIDIAQ12025earnings#Q12025revenue#thxnews

0 notes

Text

Exploring the Key Differences: NVIDIA DGX vs NVIDIA HGX Systems

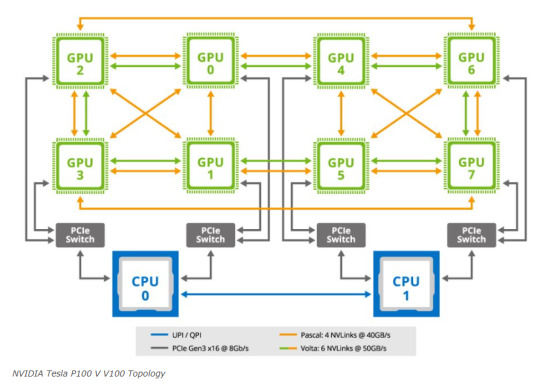

A frequent topic of inquiry we encounter involves understanding the distinctions between the NVIDIA DGX and NVIDIA HGX platforms. Despite the resemblance in their names, these platforms represent distinct approaches NVIDIA employs to market its 8x GPU systems featuring NVLink technology. The shift in NVIDIA’s business strategy was notably evident during the transition from the NVIDIA P100 “Pascal” to the V100 “Volta” generations. This period marked the significant rise in prominence of the HGX model, a trend that has continued through the A100 “Ampere” and H100 “Hopper” generations.

NVIDIA DGX versus NVIDIA HGX What is the Difference

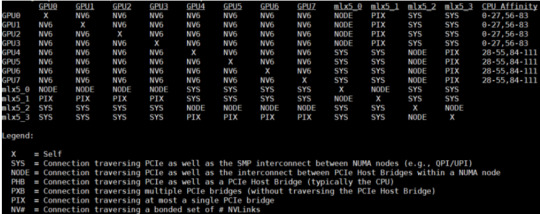

Focusing primarily on the 8x GPU configurations that utilize NVLink, NVIDIA’s product lineup includes the DGX and HGX lines. While there are other models like the 4x GPU Redstone and Redstone Next, the flagship DGX/HGX (Next) series predominantly features 8x GPU platforms with SXM architecture. To understand these systems better, let’s delve into the process of building an 8x GPU system based on the NVIDIA Tesla P100 with SXM2 configuration.

DeepLearning12 Initial Gear Load Out

Each server manufacturer designs and builds a unique baseboard to accommodate GPUs. NVIDIA provides the GPUs in the SXM form factor, which are then integrated into servers by either the server manufacturers themselves or by a third party like STH.

DeepLearning12 Half Heatsinks Installed 800

This task proved to be quite challenging. We encountered an issue with a prominent server manufacturer based in Texas, where they had applied an excessively thick layer of thermal paste on the heatsinks. This resulted in damage to several trays of GPUs, with many experiencing cracks. This experience led us to create one of our initial videos, aptly titled “The Challenges of SXM2 Installation.” The difficulty primarily arose from the stringent torque specifications required during the GPU installation process.

NVIDIA Tesla P100 V V100 Topology

During this development, NVIDIA established a standard for the 8x SXM GPU platform. This standardization incorporated Broadcom PCIe switches, initially for host connectivity, and subsequently expanded to include InfiniBand connectivity.

Microsoft HGX 1 Topology

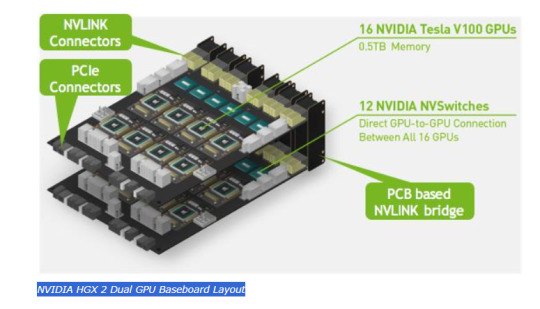

It also added NVSwitch. NVSwitch was a switch for the NVLink fabric that allowed higher performance communication between GPUs. Originally, NVIDIA had the idea that it could take two of these standardized boards and put them together with this larger switch fabric. The impact, though, was that now the NVIDIA GPU-to-GPU communication would occur on NVIDIA NVSwitch silicon and PCIe would have a standardized topology. HGX was born.
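On a running system, the split described above (GPU-to-GPU traffic over NVLink/NVSwitch, everything else over PCIe) can be inspected with the `nvidia-smi topo -m` command, which prints a matrix of how each pair of devices is connected. The following is a minimal Python sketch that wraps that command. The helper name `gpu_topology_matrix` is hypothetical, and the sketch assumes only that the NVIDIA driver tools may or may not be installed on the machine.

```python
import shutil
import subprocess


def gpu_topology_matrix():
    """Return the text printed by `nvidia-smi topo -m`, or None if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None  # NVIDIA driver tools are not installed on this machine
    try:
        result = subprocess.run(
            ["nvidia-smi", "topo", "-m"],
            capture_output=True,
            text=True,
            check=False,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    return result.stdout if result.returncode == 0 else None


if __name__ == "__main__":
    matrix = gpu_topology_matrix()
    print(matrix if matrix is not None else "nvidia-smi not available")
```

In the printed matrix, entries beginning with `NV` mark GPU pairs linked by NVLink, while entries such as `PIX`, `PXB`, `PHB`, and `SYS` describe PCIe paths of increasing distance.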

NVIDIA HGX 2 Dual GPU Baseboard Layout

Let’s delve into a comparison of the NVIDIA V100 setup in a server from 2020, renowned for its standout color scheme, particularly in the NVIDIA SXM coolers. When contrasting this with the earlier P100 version, an interesting detail emerges. In the Gigabyte server that housed the P100, one could notice that the SXM2 heatsinks were without branding. This marked a significant shift in NVIDIA’s approach. With the advent of the NVSwitch baseboard equipped with SXM3 sockets, NVIDIA upped its game by integrating not just the sockets but also the GPUs and their cooling systems directly. This move represented a notable advancement in their hardware design strategy.

Consequences

The consequences of this development were significant. Server manufacturers now had the option to acquire an 8-GPU module directly from NVIDIA, eliminating the need to apply excessive thermal paste to the GPUs. This change marked the inception of the NVIDIA HGX topology. It allowed server vendors the flexibility to customize the surrounding hardware as they desired. They could select their preferred specifications for RAM, CPUs, storage, and other components, while adhering to the predetermined GPU configuration determined by the NVIDIA HGX baseboard.

Inspur NF5488M5 Nvidia Smi Topology

This was very successful. In the next generation, the NVSwitch heatsinks got larger, the GPUs lost a great paint job, but we got the NVIDIA A100. The codename for this baseboard is “Delta”. Officially, this board was called the NVIDIA HGX A100.

Inspur NF5488A5 NVIDIA HGX A100 8 GPU Assembly 8x A100 And NVSwitch Heatsinks Side 2

NVIDIA, along with its OEM partners and clients, recognized that increased power could enable the same quantity of GPUs to perform additional tasks. However, this enhancement came with a drawback: higher power consumption led to greater heat generation. This development prompted the introduction of liquid-cooled NVIDIA HGX A100 “Delta” platforms to efficiently manage this heat issue.

Supermicro Liquid Cooling Supermicro

The HGX A100 assembly was initially introduced with NVIDIA's own distinctively designed air-cooling heatsinks.

In the newest “Hopper” series, the cooling systems were upscaled to manage the increased demands of the more powerful GPUs and the enhanced NVSwitch architecture. This upgrade is exemplified in the NVIDIA HGX H100 platform, also known as “Delta Next”.

NVIDIA DGX H100

NVIDIA’s DGX and HGX platforms represent cutting-edge GPU technology, each serving distinct needs in the industry. The DGX series, evolving since the P100 days, integrates HGX baseboards into comprehensive server solutions. Notable examples include the V100-based DGX systems and the DGX A100. These systems, built by a rotating set of OEMs, offer fixed configurations, ensuring consistent, high-quality performance.

While the DGX H100 sets a high standard, the HGX H100 platform caters to clients seeking customization. It allows OEMs to tailor systems to specific requirements, offering variations in CPU types (including AMD or ARM), Xeon SKU levels, memory, storage, and network interfaces. This flexibility makes HGX ideal for diverse, specialized applications in GPU computing.

Conclusion

NVIDIA’s HGX baseboards streamline the process of integrating 8 GPUs with advanced NVLink and PCIe switched fabric technologies. This innovation allows NVIDIA’s OEM partners to create tailored solutions, giving NVIDIA the flexibility to price HGX boards with higher margins. The HGX platform is primarily focused on providing a robust foundation for custom configurations.

In contrast, NVIDIA’s DGX approach targets the development of high-value AI clusters and their associated ecosystems. The DGX brand, distinct from the DGX Station, represents NVIDIA’s comprehensive systems solution.

Particularly noteworthy are the NVIDIA HGX A100 and HGX H100 models, which have garnered significant attention following their adoption for leading AI projects such as OpenAI's ChatGPT. These platforms demonstrate the capabilities of the 8x NVIDIA A100 setup in powering advanced AI tools. For those interested in the various HGX A100 configurations and their role in AI development, the hardware behind ChatGPT is a useful illustration of what the 8x NVIDIA A100 platform can do.

M.Hussnain

#nvidia#nvidia dgx h100#nvidia hgx#DGX#HGX#Nvidia HGX A100#Nvidia HGX H100#Nvidia H100#Nvidia A100#Nvidia DGX H100#viperatech

0 notes

Text

Crazy AI News of the Week: GPT-5 Goes Official, Nvidia's AI Chip, Global Illumination, and AI Cars!

Introducing GPTBot: OpenAI's Specialized Program

Enhancing AI Capabilities with Up-to-Date Information

GPTBot is OpenAI's web crawler, a program that gathers information from across the Internet. Unlike ChatGPT, which has a knowledge cut-off date, GPTBot is described as delivering real-time data and information as users generate content. OpenAI has many upcoming models, including GPT-5, and it continues to evolve existing models like GPT-4. GPTBot is programmed to avoid infringing on privacy or accessing content behind paywalls, which keeps the collected material appropriate and accessible for training purposes.

OpenAI Acquires Global Illumination: Enhancing Immersive Experiences

Realistic Lighting and Visual Effects in Virtual Environments

OpenAI's recent acquisition of Global Illumination is a significant move towards enhancing the virtual realism and immersive experience of AI applications. Global Illumination is well-known for its expertise in creating realistic lighting and visual effects, particularly in virtual environments like video games. In fact, the company has already developed an impressive open-source clone of Minecraft, showcasing its capabilities. By incorporating this company and its technology, OpenAI aims to create a more engaging virtual world for AI applications. This can greatly improve the effectiveness of AI models in learning from diverse and complex scenarios, particularly in virtual training environments.

Nvidia Partners with Hugging Face: Empowering Developers

Generative AI Supercomputing for Advanced Applications

Nvidia's partnership with Hugging Face brings its DGX Cloud product into the ecosystem, empowering millions of developers with generative AI supercomputing technology. One of the biggest challenges for developers is the scarcity and high cost of graphics cards. With this integration, developers can access Nvidia's DGX Cloud AI supercomputing within the Hugging Face platform, making it easier and more efficient to develop AI applications and larger models. This collaboration aims to supercharge the adoption of generative AI in various industries, including intelligent chatbots, search, and summarization.

SIGGRAPH Conference: Nvidia Introduces Groundbreaking AI Products

GH200 Chip for Handling Terabyte-Class Models

Nvidia's presence at the SIGGRAPH conference brought exciting announcements and innovations to the world of AI. One product that stood out is its new chip, the GH200. The chip is designed to handle terabyte-class models; in Nvidia's DGX GH200 system, 256 of these chips share 144 terabytes of memory. Its linear scalability makes it well suited to giant AI projects and models. These advancements showcase the continuous growth and innovation in the field of AI.

Cruise: Self-Driving Vehicles for Autonomous Transportation

A Step Forward in Advancing Self-Driving Technology

Cruise, a company dedicated to autonomous transportation, has officially introduced its self-driving vehicles. This step aligns with Cruise's larger objective of extending a robo-taxi service to various cities. The company has already started testing these vehicles in Atlanta and has plans for data gathering and testing efforts across the nation. Cruise is committed to advancing the development and deployment of its self-driving technology.

Stability AI Introduces StableCode for Coding Needs

A Language Model Designed to Assist Programmers

Stability AI has announced StableCode, its first large language model generative AI product designed specifically for coding needs. The product aims to assist programmers with their daily work while also serving as a learning tool for new developers. According to OpenAI's HumanEval benchmark, StableCode outperforms other coding language models such as StarCoderBase and Replit's code model.

Alibaba's Open-Source Language Model: Qwen

A Competitor to ChatGPT

Alibaba recently open-sourced its own large language model, Qwen, positioning itself as a competitor to ChatGPT. By making Qwen accessible to third-party developers, Alibaba enables them to build their own AI applications without training their systems from scratch. This strategic move puts Alibaba in direct competition with Meta and poses a challenge to ChatGPT. Developers can find more information about Qwen in a video by Ben Mellor, which covers the model, datasets, and access methods.

Join the AI Community and Stay Updated

Subscribe, Follow, and Engage

If you want to stay up-to-date with the latest AI trends, make sure to follow the World of AI on Twitter and subscribe to their YouTube channel. Turning on notifications will ensure you never miss new videos. Additionally, joining their Patreon community provides access to a vibrant Discord community, where you can engage with like-minded individuals, get the latest partnership and networking opportunities, and stay informed about the latest AI news. Sharing the content and subscribing to the channel helps support the World of AI and allows them to continue creating valuable and informative videos.

Conclusion

These recent developments in the world of AI are truly exciting. From OpenAI's GPTBot, to Nvidia's partnership with Hugging Face empowering developers with generative AI supercomputing technology, to Stability AI's introduction of StableCode for coding needs, the AI landscape continues to evolve. Cruise's self-driving vehicles and Alibaba's open-source language model Qwen further contribute to the advancement of autonomous transportation and the accessibility of AI applications. The future of AI looks promising, and staying connected with the AI community keeps you close to these advancements.

Thank you for taking the time to read this article! If you found it interesting and would like to stay updated with more content, we would love for you to follow our blog. You can do so by subscribing to our email newsletter, liking our Facebook fan page, or subscribing to our YouTube channel. By doing this, you'll be the first to know about our latest articles, videos, and other exciting updates. We appreciate your support and hope to see you there!

Frequently Asked Questions

1. What is the GPTBot introduced by OpenAI? GPTBot is a specialized program by OpenAI that gathers up-to-date information from across the Internet, described as providing real-time data and information when generating text or content using ChatGPT.
2. How does the acquisition of Global Illumination by OpenAI impact AI applications? The acquisition aims to enhance the virtual realism and immersive experience of AI applications, potentially improving realism for virtual training environments and making AI models more effective at learning from diverse and complex scenarios.
3. What is the partnership between Nvidia and Hugging Face about? Nvidia's DGX Cloud product is being integrated into the Hugging Face ecosystem, giving developers generative AI supercomputing for larger models and advanced AI applications, and making development on Hugging Face easier and more efficient.
4. What is the GH200 chip released by Nvidia? The GH200 chip is designed to handle terabyte-class models for generative AI; in the DGX GH200 system, 256 of these chips share 144 terabytes of memory with linear scalability.
5. What is StableCode by Stability AI? StableCode is Stability AI's first large language model generative AI product specifically designed for coding needs. It assists programmers in their daily work and provides a learning tool for new developers. StableCode has been benchmarked as outperforming other coding language models.

0 notes

Text

NVIDIA BioNeMo Enables Generative AI for Drug Discovery on AWS

Pharma and techbio companies can access the NVIDIA Clara healthcare suite, including BioNeMo, now via Amazon SageMaker and AWS ParallelCluster, and the NVIDIA DGX Cloud on AWS.

November 28, 2023 - Leading pharmaceutical and biotech companies' researchers and developers can now easily deploy NVIDIA Clara software and services for accelerated healthcare via Amazon Web Services. The initiative, announced today at AWS re:Invent, allows healthcare and life sciences developers who use AWS cloud resources to integrate NVIDIA-accelerated offerings such as NVIDIA BioNeMo, a generative AI platform for drug discovery. BioNeMo is coming to NVIDIA DGX Cloud on AWS and is currently available via the AWS ParallelCluster cluster management tool for high-performance computing and the Amazon SageMaker machine learning service.

AWS is used by thousands of healthcare and life sciences companies worldwide. They can now use BioNeMo to build or customize digital biology foundation models with proprietary data, scaling up model training and deployment on AWS using NVIDIA GPU-accelerated cloud servers. Alchemab Therapeutics, Basecamp Research, Character Biosciences, Evozyne, Etcembly, and LabGenius are among the AWS users who have already started using BioNeMo for generative AI-accelerated drug discovery and development. This collaboration provides them with additional options for rapidly scaling up cloud computing resources for developing generative AI models trained on biomolecular data.

This announcement extends NVIDIA’s existing healthcare-focused offerings available on AWS: NVIDIA MONAI for medical imaging workflows and NVIDIA Parabricks for accelerated genomics.

New to AWS: NVIDIA BioNeMo Advances Generative AI for Drug Discovery

BioNeMo is a domain-specific framework for digital biology generative AI, including pretrained large language models (LLMs), data loaders, and optimized training recipes that can help advance computer-aided drug discovery by speeding target identification, protein structure prediction, and drug candidate screening. Drug discovery teams can use their proprietary data to build or optimize models with BioNeMo and run them on cloud-based high-performance computing clusters.

One of these models, ESM-2, a powerful LLM that supports protein structure prediction, achieves almost linear scaling on 256 NVIDIA H100 Tensor Core GPUs. Researchers can scale to 512 H100 GPUs to complete training in a few days instead of a month, the training time published in the original paper. Developers can train ESM-2 at scale using checkpoints of 650 million or 3 billion parameters. Additional AI models supported in the BioNeMo training framework include small-molecule generative model MegaMolBART and protein sequence generation model ProtT5.

BioNeMo’s pretrained models and optimized training recipes (available using self-managed services like AWS ParallelCluster and Amazon ECS as well as integrated, managed services through NVIDIA DGX Cloud and Amazon SageMaker) can help R&D teams build foundation models that can explore more drug candidates, optimize wet lab experimentation and find promising clinical candidates faster.

Also Available on AWS: NVIDIA Clara for Medical Imaging and Genomics

Project MONAI, cofounded and enterprise-supported by NVIDIA to support medical imaging workflows, has been downloaded more than 1.8 million times and is available for deployment on AWS. Developers can harness their proprietary healthcare datasets already stored on AWS cloud resources to rapidly annotate and build AI models for medical imaging. These models, trained on NVIDIA GPU-powered Amazon EC2 instances, can be used for interactive annotation and fine-tuning for segmentation, classification, registration, and detection tasks in medical imaging. Developers can also harness the MRI image synthesis models available in MONAI to augment training datasets.

To accelerate genomics pipelines, Parabricks enables variant calling on a whole human genome in around 15 minutes, compared to a day on a CPU-only system. On AWS, developers can quickly scale up to process large amounts of genomic data across multiple GPU nodes. More than a dozen Parabricks workflows are available on AWS HealthOmics as Ready2Run workflows, which enable customers to easily run pre-built pipelines.

0 notes

Text

Unveiling the Future of AI: Key Takeaways from This Week’s Top AI Conferences

Hello there! This is Paul, and today I’m bringing you the most noteworthy updates from the tech world. We’ve had an exhilarating week in the field of Artificial Intelligence, with two major conferences, SIGGRAPH and Ai4, unfolding simultaneously. So, let’s sink our teeth into the key highlights.

First off, let’s talk about SIGGRAPH, a conference that showcases the latest breakthroughs in computer graphics. Here, AI shone brightly with NVIDIA’s CEO, Jensen Huang, unveiling their next-gen GH200 Grace Hopper superchip. This powerhouse is engineered to handle the most complex generative AI workloads.

But that’s not all! NVIDIA also launched AI Workbench, a toolset designed to make model training as smooth as spreading butter on toast. The company also announced a collaboration with Hugging Face to give developers access to NVIDIA’s DGX Cloud AI supercomputing. And let’s not forget the introduction of new Omniverse Cloud APIs and a large language model called ChatUSD.

Switching gears to the Ai4 conference in Las Vegas, the spotlight was on responsible AI, consumer-level AI for movies, and the organization of businesses for AI. A crucial takeaway was the need for more women to step into leadership roles in AI.

In other news, Amazon is reportedly testing generative AI tools for sellers. This feature could transform a simple product description into an engaging narrative, potentially boosting sales. Zoom also clarified its stance on data usage, assuring users that their audio, video, or chat content will not be used to train artificial intelligence models without consent.

Furthermore, Leonardo AI launched an iOS app for AI art generation, and Wirestock released a Discord bot that simplifies uploading images to stock photo sites. OpenAI made headlines with its web crawler, GPTBot. Lastly, Anthropic revealed an improved version of its entry-level LLM, Claude Instant 1.2.

To wrap it up, the realm of AI this week was buzzing like a beehive - from major conferences to platform updates to ethical discussions. As we continue to explore and shape this fascinating landscape together, remember that every development enhances our collective knowledge and capabilities.

So, stay tuned for more exciting updates and always keep innovating! Paul

0 notes

Text

NVIDIA DGX SuperPOD In Dell PowerScale Storage offer Gen AI

Boost Productivity: NVIDIA DGX SuperPOD Certified PowerScale Storage.

Dell PowerScale storage

NVIDIA DGX SuperPOD with Dell PowerScale storage provides a foundation for generative AI. As the technology advances quickly, AI is reshaping many businesses, and generative AI lets computers synthesize content and construct meaning. At the NVIDIA GTC global AI conference, Dell PowerScale file storage became the first Ethernet-based storage certified for NVIDIA DGX SuperPOD. With this technology, organizations can get more out of their AI-enabled applications.


DGX SuperPODs

The Development of Generative AI

Generative AI, which has transformed technology, allows machines to generate, copy, and learn from patterns in enormous datasets without human input. It could transform healthcare, industry, banking, and entertainment. Handling the huge data sets that AI applications require creates an extraordinary need for advanced storage solutions.

Accreditation

Dell has a long record of providing solutions for enterprise needs. According to Dell, PowerScale is the first Ethernet-based storage solution certified for NVIDIA DGX SuperPOD, which broadens the storage options available for these systems. By simplifying AI infrastructure, this certification helps enterprises get more from their AI initiatives.

“The world’s first Ethernet storage certification for NVIDIA DGX SuperPOD with Dell PowerScale combines Dell’s industry-leading storage and NVIDIA’s AI supercomputing systems, empowering organizations to unlock AI’s full potential, drive breakthroughs, and achieve the seemingly impossible,” says Martin Glynn, senior director of product management at Dell Technologies. “With Dell PowerScale’s certification as the first Ethernet storage to work with NVIDIA DGX SuperPOD, enterprises can create scalable AI infrastructure with greater flexibility.”

PowerScale Storage

Exceptional Performance for Next-Generation Tasks with PowerScale

Due to its remarkable scalability, performance, and security, which are based on more than ten years of expertise, Dell PowerScale has earned respect and acclaim. With its NVIDIA DGX SuperPOD certification, Dell’s storage offering is even more robust for businesses looking to use generative AI.


This is how PowerScale differs:

Improved access to the network: Natively integrated technologies such as NVIDIA Magnum IO, GPUDirect Storage, and NFS over RDMA, running on NVIDIA ConnectX NICs and NVIDIA Spectrum switches, speed up network access to storage. By significantly reducing data transfer times to and from PowerScale storage, these capabilities deliver higher storage throughput for workloads like AI training, checkpointing, and inferencing.

Achieving peak performance: A new Multipath Client Driver from Dell PowerScale increases data throughput and enables businesses to meet DGX SuperPOD's high performance requirements. With this functionality, companies can quickly train AI models and run inference, allowing for smooth integration with the NVIDIA DGX platform.

Flexibility: Because of PowerScale’s innovative architecture, organizations can easily scale by only adding more nodes, giving them unmatched agility. Because of this flexibility, businesses can develop and adjust their storage infrastructure in tandem with their increasing AI workloads, preventing bottlenecks for even the most demanding AI use cases.

Security on a federal level: PowerScale has been approved for the U.S. Department of Defense Approved Products List due to its security capabilities. Completing this demanding process strengthens the safety of vital data assets and highlights PowerScale’s suitability for mission-critical applications.

Efficiency: PowerScale's architecture is designed to maximize efficiency. It optimizes performance while minimizing power consumption, which reduces operating expenses and environmental impact.

NVIDIA DGX SuperPOD

Dell created a reference architecture to speed up the implementation of PowerScale with NVIDIA DGX SuperPOD solutions. The document provides examples of how PowerScale and NVIDIA DGX SuperPOD work together seamlessly.

The certification of Dell PowerScale as the world’s first Ethernet-based storage solution approved for NVIDIA DGX SuperPOD marks an important turning point in the quickly changing field of artificial intelligence. Thanks to Dell and NVIDIA’s partnership, organizations can now run generative AI with both scalability and performance. By using these technologies, businesses can make full use of AI and transform their operations.

Read more on govindhtech.com

#NVIDIADGX#SuperPOD#DellPowerScale#GenAI#GenerativeAI#DellTechnologies#generativeAI#AIworkloads#NVIDIA#dell#NVIDIADGXSuperPOD#PowerScaleStorage#technology#technews#news#govindhtech

0 notes

Text

Nvidia AI Summit Insights - Stock Trends

Join the newsletter: https://avocode.digital/newsletter/

As the AI revolution continues to shape the landscape of technology and investment, Nvidia stands at the forefront, commanding attention with its innovative breakthroughs in artificial intelligence. During the recently concluded Nvidia AI Summit, investors and tech enthusiasts alike reflected on the company’s advancements and how they might influence its stock performance in the coming months. This article delves into key insights from the summit and examines potential stock trends.

The Power of Nvidia's AI Summit

The Nvidia AI Summit serves as a pivotal event, showcasing cutting-edge advancements in artificial intelligence and computational technology. This year, the summit highlighted several groundbreaking developments:

1. Transformative AI Technologies

Nvidia has been a game-changer in the AI industry, propelling advances across sectors such as autonomous vehicles, healthcare, and data centers. Major highlights include:

Enhanced GPU Performance: Nvidia continues to push boundaries with its latest generation of GPUs. These chips are designed to significantly boost AI processing capabilities, enabling faster computation and more complex AI models.

AI-Driven Automation: The introduction of updated frameworks for automation in industries promises optimized operations, reduced costs, and improved efficiency. This translates into increased productivity for businesses leveraging Nvidia’s technology.

Strategic Collaborations: Partnerships with leading tech companies and enterprises further position Nvidia as a crucial player in AI development.

2. AI in the Enterprise

With AI becoming an integral component of modern business strategies, Nvidia demonstrated how its tools and platforms are empowering enterprises:

Omniverse Platform: Designed for virtual collaboration and shared innovation, Omniverse offers enterprises a scalable way to work together, developing sophisticated AI applications in real time.

DGX Systems: These AI supercomputers are transforming the infrastructure of data centers globally, enabling massive parallel processing and providing the computational power needed for deep learning and AI computations.

AI Enterprise Suite: Offering end-to-end AI development and deployment solutions tailored for business needs, facilitating seamless integration into existing architectures.

Stock Market Implications

Given Nvidia’s strides in AI technology, investors are eager to understand the potential impacts on the company’s stock market trajectory.

3. Growth Potential

Nvidia's continuous innovation and expansion into AI markets demonstrate substantial growth potential. Factors contributing to this outlook include:

Leadership in AI Hardware: As a leader in high-performance computing technology, Nvidia remains a preferred choice for AI developers, ensuring ongoing demand for its cutting-edge GPUs.

Service Expansion: By broadening its services and product offerings, such as AI software, Nvidia caters to a diverse client base, from small-scale businesses to major corporations.

Global Market Reach: With increasing investments in global markets, Nvidia's influence extends beyond the US and into key growing economies, offering diverse revenue channels.

4. Risks and Considerations

While promising, potential risks must also be considered:

Market Competition: The AI tech space is becoming increasingly competitive with new entrants and established tech giants investing heavily in AI research and development.

Regulatory Challenges: As AI technologies evolve, Nvidia must navigate a complex landscape of international regulations and compliance standards.

Supply Chain Constraints: Global supply chain disruptions continue to impact semiconductor production, which may affect Nvidia's ability to meet growing demand.

Investor Sentiment and Recommendations

Understanding the pulse of investor sentiment is crucial for making informed decisions regarding Nvidia's stock. Many analysts remain optimistic, for the reasons outlined below.

5. Market Positioning

Nvidia's dominant role in the AI technology sector makes it a powerful contender in the stock market:

High Demand for AI Solutions: As industries increasingly rely on AI, Nvidia's offerings align with market needs, driving stock value.

Proven Track Record: Nvidia's historical performance demonstrates its ability to adapt and thrive amid changing market conditions.

Strategic Growth Initiatives: The company’s strategic growth initiatives focus on innovation, diversification, and market expansion to bolster investor confidence.

6. Long-term Investment

Many experts view Nvidia as a solid long-term investment, considering:

Continuous Innovation: Nvidia’s commitment to research and development ensures its products remain at the vanguard of technological advancements.

Resilience in Volatile Markets: The company’s strong financial health and adaptability provide a cushion against market fluctuations.

Growth Opportunities: Expansion into sectors like virtual reality, the Internet of Things (IoT), and cloud computing represents untapped potential for future growth.

In summary, while Nvidia faces challenges typical of an innovative leader at the helm of AI transformation, its potential for growth makes it an appealing option for investors. The AI Summit underscored Nvidia's core strengths and provided a glimpse into a future where AI continues to redefine industries, presenting compelling opportunities for both technology and investment enthusiasts.

0 notes

Text

On September 26, the French telecommunications group Iliad announced that it would invest millions of euros to establish France’s own AI industry. The company has already invested €100 million ($106 million), which will go into building an ‘excellence lab’ for AI research.

What Makes French Telecom’s Investment in AI Significant?

According to the company, a team of distinguished researchers has been set up, led by Iliad’s chairman, Xavier Niel. In his address, Niel said that France needs a proper ecosystem for running AI efficiently, and he underlined the importance of the lab in making the technology more accessible to everyone.

More importantly, Iliad has procured the most powerful cloud-native AI supercomputer in Europe: an Nvidia DGX SuperPOD equipped with Nvidia DGX H100 systems. The company has already installed it in its Datacenter 5 near Paris. Niel said that high computing power is essential for building cutting-edge AI solutions, so the company is leaving no stone unturned in its investment efforts. According to the company, the DGX SuperPOD delivers the power that large language models (LLMs) require.

Iliad is also getting support from its subsidiaries in this endeavor. Scaleway, an Iliad subsidiary that provides cloud computing and web hosting, has decided to offer a suite of cloud-native AI tools for training models of various sizes. Damien Lucas, the CEO of Scaleway, said that these tools would empower European companies to scale up their technological innovations and offer solutions that cater to international clients.

Notably, this news surfaced after an announcement by European Commission President Ursula von der Leyen. On September 13, the Commission launched an initiative to give AI startups greater access to supercomputers in Europe.

The step taken by Iliad is important not just for France but for the entire continent. First, it will enable French companies to use AI technology more easily. Second, it could make the country a frontrunner in the AI race. And in the future, it could open up many other possibilities for France.

The Emergence of AI And Its Global Impact

Since the emergence of ChatGPT, many companies have invested in AI, and a large number now focus primarily on this technology. They are either using it to improve their own products or working on improving the artificial intelligence itself. Companies that have invested massively in AI include Microsoft Corp., Alphabet Inc., Nvidia Corp., Meta Platforms, Inc., Taiwan Semiconductor Manufacturing Co. Ltd., ASML Holding NV, SAP SE, RELX PLC, Arista Networks Inc., and Baidu Inc.

AI has brought a major disruption to the world. It has overwhelmed as well as intimidated people with its efficiency. While companies are eager to adopt it, governments are preparing to regulate it. However one perceives this technology, it is here to stay. Even so, it is prudent to evaluate every aspect and effect of implementing it in different industries.

1 note

Text

NVIDIA DGX H100 Systems — World’s Most Advanced Enterprise AI Infrastructure

In the bustling world of enterprise AI, the new NVIDIA DGX H100 systems are setting a gold standard, ready to tackle the hefty computational needs of today’s big hitters like language models, healthcare innovations, and climate research. Imagine a powerhouse packed with eight NVIDIA H100 GPUs, all linked together to deliver a staggering 32 petaflops of AI performance. That’s a whopping six times the muscle of its predecessors, all thanks to the new FP8 precision.

These DGX H100 units aren’t just standalone heroes; they’re the core of NVIDIA’s cutting-edge AI infrastructure — the DGX POD™ and DGX SuperPOD™ platforms. Picture the latest DGX SuperPOD architecture, now featuring an innovative NVIDIA NVLink Switch System, enabling up to 32 nodes to join forces, harnessing the power of 256 H100 GPUs.

The game-changer? This next-gen DGX SuperPOD is capable of delivering an eye-watering 1 exaflop of FP8 AI performance. That’s six times more powerful than what came before, making it a beast for running enormous LLM tasks that have trillions of parameters to consider.

Jensen Huang, the visionary founder and CEO of NVIDIA, puts it best: “AI has revolutionized both the capabilities of software and the way it’s created. Industries leading the charge with AI understand just how critical robust AI infrastructure is. Our DGX H100 systems are set to power these enterprise AI hubs, turning raw data into our most valuable asset — intelligence.”

NVIDIA Eos: A Leap Towards the Future with the Fastest AI Supercomputer

NVIDIA isn’t stopping there. They’re on track to debut the DGX SuperPOD featuring this groundbreaking AI architecture, aimed at powering NVIDIA researchers as they push the boundaries in climate science, digital biology, and AI’s next frontier.

The Eos supercomputer is anticipated to take the title of the world’s fastest AI system, with 576 DGX H100 systems housing 4,608 H100 GPUs. With an expected 18.4 exaflops of AI performance, Eos is set to deliver four times the AI processing speed of the current champion, Japan’s Fugaku, and to offer 275 petaflops for traditional scientific computing.
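
The headline numbers in this post all follow from one per-system figure, so they can be sanity-checked with a few lines of arithmetic. The sketch below is only an illustration. It assumes FP8 performance scales linearly with the number of systems, which real workloads will not do perfectly. The function name `cluster_totals` and the constant names are hypothetical, chosen for this example; the values come from the figures quoted in the post.

```python
# Figures quoted in the post for a single DGX H100 system.
GPUS_PER_SYSTEM = 8          # eight H100 GPUs per DGX H100
SYSTEM_FP8_PETAFLOPS = 32    # quoted FP8 AI performance per system


def cluster_totals(systems: int) -> tuple[int, float]:
    """Return (GPU count, FP8 exaflops) for a cluster of DGX H100 systems.

    Assumes ideal linear scaling, which is a simplification.
    """
    gpus = systems * GPUS_PER_SYSTEM
    # 1 exaflop = 1000 petaflops
    exaflops = systems * SYSTEM_FP8_PETAFLOPS / 1000
    return gpus, exaflops


if __name__ == "__main__":
    # System counts quoted in the post: one DGX H100, a 32-node SuperPOD, Eos.
    for label, systems in [("DGX H100", 1), ("DGX SuperPOD", 32), ("Eos", 576)]:
        gpus, exaflops = cluster_totals(systems)
        print(f"{label}: {gpus} GPUs, about {exaflops:.3f} exaflops FP8")
```

Under that linear-scaling assumption, 32 systems give 256 GPUs and roughly 1 exaflop, and 576 systems give 4,608 GPUs and roughly 18.4 exaflops, which lines up with the SuperPOD and Eos figures quoted here.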

Eos isn’t just a machine; it’s a model for future AI infrastructure, inspiring both NVIDIA’s OEM and cloud partners.

Scaling Enterprise AI with Ease: The DGX H100 Ecosystem

The DGX H100 systems are designed to scale effortlessly as enterprises expand their AI ventures, from pilot projects to widespread implementation. Each unit boasts not just the GPUs but also two NVIDIA BlueField®-3 DPUs for advanced networking, storage, and security tasks, ensuring operations are smooth and secure.

With double the network throughput of its predecessors and 1.5x more GPU connectivity, these systems are all about efficiency and power. Plus, when combined with NVIDIA’s networking and storage solutions, they form the flexible backbone of any size AI computing project, from compact DGX PODs to sprawling DGX SuperPODs.

Empowering Success with NVIDIA DGX Foundry

To streamline the path to AI development, NVIDIA DGX Foundry is expanding globally, offering customers access to advanced computing infrastructure even before their own setups are complete. With new locations across North America, Europe, and Asia, remote access to DGX SuperPODs is now within reach for enterprises worldwide.

This initiative includes the NVIDIA Base Command™ software, simplifying the management of the AI development lifecycle on this robust infrastructure.

MLOps and Software Support: Fueling AI Growth

As operationalizing AI development becomes a staple of enterprise adoption, MLOps solutions from NVIDIA DGX-Ready Software partners are enhancing the “NVIDIA AI Accelerated” program. This ensures customers have access to enterprise-grade solutions for workflow management, scheduling, and orchestration, driving AI adoption forward.

Simplifying AI Deployment with DGX-Ready Managed Services