#Multicloud


Text

DevOps Multicloud is revolutionizing the DevOps game by allowing teams to deploy and manage applications seamlessly across multiple cloud environments, enhancing flexibility, resilience, and scalability.


Text

IT Modernization and Cloud Adoption Services in Dallas, Texas, Spearhead Technology


IT modernization and cloud adoption services are designed to help organizations update their technology infrastructure and move their operations to the cloud.

This can include migrating applications and data to cloud-based platforms, implementing new tools and technologies to improve efficiency and security, and leveraging analytics and automation to drive innovation and growth.

The benefits of IT modernization and cloud adoption can include reduced costs, improved agility and scalability, enhanced collaboration and communication, and better overall performance and customer satisfaction.

However, it is important to work with a reputable and experienced provider to ensure a smooth and successful transition to the cloud.

#cloudadoption#cloudmigration#cloudstrategy#cloudconsulting#cloudtransformation#cloudcomputing#cloudtechnology#hybridcloud#multicloud#publiccloud#privatecloud#cloudsecurity#cloudmanagement#cloudoptimization#cloudarchitecture#clouddeployment#cloudintegration#cloudsolutions#cloudservices#itmodernization#DigitalTransformation#BusinessTransformation#Spearhead#SpearheadTechnology#Dallas#Texas#USA#startups


Text

What Is AWS CloudTrail? Features and Benefits Explained

AWS CloudTrail

Track user activity and API usage on AWS, as well as in hybrid and multicloud environments.

What is AWS CloudTrail?

AWS CloudTrail records activity in your AWS account, including who accessed which resources, what changed, and when. It captures actions taken through the AWS Management Console, the AWS CLI, SDKs, and APIs.

CloudTrail can be used to:

Track Activity: Find out who did what in your AWS environment.

Boost Security: Identify unusual or unwanted activity.

Audit and Compliance: Maintain a record for regulatory requirements and audits.

Troubleshoot Issues: Examine logs to investigate problems.

The logs are easily reviewed or analyzed later because CloudTrail saves them to an Amazon S3 bucket.
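As a rough illustration of reviewing those logs, the sketch below reads a gzip-compressed JSON log file and lists who did what, where, and when. It assumes the usual CloudTrail log layout (a top-level `Records` array with fields such as `eventTime`, `eventName`, `awsRegion`, and `userIdentity`); the sample records, account ID, and user names are made up for the example, and in practice the bytes would come from an object downloaded from your S3 bucket.

```python
import gzip
import json
from collections import Counter

# Hypothetical sample shaped like a CloudTrail log file (values are invented).
sample_log = {
    "Records": [
        {"eventTime": "2024-05-01T12:00:00Z", "eventSource": "s3.amazonaws.com",
         "eventName": "CreateBucket", "awsRegion": "us-east-1",
         "userIdentity": {"type": "IAMUser",
                          "arn": "arn:aws:iam::111122223333:user/alice"}},
        {"eventTime": "2024-05-01T12:05:00Z", "eventSource": "s3.amazonaws.com",
         "eventName": "DeleteBucket", "awsRegion": "us-east-1",
         "userIdentity": {"type": "IAMUser",
                          "arn": "arn:aws:iam::111122223333:user/alice"}},
        {"eventTime": "2024-05-01T12:07:00Z", "eventSource": "ec2.amazonaws.com",
         "eventName": "RunInstances", "awsRegion": "eu-west-1",
         "userIdentity": {"type": "IAMUser",
                          "arn": "arn:aws:iam::111122223333:user/bob"}},
    ]
}


def load_records(raw_gzip_bytes):
    """Decompress a gzip-compressed log file and return its list of event records."""
    return json.loads(gzip.decompress(raw_gzip_bytes))["Records"]


def who_did_what(records):
    """Return (actor, action, region, time) tuples, one per event record."""
    return [
        (r["userIdentity"].get("arn", "unknown"), r["eventName"],
         r["awsRegion"], r["eventTime"])
        for r in records
    ]


# Stand-in for the bytes of a log object fetched from S3.
raw = gzip.compress(json.dumps(sample_log).encode("utf-8"))

records = load_records(raw)
activity = who_did_what(records)
calls_per_principal = Counter(actor for actor, _, _, _ in activity)

for actor, action, region, when in activity:
    print(f"{when} {actor} called {action} in {region}")
```

The same tuples can feed a report or an alerting rule; for large volumes, a query service is likely a better fit than parsing files by hand.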

Why AWS CloudTrail?

AWS CloudTrail is a service that enables governance, compliance, operational auditing, and risk auditing of your AWS account.

Benefits

Aggregate and consolidate multisource events

You can use CloudTrail Lake to ingest activity events from AWS as well as from sources outside AWS, such as other cloud providers and in-house or SaaS applications running on premises or in the cloud.

Immutably store audit-worthy events

Audit-worthy events can be stored immutably in AWS CloudTrail Lake. Easily produce the audit reports required by external regulations and internal policies.

Derive insights and analyze unusual activity

Use SQL-based queries or Amazon Athena to detect unauthorized access and analyze activity logs. For users who are less experienced with writing SQL, natural language query generation powered by generative AI makes this process much simpler. Respond with automated workflows and rules-based Amazon EventBridge alerts.

Use cases

Compliance & auditing

Use CloudTrail logs to demonstrate compliance with SOC, PCI, and HIPAA rules and shield your company from fines.

Security

By logging user and API activity in your AWS accounts, you can strengthen your security posture. Network activity events for VPC endpoints are another way to improve your data perimeter.

Operations

Use SQL-based queries, natural language query generation, or Amazon Athena to answer operational questions, help with debugging, and investigate problems. To further streamline your investigations, use the AI-powered query result summarization capability (in preview) to summarize query results. Use CloudTrail Lake dashboards to visualize trends.

Features of AWS CloudTrail

Auditing, security monitoring, and operational troubleshooting are made possible via AWS CloudTrail. CloudTrail logs API calls and user activity across AWS services as events. “Who did what, where, and when?” can be answered with the aid of CloudTrail events.

CloudTrail records four types of events:

Management events capture control plane actions on resources, such as creating or deleting Amazon Simple Storage Service (S3) buckets.

Data events capture data plane actions within a resource, such as reading or writing an Amazon S3 object.

Network activity events (in preview) capture AWS API calls made from a private VPC to an AWS service through VPC endpoints, including calls that were denied access.

Insights events help AWS users identify and respond to unusual activity in API call volumes and API error rates by continuously analyzing CloudTrail management events.
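To make the four event types concrete, here is a minimal sketch that tallies records by type. It assumes each record carries an `eventCategory` field with values such as `Management`, `Data`, `NetworkActivity`, and `Insight`; treat those field values and the trimmed-down sample records as assumptions made for illustration, and check them against your own logs.

```python
from collections import Counter

# Hypothetical, heavily trimmed records; real records carry many more fields.
sample_records = [
    {"eventName": "CreateBucket", "eventCategory": "Management"},
    {"eventName": "DeleteBucket", "eventCategory": "Management"},
    {"eventName": "GetObject", "eventCategory": "Data"},
    {"eventName": "PutObject", "eventCategory": "Data"},
    {"eventName": "PutObject", "eventCategory": "Data"},
    {"eventName": "ListBuckets", "eventCategory": "NetworkActivity"},
    {"eventCategory": "Insight"},
]


def count_by_category(records):
    """Count event records per category, grouping unlabeled records as 'Unknown'."""
    return Counter(r.get("eventCategory", "Unknown") for r in records)


category_counts = count_by_category(sample_records)

for category, count in sorted(category_counts.items()):
    print(f"{category}: {count}")
```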

Trails of AWS CloudTrail

Overview

Trails record AWS account activity and deliver and store the events in Amazon S3, with optional delivery to Amazon CloudWatch Logs and Amazon EventBridge. You can feed these events into your security monitoring solutions, and you can search and analyze the logs CloudTrail captures with your own third-party tools or with services such as Amazon Athena. Using AWS Organizations, you can create trails for a single AWS account or for multiple AWS accounts.

Storage and monitoring

By establishing trails, you can send your AWS CloudTrail events to S3 and, if desired, to CloudWatch Logs. You can export and save events as you desire after doing this, which gives you access to all event details.

Encrypted activity logs

You can validate the integrity of the CloudTrail log files stored in your S3 bucket and detect whether they were modified, deleted, or left unchanged since CloudTrail delivered them. Log file integrity validation is useful in IT security and auditing processes. By default, AWS CloudTrail encrypts all log files delivered to the S3 bucket you specify using S3 server-side encryption (SSE). If required, you can add a layer of security by encrypting your CloudTrail log files with your own AWS Key Management Service (KMS) key. S3 automatically decrypts your log files if you have decrypt permissions.
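The core idea behind integrity validation can be sketched in a few lines: hash the log file content and compare it with the hash recorded when the file was delivered. The example below assumes a digest entry that stores a hex-encoded SHA-256 value under a `hashValue` key; the entry layout, object name, and sample content are hypothetical. Full validation also involves verifying the digest file's signature and the chain of digest files, which this sketch leaves out (the AWS CLI offers `aws cloudtrail validate-logs` for the complete check).

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the hex-encoded SHA-256 hash of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def log_matches_digest(log_bytes: bytes, digest_entry: dict) -> bool:
    """Check whether the log content still matches the hash recorded for it."""
    expected = digest_entry["hashValue"].lower()
    # compare_digest performs a constant-time comparison of the two hex strings.
    return hmac.compare_digest(sha256_hex(log_bytes), expected)


# Hypothetical log content and the digest entry recorded at delivery time.
original_log = b'{"Records": []}'
digest_entry = {
    "s3Object": "hypothetical/path/to/log.json.gz",
    "hashAlgorithm": "SHA-256",
    "hashValue": sha256_hex(original_log),
}

# A copy that was altered after delivery.
tampered_log = original_log + b" "

print("original intact:", log_matches_digest(original_log, digest_entry))
print("tampered intact:", log_matches_digest(tampered_log, digest_entry))
```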

Multi-Region

AWS CloudTrail can be configured to record and store events from multiple AWS Regions in one place. This setup ensures that all settings are applied consistently across existing and newly launched Regions.

Multi-account

CloudTrail can be configured to record and store events from multiple AWS accounts in one place. This setup ensures that all settings are applied consistently across existing and newly created accounts.

AWS CloudTrail pricing

AWS CloudTrail: Why Use It?

By tracking user activity and API calls, AWS CloudTrail makes auditing, security monitoring, and operational troubleshooting possible.

AWS CloudTrail Insights

AWS CloudTrail Insights events help AWS users identify and respond to unusual activity in API call volumes and API error rates by continuously analyzing CloudTrail management events. CloudTrail Insights analyzes your normal patterns of API call volume and API error rates, known as the baseline, and generates Insights events when either one deviates from those patterns. To detect unusual activity and anomalous behavior, you can enable CloudTrail Insights on your trails or event data stores.
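The baseline idea can be illustrated with a toy check that flags a value when it strays too far from recent history. This is not the algorithm CloudTrail Insights uses, which the post does not describe; the mean and standard deviation rule, the threshold of 3, and the sample call counts are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def deviates_from_baseline(history, current, threshold=3.0):
    """Flag `current` if it lies more than `threshold` standard deviations from the mean of `history`."""
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # With a perfectly flat history, any change counts as a deviation.
        return current != baseline
    return abs(current - baseline) / spread > threshold


# Hypothetical API calls per minute observed during normal operation.
calls_per_minute = [20, 22, 19, 21, 20, 23, 18]

print("21 calls/min unusual?", deviates_from_baseline(calls_per_minute, 21))
print("90 calls/min unusual?", deviates_from_baseline(calls_per_minute, 90))
```

The same check applies to error rates by passing a history of error counts instead of call counts.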

Read more on Govindhtech.com

#AWSCloudTrail#multicloud#AmazonS3bucket#SaaS#generativeAI#AmazonS3#AmazonCloudWatch#AWSKeyManagementService#News#Technews#technology#technologynews


Text

🚀 Top Cloud Infrastructure Trends for 2024: What Businesses Need to Know ☁️

As cloud technology continues to evolve, businesses must keep up with the latest trends to stay competitive in 2024. Key trends include the rise of hybrid and multi-cloud environments for increased flexibility, the adoption of cloud-native technologies for faster app development, and the growing importance of edge computing for better performance. Additionally, AI-powered cloud solutions are enhancing automation and decision-making. Stay ahead of the curve and harness these trends to optimize your cloud strategy! Visit to learn more: https://www.lifelineon.com//read-blog/32719_top-cloud-infrastructure-trends-for-2024-what-businesses-need-to-know.html

#As cloud technology continues to evolve#CloudComputing#CloudTrends#TechInnovation#BusinessGrowth#AI#EdgeComputing#HybridCloud#MultiCloud


Text

KosmikTechnologies: KOSMIK is a Global leader in training, development, and consulting services that help students bring the future of work to life today in a corporate environment. We have a team of certified professionals and experienced faculty working with latest technologies in CMM level top MNCs.

About course:

Key Features:

Interactive Learning at Learners convenience

Industry Savvy Trainers

Real-Time Methodologies

Topic wise Hands-on / Topic wise Study Material

Best Practices / Example Case Studies

Support after Training

Resume Preparation

Certification Guidance

Interview assistance

Lab facilities

About offered courses: kosmik Provides Python, AWS, DevOps, Power BI, Azure, ReactJS, AngularJS, Tableau, SQL, MSBI, Java, selenium, Testing tools, manual testing, etc…

Contact US: KOSMIK TECHNOLOGIES PVT.LTD 3rd Floor, Above Airtel Showroom, Opp KPHB Police Station, Near JNTU, Kukatpally, Hyderabad 500 072. INDIA.

India: +91 8712186898, 8179496603, 6309565721

#kosmik#python#selenium training#machine learning#developer#traininginstitute#hyderabad#kphb#selenium#multicloud


Text

Is Your Organization Ready for Multi-Cloud? Key Insights for 2024

As organizations expand their digital footprints, multi-cloud management has emerged as a cornerstone for operational flexibility and efficiency. Valued at $8.03 billion in 2023, the multi-cloud market is projected to grow at a CAGR of 28.0% from 2024 to 2030. This growth reflects the rising demand for redundancy, vendor flexibility, and optimized resource allocation. Multi-cloud strategies allow companies to avoid vendor lock-in, balance workloads, and customize services based on unique needs. Interoperability remains a priority, with tools like Kubernetes and Cloud Management Platforms facilitating smooth cross-cloud integrations.
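For a sense of what that growth rate implies, the short calculation below compounds the 2023 valuation at the stated CAGR. It assumes the 28.0% rate applies to the $8.03 billion base for each of the seven years through 2030; the resulting figure is only an arithmetic consequence of that assumption, not a number taken from the post.

```python
def project_value(base, cagr, years):
    """Compound `base` at an annual growth rate `cagr` for `years` years."""
    return base * (1 + cagr) ** years


# Figures from the post: $8.03 billion in 2023, 28.0% CAGR through 2030.
base_2023_billion = 8.03
cagr = 0.28
years = 2030 - 2023  # assumed: seven compounding periods

projected_2030_billion = project_value(base_2023_billion, cagr, years)
print(f"Implied 2030 market size: ${projected_2030_billion:.1f} billion")
```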

#multicloud#cloudcomputing#digitaltransformation#cloudmanagement#industry40#cloudinfrastructure#technologytrends#businessinnovation#cloudsecurity#digitalstrategy


Text

Infographic: 15 Cloud Computing Trends That Will Shape 2024-2029

The next five years in cloud computing will bring remarkable innovations. This infographic covers the top 15 trends, including AI integration and multi-cloud adoption.

Find out how these developments will affect your business.

#CloudComputing#CloudTrends#TechTrends#FutureOfCloud#AIinCloud#HybridCloud#EdgeComputing#QuantumComputing#ServerlessArchitecture#MultiCloud


Text

Multi-Cloud Strategy! #Kaara can help you embrace flexibility, enhance security, and optimize costs.

Reach our experts by emailing us at [email protected]

Know More:- https://kaaratech.com/cloud-and-Infra.html


Text

Six ways hosted private cloud adds value to enterprise business

With companies reclaiming control via cloud repatriation, models that involve private cloud, either as is or in tandem with public cloud, meet the needs of organizations in a cost-effective, agile, and controlled manner, offering immense peace of mind. Read More. https://www.sify.com/cloud/six-ways-hosted-private-cloud-adds-value-to-enterprise-business/


Text

InfoQ Article on Fluent Bit with MultiCloud

I’m excited to say that we’ve had an article on Fluent Bit and multi-cloud published on InfoQ. Check it out at https://www.infoq.com/articles/multi-cloud-observability-fluent-bit/ . This is another first for me. As you may have guessed from the title, the article is about how Fluent Bit can support multi-cloud use cases. As part of the introduction, I walked through some of the challenges that…


Text

Critical Applications of Hybrid Cloud & Multi-Cloud in 2024

How can multi-cloud and hybrid cloud help you optimize your cloud infrastructure? Read the blog to learn the use cases of these two cloud models, the differences between them, and how to leverage their advantages.


Text

Dell PowerMax For Multicloud Cybersecurity Improvements

PowerMax Innovations Increase Cybersecurity, Multicloud Agility, and AI-Powered Efficiency. Businesses want IT solutions that can keep up with current demands and foresee future requirements in the fast-paced digital environment of today.

Dell is releasing major updates to Dell PowerMax today that boost cyber resilience, provide seamless multicloud mobility, and improve AI-driven efficiency. PowerMax, the consistently cutting-edge, highly secure storage solution tailored for mission-critical workloads, now has additional features that make it simpler than ever for clients to adapt to changing business needs.

AI-Driven Efficiency for Mission-Critical Workloads

Storage has to keep up with existing demands and anticipate future requirements, and this is where artificial intelligence comes in. This release adds several AI-powered features that can help maximize performance, lower maintenance costs, and stop problems before they start:

Performance optimization: PowerMax uses dynamic cache optimization through pattern recognition and predictive analytics, applying AI to reduce latency and increase speed without adding administrative overhead.

Proactive and predictive management: Intelligent threshold settings for autonomous health checks enable self-healing and remedial measures, addressing problems (e.g. storage capacity levels, loose cabling) before they cause disruption.

Automated network fabric performance optimization (FPIN): PowerMax can resolve incidents up to 8 times faster by rapidly detecting Fibre Channel network congestion (slow drain) and identifying the underlying cause.

Dell’s AIOps Assistant: Gen AI natural language queries make infrastructure optimization quick and simple.

Improved Efficiency

In order to enhance total storage efficiency and provide industry-leading power and environmental monitoring features, the most recent update also adds 92% RAID efficiency (RAID 6 24+2).
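The 92% figure follows from the drive layout if "24+2" is read in the usual way, as 24 data drives plus 2 parity drives per RAID 6 group. The sketch below shows that arithmetic under that assumption; the function name is illustrative.

```python
def raid_efficiency(data_drives, parity_drives):
    """Return the fraction of raw capacity available for data in a RAID group."""
    return data_drives / (data_drives + parity_drives)


# RAID 6 (24+2): assumed to mean 24 data drives and 2 parity drives.
efficiency = raid_efficiency(24, 2)
print(f"RAID 6 (24+2) usable capacity: {efficiency:.1%}")
```

By the same formula, a narrower group such as 8+2 would leave 80% of raw capacity usable, which is why wider groups raise efficiency.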

In order to increase power efficiency, control energy expenses, and successfully lower energy consumption, customers may now monitor power utilization at three different levels: the array, the rack, and the data center.

Increasing Cyber Resilience

Cyber resilience is essential for all clients at a time when cyberthreats are becoming more complex. PowerMax incorporates innovative cybersecurity features to improve client data safety, minimize attack surfaces, and swiftly recover from cyberattacks. These features include:

PowerMax Cyber Recovery Services: Strong defense against cyberattacks is provided by Dell’s new Professional Service. This customized solution guarantees rapid and effective recovery while assisting clients in meeting strict compliance objectives via the use of a secure PowerMax vault and granular data protection.

YubiKey multifactor authentication: Offers a robust and practical security solution that streamlines the user authentication process and improves protection against unwanted access.

Superior Performance at Scale

PowerMax keeps raising the bar for performance at scale, with the headroom required for present and future demands. Today’s announcement also adds:

Up to 30% higher IOPS performance on PowerMax 8500.

Up to three times faster GbE connectivity with new 100Gb Ethernet I/O modules.

Up to two times faster FC connectivity with the new 64Gb Fibre Channel I/O modules.

Along with these improvements, the integration of Storage Direct Protection for PowerMax with PowerProtect enables efficient, secure, and fast data protection, with restores of up to 500TB per day and backups of up to 1PB per day.

Achieve Multicloud Agility

Multicloud agility is crucial for optimizing resource use, cutting costs, and adapting quickly in a rapidly changing digital world. This release helps users achieve:

Seamless multicloud data mobility: Dell’s easy-to-use solutions now make it possible to move live PowerMax workloads to and from APEX Block Storage, which Dell describes as the most robust and adaptable cloud storage on the market. At the same time, multi-hop OS conversions are carried out to update those workloads in a single, seamless operation.

Scalable cloud restorations and backups: Storage Direct Protection for PowerMax provides simple, secure, and efficient data protection, giving users the freedom to choose the ideal backup locations. Because APEX Protection Storage integrates with popular cloud providers such as AWS, Azure, GCP, and Alibaba, customers can choose the cloud vendor that best suits their needs and avoid vendor lock-in.

Simplified consumption model: With Dell APEX Subscriptions, customers pay only for the services they use, and billing, invoicing, and capacity utilization tracking are simplified for better forecasting and scalability. This model simplifies lifecycle management and offers a modern consumption experience without requiring a significant upfront capital expenditure.

Innovation in Mainframes

PowerMaxOS 10.2 boosts cyber intrusion detection for mainframes (zCID) with auto-learning access pattern detection, lowers latency and improves IOPS performance for imbalanced mainframe workloads, and uses IBM’s System Recovery Boost to recover more quickly during scheduled or unforeseen outages.

Read more on govindhtech.com

#DellPowerMax#Multicloud#CybersecurityImprovement#Cybersecurity#artificialintelligence#GenAI#Cyberresilience#cyberattacks#news#dell#PowerProtect#APEXProtectionStorage#technology#technews#govindhtech


Text

Cloud Computing Solutions | Transform Your Business Neo Infoway

Discover the power of cloud computing with our comprehensive solutions. Enhance scalability, security, and efficiency for your business operations. Explore now!

#cloudcomputing#neo#infoway#neoinfoway#cloudservices#cloudinfrastructure#cloudstorage#cloudhosting#publiccloud#privatecloud#hybridcloud#multicloud#clouddeploymentmodels#infrastructureasaservice#platformasaservice#softwareasaservice#cloudmigration#cloudsecurity#cloudarchitecture#cloudmanagement#cloudautomation#cloudscalability



Text

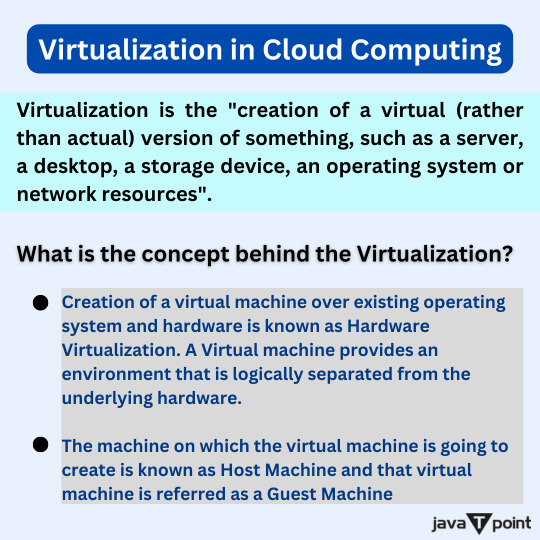

Virtualization in Cloud Computing. For more information and a cloud computing tutorial, see https://bit.ly/4a9ymrG

#cloudcomputing#privatecloud#publiccloud#hybridcloud#virtualization#multicloud#communitycloud#computerscience#computerengineering#javatpoint
