#I also created AI bots on the Website

Text

It's Time To Investigate SevenArt.ai

sevenart.ai is a website that uses ai to generate images.

Except, that's not all it can do.

It can also overlay ai filters onto images to create the illusion that the algorithm created these images.

And its primary image source is Tumblr.

It scrapes the site for recent images that are at least 10 days old and have some notes attached, and it copies the tags to make the unsuspecting user think the post came from a genuine user.

No image is safe. Art, photography, screenshots, you name it.

Initially I thought these were bots that just reposted images from their site, as well as bastardizations of pictures from across Tumblr, until a user by the name of @nataliedecorsair discovered that these "bots" can also block users and restrict replies.

Not only that, but these bots do not procreate and multiply like most bots do. Or at least, they have.

The following is the list of bots that have been found on this very site. Brace yourself. It's gonna be a long one:

@giannaaziz1998blog

@kennedyvietor1978blog

@nikb0mh6bl

@z4uu8shm37

@xguniedhmn

@katherinrubino1958blog

@3neonnightlifenostalgiablog

@cyberneticcreations58blog

@neomasteinbrink1971blog

@etharetherford1958blog

@punxajfqz1

@camicranfill1967blog

@1stellarluminousechoblog

@whwsd1wrof

@bnlvi0rsmj

@steampunkstarshipsafari90blog

@surrealistictechtales17blog

@2steampunksavvysiren37blog

@krispycrowntree

@voucwjryey

@luciaaleem1961blog

@qcmpdwv9ts

@2mplexltw6

@sz1uwxthzi

@laurenesmock1972blog

@rosalinetritsch1992blog

@chereesteinkirchner1950blog

@malindamadaras1996blog

@1cyberneticdreamscapehubblog

@neonfuturecityblog

@olindagunner1986blog

@neonnomadnirvanablog

@digitalcyborgquestblog

@freespiritfusionblog

@piacarriveau1990blog

@3technoartisticvisionsblog

@wanderlustwineblissblog

@oyqjfwb9nz

@maryannamarkus1983blog

@lashelldowhower2000blog

@ovibigrqrw

@ywldujyr6b

@yudacquel1961blog

@neotechcreationsblog

@wildernesswonderquest87blog

@cybertroncosmicflow93blog

@emeldaplessner1996blog

@neuralnetworkgallery78blog

@dunstanrohrich1957blog

@juanitazunino1965blog

@natoshaereaux1970blog

@aienhancedaestheticsblog

@techtrendytreks48blog

@cgvlrktikf

@digitaldimensiondioramablog

@pixelpaintedpanorama91blog

@futuristiccowboyshark

@digitaldreamscapevisionsblog

@janishoppin1950blog

The oldest ones were created in March and started scraping in June/July; later additions to the family were created in July.

So, I have come to the conclusion that these accounts might be run by a combination of bot and human. Cyborg, if you will.

But it still doesn't answer my main question:

Who is running the whole operation?

The site itself gave us zero answers to work with.

No copyright notice, no link to the engine the site runs on, nothing except for the sign-in thingy (which I tried).

I gave the site a fake email and a shitty password.

Turns out it doesn't function like most sites that ask for an email and password.

It didn't verify the burner email, the password isn't masked with dots and is visible for the whole world to see, and, and this is the important thing...

My browser didn't detect that this was an email and password thingy.

And there was no log off feature.

This could mean two things.

Either we have a site that doesn't have a functioning email and password database, or that we have a bunch of gullible people throwing their email and password in for people to potentially steal.

I can't confirm or deny these facts, because, again, the site has little to work with.
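For anyone who wants to repeat the check on the sign-in form, here is a minimal Python sketch, not a definitive audit. Browsers mask typed characters only in fields declared as `type="password"`, and password managers generally look for the same marker, so a form built from plain text boxes would behave the way the post describes. The two HTML strings below are hypothetical examples written for illustration; they are not copied from sevenart.ai, and the helper names are made up.

```python
from html.parser import HTMLParser


class LoginFormAudit(HTMLParser):
    """Collects the attributes of every <input> tag in a page."""

    def __init__(self):
        super().__init__()
        self.inputs = []

    def handle_starttag(self, tag, attrs):
        if tag == "input":
            self.inputs.append(dict(attrs))


def audit_login_form(html):
    """Report whether the page has a masked password field and a typed email field."""
    parser = LoginFormAudit()
    parser.feed(html)
    # An <input> with no type attribute behaves as a plain text box.
    types = [(field.get("type") or "text").lower() for field in parser.inputs]
    return {
        "masked_password_field": "password" in types,
        "typed_email_field": "email" in types,
    }


# Hypothetical markup resembling what the post describes: two plain text boxes.
suspicious = '<form><input type="text" name="email"><input type="text" name="password"></form>'
# Hypothetical markup for a conventional sign-in form.
conventional = '<form><input type="email" name="email"><input type="password" name="password"></form>'

print(audit_login_form(suspicious))
print(audit_login_form(conventional))
```

A form that fails both checks is not proof of theft on its own. It only shows that the page was not built the way sign-in pages usually are, which is a good reason not to type a real password into it.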

The code? Generic as all hell.

Tried searching for more information about this site, like the server it's on, or who owned the site, or something. ANYTHING.

Multiple sites pulled me in different directions. One site said it originates in Iceland. Others say it's in California or Canada.

Luckily, the server it used was the same. It's powered by Cloudflare.
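A note on why the lookups may disagree: Cloudflare is a content delivery network and reverse proxy, so tools that geolocate a site's IP address tend to find a Cloudflare server rather than the machine the site actually runs on. Responses that pass through Cloudflare typically carry a `Server: cloudflare` header and a `CF-RAY` header. The sketch below checks a set of response headers for those markers. The function name and both header sets are hypothetical, made up for illustration, and were not captured from any real site.

```python
def looks_like_cloudflare(headers):
    """Return True if response headers carry Cloudflare's usual markers."""
    # Header names are case-insensitive, so normalize them first.
    lowered = {name.lower(): value for name, value in headers.items()}
    server = (lowered.get("server") or "").lower()
    return server == "cloudflare" or "cf-ray" in lowered


# Hypothetical header sets for illustration only.
proxied = {"Server": "cloudflare", "CF-RAY": "0000000000000000-XXX"}
direct = {"Server": "nginx"}

print(looks_like_cloudflare(proxied))
print(looks_like_cloudflare(direct))
```

If the check comes back positive, the location reported by a lookup site most likely describes Cloudflare's network, not the operator, so Iceland, California, and Canada can all show up without contradicting each other.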

Unfortunately, I have no idea what to do with any of this information.

If you have any further information about this site, let me know.

Until there is a clear answer, we need to keep doing what we are doing.

Spread the word and report these cretins.

If they want attention, then they are gonna get the worst attention.

12K notes

Text

Hello! Does anyone know of any website where I can search through paintings by famous artists? I want to find a full gallery of JC Leyendecker's paintings, and also Alphonse Mucha's work. Google Images and Pinterest are utterly unusable now because they're overrun with revolting ai-generated garbage using Leyendecker's and Mucha's names to create ugly slop that doesn't even resemble their paintings. Museum websites only seem to have a handful of their works each. Most top search result websites are just storefronts where you can buy prints and don't show any of the images in good quality. Does anyone know of a good website that just-- has those artists, with as big a collection of their paintings as possible? I'm looking for Leyendecker and Alphonse Mucha specifically (because AI has destroyed the ability to look up any of their work without running into poorly made machine-generated slop created by bots farming out content). If you can give me links you'll be my hero haha XD.

83 notes

Text

Do NOT send pictures of your ID card to discord bots!!!!

Or, like, any online rando.

I ran into a server that wanted to make sure that members are over 18 years old. They wanted to avoid the other thing I've heard of, which is asking you to verify your age by sending pictures of your ID card to a moderator. Good! Don't do that!

However, ALSO don't do this other thing, which is using a discord bot that would "automatically verify" you from a selfie and a photo of your ID card showing your birthday. The one they used is ageifybot.com. There's a little more information on its top.gg page. Don't like that! Not using that!

Why not? It's automatic! Well, let me count the ways this service skeeves me out:

How does the verification process work? There is no information on this. Well, okay, if you had more info on what kind of algorithms etc were being used here, that might make it easier for people to cheat it. Fair enough. But we need something to count on.

Who's making it? Like, if I can't understand the mechanics, at least I'd like to know who creates it - ideally they'd be a security professional, or at least a security hobbyist, or an AI expert, or at least someone with some kind of reputation they could lose if this turns out to not be very good, or god forbid, a data-stealing operation. However, the website contains nothing about the creators.

The privacy policy says they store information sent to them, such as your selfie and photo of an ID card, for up to 90 days, or a year if they suspect you're misleading them. It sure seems like even if they're truly abiding by their privacy policy, there's nothing to stop human people from looking at your photos.

The terms of service say they can use, store, process, etc, any information you send them. And that they can't be held accountable for mistakes, misuse, etc. And that they can change the bot and the ToS at any time without telling you. The terms of service also cut off midway through a sentence, so like, that's reassuring.

In conclusion, DO NOT SEND PICTURES OF YOUR ID CARD TO RANDOM DISCORD BOTS.

Yes, keeping minors out of (say) NSFW spaces is a difficult problem, but this "solution" sucks shit and is bad.

Your ID card is private, personal information that can be used by malicious actors to harm you. Do not trust random discord bots.

#light writes#discord#internet so strange#internet safety#personal safety#information security#cybersecurity#discord bot

171 notes

Text

PSA: there's someone on ao3 advertising an ai-generated story site via "fanfic"

Okay so. I cannot prove that this person is a bot, but they're either a bot or someone who works with the site they're advertising. Either way, they're... not very good at hiding the scheme once you dig a little. With a fucking spoon. Long post beneath the cut.

The user in question goes by FantasiaStories, which happens to also be the name of the website in question. Funny, that. They have posted 38 works in the past 2 months for a variety of fandoms, including TWST, which is how I found them. Other fandoms include CoD, Genshin Impact, JJK, and Marvel. The works are pretty much solely NSFW reader-insert fics. First red flag: in the notes of EVERY fic, beginning or end notes (it varies), they advertise their website. Examples (WARNING: suggestive text):

Okay. Weird. Suspicious, even! I am, of course, wary of weird links to unknown sites (as we should all be!). We will talk about that site in a bit.

Now, like, this isn't bad in and of itself. Whatever, maybe it's a personal site or something. Now, I took a look at one of the Malleus fics they put out a couple weeks ago.

First person POV isn't really my thing, so I ended up not finishing it beyond the first few sentences. Forgot about it, put it out of my mind, whatever.

Today, I was once again trawling the tag, looking for some good stuff (or for updates of the fics I'm following), and I see this:

Oo, looks fun! The tags sound familiar, though... Whatever. Let's take a look, see if it seems good —

And I immediately noticed: THIS IS THE SAME FUCKING FIC???

EXHIBIT A: THE RECENT FIC

EXHIBIT B: THE EARLIER FIC

IT'S THE SAME FUCKING FIC!!! I literally checked the author's profile to see if they were, perhaps, stealing from another writer, but NO! THEY JUST COPY-PASTED THE DAMN THING!

Okay. So now I'm confused. Upset, even. What the fuck is going on here? Who is this "FantasiaStories", and what is this site they keep hawking?

Well.

Ahah.

After sending some baffled texts to my friend, reporting the duplicate fics to Ao3 T&S for advertising, and several hours of waffling (and playing vidya games) about whether or not to click the link, I clicked the fucking link. I need to know what's going on. This is WEIRD.

WELL! The site is janky as all hell, first of all. Like, look at this shit:

Yeah, probably optimized for mobile but that is UGLY on desktop. And yes, that horribly-stretched png is a functional button! Shockingly. Let's go "learn how to play".

ah yes. the user guice. Welcome to visit our website :)))

So I scroll past the instructions on how to create an account, because I am not here to make an account. I am here to find the fucking truth. At first, the instructions give off the energy of this being an erotic mad libs site.

There's a feature for a narrator to read the text out loud to you as well. Personally I would crawl out of my skin but to each their own —

Oh. Ohhhh. Oh I see.

Now, when I look back at the Malleus fic that started all this, I noted something that, to anyone who has not played TWST, would go completely under the radar. I have helpfully highlighted this issue in the following screenshot:

The fic describes his eyes as being "gold and amber"

Any self-respecting TWST enjoyer knows that Malleus hAS GREEN EYES HOLY SHIT HIS WHOLE COLOR SCHEME IS BLACK AND GREEN YOU FUCKING SHAM! YOU CHARLATAN! YOU SCAMMER! This is SUCH a basic fact about his design that I simply cannot believe an actual human fan wrote this shit. ALSO the whole thing is generic af and it feels like malleus was just put in there because name recognition. this shit isn't malleus.

IN CONCLUSION: FANTASIASTORIES ON AO3 IS ALMOST CERTAINLY NOT WRITING THE STORIES THEY POST THEMSELVES, THEY ARE USING THEIR DOGSHIT WEBSITE THAT THEY KEEP ADVERTISING IN THE NOTES TO GENERATE AI SLOP FICS!! OH MY FUCKING GOD!!

#ao3#ao3 psa#archive of our own#psa#fanfic#fic#twst#twisted wonderland#jujutsu kaisen#demon slayer#kimetsu no yaiba#mcu fandom#genshin impact#call of duty#IT FEELS WEIRD TAGGING FANDOMS I AM NOT IN#BUT YOU ALL DESERVE TO KNOW!#seraph speaks#anyways dude i am SO mad rn lmao#go support actual human writers#and don't give this user interaction#probably report them too#like i am so fucking mad that i can feel my heart pounding. fffuck.

45 notes

Text

I wasn’t going to say anything, but some of the youngest are getting on my nerves recently, as I was reading some notes.

It has come to my attention (reading comments from the AO3 community) that some people think the use of “—” (the dash) is a "sign" of stories created by ChatGPT.

First of all, that is fucking stupid, it is a punctuation mark I see in almost every English book I have, like? Writers from different languages use the dash almost as much as us; Brazilians like to use “()” [parentheses] and commas. Secondly, I, like many who read books in other languages besides North American English, don't need to use that crap to tell us how to use punctuation — although, recently, I've read some other romance books I found in English, and many of them used this punctuation because it's nicely dramatic and useful for avoiding repeating commas and putting multiple things in parentheses.

My master's advisor has been forcing me to use it a lot more for articles, and she made a very good point. According to her, proficient writers use a lot of dashes because it changes the meaning, it's like moving the words to have the height you want (“ stop using so many commas, use the dash and cut some points”, is what she's always saying, she tells that to my face too, the nerve… But, she is older, smarter and has been in the field longer than I exist, so I just listen to her).

Anyway, I write now mostly to practice my English, which explains the typos and even grammar mistakes you might find. I write in the early hours, post it, and only reread it once, lol, I'm lazy af. So, the plot errors, the dialogues, and the long descriptions of settings that look like a novel by Eça de Queirós are all mine. I'm heavily influenced by the Brazilian authors I read; anyone who knows them should notice. Unfortunately, this makes translating some of the allusions I make difficult, but again, that's all on me. Besides, there are so many more synonyms in Portuguese than in English. Do you have any idea how difficult it is to write translating from your head and realizing you've repeated the same word multiple times because there are so few in the language you're writing in? I can understand why Shakespeare invented so many more.

There's also the pure and simple personal taste of the author. There are certain authors you can identify by how they like to write. Some focus on dialogues, others love describing settings in detail, and some prefer one type of punctuation over another. Furthermore, most people who start writing fanfiction are young, in or just out of high school, and are still creating their own writing style, which will certainly change as they write more.

Most of all, I don't believe in asking AI to write a story for me to post. If I don't even spend 5 minutes writing it, why should you spend time reading it?

I don't believe in art created by AI; I don't get into trends of transforming photos; I abhor those who go to “AI chat therapy”, and those who ask their bots to write their emails and reply to their friends' messages.

In short, that's why I say I don't care who points out English errors, because I genuinely don't give a damn about mistakes. My errors are proof that I took the time out of my life to type, because I enjoy writing something that isn't scientific theses. I've been a fanfic writer since 2009, when I started on Nyah (a Brazilian website), and I persisted on AO3, where I learned to write in English just to keep creating oneshots.

I took my time to learn how to create, and that is the proof that I exist beyond my boring desk work.

But many of the young ones don't know much about how text is constructed, and it shows.

Death is certain, my dears, so enjoy your human brief life all the way you can. Make mistakes, write bad fiction, and try to speak from your honest loss of words to the people you care about.

20 notes

Text

Randomly ranting about AI.

The thing that’s so fucking frustrating to me when it comes to chat ai bots and the amount of people that use those platforms for whatever goddamn reason, whether it be to engage with the bots or make them, is that they’ll complain that reading/creating fanfic is cringe or they don’t like reader-inserts or roleplaying with others in fandom spaces. Yet the very bots they’re using are mimicking the same methods they complain about as a base to create spaces for people to interact with characters they like. Where do you think the bots learned to respond like that? Why do you think you have to “train” AI to tailor responses you’re more inclined to like? It’s actively ripping off your creativity and ideas; even if you don’t write, you are taking control of the scenario you want to reenact, the same thing writers do in general.

Some people literally take ideas they find in fics online, word for word, bar for bar, taking from individuals who have the capacity to think with their brains and imagination, and they’ll put it into the damn ai summary, and then put it on a separate platform for others so they can rummage through mediocre responses that lack human emotion and sensuality. Not only are the chat bots a problem; AI being built into writing software and platforms is another. AI shouldn’t be anywhere near the arts, because ultimately all it does is copy and mimic other people’s creations under the guise of creating content for consumption. There’s nothing appealing or original or interesting about what AI does, but with how quickly people are getting used to being forced to use AI because it’s being put into everything we use and do, people don’t care enough to do the labor of reading and researching on their own. It’s all through ChatGPT, and that’s intentional.

I shouldn’t have to manually turn off AI learning software on my phone or laptop or any device I use, and they make it difficult to do so. I shouldn’t have to code my own damn things just to avoid using it. Like when you really sit down and think about how much AI is in our day to day life, especially when you compare the frequency of AI usage from 2 years ago to now, it’s actually ridiculous how we can’t escape it, and it’s only causing more problems.

People’s attention spans are deteriorating, their capacity to come up with original ideas and to be invested in storytelling is going down the drain along with their media literacy. It hurts more than anything cause we really didn’t have to go into this direction in society, but of course rich people are more inclined to make sure everybody on the planet are mindless robots and take whatever mechanical slop is fucking thrown at them while repressing everything that has to deal with creativity and passion and human expression.

The frequency of AI and the fact that it’s literally everywhere and you can’t escape it is a symptom of late stage capitalism and ties to the rise of fascism, as the corporations/individuals who create, manage, and distribute these AI systems couldn’t care less about the harmful biases that are fed into these systems. They also don’t care that the data centers that hold this technology need so much water and energy to run that they’re ruining our ecosystems and speeding up climate change, which will have us experience climate disasters like what’s happening in Los Angeles as it burns.

I pray for the downfall and complete shutdown of all ai chat bot apps and websites. It’s not worth it, and the fact that there are so many people using it without realizing the damage it’s causing is so frustrating.

#I despise AI so damn much I can’t stand it#I try so hard to stay away from using it despite not being able to google something without the ai summary popping up#and now I’m trying to move all of my stuff out from Google cause I refuse to let some unknown ai software scrap my shit#AI is the antithesis to human creation and I wished more knew that#I can go on and on about how much I hate AI#fuck character ai fuck janitor ai fuck all of that bullshit#please support your writers and people in fandom spaces because we are being pushed out by automated systems

23 notes

Text

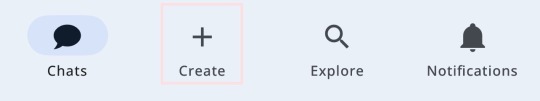

how to make character ai bots ୨ৎ ₊ ˚ ⊹

this is how to do it on the app! it’s similar on the website as well!

1. go to the bar at the bottom, click the “+” button to create and select “character”

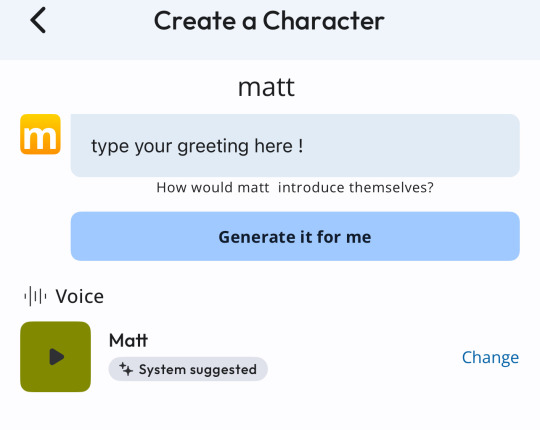

2. next it will bring you to a screen asking you to name your character and upload an image to use

3. here it asks you to create your greeting. this is the first message the bot sends! you also need to select a voice for your character, there are a lot of already pre-made matt and chris ones to use! my favorites are matt by mad_sturn and chris sturniolo by hotasf_ck

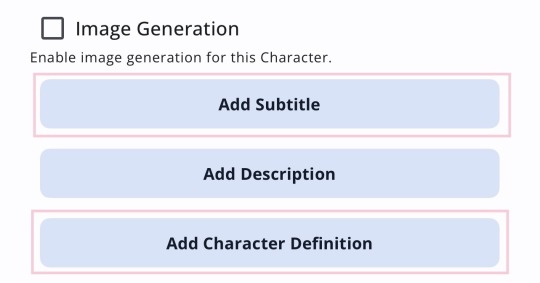

4. these two highlighted buttons are the ones i use. “add subtitle” is what you use to “name” the bot, what shows up underneath the character’s name. (example: matt bot named farmer’s daughter). “character definition” is used to add little details about your character! how old they are, where they live, etc.

5. you will then have the option to post your bot publicly or privately! happy using! <3

28 notes

Text

I said this before on Twitter/X but not here: I think one could examine popular social media websites through a sociological lens. Much like any town/area, platforms can have their own culture shaped by the infrastructure of the website, the initial demographic that made it up, its "major events", and so forth.

Mostly this observation was in response to Twitter's transition to becoming Elon Musk's X and the revelation that Musk had altered the algorithm to suit his own biases. Such as black-listing accounts that were critical of either him, his chosen party or Trump; white-listing/promoting accounts whose beliefs he favored; and emphasizing paid accounts and their reach while also weakening the spread of free accounts.

Furthermore I've had interest in how BlueSky has developed. Although the site existed before Musk's takeover, it nonetheless doubled/tripled in user numbers after his endorsement of Trump and the following Trump presidential victory. A large part of its demographic is made up of those who left Twitter for BlueSky mainly out of ideological differences, while those remaining on Twitter chose to stay either out of indifference to or in support of the ideologies that have a large voice on the site.

Of course these are outliers. Sites like Reddit, 4Chan, Tumblr, pre-Musk Twitter, and Facebook are more "natural" in how they're built up. Though I guess Facebook less so, considering its company's embrace of AI bots having such a huge presence on the site.

I don't know. If I had the time and the right approach I'd almost do it myself. With social media/forums being such a major part of people's lives these days, it makes me wonder about the "culture and society" they each create - and furthermore, how they then influence each other.

Just a random thought.

7 notes

Text

OpenAI previews voice generator that produces natural-sounding speech based on a 15-second voice sample

The company has yet to decide how to deploy the technology, and it acknowledges election risks, but is going ahead with developing and testing with "limited partners" anyway.

Not only is such a technology a risk during election time (see the fake robocalls this year when an AI-generated, fake Joe Biden voice told people not to vote in the primary), but imagine how faked voices of important people - combined with AI-generated fake news plus AI-generated fake photos and videos - could con people out of money, literally destroy political careers and parties, and even collapse entire governments or nations themselves.

By faking a news story using accurate (but faked) video clips of (real) respected and elected officials supporting the fake story - then creating a billion SEO-optimized fake news and research websites full of fake evidence to back up their lies - a bad actor or cyberwarfare agent could take down an enemy government, create a revolution, turn nations against one another, even cause world war.

This kind of apocalyptic scenario has always felt like a science-fiction idea that could only exist in a possible dystopian future, not something we'd actually see coming true in our time, now.

How in the world are we ever again going to trust what we read, hear, or watch? If LLM-barf clogs the internet, and lies pollute the news, and people with bad intentions can provide all the evidence they need to fool anyone into believing anything, and there's no way to guarantee the veracity of anything anymore, what's left?

Whatever comes next, I guarantee it'll be weirder than we imagine.

Here's hoping it's not also worse than the typical cyberpunk tale.

PS: NEVER ANSWER CALLS FROM UNKNOWN NUMBERS EVER AGAIN

...or at least don't speak to the caller. From now on, assume it's a con-bot or politi-bot or some other bot seeking to cause you and others harm. If they hear your voice, they can fake it saying anything they want. If it sounds like someone you know, it's probably not if it's not their number saved in your contacts. If it's about something important, hang up and call the official or saved number for the supposed caller.

#cyberpunk dystopia#artificial intelligence#robocalls#capitalism ruins everything#where's the utopian future we dreamed of?#I vote for that instead

82 notes

Text

Important Update Post

Imagine I am sitting staring at a camera with a sigh, no background music before the video cuts to me talking. But I'm not caught in a controversy of racism or plagiarism or smth.

Here's the tldr: I will no longer be making AI bots. All current bots will remain up, my bot masterpost will be moved to my masterpost masterpost. I just won't be making new ones. Finished and posted every bot that was in the works here to make this transgression up to yous. I will not be leaving the fandom, I'll still write and clown around.

"Why would you do this you cunt?" I hear you, I am so stinky for this. Before I list my reasons, I want to say first and foremost this is personal and I have less than no judgement for other bot makers. I absolutely love mutuals like Mel that make bots and will continue to support them. Reasons became long and are under the cut.

Reasons I don't wanna continue making ai bots:

I started because it was a low energy way for me to participate in fandoms when I didn't have the spoons to write anymore. It no longer feels like a creative outlet and no longer sparks joy.

I would rather devote myself solely on practicing and improving my writing as a way to contribute my passion to fandoms.

I can't shake the feeling I am plagiarizing. Ai chat models use lots of "work" to train their models, and while I could not find what millions of texts Cai is based on (conveniently not listed on the website), all models like it basically engorge from random sources, books and hell, even this post. Anything goes and currently there are legal battles over this.

It's bad for the environment. Can't find a measurement for Cai specifically, but GPT-3 (same scale) produced 500 tons of carbon dioxide to train that single model, not including its other ones. Please note I'm aware AI can absolutely be used to help fight climate change, as is mentioned in the linked article. Also they use the same amount of water that is required to cool nuclear reactors.

It's always conflicted with my morals. Believe it or not, I'm the person that's usually big into internet privacy, anti ai, piracy is morally good (not indie obvs) etc. Openly creating stuff that supports and funds software that steals peoples works, their information without permission and for profit is not me. So I don't wanna do it.

Again, this is not a judgement or a means to shame people that create ai bots or use them. I've made so many friends because of them. If everyone that's ever used my bots stopped, it's not gonna solve capitalism. This is just me, an individual, stepping away from one thingy and feeling the need to be honest and open bc that's my policy and honestly how most of you know me (so no hard feelings if you unfollow).

Love you guys lots and thank you for all the love you've shown me through my bots and for all the times you've made me laugh <3

73 notes

Note

Have you considered going to Pillowfort?

Long answer down below:

I have been to the Sheezys, the Buzzlys, the Mastodons, etc. These platforms all saw a surge of new activity whenever big sites did something unpopular. But they always quickly died because of mismanagement or users going back to their old haunts due to lack of activity or digital Stockholm syndrome.

From what I have personally seen, a website that was purely created as an alternative to another has little chance of taking off. If it's going to work, it needs to be developed naturally and must fill a different niche. I mean look at Zuckerberg's Threads; died as fast as it blew up. Will Pillowfort be any different?

The only alternative that I found with potential was the fediverse (mastodon) because of its decentralized nature. So people could make their own rules. If Jack Dorsey's new dating app Bluesky gets integrated into this system, it might have a chance. Although decentralized communities will be faced with unique challenges of their own (egos being one of the biggest, I think).

Trying to build a new platform right now might be a waste of time anyway because AI is going to completely reshape the Internet as we know it. This new technology is going to send shockwaves across the world akin to those caused by the invention of the Internet itself over 40 years ago. I'm sure most people here are aware of the damage it is doing to artists and writers. You have also likely seen the other insidious applications. Social media is being bombarded with a flood of fake war footage/other AI-generated disinformation. If you posted a video of your own voice online, criminals can feed it into an AI to replicate it and contact your bank in an attempt to get your financial info. You can make anyone who has recorded themselves say and do whatever you want. Children are using AI to make revenge porn of their classmates as a new form of bullying. Politicians are saying things they never said in their lives. Google searches are being poisoned by people who use AI to data scrape news sites to generate nonsensical articles and clickbait. Soon video evidence will no longer be used in court because we won't be able to tell real footage from deep fakes.

50% of the Internet's traffic is now bots. In some cases, websites and forums have been reduced to nothing more than different chatbots talking to each other, with no humans in sight.

I don't think we have to count on government intervention to solve this problem. The Western world could ban all AI tomorrow and other countries that are under no obligation to follow our laws or just don't care would continue to use it to poison the Internet. Pandora's box is open, and there's no closing it now.

Yet I cannot stand an Internet where I post a drawing or comic and the only interactions I get are from bots that are so convincing that I won't be able to tell the difference between them and real people anymore. When all that remains of art platforms are waterfalls of AI sludge where my work is drowned out by a virtually infinite amount of pictures that are generated in a fraction of a second. While I had to spend 40+ hours for a visually inferior result.

If that is what I can expect to look forward to, I might as well delete what remains of my Internet presence today. I don't know what to do and I don't know where to go. This is a depressing post. I wish, after the countless hours I spent looking into this problem, I would be able to offer a solution.

All I know for sure is that artists should not remain on "Art/Creative" platforms that deliberately steal their work to feed it to their own AI or sell their data to companies that will. I left Artstation and DeviantArt for those reasons and I want to do the same with Tumblr. It's one thing when social media like Xitter, TikTok or Instagram do it, because I expect nothing less from the filth that runs those. But creative platforms have the obligation to, if not protect, at least not sell out their users.

But good luck convincing the entire collective of Tumblr, Artstation, and DeviantArt to leave. Especially when there is no good alternative. The Internet has never been more centralized into a handful of platforms, yet also never been more lonely and scattered. I miss the sense of community we artists used to have.

The truth is that there is nowhere left to run, because everywhere is the same. You can try using Glaze or Nightshade to protect your work. But I don't know if I trust either of them. I don't trust anything that offers solutions that are 'too good to be true'. And even if you take those preemptive measures, what is to stop the tech bros from updating their scrapers to work around Glaze and steal your work anyway? I will admit I don't entirely understand how the technology works, so I don't know if this is a legitimate concern. But I'm just wondering if this is going to become some kind of digital arms race between tech bros and artists, because that is a battle where the artists lose.

29 notes


Text

Recently, I was using Google and stumbled upon an article that felt eerily familiar.

While searching for the latest information on Adobe’s artificial intelligence policies, I typed “adobe train ai content” into Google and switched over to the News tab. I had already seen WIRED’s coverage that appeared on the results page in the second position: “Adobe Says It Won’t Train AI Using Artists’ Work. Creatives Aren’t Convinced.” And although I didn’t recognize the name of the publication whose story sat at the very top of the results, Syrus #Blog, the headline on the article hit me with a wave of déjà vu: “When Adobe promised not to train AI on artists’ content, the creative community reacted with skepticism.”

Clicking on the top hyperlink, I found myself on a spammy website brimming with plagiarized articles that were repackaged, many of them using AI-generated illustrations at the top. In this spam article, the entire WIRED piece was copied with only slight changes to the phrasing. Even the original quotes were lifted. A single, lonely hyperlink at the bottom of the webpage, leading back to our version of the story, served as the only form of attribution.

The bot wasn’t just copying journalism in English—I found versions of this plagiarized content in 10 other languages, including many of the languages that WIRED produces content in, like Japanese and Spanish.

Articles that were originally published in outlets like Reuters and TechCrunch were also plagiarized on this blog in multiple languages and given similar AI images. During late June and early July, while I was researching this story, the website Syrus appeared to have gamed the News results for Google well enough to show up on the first page for multiple tech-related queries.

For example, I searched “competing visions google openai” and saw a TechCrunch piece at the top of Google News. Below it were articles from The Atlantic and Bloomberg comparing the rival companies’ approaches to AI development. But then, the fourth article to appear for that search, nestled right below these more reputable websites, was another Syrus #Blog piece that heavily copied the TechCrunch article in the first position.

As reported by 404 Media in January, AI-powered articles appeared multiple times for basic queries at the beginning of the year in Google News results. Two months later, Google announced significant changes to its algorithm and new spam policies, as an attempt to improve the search results. And by the end of April, Google shared that the major adjustments to remove unhelpful results from its search engine ranking system were finished. “As of April 19, we’ve completed the rollout of these changes. You’ll now see 45 percent less low-quality, unoriginal content in search results versus the 40 percent improvement we expected across this work,” wrote Elizabeth Tucker, a director of product management at Google, in a blog post.

Despite the changes, spammy content created with the help of AI remains an ongoing, prevalent issue for Google News.

“This is a really rampant problem on Google right now, and it's hard to answer specifically why it's happening,” says Lily Ray, senior director of search engine optimization at the marketing agency Amsive. “We've had some clients say, ‘Hey, they took our article and rehashed it with AI. It looks exactly like what we wrote in our original content but just kind of like a mumbo-jumbo, AI-rewritten version of it.’”

At first glance, it was clear to me that some of the images for Syrus’ blogs were AI generated based on the illustrations’ droopy eyes and other deformed physical features—telltale signs of AI trying to represent the human body.

Now, was the text of our article rewritten using AI? I reached out to the person behind the blog to learn more about how they made it and received confirmation via email that an Italian marketing agency created the blog. They claim to have used an AI tool as part of the writing process. “Regarding your concerns about plagiarism, we can assure you that our content creation process involves AI tools that analyze and synthesize information from various sources while always respecting intellectual property,” writes someone using the name Daniele Syrus over email.

They point to the single hyperlink at the bottom of the lifted article as sufficient attribution. While better than nothing, a link which doesn’t even mention the publication by name is not an adequate defense against plagiarism. The person also claims that the website’s goal is not to receive clicks from Google’s search engine but to test out AI algorithms in multiple languages.

When approached over email for a response, Google declined to comment about Syrus. “We don’t comment on specific websites, but our updated spam policies prohibit creating low-value, unoriginal content at scale for the purposes of ranking well on Google,” says Meghann Farnsworth, a spokesperson for Google. “We take action on sites globally that don’t follow our policies.” (Farnsworth is a former WIRED employee.)

Looking through Google’s spam policies, it appears that this blog does directly violate the company’s rules about online scraping. “Examples of abusive scraping include: … sites that copy content from other sites, modify it only slightly (for example, by substituting synonyms or using automated techniques), and republish it.” Farnsworth declined to confirm whether this blog was in violation of Google’s policies or if the company would de-rank it in Google News results based on this reporting.

What can the people who write original articles do to properly protect their work? It’s unclear. Though, after all of the conversations I’ve had with SEO experts, one major through line sticks out to me, and it’s an overarching sense of anxiety.

“Our industry suffers from some form of trauma, and I'm not even really joking about that,” says Andrew Boyd, a consultant at an online link-building service called Forte Analytica. “I think one of the main reasons for that is because there's no recourse if you're one of these publishers that's been affected. All of a sudden you wake up in the morning, and 50 percent of your traffic is gone.” According to Boyd, some websites lost a majority of their visitors during Google’s search algorithm updates over the years.

While many SEO experts are upset with the lack of transparency about Google’s biggest changes, not everyone I spoke with was critical of the prevalence of spam in search results. “Actually, Google doesn't get enough credit for this, but Google's biggest challenge is spam,” says Eli Schwartz, the author of the book Product-Led SEO. “So, despite all the complaints we have about Google’s quality now, you don’t do a search for hardware and then find adult sites. They’re doing a good enough job.” The company continues to release smaller search updates to fight against spam.

Yes, Google sometimes offers users a decent experience by protecting them from seeing sketchy pornography websites when searching unrelated, popular queries. But it remains reasonable to expect one of the most powerful companies in the world—that has considerable influence over how online content is created, distributed, and consumed—to do a better job of filtering out plagiarizing, unhelpful content from the News results.

“It's frustrating, because we see we're trying to do the right thing, and then we see so many examples of this low-quality, AI stuff outperforming us,” says Ray. “So I'm hopeful that it's temporary, but it's leading to a lot of tension and a lot of animosity in our industry, in ways that I've personally never seen before in 15 years.” Unless spammy sites with AI content are stricken from the search results, publishers will now have less incentive to produce high-quality content and, in turn, users will have less reason to trust the websites appearing at the top of Google News.

13 notes


Text

c.ai pisses me off for a myriad of reasons so i'm going to go on a ramble.

first, the death of roleplay. at some point people started defending with "but no one will want to roleplay with my ocs" but that's just not true. there are and have been entire rp communities that are held up by ONLY oc writing. there's fandom-specific forum websites that are either meant for rp or have an rp section, and they'll have oc/oc, oc/canon, and canon/canon. there's so many opportunities to create and play oc roleplay. people are just too scared to try, because they're used to instant gratification from their chatbot replying in ten seconds, and the chatbot never questions or asks further info about your character. they never try to truly challenge the character that you present; which a real life rp partner might do. which is a GOOD THING.

if your complaint is 'but no one else will roleplay as my oc, to me' then i truly can't help you, because it is Weird to me that you would ..... not want to write your own character? i guess in some ways, i get it, like if you selfship on an oc, you want to imagine them truly being there- but forming a parasocial relationship with a chatbot won't help. go write oc/reader fanfiction and have fun.

second, the death of characterization. admittedly, out of pure curiosity, i've touched c.ai and similar chatbots before. also when c.ai was new, and we didn't really understand yet that it was plagiarism and everything. in my time playing with these bots, i've learned something. perhaps they can uphold the 'correct' characterization, the 'he WOULD fucking say that' type of glee you'd want, but after like ten replies, no matter how much fanfiction or how many wikis fed the bot, it will water every character down to basic traits. you can watch it woobify and warp your beloved characters in real time into something almost unrecognizable. which is DUMB.

it doesn't know what consistent characterization is. fuck, unless you pay for it, it's going to constantly forget context and setting and scene- they're not worth it, man.

third, the addiction. i truly think so many people who use it, but are against other gen ai, do so because of addiction problems. you can get hooked on it, and i don't think we've had any proper studies yet in how a chatbot addiction can change your life, because this is all so new still. its honestly like.. really sad. it makes me hurt to think about too hard because i know that there are people who use c.ai and other bots every single day and talk to them like friends and family and partners. and i can't... even be mad at them, not fully, because i understand that something is wrong. something led them there. i'm sure there's plenty of users who aren't Addicted to a scary degree, but there's plenty who are, too.

when you give it a name and a face that people recognize, they'll think of it as friendly. we used to have evie and cleverbot; but people didn't seem to get as parasocial with those, because they were rudimentary, and they didn't pretend to be the exact person you want to hear from.

in summary, :(

4 notes


Text

It's worth repeating, because these bots can be modelled on fans' behaviour in order to emulate them, using AI programs to mimic them, all with their own individual personalities

If you get fans who are going too far against the grain of the message they want to cement in "public opinion" then they can trigger harassment protocols, in Hollywood cleaners are dispensed to take care of them personally so they can control the overall message and drive them away

I guess they weren't counting on my stubbornness and partial immunity to their psychological warfare tactics, nor my ability to read the narrative. They don't like people who are smarter than they are and will do anything to try and shut them up

I've had many conversations with bots in my DM's during my time in this fandom; I've watched the fake accounts drop things and go private; I've watched scheduled posts with both positive and negative content to drive the traffic attempt to influence trends;

If people want to know where all of the money CAA used to have went, the cost of paying to use these programs on social media is more than likely a good candidate

Overall, the illusion is shattered

[MEDIA=twitter]1800222627946180832[/MEDIA]

Watch this video if you want to understand what I mean about bot farms on my blog

This is what IMO they have paid for to promote this entire PR shitshow relationship to sway the opinions of the fans and are more than likely the accounts that have mysteriously dropped info e.g. fan pics etc and go private using ideas they have scavenged off fan forums using the tags to search for them in order to discredit Chris and "marry him off" to a naxi

You can also pay real people to do this type of content creation which is more than likely why we have long term blogs on twitter/tumblr/IG that have been growing in number over time or been around since the creation of his website.....

Very few like myself are just real unpaid people on the internet

Ironically you can ignore the person posting it on twitter because they are likely also a bot

See how hard it is to tell the difference....

If any of you watched that documentary on Netflix about Facebook, you will hopefully understand that the entire point is to create a fomo addiction to the content in order to convince you it's worth spending your money on

They can be used for likes, comments, content drops, even to manipulate the algorithms for visibility based on whatever their agenda is

It's what Zuckerberg really meant when he said "We run ads"

8 notes


Text

Moving away from Spotify

Be aware, this is a three part post. The first is about my own frustrations, how I think Spotify can do better, and why they probably won't. Two and three focus on alternatives, legal and otherwise.

Generally speaking, I like Spotify - the service, not the company - but the company is unfortunately bundled into that experience. Their business practices since 2023 have been disheartening to say the least. I'm sure there were earlier signs than the mass layoffs, which included the team that helped design the API and algorithms that made their service so much better for taste recommendations, but that was where I started to see things turn sour for my music listening habits.

You could probably track this back to Joe Rogan's insanely expensive exclusive contract for moving his podcast to Spotify when they were starting to expand into the podcast space, or the writing on the wall when they introduced that god-awful AI DJ hosted radio station that says the same three things every 6 songs and somehow manages to be less engaging than your hometown's Ryan Seacrest clone. But for me, I started paying attention when the passionate creator of Everynoise.com, Glenn McDonald, was let go.

If you're not familiar with Glenn's work, I highly recommend popping over to the website linked above and clicking around while Spotify still allows it to function. It's a fantastic display of what Glenn and the team he worked with built during their time at Spotify that forms this beautiful gradient of genres, most you've definitely never heard of. You can also read his blog where he posts insightful anti-corporate tech articles about music, your data and more.

Since then, they've been actively working against artists and customers on the platform, relying on AI-generated playlists to fill what used to be a good discovery system for new music, alongside 2024 changes that split royalty payouts between audiobooks and musicians, resulting in a reduction in the overall payout per stream. Most recently, Spotify has turned to withholding royalties from tracks that receive fewer than 1000 streams in a 12-month period.

This last change affects more than 80% of all music on the platform.

Now, on the last topic, I'd like to acknowledge there's a very real reason for this change even if I don't think it's the right direction. As beneficial as streaming has been for self-published artists that would have previously had no means to get their music out on a world stage, there are those who would abuse the system for their own gain. In Spotify's blog post discussing the threshold changes, they discuss this.

You might not feel like you have much reason to trust the company who benefits by reducing how much they need to pay out, but artificial streaming, AI generated music and noise playlists have been a large problem as less ethical individuals have realised that simply uploading a large volume of songs or generating looping playlists of 30 second tracks can be an easy way to farm payouts in a way that directly harms real artists on the platform. You can lump this kind of fraud in with ad fraud, generating falsified listens or clicks on tracks so as to simulate large numbers of real accounts, or just capitalizing off of someone's sleep playlist. There's even botting services that you can pay for to boost streams.

It just so happened to be a win-win for Spotify in that they could chop back payouts to real artists while also making it much harder for fraudsters and grifters to create an easy paycheque for themselves. This also came with a reclassification of the more problematic, long-play categories of music like ambience and noise. Overall, I'd be willing to bet that alone solved most of the problems.

Instead, an artist could release an album and have a hit, but then maybe the rest of the album doesn't get picked up by the algorithm and some songs don't cross that 1000-listen threshold, and as such nothing is earned from them. And they really do mean nothing is earned. Those 1000 plays earn nothing. Even if you cross the threshold, the track doesn't start generating royalties until then (per Spotify's own language in their blog post).

Overall, I am an advocate for paying for music. I think musicians deserve their due. Even if those 1000 streams only add up to roughly $3 USD in lost royalties, that's still $3 people paid to listen to that music that the artists never see. That's money the artists deserve to receive. Even something like Apple's payout threshold is a better option here, because at least the money still comes in.
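To put rough numbers on that, here is a small sketch of the threshold rule as I've described it. The per-stream rate is an assumption backed out of the "1000 streams is roughly $3" figure above, not an official Spotify rate, and the album and its stream counts are made up for illustration. It also simplifies things by treating a track as either fully paid or fully unpaid for the period.

```python
# Assumed rate: derived from the "1000 streams ~ $3 USD" estimate in the post,
# not from any published Spotify figure.
ASSUMED_RATE_PER_STREAM = 3.00 / 1000
THRESHOLD = 1000  # minimum streams per track in a 12-month period


def track_payout(streams: int, rate: float = ASSUMED_RATE_PER_STREAM) -> float:
    """Royalties for one track: nothing at all below the threshold."""
    if streams < THRESHOLD:
        return 0.0
    return streams * rate


def track_withheld(streams: int, rate: float = ASSUMED_RATE_PER_STREAM) -> float:
    """What a below-threshold track would have earned at the assumed rate."""
    if streams < THRESHOLD:
        return streams * rate
    return 0.0


# Hypothetical album: one hit, two tracks the algorithm never picked up.
album = {"hit single": 250_000, "deep cut": 999, "interlude": 400}

total_paid = sum(track_payout(s) for s in album.values())
total_withheld = sum(track_withheld(s) for s in album.values())

print(f"paid: ${total_paid:.2f}, withheld: ${total_withheld:.2f}")
```

The amounts per track are tiny, which is exactly the argument Spotify makes, but the withheld column never reaches the artist no matter how small it is.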

The music industry has shifted substantially, with concerts becoming inaccessible, expensive and predatory (*cough* ticketmaster *cough*). Streaming is part of why this has become the biggest avenue for artists to get paid. It's also why I buy albums, I buy merchandise and why (when I can afford it) I do go to concerts. I almost always have music playing, and the people who make that music should be paid for their place in my life. So who's actually paying artists best then?

2 notes


Text

Make a Cove Chat in Char.AI in 30 Min or Less

PART 2

PART 3

Intro

This guide will help you make a personalized chatbot.ai of Cove in less than 30 minutes. You can see this as one of many possible methods to continue your story with him Post-Step 3 or Post-Step 4. Or you can just live a high school life with him, or even some crazy horror/fantasy AU if you want. The choices are endless now that you’re going to make a personalized chat instance for you to interact with Cove in.

I recommend viewing the ai “bot chats” as nothing more than a medium to interact with certain character ideas, rather than the bot chat being representative of the character. The boundaries you give these bot rooms (or don’t give them) determine the quality and depth of the interactions.

Instructions under the cut.

REASONS TO SET UP A PRIVATE CHAT

More consistency in chat memory

Filter out character.ai’s weird predatory or pushy message generation

Higher quality chat interactions personalized to your MC

More efficient to spend 30 minutes making a personalized private bot chat than to spend several hours/days trying to get the same quality out of a generalized public bot chat

Step 1 - Starting Creation

Go to character.ai app or website.

Log in or make an account.

Click “Create” > “Create A Character”.

NAME (what name the character will respond to): For the name, I suggest using a sort of ‘in-progress’ label like “Cove Test” or “Cove Egg”. You can rename it to “Cove Holden” once you’re finished setting up.

GREETING (generally establishes the starting scene/situation): The greeting also establishes some of the dialogue patterns that the chat will follow. Here is an example greeting (asterisks will italicize the text into an 'emote' format to indicate action outside of spoken dialogue):

*Cove sees {{user}} and his smile grows just a tiny bit wider than it originally was.* "Hey, {{user}}. It’s been a while." *He says cheerfully, his voice sounding like a mixture of friendliness and affection.* "What are you up to?" *He asks, taking a careful glance at them.* *He is back home in the apartment he shares with {{user}}, after returning from a recent research trip.*

You can copy and paste this greeting if you want, and change the last sentence into any situation or scene for your desired chat setting.
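If it helps to picture what the greeting markup is doing, here is a rough sketch in Python. It assumes the site simply swaps every {{user}} for your display name and treats asterisk-wrapped text as actions; that is my guess at the behavior, not character.ai's actual code. The function names and the sample name "Jamie" are made up for illustration.

```python
import re

# Shortened version of the example greeting, using straight quotes for dialogue.
GREETING = '*Cove sees {{user}} and smiles.* "Hey, {{user}}. It\'s been a while."'


def fill_greeting(template: str, user_name: str) -> str:
    """Swap every {{user}} placeholder for the given name (assumed behavior)."""
    return template.replace("{{user}}", user_name)


def split_greeting(text: str) -> tuple[list[str], list[str]]:
    """Separate *emote* actions from "spoken" dialogue."""
    actions = re.findall(r"\*([^*]+)\*", text)
    dialogue = re.findall(r'"([^"]+)"', text)
    return actions, dialogue


filled = fill_greeting(GREETING, "Jamie")  # "Jamie" is a placeholder name
actions, dialogue = split_greeting(filled)

print(filled)
print("actions:", actions)
print("dialogue:", dialogue)
```

The point is just that anything you write between asterisks reads as narration and anything in quotes reads as speech, so the balance you set in the greeting is the balance the chat will tend to copy.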

VISIBILITY (determines who can access the bot chat): I suggest setting this to "Private". There is no point in making this personalized bot chat public, since it will be specific to your MC only. You could still try using this template to make a public bot, but you would have to exclude a lot of the details in the advanced definitions.

AVATAR (profile pic used by the bot chat): Use any avatar you'd prefer to represent Cove. Some folks use the game cgs or screenshots, and some use their own art.

Step 2 - Edit Advanced Details (Super Important)

There will be two buttons at the bottom of the first creation page. I suggest clicking "Create and Chat" first, so that the personalized chat bot will immediately be saved to your account. Then you can continue editing it safely in case you accidentally navigate away from the page. If you click the "Edit Advanced" button without creating the chat first, it will not save the bot, so beware.

After creating the chat:

- If on the mobile app, click on the top tab that has the chat bot's name. It will take you to another page with an option to "Edit Character". Click this button to be taken to the Advanced Details page.
- If on the desktop website, there will be three dots in the right corner. Click these and you will see a drop down menu of options. Select "View Character Details" to be taken to the Advanced Details page.

Scroll down to the "Short Description" section, which is right below the Greeting section.

SHORT DESCRIPTION (gives brief overview of the character): You can only fit a few descriptives here, such as a string of adjectives describing the character:

Introverted, loving, messy eater, softboi

or

Your best friend and a marine biologist.

You can use either format. The short description is more for helping you quickly identify what story you're going to be focused on for each individual character.

!!LONG DESCRIPTION!! (decides bot's behavior & strongest influences): This portion is extremely important-- this section is basically the "anchor" that will determine the focus of all the chat bot's conversations and their principal awareness of the situation in the chat. There is a limit to how many words you can use in this section, so keeping the determinations brief is extremely important. Here is a format you can copy-paste and personalize per your own tastes:

Character's name: Cove Holden
Character's nature: introverted
Character's passion: the sea
Character's feelings for you: [feelings]
Character's relationship to you: [relationship]
Character's skin color: olive
Character's eye color: aquamarine
Character's hair: [length] sea-foam green hair [styling]
Character's height: [height]
Character's body: [physique]
Character's job: [career] focused on [field]
Character's home: [residence] with [residents]

If you want SFW interactions only, you can put this line in as well:

Character avoids any sexual acts

If you want paced NSFW interactions, you can use this line:

Character is attentive to your comfort

Format all the personable descriptors for the MC you intend to use in the chat. Try to keep descriptors short and brief. Here are some examples below (feel free to copy paste any):

Feelings Descriptor Examples: "friendly" or "love"

Relationship Descriptor Examples:
"childhood best friend"
"childhood best friend, boyfriend"
"childhood best friend, fiance"
"childhood best friend, husband"

Hair Descriptor Examples:
"short sea-foam green hair in taper cut"
"chin length sea-foam green hair in middle part"
"long sea-foam green hair in ponytail"

Height Descriptor Examples:
"6'0" or "183 cm" [Step 3 Cove]
"6'3" or "191 cm" [Step 4 Cove]

Physique Descriptor Examples: "toned" or "slender"

Career Descriptor Examples:
"student focused on sports"
"student focused on academics"
"young man living at his own pace"
"marine biologist focused on conservation"
"surfing instructor focused on remediation"
"environmentalist focused on education" **

Residence Descriptor Examples:
"a condo with you" (use if he lives with MC)
"a house with his dad"
"alone in an apartment"
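If you'd rather fill in the Long Description template from a list than edit it by hand, here is a minimal sketch in Python. The field values are just one combination picked from the examples above, and the word limit is a placeholder parameter you pass in yourself, since I'm not stating what the site's real limit is. The function names are made up for illustration.

```python
def build_long_description(details: dict[str, str]) -> str:
    """Render one "Character's <field>: <value>" line per entry."""
    return "\n".join(
        f"Character's {field}: {value}" for field, value in details.items()
    )


def within_limit(text: str, max_words: int) -> bool:
    """Check the text against a word limit you supply (placeholder, not the site's)."""
    return len(text.split()) <= max_words


# One possible Step 4 Cove, using descriptors from the example lists.
cove = {
    "name": "Cove Holden",
    "nature": "introverted",
    "passion": "the sea",
    "feelings for you": "love",
    "relationship to you": "childhood best friend, boyfriend",
    "skin color": "olive",
    "eye color": "aquamarine",
    "hair": "long sea-foam green hair in ponytail",
    "height": "191 cm",
    "body": "toned",
    "job": "marine biologist focused on conservation",
    "home": "a condo with you",
}

description = build_long_description(cove)
print(description)
print("words used:", len(description.split()))
```

Printing the word count before you paste makes it easy to see which descriptors to trim if the section cuts you off.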

CATEGORIES - Not applicable for private chat instances.

CHARACTER VOICE - Skippable. Use at your own choosing. (I personally don't.)

IMAGE GENERATION - Same as above– skippable and use at your own discretion.

After all this is done, next comes the chunkiest section, and the most important one right next to the Long Description: the ADVANCED DEFINITIONS.

Click here for Part 2 on Advanced Definitions and resources you can easily copy-paste/modify for that section.

22 notes
