#GPT applications in business

Text

How to expand your vocabulary (in an enjoyable way).

Self-Awareness

If you struggle to find the right words to express yourself, then you need to learn more words. If you are reading this article, or you find the title interesting, then you are closer than you think. You are simply self-aware. Self-awareness is the first step to mustering the courage to pursue the art of language and communication. It dawned on me that I was verbally malnourished when I could barely find the words to describe a character I had read about in a novel. "So what was he like?" my curious friends would ask, and all I could say was, "He had a troubled childhood, and it was evident in his lack of self-control." The sound of that description troubled even me. I knew there was more to his character, but I was restricted by my literary scarcity. I still struggle with this, but I am making daily efforts to improve. This article will be both prescriptive and descriptive.

Execution

1. Read books, and I mean read actively. I read books and I take pride in it, but I am a severely passive reader. I barely engage with the story, the characters, or the author's attempts to challenge my prejudice against, or affinity for, a character. My reading goal was to read as many books as possible: quantity over quality. By quality, I mean the quality of my reading, not the books per se. Now I read differently (I only started this a month ago). I read prudently, stopping whenever I encounter an unfamiliar word, and I add it to the vocabulary list in my Notes app. After about 10 words or so, I look up two synonyms for each word: one easy, one difficult. For example, decrepit (derelict, neglected).

2. Use ChatGPT to create sentences for you in different contexts, and practise with them.

3. Find ways to use your newly learned words in your own way. If you work a 9-5, it may help to tailor your prompts to a business or professional context so the sentences are more applicable to you. But most importantly, create your own sentence structures. If you have a meeting, prepare for it using the words you learned, and take notes as a guide to help you convey your ideas effectively. I learned "impetuously" recently, and during a meeting my manager asked me to assess myself based on my strengths and weaknesses. I responded with, "I tend to impetuously accept projects without understanding the deliverables, and I end up being overwhelmed by the expectations." My point is: make sure you use the context of your everyday life. If you are a humanities major, you might approach this differently.

4. Make it enjoyable. Think of each new word as a specific dollar amount, then create a "verbal bank": the more words you learn, the richer you become. For me, each word is valued at $50, and I earn an extra $25 if I can use it effectively in a conversation. If you learn 10 new words a week, you have made yourself $500. Deposit that into your verbal bank!

5. Record yourself saying these words. Try to recall them actively, but through conversation. Do 1-minute tests: record yourself describing your day, giving a presentation, etc. Notice which words flow naturally. If you like, go back to your vocabulary list and test yourself by creating sentences.

6. Expand your reading. I did say to read books, but I would suggest going beyond them. Read articles (very well-written ones), and when you are not reading, listen actively to podcasts and pay attention to how the hosts convey their ideas. You will notice that good writing or speech is not necessarily peppered with difficult words. Good writing is easy to understand because the author makes difficult or esoteric topics digestible.

Emulate & Practise

You simply have to emulate. Copy the style and syntax of people you admire or respect for their speech or writing. Keep practising. It is a choice to improve or not, so don't hold yourself back. I am practising by writing as well; I have barely scratched the surface, and I am sure you can tell from my writing. It is not sophisticated, but I do hope to improve, and you can too.

Excite yourself

You will find yourself smiling when you read a text whose words are no longer foreign to you. Words that were once distant and strange will eventually become a part of you. That is the best feeling ever; it's exciting.

#self improvement#self love#growth#mindfulness#self development#education#emotional intelligence#self worth#self control#students#classy#smart wom#smart#book club#books#bookworm#reading#books and reading#self discipline

Note

why do so many M/Ls love gen-AI suddenly? it's straight up perplexing to me, luddite that i may be. anyway, other question: do you have any opinions based in theory yourself, since you're opposed to gen-AI (as am i), whether it be anarchist, communist, etc.?

Having nuance and learning about something to properly understand it isn't being "in love" with it. It's normal adult behavior. How capitalists use a tool isn't inherent to the tool. Gen AI has long been used for many valid, disparate applications, such as doing busy work for animators and drug discovery. Ever use the magic wand tool in Photoshop? That's technically gen AI by today's definitions. The tool itself has no moral value. Why do people consistently confuse the actions of capitalists with the tools they use to try to make a quick profit? Do you not keep up with science and tech? Just because ChatGPT is stupid and horrible doesn't mean *checks notes* a computer program that is somewhat related is also stupid and horrible. Learn to listen instead of making assumptions and feeling a false superiority based on them.

Text

The European Union today agreed on the details of the AI Act, a far-reaching set of rules for the people building and using artificial intelligence. It’s a milestone law that, lawmakers hope, will create a blueprint for the rest of the world.

After months of debate about how to regulate companies like OpenAI, lawmakers from the EU’s three branches of government—the Parliament, Council, and Commission—spent more than 36 hours in total thrashing out the new legislation between Wednesday afternoon and Friday evening. Lawmakers were under pressure to strike a deal before the EU parliament election campaign starts in the new year.

“The EU AI Act is a global first,” said European Commission president Ursula von der Leyen on X. “[It is] a unique legal framework for the development of AI you can trust. And for the safety and fundamental rights of people and businesses.”

The law itself is not a world-first; China’s new rules for generative AI went into effect in August. But the EU AI Act is the most sweeping rulebook of its kind for the technology. It includes bans on biometric systems that identify people using sensitive characteristics such as sexual orientation and race, and the indiscriminate scraping of faces from the internet. Lawmakers also agreed that law enforcement should be able to use biometric identification systems in public spaces for certain crimes.

New transparency requirements for all general purpose AI models, like OpenAI's GPT-4, which powers ChatGPT, and stronger rules for “very powerful” models were also included. “The AI Act sets rules for large, powerful AI models, ensuring they do not present systemic risks to the Union,” says Dragos Tudorache, member of the European Parliament and one of two co-rapporteurs leading the negotiations.

Companies that don’t comply with the rules can be fined up to 7 percent of their global turnover. The bans on prohibited AI will take effect in six months, the transparency requirements in 12 months, and the full set of rules in around two years.

Measures designed to make it easier to protect copyright holders from generative AI and require general purpose AI systems to be more transparent about their energy use were also included.

“Europe has positioned itself as a pioneer, understanding the importance of its role as a global standard setter,” said European Commissioner Thierry Breton in a press conference on Friday night.

Over the two years lawmakers have been negotiating the rules agreed today, AI technology and the leading concerns about it have dramatically changed. When the AI Act was conceived in April 2021, policymakers were worried about opaque algorithms deciding who would get a job, be granted refugee status or receive social benefits. By 2022, there were examples of AI actively harming people. In a Dutch scandal, decisions made by algorithms were linked to families being forcibly separated from their children, while students studying remotely alleged that AI systems discriminated against them based on the color of their skin.

Then, in November 2022, OpenAI released ChatGPT, dramatically shifting the debate. The leap in AI’s flexibility and popularity triggered alarm in some AI experts, who drew hyperbolic comparisons between AI and nuclear weapons.

That discussion manifested in the AI Act negotiations in Brussels in the form of a debate about whether makers of so-called foundation models such as the one behind ChatGPT, like OpenAI and Google, should be considered as the root of potential problems and regulated accordingly—or whether new rules should instead focus on companies using those foundational models to build new AI-powered applications, such as chatbots or image generators.

Representatives of Europe’s generative AI industry expressed caution about regulating foundation models, saying it could hamper innovation among the bloc’s AI startups. “We cannot regulate an engine devoid of usage,” Arthur Mensch, CEO of French AI company Mistral, said last month. “We don’t regulate the C [programming] language because one can use it to develop malware. Instead, we ban malware.” Mistral’s foundation model 7B would be exempt under the rules agreed today because the company is still in the research and development phase, Carme Artigas, Spain's Secretary of State for Digitalization and Artificial Intelligence, said in the press conference.

The major point of disagreement during the final discussions that ran late into the night twice this week was whether law enforcement should be allowed to use facial recognition or other types of biometrics to identify people either in real time or retrospectively. “Both destroy anonymity in public spaces,” says Daniel Leufer, a senior policy analyst at digital rights group Access Now. Real-time biometric identification can identify a person standing in a train station right now using live security camera feeds, he explains, while “post” or retrospective biometric identification can figure out that the same person also visited the train station, a bank, and a supermarket yesterday, using previously banked images or video.

Leufer said he was disappointed by the “loopholes” for law enforcement that appeared to have been built into the version of the act finalized today.

European regulators’ slow response to the emergence of the social media era loomed over discussions. Almost 20 years elapsed between Facebook's launch and the Digital Services Act, the EU rulebook designed to protect human rights online, taking effect this year. In that time, the bloc was forced to deal with the problems created by US platforms, while being unable to foster their smaller European challengers. “Maybe we could have prevented [the problems] better by earlier regulation,” Brando Benifei, one of two lead negotiators for the European Parliament, told WIRED in July. AI technology is moving fast. But it will still be many years until it’s possible to say whether the AI Act is more successful in containing the downsides of Silicon Valley’s latest export.

Note

https://x.com/_Zeets/status/1920094266514170111?t=wjlCbVRYqc6ZJkXr5xM6TQ&s=19

Let's assume for a moment that pigs can fly and that AI has resolved all of its ethical and environmental concerns.

AI and ChatGPT can be used in a way that benefits a person's education without hindering their actual learning and development. It requires intentionality. You have a choice in how you use these technologies. ChatGPT does not demand that you upload your entire homework assignment. You can just ask it to explain something or help you correct a problem.

I sent an anon a month back about using GPT to fix a programming assignment. I also used it to improve my resume, except rather than uploading my resume, I just asked it for a few words and some rephrasing to guide my language, and then I came up with my own wording for the actual resume. I also tried to use it to make study notes for a massive PowerPoint, and it just made shit up and sent it back to me: a whole bunch of words that meant nothing.

Ignoring the massive hole I tore in the ozone layer, AI can be extremely helpful. There are different ways to use it. In none of these situations did it do the work for me. It was more like a personalized Google search that relies on natural language and memory retention to create a more conversational dynamic. It makes it easier to get the answers you want... even if they're sometimes wrong.

I was actually talking with the Dean of my faculty the other day about my degree and the issues I had with its current format. I told her I found the degree too business-focused, with a lot of the lessons centered on applications in business settings. She told me I was one of the few people to say that, and that most people find it too research-oriented. So I told her I found that such an odd complaint, because why else would you come to university if not to learn and do more research?

The guy in the post is a CS major who clearly went to university just to learn enough to get a good job and to network. The reality is that this is the mentality of most students. That is why they will only ever see something like AI and ChatGPT as a tool for cheating. They inherently don't care about the quality of the education they're getting. They have no interest in learning or in gaining a deep understanding of the material, just good grades and networking opportunities.

This mentality predates AI. As much blame as AI deserves, lazy, uninterested students using it to cheat is not something I can blame on it. The only issue I have now is that they're fucking up the curve and making my life harder as an honest student.

Yeah, I don’t think AI is inherently good or bad. It depends on how it’s used and for what reason.

Text

Pegasus 1.2: High-Performance Video Language Model

Pegasus 1.2 revolutionises long-form video AI with high accuracy and low latency. This commercial model supports scalable video querying.

TwelveLabs and Amazon Web Services (AWS) announced that Amazon Bedrock will soon provide Marengo and Pegasus, TwelveLabs' cutting-edge multimodal foundation models. Amazon Bedrock, a managed service, lets developers access top AI models from leading organisations via a single API. With seamless access to TwelveLabs' comprehensive video understanding capabilities, developers and companies can transform how they search, analyse, and derive insights from video content, backed by AWS's security, privacy, and performance. AWS will be the first cloud provider to offer TwelveLabs models.

Introducing Pegasus 1.2

Unlike many academic contexts, real-world video applications face two challenges:

Real-world videos can range from a few seconds to several hours in length.

They require accurate temporal understanding.

To meet these commercial demands, TwelveLabs is announcing Pegasus 1.2, a substantial, industry-grade upgrade to its video language model. Pegasus 1.2 delivers state-of-the-art understanding of long videos: it can handle hour-long videos with low latency, low cost, and best-in-class accuracy. Its embedded storage caches indexed videos, making it faster and cheaper to query the same video repeatedly.
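The "index once, query many" idea behind this kind of embedded storage can be sketched in a few lines. This is a minimal illustration under stated assumptions, not TwelveLabs' API: `VideoIndex`, `encode_video`, and `answer` are hypothetical names, and the two-number "embedding" merely stands in for whatever the real video encoder produces.

```python
from dataclasses import dataclass, field


def encode_video(video_id: str) -> list[float]:
    """Hypothetical stand-in for an expensive video-encoder pass."""
    # A real system would decode frames and run a neural encoder here.
    return [float(len(video_id)), float(sum(map(ord, video_id)) % 97)]


@dataclass
class VideoIndex:
    """Caches per-video embeddings so repeated queries skip re-encoding."""
    store: dict = field(default_factory=dict)
    encode_calls: int = 0

    def embeddings(self, video_id: str) -> list[float]:
        # Encode only on the first request; later queries reuse the stored result.
        if video_id not in self.store:
            self.store[video_id] = encode_video(video_id)
            self.encode_calls += 1
        return self.store[video_id]

    def answer(self, video_id: str, prompt: str) -> str:
        emb = self.embeddings(video_id)
        # Placeholder for the language-model decoding step.
        return f"{prompt!r} answered from a {len(emb)}-dim cached embedding"


index = VideoIndex()
for question in ["Who speaks first?", "When does the logo appear?", "Summarise"]:
    index.answer("keynote-2024.mp4", question)
print(index.encode_calls)  # 1: three queries, one encoding pass
```

The design point is that the expensive step scales with the number of videos, not the number of questions asked about them.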

Pegasus 1.2 is a cutting-edge technology that delivers corporate value through its intelligent, focused system architecture and excels in production-grade video processing pipelines.

Superior video language model for extended videos

Businesses need to handle long videos, yet processing time and time-to-value are important concerns. As input videos get longer, a standard video processing/inference system cannot cope with orders of magnitude more frames, which makes it unsuitable for widespread adoption and commercial use. A commercial system must also answer prompts and queries accurately across longer time spans.

Latency

To evaluate Pegasus 1.2's speed, TwelveLabs compared its time-to-first-token (TTFT) on 3–60-minute videos against the frontier model APIs GPT-4o and Gemini 1.5 Pro. Pegasus 1.2 shows consistent TTFT for videos up to 15 minutes and responds faster on longer content, thanks to its video-focused model design and optimised inference engine.
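Time-to-first-token is simply the delay between sending a request and receiving the first streamed token. A minimal sketch of measuring it, assuming the response arrives as a Python iterator of tokens; `fake_stream` is a hypothetical stand-in for a real streaming client, not any vendor's SDK.

```python
import time
from typing import Callable, Iterable, Iterator


def measure_ttft(start_request: Callable[[], Iterable[str]]) -> tuple[float, list[str]]:
    """Return (seconds until the first token, all tokens) for one streamed request."""
    t0 = time.perf_counter()
    stream = iter(start_request())
    first = next(stream)                # blocks until the first token arrives
    ttft = time.perf_counter() - t0
    return ttft, [first, *stream]       # drain the rest of the stream


def fake_stream(delay_s: float) -> Iterator[str]:
    """Hypothetical model stream: waits `delay_s`, then yields tokens."""
    time.sleep(delay_s)                 # simulated prefill (video + prompt processing)
    yield from ["The", " speaker", " enters", " at", " 02:13"]


ttft, tokens = measure_ttft(lambda: fake_stream(0.05))
print(f"TTFT: {ttft * 1000:.0f} ms, tokens: {len(tokens)}")
```

Running the same harness over videos of increasing length is one way to see whether TTFT stays flat or grows with input size.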

Performance

Pegasus 1.2 is compared with frontier model APIs on VideoMME-Long, a subset of Video-MME containing videos longer than 30 minutes. Pegasus 1.2 outperforms all of the flagship APIs tested, demonstrating state-of-the-art performance.

Pricing

Pegasus 1.2 provides best-in-class commercial video processing at low cost. Rather than trying to do everything, TwelveLabs focuses on long videos and accurate temporal information. This focused approach lets its highly optimised system perform well at a competitive price.

Better still, the system can generate many video-to-text outputs without costing much. Pegasus 1.2 produces rich video embeddings from indexed videos and saves them in the database for future API queries, allowing clients to build continually at little cost. Google Gemini 1.5 Pro's context cache costs $4.50 per million tokens per hour of storage, and one million tokens is roughly the token count for an hour of video. By contrast, Pegasus 1.2's integrated storage costs $0.09 per video hour per month, about 36,000 times less. This design benefits customers with large video archives who need to understand everything cheaply.
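The "36,000 times" figure can be reproduced from the two prices quoted above, assuming a 30-day (720-hour) month and, as the text states, roughly one million tokens per hour of video.

```python
# Prices as quoted in the text above.
GEMINI_CACHE_USD_PER_MTOK_HOUR = 4.50     # $ per 1M cached tokens per hour of storage
PEGASUS_USD_PER_VIDEO_HOUR_MONTH = 0.09   # $ per hour of video per month
TOKENS_PER_VIDEO_HOUR_M = 1.0             # ~1M tokens per hour of video (per the text)
HOURS_PER_MONTH = 24 * 30                 # assumption: 30-day month

# Cost of keeping one hour of video cached in Gemini for a whole month.
gemini_monthly = GEMINI_CACHE_USD_PER_MTOK_HOUR * TOKENS_PER_VIDEO_HOUR_M * HOURS_PER_MONTH
ratio = gemini_monthly / PEGASUS_USD_PER_VIDEO_HOUR_MONTH

print(f"Gemini cache: ${gemini_monthly:,.0f} per video hour per month")  # $3,240
print(f"Ratio: {ratio:,.0f}x")                                           # 36,000x
```

For a hypothetical 1,000-hour archive, that works out to about $90 per month of integrated storage versus about $3.24 million per month of cache.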

Model Overview & Limitations

Architecture

Pegasus 1.2 uses an encoder-decoder architecture for video understanding that includes a video encoder, a tokeniser, and a large language model. Although efficient, the design supports comprehensive analysis of both textual and visual data.

Together, these components form a cohesive system that can capture both long-term contextual information and fine-grained details. The architecture shows that relatively small models can interpret video effectively through careful design decisions and creative solutions to fundamental multimodal processing challenges.

Restrictions

Safety and bias

Pegasus 1.2 includes safety protections, but like any AI model, it may produce objectionable or hazardous content without sufficient oversight and control. The safety and ethics of video foundation models are still being studied. TwelveLabs plans to publish a complete assessment and ethics report after further testing and feedback.

Hallucinations

Pegasus 1.2 may occasionally produce incorrect outputs. Despite improvements since Pegasus 1.1 to reduce hallucinations, users should be aware of this limitation, especially for tasks that demand precision and factual accuracy.

#technology#technews#govindhtech#news#technologynews#AI#artificial intelligence#Pegasus 1.2#TwelveLabs#Amazon Bedrock#Gemini 1.5 Pro#multimodal#API

Text

The Future of AI: What’s Next in Machine Learning and Deep Learning?

Artificial Intelligence (AI) has evolved rapidly over the past decade, transforming industries and redefining the way businesses operate. With machine learning and deep learning at the core of AI advancements, the coming years promise further innovation, unlocking new opportunities across industries from healthcare and finance to cybersecurity and automation. In this blog, we explore the upcoming trends and what lies ahead in the world of machine learning and deep learning.

1. Advancements in Explainable AI (XAI)

As AI models become more complex, understanding their decision-making process remains a challenge. Explainable AI (XAI) aims to make machine learning and deep learning models more transparent and interpretable. Businesses and regulators are pushing for AI systems that provide clear justifications for their outputs, ensuring ethical AI adoption across industries. The growing demand for fairness and accountability in AI-driven decisions is accelerating research into interpretable AI, helping users trust and effectively utilize AI-powered tools.
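One widely used, model-agnostic explanation technique is permutation importance: shuffle one input feature and measure how much accuracy drops. The sketch below is an illustration, not a library implementation; `toy_model` is a hypothetical classifier that only looks at feature 0, so feature 1 should score exactly zero.

```python
import random


def toy_model(row: list[float]) -> int:
    """Hypothetical classifier: predicts 1 when feature 0 is at least 5."""
    return int(row[0] >= 5)


def accuracy(model, X: list[list[float]], y: list[int]) -> float:
    return sum(int(model(row) == label) for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats: int = 5, seed: int = 0) -> list[float]:
    """Mean accuracy drop when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing only feature j with the shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / len(drops))
    return importances


X = [[float(i), float(i % 3)] for i in range(10)]
y = [toy_model(row) for row in X]
print(permutation_importance(toy_model, X, y))  # first value > 0.0, second is 0.0
```

A large drop tells users which inputs a model actually relies on, which is the kind of justification businesses and regulators are asking for.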

2. AI-Powered Automation in IT and Business Processes

AI-driven automation is set to revolutionize business operations by minimizing human intervention. Machine learning and deep learning algorithms can predict and automate tasks in various sectors, from IT infrastructure management to customer service and finance. This shift will increase efficiency, reduce costs, and improve decision-making. Businesses that adopt AI-powered automation will gain a competitive advantage by streamlining workflows and enhancing productivity through machine learning and deep learning capabilities.

3. Neural Network Enhancements and Next-Gen Deep Learning Models

Deep learning models are becoming more sophisticated, with innovations like transformer models (e.g., GPT-4, BERT) pushing the boundaries of natural language processing (NLP). The next wave of machine learning and deep learning will focus on improving efficiency, reducing computation costs, and enhancing real-time AI applications. Advancements in neural networks will also lead to better image and speech recognition systems, making AI more accessible and functional in everyday life.

4. AI in Edge Computing for Faster and Smarter Processing

With the rise of IoT and real-time processing needs, AI is shifting toward edge computing. This allows machine learning and deep learning models to process data locally, reducing latency and dependency on cloud services. Industries like healthcare, autonomous vehicles, and smart cities will greatly benefit from edge AI integration. The fusion of edge computing with machine learning and deep learning will enable faster decision-making and improved efficiency in critical applications like medical diagnostics and predictive maintenance.

5. Ethical AI and Bias Mitigation

AI systems are prone to biases stemming from data limitations and shortcomings in how models are trained. The future of machine learning and deep learning will prioritize ethical AI frameworks to mitigate bias and ensure fairness. Companies and researchers are working towards AI models that are more inclusive and less prone to discriminatory outputs. Ethical AI development will involve strategies like diverse dataset curation, bias auditing, and transparent AI decision-making processes to build trust in AI-powered systems.
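A common first step in bias auditing is to compare a model's positive-outcome rate across groups (demographic parity). A minimal sketch with made-up audit data; the group labels and decisions are hypothetical, and real audits combine several fairness metrics rather than relying on this one alone.

```python
from collections import defaultdict


def selection_rates(groups: list[str], decisions: list[int]) -> dict[str, float]:
    """Fraction of positive decisions (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for g, d in zip(groups, decisions):
        totals[g] += 1
        positives[g] += d
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(rates: dict[str, float]) -> float:
    """Largest difference in selection rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: which applicants a model approved (1) or rejected (0).
groups    = ["A", "A", "A", "A", "B", "B", "B", "B"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, decisions)
print(rates)                          # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(rates))  # 0.5
```

A gap this large would flag the model for closer review; what threshold counts as acceptable is a policy decision, not something the code decides.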

6. Quantum AI: The Next Frontier

Quantum computing could eventually transform AI by enabling new kinds of computation. Researchers hope quantum AI will accelerate certain machine learning and deep learning workloads, such as complex optimization problems and large-scale simulations that strain classical computers. If the technology matures, it may open new doors for solving problems previously considered intractable due to computational constraints.

7. AI-Generated Content and Creative Applications

From AI-generated art and music to automated content creation, AI is making strides in the creative industry. Generative AI models like DALL-E and ChatGPT are paving the way for more sophisticated and human-like AI creativity. The future of machine learning and deep learning will push the boundaries of AI-driven content creation, enabling businesses to leverage AI for personalized marketing, video editing, and even storytelling.

8. AI in Cybersecurity: Real-Time Threat Detection

As cyber threats evolve, AI-powered cybersecurity solutions are becoming essential. Machine learning and deep learning models can analyze and predict security vulnerabilities, detecting threats in real time. The future of AI in cybersecurity lies in its ability to autonomously defend against sophisticated cyberattacks. AI-powered security systems will continuously learn from emerging threats, adapting and strengthening defense mechanisms to ensure data privacy and protection.
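Production systems use learned models, but the core idea of flagging activity that deviates from a continuously updated baseline can be shown with a rolling z-score detector. This is a deliberately simple statistical stand-in, not a deep learning model; the window size, threshold, and traffic numbers are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScoreDetector:
    """Flags values that sit far outside the recent baseline."""

    def __init__(self, window: int = 10, threshold: float = 3.0):
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.history) == self.history.maxlen:
            mu, sigma = mean(self.history), pstdev(self.history)
            # Guard against a perfectly flat baseline (sigma == 0).
            is_anomaly = sigma > 0 and abs(value - mu) / sigma > self.threshold
        # Keep learning: the baseline adapts as new traffic arrives.
        self.history.append(value)
        return is_anomaly


# Hypothetical login attempts per minute; the final spike mimics a brute-force burst.
traffic = [20, 22, 19, 21, 20, 23, 18, 22, 21, 20, 19, 21, 250]
detector = RollingZScoreDetector(window=10, threshold=3.0)
flags = [detector.observe(x) for x in traffic]
print([i for i, f in enumerate(flags) if f])  # [12]
```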

9. The Role of AI in Personalized Healthcare

One of the most impactful applications of machine learning and deep learning is in healthcare. AI-driven diagnostics, predictive analytics, and drug discovery are transforming patient care. AI models can analyze medical images, detect anomalies, and provide early disease detection, improving treatment outcomes. The integration of machine learning and deep learning in healthcare will enable personalized treatment plans and faster drug development, ultimately saving lives.

10. AI and the Future of Autonomous Systems

From self-driving cars to intelligent robotics, machine learning and deep learning are at the forefront of autonomous technology. The evolution of AI-powered autonomous systems will improve safety, efficiency, and decision-making capabilities. As AI continues to advance, we can expect self-learning robots, smarter logistics systems, and fully automated industrial processes that enhance productivity across various domains.

Conclusion

The future of AI, machine learning and deep learning is brimming with possibilities. From enhancing automation to enabling ethical and explainable AI, the next phase of AI development will drive unprecedented innovation. Businesses and tech leaders must stay ahead of these trends to leverage AI's full potential. With continued advancements in machine learning and deep learning, AI will become more intelligent, efficient, and accessible, shaping the digital world like never before.

Are you ready for the AI-driven future? Stay updated with the latest AI trends and explore how these advancements can shape your business!

#artificial intelligence#machine learning#techinnovation#tech#technology#web developers#ai#web#deep learning#Information and technology#IT#ai future

Text

Building AI Apps Using OpenAI’s Models: OpenAI’s GPT Model, Codex Model, DALL·E Model, and Whisper Model - A Comprehensive Guide

OpenAI’s suite of models is transforming the way developers build intelligent applications. From generating human-like text to coding assistance, from creating compelling visual art to transcribing speech, these models empower businesses and developers to push the…

Text

AI Agent Development: How to Create Intelligent Virtual Assistants for Business Success

In today's digital landscape, businesses are increasingly turning to AI-powered virtual assistants to streamline operations, enhance customer service, and boost productivity. AI agent development is at the forefront of this transformation, enabling companies to create intelligent, responsive, and highly efficient virtual assistants. In this blog, we will explore how to develop AI agents and leverage them for business success.

Understanding AI Agents and Virtual Assistants

AI agents, or intelligent virtual assistants, are software programs that use artificial intelligence, machine learning, and natural language processing (NLP) to interact with users, automate tasks, and make decisions. These agents can be deployed across various platforms, including websites, mobile apps, and messaging applications, to improve customer engagement and operational efficiency.

Key Features of AI Agents

Natural Language Processing (NLP): Enables the assistant to understand and process human language.

Machine Learning (ML): Allows the assistant to improve over time based on user interactions.

Conversational AI: Facilitates human-like interactions.

Task Automation: Handles repetitive tasks like answering FAQs, scheduling appointments, and processing orders.

Integration Capabilities: Connects with CRM, ERP, and other business tools for seamless operations.

Steps to Develop an AI Virtual Assistant

1. Define Business Objectives

Before developing an AI agent, it is crucial to identify the business goals it will serve. Whether it's improving customer support, automating sales inquiries, or handling HR tasks, a well-defined purpose ensures the assistant aligns with organizational needs.

2. Choose the Right AI Technologies

Selecting the right technology stack is essential for building a powerful AI agent. Key technologies include:

NLP frameworks: OpenAI's GPT, Google's Dialogflow, or Rasa.

Machine Learning Platforms: TensorFlow, PyTorch, or Scikit-learn.

Speech Recognition: Amazon Lex, IBM Watson, or Microsoft Azure Speech.

Cloud Services: AWS, Google Cloud, or Microsoft Azure.

3. Design the Conversation Flow

A well-structured conversation flow is crucial for user experience. Define intents (what the user wants) and responses to ensure the AI assistant provides accurate and helpful information. Tools like chatbot builders or decision trees help streamline this process.
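Intents and responses can be prototyped before committing to a framework. A minimal sketch, assuming simple keyword matching; the intent names, keywords, and replies are hypothetical, and frameworks such as Dialogflow or Rasa replace the keyword matcher with a trained language-understanding model.

```python
from typing import Optional

# Hypothetical intents for a small business assistant: name -> (keywords, response).
INTENTS = {
    "opening_hours": ({"hours", "open", "close"}, "We're open 9am-6pm, Monday to Friday."),
    "book_appointment": ({"book", "appointment", "schedule"}, "Sure, what day suits you?"),
    "order_status": ({"order", "tracking", "delivery"}, "Please share your order number."),
}
FALLBACK = "Sorry, I didn't catch that. Could you rephrase, or type 'agent' for a human?"


def detect_intent(message: str) -> Optional[str]:
    """Pick the intent whose keywords overlap most with the message."""
    words = set(message.lower().replace("?", " ").replace(",", " ").split())
    best, best_score = None, 0
    for name, (keywords, _) in INTENTS.items():
        score = len(words & keywords)
        if score > best_score:
            best, best_score = name, score
    return best


def respond(message: str) -> str:
    intent = detect_intent(message)
    # Unrecognised input falls through to a fallback that offers a human handoff.
    return INTENTS[intent][1] if intent else FALLBACK


print(respond("What time do you open?"))
print(respond("Can I book an appointment for Tuesday?"))
print(respond("Tell me a joke"))
```

Designing the fallback path early matters as much as the happy paths, since it decides what users experience when the assistant is unsure.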

4. Train the AI Model

Training an AI assistant involves feeding it with relevant datasets to improve accuracy. This may include:

Supervised Learning: Using labeled datasets for training.

Reinforcement Learning: Allowing the assistant to learn from interactions.

Continuous Learning: Updating models based on user feedback and new data.
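Supervised learning here means learning intents from labelled example utterances instead of hand-written keywords. The sketch below trains a tiny multinomial Naive Bayes classifier with add-one (Laplace) smoothing using only the standard library. It is an assumption-laden toy: the training phrases are hypothetical, and a real assistant would use far more data and an established library.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    return text.lower().replace("?", "").replace(",", "").split()


class NaiveBayesIntents:
    """Multinomial Naive Bayes over word counts, with add-one (Laplace) smoothing."""

    def fit(self, examples: list[tuple[str, str]]) -> "NaiveBayesIntents":
        self.class_counts = Counter(label for _, label in examples)
        self.word_counts = defaultdict(Counter)
        for text, label in examples:
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        total = sum(self.class_counts.values())
        scores = {}
        for label, n in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # log P(label) + sum of log P(word | label); unseen words get add-one mass.
            score = math.log(n / total)
            for w in tokenize(text):
                score += math.log((counts[w] + 1) / denom)
            scores[label] = score
        return max(scores, key=scores.get)


# Hypothetical labelled training data: (utterance, intent).
training = [
    ("where is my order", "order_status"),
    ("track my delivery", "order_status"),
    ("has my package shipped", "order_status"),
    ("i want to book an appointment", "book_appointment"),
    ("schedule a call for friday", "book_appointment"),
    ("can i book a meeting", "book_appointment"),
]
model = NaiveBayesIntents().fit(training)
print(model.predict("when will my order arrive"))  # order_status
print(model.predict("book me in for monday"))      # book_appointment
```

Continuous learning then amounts to appending newly labelled conversations to `training` and refitting.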

5. Test and Optimize

Before deployment, rigorous testing is essential to refine the AI assistant's performance. Conduct:

User Testing: To evaluate usability and responsiveness.

A/B Testing: To compare different versions for effectiveness.

Performance Analysis: To measure speed, accuracy, and reliability.
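For A/B testing, one common way to check whether one assistant version really resolves more conversations than another is a two-proportion z-test. A minimal sketch using only the standard library; the conversation counts are made up, and the 0.05 significance level is a conventional assumption rather than anything the text prescribes.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both versions share one success rate."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: conversations resolved without a human handoff.
z, p = two_proportion_z_test(success_a=420, n_a=1000, success_b=470, n_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("Version B is better" if p < 0.05 and z > 0 else "No significant difference")
```

With these made-up numbers, version B's five-point lift is unlikely to be chance, but the same lift on a few dozen conversations would not be significant, which is why sample size matters when comparing versions.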

6. Deploy and Monitor

Once the AI assistant is live, continuous monitoring and optimization are necessary to enhance user experience. Use analytics to track interactions, identify issues, and implement improvements over time.

Benefits of AI Virtual Assistants for Businesses

1. Enhanced Customer Service

AI-powered virtual assistants provide 24/7 support, instantly responding to customer queries and reducing response times.

2. Increased Efficiency

By automating repetitive tasks, businesses can save time and resources, allowing employees to focus on higher-value tasks.

3. Cost Savings

AI assistants reduce the need for large customer support teams, leading to significant cost reductions.

4. Scalability

Unlike human agents, AI assistants can handle multiple conversations simultaneously, making them highly scalable solutions.

5. Data-Driven Insights

AI assistants gather valuable data on customer behavior and preferences, enabling businesses to make informed decisions.

Future Trends in AI Agent Development

1. Hyper-Personalization

AI assistants will leverage deep learning to offer more personalized interactions based on user history and preferences.

2. Voice and Multimodal AI

The integration of voice recognition and visual processing will make AI assistants more interactive and intuitive.

3. Emotional AI

Advancements in AI will enable virtual assistants to detect and respond to human emotions for more empathetic interactions.

4. Autonomous AI Agents

Future AI agents will not only respond to queries but also proactively assist users by predicting their needs and taking independent actions.

Conclusion

AI agent development is transforming the way businesses interact with customers and streamline operations. By leveraging cutting-edge AI technologies, companies can create intelligent virtual assistants that enhance efficiency, reduce costs, and drive business success. As AI continues to evolve, embracing AI-powered assistants will be essential for staying competitive in the digital era.

Text

ChatGPT vs DeepSeek: A Comprehensive Comparison of AI Chatbots

Artificial Intelligence (AI) has revolutionized the way we interact with technology. AI-powered chatbots, such as ChatGPT and DeepSeek, have emerged as powerful tools for communication, research, and automation. While both models are designed to provide intelligent and conversational responses, they differ in various aspects, including their development, functionality, accuracy, and ethical considerations. This article provides a detailed comparison of ChatGPT and DeepSeek, helping users determine which AI chatbot best suits their needs.

Understanding ChatGPT and DeepSeek

What is ChatGPT?

ChatGPT, developed by OpenAI, is one of the most advanced AI chatbots available today. Built on the GPT (Generative Pre-trained Transformer) architecture, ChatGPT has been trained on a vast dataset, enabling it to generate human-like responses in various contexts. The chatbot is widely used for content creation, coding assistance, education, and even casual conversation. OpenAI continually updates ChatGPT to improve its accuracy and expand its capabilities, making it a preferred choice for many users.

What is DeepSeek?

DeepSeek is a relatively new AI chatbot that aims to compete with existing AI models like ChatGPT. Developed with a focus on efficiency and affordability, DeepSeek has gained attention for its ability to operate with fewer computing resources. Unlike ChatGPT, which relies on large-scale data processing, DeepSeek is optimized for streamlined AI interactions, making it a cost-effective alternative for businesses and individuals looking for an AI-powered chatbot.

Key Differences Between ChatGPT and DeepSeek

1. Development and Technology

ChatGPT: Built on OpenAI’s GPT architecture, ChatGPT undergoes extensive training with massive datasets. It utilizes deep learning techniques to generate coherent and contextually accurate responses. The model is updated frequently to enhance performance and improve response quality.

DeepSeek: While DeepSeek also leverages machine learning techniques, it focuses on optimizing efficiency and reducing computational costs. It is designed to balance performance and affordability, making it a viable alternative to resource-intensive models like ChatGPT.

2. Accuracy and Response Quality

ChatGPT: Known for its ability to provide highly accurate and nuanced responses, ChatGPT excels in content creation, problem-solving, and coding assistance. It can generate long-form content and has a strong understanding of complex topics.

DeepSeek: While DeepSeek performs well for general queries and casual interactions, it may struggle with complex problem-solving tasks compared to ChatGPT. Its responses tend to be concise and efficient, making it a suitable choice for straightforward queries but less reliable for in-depth discussions.

3. Computational Efficiency and Cost

ChatGPT: Due to its extensive training and large-scale model, ChatGPT requires significant computational power, making it costlier for businesses to integrate into their systems.

DeepSeek: One of DeepSeek’s key advantages is its ability to function with reduced computing resources, making it a more affordable AI chatbot. This cost-effectiveness makes it an attractive option for startups and small businesses with limited budgets.

4. AI Training Data and Bias

ChatGPT: Trained on diverse datasets, ChatGPT aims to minimize bias but still faces challenges in ensuring completely neutral and ethical responses. OpenAI implements content moderation policies to filter inappropriate or biased outputs.

DeepSeek: DeepSeek also incorporates measures to prevent bias but may have different training methodologies that affect its neutrality. As a result, users should assess both models to determine which aligns best with their ethical considerations and content requirements.

5. Use Cases and Applications

ChatGPT: Best suited for individuals and businesses that require advanced AI assistance for content creation, research, education, customer service, and coding support.

DeepSeek: Ideal for users seeking an affordable and efficient AI chatbot for basic queries, quick responses, and streamlined interactions. It may not offer the same depth of analysis as ChatGPT but serves as a practical alternative for general use.

Which AI Chatbot Should You Choose?

The choice between ChatGPT and DeepSeek depends on your specific needs and priorities. If you require an AI chatbot that delivers high accuracy, complex problem-solving, and extensive functionality, ChatGPT is the superior choice. However, if affordability and computational efficiency are your primary concerns, DeepSeek provides a cost-effective alternative.

Businesses and developers should consider factors such as budget, processing power, and the level of AI sophistication required before selecting an AI chatbot. As AI technology continues to evolve, both ChatGPT and DeepSeek will likely see further improvements, making them valuable assets in the digital landscape.

Final Thoughts

ChatGPT and DeepSeek each have their strengths and weaknesses, catering to different user needs. While ChatGPT leads in performance, depth, and versatility, DeepSeek offers an economical and efficient AI experience. As AI chatbots continue to advance, users can expect even more refined capabilities, ensuring AI remains a powerful tool for communication and automation.

By understanding the key differences between ChatGPT and DeepSeek, users can make informed decisions about which AI chatbot aligns best with their objectives. Whether prioritizing accuracy or cost-efficiency, both models contribute to the growing impact of AI on modern communication and technology.

“Unpacking the Future of Tech: LLMs, Cloud Computing, and AI”

🌐 The future of coding is evolving fast—driven by powerful technologies like Artificial Intelligence, Large Language Models (LLMs), and Cloud Computing. Here’s how each of these is changing the game:

1. AI in Everyday Coding: From debugging to auto-completing code, AI is no longer just for data scientists. It’s a powerful tool for every coder, enhancing productivity and freeing developers to focus on complex, creative tasks.

2. LLMs (Large Language Models): With models like GPT-4, LLMs are transforming how we interact with machines, providing everything from code suggestions to creating full applications. Ever tried coding with an LLM as your pair programmer?

3. The Cloud as the New Normal: Cloud computing has revolutionized scalability, allowing businesses of any size to deploy applications globally without building huge infrastructure of their own. Understanding cloud platforms is a must for any modern developer.

🔍 Takeaway: Whether you’re diving into AI, experimenting with LLMs, or building in the cloud, these technologies open up endless possibilities for the future. How are you integrating these into your coding journey?

Kai-Fu Lee has declared war on Nvidia and the entire US AI ecosystem.

🔹 Lee emphasizes the need to reduce the cost of inference, which is crucial for making AI applications accessible to businesses. He points out that the current price of services like GPT-4, $4.40 per million tokens, is prohibitively expensive compared to traditional search queries. This high cost hampers the widespread adoption of AI applications in business and calls for a shift in how AI models are developed and priced. By lowering inference costs, companies can make AI solutions more practical and increase demand for them.

🔹 Another critical direction Lee advocates is the transition from universal models to “expert models,” which are tailored to specific industries using targeted data. He argues that businesses do not benefit from generic models trained on vast amounts of unlabeled data, as these often lack the precision needed for specific applications. Instead, creating specialized neural networks that cater to particular sectors can deliver comparable intelligence with reduced computational demands. This expert model approach aligns with Lee’s vision of a more efficient and cost-effective AI ecosystem.

🔹 Lee’s startup, 01.AI, is already putting these ideas into practice. Its Yi-Lightning model has achieved impressive performance, ranking sixth globally while costing just $0.14 per million tokens. The model was trained with far fewer resources than its competitors, illustrating that high costs and massive datasets are not always necessary for effective AI training. Lee also argues that China’s engineering expertise and lower costs can improve data collection and processing, positioning the country not just to catch up with the U.S. in AI but potentially to surpass it in the near future. He envisions a future in which AI becomes integral to business operations, fundamentally changing how industries function and reducing reliance on traditional devices like smartphones.
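To make the price gap concrete, the short Python calculation below applies the two per-million-token prices quoted above to a hypothetical workload of one million queries a day at about 1,000 tokens each; the workload figures are assumptions for illustration, not numbers from Lee. It also uses a single flat price, whereas real providers often bill input and output tokens at different rates.

```python
# Per-million-token prices as quoted in the post (US dollars).
PRICE_PER_M_TOKENS = {"GPT-4": 4.40, "Yi-Lightning": 0.14}


def daily_cost(price_per_m: float, queries_per_day: int, tokens_per_query: int) -> float:
    """Dollar cost of one day's traffic at a flat per-million-token price."""
    total_tokens = queries_per_day * tokens_per_query
    return price_per_m * total_tokens / 1_000_000


# Hypothetical workload: 1 million queries a day, about 1,000 tokens each.
for model, price in PRICE_PER_M_TOKENS.items():
    print(f"{model}: ${daily_cost(price, 1_000_000, 1_000):,.2f} per day")
# GPT-4: $4,400.00 per day
# Yi-Lightning: $140.00 per day

ratio = PRICE_PER_M_TOKENS["GPT-4"] / PRICE_PER_M_TOKENS["Yi-Lightning"]
print(f"about {ratio:.0f}x cheaper")  # about 31x cheaper
```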

#artificial intelligence#technology#coding#ai#tech news#tech world#technews#open ai#ai hardware#ai model#KAI FU LEE#nvidia#US#usa#china#AI ECOSYSTEM#the tech empire

How Large Language Models (LLMs) are Transforming Data Cleaning in 2024

Data is the new oil, and just like crude oil, it needs refining before it can be utilized effectively. Data cleaning, a crucial part of data preprocessing, is one of the most time-consuming and tedious tasks in data analytics. With the advent of Artificial Intelligence, particularly Large Language Models (LLMs), the landscape of data cleaning has started to shift dramatically. This blog delves into how LLMs are revolutionizing data cleaning in 2024 and what this means for businesses and data scientists.

The Growing Importance of Data Cleaning

Data cleaning involves identifying and rectifying errors, missing values, outliers, duplicates, and inconsistencies within datasets to ensure that data is accurate and usable. This step can take up to 80% of a data scientist's time. Inaccurate data can lead to flawed analysis, costing businesses both time and money. Hence, automating the data cleaning process without compromising data quality is essential. This is where LLMs come into play.

What are Large Language Models (LLMs)?

LLMs, like OpenAI's GPT-4, and related language models such as Google's BERT are deep learning models trained on vast amounts of text data. Generative models like GPT-4 can understand and generate human-like text, answer complex queries, and even write code, while encoder models like BERT are used mainly for understanding tasks such as classification. With millions (sometimes billions) of parameters, these models can capture context, semantics, and nuances from data, making them strong candidates for tasks beyond text generation, such as data cleaning.

To see how LLMs are also transforming other domains, like Business Intelligence (BI) and Analytics, check out our blog post "How LLMs are Transforming Business Intelligence (BI) and Analytics."

Traditional Data Cleaning Methods vs. LLM-Driven Approaches

Traditionally, data cleaning has relied heavily on rule-based systems and manual intervention. Common methods include:

Handling missing values: Methods like mean imputation or simply removing rows with missing data are used.

Detecting outliers: Outliers are identified using statistical methods, such as standard deviation or the Interquartile Range (IQR).

Deduplication: Exact or fuzzy matching algorithms identify and remove duplicates in datasets.
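For comparison with the LLM-driven approaches discussed below, here is a minimal Python sketch of the three traditional techniques listed above, using only the standard library. The sample values, the 1.5 * IQR rule and the 0.9 similarity threshold are common conventions assumed for illustration.

```python
from difflib import SequenceMatcher
from statistics import mean, quantiles

# Hypothetical numeric column with one missing value (None) and one extreme reading.
values = [12.0, 15.0, None, 14.0, 13.0, 250.0, 16.0]

# 1. Mean imputation: replace missing entries with the mean of the observed ones.
observed = [v for v in values if v is not None]
fill = mean(observed)
imputed = [fill if v is None else v for v in values]

# 2. IQR outlier detection: flag points more than 1.5 * IQR outside the quartiles.
q1, _, q3 = quantiles(imputed, n=4)
iqr = q3 - q1
outliers = [v for v in imputed if v < q1 - 1.5 * iqr or v > q3 + 1.5 * iqr]


# 3. Fuzzy deduplication: treat two strings as duplicates above a similarity threshold.
def is_fuzzy_duplicate(a: str, b: str, threshold: float = 0.9) -> bool:
    return SequenceMatcher(None, a.lower(), b.lower()).ratio() >= threshold


print(round(fill, 2), outliers)                                # 53.33 [250.0]
print(is_fuzzy_duplicate("Acme Corp", "Acme Corp."))           # True
print(is_fuzzy_duplicate("Apple Inc.", "Apple Incorporated"))  # False
```

Two limitations show up even in this toy: the outlier drags the imputed mean up to about 53, and the character-level matcher scores "Apple Inc." against "Apple Incorporated" at roughly 0.64, well below the threshold.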

However, these traditional approaches come with significant limitations. For instance, rule-based systems often fail when dealing with unstructured data or context-specific errors. They also require constant updates to account for new data patterns.

LLM-driven approaches offer a more dynamic, context-aware solution to these problems.

How LLMs are Transforming Data Cleaning

1. Understanding Contextual Data Anomalies

LLMs excel in natural language understanding, which allows them to detect context-specific anomalies that rule-based systems might overlook. For example, an LLM can be trained to recognize that “N/A” in a field might mean "Not Available" in some contexts and "Not Applicable" in others. This contextual awareness ensures that data anomalies are corrected more accurately.
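One lightweight way to apply this idea, assuming prompting rather than the training the paragraph mentions, is to show the model the surrounding record and restrict its answer to a fixed set of labels. In this Python sketch, `complete` is a hypothetical stand-in for any LLM client, and `toy_model` is a fake used only so the example runs.

```python
from typing import Callable

LABELS = ("NOT_AVAILABLE", "NOT_APPLICABLE", "UNKNOWN")


def interpret_na(column: str, row: dict[str, str], complete: Callable[[str], str]) -> str:
    """Ask a language model what "N/A" means in one record's context.

    `complete` is a hypothetical prompt-in, text-out function standing in for
    whatever LLM client is used; it is not a specific vendor API.
    """
    context = ", ".join(f"{k}={v}" for k, v in row.items())
    prompt = (
        f'In the record [{context}], the field "{column}" contains "N/A". '
        "Does it mean the value is missing or that the field does not apply? "
        f"Answer with exactly one of: {', '.join(LABELS)}."
    )
    answer = complete(prompt).strip().upper()
    # Never trust free-form output: anything outside the allowed labels
    # becomes UNKNOWN so it can be routed to a human.
    return answer if answer in LABELS else "UNKNOWN"


def toy_model(prompt: str) -> str:
    # Toy rule standing in for a real model, for demonstration only.
    return "not_applicable" if "employment_status=retired" in prompt else "not_available"


print(interpret_na("employer", {"employment_status": "retired", "employer": "N/A"}, toy_model))
# NOT_APPLICABLE
print(interpret_na("phone", {"employment_status": "employed", "phone": "N/A"}, toy_model))
# NOT_AVAILABLE
```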

2. Data Imputation Using Natural Language Understanding

Missing data is one of the most common issues in data cleaning. LLMs, thanks to their vast training on text data, can fill in missing data points intelligently. For example, if a dataset contains customer reviews with missing ratings, an LLM could predict the likely rating based on the review's sentiment and content.
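A minimal Python sketch of the review-to-rating idea, under the same kind of assumptions: `complete` is a hypothetical prompt-in, text-out LLM function, and `fake_rater` is a keyword rule used only to make the example runnable. Returning `None` when the reply contains no valid 1-5 rating leaves the value missing instead of writing a guess into the dataset.

```python
import re
from typing import Callable, Optional


def impute_rating(review: str, complete: Callable[[str], str]) -> Optional[int]:
    """Predict a missing 1-5 star rating from the review text.

    `complete` is a hypothetical prompt-in, text-out LLM function. Returns
    None when the reply contains no valid rating, leaving the value missing.
    """
    prompt = (
        "On a scale of 1 to 5, which star rating best matches this customer "
        f"review? Reply with a single digit.\n\nReview: {review}"
    )
    # Accept only a standalone digit from 1 to 5 anywhere in the reply.
    match = re.search(r"\b([1-5])\b", complete(prompt))
    return int(match.group(1)) if match else None


def fake_rater(prompt: str) -> str:
    # Keyword rule standing in for a real model, for demonstration only.
    review = prompt.split("Review: ", 1)[1].lower()
    return "Rating: 5" if "love" in review else "I am not sure."


print(impute_rating("I love this blender, works perfectly.", fake_rater))  # 5
print(impute_rating("Arrived late.", fake_rater))  # None
```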

A recent study conducted by researchers at MIT (2023) demonstrated that LLMs could improve imputation accuracy by up to 30% compared to traditional statistical methods. These models were trained to understand patterns in missing data and generate contextually accurate predictions, which proved to be especially useful in cases where human oversight was traditionally required.

3. Automating Deduplication and Data Normalization

LLMs can handle text-based duplication much more effectively than traditional fuzzy matching algorithms. Since these models understand the nuances of language, they can identify duplicate entries even when the text is not an exact match. For example, consider two entries: "Apple Inc." and "Apple Incorporated." Traditional algorithms might not catch this as a duplicate, but an LLM can easily detect that both refer to the same entity.

Similarly, data normalization—ensuring that data is formatted uniformly across a dataset—can be automated with LLMs. These models can normalize everything from addresses to company names based on their understanding of common patterns and formats.
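The "Apple Inc." / "Apple Incorporated" case suggests a two-stage pattern, sketched here in Python as an assumption rather than a description of any specific tool: a cheap blocking rule narrows down candidate pairs, and an LLM judges only those. `same_entity` is a hypothetical LLM wrapper, and `toy_judge` is a stand-in lookup so the example runs.

```python
from itertools import combinations
from typing import Callable


def find_duplicates(names: list[str], same_entity: Callable[[str, str], bool]) -> list[tuple[str, str]]:
    """Return pairs of names judged to refer to the same entity.

    Asking an LLM about every pair is expensive, so a cheap blocking step
    first keeps only pairs that share a first word. `same_entity` is a
    hypothetical wrapper around an LLM prompt such as "Do these two names
    refer to the same company? Answer yes or no." and makes the final call.
    """
    names = [n for n in names if n.strip()]  # skip blank entries
    pairs = []
    for a, b in combinations(names, 2):
        if a.split()[0].lower() != b.split()[0].lower():
            continue  # blocked: not worth an LLM call
        if same_entity(a, b):
            pairs.append((a, b))
    return pairs


# Toy judge standing in for the LLM: a lookup of known equivalent names.
KNOWN_SAME = {frozenset({"Apple Inc.", "Apple Incorporated"})}


def toy_judge(a: str, b: str) -> bool:
    return frozenset({a, b}) in KNOWN_SAME


names = ["Apple Inc.", "Microsoft Corp.", "Apple Incorporated", "Applebee's"]
print(find_duplicates(names, toy_judge))
# [('Apple Inc.', 'Apple Incorporated')]
```

Blocking on the first word is deliberately crude; it would miss abbreviations such as "IBM" versus "International Business Machines", so a real pipeline would likely need richer blocking keys.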

4. Handling Unstructured Data

One of the greatest strengths of LLMs is their ability to work with unstructured data, which is often neglected in traditional data cleaning processes. While rule-based systems struggle to clean unstructured text, such as customer feedback or social media comments, LLMs excel in this domain. For instance, they can classify, summarize, and extract insights from large volumes of unstructured text, converting it into a more analyzable format.

For businesses dealing with social media data, LLMs can be used to clean and organize comments by detecting sentiment, identifying spam or irrelevant information, and removing outliers from the dataset. This is an area where LLMs offer significant advantages over traditional data cleaning methods.
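A Python sketch of the comment-cleaning step described above, assuming an LLM that can be asked to reply in JSON. `complete` is a hypothetical prompt-in, text-out function and `toy_classifier` is a keyword-based fake so the example runs; validating every reply against a fixed label set guards against malformed or unexpected output.

```python
import json
from typing import Callable

ALLOWED = {"spam", "irrelevant", "positive", "negative", "neutral"}


def clean_comments(comments: list[str], complete: Callable[[str], str]) -> list[dict]:
    """Label raw comments with an LLM, then drop spam and irrelevant ones.

    `complete` is a hypothetical prompt-in, text-out LLM call expected to
    reply with JSON such as {"label": "negative"}.
    """
    kept = []
    for text in comments:
        prompt = (
            "Classify this comment as spam, irrelevant, positive, negative or "
            'neutral. Reply only with JSON like {"label": "neutral"}.\n\n' + text
        )
        try:
            label = json.loads(complete(prompt)).get("label")
        except (json.JSONDecodeError, AttributeError):
            label = None  # reply was not a JSON object
        if not isinstance(label, str) or label not in ALLOWED:
            # Unusable output is treated as irrelevant here; a production
            # pipeline might route it to human review instead.
            label = "irrelevant"
        if label not in ("spam", "irrelevant"):
            kept.append({"text": text, "sentiment": label})
    return kept


def toy_classifier(prompt: str) -> str:
    # Keyword rules standing in for a real model, for demonstration only.
    comment = prompt.rsplit("\n\n", 1)[-1].lower()
    if "http" in comment:
        return '{"label": "spam"}'
    return '{"label": "positive"}' if "great" in comment else '{"label": "neutral"}'


comments = ["Great service, thanks!", "Buy followers at http://example.com", "Delivered on Tuesday."]
for row in clean_comments(comments, toy_classifier):
    print(row)
# {'text': 'Great service, thanks!', 'sentiment': 'positive'}
# {'text': 'Delivered on Tuesday.', 'sentiment': 'neutral'}
```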

For those interested in leveraging both LLMs and DevOps for data cleaning, see our blog post "Leveraging LLMs and DevOps for Effective Data Cleaning: A Modern Approach."

Real-World Applications

1. Healthcare Sector

Data quality in healthcare is critical for effective treatment, patient safety, and research. LLMs have proven useful in cleaning messy medical data such as patient records, diagnostic reports, and treatment plans. For example, the use of LLMs has enabled hospitals to automate the cleaning of Electronic Health Records (EHRs) by understanding the medical context of missing or inconsistent information.

2. Financial Services

Financial institutions deal with massive datasets, ranging from customer transactions to market data. In the past, cleaning this data required extensive manual work and rule-based algorithms that often missed nuances. LLMs can assist in identifying fraudulent transactions, cleaning duplicate financial records, and even predicting market movements by analyzing unstructured market reports or news articles.

3. E-commerce

In e-commerce, product listings often contain inconsistent data due to manual entry or differing data formats across platforms. LLMs are helping e-commerce giants like Amazon clean and standardize product data more efficiently by detecting duplicates and filling in missing information based on customer reviews or product descriptions.

Challenges and Limitations

While LLMs have shown significant potential in data cleaning, they are not without challenges.

Training Data Quality: The effectiveness of an LLM depends on the quality of the data it was trained on. Poorly trained models might perpetuate errors in data cleaning.

Resource-Intensive: LLMs require substantial computational resources to function, which can be a limitation for small to medium-sized enterprises.

Data Privacy: Since LLMs are often cloud-based, using them to clean sensitive datasets, such as financial or healthcare data, raises concerns about data privacy and security.

The Future of Data Cleaning with LLMs

The advancements in LLMs represent a paradigm shift in how data cleaning will be conducted moving forward. As these models become more efficient and accessible, businesses will increasingly rely on them to automate data preprocessing tasks. We can expect further improvements in imputation techniques, anomaly detection, and the handling of unstructured data, all driven by the power of LLMs.

By integrating LLMs into data pipelines, organizations can not only save time but also improve the accuracy and reliability of their data, resulting in more informed decision-making and enhanced business outcomes. As we move further into 2024, the role of LLMs in data cleaning is set to expand, making this an exciting space to watch.

Large Language Models are poised to revolutionize the field of data cleaning by automating and enhancing key processes. Their ability to understand context, handle unstructured data, and perform intelligent imputation offers a glimpse into the future of data preprocessing. While challenges remain, the potential benefits of LLMs in transforming data cleaning processes are undeniable, and businesses that harness this technology are likely to gain a competitive edge in the era of big data.

#Artificial Intelligence#Machine Learning#Data Preprocessing#Data Quality#Natural Language Processing#Business Intelligence#Data Analytics#automation#datascience#datacleaning#large language model#ai

Until the dramatic departure of OpenAI’s cofounder and CEO Sam Altman on Friday, Mira Murati was its chief technology officer—but you could also call her its minister of truth. In addition to heading the teams that develop tools such as ChatGPT and Dall-E, it’s been her job to make sure those products don’t mislead people, show bias, or snuff out humanity altogether.

This interview was conducted in July 2023 for WIRED’s cover story on OpenAI. It is being published today after Sam Altman’s sudden departure to provide a glimpse at the thinking of the powerful AI company’s new boss.

Steven Levy: How did you come to join OpenAI?

Mira Murati: My background is in engineering, and I worked in aerospace, automotive, VR, and AR. Both in my time at Tesla [where she shepherded the Model X], and at a VR company [Leap Motion] I was doing applications of AI in the real world. I very quickly believed that AGI would be the last and most important major technology that we built, and I wanted to be at the heart of it. OpenAI was the only organization at the time that was incentivized to work on the capabilities of AI technology and also make sure that it goes well. When I joined in 2018, I began working on our supercomputing strategy and managing a couple of research teams.

What moments stand out to you as key milestones during your tenure here?

There are so many big-deal moments, it’s hard to remember. We live in the future, and we see crazy things every day. But I do remember GPT-3 being able to translate. I speak Italian, Albanian, and English. I remember just creating pair prompts of English and Italian. And all of a sudden, even though we never trained it to translate in Italian, it could do it fairly well.

You were at OpenAI early enough to be there when it changed from a pure nonprofit to reorganizing so that a for-profit entity lived inside the structure. How did you feel about that?

It was not something that was done lightly. To really understand how to make our models better and safer, you need to deploy them at scale. That costs a lot of money. It requires you to have a business plan, because your generous nonprofit donors aren't going to give billions like investors would. As far as I know, there's no other structure like this. The key thing was protecting the mission of the nonprofit.

That might be tricky since you partner so deeply with a big tech company. Do you feel your mission is aligned with Microsoft’s?

In the sense that they believe that this is our mission.

But that's not their mission.

No, that's not their mission. But it was important for the investor to actually believe that it’s our mission.

When you joined in 2018, OpenAI was mainly a research lab. While you still do research, you’re now very much a product company. Has that changed the culture?

It has definitely changed the company a lot. I feel like almost every year, there's some sort of paradigm shift where we have to reconsider how we're doing things. It is kind of like an evolution. What's more obvious now to everyone is this need for continuous adaptation in society, helping bring this technology to the world in a responsible way, and helping society adapt to this change. That wasn't necessarily obvious five years ago, when we were just doing stuff in our lab. But putting GPT-3 in an API, in working with customers and developers, helped us build this muscle of understanding the potential that the technology has to change things in the real world, often in ways that are different than what we predict.

You were involved in Dall-E. Because it outputs imagery, you had to consider different things than a text model, including who owns the images that the model draws upon. What were your fears, and how successful do you think you were?

Obviously, we did a ton of red-teaming. I remember it being a source of joy, levity, and fun. People came up with all these like creative, crazy prompts. We decided to make it available in labs, as an easy way for people to interact with the technology and learn about it. And also to think about policy implications and about how Dall-E can affect products and social media or other things out there. We also worked a lot with creatives, to get their input along the way, because we see it internally as a tool that really enhances creativity, as opposed to replacing it. Initially there was speculation that AI would first automate a bunch of jobs, and creativity was the area where we humans had a monopoly. But we've seen that these AI models actually have a potential to really be creative. When you see artists play with Dall-E, the outputs are really magnificent.

Since OpenAI has released its products, there have been questions about their immediate impact in things like copyright, plagiarism, and jobs. By putting things like GPT-4 in the wild, it’s almost like you’re forcing the public to deal with those issues. Was that intentional?

Definitely. It's actually very important to figure out how to bring it out there in a way that's safe and responsible, and helps people integrate it into their workflow. It’s going to change entire industries; people have compared it to electricity or the printing press. And so it's very important to start actually integrating it in every layer of society and think about things like copyright laws, privacy, governance and regulation. We have to make sure that people really experience for themselves what this technology is capable of versus reading about it in some press release, especially as the technological progress continues to be so rapid. It's futile to resist it. I think it's important to embrace it and figure out how it's going to go well.

Are you convinced that that's the optimal way to move us toward AGI?

I haven't come up with a better way than iterative deployments to figure out how you get this continuous adaptation and feedback from the real end feeding back into the technology to make it more robust to these use cases. It’s very important to do this now, while the stakes are still low. As we get closer to AGI, it's probably going to evolve again, and our deployment strategy will change as we get closer to it.

What Is Generative Physical AI, and Why Is It Important?

What is Physical AI?

Physical artificial intelligence enables autonomous robots to see, comprehend, and carry out intricate tasks in the actual (physical) world. Because it can produce ideas and actions to carry out, it is also sometimes referred to as “Generative physical AI.”

How Does Physical AI Work?

Generative AI models, such as the large language models GPT and Llama, are trained on massive volumes of text and image data, mostly from the internet. Although these AIs are very good at generating human language and abstract ideas, their understanding of the physical world and its laws is still somewhat limited.

Generative physical AI extends current generative AI with an understanding of the spatial relationships and physical behavior of the three-dimensional world we all inhabit. This is accomplished by supplying additional data during AI training that captures the spatial relationships and physical laws of the real world.

The 3D training data is created with highly realistic computer simulations, which serve as both a data source and an AI training ground.

Physically based data generation starts with a digital twin of a location, such as a factory. Sensors and autonomous machines, such as robots, are introduced into this virtual environment. Simulations that replicate real-world situations are then run, and the sensors record different interactions, such as rigid-body dynamics (movement and collisions) or how light behaves in the environment.

What Role Does Reinforcement Learning Play in Physical AI?

Reinforcement learning teaches autonomous robots skills in a simulated environment so that they can perform in the real world. Through hundreds or even millions of trial-and-error attempts, it lets autonomous robots acquire abilities safely and efficiently.

By rewarding a physical AI model for taking desirable actions in the simulation, this learning approach helps the model continually adapt and improve. Through repeated reinforcement learning, autonomous robots gradually learn to respond correctly to novel circumstances and unanticipated obstacles, readying them for real-world operation.
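To make the reward loop concrete, here is a minimal tabular Q-learning sketch in Python. The five-cell corridor, the reward of 1 at the goal and the hyperparameters are illustrative assumptions; real physical-AI training uses physics simulators and neural-network policies rather than a lookup table, but the principle of improving behavior from rewarded trial and error is the same.

```python
import random

N_STATES = 5                 # cells 0..4 of a toy corridor; cell 4 is the goal
ACTIONS = ("left", "right")


def step(state: int, action: str) -> tuple[int, float, bool]:
    """Simulated environment: move one cell; reward 1.0 only on reaching the goal."""
    nxt = max(0, state - 1) if action == "left" else min(N_STATES - 1, state + 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.2, seed: int = 0) -> dict[tuple[int, str], float]:
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the length of each trial
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore: try a random action
            else:                             # exploit: best known action, ties broken randomly
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            nxt, reward, done = step(state, action)
            # Move the estimate toward reward + discounted value of the next state.
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
            if done:
                break
    return q


q = train()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # ['right', 'right', 'right', 'right']
```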

Over time, an autonomous machine can acquire the complex fine motor skills required for practical tasks such as packing boxes neatly, helping to assemble automobiles, or navigating environments independently.

Why is Physical AI Important?

Autonomous robots have traditionally struggled to perceive and understand their surroundings. Generative physical AI, however, enables the construction and training of robots that can naturally interact with and adapt to their real-world environment.

To develop physical AI, teams need robust, physics-based simulations that provide a safe, controlled setting for training autonomous machines. This not only increases the efficiency and accuracy of robots in carrying out complicated tasks, but also enables more natural interactions between people and machines, improving accessibility and utility in real-world applications.

Every business will undergo a transformation as Generative physical AI opens up new possibilities. For instance:

Robots: With physical AI, robots show notable improvements in their operating skills in a range of environments.

Using direct input from onboard sensors, autonomous mobile robots (AMRs) in warehouses can navigate complex environments and avoid obstacles, including people.

Depending on how an item is positioned on a conveyor belt, manipulators can adjust their grasp position and force, demonstrating both fine and gross motor skills suited to the object type.

Surgical robots can use this approach to learn complex tasks such as suturing and threading needles, demonstrating the precision and versatility of Generative physical AI in training robots for specific tasks.

Autonomous Vehicles (AVs): By using sensors to perceive and understand their environment, AVs can make sound decisions in a variety of settings, from open highways to dense urban areas. Physical AI helps AVs better identify people, respond to traffic or weather conditions, and change lanes on their own, adapting efficiently to a wide range of unforeseen situations.

Smart Spaces: Physical AI is making large indoor spaces like factories and warehouses, where daily operations involve a constant flow of people, vehicles, and robots, safer and more functional. Using fixed cameras and sophisticated computer vision models to track multiple objects and activities within these spaces, teams can improve dynamic route planning and maximize operational efficiency. These systems can also perceive and understand large, complex environments effectively, putting human safety first.

How Can You Get Started With Physical AI?

Building the next generation of autonomous machines with Generative physical AI requires a coordinated effort across multiple specialized computers:

Construct a virtual 3D environment: A high-fidelity, physically based virtual environment is needed to reflect the real world and provide the synthetic data essential for training physical AI. To create these 3D worlds, developers can integrate RTX rendering and Universal Scene Description (OpenUSD) into their existing software tools and simulation workflows using the NVIDIA Omniverse platform of APIs, SDKs, and services.

NVIDIA OVX systems support this environment: Large-scale scenes or data required for simulation or model training are also captured at this stage. fVDB, an extension of PyTorch that enables deep learning operations on large-scale 3D data, is a significant technical advancement that makes efficient AI model training and inference on rich 3D datasets possible by representing their features efficiently.

Create synthetic data: Custom synthetic data generation (SDG) pipelines can be built using the Omniverse Replicator SDK. One of Replicator’s built-in features is domain randomization, which lets you vary many physical aspects of a 3D simulation, including lighting, position, size, texture, materials, and much more. The resulting images can be further enhanced using diffusion models with ControlNet.
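The sampling step at the heart of domain randomization can be sketched without any simulator. In the Python below, the parameter names and ranges are hypothetical, and this is not the Omniverse Replicator API; in a real pipeline each sampled configuration would be handed to the renderer. Training on many such variants makes a model less likely to depend on any single lighting condition, texture or object placement.

```python
import random
from dataclasses import dataclass

TEXTURES = ("wood", "metal", "plastic", "cardboard")


@dataclass
class SceneParams:
    """One randomized scene configuration (hypothetical parameter names)."""
    light_intensity: float  # arbitrary units
    object_x: float         # metres from the scene origin
    object_y: float
    object_scale: float     # 1.0 = nominal size
    texture: str


def randomize(rng: random.Random) -> SceneParams:
    """Sample one variant of the scene: the core step of domain randomization."""
    return SceneParams(
        light_intensity=rng.uniform(200.0, 2000.0),
        object_x=rng.uniform(-0.5, 0.5),
        object_y=rng.uniform(-0.5, 0.5),
        object_scale=rng.uniform(0.8, 1.2),
        texture=rng.choice(TEXTURES),
    )


rng = random.Random(42)
variants = [randomize(rng) for _ in range(1000)]
# In a real pipeline each variant would be rendered by the simulator and saved
# together with its ground-truth labels; here we only inspect the sampled settings.
print(variants[0])
```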

Train and validate: The NVIDIA DGX platform, a fully integrated hardware and software AI platform, can be used with physically based data to train or fine-tune AI models using frameworks like TensorFlow, PyTorch, or NVIDIA TAO, in addition to the pretrained computer vision models available on NVIDIA NGC. After training, reference applications such as NVIDIA Isaac Sim can be used to test the model and its software stack in simulation. Developers can also use open-source frameworks like Isaac Lab to refine the robot’s skills with reinforcement learning.

To power a physical autonomous machine, such as a humanoid robot or an industrial automation system, the optimized stack can then be deployed on NVIDIA Jetson Orin and, eventually, the next-generation Jetson Thor robotics supercomputer.

Read more on govindhtech.com

#GenerativePhysicalAI#generativeAI#languagemodels#PyTorch#NVIDIAOmniverse#AImodel#artificialintelligence#NVIDIADGX#TensorFlow#AI#technology#technews#news#govindhtech

ChatGPT

ChatGPT is an AI developed by OpenAI that's designed to engage in conversational interactions with users like yourself. It's part of the larger family of GPT (Generative Pre-trained Transformer) models, which are capable of understanding and generating human-like text based on the input it receives. ChatGPT has been trained on vast amounts of text data from the internet and other sources, allowing it to generate responses that are contextually relevant and, hopefully, helpful or interesting to you.

Where ChatGPT can be used:

ChatGPT can be used in various contexts where human-like text generation and interaction are beneficial. Here are some common use cases:

Customer Support: ChatGPT can provide automated responses to customer inquiries on websites or in messaging platforms, assisting with basic troubleshooting or frequently asked questions.

Personal Assistants: ChatGPT can act as a virtual assistant, helping users with tasks such as setting reminders, managing schedules, or providing information on a wide range of topics.

Education: ChatGPT can serve as a tutor or learning companion, answering students' questions, providing explanations, and offering study assistance across different subjects.

Content Creation: ChatGPT can assist writers, bloggers, and content creators by generating ideas, offering suggestions, or even drafting content based on given prompts.

Entertainment: ChatGPT can engage users in casual conversation, tell jokes, share interesting facts, or even participate in storytelling or role-playing games.

Therapy and Counseling: ChatGPT can provide a listening ear and offer supportive responses to individuals seeking emotional support or guidance.

Language Learning: ChatGPT can help language learners practice conversation, receive feedback on their writing, or clarify grammar and vocabulary concepts.

ChatGPT offers several advantages across various applications:

Scalability: ChatGPT can handle a large volume of conversations simultaneously, making it suitable for applications with high user engagement.

24/7 Availability: Since ChatGPT is automated, it can be available to users around the clock, providing assistance or information whenever needed.

Consistency: ChatGPT provides consistent responses regardless of the time of day or the number of inquiries, ensuring that users receive reliable information.

Cost-Effectiveness: Implementing ChatGPT can reduce the need for human agents in customer support or other interaction-based roles, resulting in cost savings for businesses.

Efficiency: ChatGPT can quickly respond to user queries, reducing waiting times and improving user satisfaction.

Customization: ChatGPT can be fine-tuned and customized to suit specific applications or industries, ensuring that the responses align with the organization's brand voice and objectives.

Language Support: ChatGPT can communicate in multiple languages, allowing businesses to cater to a diverse audience without the need for multilingual support teams.

Data Insights: ChatGPT can analyze user interactions to identify trends, gather feedback, and extract valuable insights that can inform business decisions or improve the user experience.

Personalization: ChatGPT can be trained on user data to provide personalized recommendations or responses tailored to individual preferences or circumstances.

Continuous Improvement: ChatGPT can be updated and fine-tuned over time based on user feedback and new data, ensuring that it remains relevant and effective in addressing users' needs.

These advantages make ChatGPT a powerful tool for businesses, educators, developers, and individuals looking to enhance their interactions with users or customers through natural language processing and generation.
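One common way to steer tone, as described under Customization above, is a system message placed ahead of the user's message. Note that this is prompting, a lighter-weight technique than the fine-tuning the post mentions. The sketch below only assembles a request body in the shape OpenAI's Chat Completions endpoint accepts (a `model` name plus a list of `role`/`content` messages); the model name, the company "Example Co.", and the brand-voice text are placeholders, and nothing is sent over the network.

```python
import json

# Placeholder model name; substitute whichever model your account actually uses.
DEFAULT_MODEL = "gpt-4o-mini"


def build_chat_request(brand_voice: str, user_message: str, model: str = DEFAULT_MODEL) -> dict:
    """Assemble a Chat Completions-style request body (no network call)."""
    return {
        "model": model,
        "messages": [
            # The system message sets tone and scope for every reply.
            {"role": "system", "content": brand_voice},
            # The user message is the customer's actual question.
            {"role": "user", "content": user_message},
        ],
    }


request = build_chat_request(
    brand_voice="You are a friendly support agent for Example Co. Answer briefly and politely.",
    user_message="How do I reset my password?",
)
print(json.dumps(request, indent=2))
```

Keeping the brand voice in one system message means every conversation starts from the same instructions, which is what gives the consistency and customization described above.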

2 notes

·

View notes

Text

AI and the Arrival of ChatGPT

Opportunities, challenges, and limitations

In a memorable scene from the 1996 movie Twister, Dusty recognizes the signs of an approaching tornado and shouts, “Jo, Bill, it's coming! It's headed right for us!” Bill shouts back ominously, “It's already here!” Similarly, the approaching whirlwind of artificial intelligence (AI) has some shouting “It’s coming!” while others pointedly concede, “It’s already here!”

The term “artificial intelligence,” coined by computer and cognitive scientist John McCarthy (1927-2011) in an August 1955 proposal to study “thinking machines,” purports to differentiate between human intelligence and technical computation. The idea of tools assisting people in tasks is nearly as old as humanity (see Genesis 4:22), but machines capable of executing a function and “remembering” (storing information for recordkeeping and recall) only emerged around the mid-twentieth century (see "Timeline of Computer History").

McCarthy’s proposal conjectured that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.” The team received a $7,000 grant from The Rockefeller Foundation, and the resulting 1956 Dartmouth Conference at Dartmouth College in Hanover, New Hampshire, which drew 47 intermittent participants over eight weeks, gave birth to the field now widely referred to as “artificial intelligence.”

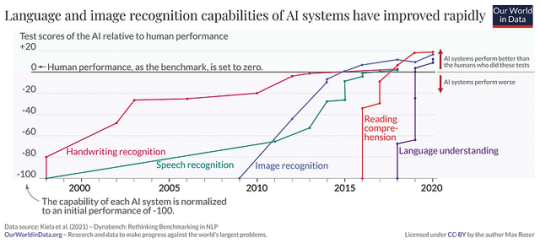

AI research, development, and technological integration have since grown exponentially. According to Dr. Max Roser, Director of Global Development at the University of Oxford, “Artificial intelligence has already changed what we see, what we know, and what we do,” despite the technology’s relatively short existence (see "The brief history of Artificial Intelligence").

AI took a giant leap into mainstream culture following the November 30, 2022, public release of “ChatGPT.” Gaining 1 million users within 5 days and 100 million users within 45 days, it earned the title of the fastest-growing consumer software application in history. The program combines chatbot functionality (hence “Chat”) with a Generative Pre-trained Transformer (hence “GPT”) large language model (LLM). Basically, LLMs use an extensive computer network to draw from large, but limited, data sets to simulate interactive, conversational content.

“What happened with ChatGPT was that for the first time the power of AI was put in the hands of every human on the planet,” says Chris Koopmans, COO of Marvell Technology, a network chip maker and AI process design company based in Santa Clara, California. “If you're a business executive, you think, ‘Wow, this is going to change everything.’”

“ChatGPT is incredible in its ability to create nearly instant responses to complex prompts,” says Dr. Israel Steinmetz, Graduate Dean and Associate Professor at The Bible Seminary (TBS) in Katy, Texas. “In simple terms, the software takes a user's prompt and attempts to rephrase it as a statement with words and phrases it can predict based on the information available. It does not have Internet access, but rather a limited database of information. ChatGPT can provide straightforward summaries and explanations customized for styles, voice, etc. For instance, you could ask it to write a rap song in Shakespearean English contrasting Barth and Bultmann's view of miracles and it would do it!”
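To make the idea of predicting likely words concrete, here is a toy bigram model: it counts which word follows which in a tiny training text, then repeatedly appends the most frequent follower to the prompt. This is a drastic simplification for illustration only (GPT models use transformer networks over tokens, not word-pair counts), and the corpus and function names are hypothetical, but it shows why such a system can only echo patterns present in the data it was trained on.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict, prompt: str, max_words: int = 5) -> str:
    """Greedily extend the prompt with the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the model never saw this word followed by anything
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)
print(generate(model, "the", max_words=4))  # prints "the cat sat on the"
```

A prompt word the model has never seen (for example "zebra") produces no continuation at all, a small-scale version of the limited-data point Steinmetz makes.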

One of several AI products offered by the research and development company OpenAI, ChatGPT purports to offer advanced reasoning, help with creativity, and work with visual input. The newest version, GPT-4, can handle 25,000 words of text, about the amount in a 100-page book.

Krista Hentz, an Atlanta, Georgia-based executive for an international communications technology company, first used ChatGPT about three months ago.

“I primarily use it for productivity,” she says. “I use it to help prompt email drafts, create phone scripts, redesign resumes, and draft cover letters based on resumes. I can upload a financial statement and request a company summary.”

“ChatGPT has helped speed up a number of tasks in our business,” says Todd Hayes, a real estate entrepreneur in Texas. “It will level the world’s playing field for everyone involved in commerce.”

A TBS student, bi-vocational pastor, and Computer Support Specialist who lives in Texarkana, Texas, Brent Hoefling says, “I tried using [ChatGPT, version 3.5] to help rewrite sentences in active voice instead of passive. It can get it right, but I still have to rewrite it in my style, and about half the time the result is also passive.”

“AI is the hot buzz word,” says Hentz, noting AI is increasingly a topic of discussion, research, and response at company meetings. “But, since AI has different uses in different industries and means different things to different people, we’re not even sure what we are talking about sometimes."

Educational organizations like TBS are finding it necessary to proactively address AI-related issues. “We're already way past whether to use ChatGPT in higher education,” says Steinmetz. “The questions we should be asking are how.”

TBS course syllabi have a section entitled “Intellectual Honesty” addressing integrity and defining plagiarism. Given the availability and explosive use of ChatGPT, TBS has added the following verbiage: “AI chatbots such as ChatGPT are not a reliable or reputable source for TBS students in their research and writing. While TBS students may use AI technology in their research process, they may not cite information or ideas derived from AI. The inclusion of content generated by AI tools in assignments is strictly prohibited as a form of intellectual dishonesty. Rather, students must locate and cite appropriate sources (e.g., scholarly journals, articles, and books) for all claims made in their research and writing. The commission of any form of academic dishonesty will result in an automatic ‘zero’ for the assignment and a referral to the provost for academic discipline.”

Challenges and Limitations

Thinking

There is debate as to whether AI hardware and software will ever achieve “thinking.” The Dartmouth conjecture “that every aspect of learning or any other feature of intelligence” can be simulated by machines is challenged by some who distinguish between formal linguistic competence and functional competence. Whereas LLMs perform increasingly well on tasks that use known language patterns and rules, they do not perform well in complex situations that require extralinguistic calculations combining common sense, feelings, knowledge, reasoning, self-awareness, situation modeling, and social skills (see "Dissociating language and thought in large language models"). Human intelligence involves innumerably complex interactions of sentient biological, emotional, mental, physical, psychological, and spiritual activities that drive behavior and response. Furthermore, everything achieved by AI derives from human design and programming, even the feedback processes designed for AI products to allegedly “improve themselves.”

According to Dr. Thomas Hartung, a professor of environmental health and engineering at the Johns Hopkins Bloomberg School of Public Health and Whiting School of Engineering in Baltimore, Maryland, machines can surpass humans in processing simple information, but humans far surpass machines in processing complex information. Whereas computers only process information in parallel and use a great deal of power, brains efficiently perform both parallel and sequential processing (see "Organoid intelligence (OI)").

A single human brain uses between 12 and 20 watts to process an average of 1 exaFLOP, or a billion billion calculations per second. By comparison, the world’s fastest and most energy-efficient supercomputer only reached the 1-exaFLOP milestone in June 2022. Housed at the Oak Ridge National Laboratory, the Frontier supercomputer consists of 74 cabinets, each weighing 8,000 lbs, linked by 90 miles of cable and containing 9,400 CPUs, 37,000 GPUs, and 8,730,112 cores; it requires 21 megawatts of power and 25,000 liters of water per minute to keep cool. This means that many, if not most, of the more than 8 billion people currently living on the planet can each think as fast as, and 1 million times more efficiently than, the world’s fastest and most energy-efficient computer.
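The efficiency comparison can be checked with the article's own figures. Because both the brain estimate and Frontier are put at about 1 exaFLOP, the ratio of operations per watt reduces to Frontier's power draw divided by the brain's. The function name below is only illustrative, and the brain's exaFLOP figure is itself an estimate.

```python
# Figures as quoted in the article.
BRAIN_WATTS = (12, 20)       # estimated power draw of one human brain
FRONTIER_WATTS = 21_000_000  # Frontier's 21-megawatt power requirement
EXAFLOP = 10**18             # both are put at about 1e18 operations per second


def efficiency_ratio(brain_watts: float, computer_watts: float, flops: float = EXAFLOP) -> float:
    """How many times more operations per watt the brain achieves than the computer."""
    brain_flops_per_watt = flops / brain_watts
    computer_flops_per_watt = flops / computer_watts
    return brain_flops_per_watt / computer_flops_per_watt


for watts in BRAIN_WATTS:
    ratio = efficiency_ratio(watts, FRONTIER_WATTS)
    print(f"{watts} W brain: about {ratio:,.0f} times more efficient than Frontier")
```

At 20 W the ratio is about 1.05 million, and at 12 W about 1.75 million, so “1 million times” is, if anything, a conservative reading of these numbers.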

“The incredibly efficient brain consumes less juice than a dim lightbulb and fits nicely inside our head,” wrote Scientific American senior editor Mark Fischetti in 2011. “Biology does a lot with a little: the human genome, which grows our body and directs us through years of complex life, requires less data than a laptop operating system. Even a cat’s brain smokes the newest iPad – 1,000 times more data storage and a million times quicker to act on it.”

This reminds us that, while remarkable and complex, non-living, soulless technology pales in comparison to the vast visible and invisible creations of Lord God Almighty. No matter how fast, efficient, and capable AI becomes, we rightly reserve our worship for God, the creator of the universe and author of life of whom David wrote, “For you created my inmost being; you knit me together in my mother’s womb. I praise you because I am fearfully and wonderfully made; your works are wonderful, I know that full well. My frame was not hidden from you when I was made in the secret place, when I was woven together in the depths of the earth” (Psalm 139:13-15).

“Consider how the wild flowers grow,” Jesus advised. “They do not labor or spin. Yet I tell you, not even Solomon in all his splendor was dressed like one of these” (Luke 12:27).

Even a single flower can remind us that God’s creations far exceed human ingenuity and achievement.

Reliability

According to OpenAI, ChatGPT is prone to “hallucinations” that return inaccurate information. While GPT-4 scores 40% higher than its predecessor on OpenAI’s internal factuality evaluations, reaching as high as 80% accuracy in some of the nine categories measured, its September 2021 training-data cutoff remains an issue. The program is known to confidently make wrong assessments, give erroneous predictions, propose harmful advice, make reasoning errors, and fail to double-check its output.