# FPGA Design Software


Use this trick to save time: HDL simulation by defining a clock

Why is this trick useful? Defining (forcing) a clock in your simulator saves time because you don't have to generate the clock signal by hand in your simulation environment. Want to know how to define and force a clock to simulate your digital system? Normally a defined clock is used to drive the clock input of a system, but here I am showing you the trick of using it to drive input ports other than the clock. It saves simulation time because you don't need to force values onto the input ports every time. Briefly, here is what we did: we gave a clock frequency to input A, say 100. Then we halved that frequency to 50 and gave it to input B. In the same way, a third input would get half again, 25, and so on for every further input.
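The halving trick works because the inputs then step through every combination like a binary counter. Below is a minimal Python model of that stimulus, not a simulator script; the function names and the half-period of 5 time units are hypothetical placeholders.

```python
from itertools import product


def forced_clock(t, half_period):
    """Level (0 or 1) of a 50%-duty-cycle clock that starts low at t = 0."""
    return (t // half_period) % 2


def stimulus(num_inputs, base_half_period=5):
    """Sample every input once per half-period of the fastest clock.

    Input 0 (A) gets the fastest clock; each following input gets half the
    frequency, i.e. double the half-period, of the input before it.
    (Hypothetical helper; base_half_period is an arbitrary time unit.)
    """
    half_periods = [base_half_period * 2**i for i in range(num_inputs)]
    # One full period of the slowest clock visits every combination once.
    end_time = base_half_period * 2**num_inputs
    return [
        tuple(forced_clock(t, hp) for hp in half_periods)
        for t in range(0, end_time, base_half_period)
    ]


def covers_all_combinations(steps, num_inputs):
    """True if the sampled vectors include every possible input combination."""
    return set(steps) == set(product((0, 1), repeat=num_inputs))


if __name__ == "__main__":
    for a, b in stimulus(2):
        print(a, b)  # 0 0, then 1 0, then 0 1, then 1 1
```

So n inputs clocked at f, f/2, f/4, ... walk through all 2^n input combinations in one period of the slowest clock, with input A acting as the least significant bit.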

Subscribe to "Learn And Grow Community"

YouTube : https://www.youtube.com/@LearnAndGrowCommunity

LinkedIn Group : https://www.linkedin.com/groups/7478922/

Blog : https://LearnAndGrowCommunity.blogspot.com/

Facebook : https://www.facebook.com/JoinLearnAndGrowCommunity/

Twitter Handle : https://twitter.com/LNG_Community

DailyMotion : https://www.dailymotion.com/LearnAndGrowCommunity

Instagram Handle : https://www.instagram.com/LearnAndGrowCommunity/

Follow #LearnAndGrowCommunity


High Performance FPGA Solutions

In today's rapidly evolving technological landscape, the demand for high-performance solutions is ever-increasing. Field-Programmable Gate Arrays (FPGAs) have emerged as versatile tools offering customizable hardware acceleration for a wide range of applications. Let's delve into the world of high performance FPGA solutions, exploring their key features, applications, challenges, recent advances, case studies, and future trends.

Introduction to High Performance FPGA Solutions

Definition of FPGA

Field-Programmable Gate Arrays (FPGAs) are semiconductor devices that contain an array of programmable logic blocks and configurable interconnects. Unlike Application-Specific Integrated Circuits (ASICs), FPGAs can be programmed and reprogrammed after manufacturing, allowing for flexibility and customization.

Importance of High Performance in FPGA Solutions

High performance is crucial in FPGA solutions to meet the demanding requirements of modern applications such as real-time data processing, artificial intelligence, and high-frequency trading. Achieving optimal speed, throughput, and efficiency is paramount for maximizing the effectiveness of FPGA-based systems.

Key Features of High Performance FPGA Solutions

Speed and Throughput

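High performance FPGA solutions implement an algorithm directly as hardware, so many operations can run in parallel on every clock cycle, and a pipelined design can accept new data every cycle. This lets them execute complex algorithms and process large volumes of data quickly, enabling real-time decision-making and rapid response to dynamic inputs.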

Low Latency

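Reducing latency is essential in applications where response time is critical, such as financial trading or telecommunications. FPGA designs keep latency low by processing data in dedicated hardware pipelines, with no operating system or instruction fetch in the data path, so the delay is a fixed, predictable number of clock cycles.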
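Pipelining trades a few cycles of fixed latency for one result per clock. The arithmetic below is a sketch of that trade-off using the common pipelined-loop estimate of depth + II × (N − 1) cycles, where II is the initiation interval; the 250 MHz clock, the 12-stage depth and the function names are hypothetical.

```python
def pipeline_cycles(num_items, depth, initiation_interval=1):
    """Clock cycles to push num_items through a pipeline.

    The first result appears after `depth` cycles; each further item follows
    `initiation_interval` (II) cycles behind the previous one.
    """
    if num_items == 0:
        return 0
    return depth + initiation_interval * (num_items - 1)


def cycles_to_ns(cycles, clock_mhz):
    """Convert a cycle count to nanoseconds at the given clock frequency."""
    return cycles * 1000.0 / clock_mhz


if __name__ == "__main__":
    clock_mhz = 250.0  # hypothetical fabric clock (4 ns period)
    depth = 12         # hypothetical pipeline depth in stages
    # Latency of one item: the time it spends travelling the whole pipeline.
    print(cycles_to_ns(pipeline_cycles(1, depth), clock_mhz))     # 48.0
    # 1000 items at II = 1: close to one result per 4 ns cycle.
    print(cycles_to_ns(pipeline_cycles(1000, depth), clock_mhz))  # 4044.0
```

Adding stages usually raises the achievable clock but also raises the per-item latency in cycles, which is the balance latency-sensitive designs have to strike.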

Power Efficiency

FPGAs are not automatically low-power, but because the logic is tailored to one task they can often deliver more work per watt than a general-purpose processor running the same workload. Power-management techniques and low-power device families help keep energy consumption down, which is why smaller FPGAs also appear in battery-powered and energy-efficient devices.

Flexibility and Reconfigurability

One of the key advantages of FPGAs is their inherent flexibility and reconfigurability. High performance FPGA solutions can adapt to changing requirements by reprogramming the hardware in the field (some devices can even reconfigure part of the chip while the rest keeps running), reducing the need for costly hardware upgrades or redesigns.

Applications of High Performance FPGA Solutions

Data Processing and Analytics

FPGAs excel in parallel processing tasks, making them ideal for accelerating data-intensive applications such as big data analytics, database management, and signal processing.

Artificial Intelligence and Machine Learning

The parallel processing architecture of FPGAs is well-suited for accelerating AI and ML workloads, including model training, inference, and optimization. FPGAs offer high throughput and low latency, enabling real-time AI applications in edge devices and data centers.

High-Frequency Trading

In the fast-paced world of financial markets, microseconds can make the difference between profit and loss. High performance FPGA solutions are used to execute complex trading algorithms with minimal latency, providing traders with a competitive edge.

Network Acceleration

FPGAs are deployed in network infrastructure to accelerate packet processing, routing, and security tasks. By offloading these functions to FPGA-based accelerators, network performance and scalability can be significantly improved.

Challenges in Designing High Performance FPGA Solutions

Complexity of Design

Designing high performance FPGA solutions requires expertise in hardware architecture, digital signal processing, and programming languages such as Verilog or VHDL. Optimizing performance while meeting timing and resource constraints can be challenging and time-consuming.
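"Meeting timing" mostly means every register-to-register path must settle within one clock period. A simplified setup-slack calculation, ignoring clock skew, jitter and hold checks; the function names and all delay values are hypothetical.

```python
def setup_slack_ns(period_ns, clk_to_q_ns, data_path_ns, setup_ns):
    """Setup slack of one register-to-register path (skew and jitter ignored).

    Positive slack: data arrives before the capturing edge with time to spare.
    Negative slack: the path fails timing at this clock period.
    """
    arrival = clk_to_q_ns + data_path_ns  # launch edge -> data at capture flop
    required = period_ns - setup_ns       # latest safe arrival time
    return required - arrival


def fmax_mhz(clk_to_q_ns, data_path_ns, setup_ns):
    """Highest clock frequency at which this path still has zero slack."""
    return 1000.0 / (clk_to_q_ns + data_path_ns + setup_ns)


if __name__ == "__main__":
    # Hypothetical path: 0.5 ns clock-to-Q, 3.0 ns logic + routing, 0.25 ns setup.
    print(setup_slack_ns(4.0, 0.5, 3.0, 0.25))  # 0.25 ns of slack at 250 MHz
    print(round(fmax_mhz(0.5, 3.0, 0.25), 1))   # 266.7
```

When slack goes negative, inserting a pipeline register to split the logic is the usual fix, at the cost of an extra cycle of latency.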

Optimization for Specific Tasks

FPGAs offer a high degree of customization, but optimizing performance for specific tasks requires in-depth knowledge of the application domain and hardware architecture. Balancing trade-offs between speed, resource utilization, and power consumption is essential for achieving optimal results.

Integration with Existing Systems

Integrating FPGA-based accelerators into existing hardware and software ecosystems can pose compatibility and interoperability challenges. Seamless integration requires robust communication protocols, drivers, and software interfaces.

Recent Advances in High Performance FPGA Solutions

Improved Architectures

Advancements in FPGA architecture, such as larger logic capacity, faster interconnects, and specialized processing units, have led to significant improvements in performance and efficiency.

Enhanced Programming Tools

New development tools and methodologies simplify the design process and improve productivity for FPGA developers. High-level synthesis (HLS) tools compile C or C++ code into hardware descriptions, letting software engineers use FPGA acceleration with far less hardware-design expertise, although good results still depend on understanding how the code maps to hardware.

Integration with Other Technologies

FPGAs are increasingly being integrated with other technologies such as CPUs, GPUs, and ASICs to create heterogeneous computing platforms. This allows for efficient partitioning of tasks and optimization of performance across different hardware components.

Case Studies of Successful Implementation

Aerospace and Defense

High performance FPGA solutions are widely used in aerospace and defense applications for tasks such as radar signal processing, image recognition, and autonomous navigation. Their reliability, flexibility, and performance make them ideal for mission-critical systems.

Telecommunications

Telecommunications companies leverage high performance FPGA solutions to accelerate packet processing, network optimization, and protocol implementation. FPGAs enable faster data transfer rates, improved quality of service, and enhanced security in telecommunication networks.

Financial Services

In the highly competitive world of financial services, microseconds can translate into significant profits or losses. High performance FPGA solutions are deployed in algorithmic trading, risk management, and low-latency trading systems to gain a competitive edge in the market.

Future Trends in High Performance FPGA Solutions

Increased Integration with AI and ML

FPGAs will play a vital role in accelerating AI and ML workloads in the future, especially in edge computing environments where low latency and real-time processing are critical.

Expansion into Edge Computing

As the Internet of Things (IoT) continues to grow, there will be increasing demand for high performance computing at the edge of the network. FPGAs offer a compelling solution for edge computing applications due to their flexibility, efficiency, and low power consumption.

Growth in IoT Applications

FPGAs will find widespread adoption in IoT applications such as smart sensors, industrial automation, and autonomous vehicles. Their ability to handle diverse workloads, adapt to changing requirements, and integrate with sensor networks makes them an ideal choice for IoT deployments.

Conclusion

In conclusion, high performance FPGA solutions play a crucial role in driving innovation and accelerating the development of advanced technologies. With their combination of speed, flexibility, and efficiency, FPGAs enable a wide range of applications across industries such as aerospace, telecommunications, finance, and IoT. As technology continues to evolve, demand for high performance FPGA solutions is likely to keep growing, shaping the future of computing.


What are some of the coolest computer chips ever, in your opinion?

Hmm. There are a lot of chips, and a lot of different things you could call a Computer Chip. Here are a few that come to mind as "interesting" or "important", or, if I can figure out what that means, "cool".

If your favourite chip is not on here, honestly it probably deserves to be, and I either forgot it or classified it more under "general ICs" instead of "computer chips" (e.g. 555, LM, 4000, 7000 series chips, those last three each capable of filling a book on their own). The 6502 is not here because I do not know much about the 6502; I was neither an Apple nor a BBC Micro type of kid. I am also not 70 years old, so as much as I love the DEC Alphas, I have never so much as breathed on one.

Disclaimer: I wrote this mostly out of my head and/or ass at one in the morning, so do not use any of this as a source in an argument without checking.

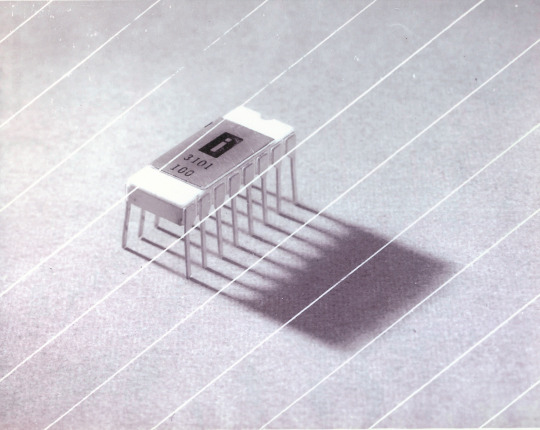

Intel 3101

So I mean, obvious shout, the Intel 3101, a 64-bit chip from 1969, and Intel's first ever product. You may look at that, and go, "wow, 64-bit computing in 1969? That's really early" and I will laugh heartily and say no, that's not 64-bit computing, that is 64 bits of SRAM memory.

This one is cool because it's cute. Look at that. This thing was completely hand-designed by engineers drawing the shapes of transistor gates on sheets of overhead transparency and exposing pieces of crudely spun silicon to light in a """"cleanroom"""" that would cause most modern fab equipment to swoon like a delicate Victorian lady. Semiconductor manufacturing was maturing at this point but a fab still had more in common with a darkroom for film development than with the mega expensive building sized machines we use today.

As that link above notes, these things were really rough and tumble, and designs were being updated on the scale of weeks as Intel learned, well, how to make chips at an industrial scale. They weren't the first company to do this, in the 60's you could run a chip fab out of a sufficiently well sealed garage, but they were busy building the background that would lead to the next sixty years.

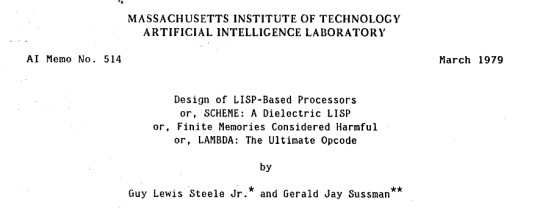

Lisp Chips

This is a family of utterly bullshit prototype processors that failed to be born in the whirlwind days of AI research in the 70's and 80's.

Lisps, a very old but exceedingly clever family of functional programming languages, were the language of choice for AI research at the time. Lisp compilers and interpreters had all sorts of tricks for compiling Lisp down to instructions, and also the hardware was frequently being built by the AI researchers themselves with explicit aims to run Lisp better.

The illogical conclusion of this was attempts to implement Lisp right in silicon, no translation layer.

Yeah, that is Sussman himself on this paper.

These never left the lab. There have since been dozens of abortive attempts to make Lisp Chips happen, because the idea is so extremely attractive to a certain kind of programmer; the most recent big one is a pile of weird designs aimed at running OpenGenera. I bet you no fewer than four members of r/lisp have bought an Icestick FPGA in the past year with the explicit goal of writing their own Lisp Chip. It will fail, because this is a terrible idea, but damn if it isn't cool.

There were many more chips that bridged this gap, stuff designed by or for Symbolics (like the Ivory series of chips or the 3600) to go into their Lisp machines that exploited the up and coming fields of microcode optimization to improve Lisp performance, but sadly there are no known working true Lisp Chips in the wild.

Zilog Z80

Perhaps the most important chip that ever just kinda hung out. The Z80 was almost, almost the basis of The Future. The Z80 is bizarre. It is a software-compatible clone of the Intel 8080, which is to say that it runs the same instructions (plus a pile of its own) implemented in a completely different way.

This is a strange choice, but it was somehow the right one, because through the 80's and 90's practically every single piece of technology made in Japan contained at least one, maybe two Z80s, even if there was no readily apparent reason why it should have one (or two). I will defer to Cathode Ray Dude here: what follows is a joke, but only barely.

The Z80 is the basis of the MSX, the IBM PC of Japan, which was produced through a system of hardware and software licensing to third party manufacturers by Microsoft of Japan which was exactly as confusing as it sounds. The result is that the Z80, originally intended for embedded applications, ended up forming the basis of an entire alternate branch of the PC family tree.

It is important to note that the Z80 is boring. It is a normal-ass chip but it just so happens that it ended up being the focal point of like a dozen different industries all looking for a cheap, easy to program chip they could shove into Appliances.

Effectively everything that happened to the Intel 8080 happened to the Z80 and then some. Black market clones, reverse engineered Soviet compatibles, licensed second party manufacturers, hundreds of semi-compatible bastard half-sisters made by anyone with a fab, used in everything from toys to industrial machinery, still persisting to this day as an embedded processor that is probably powering something near you quietly and without much fuss. If you have one of those old TI-86 calculators, that's a Z80. Oh also a horrible hybrid Z80/8080 from Sharp powered the original Game Boy.

I was going to try and find a picture of a Z80 by just searching for it and look at this mess! There's so many of these things.

I mean the CP/M computers. The ZX Spectrum, I almost forgot that one! I can keep making this list go! So many bits of the Tech Explosion of the 80's and 90's are powered by the Z80. I was not joking when I said that you sometimes found more than one Z80 in a single computer, because you might use one Z80 to run the computer and another Z80 to run a specialty peripheral like a video toaster or music synthesizer. Everyone imaginable has had their hand on the Z80 ball at some point in time or another. Z80-based devices probably launched several dozen hardware companies that persist to this day, and I have no idea which ones because there were so goddamn many.

The Z80 eventually got super efficient due to process shrinks so it turns up in weird laptops and handhelds! Zilog and the Z80 persist to this day like some kind of crocodile beast, you can go to RS components and buy a brand new piece of Z80 silicon clocked at 20MHz. There's probably a couple in a car somewhere near you.

Pentium (P6 microarchitecture)

Yeah, I am going to bring up the Hackers chip. The P6 (first sold as the Pentium Pro) is currently remembered as the chip that Acid Burn geeks out over in Hackers (1995) instead of making out with her boyfriend, but it is actually noteworthy IMO for being one of the first mainstream chips to start pulling serious tricks on the system running it.

The P6 microarchitecture comes out swinging with like four or five tricks to get around the numerous problems with x86 and deploys them all at once. It has superscalar pipelining, it translates x86 instructions into RISC-like micro-operations and executes them out of order, it has branch prediction, it has a bunch of zany mathematical optimizations. None of these are new per se, but this is the first time you're really seeing them all at once on a chip that was going into PCs.
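Branch prediction is the easiest of those tricks to show in a few lines. This is the textbook two-bit saturating-counter scheme, not the P6's actual predictor (which was more elaborate); the class and function names are made up for illustration.

```python
class TwoBitPredictor:
    """Per-branch 2-bit saturating counters (textbook scheme, not Intel's design).

    States 0-1 predict "not taken", 2-3 predict "taken". A single surprise
    only nudges the counter, so a loop branch that falls through once is
    still predicted "taken" the next time round.
    """

    def __init__(self):
        self.counters = {}  # branch address -> counter state (0..3)

    def predict(self, address):
        return self.counters.get(address, 1) >= 2  # unseen branches: weakly "not taken"

    def update(self, address, taken):
        state = self.counters.get(address, 1)
        self.counters[address] = min(state + 1, 3) if taken else max(state - 1, 0)


def accuracy(predictor, address, outcomes):
    """Fraction of branch outcomes the predictor guessed correctly."""
    hits = 0
    for taken in outcomes:
        hits += predictor.predict(address) == taken
        predictor.update(address, taken)
    return hits / len(outcomes)


if __name__ == "__main__":
    # A loop branch: taken 9 times, then falls through once, repeated 10 times.
    loop = ([True] * 9 + [False]) * 10
    print(accuracy(TwoBitPredictor(), 0x400, loop))  # 0.89
```

Only the loop exits and the very first encounter are mispredicted, which is why even this simple scheme keeps a deep pipeline busy most of the time.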

Without these improvements it's possible Intel would have been beaten out by one of its competitors, maybe Power or SPARC or whatever you call the thing that runs on the Motorola 68k. Hell, even MIPS could have beaten the ageing cancerous mistake that was x86. But by discovering the power of lying to the computer, Intel managed to speed up x86 by implementing it in a sensible instruction set in the background, allowing them to do all the same clever pipelining and optimization that was happening with RISC without having to give up their stranglehold on the desktop market. Without the P6 we live in a very, very different world from a computer hardware perspective.
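To make the "sensible instruction set in the background" idea concrete: the decoders split an x86 instruction that touches memory into simple load, operate and store steps that the core can schedule independently. The toy translator below only illustrates that idea; the micro-op names, temporary registers `t0`/`t1` and tuple format are invented and bear no relation to Intel's real internal encoding.

```python
def is_memory(operand):
    """Toy syntax: memory operands are written in square brackets."""
    return operand.startswith("[") and operand.endswith("]")


def to_micro_ops(instruction):
    """Split a toy x86-style "add"/"mov" instruction into RISC-like micro-ops.

    Each micro-op is a tuple (operation, destination, source). Only register
    and simple [name] memory operands are understood.
    """
    opcode, operands = instruction.split(maxsplit=1)
    dst, src = (part.strip() for part in operands.split(","))
    uops = []
    if is_memory(src):
        # Memory source: load it into hidden temporary register t0 first.
        uops.append(("load", "t0", src.strip("[]")))
        src = "t0"
    if opcode == "mov":
        if is_memory(dst):
            uops.append(("store", dst.strip("[]"), src))
        else:
            uops.append(("move", dst, src))
    elif opcode == "add":
        if is_memory(dst):
            # Read-modify-write becomes load, register add, store.
            address = dst.strip("[]")
            uops += [("load", "t1", address), ("add", "t1", src), ("store", address, "t1")]
        else:
            uops.append(("add", dst, src))
    else:
        raise ValueError(f"unsupported opcode: {opcode}")
    return uops


if __name__ == "__main__":
    for uop in to_micro_ops("add [counter], eax"):
        print(uop)
    # ('load', 't1', 'counter')
    # ('add', 't1', 'eax')
    # ('store', 'counter', 't1')
```

Once everything is a short, uniform step like these, the hardware can reorder and overlap them the same way a RISC pipeline would.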

From this come many of the bizarre speculative-execution bugs (Spectre, Meltdown and friends) that plague modern computers, because when you're doing your optimization on the fly in the chip, with a second, smaller unix hidden inside your processor, eventually you're not going to be cryptographically secure.

RISC is very clearly better for most things. You can find papers stating this as far back as the 70's, when they start doing pipelining for the first time and are like, "you know, pipelining is a lot easier if you have a few small instructions instead of ten thousand massive ones."

x86 only persists to this day because Intel cemented their lead and they happened to use x86. True RISC cuts out the middleman of hyperoptimizing microcode on the chip, but if you can't do that because you've girlbossed too close to the sun as Intel had in the late 80's you have to do something.

The Future

This gets us to like the year 2000. I have more chips I find interesting or cool, but from here on it's mostly microcontrollers, partly because the rest gets pretty monotonous once Intel basically wins for a while. I might pick that up later. Also, if this post gets any longer it'll be annoying to scroll past. Here is a sample from a post that has been in my drafts since May:

I have some notes on the weirdo PowerPC stuff that shows up here; it's mostly interesting because of where it goes, not what it is. A lot of it ends up in games consoles. Some of it goes into mainframes. There is some of it in space. Really got around, PowerPC did.


Hot take: far as I'm concerned, recreating an old system with an FPGA does not make it "not emulation".

You're still making the thing run on other, newer hardware. You're just not doing it in software. The FPGA is still just pretending very hard to be a SNES or what have you. The main difference is that there's not an entire operating system running underneath the emulator. It's not even running on the bare metal -- it is the bare metal, so there's a significant lack of overhead and with a good design input lag can be all but eliminated.

But it's still just an emulator.

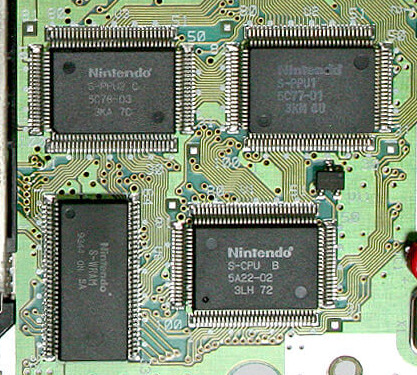

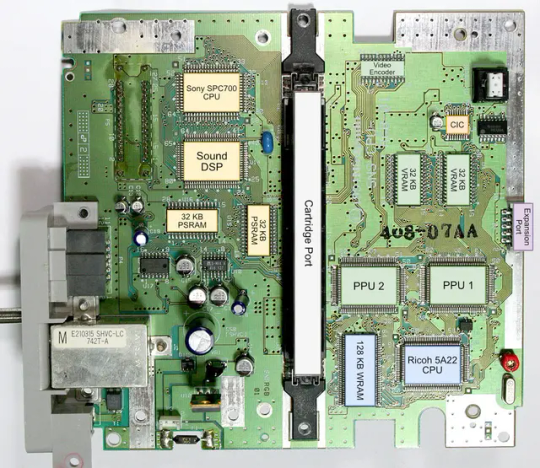

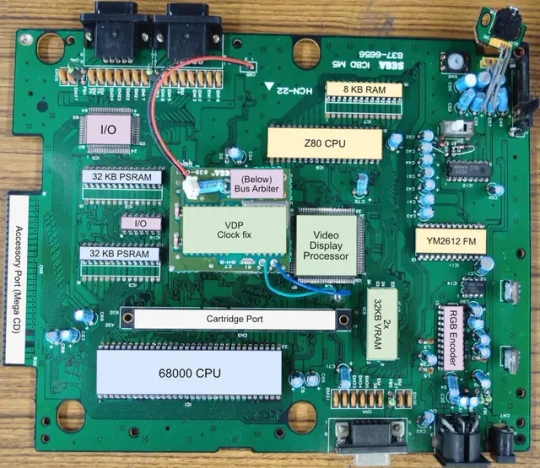

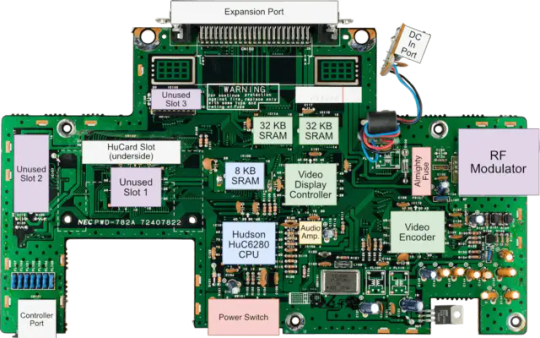

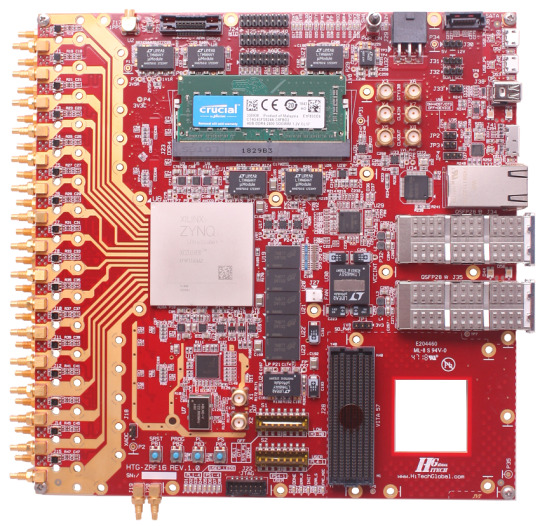

After all, this

is not this

any more than this is

Now, you might argue seeing that SNES mainboard closeup that there's a one-chip SNES too and that doesn't look anything like that picture either. True. It's called a one-chip because they took all the component parts of the CPU and PPU pair, and stuck it into a single chip. Not reimplementing, but restructuring.


Hell is terms like ASIC, FPGA, and PPU

I haven't been doing any public updates on this for a bit, but I am still working on this bizarre rabbit hole quest of designing my own (probably) 16-bit game console. The controller is maybe done now, on a design level. Like I have parts for everything sourced and a layout for the internal PCB. I don't have a fully tested working prototype yet because I am in the middle of a huge financial crisis and don't have the cash lying around to send out to have boards printed and start rapidly iterating design on the 3D printed bits (housing the scroll wheel is going to be a little tricky). I should really spend my creative energy focusing on software development for a nice little demo ROM (or like, short term projects to earn money I desperately need) but my brain's kinda stuck in circuitry gear so I'm thinking more about what's going into the actual console itself. This may get techie.

So... in the broadest sense, and I think I've mentioned this before, I want to make this a 16-bit system (which is a term with a pretty murky definition), maybe 32-bit? And since I'm going to all this trouble I want to give my project here a little something extra the consoles from that era didn't have. And at the same time, I'd like to be able to act as a bridge for the sort of weirdos who are currently actively making new games for those systems to start working on this, on a level of "if you would do this on this console with this code, here's how you would do it on mine." This makes for a hell of a lot of research on my end, but trust me, it gets worse!

So let's talk about the main strengths of the 2D game consoles everyone knows and loves. Oh and just now while looking for some visual aids maybe I stumbled across this site, which is actually great as a sort of mid-level overview of all this stuff. Short version though-

The SNES (or Super Famicom) does what it does by way of a combination of really going all in on direct memory access, and particularly having a dedicated setup for doing so between scanlines (HDMA), coupled with a bunch of dedicated graphical modes specialized for different use cases, and, you know, the fact that you can switch between them partway through drawing a screen. And of course the feature everyone knows and loves, Mode 7, where you get one big background layer that you can rotate, scale and skew to do all sorts of fun things with it.
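Mode 7 is an affine transform: for every screen pixel the PPU computes a background coordinate from a 2×2 matrix (A, B, C, D), a centre point (X0, Y0) and scroll offsets, and HDMA can rewrite that matrix between scanlines to fake a perspective floor. The sketch below is a simplified floating-point model; the real hardware uses fixed-point registers and has wrap and clipping rules not modelled here, and the function names and the horizon/height/focal constants are hypothetical.

```python
import math


def mode7_texel(sx, sy, matrix, center=(0, 0), scroll=(0, 0)):
    """Map screen pixel (sx, sy) to a background (texture) coordinate.

    matrix is (A, B, C, D), center is (X0, Y0), scroll is (HOFS, VOFS).
    """
    a, b, c, d = matrix
    x0, y0 = center
    dx = sx + scroll[0] - x0
    dy = sy + scroll[1] - y0
    return (a * dx + b * dy + x0, c * dx + d * dy + y0)


def rotate_scale(angle, scale):
    """Matrix that rotates the layer by `angle` radians; scale > 1 zooms out."""
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    return (scale * cos_a, scale * sin_a, -scale * sin_a, scale * cos_a)


def perspective_table(num_lines, horizon=32, height=64.0, focal=128.0):
    """HDMA-style table: a fresh matrix for every scanline to fake a floor plane.

    For each line below the horizon, z is the floor distance seen on that
    line. A sets the horizontal zoom (distant lines squeeze more texture into
    each pixel) and D is picked so the line samples texture row z, assuming
    Y0 and VOFS are 0. Lines at or above the horizon get None (e.g. sky).
    """
    table = []
    for line in range(num_lines):
        if line <= horizon:
            table.append(None)
            continue
        z = height * focal / (line - horizon)
        table.append((z / focal, 0.0, 0.0, z / line))
    return table


if __name__ == "__main__":
    table = perspective_table(224)  # one entry per line of a 224-line frame
    for line in (40, 120, 223):
        print(line, mode7_texel(128, line, table[line], center=(128, 0)))
```

The per-line table is the part a homebrew PPU would have to support: the transform itself is just a few multiplies per pixel, but the parameters must be swappable every scanline.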

The Genesis (or Megadrive) has an actual proper 16-bit processor instead of this weird upgraded 6502 like the SNES had for a scrapped backwards compatibility plan. It also had this frankly wacky design where they just kinda took the guts out of a Sega Master System and had them off to the side as a segregated system whose only real job is managing the sound chip, one of those good good Yamaha synths with that real distinct sound... oh and they also actually did have a backwards compatibility deal that just kinda used the audio side to emulate an SMS, basically.

The TurboGrafx-16 (or PC Engine) really just kinda went all-in on its own custom CPU, Hudson's HuC6280 (a heavily modified 65C02), which...we'll get to that, and otherwise uh... it had some interesting stuff going on sound wise? I feel like the main thing it had going was getting in on CDs early, but I'm not messing with optical drives and they're no longer a really great storage option anyway.

Then there's the Neo Geo... where what's going on under the hood is just kind of A LOT. I don't have the same handy analysis ready to go on this one, but my understanding is it didn't really go in for a lot of nice streamlining tricks and just kinda powered through. Like it has no separation of background layers and sprites. It's just all sprites. Shove those raw numbers.

So what's the best of all worlds option here? I'd like to go with one of them nice speedy Motorola processors. The 68000 the Genesis used is no longer manufactured, though. The closest still-in-production equivalent would be the 68SEC000 family. Seems like they go for about $15 a pop, have 32-bit internal registers (the external data bus is still 16 bits wide, like the original 68000), run at low voltage, and some support clock speeds like... three times what the Genesis did. It's overkill, but it should remove any concerns I have about having a way higher resolution than the systems I'm jumping off from. I can also easily throw in some beefy RAM chips where I need them.
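For reference when laying out memory for a 68000-family part: it pairs 32-bit registers with a 16-bit external data bus, and it is big-endian, so a 32-bit longword occupies two consecutive 16-bit words with the high word at the lower address. A minimal sketch of that layout; the function names are hypothetical.

```python
import struct


def longword_to_bus_words(value):
    """Split a 32-bit value into the two 16-bit words it occupies in memory.

    The 68000 is big-endian, so the high word sits at the lower address
    and the low word at address + 2.
    """
    high, low = struct.unpack(">HH", struct.pack(">I", value & 0xFFFFFFFF))
    return high, low


def bus_words_to_longword(high, low):
    """Reassemble a longword from the word at address and the word at address + 2."""
    return (high << 16) | low


if __name__ == "__main__":
    hi_word, lo_word = longword_to_bus_words(0x12345678)
    print(hex(hi_word), hex(lo_word))  # 0x1234 0x5678
```

In practice this means every 32-bit access costs two bus transfers, which matters when budgeting memory bandwidth for a higher-resolution display.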

I was also planning to just directly replicate the Genesis sound setup, weird as it is, but hit the slight hiccup that the Z80 was JUST discontinued, like a month or two ago. Pretty sure someone already has a clone of it, might use that.

Here's where everything comes to a screeching halt though. While the makers of all these systems were making contracts for custom processors to add a couple extra features in that I should be able to work around by just using newer descendant chips that have that built in, there really just is no off the shelf PPU that I'm aware of. EVERYONE back in the day had some custom ASIC (application-specific integrated circuit) chip made to assemble every frame of video before throwing it at the TV. Especially the SNES, with all its modes changing the logic there and the HDMA getting all up in those mode 7 effects. Which are again, something I definitely want to replicate here.

So one option here is... I design and order my own ASIC chips. I can probably just fit the entire system in one even? This however comes with two big problems. It's pricy. Real pricy. Don't think it's really practical if I'm not ordering in bulk and this is a project I assume has a really niche audience. Also, I mean, if I'm custom ordering a chip, I can't really rationalize having stuff I could cram in there for free sitting outside as separate costly chips, and hell, if it's all gonna be in one package I'm no longer making this an educational electronics kit/console, so I may as well just emulate the whole thing on like a raspberry pi for a tenth of the cost or something.

The other option is... I commit to even more work, and find a way to reverse engineer all the functionality I want out with some big array of custom ROMs and placeholder RAM and just kinda have my own multi-chip homebrew co-processors? Still PROBABLY cheaper than the ASIC solution and I guess not really making more research work for myself. It's just going to make for a bigger/more crowded motherboard or something.
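ROMs can stand in for custom logic because any combinational function is just a truth table: the inputs drive the address lines and the ROM hands back precomputed outputs (the same idea as the lookup tables inside an FPGA). A sketch that generates such a ROM image; the sprite-over-background priority rule, the address bit layout and all names are hypothetical examples, not any real console's behaviour.

```python
def pixel_mux(bg_index, sprite_index, sprite_priority):
    """Hypothetical priority rule: colour 0 is transparent; an opaque sprite
    pixel wins if it has priority or the background pixel is transparent."""
    if sprite_index and (sprite_priority or bg_index == 0):
        return 0x10 | sprite_index  # bit 4 flags "came from the sprite"
    return bg_index


def build_rom(func, input_widths):
    """Evaluate func for every input combination and pack the results into a
    ROM image addressed by the concatenated input bits.

    input_widths lists each argument's width in bits, most significant first.
    """
    total_bits = sum(input_widths)
    rom = bytearray(2**total_bits)
    for address in range(2**total_bits):
        args, shift = [], total_bits
        for width in input_widths:
            shift -= width
            args.append((address >> shift) & ((1 << width) - 1))
        rom[address] = func(*args)
    return rom


def rom_address(bg_index, sprite_index, sprite_priority):
    """Address lines: background in bits 8-5, sprite in bits 4-1, priority in bit 0."""
    return (bg_index << 5) | (sprite_index << 1) | sprite_priority


if __name__ == "__main__":
    rom = build_rom(pixel_mux, [4, 4, 1])
    print(len(rom))                        # 512 entries: a 512 x 8 ROM
    print(hex(rom[rom_address(3, 7, 0)]))  # 0x3 (background wins)
```

The catch is that the ROM size doubles with every extra input bit, so this approach only suits narrow functions; wider logic has to be split across several ROMs or built from discrete parts.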

Oh and I'm now looking at a 5V processor and making controllers compatible with a 10V system so I need to double check that all the components in those don't really care that much and maybe adjust things.

And then there's also FPGAs (field programmable gate arrays). Per chip they cost more than an ASIC made in volume, but there are no up-front manufacturing costs, and the advantage is it's sort of a chip emulator that you can reflash with something else. So if you're specifically in the MiSTer scene, I just host a file somewhere and you make the one you already have pretend to be this system. So... good news for those people, but I still need to actually build something here.
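Which option is "more expensive" depends on how many units you build: an ASIC carries a large one-time engineering (NRE) cost but cheap units, while an FPGA has no NRE but a higher unit price. A break-even sketch; every price below is a made-up placeholder, not a quote.

```python
def total_cost(units, unit_price, nre=0.0):
    """One-time NRE plus per-unit cost for a production run."""
    return nre + units * unit_price


def break_even_units(asic_nre, asic_unit, fpga_unit):
    """Run size above which the ASIC becomes cheaper than the FPGA."""
    if fpga_unit <= asic_unit:
        return float("inf")  # the ASIC never pays back its NRE
    return asic_nre / (fpga_unit - asic_unit)


if __name__ == "__main__":
    # Placeholder numbers purely for illustration.
    asic_nre, asic_unit, fpga_unit = 100_000.0, 5.0, 25.0
    print(break_even_units(asic_nre, asic_unit, fpga_unit))  # 5000.0
    for units in (100, 5_000, 50_000):
        print(units, total_cost(units, asic_unit, asic_nre), total_cost(units, fpga_unit))
```

For a niche run of a few hundred units the FPGA (or discrete-logic) route wins by a wide margin under almost any realistic numbers.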

So... yeah that's where all this stands right now. I admit I'm in way way over my head, but I should get somewhere eventually?


A beginner's guide to the Xilinx product series, including Zynq-7000, Artix, Virtex, etc.

Xilinx, the world's leading supplier of programmable logic devices, has long been highly regarded for its excellent technology and innovative products. Xilinx has launched many product series, providing a rich variety of choices for different application needs.

I. FPGA Product Series

Xilinx's FPGA products cover multiple series, each with its own characteristics and advantages.

The Spartan series is the entry-level family, offering low price, low power consumption, and small size. It comes in small packages, provides an excellent performance-per-watt ratio, supports the MicroBlaze™ soft processor, and supports DDR3 memory. It is well suited to industrial, consumer, and automotive applications, such as small controllers in industrial automation, simple logic control in consumer electronics, and auxiliary control modules in automotive electronics.

Compared to the Spartan series, the Artix series adds serial transceivers, more DSP resources, and a larger logic capacity. It strikes a good balance between cost and performance and suits mid-to-low-end applications with somewhat more complex logic, such as software-defined radios, machine vision, low-end wireless backhaul, and embedded systems that are cost-sensitive but still need a certain level of performance.

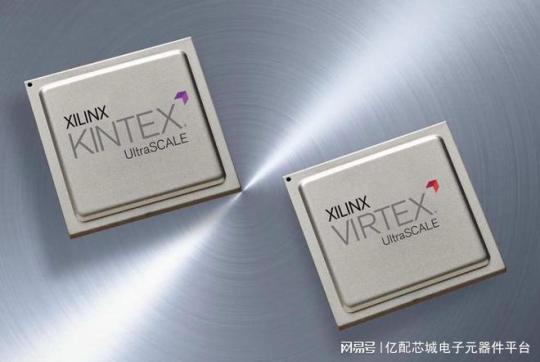

The Kintex series is the mid-range family, offering more hard IP blocks and a larger logic capacity than Artix. It achieves an excellent cost/performance/power balance for designs at the 28nm node, provides high DSP throughput and cost-effective packaging, and supports mainstream standards such as PCIe® Gen3 and 10 Gigabit Ethernet. It is suited to applications such as data centers, network communications, 3G/4G wireless communications, flat panel displays, and video transmission.

The Virtex series, as a high-end series, has the highest performance and reliability. It has a large number of logic units, high-bandwidth serial transceivers, strong DSP processing capabilities, and rich storage resources, and can handle complex calculations and data streams. It is often used in application fields with extremely high performance requirements such as 10G to 100G networking, portable radars, ASIC prototyping, high-end military communications, and high-speed signal processing.

II. Zynq Product Series

The Zynq-7000 series integrates an ARM-based processing system with FPGA programmable logic on one chip, enabling hardware/software co-design. Models differ in logic resources, memory capacity, and number of interfaces to meet different application needs. Its low power consumption makes it suitable for embedded applications such as industrial automation, communication equipment, medical equipment, and automotive electronics.
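In Zynq-style co-design, software on the ARM cores typically drives custom logic in the programmable fabric through memory-mapped registers over AXI. A minimal sketch of the software side, assuming a hypothetical peripheral with CONTROL/STATUS/DATA registers at made-up offsets. It works on any writable buffer: on a Linux board you might pass an `mmap` of the peripheral's address range (for example via `/dev/mem` or a UIO device), though real hardware may require strictly 32-bit accesses, which this buffer-based sketch does not guarantee.

```python
import struct

# Hypothetical register map of a custom AXI-Lite peripheral in the PL.
CONTROL = 0x00   # bit 0: start
STATUS = 0x04    # bit 0: done
DATA_IN = 0x08
DATA_OUT = 0x0C


class MmioDevice:
    """32-bit little-endian register access on a memory-mapped buffer."""

    def __init__(self, buffer):
        self.buffer = buffer  # e.g. an mmap object on real hardware

    def write32(self, offset, value):
        struct.pack_into("<I", self.buffer, offset, value & 0xFFFFFFFF)

    def read32(self, offset):
        return struct.unpack_from("<I", self.buffer, offset)[0]


def run_job(dev, value, max_polls=1000):
    """Hand one word to the (hypothetical) accelerator and poll until done."""
    dev.write32(DATA_IN, value)
    dev.write32(CONTROL, 1)
    for _ in range(max_polls):
        if dev.read32(STATUS) & 1:
            return dev.read32(DATA_OUT)
    raise TimeoutError("accelerator did not signal done")


if __name__ == "__main__":
    # No hardware here: fake a finished job in a plain bytearray.
    dev = MmioDevice(bytearray(16))
    dev.write32(STATUS, 1)
    dev.write32(DATA_OUT, 0xCAFE)
    print(hex(run_job(dev, 42)))
```

Polling keeps the example short; a production driver would more likely use an interrupt from the PL to signal completion.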

The Zynq UltraScale+ MPSoC series offers higher performance and richer features, including more processor cores, larger memory capacity, and higher communication bandwidth. It supports multiple security features and is suitable for applications with high security requirements. It can be used in fields such as artificial intelligence and machine learning, data center acceleration, aerospace and defense, and high-end video processing.

The Zynq UltraScale+ RFSoC series is architecturally similar to the MPSoC, also combining ARM processors with FPGA logic. However, it is optimized for radio frequency signal processing and integrates many RF-related blocks, such as ADCs and DACs, that can sample and generate RF signals directly, greatly simplifying the design of RF systems. It is mainly used in RF-related fields such as 5G base stations, software-defined radios, and phased array radars.
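Direct RF sampling often means deliberately sampling a signal above half the ADC's sample rate and relying on it landing in a known Nyquist zone, where it aliases down to a predictable frequency. A sketch of that arithmetic; the function names, the 4000 MHz sample rate and the input frequencies are hypothetical examples.

```python
import math


def nyquist_zone(f_in, f_s):
    """1-based Nyquist zone of f_in for sample rate f_s (same units).

    Signals in even-numbered zones appear spectrally inverted after sampling.
    """
    return math.floor(f_in / (f_s / 2)) + 1


def alias_frequency(f_in, f_s):
    """Frequency at which f_in appears after sampling at f_s (0 .. f_s/2)."""
    folded = f_in % f_s
    return f_s - folded if folded > f_s / 2 else folded


if __name__ == "__main__":
    fs = 4000  # MHz, hypothetical ADC sample rate
    for f in (1000, 3500, 5000):
        print(f, nyquist_zone(f, fs), alias_frequency(f, fs))
```

Frequency planning on such a device is largely about choosing a sample rate so the band of interest sits cleanly inside one zone without overlapping its own aliases.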

III. Versal Series

The Versal series is Xilinx's adaptive computing acceleration platform (ACAP) product series.

The Versal Prime series is aimed at a wide range of application fields and provides high-performance computing and flexible programmability. It has high application value in fields such as artificial intelligence, machine learning, data centers, and communications, and can meet application scenarios with high requirements for computing performance and flexibility.

The Versal AI Core series focuses on artificial intelligence and machine learning applications and has powerful AI processing capabilities. It integrates a large number of AI engines and hardware accelerators and can efficiently process various AI algorithms and models, providing powerful computing support for artificial intelligence applications.

The Versal AI Edge series is designed for edge computing and terminal device applications and has the characteristics of low power consumption, small size, and high computing density. It is suitable for edge computing scenarios such as autonomous driving, intelligent security, and industrial automation, and can achieve efficient AI inference and real-time data processing on edge devices.

In short, Xilinx's portfolio covers application needs from entry-level to high-end: the FPGA, Zynq, and Versal families each offer devices suited to different application scenarios, supporting continued technological development and innovation.

For electronic component procurement, Yibeiic and ICgoodFind are reliable choices. Yibeiic offers a wide range of Xilinx products and other electronic components, backed by a professional service team and efficient logistics to deliver the parts you need on time. ICgoodFind also provides high-quality component procurement services and has earned many customers' trust with its extensive inventory and good reputation. Whether you need Xilinx FPGA, Zynq, or Versal devices or components from other brands, Yibeiic and ICgoodFind can meet your needs.

Summary by Yibeiic and ICgoodFind: Xilinx is a major company in the field of programmable logic devices, and its products are widely used across the electronics industry. As electronic component suppliers, Yibeiic and ICgoodFind will continue to follow industry trends and provide customers with high-quality Xilinx products and other electronic components. We also look forward to continued innovation from Xilinx in the electronics industry, and Yibeiic and ICgoodFind will keep providing customers with professional, efficient procurement services.


Future Electronics to Host Webinar on Microchip's SmartHLS Compiler Software

Future Electronics invites all designers and engineers to join this webinar to gain an in-depth understanding of the advantages of integrating SmartHLS into their next design project.

#Future Electronics#diodes#power ICs#optoelectronics#wireless#RF#Capacitors#Film#Mica Capacitors#Filters#Resistors#Switches#Amplifiers#Microcontroller


Future Electronics Webinar on Microchip's SmartHLS Compiler Software to Ensure Hardware Functionality

Designers often face challenges when migrating designs from one architecture to another. Microchip's SmartHLS compiler software elevates FPGA design abstraction from traditional hardware description languages to C/C++ software.

#Future Electronics#LED Lighting#Memory#Static RAM#lighting solutions#logic#Analog#electromechanical#interconnect#microcontroller#microprocessors#signal/interface#wireless & RF


oneAPI Construction Kit for RISC-V Processors

With the oneAPI Construction Kit, you can bring the oneAPI ecosystem to your RISC-V processor.

RISC-V

Codeplay, an Intel company, recently announced that its oneAPI Construction Kit supports RISC-V. RISC-V is a rapidly growing open-standard instruction set architecture (ISA), available under royalty-free open-source licenses for processors of all kinds.

The oneAPI programming model enables a single codebase to be deployed across multiple computing architectures, including CPUs, GPUs, FPGAs, and other accelerators. It combines direct programming in C++ with SYCL, a set of libraries for common functions such as math, threading, and neural networks, and a hardware abstraction layer that lets code written in one language target different devices.

To promote open-source collaboration and a cohesive, cross-architecture programming model free from proprietary software lock-in, the oneAPI standard is now overseen by the UXL Foundation.

Codeplay's oneAPI Construction Kit is a framework for extending the oneAPI ecosystem to custom AI and HPC architectures. The latest release, version 4.0, adds RISC-V as a native host for the first time, supporting both native on-host compilation and cross-compilation.

This capability lets programs run on a CPU while benefiting from the data-parallel acceleration that SYCL offers. With the oneAPI Construction Kit, RISC-V processor designers can now readily connect SYCL and the oneAPI ecosystem to their hardware, a key step toward a completely open hardware and software stack. The Kit is open source and free to use.

OneAPI Construction Kit

The oneAPI Construction Kit gives your processor access to an open ecosystem. It is a framework that brings SYCL and other open standards to new hardware platforms, and it can be used to extend the oneAPI ecosystem to custom AI and HPC architectures.

Give Developers Access to a Dynamic, Open-Ecosystem

With the oneAPI Construction Kit, new and custom accelerators can benefit from the oneAPI ecosystem and its abundance of SYCL libraries. Contributors from across the industry support and maintain this open ecosystem, so you can build knowing that features and libraries will be preserved. It also frees developers to innovate faster by reducing the time spent rewriting code and managing separate codebases.

The oneAPI Construction Kit is useful for anyone who designs hardware. To help you get started, the Kit includes a reference implementation for RISC-V vector processors, but it is not limited to RISC-V and can be adapted to a variety of processors.

Codeplay Enhances the oneAPI Construction Kit with RISC-V Support

RISC-V, a rapidly growing open-standard ISA, can be used for all kinds of processors, including accelerators and CPUs. Axelera, Codasip, and others build RISC-V processors for a variety of applications, and the EU is also developing RISC-V-based microprocessors as part of the European Processor Initiative.

Codeplay has long been a pioneer in open ecosystems. As a member of RISC-V International, it has worked on the project for a number of years, leading working groups that helped shape the standard. Codeplay recognizes that a genuinely open environment starts with open, standards-based hardware, but achieving it also requires open software and open source from top to bottom.

This is where oneAPI and SYCL come in, offering a mature programming model together with an ecosystem of open-source, standards-based software libraries for many kinds of applications, such as oneMKL and oneDNN. Both SYCL and oneAPI are heterogeneous: you can write code once and run it on hardware from AMD, Intel, NVIDIA, or, more recently, RISC-V, without being locked in to one manufacturer.


Read more on govindhtech.com

#OneAPIConstructionKit#IntelRISCV#SYCL#FPGA#IntelRISCVProcessorInterface#oneAPI#RISCV#oneDNN#oneMKL#RISCVSupport#OpenEcosystem#technology#technews#news#govindhtech


Senior Software Engineer

will join a growing Software Team in Penang focusing on designing and developing Lattice FPGA software tools for customers. Specifically… is expected to work closely with cross-functional teams to plan and execute the Lattice FPGA software release cycle including…


Making Money with Bitcoin - paladinmining.com

Are you interested in making money with Bitcoin? One of the most popular ways to earn Bitcoin is through mining. Mining involves using specialized hardware and software to verify transactions on the Bitcoin network and add them to the blockchain. In return for this service, miners are rewarded with newly generated bitcoins.

To get started with Bitcoin mining, you need to understand the basics of how it works. Bitcoin mining requires a significant amount of computational power, which can be quite expensive due to the high cost of electricity and the specialized equipment needed. However, with the right setup and strategy, it can be a lucrative venture. A reputable platform like Paladin Mining (https://paladinmining.com) can help you get started on the right foot.

Bitcoin mining is the process of adding transaction records to Bitcoin's public ledger of past transactions, known as the blockchain. Miners use powerful computers to repeatedly hash candidate block headers until one produces a hash below the network's difficulty target. When a miner successfully solves a block, they receive newly created bitcoins plus the fees of the transactions included in the block. The more computing power (hash rate) you have, the higher your chances of solving a block and earning rewards. Getting started involves setting up a mining rig and connecting it to a mining pool, which combines your computing power with that of other miners to increase your chances of earning rewards. Paladin Mining (https://paladinmining.com) offers services and resources to make this process easier, including comprehensive guides and support for beginners and experienced miners alike, with solutions tailored to different levels of expertise and budget. Its website provides detailed information on setting up a mining rig and joining a mining pool.
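The "problem" miners solve is a proof-of-work search: varying a nonce until the double SHA-256 hash of the block header falls below a target. The toy Python sketch below shows the idea. The all-zero 76-byte header prefix and the simplified `difficulty_bits` target are assumptions for illustration; real headers carry version, previous-block hash, Merkle root, timestamp, and a compact-encoded `bits` field, and real difficulty is astronomically higher.

```python
import hashlib
import struct
from typing import Optional, Tuple


def double_sha256(data: bytes) -> bytes:
    """Bitcoin hashes block headers with SHA-256 applied twice."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def mine(header_prefix: bytes, difficulty_bits: int, max_nonce: int = 2**32) -> Optional[Tuple[int, str]]:
    """Search for a 32-bit nonce whose header hash is below a toy target."""
    target = 1 << (256 - difficulty_bits)  # simplified: require leading zero bits
    for nonce in range(max_nonce):
        header = header_prefix + struct.pack("<I", nonce)  # nonce stored little-endian
        digest = double_sha256(header)
        # Bitcoin compares the hash as a little-endian 256-bit integer
        if int.from_bytes(digest, "little") < target:
            return nonce, digest[::-1].hex()  # reversed bytes = conventional display order
    return None


if __name__ == "__main__":
    print(mine(bytes(76), difficulty_bits=12))
```

Each extra difficulty bit doubles the expected number of hashes, which is why dedicated ASICs dominate real mining.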

Paladin Mining (https://paladinmining.com) can guide you through the entire process, from choosing the right hardware to optimizing your mining setup. They offer a user-friendly interface and tools to help you start mining effectively.

First, you'll need the necessary hardware, such as ASICs (Application-Specific Integrated Circuits), which are designed specifically for mining cryptocurrencies and are far more efficient than traditional CPUs, GPUs, or even FPGAs. Paladin Mining also offers hosting, maintenance, and management of your mining rigs, so you can focus on mining without worrying about the technical details; this simplifies the process and can reduce initial investment and operating costs. Its website provides a step-by-step guide to choosing equipment and software, and its team and community of experts offer ongoing support, updates on the latest technologies and market trends, and help with setting up and maintaining your rig. By joining a mining pool, you share the computational load and avoid the high variance of solo mining, where competition is fierce and a single miner may go a long time without finding a block; in a pool, rewards are shared among participants in proportion to the computing power each contributes. This collaborative approach makes mining more accessible and income more predictable.
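Pools use several payout schemes (PPS, PPLNS, and others). As a hedged sketch of the simplest proportional idea described above, the function below splits a block reward by submitted shares after deducting a pool fee. The 2% fee (200 basis points), the miner names, and the share counts are hypothetical; amounts are integer satoshis to avoid floating-point rounding.

```python
from typing import Dict


def split_block_reward(reward_sats: int, pool_fee_bps: int, shares: Dict[str, int]) -> Dict[str, int]:
    """Proportional split: each miner receives (their shares / total shares) of the reward after the fee."""
    total_shares = sum(shares.values())
    if total_shares == 0:
        raise ValueError("no shares submitted")
    fee = reward_sats * pool_fee_bps // 10_000  # fee expressed in basis points
    distributable = reward_sats - fee
    # Integer division may leave a few satoshis of rounding dust; this sketch leaves it with the pool
    return {miner: distributable * n // total_shares for miner, n in shares.items()}


if __name__ == "__main__":
    # Hypothetical: a 1 BTC (100,000,000 sat) reward, 2% fee, two miners
    print(split_block_reward(100_000_000, 200, {"alice": 3, "bob": 1}))
```

A miner contributing three times the shares receives three times the payout, which is the "based on the computational power you contribute" rule in code form.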

For more information and to get started with Bitcoin mining, visit https://paladinmining.com today!

Add on Telegram: @yuantou2048

Paladin Mining

paladinmining


Virtualization in BIOS: Enabled or Disabled? How to Check in Windows 10 / Windows 11


Subscribe to "Learn And Grow Community"

YouTube : https://www.youtube.com/@LearnAndGrowCommunity

LinkedIn Group : https://www.linkedin.com/groups/7478922/

Blog : https://LearnAndGrowCommunity.blogspot.com/

Facebook : https://www.facebook.com/JoinLearnAndGrowCommunity/

Twitter Handle : https://twitter.com/LNG_Community

DailyMotion : https://www.dailymotion.com/LearnAndGrowCommunity

Instagram Handle : https://www.instagram.com/LearnAndGrowCommunity/

Follow #LearnAndGrowCommunity

Virtualization is a technology that allows a computer to run multiple operating systems at the same time by creating virtual machines, which are software emulations of physical computers. Hardware support for virtualization (Intel VT-x or AMD-V) is switched on or off in the BIOS/UEFI firmware.

To check if virtualization is enabled in Windows 10 or Windows 11, you can follow these steps:

Open Command Prompt.

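Type systeminfo and press Enter.

Scroll to the end of the output, to the "Hyper-V Requirements" section.

Check whether "Virtualization Enabled In Firmware" shows Yes or No. (If a hypervisor such as Hyper-V is already running, this section instead reports "A hypervisor has been detected", which means virtualization is enabled.)

Alternatively:

Open Task Manager.

Click the "Performance" tab.

Select "CPU" and look for the "Virtualization" field below the graph.

If it says "Enabled", virtualization is turned on in your firmware.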
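The systeminfo check can also be scripted. The Python sketch below parses `systeminfo` output for the fields described above; it assumes English-language Windows output (the labels are localized on other system languages), and the function name is hypothetical.

```python
import re
import subprocess
import sys
from typing import Optional

HYPERVISOR_MSG = "A hypervisor has been detected"
FIRMWARE_RE = re.compile(r"Virtualization Enabled In Firmware:\s*(Yes|No)", re.IGNORECASE)


def firmware_virtualization_enabled(systeminfo_text: str) -> Optional[bool]:
    """Parse systeminfo output; None means the relevant field was not found."""
    if HYPERVISOR_MSG in systeminfo_text:
        # A hypervisor is already running, so firmware virtualization must be on
        return True
    match = FIRMWARE_RE.search(systeminfo_text)
    if match is None:
        return None
    return match.group(1).lower() == "yes"


if __name__ == "__main__" and sys.platform == "win32":
    output = subprocess.run(["systeminfo"], capture_output=True, text=True, check=True).stdout
    state = firmware_virtualization_enabled(output)
    print({True: "Enabled", False: "Disabled", None: "Unknown"}[state])
```

Separating the parser from the `subprocess` call keeps the logic testable on any machine, while the actual command only runs on Windows.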

If virtualization is not enabled in your BIOS, you may not be able to run certain applications or games, such as virtual machines or emulators. You can enable it in the BIOS/UEFI settings by following the instructions in your computer's or motherboard's manual. I hope this helps! Thanks for watching! If you found this video helpful, please consider subscribing to @LearnAndGrowCommunity for more EdTech tips and tricks.


#VHDL #VHDLDesign #BeginnersGuide #DigitalCircuitDesign #LearnVHDL #VHDLTutorial #VHDLBasics #hardwaredesign #FPGA #VerilogHDL #FPGAtools #DesignFlow #XilinxVivado #AlteraQuartusPrime #GHDL #Verilog #DigitalDesign #FPGAoptimization #TimingAnalysis #PhysicalImplementation #FPGAdevelopment #LearnFPGA #FPGAdesignskills #FPGAbeginners #Career #Grow #LearnAndGrowCommunity #HDL #HardwareDescription #DigitalCircuits #FSM #DesignVerification #DigitalElectronics #VHDLLanguage #HardwareSimulation #Tips #Tipsandtricks #tricks #Simulation #Synthesis #Xilinx #Altera #Quartus #ActiveHDL #ASIC #PlaceandRoute #Tutorial #Learn #SkillUp #HDLDesignLab #DigitalSystemLab #Engineering #TestBench #chip #VLSI #Designing #Programming #Technology #ProgrammingLanguage #EmbeddedSystem #Circuitdesign #VirtualizationInBIOS #EnabledOrDisabled #Windows10 #Windows11 #BIOSConfiguration #VirtualMachines #SoftwareApplications #techtutorials


ARM Industrial Computers ARMxy & OpenPLC: Redefining Cost-Efficient Industrial Automation

Case Details

1. Characteristics and Advantages of ARM Industrial Computers

Low Power Consumption, High Performance: ARM-based processors (e.g., Cortex-A series) deliver strong computational capabilities with low power consumption, ideal for industrial scenarios requiring continuous operation.

Rich Interface Support: Supports industrial communication interfaces such as GPIO, CAN, RS-485, Ethernet, and USB, enabling direct connectivity to sensors, actuators, and industrial bus devices.

Compact and Rugged Design: Industrial-grade construction (wide temperature tolerance, vibration resistance, dust resistance) ensures reliability in harsh environments, with a small form factor for embedded deployment.

Linux/RTOS Compatibility: Runs Linux (e.g., Debian, Ubuntu Core) or real-time operating systems (e.g., FreeRTOS) to meet real-time requirements.

2. Core Features of OpenPLC

Open Source and Cross-Platform: Complies with the IEC 61131-3 standard, supporting programming languages such as Ladder Logic and Structured Text (ST), and runs on Windows, Linux, and Raspberry Pi platforms.

Flexible Deployment: Compatible with x86/ARM hardware, deployable as a soft PLC (on general-purpose computers) or hard PLC (e.g., Raspberry Pi, ARM industrial PCs).

Protocol Compatibility: Built-in support for Modbus TCP/RTU, MQTT, OPC UA, and other protocols, facilitating integration with SCADA, HMI, and cloud systems.

Extensibility: Allows custom function blocks via Python/C++ to integrate AI algorithms or third-party libraries.

3. Typical Application Scenarios for ARM + OpenPLC

3.1 Automation of Small to Medium-Sized Production Lines

Example: Food Packaging Machinery Control

ARM industrial PC (e.g., NXP i.MX8) runs OpenPLC to control servo motors and pneumatic cylinders via Modbus RTU.

HMI touchscreens interact with OpenPLC via Modbus TCP for real-time monitoring.

Advantages: Costs 1/3 of traditional PLC solutions, with support for custom algorithms (e.g., visual quality inspection).
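The Modbus traffic in this example can be sketched in Python. The functions below build a Read Holding Registers (function code 0x03) request in Modbus TCP form, as the HMI would send, and in Modbus RTU form with its CRC-16, as used on the serial link to the drives, and decode a TCP response. The unit/slave IDs and register addresses are hypothetical, and in practice a maintained library such as pymodbus would normally handle this framing.

```python
import struct
from typing import List

READ_HOLDING_REGISTERS = 0x03


def build_tcp_read_request(transaction_id: int, start_addr: int, count: int, unit_id: int = 1) -> bytes:
    """Modbus TCP frame: 7-byte MBAP header followed by the PDU."""
    if not 1 <= count <= 125:
        raise ValueError("a single read is limited to 1..125 registers")
    pdu = struct.pack(">BHH", READ_HOLDING_REGISTERS, start_addr, count)
    # MBAP: transaction id, protocol id (0 = Modbus), byte count of unit id + PDU, unit id
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_tcp_read_response(frame: bytes) -> List[int]:
    """Extract 16-bit register values from a Read Holding Registers response."""
    function = frame[7]
    if function & 0x80:  # high bit set means an exception response
        raise RuntimeError(f"Modbus exception code {frame[8]}")
    byte_count = frame[8]
    return list(struct.unpack(f">{byte_count // 2}H", frame[9:9 + byte_count]))


def crc16_modbus(data: bytes) -> int:
    """CRC-16/MODBUS: reflected polynomial 0xA001, initial value 0xFFFF."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = (crc >> 1) ^ 0xA001 if crc & 1 else crc >> 1
    return crc


def build_rtu_read_request(slave_id: int, start_addr: int, count: int) -> bytes:
    """Modbus RTU frame: slave address + PDU + CRC, with the CRC sent low byte first."""
    body = struct.pack(">BBHH", slave_id, READ_HOLDING_REGISTERS, start_addr, count)
    return body + struct.pack("<H", crc16_modbus(body))


if __name__ == "__main__":
    print(build_tcp_read_request(1, 0, 10).hex())  # request to OpenPLC over TCP
    print(build_rtu_read_request(1, 0, 10).hex())  # same read on an RS-485 serial line
```

The two framings carry the same PDU; TCP relies on the MBAP length field and TCP's own checksums, while RTU appends a CRC because the serial line has no other error detection.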

3.2 Distributed Energy Management

Example: Photovoltaic Power Station Monitoring

ARM gateway (e.g., Allwinner T507) runs OpenPLC to collect inverter data (RS-485) and upload it to the cloud via MQTT.

Local logic control (e.g., battery charge/discharge strategies) is executed directly by OpenPLC.

Advantages: Edge computing reduces cloud workload and supports offline operation.
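Local charge/discharge logic like this would normally be written in one of OpenPLC's IEC 61131-3 languages such as Structured Text; the Python model below only illustrates the shape of the decision rule. The state-of-charge limits, the deadband, and the rule itself are hypothetical assumptions, not a recommended battery-management strategy.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    IDLE = "idle"
    CHARGE = "charge"
    DISCHARGE = "discharge"


@dataclass
class BatteryPolicy:
    soc_min_pct: float = 20.0   # hypothetical: never discharge below this state of charge
    soc_max_pct: float = 90.0   # hypothetical: stop charging above this state of charge
    deadband_w: float = 200.0   # ignore small imbalances to avoid rapid mode switching

    def decide(self, pv_w: float, load_w: float, soc_pct: float) -> Mode:
        surplus_w = pv_w - load_w
        if surplus_w > self.deadband_w and soc_pct < self.soc_max_pct:
            return Mode.CHARGE      # store excess solar energy
        if surplus_w < -self.deadband_w and soc_pct > self.soc_min_pct:
            return Mode.DISCHARGE   # cover the deficit from the battery
        return Mode.IDLE


if __name__ == "__main__":
    policy = BatteryPolicy()
    print(policy.decide(pv_w=5000, load_w=2000, soc_pct=50))
```

Because the rule runs on the gateway itself, it keeps working when the MQTT link to the cloud is down, which is the offline-operation advantage noted above.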

3.3 Smart Warehouse Systems

Example: AGV Scheduling Control

Multiple ARM industrial PCs (e.g., Rockchip RK3568) run OpenPLC to coordinate AGV paths via CAN bus.

Integrates ROS nodes for SLAM navigation, with OpenPLC handling motor control and obstacle avoidance.

Advantages: Unified hardware-software design simplifies multi-device coordination.

4. Comparison with Traditional PLC Solutions

| Aspect | ARM + OpenPLC | Traditional PLC (e.g., Siemens S7-1200) |
|---|---|---|
| Cost | Hardware cost reduced by over 50% | High hardware/licensing fees |
| Customization | Deep customization (drivers/algorithms) | Closed ecosystem, vendor-dependent |
| Ecosystem | Seamless Python/Node.js integration | Limited to TIA Portal/CODESYS |
| Use Cases | SMEs, R&D, rapid prototyping | Large-scale, high-reliability systems |

5. Challenges and Mitigation Strategies

Real-Time Limitations: Standard ARM + Linux lacks hard real-time guarantees; apply real-time kernel patches (e.g., PREEMPT_RT) or offload time-critical tasks to an FPGA coprocessor.

Long-Term Maintenance: Open-source version updates may cause compatibility issues; pin versions and maintain your own forks.

Security Risks: Harden the Linux system (e.g., move SSH off the default port or disable it when unused, enable SELinux).

6. Conclusion

The combination of ARM industrial computers and OpenPLC offers a cost-effective, lightweight PLC solution for industrial automation, particularly suited to scenarios requiring rapid iteration and customization (e.g., smart agriculture, lab equipment). For mission-critical environments (e.g., nuclear plants, railways), the proven reliability of traditional PLCs must still be weighed. As the ARM ecosystem matures, alongside emerging alternatives such as RISC-V industrial chips, this approach is poised to expand further into industrial markets.


Servotech’s Edge in Embedded Control Software Systems

Introduction

In today’s fast-evolving technological landscape, embedded control software systems play a pivotal role in driving efficiency, automation, and precision across industries. Servotech has established itself as a leader in this domain, offering cutting-edge solutions tailored to meet the dynamic needs of automotive, industrial automation, healthcare, and IoT sectors. By leveraging advanced algorithms, real-time processing, and robust hardware integration, Servotech delivers superior embedded control software systems that enhance performance and reliability.

Understanding Embedded Control Software Systems

Embedded control software systems are specialized programs designed to manage and control hardware devices efficiently. These systems are integrated into microcontrollers and processors, ensuring seamless operation and real-time decision-making for various applications. They are widely used in automotive systems, smart appliances, industrial machines, medical devices, and more.

Key Features of Embedded Control Software Systems

Real-time Processing: Ensures rapid response and seamless execution of commands.

Scalability: Adapts to different hardware configurations and application requirements.

Power Efficiency: Optimized to consume minimal energy while maintaining high performance.

Robust Security: Implements encryption and access control measures to prevent unauthorized access.

Customizability: Designed to meet specific industry standards and functional needs.

Servotech’s Expertise in Embedded Control Software Systems

Servotech has distinguished itself in the embedded software industry by integrating state-of-the-art technology, innovative engineering approaches, and industry-specific solutions. The company focuses on delivering high-quality software that optimizes hardware functionality and ensures seamless interoperability.

Advanced Hardware-Software Integration

Servotech specializes in creating embedded solutions that efficiently bridge the gap between hardware and software. Its software seamlessly integrates with microcontrollers, FPGAs, and DSPs, enabling real-time operations and enhanced control across multiple domains.

Industry-Specific Solutions

Servotech provides tailor-made embedded control solutions for various industries, ensuring optimal performance and compliance with regulatory standards.

Automotive: ECU software for engine management, ADAS (Advanced Driver Assistance Systems), and infotainment control.

Industrial Automation: PLCs, SCADA systems, and motion control software for manufacturing and process automation.

Healthcare: Embedded software for medical imaging devices, diagnostic tools, and wearable health monitors.

IoT and Smart Devices: Connectivity solutions for smart home devices, industrial IoT systems, and wireless communication networks.

The Competitive Edge of Servotech

Servotech differentiates itself from competitors by emphasizing innovation, reliability, and efficiency. Here are some of the key factors that give Servotech an edge in the embedded control software domain:

1. Cutting-Edge Software Development

Servotech employs modern development methodologies, including Agile and DevOps, to enable rapid deployment of embedded solutions. Its use of model-based design (MBD) and software-in-the-loop (SIL) testing improves software quality and shortens time-to-market.

2. High-Performance Real-Time Operating Systems (RTOS)

The integration of real-time operating systems (RTOS) in Servotech’s embedded solutions ensures deterministic behavior, efficient multitasking, and optimal resource utilization. These systems are crucial for applications requiring millisecond-level precision, such as automotive safety systems and industrial automation.
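Deterministic behavior in an RTOS is usually argued with schedulability analysis. As a hedged illustration of a textbook technique (not Servotech's documented process), the sketch below checks a periodic task set under rate-monotonic fixed-priority scheduling, first with the Liu and Layland utilization bound and then with exact response-time analysis. Each task is a hypothetical (C, T) pair: worst-case execution time and period in milliseconds, with deadlines equal to periods.

```python
import math
from typing import List, Tuple

Task = Tuple[int, int]  # (worst-case execution time C, period T)


def utilization(tasks: List[Task]) -> float:
    return sum(c / t for c, t in tasks)


def liu_layland_bound(n: int) -> float:
    """Sufficient (not necessary) utilization bound for n tasks under rate-monotonic scheduling."""
    return n * (2 ** (1 / n) - 1)


def response_times(tasks: List[Task]) -> List[Tuple[int, int, int]]:
    """Worst-case response time of each task; shorter period = higher priority."""
    ordered = sorted(tasks, key=lambda ct: ct[1])
    results = []
    for i, (c_i, t_i) in enumerate(ordered):
        r = c_i
        while True:
            # Interference from every higher-priority task released during the window r
            interference = sum(math.ceil(r / t_j) * c_j for c_j, t_j in ordered[:i])
            r_next = c_i + interference
            if r_next == r or r_next > t_i:  # converged, or deadline already missed
                r = r_next
                break
            r = r_next
        results.append((c_i, t_i, r))
    return results


def rm_schedulable(tasks: List[Task]) -> bool:
    return all(r <= t for _, t, r in response_times(tasks))


if __name__ == "__main__":
    tasks = [(2, 4), (2, 5)]
    print(utilization(tasks), liu_layland_bound(len(tasks)), response_times(tasks))
```

For the set [(2, 4), (2, 5)], utilization (0.9) exceeds the two-task bound (about 0.828), which only means the quick test is inconclusive; response-time analysis then shows worst-case response times of 2 ms and 4 ms, so both deadlines are met.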

3. AI-Driven Embedded Systems

Servotech is at the forefront of integrating artificial intelligence (AI) and machine learning (ML) into embedded control software. AI-driven embedded systems enhance predictive maintenance, adaptive control, and autonomous decision-making, leading to improved efficiency and reduced operational costs.
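Predictive maintenance on embedded hardware often starts with lightweight statistical anomaly detection before any ML model is involved. The sketch below, an exponentially weighted moving average (EWMA) detector using constant memory, is a generic example; the smoothing factor, threshold, and warm-up length are hypothetical tuning values, and nothing here describes Servotech's actual algorithms.

```python
import math
from dataclasses import dataclass


@dataclass
class EwmaDetector:
    alpha: float = 0.1      # hypothetical smoothing factor
    k: float = 4.0          # flag readings more than k standard deviations from the mean
    warmup: int = 20        # samples to observe before flagging anything
    min_std: float = 1e-6   # floor so a perfectly flat signal cannot divide the threshold to zero
    mean: float = 0.0
    var: float = 0.0
    n: int = 0

    def update(self, x: float) -> bool:
        """Feed one sensor reading; return True if it looks anomalous."""
        if self.n == 0:
            self.mean = x
            self.n = 1
            return False
        diff = x - self.mean
        std = max(math.sqrt(self.var), self.min_std)
        is_anomaly = self.n >= self.warmup and abs(diff) > self.k * std
        if not is_anomaly:
            # Incremental EWMA mean and variance; outliers are kept out of the baseline
            incr = self.alpha * diff
            self.mean += incr
            self.var = (1 - self.alpha) * (self.var + diff * incr)
        self.n += 1
        return is_anomaly


if __name__ == "__main__":
    det = EwmaDetector()
    readings = [10.0, 10.2] * 25 + [15.0]  # e.g. a vibration level that suddenly jumps
    print([i for i, x in enumerate(readings) if det.update(x)])
```

Excluding flagged samples from the running statistics is a design choice that keeps a single spike from masking the next one; a real deployment would also need a policy for genuine, permanent shifts in the baseline.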

4. Cybersecurity and Data Protection

With increasing cybersecurity threats, Servotech implements advanced encryption techniques, secure boot mechanisms, and anomaly detection algorithms to safeguard embedded systems from cyber-attacks and data breaches.
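A secure boot chain refuses to run firmware that fails verification. The deliberately simplified Python sketch below checks a SHA-256 digest of the image against a trusted reference value; production secure boot instead verifies an asymmetric signature (for example ECDSA or RSA) with a public key anchored in ROM or fuses. The function names and return strings are hypothetical.

```python
import hashlib
import hmac


def image_digest(image: bytes) -> bytes:
    return hashlib.sha256(image).digest()


def verify_image(image: bytes, expected_digest: bytes) -> bool:
    """Return True only if the image hashes to the trusted reference digest."""
    # compare_digest avoids leaking, through timing, how many leading bytes matched
    return hmac.compare_digest(image_digest(image), expected_digest)


def boot(image: bytes, expected_digest: bytes) -> str:
    """Model of a boot stage: hand over control only to a verified image."""
    if not verify_image(image, expected_digest):
        return "halt: image verification failed"
    return "jump to application"


if __name__ == "__main__":
    firmware = b"firmware v1.0"
    trusted = image_digest(firmware)  # in a real device this value would be provisioned securely
    print(boot(firmware, trusted))
    print(boot(firmware + b"\x00", trusted))  # a single appended byte is enough to fail
```

Any modification to the image, however small, changes the digest, so a tampered image never reaches the "jump" step.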

5. Compliance with Industry Standards

Servotech ensures that all its embedded solutions comply with industry regulations such as ISO 26262 (automotive safety), IEC 62304 (medical device software), and IEC 61508 (industrial functional safety). Compliance with these standards helps ensure the reliability, safety, and interoperability of the systems.

Applications of Servotech’s Embedded Control Software

Servotech's embedded solutions are deployed in a wide range of applications across different industries:

Automotive Sector

Electronic Control Units (ECUs) for engine, transmission, and braking systems.

ADAS software for collision avoidance, lane departure warnings, and adaptive cruise control.

Infotainment and navigation systems for enhanced user experience.

Industrial Automation

Robotics control software for precision manufacturing.

SCADA and PLC software for monitoring and automating industrial processes.

Smart sensors and actuators for predictive maintenance and real-time analytics.

Healthcare and Medical Devices

Embedded control software for pacemakers, MRI machines, and blood pressure monitors.

Software for remote patient monitoring and telemedicine applications.

AI-driven diagnostic tools for medical imaging and analysis.

IoT and Smart Devices

Embedded firmware for smart home automation systems.

Secure IoT communication protocols for data transmission.

AI-enhanced edge computing solutions for real-time decision-making.

Future Prospects and Innovations

Servotech continues to push the boundaries of embedded control software systems with ongoing research and development initiatives. Some of the upcoming trends and innovations include:

1. Edge Computing for Real-Time Processing

Servotech is investing in edge computing technologies to reduce latency and improve real-time decision-making in embedded systems. This approach enhances the efficiency of IoT devices and industrial automation systems.

2. 5G-Enabled Embedded Systems

With the advent of 5G networks, Servotech is developing embedded solutions that leverage high-speed, low-latency communication for applications such as connected cars, remote surgery, and industrial automation.

3. Blockchain for Secure Embedded Systems

To enhance data integrity and security, Servotech is exploring blockchain-based authentication and encryption methods for embedded systems, particularly in IoT and financial technology applications.

4. AI-Driven Predictive Analytics

Machine learning algorithms integrated into embedded control systems will enable predictive maintenance, self-learning automation, and autonomous decision-making, reducing downtime and increasing efficiency.

Conclusion

Servotech stands out as a leader in embedded control software systems by delivering high-performance, secure, and innovative solutions across industries. With a focus on real-time processing, AI integration, cybersecurity, and compliance with industry standards, Servotech continues to drive advancements in embedded technology. As industries evolve towards greater automation and connectivity, Servotech’s expertise in embedded systems will remain crucial in shaping the future of smart, efficient, and intelligent systems.
