#FPGA Companies


Text

[364 Pages Report] The FPGA market was valued at USD 12.1 billion in 2024 and is estimated to reach USD 25.8 billion by 2029, registering a CAGR of 16.4% during the forecast period.
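The 16.4% figure can be checked from the two endpoint values with the standard CAGR formula, CAGR = (end / start)^(1 / years) - 1. Below is a minimal Python sketch. It assumes five compounding years between 2024 and 2029, and the function name `cagr` is only illustrative:

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Return the compound annual growth rate as a fraction (0.164 == 16.4%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    # Constant yearly rate r that satisfies start * (1 + r) ** years == end.
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Figures quoted in the post: USD 12.1 billion (2024) -> USD 25.8 billion (2029).
    rate = cagr(12.1, 25.8, 2029 - 2024)
    print(f"{rate:.1%}")  # prints 16.4%
```

The exact result is about 16.35%, which rounds to the quoted 16.4%.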

0 notes

Text

Apart from the FPGA design, Voler Systems developed the firmware needed to test board functionality, which enabled the customer to finalize their own firmware development. Voler Systems worked closely with their mechanical design team to match the device's electrical, mechanical, and environmental requirements. Their engineers made sure the device was functional, durable, and reliable under the extreme conditions common during military operations.

#Electronic Design Services#Electronic Product Design#Electronics Design Company#Electronics Design Services#FPGA Design#FPGA Development

1 note


Note

What are some of the coolest computer chips ever, in your opinion?

Hmm. There are a lot of chips, and a lot of different things you could call a Computer Chip. Here are a few that come to mind as "interesting" or "important", or, if I can figure out what that means, "cool".

If your favourite chip is not on here, honestly it probably deserves to be, and I either forgot it or classified it more under "general ICs" instead of "computer chips" (e.g. 555, LM, 4000, and 7400 series chips, those last three each capable of filling a book on their own). The 6502 is not here because I do not know much about the 6502; I was neither an Apple nor a BBC Micro type of kid. I am also not 70 years old, so as much as I love the DEC Alphas, I have never so much as breathed on one.

Disclaimer: I wrote this mostly out of my head and/or ass at one in the morning, so do not use any of this as a source in an argument without checking.

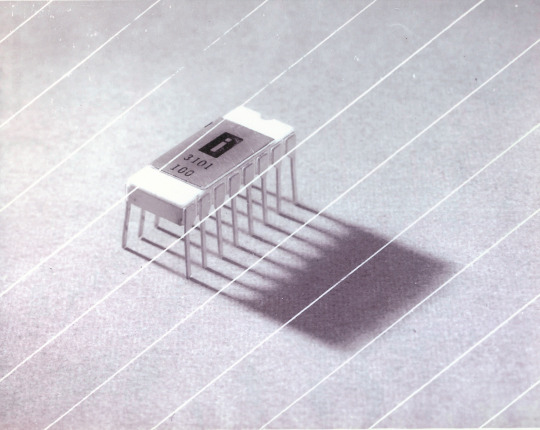

Intel 3101

So I mean, obvious shout, the Intel 3101, a 64-bit chip from 1969, and Intel's first ever product. You may look at that, and go, "wow, 64-bit computing in 1969? That's really early" and I will laugh heartily and say no, that's not 64-bit computing, that is 64 bits of SRAM memory.
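Here is a concrete way to see the "64 bits, not 64-bit" distinction. The 3101 is generally documented as 16 words of 4 bits each. So the 64 is the total capacity, while the data path is only 4 bits wide. The toy Python model below assumes that organization and ignores everything electrical. The class name `SRAM16x4` is made up for illustration:

```python
class SRAM16x4:
    """Toy model of a 64-bit memory organized as 16 words of 4 bits each."""

    WORDS = 16  # addressable locations (a 4-bit address)
    WIDTH = 4   # bits stored per location

    def __init__(self) -> None:
        self._cells = [0] * self.WORDS

    def _check(self, address: int) -> None:
        if not 0 <= address < self.WORDS:
            raise IndexError("address must fit in 4 bits (0-15)")

    def write(self, address: int, value: int) -> None:
        self._check(address)
        # Only the low 4 bits fit in a word; anything wider is truncated.
        self._cells[address] = value & ((1 << self.WIDTH) - 1)

    def read(self, address: int) -> int:
        self._check(address)
        return self._cells[address]

    @property
    def capacity_bits(self) -> int:
        return self.WORDS * self.WIDTH  # 16 * 4 = 64 bits in total


if __name__ == "__main__":
    mem = SRAM16x4()
    mem.write(3, 0b1010)
    print(mem.read(3), mem.capacity_bits)  # prints 10 64
```

Nothing in there is 64 bits wide. The largest value any single location holds is 15.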

This one is cool because it's cute. Look at that. This thing was completely hand-designed by engineers drawing the shapes of transistor gates on sheets of overhead transparency and exposing pieces of crudely spun silicon to light in a """"cleanroom"""" that would cause most modern fab equipment to swoon like a delicate Victorian lady. Semiconductor manufacturing was maturing at this point, but a fab still had more in common with a darkroom for film development than with the mega-expensive, building-sized machines we use today.

As that link above notes, these things were really rough and tumble, and designs were being updated on the scale of weeks as Intel learned, well, how to make chips at an industrial scale. They weren't the first company to do this (in the 60's you could run a chip fab out of a sufficiently well-sealed garage), but they were busy laying the groundwork for the next sixty years.

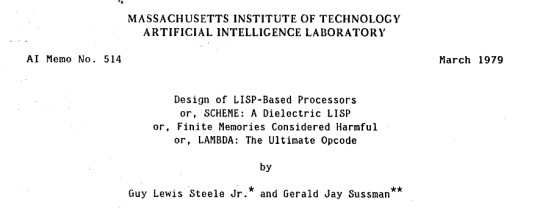

Lisp Chips

This is a family of utterly bullshit prototype processors that failed to be born in the whirlwind days of AI research in the 70's and 80's.

Lisps, a very old but exceedingly clever family of functional programming languages, were the language of choice for AI research at the time. Lisp compilers and interpreters had all sorts of tricks for compiling Lisp down to instructions, and also the hardware was frequently being built by the AI researchers themselves with explicit aims to run Lisp better.

The illogical conclusion of this was attempts to implement Lisp right in silicon, no translation layer.

Yeah, that is Sussman himself on this paper.

These never left labs, and there have since been dozens of abortive attempts to make Lisp Chips happen because the idea is so extremely attractive to a certain kind of programmer, the most recent big one being a pile of weird designs aimed at running Open Genera. I bet you there are no less than four members of r/lisp who have bought an iCEstick FPGA in the past year with the explicit goal of writing their own Lisp Chip. It will fail, because this is a terrible idea, but damn if it isn't cool.

There were many more chips that bridged this gap: stuff designed by or for Symbolics (like the Ivory series of chips or the 3600) to go into their Lisp machines, exploiting the up-and-coming field of microcode optimization to improve Lisp performance. But sadly there are no known working true Lisp Chips in the wild.

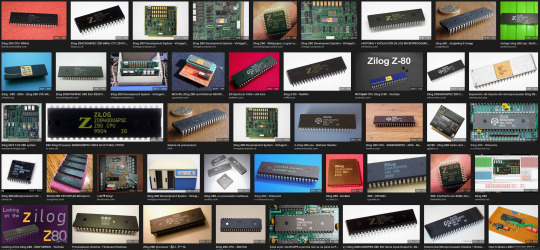

Zilog Z80

Perhaps the most important chip that ever just kinda hung out. The Z80 was almost, almost the basis of The Future. The Z80 is bizarre. It is a software-compatible clone of the Intel 8080, which is to say that it runs the same instructions but implements them in a completely different way.
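The "same instructions" point can be shown at the byte level. An 8080 program is a sequence of opcode bytes, and the Z80 executes those same bytes, even though Zilog documented them under its own mnemonics. (The Z80 also adds extra instructions the 8080 lacks.) The toy Python disassembler below covers only a handful of opcodes. The table and the `disassemble` helper are illustrative, not a complete decoder:

```python
# A few opcodes shared by the 8080 and the Z80: same byte, same behaviour,
# different mnemonics in Intel's and Zilog's documentation.
# Each entry: (Intel mnemonic, Zilog mnemonic, number of operand bytes).
SHARED_OPCODES = {
    0x00: ("NOP", "NOP", 0),
    0x3E: ("MVI A,{op}", "LD A,{op}", 1),
    0x78: ("MOV A,B", "LD A,B", 0),
    0x80: ("ADD B", "ADD A,B", 0),
    0x76: ("HLT", "HALT", 0),
    0xC3: ("JMP {op}", "JP {op}", 2),
    0xC9: ("RET", "RET", 0),
    0xCD: ("CALL {op}", "CALL {op}", 2),
}


def disassemble(code: bytes, dialect: str) -> list[str]:
    """Decode bytes using 8080 ("intel") or Z80 ("zilog") mnemonics."""
    column = {"intel": 0, "zilog": 1}[dialect]
    lines = []
    pc = 0
    while pc < len(code):
        entry = SHARED_OPCODES.get(code[pc])
        if entry is None:
            raise ValueError(f"opcode {code[pc]:#04x} is not in this toy table")
        template, size = entry[column], entry[2]
        operand = code[pc + 1 : pc + 1 + size]
        if len(operand) != size:
            raise ValueError("truncated instruction")
        if size:
            value = int.from_bytes(operand, "little")  # both CPUs are little-endian
            lines.append(template.format(op=f"{value:0{2 * size}X}h"))
        else:
            lines.append(template)
        pc += 1 + size
    return lines


if __name__ == "__main__":
    program = bytes([0x3E, 0x2A, 0x78, 0xCD, 0x34, 0x12, 0x76])
    print(disassemble(program, "intel"))  # ['MVI A,2Ah', 'MOV A,B', 'CALL 1234h', 'HLT']
    print(disassemble(program, "zilog"))  # ['LD A,2Ah', 'LD A,B', 'CALL 1234h', 'HALT']
```

The byte string is identical in both calls. Only the names printed for each instruction change.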

This is a strange choice, but somehow it was the right one, because through the 80's and 90's practically every single piece of technology made in Japan contained at least one, maybe two Z80's, even if there was no readily apparent reason why it should have one (or two). I will defer to Cathode Ray Dude here: what follows is a joke, but only barely.

The Z80 is the basis of the MSX, the IBM PC of Japan, which was produced through a system of hardware and software licensing to third party manufacturers by Microsoft of Japan which was exactly as confusing as it sounds. The result is that the Z80, originally intended for embedded applications, ended up forming the basis of an entire alternate branch of the PC family tree.

It is important to note that the Z80 is boring. It is a normal-ass chip but it just so happens that it ended up being the focal point of like a dozen different industries all looking for a cheap, easy to program chip they could shove into Appliances.

Effectively everything that happened to the Intel 8080 happened to the Z80 and then some: black market clones, reverse-engineered Soviet compatibles, licensed second-source manufacturers, hundreds of semi-compatible bastard half-sisters made by anyone with a fab, used in everything from toys to industrial machinery, still persisting to this day as an embedded processor that is probably powering something near you quietly and without much fuss. If you have one of those old TI-86 calculators, that's a Z80. Oh, also, a horrible hybrid Z80/8080 from Sharp powered the original Game Boy.

I was going to try and find a picture of a Z80 by just searching for it and look at this mess! There's so many of these things.

I mean the CP/M computers. The ZX Spectrum, I almost forgot that one! I can keep making this list go! So many bits of the Tech Explosion of the 80's and 90's are powered by the Z80. I was not joking when I said that you sometimes found more than one Z80 in a single computer, because you might use one Z80 to run the computer and another Z80 to run a specialty peripheral like a video toaster or music synthesizer. Everyone imaginable has had their hand on the Z80 ball at some point in time or another. Z80-based devices probably launched several dozen hardware companies that persist to this day, and I have no idea which ones because there were so goddamn many.

The Z80 eventually got super efficient due to process shrinks, so it turns up in weird laptops and handhelds! Zilog and the Z80 persist to this day like some kind of crocodile beast; you can go to RS Components and buy a brand new piece of Z80 silicon clocked at 20MHz. There's probably a couple in a car somewhere near you.

Pentium (P6 microarchitecture)

Yeah, I am going to bring up the Hackers chip. The Pentium P6 series is currently remembered for being the chip that Acid Burn geeks out over in Hackers (1995) instead of making out with her boyfriend, but IMO it is actually noteworthy for being one of the first mainstream chips to start pulling serious tricks on the system running it.

The P6 microarchitecture comes out swinging with like four or five tricks to get around the numerous problems with x86, and it deploys them all at once. It has superscalar pipelining, it has a RISC-like micro-op core, it has branch prediction, it has a bunch of zany mathematical optimizations. None of these are new per se, but this is the first time you're really seeing them all at once on a chip that was going into PCs.
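Branch prediction, one of the tricks listed above, can be illustrated with the classic textbook 2-bit saturating counter. This is a generic sketch, not P6's actual predictor, which used a more elaborate history-based scheme. `TwoBitPredictor`, the 16-entry table, and the sample loop pattern are all assumptions for illustration:

```python
class TwoBitPredictor:
    """Table of 2-bit saturating counters indexed by low bits of the branch address.

    Counter values 0-1 predict "not taken" and 2-3 predict "taken"; each outcome
    moves the counter one step, saturating at 0 and 3.
    """

    def __init__(self, index_bits: int = 4) -> None:
        self.mask = (1 << index_bits) - 1
        self.counters = [1] * (1 << index_bits)  # start "weakly not taken"

    def predict(self, pc: int) -> bool:
        return self.counters[pc & self.mask] >= 2

    def update(self, pc: int, taken: bool) -> None:
        i = pc & self.mask
        if taken:
            self.counters[i] = min(self.counters[i] + 1, 3)
        else:
            self.counters[i] = max(self.counters[i] - 1, 0)


def run(outcomes: list[bool], pc: int = 0x40) -> int:
    """Feed one branch's outcome history through the predictor; return hits."""
    predictor = TwoBitPredictor()
    hits = 0
    for taken in outcomes:
        if predictor.predict(pc) == taken:
            hits += 1
        predictor.update(pc, taken)
    return hits


if __name__ == "__main__":
    loop_branch = ([True] * 9 + [False]) * 3  # taken 9 times, then the loop exits
    print(f"{run(loop_branch)} / {len(loop_branch)} predicted correctly")  # prints 26 / 30 predicted correctly
```

Consider a loop branch that is taken nine times and then falls through, repeated three times. The sketch mispredicts the cold first iteration and each loop exit. The second bit is the point of the design: one surprise at loop exit only weakens the prediction instead of flipping it.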

Without these improvements it's possible Intel would have been beaten out by one of its competitors, maybe Power or SPARC or whatever you call the thing that runs on the Motorola 68k. Hell, even MIPS could have beaten the ageing cancerous mistake that was x86. But by discovering the power of lying to the computer, Intel managed to speed up x86 by implementing it on top of a sensible instruction set in the background. This allowed them to do all the same clever pipelining and optimization that was happening with RISC without having to give up their stranglehold on the desktop market. Without the P6 we live in a very, very different world from a computer hardware perspective.
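The "lying to the computer" trick works roughly like this. The front end breaks a complex x86 instruction, such as a read-modify-write ADD, into simple load, add, and store steps. An internal RISC-like engine can then pipeline and reorder those steps. The Python below is a toy that rests on several assumptions:

- only ADD is handled;
- operands are plain strings;
- `MicroOp`, `decode`, and the `tmp0` temporary are invented names.

Real P6 decoders are far more involved (flags, store handling, and so on).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MicroOp:
    op: str                # "load", "add" or "store"
    dst: str               # register, temporary, or memory address written
    srcs: tuple[str, ...]  # operands read


def _is_memory(operand: str) -> bool:
    return operand.startswith("[") and operand.endswith("]")


def decode(instruction: str) -> list[MicroOp]:
    """Split a toy x86-style ADD into RISC-like load/ALU/store micro-ops."""
    mnemonic, operands = instruction.split(maxsplit=1)
    if mnemonic.upper() != "ADD":
        raise ValueError("this toy decoder only understands ADD")
    dst, src = (part.strip() for part in operands.split(","))

    if _is_memory(dst) and _is_memory(src):
        raise ValueError("x86 has no memory-to-memory ADD")
    if _is_memory(dst):
        # Read-modify-write: load the old value, add, write it back.
        addr = dst[1:-1]
        return [
            MicroOp("load", "tmp0", (addr,)),
            MicroOp("add", "tmp0", ("tmp0", src)),
            MicroOp("store", addr, ("tmp0",)),
        ]
    if _is_memory(src):
        # Load-op: fetch the memory operand into a temporary, then add registers.
        return [
            MicroOp("load", "tmp0", (src[1:-1],)),
            MicroOp("add", dst, (dst, "tmp0")),
        ]
    # Register-register ADD is already a single simple operation.
    return [MicroOp("add", dst, (dst, src))]


if __name__ == "__main__":
    for uop in decode("ADD [counter], EAX"):
        print(uop)
```

Each resulting micro-op touches memory or the ALU, never both. That uniformity is what makes the RISC-style pipelining tricks applicable.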

From this come many of the bizarre speculative-execution bugs that plague modern computers, because when you're doing your optimization on the fly, in the chip, with a second, smaller Unix hidden inside your processor, eventually you're not going to be cryptographically secure.

RISC is very clearly better for most things. You can find papers stating this as far back as the 70's, when people start doing pipelining for the first time and are like, "you know, pipelining is a lot easier if you have a few small instructions instead of ten thousand massive ones."

x86 only persists to this day because Intel cemented their lead and they happened to use x86. True RISC cuts out the middleman of hyperoptimizing microcode on the chip, but if you can't do that because you've girlbossed too close to the sun as Intel had in the late 80's you have to do something.

The Future

This gets us to like the year 2000. I have more chips I find interesting or cool, although from here it's mostly microcontrollers, partly because things get pretty monotonous once Intel basically wins for a while. I might pick that up later. Also, if this post gets any longer it'll be annoying to scroll past. Here is a sample from a post that has been in my drafts since May:

I have some notes on the weirdo PowerPC stuff that shows up here. It's mostly interesting because of where it goes, not what it is. A lot of it ends up in games consoles. Some of it goes into mainframes. There is some of it in space. Really got around, PowerPC did.

237 notes


Text

Agilex 3 FPGAs: Next-Gen Edge-To-Cloud Technology At Altera


Today, Altera, an Intel company, launched a line of FPGA hardware, software, and development tools to expand the market and use cases for its programmable solutions. At its annual developers' conference, Altera unveiled new development kits and software support for its Agilex 5 FPGAs. It also shared fresh information on its next-generation, cost- and power-optimized Agilex 3 FPGA.


Why It Matters

Altera presents itself as an independent FPGA provider, offering full-stack solutions designed for next-generation communications infrastructure, intelligent edge applications, and high-performance accelerated computing systems. Its extensive FPGA range gives customers adaptable hardware that can quickly adjust to the shifting market demands of the intelligent-computing era. Altera says it is leading the industry in the use of FPGAs for AI inference workloads. It cites Agilex FPGAs equipped with AI Tensor Blocks, along with the Altera FPGA AI Suite, which speeds up FPGA development for AI inference using popular frameworks such as TensorFlow, PyTorch, and the OpenVINO toolkit together with tested FPGA development flows.


What Agilex 3 FPGAs Offer

Altera today revealed additional product details for its Agilex 3 FPGA, which is designed to satisfy the power, performance, and size needs of embedded and intelligent edge applications. With densities ranging from 25K to 135K logic elements, Agilex 3 FPGAs offer faster performance, improved security, and higher levels of integration in a smaller package than their predecessors.
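The "logic elements" counted above are built around lookup tables (LUTs). A k-input LUT stores one output bit for each of its 2^k input combinations, so "programming" the fabric largely means loading those bits. Below is a minimal Python sketch of a generic 4-input LUT. `make_lut` and `eval_lut` are hypothetical names. Altera's current families actually build their fabric from larger adaptive logic modules (ALMs), so treat this as the general idea rather than the Agilex 3 cell:

```python
from itertools import product
from typing import Callable


def make_lut(func: Callable[..., int], inputs: int = 4) -> int:
    """Pack func's truth table into an integer: bit i holds func(input pattern i)."""
    config = 0
    for index in range(1 << inputs):
        bits = [(index >> i) & 1 for i in range(inputs)]  # bit 0 is the first input
        if func(*bits):
            config |= 1 << index
    return config


def eval_lut(config: int, *bits: int) -> int:
    """Evaluate the LUT: the input bits just select one stored configuration bit."""
    index = sum(bit << i for i, bit in enumerate(bits))
    return (config >> index) & 1


def example(a: int, b: int, c: int, d: int) -> int:
    """An arbitrary 4-input function to 'program' into the LUT."""
    return (a & b) ^ (c | d)


if __name__ == "__main__":
    lut = make_lut(example)
    # The 16-bit configuration word reproduces the function for every input pattern.
    assert all(eval_lut(lut, *bits) == example(*bits) for bits in product((0, 1), repeat=4))
    print(f"configuration word: {lut:#06x}")
```

Any 4-input function fits in the same 16 bits. That is why a LUT-based fabric can be reconfigured without changing the silicon.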

The FPGA family features an on-chip dual Arm Cortex-A55 hard processor subsystem alongside programmable fabric enhanced with artificial intelligence capabilities. For intelligent edge applications, the FPGA enables real-time computation in time-sensitive uses such as the industrial Internet of Things (IoT) and driverless cars. In smart factory automation technologies, including robotics and machine vision, Agilex 3 FPGAs provide smooth integration of sensors, drivers, actuators, and machine learning algorithms.

Agilex 3 FPGAs provide several major security advancements over the previous generation, such as bitstream encryption, authentication, and physical anti-tamper detection, to meet the needs of both defense and commercial projects. These capabilities are intended to ensure dependable, secure performance for critical applications in industrial automation and other fields.

By using Altera's HyperFlex architecture, Agilex 3 FPGAs deliver a 1.9× performance boost over the previous generation. Extending the HyperFlex design to Agilex 3 allows high clock frequencies in an FPGA optimized for both cost and power. Added support for LPDDR4X memory and integrated high-speed transceivers capable of up to 12.5 Gbps enable increased system performance.

Agilex 3 FPGA software support is scheduled to begin in Q1 2025, with development kits and production shipments following in the middle of the year.

How FPGA Software Tools Speed Market Entry

Quartus Prime Pro

Another way FPGA software tools speed market entry is through the latest features of Altera's Quartus Prime Pro software. The software offers developers industry-leading compilation times, enhanced designer productivity, and faster time-to-market. The upcoming Quartus Prime Pro 24.3 release adds enhanced support for embedded applications and access to additional Agilex devices.

With this forthcoming release, customers can design for the Agilex 5 FPGA D-series, which targets an even wider range of use cases than the Agilex 5 FPGA E-series. The E-series devices are optimized for efficient computing in edge applications. To help lower entry barriers for its mid-range FPGA family, Altera provides software support for the Agilex 5 FPGA E-series through a free license in the Quartus Prime software.

This software release also supports embedded applications that use one of two processor options: Altera's RISC-V solution (the Nios V soft-core processor, which can be instantiated in the FPGA fabric) or an integrated hard processor subsystem. Agilex 5 FPGA design examples highlighting Nios V features such as lockstep, full ECC, and branch prediction are now available to customers. The latest versions of Linux, VxWorks, and Zephyr add new OS and RTOS support for the hard processor subsystem in Agilex 5 SoC FPGAs.

How to Begin for Developers

To help developers get started, Altera already offers an extensive range of Agilex 5 and Agilex 7 FPGA-based solutions. In addition, Altera and its ecosystem partners announced 11 additional Agilex 5 FPGA-based development kits and system-on-modules (SoMs).

With FPGA development kits, developers can easily and affordably access Altera hardware, gain firsthand knowledge of the features and advantages Agilex FPGAs offer, and move quickly to full-volume production.

Kits are available for a wide range of use cases and in all regions. To find out how to buy, go to Altera's Partner Showcase website.

Read more on govindhtech.com

#Agilex3FPGA#NextGen#CloudTechnology#TensorFlow#Agilex5FPGA#OpenVINO#IntelAgilex3#artificialintelligence#InternetThings#IoT#FPGA#LPDDR4XMemory#Agilex5FPGAEseries#technology#Agilex7FPGAs#QuartusPrimePro#technews#news#govindhtech

2 notes


Text

FinFET Technology Market Size, Share, Trends, Demand, Industry Growth and Competitive Outlook

The FinFET Technology Market survey report analyses general market conditions such as product price, profit, capacity, production, supply, demand, and market growth rate, helping businesses decide on their strategies. Large sample sizes were used for data collection, so the report suits the needs of small, medium, and large businesses alike. The report explains the moves of top market players and brands, covering developments, product launches, acquisitions, mergers, joint ventures, trending innovations, and business policies.

The large-scale FinFET Technology Market report takes into account market type, organization volume, on-premises accessibility, end-users' organization type, and availability at a global level. Regional coverage spans North America, South America, Europe, Asia-Pacific, and the Middle East and Africa. A highly skilled team invested considerable time in market research and analysis to produce this report. The report aims to help businesses achieve long-lasting success through better decision making, revenue generation, prioritized market goals, and profitable business.

FinFET Technology Market, By Technology (3nm, 5nm, 7nm, 10nm, 14nm, 16nm, 20nm, 22nm), Application (Central Processing Unit (CPU), System-On-Chip (SoC), Field-Programmable Gate Array (FPGA), Graphics Processing Unit (GPU), Network Processor), End User (Mobile, Cloud Server/High-End Networks, IoT/Consumer Electronics, Automotive, Others), Type (Shorted Gate (S.G.), Independent Gate (I.G.), Bulk FinFETs, SOI FinFETs) – Industry Trends and Forecast to 2029.

Access Full 350 Pages PDF Report @

https://www.databridgemarketresearch.com/reports/global-finfet-technology-market

Key Coverage in the FinFET Technology Market Report:

Detailed analysis of FinFET Technology Market by a thorough assessment of the technology, product type, application, and other key segments of the report

Qualitative and quantitative analysis of the market along with CAGR calculation for the forecast period

Investigative study of the market dynamics including drivers, opportunities, restraints, and limitations that can influence the market growth

Comprehensive analysis of the regions of the FinFET Technology industry and their futuristic growth outlook

Competitive landscape benchmarking with key coverage of company profiles, product portfolio, and business expansion strategies

Table of Contents:

Part 01: Executive Summary

Part 02: Scope of the Report

Part 03: Global FinFET Technology Market Landscape

Part 04: Global FinFET Technology Market Sizing

Part 05: Global FinFET Technology Market Segmentation by Product

Part 06: Five Forces Analysis

Part 07: Customer Landscape

Part 08: Geographic Landscape

Part 09: Decision Framework

Part 10: Drivers and Challenges

Part 11: Market Trends

Part 12: Vendor Landscape

Part 13: Vendor Analysis

Some of the major players operating in the FinFET technology market are:

SAP (Germany)

BluJay Solutions (U.K.)

ANSYS, Inc. (U.S.)

Keysight Technologies, Inc. (U.S.)

Analog Devices, Inc. (U.S.)

Infineon Technologies AG (Germany)

NXP Semiconductors (Netherlands)

Renesas Electronics Corporation (Japan)

Robert Bosch GmbH (Germany)

ROHM CO., LTD (Japan)

Semiconductor Components Industries, LLC (U.S.)

Texas Instruments Incorporated (U.S.)

TOSHIBA CORPORATION (Japan)

Browse Trending Reports:

Facility Management Market Size, Share, Trends, Growth and Competitive Outlook https://www.databridgemarketresearch.com/reports/global-facility-management-market

Supply Chain Analytics Market Size, Share, Trends, Global Demand, Growth and Opportunity Analysis https://www.databridgemarketresearch.com/reports/global-supply-chain-analytics-market

Industry 4.0 Market Size, Share, Trends, Opportunities, Key Drivers and Growth Prospectus https://www.databridgemarketresearch.com/reports/global-industry-4-0-market

Digital Banking Market Size, Share, Trends, Industry Growth and Competitive Analysis https://www.databridgemarketresearch.com/reports/global-digital-banking-market

Massive Open Online Courses (MOOCS) Market Size, Share, Trends, Growth Opportunities and Competitive Outlook https://www.databridgemarketresearch.com/reports/global-mooc-market

About Data Bridge Market Research:

Data Bridge has established itself as an unconventional and neoteric market research and consulting firm with an unparalleled level of resilience and integrated approaches. We are determined to unearth the best market opportunities and provide the information your business needs to thrive in the market. Data Bridge endeavors to provide appropriate solutions to complex business challenges and to make decision-making effortless.

Contact Us:

Data Bridge Market Research

US: +1 888 387 2818

UK: +44 208 089 1725

Hong Kong: +852 8192 7475

Email: [email protected]

#FinFET Technology Market Size#Share#Trends#Demand#Industry Growth and Competitive Outlook#market report#market share#market size#marketresearch#market trends#market analysis#markettrends#marketreport#market research

1 note


Text

Explore the Future of Chip Design with Top Training in RTL and FPGA

In today’s fast-paced digital world, the demand for innovative and energy-efficient electronic devices is ever-growing. At the core of these devices lies the complex work of chip design, which plays a vital role in enabling technologies such as smartphones, wearables, automotive systems, and AI hardware. As industries shift towards automation and digital transformation, VLSI (Very Large Scale Integration) has become one of the most important domains within electronics and electrical engineering. This rapid growth has created a strong demand for professionals who possess specialized knowledge and hands-on experience in chip design and verification.

Understanding RTL Design and Its Relevance

Register Transfer Level (RTL) design is a foundational step in digital circuit design that defines how data moves between registers and how logic operations are performed. It involves creating functional models of digital systems using hardware description languages such as Verilog or VHDL. RTL design courses have become sharply more popular because they offer learners deep insight into the real-world design practices and methodologies used in the semiconductor industry. These courses not only enhance theoretical understanding but also provide practical exposure that aligns closely with the requirements of top VLSI companies. Mastering RTL design is essential for anyone aiming to work in roles such as front-end design engineer or verification engineer.
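The register-transfer idea can be shown without any HDL. Each clock cycle, combinational logic computes the next value from the current register contents and inputs, and the register loads that value on the clock edge. Below is a Python sketch of a 4-bit counter with enable and synchronous reset. The names are hypothetical. In real RTL this would be written in Verilog or VHDL, as described above; for example, the register update would live in a Verilog `always @(posedge clk)` block.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CounterRegs:
    count: int = 0  # the design's only register, 4 bits wide


def next_state(regs: CounterRegs, enable: bool, reset: bool) -> CounterRegs:
    """Combinational logic: the value the register will load on the next clock edge."""
    if reset:
        return CounterRegs(0)  # synchronous reset wins over enable
    if enable:
        return CounterRegs((regs.count + 1) & 0xF)  # wrap around after 15
    return regs  # hold the current value


def simulate(inputs: list[tuple[bool, bool]]) -> list[int]:
    """Apply one (enable, reset) pair per clock cycle and record the register."""
    regs = CounterRegs()
    trace = []
    for enable, reset in inputs:
        regs = next_state(regs, enable, reset)  # rising edge: the register transfer
        trace.append(regs.count)
    return trace


if __name__ == "__main__":
    print(simulate([(True, False)] * 17))  # counts 1..15, wraps to 0, then 1
```

Keeping `next_state` free of side effects mirrors the RTL split between combinational logic and clocked registers. That separation is also what synthesis tools rely on.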

Industry-Oriented Learning: Why It Matters

The effectiveness of VLSI education is measured not just by theoretical knowledge but by the practical skills that can be applied to real-world projects. Courses that emphasize tool-based training, project work, and industry-specific case studies are more valuable for career growth. A curriculum that reflects the latest industry standards prepares learners to contribute meaningfully in actual job roles. Institutes that focus on hands-on learning with modern EDA tools ensure that learners are ready to meet the evolving needs of semiconductor companies from day one.

FPGA Design and Its Career Potential

Field-Programmable Gate Arrays (FPGAs) are integral to developing flexible, high-performance electronic systems. They are widely used in telecommunications, medical devices, automotive applications, and aerospace systems. Training in FPGA design allows learners to explore advanced logic implementation, synthesis, and real-time verification techniques. This is one reason FPGA design training institutes in Bangalore are seeing increasing enrolments. Bangalore, being India's leading tech hub, offers access to some of the best institutes that deliver quality education in this area. These institutes often maintain strong industry ties, allowing learners to benefit from exposure to live projects and internship opportunities.

Conclusion: Building a Strong Foundation in VLSI

To build a successful career in VLSI design, choosing the right training in both RTL and FPGA is essential. RTL design courses provide the conceptual and practical grounding needed for front-end roles. FPGA design training institutes in Bangalore offer a more specialized path that aligns with today's most dynamic electronic system applications. Among the leading institutes offering quality training is Takshila Institute of VLSI Technologies, known for its expert-led programs and real-world curriculum. With the right guidance and learning environment, aspiring engineers can turn their technical passion into a high-growth career in the semiconductor industry.

0 notes

Text

Unlocking the Future of Chip Design: The Rise of VLSI Skill Development

In the fast-evolving electronics and semiconductor industry, the demand for skilled professionals in VLSI (Very-Large-Scale Integration) design continues to surge. As technology becomes smaller, faster, and more efficient, the need for experts who can design and implement complex integrated circuits is at an all-time high. The global push toward automation, AI, and IoT has further increased the relevance of VLSI, making it a highly promising career path for engineering graduates and working professionals alike. Learning the right tools and techniques is essential, and structured training can make all the difference.

The Role of ASIC Design Training in Career Advancement

One of the key areas in VLSI is ASIC (Application-Specific Integrated Circuit) design. Specialized training in ASIC design enables learners to understand how to create chips tailored to specific applications, such as mobile devices, automotive electronics, and industrial automation. With this focus, learners can gain hands-on knowledge of the complete chip design flow, from RTL design to verification and physical implementation. Several ASIC design training institutes in Hyderabad offer practical, job-oriented courses. These help individuals build strong foundations and pursue roles such as front-end design engineer or verification specialist. Hyderabad has emerged as a major training hub due to its proximity to leading semiconductor companies.

Benefits of Structured VLSI Training Programs

Comprehensive training in VLSI tools and languages provides not only theoretical insights but also real-world project experience. These programs are often designed by industry experts and updated to match current trends. Candidates gain exposure to industry-standard tools like Cadence, Synopsys, and Mentor Graphics. Instructors with real-time experience guide students through industry-relevant challenges, bridging the gap between academic learning and corporate requirements. This makes candidates job-ready and increases their chances of placement in top VLSI companies.

Why Verilog Is Still in Demand for VLSI Learning

Verilog remains one of the most widely used hardware description languages in the VLSI field. Whether it's for ASIC or FPGA design, proficiency in Verilog is essential. As a result, aspirants often look for training that combines fundamental theory with real-time simulations and debugging experience. In recent years, Verilog training institutes in Bangalore have gained popularity for offering focused and advanced courses that cater to this need. Bangalore, known as the Silicon Valley of India, provides access to an active semiconductor ecosystem where learners can apply their skills in practical settings and increase job opportunities.

Conclusion: Choosing the Right Path to a Successful VLSI Career

VLSI design continues to be a highly promising field for those seeking technical careers in electronics and chip design. From the fundamentals of Verilog to the complexities of ASIC development, structured training plays a vital role in building the required expertise. For individuals aiming to excel in VLSI, both ASIC design training institutes in Hyderabad and Verilog training institutes in Bangalore offer strong foundations and industry-relevant education. In the middle of this training revolution, Takshila Institute of VLSI Technologies stands out by offering high-quality programs tailored to the needs of aspiring VLSI professionals. Making an informed choice about the right training institute can open doors to exciting and long-term opportunities in the semiconductor industry.

0 notes

Text

Build Your Chip-Level Career: Best Online Avenues for VLSI Mastery

With the rise of advanced electronics and embedded systems, VLSI (Very Large Scale Integration) design has become the cornerstone of modern semiconductor and electronic product development. From smartphones to automotive systems, every high-performance device relies on VLSI technology. As the demand for highly skilled professionals in this field surges, learners are turning toward digital platforms to gain industry-specific knowledge. The convenience, flexibility, and access to expert training have made online learning a popular choice for aspiring engineers and working professionals alike.

Why Online Learning Is Ideal for VLSI Aspirants

Learning VLSI requires in-depth understanding of complex concepts such as CMOS design, digital system architecture, RTL coding, and verification techniques. Online learning platforms provide structured modules, live project work, and real-time expert sessions, making them suitable for both beginners and experienced professionals. Many platforms are also industry-aligned, helping learners bridge the gap between theoretical concepts and practical application. Online VLSI training allows individuals to upgrade their skills without relocating or disrupting their work schedules, making it a strategic choice for career growth.

Hyderabad as a Hub for VLSI Education

Hyderabad has established itself as a technology and innovation hub in India. With the presence of major semiconductor companies and a thriving IT ecosystem, the city offers excellent learning and career opportunities in VLSI design. Many professionals seek training here due to the strong industry connections and availability of quality education resources. The presence of specialized training centers and placement support further adds to its appeal.

Features That Define Effective VLSI Training

Effective VLSI training includes hands-on projects, tool-based learning, and industry-driven curriculum. Reputed institutes provide exposure to EDA tools, ASIC and FPGA design processes, as well as real-time debugging and validation methods. Mentorship from industry experts and resume-building support are added advantages. These features ensure that learners are not only technically sound but also job-ready.

Choosing the Right Online Institute in Hyderabad

For those looking to enroll in online VLSI training institutes in Hyderabad, it is important to consider factors such as faculty expertise, course content, placement records, and student reviews. A strong placement network and mentorship programs can make a significant difference in career outcomes. With numerous options available, it becomes crucial to select an institute that not only delivers strong theoretical grounding but also aligns its training with industry needs.

Conclusion: The Smart Choice for a VLSI Career

In conclusion, the demand for skilled VLSI professionals continues to grow, and pursuing knowledge through digital platforms offers unmatched convenience and accessibility. Whether one is a fresh graduate or a working professional aiming to switch domains, well-structured online VLSI training can provide the necessary tools and confidence. Among the online VLSI training institutes in Hyderabad, Takshila Institute of VLSI Technologies stands out for its comprehensive curriculum, real-time project exposure, and placement assistance. With the right institute and a focused approach, success in the VLSI domain is well within reach.

Development Board and Kits Market 2025-2032

MARKET INSIGHTS

The global Development Board and Kits Market size was valued at US$ 3,780 million in 2024 and is projected to reach US$ 7,230 million by 2032, at a CAGR of 9.69% during the forecast period 2025-2032. The U.S. market accounted for 35% of global revenue in 2024, while China's market is projected to grow at an estimated CAGR of 9.2% through 2032.
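The stated growth rate can be cross-checked against the two endpoint values with the standard CAGR formula. The following is a minimal Python sketch, assuming annual compounding; the `cagr` helper is illustrative and not taken from the report. The result depends on how many compounding periods are counted: the quoted 9.69% is close to the seven-period figure for 2025-2032 (about 9.7%), while compounding over eight years from the 2024 base gives about 8.4%.

```python
def cagr(start: float, end: float, periods: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / periods) - 1."""
    if start <= 0 or periods <= 0:
        raise ValueError("start value and period count must be positive")
    return (end / start) ** (1.0 / periods) - 1.0


# Report figures in US$ million: 2024 base value and 2032 projection.
START_2024 = 3_780.0
END_2032 = 7_230.0

# Counting from the 2024 base year gives 8 compounding steps to 2032;
# counting only the forecast years 2025-2032 gives 7 steps.
for label, periods in (("2024 base, 8 periods", 8), ("2025-2032, 7 periods", 7)):
    print(f"{label}: {cagr(START_2024, END_2032, periods):.2%}")
```

Because the period count changes the answer by more than a full percentage point, it is worth confirming which convention a report uses before comparing its CAGR with figures from other sources.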

Development boards and kits are essential tools for prototyping and testing electronic systems across various industries. These platforms typically include microcontroller units (MCUs), field-programmable gate arrays (FPGAs), or digital signal processors (DSPs) along with necessary peripherals and software tools. Key product categories include MCU development boards, which currently dominate the market with over 40% revenue share, followed by FPGA and ARM-based solutions. The growing complexity of embedded systems and rising demand for IoT applications are driving innovation in this sector.

Market expansion is being fueled by increasing R&D investments in automotive electronics, where development boards enable advanced driver-assistance systems (ADAS) testing. The consumer electronics segment, particularly smart home devices, is another major growth driver with a projected 8.5% CAGR. Leading manufacturers like STMicroelectronics and Texas Instruments are expanding their development kit portfolios to support emerging technologies such as machine learning at the edge. In March 2024, Raspberry Pi launched its first FPGA development board targeting industrial automation, signaling the market’s shift toward more specialized solutions.

Key Industry Players

Innovation and Strategic Partnerships Drive Market Leadership

The global development board and kits market features a dynamic competitive landscape dominated by semiconductor giants and emerging innovators. STMicroelectronics and Texas Instruments currently lead the market, holding a combined revenue share of over 25% in 2024. Their dominance stems from extensive microcontroller (MCU) portfolios and strong partnerships across automotive and IoT sectors, which collectively account for nearly 45% of development board applications.

Meanwhile, Raspberry Pi maintains significant influence in the education and maker communities through its cost-effective single-board computers, with shipments exceeding 5 million units annually. The company’s open-source approach has enabled widespread adoption among DIY enthusiasts and prototyping professionals alike.

What's particularly notable is how NXP Semiconductors and Infineon Technologies are gaining traction through specialized solutions for Industry 4.0 applications. Their focus on secure connectivity and edge computing capabilities positions them favorably as demand for industrial automation grows at a projected 12% CAGR through 2030.

New entrants like Espressif Systems are disrupting the market with competitively priced Wi-Fi/Bluetooth development kits, capturing nearly 8% of the IoT segment. Established players are responding through acquisitions, a trend exemplified by Analog Devices' 2021 acquisition of Maxim Integrated, which strengthened its analog portfolio.

List of Key Development Board and Kits Manufacturers

STMicroelectronics (Switzerland)

Texas Instruments (U.S.)

NXP Semiconductors (Netherlands)

Infineon Technologies (Germany)

Renesas Electronics (Japan)

Analog Devices (U.S.)

Raspberry Pi (U.K.)

Intel Corporation (U.S.)

Silicon Labs (U.S.)

Microchip Technology (U.S.)

Espressif Systems (China)

Nordic Semiconductor (Norway)

Segment Analysis:

By Type

MCU Development Boards Lead the Market Owing to Widespread Adoption in IoT and Embedded Systems

The market is segmented based on type into:

MCU Development Board

CPLD/FPGA Development Board

DSP Development Board

ARM Development Board

MIPS Development Board

PPC Development Board

By Application

IoT Segment Emerges as Dominant Due to Rapid Technological Advancements in Connected Devices

The market is segmented based on application into:

3C Products

Automotive

IoT

Medical Equipment

Defense & Aerospace

Other

By Architecture

ARM-based Boards Gain Prominence Across Multiple Industries for Their Power Efficiency

The market is segmented based on architecture into:

8-bit

16-bit

32-bit

64-bit

By Connectivity

Wireless Connectivity Solutions Drive Market Growth With Expanding IoT Ecosystem

The market is segmented based on connectivity into:

Wired

Wireless

FREQUENTLY ASKED QUESTIONS:

What is the current market size of Global Development Board and Kits Market?

-> Development Board and Kits Market size was valued at US$ 3,780 million in 2024 and is projected to reach US$ 7,230 million by 2032, at a CAGR of 9.69% during the forecast period 2025-2032.

Which key companies dominate this market?

-> Top players include STMicroelectronics, Texas Instruments, Raspberry Pi, NXP Semiconductors, and Infineon Technologies, collectively holding 45% market share.

What are the primary growth drivers?

-> Key drivers include IoT proliferation (26% of applications), maker movement expansion, and increasing adoption in educational institutions.

Which product segment leads the market?

-> MCU development boards dominate with 38% share, while FPGA boards show fastest growth at 15.8% CAGR.

What are the emerging technology trends?

-> Emerging trends include AI-enabled boards, RISC-V architecture adoption, and cloud-integrated development kits.

About Semiconductor Insight:

Established in 2016, Semiconductor Insight specializes in providing comprehensive semiconductor industry research and analysis to support businesses in making well-informed decisions within this dynamic and fast-paced sector. From the beginning, we have been committed to delivering in-depth semiconductor market research, identifying key trends, opportunities, and challenges shaping the global semiconductor industry.

CONTACT US:

City Vista, 203A, Fountain Road, Ashoka Nagar, Kharadi, Pune, Maharashtra 411014

[+91 8087992013]

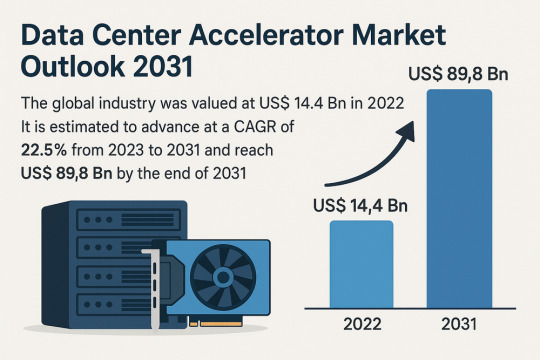

Data Center Accelerator Market Set to Transform AI Infrastructure Landscape by 2031

The global data center accelerator market is poised for exponential growth, projected to rise from USD 14.4 Bn in 2022 to a staggering USD 89.8 Bn by 2031, advancing at a CAGR of 22.5% during the forecast period from 2023 to 2031. Rapid adoption of Artificial Intelligence (AI), Machine Learning (ML), and High-Performance Computing (HPC) is the primary catalyst driving this expansion.

Market Overview: Data center accelerators are specialized hardware components that improve computing performance by efficiently handling intensive workloads. These include Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), Field Programmable Gate Arrays (FPGAs), and Application-Specific Integrated Circuits (ASICs), which complement CPUs by expediting data processing.

Accelerators enable data centers to process massive datasets more efficiently, reduce reliance on servers, and optimize costs, a significant advantage in a data-driven world.

Market Drivers & Trends

Rising Demand for High-performance Computing (HPC): The proliferation of data-intensive applications across industries such as healthcare, autonomous driving, financial modeling, and weather forecasting is fueling demand for robust computing resources.

Boom in AI and ML Technologies: The computational requirements of AI and ML are driving the need for accelerators that can handle parallel operations and manage extensive datasets efficiently.

Cloud Computing Expansion: Major players like AWS, Azure, and Google Cloud are investing in infrastructure that leverages accelerators to deliver faster AI-as-a-service platforms.

Latest Market Trends

GPU Dominance: GPUs continue to dominate the market, especially in AI training and inference workloads, due to their capability to handle parallel computations.

Custom Chip Development: Tech giants are increasingly developing custom chips (e.g., Meta’s MTIA and Google's TPUs) tailored to their specific AI processing needs.

Energy Efficiency Focus: Companies are prioritizing the design of accelerators that deliver high computational power with reduced energy consumption, aligning with green data center initiatives.

Key Players and Industry Leaders

Prominent companies shaping the data center accelerator landscape include:

NVIDIA Corporation – A global leader in GPUs powering AI, gaming, and cloud computing.

Intel Corporation – Investing heavily in FPGA and ASIC-based accelerators.

Advanced Micro Devices (AMD) – Recently expanded its EPYC CPU lineup for data centers.

Meta Platforms, Inc. – Introduced the Meta Training and Inference Accelerator (MTIA) chip for internal AI applications.

Google (Alphabet Inc.) – Continues deploying TPUs across its cloud platforms.

Other notable players include Huawei Technologies, Cisco Systems, Dell Inc., Fujitsu, Enflame Technology, Graphcore, and SambaNova Systems.

Recent Developments

March 2023 – NVIDIA introduced a comprehensive Data Center Platform strategy at GTC 2023 to address diverse computational requirements.

June 2023 – AMD launched new EPYC CPUs designed to complement GPU-powered accelerator frameworks.

2023 – Meta revealed the MTIA chip to improve performance for internal AI workloads.

2023 – Intel announced a four-year roadmap for data center innovation focused on Infrastructure Processing Units (IPUs).

Market Opportunities

Edge Data Center Integration: As computing shifts closer to the edge, opportunities arise for compact and energy-efficient accelerators in edge data centers for real-time analytics and decision-making.

AI in Healthcare and Automotive: As AI adoption grows in precision medicine and autonomous vehicles, demand for accelerators tuned for domain-specific processing will soar.

Emerging Markets: Rising digitization in emerging economies presents substantial opportunities for data center expansion and accelerator deployment.

Future Outlook

With AI, ML, and analytics forming the foundation of next-generation applications, the demand for enhanced computational capabilities will continue to climb. By 2031, the data center accelerator market will likely transform into a foundational element of global IT infrastructure.

Analysts anticipate increasing collaboration between hardware manufacturers and AI software developers to optimize performance across the board. As digital transformation accelerates, companies investing in custom accelerator architectures will gain significant competitive advantages.

Market Segmentation

By Type:

Central Processing Unit (CPU)

Graphics Processing Unit (GPU)

Application-Specific Integrated Circuit (ASIC)

Field-Programmable Gate Array (FPGA)

Others

By Application:

Advanced Data Analytics

AI/ML Training and Inference

Computing

Security and Encryption

Network Functions

Others

Regional Insights

Asia Pacific dominates the global market due to explosive digital content consumption and rapid infrastructure development in countries such as China, India, Japan, and South Korea.

North America holds a significant share due to the presence of major cloud providers, AI startups, and heavy investment in advanced infrastructure. The U.S. remains a critical hub for data center deployment and innovation.

Europe is steadily adopting AI and cloud computing technologies, contributing to increased demand for accelerators in enterprise data centers.

Why Buy This Report?

Comprehensive insights into market drivers, restraints, trends, and opportunities

In-depth analysis of the competitive landscape

Region-wise segmentation with revenue forecasts

Includes strategic developments and key product innovations

Covers historical data from 2017 and forecasts through 2031

Delivered in convenient PDF and Excel formats

Frequently Asked Questions (FAQs)

1. What was the size of the global data center accelerator market in 2022? The market was valued at US$ 14.4 Bn in 2022.

2. What is the projected market value by 2031? It is projected to reach US$ 89.8 Bn by the end of 2031.

3. What is the key factor driving market growth? The surge in demand for AI/ML processing and high-performance computing is the major driver.

4. Which region holds the largest market share? Asia Pacific is expected to dominate the global data center accelerator market from 2023 to 2031.

5. Who are the leading companies in the market? Top players include NVIDIA, Intel, AMD, Meta, Google, Huawei, Dell, and Cisco.

6. What type of accelerator dominates the market? GPUs currently dominate the market due to their parallel processing efficiency and widespread adoption in AI/ML applications.

7. What applications are fueling growth? Applications like AI/ML training, advanced analytics, and network security are major contributors to the market's growth.

About Transparency Market Research

Transparency Market Research, a global market research company registered in Wilmington, Delaware, United States, provides custom research and consulting services. Our exclusive blend of quantitative forecasting and trends analysis provides forward-looking insights for thousands of decision makers. Our experienced team of analysts, researchers, and consultants uses proprietary data sources and various tools and techniques to gather and analyze information. Our data repository is continuously updated and revised by a team of research experts, so that it always reflects the latest trends and information. With a broad research and analysis capability, Transparency Market Research employs rigorous primary and secondary research techniques in developing distinctive data sets and research material for business reports.

Contact: Transparency Market Research Inc., CORPORATE HEADQUARTER DOWNTOWN, 1000 N. West Street, Suite 1200, Wilmington, Delaware 19801 USA

Tel: +1-518-618-1030

USA - Canada Toll Free: 866-552-3453

Website: https://www.transparencymarketresearch.com

FPGA Companies - Advanced Micro Devices (Xilinx, Inc.) (US) and Intel Corporation (US) are the Key Players

The FPGA market is projected to grow from USD 12.1 billion in 2024 to USD 25.8 billion by 2029, at a CAGR of 16.4% from 2024 to 2029.
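To see what a constant 16.4% CAGR implies for the intermediate years, here is a minimal Python sketch that assumes smooth annual compounding, which real market data will not follow; the `project` helper is hypothetical. Five compounding steps from USD 12.1 billion end at roughly USD 25.9 billion, in line with the quoted USD 25.8 billion once rounding of the 16.4% rate is taken into account.

```python
def project(start: float, rate: float, first_year: int, last_year: int) -> dict[int, float]:
    """Compound `start` forward at a constant annual `rate`, one step per year."""
    values = {first_year: start}
    for year in range(first_year + 1, last_year + 1):
        # Each year's value is the previous year's value grown by `rate`.
        values[year] = values[year - 1] * (1.0 + rate)
    return values


# Report figures: USD 12.1 billion in 2024, growing at 16.4% a year to 2029.
path = project(12.1, 0.164, 2024, 2029)
for year, value in path.items():
    print(f"{year}: USD {value:.1f} billion")
```

A year-by-year path like this is only an illustration of the compounding; it says nothing about how the report's own annual estimates are distributed.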

The growth of the FPGA market is driven by the rising adoption of Artificial Intelligence (AI) and Internet of Things (IoT) technologies across applications and by the integration of FPGAs into advanced driver assistance systems (ADAS).

Major FPGA companies include:

· Advanced Micro Devices (Xilinx, Inc.) (US),

· Intel Corporation (US),

· Microchip Technology Inc. (US),

· Lattice Semiconductor Corporation (US), and

· Achronix Semiconductor Corporation (US).

Major strategies adopted by players in the FPGA market ecosystem to strengthen their product portfolios, grow their market share, and expand their presence include acquisitions, collaborations, partnerships, and new product launches.

For instance, in October 2023, Achronix Semiconductor Corporation announced a partnership with Myrtle.ai, introducing an accelerated automatic speech recognition (ASR) solution powered by the Speedster7t FPGA. This innovation enables the conversion of spoken language into text in over 1,000 real-time streams, delivering exceptional accuracy and response times, all while outperforming competitors by up to 20 times.

In May 2023, Intel Corporation introduced Agilex 7 FPGAs featuring the R-Tile chiplet. According to Intel, compared with rival FPGA solutions, Agilex 7 FPGAs equipped with R-Tile deliver twice the PCIe 5.0 bandwidth and four times the CXL bandwidth per port.

ADVANCED MICRO DEVICES, INC. (XILINX, INC., NOW PART OF AMD):

AMD offers products under four reportable segments: Data Center, Client, Gaming, and Embedded Segments. The Data Center segment offers CPUs, GPUs, FPGAs, DPUs, and adaptive SoC products for data centers. The portfolio of the Client segment consists of APUs, CPUs, and chipsets for desktop and notebook computers. The Gaming segment provides discrete GPUs, semi-custom SoC products, and development services. The Embedded segment offers embedded CPUs, GPUs, APUs, FPGAs, and Adaptive SoC devices. AMD offers its products to a wide range of industries, including aerospace & defense, architecture, engineering & construction, automotive, broadcast & professional audio/visual, government, consumer electronics, design & manufacturing, education, emulation & prototyping, healthcare & sciences, industrial & vision, media & entertainment, robotics, software & sciences, supercomputing & research, telecom & networking, test & measurement, and wired & wireless communications. AMD focuses on high-performance and adaptive computing technology, FPGAs, SoCs, and software.

INTEL CORPORATION:

Intel Corporation, based in the US, is one of the leading manufacturers of semiconductor chips and computing devices. The company's extensive product portfolio encompasses microprocessors, motherboard chipsets, network interface controllers, embedded processors, graphics chips, flash memory, and other computing and communications devices. Intel's strengths include a long-standing commitment to research and development, a vast manufacturing infrastructure, and a sustained focus on cutting-edge semiconductor technologies. For instance, in October 2023, Intel announced an expansion in Arizona, a significant milestone that underlined its commitment to meeting semiconductor demand, creating jobs, and advancing US technological leadership. This expansion of facilities and high-tech job opportunities reflects the company's strategic investment in innovation and growth.

A leading aerospace company experienced this challenge head-on while developing a wearable night vision camera designed for military operations. With strict requirements for size, weight, power consumption, and performance, the company required a trustworthy partner with specialized expertise. Voler Systems, well-known for its innovation in FPGA design, electronic design, wearables, and firmware, collaborated to bring this ambitious project to life.

Exploring the FinFET Technology Market: Growth Drivers, Demand Analysis & Future Outlook

Executive Summary

The global FinFET technology market was valued at USD 69.67 billion in 2023 and is projected to reach USD 1,079.25 billion by 2031, growing at a CAGR of 40.85% during the forecast period 2024 to 2031.

The data in the FinFET Technology Market report is presented in statistical form to give a clearer understanding of market dynamics. The report also estimates market size and the revenue generated from sales, and it analyzes historical data alongside the market's current performance. It provides a comprehensive background analysis of the industry, including an assessment of the parent market. The FinFET technology market is expected to show considerable growth during the forecast period.

The report identifies and analyzes emerging trends along with the major drivers, challenges, and opportunities in the market, and gives a systematic overview of how the market is affecting the industry. It examines the market's present and future potential from several perspectives. SWOT analysis and Porter's Five Forces analysis are the two main tools used to produce the report, which draws on in-house databases and on secondary and primary research performed by a team of industry experts.

FinFET Technology Market Overview

**Segments**

- By Technology Node: 10nm, 7nm, 5nm, 3nm
- By Product: Central Processing Unit (CPU), Field-Programmable Gate Array (FPGA), System-on-Chip (SoC), Network Processor, Graphics Processing Unit (GPU), Artificial Intelligence (AI)
- By End-User: Smartphones, Wearables, High-End Networks, Automotive, Industrial

The global FinFET technology market is segmented based on technology node, product, and end-user. The technology node segment includes 10nm, 7nm, 5nm, and 3nm nodes, with increasing demand for smaller nodes to achieve higher efficiency. In terms of products, the market includes Central Processing Units (CPUs), Field-Programmable Gate Arrays (FPGAs), System-on-Chips (SoCs), Network Processors, Graphics Processing Units (GPUs), and Artificial Intelligence (AI) products that utilize FinFET technology for improved performance. The end-user segment covers smartphones, wearables, high-end networks, automotive, and industrial sectors where FinFET technology is being increasingly adopted for enhanced capabilities.

**Market Players**

- Intel Corporation
- Samsung Electronics Co. Ltd.
- Taiwan Semiconductor Manufacturing Company Limited
- GLOBALFOUNDRIES
- Semiconductor Manufacturing International Corp.
- United Microelectronics Corporation
- NVIDIA Corporation
- Xilinx Inc.
- IBM Corporation

Key players in the global FinFET technology market include industry giants such as Intel Corporation, Samsung Electronics Co. Ltd., Taiwan Semiconductor Manufacturing Company Limited, GLOBALFOUNDRIES, Semiconductor Manufacturing International Corp., United Microelectronics Corporation, NVIDIA Corporation, Xilinx Inc., and IBM Corporation. These market players are heavily investing in research and development to enhance their FinFET technology offerings and maintain a competitive edge in the market.

The global FinFET technology market is witnessing significant growth driven by the increasing demand for advanced processors in smartphones, data centers, and emerging technologies such as artificial intelligence and Internet of Things (IoT). The shift towards smaller technology nodes like 7nm and 5nm is enabling higher performance and energy efficiency in electronic devices. The adoption of FinFET technology in a wide range of applications such as automotive, industrial, and high-end networks is further fueling market growth.

The Asia Pacific region dominates the global FinFET technology market, with countries like China, South Korea, and Taiwan being major hubs for semiconductor manufacturing. North America and Europe also play vital roles in the market, with key technological advancements and investments driving growth in these regions. Overall, the global FinFET technology market is poised for significant expansion in the coming years, driven by advancements in semiconductor technology and increasing demand for high-performance electronic devices.

The FinFET technology market is characterized by intense competition among key players striving to innovate and stay ahead in the rapidly evolving semiconductor industry. As technology nodes continue to shrink, companies are focusing on developing more efficient and powerful processors to meet the growing demands of various applications. Intel Corporation, a long-standing leader in the market, faces increasing competition from companies like Samsung Electronics, Taiwan Semiconductor Manufacturing, and GLOBALFOUNDRIES, all of which are investing heavily in R&D to drive technological advancements.

One key trend in the FinFET technology market is the rising importance of artificial intelligence (AI) applications across industries. AI-driven technologies require highly capable processors to handle complex computations, leading to a surge in demand for FinFET-based products such as GPUs and AI chips. Companies like NVIDIA and Xilinx are at the forefront of developing cutting-edge solutions tailored for AI workloads, positioning themselves as key players in the AI-driven segment of the FinFET market.

The increasing adoption of FinFET technology in smartphones and wearables is another significant driver of market growth. The demand for high-performance mobile devices with energy-efficient processors is propelling the development of advanced FinFET-based SoCs tailored for the mobile industry. As smartphones become more powerful and capable of handling complex tasks, the need for FinFET technology to deliver optimal performance while conserving power becomes paramount.

Moreover, the automotive industry represents a lucrative segment for FinFET technology, with the growing integration of electronic systems in modern vehicles. From advanced driver-assistance systems (ADAS) to in-vehicle infotainment systems, automotive manufacturers are leveraging FinFET technology to enhance the efficiency and performance of onboard electronics. This trend is expected to drive further innovation in automotive semiconductor solutions and create new opportunities for market players.

Overall, the global FinFET technology market is on a trajectory of steady growth, fueled by advancements in semiconductor technology and the increasing demand for high-performance computing solutions across various sectors. With key players continuously pushing the boundaries of innovation and expanding their product portfolios, the market is poised for further expansion in the coming years. As technology nodes continue to shrink and new applications emerge, the FinFET market is likely to see dynamic changes and evolving trends that will shape the future of the semiconductor industry.

The FinFET Technology Market is highly fragmented, with intense competition among global and regional players for market share.

The report covers the following topics:

Operating performance (sales, revenue, growth rate, and gross margin) of the major global manufacturers in the FinFET technology market

Market size (sales, revenue, and growth rate) in major countries (United States, Canada, Germany, France, UK, Italy, Russia, Spain, China, Japan, Korea, India, Australia, New Zealand, Southeast Asia, Middle East, Africa, Mexico, Brazil, C. America, Chile, Peru, Colombia)

Market share of each FinFET technology type and application, by revenue

Global FinFET technology market size (sales, revenue) forecast by region and country from 2022 to 2028

Upstream raw materials, manufacturing equipment, and industry chain analysis of the FinFET technology market

SWOT analysis of the FinFET technology market

New project investment feasibility analysis for the FinFET technology market

About Data Bridge Market Research:

An absolute way to forecast what the future holds is to comprehend the trend today!

Data Bridge Market Research presents itself as an unconventional, modern market research and consulting firm with an unparalleled level of resilience and an integrated approach. We are determined to uncover the best market opportunities and provide the information your business needs to thrive. Data Bridge aims to provide appropriate solutions to complex business challenges and to make decision-making effortless. The firm was founded in Pune in 2015, building on its team's expertise and experience.

Contact us: Data Bridge Market Research
US: +1 614 591 3140
UK: +44 845 154 9652
APAC: +653 1251 975


Smart NIC Market: Size, Emerging Trends, Competitive Landscape, and Forecast (2033)

The "Smart NIC Market" research report, 2025-2033, delivers a comprehensive analysis of the industry's growth trajectory, covering historical trends, current market conditions, and key metrics including production costs, market valuation, and growth rates. It analyzes Smart NIC market size, share, and growth by type (FPGA-based, Others) and by application (Data Center, Telecom, Others), with regional insights and forecasts to 2033. The trends it tracks are driving major changes, setting new standards, and shaping customer expectations, and they are expected to lead to significant market growth, at a projected CAGR of 14.1% from 2024 to 2033. The 91+ page report offers exclusive insights, key statistics, trends, and competitive analysis for this Information & Technology sector.

Smart NIC Market: Is it Worth Investing In? (2025-2033)

The Smart NIC Market size was valued at USD 788.89 million in 2024 and is expected to reach USD 2321.31 million by 2033, growing at a CAGR of 14.1% from 2025 to 2033.
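The quoted figures can be cross-checked with the standard compound annual growth rate formula, CAGR = (end value / start value)^(1/n) - 1, where n is the number of compounding years. A minimal Python sketch, assuming the USD 788.89 million 2024 value as the base; the function names are illustrative, not from the report.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years


# Figures quoted above, in USD million.
base_2024 = 788.89
target_2033 = 2321.31

rate_9y = implied_cagr(base_2024, target_2033, 9)  # 2024 -> 2033, nine steps
rate_8y = implied_cagr(base_2024, target_2033, 8)  # 2025 -> 2033, eight steps
print(f"Implied CAGR over 9 years: {rate_9y:.1%}")
print(f"Implied CAGR over 8 years: {rate_8y:.1%}")
print(f"2033 value at the quoted 14.1% over 9 years: {project(base_2024, 0.141, 9):,.0f}")
```

Compounding over nine years (2024 to 2033) implies roughly 12.7% a year; over eight years, about 14.4%.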

The Smart NIC market is expected to show strong growth between 2025 and 2033, building on positive performance in 2024 and strategic advances by key players.

The leading players in the Smart NIC market include:

Marvell Technology Group

AMD

NVIDIA

Intel

Napatech

Netronome

Request a Free Sample Copy @ https://www.marketgrowthreports.com/enquiry/request-sample/104074

Report Scope

This report offers a comprehensive analysis of the global Smart NIC market, providing insights into market size, estimates, and forecasts. Using sales volume (K units) and revenue (USD million) data, it covers the historical period from 2020 to 2025 and forecasts through 2033, with 2024 as the base year.

For granular market understanding, the report segments the market by product type, application, and player. Additionally, regional market sizes are provided, offering a detailed picture of the global Smart NIC landscape.

Gain valuable insights into the competitive landscape through detailed profiles of key players and their market ranks. The report also explores emerging technological trends and new product developments, keeping you at the forefront of industry advancements.

This research empowers Smart NIC manufacturers, new entrants, and related industry chain companies by providing critical information. Access detailed data on revenues, sales volume, and average price across various segments, including company, type, application, and region.


Understanding Smart NIC Product Types & Applications: Key Trends and Innovations in 2025

By Product Types:

FPGA-based

Others

By Application:

Data Center

Telecom

Others

Emerging Smart NIC Market Leaders: Where's the Growth in 2025?

North America (United States, Canada and Mexico)

Europe (Germany, UK, France, Italy, Russia and Turkey etc.)

Asia-Pacific (China, Japan, Korea, India, Australia, Indonesia, Thailand, Philippines, Malaysia and Vietnam)

South America (Brazil, Argentina, Colombia etc.)

Middle East and Africa (Saudi Arabia, UAE, Egypt, Nigeria and South Africa)

Inquire or share any questions before purchasing this report at https://www.marketgrowthreports.com/enquiry/request-sample/104074

This report offers a comprehensive analysis of the Smart NIC market, considering both the direct and indirect effects from related industries. We examine the pandemic's influence on the global and regional Smart NIC market landscape, including market size, trends, and growth projections. The analysis is further segmented by type, application, and consumer sector for a granular understanding.

Additionally, the report provides a pre- and post-pandemic assessment of key growth drivers and challenges within the Smart NIC industry. A PESTEL analysis is also included, evaluating political, economic, social, technological, environmental, and legal factors influencing the market.

We understand that your specific needs might require tailored data. Our research analysts can customize the report to focus on a particular region, application, or specific statistics. Furthermore, we continuously update our research, triangulating your data with our findings to provide a comprehensive and customized market analysis.

How Did COVID-19 Change the Market? An Impact and Recovery Analysis

This report examines the specific repercussions on the Smart NIC market. We tracked both the direct and cascading effects of the pandemic, examining how it reshaped market size, trends, and growth across international and regional landscapes. Segmented by type, application, and consumer sector, this analysis provides a comprehensive view of the market's evolution and incorporates a PESTEL analysis to identify key influencers and barriers. The report aims to provide actionable insights into the market's recovery trajectory in light of these broader shifts. The final report will add an analysis of the impact of the Russia-Ukraine war and COVID-19 on the Smart NIC industry.


Detailed TOC of Global Smart NIC Market Research Report, 2025-2033

1 Report Overview

1.1 Study Scope
1.2 Global Smart NIC Market Size Growth Rate by Type: 2020 VS 2024 VS 2033
1.3 Global Smart NIC Market Growth by Application: 2020 VS 2024 VS 2033
1.4 Study Objectives
1.5 Years Considered

2 Global Growth Trends

2.1 Global Smart NIC Market Perspective (2020-2033)
2.2 Smart NIC Growth Trends by Region
2.2.1 Global Smart NIC Market Size by Region: 2020 VS 2024 VS 2033
2.2.2 Smart NIC Historic Market Size by Region (2020-2025)
2.2.3 Smart NIC Forecasted Market Size by Region (2025-2033)
2.3 Smart NIC Market Dynamics
2.3.1 Smart NIC Industry Trends
2.3.2 Smart NIC Market Drivers
2.3.3 Smart NIC Market Challenges
2.3.4 Smart NIC Market Restraints

3 Competition Landscape by Key Players

3.1 Global Top Smart NIC Players by Revenue
3.1.1 Global Top Smart NIC Players by Revenue (2020-2025)
3.1.2 Global Smart NIC Revenue Market Share by Players (2020-2025)
3.2 Global Smart NIC Market Share by Company Type (Tier 1, Tier 2, and Tier 3)
3.3 Players Covered: Ranking by Smart NIC Revenue
3.4 Global Smart NIC Market Concentration Ratio
3.4.1 Global Smart NIC Market Concentration Ratio (CR5 and HHI)
3.4.2 Global Top 10 and Top 5 Companies by Smart NIC Revenue in 2024
3.5 Smart NIC Key Players' Head Offices and Areas Served
3.6 Key Players' Smart NIC Products, Solutions, and Services
3.7 Date of Entry into the Smart NIC Market
3.8 Mergers & Acquisitions, Expansion Plans

4 Smart NIC Breakdown Data by Type

4.1 Global Smart NIC Historic Market Size by Type (2020-2025)
4.2 Global Smart NIC Forecasted Market Size by Type (2025-2033)

5 Smart NIC Breakdown Data by Application

5.1 Global Smart NIC Historic Market Size by Application (2020-2025)
5.2 Global Smart NIC Forecasted Market Size by Application (2025-2033)

6 North America

6.1 North America Smart NIC Market Size (2020-2033)
6.2 North America Smart NIC Market Growth Rate by Country: 2020 VS 2024 VS 2033
6.3 North America Smart NIC Market Size by Country (2020-2025)
6.4 North America Smart NIC Market Size by Country (2025-2033)
6.5 United States
6.6 Canada

7 Europe

7.1 Europe Smart NIC Market Size (2020-2033)
7.2 Europe Smart NIC Market Growth Rate by Country: 2020 VS 2024 VS 2033
7.3 Europe Smart NIC Market Size by Country (2020-2025)
7.4 Europe Smart NIC Market Size by Country (2025-2033)
7.5 Germany
7.6 France
7.7 U.K.
7.8 Italy
7.9 Russia
7.10 Nordic Countries

8 Asia-Pacific

8.1 Asia-Pacific Smart NIC Market Size (2020-2033)
8.2 Asia-Pacific Smart NIC Market Growth Rate by Region: 2020 VS 2024 VS 2033
8.3 Asia-Pacific Smart NIC Market Size by Region (2020-2025)
8.4 Asia-Pacific Smart NIC Market Size by Region (2025-2033)
8.5 China
8.6 Japan
8.7 South Korea
8.8 Southeast Asia
8.9 India
8.10 Australia

9 Latin America

9.1 Latin America Smart NIC Market Size (2020-2033)
9.2 Latin America Smart NIC Market Growth Rate by Country: 2020 VS 2024 VS 2033
9.3 Latin America Smart NIC Market Size by Country (2020-2025)
9.4 Latin America Smart NIC Market Size by Country (2025-2033)
9.5 Mexico
9.6 Brazil

10 Middle East & Africa

10.1 Middle East & Africa Smart NIC Market Size (2020-2033)
10.2 Middle East & Africa Smart NIC Market Growth Rate by Country: 2020 VS 2024 VS 2033
10.3 Middle East & Africa Smart NIC Market Size by Country (2020-2025)
10.4 Middle East & Africa Smart NIC Market Size by Country (2025-2033)
10.5 Turkey
10.6 Saudi Arabia
10.7 UAE

11 Key Players Profiles

12 Analyst's Viewpoints/Conclusions

13 Appendix

13.1 Research Methodology
13.1.1 Methodology/Research Approach
13.1.2 Data Source
13.2 Disclaimer
13.3 Author Details


About Us: Market Growth Reports is a unique organization that offers expert analysis and accurate, data-based market intelligence, helping companies of all sizes make well-informed decisions. We tailor inventive solutions for our clients, helping them tackle challenges that may emerge over time and affect their businesses.


Torque Sensors: Enabling Smart Mechanical Systems – Engineered by Star EMBSYS


In today’s era of automation, robotics, and precision engineering, measuring torque—the rotational force applied to an object—is fundamental. From electric vehicles to industrial machinery, the ability to accurately monitor torque enables smarter control, better performance, and enhanced safety.

At Star EMBSYS, we specialize in developing and integrating torque sensor systems into embedded platforms for a wide range of industrial and research applications. Whether it's for real-time monitoring, automated testing, or predictive maintenance, torque sensing is at the heart of many high-performance systems.

What is a Torque Sensor?

A torque sensor, also known as a torque transducer or torque meter, is a device that measures the twisting force applied on a rotating or stationary shaft. The sensor converts this mechanical input into an electrical signal, which can then be read and processed by a controller, data logger, or embedded system.
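For a strain-gauge torque sensor, that electrical signal is commonly specified as a sensitivity in millivolts per volt of excitation at rated (full-scale) torque, so a controller can scale the bridge output linearly. A minimal Python sketch, assuming a linear sensor and a no-load offset captured at startup; the 50 N·m rating, 2 mV/V sensitivity, and 5 V excitation are illustrative values, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TorqueSensorSpec:
    """Hypothetical datasheet values for a linear strain-gauge torque sensor."""
    full_scale_nm: float          # rated torque, N·m
    sensitivity_mv_per_v: float   # bridge output at rated torque, mV per volt of excitation
    excitation_v: float           # bridge excitation voltage, V


def bridge_mv_to_torque(spec: TorqueSensorSpec, output_mv: float, zero_mv: float = 0.0) -> float:
    """Convert a bridge output in mV to torque in N·m after removing the no-load offset."""
    full_scale_mv = spec.sensitivity_mv_per_v * spec.excitation_v  # output at rated torque
    return (output_mv - zero_mv) / full_scale_mv * spec.full_scale_nm


# Illustrative values: 50 N·m sensor, 2 mV/V, 5 V excitation -> 10 mV at full scale.
spec = TorqueSensorSpec(full_scale_nm=50.0, sensitivity_mv_per_v=2.0, excitation_v=5.0)
torque = bridge_mv_to_torque(spec, output_mv=4.05, zero_mv=0.05)
print(f"{torque:.2f} N·m")  # 4.0 mV of a 10 mV span -> 40% of 50 N·m
```

A negative bridge output maps to negative torque, so the same function covers both twisting directions.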

Torque sensors come in two main types:

Rotary Torque Sensors – Measure torque in rotating systems.

Static (Reaction) Torque Sensors – Measure torque without shaft rotation.

How Torque Sensors Work

Most modern torque sensors operate based on:

Strain Gauge Technology: Detects minute changes in resistance as torque deforms a sensor element.

Magnetoelastic Sensing: Uses changes in magnetic properties under stress.

Optical or Capacitive Techniques: Employed in specialized high-precision applications.

At Star EMBSYS, we primarily use strain gauge-based sensors due to their balance of sensitivity, reliability, and cost-effectiveness.
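The strain-gauge principle can be made concrete with the Wheatstone bridge such sensors typically use: a gauge's fractional resistance change is ΔR/R = GF·ε (gauge factor times strain), and the bridge turns that into a small differential voltage. This Python sketch assumes ideal, matched 350 Ω arms and illustrative values (GF = 2, 500 microstrain, 5 V excitation); it compares one active gauge against a full bridge of four active gauges, two in tension and two in compression, as on a shaft under torsion.

```python
def bridge_output_ratio(r_top_left: float, r_bottom_left: float,
                        r_top_right: float, r_bottom_right: float) -> float:
    """Vout/Vex of a Wheatstone bridge: difference between the two divider midpoints."""
    left = r_bottom_left / (r_top_left + r_bottom_left)
    right = r_bottom_right / (r_top_right + r_bottom_right)
    return left - right


def quarter_bridge(gauge_factor: float, strain: float, r: float = 350.0) -> float:
    """One active gauge (bottom left); the other three arms are fixed resistors."""
    dr = r * gauge_factor * strain  # dR = R * GF * strain
    return bridge_output_ratio(r, r + dr, r, r)


def full_bridge(gauge_factor: float, strain: float, r: float = 350.0) -> float:
    """Four active gauges: two in tension (+dR), two in compression (-dR)."""
    dr = r * gauge_factor * strain
    return bridge_output_ratio(r - dr, r + dr, r + dr, r - dr)


# Illustrative values (assumed): metal-foil gauge, 500 microstrain, 5 V excitation.
gf, strain, v_ex = 2.0, 500e-6, 5.0
print(f"quarter bridge: {quarter_bridge(gf, strain) * v_ex * 1e3:.3f} mV")
print(f"full bridge:    {full_bridge(gf, strain) * v_ex * 1e3:.3f} mV")
```

With four active arms the output is exactly GF·ε times the excitation (5 mV here), about four times the quarter-bridge signal (roughly 1.25 mV), and the quarter bridge is also slightly nonlinear.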

Applications of Torque Sensors

Torque sensors are used in:

Industrial Automation – Monitoring load on motors, pumps, and gearboxes.

Automotive Systems – Engine and drivetrain testing, EV torque analysis.

Robotics – Force feedback in joints and actuators.

Test Benches – Real-time torque measurement in R&D labs.

Aerospace & Defense – Component testing under dynamic loads.

Medical Devices – Precision torque control in surgical tools.

Star EMBSYS provides embedded solutions that make torque data actionable—by offering precise real-time feedback, wireless transmission, and cloud-based analytics.

What Sets Star EMBSYS Apart

Custom-Tailored Sensor Integration: Torque range, accuracy, and interface customized to your needs.

Advanced Embedded System Design: Microcontroller- and FPGA-based systems with high-resolution ADCs.

Real-Time Data Processing: Filtering, calibration, and display via PC, mobile, or industrial HMIs.