#ETL process optimization

Explore tagged Tumblr posts

Text

SSIS: Navigating Common Challenges

Diving into the world of SQL Server Integration Services (SSIS), we find ourselves in the realm of building top-notch solutions for data integration and transformation at the enterprise level. SSIS stands tall as a beacon for ETL processes, encompassing the extraction, transformation, and loading of data. However, navigating this powerful tool isn’t without its challenges, especially when it…

View On WordPress

#data integration challenges#ETL process optimization#memory consumption in SSIS#SSIS package tuning.#SSIS performance

0 notes

Text

AI Frameworks Help Data Scientists For GenAI Survival

AI Frameworks: Crucial to the Success of GenAI

Develop Your AI Capabilities Now

You play a crucial part in the quickly growing field of generative artificial intelligence (GenAI) as a data scientist. Your proficiency in data analysis, modeling, and interpretation is still essential, even though platforms like Hugging Face and LangChain are at the forefront of AI research.

Although GenAI systems are capable of producing remarkable outcomes, they still mostly depend on clear, organized data and perceptive interpretation areas in which data scientists are highly skilled. You can direct GenAI models to produce more precise, useful predictions by applying your in-depth knowledge of data and statistical techniques. In order to ensure that GenAI systems are based on strong, data-driven foundations and can realize their full potential, your job as a data scientist is crucial. Here’s how to take the lead:

Data Quality Is Crucial

The effectiveness of even the most sophisticated GenAI models depends on the quality of the data they use. By guaranteeing that the data is relevant, AI tools like Pandas and Modin enable you to clean, preprocess, and manipulate large datasets.

Analysis and Interpretation of Exploratory Data

It is essential to comprehend the features and trends of the data before creating the models. Data and model outputs are visualized via a variety of data science frameworks, like Matplotlib and Seaborn, which aid developers in comprehending the data, selecting features, and interpreting the models.

Model Optimization and Evaluation

A variety of algorithms for model construction are offered by AI frameworks like scikit-learn, PyTorch, and TensorFlow. To improve models and their performance, they provide a range of techniques for cross-validation, hyperparameter optimization, and performance evaluation.

Model Deployment and Integration

Tools such as ONNX Runtime and MLflow help with cross-platform deployment and experimentation tracking. By guaranteeing that the models continue to function successfully in production, this helps the developers oversee their projects from start to finish.

Intel’s Optimized AI Frameworks and Tools

The technologies that developers are already familiar with in data analytics,��machine learning, and deep learning (such as Modin, NumPy, scikit-learn, and PyTorch) can be used. For the many phases of the AI process, such as data preparation, model training, inference, and deployment, Intel has optimized the current AI tools and AI frameworks, which are based on a single, open, multiarchitecture, multivendor software platform called oneAPI programming model.

Data Engineering and Model Development:

To speed up end-to-end data science pipelines on Intel architecture, use Intel’s AI Tools, which include Python tools and frameworks like Modin, Intel Optimization for TensorFlow Optimizations, PyTorch Optimizations, IntelExtension for Scikit-learn, and XGBoost.

Optimization and Deployment

For CPU or GPU deployment, Intel Neural Compressor speeds up deep learning inference and minimizes model size. Models are optimized and deployed across several hardware platforms including Intel CPUs using the OpenVINO toolbox.

You may improve the performance of your Intel hardware platforms with the aid of these AI tools.

Library of Resources

Discover collection of excellent, professionally created, and thoughtfully selected resources that are centered on the core data science competencies that developers need. Exploring machine and deep learning AI frameworks.

What you will discover:

Use Modin to expedite the extract, transform, and load (ETL) process for enormous DataFrames and analyze massive datasets.

To improve speed on Intel hardware, use Intel’s optimized AI frameworks (such as Intel Optimization for XGBoost, Intel Extension for Scikit-learn, Intel Optimization for PyTorch, and Intel Optimization for TensorFlow).

Use Intel-optimized software on the most recent Intel platforms to implement and deploy AI workloads on Intel Tiber AI Cloud.

How to Begin

Frameworks for Data Engineering and Machine Learning

Step 1: View the Modin, Intel Extension for Scikit-learn, and Intel Optimization for XGBoost videos and read the introductory papers.

Modin: To achieve a quicker turnaround time overall, the video explains when to utilize Modin and how to apply Modin and Pandas judiciously. A quick start guide for Modin is also available for more in-depth information.

Scikit-learn Intel Extension: This tutorial gives you an overview of the extension, walks you through the code step-by-step, and explains how utilizing it might improve performance. A movie on accelerating silhouette machine learning techniques, PCA, and K-means clustering is also available.

Intel Optimization for XGBoost: This straightforward tutorial explains Intel Optimization for XGBoost and how to use Intel optimizations to enhance training and inference performance.

Step 2: Use Intel Tiber AI Cloud to create and develop machine learning workloads.

On Intel Tiber AI Cloud, this tutorial runs machine learning workloads with Modin, scikit-learn, and XGBoost.

Step 3: Use Modin and scikit-learn to create an end-to-end machine learning process using census data.

Run an end-to-end machine learning task using 1970–2010 US census data with this code sample. The code sample uses the Intel Extension for Scikit-learn module to analyze exploratory data using ridge regression and the Intel Distribution of Modin.

Deep Learning Frameworks

Step 4: Begin by watching the videos and reading the introduction papers for Intel’s PyTorch and TensorFlow optimizations.

Intel PyTorch Optimizations: Read the article to learn how to use the Intel Extension for PyTorch to accelerate your workloads for inference and training. Additionally, a brief video demonstrates how to use the addon to run PyTorch inference on an Intel Data Center GPU Flex Series.

Intel’s TensorFlow Optimizations: The article and video provide an overview of the Intel Extension for TensorFlow and demonstrate how to utilize it to accelerate your AI tasks.

Step 5: Use TensorFlow and PyTorch for AI on the Intel Tiber AI Cloud.

In this article, it show how to use PyTorch and TensorFlow on Intel Tiber AI Cloud to create and execute complicated AI workloads.

Step 6: Speed up LSTM text creation with Intel Extension for TensorFlow.

The Intel Extension for TensorFlow can speed up LSTM model training for text production.

Step 7: Use PyTorch and DialoGPT to create an interactive chat-generation model.

Discover how to use Hugging Face’s pretrained DialoGPT model to create an interactive chat model and how to use the Intel Extension for PyTorch to dynamically quantize the model.

Read more on Govindhtech.com

#AI#AIFrameworks#DataScientists#GenAI#PyTorch#GenAISurvival#TensorFlow#CPU#GPU#IntelTiberAICloud#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

2 notes

·

View notes

Text

Top Ten Web Development Companies in India

Although many organisations strive to minimise the benefits of web development to the global market, statistics indicate the truth. According to statistics, everybody with an internet connection can browse around 1.88 billion webpages.

Given that the majority of websites increase firm sales and ROI, web development can create exceptional outcomes for any business. Web development can benefit both a start-up and a huge organisation. Custom Web Development Companies in India that are skilled in web development are best equipped to handle business needs because they always have enough talent to meet all the demands.

The Best Web Development Companies in India keep their resources up to date with the most recent trends and technologies in the digital world, in addition to their skill sets. Despite the fact that every other web development firm claims to be the finest, it's straightforward to identify the top web developers in India based on their service offerings and work portfolios.

We've produced a list of the top 10 web development companies in India so you can pick the finest one for your next project! So, let's get started.

1. Connect Infosoft Technologies Pvt. Ltd.

Connect Infosoft Technologies Pvt. Ltd. is a well-known web development service company that provides a comprehensive range of web development services. Our web creation services are targeted to your company's specific demands, allowing you to create a website that fits those objectives. We offer website design, development and Digital marketing organization situated in New Delhi. We were established in 1999 and have been serving our customers everywhere throughout the world. Connect Infosoft's Head Office is based in New Delhi, India and has Branch Office in Orissa. It also has a portrayal in the United States.

Our skilled web developers are well-versed in the latest web technologies and can provide you with the best web development solutions that match your budget and schedule restrictions. We also have a team of SEO and digital marketing professionals who can assist you in improving your search .

Major Service Offerings:

Web Application Development

ETL Services -SaaS & MVP Development

Mobile App Development

Data Science & Analytics

Artificial Intelligence

Digital Marketing

Search Engine Optimization

Pay-Per-Click advertising campaigns

Blockchain

DevOps

Amazon Web Services

Product Engineering

UI/UX

Client Success Stories:

Our success is intricately woven with the success stories of our clients. We take pride in delivering successful projects that align with client requirements and contribute to their growth.

We are always ready to start new projects and establish long-term work relationships. We work in any time zone for full-time and part-time-based projects.

Hire Developer for $10 per hour approx.

Book Appointments or Start To Chat:

Email: [email protected] M: +1 323-522-5635

Web: https://www.connectinfosoft.com/lets-work-together/

2. Infosys

Infosys is a well-known global leader in IT services and consulting based in India. Founded in 1981, Infosys has grown to become one of the largest IT companies in India and has a strong presence worldwide. The company offers a wide range of services, including web development, software development, consulting and business process outsourcing.

In the field of web development, Infosys provides comprehensive solutions to its clients. They have expertise in building custom web applications, e-commerce platforms, content management systems and mobile-responsive websites. Their web development team is skilled in various programming languages, frameworks and technologies to create robust and scalable web solutions.

Infosys has a track record of working with clients from diverse industries, including banking and finance, healthcare, retail, manufacturing and more. They leverage their deep industry knowledge and technical expertise to deliver innovative web development solutions tailored to meet their clients' specific requirements.

Additionally, Infosys focuses on utilizing emerging technologies like artificial intelligence, machine learning, blockchain and cloud computing to enhance the web development process and deliver cutting-edge solutions.

3. TCS (Tata Consultancy Services)

TCS (Tata Consultancy Services) is one of the largest and most renowned IT services companies in India and a part of the Tata Group conglomerate. Established in 1968, TCS has a global presence and provides a wide range of services, including web development, software development, consulting and IT outsourcing.

TCS offers comprehensive web development solutions to its clients across various industries. Their web development services encompass front-end and back-end development, web application development, e-commerce platforms, content management systems and mobile-responsive websites. They have expertise in various programming languages, frameworks and technologies to build robust and scalable web solutions.

TCS has a customer-centric approach and works closely with its clients to understand their business requirements and goals. They leverage their deep industry knowledge and technological expertise to provide innovative and tailored web development solutions that align with their clients' specific needs.

4. Wipro

Wipro is a prominent global IT consulting and services company based in India. Established in 1945, Wipro has evolved into a multinational organization with a presence in over 60 countries. The company offers a wide range of services, including web development, software development, consulting and digital transformation.

In the realm of web development, Wipro provides comprehensive solutions to its clients. They have a team of skilled professionals proficient in various programming languages, frameworks and technologies. Their web development services cover front-end and back-end development, web application development, e-commerce platforms, content management systems and mobile-responsive websites.

Wipro emphasizes delivering customer-centric web development solutions. They collaborate closely with their clients to understand their specific requirements and business objectives. This enables them to create tailored solutions that align with the clients' goals and provide a competitive

5. HCL Technologies

HCL Technologies is a leading global IT services company headquartered in India. Established in 1976, HCL Technologies has grown to become one of the prominent players in the IT industry. The company offers a wide range of services, including web development, software development, digital transformation, consulting and infrastructure management.

HCL Technologies provides comprehensive web development solutions to its clients worldwide. They have a dedicated team of skilled professionals proficient in various programming languages, frameworks and technologies. Their web development services cover front-end and back-end development, web application development, e-commerce platforms, content management systems and mobile-responsive websites.

HCL Technologies has a broad industry presence and serves clients across various sectors such as banking and financial services, healthcare, retail, manufacturing and more. They leverage their deep industry expertise to deliver web solutions that are not only technologically robust but also address the unique challenges and requirements of each industry.

6. Mindtree

Mindtree is a global technology consulting and services company based in India. Founded in 1999, Mindtree has grown to become a well-known player in the IT industry. The company offers a wide range of services, including web development, software development, digital transformation, cloud services and data analytics.

Mindtree provides comprehensive web development solutions to its clients. They have a team of skilled professionals with expertise in various programming languages, frameworks and technologies. Their web development services encompass front-end and back-end development, web application development, e-commerce platforms, content management systems and mobile-responsive websites.

One of the key strengths of Mindtree is its focus on delivering customer-centric solutions. They work closely with their clients to understand their specific business requirements, goals and target audience. This enables them to create tailored web development solutions that meet the clients' unique needs and deliver a seamless user experience.

Mindtree serves clients across multiple industries, including banking and financial services, healthcare, retail, manufacturing and more. They leverage their industry knowledge and experience to provide web solutions that align with the specific challenges and regulations of each sector.

7. Tech Mahindra

Tech Mahindra is a multinational IT services and consulting company based in India. Established in 1986, Tech Mahindra is part of the Mahindra Group conglomerate. The company offers a wide range of services, including web development, software development, consulting, digital transformation and IT outsourcing.

Tech Mahindra provides comprehensive web development solutions to its clients across various industries. They have a team of skilled professionals proficient in various programming languages, frameworks and technologies. Their web development services cover front-end and back-end development, web application development, e-commerce platforms, content management systems and mobile-responsive websites.

Tech Mahindra focuses on delivering customer-centric web development solutions. They work closely with their clients to understand their specific requirements, business objectives and target audience. This enables them to create customized web solutions that meet the clients' unique needs, enhance user experience and drive business growth.

The company serves clients across diverse sectors, including telecommunications, banking and financial services, healthcare, retail, manufacturing and more. They leverage their industry expertise and domain knowledge to provide web solutions that are tailored to the specific challenges and requirements of each industry.

Tech Mahindra embraces emerging technologies in their web development services. They leverage artificial intelligence, machine learning, blockchain, cloud computing and other advanced technologies to enhance the functionality, security and scalability of the web solutions they deliver.

8. Mphasis

Mphasis is an IT services company headquartered in India. Established in 2000, Mphasis has a global presence and offers a wide range of services, including web development, software development, digital transformation, consulting and infrastructure services.

Mphasis provides comprehensive web development solutions to its clients worldwide. They have a team of skilled professionals proficient in various programming languages, frameworks and technologies. Their web development services encompass front-end and back-end development, web application development, e-commerce platforms, content management systems and mobile-responsive websites.

Mphasis focuses on delivering customer-centric web development solutions tailored to meet their clients' specific requirements. They work closely with their clients to understand their business objectives, target audience and desired outcomes. This allows them to create customized web solutions that align with their clients' goals and provide a competitive edge.

9. L&T Infotech

L&T Infotech (LTI) is a global IT solutions and services company headquartered in India. LTI is a subsidiary of Larsen & Toubro, one of India's largest conglomerates. The company provides a wide range of services, including web development, software development, consulting, digital transformation and infrastructure management.

L&T Infotech offers comprehensive web development solutions to its clients. They have a team of skilled professionals who are proficient in various programming languages, frameworks and technologies. Their web development services cover front-end and back-end development, web application development, e-commerce platforms, content management systems and mobile-responsive websites.

One of the key strengths of L&T Infotech is its customer-centric approach. They work closely with their clients to understand their specific business requirements, objectives and target audience. This enables them to create tailored web development solutions that align with the clients' unique needs and deliver tangible business value.

10. Cybage

Cybage is a technology consulting and product engineering company headquartered in Pune, India. Established in 1995, Cybage has grown to become a global organization with a presence in multiple countries. The company offers a range of services, including web development, software development, quality assurance, digital solutions and IT consulting.

Cybage provides comprehensive web development solutions to its clients. They have a team of skilled professionals who are proficient in various programming languages, frameworks and technologies. Their web development services encompass front-end and back-end development, web application development, e-commerce platforms, content management systems and mobile-responsive websites.

Cybage focuses on delivering customer-centric web development solutions. They collaborate closely with their clients to understand their specific business requirements, goals and target audience. This enables them to create customized web solutions that meet the clients' unique needs, enhance user experience and drive business growth.

The company serves clients across diverse industries, including healthcare, retail, e-commerce, banking and finance and more. They leverage their industry knowledge and domain expertise to provide web solutions that address the unique challenges and requirements of each industry.

Cybage emphasizes the use of emerging technologies in their web development services. They incorporate artificial intelligence, machine learning, cloud computing, blockchain and other innovative technologies to enhance the functionality, scalability and security of the web solutions they deliver.

#web developer#web development#webdesign#web design#web app development#custom web app development#web application development#web application services#web application security#connect infosoft

3 notes

·

View notes

Text

Preparation of F-passivated ZnO for quantum dot photovoltaics

For photovoltaic power generation, pn junction is the core unit. The electric field in the junction can separate and transport the electron and the hole to negative and positive electrodes, respectively. Once the pn junction is connected with a load and exposed to a light ray, it can convert photon power into electrical power and deliver this power to the load. This photovoltaic application has long been used as the power supply for satellites and space vehicles, and also as the power supply for renewable green energy. As the star materials, Si, GaAs, and perovskite have been widely applied for solar power harvesting. However, the absorption cutoff wavelength of these materials is below 1,100 nm, which limits their photovoltaic applications in infrared photon power. Hence, it is necessary to explore new materials for photovoltaics. PbSe colloidal quantum dots (CQDs) are promising candidates for photovoltaics because its photoactive range can cover the whole solar spectrum. Thanks to the rapid advances in metal halide ligands and solution phase ligand exchange processes, the efficiency of PbSe CQD solar cells approaches to 11.6%. In view of these developments, further improvement of device performance can focus on the optimization of the electron transport layer (ETL) and the hole transport layer (HTL).

Read more.

3 notes

·

View notes

Text

Azure Data Engineering Tools For Data Engineers

Azure is a cloud computing platform provided by Microsoft, which presents an extensive array of data engineering tools. These tools serve to assist data engineers in constructing and upholding data systems that possess the qualities of scalability, reliability, and security. Moreover, Azure data engineering tools facilitate the creation and management of data systems that cater to the unique requirements of an organization.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully-managed, serverless data integration tool designed to handle data at scale. It enables data engineers to acquire, analyze, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed and server-less distributed database service that supports multiple data models, including PostgreSQL, MongoDB, and Apache Cassandra. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source frameworks and languages like WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure PostgreSQL Database

Azure PostgreSQL Database is a fully managed open-source database service designed to emphasize application innovation rather than database management. It supports various open-source frameworks and languages and offers superior security, performance optimization through AI, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering Classes: 200 hours of live classes Lectures: 199 lectures Projects: Collaborative projects and mini projects for each module Level: All levels Scholarship: Up to 70% scholarship on this course Interactive activities: labs, quizzes, scenario walk-throughs Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps Classes: 180+ hours of live classes Lectures: 300 lectures Projects: Collaborative projects and mini projects for each module Level: All levels Scholarship: Up to 67% scholarship on this course Interactive activities: labs, quizzes, scenario walk-throughs Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training

3 notes

·

View notes

Text

KNIME Analytics Platform

KNIME Analytics Platform: Open-Source Data Science and Machine Learning for All In the world of data science and machine learning, KNIME Analytics Platform stands out as a powerful and versatile solution that is accessible to both technical and non-technical users alike. Known for its open-source foundation, KNIME provides a flexible, visual workflow interface that enables users to create, deploy, and manage data science projects with ease. Whether used by individual data scientists or entire enterprise teams, KNIME supports the full data science lifecycle—from data integration and transformation to machine learning and deployment. Empowering Data Science with a Visual Workflow Interface At the heart of KNIME’s appeal is its drag-and-drop interface, which allows users to design workflows without needing to code. This visual approach democratizes data science, allowing business analysts, data scientists, and engineers to collaborate seamlessly and create powerful analytics workflows. KNIME’s modular architecture also enables users to expand its functionality through a vast library of nodes, extensions, and community-contributed components, making it one of the most flexible platforms for data science and machine learning. Key Features of KNIME Analytics Platform KNIME’s comprehensive feature set addresses a wide range of data science needs: - Data Preparation and ETL: KNIME provides robust tools for data integration, cleansing, and transformation, supporting everything from structured to unstructured data sources. The platform’s ETL (Extract, Transform, Load) capabilities are highly customizable, making it easy to prepare data for analysis. - Machine Learning and AutoML: KNIME comes with a suite of built-in machine learning algorithms, allowing users to build models directly within the platform. It also offers Automated Machine Learning (AutoML) capabilities, simplifying tasks like model selection and hyperparameter tuning, so users can rapidly develop effective machine learning models. - Explainable AI (XAI): With the growing importance of model transparency, KNIME provides tools for explainability and interpretability, such as feature impact analysis and interactive visualizations. These tools enable users to understand how models make predictions, fostering trust and facilitating decision-making in regulated industries. - Integration with External Tools and Libraries: KNIME supports integration with popular machine learning libraries and tools, including TensorFlow, H2O.ai, Scikit-learn, and Python and R scripts. This compatibility allows advanced users to leverage KNIME’s workflow environment alongside powerful external libraries, expanding the platform’s modeling and analytical capabilities. - Big Data and Cloud Extensions: KNIME offers extensions for big data processing, supporting frameworks like Apache Spark and Hadoop. Additionally, KNIME integrates with cloud providers, including AWS, Google Cloud, and Microsoft Azure, making it suitable for organizations with cloud-based data architectures. - Model Deployment and Management with KNIME Server: For enterprise users, KNIME Server provides enhanced capabilities for model deployment, automation, and monitoring. KNIME Server enables teams to deploy models to production environments with ease and facilitates collaboration by allowing multiple users to work on projects concurrently. Diverse Applications Across Industries KNIME Analytics Platform is utilized across various industries for a wide range of applications: - Customer Analytics and Marketing: KNIME enables businesses to perform customer segmentation, sentiment analysis, and predictive marketing, helping companies deliver personalized experiences and optimize marketing strategies. - Financial Services: In finance, KNIME is used for fraud detection, credit scoring, and risk assessment, where accurate predictions and data integrity are essential. - Healthcare and Life Sciences: KNIME supports healthcare providers and researchers with applications such as outcome prediction, resource optimization, and patient data analytics. - Manufacturing and IoT: The platform’s capabilities in anomaly detection and predictive maintenance make it ideal for manufacturing and IoT applications, where data-driven insights are key to operational efficiency. Deployment Flexibility and Integration Capabilities KNIME’s flexibility extends to its deployment options. KNIME Analytics Platform is available as a free, open-source desktop application, while KNIME Server provides enterprise-level features for deployment, collaboration, and automation. The platform’s support for Docker containers also enables organizations to deploy models in various environments, including hybrid and cloud setups. Additionally, KNIME integrates seamlessly with databases, data lakes, business intelligence tools, and external libraries, allowing it to function as a core component of a company’s data architecture. Pricing and Community Support KNIME offers both free and commercial licensing options. The open-source KNIME Analytics Platform is free to use, making it an attractive option for data science teams looking to minimize costs while maximizing capabilities. For organizations that require advanced deployment, monitoring, and collaboration, KNIME Server is available through a subscription-based model. The KNIME community is an integral part of the platform’s success. With an active forum, numerous tutorials, and a repository of workflows on KNIME Hub, users can find solutions to common challenges, share their work, and build on contributions from other users. Additionally, KNIME offers dedicated support and learning resources through KNIME Learning Hub and KNIME Academy, ensuring users have access to continuous training. Conclusion KNIME Analytics Platform is a robust, flexible, and accessible data science tool that empowers users to design, deploy, and manage data workflows without the need for extensive coding. From data preparation and machine learning to deployment and interpretability, KNIME’s extensive capabilities make it a valuable asset for organizations across industries. With its open-source foundation, active community, and enterprise-ready features, KNIME provides a scalable solution for data-driven decision-making and a compelling option for any organization looking to integrate data science into their operations. Read the full article

#AutomatedMachineLearning#AutoML#dataintegration#datapreparation#datascienceplatform#datatransformation#datawrangling#ETL#KNIME#KNIMEAnalyticsPlatform#machinelearning#open-sourceAI

0 notes

Text

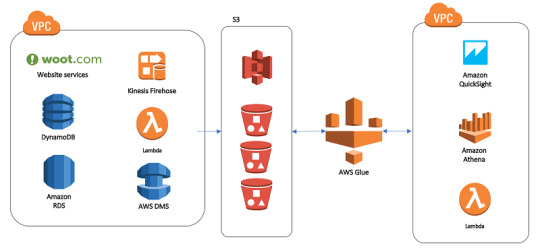

Amazon QuickSight Training | Amazon QuickSight Course Online

Amazon QuickSight Training: Top 10 AWS QuickSight Features Every Data Analyst Should Know Amazon QuickSight Training is an essential tool for data analysts seeking to develop their skills in data visualization and analytics. As one of the most powerful business intelligence (BI) tools offered by AWS, QuickSight allows analysts to build interactive dashboards, perform in-depth analysis, and create visualizations with ease. Through comprehensive Amazon QuickSight Training, data professionals can fully leverage these features to interpret data effectively and make strategic decisions. This guide explores the top 10 features of Amazon QuickSight that every data analyst should know. From its integration with AWS services to its machine learning capabilities, each feature in Amazon QuickSight is designed to enhance productivity and enable faster insights. Understanding these key features can not only advance data analysis skills but also increase efficiency and impact in day-to-day operations.

SPICE (Super-fast, Parallel, In-memory Calculation Engine) One of the standout features of Amazon QuickSight is SPICE, a robust in-memory engine that allows for super-fast data analysis. SPICE enables data analysts to work with large datasets quickly and efficiently, as it scales automatically and performs calculations in memory. This feature is essential for analysts dealing with high volumes of data and complex queries, as it significantly reduces processing time. AWS QuickSight Online Training often emphasizes SPICE’s benefits, as it’s integral to creating seamless user experiences with reduced wait times.

AutoGraph Feature Amazon QuickSight’s AutoGraph is a smart feature that automatically selects the best visualization for a given data type. By analyzing the dataset, QuickSight can recommend the most effective chart, graph, or table to represent the data accurately. This feature is particularly helpful for beginners or those who are new to data visualization, as it simplifies the process of creating insightful visuals. In Amazon QuickSight Training, learning to use AutoGraph can save analysts time while enhancing the impact of their dashboards.

Machine Learning Insights Amazon QuickSight provides powerful machine learning (ML) capabilities that allow analysts to perform predictive analysis and gain deeper insights. ML Insights can automatically detect anomalies, identify key drivers, and forecast trends, all without requiring extensive knowledge in data science. This feature allows analysts to uncover patterns in data that might not be visible through traditional analysis. For those interested in AWS QuickSight Online Training, mastering ML Insights is essential to fully leveraging the power of QuickSight.

Integration with AWS Services A key advantage of Amazon QuickSight is its seamless integration with other AWS services, such as Amazon S3, RDS, Redshift, and Athena. This integration allows data analysts to connect to various data sources and pull in relevant data for analysis. By taking an Amazon QuickSight Training Course, data professionals can learn how to link and optimize these integrations, enabling a streamlined data analysis workflow within the AWS ecosystem.

Data Preparation Tools Amazon QuickSight offers robust data preparation tools, including options for filtering, grouping, and combining datasets. Analysts can prepare their data directly within QuickSight, which eliminates the need for separate ETL (extract, transform, load) processes. These tools also support data wrangling and transformation, which are essential for creating clean, usable datasets. During AWS QuickSight Online Training, analysts are taught how to manipulate data efficiently using these preparation tools, making it easier to create meaningful visualizations.

Interactive Dashboards QuickSight enables the creation of interactive dashboards that users can easily share and explore. These dashboards can include drill-downs, filters, and customizable parameters, giving users the flexibility to interact with data in real-time. This is particularly valuable for data analysts who need to present their findings to stakeholders. Amazon QuickSight Training courses cover the best practices for building interactive dashboards, which enhance data presentation and user engagement.

Natural Language Querying with Q The Q feature in Amazon QuickSight allows users to ask questions in natural language and receive instant answers in the form of data visualizations. This feature democratizes data access by allowing non-technical users to interact with datasets without needing complex SQL queries. For data analysts, Amazon QuickSight Training on the Q feature can enhance efficiency, as it allows for faster data access and exploration, facilitating a quicker decision-making process.

Embedded Analytics Amazon QuickSight supports embedded analytics, allowing organizations to integrate QuickSight dashboards directly into their applications and websites. This feature enables external stakeholders to access analytics without needing direct access to QuickSight itself. AWS QuickSight Online Training courses emphasize the advantages of embedded analytics, as it allows data analysts to expand the reach of their insights and make data-driven decisions more accessible to others.

Collaboration Tools Collaboration is a crucial aspect of data analysis, and Amazon QuickSight facilitates this through built-in tools that allow users to share reports and dashboards. With the collaboration features, data analysts can invite team members to view or edit dashboards, fostering a more collaborative environment. In Amazon QuickSight Training, analysts learn to use these collaboration tools to ensure that insights are easily accessible and shareable across teams.

Cross-Platform Accessibility Amazon QuickSight is accessible across various devices, including desktops, tablets, and smartphones, making it easy for data analysts to access insights on the go. This cross-platform accessibility is a major advantage for teams that require flexibility in accessing data. AWS QuickSight Online Training often highlights how to optimize reports and dashboards for different devices, ensuring a seamless experience for users on any platform.

Conclusion: Amazon QuickSight Training is invaluable for data analysts who want to maximize the potential of this powerful BI tool. With features like SPICE, machine learning insights, interactive dashboards, and embedded analytics, Amazon QuickSight offers a comprehensive suite of tools to enhance data analysis and visualization. For data professionals, learning to use Amazon QuickSight not only enables more efficient workflows but also empowers them to generate impactful insights that drive business decisions. AWS QuickSight Online Training and Amazon QuickSight Training Course options are excellent resources for analysts looking to enhance their skills and stay competitive in a data-driven world. By mastering these top 10 QuickSight features, data analysts can elevate their abilities and position themselves as indispensable assets within their organizations. Visualpath offering a Amazon QuickSight Training with real-time expert instructors and hands-on projects. Our AWS QuickSight Online Training, from industry experts and gain hands-on experience. We provide to individuals globally in the USA, UK, etc. To schedule a demo, call +91-9989971070.

Key Points: AWS, Amazon S3, Amazon Redshift, Amazon RDS, Amazon Athena, AWS Glue, Amazon DynamoDB, AWS IoT Analytics, ETL Tools.

Attend Free Demo Call Now: +91-9989971070 Whatsapp: https://www.whatsapp.com/catalog/919989971070 Visit our Blog: https://visualpathblogs.com/ Visit: https://www.visualpath.in/online-amazon-quicksight-training.html

#AmazonQuickSight Training#AWSQuickSight Online Training#AmazonQuickSight Course Online#AWSQuickSight Training in Hyderabad#AmazonQuickSight Training Course#AWSQuickSight Training

0 notes

Text

0 notes

Text

What to Expect from Advanced Data Science Training in Marathahalli

AI, ML, and Big Data: What to Expect from Advanced Data Science Training in Marathahalli

Data science has emerged as one of the most critical fields in today’s tech-driven world. The fusion of Artificial Intelligence (AI), Machine Learning (ML), and Big Data analytics has changed the landscape of businesses across industries. As industries continue to adopt data-driven strategies, the demand for skilled data scientists, particularly in emerging hubs like Marathahalli, has seen an exponential rise.

Institutes in Marathahalli are offering advanced training in these crucial areas, preparing students to be future-ready in the fields of AI, ML, and Big Data. Whether you are seeking Data Science Training in Marathahalli, pursuing a Data Science Certification Marathahalli, or enrolling in a Data Science Bootcamp Marathahalli, these courses are designed to provide the hands-on experience and theoretical knowledge needed to excel.

AI and Machine Learning: Transforming the Future of Data Science

Artificial Intelligence and Machine Learning are at the forefront of modern data science. Students enrolled in AI and Data Science Courses in Marathahalli are introduced to the core concepts of machine learning algorithms, supervised and unsupervised learning, neural networks, deep learning, and natural language processing (NLP). These are essential for creating systems that can think, learn, and evolve from data.

Institutes in Marathahalli offering AI and ML training integrate real-world applications and projects to make sure that students can translate theory into practice. A Machine Learning Course Marathahalli goes beyond teaching the mathematical and statistical foundations of algorithms to focus on practical applications such as predictive analytics, recommender systems, and image recognition.

Data Science students gain proficiency in Python, R, and TensorFlow for building AI-based models. The focus on AI ensures that graduates of Data Science Classes Bangalore are highly employable in AI-driven industries, from automation to finance.

Key topics covered include:

Supervised Learning: Regression, classification, support vector machines

Unsupervised Learning: Clustering, anomaly detection, dimensionality reduction

Neural Networks: Deep learning models like CNN, RNN, and GANs

Natural Language Processing (NLP): Text analysis, sentiment analysis, chatbots

Model Optimization: Hyperparameter tuning, cross-validation, regularization

By integrating machine learning principles with AI tools, institutes like Data Science Training Institutes Near Marathahalli ensure that students are not just skilled in theory but are also ready for real-world challenges.

Big Data Analytics: Leveraging Large-Scale Data for Business Insights

With the advent of the digital age, businesses now have access to enormous datasets that, if analyzed correctly, can unlock valuable insights and drive innovation. As a result, Big Data Course Marathahalli has become a cornerstone of advanced data science training. Students are taught to work with massive datasets using advanced technologies like Hadoop, Spark, and NoSQL databases to handle, process, and analyze data at scale.

A Big Data Course Marathahalli covers crucial topics such as data wrangling, data storage, distributed computing, and real-time analytics. Students are equipped with the skills to process unstructured and structured data, design efficient data pipelines, and implement scalable solutions that meet the needs of modern businesses. This hands-on experience ensures that they can manage data at the petabyte level, which is crucial for industries like e-commerce, healthcare, finance, and logistics.

Key topics covered include:

Hadoop Ecosystem: MapReduce, HDFS, Pig, Hive

Apache Spark: RDDs, DataFrames, Spark MLlib

Data Storage: NoSQL databases (MongoDB, Cassandra)

Real-time Data Processing: Kafka, Spark Streaming

Data Pipelines: ETL processes, data lake architecture

Institutes offering Big Data Course Marathahalli prepare students for real-time data challenges, making them skilled at developing solutions to handle the growing volume, velocity, and variety of data generated every day. These courses are ideal for individuals seeking Data Analytics Course Marathahalli or those wanting to pursue business analytics.

Python for Data Science: The Language of Choice for Data Professionals

Python has become the primary language for data science because of its simplicity and versatility. In Python for Data Science Marathahalli courses, students learn how to use Python libraries such as NumPy, Pandas, Scikit-learn, Matplotlib, and Seaborn to manipulate, analyze, and visualize data. Python’s ease of use, coupled with powerful libraries, makes it the preferred language for data scientists and machine learning engineers alike.

Incorporating Python into Advanced Data Science Marathahalli training allows students to learn how to build and deploy machine learning models, process large datasets, and create interactive visualizations that provide meaningful insights. Python’s ability to work seamlessly with machine learning frameworks like TensorFlow and PyTorch also gives students the advantage of building cutting-edge AI models.

Key topics covered include:

Data manipulation with Pandas

Data visualization with Matplotlib and Seaborn

Machine learning with Scikit-learn

Deep learning with TensorFlow and Keras

Web scraping and automation

Python’s popularity in the data science community means that students from Data Science Institutes Marathahalli are better prepared to enter the job market, as Python proficiency is a sought-after skill in many organizations.

Deep Learning and Neural Networks: Pushing the Boundaries of AI

Deep learning, a subfield of machine learning that involves training artificial neural networks on large datasets, has become a significant force in fields such as computer vision, natural language processing, and autonomous systems. Students pursuing a Deep Learning Course Marathahalli are exposed to advanced techniques for building neural networks that can recognize patterns, make predictions, and improve autonomously with exposure to more data.

The Deep Learning Course Marathahalli dives deep into algorithms like convolutional neural networks (CNN), recurrent neural networks (RNN), and reinforcement learning. Students gain hands-on experience in training models for image classification, object detection, and sequence prediction, among other applications.

Key topics covered include:

Neural Networks: Architecture, activation functions, backpropagation

Convolutional Neural Networks (CNNs): Image recognition, object detection

Recurrent Neural Networks (RNNs): Sequence prediction, speech recognition

Reinforcement Learning: Agent-based systems, reward maximization

Transfer Learning: Fine-tuning pre-trained models for specific tasks

For those seeking advanced knowledge in AI, AI and Data Science Course Marathahalli is a great way to master the deep learning techniques that are driving the next generation of technological advancements.

Business Analytics and Data Science Integration: From Data to Decision

Business analytics bridges the gap between data science and business decision-making. A Business Analytics Course Marathahalli teaches students how to interpret complex datasets to make informed business decisions. These courses focus on transforming data into actionable insights that drive business strategy, marketing campaigns, and operational efficiencies.

By combining advanced data science techniques with business acumen, students enrolled in Data Science Courses with Placement Marathahalli are prepared to enter roles where data-driven decision-making is key. Business analytics tools like Excel, Tableau, Power BI, and advanced statistical techniques are taught to ensure that students can present data insights effectively to stakeholders.

Key topics covered include:

Data-driven decision-making strategies

Predictive analytics and forecasting

Business intelligence tools: Tableau, Power BI

Financial and marketing analytics

Statistical analysis and hypothesis testing

Students who complete Data Science Bootcamp Marathahalli or other job-oriented courses are often equipped with both technical and business knowledge, making them ideal candidates for roles like business analysts, data consultants, and data-driven managers.

Certification and Job Opportunities: Gaining Expertise and Career Advancement

Data Science Certification Marathahalli programs are designed to provide formal recognition of skills learned during training. These certifications are recognized by top employers across the globe and can significantly enhance career prospects. Furthermore, many institutes in Marathahalli offer Data Science Courses with Placement Marathahalli, ensuring that students not only acquire knowledge but also have the support they need to secure jobs in the data science field.

Whether you are attending a Data Science Online Course Marathahalli or a classroom-based course, placement assistance is often a key feature. These institutes have strong industry connections and collaborate with top companies to help students secure roles in data science, machine learning, big data engineering, and business analytics.

Benefits of Certification:

Increased job prospects

Recognition of technical skills by employers

Better salary potential

Access to global job opportunities

Moreover, institutes offering job-oriented courses such as Data Science Job-Oriented Course Marathahalli ensure that students are industry-ready, proficient in key tools, and aware of the latest trends in data science.

Conclusion

The Data Science Program Marathahalli is designed to equip students with the knowledge and skills needed to thrive in the fast-evolving world of AI, machine learning, and big data. By focusing on emerging technologies and practical applications, institutes in Marathahalli prepare their students for a wide array of careers in data science, analytics, and AI. Whether you are seeking an in-depth program, a short bootcamp, or an online certification, there are ample opportunities to learn and grow in this exciting field.

With the growing demand for skilled data scientists, Data Science Training Marathahalli programs ensure that students are prepared to make valuable contributions to their future employers. From foundational programming to advanced deep learning and business analytics, Marathahalli offers some of the best data science courses that cater to diverse needs, making it an ideal destination for aspiring data professionals.

Hashtags:

#DataScienceTrainingMarathahalli #BestDataScienceInstitutesMarathahalli #DataScienceCertificationMarathahalli #DataScienceClassesBangalore #MachineLearningCourseMarathahalli #BigDataCourseMarathahalli #PythonForDataScienceMarathahalli #AdvancedDataScienceMarathahalli #AIandDataScienceCourseMarathahalli #DataScienceBootcampMarathahalli #DataScienceOnlineCourseMarathahalli #BusinessAnalyticsCourseMarathahalli #DataScienceCoursesWithPlacementMarathahalli #DataScienceProgramMarathahalli #DataAnalyticsCourseMarathahalli #RProgrammingForDataScienceMarathahalli #DeepLearningCourseMarathahalli #SQLForDataScienceMarathahalli #DataScienceTrainingInstitutesNearMarathahalli #DataScienceJobOrientedCourseMarathahalli

#DataScienceTrainingMarathahalli#BestDataScienceInstitutesMarathahalli#DataScienceCertificationMarathahalli#DataScienceClassesBangalore#MachineLearningCourseMarathahalli#BigDataCourseMarathahalli#PythonForDataScienceMarathahalli#AdvancedDataScienceMarathahalli#AIandDataScienceCourseMarathahalli#DataScienceBootcampMarathahalli#DataScienceOnlineCourseMarathahalli#BusinessAnalyticsCourseMarathahalli#DataScienceCoursesWithPlacementMarathahalli#DataScienceProgramMarathahalli#DataAnalyticsCourseMarathahalli#RProgrammingForDataScienceMarathahalli#DeepLearningCourseMarathahalli#SQLForDataScienceMarathahalli#DataScienceTrainingInstitutesNearMarathahalli#DataScienceJobOrientedCourseMarathahalli

0 notes

Text

Speed and reliability are critical in today’s fast-paced data landscape. At Round The Clock Technologies, we automate data pipelines to ensure data is consistently available for analysis.

Our approach starts with designing pipelines that include self-healing mechanisms, detecting and resolving issues without manual intervention. Scheduling tools automate ETL processes, allowing data to flow seamlessly from source to destination.

Real-time monitoring systems continuously optimize pipeline performance, delivering faster and more reliable analytics. By automating these processes, we enhance efficiency, ensuring the organization has access to the most current and accurate data.

Learn more about our data engineering services at https://rtctek.com/data-engineering-services/

#rtctek#roundtheclocktechnologies#data#dataengineeringservices#dataengineering#etl#extract#transformation#extraction

0 notes

Text

Unlocking the Power of Data: The Role of Data Engineering in Modern Business

In today's data-driven landscape, organizations are increasingly relying on data to make informed decisions, optimize operations, and drive innovation. The field of Data Engineering plays a critical role in this transformation, ensuring that raw data is effectively processed, transformed, and made accessible for analysis. This article delves into the significance of Data Engineering, how it empowers businesses, and why choosing the right partner, like EdgeNRoots, can elevate your data strategy.

What is Data Engineering?

Data Engineering encompasses a wide range of practices and processes designed to facilitate the collection, storage, processing, and analysis of data. It involves the creation of robust data pipelines, the management of databases, and the implementation of data architecture that allows organizations to efficiently leverage their data assets.

At its core, Data Engineering is about transforming raw data into a structured format that can be easily accessed and analyzed. This process includes several key activities, such as data ingestion, data transformation, and data modeling. By focusing on these areas, data engineers ensure that data is not only accurate but also readily available for business intelligence and analytics.

The Importance of Data Engineering in Today's Business Landscape

In an era where data is often referred to as the "new oil," the importance of Data Engineering cannot be overstated. Here are some critical reasons why Data Engineering is essential for organizations:

Data Accessibility: With effective Data Engineering, businesses can ensure that data is easily accessible to stakeholders across the organization. This accessibility promotes data-driven decision-making and enhances collaboration among teams.

Scalability: As businesses grow, so does their data. A well-designed data architecture allows organizations to scale their data infrastructure seamlessly, accommodating increased data volumes without compromising performance.

Data Quality: Ensuring high-quality data is vital for any organization. Data Engineering practices focus on data validation and cleansing, which help maintain the integrity and accuracy of data.

Cost Efficiency: By optimizing data storage and processing, organizations can significantly reduce costs associated with data management. Efficient Data Engineering practices lead to more effective use of resources.

Key Components of Data Engineering

Data Ingestion

Data ingestion is the first step in the Data Engineering process, involving the collection of data from various sources. This can include structured data from databases, unstructured data from social media, or semi-structured data from APIs. Data engineers utilize tools like Apache Kafka and Apache NiFi to streamline this process, ensuring that data is collected in real-time and made available for further processing.

Data Transformation

Once data is ingested, it often requires transformation to be useful. This process includes cleaning, normalizing, and aggregating data to prepare it for analysis. Data Engineering utilizes ETL (Extract, Transform, Load) processes to ensure that data is accurately transformed into a format that analytics tools can easily interpret.

Data Storage

Choosing the right data storage solution is crucial in Data Engineering. Organizations must decide between various storage options, such as relational databases, NoSQL databases, or cloud-based data lakes. The choice depends on factors like data volume, data variety, and the organization's specific needs. Data engineers must also implement data governance policies to ensure data security and compliance.

Data Modeling

Data modeling is a critical aspect of Data Engineering that involves designing the structure of the data and defining how it will be stored, accessed, and used. Effective data models facilitate better data analysis and reporting, enabling organizations to derive valuable insights from their data.

Why Choose EdgeNRoots for Data Engineering?

EdgeNRoots stands out as a premier provider of Data Engineering services, offering tailored solutions that align with the unique needs of each client. Here are several reasons why partnering with EdgeNRoots is a smart choice for organizations seeking to enhance their data capabilities:

Expertise and Experience: EdgeNRoots boasts a team of seasoned data engineers with extensive experience in various industries. Their expertise ensures that clients receive the best practices in Data Engineering tailored to their specific business needs.

Custom Solutions: Recognizing that each organization is different, EdgeNRoots provides customized Data Engineering solutions that address the unique challenges and requirements of their clients. This personalized approach helps organizations maximize the value of their data.

Innovative Tools and Technologies: EdgeNRoots leverages the latest tools and technologies in the field of Data Engineering, ensuring that clients benefit from cutting-edge solutions that enhance data processing and analysis.

Comprehensive Support: From initial consultation to ongoing support, EdgeNRoots offers comprehensive services that guide clients through every stage of their Data Engineering journey. This commitment to client success sets EdgeNRoots apart from competitors.

Focus on Data Security: In an age where data breaches are prevalent, EdgeNRoots prioritizes data security, implementing robust measures to protect client data and ensure compliance with industry regulations.

Table: Benefits of Partnering with EdgeNRoots for Data Engineering

Benefit

Description

Expertise

Access to skilled data engineers with industry-specific knowledge.

Custom Solutions

Tailored data solutions that meet unique business challenges.

Innovative Tools

Utilization of cutting-edge technologies for enhanced data processing.

Comprehensive Support

End-to-end support from consultation to ongoing management.

Data Security

Robust security measures to protect client data and ensure compliance.

The Future of Data Engineering

As businesses continue to generate vast amounts of data, the field of Data Engineering will only grow in importance. Emerging technologies, such as artificial intelligence (AI) and machine learning (ML), will further revolutionize the landscape of Data Engineering, enabling organizations to extract even more value from their data.

Data engineers will need to adapt to new tools and methodologies, such as automating data pipelines and implementing real-time data processing. This evolution will ensure that businesses can keep pace with the demands of a rapidly changing digital environment.

FAQs About Data Engineering

What is the primary role of a data engineer?

The primary role of a data engineer is to design, construct, and maintain systems and infrastructure for data generation, storage, and analysis. They ensure that data is easily accessible and usable for data scientists and analysts.

How does Data Engineering differ from Data Science?

While Data Engineering focuses on the preparation and management of data, data science involves analyzing that data to derive insights and make predictions. Data engineers set up the infrastructure that data scientists use for their analyses.

What tools are commonly used in Data Engineering?

Common tools used in Data Engineering include Apache Hadoop, Apache Spark, Apache Kafka, and various database management systems (DBMS) like PostgreSQL, MongoDB, and Amazon Redshift.

Why is data quality important in Data Engineering?

Data quality is crucial because high-quality data leads to accurate insights and informed decision-making. Poor-quality data can result in erroneous conclusions and negatively impact business outcomes.

How can businesses measure the success of their Data Engineering initiatives?

Businesses can measure the success of their Data Engineering initiatives through key performance indicators (KPIs) such as data accuracy, data accessibility, processing speed, and cost savings associated with data management.

What are the challenges faced in Data Engineering?

Challenges in Data Engineering include handling large volumes of data, ensuring data quality, managing data security, and integrating data from multiple sources effectively.

How does EdgeNRoots ensure data security?

EdgeNRoots ensures data security through robust encryption methods, regular security audits, and compliance with industry regulations to protect client data against breaches.

Is it necessary for businesses to invest in Data Engineering?

Yes, investing in Data Engineering is essential for businesses that want to leverage data effectively. A strong data infrastructure enables better decision-making, enhances operational efficiency, and provides a competitive advantage.

Conclusion

In conclusion, Data Engineering is a vital component of modern business strategy, enabling organizations to harness the power of their data. By choosing a trusted partner like EdgeNRoots, businesses can ensure that their data infrastructure is optimized for success. From data ingestion to storage and transformation, effective Data Engineering practices pave the way for informed decision-making, enhanced collaboration, and sustained growth in the competitive landscape. Embracing the principles of Data Engineering not only positions organizations for success but also empowers them to innovate and adapt in an ever-changing world.

0 notes

Text

SSIS on a Solo vs. a Dedicated SQL Server?

Pros and cons are like two sides of a coin, especially when we’re talking about where to run SQL Server Integration Services (SSIS). If you’re pondering whether to run SSIS on your sole SQL server or to go the extra mile and set it up on a dedicated server, let’s dive into the nitty-gritty to help you make an informed decision. Pros of Running SSIS on a Single SQL Server: Cost Savings: The most…

View On WordPress

#dedicated server benefits#ETL process optimization#SQL Server performance#SSIS resource management#SSIS SQL Server

0 notes

Text

Introduction to SAP ETL: Transforming and Loading Data for Better Insights

Understanding ETL in the Context of SAP

Extract, Transform, Load (ETL) is a critical process in managing SAP data, enabling companies to centralize and clean data from multiple sources. SAP ETL processes ensure data is readily accessible for business analytics, compliance reporting, and decision-making.

Key Benefits of Using ETL for SAP Systems

Data Consistency: ETL tools clean and standardize data, reducing redundancy and discrepancies.

Enhanced Reporting: Transformed data is easier to query and analyze, making it valuable for reporting in SAP HANA and other data platforms.

Improved Performance: Offloading data from SAP systems to data lakes or warehouses like Snowflake or Amazon Redshift improves SAP application performance by reducing database load.

Popular SAP ETL Tools

SAP Data Services: Known for deep SAP integration, SAP Data Services provides comprehensive ETL capabilities with real-time data extraction and cleansing features.

Informatica PowerCenter: Popular for its broad data connectivity, Informatica offers robust SAP integration for both on-premises and cloud data environments.

AWS Glue: AWS Glue supports SAP data extraction and transformation, especially for integrating SAP with AWS data lakes and analytics services.

Steps in SAP ETL Process

Data Extraction: Extract data from SAP ERP, BW, or HANA systems. Ensure compatibility and identify specific tables or fields for extraction to streamline processing.

Data Transformation: Cleanse, standardize, and format the data. Transformation includes handling different data types, restructuring, and consolidating fields.

Data Loading: Load the transformed data into the desired system, whether an SAP BW platform, data lake, or an external data warehouse.

Best Practices for SAP ETL

Prioritize Data Security: Data sensitivity in SAP systems necessitates stringent security measures during ETL processes. Use encryption and follow compliance standards.

Automate ETL Workflows: Automate recurring ETL jobs to improve efficiency and reduce manual intervention.

Optimize Transformation: Streamline transformations to prevent overloading resources and ensure fast data processing.

Challenges in SAP ETL and Solutions

Complex Data Structures: SAP’s complex data structures may require advanced mapping. Invest in an ETL tool that understands SAP's unique configurations.

Scalability: As data volume grows, ETL processes may need adjustment. Choose scalable ETL tools that allow flexible data scaling.

0 notes

Text

Best Practices for a Smooth Data Warehouse Migration to Amazon Redshift

In the era of big data, many organizations find themselves outgrowing traditional on-premise data warehouses. Moving to a scalable, cloud-based solution like Amazon Redshift is an attractive solution for companies looking to improve performance, cut costs, and gain flexibility in their data operations. However, data warehouse migration to AWS, particularly to Amazon Redshift, can be complex, involving careful planning and precise execution to ensure a smooth transition. In this article, we’ll explore best practices for a seamless Redshift migration, covering essential steps from planning to optimization.

1. Establish Clear Objectives for Migration

Before diving into the technical process, it’s essential to define clear objectives for your data warehouse migration to AWS. Are you primarily looking to improve performance, reduce operational costs, or increase scalability? Understanding the ‘why’ behind your migration will help guide the entire process, from the tools you select to the migration approach.

For instance, if your main goal is to reduce costs, you’ll want to explore Amazon Redshift’s pay-as-you-go model or even Reserved Instances for predictable workloads. On the other hand, if performance is your focus, configuring the right nodes and optimizing queries will become a priority.

2. Assess and Prepare Your Data

Data assessment is a critical step in ensuring that your Redshift data warehouse can support your needs post-migration. Start by categorizing your data to determine what should be migrated and what can be archived or discarded. AWS provides tools like the AWS Schema Conversion Tool (SCT), which helps assess and convert your existing data schema for compatibility with Amazon Redshift.

For structured data that fits into Redshift’s SQL-based architecture, SCT can automatically convert schema from various sources, including Oracle and SQL Server, into a Redshift-compatible format. However, data with more complex structures might require custom ETL (Extract, Transform, Load) processes to maintain data integrity.

3. Choose the Right Migration Strategy

Amazon Redshift offers several migration strategies, each suited to different scenarios:

Lift and Shift: This approach involves migrating your data with minimal adjustments. It’s quick but may require optimization post-migration to achieve the best performance.

Re-architecting for Redshift: This strategy involves redesigning data models to leverage Redshift’s capabilities, such as columnar storage and distribution keys. Although more complex, it ensures optimal performance and scalability.

Hybrid Migration: In some cases, you may choose to keep certain workloads on-premises while migrating only specific data to Redshift. This strategy can help reduce risk and maintain critical workloads while testing Redshift’s performance.

Each strategy has its pros and cons, and selecting the best one depends on your unique business needs and resources. For a fast-tracked, low-cost migration, lift-and-shift works well, while those seeking high-performance gains should consider re-architecting.

4. Leverage Amazon’s Native Tools

Amazon Redshift provides a suite of tools that streamline and enhance the migration process:

AWS Database Migration Service (DMS): This service facilitates seamless data migration by enabling continuous data replication with minimal downtime. It’s particularly helpful for organizations that need to keep their data warehouse running during migration.

AWS Glue: Glue is a serverless data integration service that can help you prepare, transform, and load data into Redshift. It’s particularly valuable when dealing with unstructured or semi-structured data that needs to be transformed before migrating.

Using these tools allows for a smoother, more efficient migration while reducing the risk of data inconsistencies and downtime.

5. Optimize for Performance on Amazon Redshift

Once the migration is complete, it’s essential to take advantage of Redshift’s optimization features:

Use Sort and Distribution Keys: Redshift relies on distribution keys to define how data is stored across nodes. Selecting the right key can significantly improve query performance. Sort keys, on the other hand, help speed up query execution by reducing disk I/O.

Analyze and Tune Queries: Post-migration, analyze your queries to identify potential bottlenecks. Redshift’s query optimizer can help tune performance based on your specific workloads, reducing processing time for complex queries.

Compression and Encoding: Amazon Redshift offers automatic compression, reducing the size of your data and enhancing performance. Using columnar storage, Redshift efficiently compresses data, so be sure to implement optimal compression settings to save storage costs and boost query speed.

6. Plan for Security and Compliance

Data security and regulatory compliance are top priorities when migrating sensitive data to the cloud. Amazon Redshift includes various security features such as:

Data Encryption: Use encryption options, including encryption at rest using AWS Key Management Service (KMS) and encryption in transit with SSL, to protect your data during migration and beyond.

Access Control: Amazon Redshift supports AWS Identity and Access Management (IAM) roles, allowing you to define user permissions precisely, ensuring that only authorized personnel can access sensitive data.

Audit Logging: Redshift’s logging features provide transparency and traceability, allowing you to monitor all actions taken on your data warehouse. This helps meet compliance requirements and secures sensitive information.

7. Monitor and Adjust Post-Migration

Once the migration is complete, establish a monitoring routine to track the performance and health of your Redshift data warehouse. Amazon Redshift offers built-in monitoring features through Amazon CloudWatch, which can alert you to anomalies and allow for quick adjustments.

Additionally, be prepared to make adjustments as you observe user patterns and workloads. Regularly review your queries, data loads, and performance metrics, fine-tuning configurations as needed to maintain optimal performance.

Final Thoughts: Migrating to Amazon Redshift with Confidence

Migrating your data warehouse to Amazon Redshift can bring substantial advantages, but it requires careful planning, robust tools, and continuous optimization to unlock its full potential. By defining clear objectives, preparing your data, selecting the right migration strategy, and optimizing for performance, you can ensure a seamless transition to Redshift. Leveraging Amazon’s suite of tools and Redshift’s powerful features will empower your team to harness the full potential of a cloud-based data warehouse, boosting scalability, performance, and cost-efficiency.

Whether your goal is improved analytics or lower operating costs, following these best practices will help you make the most of your Amazon Redshift data warehouse, enabling your organization to thrive in a data-driven world.

#data warehouse migration to aws#redshift data warehouse#amazon redshift data warehouse#redshift migration#data warehouse to aws migration#data warehouse#aws migration

0 notes

Text

Common Challenges in MySQL to Redshift Migration and How to Overcome Them