#Datastore

Text

Statsig Reaches 7.5M QPS In Memorystore for Redis Cluster

They at Statsig are enthusiastic about trying new things. Their goal is to simplify the process of testing, iterating, and deploying product features for businesses so they can obtain valuable insights into user behavior and performance.

With Statsig, users can make data-driven decisions to improve user experience in their software and applications. Statsig is a feature-flag, experimentation, and product analytics platform. It’s vital that their platform can handle and update all of that data instantly. Their team expanded from eight engineers using an open-source Redis cache, so they looked for a working database that could support their growing number of users and their high query volume.

Redis Cluster Mode

Statsig was founded in 2021 to assist businesses in confidently shipping, testing, and managing software and application features. The business encountered bottlenecks and connectivity problems and realized it needed a fully managed Redis service that was scalable, dependable, and performant. Memorystore for Redis Cluster Mode fulfilled all of the requirements. Memorystore offers a higher queries per second (QPS) capacity along with robust storage (99.99% SLA) and real-time analytics capabilities at a lower cost. This enables Statsig to return attention to its primary goal of creating a comprehensive platform for product observability that optimizes impact.

Playing around with a new database

Their data store used to be dependent on a different cloud provider’s caching solution. Unfortunately, the high costs, latency slowdowns, connectivity problems, and throughput bottlenecks prevented the desired value from being realized. As loads and demand increased, it became increasingly difficult to see how their previous system could continue to function sustainably.

They chose to experiment by following in the footsteps of their platform and search for a solution that would combine features, clustering capabilities, and strong efficiency at a lower cost. they selected Memorystore for Redis Cluster because it enabled us to achieve they objectives without sacrificing dependability or affordability.

Additionally, since the majority of their applications are stateless and can readily accommodate cache modifications, the transition to Memorystore for Redis Cluster was an easy and seamless opportunity to align their operations with their business plan.

Statsig

Improving data management in real time

Redis Cluster memorystore has developed into a vital tool for Statsig, offering strong scalability and flexibility for thryoperations. Its capabilities are effectively put to use for things like read-through caching, real-time analytics, events and metrics storage, and regional feature flagging. Memorystore is also the platform that powers their core Statsig console functionalities, including event sampling and deduplication in their streaming pipeline and real-time health checks.

The high availability (99.99% SLA) of the memorystore for Redis Cluster guarantees the dependable performance that is essential to they services. They can dynamically adjust the size of their cluster as needed thanks to the ability to scale in and out with ease.

There is no denying the outcomes, as quantifiable advancements have been noted in several important domains

Enhanced database speed

They are more confident in the caching layer with Memorystore for Redis Cluster, as it can support more use cases and higher queries per second (QPS). In order to keep up with their customers as they grow,they can now easily handle 1.5 million QPS on average and up to 7.5 million QPS at peak.

Increased scalability

Memorystore for Redis Cluster‘s capacity to grow in or out with zero downtime has enabled us to support a variety of use cases and higher QPS, putting us in a position to expand their clientele and offerings.

Cost effectiveness and dependability

They have managed to cut expenses significantly without sacrificing the quality of their services. When compared to the costs of using they previous cloud provider for the same workloads, the efficiency of their database running on Memorystore has resulted in a 70% reduction in Redis costs. Their requirements for processing data in real time have also benefited from Memorystore’s dependable performance.

Improved monitoring and administration

Since they switched to Memorystore, they no longer have to troubleshoot persistent Redis connection problems with they database management. Memorystore’s user-friendly monitoring tools allowed they developers to concentrate on platform innovation rather than spend as much time troubleshooting database problems.

Integrated security

An additional bit of security is always beneficial. The smooth integration of Memorystore with Google Cloud VPC improves .Their security posture.

Memorystore for Redis Cluster

A completely managed Redis solution for Google Cloud is called Memorystore for Redis Cluster. By using the highly scalable, accessible, and secure Redis service, applications running on Google Cloud may gain tremendous speed without having to worry about maintaining intricate Redis setups.

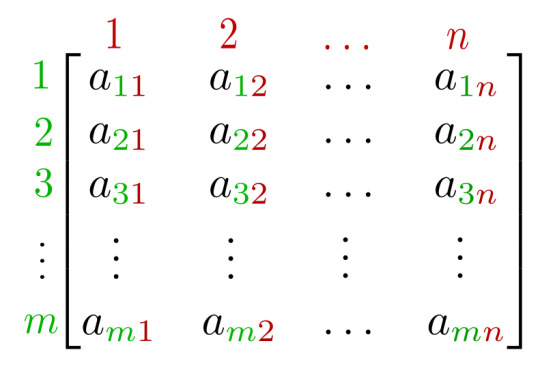

A collection of shards, each representing a portion of your key space, make up the memorystore for Redis Cluster instances. In a Memorystore cluster, each shard consists of one main node and, if desired, one or more replica nodes. Memorystore automatically distributes a shard’s nodes across zones upon the addition of replica nodes in order to increase performance and availability.

Examples

Your data is housed in a Memorystore for Redis Cluster instance. When speaking about a single Memorystore for a Redis Cluster unit of deployment, the words instance and cluster are synonymous. You need to provide enough shards for your Memorystore instance in order to service the keyspace of your whole application.

Anticipating the future of Memorystore

They are confident that Memorystore for Redis Cluster will continue to be a key component of .Their services a database they can rely on to support higher loads and increased demand and they are expanding they use of it to enable smarter and faster product development as they customer base grows. In the future, they will continue to find applications for Memorystore’s strong features and consistent scalability.

Read more on Govindhtech.com

0 notes

Text

Amazon Web Service & Adobe Experience Manager:- A Journey together (Part-5)

In the previous parts (1,2,3 & 4) we discussed how one day digital market leader meet with the a friend AWS in the Cloud and become very popular pair. It bring a lot of gifts for the digital marketing persons. Then we started a journey into digital market leader house basement and structure, mainly repository CRX and the way its MK organized. Ways how both can live and what smaller modules they used to give architectural benefits.Also visited how the are structured together to give more.

In this part as well will see on the more in architectural side.

AEM scale in the cloud

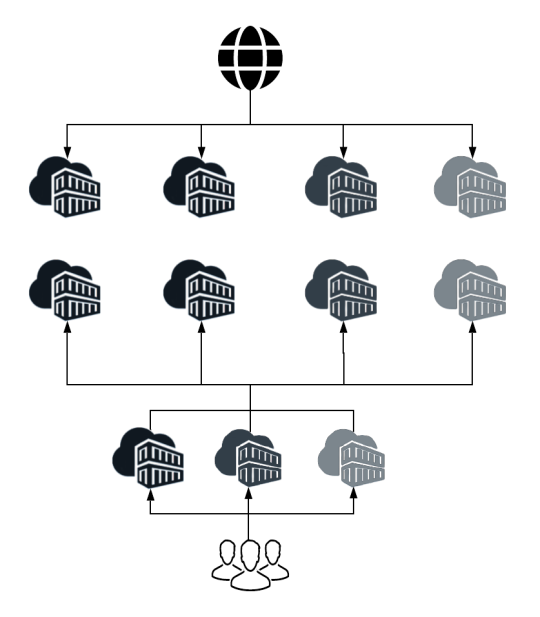

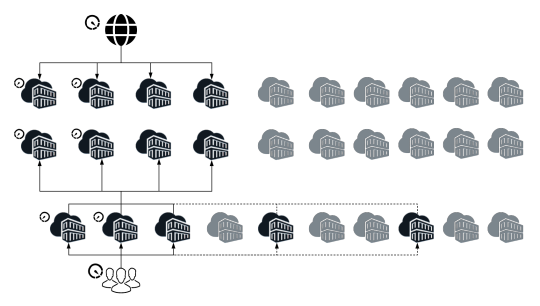

A dynamic architecture with a variable number of AEM images is required to fulfill the operational business needs.

AEM as a Cloud Service is based on the use of an orchestration engine.Dynamically scales each of the service instances as per the actual needs; both scaling up or down as appropriate.

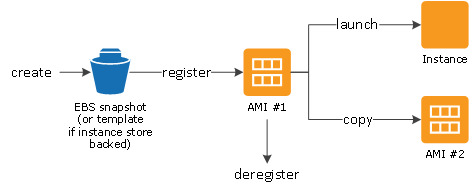

Scaling is a very simple task in AWS with creating separate Amazon Machine Images(AMIs) for publish , publish-dispatcher and author- dispatcher instance.

Use an AMI

Three separate launch configurations can be created using these AMIs and included in separate Auto Scaling groups(Auto Scaling groups - Amazon EC2 Auto Scaling).

AWS Lambda can provide scaling logic for scale up/down events from Auto Scaling groups.

Scaling logic consists of pairing/unpairing the newly launched dispatcher instance to an available publish instance or vice versa, updating the replication agent (reverse replication, if applicable) between the newly launched publish and author, and updating AEM content health check alarms.

One more approach for the quicker startup and synchronization, AEM installation can place on a separate EBS volume. A frequent snapshots of the volume and attachment to the newly-launched instances, Cut-down need of replicate large amounts of author data. Also it ensure the latest content.

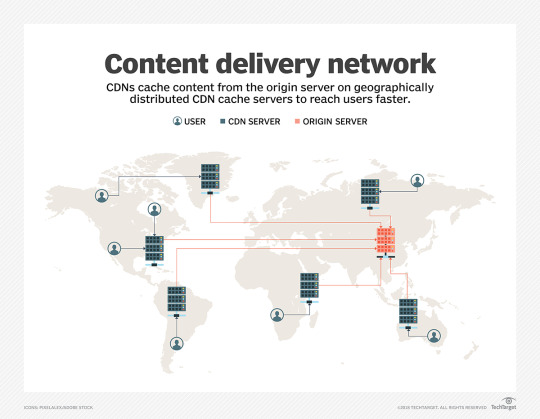

CDN:-Content Delivery Network or Content Distribution Network

A CDN is a group of geographically distributed and interconnected servers. They provide cached internet content from a network location closest to a user to speed up its delivery.

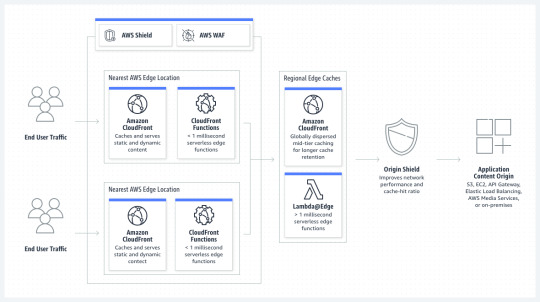

AWS is having answer of CDN requirement as well in the form of Amazon CloudFront a Low-Latency Content Delivery Network (CDN)

How it works

It will act as an additional caching layer with AEM dispatcher. Also it require proper content invalidation when it refreshed.

Explicit configuration of duration of particular resources are held in the CloudFront cache, expiration and cache-control headers sent by dispatcher required to control caching into CloudFront .

Cache control headers controlled by using mod_expires Apache Module.

Another approach will be API-based invalidation a custom invalidation workflow and set up an AEM Replication Agent that will use your own ContentBuilder and TransportHandler to invalidate the Amazon CloudFront cache using API.

These all about caching of static content only what is the solution or way to handle Dynamic content will see now.

Content which is Dynamic in Nature

Dispatcher is the key element of caching layer with the AEM. But it will not give full benefit when complete page is not cacheable. Now the question arise how dynamic content can fit into page without breaking the caching feature. There are some standard suggestion available. Like Edge Side Includes (ESIs),client-side JavaScript or Server Side Includes (SSIs) incorporate dynamic elements in a static page.

AWS is also have one solution as Varnish(replacing the dispatcher) to handle ESIs . But its not recommended by Adobe.

Till here we have seen structure of content dynamic static and various ways, but digital solution also have huge number of Assets mainly binary data. These need to configure handle properly to ensure performance of the site.

Again AWS is equipped with great solution called Amazon S3.

AEM Data Store with Amazon S3

Adobe Experience Manager (AEM), binary data can be stored independently from the content nodes. The binary data is stored in a data store, whereas content nodes are stored in a node store.

Both data stores and node stores can be configured using OSGi configuration. Each OSGi configuration is referenced using a persistent identifier (PID).

AEM can be configured to store data in Amazon’s Simple Storage Service (S3), with following PID for configuration

org.apache.jackrabbit.oak.plugins.blob.datastore.S3DataStore.config

To enable the S3 data store functionality, a feature pack containing the S3 Datastore Connector must be downloaded and installed. For more detail please refer Configuring node stores and data stores in AEM 6 | Adobe Experience Manager

This will simplifying management and backups. Also, sharing of binary data store across author and between author & publish instances will be possible and easier task with AWS S3 solution.

it will reduce overall storage and data transfer requirements.

Already this great combination walkthroug of the structure combination posibbilities , we will see one more variation available for the Cloud version of AEM with AWS in next (AEM OpenCloud)

Thanks for being with me till this , we will meet in next part with some amazing journey of OpenClode.

Hope you enjoy most till this part, kindly keep your blessings and love to motivate me.

Continue............

#aem#aws#adobe#cloud#wcm#programing#ELB#Amzon S3#OpenClode#OSGi#SSIs#ESI#CloudFront#Datastore#connector#Dispatcher#API#ContentBuilder#TransportHandler#CDN#AWS Lambda#aws lambda#Amazon EC2 Auto Scaling#ASG Auto Scaling Group#Amazon Machine Images(AMIs)#AEM AUTHOR#AEM Publish

1 note

·

View note

Text

PowerCLI script to list datastore space utilization

Here is a PowerCLI script that lists the datastore space utilization:

Connect to vCenter server

Connect-VIServer -Server -User -Password

Get all the datastores in vCenter

$datastores = Get-Datastore

Loop through each datastore

foreach ($datastore in $datastores) {# Get the capacity and free space of the datastore$capacity = $datastore.CapacityGB$freeSpace = $datastore.FreeSpaceGB

#…

View On WordPress

0 notes

Text

Data Fabric rapidly simplifies the technology jungle, allowing organizations to deploy the right infrastructure for each workload. The result is agility and business productivity across a wide range of applications.

0 notes

Text

hate that engineering exists in BG3.. it should not.. 🤢🤢🤮🤮

#'...and what a feat of engineering it is!' -gale of the deep waters#nooo 😭😭#chelle.txt#dark urge: agarwaen#have to teach myself about this specific type of datastore. hate.

0 notes

Text

i love the altoholic addon but by god i wish it actually fucking worked

#kc chirps#i log in. i get 2 lua errors from datastore. i mouse over any anima object. i get a lua error. i put heirlooms on. i get a lua error#get it together girl. if i didnt need to see where all my materials were id smite you

1 note

·

View note

Text

Objects in the Clouds - the flexible datastore technology

Many methods have been created to store our data. In our digital world, there are also plenty of sophisticated technologies to store our digital data. Among those, one technology that inspires and interests me - as a computer engineering student - is the Cloud Object Storage technology.

----------Cloud Object Storage Technology----------

Object storage is a datastore architecture designed to handle large amounts of unstructured data. Unlike file storage architecture, which stores data into hierarchies with directories and folders, object storage architecture divides data into distinct units (objects) and gives each object a unique identifier. This makes it easier to find any desired data from databases which can get enormous. These objects are usually stored in the clouds, and thus called cloud object storage. Object storage is good for its virtually unlimited scalability and its lower cost.

----------The Way it is Special to Me----------

Cloud storage holds a special place in my digital life, primarily due to the conveniences it offers. Its ability to seamlessly bridge the gap between my many devices has transformed the way I interact with my documents, photos, and videos. The days of laboriously transferring files from one device to another are gone; the cloud enables me to access my data effortlessly, regardless of the gadget I'm using. Its flexibility mirrors the real world, where I can retrieve any file as if it were right at my fingertips. This adaptable nature not only streamlines my daily routine but also gives me a sense of control akin to managing tangible objects. Furthermore, the cloud has liberated me from the burden of carrying my computer around or relying on memory sticks. It's as if my data follows me wherever I go, allowing me to focus on the task at hand rather than the logistics of data storage. Cloud storage has truly changed the way I manage and interact with my digital assets, and its impact on my life is nothing short of remarkable.

1 note

·

View note

Video

undefined

tumblr

WHAT IS A CLOUD-BASED POS SYSTEM?

A Cloud-based POS system is a point of sale system hosted in the cloud. It allows businesses to access their sales data from any device with an internet connection, reducing the need for expensive hardware. This also enables businesses to enjoy increased flexibility, scalability, and reliability.

0 notes

Text

It's your moral obligation to root around in your robotgirl gf's datastores and delete all her sad memories. She needs a fucking break

40 notes

·

View notes

Text

Boost the development of AI apps with Cloud Modernization

Cloud Modernization

A new era of intelligent applications that can comprehend natural language, produce material that is human-like, and enhance human abilities has begun with the development of generative AI. But as businesses from all sectors start to see how AI may completely transform their operations, they frequently forget to update their on-premises application architecture, which is an essential first step.

Cloud migration

Cloud migration is significantly more advantageous than on-premises options if your company wants to use AI to improve customer experiences and spur growth. Numerous early adopters, including TomTom and H&R Block, have emphasized that their decision to start updating their app architecture on Azure was what prepared them for success in the AI era.

Further information to connect the dots was provided by a commissioned study by IDC titled “Exploring the Benefits of Cloud Migration and Cloud Modernization for the Development of Intelligent Applications,” which was based on interviews with 900 IT leaders globally regarding their experiences moving apps to the cloud. They’ll go over a few of the key points in this article.

Modernise or lag behind: The necessity of cloud migration driven by AI

Let’s say what is obvious: Artificial Intelligence is a potent technology that can write code, produce content, and even develop whole apps. The swift progress in generative AI technologies, such OpenAI’s GPT-4 has revolutionized the way businesses function and engage with their clientele.

However, generative AI models such as those that drive ChatGPT or image-generating software are voracious consumers of data. To achieve their disruptive potential, they need access to enormous datasets, flexible scaling, and immense computing resources. The computation and data needs of contemporary AI workloads are simply too much for on-premises legacy systems and compartmentalized data stores to handle.

Cloud Modernization systems, which are entirely managed by the provider, offer the reliable infrastructure and storage options required to handle AI workloads. Because of its nearly infinite scalability, apps can adapt to changing demand and continue to operate at a high level.

The main finding of the IDC survey was that businesses were mostly driven to move their applications to the cloud by a variety of benefits, such as enhanced security and privacy of data, easier integration of cloud-based services, and lower costs. Furthermore, companies can swiftly test, refine, and implement AI models because to the cloud’s intrinsic agility, which spurs innovation.

With its most recent version, the.NET framework is ready to use AI in cloud settings. Developers can use libraries like OpenAI, Qdrant, and Milvus as well as tools like the Semantic Kernel to include AI capabilities into their apps. Applications may be delivered to the cloud with excellent performance and scalability thanks to the integration with.

NET Aspire. H&R Block’s AI Tax Assistant, for instance, shows how companies may build scalable, AI-driven solutions to improve user experiences and operational efficiency. It was developed using.NET and Azure OpenAI. You may expedite development and boost the adoption of AI in all areas of your company operations by integrating. NET into your cloud migration plan.

Utilising cloud-optimized old on-premises programmes through migration and restructuring allows for the seamless scaling of computation, enormous data repositories, and AI services. This can help your business fully incorporate generative AI into all aspects of its data pipelines and intelligent systems, in addition to allowing it to develop generative AI apps.

Reach your AI goals faster in the cloud

The ambition of an organisation to use generative AI and the realisation of its full value through cloud migration are strongly correlated, according to a recent IDC study. Let’s dissect a few important factors:

Data accessibility: Consolidating and accessing data from several sources is made easier by cloud environments, giving AI models the knowledge they require for training and improvement.

Computational power: Elastic computing resources in the cloud may be flexibly distributed to fulfil complicated AI algorithm needs, resulting in optimal performance and cost effectiveness.

Collaboration: Data scientists, developers, and business stakeholders may work together more easily thanks to cloud-based tools, which speeds up the creation and application of AI.

Cloud migration speeds up innovation overall in addition to enabling generative AI. Cloud platforms offer an abundance of ready-to-use services, such as serverless computing, machine learning, and the Internet of Things, that enable businesses to quickly develop and integrate new intelligent features into their apps.

Adopt cloud-based AI to beat the competition

Gaining a competitive edge is the driving force behind the urgent need to migrate and modernise applications it’s not simply about keeping up with the times. Companies who use AI and the cloud are better positioned to:

Draw in elite talent Companies with state-of-the-art tech stacks attract the best data scientists and developers.

Adjust to shifting circumstances: Because of the cloud’s elasticity, organisations may quickly adapt to changing client wants or market conditions.

Accelerate the increase of revenue: Applications driven by AI have the potential to provide new revenue streams and improve consumer satisfaction.

Embrace AI-powered creativity by updating your cloud

Cloud migration needs to be more than just moving and lifting apps if it is to stay competitive. The key to unlocking new levels of agility, scalability, and innovation in applications is Cloud Modernization through rearchitecting and optimizing them for the cloud. Your company can: by updating to cloud-native architectures, your apps can:

Boost performance: Incorporate intelligent automation, chatbots, and personalised recommendations all enabled by AI into your current applications.

Boost output: To maximise the scalability, responsiveness, and speed of your applications, take advantage of cloud-native technology.

Cut expenses: By only paying for the resources you use, you can do away with the expensive on-premises infrastructure.

According to the IDC poll, most respondents decided to move their apps to the Cloud Modernization because it allowed them to develop innovative applications and quickly realize a variety of business benefits.

Boost the development of your intelligent apps with a cloud-powered AI

In the age of generative AI, moving and updating apps to the cloud is not a choice, but a requirement. Businesses that jump on this change quickly will be in a good position to take advantage of intelligent apps’ full potential, which will spur innovation, operational effectiveness, and consumer engagement.

The combination of generative AI and cloud computing is giving organizations previously unheard-of options to rethink their approaches and achieve steady growth in a cutthroat market.

Businesses may make well-informed decisions on their cloud migration and Cloud Modernization journeys by appreciating the benefits and measuring the urgency, which will help them stay at the forefront of technical advancement and commercial relevance.

Read more on Govindhtech.com

#CloudModernization#generativeai#OpenAI#GPT4#AIModels#datastores#AICapabilities#AzureOpenAI#MachineLearning#NewsUpdate#TechNewsToday#Technology#technologynews#technologytrends#govindhtech

0 notes

Text

It seems like a big part of the Crowdstrike situation is the use of a big, high attack surface, mutable OS as a HAL for information appliances (for instance a ticket kiosk). I think rather than sprinkling more and more aggressive EDR on a mega-OS, we should be trying to remove as much from the OS as possible and make the OS as immutable as possible.

Even more so it seems like there was a big push to Crowdstrike and high velocity EDR updates after WannaCry, a crypto locker. But it seems like a kiosk doesn't have much local state to lock up / destroy in the first place. It's most dangerous first as an entry point to the datastore, and second as system that needs high availability for the meat space service it enables. It seems like the same remediation policy for at datastore might not be so great for an endpoint.

2 notes

·

View notes

Link

The NHS is using a US surveillance tech company owned by a billionaire Trump donor to process hundreds of datasets – some of which are being shared with private sector firms.

Department of Health and Social Care data shows NHS England has created more than 300 different purposes for processing information in its “Covid-19 datastore”, which runs on a platform created by Palantir.

Palantir, which has built software to support drone strikes and immigration raids, is also tipped to win a £480m deal this year to build a single database that will eventually hold all the data in the NHS.

The 339 purposes for which the firm already processes NHS information – reported here for the first time – include patient data on mental health, cancer screening and vaccines for sexually transmitted infections.

17 notes

·

View notes

Text

People are making the parallel point about Reddit being down right now, but I think an even worse problem that people rarely talk about is the seclusion of information on Discord servers. For one, Discord messages are so easy to lose! A channel could get accidentally deleted or the owner could get hacked and delete the server or what have you. People just close servers all the time due to drama or whatever. And yeah, those are possible problems with lots of online datastores but at least most of them can be indexed by something like the Wayback machine, unlike Discord. That fact, combined with the fact that Discord search sucks, makes it really hard to find historical stuff unless you remember exactly when it happened or some exact unique keyword.

If you run a discord server with a lot of unique specialized knowledge (this happens a lot in niche communities like small game speedrunning servers or video game character main servers or whatever) you should absolutely look into backing up the data somewhere where it can be preserved for the future!!

5 notes

·

View notes

Text

The Rise and Fall of Matrix 1.0 in Shadowrun

If you know what this is, then we are friends. Hoi chummer.

If you don’t then I’ll first start with what it’s not:

Matrix (mathematics)

John Matrix from Commando

Extracellular matrix

Like most cyberpunk elements within Shadowrun, the use of “matrix” as a virtual-reality driven computer environment derives from William Gibson’s Neuromancer, published in 1984. So yes, Shadowrun rips this off, though not to the infamy that…

…this franchise did.

The Matrix came out in 1999. It was revolutionary in its special effects, realizing Keanu Reeves had a greater range than being Ted, and getting a generation of sci-fi fans to read Baudrillard.

Baudrillard did not write this book.

I’m sure there’s been much written about the central tenet of the movie – that we are living in a simulation – so much so that I’m not bothering to do any research on that topic and get back to the main topic.

Matrix Topology

In college, a group of us Shadowrun nerds tried to recruit a computer-savvy friend to make a character and join our campaign. Naturally, we suggested a decker, and soon had him trying to infiltrate his first system, looking for some mcguffin.txt file that our team was hired to find.

Him: “Well, can’t I just grep it or something?”

Us: “Uhh… that’s not how computers work.”

Him: *already out the door*

The thing to remember about all implementation of computing and hacking in games is that:

It is absolutely nothing like how it works in real life, yet…

…some people will believe it to be chip-truth anyway.

Which is true for every aspect of RPGs – especially combat systems. Sometimes this is amusing – like watching D&D players trying to swing a real sword, and sometimes this leads to the United States Secret Service raiding the offices of Steve Jackson Games over GURPS Cyberpunk.

How can you consider this a threat to the Nation when The World of Synnibarr exists?

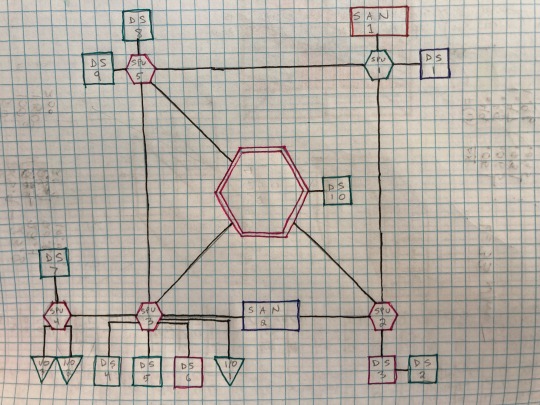

The Matrix – in Neuromancer, in the film franchise, in Shadowrun – is built upon telephone networks. In order to connect to a Bulletin Board System (BBS), your computer’s modem (a telephone that speaks computer) had to dial an old-fashioned phone number. Which meant that you had to know what that number is beforehand – there was no equivalent to Google. This is why in the movie WarGames (1983), Ferris Bueller is wardialing – calling every number in an area code to search for the few that connect to computer systems. So much of the presentation in Shadowrun is based upon knowing the address to where you want to go:

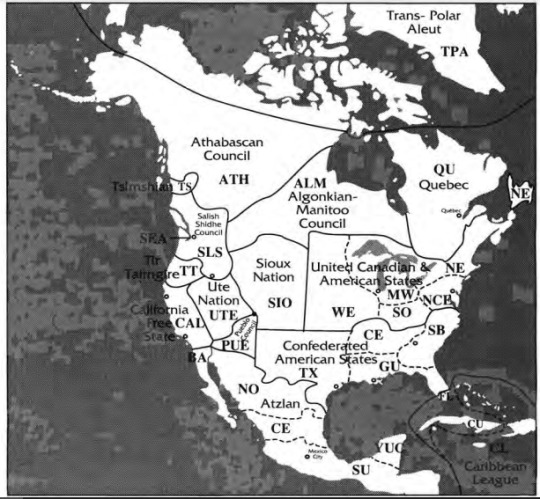

Regional Telecommunications Grid (RTG) – this is analogous to country codes. These are letter codes abbreviating the area. The RTG that covers Seattle is NA/UCAS-SEA (North America, United Canadian & American States, Seattle).

Local Telecommunications Grid (LTG) – this is analogous to a local area code. Every system will have its own LTG, encoded by a number. The examples given all have four digits but presuming there to be more than 10,000 computer systems in Seattle, they could have any value necessary. From the book:

Players should not know what is in the gamemaster's phone book because system owners guard their LTG locations jealously. Player characters will have to hunt down an access code or acquire it during an adventure to know where an LTG system connects. Let them keep their own phone books.

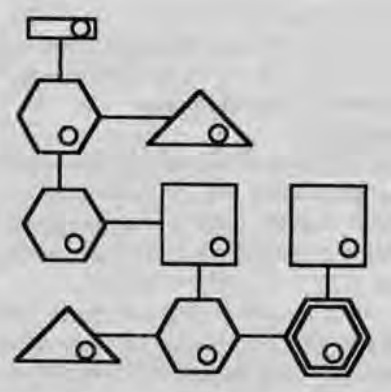

Supposing your decker already has the number, they can try to access the system itself. If connecting from outside (the RTG), then the decker starts off trying to infiltrate the SAN: System Access Node.

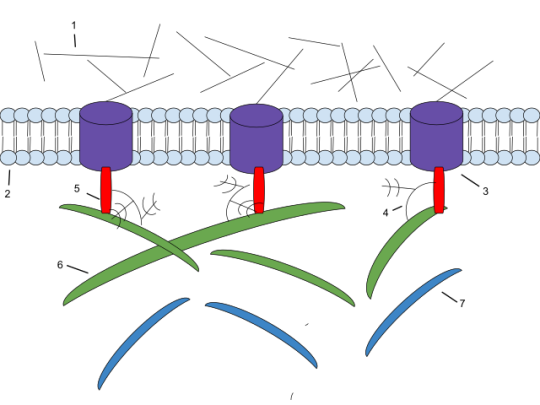

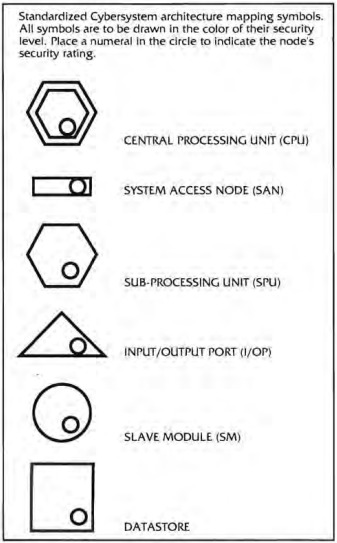

Computer systems are constructed out of a network of connected nodes, each of which having a particular purpose, represented by a standard shape, and each actually corresponding to a real part of a computer:

Central Processing Unit (CPU)

Sub-Processing Unit (SPU)

Datastore

Input/Output (I/O) Port

Slave Module

Because the decker (more specifically, the persona program running on their cyberdeck) is intrusive, there are dice rolls involved in gaining illicit access to every node, dice rolls involved in getting the node to execute your commands, dice rolls to deal with the Intrusion Countermeasures (IC, “ice”), and so on.

The Behind the Scenes chapter of the core book gives rules for randomly generating Matrix systems, populating them with ice, and determining the value of any paydata found in datastores.

Which is when you realize that Matrix 1.0 is just a reskinned one-PC dungeon crawl.

A dungeon with locked/stuck doors, monsters to protect them, and treasure to be found in them. It became cliché at our table that when it was time for the decker to do their thing, the rest of the party went to get snacks and shoot some hoops outside. Yes, it was thrilling for the decker, but at the end, they either got they needed from the system, or they didn’t. Unless you were running a campaign of all deckers (which would be AWESOME), you’re better off just having an NPC decker as a contact and contract out that work.

So, it was not surprising that, by the time the 2nd Edition version of Virtual Realities was released, it contained a completely different set of mechanics, dubbed Matrix 2.0, which became the standard when 3rd Edition was published, that greatly streamlined and sped up the gameplay.

16 notes

·

View notes

Text

How to Remove an Inaccessible Datastore in VMware

How to Remove an Inaccessible Datastore in VMware @vexpert #vmwarecommunities #vmwaretroubleshooting #homelab #homeserver #storagetroubleshooting #vcenterserver #vmwareesxi

There are a lot of times when I am working in the home lab and removing things and adding things, changing hardware, etc and you get things in a funky state when it comes to datastores and other configuration. I wanted to walk through with you guys removing an inaccessible VMware as this is probably something you will need to do not only in the home lab but at some point in production.

Table of…

0 notes

Text

Dell Unity Deploy D-UN-DY-23 Dumps Questions

If you're considering the D-UN-DY-23 Dell Unity Deploy 2023 Exam, you're in for a challenge that's both rigorous and rewarding. Having recently passed this exam, I want to share my experience and provide some tips that might help you on your journey. I found Certspots' Dell Unity Deploy D-UN-DY-23 dumps questions incredibly helpful. These dumps provide a realistic preview of the exam questions and scenarios you might encounter. Studying these Dell Unity Deploy D-UN-DY-23 Dumps Questions allowed me to familiarize myself with the exam format and question types, making it easier to tackle the actual test.

Why the Dell Unity Deploy 2023 Exam?

The D-UN-DY-23 exam is aimed at professionals involved in deploying Dell Unity systems, which are essential for contemporary data management and storage. It evaluates your knowledge and skills in areas such as installation, configuration, and management of these systems. Specifically, the Dell EMC Unity Deploy certification targets individuals looking to advance their careers in the Unity domain. The Dell EMC Unity Deploy 2023 (DCS-IE) exam confirms that candidates have the basic knowledge and demonstrated abilities required to manage Dell Unity storage systems in a production setting, aligning with business needs. It also covers configuration tasks that support ongoing management and basic integration topics.

Familiar with the D-UN-DY-23 Exam Topics

Dell Unity Platform Concepts, Features, and Architecture (10%)

● Describe the Dell Unity platform architecture, features, and functions

● Describe the Dell Unity VSA software defined storage solution

● Identify the Dell Unity XT hardware components

Dell Unity XT and UnityVSA Installation and Service (10%)

● Install and initialize a Dell Unity XT storage system

● Deploy and initialize a Dell UnityVSA system

● Perform key service tasks and identify related resources

● Describe the Dell Unity Platform service functions

Dell Unity XT and UnityVSA System Administration (5%)

● Identify and describe the user interfaces for monitoring and managing the Dell Unity family of storage systems

● Configure the Dell Unity XT support and basic system settings for system administration

Dell Unity XT and UnityVSA Storage Provisioning and Access (25%)

● Describe dynamic and traditional storage pools and how they are provisioned

● Describe dynamic pool expansion, considerations for mixing drive sizes and the rebuild process

● Provision block, file and VMware datastore storage

● Configure host access to block storage resources

● Configure NAS client access to SMB and NFS file storage resources

● Configure VMware ESXi hosts to access VMware datastore storage resources

Storage Efficiency, Scalability, and Performance Features (25%)

● Describe and configure FAST Cache

● Describe and configure File Level Retention

● Describe and configure Data Reduction

● Describe and configure FAST VP

Data Protection and Mobility (25%)

● Describe the Snapshots data protection feature and snapshot creation

● Descibe the Replication data protection feature

● Create synchronous and asynchronous replication sessions for storage resources

Preparation Tips for Dell Unity Deploy D-UN-DY-23 Exam

Study the Exam Objectives: Make sure you are well-versed in all the key topics listed in the exam objectives. The Dell Unity Deploy exam covers a range of topics including installation, configuration, and troubleshooting. Understanding these areas thoroughly is essential.

Hands-On Practice: Practical experience is invaluable. If possible, get hands-on practice with Dell Unity systems. This could be through a lab environment or real-world experience. The more you work with the systems, the better you'll understand the intricacies of deployment and management.

Review Official Documentation: Dell's official documentation and guides are a goldmine of information. They provide in-depth details about the features and functionalities of Dell Unity systems. Reviewing these documents can give you a clearer understanding of what to expect in the exam.

Join Study Groups: Engaging with a study group or forum can provide additional insights and answer any questions you might have. Sharing knowledge and discussing topics with peers can enhance your understanding and provide different perspectives.

Final Thoughts

Passing the D-UN-DY-23 Dell Unity Deploy 2023 Exam was a rewarding achievement. The preparation process, particularly using Certspots D-UN-DY-23 dumps questions, made a significant difference in my study routine. If you're preparing for this exam, I strongly recommend using these resources to help you succeed. With dedicated study and hands-on practice, you can confidently approach and pass the exam.

Good luck on your journey to becoming a certified Dell Unity Deploy professional!

0 notes