#Ai must be regulated



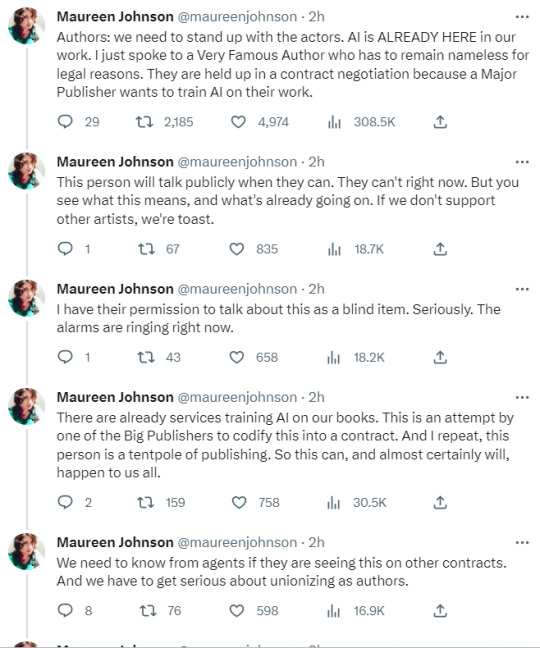

Alarm bells being rung by Maureen Johnson on AI and the Big Publishers

#I've already got academics wanting it put into their contracts that their work CAN'T be used to train AI#This is a scary time#ai MUST be regulated#on publishing#wga strike#sag aftra strike#sag-aftra strike#AUTHORS UNIONIZE

27K notes


“A recent Goldman Sachs study found that generative AI tools could, in fact, impact 300 million full-time jobs worldwide, which could lead to a ‘significant disruption’ in the job market.”

“Insider talked to experts and conducted research to compile a list of jobs that are at highest-risk for replacement by AI.”

Tech jobs (Coders, computer programmers, software engineers, data analysts)

Media jobs (advertising, content creation, technical writing, journalism)

Legal industry jobs (paralegals, legal assistants)

Market research analysts

Teachers

Finance jobs (Financial analysts, personal financial advisors)

Traders (stock markets)

Graphic designers

Accountants

Customer service agents

“‘We have to think about these things as productivity enhancing tools, as opposed to complete replacements,’ Anu Madgavkar, a partner at the McKinsey Global Institute, said.”

What will be eliminated from all of these industries is the ENTRY LEVEL JOB. You know, the jobs where newcomers gain valuable real-world experience and build their resumes? The jobs where you’re supposed to get your 1-2 years of experience before moving up to the big leagues (which remain inaccessible to applicants without the necessary experience, which they can no longer get, because so-called “low level” tasks will be completed by AI).

There’s more...

Wendy’s to test AI chatbot that takes your drive-thru order

“Wendy’s is not entirely a pioneer in this arena. Last year, McDonald’s opened a fully automated restaurant in Fort Worth, Texas, and deployed more AI-operated drive-thrus around the country.”

BT to cut 55,000 jobs with up to a fifth replaced by AI

“Chief executive Philip Jansen said ‘generative AI’ tools such as ChatGPT - which can write essays, scripts, poems, and solve computer coding in a human-like way - ‘gives us confidence we can go even further’.”

Why promoting AI is actually hurting accounting

“Accounting firms have bought into the AI hype and slowed their investment in personnel, believing they can rely more on machines and less on people.”

Will AI Replace Software Engineers?

“The truth is that AI is unlikely to replace high-value software engineers who build complex and innovative software. However, it could replace some low-value developers who build simple and repetitive software.”

#fuck AI#regulate AI#AI must be regulated#because corporations can't be trusted#because they are driven by greed#because when they say 'increased productivity' what they actually mean is increased profits - for the execs and shareholders not the workers#because when they say that AI should be used as a tool to support workers - what they really mean is eliminate entry level jobs#WGA strike 2023#i stand with the WGA

75 notes


@aquitainequeen

I know you are super anti-AI and I am too, but honestly this was my first thought when the announcement went out.

My second was: I wonder how we can get a hold of every frame of disney animation ever and then convince our community of anti-AI'ers to just...Post it the day this goes online.

103K notes


"These models are constitutionally incapable of doing sanity checking on their own work, and that's what's come to bite this industry in the behind." - Gary Marcus, professor of neural science at New York University.

0 notes


Please everyone! Read the link and its comments!

@ask-fat-eqg @askdrakgo @yuurei20 @aynare-emanahitu-blog @ray-norr @biggwomen @nekocrispy @neromardorm @pizzaplexs-fattest-securityguard @lonely-lorddominator @ask-magical-total-drama @ask-liam-and-co @thefrogman @pleatedjeans @theclassyissue @terristre @textsfromsuperheroes @raven-at-the-writing-desk @spongebobssquarepants

#revengeofreaper32#DeviantArt#SaveDeviantart#announcements#important#please read#please reblog#must be seen#news#please help#send help#help#this is a cry for help#tanasweet123#may 2024#may#2024#support human artists#anti eclipse#hear us DA#save DeviantArt#fix deviantart#regulate AI#ban trolls and cyberbullies#keep DeviantArt safe#spread the word#be a hero#viralpost#viral#go help

19 notes


AI generated 'nature photographs' get reblogged onto my dash every once in a while and it makes me kind of sad. Nature on its own is so pretty! It's a genuine marvel that some photographers can be in the right place at the right time to capture a unique photo of a weasel riding a woodpecker, or a crow among doves, or a field of flowers catching the sunlight just right and glowing like a sea of jewels.

It leaves me feeling hollow when I check the source on an interesting 'photo' and realize that it isn't capturing a rare phenomenon that can happen right on this very earth; it doesn't exist at all.

If the people I follow genuinely like it, then ok, nothing I can do about that, but I wonder if a good portion of them just don't know that they aren't real photographs. Just because there's a source on a post doesn't mean it links to a real photographer. Be aware.

#I genuinely firmly believe that there MUST be some regulation surrounding ai photos of nature#and I don't mean it in a hippy 'don't let nature and machines intersect maaan!' typa way#I mean it in a 'our perception and knowledge of this world will be greatly altered and outright incorrect if we continue this' typa way#think of the dude who used ai 'art' of a rodent for his scientific paper. The image itself directly spreads incorrect information#this is not the same as photoshopping a watermelon bright blue and trolling people into believing it#anyway. all ai images should have a watermark embedded into them by default that's just my opinion

8 notes


I don't think ai images and art have any real value tbh. Like, you do your thing if you wanna create Hot MILFs in your Area using ChatGPT or whatever, I don't care, but I genuinely don't think it should be grouped with traditional or digital art.

#Bc like. with specifically prompt based generators the process feels so vapid and hollow i get nothing out of it.#I've used generators to make pinups and that's it. and they all like suck but like i said they can do Hot MILFs in your Area#I'm neutral on AI art but lean towards I Don't Like It and don't care about it enough to really argue against it anymore.#I just think like. Some form of regulation would be. tactful?#like idk some restrictions requiring that you must give both the option to opt in or out of having stuff you've made used to train AI model#I'm not arguing for greater copyright do not get me wrong to not twist my words that will only end badly#Just. idk. it feels wrong? to have my art scraped to train ai. ik it isn't a direct one for one like oh it's scanning your shit#i know it's not literally copying and combining scraped art with other art to create stuff.#it just doesn't feel right if it isn't a consenting individuals work.

9 notes


As we trickle on down the line with more people becoming more aware that there are some people out there in this world using AI language programs such as ChatGPT, OpenAI and CharacterAI to write positivity, come up with plotting ideas, or write thread replies for them, I'm going to add a new rule to my carrd which I'll be updating later today:

Please, for the love of God, do NOT use AI to write your replies to threads, come up with plots, or script your positivity messages for you. If I catch anyone doing this I will not interact with you.

#i'm an underachiever — out.#this is the bad place#we are just begging for Rehoboam or the Machine from Person of Interest to become our new reality at this rate#because there are practically no laws regulating AI learning yet#so it's like the wild west in a digital space#people taking incomplete fics from ao3 or wattpad or ff and asking AI to finish it for them AS IF IT'S NOT STRAIGHT UP PLAGIARISM#google docs being used to train the AI so i guess i'm gonna have to find a nice notebook and just write everything by hand#also#THIS HAS NOT PERSONALLY HAPPENED TO ME YET#i just saw a mutual who said they saw another mutual who saw a PSA which means that SOMEWHERE it happened to someone#i have also seen a few answered asks float across the for you page discussing the concerns about people posting AI generated fic on ao3#and arguing against or in defense of readers using OpenAI to finish writing incomplete fics that were abandoned by the author#which means that it must happen and at the rate things are going it is only a matter of time before it catches up to the rest of us#mel vs AI

9 notes


"Reviewers told the report’s authors that AI summaries often missed emphasis, nuance and context; included incorrect information or missed relevant information; and sometimes focused on auxiliary points or introduced irrelevant information. Three of the five reviewers said they guessed that they were reviewing AI content.

The reviewers’ overall feedback was that they felt AI summaries may be counterproductive and create further work because of the need to fact-check and refer to original submissions which communicated the message better and more concisely."

Fascinating (the full report is linked in the article). I've seen this kind of summarization being touted as a potential use of LLMs that's given a lot more credibility than more generative prompts. But a major theme of the assessors was that the LLM summaries missed nuance and context that made them effectively useless as summaries. (ex: “The summary does not highlight [FIRM]’s central point…”)

The report emphasizes that better prompting can produce better results, and that new models are likely to improve the capabilities, but I must admit serious skepticism. To put it bluntly, I've seen enough law students try to summarize court rulings to say with confidence that in order to reliably summarize something, you must understand it. A clever reader who is good at pattern recognition can often put together a good-enough summary without really understanding the case, just by skimming the case and grabbing and repeating the bits that look important. And this will work...a lot of the time. Until it really, really doesn't. And those cases where the skim-and-grab method won't work aren't obvious from the outside. And I just don't see a path forward right now for the LLMs to do anything other than skim-and-grab.

Moreover, something that isn't even mentioned in the test is the impossibility of follow-up. If a human has summarized a document for me and I don't understand something, I can go to the human and say, "hey, what's up with this?" It may be faster and easier than reading the original doc myself, or they can point me to the place in the doc that led them to a conclusion, or I can even expand my understanding by seeing an interpretation that isn't intuitive to me. I can't do that with an LLM. And again, I can't really see a path forward no matter how advanced the programming is, because the LLM can't actually think.

#ai bs#though to be fair I don't think this is bs#just misguided#and I think there are other use-cases for LLMs#but#I'm really not sold on this one#if anything I think the report oversold the LLM#compared to the comments by the assessors

551 notes


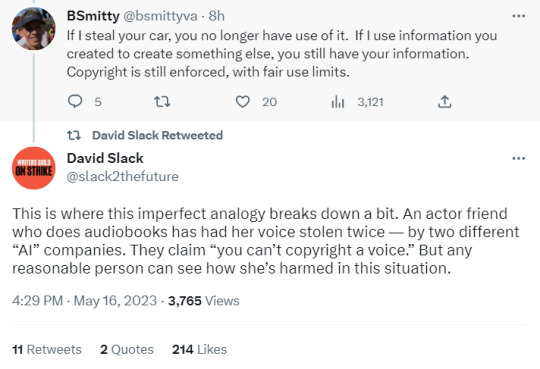

Thoughts from David Slack on 'AI' and copyright

(the voice theft in particular is really depressing)

#wga strike#wga strong#AI MUST be regulated#writer's guild of america strike#writer's guild of america#david slack#copyright#anyone familiar with me will know how I hate and love copyright#long post

16K notes


Data center emissions probably 662% higher than big tech claims. Can it keep up the ruse?

Emissions from in-house data centers of Google, Microsoft, Meta and Apple may be 7.62 times higher than official tally

(Isabel O'Brien, The Guardian, 9/15/24)

Big tech has made some big claims about greenhouse gas emissions in recent years. But as the rise of artificial intelligence creates ever bigger energy demands, it’s getting hard for the industry to hide the true costs of the data centers powering the tech revolution.

According to a Guardian analysis, from 2020 to 2022 the real emissions from the “in-house” or company-owned data centers of Google, Microsoft, Meta and Apple are likely about 662% – or 7.62 times – higher than officially reported.

...

AI is far more energy-intensive on data centers than typical cloud-based applications. According to Goldman Sachs, a ChatGPT query needs nearly 10 times as much electricity to process as a Google search, and data center power demand will grow 160% by 2030. Goldman competitor Morgan Stanley’s research has made similar findings, projecting data center emissions globally to accumulate to 2.5bn metric tons of CO2 equivalent by 2030.

(Read the full story at The Guardian - no paywall.)

2 notes


“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principal investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable - errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to do that worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them to pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a figleaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaritized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922
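
To make "next-word-guessing" concrete, here is a minimal sketch, assuming a toy bigram counter trained on a made-up three-sentence corpus. Real chatbots are vastly larger and work on tokens rather than whole words; the function names `train_bigrams` and `guess_next` and the corpus itself are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, which words follow it and how often."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def guess_next(follows, word):
    """Return the most frequently seen next word, or None if the word is unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical training data: two "regular" sentences and one irregular one.
corpus = [
    "the library is called requests",
    "the library is called numpy",
    "the library is named oddly",
]
model = train_bigrams(corpus)
print(guess_next(model, "is"))  # prints "called"
```

The guess is the most common continuation, not the true one: in any case where the right answer is the irregular "named," this toy model still says "called."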

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created an (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins) at unimaginable speed.

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its DeepMind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent materials scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th century magic lantern technology, which millions of people ascribed wild capabilities to, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

853 notes


I do want to say, my views on AI “art” have changed somewhat. It was wrong of me to claim that it’s not wrong to use it in shitposts… there definitely is some degree of something problematic there.

Personally I feel like it’s one of those problems that’s best solved via lawmaking—specifically, AI generations shouldn’t be copyrightable, and AI companies should be fined for art theft and “plagiarism”… even though it’s not directly plagiarism in the current legal sense. We definitely need ethical philosophers and lawmakers to spend some time defining exactly what is going on here.

But for civilians, using AI art is bad in the same nebulous sense that buying clothes from H&M or ordering stuff on Amazon is bad… it’s a very spread out, far away kind of badness, which makes it hard to quantify. And there’s no denying that in certain contexts, when applied in certain ways (with actual editing and artistic skill), AI can be a really interesting tool for artists and writers. Which again runs into the copyrightability thing. How much distance must be placed between the artist and the AI-generated inspiration in order to allow the artist to say “this work is fully mine”?

I can’t claim to know the answers to these issues. But I will say two things:

Ignoring AI shit isn’t going to make it go away. Our tumblr philosophy is wildly unpopular in the real world and most other places on the internet, and those who do start using AI are unfortunately gonna have a big leg up on those who don’t, especially as it gets better and better at avoiding human detection.

Treating AI as a fundamental, ontological evil is going to prevent us from having these deep conversations which are necessary for us—as a part of society—to figure out the ways to censure AI that are actually helpful to artists. We need strong unions making permanent deals now, we need laws in place that regulate AI use and the replacement of humans, and we need to get this technology out of the hands of huge megacorporations who want nothing more than to profit off our suffering.

I’ve seen the research. I knew AI was going to be big years ago, and right now I know that it’s just going to get bigger. Nearly every job is in danger. We need to interact with this issue—sooner rather than later—or we risk losing all of our futures. And unfortunately, just as with many other things under capitalism, for the time being I think we have to allow some concessions. The issue is not 100% black or white. Certainly a dark, stormy grey of some sort.

But please don’t attack middle-aged cat-owners playing around with AI filters. Start a dialogue about the spectrum of morality present in every use of AI—from the good (recognizing cancer cells years in advance, finding awesome new metamaterials) to the bad (megacorporations replacing workers and stealing from artists) to the kinda ambiguous (shitposts, app filter that makes your dog look like a 16th century British royal for some reason).

And if you disagree with me, please don’t be hateful about it. I fully recognize that my current views might be wrong. I’m not a paragon of moral philosophy or anything. I’m just doing my best to live my life in a way that improves the world instead of detracting from it. That’s all any of us can do, in my opinion.

#the wizcourse#<- new tag for my pretentious preachy rants#this is—AGAIN—an issue where you should be calling your congressmen and protesting instead of making nasty posts at each other

852 notes


𝐍𝐞𝐰𝐛𝐢𝐞 | Lee Jeno Smau

𝐏𝐚𝐢𝐫𝐢𝐧𝐠: Lee Jeno x F Reader.

𝐖𝐚𝐫𝐧𝐢𝐧𝐠𝐬: Angst?, Cursing, Suggestive, more to be added lol.

𝐆𝐞𝐧𝐫𝐞𝐬: University au, spin on fight club au, social media au; smau, written parts, angst, fluff, slow burn, humour, (one sided) enemies to lovers, hidden identities.

𝐂𝐡𝐚𝐩𝐭𝐞𝐫 𝐜𝐨𝐮𝐧𝐭: 7

𝐑𝐮𝐥𝐞𝐬 𝐨𝐟 𝐍𝐞𝐨 𝐓𝐞𝐜𝐡𝐧𝐨𝐥𝐨𝐠𝐲 𝐂𝐚𝐦𝐩𝐮𝐬:

1. Only grades acceptable are B+ and above.

2. Negative and derogatory wording about Neo is strictly forbidden.

3. Uniforms must be worn exactly as shown in the uniform guide with no alterations.

4. Tardiness won’t be tolerated, there is no excuse for being late.

5. No female and male contact is permitted, except during contact sports or in circumstances with granted permission.

6. All homework, assignments, projects and school activities must be completed by the set timeline and are compulsory.

7. No outside help is allowed; this includes outside tutors and AI programs. On-campus tutors will be provided for a fee.

If these rules are not upheld, there will be strict consequences such as suspension and/or expulsion. One or more rules could lead to an immediate expulsion if decided by the faculty.

These are the strict guidelines Neo university students must follow without question or backlash through their years at Neo Technology. Failure to comply with said guidelines never ends peacefully. Many students end up leaving Neo Technology in the earlier years of their majors and courses due to burnout or expulsion.

Those who manage to make it through to graduation in their majors/degrees are always guaranteed a good future; having this university on one's resume/CV is an automatic ticket into high-end jobs. Previous graduates have been seen working in higher-up positions in many different areas. There hasn’t been a recorded failure among Neo Technology's graduating classes so far.

Most students who go to Neo Technology meet the same requirements: wealthy family backgrounds; being academically well adjusted and above average in multiple areas, as shown through their previous education; and, lastly, being well connected, with higher-up contacts. With one outlier.

The one scholarship student who is picked out every year, AKA the charity case to make the university look fair. This scholarship is given to a first year every year, in all majors; whether they make it through to graduation is their own hardship. Those who drop out or get expelled will be replaced with a new student in the year they left.

Due to unforeseen and unfortunate events, a scholarship student in their graduation year passed away from ‘natural’ causes, meaning a new scholarship student would be taking their place in the graduating class of 2024 in the business major area and courses.

Many outsiders condemn Neo Technology for its strict rules and for how it seems to act more like a high school than a university, with rigid regulations rather than the relaxed rules most people know from university life on campus. However, words and thoughts do nothing to change how Neo Technology continues to move forward with its education agenda.

𝐑𝐮𝐥𝐞𝐬 𝐨𝐟 𝐅𝐢𝐠𝐡𝐭 𝐂𝐥𝐮𝐛:

1. You don’t talk about Fight Club.

2. You do NOT talk about Fight club.

3. If someone says “Stop” or goes limp, taps out, the fight is over.

4. Only two people to a fight.

5. No Shirts, No shoes.

6. Fights will go on as long as they have to.

7. If this is your first time at Fight Club, you have to fight.

Those were the rules; you don’t follow them, you’re out, and that doesn’t just mean a simple blacklisting. Fight Club was built from the ground up by people in their 40s trying to bring some excitement back into their mundane lives. Though of course, as time progressed, the younger generations started pouring in, to the point that the average age seen in Fight Club was no longer in the 40s but anywhere between the 20s and 40s.

If you happen to be an unfortunate soul who wanders into Fight Club, there’s no point in saying be prepared because no newbie is. It doesn’t matter if it's your first and last day there. Rule number Seven always happens. If it’s your first time at Fight Club, you have to fight. It’s not a choice, it's a must.

Profiles 1 | Profiles 2 | Extra

1. All men =🚩

2. Why she kinda 🫦

3. Freak 🫵

4. Homie hopping

5. Hot privileges revoked

6. I got you bbg 💳

7. Neo T student.

More chapters to come…

𝐓𝐚𝐠𝐥𝐢𝐬𝐭: (Comment, message or submit a request to be added to this taglist.)

Oml first smau finally being done 👀 took me forever to decide to actually do it lol, let’s hope this will actually be good 😭 (constructive feedback is always appreciated so if you have any memos or notes feel free to tell me!)

Also a little sneak peek into the boys in this one, here you go:

#jeno smau#lee jeno smau#nct dream smau#jeno social media au#jeno x reader#lee jeno x reader#lee jeno x y/n#lee jeno oneshot#lee jeno imagine#jeno oneshot#jeno imagine#jeno angst#jeno fluff#jeno suggestive#nct smau#nct dream x reader#nct dream texts#lee jeno texts#nct#nct dream#nct dream jeno#nct jeno#nct dream lee jeno#nct lee jeno#nct dream scenarios#nct scenarios#jeno scenarios#lee jeno scenarios

203 notes

Text

The creation of sexually explicit "deepfake" images is to be made a criminal offence in England and Wales under a new law, the government says.

Under the legislation, anyone making explicit images of an adult without their consent will face a criminal record and unlimited fine.

It will apply regardless of whether the creator of an image intended to share it, the Ministry of Justice (MoJ) said.

And if the image is then shared more widely, they could face jail.

A deepfake is an image or video that has been digitally altered with the help of Artificial Intelligence (AI) to replace the face of one person with the face of another.

Recent years have seen the growing use of the technology to add the faces of celebrities or public figures - most often women - into pornographic films.

Channel 4 News presenter Cathy Newman, who discovered her own image used as part of a deepfake video, told BBC Radio 4's Today programme it was "incredibly invasive".

Ms Newman found she was a victim as part of a Channel 4 investigation into deepfakes.

"It was violating... it was kind of me and not me," she said, explaining the video displayed her face but not her hair.

Ms Newman said finding perpetrators is hard, adding: "This is a worldwide problem, so we can legislate in this jurisdiction, it might have no impact on whoever created my video or the millions of other videos that are out there."

She said the person who created the video is yet to be found.

Under the Online Safety Act, which was passed last year, the sharing of deepfakes was made illegal.

The new law will make it an offence for someone to create a sexually explicit deepfake - even if they have no intention to share it but "purely want to cause alarm, humiliation, or distress to the victim", the MoJ said.

Clare McGlynn, a law professor at Durham University who specialises in legal regulation of pornography and online abuse, told the Today programme the legislation has some limitations.

She said it "will only criminalise where you can prove a person created the image with the intention to cause distress", and this could create loopholes in the law.

It will apply to images of adults, because the law already covers this behaviour where the image is of a child, the MoJ said.

It will be introduced as an amendment to the Criminal Justice Bill, which is currently making its way through Parliament.

Minister for Victims and Safeguarding Laura Farris said the new law would send a "crystal clear message that making this material is immoral, often misogynistic, and a crime".

"The creation of deepfake sexual images is despicable and completely unacceptable irrespective of whether the image is shared," she said.

"It is another example of ways in which certain people seek to degrade and dehumanise others - especially women.

"And it has the capacity to cause catastrophic consequences if the material is shared more widely. This Government will not tolerate it."

Cally Jane Beech, a former Love Island contestant who earlier this year was the victim of deepfake images, said the law was a "huge step in further strengthening of the laws around deepfakes to better protect women".

"What I endured went beyond embarrassment or inconvenience," she said.

"Too many women continue to have their privacy, dignity, and identity compromised by malicious individuals in this way and it has to stop. People who do this need to be held accountable."

Shadow home secretary Yvette Cooper described the creation of the images as a "gross violation" of a person's autonomy and privacy and said it "must not be tolerated".

"Technology is increasingly being manipulated to manufacture misogynistic content and is emboldening perpetrators of Violence Against Women and Girls," she said.

"That's why it is vital for the government to get ahead of these fast-changing threats and not to be outpaced by them.

"It's essential that the police and prosecutors are equipped with the training and tools required to rigorously enforce these laws in order to stop perpetrators from acting with impunity."

288 notes

Text

Technology needs regulation!

We must protect our art and jobs, our faces and voices (literally), our data and identities. The fight continues!

Animation I started in December when artists shared protest pieces against AI, I had time to finish it until now.

So much has happened since, several lawsuits (yay!), but much more exploitation against women and big productions using (almost useless) AI images. So yeah, the fight continues.

I livestreamed a bit of the process on my YouTube channel. I will finish the animation and probably livestream some of that too, so subscribe if you're interested!

#artists on tumblr#animators on tumblr#no to ai art#ai art discussion#2d animation#animation#rough animation#marianarira

2K notes
