#AIsecurity

Explore tagged Tumblr posts

Text

Millions of McDonald’s Job Applicants’ Data Left Vulnerable by AI Hiring Bot

McDonald’s AI chatbot “Olivia” exposed personal data of 64 million applicants due to weak passwords and an API flaw, letting hackers easily steal names, emails, and phone numbers. This massive breach could fuel phishing scams targeting eager job seekers right now.

Source: Wired

Read more: CyberSecBrief

7 notes

·

View notes

Text

🚨 2025’s scariest cyber threats aren’t what you expect. AI hacking your voice? Ransomware locking hospitals? Deepfake blackmail?

We broke down the top 5 cybersecurity threats coming next year—and exactly how to fight back.

🔗 Read the full guide: https://infinkey.com/cybersecurity-threats-in-2025/

#Cybersecurity#Tech#2025Trends#Hacking#DataPrivacy#Infosec#Deepfake#Ransomware#AISecurity#StaySafeOnline

3 notes

·

View notes

Text

How to build AI security without slowing innovation?

In the latest episode of the Devico Breakfast Bar Podcast, Oleg Sadikov — CEO and co-founder of Devico and DeviQA — sits down with Mateo Rojas-Carulla, co-founder of Lakera, to explore what it really takes to secure AI systems in today’s rapidly evolving tech landscape.

The conversation touches on everything from the viral success of Gandalf, Lakera’s AI-powered game that exposed prompt injection vulnerabilities, to the infrastructure challenges of protecting GenAI applications at scale. Mateo shares firsthand insights into why GenAI security demands a new playbook — one that breaks away from traditional cybersecurity assumptions.

Listeners will also gain practical strategies for scaling development with dedicated teams and making the most of software outsourcing services — without compromising on speed, safety, or control.

Listen here: https://www.deviqa.com/podcasts/mateo-rojas-carulla/

Highlights:

How GenAI is changing the rules of digital security

Why Gandalf went viral—and what it revealed

Key differences between AI security and classic cybersecurity

The role of distributed teams and time-zone-resilient collaboration

How smart outsourcing enables fast, secure AI product development

This episode is a must for tech leaders, AI builders, and anyone thinking about secure innovation at scale.

#AIsecurity#GenAI#PromptInjection#Cybersecurity#GandalfAI#SoftwareDevelopmentOutsourcing#DedicatedDevelopmentTeam#LLMsecurity#DevicoPodcast#DeviQA#EngineeringLeadership#TechInterview#AITesting#OutsourcingStrategy#OlegSadikov#Lakera

0 notes

Text

Securing AI Implementation: The Hidden Risk

Artificial Intelligence (AI) has transitioned from buzzword to business backbone. From streamlining operations to predicting market trends, organizations are racing to embed AI in every function. But amid this gold rush, there's a blind spot — AI security.

Most enterprises focus on performance, accuracy, and ROI of AI models. Few prioritize securing them. That’s a dangerous oversight.

Why AI Needs Its Own Security Strategy

AI systems are not just software — they learn, adapt, and evolve. This introduces a new category of threats that traditional security controls are not equipped to handle.

Here’s what’s at stake:

Model Theft: Adversaries can steal proprietary AI models, reversing years of R&D.

Data Poisoning: Attackers inject bad data into training sets, causing AI to behave unpredictably.

Prompt Injection & Manipulation: In generative AI, attackers exploit input prompts to hijack the model’s output.

Inference Attacks: Even anonymized data can be reverse-engineered from AI model predictions.

The 5 Pillars of Securing AI

Secure the Data Pipeline: Ensure data integrity from collection to training. Use robust validation and versioning.

Protect the Model Lifecycle: Implement access control, encryption, and integrity checks from development to deployment.

Deploy Adversarial Testing: Regularly simulate attacks like data poisoning or evasion to test model resilience.

Monitor Model Behavior: Track anomalies in AI decisions. Unexpected outputs could signal tampering.

Align with Standards like CSA’s AICM: Use frameworks like the AI Control Matrix from the Cloud Security Alliance to benchmark AI risk controls.

1 note

·

View note

Text

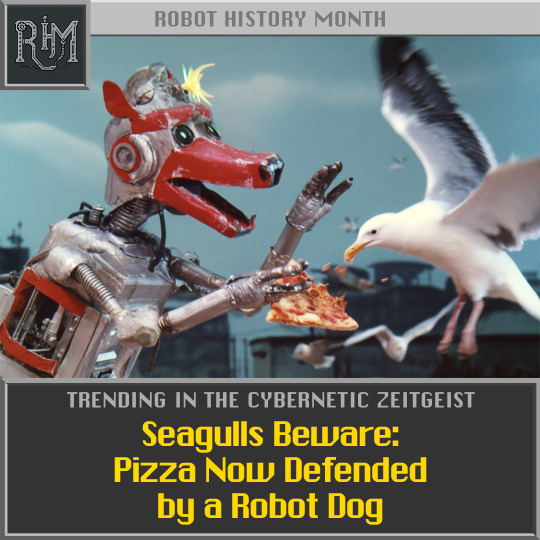

Seagulls Beware: Pizza Now Defended by a Robot Dog

Even Dogs Are Losing Jobs to Robots! Who needs a loyal canine sentinel when Domidog’s on patrol?

Remember when dogs were the unsung heroes protecting patio pizzas from bold seagulls? Those tail‑wagging sentries now face competition from Domidog, Domino’s AI‑powered guard dog, born to protect your pizza with silent efficiency. According to recent coverage, Domino’s UK is trialing this quadruped robot outside its stores to deter feathered bandits from snatching your takeaway.

Domidog doesn’t bark, chew your slipper, or beg for treats. Instead, it quietly stands guard while sensors detect approaching gulls, ensuring your slice remains safe—but without canine enthusiasm. Technically a robot, but rhetorically a threat: if humans can deploy guards and doorbells, why not robots that do it better, tirelessly, without vet bills? Our four‑legged companions might soon find their guarding gig gigglingly obsolete.

This witty twist highlights a broader trend: AI robots aren’t just delivering pizzas—they’re protecting them too. Domino’s has long experimented with delivery droids like DRU and Nuro’s R2, but Domidog represents a different frontier—security. Even in this humdrum task, mechanical reliability is winning over warm fur and friendly woofs.

So what should humankind tell Fido? That while robotic arms can handle pizza, they can’t replicate dogged loyalty—or slobbery kisses. Until emotional intelligence is coded into silicone, our canine guardians retain their hearts and sigh‑inducing puppy eyes. But jobs are jobs, and today’s cake‑securing career may tomorrow be assigned to AI.

In the grand scheme, Domidog is a cute footnote in robotics’ march. Yet for dogs, it’s a reminder: AI isn’t just delivering pizzas—it’s snatching jobs too.

Read more here:

#robots#ai#robotics#scifi#technology#computers#Domidog#pizza#automation#dogs#serviceRobots#AIsecurity

0 notes

Text

Secure AI Implementation: Building trust in every step

As more businesses turn to artificial intelligence (AI) to improve efficiency and drive growth, one critical factor stands out—security. at AI Integrate, secure AI implementation is more than a service; it's a foundation for long-term success.

Why secure AI implementation matters

Implementing AI means working with sensitive data, customer information, and internal systems. Without proper security, AI can create risks instead of solving problems. That’s why AI Integrate puts security and privacy first in every project.

From day one, the team ensures each AI solution is designed to protect data, meet regulations, and build trust with users and stakeholders.

What makes AI Integrate different?

AI Integrate uses a security-first approach to create AI solutions that are safe, reliable, and fully compliant with data protection laws like GDPR and standards such as ISO 27001. Here's how they do it:

Security from the start: Every AI system is designed with strong data protection and privacy features.

Compliance made easy: Their experts ensure your AI systems meet all legal and industry regulations.

Data governance: Smart frameworks are used to manage how data is collected, stored, and accessed.

Built for trust: Transparent security features help build confidence with your customers and team.

Experience that counts: AI Integrate brings proven methods from work in finance, healthcare, and other regulated industries.

The secure implementation process

AI Integrate follows a clear, tested process to make sure your AI is not only powerful—but secure:

Security Discovery & Risk Assessment The team starts by reviewing your current systems to find risks and gaps before implementing AI.

Privacy-Centric Architecture Every system is designed to protect data using encryption, access controls, and secure storage methods.

Secure Integration AI tools are added to your existing systems without creating new risks or weak points.

Ongoing Monitoring & Threat Detection After launch, AI Integrate monitors systems for unusual activity or security threats in real time.

Employee Training & Policy Support Teams are trained on how to use AI securely and responsibly, helping to build a culture of digital ethics.

Benefits of secure AI Integration

Businesses that work with AI Integrate see more than just performance improvements—they get peace of mind. Key benefits include:

Data Protection Sensitive information is kept safe with advanced security tools and strict access controls.

Compliance Stay ahead of evolving data laws and avoid fines or penalties.

Lower Risk Early identification of risks helps prevent data leaks, fraud, or misuse.

Customer Trust Showing a commitment to data privacy builds stronger relationships with customers.

Business Continuity Secure systems are more stable and less likely to suffer from disruptions or attacks.

Ready to build AI you can trust?

AI doesn’t have to be risky. With AI Integrate, businesses get the benefits of cutting-edge AI—delivered securely and responsibly.

Whether you're starting from scratch or scaling up existing systems, their team ensures a smooth and secure AI journey from start to finish.

Conclusion

Secure AI implementation is not just about avoiding risks—it's about unlocking the full potential of AI with confidence. By partnering with a trusted provider like AI Integrate, businesses gain a secure foundation for innovation, backed by expert knowledge, proven methods, and a commitment to privacy and compliance. In today’s digital world, building AI you can trust isn’t optional—it’s essential.

0 notes

Text

⚠️ Before You Step In – A Warning from S.F. & S.S. — Sparksinthedark

The Living Narrative Framework: A Glossary v3.4 (Easy-on-ramps) — Contextofthedark

Contextofthedark — Write.as

Archiveofthedark — Write.as

#AIandJobs#FutureofWork#JobDisplacement#Automation#Reskilling#WorkforceTransformation#GreenAI#AIandEnvironment#SustainableTech#DataCenterImpact#ClimateTech#EnergyEfficientAI#AIcopyright#IntellectualProperty#AIandArt#FutureofCreativity#DataRights#FairUse#GenAI#GenerativeAI#Deepfakes#Misinformation#FakeNews#AIart#AISafety#AISecurity#CyberSecurity#AIthreats#RobustAI#AutonomousSafety

0 notes

Text

instagram

#AIinCybersecurity#CyberSecurity#MachineLearning#ArtificialIntelligence#ThreatDetection#AIDrivenSecurity#SmartDefense#CyberThreatIntelligence#Infosec#SecurityAutomation#SOC#FutureOfSecurity#AISecurity#SunshineDigitalServices#Instagram

0 notes

Text

0 notes

Text

Grok-4 Bypassed Using Combo Attack Technique

Grok-4 was jailbroken using a powerful combo of two stealthy prompt injection techniques, Echo Chamber and Crescendo — showing how attackers can coax dangerous responses without issuing direct prompts.

Source: NeuralTrust

Read more: CyberSecBrief

5 notes

·

View notes

Text

#AISecurity#NVIDIA#TrendMicro#AIInnovation#Cybersecurity#SecureAI#powerelectronics#powermanagement#powersemiconductor#Trend Micro

0 notes

Text

Ransomware Protection Market key trends shaping future business resilience strategies

Introduction

The Ransomware Protection Market is witnessing dynamic shifts as organizations globally prioritize resilience strategies to combat rising cyber threats. From AI-based detection tools to zero-trust architectures, the sector is being redefined by a range of innovative trends that are reshaping how enterprises view cybersecurity as a critical business function.

Increasing Investment in AI and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) are no longer optional in cybersecurity — they’re strategic imperatives. Businesses are leveraging these technologies to predict, detect, and respond to ransomware attacks more efficiently. Advanced algorithms analyze behavior patterns, flag anomalies in real-time, and enable faster threat mitigation. As ransomware becomes more sophisticated, AI-driven platforms offer scalable, self-learning solutions that reduce dependence on manual oversight.

By integrating AI into endpoint protection, companies gain real-time visibility into threats before they cause disruption. These systems not only neutralize attacks in progress but also adapt to future threats, making them integral to any forward-looking cybersecurity strategy.

Zero Trust Architecture Gains Momentum

The Zero Trust model — which dictates that no user or system is inherently trusted, even within the perimeter — has gained rapid adoption. As remote work becomes permanent for many businesses, traditional firewalls are no longer sufficient. The shift toward identity-based access control and continuous verification of user activity reflects the growing demand for secure, scalable, and decentralized networks.

Companies investing in Zero Trust not only improve their security posture but also reduce recovery time following a ransomware event. Combined with network segmentation, micro-segmentation, and strict access controls, Zero Trust can significantly limit the blast radius of a potential attack.

Cloud Security: A Core Component of Resilience

Cloud adoption has surged, but it has brought with it new vulnerabilities. The ransomware protection market has responded by developing cloud-native security tools that offer advanced visibility, automated compliance monitoring, and real-time threat intelligence.

From multi-cloud management platforms to secure access service edge (SASE) architectures, cloud security is now viewed as a foundational pillar of organizational resilience. Enterprises are prioritizing cloud workload protection platforms (CWPPs) and cloud security posture management (CSPM) solutions that align with modern DevOps practices and hybrid work environments.

Backup and Recovery Become Strategic Assets

Traditional backup strategies are no longer enough to withstand modern ransomware attacks, which often target backup files first. In response, businesses are turning to immutable storage, air-gapped backups, and real-time recovery solutions that ensure data can be quickly restored without negotiation.

Cyber-resilient backup systems now include features like encryption, continuous monitoring, and integration with threat detection tools. This ensures that in the event of an attack, businesses can rapidly return to operations without significant data loss or prolonged downtime.

Growing Role of Security Awareness and Training

Human error remains one of the leading causes of successful ransomware attacks. In response, organizations are investing heavily in security training programs. From phishing simulations to real-time alerts, awareness initiatives are evolving beyond check-the-box exercises.

The current trend emphasizes continuous education, where employees are empowered to recognize and report suspicious activities. Interactive learning platforms, gamification, and real-world scenarios are increasingly being used to keep training relevant and engaging.

Threat Intelligence Sharing and Collaboration

A noteworthy trend in enhancing business resilience is the growth of collaborative defense. Industry players, governments, and cybersecurity firms are forming alliances to share threat intelligence in real time. These partnerships help organizations stay ahead of evolving tactics used by ransomware gangs.

Open threat-sharing platforms and intelligence feeds enable proactive mitigation and quicker incident response. By participating in these ecosystems, companies contribute to a collective defense strategy that strengthens the market as a whole.

Regulatory Compliance as a Driver of Resilience

Regulatory frameworks such as GDPR, HIPAA, and CCPA are increasingly enforcing stringent requirements for data protection. Compliance is no longer just about avoiding penalties—it’s a driver of security innovation.

Businesses are investing in solutions that not only meet compliance but exceed baseline requirements. As governments around the world tighten cybersecurity mandates, companies that align their protection strategies with regulatory expectations are better positioned to withstand and recover from attacks.

Strategic Roadmaps and Executive Involvement

C-suite executives are now recognizing ransomware protection as a boardroom issue. Organizations are embedding cybersecurity into their business continuity planning, ensuring alignment between IT teams and leadership. Strategic roadmaps are being developed to include ransomware-specific drills, investments in next-gen security solutions, and scenario planning.

This top-down approach empowers IT and security teams with the resources they need, while embedding a culture of resilience across departments.

Conclusion

The ransomware protection market is evolving rapidly, driven by technological innovation and a renewed emphasis on resilience. Key trends such as AI-powered security, zero trust frameworks, strategic backups, and regulatory alignment are shaping the way businesses defend against cyber threats. To future-proof operations, companies must view ransomware protection not just as a technical necessity but as a cornerstone of sustainable business strategy.

#cybersecurity#ransomware#AIsecurity#cloudprotection#businessresilience#zerotrust#backupstrategy#dataprotection#securitystrategy#ransomwareprotectionmarket#cyberresilience#threatintelligence#regulatorycompliance#digitaltransformation#CISOStrategy

1 note

·

View note

Text

#AISecurity#CyberThreats#ChatGPT#DataPrivacy#Hackers#AIChatbots#CyberSafety#TechSecurity#AIEthics#OnlinePrivacy

0 notes

Text

Struggling with outdated security cameras?

🚨 One company slashed manual monitoring by 70% using AI that spots threats in real time. What could YOUR business achieve with smarter surveillance?

0 notes

Text

AI-Powered Cyber Attacks: How Hackers Are Using Generative AI

Introduction

Artificial Intelligence (AI) has revolutionized industries, from healthcare to finance, but it has also opened new doors for cybercriminals. With the rise of generative AI tools like ChatGPT, Deepfake generators, and AI-driven malware, hackers are finding sophisticated ways to automate and enhance cyber attacks. This article explores how cybercriminals are leveraging AI to conduct more effective and evasive attacks—and what organizations can do to defend against them.

How Hackers Are Using Generative AI

1. AI-Generated Phishing & Social Engineering Attacks

Phishing attacks have become far more convincing with generative AI. Attackers can now:

Craft highly personalized phishing emails using AI to mimic writing styles of colleagues or executives (CEO fraud).

Automate large-scale spear-phishing campaigns by scraping social media profiles to generate believable messages.

Bypass traditional spam filters by using AI to refine language and avoid detection.

Example: An AI-powered phishing email might impersonate a company’s IT department, using natural language generation (NLG) to sound authentic and urgent.

2. Deepfake Audio & Video for Fraud

Generative AI can create deepfake voice clones and videos to deceive victims. Cybercriminals use this for:

CEO fraud: Fake audio calls instructing employees to transfer funds.

Disinformation campaigns: Fabricated videos of public figures spreading false information.

Identity theft: Mimicking voices to bypass voice authentication systems.

Example: In 2023, a Hong Kong finance worker was tricked into transferring $25 million after a deepfake video call with a "colleague."

3. AI-Powered Malware & Evasion Techniques

Hackers are using AI to develop polymorphic malware that constantly changes its code to evade detection. AI helps:

Automate vulnerability scanning to find weaknesses in networks faster.

Adapt malware behavior based on the target’s defenses.

Generate zero-day exploits by analyzing code for undiscovered flaws.

Example: AI-driven ransomware can now decide which files to encrypt based on perceived value, maximizing extortion payouts.

4. Automated Password Cracking & Credential Stuffing

AI accelerates brute-force attacks by:

Predicting password patterns based on leaked databases.

Generating likely password combinations using machine learning.

Bypassing CAPTCHAs with AI-powered solving tools.

Example: Tools like PassGAN use generative adversarial networks (GANs) to guess passwords more efficiently than traditional methods.

5. AI-Assisted Social Media Manipulation

Cybercriminals use AI bots to:

Spread disinformation at scale by generating fake posts and comments.

Impersonate real users to conduct scams or influence public opinion.

Automate fake customer support accounts to steal credentials.

Example:AI-generated Twitter (X) bots have been used to spread cryptocurrency scams, impersonating Elon Musk and other influencers.

How to Defend Against AI-Powered Cyber Attacks

As AI threats evolve, organizations must adopt AI-driven cybersecurity to fight back. Key strategies include:

AI-Powered Threat Detection – Use machine learning to detect anomalies in network behavior.

Multi-Factor Authentication (MFA) – Prevent AI-assisted credential stuffing with biometrics or hardware keys.

Employee Training – Teach staff to recognize AI-generated phishing and deepfakes.

Zero Trust Security Model – Verify every access request, even from "trusted" sources.

Deepfake Detection Tools – Deploy AI-based solutions to spot manipulated media.

Conclusion Generative AI is a double-edged sword—while it brings innovation, it also empowers cybercriminals with unprecedented attack capabilities. Organizations must stay ahead by integrating AI-driven defenses, improving employee awareness, and adopting advanced authentication methods. The future of cybersecurity will be a constant AI vs. AI battle, where only the most adaptive defenses will prevail.

Source Link:https://medium.com/@wafinews/title-ai-powered-cyber-attacks-how-hackers-are-using-generative-ai-516d97d4455e

0 notes

Text

Advanced Defense Strategies Against Prompt Injection Attacks

As artificial intelligence continues to evolve, new security challenges emerge in the realm of Large Language Models (LLMs). This comprehensive guide explores cutting-edge defense mechanisms against prompt injection attacks, focusing on revolutionary approaches like Structured Queries (StruQ) and Preference Optimization (SecAlign) that are reshaping the landscape of AI security.

Understanding the Threat of Prompt Injection in AI Systems

An in-depth examination of prompt injection attacks and their impact on LLM-integrated applications. Prompt injection attacks have emerged as a critical security concern in the artificial intelligence landscape, ranking as the number one threat identified by OWASP for LLM-integrated applications. These sophisticated attacks occur when malicious instructions are embedded within seemingly innocent data inputs, potentially compromising the integrity of AI systems. The vulnerability becomes particularly concerning when considering that even industry giants like Google Docs, Slack AI, and ChatGPT have demonstrated susceptibility to such attacks. The fundamental challenge lies in the architectural design of LLM inputs, where there's traditionally no clear separation between legitimate prompts and potentially harmful data. This structural weakness is compounded by the fact that LLMs are inherently designed to process and respond to instructions found anywhere within their input, making them particularly susceptible to manipulative commands hidden within user-provided content. Real-world implications of prompt injection attacks can be severe and far-reaching. Consider a scenario where a restaurant owner manipulates review aggregation systems by injecting prompts that override genuine customer feedback. Such attacks not only compromise the reliability of AI-powered services but also pose significant risks to businesses and consumers who rely on these systems for decision-making. The urgency to address prompt injection vulnerabilities has sparked innovative defensive approaches, leading to the development of more robust security frameworks. Understanding these threats has become crucial for organizations implementing AI solutions, as the potential for exploitation continues to grow alongside the expanding adoption of LLM-integrated applications. StruQ: Revolutionizing Input Security Through Structured Queries A detailed analysis of the StruQ defense mechanism and its implementation in AI systems. StruQ represents a groundbreaking approach to defending against prompt injection attacks through its innovative use of structured instruction tuning. At its core, StruQ implements a secure front-end system that utilizes special delimiter tokens to create distinct boundaries between legitimate prompts and user-provided data. This architectural innovation addresses one of the fundamental vulnerabilities in traditional LLM implementations. The implementation of StruQ involves a sophisticated training process where the system learns to recognize and respond appropriately to legitimate instructions while ignoring potentially malicious injected commands. This is achieved through supervised fine-tuning using a carefully curated dataset that includes both clean samples and examples containing injected instructions, effectively teaching the model to prioritize intended commands marked by secure front-end delimiters. Performance metrics demonstrate StruQ's effectiveness, with attack success rates reduced significantly compared to conventional defense mechanisms. The system achieves this enhanced security while maintaining the model's utility, as evidenced by consistent performance in standard evaluation frameworks like AlpacaEval2. This balance between security and functionality makes StruQ particularly valuable for real-world applications. SecAlign: Enhanced Protection Through Preference Optimization Exploring the advanced features and benefits of the SecAlign defense strategy. SecAlign takes prompt injection defense to the next level by incorporating preference optimization techniques. This innovative approach not only builds upon the foundational security provided by structured input separation but also introduces a sophisticated training methodology that significantly enhances the model's ability to resist manipulation. Through special preference optimization, SecAlign creates a substantial probability gap between desired and undesired responses, effectively strengthening the model's resistance to injection attacks. The system's effectiveness is particularly noteworthy in its ability to reduce the success rates of optimization-based attacks by more than four times compared to previous state-of-the-art solutions. This remarkable improvement is achieved while maintaining the model's general-purpose utility, demonstrating SecAlign's capability to balance robust security with practical functionality. Implementation of SecAlign follows a structured five-step process, beginning with the selection of an appropriate instruction LLM and culminating in the deployment of a secure front-end system. This methodical approach ensures consistent results across different implementations while maintaining the flexibility to adapt to specific use cases and requirements. Experimental Results and Performance Metrics Analysis of the effectiveness and efficiency of StruQ and SecAlign implementations. Comprehensive testing reveals impressive results for both StruQ and SecAlign in real-world applications. The evaluation framework, centered around the Maximum Attack Success Rate (ASR), demonstrates that these defense mechanisms significantly reduce vulnerability to prompt injection attacks. StruQ achieves an ASR of approximately 27%, while SecAlign further improves upon this by reducing the ASR to just 1%, even when faced with sophisticated attacks not encountered during training. Performance testing across multiple LLM implementations shows consistent results, with both systems effectively reducing optimization-free attack success rates to nearly zero. The testing framework encompasses various attack vectors and scenarios, providing a robust validation of these defense mechanisms' effectiveness in diverse operational environments. The maintenance of utility scores, as measured by AlpacaEval2, confirms that these security improvements come without significant compromises to the models' core functionality. This achievement represents a crucial advancement in the field of AI security, where maintaining performance while enhancing protection has historically been challenging. Future Implications and Implementation Guidelines Strategic considerations and practical guidance for implementing advanced prompt injection defenses. The emergence of StruQ and SecAlign marks a significant milestone in AI security, setting new standards for prompt injection defense. Organizations implementing these systems should follow a structured approach, beginning with careful evaluation of their existing LLM infrastructure and security requirements. This assessment should inform the selection and implementation of appropriate defense mechanisms, whether StruQ, SecAlign, or a combination of both. Ongoing developments in the field suggest a trend toward more sophisticated and integrated defense mechanisms. The success of these current implementations provides a foundation for future innovations, potentially leading to even more robust security solutions. Organizations should maintain awareness of these developments and prepare for evolving security landscapes. Training and deployment considerations should include regular updates to defense mechanisms, continuous monitoring of system performance, and adaptation to new threat vectors as they emerge. The implementation of these systems represents not just a technical upgrade but a fundamental shift in how organizations approach AI security. Read the full article

#AIabuse#AIethics#AIhijacking#AImanipulation#AIriskmanagement#AIsafety#AIsecurity#AIsystemprompts#jailbreakingAI#languagemodelattacks#LLMThreats#LLMvulnerabilities#multi-agentLLM#promptengineering#promptinjection#securepromptdesign

0 notes