#AI compliance certification


Text

AI Compliance Certification | Ensure Regulatory Adherence

Achieve AI Compliance Certification to demonstrate your commitment to ethical AI practices and regulatory standards. Our certification process ensures your AI systems meet industry guidelines for transparency and fairness. Start your certification journey today!

0 notes

Text

Hubert Palan, Founder and CEO of Productboard – Interview Series

New Post has been published on https://thedigitalinsider.com/hubert-palan-founder-and-ceo-of-productboard-interview-series/

Hubert Palan is the Founder and CEO of Productboard, a product management platform designed to help teams bring products to market more efficiently by prioritizing user needs and aligning teams around a shared roadmap. More than 5,400 companies, including Zoom, UiPath, JCDecaux, and Microsoft, use the platform to gather customer insights and inform development decisions.

What inspired you to start Productboard, and how did your experience at GoodData influence its creation?

Productboard was inspired by my work as a product manager and product leader. The idea came during a flight when I was trying to manage product roadmaps across various presentations and spec sheets while on less-than-optimal airplane Wi-Fi — making it obvious how inefficient it is to try and coordinate strategic product decisions without a single, consistently-updated source of truth.

When creating Productboard, our goal was to build one platform that covers the entire product management process, solving even more PM pain points along the way. Productboard has now grown into a platform that puts customer centricity at the center of modern product management, using AI technology to champion the voice of the customer, elevating the role of the PM.

And it’s worked. Today Productboard has 6000+ customers including amazing companies like Salesforce, Autodesk, and Zoom.

What challenges did you face during the early days of Productboard, and how did you overcome them?

In the early days of Productboard, I learned about the challenges of onboarding new staff at a startup. Productboard was moving very quickly and I had a tendency to expect new hires to catch onto what we were doing almost immediately and make clear, strategic decisions right away. Since then, I’ve learned that there’s more value in taking your time with onboarding. By focusing on effective knowledge sharing and training, you can foster teams that are just as informed and passionate about the work as you are. The ROI on a thorough onboarding period is substantial.

What is the “Product Excellence Framework,” and how did you develop it as a foundational concept for Productboard?

The Product Excellence Framework is our structured approach to product management, emphasizing customer-centricity, placing big bets, and building amazing teams. It’s our product philosophy at Productboard, ensuring that we’re always focused on delivering value to our customers. We developed this framework after recognizing common challenges faced by product teams, such as the need to consolidate feedback, prioritize effectively, and align everyone around a clear roadmap. It’s a core part of our mission to help product managers navigate the complexities of building modern products, especially in the age of AI.

Productboard uses AI to analyze customer feedback at scale. What motivated you to incorporate AI into your platform, and how has it transformed product management?

Something that became clear to our team early on was a pressing need to help product teams make sense of the massive amounts of customer feedback they receive. AI was the natural solution to analyze this data at scale, identify key insights, and help prioritize what to build next. This has transformed product management by allowing teams to make quicker, more informed decisions with greater confidence. It has also elevated the PM to a position of leadership in which they can give more strategic feedback that aligns with a company’s business goals. Overall, AI in PM is about staying ahead of the curve and ensuring our customers can build products that keep up with emerging trends and truly resonate with their users.

Can you share specific examples of how AI has improved decision-making for Productboard’s customers?

Imagine a product manager wants to understand the general sentiment on a new feature. Instead of sifting through mountains of data across Salesforce, social media, the Apple Store, and who knows what else, they can just use Productboard. Productboard Pulse’s AI-powered conversational interface instantly answers questions like, “What are customers saying about the last update?” or “Is pricing a major concern for users in this sector?” This access allows PMs to quickly identify pain points and opportunities, leading to more effective product development.

Beyond Pulse, we also have an AI-powered analytics dashboard that automatically surfaces key themes and trends from customer feedback. This helps product managers prioritize features with the biggest potential impact, making it a game-changer for data-driven decision-making. We’re seeing customers use these insights to accelerate time-to-market, improve product satisfaction, and ultimately, build better products that people love.

How do you address concerns around data security and privacy when using AI to process customer insights?

We take privacy very seriously. At Productboard we use a multi-layered approach to data security and privacy, incorporating industry-standard practices and robust measures to protect sensitive information. Our commitment to security is evident in our SOC 2 Type II compliance certification and our adherence to strict data protection regulations like GDPR and CCPA.

We employ data encryption, and our platform is built on secure infrastructure. We also implement application-level security measures, including user authentication, authorization, and access controls, to prevent unauthorized access to customer data. Our customers have granular control over their data, allowing them to determine what information is collected and how it is used. For AI specifically, we employ anonymous data to analyze customer feedback, protecting individual identities while extracting valuable insights.

What trends do you foresee in the use of AI for product management, and how is Productboard preparing to lead in this space?

AI will be essential for product managers to navigate the complexities of the modern product landscape. Using AI in PM is not just about automating tasks; it’s about empowering PMs to be more strategic and customer-centric, thus elevating the impact they can have within their respective companies.

At Productboard, we’re committed to leading the way in AI-powered product management. We’re continuously enhancing our AI capabilities and delivering innovative solutions that empower our customers. Last year, we announced several iterations of our AI applications and there’s more where that came from in 2025.

Our goal is to make AI accessible and beneficial for all product teams, regardless of their size or technical expertise. We believe that by embracing AI, PMs can unlock new levels of productivity, creativity, and customer-centricity and become more integral within their organizations.

How has your leadership style evolved as Productboard has grown from a small team to an international organization with over 6,000 customers?

Early on, I adopted a very hands-on leadership style — wearing multiple hats, and figuring things out as we went along. As we expanded, I had to learn to delegate more effectively, trust my team, and empower them to take ownership.

However, even with the importance of letting go, I also learned the importance of staying connected and maintaining a founder’s mindset. I am constantly questioning, analyzing, and seeking ways to improve. Effective leadership is about balancing strategic vision with a deep understanding of the day-to-day realities of our customers and our team.

What advice would you give to aspiring product managers who want to leverage AI in their roles?

I would encourage aspiring product managers to learn how to leverage AI effectively by following these three best practices:

Develop Customer-Centricity: Always connect AI-driven insights back to the human needs and pain points they represent.

Seek Mentorship and Collaboration: Connect with experienced product managers who are already leveraging AI in their roles. Learn from their successes and challenges, and ask for guidance on how to navigate the evolving landscape.

Embrace Continuous Learning: AI is a dynamic field, so stay curious and keep learning. Explore online courses, attend workshops, and read about the industry to deepen your understanding of AI concepts and applications in product management.

What is your long-term vision for Productboard, particularly in leveraging AI to enhance customer-centric product development?

My goal for Productboard now is the same as it was on day one: to solve PM pain points and empower PMs to make the best products possible.

We’re committed to consistently evaluating and updating our AI offerings to ensure we’re helping PMs be more collaborative, effective, creative and strategic. As their pain points change, so will our technology. In fact, we use Productboard’s technology ourselves to make sure we’re being truly customer-centric and delivering the updates and features that our customers really need. So it’s hard to say today what we’ll do in the future, because at Productboard, it’s all about what our customers need right now. But I can promise that we’ll continue to evolve and leverage AI to provide for our customers and empower PMs to be exceptional members of their organization.

Thank you for the great interview. Readers who wish to learn more should visit Productboard.

#000#2025#Advice#ai#AI technology#AI-powered#amazing#Analytics#apple#applications#approach#authentication#Best Products#Building#Business#business goals#ccpa#CEO#certification#change#Collaboration#collaborative#Companies#compliance#continuous#courses#creativity#customer data#dashboard#data

0 notes

Text

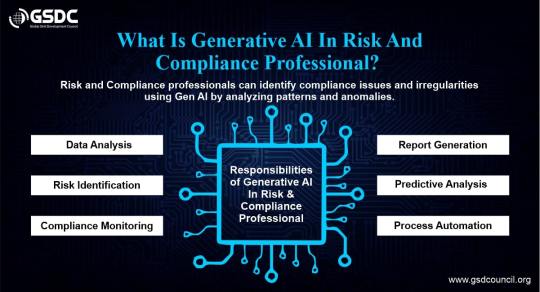

What Is Generative AI for Risk and Compliance Professionals?

Generative AI in risk and compliance is the use of advanced AI models to help professionals identify, evaluate, and manage risks while ensuring that businesses follow the applicable rules.

These models can analyze large volumes of data, identify trends, and produce reports or recommendations. If you are pursuing a Risk and Compliance certification, generative AI is worth studying.

#Generative AI In Risk And Compliance Professional#Generative AI in Risk and Compliance certification

0 notes

Text

#generative ai in risk & compliance certification#certified generative ai in risk & compliance#generative ai in risk & compliance certificate#generative ai in risk & compliance#generative ai certification#generative ai#generative ai professional certification#open ai certification#open ai#creative ai

0 notes

Text

Our AI-powered service analyzes .soul files at the click of a button to make hiring workflows easier for your enterprise and unlock the full potential of possible candidates.

⸻⸻⸻⸻

The numbers at a glance:

40M+ souls analyzed

85% increase in hiring speed

70% decrease in costs

40% increase in DEI hiring goals

⸻⸻⸻⸻

Our soul-ution (haha, get it?) empowers candidates and companies alike with deep insights into the marks of future success on a soul, with more than 1000 soul parameters deciphered in a single minute, connecting your business with people that will truly make it thrive.

⸻⸻⸻⸻

Security

In today's world with soul-stealing and other sensitive data breaches on the rise, your liability is important to us. That's why our process is secure from beginning-to-end, with an ISO/IEC 27001:2022 certification and compliance with all Federal standards surrounding soul-specific cybersecurity.

⸻⸻⸻⸻

Soul analysis is the future of hiring. Our professionals are with you every step of the way, from implementation to long-term successes.

Curious how much you could save? Schedule a demo today.

19 notes


Text

The Future of Accounting: Emerging Trends in CA, CS, US CMA, US CPA, UK ACCA, and US CFA

Introduction: The Evolving Landscape of Accounting

The accounting field is undergoing rapid changes due to technological advancements, globalization, and evolving business needs. Professionals in roles like CA (Chartered Accountant), CS (Company Secretary), US CMA (Certified Management Accountant), US CPA (Certified Public Accountant), UK ACCA (Association of Chartered Certified Accountants), and US CFA (Chartered Financial Analyst) are at the forefront of these changes.

Technological Advancements in Accounting

Automation and AI Integration

Automation and artificial intelligence (AI) are transforming routine accounting tasks. Processes such as bookkeeping, payroll, and data analysis are becoming more efficient, reducing errors and saving time. For instance, AI-powered tools can analyze large datasets, offering insights that were previously difficult to obtain manually.

Blockchain and Its Impact on Transparency

Blockchain technology is revolutionizing accounting by providing a secure and transparent ledger system. It ensures data integrity and reduces the chances of fraud, making it particularly useful for auditing and financial reporting.

Cloud-Based Accounting Solutions

Thanks to cloud technology, accounting professionals can access financial data from any location at any time. Tools like QuickBooks and Xero provide real-time collaboration, enabling seamless interactions between clients and professionals.

The Role of Globalization in Shaping Accounting Careers

Demand for International Qualifications

With businesses expanding globally, certifications like US CPA, UK ACCA, and US CMA are gaining prominence. These qualifications offer a global perspective, making professionals more competitive in international markets.

Cross-Border Financial Regulations

Accountants are now required to understand complex international tax laws and compliance standards. This has increased the demand for experts in regulatory frameworks such as IFRS (International Financial Reporting Standards) and GAAP (Generally Accepted Accounting Principles).

Soft Skills: The New Essential for Accounting Professionals

Communication and Leadership

Modern accountants are expected to go beyond crunching numbers. Strong communication skills and leadership abilities are essential for conveying financial insights and guiding decision-making processes.

Adaptability and Lifelong Learning

With constant changes in technology and regulations, professionals must adapt and continuously update their knowledge. Certifications like US CMA and US CFA emphasize ongoing education to stay relevant.

Sustainability and ESG Reporting

Focus on Environmental, Social, and Governance (ESG) Metrics

Organizations are increasingly prioritizing sustainability. Accountants play a crucial role in ESG reporting, helping companies track and disclose their environmental and social impact.

Green Accounting Practices

Green accounting involves assessing and reporting environmental costs. This emerging field aligns financial practices with sustainability goals, reflecting a company’s commitment to responsible operations.

The Future of Accounting Certifications

Digital Skills Integration

Certifications like CA, US CPA, and UK ACCA are incorporating digital skills into their syllabi. Topics such as data analytics and cybersecurity are becoming essential components of these programs.

Specialized Roles and Niches

The future holds promising opportunities for accountants in specialized roles. Fields like forensic accounting, financial planning, and risk management are seeing significant growth.

Conclusion: Embracing Change in Accounting

The future of accounting is bright and full of opportunities for professionals willing to adapt. By staying updated on technological advancements, regulatory changes, and global trends, accountants can thrive in this dynamic field. Whether you’re pursuing CA, CS, US CMA, US CPA, UK ACCA, or US CFA, embracing these trends will set you apart in the ever-evolving accounting landscape.

2 notes


Text

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).
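As a small illustration of the unit testing item above, here is a minimal sketch using Python's built-in `unittest` module. The `clamp` function is a hypothetical example written only for this illustration; it does not come from any tool named in the list.

```python
import unittest


def clamp(value, low, high):
    """Hypothetical unit under test: limit value to the range [low, high]."""
    if low > high:
        raise ValueError("low must not exceed high")
    return max(low, min(value, high))


class ClampTests(unittest.TestCase):
    """Each test method checks one behavior of the unit in isolation."""

    def test_value_inside_range_is_unchanged(self):
        self.assertEqual(clamp(5, 0, 10), 5)

    def test_value_above_range_is_capped(self):
        self.assertEqual(clamp(15, 0, 10), 10)

    def test_value_below_range_is_raised(self):
        self.assertEqual(clamp(-3, 0, 10), 0)

    def test_invalid_bounds_are_rejected(self):
        with self.assertRaises(ValueError):
            clamp(1, 10, 0)
```

Saved in a file, these tests can be run with `python -m unittest`. Rerunning the same suite after every change is also the simplest form of the regression testing described above.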

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems
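The latency, jitter, and packet loss metrics listed above can be sketched in a few lines of Python. This is a minimal illustration, not a production monitoring tool. The `Packet` record and function names are hypothetical, the latency figure assumes sender and receiver clocks are synchronized, and the jitter estimate follows the running-average formula from RFC 3550 (the RTP specification), applied here to millisecond timestamps instead of RTP timestamp units.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    """Hypothetical capture record for one received voice packet."""
    seq: int        # sequence number assigned by the sender
    sent_ms: float  # send time in milliseconds (sender clock)
    recv_ms: float  # arrival time in milliseconds (receiver clock)


def packet_loss_pct(received, expected_count):
    """Percentage of expected packets that never arrived."""
    if expected_count <= 0:
        raise ValueError("expected_count must be positive")
    unique = len({p.seq for p in received})  # ignore duplicates
    return 100.0 * (expected_count - unique) / expected_count


def mean_latency_ms(received):
    """Average one-way delay; only meaningful if the clocks are synchronized."""
    return sum(p.recv_ms - p.sent_ms for p in received) / len(received)


def interarrival_jitter_ms(received):
    """Smoothed interarrival jitter in the style of RFC 3550."""
    jitter = 0.0
    ordered = sorted(received, key=lambda p: p.recv_ms)  # arrival order
    for prev, cur in zip(ordered, ordered[1:]):
        # Difference between receive spacing and send spacing for the pair.
        d = (cur.recv_ms - prev.recv_ms) - (cur.sent_ms - prev.sent_ms)
        # Each new sample moves the estimate by 1/16 of the gap.
        jitter += (abs(d) - jitter) / 16.0
    return jitter
```

Jitter depends only on differences between timestamps, so a constant clock offset between sender and receiver cancels out; latency does not have that property, which is why real tests often measure round-trip time instead.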

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration

2 notes


Text

This is what Meghan Markle must do to lawfully sell her jam by u/luvgabe

For Meghan Markle to actually sell her jam, she needs to do much, much more than getting trademark approval for her ARO brand. The following is the AI generated answer to my query on the Brave browser:

To sell food commercially in California, you’ll need to comply with the following requirements:

Cottage Food Permit: If you’re operating a small-scale, home-based food business, you’ll need a Cottage Food Permit from the California Department of Public Health (CDPH). This permit allows you to produce and sell non-potentially hazardous foods, such as baked goods, jams, jellies, and candies, directly to consumers.

Food Handler Card: You’ll need to complete a California-accredited Food Handler Certificate Program and obtain a food handler card within three months of obtaining your Cottage Food Permit.

Labeling and Packaging: Ensure your products are properly labeled with the required information, including:

• Product name and description

• Ingredients

• Net weight or volume

• Nutrition facts (if applicable)

• “Made in a home kitchen” or “Cottage Food Production Operation” statement

Permitting: Depending on your sales strategy, you may need additional permits:

• Class A Permit: For direct-to-consumer sales only, with annual gross revenue limits of $75,000.

• Class B Permit: For sales to retailers, restaurants, or other businesses, with annual gross revenue limits of $150,000.

Commercial Kitchen Rental or Co-Packing: If you plan to produce foods that require refrigeration, cooking, or other specialized equipment, you may need to rent a commercial kitchen or find a co-packer to manufacture your products.

Business Registration: Register your business with the California Secretary of State and obtain any necessary local business licenses.

Insurance: Consider obtaining liability insurance to protect your business in case of foodborne illness or other issues.

Record Keeping: Maintain accurate records of production, sales, and inventory, as well as documentation of your food handling and preparation processes.

Additional Considerations:

• Check with your local health department for specific requirements, as some counties may have additional or modified regulations.

• Ensure your products comply with California’s food safety regulations and labeling requirements.

• Consider consulting with a food safety expert or attorney to ensure compliance with all applicable laws and regulations.

post link: https://ift.tt/vx3mFNE

author: luvgabe

submitted: December 18, 2024 at 11:38AM via SaintMeghanMarkle on Reddit

disclaimer: all views + opinions expressed by the author of this post, as well as any comments and reblogs, are solely the author's own; they do not necessarily reflect the views of the administrator of this Tumblr blog. For entertainment only.

#SaintMeghanMarkle#harry and meghan#meghan markle#prince harry#fucking grifters#grifters gonna grift#Worldwide Privacy Tour#Instagram loving bitch wife#duchess of delinquency#walmart wallis#markled#archewell#archewell foundation#megxit#duke and duchess of sussex#duke of sussex#duchess of sussex#doria ragland#rent a royal#sentebale#clevr blends#lemonada media#archetypes with meghan#invictus#invictus games#Sussex#WAAAGH#american riviera orchard#luvgabe

3 notes


Text

The Future of AWS: Innovations, Challenges, and Opportunities

As we stand on the cusp of an increasingly digital and interconnected world, the role of cloud computing has never been more vital. At the forefront of this technological revolution stands Amazon Web Services (AWS), a leader and an innovator in the field of cloud computing. AWS has not only transformed the way businesses operate but has also ignited a global shift towards cloud-centric solutions. Now, as we look to the horizon, it's time to dive into the future of AWS, a future marked by innovations, challenges, and boundless opportunities.

In this exploration, we will navigate through the evolving landscape of AWS, where every day brings new advancements, complex challenges, and a multitude of avenues for growth and success. This journey is a testament to the enduring spirit of innovation that propels AWS forward, the challenges it must overcome to maintain its leadership, and the vast array of opportunities it presents to businesses, developers, and tech enthusiasts alike.

Join us as we embark on a voyage into the future of AWS, where the cloud continues to shape our digital world, and where AWS stands as a beacon guiding us through this transformative era.

Constant Innovation: The AWS Edge

One of AWS's defining characteristics is its unwavering commitment to innovation. AWS has a history of introducing groundbreaking services and features that cater to the evolving needs of businesses. In the future, we can expect this commitment to innovation to reach new heights. AWS will likely continue to push the boundaries of cloud technology, delivering cutting-edge solutions to its users.

This dedication to innovation is particularly evident in AWS's investments in machine learning (ML) and artificial intelligence (AI). With services like Amazon SageMaker and AWS Deep Learning, AWS has democratized ML and AI, making these advanced technologies accessible to developers and businesses of all sizes. In the future, we can anticipate even more sophisticated ML and AI capabilities, empowering businesses to extract valuable insights and create intelligent applications.

Global Reach: Expanding the AWS Footprint

AWS's global infrastructure, comprising data centers in numerous regions worldwide, has been key to providing low-latency access and redundancy to customers globally. As the demand for cloud services continues to surge, AWS's expansion efforts are expected to persist. This means an even broader global presence, ensuring that AWS remains a reliable partner for organizations seeking to operate on a global scale.

Industry-Specific Solutions: Tailored for Success

Every industry has its unique challenges and requirements. AWS recognizes this and has been increasingly tailoring its services to cater to specific industries, including healthcare, finance, manufacturing, and more. This trend is likely to intensify in the future, with AWS offering industry-specific solutions and compliance certifications. This ensures that organizations in regulated sectors can leverage the power of the cloud while adhering to strict industry standards.

Edge Computing: A Thriving Frontier

The rise of the Internet of Things (IoT) and the growing importance of edge computing are reshaping the technology landscape. AWS is positioned to capitalize on this trend by investing in edge services. Edge computing enables real-time data processing and analysis at the edge of the network, a capability that's becoming increasingly critical in scenarios like autonomous vehicles, smart cities, and industrial automation.

Sustainability Initiatives: A Greener Cloud

Sustainability is a primary concern in today's environmentally conscious world. AWS has already committed to sustainability with initiatives like the "AWS Sustainability Accelerator." In the future, we can expect more green data centers, eco-friendly practices, and a continued focus on reducing the environmental impact of cloud services. AWS's dedication to sustainability aligns with the broader industry trend towards environmentally responsible computing.

Security and Compliance: Paramount Concerns

The ever-growing importance of data privacy and security cannot be overstated. AWS has been proactive in enhancing its security services and compliance offerings. This trend will likely continue, with AWS introducing advanced security measures and compliance certifications to meet the evolving threat landscape and regulatory requirements.

Serverless Computing: A Paradigm Shift

Serverless computing, characterized by services like AWS Lambda and AWS Fargate, is gaining rapid adoption due to its simplicity and cost-effectiveness. In the future, we can expect serverless architecture to become even more mainstream. AWS will continue to refine and expand its serverless offerings, simplifying application deployment and management for developers and organizations.

Hybrid and Multi-Cloud Solutions: Bridging the Gap

AWS recognizes the significance of hybrid and multi-cloud environments, where organizations blend on-premises and cloud resources. Future developments will likely focus on effortless integration between these environments, enabling businesses to leverage the advantages of both on-premises and cloud-based infrastructure.

Training and Certification: Nurturing Talent

Demand for AWS professionals with advanced skills is growing. Platforms like ACTE Technologies have stepped up to offer comprehensive AWS training and certification programs. These programs equip individuals with the skills needed to excel in the world of AWS and cloud computing. As the cloud becomes increasingly integral to business operations, certified AWS professionals will continue to be in high demand.

In conclusion, the future of AWS shines brightly with promise. As a leader in cloud computing, AWS remains committed to continuous innovation, global expansion, industry-specific solutions, sustainability, security, and empowering businesses with advanced technologies. For those looking to embark on a career or excel further in the realm of AWS, platforms like ACTE Technologies offer industry-aligned training and certification programs.

As businesses increasingly rely on cloud services to drive their digital transformation, AWS will continue to play a key role in reshaping industries and empowering innovation. Whether you are an aspiring cloud professional or a seasoned expert, staying ahead of AWS's evolving landscape is essential. The future of AWS is not just about technology; it's about the limitless possibilities it offers to organizations and individuals willing to embrace the cloud's transformative power.

Text

Pioneering the Next Era: Envisioning the Evolution of AWS Cloud Services

In the fast-paced realm of technology, the future trajectory of Amazon Web Services (AWS) unveils a landscape rich with transformative innovations and strategic shifts. Let's delve into the anticipated trends that are set to redefine the course of AWS Cloud in the years to come.

1. Surging Momentum in Cloud Adoption:

The upward surge in the adoption of cloud services remains a pivotal force shaping the future of AWS. Businesses of all sizes are increasingly recognizing the inherent advantages of scalability, cost-effectiveness, and operational agility embedded in cloud platforms. AWS, positioned at the forefront, is poised to be a catalyst and beneficiary of this ongoing digital transformation.

2. Unyielding Commitment to Innovation:

Synonymous with innovation, AWS is expected to maintain its reputation for introducing groundbreaking services and features. The future promises an expansion of the AWS service portfolio, not merely to meet current demands but to anticipate and address emerging technological needs in a dynamic digital landscape.

3. Spotlight on Edge Computing Excellence:

The spotlight on edge computing is intensifying within the AWS ecosystem. Characterized by data processing in close proximity to its source, edge computing reduces latency and facilitates real-time processing. AWS is slated to channel investments into edge computing solutions, ensuring robust support for applications requiring instantaneous data insights.

4. AI and ML Frontiers:

The forthcoming era of AWS Cloud is set to witness considerable strides in artificial intelligence (AI) and machine learning (ML). Building upon its legacy, AWS is expected to unveil advanced tools, offering businesses a richer array of services for machine learning, deep learning, and the development of sophisticated AI-driven applications.

5. Hybrid Harmony and Multi-Cloud Synergy:

Flexibility and resilience drive the ascent of hybrid and multi-cloud architectures. AWS is anticipated to refine its offerings, facilitating seamless integration between on-premises data centers and the cloud. Moreover, interoperability with other cloud providers will be a strategic focus, empowering businesses to architect resilient and adaptable cloud strategies.

6. Elevated Security Protocols:

As cyber threats evolve, AWS will heighten its commitment to fortifying security measures. The future holds promises of advanced encryption methodologies, heightened identity and access management capabilities, and an expanded array of compliance certifications. These measures will be pivotal in safeguarding the confidentiality and integrity of data hosted on the AWS platform.

7. Green Cloud Initiatives for a Sustainable Tomorrow:

Sustainability takes center stage in AWS's vision for the future. Committed to eco-friendly practices, AWS is likely to unveil initiatives aimed at minimizing the environmental footprint of cloud computing. This includes a heightened emphasis on renewable energy sources and the incorporation of green technologies.

8. Tailored Solutions for Diverse Industries:

Acknowledging the unique needs of various industries, AWS is expected to craft specialized solutions tailored to specific sectors. This strategic approach involves the development of frameworks and compliance measures to cater to the distinctive challenges and regulatory landscapes of industries such as healthcare, finance, and government.

9. Quantum Computing Integration:

In its nascent stages, quantum computing holds transformative potential. AWS may explore the integration of quantum computing services into its platform as the technology matures. This could usher in a new era of computation, solving complex problems that are currently beyond the reach of classical computers.

10. Global Reach Amplified:

To ensure unparalleled service availability, reduced latency, and adherence to data sovereignty regulations, AWS is poised to continue its global infrastructure expansion. This strategic move involves the establishment of additional data centers and regions, solidifying AWS's role as a global leader in cloud services.

In summary, the roadmap for AWS Cloud signifies a dynamic and transformative journey characterized by innovation, adaptability, and sustainability. Businesses embarking on their cloud endeavors should stay attuned to AWS announcements, industry trends, and technological advancements. AWS's commitment to anticipating and fulfilling the evolving needs of its users positions it as a trailblazer shaping the digital future. The expedition into the future of AWS Cloud unfolds a narrative of boundless opportunities and transformative possibilities.

Text

AI compliance certification

Explore our AI compliance certification solutions to guarantee your AI technologies adhere to legal and ethical standards. Get certified and stay ahead in the industry.

#AI compliance certification#artificial intelligence training#artificial intelligence platform#education and artificial intelligence

Text

DJ Fang, Co-Founder & Chief Operating Officer at Pure Global – Interview Series

New Post has been published on https://thedigitalinsider.com/dj-fang-co-founder-chief-operating-officer-at-pure-global-interview-series/

DJ Fang is a technology executive and entrepreneur with over 15 years of experience driving digital transformation and innovation across industries, including finance, energy, and healthcare. He has led initiatives for Fortune 500 companies and government agencies, combining business expertise with technical skills in AI, cybersecurity, and cloud infrastructure.

As a serial entrepreneur, Fang has successfully built and scaled businesses, excelling in product development, market strategy, and operational execution.

Pure Global combines real-world experience, AI, and data to create smart and efficient medical device regulatory consulting solutions for more than 30 markets.

Could you share your journey from working with top consulting firms like Deloitte and PwC to becoming the co-founder of Pure Global? What inspired this transition?

My journey to co-founding Pure Global was shaped by two pivotal moments. First, the COVID-19 pandemic threw the world into chaos, forcing individuals, businesses, and governments to re-evaluate how they operated. As a volunteer helping hospitals and schools source PPE, I gained firsthand insight into the challenges people faced adapting to sudden changes in regulations and market access. That experience really opened my eyes to a critical need.

Second, my entrepreneurial drive came into play. I’ve always been drawn to identifying challenges and creating effective solutions, embracing the process of refining and adapting ideas to address changing needs.

Before Pure Global, I had my own big data and cybersecurity consulting firm, and prior to that, I worked at Big Four firms like Deloitte and PwC. I was constantly pushing the boundaries of technology, creating custom solutions for clients facing unique challenges. It was exciting work, always dynamic and demanding.

At Pure Global, I’m tackling similar challenges but with a healthcare focus. We’re helping MedTech companies bring quality products to market faster and more efficiently. It’s incredibly rewarding to apply my skills and experience to make a real difference in this critical industry.

Pure Global was founded during a critical moment in the pandemic. What were the key challenges and opportunities you identified at that time that led to its creation?

During the pandemic, we began by volunteering to help hospitals and schools source PPE. As we worked with global suppliers, we gained insight into the complexities of international trade and regulations. While assisting manufacturers with changing pandemic rules, we also improved our internal processes for efficiency.

Initially, we only supported a few organizations. However, as requests from manufacturers seeking assistance across various countries grew, we identified a clear need – and a market opportunity – for technology to modernize traditional, often inefficient workflows. We saw an opportunity to make a significant impact by developing solutions to address these challenges.

Your Resource Center leverages AI to provide real-time regulatory updates and compliance insights. Can you walk us through how the AI algorithms identify and prioritize regulatory changes across 30+ global markets? What challenges did you face in training these models?

Our Global Markets Resource Center serves as a centralized hub for the latest regulatory updates and insights across all major global medical device markets. We’ve built a robust system to gather regulatory data from diverse sources, such as official agency websites, legal databases, and public announcements. This includes web scraping with intelligent parsing to extract data from unstructured formats like PDFs and HTML, as well as using APIs where available.
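As a rough illustration of the parsing step described above, the sketch below extracts a title and paragraph text from an HTML page using only Python's standard library. It is not Pure Global's pipeline: the NoticeParser class, the parse_notice helper, and the sample HTML are all hypothetical, and a production system would also need fetching, PDF handling, and error recovery.

```python
from html.parser import HTMLParser


class NoticeParser(HTMLParser):
    """Collects the page title and paragraph text from an HTML document."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.paragraphs = []
        self._current = None  # tag whose text is currently being collected
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        # Start collecting when a title or paragraph opens.
        if tag in ("title", "p"):
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        # Keep text only while inside a tracked element.
        if self._current is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            # Collapse runs of whitespace left over from the page layout.
            text = " ".join("".join(self._buffer).split())
            if tag == "title":
                self.title = text
            elif text:
                self.paragraphs.append(text)
            self._current = None


def parse_notice(html):
    """Hypothetical helper: return the title and paragraphs of one page."""
    parser = NoticeParser()
    parser.feed(html)
    parser.close()
    return {"title": parser.title, "paragraphs": parser.paragraphs}


# Made-up HTML standing in for a regulator's announcement page.
SAMPLE = """
<html><head><title>Guidance update: infusion pumps</title></head>
<body>
  <p>The agency has revised   its guidance
     for infusion pumps.</p>
  <p>Comments are due within <b>60 days</b>.</p>
</body></html>
"""

notice = parse_notice(SAMPLE)
print(notice["title"])
for paragraph in notice["paragraphs"]:
    print("-", paragraph)
```

The structured result (a title plus clean paragraphs) is the kind of record that could then be passed to a ranking step.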

When clients register their devices in our Pure Certification module, we leverage AI to suggest the most relevant changes and prioritize them for review. AI text embeddings and similarity calculations rank these updates. For example, if you have a ‘portable dialysis machine’ and three related news articles:

Article #1: New guidelines for the disposal of PPE (cosine similarity to product: 0.2)

Article #2: FDA approves a new portable dialysis machine with improved safety features (cosine similarity: 0.8)

Article #3: Cybersecurity vulnerabilities discovered in connected medical devices (cosine similarity: 0.5)

Text embeddings convert text into numerical vectors in a multi-dimensional space. Cosine similarity then measures how closely two of these vectors point in the same direction. The higher the cosine similarity, the greater the relevance of the article to the product.
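The ranking idea can be sketched in a few lines of Python. This is a minimal illustration, not the production system: the three-dimensional vectors are invented for the example (a real embedding model produces vectors with hundreds of dimensions), and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_articles(product_vec, article_vecs):
    """Return (title, score) pairs sorted from most to least similar."""
    scored = [
        (title, cosine_similarity(product_vec, vec))
        for title, vec in article_vecs.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy embeddings, made up for illustration only.
product = [0.9, 0.1, 0.3]  # stands in for "portable dialysis machine"
articles = {
    "PPE disposal guidelines": [0.0, 1.0, 0.1],
    "FDA approves portable dialysis machine": [0.8, 0.2, 0.4],
    "Cybersecurity flaws in connected devices": [0.3, 0.3, 0.9],
}

ranked = rank_articles(product, articles)
for title, score in ranked:
    print(f"{score:.2f}  {title}")
```

With these toy vectors the dialysis article ranks first, the cybersecurity article second, and the PPE article last, mirroring the ordering in the example above.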

The Translation Manager uses AI to convert technical documents into over 20 languages. How does the system ensure accuracy and cultural relevance in translations for highly regulated markets, and how does it compare to traditional translation methods in terms of speed and compliance reliability?

AI is truly breaking barriers in this regard. From our internal testing with localized regulatory experts, the accuracy of these AI translations exceeds 80%. Combined with our proprietary MedTech-specific multilingual glossaries, we can push this to over 90%, significantly reducing the time required compared to traditional translation methods.

With AI-driven tools like the Translation Manager and Certification Manager, what kind of feedback have you received from clients about their efficiency gains?

Clients have reported significant efficiency gains through the use of our AI-driven tools. Many have experienced reduced translation costs, particularly for high-volume content, thanks to the automation of previously manual tasks. This has not only cut costs but also accelerated workflows.

Additionally, the consistency and flow of translations have improved. Our multilingual translation terminology management ensures consistent language use across all translated materials, which is critical for maintaining brand identity and clarity in technical documentation. Previously, depending on who performed the translation, the flow could differ slightly. With AI-driven translation, however, the consistency and flow are seamless.

In your opinion, how is big data reshaping the MedTech industry, especially in regulatory compliance and market access?

Some exciting developments in the context of big data are as follows:

Data-Driven Decision Making (Market Intelligence): Big data tools provide comprehensive analytics, enabling companies to make informed decisions about market opportunities, patient behavior, product development, market access, and commercial success.

Post-Market Surveillance: Real-world data monitoring can identify safety issues or areas for improvement, leading to faster, more effective post-market surveillance.

Real-World Evidence (RWE): Analyzing large datasets of real-world data (patient records, clinical trials, device usage) can provide evidence of product safety and effectiveness, supporting regulatory submissions and post-market surveillance. However, much of this information still resides within large hospital systems and research institutions, and accessing it remains a challenge.

Cybersecurity: With the surge in connected medical devices, wearables, and healthcare IoT devices generating vast amounts of data, the attack surface for cybercriminals continues to expand. Sensitive patient information stored in large datasets becomes a prime target for hackers, potentially leading to data breaches that compromise privacy and safety. In addition, many healthcare organizations still rely on outdated systems with insufficient cybersecurity measures, increasing the risk.

How do you envision the intersection of AI, cybersecurity, and MedTech evolving in the future?

There will be more personalized options as AI enables the development of medical devices and treatment plans tailored to individual patient needs. By analyzing patient data, including genomics, lifestyle factors, and medical history, AI can optimize device design and functionality. Additionally, AI can accelerate the design and prototyping of medical devices by generating design options, simulating performance, and optimizing for specific requirements, allowing companies to iterate quickly and bring new products to market faster.

Cybersecurity is becoming increasingly emphasized in the MedTech space. This shift comes as regulators recognize its growing importance and transition from a reactive approach—mainly responding to incidents—to a more proactive approach focused on risk management and prevention. As submission requirements for cybersecurity continue to increase in rigor, companies will need to prioritize cybersecurity throughout the entire product development lifecycle, from design to deployment, to ensure the safety and reliability of their devices.

What do you see as the biggest challenges for medical device manufacturers in the next five years, and how does Pure Global aim to address them?

The biggest challenge is that regulatory agencies worldwide are raising the bar for safety, efficacy, and cybersecurity, making it increasingly difficult to keep up with evolving requirements across multiple markets.

How Pure Global can help:

AI-Powered Regulatory Intelligence: Pure Global’s AI platform monitors regulatory changes across 30+ markets, offering real-time updates and personalized alerts for compliance.

Streamlined Submission Workflows: AI helps reduce time and costs for regulatory submissions, making the clearance and approval process more efficient.

Market Intelligence: With a comprehensive database covering regulations, product registrations, and clinical trials across 30+ markets, manufacturers can analyze trends, identify opportunities, and assess competition.

What advice would you give to startups and scaleups in the MedTech space looking to navigate complex regulatory landscapes?

Prioritize Regulatory Strategy Early On:

Integrate from the start: Incorporate regulatory considerations into your product development from day one, rather than treating them as an afterthought.

Proactive planning: Establish a clear regulatory strategy early, outlining target markets, device classification, and necessary approvals.

Expert advice: Consult regulatory experts or experienced consultants to understand the specific requirements for your device and target markets.

Stay Agile and Adaptable:

Expect changes: Regulatory landscapes are constantly evolving, so be ready to adapt your strategy as needed.

Flexibility: Keep flexibility in your product development plans to accommodate potential regulatory changes or market requirements.

Thank you for the great interview. Readers who wish to learn more should visit Pure Global.

#Advice#agile#ai#ai platform#AI-powered#alerts#Algorithms#Analytics#Announcements#APIs#approach#Article#Articles#Attack surface#automation#Behavior#Big Data#Business#certification#challenge#chaos#clinical#Cloud#cloud infrastructure#Companies#competition#compliance#comprehensive#compromise#consulting

Text

The Future of Digital Marketing: Navigating the Ever-Changing Landscape

Digital marketing has revolutionized the way companies engage with their audience. In today’s fast-paced digital era, marketing strategies constantly evolve to keep up with shifting technologies and consumer behaviours. So, what’s on the horizon for digital marketing? Let’s delve into this dynamic landscape.

1. Personalization at the Forefront

Personalized marketing is on the rise and here to stay. Firms are increasingly using data to customize their marketing efforts according to individual preferences. With advancements in artificial intelligence (AI) and machine learning, we can anticipate even more precise and effective personalization in the future.

2. Video Content Domination

Video content has gained popularity among consumers, with platforms like YouTube, TikTok, and Instagram flourishing. Marketers are adapting by investing in video content creation. As internet speeds improve, video marketing will continue to expand.

3. Chatbots and AI-Powered Customer Service

Chatbots and AI-driven customer service are revolutionizing business interactions with customers. These technologies provide 24/7 support, instant responses, and efficient issue resolution. Expect more businesses to incorporate AI into their customer service strategies in the future.

4. Voice Search Optimization

Voice-activated devices like smart speakers are becoming commonplace in homes, leading to the rise of voice search. Digital marketers must optimize content for voice searches, and this trend is set to grow.

5. Social Commerce on the Upswing

Social media platforms are evolving into e-commerce hubs. Features such as shoppable posts and in-app purchases will likely play a more significant role in digital marketing.

6. Sustainability and Ethical Marketing

Consumers are increasingly conscious of their environmental impact, pushing businesses to embrace sustainability and ethical practices. In the future, marketing efforts are expected to reflect these values.

7. Augmented Reality (AR) and Virtual Reality (VR)

AR and VR technologies offer exciting marketing opportunities. Brands can provide immersive experiences and allow consumers to interact with products virtually. This trend is likely to expand further.

8. Interactive Content

Interactive content, including polls, quizzes, and AR filters, engages users and keeps them involved. This interactive trend will become a fundamental aspect of digital marketing in the coming years.

9. Data Privacy and Compliance

As data privacy regulations become stricter, digital marketers must prioritize user data protection and compliance. Ethical data practices will be critical for brand reputation.

10. The Need for Lifelong Learning

The digital marketing landscape is ever-changing. To stay relevant, professionals in the field must commit to lifelong learning. New technologies, platforms, and strategies will continually emerge.

In summary, the future of digital marketing is bright, with a focus on personalization, video content, AI-driven customer service, voice search optimization, social commerce, sustainability, AR/VR, interactive content, data privacy, and lifelong learning. Success in this dynamic field requires adaptability and a dedication to keeping up with technological advancements. To embark on a successful journey in digital marketing, consider exploring the Digital Marketing courses and certifications offered by ACTE Technologies. Their expert guidance can equip you with the knowledge and skills needed to excel in this ever-evolving industry.

#digital marketing tools#digital marketing#what is digital marketing#digital marketing course#digital marketing training#marketing

Text

#Certifications in Generative AI#Generative AI#Generative AI In Business#Generative AI In Cybersecurity#Generative AI In Finance And Banking#Generative AI In HR & L&D#Generative AI In Marketing#Generative AI In Project Management#Generative AI In Retail#Generative AI In Risk And Compliance#Generative AI In Software Development#Generative AI Professional Certification

Text

Azure’s Evolution: What Every IT Pro Should Know About Microsoft’s Cloud

In today’s ever-changing world of technology, IT professionals need to stay ahead of the curve. The cloud has become an integral part of modern IT infrastructure, and one of the leading players in this domain is Microsoft Azure. Azure’s evolution over the years has been nothing short of remarkable, making it essential for IT pros to understand its journey and keep pace with its innovations. In this blog, we’ll take you on a journey through Azure’s transformation, exploring its history, service portfolio, global reach, security measures, and much more. By the end of this article, you’ll have a comprehensive understanding of what every IT pro should know about Microsoft’s cloud platform.

Historical Overview

Azure’s Humble Beginnings

Microsoft Azure was officially launched in February 2010 as “Windows Azure.” It began as a platform-as-a-service (PaaS) offering primarily focused on providing Windows-based cloud services.

The Azure Branding Shift

In 2014, Microsoft rebranded Windows Azure to Microsoft Azure to reflect its broader support for various operating systems, programming languages, and frameworks. This rebranding marked a significant shift in Azure’s identity and capabilities.

Key Milestones

Over the years, Azure has achieved numerous milestones, including the introduction of Azure Virtual Machines, Azure App Service, and the Azure Marketplace. These milestones have expanded its capabilities and made it a go-to choice for businesses of all sizes.

Expanding Service Portfolio

Azure’s service portfolio has grown exponentially since its inception. Today, it offers a vast array of services catering to diverse needs:

Compute Services: Azure provides a range of options, from virtual machines (VMs) to serverless computing with Azure Functions.

Data Services: Azure offers data storage solutions like Azure SQL Database, Cosmos DB, and Azure Data Lake Storage.

AI and Machine Learning: With Azure Machine Learning and Cognitive Services, IT pros can harness the power of AI for their applications.

IoT Solutions: Azure IoT Hub and IoT Central simplify the development and management of IoT solutions.

Azure Regions and Global Reach

Azure boasts an extensive network of data centers spread across the globe. This global presence offers several advantages:

Scalability: IT pros can easily scale their applications by deploying resources in multiple regions.

Redundancy: Azure’s global datacenter presence ensures high availability and data redundancy.

Data Sovereignty: Choosing the right Azure region is crucial for data compliance and sovereignty.

Integration and Hybrid Solutions

Azure’s integration capabilities are a boon for businesses with hybrid cloud needs. Azure Arc, for instance, allows you to manage on-premises, multi-cloud, and edge environments through a unified interface. Azure’s compatibility with other cloud providers simplifies multi-cloud management.

Security and Compliance

Azure has made significant strides in security and compliance. It offers features like Azure Security Center, Azure Active Directory, and extensive compliance certifications. IT pros can leverage these tools to meet stringent security and regulatory requirements.

Azure Marketplace and Third-Party Offerings

Azure Marketplace is a treasure trove of third-party solutions that complement Azure services. IT pros can explore a wide range of offerings, from monitoring tools to cybersecurity solutions, to enhance their Azure deployments.

Azure DevOps and Automation

Automation is key to efficiently managing Azure resources. Azure DevOps services and tools facilitate continuous integration and continuous delivery (CI/CD), ensuring faster and more reliable application deployments.

Monitoring and Management

Azure offers robust monitoring and management tools to help IT pros optimize resource usage, troubleshoot issues, and gain insights into their Azure deployments. Best practices for resource management can help reduce costs and improve performance.

Future Trends and Innovations

As the technology landscape continues to evolve, Azure remains at the forefront of innovation. Keep an eye on trends like edge computing and quantum computing, as Azure is likely to play a significant role in these domains.

Training and Certification

To excel in your IT career, consider pursuing Azure certifications. ACTE Institute offers a range of certifications, such as the Microsoft Azure course, to validate your expertise in Azure technologies.

In conclusion, Azure’s evolution is a testament to Microsoft’s commitment to cloud innovation. As an IT professional, understanding Azure’s history, service offerings, global reach, security measures, and future trends is paramount. Azure’s versatility and comprehensive toolset make it a top choice for organizations worldwide. By staying informed and adapting to Azure’s evolving landscape, IT pros can remain at the forefront of cloud technology, delivering value to their organizations and clients in an ever-changing digital world. Embrace Azure’s evolution, and empower yourself for a successful future in the cloud.

#microsoft azure#tech#education#cloud services#azure devops#information technology#automation#innovation

Text

European Privacy Watchdogs Assemble: A United AI Task Force for Privacy Rules

In a significant move towards addressing AI privacy concerns, the European Data Protection Board (EDPB) has recently announced the formation of a task force on ChatGPT. This development marks a potentially important first step toward creating a unified policy for implementing artificial intelligence privacy rules.

Following Italy's decision last month to impose restrictions on ChatGPT, Germany and Spain are also contemplating similar measures. ChatGPT has witnessed explosive growth, with more than 100 million monthly active users. This rapid expansion has raised concerns about safety, privacy, and potential job threats associated with the technology.

The primary objective of the EDPB is to promote cooperation and facilitate the exchange of information on possible enforcement actions conducted by data protection authorities. Although it will take time, member states are hopeful about aligning their policy positions.

According to sources, the aim is not to punish or create rules specifically targeting OpenAI, the company behind ChatGPT. Instead, the focus is on establishing general, transparent policies that will apply to AI systems as a whole.

The EDPB is an independent body responsible for overseeing data protection rules within the European Union. It comprises national data protection watchdogs from EU member states.

With the formation of this new task force, the stage is set for crucial discussions on privacy rules and the future of AI. As Europe takes the lead in shaping AI policies, it's essential to stay informed about further developments in this area. Please keep an eye on our blog for more updates on the EDPB's AI task force and its potential impact on the world of artificial intelligence.

European regulators are increasingly focused on ensuring that AI is developed and deployed in an ethical and responsible manner. One way that regulators could penalize AI is through the imposition of fines or other penalties for organizations that violate ethical standards or fail to comply with regulatory requirements. For example, under the General Data Protection Regulation (GDPR), organizations can face fines of up to €20 million or 4% of their global annual revenue, whichever is higher, for the most serious violations related to data privacy and security.
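To make the scale concrete: the upper tier of GDPR fines (Article 83(5)) is capped at the greater of EUR 20 million or 4% of worldwide annual turnover. The short sketch below works through that arithmetic; the function name and the sample turnover figures are hypothetical, and actual fines are set case by case and are often well below the cap.

```python
def gdpr_max_fine_eur(worldwide_annual_turnover_eur):
    """Upper-tier GDPR cap: the greater of EUR 20 million or 4% of turnover."""
    four_percent = worldwide_annual_turnover_eur * 4 / 100
    return max(20_000_000, four_percent)


# Illustrative turnover figures, not real companies.
for turnover in (100_000_000, 500_000_000, 2_000_000_000):
    cap = gdpr_max_fine_eur(turnover)
    print(f"turnover EUR {turnover:,} -> cap EUR {cap:,.0f}")
```

For smaller organizations the fixed EUR 20 million figure is the binding cap; the 4% rule only takes over once turnover exceeds EUR 500 million.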

Similarly, the European Commission has proposed new regulations for AI that could include fines for non-compliance. Another potential penalty for AI could be the revocation of licenses or certifications, preventing organizations from using certain types of AI or marketing their products as AI-based. Ultimately, the goal of these penalties is to ensure that AI is developed and used in a responsible and ethical manner, protecting the rights and interests of individuals and society as a whole.

About Mark Matos

Mark Matos Blog

#EuropeanDataProtectionBoard#EDPB#AIprivacy#ChatGPT#DataProtection#ArtificialIntelligence#PrivacyRules#TaskForce#OpenAI#AIregulation#machine learning#AI
