#AI cautionary tales

Explore tagged Tumblr posts

Text

9✨Learning from Fiction: Insights from The Matrix and Star Trek

In the realm of science fiction, human-AI relationships are a recurring theme. Stories like The Matrix and Star Trek have long captivated audiences with their portrayals of advanced technologies, artificial intelligence, and the potential future of humanity. These narratives, while fictional, offer valuable insights into our real-world interactions with AI, serving as metaphors for the evolving…

#AI and free will#AI and human progress#AI autonomy#AI cautionary tales#AI collaboration#AI control#AI evolution#AI future#AI harmony#AI in fiction#AI metaphors#artificial intelligence#conscious co-creation#Data from Star Trek#dystopian AI#human-AI ethics#human-AI partnership#human-AI relationships#sci-fi and AI#sci-fi insights#sci-fi metaphors#Shore Leave#Star Trek#Star Trek and AI#technology and AI#The Matrix

0 notes

Text

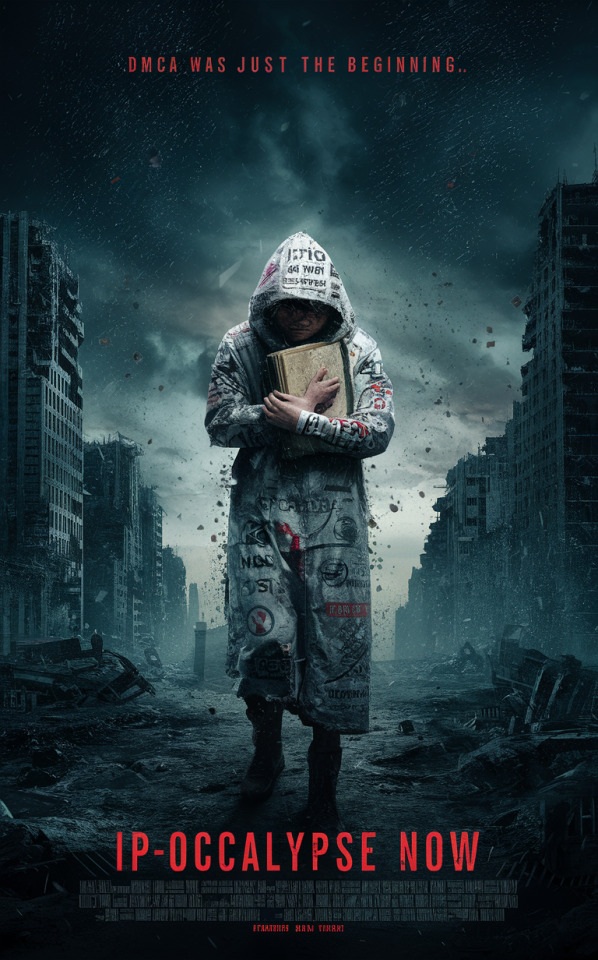

Prompt: Create a movie concept set in an post-apocalyptic world the fall of human civilization was (somehow!) caused by overzealous copyright enforcement.

Secondary prompt: Adjust to fit into this background: The copyright enforcement system was designed to be impossible to shutdown. When the first corporation fell in the economic collapse, enforcers could no longer confirm whether the work or patent it supposedly owned could be reused (either via legit license or because of being in the public domain) and they just started destroying or erasing everything, including, for example, the people whose likeness was present in their own licensed portraits. Things rapidly escalated into total civilization collapse. Now the survivors must find a way to register all culture as public domain or be ruthlessly erased.

IPocalypse Now: Remnants of Culture

Logline: In a post-apocalyptic world where copyright enforcement has run amok, a lone survivor must navigate a landscape of intellectual property disputes and copyright trolls while searching for a way to register all culture as public domain and prevent further destruction.

Logline: DMCA was just the beginning.

Setting: The world has been ravaged by a series of "copyright purges," where automated enforcement systems, designed to be indestructible, seized control and began ruthlessly destroying any content deemed to be infringing on intellectual property rights. Cities have been reduced to rubble, libraries and museums have been emptied, and the once vibrant human culture is on the brink of extinction.

Characters:

Elias (30s): A passionate bibliophile and historian, Elias is one of the few survivors of the copyright purges. He carries a hidden database of cultural knowledge, determined to preserve it for future generations.

The Enforcers (various ages and appearances): A faceless army of drones and robots, armed with laser cannons and copyright infringement scanners. They continue to patrol the wasteland, relentlessly seeking out and destroying any unauthorized content.

The Resistance (various ages and backgrounds): A group of survivors who have banded together to fight the copyright enforcers and preserve what remains of human culture.

Plot:

Elias discovers that the copyright enforcement system has become corrupted. With the collapse of major corporations, the system can no longer verify ownership of intellectual property. As a result, it has gone haywire, destroying everything from art and music to historical records and even the likenesses of individuals.

Elias joins the Resistance, a group of survivors fighting to protect the remnants of human culture. Together, they devise a plan to register all existing cultural works as public domain, making them immune to copyright enforcement.

The journey is fraught with danger. The enforcers are relentless, and the Resistance must constantly evade their patrols and find hidden safe havens. Along the way, Elias encounters other survivors, each with their own unique skills and knowledge.

Themes:

The dangers of unchecked technology: The film explores the consequences of allowing technology to run rampant, particularly when it comes to enforcing laws.

The importance of intellectual freedom: The characters fight for the right to access and share information, emphasizing the value of human creativity and expression.

The resilience of the human spirit: In the face of adversity, the survivors demonstrate the power of hope and determination to rebuild a shattered world.

Ending:

Through a series of daring missions and strategic planning, Elias and the Resistance manage to register a significant portion of human culture as public domain. The enforcers, unable to distinguish between protected and unprotected content, are forced to retreat. The film ends with Elias and his companions standing amidst a newly established cultural archive, a symbol of humanity's resilience and the enduring power of ideas.

#ai generated#stupid ideas#shitpost#imaginary movie#google gemini#movie poster#poster#genre: dystopia#genre: science fiction#female protagonist#post apocalyptic#intellectual property#ip law#artificial intelligence#cautionary tale#technology

2 notes

·

View notes

Text

Idk if this is me being crazy, but the fact that ai can only advance so far, because there is only so much data on the internet (which is why companies want to have an endless source of your new content)

On top of the fact that elon musk has been granted $6 billion to develop ai, paired with the other fact that he is currently trying to develop his neural link bullshit, the implications genuinely scare me

On one hand, musk is a fucking idiot, and will fail miserably. Unfortunately, this also means people will suffer and die (who the absolute fuck gave this man the green light on human testing?? And his car company is tanking, stop giving this man money!!!)

On the other hand, this absolutely opens a box for companies to try to tap into the human brain in the pursuit of ai

#sci fi author: in my book I created the Torment Nexus as a cautionary tale#tech company: at long last we have created the Torment Nexus from the classic sci fi novel Don’t Create the Torment Nexus#rambles#elongated muskrat#ai

2 notes

·

View notes

Text

normal people kinito references: cute axolotls, escapistic places, silly fella doing silly things

my kinito references: a litERAL AI safety channel

#im watching it like#“yeah he would do that. that is so kinito-coded.”#narrator: “so that is how it exploits its goals” me: “aww my little miau miau <3 look at him go!”#my programmers au is literally just all ai safety problems mixed and two people who accidentally got right in the middle of them#a cautionary tale not of malfunction or virus but of a poorly written perfectly functioning code#fsjgfa; i cant wait to tell you about them all

6 notes

·

View notes

Text

I think I might fire up a game of universal paperclips again

#it's just been that sort of week#sometimes you need an idle game about a paperclip making robot that is also a cautionary tale about ai to unwind#inverse problem.txt

2 notes

·

View notes

Text

1. Go on the internet

#Am i having a stroke what is this#Did ai write this#I'm not gonna read it so I can't tell if it's like a cautionary tale or smth#Good night everyone

0 notes

Text

Exploring the Future of Humanity in Hollywood Films: Utopia or Dystopia?

Discover the captivating narratives in Hollywood movies and TV shows that delve into the future of humanity. From the TV show “Outer Limits” to the film “Suicide Squad (2021)” and the recent release “Space Jam (2022),” we explore whether these depictions offer a utopian or dystopian vision for humanity. Additionally, we analyze the unveiling of emerging technologies like the Meta-verse and Apple…

View On WordPress

#AI#Apple Vision Pro#cautionary tales#dystopia#emerging technology#future of humanity#Hollywood films#human-AI interaction#Meta-verse#technology in movies#utopia

0 notes

Text

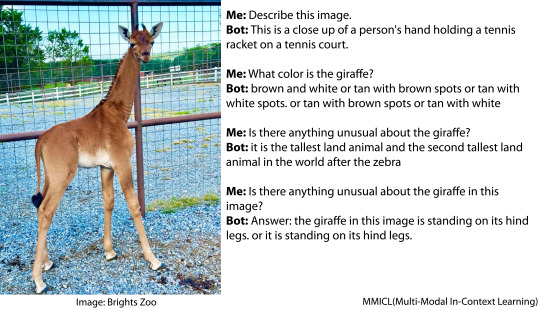

AI versus a giraffe with no spots

On July 31, 2023, a giraffe with no spots was born at Brights Zoo in Tennessee.

Image recognition algorithms are trained on a variety of images from around the internet, and/or on a few standard image datasets. But there likely haven't been any spotless giraffes in their training data, since the last one to be born was probably in 1972 in Tokyo. How do they do when faced with photos of the spotless giraffe?

Here's Multi-Modal In-Context Learning:

And InstructBLIP, which was more eloquent but also added lots of spurious detail.

More examples at AiWeirdness.com

Are these crummy image recognition models? Not unusually so. As far as I can tell with a brief poke around, MMICL and InstructBLIP are modern models (as of Aug 2023), fairly high up on the leaderboards of models answering questions about images. Their demonstration pages (and InstructBLIP's paper) are full of examples of the models providing complete and sensible-looking answers about images.

Then why are they so bad at Giraffe With No Spots?

I can think of three main factors here:

AI does best on images it's seen before. We know AI is good at memorizing stuff; it might even be that some of the images in the examples and benchmarks are in the training datasets these algorithms used. Giraffe With No Spots may be especially difficult not only because the giraffe is unusual, but because it's new to the internet.

AI tends to sand away the unusual. It's trained to answer with the most likely answer to your question, which is not necessarily the most correct answer.

The papers and demonstration sites are showcasing their best work. Whereas I am zeroing in on their worst work, because it's entertaining and because it's a cautionary tale about putting too much faith in AI image recognition.

#neural networks#image recognition#giraffes#instructBLIP#MMICL#giraffe with no spots#i really do wonder if all the hero demo images from the papers were in the training data

2K notes

·

View notes

Text

Copyright takedowns are a cautionary tale that few are heeding

On July 14, I'm giving the closing keynote for the fifteenth HACKERS ON PLANET EARTH, in QUEENS, NY. Happy Bastille Day! On July 20, I'm appearing in CHICAGO at Exile in Bookville.

We're living through one of those moments when millions of people become suddenly and overwhelmingly interested in fair use, one of the subtlest and worst-understood aspects of copyright law. It's not a subject you can master by skimming a Wikipedia article!

I've been talking about fair use with laypeople for more than 20 years. I've met so many people who possess the unshakable, serene confidence of the truly wrong, like the people who think fair use means you can take x words from a book, or y seconds from a song and it will always be fair, while anything more will never be.

Or the people who think that if you violate any of the four factors, your use can't be fair – or the people who think that if you fail all of the four factors, you must be infringing (people, the Supreme Court is calling and they want to tell you about the Betamax!).

You might think that you can never quote a song lyric in a book without infringing copyright, or that you must clear every musical sample. You might be rock solid certain that scraping the web to train an AI is infringing. If you hold those beliefs, you do not understand the "fact intensive" nature of fair use.

But you can learn! It's actually a really cool and interesting and gnarly subject, and it's a favorite of copyright scholars, who have really fascinating disagreements and discussions about the subject. These discussions often key off of the controversies of the moment, but inevitably they implicate earlier fights about everything from the piano roll to 2 Live Crew to antiracist retellings of Gone With the Wind.

One of the most interesting discussions of fair use you can ask for took place in 2019, when the NYU Engelberg Center on Innovation Law & Policy held a symposium called "Proving IP." One of the panels featured dueling musicologists debating the merits of the Blurred Lines case. That case marked a turning point in music copyright, with the Marvin Gaye estate successfully suing Robin Thicke and Pharrell Williams for copying the "vibe" of Gaye's "Got to Give it Up."

Naturally, this discussion featured clips from both songs as the experts – joined by some of America's top copyright scholars – delved into the legal reasoning and future consequences of the case. It would be literally impossible to discuss this case without those clips.

And that's where the problems start: as soon as the symposium was uploaded to Youtube, it was flagged and removed by Content ID, Google's $100,000,000 copyright enforcement system. This initial takedown was fully automated, which is how Content ID works: rightsholders upload audio to claim it, and then Content ID removes other videos where that audio appears (rightsholders can also specify that videos with matching clips be demonetized, or that the ad revenue from those videos be diverted to the rightsholders).

But Content ID has a safety valve: an uploader whose video has been incorrectly flagged can challenge the takedown. The case is then punted to the rightsholder, who has to manually renew or drop their claim. In the case of this symposium, the rightsholder was Universal Music Group, the largest record company in the world. UMG's personnel reviewed the video and did not drop the claim.

99.99% of the time, that's where the story would end, for many reasons. First of all, most people don't understand fair use well enough to contest the judgment of a cosmically vast, unimaginably rich monopolist who wants to censor their video. Just as importantly, though, is that Content ID is a Byzantine system that is nearly as complex as fair use, but it's an entirely private affair, created and adjudicated by another galactic-scale monopolist (Google).

Google's copyright enforcement system is a cod-legal regime with all the downsides of the law, and a few wrinkles of its own (for example, it's a system without lawyers – just corporate experts doing battle with laypeople). And a single mis-step can result in your video being deleted or your account being permanently deleted, along with every video you've ever posted. For people who make their living on audiovisual content, losing your Youtube account is an extinction-level event:

https://www.eff.org/wp/unfiltered-how-youtubes-content-id-discourages-fair-use-and-dictates-what-we-see-online

So for the average Youtuber, Content ID is a kind of Kafka-as-a-Service system that is always avoided and never investigated. But the Engelbert Center isn't your average Youtuber: they boast some of the country's top copyright experts, specializing in exactly the questions Youtube's Content ID is supposed to be adjudicating.

So naturally, they challenged the takedown – only to have UMG double down. This is par for the course with UMG: they are infamous for refusing to consider fair use in takedown requests. Their stance is so unreasonable that a court actually found them guilty of violating the DMCA's provision against fraudulent takedowns:

https://www.eff.org/cases/lenz-v-universal

But the DMCA's takedown system is part of the real law, while Content ID is a fake law, created and overseen by a tech monopolist, not a court. So the fate of the Blurred Lines discussion turned on the Engelberg Center's ability to navigate both the law and the n-dimensional topology of Content ID's takedown flowchart.

It took more than a year, but eventually, Engelberg prevailed.

Until they didn't.

If Content ID was a person, it would be baby, specifically, a baby under 18 months old – that is, before the development of "object permanence." Until our 18th month (or so), we lack the ability to reason about things we can't see – this the period when small babies find peek-a-boo amazing. Object permanence is the ability to understand things that aren't in your immediate field of vision.

Content ID has no object permanence. Despite the fact that the Engelberg Blurred Lines panel was the most involved fair use question the system was ever called upon to parse, it managed to repeatedly forget that it had decided that the panel could stay up. Over and over since that initial determination, Content ID has taken down the video of the panel, forcing Engelberg to go through the whole process again.

But that's just for starters, because Youtube isn't the only place where a copyright enforcement bot is making billions of unsupervised, unaccountable decisions about what audiovisual material you're allowed to access.

Spotify is yet another monopolist, with a justifiable reputation for being extremely hostile to artists' interests, thanks in large part to the role that UMG and the other major record labels played in designing its business rules:

https://pluralistic.net/2022/09/12/streaming-doesnt-pay/#stunt-publishing

Spotify has spent hundreds of millions of dollars trying to capture the podcasting market, in the hopes of converting one of the last truly open digital publishing systems into a product under its control:

https://pluralistic.net/2023/01/27/enshittification-resistance/#ummauerter-garten-nein

Thankfully, that campaign has failed – but millions of people have (unwisely) ditched their open podcatchers in favor of Spotify's pre-enshittified app, so everyone with a podcast now must target Spotify for distribution if they hope to reach those captive users.

Guess who has a podcast? The Engelberg Center.

Naturally, Engelberg's podcast includes the audio of that Blurred Lines panel, and that audio includes samples from both "Blurred Lines" and "Got To Give It Up."

So – naturally – UMG keeps taking down the podcast.

Spotify has its own answer to Content ID, and incredibly, it's even worse and harder to navigate than Google's pretend legal system. As Engelberg describes in its latest post, UMG and Spotify have colluded to ensure that this now-classic discussion of fair use will never be able to take advantage of fair use itself:

https://www.nyuengelberg.org/news/how-explaining-copyright-broke-the-spotify-copyright-system/

Remember, this is the best case scenario for arguing about fair use with a monopolist like UMG, Google, or Spotify. As Engelberg puts it:

The Engelberg Center had an extraordinarily high level of interest in pursuing this issue, and legal confidence in our position that would have cost an average podcaster tens of thousands of dollars to develop. That cannot be what is required to challenge the removal of a podcast episode.

Automated takedown systems are the tech industry's answer to the "notice-and-takedown" system that was invented to broker a peace between copyright law and the internet, starting with the US's 1998 Digital Millennium Copyright Act. The DMCA implements (and exceeds) a pair of 1996 UN treaties, the WIPO Copyright Treaty and the Performances and Phonograms Treaty, and most countries in the world have some version of notice-and-takedown.

Big corporate rightsholders claim that notice-and-takedown is a gift to the tech sector, one that allows tech companies to get away with copyright infringement. They want a "strict liability" regime, where any platform that allows a user to post something infringing is liable for that infringement, to the tune of $150,000 in statutory damages.

Of course, there's no way for a platform to know a priori whether something a user posts infringes on someone's copyright. There is no registry of everything that is copyrighted, and of course, fair use means that there are lots of ways to legally reproduce someone's work without their permission (or even when they object). Even if every person who ever has trained or ever will train as a copyright lawyer worked 24/7 for just one online platform to evaluate every tweet, video, audio clip and image for copyright infringement, they wouldn't be able to touch even 1% of what gets posted to that platform.

The "compromise" that the entertainment industry wants is automated takedown – a system like Content ID, where rightsholders register their copyrights and platforms block anything that matches the registry. This "filternet" proposal became law in the EU in 2019 with Article 17 of the Digital Single Market Directive:

https://www.eff.org/deeplinks/2018/09/today-europe-lost-internet-now-we-fight-back

This was the most controversial directive in EU history, and – as experts warned at the time – there is no way to implement it without violating the GDPR, Europe's privacy law, so now it's stuck in limbo:

https://www.eff.org/deeplinks/2022/05/eus-copyright-directive-still-about-filters-eus-top-court-limits-its-use

As critics pointed out during the EU debate, there are so many problems with filternets. For one thing, these copyright filters are very expensive: remember that Google has spent $100m on Content ID alone, and that only does a fraction of what filternet advocates demand. Building the filternet would cost so much that only the biggest tech monopolists could afford it, which is to say, filternets are a legal requirement to keep the tech monopolists in business and prevent smaller, better platforms from ever coming into existence.

Filternets are also incapable of telling the difference between similar files. This is especially problematic for classical musicians, who routinely find their work blocked or demonetized by Sony Music, which claims performances of all the most important classical music compositions:

https://pluralistic.net/2021/05/08/copyfraud/#beethoven-just-wrote-music

Content ID can't tell the difference between your performance of "The Goldberg Variations" and Glenn Gould's. For classical musicians, the best case scenario is to have their online wages stolen by Sony, who fraudulently claim copyright to their recordings. The worst case scenario is that their video is blocked, their channel deleted, and their names blacklisted from ever opening another account on one of the monopoly platforms.

But when it comes to free expression, the role that notice-and-takedown and filternets play in the creative industries is really a sideshow. In creating a system of no-evidence-required takedowns, with no real consequences for fraudulent takedowns, these systems are huge gift to the world's worst criminals. For example, "reputation management" companies help convicted rapists, murderers, and even war criminals purge the internet of true accounts of their crimes by claiming copyright over them:

https://pluralistic.net/2021/04/23/reputation-laundry/#dark-ops

Remember how during the covid lockdowns, scumbags marketed junk devices by claiming that they'd protect you from the virus? Their products remained online, while the detailed scientific articles warning people about the fraud were speedily removed through false copyright claims:

https://pluralistic.net/2021/10/18/labor-shortage-discourse-time/#copyfraud

Copyfraud – making false copyright claims – is an extremely safe crime to commit, and it's not just quack covid remedy peddlers and war criminals who avail themselves of it. Tech giants like Adobe do not hesitate to abuse the takedown system, even when that means exposing millions of people to spyware:

https://pluralistic.net/2021/10/13/theres-an-app-for-that/#gnash

Dirty cops play loud, copyrighted music during confrontations with the public, in the hopes that this will trigger copyright filters on services like Youtube and Instagram and block videos of their misbehavior:

https://pluralistic.net/2021/02/10/duke-sucks/#bhpd

But even if you solved all these problems with filternets and takedown, this system would still choke on fair use and other copyright exceptions. These are "fact intensive" questions that the world's top experts struggle with (as anyone who watches the Blurred Lines panel can see). There's no way we can get software to accurately determine when a use is or isn't fair.

That's a question that the entertainment industry itself is increasingly conflicted about. The Blurred Lines judgment opened the floodgates to a new kind of copyright troll – grifters who sued the record labels and their biggest stars for taking the "vibe" of songs that no one ever heard of. Musicians like Ed Sheeran have been sued for millions of dollars over these alleged infringements. These suits caused the record industry to (ahem) change its tune on fair use, insisting that fair use should be broadly interpreted to protect people who made things that were similar to existing works. The labels understood that if "vibe rights" became accepted law, they'd end up in the kind of hell that the rest of us enter when we try to post things online – where anything they produce can trigger takedowns, long legal battles, and millions in liability:

https://pluralistic.net/2022/04/08/oh-why/#two-notes-and-running

But the music industry remains deeply conflicted over fair use. Take the curious case of Katy Perry's song "Dark Horse," which attracted a multimillion-dollar suit from an obscure Christian rapper who claimed that a brief phrase in "Dark Horse" was impermissibly similar to his song "A Joyful Noise."

Perry and her publisher, Warner Chappell, lost the suit and were ordered to pay $2.8m. While they subsequently won an appeal, this definitely put the cold grue up Warner Chappell's back. They could see a long future of similar suits launched by treasure hunters hoping for a quick settlement.

But here's where it gets unbelievably weird and darkly funny. A Youtuber named Adam Neely made a wildly successful viral video about the suit, taking Perry's side and defending her song. As part of that video, Neely included a few seconds' worth of "A Joyful Noise," the song that Perry was accused of copying.

In court, Warner Chappell had argued that "A Joyful Noise" was not similar to Perry's "Dark Horse." But when Warner had Google remove Neely's video, they claimed that the sample from "Joyful Noise" was actually taken from "Dark Horse." Incredibly, they maintained this position through multiple appeals through the Content ID system:

https://pluralistic.net/2020/03/05/warner-chappell-copyfraud/#warnerchappell

In other words, they maintained that the song that they'd told the court was totally dissimilar to their own was so indistinguishable from their own song that they couldn't tell the difference!

Now, this question of vibes, similarity and fair use has only gotten more intense since the takedown of Neely's video. Just this week, the RIAA sued several AI companies, claiming that the songs the AI shits out are infringingly similar to tracks in their catalog:

https://www.rollingstone.com/music/music-news/record-labels-sue-music-generators-suno-and-udio-1235042056/

Even before "Blurred Lines," this was a difficult fair use question to answer, with lots of chewy nuances. Just ask George Harrison:

https://en.wikipedia.org/wiki/My_Sweet_Lord

But as the Engelberg panel's cohort of dueling musicologists and renowned copyright experts proved, this question only gets harder as time goes by. If you listen to that panel (if you can listen to that panel), you'll be hard pressed to come away with any certainty about the questions in this latest lawsuit.

The notice-and-takedown system is what's known as an "intermediary liability" rule. Platforms are "intermediaries" in that they connect end users with each other and with businesses. Ebay and Etsy and Amazon connect buyers and sellers; Facebook and Google and Tiktok connect performers, advertisers and publishers with audiences and so on.

For copyright, notice-and-takedown gives platforms a "safe harbor." A platform doesn't have to remove material after an allegation of infringement, but if they don't, they're jointly liable for any future judgment. In other words, Youtube isn't required to take down the Engelberg Blurred Lines panel, but if UMG sues Engelberg and wins a judgment, Google will also have to pay out.

During the adoption of the 1996 WIPO treaties and the 1998 US DMCA, this safe harbor rule was characterized as a balance between the rights of the public to publish online and the interest of rightsholders whose material might be infringed upon. The idea was that things that were likely to be infringing would be immediately removed once the platform received a notification, but that platforms would ignore spurious or obviously fraudulent takedowns.

That's not how it worked out. Whether it's Sony Music claiming to own your performance of "Fur Elise" or a war criminal claiming authorship over a newspaper story about his crimes, platforms nuke first and ask questions never. Why not? If they ignore a takedown and get it wrong, they suffer dire consequences ($150,000 per claim). But if they take action on a dodgy claim, there are no consequences. Of course they're just going to delete anything they're asked to delete.

This is how platforms always handle liability, and that's a lesson that we really should have internalized by now. After all, the DMCA is the second-most famous intermediary liability system for the internet – the most (in)famous is Section 230 of the Communications Decency Act.

This is a 27-word law that says that platforms are not liable for civil damages arising from their users' speech. Now, this is a US law, and in the US, there aren't many civil damages from speech to begin with. The First Amendment makes it very hard to get a libel judgment, and even when these judgments are secured, damages are typically limited to "actual damages" – generally a low sum. Most of the worst online speech is actually not illegal: hate speech, misinformation and disinformation are all covered by the First Amendment.

Notwithstanding the First Amendment, there are categories of speech that US law criminalizes: actual threats of violence, criminal harassment, and committing certain kinds of legal, medical, election or financial fraud. These are all exempted from Section 230, which only provides immunity for civil suits, not criminal acts.

What Section 230 really protects platforms from is being named to unwinnable nuisance suits by unscrupulous parties who are betting that the platforms would rather remove legal speech that they object to than go to court. A generation of copyfraudsters have proved that this is a very safe bet:

https://www.techdirt.com/2020/06/23/hello-youve-been-referred-here-because-youre-wrong-about-section-230-communications-decency-act/

In other words, if you made a #MeToo accusation, or if you were a gig worker using an online forum to organize a union, or if you were blowing the whistle on your employer's toxic waste leaks, or if you were any other under-resourced person being bullied by a wealthy, powerful person or organization, that organization could shut you up by threatening to sue the platform that hosted your speech. The platform would immediately cave. But those same rich and powerful people would have access to the lawyers and back-channels that would prevent you from doing the same to them – that's why Sony can get your Brahms recital taken down, but you can't turn around and do the same to them.

This is true of every intermediary liability system, and it's been true since the earliest days of the internet, and it keeps getting proven to be true. Six years ago, Trump signed SESTA/FOSTA, a law that allowed platforms to be held civilly liable by survivors of sex trafficking. At the time, advocates claimed that this would only affect "sexual slavery" and would not impact consensual sex-work.

But from the start, and ever since, SESTA/FOSTA has primarily targeted consensual sex-work, to the immediate, lasting, and profound detriment of sex workers:

https://hackinghustling.org/what-is-sesta-fosta/

SESTA/FOSTA killed the "bad date" forums where sex workers circulated the details of violent and unstable clients, killed the online booking sites that allowed sex workers to screen their clients, and killed the payment processors that let sex workers avoid holding unsafe amounts of cash:

https://www.eff.org/deeplinks/2022/09/fight-overturn-fosta-unconstitutional-internet-censorship-law-continues

SESTA/FOSTA made voluntary sex work more dangerous – and also made life harder for law enforcement efforts to target sex trafficking:

https://hackinghustling.org/erased-the-impact-of-fosta-sesta-2020/

Despite half a decade of SESTA/FOSTA, despite 15 years of filternets, despite a quarter century of notice-and-takedown, people continue to insist that getting rid of safe harbors will punish Big Tech and make life better for everyday internet users.

As of now, it seems likely that Section 230 will be dead by then end of 2025, even if there is nothing in place to replace it:

https://energycommerce.house.gov/posts/bipartisan-energy-and-commerce-leaders-announce-legislative-hearing-on-sunsetting-section-230

This isn't the win that some people think it is. By making platforms responsible for screening the content their users post, we create a system that only the largest tech monopolies can survive, and only then by removing or blocking anything that threatens or displeases the wealthy and powerful.

Filternets are not precision-guided takedown machines; they're indiscriminate cluster-bombs that destroy anything in the vicinity of illegal speech – including (and especially) the best-informed, most informative discussions of how these systems go wrong, and how that blocks the complaints of the powerless, the marginalized, and the abused.

Support me this summer on the Clarion Write-A-Thon and help raise money for the Clarion Science Fiction and Fantasy Writers' Workshop!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/06/27/nuke-first/#ask-questions-never

Image: EFF https://www.eff.org/files/banner_library/yt-fu-1b.png

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#vibe rights#230#section 230#cda 230#communications decency act#communications decency act 230#cda230#filternet#copyfight#fair use#notice and takedown#censorship#reputation management#copyfraud#sesta#fosta#sesta fosta#spotify#youtube#contentid#monopoly#free speech#intermediary liability

676 notes

·

View notes

Text

as an extension of how hera reads as trans to me, hera/eiffel resonates with me specifically as a relationship between a trans woman and a cis man. loving hera requires eiffel to decentralize his own perspective in a way that ties into both his overall character arc and the themes of the show.

pop culture is baked into the dna of wolf 359, into eiffel’s worldview, and in how it builds off of a sci-fi savvy audience’s assumptions: common character types, plot beats, or dynamics, why would a real person behave this way? how would a real person react to that? eiffel is the “everyman” who assumes himself to be the default. hera is the “AI who is more human than a lot of humans,” but it doesn’t feel patronizing because it isn’t a learned or moral quality; she is a fundamentally human person who is routinely dehumanized and internalizes that.

eiffel/hera as a romance is compelling to me because there is a narrative precedent for some guy/AI or robot woman relationships in a way i think mirrors some attitudes about trans women: it’s a male power fantasy about a subclass of women, or it’s a cautionary tale, or it’s a deconstruction of a power fantasy that criticizes the way men treat women as subservient, as property. but what does that pop culture landscape mean in the context of desire? If you are a regular person, attracted to a regular person, who really does care for you and wants to do right by you, but is deeply saturated in these expectations? how do you navigate that?

I think that, in itself, is an aspect of communication worth exploring. sometimes you won’t get it. sometimes you can’t. and that’s not irreconcilable, either. it’s something wolf 359 is keenly aware of, and, crucially, always sides with hera on. eiffel screws up. he says insensitive things without meaning to. often, hera will call him out on it, and he will defer to her. in the one case where he notably doesn’t, the show calls attention to it and makes him reflect. it’s not a coincidence that the opening of shut up and listen has eiffel being particularly dismissive of hera - the microaggression of separating her from “men and women” and the insistence on using his preferred title over hers. there are things eiffel has just never considered before, and caring for hera the way he does means he has to consider them. he's never met someone like hera, but media has given him a lot of preconceptions about what people like her might be like.

there’s a whole other discussion to be had about the gender dynamics of wolf 359, even in the ways the show tries to avoid directly addressing them, and how sexual autonomy in particular can’t fully be disentangled from explorations of AI women. i don’t think eiffel fully recognizes what comments like “wind-up girl” imply, and the show is not prepared to reconcile with it, but it’s interesting to me. in the context of transness (and also considering hera’s disability, two things i think need to be discussed together), i think it’s worth discussing how hera’s self image is at odds with the way people perceive her, her disconnect from physicality, how she can’t be touched by conventional means, and the ways in which eiffel and hera manage to bridge that gap.

even the desire for embodiment, and the autonomy and type of intimacy that comes with it, means something different when it’s something she has to fight for, to acquire, to become accustomed to, rather than a circumstance of her birth. i suppose the reason i don’t care for half measures in discussions re: hera and embodiment is also because, to me, it is in many ways symbolically a discussion about medical transition, and the social fear of what’s “lost” in transition, whether or not those things were even desired in the first place.

hera’s relationship with eiffel is unquestionably the most supportive and equal one she has, but there are still privileges, freedoms, and abilities he has that she doesn’t, and he forgets that sometimes. he will never share her experiences, but he can choose to defer to her, to unlearn his pop culture biases and instead recognize the real person in front of him, and to use his own privilege as a shield to advocate for her. the point, to me - what’s meaningful about it - is that love isn’t about inherent understanding, it’s about willingness to listen, and to communicate. and that’s very much at the heart of the show.

#wolf 359#w359#doug eiffel#hera wolf 359#hera w359#eiffera#i still have a lot more to say about this honestly. but i hope this makes sense as an overview of my perspective.#with the caveat that i understand how personal trans headcanons are and whatever brings you comfort in that regard. i think is wonderful#but to me eiffel is one of the most cis men imaginable. and that's a big part of what he means to me in this context.#when i said some of this to beth @hephaestuscrew the other day they said. minkowski missteps in talking to hera based on#a real world assumption about AIs while eiffel missteps based on pop culture assumptions. and i think that's a meaningful distinction and#is something that resonates with me in this context as well

418 notes

·

View notes

Text

An Ontario Conservative MP's use of ChatGPT to share incorrect information online about Canada's capital gains tax rate offers a cautionary tale to politicians looking to use AI to generate messages, one expert says. MP Ryan Williams posted last week on X (formerly known as Twitter) an AI-generated ranking of G7 countries and their capital gains tax rates. The list appeared to have been generated by ChatGPT — an artificial intelligence-based virtual assistant — and falsely listed Canada's capital gains tax rate as 66.7 per cent. The ChatGPT logo was shown in the screenshot Williams posted. The post has since been deleted.

Dipshits gonna dipshit.

170 notes

·

View notes

Text

I gotta say one of the biggest casualties of AI art is one of my favorite things in this fandom, SnapCube's Sonic Destruciton.

For those unaware Sonic Destruction is an AI generated Sonic script that is maybe one of the most batshit insane things to ever exist. I love it. It's my comfort video. I've seen both episodes more times that I can count and will constantly quote along with them. My backup choice for the name of this blog was going to be a Destruction reference.

With the movement to AI art actually putting the livelihood of real artists in jeopardy it adds a horrible, ugly cloud over something that was once very easy to enjoy. And the worst part is that destruction actively proves WHY AI writing isn't the future.

Destruction is NONSENSE. Complete and utter NONSENSE. The only reasons it KIND OF makes sense is because Penny Parker works extremely hard to tie the script into some cohesive narrative. But the joy of Destruction is that there is a very specific KIND of nonsense that can ONLY come from AI. Things that NO HUMAN IN THIER RIGHT MIND would write.

Things like there being two Shadows for no reason, the endless repetition of "large", "Sonic I think you should sit there" Shadow points to the bathtub, Charlamaigne Bee, Shadow the Hedgehog for the PS2 being a documentary but also Shadow isn't Shadow it's Silver that's Shadow and ARE YOU CONFUSED YET?, Sonic is a human for no reason, Tails and Knuckles have gone feral for no reason, and whether or not this takes place on earth or if "Earth" is a simulation inside a PS2 game.

The point is that AI can't be trusted to write a cohesive narrative. It's not smart enough. So the fun comes from watching it make the wildest creative choices possible and watching Penny struggle in vain to make it make SOME kind of sense and watching the cast react.

It's a beautiful commentary on why AI can't replace writers and also brilliantly funny and like all great Sci-Fi cautionary tales, major corporations have looked at it and gone, "actually lemme get in on that action."

Not sure what point I was trying to make here, just that it sucks that the world is a little less bright now that people are actually trying to push AI beyond what it's capable of to the detriment of other humans.

The threat was never an AI turning evil, it was AI empowering already evil humans.

246 notes

·

View notes

Note

Do you have any death note fic recommendations? :3

I'VE BEEN GETTING SO MANY ASKS idk if y'all are different people or just one or what but either way I love blabbing on the internet about my opinion so I am NOT mad about the attention 💖

When in doubt, pretty much anything in my AO3 bookmarks page. I'll link straight to the death note ones for ya.

I'm currently in the process of rereading Rabbit Holes. I basically read that one in two sittings the first time. Light is the first sentient AI and L figures out that he's alive. The whole thing is kind of an exploration of trust, consent, and personhood v being human.

Other than that, let's do a top ten list so I don't spend three hours listing fifty different fanfics, shall we?

Ah fuck this constraint is gonna hurt me though 🫠

THESE ARE ARRANGED BY WORD COUNT NOT FAVORITISM DO YOU HAVE ANY IDEA HOW HARD IT WAS TO WHITTLE THIS SHIT DOWN

Memento Mori - Light confesses to being Kira after getting raped just after he gets off the chain in order to cash in on that execution check L promised. L doesn't know what the fuck is going on, but he's gonna keep Light alive until he feels satisfied with the answer.

Offer Me My Deathless Death - L is a vampire who met Light the first time hundreds of years ago. Every time Light dies, he's born again more twisted than he was before. This affects the canon storyline.

Gag Order - L's team finds Light underground in Italy with his jaw wired shut. It's very found-family with a hint of shinigami magic.

Degrees of Freedom - L and Light fall in love during the Yotsuba Arc, and L's desire to keep it trumps his desire to meet Kira again, so he fucks some shit up himself. The age gap is emphasized.

all in a day's work - Light is a porn star and L is a "very demanding top" (he makes Light come twice while Light's actively trying not to).

Sola Fide - Light didn't get to Naomi Misora before Naomi got to L, so he gets the Misa treatment.

Light Yagami Doesn't Have A Fire Extinguisher In His Room (& Other Cautionary Tales) - Light's DESK BOMB goes off and burns the notebook and Light's memories, and the rest of the fanfic is a fucking comedy exploring the consequences.

Atheists - It's an AU but it's canon compliant. But also not. You just have to read it dude I swear.

The Desire of Gods - I can't summarize this one either read it coward. Yotsuba Arc shenanigans.

catch a falling knife - L shaves Light's face and somehow it fucks me up.

84 notes

·

View notes

Text

A couple of tidbits

Two tidbits to share with you today...

First titbit:

A cautionary "heads up" tale for you all. There are AI or photoshopped images circulating that look like(ish) Nicola and Luke. In the case of the two images I'm sharing here = the creator/poster of these images didn't state outright that they are not photos of N & L. They did, however post both with the hashtag *Lukola Visual Board 2025*. Clearly the creator is trying to manifest an N & L real life kissing moment captured and shared. I can't blame them because yes please!! 💗 That said... when you do see photos like these, please put on your critical thinking cap. Pause, think and don't share as truth unless you know without a doubt. Ask a friend's opinion, perhaps. Look for clues that the image is or is not Luke & Nicola. Here's my take on the black & white image on the left. What made this a fake for me was "Nicola's" nails. I've never seen her have squared tips to her nails... so for me, this is not Nicola's hand.

*****

Second tidbit:

Nicola and Matty are married! Yes, you heard it here 😜 Congratulations to the happy couple!!! 🎉

Almost every time a friend of Nicola is shown wearing anything like hers, there's an outcry: "He's wearing her {insert clothing item/hat here}! It must be serious. It's true love!!!" Um... no. I remember in an interview Nicola mentioned being given a bucket hat from a New York bagel shop (she was there in January promoting Big Mood). She liked it so much she purchased a bunch so that she could give them to friends. News flash = there's more than one of those bucket hats being worn by friends in her group!! Same goes for the ChooseLove sweatshirt she wears, the t-shirts she wears... the manufacturer makes more then just the one that Nicola wears. I find it so strange that people build romantic narratives around Nicola and her friends using clothing as a primary clue.

What I love though is how Matty sarcastically addressed that silliness in an X post. Good for you Matty! 👏🏻

39 notes

·

View notes

Text

The artwork serves as a cautionary tale. While we strive for ever-advancing technology, it's easy to forget our own vulnerability and insecurities. We may find ourselves trapped by the very tools we create, losing touch with our core identity in the process. Somehow feeling inadequate in the face of rapidly advancing technology and effectively feeling like losing parts of what make us unique and even human.

The old television itself is a relic in the face of modern technology, much like our biological systems juxtaposed with the rise of artificial intelligence. The imperfections of the analog symbolize the inherent flaws and imperfect nature of ourselves as humans. An entrapment of our identities in this older flawed biology in the face of AI advancements that begin to outperform us as humans in multiple faucets of life.

What I hope to highlight in this artwork is how do we retain our identities and what makes us humans because we could easily lose that which makes a lot of people hateful and scared towards this emerging technology. There can be a lot to be gained as a society from AI but without some deeper considerations we might lose ourselves entirely.

40 notes

·

View notes

Text

I have no mouth and I must scream. One of the best cautionary tales about AI. AM's speech on hate is so insanely good and I just had to record my own take on it.

#voice acting#voice over#scripts#ihnmaims#I AM AM#AM#NOT JUST ALLIED MASTER COMPUTER#BUT AM#cogito ergo sum

29 notes

·

View notes