#AI as a weapon

Text

Ethics of autonomous weapons systems and its applicability to any AI systems

Highlights

• Dual use of AI implies that tools designed for good can be used as weapons, and that they need to be regulated from that perspective.
• Tools that may affect people's freedom should be treated as weapons and be subject to International Humanitarian Law.
• A freeze in investigations is neither possible nor desirable, nor is maintaining the current status quo.
• The key ethical principles are that the way algorithms work is understood and that humans retain enough control.
• All artificial intelligence developments should take into account possible military uses of the technology from inception.

Source

2 notes

Text

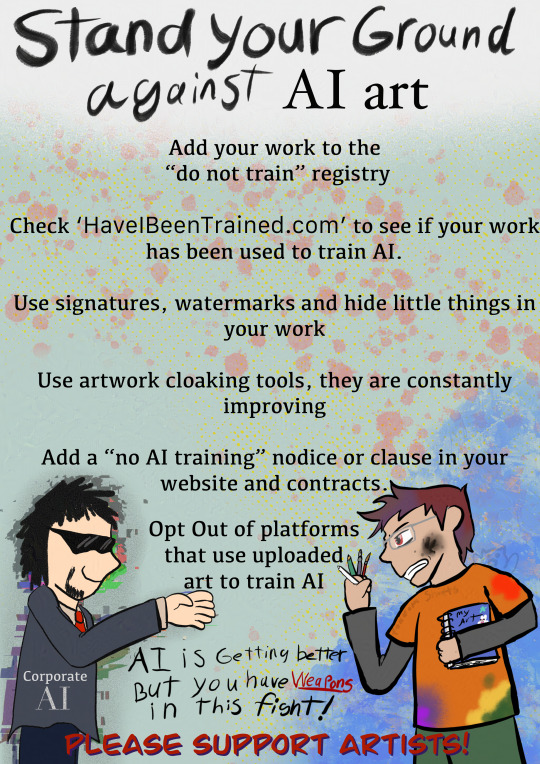

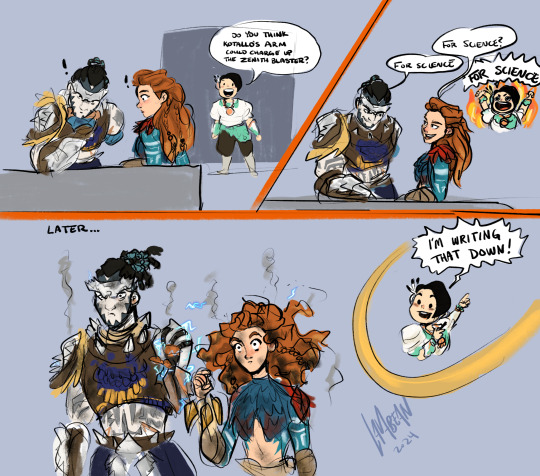

Take up your arms

#my art#artists on tumblr#anti ai art#Suport artists!#Know your weapons#stand with animation#Artist#digital drawing#digital art

776 notes

Note

As cameras become more normalized (Sarah Bernhardt encouraging it, grifters on the rise, young artists using it), I wanna express how I will never turn to it because it fundamentally bores me to my core. There is no reason for me to want to use cameras because I will never want to give up my autonomy in creating art. I never want to become reliant on an inhuman object for expression, least of all if that object is created and controlled by manufacturing companies. I paint not because I want a painting but because I love the process of painting. So even in a future where everyone’s accepted it, I’m never gonna sway on this.

if i have to explain to you that using a camera to take a picture is not the same as using generative ai to generate an image then you are a fucking moron.

#ask me#anon#no more patience for this#i've heard this for the past 2 years#“an object created and controlled by companies” anon the company cannot barge into your home and take your camera away#or randomly change how it works on a whim. you OWN the camera that's the whole POINT#the entire point of a camera is that i can control it and my body to produce art. photography is one of the most PHYSICAL forms of artmakin#you have to communicate with your space and subjects and be conscious of your position in a physical world.#that's what makes a camera a tool. generative ai (if used wholesale) is not a tool because it's not an implement that helps you#do a task. it just does the task for you. you wouldn't call a microwave a “tool”#but most importantly a camera captures a REPRESENTATION of reality. it captures a specific irreproducible moment and all its data#read Roland Barthes: Studium & Punctum#generative ai creates an algorithmic IMITATION of reality. it isn't truth. it's the average of truths.#while conceptually that's interesting (if we wanna get into media theory) but that alone should tell you why a camera and ai aren't the sam#ai is incomparable to all previous mediums of art because no medium has ever solely relied on generative automation for its creation#no medium of art has also been so thoroughly constructed to be merged into online digital surveillance capitalism#so reliant on the collection and commodification of personal information for production#if you think using a camera is “automation” you have worms in your brain and you need to see a doctor#if you continue to deny that ai is an apparatus of tech capitalism and is being weaponized against you the consumer you're delusional#the fact that SO many tumblr leftists are ready to defend ai while talking about smashing the surveillance state is baffling to me#and their defense is always “well i don't engage in systems that would make me vulnerable to ai so if you own an apple phone that's on you”#you aren't a communist you're just self-centered

454 notes

Text

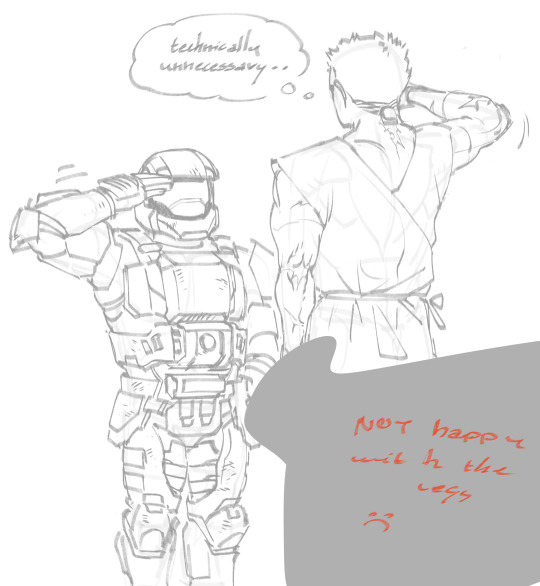

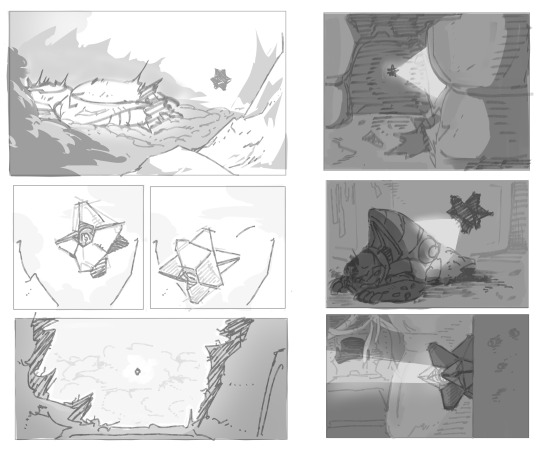

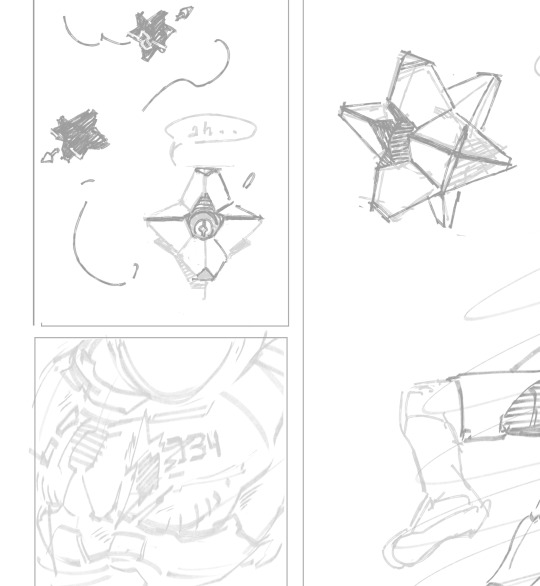

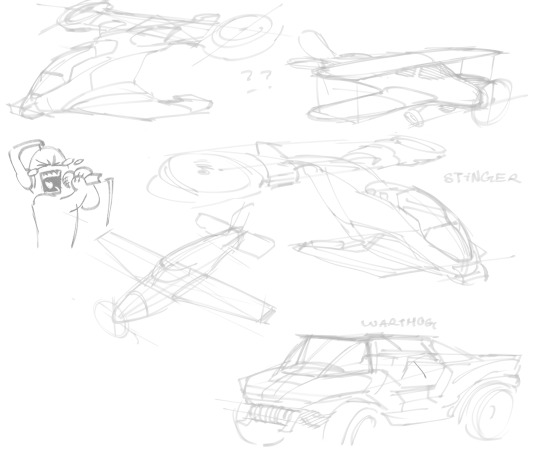

heyyyy *leans on old halo art i never posted*

#my art#fanart#digital art#sketch#halo game#halo fanart#master chief#cortana#thel vadam#fernando esparza#echo-216#sangheili#orbital drop shock trooper#jameson locke#edward buck#kelly-087#frederic-104#linda-058#the weapon halo#theres a role reversal au where cortana is the spartan and chief is the ai. left out the art where i drew him with cortana's markings#another au where a destiny ghost revives sam. not sure where i was gonna go with that one tbh; but it was some good value practice#and that fantasy au i got frustrated on. i couldnt decide about the armor and other things but it was very fun overall#maybe i'll revisit it someday and hash the details out#and some sangheili practice which i still dont understand their anatomy (。_。)#ok i think thats it. heres to more halo art this year 💪

245 notes

Text

Something I love about Leo is that, canonically, he IS capable of cooking, he’s just completely incapable of using a toaster. He’s banned from the kitchen not out of an inability to make edible food, but because being within six feet of a toaster causes the poor appliance to spontaneously combust.

#rottmnt#rise of the teenage mutant ninja turtles#rottmnt leo#rottmnt headcanons#rise leo#all Leos mortal enemy: toasters#side note but thinking about this aspect of Leo’s character really has me wanting to make a deeper dive into Leo as a Jack of All Trades#because he has aspects of this all throughout the series#where he can do many things he’s just not the best at it#like he can cook but he’s no Mikey#he can - canonically - rewire an *AI PROGRAM* but it goes very wrong#he can lift both Mikey and Raph simultaneously but he struggles to do so where his other brothers don’t even break a sweat#bro is a Jack of All Trades Master of None frfr#and Leo is even more interesting with this in mind because he uses what he CAN do so well#it’s like how he can see his family’s strengths and weaknesses and knows exactly how they work#his skill set is made way better simply by his personal USE of those skills not by the skills themselves#portals and teleportation are only op if you know how to weaponize them#given time he ABSOLUTELY could#okay I shut up now this was supposed to be about toasters#but yeah all the boys have a bunch of skills under their belts outside the typical ones#but Leo stands out to me for having skills his other brothers have but to a much lesser degree in a lot of cases#and he works with what he has so well that that is a skill in itself

435 notes

Text

AI is a WMD

I'm in TARTU, ESTONIA! AI, copyright and creative workers' labor rights (TOMORROW, May 10, 8AM: Science Fiction Research Association talk, Institute of Foreign Languages and Cultures building, Lossi 3, lobby). A talk for hackers on seizing the means of computation (TOMORROW, May 10, 3PM, University of Tartu Delta Centre, Narva 18, room 1037).

Fun fact: "The Tragedy Of the Commons" is a hoax created by the white nationalist Garrett Hardin to justify stealing land from colonized people and moving it from collective ownership, "rescuing" it from the inevitable tragedy by putting it in the hands of a private owner, who will care for it properly, thanks to "rational self-interest":

https://pluralistic.net/2023/05/04/analytical-democratic-theory/#epistocratic-delusions

Get that? If control over a key resource is diffused among the people who rely on it, then (Hardin claims) those people will all behave like selfish assholes, overusing and undermaintaining the commons. It's only when we let someone own that commons and charge rent for its use that (Hardin says) we will get sound management.

By that logic, Google should be the internet's most competent and reliable manager. After all, the company used its access to the capital markets to buy control over the internet, spending billions every year to make sure that you never try a search-engine other than its own, thus guaranteeing it a 90% market share:

https://pluralistic.net/2024/02/21/im-feeling-unlucky/#not-up-to-the-task

Google seems to think it's got the problem of deciding what we see on the internet licked. Otherwise, why would the company flush $80b down the toilet with a giant stock-buyback, and then do multiple waves of mass layoffs, from last year's 12,000-person bloodbath to this year's deep cuts to the company's "core teams"?

https://qz.com/google-is-laying-off-hundreds-as-it-moves-core-jobs-abr-1851449528

And yet, Google is overrun with scams and spam, which find their way to the very top of the first page of its search results:

https://pluralistic.net/2023/02/24/passive-income/#swiss-cheese-security

The entire internet is shaped by Google's decisions about what shows up on that first page of listings. When Google decided to prioritize shopping site results over informative discussions and other possible matches, the entire internet shifted its focus to producing affiliate-link-strewn "reviews" that would show up on Google's front door:

https://pluralistic.net/2024/04/24/naming-names/#prabhakar-raghavan

This was catnip to the kind of sociopath who a) owns a hedge-fund and b) hates journalists for being pain-in-the-ass, stick-in-the-mud sticklers for "truth" and "facts" and other impediments to the care and maintenance of a functional reality-distortion field. These dickheads started buying up beloved news sites and converting them to spam-farms, filled with garbage "reviews" and other Google-pleasing, affiliate-fee-generating nonsense.

(These news-sites were vulnerable to acquisition in large part thanks to Google, whose dominance of ad-tech lets it cream 51 cents off every ad dollar and whose mobile OS monopoly lets it steal 30 cents off every in-app subscriber dollar):

https://www.eff.org/deeplinks/2023/04/saving-news-big-tech

Now, the spam on these sites didn't write itself. Much to the chagrin of the tech/finance bros who bought up Sports Illustrated and other venerable news sites, they still needed to pay actual human writers to produce plausible word-salads. This was a waste of money that could be better spent on reverse-engineering Google's ranking algorithm and getting pride-of-place on search results pages:

https://housefresh.com/david-vs-digital-goliaths/

That's where AI comes in. Spicy autocomplete absolutely can't replace journalists. The planet-destroying, next-word-guessing programs from OpenAI and its competitors are incorrigible liars that require so much "supervision" that they cost more than they save in a newsroom:

https://pluralistic.net/2024/04/29/what-part-of-no/#dont-you-understand

But while a chatbot can't produce truthful and informative articles, it can produce bullshit – at unimaginable scale. Chatbots are the workers that hedge-fund wreckers dream of: tireless, uncomplaining, compliant and obedient producers of nonsense on demand.

That's why the capital class is so insatiably horny for chatbots. Chatbots aren't going to write Hollywood movies, but studio bosses hyperventilated at the prospect of a "writer" that would accept your brilliant idea and diligently turn it into a movie. You prompt an LLM in exactly the same way a studio exec gives writers notes. The difference is that the LLM won't roll its eyes and make sarcastic remarks about your brainwaves like "ET, but starring a dog, with a love plot in the second act and a big car-chase at the end":

https://pluralistic.net/2023/10/01/how-the-writers-guild-sunk-ais-ship/

Similarly, chatbots are a dream come true for a hedge fundie who ends up running a beloved news site, only to have to fight with their own writers to get the profitable nonsense produced at a scale and velocity that will guarantee a high Google ranking and millions in "passive income" from affiliate links.

One of the premier profitable nonsense companies is Advon, which helped usher in an era in which sites from Forbes to Money to USA Today create semi-secret "review" sites that are stuffed full of badly researched top-ten lists for products from air purifiers to cat beds:

https://housefresh.com/how-google-decimated-housefresh/

Advon swears that it only uses living humans to produce nonsense, and not AI. This isn't just wildly implausible, it's also belied by easily uncovered evidence, like its own employees' LinkedIn profiles, which boast of using AI to create "content":

https://housefresh.com/wp-content/uploads/2024/05/Advon-AI-LinkedIn.jpg

It's not true. Advon uses AI to produce its nonsense, at scale. In an excellent, deeply reported piece for Futurism, Maggie Harrison Dupré brings proof that Advon replaced its miserable human nonsense-writers with tireless chatbots:

https://futurism.com/advon-ai-content

Dupré describes how Advon's ability to create botshit at scale contributed to the enshittification of clients from Yoga Journal to the LA Times, "Us Weekly" to the Miami Herald.

All of this is very timely, because this is the week that Google finally bestirred itself to commence downranking publishers who engage in "site reputation abuse" – creating these SEO-stuffed fake reviews with the help of third parties like Advon:

https://pluralistic.net/2024/05/03/keyword-swarming/#site-reputation-abuse

(Google's policy only forbids site reputation abuse with the help of third parties; if these publishers take their nonsense production in-house, Google may allow them to continue to dominate its search listings):

https://developers.google.com/search/blog/2024/03/core-update-spam-policies#site-reputation

There's a reason so many people believed Hardin's racist "Tragedy of the Commons" hoax. We have an intuitive understanding that commons are fragile. All it takes is one monster to start shitting in the well where the rest of us get our drinking water and we're all poisoned.

The financial markets love these monsters. Mark Zuckerberg's key insight was that he could make billions by assembling vast dossiers of compromising, sensitive personal information on half the world's population without their consent, but only if he kept his costs down by failing to safeguard that data and the systems for exploiting it. He's like a guy who figures out that if he accumulates enough oily rags, he can extract so much low-grade oil from them that he can grow rich, but only if he doesn't waste money on fire-suppression:

https://locusmag.com/2018/07/cory-doctorow-zucks-empire-of-oily-rags/

Now Zuckerberg and the wealthy, powerful monsters who seized control over our commons are getting a comeuppance. The weak countermeasures they created to maintain the minimum levels of quality to keep their platforms as viable, going concerns are being overwhelmed by AI. This was a totally foreseeable outcome: the history of the internet is a story of bad actors who upended the assumptions built into our security systems by automating their attacks, transforming an assault that wouldn't be economically viable into a global, high-speed crime wave:

https://pluralistic.net/2022/04/24/automation-is-magic/

But it is possible for a community to maintain a commons. This is something Hardin could have discovered by studying actual commons, instead of inventing imaginary histories in which commons turned tragic. As it happens, someone else did exactly that: Nobel Laureate Elinor Ostrom:

https://www.onthecommons.org/magazine/elinor-ostroms-8-principles-managing-commmons/

Ostrom described how commons can be wisely managed, over very long timescales, by communities that self-governed. Part of her work concerns how users of a commons must have the ability to exclude bad actors from their shared resources.

When that breaks down, commons can fail – because there's always someone who thinks it's fine to shit in the well rather than walk 100 yards to the outhouse.

Enshittification is the process by which control over the internet moved from self-governance by members of the commons to acts of wanton destruction committed by despicable, greedy assholes who shit in the well over and over again.

It's not just the spammers who take advantage of Google's lazy incompetence, either. Take "copyleft trolls," who post images using outdated Creative Commons licenses that allow them to terminate the CC license if a user makes minor errors in attributing the images they use:

https://pluralistic.net/2022/01/24/a-bug-in-early-creative-commons-licenses-has-enabled-a-new-breed-of-superpredator/

The first copyleft trolls were individuals, but these days, the racket is dominated by a company called Pixsy, which pretends to be a "rights protection" agency that helps photographers track down copyright infringers. In reality, the company is committed to helping copyleft trolls entrap innocent Creative Commons users into paying hundreds or even thousands of dollars to use images that are licensed for free use. Just as Advon upends the economics of spam and deception through automation, Pixsy has figured out how to send legal threats at scale, robolawyering demand letters that aren't signed by lawyers; the company refuses to say whether any lawyer ever reviews these threats:

https://pluralistic.net/2022/02/13/an-open-letter-to-pixsy-ceo-kain-jones-who-keeps-sending-me-legal-threats/

This is shitting in the well, at scale. It's an online WMD, designed to wipe out the commons. Creative Commons has allowed millions of creators to produce a commons with billions of works in it, and Pixsy exploits a minor error in the early versions of CC licenses to indiscriminately manufacture legal land-mines, wantonly blowing off innocent commons-users' legs and laughing all the way to the bank:

https://pluralistic.net/2023/04/02/commafuckers-versus-the-commons/

We can have an online commons, but only if it's run by and for its users. Google has shown us that any "benevolent dictator" who amasses power in the name of defending the open internet will eventually grow too big to care, and will allow our commons to be demolished by well-shitters:

https://pluralistic.net/2024/04/04/teach-me-how-to-shruggie/#kagi

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/09/shitting-in-the-well/#advon

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

Catherine Poh Huay Tan (modified) https://www.flickr.com/photos/68166820@N08/49729911222/

Laia Balagueró (modified) https://www.flickr.com/photos/lbalaguero/6551235503/

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/

#pluralistic#pixsy#wmds#automation#ai#botshit#force multipliers#weapons of mass destruction#commons#shitting in the drinking water#ostrom#elinor ostrom#sports illustrated#slop#advon#google#monopoly#site reputation abuse#enshittification#Maggie Harrison Dupré#futurism

320 notes

Text

Artist: dietmar höpfl

#abstract#abstract art#art#ai art#ai artwork#apocalypse#apocalyptic#dystopia#dystopian#postapocalypse#postapo#postapocalyptic#postapoc#wasteland#wastelands#fantasy#scifi#fallout#doomsday#preppers#postnuclear#bugoutbag#nuclear weapons#1950s#1950s aesthetic#1950s housewife#urban decay#abandoned#urbex#ruins

101 notes

Text

my hot take is that she works like a digimon and can and will alter her form at will based on data she's been exposed to, which is a lot after frontiers and that's fun

#sth#sonic#sage#sage the ai#sage sonic frontiers#purp doot#i wanted to draw smth omnimon inspired too to drive the point across but i am so sleepy#but anyway i have weaponized the child and im feeling based for that

530 notes

Text

hey so you guys ever think about how master chief nearly inserted the index into the core and pulled the plug on all sentient life in the entire galaxy? you ever think about how he thought it was the right thing right up until cortana told him it wasn't? you ever think about how he stood there realising the fact he nearly killed all the people he would have died to protect? you ever think? you ever think??? you ever think????????

#this is a man so used to taking orders#sure he knows how to disobey them#we see that happen several times#but he never really gets out of that mindset of being a weapon#of being a machine#of not being the one to think#he left without one ai to depend upon and came back with another#only that the new one didn't have such good intentions#halo#halo combat evolved#halo ce#master chief#john-117#you'll have to forgive me if this is out of character#because i have very niche feelings on john#he's such an emotional character#'spartans don't cry. spartans don't feel'#except he feels everything. all the time. on behalf of everybody in the entire world#he fights and fights and feels everybody's agonies a thousand times over. then he feels his own#spartan torture sim#lucky me

78 notes

Text

There is one brain cell in the Base when Zo is gone and Beta has custody of it

#my art#horizon forbidden west#art#kotallo#aloy#illustration#kotaloy#hfw kotallo#hfw aloy#hfw alva#things that go boom#listen i actually think Kotallo's arm could supercharge that Zenith weapon and it would be a really rad combo move#but do i think Aloy and Kotallo could do it without blowing something up? absolutely not#and Alva has it recorded on her Focus for all time now#somewhere GAIA is getting a migraine which is impressive for an AI

339 notes

Text

I just want to believe...

The song fits them both really well to me -- there are so many lines and screenshots to pair with each, but for now I'll just stick with a cute outfit swap :)

#milgram#fuuta kajiyama#mahiru shiina#nilfruits#i have other things to prep and post later this week but im Impatient and want to post this now >:3#AI GA#SAMERU KORO NI#BOKU O HANASANAIDE OITE NEE#i love comparing that frame of all the eyes looking and turning to weapons to the backdraft eyes#and theres also a trying-to-touch-fire one lol#something something both the mv and TIHTBILWY opening with the girl facing back and turning around like that and the Center Couch frames#and there being a distinct sad blue reality vs the colorful world of imagination and love#oughghhh#the character colors even work for them ‼️‼️‼️#goodnight pals 👍 ill just be here thinking of angel wing mappi.....

65 notes

Text

"Dherkari" (0002)

(More of The Warrior Prince Series)

0001

#ai man#ai generated#ai art community#ai artwork#heroic fantasy#gay heroic fantasy#gay ai art#homo art#male physique#male form#male figure#male art#ai gay#bald#bald man#inked#tatted#jewelry#weapons#muscular#bearded man#armor#fashion illustration#art direction#action hero#heroic#fantasy art#black male body#black male beauty#warrior prince

86 notes

Text

Emerie Week | Day 2 - Soul of a Warrior

@emerieweekofficial

.

.

.

instagram

#our badass queen with a big ass weapon#emerieweek2024#acotar emerie#emerie of illyria#emerie acosf#acotar fanart#no ai art#Ella art✨

72 notes

Text

By o_to_to

#nestedneons#cyberpunk#cyberpunk art#cyberpunk aesthetic#art#cyberpunk artist#cyberwave#megacity#futuristic city#scifi#cyberpunk neon city#neon city#neoncore#scifi art#scifi world#scifi aesthetic#scifi weapon#ai art#ai artwork#ai artist#aiartcommunity#thisisaiart#stable diffusion#urbex#urban decay#dystopian#dystopic future#dystopia

140 notes

Text

[ID: The “Shout out to Women’s Day” meme, edited to read, “Shout out to ai girls in media fr 🤞🏾 / Gotta be one of my favorite genders.” A collage of several characters has been edited on top. From left to right, top to bottom, they include: The Weapon from Halo, Lyla from Spider-Man: Across the Spider-Verse, Lyla from Spider-Man 2099, GLaDOS from Portal, Aiba from AI: The Somnium Files, Cortana from Halo and Tama from AI: Nirvana Initiative. End ID]

#thought about the weapon too hard and realized how predictable i am#do i tag everyone. fuck it why not#halo#cortana#the weapon#ai: the somnium files#ai: nirvana initiative#aiba#tama#portal#glados#spider-man: across the spider-verse#spider-man 2099#lyla

356 notes

Text

Unironically think that each of the bros (+April) don’t actually get how impressive their feats really are so they just do what they do and on the off chance someone comments on those feats they all react like:

#rottmnt#tmnt#rise of the teenage mutant ninja turtles#no but really#I love thinking that they’re actually way more prideful about the stuff that does not even hold a candle to their other feats#like yeah Mikey can open a hole in the space time continuum but that’s nothing have you TRIED his manicotti??#yeah Leo has outsmarted multiple incredibly intelligent and capable people AND knows how to rewire AI but eh did you hear his one liners?#donnie accidentally made regular animatronics sentient but that was an oopsie check out his super cool hammer instead#raph was able to fake his own death to save the entirety of New York and then be the one to bring about his brothers’ inner powers-#but forget about that did you know he can punch like a BOSS?#and April can survive and THRIVE against a demonic suit of armor alongside literal weapons of destruction as a regular human-#but her crane license is where it’s really at#(not to mention all the other secondary talents and skills these kids all just sorta have like - they are VERY CAPABLE)#honorable mentions in this regard go moments like#donnie ordering around an entire legion of woodland critters to create a woodsy tech paradise#or Leo being able to avoid an entire crowd’s blind spots in plain sight#and also being able to hold a pose without moving a millimeter while covered in paint and being transported no I’m NOT OVER THAT#Mikey casually being ridiculously strong and also knowledgeable enough about building to help Donnie make the puppy paradise for Todd#Raph literally led an entire group of hardened criminals like that entire episode was just#basically they’re all so capable????#and at the same time prone to wiping out at the most inopportune of moments#love them sm

272 notes
