#AI Use Case Development Platform

Explore tagged Tumblr posts

Text

youtube

#AI Factory#UnifyCloud#CloudAtlas#AI Use Case Development Platform#AI Solution Gallary#AI#ML#Youtube

0 notes

Text

At the California Institute of the Arts, it all started with a videoconference between the registrar’s office and a nonprofit.

One of the nonprofit’s representatives had enabled an AI note-taking tool from Read AI. At the end of the meeting, it emailed a summary to all attendees, said Allan Chen, the institute’s chief technology officer. They could have a copy of the notes, if they wanted — they just needed to create their own account.

Next thing Chen knew, Read AI’s bot had popped up in about a dozen of his meetings over a one-week span. It was in one-on-one check-ins. Project meetings. “Everything.”

The spread “was very aggressive,” recalled Chen, who also serves as vice president for institute technology. And it “took us by surprise.”

The scenario underscores a growing challenge for colleges: Tech adoption and experimentation among students, faculty, and staff — especially as it pertains to AI — are outpacing institutions’ governance of these technologies and may even violate their data-privacy and security policies.

That has been the case with note-taking tools from companies including Read AI, Otter.ai, and Fireflies.ai. They can integrate with platforms like Zoom, Google Meet, and Microsoft Teams to provide live transcriptions, meeting summaries, audio and video recordings, and other services.

Higher-ed interest in these products isn’t surprising. For those bogged down with virtual rendezvouses, a tool that can ingest long, winding conversations and spit out key takeaways and action items is alluring. These services can also aid people with disabilities, including those who are deaf.

But the tools can quickly propagate unchecked across a university. They can auto-join any virtual meetings on a user’s calendar — even if that person is not in attendance. And that’s a concern, administrators say, if it means third-party products that an institution hasn’t reviewed may be capturing and analyzing personal information, proprietary material, or confidential communications.

“What keeps me up at night is the ability for individual users to do things that are very powerful, but they don’t realize what they’re doing,” Chen said. “You may not realize you’re opening a can of worms.”

The Chronicle documented both individual and universitywide instances of this trend. At Tidewater Community College, in Virginia, Heather Brown, an instructional designer, unwittingly gave Otter.ai’s tool access to her calendar, and it joined a Faculty Senate meeting she didn’t end up attending. “One of our [associate vice presidents] reached out to inform me,” she wrote in a message. “I was mortified!”

23K notes


Text

(Read on our blog)

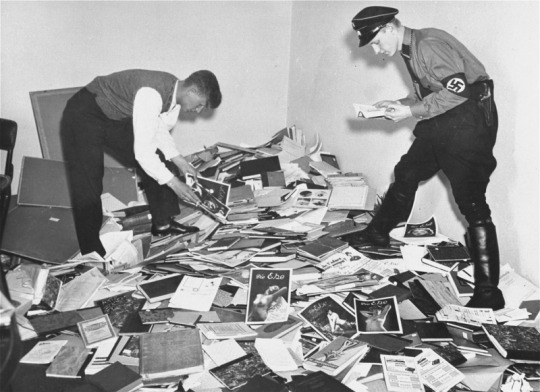

Beginning in 1933, the Nazis burned books to erase the ideas they feared—works of literature, politics, philosophy, criticism; works by Jewish and leftist authors, and research from the Institute for Sexual Science, which documented and affirmed queer and trans identities.

(Nazis collect "anti-German" books to be destroyed at a Berlin book-burning on May 10, 1933. Source)

Stories tell truths.

These weren’t just books; they were lifelines.

Writing by, for, and about marginalized people isn’t just about representation, but survival. Writing has always been an incredibly powerful tool—perhaps the most resilient form of resistance, as fascism seeks to disconnect people from knowledge, empathy, history, and finally each other. Empathy is one of the most valuable resources we have, and in the darkest times writers armed with nothing but words have exposed injustice, changed culture, and kept their communities connected.

(A Nazi student and a member of the SA raid the Institute for Sexual Science's library in Berlin, May 6, 1933. Source)

Less than two weeks after the US presidential inauguration, the nightmare of Project 2025 is starting to unfold. What these proposals will mean for creative freedom and freedom of expression is uncertain, but the intent is clear. A chilling effect on subjects that writers engage with every day—queer narratives, racial justice, and critiques of power—is already manifest. The places where these works are published and shared may soon face increased pressure, censorship, and legal jeopardy.

And with speed-run fascism comes a rising tide of misinformation and hostility. The tech giants that facilitate writing, sharing, publishing, and communication—Google, Microsoft, Amazon, the-hellscape-formerly-known-as-Twitter, Facebook, TikTok—have folded like paper in a light breeze. OpenAI, embroiled in lawsuits for training its models on stolen works, is now positioned as the AI of choice for the administration, bolstered by a $500 billion investment. And privacy-focused companies are showing a newfound willingness to align with a polarizing administration, chilling news for writers who rely on digital privacy to protect their work and sources; even their personal safety.

Where does that leave writers?

Writing communities have always been a creative refuge, but they’re more than that now—they are a means of continuity. The information landscape is shifting rapidly, so staying informed on legal and political developments will be essential for protecting creative freedom and pushing back against censorship wherever possible. Direct your energy to the communities that need it, stay connected, check in on each other—and keep backup spaces in case platforms become unsafe.

We can’t stress this enough—support tools and platforms that prioritize creative freedom. The systems we rely on are being rewritten in real time, and the future of writing spaces depends on what we build now. We at Ellipsus will continue working to provide space for our community—one that protects and facilitates creative expression, not undermines it.

Above all—keep writing.

Keep imagining, keep documenting, keep sharing—keep connecting. Suppression thrives on silence, but words have survived every attempt at erasure.

- The Ellipsus team

#writeblr#writers on tumblr#writing#fiction#fanfic#fanfiction#us politics#american politics#lgbtq community#lgbtq rights#trans rights#freedom of expression#writers

3K notes


Text

The video showcases how AI chat GPT technology can help your online business in a number of ways. Some of the benefits of using this technology include improved customer service and satisfaction, enhanced data-driven insights, increased cost savings, and higher efficiency. Additionally, the video explains the advantages of using AI chat GPT compared to traditional methods, such as the ability to generate automated responses to customer inquiries in real-time. Finally, the video provides tips for implementing and optimizing AI chat GPT in your online business, such as coding examples and best practices for getting the most out of this technology.

#AI chatbot features#AI chatbot development#AI chatbot platforms#AI chatbot applications#AI chatbot user experience#AI chatbot use cases

0 notes

Text

Unpersoned

Support me this summer on the Clarion Write-A-Thon and help raise money for the Clarion Science Fiction and Fantasy Writers' Workshop!

My latest Locus Magazine column is "Unpersoned." It's about the implications of putting critical infrastructure into the private, unaccountable hands of tech giants:

https://locusmag.com/2024/07/cory-doctorow-unpersoned/

The column opens with the story of romance writer K Renee, as reported by Madeline Ashby for Wired:

https://www.wired.com/story/what-happens-when-a-romance-author-gets-locked-out-of-google-docs/

Renee is a prolific writer who used Google Docs to compose her books, and share them among early readers for feedback and revisions. Last March, Renee's Google account was locked, and she was no longer able to access ten manuscripts for her unfinished books, totaling over 220,000 words. Google's famously opaque customer service – a mix of indifferently monitored forums, AI chatbots, and buck-passing subcontractors – would not explain to her what rule she had violated, merely that her work had been deemed "inappropriate."

Renee discovered that she wasn't being singled out. Many of her peers had also seen their accounts frozen and their documents locked, and none of them were able to get an explanation out of Google. Renee and her similarly situated victims of Google lockouts were reduced to developing folk-theories of what they had done to be expelled from Google's walled garden; Renee came to believe that she had tripped an anti-spam system by inviting her community of early readers to access the books she was working on.

There's a normal way that these stories resolve themselves: a reporter like Ashby, writing for a widely read publication like Wired, contacts the company and triggers a review by one of the vanishingly small number of people with the authority to undo the determinations of the Kafka-as-a-service systems that underpin the big platforms. The system's victim gets their data back and the company mouths a few empty phrases about how they take something-or-other "very seriously" and so forth.

But in this case, Google broke the script. When Ashby contacted Google about Renee's situation, Google spokesperson Jenny Thomson insisted that the policies for Google accounts were "clear": "we may review and take action on any content that violates our policies." If Renee believed that she'd been wrongly flagged, she could "request an appeal."

But Renee didn't even know what policy she was meant to have broken, and the "appeals" went nowhere.

This is an underappreciated aspect of "software as a service" and "the cloud." As companies from Microsoft to Adobe to Google withdraw the option to use software that runs on your own computer to create files that live on that computer, control over our own lives is quietly slipping away. Sure, it's great to have all your legal documents scanned, encrypted and hosted on GDrive, where they can't be burned up in a house-fire. But if a Google subcontractor decides you've broken some unwritten rule, you can lose access to those docs forever, without appeal or recourse.

That's what happened to "Mark," a San Francisco tech worker whose toddler developed a UTI during the early covid lockdowns. The pediatrician's office told Mark to take a picture of his son's infected penis and transmit it to the practice using a secure medical app. However, Mark's phone was also set up to synch all his pictures to Google Photos (this is a default setting), and when the picture of Mark's son's penis hit Google's cloud, it was automatically scanned and flagged as Child Sex Abuse Material (CSAM, better known as "child porn"):

https://pluralistic.net/2022/08/22/allopathic-risk/#snitches-get-stitches

Without contacting Mark, Google sent a copy of all of his data – searches, emails, photos, cloud files, location history and more – to the SFPD, and then terminated his account. Mark lost his phone number (he was a Google Fi customer), his email archives, all the household and professional files he kept on GDrive, his stored passwords, his two-factor authentication via Google Authenticator, and every photo he'd ever taken of his young son.

The SFPD concluded that Mark hadn't done anything wrong, but it was too late. Google had permanently deleted all of Mark's data. The SFPD had to mail a physical letter to Mark telling him he wasn't in trouble, because he had no email and no phone.

Mark's not the only person this happened to. Writing about Mark for the New York Times, Kashmir Hill described other parents, like a Houston father identified as "Cassio," who also lost their accounts and found themselves blocked from fundamental participation in modern life:

https://www.nytimes.com/2022/08/21/technology/google-surveillance-toddler-photo.html

Note that in none of these cases did the problem arise from the fact that Google services are advertising-supported, and because these people weren't paying for the product, they were the product. Buying an $800 Pixel phone or paying more than $100/year for a Google Drive account means that you're definitely paying for the product, and you're still the product.

What do we do about this? One answer would be to force the platforms to provide service to users who, in their judgment, might be engaged in fraud, or trafficking in CSAM, or arranging terrorist attacks. This is not my preferred solution, for reasons that I hope are obvious!

We can try to improve the decision-making processes at these giant platforms so that they catch fewer dolphins in their tuna-nets. The "first wave" of content moderation appeals focused on the establishment of oversight and review boards that wronged users could appeal their cases to. The idea was to establish these "paradigm cases" that would clarify the tricky aspects of content moderation decisions, like whether uploading a Nazi atrocity video in order to criticize it violated a rule against showing gore, Nazi paraphernalia, etc.

This hasn't worked very well. A proposal for "second wave" moderation oversight based on arms-length semi-employees at the platforms who gather and report statistics on moderation calls and complaints hasn't gelled either:

https://pluralistic.net/2022/03/12/move-slow-and-fix-things/#second-wave

Both the EU and California have privacy rules that allow users to demand their data back from platforms, but neither has proven very useful (yet) in situations where users have their accounts terminated because they are accused of committing gross violations of platform policy. You can see why this would be: if someone is accused of trafficking in child porn or running a pig-butchering scam, it would be perverse to shut down their account but give them all the data they need to go on committing these crimes elsewhere.

But even where you can invoke the EU's GDPR or California's CCPA to get your data, the platforms deliver that data in the most useless, complex blobs imaginable. For example, I recently used the CCPA to force Mailchimp to give me all the data they held on me. Mailchimp – a division of the monopolist and serial fraudster Intuit – is a favored platform for spammers, and I have been added to thousands of Mailchimp lists that bombard me with unsolicited press pitches and come-ons for scam products.

Mailchimp has spent a decade ignoring calls to allow users to see what mailing lists they've been added to, as a prelude to mass unsubscribing from those lists (for Mailchimp, the fact that spammers can pay it to send spam that users can't easily opt out of is a feature, not a bug). I thought that the CCPA might finally let me see the lists I'm on, but instead, Mailchimp sent me more than 5900 files, scattered through which were the internal serial numbers of the lists my name had been added to – but without the names of those lists or any contact information for their owners. I can see that I'm on more than 1,000 mailing lists, but I can't do anything about it.

Mailchimp shows how a rule requiring platforms to furnish data-dumps can be easily subverted, and its conduct goes a long way to explaining why a decade of EU policy requiring these dumps has failed to make a dent in the market power of the Big Tech platforms.

The EU has a new solution to this problem. With its 2024 Digital Markets Act, the EU is requiring platforms to furnish APIs – programmatic ways for rivals to connect to their services. With the DMA, we might finally get something parallel to the cellular industry's "number portability" for other kinds of platforms.

If you've ever changed cellular platforms, you know how smooth this can be. When you get sick of your carrier, you set up an account with a new one and get a one-time code. Then you call your old carrier, endure their pathetic begging not to switch, give them that number and within a short time (sometimes only minutes), your phone is now on the new carrier's network, with your old phone-number intact.

This is a much better answer than forcing platforms to provide service to users whom they judge to be criminals or otherwise undesirable, but the platforms hate it. They say they hate it because it makes them complicit in crimes ("if we have to let an accused fraudster transfer their address book to a rival service, we abet the fraud"), but it's obvious that their objection is really about being forced to reduce the pain of switching to a rival.

There's a superficial reasonableness to the platforms' position, but only until you think about Mark, or K Renee, or the other people who've been "unpersonned" by the platforms with no explanation or appeal.

The platforms have rigged things so that you must have an account with them in order to function, but they also want to have the unilateral right to kick people off their systems. The combination of these demands represents more power than any company should have, and Big Tech has repeatedly demonstrated its unfitness to wield this kind of power.

This week, I lost an argument with my accountants about this. They provide me with my tax forms as links to a Microsoft Cloud file, and I need to have a Microsoft login in order to retrieve these files. This policy – and a prohibition on sending customer files as email attachments – came from their IT team, and it was in response to a requirement imposed by their insurer.

The problem here isn't merely that I must now enter into a contractual arrangement with Microsoft in order to do my taxes. It isn't just that Microsoft's terms of service are ghastly. It's not even that they could change those terms at any time, for example, to ingest my sensitive tax documents in order to train a large language model.

It's that Microsoft – like Google, Apple, Facebook and the other giants – routinely disconnects users for reasons it refuses to explain, and offers no meaningful appeal. Microsoft tells its business customers, "force your clients to get a Microsoft account in order to maintain communications security" but also reserves the right to unilaterally ban those clients from having a Microsoft account.

There are examples of this all over. Google recently flipped a switch so that you can't complete a Google Form without being logged into a Google account. Now, my ability to pursue all kinds of matters both consequential and trivial turns on Google's good graces, which can change suddenly and arbitrarily. If I was like Mark, permanently banned from Google, I wouldn't have been able to complete Google Forms this week telling a conference organizer what sized t-shirt I wear, but also telling a friend that I could attend their wedding.

Now, perhaps some people really should be locked out of digital life. Maybe people who traffic in CSAM should be locked out of the cloud. But the entity that should make that determination is a court, not a Big Tech content moderator. It's fine for a platform to decide it doesn't want your business – but it shouldn't be up to the platform to decide that no one should be able to provide you with service.

This is especially salient in light of the chaos caused by Crowdstrike's catastrophic software update last week. Crowdstrike demonstrated what happens to users when a cloud provider accidentally terminates their account, but while we're thinking about reducing the likelihood of such accidents, we should really be thinking about what happens when you get Crowdstruck on purpose.

The wholesale chaos that Windows users and their clients, employees, users and stakeholders underwent last week could have been pieced out retail. It could have come as a court order (either by a US court or a foreign court) to disconnect a user and/or brick their computer. It could have come as an insider attack, undertaken by a vengeful employee, or one who was on the take from criminals or a foreign government. The ability to give anyone in the world a Blue Screen of Death could be a feature and not a bug.

It's not that companies are sadistic. When they mistreat us, it's nothing personal. They've just calculated that it would cost them more to run a good process than our business is worth to them. If they know we can't leave for a competitor, if they know we can't sue them, if they know that a tech rival can't give us a tool to get our data out of their silos, then the expected cost of mistreating us goes down. That makes it economically rational to seek out ever-more trivial sources of income that impose ever-more miserable conditions on us. When we can't leave without paying a very steep price, there's practically a fiduciary duty to find ways to upcharge, downgrade, scam, screw and enshittify us, right up to the point where we're so pissed that we quit.

Google could pay competent decision-makers to review every complaint about an account disconnection, but the cost of employing that large, skilled workforce vastly exceeds their expected lifetime revenue from a user like Mark. The fact that this results in the ruination of Mark's life isn't Google's problem – it's Mark's problem.

The cloud is many things, but most of all, it's a trap. When software is delivered as a service, when your data and the programs you use to read and write it live on computers that you don't control, your switching costs skyrocket. Think of Adobe, which no longer lets you buy programs at all, but instead insists that you run its software via the cloud. Adobe used the fact that you no longer own the tools you rely upon to cancel its Pantone color-matching license. One day, every Adobe customer in the world woke up to discover that the colors in their career-spanning file collections had all turned black, and would remain black until they paid an upcharge:

https://pluralistic.net/2022/10/28/fade-to-black/#trust-the-process

The cloud allows the companies whose products you rely on to alter the functioning and cost of those products unilaterally. Like mobile apps – which can't be reverse-engineered and modified without risking legal liability – cloud apps are built for enshittification. They are designed to shift power away from users to software companies. An app is just a web-page wrapped in enough IP to make it a felony to add an ad-blocker to it. A cloud app is some Javascript wrapped in enough terms of service clickthroughs to make it a felony to restore old features that the company now wants to upcharge you for.

Google's defenestration of K Renee, Mark and Cassio may have been accidental, but Google's capacity to defenestrate all of us, and the enormous cost we all bear if Google does so, has been carefully engineered into the system. Same goes for Apple, Microsoft, Adobe and anyone else who traps us in their silos. The lesson of the Crowdstrike catastrophe isn't merely that our IT systems are brittle and riddled with single points of failure: it's that these failure-points can be tripped deliberately, and that doing so could be in a company's best interests, no matter how devastating it would be to you or me.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/07/22/degoogled/#kafka-as-a-service

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

522 notes


Note

AI is theft.

Character.Ai still uses **stolen** data. Support real artists and writers. RP with real people.

IAMREALpleasegimmeahotchocotugmeinandgimmeasinglegoodreasontoliiiveeeeaaaaAAAAAAA

*Breakdown joins the chat*

An anon? How courageous of you. /lh

Actually right now you did what many chatbot users do— hide behind Persona just in case people don't accept your writing/rp style. But it's easier to play safe, I give you that. Your mental health should be your first priority no matter what people say

I do my art, write snippets of text, develop OCs and RP with people, but still make chatbots on different platforms

Why?

For shits and giggles

But no, friends, seriously

We already live with that and apparently are gonna continue to, unless the authorities decide to step in

For me cai was a tool to improve my vocabulary since 1) not so many native speakers were willing/had time to help, 2) even if they did, we had jobs and damn timezones which made our coordination harder, 3) I found out that ppl were simply too shy to play

Recently I've learned the term "Cringe culture" which is cringe itself and hella annoying at that— some writers are fucking scared to post, to be met with toxicity instead of actually useful critique

Anywho, the topic is controversial af.

On one hand, it did writers dirty. Well, fuck. On the other hand, your T9 was also trained on something and I deem LLM not much smarter than a huge T9 [a predictive text technology which almost every keyboard for phones and tablets has]

The problem I see is that the ai developers didn't ask for any data

How much easier everything would go if they had manners and/or paid for some materials? I usually don't mind lending a pen if someone asks, but can bite one's hand off if they grab it

As you might've noticed, I wanna use this ask to bring up some other interesting topics

My man, I've heard enough of "Why trying if ai renders better than me/uses better words"

Anxiety.

That's what makes me sad

If we ever manage to change that, to make people realise that human-made art is a freaking precious treasure with hours of effort spent on it, maybe earth heals and unicorns return

You buy funny one-nickel-worth stuff from Aliexpress, no? That's AI. As well as a half-gnawed pencil one finds in their old school backpack to write down an anecdote they've just heard

It's easy to focus on the bad side

If fish is ill in a dirty tank, are you gonna add more sand? Pfftt. I assume you gonna change filters, scrub that bastard clean and add more lil fishies to make others feel less lonely, instead of grumbling that you shouldn't have gotten any new weeds for the bowl in the first place, because, who would've guessed, fish eat that. And poop. —a process natural as breathing

My suggestion is that we try to create a safe space which would encourage writing outside of roleplay, make young artists feel safe regardless of their level of skill

Or maybe I'm too far from the Internet in general and don't understand why writing example messages for a silly toy is suddenly a bad thing when it encourages kids to try themselves in text RPGs without any risk of being judged for that

An interesting topic you gave me, really, I've spent some time contemplating about it

Feel free to suggest things that we, as a community, can do right here and right now, because, gods know, I'm personally unable to atta-ta a corporation for "using language we all speak", especially when chatbots have some features which would be a damn shame not to use in language learning since it's so engaging and teaches kids new words in a forgiving game-like way

Though I hope there will be some law regulating ai and the use of ai-made products soon. Let's give it some time

#imho#character ai#character.ai#cai#cai ask#ai#controversy#i want to hear your thoughts#discussion#ai chatbot#writers on tumblr#writerscommunity#writers assemble#teachers#cmere#scp fandom#you too since we write a lot#yapping#ted talks#help#mental health#anxiety#psychology#fear#social anxiety#xoul.ai#xoul ai#moescapeai#yodayo#dnd

31 notes


Text

Anyone who has spent even 15 minutes on TikTok over the past two months will have stumbled across more than one creator talking about Project 2025, a nearly thousand-page policy blueprint from the Heritage Foundation that outlines a radical overhaul of the government under a second Trump administration. Some of the plan’s most alarming elements—including severely restricting abortion and rolling back the rights of LGBTQ+ people—have already become major talking points in the presidential race.

But according to a new analysis from the Tech Oversight Project, Project 2025 includes hefty handouts and deregulation for big business, and the tech industry is no exception. The plan would roll back environmental regulation to the benefit of the AI and crypto industries, quash labor rights, and scrap whole regulatory agencies, handing a massive win to big companies and billionaires—including many of Trump’s own supporters in tech and Silicon Valley.

“Their desire to eliminate whole agencies that are the enforcers of antitrust, of consumer protection is a huge, huge gift to the tech industry in general,” says Sacha Haworth, executive director at the Tech Oversight Project.

One of the most drastic proposals in Project 2025 suggests abolishing the Federal Reserve altogether, which would allow banks to back their money using cryptocurrencies, if they so choose. And though some conservatives have railed against the dominance of Big Tech, Project 2025 also suggests that a second Trump administration could abolish the Federal Trade Commission (FTC), which currently has the power to enforce antitrust laws.

Project 2025 would also drastically shrink the role of the National Labor Relations Board, the independent agency that protects employees’ ability to organize and enforces fair labor practices. This could have a major knock-on effect for tech companies: In January, Musk’s SpaceX filed a lawsuit in a Texas federal court claiming that the National Labor Relations Board (NLRB) was unconstitutional after the agency said the company had illegally fired eight employees who sent a letter to the company’s board saying that Musk was a “distraction and embarrassment.” Last week, a Texas judge ruled that the structure of the NLRB—which includes a director that can’t be fired by the president—was unconstitutional, and experts believe the case may wind its way to the Supreme Court.

This proposal from Project 2025 could help quash the nascent unionization efforts within the tech sector, says Darrell West, a senior fellow at the Brookings Institution’s Center for Technology Innovation. “Tech, of course, relies a lot on independent contractors,” says West. “They have a lot of jobs that don't offer benefits. It's really an important part of the tech sector. And this document seems to reward those types of business.”

For emerging technologies like AI and crypto, a rollback in environmental regulations proposed by Project 2025 would mean that companies would not be accountable for the massive energy and environmental costs associated with bitcoin mining and running and cooling the data centers that make AI possible. “The tech industry can then backtrack on emission pledges, especially given that they are all in on developing AI technology,” says Haworth.

The Republican Party’s official platform for the 2024 elections is even more explicit, promising to roll back the Biden administration’s early efforts to ensure AI safety and “defend the right to mine Bitcoin.”

All of these changes would conveniently benefit some of Trump’s most vocal and important backers in Silicon Valley. Trump’s running mate, Republican senator J.D. Vance of Ohio, has long had connections to the tech industry, particularly through his former employer, billionaire founder of Palantir and longtime Trump backer Peter Thiel. (Thiel’s venture capital firm, Founders Fund, invested $200 million in crypto earlier this year.)

Thiel is one of several other Silicon Valley heavyweights who have recently thrown their support behind Trump. In the past month, Elon Musk and David Sacks have both been vocal about backing the former president. Venture capitalists Marc Andreessen and Ben Horowitz, whose firm a16z has invested in several crypto and AI startups, have also said they will be donating to the Trump campaign.

“They see this as their chance to prevent future regulation,” says Haworth. “They are buying the ability to avoid oversight.”

Reporting from Bloomberg found that sections of Project 2025 were written by people who have worked or lobbied for companies like Meta, Amazon, and undisclosed bitcoin companies. Both Trump and independent candidate Robert F. Kennedy Jr. have courted donors in the crypto space, and in May, the Trump campaign announced it would accept donations in cryptocurrency.

But Project 2025 wouldn’t necessarily favor all tech companies. In the document, the authors accuse Big Tech companies of attempting “to drive diverse political viewpoints from the digital town square.” The plan supports legislation that would eliminate the immunities granted to social media platforms by Section 230, which protects companies from being legally held responsible for user-generated content on their sites, and pushes for “anti-discrimination” policies that “prohibit discrimination against core political viewpoints.”

It would also seek to impose transparency rules on social platforms, saying that the Federal Communications Commission (FCC) “could require these platforms to provide greater specificity regarding their terms of service, and it could hold them accountable by prohibiting actions that are inconsistent with those plain and particular terms.”

And despite Trump’s own promise to bring back TikTok, Project 2025 suggests the administration “ban all Chinese social media apps such as TikTok and WeChat, which pose significant national security risks and expose American consumers to data and identity theft.”

West says the plan is full of contradictions when it comes to its approach to regulation. It’s also, he says, notably soft on industries where tech billionaires and venture capitalists have put a significant amount of money, namely AI and cryptocurrency. “Project 2025 is not just to be a policy statement, but to be a fundraising vehicle,” he says. “So, I think the money angle is important in terms of helping to resolve some of the seemingly inconsistencies in the regulatory approach.”

It remains to be seen how impactful Project 2025 could be on a future Republican administration. On Tuesday, Paul Dans, the director of the Heritage Foundation’s Project 2025, stepped down. Though Trump himself has sought to distance himself from the plan, reporting from the Wall Street Journal indicates that while the project may be lower profile, it’s not going away. Instead, the Heritage Foundation is shifting its focus to making a list of conservative personnel who could be hired into a Republican administration to execute the party’s vision.

64 notes

Text

pulling out a section from this post (a very basic breakdown of generative AI) for easier reading;

AO3 and Generative AI

There are unfortunately some massive misunderstandings in regards to AO3 being included in LLM training datasets. This post was semi-prompted by the ‘Knot in my name’ AO3 tag (for those of you who haven’t heard of it, it’s supposed to be a fandom anti-AI event where AO3 writers help “further pollute” AI with Omegaverse), so let’s take a moment to address AO3 in conjunction with AI. We’ll start with the biggest misconception:

1. AO3 wasn’t used to train generative AI.

Or at least not any more than any other website on the internet. AO3 was not deliberately scraped to be used as LLM training data.

The AO3 moderators found traces of the Common Crawl web crawler in their servers. The Common Crawl is an open data repository of raw web page data, metadata extracts and text extracts collected from 10+ years of web crawling. Its collective data is measured in petabytes. (As a note, it also only features samples of the available pages on a given domain in its datasets, because its data is freely released under fair use and this is part of how they navigate copyright.) LLM developers use it and similar web crawls like Google’s C4 to bulk up the overall amount of pre-training data.

AO3 is big to an individual user, but it’s actually a small website when it comes to the amount of data used to pre-train LLMs. It’s also just a bad candidate for training data. As a comparison example, Wikipedia is often used as high quality training data because it’s a knowledge corpus and its moderators put a lot of work into maintaining a consistent quality across its web pages. AO3 is just a repository for all fanfic -- it doesn’t have any of that quality maintenance nor any knowledge density. Just in terms of practicality, even if people could get around the copyright issues, the sheer amount of work that would go into curating and labeling AO3’s data (or even a part of it) to make it useful for the fine-tuning stages most likely outstrips any potential usage.

Speaking of copyright, AO3 is a terrible candidate for training data just based on that. Even if people (incorrectly) think fanfic doesn’t hold copyright, there are plenty of books and texts that are public domain that can be found in online libraries that make for much better training data (or rather, there is a higher consistency in quality for them that would make them more appealing than fic for people specifically targeting written story data). And for any scrapers who don’t care about legalities or copyright, they’re going to target published works instead. Meta is in fact currently getting sued for including published books from a shadow library in its training data (note, this case is not in regards to any copyrighted material that might’ve been caught in the Common Crawl data, it’s regarding a book repository of published books that was scraped specifically to bring in some higher quality data for the first training stage). In a similar case, there’s an anonymous group suing Microsoft, GitHub, and OpenAI for training their LLMs on open source code.

Getting back to my point, AO3 is just not desirable training data. It’s not big enough to be worth scraping for pre-training data, it’s not curated enough to be considered for high quality data, and its data comes with copyright issues to boot. If LLM creators are saying there was no active pursuit in using AO3 to train generative AI, then there was (99% likelihood) no active pursuit in using AO3 to train generative AI.

AO3 has some preventative measures against being included in future Common Crawl datasets, which may or may not work, but there’s no way to remove any previously scraped data from that data corpus. And as a note for anyone locking their AO3 fics: that might potentially help against future AO3 scrapes, but it is rather moot if you post the same fic in full to other platforms like ffn, twitter, tumblr, etc. that have zero preventative measures against data scraping.

2. A/B/O is not polluting generative AI

…I’m going to be real, I have no idea what people expected to prove by asking AI to write Omegaverse fic. At the very least, people know A/B/O fics are not exclusive to AO3, right? The genre isn’t even exclusive to fandom -- it started in fandom, sure, but it expanded to general erotica years ago. It’s all over social media. It has multiple Wikipedia pages.

More to the point though, omegaverse would only be “polluting” AI if LLMs were spewing omegaverse concepts unprompted or like…associated knots with dicks more than rope or something. But people asking AI to write omegaverse and AI then writing omegaverse for them is just AI giving people exactly what they asked for. And…I hate to point this out, but LLMs writing for a niche the LLM trainers didn’t deliberately train the LLMs on is generally considered to be a good thing to the people who develop LLMs. The capability to fill niches developers didn’t even know existed increases LLMs’ marketability. If I were a betting man, what fandom probably saw as a GOTCHA moment, AI people probably saw as a good sign of LLMs’ future potential.

3. Individuals cannot affect LLM training datasets.

So back to the fandom event, with the stated goal of sabotaging AI scrapers via omegaverse fic.

…It’s not going to do anything.

Let’s add some numbers to this to help put things into perspective:

LLaMA’s 65 billion parameter model was trained on 1.4 trillion tokens. Of that 1.4 trillion tokens, about 67% of the training data was from the Common Crawl (roughly ~3 terabytes of data).

3 terabytes is 3,000,000,000 kilobytes.

That’s 3 billion kilobytes.

According to a news article I saw, there has been ~450k words total published for this campaign (*this was while it was going on, that number has probably changed, but you’re about to see why that still doesn’t matter). So, roughly speaking, ~450k of text is ~1012 KB (I’m going off the document size of a plain text doc for a fic whose word count is ~440k).

So 1,012 out of 3,000,000,000.

Aka 0.000034%.

And those 3 billion kilobytes are only about 2/3 of the data for the first stage of training, so the share of the full training set is even smaller than 0.000034%.
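For anyone who wants to check the math, here is a quick sketch of it in Python. The figures are the ones quoted in this post; the variable names are made up for illustration, and the last step assumes the 67% Common Crawl share can be read as a share by data size, which is a simplification.

```python
# Figures quoted in the post; names are illustrative only.
CAMPAIGN_KB = 1_012               # ~450k words of plain text
COMMON_CRAWL_KB = 3_000_000_000   # ~3 TB (decimal) of Common Crawl data
COMMON_CRAWL_SHARE = 0.67         # Common Crawl's share of the pre-training data

def percent_share(part_kb, whole_kb):
    """Return part as a percentage of whole."""
    return part_kb / whole_kb * 100

# Share of the Common Crawl slice alone.
share_of_crawl = percent_share(CAMPAIGN_KB, COMMON_CRAWL_KB)

# Assumption: treat the 67% as a share by size, so the full
# pre-training set is COMMON_CRAWL_KB / 0.67 and the campaign's
# share shrinks by the same factor.
share_of_all = share_of_crawl * COMMON_CRAWL_SHARE

print(f"{share_of_crawl:.6f}% of the Common Crawl slice")
print(f"{share_of_all:.6f}% of the full pre-training set")
```

Swapping in a bigger word count barely moves the result: even a hundred times more text would still be a few thousandths of a percent.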

And not to beat a dead horse, but 0.000034% is still grossly overestimating the potential impact of posting A/B/O fic. Remember, only parts of AO3 would get scraped for Common Crawl datasets. Which are also huge! The October 2022 Common Crawl dataset is 380 tebibytes. The April 2021 dataset is 320 tebibytes. The 3 terabytes of Common Crawl data used to train LLaMA was randomly selected data that totaled to less than 1% of one full dataset. Not to mention, LLaMA’s training dataset is currently on the (much) larger side as compared to most LLM training datasets.
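The "less than 1%" figure can be sanity-checked the same way. This is a rough sketch that just compares raw sizes, using the dataset sizes quoted above; it assumes terabytes are decimal and tebibytes are binary, and the dictionary keys are only labels.

```python
TB = 10**12    # terabyte (decimal)
TIB = 2**40    # tebibyte (binary)

# Common Crawl data used for LLaMA, as quoted in the post.
llama_crawl_bytes = 3 * TB

# Sizes of two Common Crawl datasets, as quoted in the post.
dumps = {
    "October 2022": 380 * TIB,
    "April 2021": 320 * TIB,
}

# Percentage of each full dataset that 3 TB would represent.
fractions = {name: llama_crawl_bytes / size * 100 for name, size in dumps.items()}

for name, pct in fractions.items():
    print(f"{name}: 3 TB is {pct:.2f}% of the dataset")
```

Both results come out under 1%, which matches the claim in the paragraph above.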

I also feel the need to point out again that AO3 is trying to prevent any Common Crawl scraping in the future, which would include protection for these new stories (several of which are also locked!).

Omegaverse just isn’t going to do anything to AI. Individual fics are going to do even less. Even if all of AO3 suddenly became omegaverse, it’s just not prominent enough to influence anything in regards to LLMs. You cannot affect training datasets in any meaningful way doing this. And while this might seem really disappointing, this is actually a good thing.

Remember that anything an individual can do to LLMs, the person you hate most can do the same. If it were possible for fandom to corrupt AI with omegaverse, fascists, bigots, and just straight up internet trolls could pollute it with hate speech and worse. AI already carries a lot of biases even while developers are actively trying to flatten that out, it’s good that organized groups can’t corrupt that deliberately.

#generative ai#pulling this out wasnt really prompted by anything specific#so much as heard some repeated misconceptions and just#sighs#nope#incorrect#u got it wrong#sorry#unfortunately for me: no consistent tag to block#sigh#ao3

101 notes

Text

Future of LLMs (or, "AI", as it is improperly called)

Posted a thread on bluesky and wanted to share it and expand on it here. I'm tangentially connected to the industry as someone who has worked in game dev, but I know people who work at more enterprise focused companies like Microsoft, Oracle, etc. I'm a developer who is highly AI-critical, but I'm also aware of where it stands in the tech world and thus I think I can share my perspective. I am by no means an expert, mind you, so take it all with a grain of salt, but I think that since so many creatives and artists are on this platform, it would be of interest here. Or maybe I'm just rambling, idk.

LLM art models ("AI art") will eventually crash and burn. Even if they win their legal battles (which if they do win, it will only be at great cost), AI art is a bad word almost universally. Even more than that, the business model hemorrhages money. Every time someone generates art, the company loses money -- it's a very high energy process, and there's simply no way to monetize it without charging like a thousand dollars per generation. It's environmentally awful, but it's also expensive, and the sheer cost will mean they won't last without somehow bringing energy costs down. Maybe this could be doable if they weren't also being sued from every angle, but they just don't have infinite money.

Companies that are investing in "ai research" to find a use for LLMs in their company will, after years of research, come up with nothing. They will blame their devs and lay them off. The devs, worth noting, aren't necessarily to blame. I know an AI developer at meta (LLM, really, because again AI is not real), and the morale of that team is at an all time low. Their entire job is explaining patiently to product managers that no, what you're asking for isn't possible, nothing you want me to make can exist, we do not need to pivot to LLMs. The product managers tell them to try anyway. They write an LLM. It is unable to do what was asked for. "Hm let's try again" the product manager says. This cannot go on forever, not even for Meta. Worst part is, the dev who was more or less trying to fight against this will get the blame, while the product manager moves on to the next thing. Think like how NFTs suddenly disappeared, but then every company moved to AI. It will be annoying and people will lose jobs, but not the people responsible.

ChatGPT will probably go away as something public facing as the OpenAI foundation continues to be mismanaged. However, while ChatGPT as something people use to like, write scripts and stuff, will become less frequent as the public facing chatGPT becomes unmaintainable, internal chatGPT based LLMs will continue to exist.

This is the only sort of LLM that actually has any real practical use case. Basically, companies like Oracle, Microsoft, Meta etc license an AI company's model, usually ChatGPT. They are given more or less a version of ChatGPT they can then customize and train on their own internal data. These internal LLMs are then used by developers and others to assist with work. Not in the "write this for me" kind of way but in the "Find me this data" kind of way, or asking it how a piece of code works. "How does X software that Oracle makes do Y function, take me to that function" and things like that. Also asking it to write SQL queries and RegExes. Everyone I talk to who uses these internal LLMs talks about how that's like, the biggest thing they ask it to do, lol.

This still has some ethical problems. It's bad for the environment, but it's not being done in some datacenter in god knows where and vampiring off of a power grid -- it's running on the existing servers of these companies. Their power costs will go up, contributing to global warming, but it's profitable and actually useful, so companies won't care and only do token things like carbon credits or whatever. Still, it will be less of an impact than now, so there's something. As for training on internal data, I personally don't find this unethical, not in the same way as training off of external data. Training a language model to understand a C++ project and then asking it for help with that project is not quite the same thing as asking a bot that has scanned all of GitHub against the consent of developers and asking it to write an entire project for me, you know? It will still sometimes hallucinate and give bad results, but nowhere near as badly as the massive, public bots do since it's so specialized.

The only one I'm actually unsure and worried about is voice acting models, aka AI voices. It gets far less pushback than AI art (it should get more, but it's not as caustic to a brand as AI art is. I have seen people willing to overlook an AI voice in a youtube video, but will have negative feelings on AI art), as the public is less educated on voice acting as a profession. This has all the same ethical problems that AI art has, but I do not know if it has the same legal problems. It seems legally unclear who owns a voice when they voice act for a company; obviously, if a third party trains on your voice from a product you worked on, that company can sue them, but can you directly? If you own the work, then yes, you definitely can, but if you did a role for Disney and Disney then trains off of that... this is morally horrible, but legally, without stricter laws and contracts, they can get away with it.

In short, AI art does not make money outside of venture capital so it will not last forever. ChatGPT's main income source is selling specialized LLMs to companies, so the public facing ChatGPT is mostly like, a showcase product. As OpenAI the company continues to deathspiral, I see the company shutting down, and new companies (with some of the same people) popping up and pivoting to exclusively catering to enterprises as an enterprise solution. LLM models will become like, idk, SQL servers or whatever. Something the general public doesn't interact with directly but is everywhere in the industry. This will still have environmental implications, but LLMs are actually good at this, and the data theft problem disappears in most cases.

Again, this is just my general feeling, based on things I've heard from people in enterprise software or working on LLMs (often not because they signed up for it, but because the company is pivoting to it so i guess I write shitty LLMs now). I think artists will eventually be safe from AI but only after immense damages, I think writers will be similarly safe, but I'm worried for voice acting.

7 notes

Note

Have you considered going to Pillowfort?

Long answer down below:

I have been to the Sheezys, the Buzzlys, the Mastodons, etc. These platforms all saw a surge of new activity whenever big sites did something unpopular. But they always quickly died because of mismanagement or users going back to their old haunts due to lack of activity or digital Stockholm syndrome.

From what I have personally seen, a website that was purely created as an alternative to another has little chance of taking off. If it's going to work, it needs to be developed naturally and must fill a different niche. I mean look at Zuckerberg's Threads; died as fast as it blew up. Will Pillowfort be any different?

The only alternative that I found with potential was the fediverse (mastodon) because of its decentralized nature. So people could make their own rules. If Jack Dorsey's new dating app Bluesky gets integrated into this system, it might have a chance. Although decentralized communities will be faced with unique challenges of their own (egos being one of the biggest, I think).

Trying to build a new platform right now might be a waste of time anyway because AI is going to completely reshape the Internet as we know it. This new technology is going to send shockwaves across the world akin to those caused by the invention of the Internet itself over 40 years ago. I'm sure most people here are aware of the damage it is doing to artists and writers. You have also likely seen the other insidious applications. Social media is being bombarded with a flood of fake war footage/other AI-generated disinformation. If you posted a video of your own voice online, criminals can feed it into an AI to replicate it and contact your bank in an attempt to get your financial info. You can make anyone who has recorded themselves say and do whatever you want. Children are using AI to make revenge porn of their classmates as a new form of bullying. Politicians are saying things they never said in their lives. Google searches are being poisoned by people who use AI to data scrape news sites to generate nonsensical articles and clickbait. Soon video evidence will no longer be used in court because we won't be able to tell real footage from deep fakes.

50% of the Internet's traffic is now bots. In some cases, websites and forums have been reduced to nothing more than different chatbots talking to each other, with no humans in sight.

I don't think we have to count on government intervention to solve this problem. The Western world could ban all AI tomorrow and other countries that are under no obligation to follow our laws or just don't care would continue to use it to poison the Internet. Pandora's box is open, and there's no closing it now.

Yet I cannot stand an Internet where I post a drawing or comic and the only interactions I get are from bots that are so convincing that I won't be able to tell the difference between them and real people anymore. When all that remains of art platforms are waterfalls of AI sludge where my work is drowned out by a virtually infinite amount of pictures that are generated in a fraction of a second. While I had to spend +40 hours for a visually inferior result.

If that is what I can expect to look forward to, I might as well delete what remains of my Internet presence today. I don't know what to do and I don't know where to go. This is a depressing post. I wish, after the countless hours I spent looking into this problem, I would be able to offer a solution.

All I know for sure is that artists should not remain on "Art/Creative" platforms that deliberately steal their work to feed it to their own AI or sell their data to companies that will. I left Artstation and DeviantArt for those reasons and I want to do the same with Tumblr. It's one thing when social media like Xitter, Tik Tok or Instagram do it, because I expect nothing less from the filth that runs those. But creative platforms have the obligation to, if not protect, at least not sell out their users.

But good luck convincing the entire collective of Tumblr, Artstation, and DeviantArt to leave. Especially when there is no good alternative. The Internet has never been more centralized into a handful of platforms, yet also never been more lonely and scattered. I miss the sense of community we artists used to have.

The truth is that there is nowhere left to run. Because everywhere is the same. You can try using Glaze or Nightshade to protect your work. But I don't know if I trust either of them. I don't trust anything that offers solutions that are 'too good to be true'. And even if you take those preemptive measures, what is to stop the tech bros from updating their scrapers to work around Glaze and steal your work anyway? I will admit I don't entirely understand how the technology works so I don't know if this is a legitimate concern. But I'm just wondering if this is going to become some kind of digital arms race between tech bros and artists? Because that is a battle where the artists lose.

28 notes

Text

#AI Factory#AI Use Case Gallary#AI Development Platform#AI In Healthcare#AI in recruitment#Artificial Intellegence

0 notes

Text

RECENT SEO & MARKETING NEWS FOR ECOMMERCE, AUGUST 2024

Hello, and welcome to my very last Marketing News update here on Tumblr.

After today, these reports will now be found at least twice a week on my Patreon, available to all paid members. See more about this change here on my website blog: https://www.cindylouwho2.com/blog/2024/8/12/a-new-way-to-get-ecommerce-news-and-help-welcome-to-my-patreon-page

Don't worry! I will still be posting some short pieces here on Tumblr (as well as some free pieces on my Patreon, plus longer posts on my website blog). However, the news updates and some other posts will be moving to Patreon permanently.

Please follow me there! https://www.patreon.com/CindyLouWho2

TOP NEWS & ARTICLES

A US court ruled that Google is a monopoly, and has broken antitrust laws. This decision will be appealed, but in the meantime, could affect similar cases against large tech giants.

Did you violate a Facebook policy? Meta is now offering a “training course” in lieu of having the page’s reach limited for Professional Mode users.

Google Ads shown in Canada will have a 2.5% surcharge applied as of October 1, due to new Canadian tax laws.

SEO: GOOGLE & OTHER SEARCH ENGINES

Search Engine Roundtable’s Google report for July is out; we’re still waiting for the next core update.

SOCIAL MEDIA - All Aspects, By Site

Facebook (includes relevant general news from Meta)

Meta’s latest legal development: a $1.4 billion settlement with Texas over facial recognition and privacy.

Instagram

Instagram is highlighting “Views” in its metrics in an attempt to get creators to focus on reach instead of follower numbers.

Pinterest

Pinterest is testing outside ads on the site. The ad auction system would include revenue sharing.

Reddit

Reddit confirmed that anyone who wants to use Reddit posts for AI training and other data collection will need to pay for them, just as Google and OpenAI did.

Second quarter 2024 was great for Reddit, with revenue growth of 54%. Like almost every other platform, they are planning on using AI in their search results, perhaps to summarize content.

Threads

Threads now claims over 200 million active users.

TikTok

TikTok is now adding group chats, which can include up to 32 people.

TikTok is being sued by the US Federal Trade Commission, for allowing children under 13 to sign up and have their data harvested.

Twitter

Twitter seems to be working on the payments option Musk promised last year. Tweets by users in the EU will at least temporarily be pulled from the AI-training for “Grok”, in line with EU law.

CONTENT MARKETING (includes blogging, emails, and strategies)

Email software Mad Mimi is shutting down as of August 30. Owner GoDaddy is hoping to move users to its GoDaddy Digital Marketing setup.

Content ideas for September include National Dog Week.

You can now post on Substack without having an actual newsletter, as the platform tries to become more like a social media site.

As of November, Patreon memberships started in the iOS app will be subject to a 30% surcharge from Apple. Patreon is giving creators the ability to add that charge to the member's bill, or pay it themselves.

ONLINE ADVERTISING (EXCEPT INDIVIDUAL SOCIAL MEDIA AND ECOMMERCE SITES)

Google worked with Meta to break the search engine’s rules on advertising to children through a loophole that showed ads for Instagram to YouTube viewers in the 13-17 year old demographic. Google says they have stopped the campaign, and that “We prohibit ads being personalized to people under-18, period”.

Google’s Performance Max ads now have new tools, including some with AI.

Microsoft’s search and news advertising revenue was up 19% in the second quarter, a very good result for them.

One of the interesting tidbits from the recent Google antitrust decision is that Amazon sells more advertising than either Google or Meta’s slice of retail ads.

BUSINESS & CONSUMER TRENDS, STATS & REPORTS; SOCIOLOGY & PSYCHOLOGY, CUSTOMER SERVICE

More than half of Gen Z claim to have bought items while spending time on social media in the past half year, higher than other generations.

Shopify’s president claimed that Christmas shopping started in July on their millions of sites, with holiday decor and ornament sales doubling, and advent calendar sales going up a whopping 4,463%.

9 notes

Text

Post 2, Week 3

Has Cyberfeminism(s) impacted feminism as a movement?

Cyberfeminism has changed the landscape of the feminist movement as a whole. The movement was born out of the theory that the internet can be a place for all women to transform their lives and feel liberated, using the internet to expand the ideology of the feminist movement to more women. Cyberfeminism(s) has achieved a more widespread display of feminism, but it has some issues. The movement has been critiqued by many scholars for a multitude of things. Some scholars point out that Cyberfeminism(s) has no grounding point for its ideology and describe it as “just a developing theory”. Other scholars focus more on the movement’s sporadic and contradictory points. This, coupled with the assumed “educated, white, upper-middle-class, English-speaking, culturally sophisticated readership”, has put the movement in a weird spot: one where it is, by nature, excluding some women by being inaccessible to them. I think cyberfeminism(s) has also pushed feminism to an audience (men) that may not have seen the movement before or already had opinions on it, typically an audience that is the oppressor of women. This newfound audience has belittled the movement and reduced it to nothing more than “crying” or being “too sensitive”, which plays into the point of why the movement is needed. All of this has impacted the feminist movement.

Can the use of AI technologies be unbiased?

AI and its many applications have been promised as the future of the world and a way for mankind to make life easier. While that may turn out to be true, we have to ask: easier for whom, exactly? For example, in America, our healthcare system has adopted AI to help determine who needs care. Studies have shown that people who spend more on healthcare, or have more access to it, get the care they need more often than others. Typically, the people who have more access to healthcare are white. In effect, because white people already have access to healthcare, the system determines that they should get that care, while others who lack access get denied more often because they use healthcare less frequently. AI as it is built gathers information from people on the internet and is, by nature, a product of its environment. So the question arises: will AI ever be able to be unbiased? While we may not be able to answer that question right now, we can speculate. With the way AI is set up and used today, it is unlikely to be as unbiased as it is claimed to be.

How has the internet helped the anti-I.C.E movement?

The media has always been a powerful tool in the fight against injustice, helping to raise awareness and mobilize people. In today’s world, social media makes it easier than ever to share information and organize movements. A recent example of this was the protests against Donald Trump’s anti-immigration policies. Social media platforms played a central role in organizing and spreading the message, with people across the U.S. coming together to protest I.C.E. For me, TikTok has been a primary source of media, and I saw numerous videos documenting how the protests managed to shut down a major highway in Los Angeles. Most of the content I encountered was filled with positive support and messages of solidarity. However, as is often the case, there was also a darker side, with some videos attracting racist and harmful comments. This duality highlights both the power and the negative aspects of social media platforms.

How has facial recognition software been used to target minority communities?

Facial recognition technology is heavily influenced by political perspectives, especially when it’s used by police to track down criminals. This raises serious concerns for minority communities, as one of the biggest issues with the technology is its inability to accurately recognize people from diverse racial backgrounds. A 2019 study examining over 100 facial recognition systems found that they performed significantly worse on Black and Asian faces, as highlighted by Kashmir Hill in her article "Another Arrest, and Jail Time, Due to a Bad Facial Recognition Match." This flaw has led to innocent individuals—particularly those from minority groups—being wrongly arrested and imprisoned. Hill details the case of Nijeer Parks, who ended up spending "10 days in jail and paid around $5,000 to defend himself" after being falsely identified by facial recognition software. Not everyone has the resources to fight such charges, and the increasing use of this technology could lead to more wrongful incarcerations and innocent people facing time in prison.

Nicole Brown - Race and Technology

Rethinking Cyberfeminism(s): Race, Gender, and Embodiment Jessie Daniels

2 notes

Text

The Need for Digitization in Manufacturing: Stay Competitive With Low-Code

Industry 4.0 is transforming manufacturing with smart factories, automation, and digital integration. Technologies like the Internet of Things (IoT), artificial intelligence (AI), and low-code applications are enabling manufacturers to streamline processes and develop customized solutions quickly. Low-code platforms empower manufacturers to adapt to global demands, driving efficiency and innovation.

Previously, cross-border transactions in manufacturing faced delays due to bureaucracy, complex payment mechanisms, and inconsistent regulations. These challenges led to inefficiency and increased costs. However, Industry 4.0 technologies, such as digital payments, smart contracts, and logistics tracking, have simplified international transactions, improving procurement processes.

Low-code applications are key in this transformation, enabling rapid development of secure solutions for payments, customs clearance, and regulatory compliance. These platforms reduce complexity, enhance transparency, and ensure cost-effective, secure global supply chains. This shift aligns with the demands of a connected global economy, enhancing productivity and competitiveness.

The Need for Digitization in Manufacturing

Digitization has become crucial for manufacturing to stay competitive, with new technologies and the need for automation driving the sector’s transformation. Key features include ERP systems for centralized management of inventory, finances, and operations; digital supply chain tools for visibility and disruption prediction; real-time data for performance monitoring; sustainability tracking; and IoT/RFID for better tracking, accuracy, and reduced waste.

Low-code applications play a pivotal role in digitization by enabling rapid development of tailored solutions for inventory management, supply chain optimization, and performance analytics. These platforms streamline processes, reduce manual work, and enhance agility, helping manufacturers implement digital transformations quickly and cost-effectively.

Upgrading Manufacturing Capabilities in the Era of Industry 4.0 with Low-code Solutions

Low-code applications are becoming essential for digital transformation in manufacturing, addressing operational challenges while managing increased production demands and a shortage of skilled staff. These platforms enable manufacturers to quickly develop tailored applications without needing specialized coding expertise, fostering faster, more flexible operations. By streamlining processes and aligning with modern consumer demands, low-code technology helps bridge the skills gap, empowering manufacturers to stay competitive and seize new opportunities in a rapidly evolving market.

Low-code Technology Benefits for Modern Industries

As digital transformation becomes increasingly crucial for manufacturing, many enterprises in the sector face challenges with outdated processes, legacy system limitations, customization challenges, and inadequate resources. Low-code applications offer a compelling solution, enabling manufacturers to streamline operations by eliminating paper-based processes and automating workflows across functions such as Production, Sales, Logistics, Finance, Procurement, Quality Assurance, Human Resources, Supply Chain, and IT Operations. Additionally, low-code platforms enhance compliance and safety standards through built-in automated tools.

These platforms deliver impressive results, including over 70% improvement in productivity and close to 95% improvement in output quality in specific scenarios. This is particularly evident in automating complex processes like order fulfillment—from receiving customer orders to delivering finished products and managing invoicing with customers. Use cases also include automating inventory management, enhancing predictive maintenance with real-time data, and optimizing supply chain operations. Low-code solutions make it easier for manufacturers to implement changes quickly, boosting agility and reducing time-to-market while improving overall operational efficiency.

Conclusion

Low-code platforms are driving digital transformation in manufacturing, addressing sector-specific challenges in industries like automotive, aviation, and oil & gas. With Industry 4.0 and smart manufacturing, iLeap’s low-code platform helps integrate IoT, advanced analytics, and end-to-end automation, leading to optimized workflows and real-time decision-making. By adopting agile development, manufacturers can quickly adapt to new technologies and market demands, making iLeap the ideal partner for digital transformation. Unlock the potential of Industry 4.0 with iLeap and turn challenges into growth opportunities.


Text

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).
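To make the unit and regression testing entries above concrete, here is a minimal sketch using Python's standard `unittest` module. The function `order_total` and its behaviour are hypothetical, invented only for illustration; a real project would test its own code and might use a different framework.

```python
import unittest


def order_total(prices, tax_rate=0.0):
    """Hypothetical unit under test: sum the prices and apply a tax rate."""
    if tax_rate < 0:
        raise ValueError("tax_rate must be non-negative")
    subtotal = sum(prices)
    return round(subtotal * (1 + tax_rate), 2)


class OrderTotalTests(unittest.TestCase):
    """Unit tests: each case checks one behaviour of one small unit."""

    def test_sums_prices(self):
        self.assertEqual(order_total([10.0, 5.5]), 15.5)

    def test_applies_tax(self):
        self.assertEqual(order_total([100.0], tax_rate=0.2), 120.0)

    def test_empty_order_is_zero(self):
        self.assertEqual(order_total([]), 0)

    def test_rejects_negative_tax_rate(self):
        # Kept in the suite as a regression guard: if a later change
        # drops the validation, this test fails.
        with self.assertRaises(ValueError):
            order_total([1.0], tax_rate=-0.1)


def run_suite():
    """Load and run the test case, returning the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(OrderTotalTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_suite()` after every code change is a small-scale version of automated regression testing; wiring the same call into a CI pipeline is one way to approach continuous testing.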

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems
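As a rough illustration of the jitter and packet loss items above, the sketch below computes two common call-quality figures from captured packet data. The jitter estimate follows the smoothed interarrival formula from the RTP specification (RFC 3550). The function names and input layout are assumptions made for this example, and sequence-number wraparound is deliberately ignored.

```python
def interarrival_jitter(packets):
    """Smoothed interarrival jitter in the style of RFC 3550.

    packets: list of (send_time, receive_time) pairs in the same unit
    (for example milliseconds), in arrival order.
    """
    jitter = 0.0
    prev_transit = None
    for sent, received in packets:
        transit = received - sent
        if prev_transit is not None:
            # Change in transit time between consecutive packets.
            d = abs(transit - prev_transit)
            # RFC 3550 smoothing: move 1/16 of the way toward the new sample.
            jitter += (d - jitter) / 16.0
        prev_transit = transit
    return jitter


def packet_loss_ratio(seq_numbers):
    """Fraction of packets missing between the lowest and highest
    sequence number seen (assumes no wraparound)."""
    if not seq_numbers:
        return 0.0
    unique = set(seq_numbers)
    expected = max(unique) - min(unique) + 1
    return (expected - len(unique)) / expected


# Example: three packets sent 20 ms apart; the third arrives 5 ms late.
sample = [(0, 50), (20, 70), (40, 95)]
jitter_ms = interarrival_jitter(sample)
loss = packet_loss_ratio([1, 2, 4, 5])  # packet 3 never arrived
```

Dedicated monitoring tools report these figures continuously. The sketch is only meant to show what the numbers measure.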

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration


Text

Tech So Bad It Sounds Like The Reviewers Are Just Plain Depressed

12 Days of Aniblogging 2024, Day 8

I really do try to stay away from armchair cultural criticism, especially when it's this far removed from my weaboo wheelhouse, but this one’s been eating away at me for the entire year. What are we doing here?

2024 has been full of downright bad consumer tech! The Apple Vision Pro, the Rabbit and Humane AI pins, the PS5 Pro… those are just the big ones, and that’s not even getting started on vaporware like Horizon Worlds and Microsoft’s Copilot+ PC initiative. Sure, there are Juiceros every year, but this recent batch of flops feels indicative of a larger trend, which is shipping products that are both overengineered and conceptually half-baked. Of course, there’s plenty of decent tech coming out too – Apple’s recent laptops and desktops have been strong and competitively priced, at least the base models. The Steam Deck created a whole new product category that seems to be thriving. And electric cars… exist, which is better than nothing. But it’s been much more fun to read about the bad stuff, of course. These days it feels like most of the tech reviews I read have the journalists asking “what’s the point?” halfway through, or asserting in advance that it will fail, like this useless product with a bad value proposition is prompting an existential crisis for them. Maybe it is!

yeah yeah journalists don't write their own headlines. I can assure you that the article content carries the same tone for these though.

What did a negative tech review look like before the 2020s? Maybe there wasn’t one, really. The 2000s and the 2010s were drenched in techno-optimism. Even if skepticism towards social media emerged over time, hardware itself was generally received well, mixed at worst, because journalists were happy to extrapolate out what a product and its platform could do. Nowadays, everyone takes everything at face value! As they should – the things we buy rarely get meaningfully better over time, and the support window for flops is getting shorter and shorter with each passing year. On the games side of things, look how quickly Concord ended! With that out of the way, I’m going to start zeroing in on the hardware I saw a “why does this exist” type review for this year.

The Apple Vision Pro

People have been burnt time and time again by nearly every VR headset ecosystem to release (other than mayyybe Oculus, but Facebook’s tendrils sinking in don’t feel great either). The PSVR was a mild success at best and the PSVR2 was an expensive and unmitigated disaster that lost support in a matter of months. Meanwhile, the Valve Index couldn’t cut it with its price, and Microsoft actively blew up their own HoloLens infrastructure earlier this year. At this point it’s clear that VR is a niche, and one that’s expensive to develop for and profit off of. Surely Apple will save us!

completely isolate yourself from your family for the low, low price of multiple paychecks