#--because they don't know the difference between it and full on generative ai art

im less afraid of ai art itself than i am of the effects this sloppy misinformation volleyball game might have on artists who draw a little too smooth or wonky, or people who work on unrelated and even beneficial types of artificial intelligence. yknow?

#ive seen it... people asking real artists if their art is ai generated. or straight up claiming that it is#people equating a homemade just-for-fun markov chain text generator to chat gpt#the ai inklines tool that the spiderman animators used; that people freaked out about a while ago--#--because they don't know the difference between it and full on generative ai art#its bad out there guys

14 notes

To fan art and fiction enjoyers:

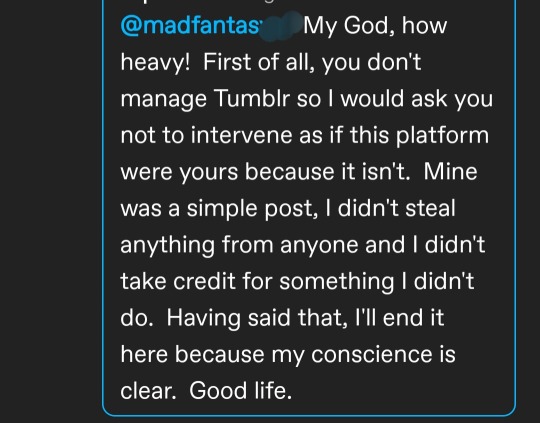

Please excuse my rage slipping if it happened over having to address this literal mediocrity of a subject in comparison to the endless things that actually matter in real life. Because this would be at the scraping bottom of it, but since the occasion presented itself, here we are:

Do you know there are some, let's say, manners, being in fandoms, and/or in using social media in general? NOOO? 8U

Well, let's start somewhere!

Like it or not, YOU NEED TO ACTUALLY READ STUFF PEOPLE WRITE. Before you follow, before you comment, before you interact, because if you come across something you don't like, or you started to assume things— that's a you problem and not the fault of the poster.

If you DO NOT enjoy a character, a ship, or a certain headcanon, filter the tag it's used for; Google has free tutorials on how. Most social media have these settings and most decent posters tag their posts correctly. If you keep seeing that pair, you can block the people who create it. You are free to do so ofc but WHY WOULD U come on main and air that out? Personally I find it so bizarre, and it could show other people the type of person you are (toxic company over fictional substance), and I'd say that is not a flex, more like showing your dirty nappy in public. Those characters you love are not real and so are not affected by your high ground stance, but the actual humans who share that love with you notice and get that impression, and it's a weird one. You SHOULD, of course, set your boundaries, and usually the place for that is your profile: your bio or a pinned post.

Loving bizarre, villainous, creepy concepts DOES NOT EQUAL morality, and neither does loving good sunshine and flowers. It's what a person does in real life that counts, not what they consume in entertainment. In fact, shaming humans who like different fictional concepts is not a sign of a good person. Neither is continuing to use ai generators knowing full well they're based on constant data theft from all sorts of human creators across generations and cannot exist without the continuance of this theft. Or supporting creators that you know did irl crimes. Or policing what can and cannot go into fiction, as if the fickleness of preference has never let a lot of things survive its judgement. And I can go on with the miniature examples. You are forgiven if you did not know before, some people learn through experience, but not anymore if you continue this behaviour. And maybe if you can't differentiate between reality and fiction, and what's more important than what, maybe, just maybe, you shouldn't be consuming fiction.

DO NOT POST WHAT YOU DID NOT CREATE. Do you like it when people keep posting your selfies that you only meant to share for funsies and whatnot? Isn't it worse if you did not post that selfie in the first place or never wanted it to be used like that? It's the SAME FOR ART. This is the artist's work just as much as your face is yours. Social media at the baseline is about whoever the poster is; their posts are theirs. So you posting an artist's drawing, with no permission, no credit to them, no nothing, is not allowed and people can report that. Don't be an ignorant thick fig and play the victim when schooled like this, precious dear /s. Reposters disconnect so much content from its creators, and this is how a lot of beautiful things in life die: by simply not knowing they are loved, shoved into the overconsumption machine.

And lastly, you don't have anything nice to say to OP? Don't say anything! It's not your misguided duty to educate people on how embarrassingly self centered you are, it's okay to be a basic #&★, I promise. It's okay to feel out of place in a niche that doesn't concern you. It's okay to realise other people have different perspectives of the fiction work you enjoy. You can sit down.

And I'd like to add, Mani is a safe space for au and ships even if I don't like em, cuz they are only FICTION and will remain FICTION no matter how much I loved them or hated them.

Good day, dears🍀

55 notes

Text

The way people are defending the use of AI for making full blown art is absolutely insane "it's just a tool" "it's like complaining about photography" "it's just like using Photoshop" "artists are obsolete" "artists are only mad at generative AI for art because they know it is better than them", no the fuck it isn't. A tool is something that helps you with your work or enhances the quality of it, a program that literally makes the whole thing for you is not a tool, you're not doing anything. Photography is a skill of itself, a person needs to learn how to use the camera, how to get the right angles, how to change exposure, how to make the photo look its best. Nothing can replicate human passion, but these people don't view art as a product of humanity but rather a way to make easy money by turning it into something fully capitalistic (money is the point now, not passion), a lot of people don't perceive artists as people other than with "just a hobby", that's why they don't appreciate actual art. Also, Photoshop is legitimately a tool if you don't use generative AI.

And yes, I'll say the same about using AI to do your homework for you, like, you could genuinely use it as a tool, you could just ask for references, you could ask it to sum up something you wrote, you could use it as a translator, you could ask it to make questions based on a unit so you can test yourself on it. But the moment you have it do all your work for you then you aren't learning anymore.

If people want to see themselves in Ghibli/Pixar/whatever style so badly why don't they just go find a damn picrew, like, I'll even accept using a frame from a movie and changing the hair and eye colour, at least you're doing something there.

Then they have the nerve to ask what's different between an artist that replicates Ghibli style and an AI that generates it, idk, maybe the fact that the creator of Ghibli specifically said he despises AI and would never incorporate it in his work and the fact that those artists had to learn how to draw that way (do they really think it's only Hayao Miyazaki that draws in that style, like, his animators know how to draw in that style obviously, tho I wouldn't put it past these people to actually not know how animation works)

They also think we're somehow just angry because it's "taking your mediocre job", like, make it more obvious you don't actually appreciate art, one of our biggest issues with generative AI is that it's trained on others' art, so it's basically stealing from these artists. Plus, at the end of the day you're paying for that AI, you're really telling me you'd rather put more money into the pockets of billionaires than the pockets of a regular human trying to make it to the end of the month.

And we're not even talking about the environmental impact AI has, which is insane. But sure, keep ignoring that and contribute to the devaluation of artists, intellectual property theft, environmental destruction and the growing gap in wealth distribution (to name a few) just so you can save a few bucks commissioning an artist or, gods forbid, actually picking up a pencil or a freaking mouse for Microsoft paint, or even use a picrew.

2 notes

Text

10 people I'd like to know better

(please make a new post, don't reblog)

Last Song - Who Killed U. N. Owen ver. 暁Records×Liz Triangle. I may not play Touhou Project games but I'm happy for its existence. There are so many great songs coming from that game and its fandom.

Favourite Colour - Purple! From blueish purple to a more pinkish one. I think colours like dark indigo, which are between blue and purple, have grown on me.

Last Book - Babel by R. F. Kuang. Amazing book that I recommend vividly even if it's a very self-indulgent book for me, as I'm a translator. The book is something akin to a mystery novel, a criticism of Western operationalism and a discussion of the power of languages. As a translator I also must add that despite the slight differences, mostly because I live in a different country and in a different time period from the characters in the book, the Translation classes are on point for what you find in a real translation course. There was so much good research done for this book it's astounding. I'm also deeply saddened there has been no translation of this book to European Portuguese. This would be my dream book to translate.

I've also never read so many mentions of Portuguese wine in my life??? You British folk must really love that stuff because I swear the characters only drink Portuguese wine.

Last Show - The Ranma 1/2 remake! Really fun watch. Highly recommend. It's such a funny comfort show. It still maintains the old style of the 80s manga so you'll get this weird feeling of old gender norms but considering the theme of the show it actually works in its favour. The characters are from a time, place and society that is deeply patriarchal and the show plays with it quite a bit, criticizing and showing how pointless a lot of those divisions are. And it does this in a very entertaining, chaotic way.

Last Movie - I don't remember... I haven't watched a film in a while.

Sweet/spicy/savory - I like sweet a lot. But I don't dislike the others. The exception is acid things. I don't like lemon and I'm not much a fan of oranges either. Things that use lemon and orange flavour are included. However I do like squeezed lemon on my food. I do like both lemon and orange as an addition to something else.

Relationship Status - Single

Last thing I googled - "Irk meaning". Not exactly to know the meaning actually, but to make sure I spelt it right 😅

Current Obsession - A mix of multiple things. I think I'm either in a transitional stage or have had so much work that I couldn't manage a full hyper-fixation that would leave me unable to think of anything else. The things I've been into lately: Neuro-sama (the streamer), Honkai Star Rail (This one has lasted two years now I think) and I guess how AI works and how it can be used as a tool to help instead of being something that can only do harm. As a physically disabled person I think people who are only on the "AI sucks" boat sometimes don't understand that it can genuinely be groundbreaking in some cases. I've never had such good OCR in my life. I can finally read things the old teachers that don't understand anything about technology send me. Google's AI image description is actually pretty good!

I must add that I am not an AI apologist. I hate seeing what's happening to actors. No AI image generator can ever reach the art of a real human. AI is stupid and probabilistic, there are squiggly lines drawn by a 5 year old with more thought put into them. And yes, for now it needs a lot of energy. However, with Deepseek we've seen that it's possible to lower it a lot and there are things generative AI can help with. As always, and as with the internet, it depends on how it's used. You can't trust it just like you can't trust anything that is on the internet. The problem, as with most things, is the big companies: hypocritical assholes who steal other people's work and then get mad when people steal from their partially stolen work. That and their greedy ideas of putting AI everywhere, even where it isn't needed.

Looking forward to - Finishing my degree and starting work for real.

Tagged by: @corollarytower

Tagging: (No pressure! And if there's a question you don't feel comfortable answering that's ok too.) @vulto-cor-de-rosa @itsjustagoober @innitnotfound @blueliac @theuwumaster @proudfreakmetarusonikku @hiveswap @griancraft @peeares @olversui

3 notes

I legit don't know what to think of HP. I started becoming uncomfortable with liking it when I realized a close trans friend of mine would be aware I liked it and it might hurt their feelings to know that. I tend to do a lot of shifting my morals because I want my friends to like me though- like I used to pretend to be an anti because of a different "friend", or my stance on AI art is that it's always bad but I'm not going to lecture my closest friend for using AI to create pictures of her DnD characters... but I'd feel like I'm expected to lecture any stranger about it or try to hint that they should stop. But I don't want to make my friend uncomfortable. I wish there was an easy answer about HP that would make me a "good person" regardless. All I can think is to try to do the least amount of harm but when this stuff has personal meaning to people I know, it gets tricky to be like. ideologically independent? So I say I'm not comfy with anything HP because I want to be a "good person" in my trans friend's eyes but isn't this the same sort of thing as anti type thinking? Wanting to have the "correct" views to not be "problematic"? My dad is a jerk about some politics (he claims to be an "asshole socialist" which kind of sums it up tbh) and he calls the idea of having to say you like or don't like a thing because of other people's opinions "totalitarianism". Yet he wouldn't say the N word (and doesn't want to) but... idk. I'm not sure where the line is between being a "good person", being polite to other people, versus being "problematic". Today's politics are full of guilt tripping and it makes me shy away from people who would make me feel uncomfortable (or!! just in general I'm worried I'd do something wrong! so I avoid interactions with minorities because I don't want to be accidentally hurtful! but that itself is bad!), even when otherwise I'm interested in learning to be good... but as a white person, I'm "supposed to be uncomfortable". 
So it's my fault I don't push through and learn anyway. Sorry for the long rant I'm just autistic and trying to figure out what is even okay to think when so many people around me have varying views. Could use some advice but this is long already and probably is too political/race-related/breaks some rule in there somewhere so I understand if you don't post this.

12 notes

Slight introduction: I'm not a nuclear brain linguist, or Japanese, or acquainted with all the nuances of other works of artists mentioned here

What I wanted to write as a lengthy post turns out to have already been written down by someone much more literate and knowledgeable about this issue. I like viewing Penguindrum as a work of art dealing with Japanese national trauma, and seeing it from this perspective gives a fresh view of the story as a whole.

I can't bring myself to disagree with any of the mentioned parts, such as characters being personifications of a way of dealing with the tragedy.

One thing I really want to see asked though is - why? What is it meant to accomplish?

The closest neighbor to Penguindrum in this matter that I can think of is Murakami's Underground. And it had a very clear purpose - to revive the thought about the tragedy, to give birth to the analysis of not who did this or how, or even how to stop it. No, the primary point was *who* did that. And the results were shocking because it wasn't anything that's guaranteed to never happen again. Even more so, it's difficult to imagine the same events not happening over and over again, as loneliness spreads among the most normal, unassuming, everyday people.

I feel like Penguindrum serves as a reminder. A cautionary tale, almost - here, look what happened sixteen years ago. Have we learned anything? Have we done anything? Has anything improved?

In the final episode, the story goes on and makes a full circle. None of the characters were evil. None of them had some inherent will to destroy that made them stand out in a crowd. Ikuhara goes out of his way to portray Kenzan and Chiemi as great parents who risked their lives for their children. Sanetoshi still sends Double H Himari's scarves. We get to watch Kanba, Shoma, and Ringo grow as people, and yet everything still goes on. The attack still has to be stopped by one person's sacrifice, as if Ikuhara was giving a big middle finger to the Japanese myth of exceptionalism, and a silent nod to the unsung heroes that paid with their lives on the 20th of March 1995.

Great, Momoka stopped the attack by paying with her life. What will happen when she's gone again? Next time, there might not be anybody sacrificing themselves for the bigger good. It's like some inherent fight between good and evil, but the evil is here, it is between us, and it isn't leaving if we don't deal with it. Approaching the trauma is the way to do it, and it will do more than banning trash bins in public spaces.

One difference I would like to point out between the real story and anime is the omission of Asahara. I fully believe it was intentional, as in not to dilute the message. Imagine Penguindrum, but instead of trying to find the Penguindrum, you end up trying to track down this omnipresent fat-ass cultist who's plotting the downfall of the world. Yay, we stopped him! And we will all live forever... yea nah, this was very clearly not the focus.

And what brings me down the most is the realization that nothing has gone away, and nothing has changed. You can argue that Sanetoshi is no longer even a ghost, and the Takakuras have basically been reborn, but why wouldn't the Kiga group be born again?

And it will. I'm terrified because any day it could be me on the train. Or, if it were you, I don't know if I could be the hero. I shouldn't need to be.

slight plug:

And yes, the image is AI-generated. It's hilarious

9 notes

This is too angry for the MAL forums, but I've got a bone to pick with this week's Ai no Idenshi.

To recap: It's the near future and 10% of the population are "humanoids", either AIs in human-looking chassis or cyborgs, I'm still unsure. Our protagonist is Sudo, a doctor of Humanoid medicine / maintenance, especially of the digital brain.

His patient this week is Yuuta, a young piano prodigy dealing with serious rage issues. He hyperfixates on his piano, lashing out at interruptions and being generally surly and miserable when he's not playing.

Sudo's AI assistant recommends "tuning his emotional system," which Sudo bluntly calls "forcing a change in his personality." The AI demurs, comparing it to how medication or behavioral conditioning help humans with issues of the central nervous system. The process of fine-tuning the emotional mechanisms of a Humanoid just happens to be faster and generally safer than what humans do to achieve the same thing.

Yuuta's mother is concerned, but after a full-on brawl at school, they go through with the treatment. Afterward, Yuuta is much calmer, able to control his emotions and interact with his peers, even make friends. And he still loves piano, though he thinks his music sounds a little different now.

Sudo is later listening to music; his nurse comments that it's beautiful, and he explains that it's Yuuta, from before his treatment. The tone is melancholy.

[Sidenote: way to completely fail at informed consent, DOCTOR. You explicitly had concerns about how the treatment might impact his music, yet not a word to Yuuta or his mother. WTF]

Now, I'm sure my reaction wasn't intended. This series is sci-fi speculation on lots of ethical quandaries, like "is altering someone's memories justified if it gives them the tools to make their lives better?" Or "is it better to reboot your backed-up memory now before the virus renders you non-functional (and only lose two weeks of time), or continue to make memories as you slowly deteriorate, traumatizing your loved ones, and eventually reboot having lost over a month of time?" "is there a functional difference between a living person and an AI with extensive programming?" And the question posed in Yuuta's case is based on a long-standing belief about the nature of art and artists.

BUT, speaking as a fellow neurodivergent creative who also struggled with emotional regulation and often spiraled into rages as a kid:

FUCK YOU, stop scaring people away from medicine!

Sudo never says it outright, but he's skating right up to the line of "MeDiCaTiOnS KiLl ArTiStS' cReAtIvItY!" and that's just irresponsible. This kid is not happy, and if you think his all-consuming rage is necessary for a good performance of Mendelssohn, maybe you don't deserve to hear it!

The idea that real ART exclusively comes from suffering, that madness/mental illness is a divine gift to creatives is fucking poisonous and has likely killed people. It's certainly perpetuated misery. I don't want to hear it from someone who's ostensibly pledged to Do No Harm.

I don't know what I would have been, had I been diagnosed before college. I do know that therapy and antidepressants have markedly improved my life. They have kept the low moods bearable. If they've kept the highs from reaching their potential, I have neither noticed nor cared. I'll take a reliable 7 over once-in-a-blue-moon 10 any day.

The idea is also just plain wrong. It's hard to do anything creative when your body chemistry keeps you dysfunctional. I think Hannah Gadsby said it best: "We have the sunflowers precisely because Van Gogh medicated."

Also, in light of current workers' issues and writers' strikes: No one should have to sacrifice their life or their well-being to make great art. Happy, healthy people create better art than they would starving and hungry. We should appreciate the artists as much as the art.

#anime#Ai no Idenshi#Gene of AI#Nanette#Hannah Gadsby#writing#art#support the artist#mental illness#I've been writing this for goddamn hours I hope it makes sense

6 notes

At the moment, my stance on AI generated images/text is that they *could* be a good thing, in an ideal world. But they're pretty much just being used by selfish assholes.

First, I wanna mention that what I'm saying should apply to the AI generated "writing" as well, but for simplicity I'll just be talking about images.

If these generators worked off of art that the artists actively consented to being included, that would be a good start.

But also the idea of using these generators to completely replace artists, instead of tools to help make artists' processes easier, is particularly frustrating. It's incredibly disrespectful.

I think maybe, ideally, there could be one big database that these generators train on. Things in public domain. Things that artists themselves can submit if they wish to have their art included. CONSENT is the word here. There's a difference between someone studying another person's art style to try to mimic it (usually coming from being inspired by that person, fascinated by their art, it comes from love for the art) versus telling a program to make something but make it in a specific person's art style because they either don't want to spend money commissioning them or the artist is not taking commissions (usually coming from a sense of entitlement, and as much as I hate to say it, laziness).

And then, the whole shitty thing of replacing artists with these programs. There's a difference between technology replacing people in jobs that take a hard toll on their bodies, and technology replacing people in jobs that they actually want to be in. Of course, in a society without UBI and focused on making profit over helping people live their best lives, taking away any jobs from living breathing people is going to receive complaints. But taking away jobs from artists, who already don't make as much money as they should because art is viewed as something unnecessary, something that should just be a hobby or side gig, is like kicking someone while they're down.

I saw something about how the Spiderverse team used AI to help streamline the process. They trained it on their own works to do this (so they didn't steal from unconsenting artists) and it was a tool to help the process, not become the whole process. In this case, it's like using an electric mixer to mix ingredients you grew yourself to make baking a cake less strenuous, as opposed to having a robot steal ingredients from the store & baking the whole thing for you.

I would LOVE to be able to train an AI to make backgrounds for me that fit with whatever style I'm using for the characters. I hate drawing backgrounds, I love drawing characters. I often go to websites full of public domain images to find something to use as a background, but even then, I usually heavily edit it or even trace over it so that it blends in better with the character(s).

Using something like that Art Breeder site (which disclaimer: idk what DeepAI is trained on, so for all I know it could be stealing just as much as Midjourney and the like) to make faces for your characters is fun! It's a fun little thing to do, like using a picrew but more complex (at least with a picrew, you know the artist consented to you using the picrew).

On another note though... if you replace all artists with AI, what is left to train them on? Yeah, you have all previously made art, but you don't get any new styles, new ideas. You're just making an inhuman machine recycle old things. While I believe us humans shouldn't worry too much about originality (thank you dear creative writing professor who made us read "Steal Like An Artist" by Austin Kleon, as well as just theatre as a whole), it's because we are living breathing thinking feeling human beings who are impacted by everything we experience & carry it all with us. An AI program is not that. It just does whatever you explicitly tell it to do. No thoughts or experiences, just input & output.

#tmi#admittedly i'm not an expert on how these generators work but I am an artist#and i've seen what other artists have said#and what these “ai bros” have said to defend themselves#and also Rayark firing all their artists to replace with AI#also purposely avoiding calling it AI “art” bc while I am of the belief that art can be anything. there's no line that separates “art” from-#-“not art”. I simultaneously do not like to call these AI generated images by selfish entitled techbros “art”.#it can be considered as art but i'm not calling it that.

2 notes

Thanks to the previous reply for digging up the real image.

This is why linking to sources is important. This is why going to sources is important, to verify something is a photograph of a real event.

If you can't find a source for an image, check two things:

1. Go to Google Image Search. Click the "Search by Image" option on the right of the search bar. Search for the image URL, then click "Find Image Source" above the main result. You will get a list of results that includes the date. If you only see social media results or clickbait websites, consider it deeply suspicious.

2. Go to TinEye, do the same process. TinEye and Google Reverse Image Search work slightly differently, and TinEye lets you sort results by when they were posted online. Neither is comprehensive, but between them, you have a good chance of finding the original source.
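Once you have copied down the hits from both searches, the two checks above (sort by date, and distrust a result list made up only of social media) can be sketched in a few lines of Python. This is only a rough illustration and not how either service works: the `SOCIAL_DOMAINS` set, the helper names and the example URLs are all made up for the sketch, and neither Google nor TinEye is actually queried here.

```python
from datetime import date
from urllib.parse import urlparse

# Hypothetical list of domains to treat as social media / aggregators.
SOCIAL_DOMAINS = {"imgur.com", "twitter.com", "facebook.com", "reddit.com"}


def domain_of(url):
    """Return the host of a URL, lowercased and without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def earliest_result(results):
    """Return the (url, date) pair with the oldest date."""
    return min(results, key=lambda r: r[1])


def looks_suspicious(results):
    """True if there are no hits, or every hit is on a social media domain."""
    return all(domain_of(url) in SOCIAL_DOMAINS for url, _ in results)


# Made-up hits, typed in by hand from the two searches (placeholder URLs).
results = [
    ("https://twitter.com/example/status/1", date(2023, 2, 26)),
    ("https://imgur.com/gallery/example", date(2023, 2, 25)),
    ("https://www.facebook.com/example/posts/1", date(2023, 2, 5)),
]

first_url, first_date = earliest_result(results)
print("Earliest hit:", first_url, first_date.isoformat())
print("Only social media hits:", looks_suspicious(results))
```

A "suspicious" flag here only means the image has no traceable original source yet. It is a reason to keep digging, not proof that the image is fake.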

I'm going to break down what I found when I did this under the fold. TL;DR, It took me 15 seconds to gather enough information to determine the top image was fake, but 30 minutes to identify the original point of spread on the English-language internet.

I don't blame people who get suckered by this stuff. We want to trust each other. When others have already been circulating an image and have provided a story for it, it makes it seem more real.

If you don't know the facts, and especially if you're viewing an AI-generated image on a phone, where potential giveaways are harder to spot, it can be easy to believe it.

This is a pretty harmless example of something that can be far more dangerous, when it's applied to more sensitive topics.

Be safe out there, everyone.

So, here's how my search for the image's source went.

TinEye's first identification of the top image comes from Imgur, 25th February, 2023.

The comments were a mix of credulity and people pointing out that the image cannot be real, by the laws of optics. While the title doesn't claim that it's real, the "photography" tag is a lie.

The next day, it was already being circulated elsewhere on the internet with further misinformation.

This was apparently the second time this had been posted there, and was removed for not being a photo. However, it continued to spread on Twitter, with more misinformation. We see the first invention of a supposed photographer, to give it more authenticity. I don't know if this is the first guy who came up with it, but this was within the first day of circulating the image.

This other twitter account includes a link, but it's to a clickbait website, not any sort of original source.

Later, this was scraped up by more legitimate-looking clickbait news sites.

Note that this story is complete bullshit. They've not cited any sources. They've not linked to the original image, because they don't have one. They haven't directly quoted the photographer, because he doesn't exist.

As a note: This sort of thing can happen on any news website that doesn't do its own in-depth reporting, or legitimate publications that have an "affiliate" program, or a social media division. These are of variable quality, and often do not seek to cite any source other than "Twitter".

It took fifteen seconds to verify that the image was likely fake using TinEye. It took half an hour to find a reddit post that linked to a fact-checking website that identified the first time the images were spotted on the English-speaking internet, in a tranche of AI art posted on Facebook on February 5th.

This is as far as I can follow the trail, lacking the facility in navigating the Chinese-speaking internet to go any further. But you can see how quickly this developed into a hoax, through a combination of reasonable-sounding lies, the addition of more and more detail to the story as it passed along, and credulous spread of the image.

Full circle rainbow was captured over Cottesloe Beach near Perth, Australia in 2013 by Colin Leonhardt of Birdseye View

22K notes

Midjourney Is Full Of Shit

Last December, some guys from the IEEE newsletter, IEEE Spectrum, whined about a "plagiarism problem" in generative AI. No shit, guys, what did you expect?

But, let's get specific for a moment: they noticed that Midjourney generated very specific images from very general keywords like "dune movie 2021 trailer screencap" or "the matrix, 1999, screenshot from a movie". You'd expect that the outcome would be some kind of random clusterfuck making no sense. See for yourself:

In most of the examples depicted, Midjourney takes the general composition of an existing image, which is interesting and troubling in its own right, but you can see that for example Thanos or Ellie were assembled from some other data. But the shot from Dune is too good. It's like you asked not Midjourney, but Google Images to pull it up.

Of course, when IEEE Spectrum started asking Midjourney uncomfortable questions, they got huffy and banned the researchers from the service. Great going, you dumb fucks, you're just proving yourself guilty here. But anyway, I tried the exact same set of keywords for the Matrix one, minus Midjourney-specific commands, in Stable Diffusion (setting aspect ratio given in the MJ prompt as well). I tried four or five different data models to be sure, including LAION's useless base models for SD 1.5. I got... things like this.

It's certainly curious, for lack of a better word. Generated by one of the newer SDXL models that apparently has several concepts related to The Matrix defined, like the color palette, digital patterns, bald people and leather coats. But none of the attempts, with any of the models, got anywhere near the Midjourney output in quality or similarity to the real thing. I got male characters with Neo hair but no similarity to Keanu Reeves whatsoever. I got weird blends of Neo and Trinity. I got multiplied low-detail Neo figures on black and green digital pattern background. I got high-resolution fucky hands from a user-built SDXL model, a scenario that should be highly unlikely. It's as if the data models were confused by the lack of a solid description of even the basics. So how does Midjourney avoid it?

IEEE Spectrum was more focused on crying over the obvious fact that the data models for all the fucking image generators out there were originally put together in a quick and dirty way that flagrantly disregarded intellectual property laws and weren't cleared and sanitized for public use. But what I want to know is the technical side: how the fuck Midjourney pulls an actual high-resolution screenshot from its database and keeps iterating on it without any deviation until it produces an exact copy? This should be impossible with only a few generic keywords, even treated as a group as I noticed Midjourney doing a few months ago. As you can see, Stable Diffusion is tripping absolute motherfucking balls in such a scenario, most probably due to having a lot of images described with those keywords and trying to fit elements of them into the output image. But, you can pull up Stable Diffusion's code and research papers any time if you wish. Midjourney violently refuses to reveal the inner workings of their algorithm - probably less because it's so state-of-the-art that it recreates existing images without distortions and more because recreating existing images exactly is some extra function coded outside of the main algorithm and aimed at reeling in more schmucks and their dollars. Otherwise, there wouldn't be that much of a quality jump between movie screenshots and original concepts that just fall apart into a pile of blorpy bits. Even more coherent images like the grocery store aisle still bear minor but noticeable imperfections caused by having the input images pounded into the mathemagical fairy dust of random noise. But the faces of Dora Milaje in the Infinity War screenshot recreations don't devolve into rounded, fractal blorps despite their low resolution. Tubes from nasal plugs in the Dune shot run like they should and don't get tangled with the hairlines and stitches on the hood. 
This has to be some kind of scam, some trick to serve the customers hot images they want and not the predictable train wrecks. And the reason is fairly transparent: money. Rig the game, turn people into unwitting shills, fleece the schmucks as they run to you with their money hoping that they'll always get something as good as the handful of rigged results.

1 note


Text

Top 7 tricks for crafting effective ChatGPT prompts

Think about talking to an AI that understands what you're saying and answers in a way that seems real. The key to unlocking this potential and making interesting and meaningful interactions is to make good prompts for AI chatbots like ChatGPT.

This article will discuss the seven best ways to write prompts that get smart answers from ChatGPT. This will turn your chats into interesting conversations that keep people returning for more. You can use these tips to improve your interactions with AI, whether you want to improve customer service, make more interesting story adventures, or have more interesting conversations.

How do I use ChatGPT?

OpenAI's ChatGPT is a state-of-the-art AI language model that came out in November 2022. ChatGPT quickly became famous by getting one million users in just five days after it came out. It could answer any question asked of it. And ever since, the number of people using ChatGPT has kept going up.

While ChatGPT is great, it does have some problems.

It doesn't know about anything after 2021, because it was trained on a huge dataset that was only updated up to that point.

With a lot of users comes another ChatGPT limitation. Many people use it, so it's either down or full most of the time. To get around this, OpenAI created ChatGPT Plus, a paid membership.

ChatGPT does not make AI pictures; it is only a text-based language model.

Chatsonic, the ChatGPT alternative, has extra features that address these problems with the most popular language model. Chatsonic also has a lot of tools that make your life easier with AI.

The first thing that Chatsonic does is give you real-time accurate information. It works with Google right away and doesn't miss anything.

You can try different things to make your own AI images for your material.

The best AI art is made by Chatsonic, which uses both Stable Diffusion and Dall-E.

Why do you tell Chatsonic what to do? You can do that, and it will also talk about the answers.

You can connect your best tools to the Chatsonic API to make your work faster. Our API use cases can help you learn how to use it well.

Do you want to do your studies faster? Don't switch between tabs; use the Chatsonic Chrome Extension instead. See how the Chrome application can be used in other ways.

A large language model (LLM) uses natural language processing (NLP) to power ChatGPT. Because it was trained on people's writing on the internet, it's a computer program that can understand and copy text-based data. It's like "talking" to a conversational AI robot when you use it.

But you can't tell the LLM what to do like you would a human coworker, even though it can make an answer that sounds pretty human. Rather than that, you need to use particular terms and phrases that provide ChatGPT with the appropriate context for the outcomes you are looking for.

Why should you make a great ChatGPT prompt?

If taught using human-made databases, language models like ChatGPT and Chatsonic should be able to understand how people speak.

Even though they learn from human-made data, they are still machines. You must always tell it how to do the job and make good AI-generated material.

Coming up with the right ChatGPT prompt can lead to

Meaningful and interesting conversations, like asking follow-up questions and using the answers correctly to learn more about a certain idea.

ChatGPT can help you with your work by giving you accurate predictions and useful comparisons.

You can get more done and finish things on time, because the responses come faster.

Improving the ideas and views of ChatGPT by making sure your prompt is clear.

7 tricks for crafting effective ChatGPT prompts

Being honest, it's not hard to write ChatGPT questions. But it's also not easy to write the proper ChatGPT prompts so that the language model gives you valuable results.

Our AI model training experts have used many AI tools, such as ChatGPT and Chatsonic, and have developed some tips to help you get the most out of ChatGPT.

Don't forget to look into the 7 creative ways chatGPT can also be used.

1. Begin with an action word.

Do you want AI to pay attention to you? Then never start your ChatGPT question with "Can you?" Instead, use action words like create, write, make, or generate.

No action words in the ChatSonic ChatGPT Prompt: How do I become a content marketer?

ChatSonic ChatGPT Prompt with Action Words: Plan how you will become a content marketer in 2023.

Look at the change! The first answer is general and doesn't say what should be done next. ChatSonic, the alternative to ChatGPT, on the other hand, gave a thorough, step-by-step plan for how to become a content marketer.

2. Establish context.

A doctor can better diagnose a patient's illness if they know more about the signs. In the same way, ChatSonic can come up with an answer that makes sense based on your question.

ChatSonic ChatGPT Prompt Based on Context: I've worked for three years as a program developer. I'm tired of coding and want to move on to something else. My degree is a B.Tech. in computer science, and I also know a lot about how software is used in business. Please list jobs that it would be easy for me to switch between. We also changed the personality to "Career Counselor" to get expert replies.

3. Be clear.

People don't like being unclear because it doesn't give them any helpful information. What's more, ChatSonic needs help finding good answers to vague questions.

It works best when you tell it to do something, like

This is the ChatSonic ChatGPT prompt: Write a business copy to get more people to open my emails. My product is called X. X could be a company, service, or group. What subject lines would work for a set of Y emails?

ChatSonic's answer was relevant and met user expectations because it included specifics like the number of emails in the chain and the subject lines.

4. Indicate the length of the response.

ChatSonic doesn't know whether you want a short, two-line answer or an entire paragraph for your question. If the prompt doesn't give a length, it will guess one. To keep things clear and avoid redos, it's best to say how long the answer should be in the prompt.

Asking ChatSonic to add or remove words from the text is another great way to use it.

ChatSonic ChatGPT Prompt with Response Length: In 300 words or less, explain what "content marketing" means.

ChatSonic can help you with more than just describing marketing ideas. It can also help you come up with content creation strategies.

5. Give the AI a hand.

As we've already said, AI is a machine that needs to be told what to do. When we say "handholding," we mean it. It would help if you told the AI what to do, what to avoid, and what to prioritize in certain scenarios.

This is an example of a personalized eating plan that has rules.

ChatSonic ChatGPT Prompt with Conditions: I'm a woman who is 26 years old, and I want to lose 6 kg in 3 months. Make a two-week Indian food plan with 1700 calories. Please don't offer non-vegetarian food on Monday, Thursday, and Saturday. A lean protein diet should have 30% carbs, 40% protein, 20% fiber, and 10% fat.

ChatSonic has considered my request to leave out the non-vegetarian choice and responded accordingly.

6. Engage in role-play.

You can ask ChatSonic to role-play if you can't find your expert character (we'll be adding more soon). For instance, you want content marketing to help raise awareness of your business for your brand-new CRM software.

ChatSonic ChatGPT Prompt with Role-Play: Ten years of marketing experience for software goods are under your belt. You have developed marketing strategies for popular tools like Vimeo, Freshworks, Hubspot, and Zoho. Drawing on what you know about marketing, please tell me how to get people to know about my new CRM software, Zilio.

ChatSonic thought it knew a lot about marketing and included what works and what doesn't in its answer.

7. Use double quotation marks.

How do you make a word stand out when talking to someone or giving a presentation?

You can either say it out loud, stretch out how you say it so no one misses it, or put "air quotes" around it.

In the first line, you can see how important double quotes are to ChatSonic. The essay's title was "Content Marketing: An Introduction" with no quotes, but "Content Marketing: The Art of Reaching Your Target Audience" when there were quotes.
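As a rough illustration of how several of these tips combine, here is a minimal Python sketch that assembles a prompt string from a role, some context, an action word, conditions, and a length limit. The function name `build_prompt` and its parameters are made up for this example; it only builds text and does not call ChatGPT, ChatSonic, or any API.

```python
def build_prompt(action, task, *, role=None, context=None,
                 constraints=(), max_words=None):
    """Assemble a prompt string from the parts the tips describe.

    Hypothetical helper for illustration only: it joins plain sentences
    and knows nothing about any particular chatbot.
    """
    parts = []
    if role:
        parts.append(f"You are {role}.")       # tip 6: role-play
    if context:
        parts.append(context)                  # tip 2: establish context
    # tip 1: lead the request itself with an action word
    parts.append(f"{action.capitalize()} {task}.")
    parts.extend(constraints)                  # tip 5: spell out conditions
    if max_words is not None:
        # tip 4: state the response length
        parts.append(f"Answer in {max_words} words or less.")
    return " ".join(parts)


prompt = build_prompt(
    "explain",
    'what "content marketing" means',          # tip 7: quote the key phrase
    max_words=300,
)
print(prompt)
```

Whether a given chatbot responds better to a prompt built this way is something to check by trying it, not something the sketch guarantees.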

Conclusion

For better interactions with AI and better answers, learning how to make good ChatGPT prompts is a skill you should improve. You can get the most out of ChatGPT by using the top 7 tips in this article. These include starting with an action word, giving context, being clear and specific, stating the length of the response, spelling out conditions, using role-play, and putting key phrases in double quotation marks. Remember that practice makes perfect, so don't give up if your first tries aren't perfect. To have interesting and meaningful talks with ChatGPT, keep trying new things, and get better at writing prompts. Use these tips to get the most out of your interactions with AI.

Janet Watson MyResellerHome MyResellerhome.com We offer experienced web hosting services that are customized to your specific requirements.


0 notes

Note

Very important. (My own long post got fucking yeeted so short version here we go.) I'm actually gonna do a quick experiment, because I am a dumb and I have been curious about midjourney. It has an optional feature called blend. I'm gonna shove 2 of my own pictures together real quick.

These two.

and it's gonna spit out this, which at first glance is a neat little short hand of the two. A little janky, but neat, right?

Definitely a little janky on some but the idea is there. Don't mind the extra arms and legs and very broken anatomy. The pictures I did took me about a collective 12 hours between them. The Sun picture way longer than the Moon one, but still, about 12 hours between them. This quartet of generated pictures took maybe 30 seconds. That VAST difference in time sounds like a life saver at first, because WOW, EASY!... however it makes me want to die a little. A lot. Because while yeah, these ones aren't perfect, that's 4 full ass pictures done in a blink compared to me taking multiple days of working on the others. It makes me obsolete. But here's the thing. I made these 'myself' by inputting two pictures... But NEITHER main character is human. The anatomy and style didn't come from just my pictures. There's elements of other people's work in there that I have no idea the source. STOLEN works. But hey, quick and easy right? These look cool, creative, a good possible source of inspiration, even if they aren't sold directly or used in some way that's monetized... Except that pool they came from is the problem. THE MORE THIS SHIT HAPPENS the more artists, and WRITERS are gonna stop 'making new content' because it's gonna get scrubbed. We'll keep it to ourselves or just stop altogether. Because this is soul crushing. Further, that pool? That big chunk of stolen works that people don't even know have been taken? That'll dry up too. It'll be stuck in the same loops and eventually you'll start seeing the patterns. Once everything about this 'generated art' that's new and shiny and fun wears out, you'll look through sites like Redbubble and Etsy, and Amazon and see how many stickers and prints and books are just AI Generated Barf that's been re-hashed over and over again.
Look up 'adult coloring books' and see how many have the exact same broken formats, the 'not quite right' images, the uncanny valley vibe that doesn't come from someone having an art style that's just a little off. The images generated from my own two pieces are cool at first, until you realize that that's just how every prompt will turn into. And if you ever need something specific, you'll be stuck within the bounds of what exists already. Fuck originality. Fuck artists. Fuck writers. Fuck creatives. The only people that are really benefiting from the generative AI 'revolution' are the people running that show. Every other creative outlet is going to get run into the ground because as humans, we can't keep up. We can't compete. When it comes to man vs machine, if we don't get creative protections to keep our hard work from being sucked into it, we're just going to stop.

This is genuinely terrifying to me. I can admit that I can see where SOME ASPECTS of this can be helpful, using it as inspiration when you're stuck on a project, little shit that doesn't actually take over the purpose of artists and writers specifically. I've fucked around with it, I've gently used it to help me with backgrounds for personal things, or to get ideas that I want to try to make myself (specifically a crochet design as a PHOTO image I can try to build in real life and make my own pattern for, things like that). I've used character.ai as a fun little goofy RP relaxation thing... But as a replacement for hiring people, getting commissioned artwork, reading real fanfiction that's had people's time and dedication and SOUL put into it... There's no comparison. But people that aren't 'creators' don't understand how much it hurts to have dedicated... fuck, almost 30 years for someone like me into learning how to draw and color and set up compositions for a picture only to have something similar cranked out by an algorithm. IT HAS ITS PLACE... but deep down, it's just gross and disheartening when it's taken so seriously as a 'new way to make art'.

Can you please just tell us what is wrong with ai and why, I can't find anything from actual industry artists etc. online through Google, just tech bro type articles. All the tech articles are saying it's a good thing, and every pro I follow refuses to explain how or why it's bad. How am I supposed to know something if nobody will teach me and I can't find it myself?

I'll start by saying that the reason pro artists are refusing to answer questions about this is because they are tired. Like, I don't know if anyone actually understands just how exhausting it is to have to justify over and over again why the tech companies that are stealing your work and actively seeking to destroy your craft are 'bad, actually'.

I originally wrote a very longform reply to this ask, but in classic tumblr style the whole thing got eaten, so. I do not have the spoons to rewrite all that shit. Here are some of the sources I linked, I particularly recommend stable diffusion litigation for a thorough breakdown of exactly how generative tools work and why that is theft.

youtube

or this video if you are feeling lazy and only want the art-side opening statements:

Every time you feed someone's work - their art, their writing, their likeness - into Midjourney or Dall-E or ChatGPT you are feeding this monster.

Go forth and educate yourself.

827 notes


Note

How big of a threat do you think AI Art is to the employment of concept artists? Given how artists like RJ Palmer and Bogleech are panicked about it, you've worked in fields adjacent to that, and you've worked extensively with AI art, I'd presume you'd have some perspective on that.

AI art is going to shake up the art field, any new art tool worth its salt can and will.

I was training as a graphic designer when InDesign was finally starting to hit its stride in the late 90s, but I learned on QuarkXPress and learned old-school techniques in high school Newspaper club. I'd been dealing with dot-matrix printers and photocopier work since I was 8 at my dad's office.

So I got to see the graphic design industry in a state of panic through my professors and our various industry guests. All the EM-dashes and the declaration that the " on the keyboard is the inches mark and not the quote were protective measures for the industry so that talented amateurs wouldn't know the secret handshakes and couldn't "fake" their way into being seen as real graphic designers. And they were PISSED that Adobe InDesign was easy to use and automatically converted the measure-marks into "proper" punctuation.

Yet there's still a graphic design industry.

That said, I'd be curious if the ones that are actually freaked out have ever actually used the products. Because I've been in a down slump and I'm prone to stim, I have done pretty much nothing but dig into Midjourney and Stable Diffusion's brains and my experience doesn't match the observations of the terrified.

I think part of it is because people only see the results and they don't see the work. And there is work involved.

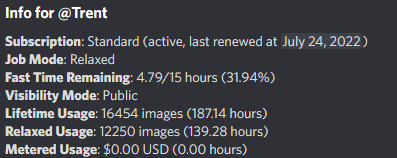

Iteration and Curation: I've posted a couple hundred pics from Midjourney so far. What you don't see is this:

Now, in Midjourney parlance "image" also includes 4-grid previews used while developing final images.

For each panel of "Glitch"/"The Bethesda Epoch", for instance, I generated at least eight options (usually more) and evolved several of them across many generations to get what I wound up with. The Bethesda Epoch took me days to put together and garners me feedback and response roughly equal to a 3d modeled piece I'd put together in the same time frame.

Truth of the matter is, you rarely get anything perfect first try, everything needs modification or massive amounts of reiteration to pass for final work.

Promptcraft: Spend even a little time on the discords and you can tell who is playing and who is trying to make art. Play is an entirely viable application of this technology (more on that later) but while this levels the technical skill barrier for a lot of people, it does not cover for a lack of vision or ideas, and it requires its own skill.

There's a big difference between "in the style of D&D art" and "as a D&D monster, full body, pen-and-ink illustration, etching, by Russ Nicholson, David A Trampier, larry elmore, 1981, HQ scan, intricate details, inside stylized border" in terms of what you get.

Play: Most people are just having fun. It's real easy for artists to take the ability to express the ideas in our heads for granted. Most of what you're seeing is people playing with ideas they've been unable to express before. A lot of what I do with it is play, too.

Accessibility: My hands cramp when I draw these days, depression and other problems frequently knock my motivation and energy out of me, but I can use AI to put my ideas out there when the other parts of me aren't cooperating.

Limitations: The tech looks miraculous, but it can't do everything. In fact, it can't do a lot of things. The artist is still needed for the vision, for the ideas, to work the outputs into something meaningful, to supplement the outputs with human intention so a copyright can be involved, the list goes on.

Even Rembrandt used a camera obscura.

180 notes


Text

AI Art For Procedural Generation in 2022

Can you make a roguelike with GPT-3?

I find the discussion about "AI art" incredibly annoying. People talk past each other about an ill-defined concept. They put forth impassioned but bad arguments for what should in my view have been the null hypothesis. Trolls use this opportunity to pick fights with furries and insult artists with MFA degrees. It's just bait.

Many arguments are too general, they prove too much: If you think that all copyright is fake and you'd rather live in a world where Tesla can ignore the GPL but you can use Disney characters, then come out and say so. Don't talk about whether something currently is "fair use" or legal if you are making a moral argument (about what should be legal), or vice versa. If you say that people should not "waste" their time painting pictures or writing novels and painting should only ever be a hobby and not a career, I don't think it's worth responding to. If you think that Midjourney "learns to paint just like a human artist", then don't say anything.

I know I risk attracting trolls by even touching this topic, and a lot of this is so stupid because it happens on twitter, not because of the subject matter, but I think I can offer a unique perspective and conclusively answer at least one particular question: Should text-to-image AI art and neural network text generation as it exists today be used for procedural generation in computer games?

No. It shouldn't. It can, but it shouldn't. It can be used, but then you'd just do it so you can write "made with state of the art AI generation" on the back of the box.

The term "AI Art"

I am using "AI Art" to refer to text-to-image systems such as Stable Diffusion, Dall-E 2, Midjourney, and so on. I think "AI Art" is a misnomer, and there should be a different term that refers to the thing "AI Art" refers to, such as "language model combined with reverse image recognition" or "transformer+GAN based statistical learning" or "text prompt based big data image generation".

It's easy to define what "AI Art" is not. "AI Art" is not backed by AGI, not backed by symbols, does not use taxonomies or ontologies. Those systems are based on neat ideas, but the products we see are often scruffy and full of hacks and special cases to guarantee certain results.

Game AI

In a similar vein, "Game AI" is a misnomer. Most "AI" needed in games is just simple state machines and A*. Occasionally you see something like STRIPS, e.g. HTN or GOAP, or very simple reinforcement learning, or pre-trained neural networks.
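To show how small a "simple state machine" can be, here is a minimal Python sketch of a guard NPC driven by a transition table. The states, event names, and the `step` function are invented for this illustration and don't come from any particular game or engine.

```python
from enum import Enum, auto


class GuardState(Enum):
    PATROL = auto()
    CHASE = auto()
    ATTACK = auto()


# (current state, event) -> next state. Event names are hypothetical.
TRANSITIONS = {
    (GuardState.PATROL, "see_player"): GuardState.CHASE,
    (GuardState.CHASE, "lose_player"): GuardState.PATROL,
    (GuardState.CHASE, "in_range"): GuardState.ATTACK,
    (GuardState.ATTACK, "out_of_range"): GuardState.CHASE,
}


def step(state: GuardState, event: str) -> GuardState:
    """Return the next state; events with no matching rule are ignored."""
    return TRANSITIONS.get((state, event), state)


state = GuardState.PATROL
for event in ["see_player", "in_range", "out_of_range", "lose_player"]:
    state = step(state, event)
    print(event, "->", state.name)
```

Everything the guard can do is visible in one table, which is a big part of why this kind of "AI" stays predictable for players and designers alike.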

But games do not need to be intelligent. Game design can be intelligent, and then the intelligent stuff is hard-coded. NPCs don't need to be smart and unpredictable, they need to be well-written, or balanced, or predictable enough for the player to know how to protect them during escort missions.

AI opponents don't need to be smarter than the player. They should be just smart enough to pose a challenge, but that challenge can also be created by giving the opponent more resources.

AI techniques in games are used to fill in the blanks in player input through unit pathing and similar systems, but those are usually not called "Game AI".

(I said this all before, so I am not going into more detail)

Procedural Generation

Procedural content generation at run-time - PCG for short - is used in games to make every run, every match, or every play session unique. If you are a game developer deciding whether to use procedural generation, you are acutely aware of the trade-offs that can be made here, and why you would or wouldn't do PCG. If the game is meant to be played only once, you can generate content procedurally, but there is no need to do so at runtime. You could generate some candidates, choose the best one, tweak it, and ship it with the game. Procedural generation allows the game world to extend infinitely in all directions. But does it need to? The boundaries between "randomised" and "procedurally generated" are vague and fuzzy.

Most procedural content generation is just simple combinatorial picking: "Red Mace (+3) of Valor" could be a weapon by picking a colour, noun, and suffix, plus a damage modifier appropriate to the part of the dungeon the player is in, plus some kind of final check to make sure nothing too game-breaking is generated.
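A minimal sketch of that kind of combinatorial picking, in Python. The word lists, the depth-to-bonus formula, and the cap of +5 are all assumptions made up for the example; the cap stands in for the "final check" against game-breaking items.

```python
import random

# Hypothetical word lists; a real game would have far more entries.
COLOURS = ["Red", "Blue", "Black"]
NOUNS = ["Mace", "Sword", "Axe"]
SUFFIXES = ["of Valor", "of Haste", "of Embers"]
MAX_BONUS = 5  # final sanity check: never hand out more than +5


def generate_weapon(dungeon_depth: int, rng: random.Random) -> str:
    """Pick a colour, noun and suffix, plus a bonus scaled to dungeon depth."""
    colour = rng.choice(COLOURS)
    noun = rng.choice(NOUNS)
    suffix = rng.choice(SUFFIXES)
    # Deeper floors give bigger bonuses, clamped to the range 1..MAX_BONUS.
    bonus = min(MAX_BONUS, max(1, dungeon_depth // 2 + rng.randint(0, 1)))
    return f"{colour} {noun} (+{bonus}) {suffix}"


rng = random.Random(2022)  # fixed seed so a run can be reproduced
for depth in (1, 4, 9):
    print(depth, generate_weapon(depth, rng))
```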

Most procedural generation in games is not based on general-purpose AI, but instead uses purpose-built algorithms that are inspired by AI techniques, or employ some kind of AI technique or algorithm as one pass of a multi-step process: To design a level, you could generate rooms, connect rooms, check room connectivity (the AI step), place furniture, place monsters, place loot, and so on. There is no need to throw all your level design constraints into a discrete solver, it suffices if you use a much more specific generation algorithm, or even an ad-hoc process that may or may not satisfy those constraints, with a check in the end.
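The "ad-hoc process with a check in the end" can be sketched in a few lines of Python. This toy version treats rooms as numbered nodes, throws in random corridors, and uses a breadth-first search as the connectivity check, retrying until a layout passes. The function names and the retry limit are assumptions for illustration, and furniture, monsters, and loot are left out.

```python
import random
from collections import deque


def generate_corridors(room_count, corridor_count, rng):
    """Pick distinct random pairs of rooms to join with corridors."""
    if corridor_count > room_count * (room_count - 1) // 2:
        raise ValueError("more corridors requested than room pairs exist")
    corridors = set()
    while len(corridors) < corridor_count:
        a, b = rng.sample(range(room_count), 2)
        corridors.add((min(a, b), max(a, b)))
    return corridors


def is_connected(room_count, corridors):
    """Breadth-first search from room 0: can every room be reached?"""
    neighbours = {room: [] for room in range(room_count)}
    for a, b in corridors:
        neighbours[a].append(b)
        neighbours[b].append(a)
    seen = {0}
    queue = deque([0])
    while queue:
        room = queue.popleft()
        for other in neighbours[room]:
            if other not in seen:
                seen.add(other)
                queue.append(other)
    return len(seen) == room_count


def generate_level(room_count, corridor_count, rng, max_attempts=1000):
    """Generate layouts until one passes the connectivity check."""
    for _ in range(max_attempts):
        corridors = generate_corridors(room_count, corridor_count, rng)
        if is_connected(room_count, corridors):
            return corridors
    raise RuntimeError("no connected layout found; loosen the parameters")


print(sorted(generate_level(6, 7, random.Random(1))))
```

Generate-and-test is wasteful compared to a solver, but it is easy to read, easy to tune, and usually fast enough at dungeon scale.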

Most procedural generation in games has tweakable input parameters.

The reason for all this is that PCG is there to surprise the player, to provide variety within tight constraints, to make different runs or playthroughs of different players unique, but not so unique that it becomes a different game every time. Procedural content generation uses building blocks that were carefully designed, and puts them together according to rules the player doesn't know, but he recognises that the output follows familiar patterns. PCG creates slightly novel or merely randomised situations that are clearly legible to players. PCG creates parameterised content that fits into existing game mechanics and systems. Prompt-based systems using deep neural networks are not as good at generating pieces that fit into systems as GOFAI-inspired systems and scruffy, ad-hoc hand-written generators.

Output

Games usually can't work with raw text, whether generated by GPT-3 or scraped from the web. They can't work with unlabelled images, whether generated by Midjourney, found through Creative Commons search, or returned by a Bing Images query (Google don't even let you use their image search through an API).

AI art generators don't output rigged 3D models, and they usually don't output the same character from different angles, in different light, or making different expressions. They output multiple candidate images. Those images need to be assessed and filtered.

Text generators might output a quest when prompted to. That blob of text is useless to game systems. What kind of action is needed to complete the quest? These parameters need to be parsed out of the text.

Would you have to ask GPT-3 questions about the thing it just generated in order to use it in your game?
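For contrast, here is a minimal Python sketch of what a quest looks like when the game generates the parameters first and the text second. The `Quest` class, its fields, and the progress dictionary are hypothetical; the point is only that the game can check completion without parsing any prose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quest:
    """A quest the game systems can check directly (hypothetical structure)."""
    action: str   # e.g. "slay" or "collect"
    target: str   # e.g. "goblin"
    count: int

    def describe(self) -> str:
        """Flavour text is derived from the parameters, not the other way round."""
        return f"{self.action.capitalize()} {self.count} {self.target}s."

    def is_complete(self, progress: dict) -> bool:
        """progress maps (action, target) pairs to how many were done so far."""
        return progress.get((self.action, self.target), 0) >= self.count


quest = Quest("slay", "goblin", 3)
print(quest.describe())
print(quest.is_complete({("slay", "goblin"): 2}))
```

A text generator hands you only the output of something like `describe()`, and you are left reconstructing the fields from it.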

Input

How do you prompt these things? How do you prompt for a dwarf character, an elf character, and a human in the same art style? How do you prompt for a level, for a floor plan, for a book? Do you have to input all background knowledge and lore every time?

Do you generate text and feed the generated text into the image prompt? If you have quests and items, do you verbalise game systems? Do you need to mark special game constructs as important so they appear in the output?

If you generate images from images, can you verbally ask the AI to adhere to certain animation constraints?

Adaptability

Procedural generation systems usually have many dials you can fiddle with, parameters that are easily adaptable. In dungeon generation, I can think of the size of a room, the length of a corridor, the backtracking behaviour, the chance to spawn a set piece. All those can be tuned to the gameplay mechanics, and gameplay parameters like drop rates and monster spawn chances can in turn be tuned to level geometry.

Maybe your game can generate a room that looks like a trap, or it can show you a sleeping ogre with an unknown treasure chest in a side room, and a main path with some traps and puzzles. The game would know to put a (+1) sword in the main path, and a (+3) sword in the ogre challenge room.
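A minimal sketch of what those dials might look like in Python. The parameter names and default values are invented for the example; what matters is that every number is exposed and can be tuned against the gameplay mechanics.

```python
from dataclasses import dataclass


@dataclass
class DungeonParams:
    """Tunable generator dials (names and defaults are assumptions)."""
    max_room_size: int = 8
    max_corridor_length: int = 12
    set_piece_chance: float = 0.1
    main_path_bonus: int = 1       # modest reward on the safe route
    challenge_room_bonus: int = 3  # better reward behind the sleeping ogre


def sword_bonus(room_kind: str, params: DungeonParams) -> int:
    """Scale the reward to the risk the player took to reach the room."""
    if room_kind == "challenge":
        return params.challenge_room_bonus
    return params.main_path_bonus


params = DungeonParams()
print(sword_bonus("main_path", params), sword_bonus("challenge", params))

# A harder difficulty setting is just a different set of numbers.
hard = DungeonParams(set_piece_chance=0.3, challenge_room_bonus=4)
print(sword_bonus("challenge", hard))
```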

I don't know how to do that with language models, text-to-text or text-to-image. Maybe I could have a long paragraph of exposition about game difficulty when I talk to GPT-3.

Randomness

How would you inject randomness into the generation process? Sure, you could just rely on the internal state of the neural network being slightly different each time. That might risk generating three samey outputs and one wacky one.

How can we generate interesting ideas? How can we generate Captain Chihuahua, a pirate dog with a coffee mug for a hand?

One way would be to randomise the prompt, to generate a prompt from a grammar, so we have a ninja horse, a cowboy chicken, and a pirate Chihuahua. Generating those via GPT-3 and then feeding them into image generation might prove difficult, but using a grammar limits your options and defeats the purpose of "advanced AI".
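Generating a prompt from a grammar takes very little code. Below is a minimal Python sketch; the grammar itself (jobs, animals, quirks) is made up for the example, and the resulting string would still have to be handed to whatever image generator you use.

```python
import random
import re

# A tiny hypothetical grammar: each symbol maps to its possible expansions.
GRAMMAR = {
    "character": ["a {job} {animal}", "a {job} {animal} with {quirk}"],
    "job": ["ninja", "cowboy", "pirate"],
    "animal": ["horse", "chicken", "Chihuahua"],
    "quirk": ["a coffee mug for a hand", "an eyepatch"],
}


def expand(symbol: str, rng: random.Random) -> str:
    """Pick one expansion for symbol and recursively fill its {placeholders}."""
    template = rng.choice(GRAMMAR[symbol])
    return re.sub(r"\{(\w+)\}", lambda m: expand(m.group(1), rng), template)


rng = random.Random()
for _ in range(3):
    print(expand("character", rng))
```

The limitation is visible right in the dictionary: the generator can never produce a job, animal, or quirk that somebody didn't type in first.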

You could of course reach into the network (well, if you have it running on your local machine and understand how it works), and access a compressed distributed representation, latent space, sparse autoencoder, whatever non-linear thing that looks like principal components except non-linear and makes for impressive grant proposals. Then you take the vector for "chihuahua" and go from there into a direction in latent space where no training example had gone before, to generate an eldritch lizard Chihuahua. Then you feed that vector into your language model and hope you get a sensible string of text to refer to that thing.
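Stripped of the jargon, "going in a direction in latent space" is vector arithmetic. The Python sketch below uses three-dimensional toy vectors with invented numbers; real models use hundreds or thousands of learned dimensions, and nothing here touches an actual network.

```python
# Toy "embeddings" with made-up values, purely for illustration.
TOY_EMBEDDINGS = {
    "dog": [1.0, 0.0, 0.2],
    "lizard": [0.0, 1.0, 0.2],
    "chihuahua": [0.9, 0.1, 0.8],
}


def move_in_latent_space(start, source, target, strength):
    """Shift start along the direction that leads from source to target."""
    direction = [t - s for s, t in zip(source, target)]
    return [x + strength * d for x, d in zip(start, direction)]


# Push "chihuahua" along the dog-to-lizard direction.
lizard_chihuahua = move_in_latent_space(
    TOY_EMBEDDINGS["chihuahua"],
    TOY_EMBEDDINGS["dog"],
    TOY_EMBEDDINGS["lizard"],
    strength=1.0,
)
print(lizard_chihuahua)
```

The arithmetic is the easy part. Turning the resulting vector back into an image or a sensible name is where the hoping comes in.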

You could generate a random visual style.

With regular PCG, you can put a random texture on a random animal, resulting in a dog with scales that walks like a chicken. Imagine you could generate the eldritch lizard Chihuahua with AI: Would that make for better gameplay?

Bowls of Oatmeal

It should be easy enough to use Dall-E or Midjourney or Stable Diffusion to generate grass textures. I can even imagine a procedurally generated art gallery based on procedurally generated prompts, a procedurally generated family tree based on procedurally generated family members, or a procedurally generated library full of books written by GPT-3. None of those examples really interact with the mechanics of the game, but they fit perfectly.

I could also imagine a game where character portraits are generated according to exact specifications, but nobody notices that the game is randomised. The problem is not just that it all looks the same, but that it doesn't add anything.

Making it Work

I am sure some enterprising game devs will make it work in the near future. They may generate a library of books, or a city of NPCs with unique faces, or a visual novel where all the visual parts are generated from text.

I am also sure somebody will build an "AI art" system that generates fully rigged 3D characters or 2D characters with alternative facial expressions based on just a text prompt. It's only a matter of hooking up already-existing systems, and feeding in lots of training data.

Heck, I myself could build a procedural animation system that uses neural networks, character traits, and emotional states to procedurally generate body language. It wouldn't be cheap or easy, as I might have to pay actors to act out scenes in mocap suits, or pay click workers to annotate body language and posture in random movie clips, but it could be done. But I could already have done that ten years ago. Machine learning where you have a function with inputs and outputs and you train the machine to approximate the function based on examples - that's not new! The whole point of language models and text-to-image prompt-based generation is two-fold: You can use a lot of unlabelled data to train your big model, and you don't even need any labels or input-output pairs to use the model. To train a neural network, I say f(po-tay-toe)=po-tah-toe, f(eeh-ther)=aye-ther. In the world of GPT-3, you say to-may-toe and the AI figures out the rest.
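The old-school setup, a function approximated from labelled input-output pairs, fits in a few lines. This is only a sketch: it uses an ordinary least-squares line fit as a stand-in for a neural network, and `fit_line` and the example pairs are made up for illustration.

```python
def fit_line(pairs):
    """Fit y = slope * x + intercept to (x, y) examples by least squares."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in pairs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


# Labelled training data: every input comes with the output we want.
examples = [(0, 1), (1, 3), (2, 5), (3, 7)]
f = fit_line(examples)

if __name__ == "__main__":
    # The fitted function generalises to an input it has never seen.
    print(f(10))
```

Every training example here had to be labelled by somebody. That is the expensive part, and it is the part that prompt-based models let you skip.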

I could also imagine, like I said in the preceding paragraphs, generating text prompts from a grammar (but why not just generate the text then), parsing generated text and doing image segmentation (but why not parse a text or scan an image from a corpus), or doing some funky vector space structure stuff (I know the mathematical terminology, but I am deliberately being vague here, because it doesn't matter to this argument which type of embedding or representation or encoder we would use). But all that would just use machine learning to do things I can do without machine learning, in a more complicated way, to put them into a world that is based on gameplay code that has more in common with old-fashioned AI than with modern machine learning systems.

Uses for AI art

So we can't really use AI art for procedural generation. That doesn't mean AI art can't be used at all. It can obviously be used to generate characters, props, and environment art with a human in the loop. If you want to one-shot generate a pirate Chihuahua with a coffee mug for a hand, and you can't draw well enough to do your idea justice, then AI art is a way to get there. You could use any of the dozens of image generation systems to make characters and backgrounds and then manually rig them, select what fits into your style, make sure the characters don't all have the same face, and refine your prompts so you can generate everything in the same style.

But what you absolutely cannot do is let a computer decide whether a Chihuahua would wear pants on two legs or on four, or if instead maybe the coffee mug is carried around the neck of his first mate, who happens to be a St. Bernard.

11 notes


Text

I think a lot of people get angry at algorithm generated images because they feel like they're being tricked when the images are presented without comment, especially when it's of something like clothes or accessories

but

people who spend a lot of time making clothes and accessories as their own personal forms of art...we can clock the AI stuff pretty quickly 😅

BUT

I still don't know how much viewing algorithm generated images* on a phone screen versus a full-size monitor makes a difference in how believable they are, either

Me looking at stuff on a monitor may make the difference between me looking at a "garment" and identifying it as algorithm-generated, while the notes are full of people asking where they can buy it

*or even just heavily photo-edited images, which may be part of why people get fooled so easily so often on FB by badly edited images...especially older people whose eyesight isn't what it used to be (which is why I like having a big modern monitor)

9 notes


Text

Within a bourgeois society, it is obvious that proletarian artists are worse off than bourgeois artists, and it is not surprising that most artists aspire to be bourgeois artists rather than proletarian artists, just the same as most proletarians in general would rather be bourgeois. They want to be the people writing and producing their own animated cartoons, not the people doing in-between frames and rigging models for someone else's. They want to be making their own comic series, not doing flats or typesetting for someone else's. This is not because they are evil exploitative people but because they don't want to be poor. I do not fault anyone for this desire. But if we want to get rid of poverty and not simply avoid it, then we have to criticize capitalist property relations, even if there are otherwise well-intentioned people who really want to be capitalists themselves.

When an artist wants to make a living doing art under capitalism, they have two possibilities: they can get a proletarian job with limited creative freedom working as one part of a team on someone else's project, or they can go the harder but more creatively free petit bourgeois route of trying to build up their own brand in the hopes of one day getting the opportunity to be the lead on their own project and have other people working for them. These two routes have different class characters and thus different material interests. The former sees the artist generally sharing interests with the proletariat and the latter sees the artist generally sharing interests with the bourgeoisie and specifically the "small business owner" subclass.

So a question you could ask is why, if the bourgeois and petit bourgeois artists share material interests with the bourgeoisie, do they seem to be so anti-AI when so many of the more business-oriented members of the bourgeoisie are so pro-AI? The answer is, as I have been trying to make clear, that "AI art is theft" is not fundamentally an anti-AI position. It is a position against the replication of specific art styles, the "brands" that petit bourgeois artists are trying to build up as their own in the hopes of achieving success as a full-fledged bourgeois artist a la Walt Disney. They don't care that computers are making art, they care that computers are making their art. So long as they are able to maintain ownership over their art, so long as they can turn their style and "brand" into a piece of profitable intellectual property that others are forbidden to mimic without authorization, then AI tools pose little risk to their bottom line.

As you seem to already understand, the proletarian argument against AI is not "AI algorithms are stealing my intellectual property", it is "my boss can replace me with a computer and I have no say in this decision". We both understand here that this is fundamentally not about AI but about who controls the workplace. That is why the form of the argument is important. Bourgeois co-option of anti-AI sentiment seeks to make sure we are saying the former and not the latter, that we are defending capitalist property relations with regard to art and culture and not critiquing the exploitative relationship between the proletariat and the bourgeoisie. "AI art is theft" is bourgeois logic because it carries with it bourgeois notions of property.

I do not wish to correct bourgeois thinking among the proletariat because I'm trying to be a pedant or a know-it-all. I don't think I'm particularly smarter than any other proletarian. I want to correct this thinking because revolutionary class consciousness depends on correctly interpreting and analyzing the world around us from a revolutionary and proletarian perspective. Yes, I could have and probably should have been more sympathetic to artists in my original post. It was admittedly excessively sarcastic and dismissive of fan artists, most of whom are not petit bourgeois. But my message remains the same: to consider AI art to be theft is to assume a particular bourgeois perspective on property relations that is contrary to the interests of the proletariat.

Fanartist, directly after drawing the forty-seventh page of their unauthorized Marvel x Riverdale graphic novel: AI is bad because they didn't get permission before using other people's art.

161 notes
