#<- just solved a simple css issue that would have been solved like that if i had taken fucking time lmao


Programming notes

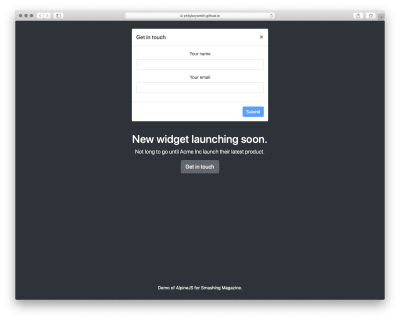

1) Project Modal: the project is supposed to be able to transition with a button press.

2) Project Pomodoro: isn't working at all/incomplete.

3) Project Personal Website: it's bland and the CSS page isn't working at all.

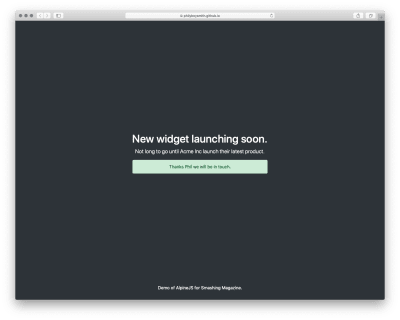

4) Project Navbar: incomplete.

5) Project quizgame: bland, not responsive, and the CSS page is not responding.

6) Project Review Carousel: not responsive.

7) Project sidebar: complete failure.

8) Project survey: bland.

9) Project calculator: success, but could be better.

10) Project addressbook: unresponsive.

11) Project FAQ: the project is incomplete; content keeps repeating, not in the way I wanted; bland.

12) Project sticky navigation bar: didn't work at all. The final product came out like a newspaper article. I'm thinking this is either 1) my laptop is trash or 2) the software needs to be updated.

13) Project Restaurant: I made it simplistic, but even if I added features from the tutorial, I don't know if that's possible with either the laptop or an outdated VS IDE.

14) Project Tabs: as per usual, the tabs aren't appearing like in the tutorial. I believe it has something to do with the link that I didn't use. Or better yet, to address the elephant in the computer: for some reason the code isn't hiding itself like in the tutorial, so it's basically the same as yesterday's project. The content should basically be nested behind one another.

*So I think I should take a day off from coding and finally update Visual Studio. A part of me feels like an ass continuing even though it's not coming out right. *Updated as of 3/31/23.

15) Project Simongame: after updating the IDE I somehow gained another IDE version of VS. The code is semi-acting up, but I believe it's on me, and I chose to slow down on this project because the tutorial guy is showing his mistakes, showing a thought/problem-solving process I miss. Rethinking my learning approach.

16) Project Musicplayer

*Previewing a file that is not a child of the server root. To see fully correct relative file links, please open a workspace at the project root or consider changing your server root settings for Live Preview.

-Decided to mimic the mp3 skull website for the project, and after completing it to my satisfaction I plan on watching a tutorial to find out the differences.

-The website can be broken down into: I. two major containers II. about 5 lists III. 3 paragraphs IV. 2-3 search bars. I also wish to add tabs.

Taking longer than I thought it would, but it's apparent to me that the problem lies in the div classes and in compartmentalizing the aspects of the website into classes for better control. I have been making this harder for myself. I believe the best course of action is to reread W3Schools and, after this project, do simple projects using tags I don't often use or understand. I started to get a better feel for web development, but I still have a ways to go with HTML and CSS.

*I want to hit over 100 (150-200) projects before moving on to hacking, machine learning, AI, and algorithms. *The ultimate end game is to have an acquired skill that not only puts money in my pocket but allows me to map major cities. *The appropriate terminology is nested div tags.

17) Blog website replica (Yola): got the basics of the site down, but the CSS is stumping me, so essentially, in a sense, I have gone nowhere.

*The background image and all other images refuse to load.

*The loading of the background images is not working because I am not communicating with the right folder.

*The folder that I need to communicate with is either one above or one over. Either way, it's a pain to try to learn the proper technique and describe the issue correctly to the search engine.

The problem comes down to not being able to move effectively through files, just like in hacking. *Section tags to divide the website.
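The "one above or one over" question is a relative-path question. Here is a minimal sketch, assuming a made-up layout where the stylesheet sits in `site/css/` and the images sit in `site/images/` (these folder and file names are hypothetical, not the real project). It uses the built-in `URL` class to show where each relative reference ends up, since a `url()` inside a stylesheet is resolved relative to the stylesheet itself:

```javascript
// Hypothetical layout (names are assumptions, not the real project):
//   site/index.html
//   site/css/style.css
//   site/images/bg.png
const stylesheet = "file:///site/css/style.css";

// Resolve a relative reference the way a browser would:
// relative to the file that contains the reference.
function resolveFrom(baseFile, relativePath) {
  return new URL(relativePath, baseFile).pathname;
}

// "bg.png" looks next to the stylesheet, inside css/, so it misses the image.
const sameFolder = resolveFrom(stylesheet, "bg.png");

// "../images/bg.png" goes one folder up, then over into images/.
const upAndOver = resolveFrom(stylesheet, "../images/bg.png");

console.log(sameFolder); // /site/css/bg.png
console.log(upAndOver); // /site/images/bg.png
```

If the first form is what the CSS contains, the image will not load even though the file exists one folder over.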

-Now the plan is to alter the JSON file to give me permission to the image folder, since the link didn't work; otherwise I'll play with Bash for other alterations to the file.

In Unix-based systems like Linux and macOS, the user can use the “sudo” command to run the command with administrative privileges. In Windows, the user can right-click the Command Prompt or PowerShell and select “Run as administrator”.

Some key takeaways are given below that will help you get rid of the error efficiently:

The error message permission denied @ dir_s_mkdir indicates that the user does not have the necessary permissions to create a directory in the specified location. The location in question is “/usr/local/frameworks” which may be a protected system directory or owned by another user. To resolve the issue, the user may need to change the permissions on the directory or run the command as an administrator or superuser. They may have to re-install the python dictionary as well. In some cases, it may also be necessary to check if the directory already exists, and if so, delete it before attempting to create it again

Problems:

1) Background image wouldn't load.

-Solution attempt one: fix the syntax. Successful in connecting the CSS to the HTML page.

-Learned about file paths, only for it not to affect anything; nor did the Bash commands to transfer ownership of the file.

-brandonostewart is user and owner/group, so why doesn't it work?

-The terminal states I don't have permission or access to the file.

-The image files are above the Linux files: can't access them, no permission, and they don't show up in the list function in the terminal.

-I now believe all the problems I'm facing are because I didn't have the laptop fully set up; I just jumped right in.

-Everything has procedures, a setup, an order, a recipe.

-vmc container termina penguin

ERROR vsh: [vsh.cc(171)] Failed to launch vshd for termina:penguin: requested container does not exist: penguin

I believe at this current step the problem lies in the fact that there is no container. This means all my work was set up in empty space; I was building on nothing, and it appears obvious that I have reached the ceiling. As I see it, I only have two options left: 1) powerwash and start over from scratch, or 2) take the necessary steps, regardless of how far back it sends me, to set up each individual aspect of the Chromebook.

18) ToDo list: I got it to work, but the trade-off was that all my Linux files got deleted; therefore I'm starting over.

The first project is the simple to-do list. I want to make this one nicer than the first one I did. I plan on adding CSS and JavaScript so it works. The initial layout I want is a decorated background with a list that takes off tasks that are finished, moving said task from one side of the list to the other.

The issue I'm currently having is an HTML issue. From doing some projects, the issue I have is always a small technical detail or a syntax issue. The more boxes, the better the control, so the issue is what technicality I need to ensure my list transfers information as well as takes info in.

-I have no idea how to get the program to hold onto the input value, then display it. It's the only thing holding me back from finishing right now.

-I think the problem is I didn't add a display box for the code.

Where I'm at: I have a basic setup for the website.

-I need my info to be taken in by the display box

-then displayed on the website; once a task is done, it adds itself to the second half of the website

1) header 2) box1: initial info, original display 3) box2: where the finished task lies

-I need display boxes.

JavaScript not linking to the HTML page: this was the hurdle that has been fucking me over all day. While the syntax is correct, the program still hasn't worked. A problem from the past reoccurred: it asked for permission for the JavaScript file. First it said the file didn't exist, then that I needed permission. I still can't get that syntax correct for the terminal.
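The "hold onto the input value, then display it" step can be sketched without any page at all. This is one possible approach under my own assumptions, not the tutorial's code: the function names `createTodoState`, `addTask`, and `finishTask` are made up for illustration, and the two arrays stand in for box1 and box2.

```javascript
// Keep the tasks in plain arrays so both boxes can be redrawn from them.
function createTodoState() {
  return { pending: [], done: [] };
}

// Store the typed value instead of reading it once and losing it.
function addTask(state, text) {
  const trimmed = text.trim();
  if (trimmed === "") return state; // ignore empty input
  return { pending: [...state.pending, trimmed], done: state.done };
}

// Move one task from the first box to the second.
// Assumes task texts are unique, to keep the sketch short.
function finishTask(state, text) {
  if (!state.pending.includes(text)) return state;
  return {
    pending: state.pending.filter((task) => task !== text),
    done: [...state.done, text],
  };
}

let state = createTodoState();
state = addTask(state, "  buy milk ");
state = addTask(state, "write notes");
state = finishTask(state, "buy milk");
console.log(state);
```

On a real page, a button's click handler would pass the text input's `value` to `addTask` and then redraw the two boxes from `state.pending` and `state.done`.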

6/01 -Since I couldn't get the JavaScript to work and I restarted all the files in my Linux folder, my next course of action was to delete VS and try my luck with another IDE. Plans were halted after I put my JavaScript into repl.it and found out my syntax was indeed wrong.

-But now I'm not so sure, because I tried another basic web design program from YouTube with correct syntax and it didn't load correctly. Somehow I think it's my computer at this point. -After reopening the application, 2/3 of it worked. -This time JavaScript and HTML, not CSS. -Like with the to-do list: CSS and HTML, not JavaScript. -I feel like giving up on full-stack development, but I have learned too much not to have an idea of what needs to be done. I can vividly describe and analyze the problem; I just can't put it together.

I am going to start app development on VS and come back to full-stack development.

6/10 After downloading the .NET framework and discovering the difference between Visual Studio Code and Visual Studio, I wanted to develop at least one of each kind of application made possible with the framework (including machine learning/AI), including but not limited to Flutter development. Currently struggling to understand the sync capabilities of GitHub with Visual Studio Code (what I have). Also, I saw the Flutter development intro video. I am really just out of the woods as a beginner and am steadily heading to intermediate. Currently stuck on trying to finish this web application tutorial: terminal commands for the web application, branch work.

Changes to be committed: (use "git restore --staged <file>..." to unstage)

Untracked files: (use "git add <file>..." to include in what will be committed)

11/14 Since my last recordings, my current laptop has slowed down; it says it is low on memory, so I tried transferring to my tablet for programming, which was incredibly


Hey, so I had a question about Twine, sugarcube specifically, the ui of Foundations is pretty cool imo and was curious about how you were able to get it the way you did and wanted to know if you'd be okay giving an explanation as to how if it isn't any trouble and you're comfortable sharing it.

I personally have been using W3schools to learn about editing the margins and border radius which I've got a bit of a good grasp on, but have been having issues with getting rid of the toggle collapse and moving the position of the sidebar itself, which is my main concern rn.

Hey, no problem, I'll absolutely explain for you!

First of all, there are a couple ways to do the things you're mentioning. If you want to remove the sidebar or the toggle button (or any element, really) you can do that with a bit of JavaScript. For example, you can use this bit of code to remove the toggle to open and close the sidebar:

$('#ui-bar-toggle').remove();

Or this bit of code to remove the sidebar entirely:

$('#ui-bar').remove(); $(document.head).find('#style-ui-bar').remove();

Of course, if you want to not only remove them but replace them with something else, you'll need to do a bit of extra work. There have been people who have tried to move all the contents into a header bar, but it's probably easiest to just scrap everything and start over. That's how I created my UI.

To do this, create a passage entitled "StoryInterface." As soon as you create that passage, Twine will throw all of its default stuff out the window and whatever HTML you put in there will replace it. The only necessary component is having a div with the ID "passages," otherwise the passages themselves won't be able to render and there will be an error.

From there, you can build it up as needed. It's important to note that StoryInterface can ONLY include HTML. No Twine code or anything, just pure HTML.

I'll go a bit more in depth with the Foundations UI and how it's created below the cut, cause it might get a bit lengthy:

That's the StoryInterface passage for Foundations. It includes an overall container and three flexboxes inside of it- the passages, the sidebar on the left, and the icon tray on the right. The CSS determines how large each flexbox is, where it is in the order, and what it looks like.

Notice how the tray element also includes "data-passage='IconTray'"? That essentially creates the same sort of special passage as StoryMenu, allowing you to add things like Twine code inside of it that will be rendered as part of the UI.

That's the StoryMenu passage, which renders all of the things on the left sidebar. Normally you would use this to add extra code into the default Twine sidebar, but here I've used it to add all of the normal sidebar components back in. The links trigger SugarCube's UI API, which means when you press the "saves" button, the normal saves dialogue pops up (same with restart and settings). The arrows at the end use the Engine API to bring the player forward and backward.
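Since the screenshot isn't reproduced here, this is only a rough sketch of which calls such links could make, not the actual Foundations code. `UI.saves()`, `UI.settings()`, `UI.restart()`, `Engine.backward()`, and `Engine.forward()` are SugarCube 2 methods; the `buildMenuActions` helper and the stand-in objects are made up so the sketch can run outside Twine.

```javascript
// Map each sidebar button to the SugarCube call it would trigger.
// Inside a story, `UI` and `Engine` are SugarCube globals; they are passed in
// here so the sketch can also run with stand-ins.
function buildMenuActions(UI, Engine) {
  return {
    saves: () => UI.saves(), // opens the saves dialog
    settings: () => UI.settings(), // opens the settings dialog
    restart: () => UI.restart(), // opens the restart confirmation
    back: () => Engine.backward(), // one moment back in the history
    forward: () => Engine.forward(), // one moment forward in the history
  };
}

// Stand-ins (assumptions) that only record which call was made.
const calls = [];
const fakeUI = {
  saves: () => calls.push("saves"),
  settings: () => calls.push("settings"),
  restart: () => calls.push("restart"),
};
const fakeEngine = {
  backward: () => calls.push("backward"),
  forward: () => calls.push("forward"),
};

const actions = buildMenuActions(fakeUI, fakeEngine);
actions.saves();
actions.back();
console.log(calls);
```

In a real story you would skip the stand-ins and call the SugarCube globals directly from a link or button.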

It seems a bit intimidating, but when you break it down like that, it's actually pretty simple! To do something like a little header menu, you'd probably only need a few elements. The hard part is the styling- most of the time making that consisted of adjusting something by a few values, loading the game, and then adjusting it again until it looked just right.

I'd highly recommend, if you're a very visual person like I am, making a screen on paper or on an art program and drawing on elements where you think they should go. Sketching out my UI ahead of time changed the process from creating a UI to solving a puzzle- I wasn't trying to come up with things on the fly, I was trying to figure out what needed to be changed to create my vision.

(Also, just a note if you plan on doing this- always use percentages or vh/vw rather than pixel measurements when you can! Will seriously save you a headache when trying to make everything mobile-friendly.)
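If you already have pixel values from a mockup, converting them is simple arithmetic. A small sketch, assuming you know the screen width the mockup was drawn at (the `pxToVw` helper and the numbers are made up for illustration):

```javascript
// Convert a pixel measurement taken at a known design width into vw units.
// 1vw is 1% of the viewport width.
function pxToVw(px, designWidthPx) {
  return (px * 100) / designWidthPx;
}

// A 320px-wide sidebar drawn on a 1280px-wide mockup.
const sidebarVw = pxToVw(320, 1280);
console.log(`${sidebarVw}vw`); // 25vw
```

The sidebar then takes a quarter of the screen on a phone as well as on a desktop, instead of a fixed 320 pixels.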

#gosh sorry this was long#idk i'm bad at compressing my ideas haha#but yeah it's easier than you'd think#i was intimidated at first#but when i started i was like 'oh shit this is actually super simple'#code help#ask#anonymous


11 Reasons Why You Should Update Your Site this Year

DO YOU LOVE YOUR WEBSITE?

If the answer is no or not really, you shouldn’t even continue reading this article. Just go visit our shop, choose a design that you like and start building a website that you’ll be proud to share online, and invite prospects to browse through. A site that will effectively promote your work and help you book more clients. Otherwise, what’s the point in keeping this powerful marketing tool, investing time and effort into it, if it doesn’t help your business grow and flourish?

It’s not enough to JUST have a website. It needs to look good, it needs to present information in a clear, accessible way. It needs to create a strong first impression and make your prospects feel like they’ve found THE ONE. Otherwise, you’re competing for the attention of the same audience alongside another few hundred businesses. And let’s agree, that’s an exhausting game.

If you’re not sure whether your current website does a good job, here are 11 aspects you can look into to decide whether it’s time for a revamp (listed in no particular order). Know that our Biggest Sale of the year is coming soon. If you want to grab a higher discount code this Black Friday, join our Facebook Community group. That’s where all the secret deals will be shared!

1. It’s Not Memorable and Doesn’t Stand Out

What was cool 3 years ago, may not be this year. Maybe you were one of the early adopters of a new design style or aesthetic, but that was 2-4 years ago. Look around, everyone has similar website designs, especially if they use them ready out of the box. That’s why we created Flexthemes and Flexblock – to empower you with more design freedom. Create your blocks and page layouts. Make your website look and feel like YOU. It’s all simple and intuitive. No code skills are required.

And, you can personalize your mobile site version too, if you want.

2. It Doesn’t Reflect Your Brand

This one should be straightforward. Your website promotes your business online 24/7. If you’ve rebranded recently, if your photography style changed and evolved, if you’re offering new products and services to your customers – your website should reflect and advocate that change. Otherwise, you’re attracting the wrong type of clients, those who are after your old type of work. This brings us to reason #3.

3. You’re Not Attracting the Right Clients

We explain this in more depth in How Design Affects Your Business Growth article, but the point is – if you are not getting inquiries from the type of clients you want to work with, you are not positioning yourself correctly on the market. One golden rule is to carefully curate your work. Check the content and galleries you show on your website, remove the type of work you don’t want to do in the future (i.e. family, portrait, editorial, etc). Carefully select your BEST, fresh images (the type of projects you want to do more of) and include them on your homepage. This will immediately filter out some of the inquiries which are not a good fit for you. Make sure all your content is consistent, including colors, fonts, icons. An example of consistency in a website would be this.

4. Outdated Theme & Technologies

This one affects your visitors’ experience on your website. “Old school is cool” does not apply when it comes to functionality. The digital world is constantly changing and evolving. Web standards shift each year, dictating new tools and technologies for building a good website. Your clients’ preferences and tastes shift even faster. What was trendy yesterday, may not be next week. Hence, if you want your business to succeed, you need to be agile with your visual presentation and website design.

If you built your website over 3 years ago, most likely it’s far behind in terms of looks and functionality. It probably has outdated code that can slow down its loading speed or the way it responds on different devices. It may also not be compatible with some of the latest popular browsers. Take our example: 3.5 years ago we launched our first Classic themes with the drag-and-drop page builder, a year and a half ago we released FlexBlock, and in November 2019 the world greeted the first Flexthemes.

The difference between our old, classic themes and the Flexthemes is huge (we explain it here). Building a custom-looking website with our new themes is a whole lot easier. You don’t need to know code, you don’t need to add CSS snippets or hire a technical team. The new visual editor is so simple, your grandma could probably do it (yet please don’t make her do your tasks).

The bottom line here, if it’s been a while since you’ve built your site, start looking for website design inspiration and a more modern template to use as a base.

5. Mobile Friendly

I sure hope this is not the case, but if you still don’t have a mobile-friendly website – get a new theme NOW! Even if you do have a responsive or adaptive design, you still need to keep up with the latest trends. Newer themes include modern CSS code which allows your site to adapt nicely to any device. They also allow you to hide certain page blocks for mobile and ensure a faster and smoother user experience. Also, you must know that Google cares about the experience you offer to your mobile guests, since over 50% of website traffic comes from portable devices.

Offering more control to our clients over the design and functionality of their mobile websites has always been an important goal on our list. With Flexthemes, the steering wheel is in your hands. You have access to the mobile view of your website sections, can easily make adjustments, hide or show certain areas of your site to ensure a truly wonderful and unique browsing experience for your mobile guests.

If you’re not sure how many of your prospects access your website via their phone, if you’re wondering whether it makes sense for you to customize your mobile site – check your site’s stats. You can do that via your Google Analytics account if you have one connected to your website.

Mobile is important and it won’t go away in the next years. Don’t leave money on the table with a poorly performing mobile site, it’s one of those crucial business aspects that you can’t ignore anymore.

6. Your Website Loads Slow

Aim for a loading time under 4 seconds. If you’re not sure how quickly your site loads, use tools like Pingdom or GTmetrix to check how long it takes for your site to load, and which files are the troublemakers. Poor results could mean you have some work to do. Slow loading speed could be caused by several things: heavy, unoptimized images, underpowered hosting, and even an old, poorly performing theme.

The first one can be easily solved by following this Ultimate Guide to Saving Your Images for the Web. For the second one, check out this article describing 5 key criteria to choosing a good hosting provider. Yet, if the issue is caused by an old, outdated website template, you can start shopping for a new one.

View Flothemes website templates here.

7. Your Bounce Rate is High

This is extremely important. If you’ve been pouring your heart and time into blogging, SEO and marketing, bringing a lot of traffic to your website – yet the second they access your homepage (or any other page), they bounce right off of it – you have a problem. You’re losing leads and potential clients.

A high bounce rate indicates that you’re doing something wrong, either with content, with the navigation of your website, or the overall look and feel on your site. On average, a bounce rate between 40-60% is considered to be OK (this varies depending on your industry).

You can check your bounce rate via Google Analytics. Log in and go to Acquisition >> Overview tab. If it’s higher than 70%, follow these 9 Steps to reducing your Bounce Rate. If it doesn’t help, it’s time for a website redesign, and we do suggest seeking some expert advice in UX and UI.
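To make the thresholds concrete, here is a rough sketch using the classic definition of bounce rate (single-page sessions divided by all sessions). The function names and session counts are made up for illustration; the 40-60% and 70% cut-offs are the ones quoted above.

```javascript
// Bounce rate as a percentage: sessions that viewed only one page,
// divided by all sessions.
function bounceRate(singlePageSessions, totalSessions) {
  if (totalSessions === 0) return 0; // no traffic, nothing to measure
  return (singlePageSessions * 100) / totalSessions;
}

// Label a rate using the rough thresholds from the text above.
function rateBounce(percent) {
  if (percent > 70) return "high";
  if (percent >= 40 && percent <= 60) return "ok";
  return "check against your industry";
}

// Made-up numbers: 450 of 600 sessions left after one page.
const rate = bounceRate(450, 600);
console.log(rate, rateBounce(rate)); // 75 high
```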

In case you want to dive deeper into Measuring Performance and Tracking Success for your site, download our SEO guide here.

8. Security

To be honest, new or old, any website can be hacked. The experience is stressful and painful, especially when you lose information, or/and have to rebuild everything from scratch. However, older websites rely on older technology, therefore chances of a security breach are higher. Make sure your theme is updated and follow these 12 steps to make your site more secure.

9. SEO

Let’s start with the basics. Do you have a blog? You should, as it’s a powerful marketing tool to drive more traffic and users to your website, through keywords, internal links, and backlinks.

You also need to know that search engines love good, updated content. Every time you make an update to your site, Google and other search engines crawl and index your pages, thus your site ranking gets recalculated. If you keep your content updated and of GOOD QUALITY, you increase your chances of getting noticed on Search Result pages. Pair that with a charming, good-looking website, and you’re guaranteed more attention.

If SEO is something that you’ve been planning to dive deeper into, check out our SEO guide for photographers. Also, take a look at this incredible post by Dylan M Howell on Content Strategy and How to Blog like an Expert.

10. Do I need Call to Action?

Of course, you do. And it’s not just a button or link added here and there. It has to be placed strategically, to keep your users engaged with your website and browsing through more content. We explain How Call to Actions work in Design in this article, but the idea is to guide your site visitors through your content to your Best Work, then to your contact form or sales page. If your current website is limited in CTAs (Call to Actions) and doesn’t allow much customization – it’s time to get something more flexible and powerful. With Flexthemes for example, you can easily create new page layouts to support your sales campaigns and convert more users into prospects. They allow you to fully customize any layout, add buttons, images, videos, texts, and other design elements.

Never leave your site visitors wondering what they should do next. If it’s not subtle and intuitive, they’ll leave and never return. And that’s sadly a lost business opportunity.

11. All those Cool Apps & Integrations

An old, outdated website template may not keep up with all the new apps, plugins, and integrations available out there. So, if you want to integrate your favorite Studio Management System, Photo Editing app, or any other useful tools that simplify your workflow – be prepared to update your website on a regular 1-2 year basis, and use the most modern, up-to-date templates for that.


A Dish Best Served Code

I have a friend who likes to role-play online but doesn't know how to code - for the purpose of this story, I'll call her Blue. Around a week ago, she contacted me saying that she wanted to start up a new site and then handed me this list of jobs that needed to be done without ever asking me to help or whether I had the time to do any of it (note that she knows I'm currently a full time student and I'm right in the middle of my coursework period at the moment).

Right now this is all I can think of off the top of my head. We'll need a new header pic for you to add too but I have to find one first:

Add a skin

Fix add acount feature

Add/set up Discord

Add Ratios

Fix member groups and add emoticons

Add Quick Links

Add Custom Field Content to profiles

Figure out how to put those sub forum boxes in there

For those of you who don't know, this was pretty much building the entire site for her except for the main forums where the roleplaying would take place - I had adamantly refused to do those because I knew how long they would take.

So, I thought this was a little presumptuous of her to think that I just had the time to drop everything and do whatever she needed but, hey, we'd known each other for something like three years and I used to role-play with her, so I thought it wouldn't hurt to help her out just a bit. Besides, all the jobs on that list were very easy things that I could do in about ten minutes each at most.

Unfortunately, Blue decided to recruit a group of other people who I'd never met before to help her out. Where she and I were listed as site owners, the rest of them were listed as general admins, with two of them being moderators. No biggie: they can stick to their jobs and I can do mine. Didn't happen. These girls were horrible. I have no idea where she'd found them or what their relationship was but they stormed in like they owned the place, throwing their opinions about and editing bits of the site coding that I'd been working on in ways that, ultimately, totally messed everything up. I asked them to stop, they kept doing it. This went on for a while.

I'll be the first to admit that I have a short temper. But I put up with this for a couple of days and just tried to make general requests that they stop undoing my work. These were jobs that should have taken me just under an hour and a half to finish and yet were taking days because they continued to change things. I was messaging Blue separately and asking her to tell them to stop because she was supposed to be head of the staff team. She didn't do anything and, eventually, things started to get heated between myself and these four girls.

I would have thought Blue would side with me. I was wrong.

Instead, she basically told me to stop picking fights with them and to shut up and do my job. She then made two of the other girls moderators on Discord and gave them the highest permissions, something which they later used to continuously remove me from my staff position, making my job infinitely harder. I was starting to feel constantly targeted and it was seeping into the work I actually had to do for university. I ended up staying up all night three nights in a row, trapped in endless arguments with those other staff members and Blue herself. I was exhausted and stressed out, and my intention was to finish the jobs and then leave them alone. I probably should have left earlier on but given the history I had with Blue, I thought I might as well be nice enough to do this for her because I knew she was excited for her role-play.

The final straw came over the stupidest thing. She forgot to close a <u> tag somewhere. I fixed it, I reminded everyone to make sure to close their tags. Simple stuff, right? I would have thought people who were allegedly helping to build a site would know how to handle such basic things. I was suddenly bombarded by DMs from Blue telling me that she hadn't done anything wrong and if there was an error then to "fucking show me how it's supposed to go". I tried to explain, repeatedly, what the issue was and how to fix it and, in return, she began to argue that she wasn't doing anything wrong, despite there being obvious coding issues. Things got heated. I cracked. I was done.

https://i.imgur.com/Rb4DQ3X.png

https://i.imgur.com/QGtr3zq.png

After I'd tendered my resignation from ever helping her out again, she hadn't yet figured to remove my staff permissions on the site or on the Discord server so, while she was otherwise preoccupied flailing over suddenly being blocked and not knowing how to code anything else, I quietly went into the code I'd set up for her and removed one ; and one } and all the comments in that code which might have helped them figure out how to solve any future problems. (Lucky for me the control panel didn't update to changes in the site's css).

Then I sat back and watched the panic in their staff Discord when parts of their site stopped looking all pretty and started looking like this:

https://i.imgur.com/65wBT3T.png

https://i.imgur.com/VBYcLue.png

They removed me from the Discord a short while after that and IP-banned me from the site (because I guess they don't know I can just use a proxy). But I'm enjoying watching them panic as they try to figure out what I did. Joke's on them for having a guest-accessible Discord server right on the main page of their site.

Moral of the story, I guess, is don't mess with the only person on your site who knows how to code anything.

TL;DR: An Illiterate Pineapple asks me to help her code her site; treats me like shit; gets her code fucked with.

(source) story by (/u/aalyoshka)


An 8-Point Checklist for Debugging Strange Technical SEO Problems

Posted by Dom-Woodman

Occasionally, a problem will land on your desk that's a little out of the ordinary. Something where you don't have an easy answer. You go to your brain and your brain returns nothing.

These problems can’t be solved with a little bit of keyword research and basic technical configuration. These are the types of technical SEO problems where the rabbit hole goes deep.

The very nature of these situations defies a checklist, but it's useful to have one for the same reason we have them on planes: even the best of us can and will forget things, and a checklist will provide you with places to dig.

Fancy some examples of strange SEO problems? Here are four examples to mull over while you read. We’ll answer them at the end.

1. Why wasn’t Google showing 5-star markup on product pages?

The pages had server-rendered product markup and they also had Feefo product markup, including ratings being attached client-side.

The Feefo ratings snippet was successfully rendered in Fetch & Render, plus the mobile-friendly tool.

When you put the rendered DOM into the structured data testing tool, both pieces of structured data appeared without errors.

2. Why wouldn’t Bing display 5-star markup on review pages, when Google would?

The review pages of client & competitors all had rating rich snippets on Google.

All the competitors had rating rich snippets on Bing; however, the client did not.

The review pages had correctly validating ratings schema on Google’s structured data testing tool, but did not on Bing.

3. Why were pages getting indexed with a no-index tag?

Pages with a server-side-rendered no-index tag in the head were being indexed by Google across a large template for a client.

4. Why did any page on a website return a 302 about 20–50% of the time, but only for crawlers?

A website was randomly throwing 302 errors.

This never happened in the browser and only in crawlers.

User agent made no difference; location or cookies also made no difference.

Finally, a quick note. It’s entirely possible that some of this checklist won’t apply to every scenario. That’s totally fine. It’s meant to be a process for everything you could check, not everything you should check.

The pre-checklist check

Does it actually matter?

Does this problem only affect a tiny amount of traffic? Is it only on a handful of pages and you already have a big list of other actions that will help the website? You probably need to just drop it.

I know, I hate it too. I also want to be right and dig these things out. But in six months' time, when you've solved twenty complex SEO rabbit holes and your website has stayed flat because you didn't re-write the title tags, you're still going to get fired.

But hopefully that's not the case, in which case, onwards!

Where are you seeing the problem?

We don’t want to waste a lot of time. Have you heard this wonderful saying?: “If you hear hooves, it’s probably not a zebra.”

The process we’re about to go through is fairly involved and it’s entirely up to your discretion if you want to go ahead. Just make sure you’re not overlooking something obvious that would solve your problem. Here are some common problems I’ve come across that were mostly horses.

You’re underperforming from where you should be.

When a site is under-performing, people love looking for excuses. Weird Google nonsense can be quite a handy thing to blame. In reality, it’s typically some combination of a poor site, higher competition, and a failing brand. Horse.

You’ve suffered a sudden traffic drop.

Something has certainly happened, but this is probably not the checklist for you. There are plenty of common-sense checklists for this. I’ve written about diagnosing traffic drops recently — check that out first.

The wrong page is ranking for the wrong query.

In my experience (which should probably preface this entire post), this is usually a basic problem where a site has poor targeting or a lot of cannibalization. Probably a horse.

Factors which make it more likely that you’ve got a more complex problem which requires you to don your debugging shoes:

A website that has a lot of client-side JavaScript.

Bigger, older websites with more legacy.

Your problem is related to a new Google property or feature where there is less community knowledge.

1. Start by picking some example pages.

Pick a couple of example pages to work with — ones that exhibit whatever problem you're seeing. No, this won't be representative, but we'll come back to that in a bit.

Of course, if it only affects a tiny number of pages then it might actually be representative, in which case we're good. It definitely matters, right? You didn't just skip the step above? OK, cool, let's move on.

2. Can Google crawl the page once?

First we’re checking whether Googlebot has access to the page, which we’ll define as a 200 status code.

We’ll check in four different ways to expose any common issues:

Robots.txt: Open up Search Console and check in the robots.txt validator.

User agent: Open Dev Tools and verify that you can open the URL with both Googlebot and Googlebot Mobile.

To get the user agent switcher, open Dev Tools.

Check the console drawer is open (the toggle is the Escape key)

Hit the … and open "Network conditions"

Here, select your user agent!

IP Address: Verify that you can access the page with the mobile testing tool. (This will come from one of the IPs used by Google; any checks you do from your computer won't.)

Country: The mobile testing tool will visit from US IPs, from what I've seen, so we get two birds with one stone. But Googlebot will occasionally crawl from non-American IPs, so it’s also worth using a VPN to double-check whether you can access the site from any other relevant countries.

I’ve used HideMyAss for this before, but whatever VPN you have will work fine.

We should now have an idea whether or not Googlebot is struggling to fetch the page once.
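The robots.txt check can also be roughly scripted. Below is a minimal sketch using Python's standard `urllib.robotparser`; the robots.txt content and URLs are made up for illustration, and Python's rule matching is not guaranteed to behave exactly like Google's own parser, so treat it as a first pass rather than a replacement for the Search Console validator.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice you would load the live file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /checkout/
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Googlebot has its own group, so only the /checkout/ rule applies to it.
print(can_crawl(ROBOTS_TXT, "Googlebot", "https://example.com/checkout/cart"))
print(can_crawl(ROBOTS_TXT, "Googlebot", "https://example.com/private/page"))
```

Note that a crawler with its own group ignores the `*` group, which is a common source of surprises when reading robots.txt by eye.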

Have we found any problems yet?

If we can re-create a failed crawl with a simple check above, then Googlebot is probably failing consistently to fetch our page, and it’s typically for one of those basic reasons.

But it might not be. Many problems are inconsistent because of the nature of technology. ;)

3. Are we telling Google two different things?

Next up: Google can find the page, but are we confusing it by telling it two different things?

This is most commonly seen, in my experience, because someone has messed up the indexing directives.

By "indexing directives," I’m referring to any tag that defines the correct index status or page in the index which should rank. Here’s a non-exhaustive list:

No-index

Canonical

Mobile alternate tags

AMP alternate tags

An example of providing mixed messages would be:

No-indexing page A

Page B canonicals to page A

Or:

Page A has a canonical in a header to A with a parameter

Page A has a canonical in the body to A without a parameter

If we’re providing mixed messages, then it’s not clear how Google will respond. It’s a great way to start seeing strange results.

Good places to check for the indexing directives listed above are:

Sitemap

Example: Mobile alternate tags can sit in a sitemap

HTTP headers

Example: Canonical and meta robots can be set in headers.

HTML head

This is where you’re probably already looking; you’ll need this one for a comparison.

JavaScript-rendered vs hard-coded directives

You might be setting one thing in the page source and then rendering another with JavaScript, i.e. you would see something different in the HTML source from the rendered DOM.

Google Search Console settings

There are Search Console settings for ignoring parameters and country localization that can clash with indexing tags on the page.
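As a rough illustration of checking for mixed messages, the sketch below compares a canonical set in an HTTP `Link` header with the canonical in the HTML head. The header value, the markup, and the helper names are all hypothetical, and the header parsing is deliberately simple (it assumes URLs without commas).

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect href values of <link rel="canonical"> tags."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "link" and (attr.get("rel") or "").lower() == "canonical":
            if attr.get("href"):
                self.canonicals.append(attr["href"])


def canonical_from_link_header(link_header: str):
    """Return the canonical URL from an HTTP Link header value, or None."""
    for part in link_header.split(","):
        target, _, params = part.partition(";")
        if 'rel="canonical"' in params.replace(" ", "").lower():
            return target.strip().strip("<>")
    return None


def declared_canonicals(link_header: str, html: str):
    """Return every distinct canonical URL declared in the header or the HTML."""
    finder = CanonicalFinder()
    finder.feed(html)
    found = set(finder.canonicals)
    header_url = canonical_from_link_header(link_header)
    if header_url:
        found.add(header_url)
    return sorted(found)


# Hypothetical example: the header points at a parameterised URL, the HTML does not.
header = '<https://example.com/page?ref=1>; rel="canonical"'
page = '<html><head><link rel="canonical" href="https://example.com/page"></head></html>'
urls = declared_canonicals(header, page)
print(urls, "-> mixed message" if len(urls) > 1 else "-> consistent")
```

More than one distinct URL in the result means the page is telling crawlers two different things.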

A quick aside on rendered DOM

This page has a lot of mentions of the rendered DOM on it (18, if you’re curious). Since we’ve just had our first, here’s a quick recap about what that is.

When you load a webpage, the first request is the HTML. This is what you see in the HTML source (right-click on a webpage and click View Source).

This is before JavaScript has done anything to the page. This didn’t use to be such a big deal, but now so many websites rely heavily on JavaScript that most people quite reasonably won’t trust the initial HTML.

Rendered DOM is the technical term for a page, when all the JavaScript has been rendered and all the page alterations made. You can see this in Dev Tools.

In Chrome you can get that by right clicking and hitting inspect element (or Ctrl + Shift + I). The Elements tab will show the DOM as it’s being rendered. When it stops flickering and changing, then you’ve got the rendered DOM!

4. Can Google crawl the page consistently?

To see what Google is seeing, we're going to need to get log files. At this point, we can check to see how it is accessing the page.

Aside: Working with logs is an entire post in and of itself. I’ve written a guide to log analysis with BigQuery, and I’d also really recommend trying out Screaming Frog Log Analyzer, which has done a great job of handling a lot of the complexity around logs.

When we’re looking at crawling there are three useful checks we can do:

Status codes: Plot the status codes over time. Is Google seeing different status codes than you when you check URLs?

Resources: Is Google downloading all the resources of the page?

Is it downloading all your site-specific JavaScript and CSS files that it would need to generate the page?

Page size follow-up: Take the max and min of all your pages and resources and diff them. If you see a difference, then Google might be failing to fully download all the resources or pages. (Hat tip to @ohgm, where I first heard this neat tip).
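For the status code check, here is a minimal sketch of counting Googlebot status codes per hour. It assumes logs in the common "combined" access log format and identifies Googlebot by user agent string only (which can be spoofed); the sample lines are invented.

```python
import re
from collections import Counter

# Matches the timestamp, request line and status code of a combined-format log line.
LOG_LINE = re.compile(
    r'\[(?P<day>[^:]+):(?P<hour>\d{2}):[^\]]+\] '
    r'"[A-Z]+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) '
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Invented sample lines; real logs would be read from a file.
SAMPLE_LOG = [
    '192.0.2.10 - - [10/Jul/2018:01:02:03 +0000] "GET /page HTTP/1.1" 200 5120 "-" "' + GOOGLEBOT_UA + '"',
    '192.0.2.10 - - [10/Jul/2018:01:02:04 +0000] "GET /category/ HTTP/1.1" 302 0 "-" "' + GOOGLEBOT_UA + '"',
    '192.0.2.10 - - [10/Jul/2018:02:15:00 +0000] "GET /page HTTP/1.1" 200 5120 "-" "' + GOOGLEBOT_UA + '"',
    '192.0.2.99 - - [10/Jul/2018:01:05:00 +0000] "GET /page HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
]


def googlebot_status_by_hour(lines):
    """Count (day, hour, status) combinations for requests claiming to be Googlebot."""
    counts = Counter()
    for line in lines:
        if "Googlebot" not in line:
            continue
        match = LOG_LINE.search(line)
        if match:
            counts[(match["day"], match["hour"], match["status"])] += 1
    return counts


for key, total in sorted(googlebot_status_by_hour(SAMPLE_LOG).items()):
    print(key, total)
```

Plotting these counts over time makes it easier to see whether non-200 responses cluster around particular hours.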

Have we found any problems yet?

If Google isn't getting 200s consistently in our log files, but we can access the page fine when we try, then there are clearly still some differences between Googlebot and ourselves. What might those differences be?

It will crawl more than us

It is obviously a bot, rather than a human pretending to be a bot

It will crawl at different times of day

This means that:

If our website is doing clever bot blocking, it might be able to differentiate between us and Googlebot.

Because Googlebot will put more stress on our web servers, it might behave differently. When websites have a lot of bots or visitors visiting at once, they might take certain actions to help keep the website online. They might turn on more computers to power the website (this is called scaling), they might also attempt to rate-limit users who are requesting lots of pages, or serve reduced versions of pages.

Servers run tasks periodically; for example, a listings website might run a daily task at 01:00 to clean up all its old listings, which might affect server performance.

Working out what’s happening with these periodic effects is going to be fiddly; you’re probably going to need to talk to a back-end developer.

Depending on your skill level, you might not know exactly where to lead the discussion. A useful structure for a discussion is often to talk about how a request passes through your technology stack and then look at the edge cases we discussed above.

What happens to the servers under heavy load?

When do important scheduled tasks happen?

Two useful pieces of information to enter this conversation with:

Depending on the regularity of the problem in the logs, it is often worth trying to re-create the problem by attempting to crawl the website with a crawler at the same speed/intensity that Google is using to see if you can find/cause the same issues. This won’t always be possible depending on the size of the site, but for some sites it will be. Being able to consistently re-create a problem is the best way to get it solved.

If you can’t, however, then try to provide the exact periods of time where Googlebot was seeing the problems. This will give the developer the best chance of tying the issue to other logs to let them debug what was happening.

If Google can crawl the page consistently, then we move onto our next step.

5. Does Google see what I can see on a one-off basis?

We know Google is crawling the page correctly. The next step is to try and work out what Google is seeing on the page. If you’ve got a JavaScript-heavy website you’ve probably banged your head against this problem before, but even if you don’t, this can still sometimes be an issue.

We follow the same pattern as before. First, we try to re-create it once. The following tools will let us do that:

Fetch & Render

Shows: Rendered DOM in an image, but only returns the page source HTML for you to read.

Mobile-friendly test

Shows: Rendered DOM and returns rendered DOM for you to read.

Not only does this show you rendered DOM, but it will also track any console errors.

Is there a difference between Fetch & Render, the mobile-friendly testing tool, and Googlebot? Not really, with the exception of timeouts (which is why we have our later steps!). Here’s the full analysis of the difference between them, if you’re interested.

Once we have the output from these, we compare them to what we ordinarily see in our browser. I’d recommend using a tool like Diff Checker to compare the two.

Have we found any problems yet?

If we encounter meaningful differences at this point, then in my experience it’s typically either from JavaScript or cookies.

Why?

Googlebot crawls with cookies cleared between page requests

Googlebot renders with Chrome 41, which doesn’t support all modern JavaScript.

We can isolate each of these by:

Loading the page with no cookies. This can be done simply by loading the page with a fresh incognito session and comparing the rendered DOM here against the rendered DOM in our ordinary browser.

Using the mobile testing tool to see the page with Chrome 41 and comparing it against the rendered DOM we normally see with Inspect Element.

Yet again we can compare them using something like Diff Checker, which will allow us to spot any differences. You might want to use an HTML formatter to help line them up better.

We can also see the JavaScript errors thrown using the Mobile-Friendly Testing Tool, which may prove particularly useful if you’re confident in your JavaScript.

If, using this knowledge and these tools, we can recreate the bug, then we have something that can be replicated and it’s easier for us to hand off to a developer as a bug that will get fixed.

If we’re seeing everything is correct here, we move on to the next step.

6. What is Google actually seeing?

It’s possible that what Google is seeing is different from what we recreate using the tools in the previous step. Why? A few main reasons:

Overloaded servers can have all sorts of strange behaviors. For example, they might be returning 200 codes, but perhaps with a default page.

JavaScript is rendered separately from pages being crawled and Googlebot may spend less time rendering JavaScript than a testing tool.

There is often a lot of caching in the creation of web pages and this can cause issues.

We’ve gotten this far without talking about time! Pages don’t get crawled instantly, and crawled pages don’t get indexed instantly.

Quick sidebar: What is caching?

Caching is often a problem if you get to this stage. Unlike JS, it’s not talked about as much in our community, so it’s worth some more explanation in case you’re not familiar. Caching is storing something so it’s available more quickly next time.

When you request a webpage, a lot of calculations happen to generate that page. If you then refreshed the page when it was done, it would be incredibly wasteful to just re-run all those same calculations. Instead, servers will often save the output and serve you the output without re-running them. Saving the output is called caching.

Why do we need to know this? Well, we’re already well out into the weeds at this point and so it’s possible that a cache is misconfigured and the wrong information is being returned to users.
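To make the idea concrete, here is a small sketch of a cache serving stale output. It uses Python's `functools.lru_cache` as a stand-in for a server-side page cache; the `PRICES` data and `render_page` function are hypothetical.

```python
import functools

# Hypothetical backing data for a listings page.
PRICES = {"/listing/42": "100"}


@functools.lru_cache(maxsize=128)
def render_page(path: str) -> str:
    """Pretend to do the expensive work of generating a page."""
    return f"<p>Price: {PRICES[path]}</p>"


before = render_page("/listing/42")   # generated and stored in the cache
PRICES["/listing/42"] = "80"          # the underlying data changes
stale = render_page("/listing/42")    # served from the cache, still shows 100

render_page.cache_clear()             # invalidate the cache
fresh = render_page("/listing/42")    # regenerated, now shows 80

print(before, stale, fresh)
```

If nothing ever clears or expires the cached copy, every visitor (including Googlebot) keeps getting the old page, which is the kind of misconfiguration worth ruling out.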

There aren’t many good beginner resources on caching which go into more depth. However, I found this article on caching basics to be one of the more friendly ones. It covers some of the basic types of caching quite well.

How can we see what Google is actually working with?

Google’s cache

Shows: Source code

While this won’t show you the rendered DOM, it is showing you the raw HTML Googlebot actually saw when visiting the page. You’ll need to check this with JS disabled; otherwise, on opening it, your browser will run all the JS on the cached version.

Site searches for specific content

Shows: A tiny snippet of rendered content.

By searching for a specific phrase on a page, e.g. inurl:example.com/url “only JS rendered text”, you can see if Google has managed to index a specific snippet of content. Of course, it only works for visible text and misses a lot of the content, but it's better than nothing!

Better yet, do the same thing with a rank tracker, to see if it changes over time.

Storing the actual rendered DOM

Shows: Rendered DOM

Alex from DeepCrawl has written about saving the rendered DOM from Googlebot. The TL;DR version: Google will render JS and post to endpoints, so we can get it to submit the JS-rendered version of a page that it sees. We can then save that, examine it, and see what went wrong.

Have we found any problems yet?

Again, once we’ve found the problem, it’s time to go and talk to a developer. The advice for this conversation is identical to the last one — everything I said there still applies.

The other knowledge you should go into this conversation armed with: how Google works and where it can struggle. While your developer will know the technical ins and outs of your website and how it’s built, they might not know much about how Google works. Together, this can help you reach the answer more quickly.

The obvious sources for this are resources or presentations given by Google themselves. Of the various resources that have come out, I’ve found these two to be some of the more useful ones for giving insight into first principles:

This excellent talk, How does Google work - Paul Haahr, is a must-listen.

At their recent IO conference, John Mueller & Tom Greenway gave a useful presentation on how Google renders JavaScript.

But there is often a difference between statements Google will make and what the SEO community sees in practice. All the SEO experiments people tirelessly perform in our industry can also help provide some insight. There are far too many to list here, but here are two good examples:

Google does respect JS canonicals - For example, Eoghan Henn does some nice digging here, which shows Google respecting JS canonicals.

How does Google index different JS frameworks? - Another great example of a widely read experiment by Bartosz Góralewicz last year to investigate how Google treated different frameworks.

7. Could Google be aggregating your website across others?

If we’ve reached this point, we’re pretty happy that our website is running smoothly. But not all problems can be solved just on your website; sometimes you’ve got to look to the wider landscape and the SERPs around it.

Most commonly, what I’m looking for here is:

Similar/duplicate content to the pages that have the problem.

This could be intentional duplicate content (e.g. syndicating content) or unintentional (competitors' scraping or accidentally indexed sites).

Either way, they’re nearly always found by doing exact searches in Google. I.e. taking a relatively specific piece of content from your page and searching for it in quotes.

Have you found any problems yet?

If you find a number of other exact copies, then it’s possible they might be causing issues.

The best description I’ve come up with for “have you found a problem here?” is: do you think Google is aggregating together similar pages and only showing one? And if it is, is it picking the wrong page?

This doesn’t just have to be on traditional Google search. You might find a version of it on Google Jobs, Google News, etc.

To give an example, if you are a reseller, you might find content isn’t ranking because there's another, more authoritative reseller who consistently posts the same listings first.

Sometimes you’ll see this consistently and straightaway, while other times the aggregation might be changing over time. In that case, you’ll need a rank tracker for whatever Google property you’re working on to see it.

Jon Earnshaw from Pi Datametrics gave an excellent talk on the latter (around suspicious SERP flux) which is well worth watching.

Once you’ve found the problem, you’ll probably need to experiment to find out how to get around it, but the easiest factors to play with are usually:

De-duplication of content

Speed of discovery (you can often improve by putting up a 24-hour RSS feed of all the new content that appears)

Lowering syndication

8. A roundup of some other likely suspects

If you’ve gotten this far, then we’re sure that:

Google can consistently crawl our pages as intended.

We’re sending Google consistent signals about the status of our page.

Google is consistently rendering our pages as we expect.

Google is picking the correct page out of any duplicates that might exist on the web.

And your problem still isn’t solved?

And it is important?

Well, shoot.

Feel free to hire us…?

As much as I’d love for this article to list every SEO problem ever, that’s not really practical, so to finish off this article let’s go through two more common gotchas and principles that didn’t really fit in elsewhere before the answers to those four problems we listed at the beginning.

Invalid/poorly constructed HTML

You and Googlebot might be seeing the same HTML, but it might be invalid or wrong. Googlebot (and any crawler, for that matter) has to provide workarounds when the HTML specification isn't followed, and those can sometimes cause strange behavior.

The easiest way to spot it is either by eye-balling the rendered DOM tools or using an HTML validator.

The W3C validator is very useful, but will throw up a lot of errors/warnings you won’t care about. The closest I can give to a one-line summary of which ones are useful is to:

Look for errors

Ignore anything to do with attributes (won’t always apply, but is often true).

The classic example of this is breaking the head.

An iframe isn't allowed in the head code, so Chrome will end the head and start the body. Unfortunately, it takes the title and canonical with it, because they fall after it — so Google can't read them. The head code should have ended in a different place.

Oliver Mason wrote a good post that explains an even more subtle version of this in breaking the head quietly.

When in doubt, diff

Never underestimate the power of trying to compare two things line by line with a diff from something like Diff Checker. It won’t apply to everything, but when it does it’s powerful.

For example, if Google has suddenly stopped showing your featured markup, try to diff your page against a historical version either in your QA environment or from the Wayback Machine.
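If you would rather script the comparison than paste into a web tool, Python's standard `difflib` can do a similar job. This is only a sketch; the two HTML snippets are invented stand-ins for a historical and a current version of a page.

```python
import difflib

# Invented snippets standing in for an old and a current version of a page head.
old_html = """<head>
<title>Product</title>
<meta name="robots" content="index">
</head>"""

new_html = """<head>
<title>Product</title>
<meta name="robots" content="noindex">
</head>"""


def changed_lines(old: str, new: str):
    """Return only the lines that were removed ('- ') or added ('+ ')."""
    diff = difflib.ndiff(old.splitlines(), new.splitlines())
    return [line for line in diff if line[:2] in ("- ", "+ ")]


for line in changed_lines(old_html, new_html):
    print(line)
```

Running both versions through an HTML formatter first keeps the diff focused on real changes rather than whitespace.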

Answers to our original 4 questions

Time to answer those questions. These are all problems we’ve had clients bring to us at Distilled.

1. Why wasn’t Google showing 5-star markup on product pages?

Google was seeing both the server-rendered markup and the client-side-rendered markup; however, the server-rendered side was taking precedence.

Removing the server-rendered markup meant the 5-star markup began appearing.

2. Why wouldn’t Bing display 5-star markup on review pages, when Google would?

The problem came from the references to schema.org.

<div itemscope itemtype="https://schema.org/Movie">
  <h1 itemprop="name">Avatar</h1>
  <span>Director: <span itemprop="director">James Cameron</span> (born August 16, 1954)</span>
  <span itemprop="genre">Science fiction</span>
  <a href="../movies/avatar-theatrical-trailer.html" itemprop="trailer">Trailer</a>
</div>

We diffed our markup against our competitors and the only difference was we’d referenced the HTTPS version of schema.org in our itemtype, which caused Bing to not support it.
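A check like this is easy to automate once you know what to look for. The sketch below lists any `itemtype` attributes that reference the HTTPS version of schema.org; the sample markup and function names are hypothetical, and whether a given search engine accepts HTTPS itemtypes may well have changed since this case.

```python
from html.parser import HTMLParser


class ItemtypeCollector(HTMLParser):
    """Collect every itemtype attribute value found in the markup."""

    def __init__(self):
        super().__init__()
        self.itemtypes = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "itemtype" and value:
                self.itemtypes.append(value)


def https_schema_itemtypes(html: str):
    """Return the itemtype values that point at https://schema.org/."""
    collector = ItemtypeCollector()
    collector.feed(html)
    return [v for v in collector.itemtypes if v.startswith("https://schema.org/")]


# Invented markup mixing both protocol versions.
sample = (
    '<div itemscope itemtype="https://schema.org/Movie"></div>'
    '<div itemscope itemtype="http://schema.org/Review"></div>'
)
print(https_schema_itemtypes(sample))
```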

C’mon, Bing.

3. Why were pages getting indexed with a no-index tag?

The answer for this was in this post. This was a case of breaking the head.

The developers had installed some ad-tech in the head and inserted a non-standard tag, i.e. not:

<title>

<style>

<base>

<link>

<meta>

<script>

<noscript>

This caused the head to end prematurely and the no-index tag was left in the body where it wasn’t read.
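A rough way to catch this class of bug is to scan the raw HTML for tags inside the head that are not on the list above. The sketch below uses Python's standard `html.parser`, which reports tags in source order rather than rebuilding the DOM the way a browser does; the sample page and the `div` ad slot are invented.

```python
from html.parser import HTMLParser

# The tags listed above as allowed in the head.
HEAD_TAGS = {"title", "style", "base", "link", "meta", "script", "noscript"}


class HeadChecker(HTMLParser):
    """Record tags that appear between <head> and </head> but are not allowed there."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.offenders = []  # (tag name, line number) pairs

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif self.in_head and tag not in HEAD_TAGS:
            self.offenders.append((tag, self.getpos()[0]))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def head_breakers(html: str):
    checker = HeadChecker()
    checker.feed(html)
    return checker.offenders


# Invented page: the div ends the head early, so a browser would treat the
# robots meta tag that follows it as part of the body.
page = """<html>
<head>
<title>Listing</title>
<div class="ad-slot"></div>
<meta name="robots" content="noindex">
</head>
<body></body>
</html>"""

print(head_breakers(page))
```

Anything reported here is worth checking against the rendered DOM to see where the head actually ended.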

4. Why did any page on a website return a 302 about 20–50% of the time, but only for crawlers?

This took some time to figure out. The client had an old legacy website that had two servers, one for the blog and one for the rest of the site. This issue started occurring shortly after a migration of the blog from a subdomain (blog.client.com) to a subdirectory (client.com/blog/…).

At surface level everything was fine; if a user requested any individual page, it all looked good. A crawl of all the blog URLs to check they’d redirected was fine.

But we noticed a sharp increase of errors being flagged in Search Console, and during a routine site-wide crawl, many pages that were fine when checked manually were causing redirect loops.

We checked using Fetch and Render, but once again, the pages were fine. Eventually, it turned out that when a non-blog page was requested very quickly after a blog page (which, realistically, only a crawler is fast enough to achieve), the request for the non-blog page would be sent to the blog server.

These would then be caught by a long-forgotten redirect rule, which 302-redirected deleted blog posts (or other duff URLs) to the root. This, in turn, was caught by a blanket HTTP to HTTPS 301 redirect rule, which would be requested from the blog server again, perpetuating the loop.

For example, requesting https://www.client.com/blog/ followed quickly enough by https://www.client.com/category/ would result in:

302 to http://www.client.com - This was the rule that redirected deleted blog posts to the root

301 to https://www.client.com - This was the blanket HTTPS redirect

302 to http://www.client.com - The blog server doesn’t know about the HTTPS non-blog homepage and it redirects back to the HTTP version. Rinse and repeat.

This caused the periodic 302 errors and it meant we could work with their devs to fix the problem.
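The chain above can be modelled offline to show why a crawler reports a loop. This is a simplified sketch: the `RULES` table is a hypothetical stand-in for how the blog server answered these URLs, and no real requests are made.

```python
# Hypothetical redirect table: URL -> (status code, redirect target).
RULES = {
    "https://www.client.com/category/": (302, "http://www.client.com"),
    "http://www.client.com": (301, "https://www.client.com"),
    "https://www.client.com": (302, "http://www.client.com"),
}


def follow_redirects(start: str, rules: dict, max_hops: int = 10):
    """Follow redirects from start; return (hops, looped).

    hops is a list of (status, target) pairs; looped is True if a URL repeats.
    """
    hops = []
    seen = {start}
    url = start
    for _ in range(max_hops):
        if url not in rules:
            return hops, False  # reached a URL that does not redirect
        status, target = rules[url]
        hops.append((status, target))
        if target in seen:
            return hops, True   # we have been here before: redirect loop
        seen.add(target)
        url = target
    return hops, False


hops, looped = follow_redirects("https://www.client.com/category/", RULES)
for status, target in hops:
    print(status, "to", target)
print("loop detected:", looped)
```

A real check would issue requests with redirects disabled and at crawler speed, since in this case the loop only appeared when requests arrived in quick succession.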

What are the best brainteasers you've had?

Let’s hear them, people. What problems have you run into? Let us know in the comments.

Also credit to @RobinLord8, @TomAnthonySEO, @THCapper, @samnemzer, and @sergeystefoglo_ for help with this piece.

from The Moz Blog https://ift.tt/2lfAXtQ via IFTTT

Text

What Small Startups Need to Know When Hiring for Website Development in Surrey

Website development in Surrey is a craft that incorporates different technologies. Responsible web developers ensure that clients get websites built to their specifications. Do not confuse a web designer with a web developer, although their roles do overlap somewhat. Web developers write the actual code that creates a website.

A good web developer in Surrey carries out several different tasks. As a customer, you need to understand exactly what your developer will do.

How do developers carry out website development in Surrey?

Handle complete design and functionality of a website-

Creating a website involves a lot of planning and analysis, which is performed by the project manager and a development team. They will estimate how long the work will take. Every customer has their own specifications for the website, and the web developer writes the code that makes up the website. Web designers are also involved to make sure the design meets the customer’s requirements.

Make the business logic-

In this stage, a professional web developer in Surrey starts developing the website. This includes both client-side technologies such as HTML, JavaScript, and CSS and server-side technologies such as PHP and .NET. Your web developer should be proficient in different coding languages and technologies so that they can meet the client’s expectations precisely.

Applying the web design-

Usually, web developers and web designers work together to build a website. Even though the design itself is produced by web designers, it is the developers' task to implement that design on the website. Hence, they have more responsibility than designers.

Testing-

Testing is yet another important part of a web development project. A website could face critical errors when it is launched. This can cost customers money and cost development companies their credibility. Hence, the website should be tested during each stage of development. This is essential to delivering the best result.

Currently, the process of website development in Surrey includes many different tasks, but it all begins with the fundamentals.

Many startup companies these days are seeking a web presence through a basic website or an interactive web portal. Many of them search online for resources such as freelance web developers or freelance web designers. You can outsource your projects to them, and they can deliver at an affordable price. However, you should know that they may only be engaged in the initial stage, and the result can end up with issues that are more problematic later. You might be thinking of saving money, but you could end up losing both money and time. Remember that losing time is more expensive than losing money.

Hence, we suggest that you always look for a professional web developer in Surrey. A reliable web development company has an expert team of developers, designers, and so on that can give you the best result at the best price.

As a small startup, you need to find a service that is both high-quality and cost-effective. However, there are many web development companies in Surrey. You just need to find the one that can meet your needs, specifications, and budget. People often get confused when choosing a company for their web development projects. This article covers the basic principles to make this process easier for you.

What do small startups need to know when hiring a company for website development in Surrey?

Whether you run a human resources firm, a plumbing business, a restaurant, or any other kind of business, you are undoubtedly working hard to improve your productivity. However, you need an online presence to reach a broader audience, so you need your website to work for you. That means you have to find the best web services, but how do you make sure that you are doing the right things?

Checklist for choosing the best web service partner to get the best quality and value web development for your company-

The service you need exactly-

In simple terms, a website is your business card. So, you need to know exactly what it should include and what you want it to do for your business. It is the basic tool that will increase communication inside and outside the organisation. This is where you need Surrey’s web development services.

Even if you already have a business website and want to change something in it, you must know how things might be changed. Here you need to look for a web developer to solve these problems.

You need to have a brief that a professional web developer can use to work out the web services needed and how to achieve the desired results. Then you can ask for a free quote for your work, which will help you choose the best deal.

Selecting the right person-

Be specific about whether you need a web designer, web developer, or programmer. These terms are distinct.

A professional web developer in Surrey can incorporate elements of design and programming. It is a much wider term for getting a website online and making it work. They can deal with both the appearance and functionality of the website. They have specific skills to improve your website.

On the other hand, a designer is responsible for the look and feel of the website, including the layout. They can make your website visually powerful and impactful. A web programmer, meanwhile, focuses more on functionality and the programs behind the website, and may do software programming as well. Their concern is getting the features of your website to work.

Check developer’s portfolio-

When hiring web developers for your business website, make sure you have checked their portfolio thoroughly. You can also check their clients' websites online. This will help you assess their service quality.

Client recommendations-

Ask your developers for examples of client websites they have designed. These can show you the end result of a project, and client recommendations can tell you about the web design process with that specific company.

Apart from that, you should check how quickly they respond to their clients' emails and phone calls. They should also be comfortable giving advice that you may sometimes need. They should be the right fit for you and your business.

Company’s reputation-

People often choose big companies for the best web services. Is it always worth the investment? Web design and development is a big business, and you can find many big names in the industry by typing “reputed web developers in Surrey” into a search engine. However, rankings are not always the whole story.

You can also go with a local web developer, as they may have local contacts who can help promote your website, which can boost your search engine rankings. They can also work closely with you to decide which advanced web services you need.

Moreover, you need to find a company with a solid reputation in the market for exceptional website development. They must have the years of experience and the skill to handle your project, whether it is big or small.

How much web development costs-

You are going to invest your hard-earned money, so you ought to shop around and get a few quotes. Check that you are being charged only for the web services you need. If a company charges more than others, make sure the extra cost is worth paying. Equally, a quote considerably below average should set alarm bells ringing. Always pay for exactly what you get.

Your company will offer you a free quote; however, it is only a starting point. You need to establish exactly what is included and what is not. Building a website is a continuing process that takes place over a period of time, and it may require some additional work later.

In this case, you should ask your developer to break the cost down per task. You can then decide whether to add the more time-consuming features. Moreover, take your time to find the web developer in Surrey you need.

Key benefits of professional website development in Surrey-

Reduced Development Cost- A professional and reliable web development company in Surrey offers affordable packages for web services without compromising the quality of services. It will meet your budget.

Extremely Skilled Developers- Their web developers are highly skilled and experienced, which is another major factor. Hence you can outsource your project to these companies without hesitation. These expert developers can use their creativity and experience to build web applications that best suit your business needs and specifications.

Maximize Profit- Hiring expert web developers is worth the investment, as it frees up time to explore areas of your business that have been untouched until now. The cost-effective service and on-time delivery will reduce your stress, and focusing on your main work area can help maximize profit.

Timeliness- They will complete your project on time and with a high level of accuracy. Since time is the most important factor, this will save you time.

Quality Work- Professional web developers use their experience to develop superior quality web applications or websites to meet your exact needs. You will receive the best value for your money.

Scalable Applications- They will analyse your business needs precisely to plan and develop web applications for you. The applications can be used for a long time and can be modified easily in the future, at a competitive price, if needed.

FAQs-

Do I really need a business website?

You should know that most customers search for services and products on the web. If you have a website, it will be easier to reach a broader range of potential customers within a short period of time.

How much input do I need to provide?

It depends on you. You can give little input or provide as much information as you like. However, detailed information helps the team create a better website.

Can you build bespoke e-commerce shops and applications?

An experienced team can build e-commerce shops and comprehensive website solutions as per your requirements.

How much will a website cost me?

Different sorts of websites or applications can be developed to meet clients’ needs. So the cost of this service depends on its complexity. The team will discuss it with you and give a free quote.

Will my website be search-engine friendly?

Yes. All the websites created are SEO friendly and compliant with search engine guidelines. Your site will be up to date and compliant with new rules.

All you need to do is make sure you hire the best website development company in Surrey. You can visit www.imwebdesignmarketing.co.uk for the best deal. It has expert web developers in Surrey to meet your needs.

0 notes

Text

How Do You Become a Web Designer? Do You Have What It Takes?

Web design can be an enjoyable and fulfilling experience. It's a trade that combines technical skills with creative ability. If you feel comfortable with computer technology and you enjoy creating documents, web design can be a great way to combine the two interests.

That being said, it's always overwhelming to consider learning a new skill. Before learning how to become a web designer, you should ask yourself, "Should I become a web designer?"

I've been learning web design since I was ten years old, in 1994. I now do a lot of web design for myself and for some small business clients. There have been plenty of pleasures, but also plenty of frustrations. If you're considering becoming a web designer, there are some things you should keep in mind.

If you have a lot of time to devote to learning HTML, CSS, JavaScript and Photoshop, it's possible to learn the basics in a couple of months. Be ready to spend some money on manuals, books, and applications.

No matter how you decide to learn web design and how you decide to enter the field, some people have better potential to become web designers than others.

When you're programming, even if you're using a simple language like HTML and using a helpful application like Dreamweaver, you're going to encounter some frustrations. Sometimes, when I create an HTML document, I spend a lot more time making corrections and problem solving than doing fun stuff. Are you prepared to spend a lot of time testing and making little changes? No matter how you approach web design, tedium can't be completely avoided. If you're easily frustrated and discouraged, web design might not be for you.

Unless web design is going to be just a hobby for you, you will have clients you have to work with. Sometimes clients have a lot of specific expectations. Some clients have experience with web design themselves, but others may demand things without knowing the technical limitations involved. Before you start any project for clients, it's best to have a thorough conversation with them about what they want and what they need. That can save you a lot of time. How would you like to spend weeks developing a website, only to discover that your client wants completely different fonts, colors, graphics, site organization and content? If you're going to get into designing web pages for other people, you're going to have to be ready to make a lot of compromises and take a lot of criticism. Are you ready for that?

Finally, ask yourself if you have the time and energy to promote yourself. If you want to be hired by a web design firm, in addition to learning skills and possibly obtaining certifications, you've also got to be ready to pound the pavement with your resume and portfolio. It might take you over a year to find a job. Be ready to attend a lot of job interviews, and possibly get a lot of rejections.

If you're going to become a freelancer, like I am, you've really got to devote a lot of energy to self-promotion. Set up a website, preferably with your own domain. Be ready to spend some money on advertising. Spend a lot of time promoting your services with social media - Twitter, Facebook, LinkedIn, and so on. Scan classified ads, particularly online classifieds. Print business cards and distribute them wherever you can. Use your connections and word-of-mouth to your advantage. Tell everyone you know that you're a web designer, and maybe someone knows someone who could be your first client. Sometimes I spend more time promoting myself than I do actually doing the work itself.

If you're ready to spend a little bit of money, do a lot of tedious work, take some criticism, and do a lot of self-promotion, then web design may be the field for you.

First, you've got to start the learning process. If you enjoy classroom instruction and having teachers, sign up for some web design and graphic design courses through your local community college. If you'd rather start learning on your own, buy some good books, look at the source code of the web pages you visit, and go through some online tutorials. Even if you're going to start learning web design in a school setting, be prepared to do a lot of learning in your free time, as well.

It's important to learn HTML, especially HTML5. Learn Cascading Style Sheets (CSS), up to CSS3. JavaScript, possibly some server side scripting languages, and Flash are very useful, too. Don't forget to learn how to use Photoshop. If you don't have the money to buy Photoshop right away, start by downloading some free graphic design programs like Paint.Net and GIMP. You can learn some of the basics of graphic design that way, and possibly be better prepared when you finally buy the most recent version of Photoshop.

These days, people access the web in more ways than were ever possible before. When you're web designing, you not only want to make your web pages work in multiple browsers, but also on multiple devices. Even basic cell phones can access the web today, not just smart phones such as BlackBerrys and iPhones. Even some video game playing devices like the Sony PSP and Nintendo DSi have web browsers. Web surfers could be using tiny screens or enormous screens. They could be using a variety of different browsers and versions of browsers. Users may have completely different plug-ins and fonts; Adobe Flash is a browser plug-in, for instance. When you're learning web design, try surfing the web in as many ways as you can.

There are many helpful resources for learning web design online, and there are many helpful online tools for web designers, many of which I use.

The W3C is an excellent place to start. They're the non-profit organization founded by Tim Berners-Lee, the man who started the World Wide Web. The W3C sets standards for HTML, XML and CSS. In addition to information about coding languages and standards, they have handy tools to validate your code.

HTML Goodies has a lot of excellent tutorials and articles.

I've learned a lot so far, but I'm always learning more, and I'll always be a student of web design and media technology. As technology advances, things change. There'll always be new programming languages and applications. Learning is a constant process.

Web design has been an engaging experience for me, and if you decide to get into it yourself, I hope you take it seriously and have a lot of fun.

My name is Kim Crawley, and I'm a web and graphic designer. In addition to my interest in using technology creatively, I'm also very interested in popular culture, social issues, music, and politics.

I'm an avid consumer of media, both in traditional and digital forms. I do my best to learn as much as I can, each and every day.

S4G2 Marketing Agency will be the best choice if you are looking for a web designer in the Canadian cities mentioned below:

Web Designer Abbotsford

Web Designer Barrie

Web Designer Brantford

Web Designer Burlington

Web Designer Burnaby

Web Designer Calgary

Web Designer Cambridge

Web Designer Coquitlam

Web Designer Delta

Web Designer Edmonton

Web Designer Greater Sudbury

Web Designer Guelph

Web Designer Hamilton Ontario

Web Designer Kelowna

Web Designer Kingston

Web Designer Kitchener

Web Designer London Ontario

Web Designer Markham

Web Designer Montreal

Web Designer Oshawa

Web Designer Ottawa

Web Designer Quebec City

Web Designer Red Deer

Web Designer Regina

Web Designer Richmond

Web Designer Saskatoon

Web Designer Surrey

Web Designer Thunder Bay

Web Designer Toronto

Web Designer Vancouver

Web Designer Vaughan

Web Designer Waterloo

Web Designer Windsor

Web Designer Winnipeg

0 notes

Text

Letter Spacing is Broken and There’s Nothing We Can Do About It… Maybe

New Post has been published on https://thedigitalinsider.com/letter-spacing-is-broken-and-theres-nothing-we-can-do-about-it-maybe/

This post came up following a conversation I had with Emilio Cobos, a senior developer at Mozilla and member of the CSSWG, about the last CSSWG meeting. I wanted to know what he thought were the most exciting and interesting topics discussed there, and with 2024 packed with so many new or upcoming flashy things like masonry layout, if() conditionals, anchor positioning, view transitions, and whatnot, I thought his answers had to be among them.