
http://blog.osmosys.asia/voice-calling-using-twilio-node-js/

Voice Calling Using Twilio in Node.js

Introduction

Twilio is a set of web services, APIs and tools provided by the company of the same name. They are used to send and receive text and picture messages, make and receive phone calls, and embed VoIP calling into web and native mobile applications. Twilio provides helper libraries for many server-side programming environments, such as Node.js, PHP, .NET, Java, Ruby, Python and Apex.

In this blog, we will use the Twilio node module to implement inbound and outbound voice calls in Node.js.

Prerequisites

The code snippets shown in this blog are written using Node.js version 8.9.4.

Create a Twilio account

Before you can make a call from Node.js, you’ll need to sign up for a Twilio account or log in to an account you already have. Head over to Twilio.com/try-twilio to sign up.

Buy a Twilio Number

The next thing you’ll need is a voice-capable Twilio phone number. If you currently do not own a Twilio phone number with voice call functionality, you’ll need to purchase one.

Follow the steps below:

Go to the Phone Numbers page of the Twilio Developer Console.

Search for the number you want to buy by selecting Voice in the CAPABILITIES section.

Note: Buy a phone number with voice capabilities to make and receive calls.

You’ll then see a list of available phone numbers and their capabilities. Find a number that suits your fancy and click Buy to add it to your account.

Twilio’s API requires all phone numbers in E.164 format: a “+”, then the country code, then the subscriber number, with no dashes or spaces in between. For example, +918545658754.
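A shape check against E.164 lets you catch malformed numbers before calling the API. The sketch below is an illustration and not part of the Twilio library. isE164() and toE164() are hypothetical helpers. The pattern only checks the format ("+", a country code that does not start with 0, at most 15 digits). It does not check whether the number exists or has a valid length for its country.

```javascript
// E.164 shape: "+", a first digit 1-9, then up to 14 more digits (15 max).
const E164_PATTERN = /^\+[1-9]\d{1,14}$/;

function isE164(number) {
  return typeof number === 'string' && E164_PATTERN.test(number);
}

// Hypothetical helper: strip common separators (spaces, dashes, dots,
// parentheses) and return the number only if the result is valid E.164.
function toE164(raw) {
  const cleaned = String(raw).replace(/[\s\-().]/g, '');
  return isE164(cleaned) ? cleaned : null;
}

console.log(isE164('+918545658754'));    // true
console.log(isE164('918545658754'));     // false (missing "+")
console.log(toE164('+91 854-565-8754')); // +918545658754
```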

Now that you have a Twilio account and a programmable phone number, you have the basic tools you need to make a phone call.

Get Twilio Credentials

Twilio credentials are required to authenticate with and access the Twilio APIs:

Go to the Twilio homepage.

Go to Account Details.

Retrieve the ACCOUNT SID and AUTH TOKEN.

Install Twilio node module

You could use Twilio’s HTTP API to make phone calls, but we’ll make things even simpler by using the Twilio module for Node.js.

Let’s use npm to install the required library. Simply fire up a terminal or command line interface on your machine that already has Node and npm installed, and run the following command in your project directory.

```shell
npm install twilio
```

The code snippets use Twilio SDK v3.17.2. Please check the migration guide when migrating from one version to another.

TwiML

TwiML (the Twilio Markup Language) is a set of instructions you can use to tell Twilio what to do when you receive an incoming call, SMS, or fax.

At its core, TwiML is an XML document with special tags defined by Twilio to help you build your Programmable Voice application.

The following TwiML says "Hello, world!" when someone dials a Twilio number configured with it:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<Response>
  <Say>Hello, world!</Say>
</Response>
```

You can read more about TwiML here.

TwiML is case sensitive, so make sure to capitalize the first letter of your elements.
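The following sketch builds the same "Hello, world!" document by hand, without the helper library, so you can see the XML directly. The function names are hypothetical. Elsewhere in this post the SDK's VoiceResponse class generates TwiML, and its exact whitespace may differ from this single-line output. Note that the verb is written "Say" with a capital S, and that message text is XML-escaped.

```javascript
// Escape the five XML special characters so arbitrary text is safe in TwiML.
function escapeXml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// Build a <Response> containing a single <Say> verb.
// TwiML is case-sensitive: "Response" and "Say", not "response" and "say".
function sayTwiml(message, attributes = {}) {
  const attrs = Object.entries(attributes)
    .map(([name, value]) => ` ${name}="${escapeXml(value)}"`)
    .join('');
  return '<?xml version="1.0" encoding="UTF-8"?>' +
    `<Response><Say${attrs}>${escapeXml(message)}</Say></Response>`;
}

console.log(sayTwiml('Hello, world!'));
console.log(sayTwiml('Hello, how are you?', { voice: 'alice' }));
```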

Make a Voice call

It is interesting to see how with just a few lines of code, your Node.js application can make and receive phone calls with Twilio Programmable Voice.

Let us try to understand what exactly happens using a block diagram:

From our application we make an HTTP request to the Twilio server with the following details:

From number (Twilio number)

To number (Callee’s number)

URL (TwiML)

The URL argument points to some TwiML, which tells Twilio what to do next when our recipient answers their phone.

When our recipient answers their phone the Twilio server will make an HTTP request to our application to fetch the TwiML.

Note that the URL can also point to a static TwiML file, such as

http://notification.voice.com/test-call.xml

Twilio then reads the TwiML instructions to determine what to do, whether it’s recording the call, playing a message for the caller, or prompting the caller to press digits on their keypad.
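The From, To and URL details above are sent as a single form-encoded POST to Twilio's REST API. That POST goes to the Calls resource and is authenticated with the Account SID and Auth Token using HTTP Basic auth. The sketch below only builds that request so you can inspect it. buildCreateCallRequest() is a hypothetical name and the values are placeholders. Sending the request and handling errors are left out. The helper library used in the sample code sends an equivalent request for you.

```javascript
// Build (but do not send) the request that creates an outbound call.
function buildCreateCallRequest({ accountSid, authToken, to, from, url, method = 'GET' }) {
  const endpoint =
    `https://api.twilio.com/2010-04-01/Accounts/${accountSid}/Calls.json`;
  // HTTP Basic auth: Account SID as the user name, Auth Token as the password.
  const credentials = Buffer.from(`${accountSid}:${authToken}`).toString('base64');
  // Form-encoded parameters: who to call, which Twilio number to call from,
  // where Twilio should fetch the TwiML, and which HTTP method to use for it.
  const body = new URLSearchParams({ To: to, From: from, Url: url, Method: method }).toString();
  return {
    method: 'POST',
    endpoint,
    headers: {
      Authorization: `Basic ${credentials}`,
      'Content-Type': 'application/x-www-form-urlencoded',
    },
    body,
  };
}

const req = buildCreateCallRequest({
  accountSid: 'your_account_sid',
  authToken: 'your_auth_token',
  to: '+123456789',
  from: '+987654321',
  url: 'http://notification.voice.com/voice-call',
});
console.log(req.endpoint);
console.log(req.body);
```

Note that the "+" in phone numbers is percent-encoded as %2B in the body, which is why numbers must not be concatenated into a query string by hand.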

Sample code

```javascript
const accountSid = 'your_account_sid';
const authToken = 'your_auth_token';
const client = require('twilio')(accountSid, authToken);

client.calls
  .create({
    url: 'http://notification.voice.com/voice-call',
    method: 'GET',
    to: '+123456789',
    from: 'your_twilio_number'
  })
  .then(call => console.log(call.sid))
  // Log failures (e.g. bad credentials or numbers) instead of ignoring them
  .catch(error => console.error(error));
```

This code starts a phone call between the two phone numbers that we pass as arguments. The from number is our Twilio number, and the to number is who we want to call.

The URL argument points to an API which will respond with TwiML.

Before using this code though, we need to edit it a little to work with your Twilio account. Swap the placeholder values for accountSid and authToken with your personal Twilio credentials.

Please note that it’s okay to hardcode your credentials when getting started, but you should use environment variables to keep them secret before deploying to production. We use nconf to do this.

The above code snippet can also be implemented using callbacks instead of promises.
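To keep credentials out of the code, you can read them from environment variables. The sketch below uses plain process.env instead of nconf. The variable names TWILIO_ACCOUNT_SID and TWILIO_AUTH_TOKEN are a common convention that is assumed here, and loadTwilioCredentials() is a hypothetical helper.

```javascript
// Read Twilio credentials from the environment (or any object with the same keys).
function loadTwilioCredentials(env = process.env) {
  const accountSid = env.TWILIO_ACCOUNT_SID;
  const authToken = env.TWILIO_AUTH_TOKEN;
  const missing = [];
  if (!accountSid) missing.push('TWILIO_ACCOUNT_SID');
  if (!authToken) missing.push('TWILIO_AUTH_TOKEN');
  if (missing.length > 0) {
    // Fail fast at startup rather than on the first API call.
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  return { accountSid, authToken };
}

// Usage, with the twilio module installed:
// const { accountSid, authToken } = loadTwilioCredentials();
// const client = require('twilio')(accountSid, authToken);
```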

API code

Here is a sample API which will generate TwiML. The /voice-call API will be called by the Twilio server.

```javascript
const express = require('express');
const VoiceResponse = require('twilio').twiml.VoiceResponse;

const app = express();

// HTTP GET to /voice-call in our application
app.get('/voice-call', (request, response) => {
  // Use the Twilio Node.js SDK to build an XML response
  const twiml = new VoiceResponse();
  twiml.say({ voice: 'alice' }, 'Hello, how are you?');

  // Render the response as XML
  response.type('text/xml');
  response.send(twiml.toString());
});

// Create an HTTP server and listen for requests on port 3000
app.listen(3000);
```

The /voice-call API returns the following XML response:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<Response>
  <Say voice="alice">Hello, how are you?</Say>
</Response>
```

This TwiML instructs Twilio to say "Hello, how are you?" to the recipient (callee).

Receive a Voice call

Webhooks

A webhook is a user-defined callback mechanism that is triggered when an event occurs. When that event occurs, the source site makes an HTTP request to the URL configured for that webhook.

Twilio allows us to configure webhooks for various events, such as Incoming Call (a call comes in), Call Status Changes and Primary Handler Fails. When one of these events occurs, our webhook is triggered via an HTTP request.

To define your webhook, go to your number’s configuration page.

Select the number for which you would like to add the webhook.

Scroll down to the Voice & Fax section. Here you need to provide three things:

Webhook event

HTTP request URL

HTTP request method

In the above screenshot, the webhook event is A CALL COMES IN. This event is triggered when a call is received on your Twilio number. When this happens, Twilio makes an HTTP request (usually a POST or a GET) to the URL you configured for the webhook.

The application must respond to that request with TwiML.

The Twilio server will then parse that TwiML response and respond to the caller based on that response.
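The webhook request carries standard parameters such as CallSid, From, To and CallStatus, plus Digits after a Gather. For a GET webhook they arrive in the query string, and for a POST webhook in a form-encoded body. The following framework-free sketch reads them from either. readWebhookParams() is a hypothetical helper. It assumes a Node.js version with the global URL class and Object.fromEntries (Node 12 or later), which is newer than the 8.9.4 used elsewhere in this post. In Express, request.query and the urlencoded body parser do the same job.

```javascript
// Return webhook parameters as a plain object, from the query string (GET)
// or from the application/x-www-form-urlencoded body (POST).
function readWebhookParams(method, requestUrl, rawBody = '') {
  const source = method.toUpperCase() === 'POST'
    ? new URLSearchParams(rawBody)
    : new URL(requestUrl, 'http://localhost').searchParams;
  return Object.fromEntries(source.entries());
}

const params = readWebhookParams(
  'POST',
  '/v1/greeting',
  'CallSid=CA123&From=%2B918545658754&To=%2B987654321&CallStatus=ringing'
);
console.log(params.From); // +918545658754
```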

API Code

```javascript
const express = require('express');
const VoiceResponse = require('twilio').twiml.VoiceResponse;

const app = express();

// Create a route that will handle Twilio webhook requests, sent as an
// HTTP GET to /v1/greeting in our application.
// The webhook's HTTP method in the console must be set to GET to match.
app.get('/v1/greeting', (request, response) => {
  // Use the Twilio Node.js SDK to build an XML response
  const twiml = new VoiceResponse();
  twiml.say({ voice: 'alice' }, 'Hello');

  // Render the response as XML in reply to the webhook request
  response.type('text/xml');
  response.send(twiml.toString());
});

// Create an HTTP server and listen for requests on port 3000
app.listen(3000);
```

The above API returns the following TwiML:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<Response>
  <Say voice="alice">Hello</Say>
</Response>
```

How we used Twilio’s voice call APIs

We would like to share how we structured our application to handle different voice API calls.

Architecture

We have an alert notification system that sends notifications through different mediums, such as:

SMS

Email

Voice call

Pager

For sending SMS and making voice calls we used the Twilio API.

The system architecture is very simple to understand.

We wanted to separate the code into modules based on their role:

Application

This contains the core logic of our application. It is a REST API server developed using Node.js, which handles all the requests from our front-end applications (web portal, mobile app and IoT devices).

Microservice

This microservice handles sending notifications. It sends SMS, email, pager and voice call notifications using the Twilio and Mailgun APIs.

Voice API server

We developed a separate server specifically to handle all the voice call related routes. This server generates TwiML that gathers user input and submits the user’s response back to one of its own routes, which then does further processing.

There is two-way communication between this component and the Twilio API. For example:

Twilio makes a request to this component to get the TwiML to play a message and ask for user input.

The Voice API server will return TwiML which will contain that message.

The Twilio server plays that message to the user.

After the user gives feedback by pressing a digit, the Twilio server makes another request to the Voice API server with the digit the user entered.

The Voice API server then takes the user input, does some processing and responds with another TwiML document (a “Thank you for giving feedback” message).

The Twilio server plays the thank-you message to the user, and the transaction ends there.

Advantages

Following are some of the major advantages:

Well maintained code

Every component does what it needs to do. The core application has the APIs required for the application to run. The microservice (Notification processor, as we named it) takes care of sending the different notifications. Finally, all the HTTP requests made by Twilio land on the Voice API server, which serves the TwiML.

Quick problem identification

It is easy to identify an issue or bug, since different components perform specific jobs. If there is an issue with receiving notifications, we check only the microservice. If voice call responses are not being parsed properly, we know that we need to check the Voice API server.

Ease of adding new notification type

Adding a new notification type is easy. If tomorrow we decide to add webhook notifications then we need to update the microservice and integrate webhook. No changes are required in the core application and the Voice API server.

Reduced testing scope

The scope of testing, and of any bug a change might introduce, is limited to a particular component. When we modify the code in one component, we can be fairly confident that it will not cause issues in the other components.

Use cases

Problem

We had to notify users about different types of actions through voice calls, take input from the user, and update records in our database based on that input.

Goal

To keep it simple, let’s assume we have three different types of actions:

Action I

Action II

Action III

For each action we want to take the user’s feedback. Assume the feedback options are:

Yes (Press 1)

No (Press 2)

Based on the input we want to update the database records. We wanted the system to easily handle any new actions as well.
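One way to keep the set of actions easy to extend is a small registry keyed by action type, plus a fixed digit-to-feedback mapping. The sketch below is hypothetical: ACTIONS, promptFor() and interpretFeedback() are illustrative names, and the prompt text mirrors the sample TwiML used later in this post. With this design, adding an action means adding one registry entry. The routes and the digit handling do not change.

```javascript
// Keypad digit -> feedback value (1 = Yes, 2 = No).
const FEEDBACK_BY_DIGIT = new Map([['1', 'yes'], ['2', 'no']]);

// Hypothetical registry of action types; add an entry to support a new action.
const ACTIONS = new Map([
  ['action1', { label: 'Action 1' }],
  ['action2', { label: 'Action 2' }],
  ['action3', { label: 'Action 3' }],
]);

// Text read to the callee for a given action and record id.
function promptFor(actionType, id) {
  const action = ACTIONS.get(actionType);
  if (!action) throw new Error(`Unknown action type: ${actionType}`);
  return `${action.label} is triggered with id ${id}, please press 1 for "Yes" and 2 for "No".`;
}

// Turn the pressed digit into a feedback value; null means an unexpected key.
function interpretFeedback(actionType, digit) {
  if (!ACTIONS.has(actionType)) throw new Error(`Unknown action type: ${actionType}`);
  return { actionType, feedback: FEEDBACK_BY_DIGIT.get(String(digit)) || null };
}

console.log(promptFor('action1', 3));
console.log(interpretFeedback('action2', '2').feedback); // no
```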

Solution

Let’s try to understand the solution using a block diagram:

The microservice makes an HTTP request to the Twilio server for each action. Each action has a corresponding REST API route in our Voice API server, which returns the TwiML.

Twilio then calls the user and, once the call is answered, fetches that TwiML from the Voice API server and executes it.

Once the user enters digits on their keypad, Twilio submits those digits in a POST request back to our Voice API server.

The Voice API server then updates the database based on the user input.

Code

Let us try to understand the code sample,

Microservice sending notifications

```javascript
const accountSid = 'your_account_sid';
const authToken = 'your_auth_token';
const client = require('twilio')(accountSid, authToken);

// Method to make a Twilio call for different action types with data
function sendVoiceNotification(actionType, id) {
  client.calls
    .create({
      // Must match the Voice API server route /action/:action/id/:id
      url: `http://notification.voice.com/action/${actionType}/id/${id}`,
      method: 'GET',
      to: '+123456789',
      from: '+987654321'
    })
    .then(call => console.log(call.sid))
    .catch(error => console.error(error));
}

// Make a Twilio call for action type 1 with id 3 (some data which will be used later for processing)
sendVoiceNotification('action1', 3);
// Similarly, make calls for different action types
// sendVoiceNotification('action2', 15);
// sendVoiceNotification('action3', 16);
```

To keep things simple, we wrote a function sendVoiceNotification() that takes actionType and id. When the callee answers the call, Twilio makes an HTTP GET request to our application.

For example, if actionType is action1 and id is 3, the request URL will be http://notification.voice.com/action/action1/id/3.
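The URL scheme works in both directions. The caller builds it from actionType and id, and the Voice API server recovers both values from the path. The sketch below shows this with two hypothetical helpers. parseActionPath() does roughly what Express does for the route pattern '/action/:action/id/:id'. encodeURIComponent ensures that unusual values cannot break the path.

```javascript
// Build the per-call TwiML URL for an action and record id.
function buildActionUrl(baseUrl, actionType, id) {
  return `${baseUrl}/action/${encodeURIComponent(actionType)}/id/${encodeURIComponent(id)}`;
}

// Inverse of buildActionUrl: extract { action, id } from a request path.
function parseActionPath(pathname) {
  const match = /^\/action\/([^\/]+)\/id\/([^\/]+)\/?$/.exec(pathname);
  if (!match) return null;
  return { action: decodeURIComponent(match[1]), id: decodeURIComponent(match[2]) };
}

const url = buildActionUrl('http://notification.voice.com', 'action1', 3);
console.log(url); // http://notification.voice.com/action/action1/id/3
console.log(parseActionPath(new URL(url).pathname));
```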

TwiML response

These routes are defined in our Voice API server, which generates the TwiML based on the actionType and the data, i.e. the id.

```javascript
const express = require('express');
const VoiceResponse = require('twilio').twiml.VoiceResponse;

const app = express();

// HTTP GET to generate the TwiML based on action type and id
app.get('/action/:action/id/:id', (request, response) => {
  const data = request.params;
  const actionType = data.action;
  const id = data.id;

  // Get details from the database based on action type and id.
  // getDataByActionType() is a placeholder: assume it queries the database
  // and fetches the data needed to generate the required TwiML.
  const callDetails = getDataByActionType(actionType, id);

  const twiml = new VoiceResponse();
  // Backticks are required for ${...} interpolation; the same URL serves the
  // GET (prompt) and the POST (user input)
  const actionUrl = `http://notification.voice.com/action/${actionType}/id/${id}`;

  // Use gather to record user input
  const gather = twiml.gather({
    action: actionUrl,
    input: 'dtmf',
    timeout: 15,
    numDigits: 1,
    method: 'POST'
  });

  // Generate the message for the receiver.
  // getCallText() is a placeholder that builds the text to be read out.
  gather.say(getCallText(callDetails));

  // If the user doesn't enter any digit within the timeout (15 seconds),
  // repeat the same message
  twiml.redirect({ method: 'GET' }, actionUrl);

  // Render the response as XML in reply
  response.set('Content-Type', 'text/xml');
  response.send(twiml.toString());
});

// Create an HTTP server and listen for requests on port 3000
app.listen(3000);
```

Sample TwiML

```xml
<?xml version="1.0" encoding="UTF-8"?>
<Response>
  <Gather action="http://notification.voice.com/action/action1/id/3" input="dtmf" timeout="15" numDigits="1" method="POST">
    <Say>Action 1 is triggered with id 3, please press 1 for "Yes" and 2 for "No".</Say>
  </Gather>
  <Redirect method="GET">http://notification.voice.com/action/action1/id/3</Redirect>
</Response>
```

You can learn more about the Gather verb here.
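The order of the verbs matters. If the caller presses a digit, Twilio POSTs it to the Gather action URL and does not execute the rest of the document. If the caller presses nothing before the timeout, Twilio moves on to the Redirect and fetches the prompt again. The following hand-rolled sketch, without the SDK, reproduces the sample TwiML's structure. buildGatherTwiml() is a hypothetical helper, and escaping matters once URLs contain "&".

```javascript
// Escape XML special characters in attribute values and text.
function escapeXml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

// <Gather> collects one DTMF digit and POSTs it to actionUrl; the trailing
// <Redirect> is only reached when no digit arrives within the timeout.
function buildGatherTwiml(actionUrl, prompt, timeoutSeconds = 15) {
  const url = escapeXml(actionUrl);
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<Response>',
    `  <Gather action="${url}" input="dtmf" timeout="${timeoutSeconds}" numDigits="1" method="POST">`,
    `    <Say>${escapeXml(prompt)}</Say>`,
    '  </Gather>',
    `  <Redirect method="GET">${url}</Redirect>`,
    '</Response>',
  ].join('\n');
}

console.log(buildGatherTwiml(
  'http://notification.voice.com/action/action1/id/3',
  'Action 1 is triggered with id 3, please press 1 for Yes and 2 for No.'
));
```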

Handling user input

```javascript
const express = require('express');
const VoiceResponse = require('twilio').twiml.VoiceResponse;
const urlencoded = require('body-parser').urlencoded;

const app = express();

// Parse incoming POST params with Express middleware
app.use(urlencoded({ extended: false }));

// HTTP POST to handle user input
app.post('/action/:action/id/:id', (request, response) => {
  const data = request.params;
  const actionType = data.action;
  const id = data.id;
  // Twilio posts the pressed key(s) in the "Digits" parameter
  const digit = request.body.Digits;

  // Update the database based on the user input
  // (updateDatabase() is a placeholder for your own persistence logic)
  updateDatabase(actionType, id, digit);

  // Use the Twilio Node.js SDK to build an XML response
  const twiml = new VoiceResponse();
  twiml.say({ voice: 'alice' }, 'Thank you for your feedback.');

  // Render the response as XML in reply to the webhook request
  response.type('text/xml');
  response.send(twiml.toString());
});

// Create an HTTP server and listen for requests on port 3000
app.listen(3000);
```

The user entered digits are available in the request body i.e. request.body.Digits. You can update the database or take further action based on the entered digit.

Note that the call will end with an error if your server doesn’t respond with valid TwiML.

Alternative – Call SID

Every call initiated through Twilio has a unique ID associated with it. client.calls.create() returns a promise that resolves to a call object containing call.sid. This SID uniquely identifies the call.

Alternatively, instead of passing data as part of the URL as we did (http://notification.voice.com/action/${actionType}/id/${id}), we could store this information in the database and look it up by call SID.

After initiating the Twilio call, we store the details in the database as shown below:

| call_sid | action_type | id |
|---|---|---|
| CAed0ea7ab63d425e987cb24eXXXX | action1 | 3 |
| CAed0ea7bb63d425e987cb24eXXXX | action2 | 15 |

Instead of http://notification.voice.com/action/${actionType}/id/${id}, we could then simply use http://notification.voice.com/action and read the call SID from Twilio's request:

```javascript
// Inside a GET route handler: Twilio's parameters arrive in the query string
const callSID = request.query.CallSid;

// Or, inside a POST route handler: they arrive in the form-encoded body
// (parsed by the urlencoded middleware shown earlier)
// const callSID = request.body.CallSid;
```

Once you have the call SID further processing can be carried out by fetching the additional details from database using the call SID.
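The following is a minimal sketch of the call SID approach. It uses a hypothetical CallStore class backed by an in-memory Map in place of the database table described above. It assumes the details are saved as soon as client.calls.create() resolves, before Twilio requests the TwiML. With a real database, a handler that cannot find its SID should still return some TwiML rather than fail.

```javascript
// Hypothetical stand-in for the call_sid / action_type / id table.
class CallStore {
  constructor() {
    this.calls = new Map();
  }

  // Save the details right after client.calls.create() resolves with call.sid.
  record(callSid, actionType, id) {
    this.calls.set(callSid, { actionType, id });
  }

  // In the webhook, look the details up using the CallSid Twilio sends.
  lookup(callSid) {
    return this.calls.get(callSid) || null;
  }
}

const store = new CallStore();
store.record('CAed0ea7ab63d425e987cb24eXXXX', 'action1', 3);
store.record('CAed0ea7bb63d425e987cb24eXXXX', 'action2', 15);
console.log(store.lookup('CAed0ea7bb63d425e987cb24eXXXX'));
```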

Twilio logs

Twilio provides logs for each call made. This is the first place you should check in case your users are complaining about not receiving phone calls, abruptly ending phone calls or any other issue.

You can find voice call logs here.

Debugging

To debug an issue, click on a call log entry that failed. Failed calls are highlighted in red.

Log summary

This gives a brief summary of the call.

Error and Warning

This section will list out the error or the warning that occurred while processing the call.

For example, in the above image we configured an API route that does not exist.

Request Inspector

This section contains all the requests which were made during the call.

Twilio initially requested the TwiML using an HTTP GET request.

You can see the TwiML being sent back in response body.

This TwiML is used to take the user’s input (Gather) and then POST that input to another URL, which is specified in the action attribute.

To demonstrate an error condition, we’ve specified an invalid URL in the action attribute.

When the user presses 2 you can see that a request is made by Twilio to the specified API for the next TwiML. The Digits parameter in the request contains the number that the user dialed.

Note that this request failed with status 404 since the route does not exist.

Summary

Rather than building an app’s voice and SMS functionality from scratch, developers can use Twilio APIs, which saves a lot of time. Here are some other interesting use cases we found that leverage the power of Twilio to automate things:

Automated survey with Node.js and Express

Automate Wedding

US Democratic National Committee voter hotline

References

Twilio documentation: making outbound phone calls in Node.js

Twilio documentation: receiving inbound phone calls in Node.js

Twilio documentation: reading the input provided by the user

http://blog.osmosys.asia/voice-calling-using-twilio-node-js/



Voice of the customer

https://www.reddit.com/r/MicrosoftDynamics365/comments/8piuvs/voice_of_the_customer_voc_dynamics_365_crm/


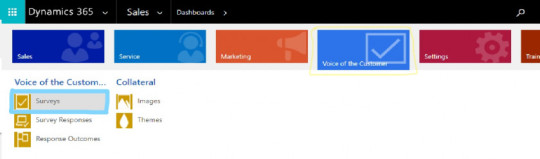

Voice of the Customer (VOC) – Dynamics 365 CRM

Introduction

For any business to succeed or improve its services, learning about its customers and their feedback plays a pivotal role. Analyzing customer feedback and changing business processes and models accordingly is part of any business’s growth. With this in mind, Microsoft has introduced a survey tool for Dynamics 365 CRM, a customer-centric product built around customer relations. The tool can be embedded within your Dynamics 365 CRM and helps capture customer feedback.

Voice of the Customer (VOC) was introduced with Microsoft Dynamics CRM 2016 online, and is used to capture feedback regarding our products or services from within CRM. With Voice of the Customer, we can set up surveys, distribute them to contacts or leads via workflows, and capture responses. The captured data can then be utilized for analysis, generating reports and making appropriate changes to our product/service.

This feature is available globally as the Voice of the Customer solution for Microsoft Dynamics 365 CRM (hereafter referred to as D365) online subscriptions. It can be installed from the Applications area of the Dynamics 365 Administration Center.

Installation

We will walk you through installation of the solution step by step in detail.

Sign in to https://login.microsoftonline.com with your Global Administrator or CRM System Administrator credentials.

Navigate to Admin Centers > Dynamics 365.

In the D365 Administration Center window, navigate to the Applications tab.

Select the Voice of the Customer solution, and click on Manage. Proceed through Terms of Service to accept the terms.

Select an instance from the drop-down list and click Install.

Before installation, the status of the solution is Not Installed. After you click Install, it takes a few minutes for the installation to start, and the status shows Installation Pending. When the solution is ready, the status changes to Installed.

Note: During Installation Pending status, the CRM instance is in maintenance mode and is offline for a short period of time.

Once the Voice of the Customer solution is successfully installed, Voice of the Customer is added to the sitemap.

Survey Process

Using Voice of the Customer, users can conduct email and web-based surveys.

Create a Survey

To create a new survey record, navigate to Voice of the Customer > Survey.

By default, three forms are available on a survey record, as shown below:

Survey: The Survey page has theme, logo and behavior settings, as well as question and response configurations, which we can modify as required.

Designer: The Designer page allows us to design UI and questions for the survey. It provides options to add pages and questions. For example, we can design a welcome page to reflect the message that we would like our recipients to see upon opening the survey.

The survey questions can be added to a new page or an existing page via the drag-and-drop editor. Double-click a question to edit it, or click the edit button on the form for more editing options.

Once all the questions are added and required changes are done, preview the survey to check if all questions are saved properly (only saved questions will be displayed in the preview).

Dashboard:

The Dashboard is a statistical representation of the survey responses. It displays all the responses in bar graphs.

Distributing the Survey

To distribute or use the survey externally, we need to publish it. To do so, click the Publish button on the ribbon.

Once the survey is published, an email snippet and an anonymous link are generated automatically. These are used to distribute the survey to customers.

We can test the survey page before sending it to customers by using the Test button. Test displays the survey page that will be sent to customers; responses provided on the test page aren’t stored as survey responses.

The Test button will not work if the survey is not published.

The Test button displays only the published content, whereas Preview displays the saved content.

Email Snippet can be used in email templates or in the email content directly. For example, the below mentioned email snippet can be pasted in the email template or email content directly. [Survey-Snippet-Start]2dfd9f6d-295f-4488-8132-773357e758ea[Survey-Snippet-End]

The text of the link (email snippet) can be added in Invitation Link Text field. This text is displayed in the email that is sent to the customers.

For example, in the above image, the Invitation Link Text field is set to Click Here. So Click Here is displayed as a link in the email, and clicking it redirects to the survey page. If the Invitation Link Text field is blank, the survey link itself is displayed in the email sent to the customer.

Here is an example of using email snippet in send email step of a workflow:

Anonymous Link is used in Survey Activities and in conducting web-based survey (social networking sites, etc.).

Fill in the related fields of the Survey activity and click Save. Once saved, this generates the survey Invitation Link (the anonymous link of the survey selected in the Survey field), which can be sent to users to collect feedback.

Taking Survey

By clicking the link generated above, users can provide feedback. Below is a sample survey session:

Page 1: Welcome Page

Page 2: Questions Section

Page 3: Complete Page

Survey Responses

Once the survey is completed by customers, the responses that are given by customers are stored in Survey Responses entity.

The results of the survey are stored in Response Summary tab in the Survey Responses entity. It is an HTML page which displays the answers given by customers for all the questions from the survey.

Question Responses entity stores the records of each question and respective answer from the customer.

Response Outcomes: for every survey, there is an outcome after the survey is taken (complaint, compliment, appreciation, etc.) based on the Response Routing configured for the survey.

Limitations of VOC

There must be a minimum of two pages in every survey.

A maximum of 200 surveys can be published at any point in time, so you might need to unpublish surveys that are no longer in use.

We can include a maximum of 250 questions in a survey. If you’ve enabled feedback for a survey, you can include a maximum of 40 questions.

We can create a maximum of 25 pages per survey.

We can send a maximum of 10,000 email invitations in a 24-hour period. Any emails that exceed that amount will remain pending during that time and will automatically start sending when the time limit is over.

Voice of the Customer for Microsoft Dynamics 365 pulls a maximum of 2,400 survey responses per day. Voice of the Customer uses Azure to host the surveys and capture responses, so if there are more than 2,400 responses in a day, 2,400 are pulled in and the rest remain in Azure until the next day.

Voice of the Customer for Microsoft Dynamics 365 allows storage of a maximum of 1,000,000 survey responses for an instance.
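You can check the numeric limits above before you publish a survey. The following hypothetical sketch, which is not part of the Voice of the Customer solution, encodes the page and question limits. It also computes how many days a backlog of responses takes to arrive at 2,400 responses per day, assuming no new responses come in meanwhile.

```javascript
// Limits taken from the list above.
const VOC_LIMITS = {
  minPages: 2,
  maxPages: 25,
  maxQuestions: 250,
  maxQuestionsWithFeedback: 40,
  responsesPulledPerDay: 2400,
};

// Return a list of problems; an empty list means the survey fits the limits.
function checkSurvey({ pages, questions, feedbackEnabled = false }) {
  const problems = [];
  if (pages < VOC_LIMITS.minPages) problems.push(`needs at least ${VOC_LIMITS.minPages} pages`);
  if (pages > VOC_LIMITS.maxPages) problems.push(`exceeds ${VOC_LIMITS.maxPages} pages`);
  const maxQuestions = feedbackEnabled
    ? VOC_LIMITS.maxQuestionsWithFeedback
    : VOC_LIMITS.maxQuestions;
  if (questions > maxQuestions) problems.push(`exceeds ${maxQuestions} questions`);
  return problems;
}

// Days needed for a backlog of responses to be pulled into D365.
function daysToPullResponses(count) {
  return Math.ceil(count / VOC_LIMITS.responsesPulledPerDay);
}

console.log(checkSurvey({ pages: 1, questions: 50, feedbackEnabled: true }));
console.log(daysToPullResponses(5000)); // 3
```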

For more blogs, please check: http://blog.osmosys.asia

GitLab – Recovering from a corrupted database record

Introduction

One of the most terrifying things for a database administrator to come across is a corrupted database.

Database corruption can be defined as a problem with the improper storage of the 0s and 1s that need to be stored on disk in order to access your data.

Despite their usefulness, relational databases are prone to corruption, which can make some or all of the data in the database inaccessible.

More than 95% of corruption happens due to hardware failure. The remaining 5% includes:

Bugs in software itself

Abrupt system shutdown while the database is opened for writing or reading.

Changes in SQL account

Virus infection

Software upgrades, which at times result in database corruption

This blog post addresses one such issue with a corrupted database and describes how we identified and solved the problem.

Problem understanding

Our organization has an on-premises GitLab server. We had configured GitLab to take daily backups, but due to database corruption the backups started failing with the following error:

```shell
sudo gitlab-rake gitlab:backup:create
[sudo] password for osmosys:
Dumping database ...
Dumping PostgresSQL database gitlabhq_production ...
pg_dump: Dumping the contents of table "merge_request_diffs" failed: PQgetResult() failed.
pg_dump: Error message from server: ERROR: unexpected chunk number 0 (expected 2) for toast value 204823 in pg_toast_16524
pg_dump: The command was: COPY public.merge_request_diffs (id, state, st_commits, st_diffs, merge_request_id, created_at, updated_at, base_commit_sha, real_size, head_commit_sha, start_commit_sha) TO stdout;
[FAILED]
Backup failed
```

The plan was to move the GitLab installation to a new server but since we could not take a backup of our existing installation, we were stuck.

Next Steps

When database corruption occurs, the loss is usually limited to the last action of one user, i.e. a single change to data. When a user starts to change data and the change is interrupted (for example, because of a network outage or any other reason), the database file is marked as corrupted. The file can be repaired, but we might lose some data in the process.

We need to be extremely careful with the live instance and the live data. Experimentation should be done on a virtual machine before performing any actions on the live instance.

We took the following steps:

Virtual environment setup

Identify corrupted record(s)

Database backup without corrupted record(s)

Import database on virtual machine

Fix database relationship and constraints

Export database from virtual environment

Import database on live instance

Upgrade GitLab

Finally run tests

Virtual environment setup

Having a virtual environment gives us the freedom to experiment. It’s good practice to run trials on a virtual machine until you are satisfied with the results. This gives us confidence that things will work as expected on the live instance too.

Now let’s create a virtual environment that mirrors the live instance. Install only the applications that are directly or indirectly related to your database:

Install Vagrant

Install Debian Stretch

Install PostgreSQL

Install phpPgAdmin (Optional) – Web-based administration tool for PostgreSQL

GitLab uses a PostgreSQL database.

Install Vagrant

Go to Vagrant’s official site and download the deb file for Debian.

You can find the link here.

Install Debian stretch64

Instructions for installing Debian Stretch can be found here.

Install PostgreSQL

```shell
sudo apt-get update
sudo apt-get install postgresql postgresql-client
```

Install phpPgAdmin

This step is optional; you can use any PostgreSQL GUI tool you prefer.

```shell
sudo apt-get install phppgadmin
```

Identify corrupted record(s)

Log in to the live instance.

Switch to the gitlab-psql user:

```shell
sudo -u gitlab-psql -i bash
```

Connect to the PostgreSQL CLI:

```shell
/opt/gitlab/embedded/bin/psql --port 5432 -h /var/opt/gitlab/postgresql -d gitlabhq_production
```

gitlabhq_production is the default database.

If you already know which records are corrupted, you can skip this step. Otherwise, write a small script that selects all the records from each table; the SELECT query will fail if the table has corrupted data.

Note that we already knew that the corrupted records belong to merge_request_diffs table by looking at the backup command error message.

For example if the corrupted record is in the merge_request_diffs table, then we can write a small script to identify the row which is corrupted:

Shell123for ((i=0; i<11124; i++ )); do /opt/gitlab/embedded/bin/psql --port 5432 -h \/var/opt/gitlab/postgresql -d gitlabhq_production -c \"SELECT * FROM public.merge_request_diffs LIMIT 1 offset $i" >/dev/null || echo $i; done

The above script basically select all the rows one by one, but will fail to select the row which is corrupted and will print the row index. Assume that selection failed at index 2045, this tells us that the record at index2045 is corrupted.

Repeat this process for all the tables if you don’t know exactly which table has corrupted records.
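If you do not know which table is affected, the per-table check can be scripted. The sketch below is an illustration under stated assumptions, not the script we used. The helper names (run_psql, list_tables, find_corrupted_tables) are hypothetical, the connection options are copied from the commands above, and it assumes all GitLab tables live in the public schema. Because it reads every table in full, expect it to be slow on large tables.

```shell
#!/bin/sh
# Sketch only: list tables in the public schema whose full scan fails.
# PSQL defaults to GitLab's embedded client; set PSQL to override it.
PSQL="${PSQL:-/opt/gitlab/embedded/bin/psql}"

# Run psql against the live GitLab database (same options as the loop above).
run_psql() {
  "$PSQL" --port 5432 -h /var/opt/gitlab/postgresql -d gitlabhq_production "$@"
}

# Print one table name per line (-A: unaligned output, -t: tuples only).
list_tables() {
  run_psql -At -c "SELECT tablename FROM pg_tables WHERE schemaname = 'public' ORDER BY tablename"
}

# Read every row of every table; print the name of each table whose SELECT fails.
find_corrupted_tables() {
  list_tables | while read -r table; do
    [ -n "$table" ] || continue
    # </dev/null keeps psql from consuming the table list on stdin
    run_psql -c "SELECT * FROM public.\"$table\"" </dev/null >/dev/null 2>&1 || echo "$table"
  done
}
```

Running find_corrupted_tables as the gitlab-psql user prints every table whose full SELECT fails. You can then run the row-by-row loop on just those tables.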

Database backup without corrupted record(s)

We could afford to lose these corrupted records, so the next step was to take a backup without them.

Select the record and get its row ID.

Note that the query failed whenever all the columns were selected, probably because the corrupted data is in one of the columns. For that reason, select only a few columns:

SELECT base_commit_sha, head_commit_sha, merge_request_id, id
FROM public.merge_request_diffs
LIMIT 1 OFFSET 2045;

base_commit_sha – 8250ee35a6a8d0cd60b5056c7ac94736a048e7a5

head_commit_sha – b3f08056e93f281c2905f6185395787ae7ffaada

merge_request_id – 1091

id – 1933
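Selecting every column fails, but selecting a few succeeds. It can therefore help to find out exactly which column holds the damaged value. The following is a minimal sketch. It assumes the same connection options as above, plus the table name and offset found earlier. The helper names are hypothetical. The script selects each column of the suspect row separately and prints any column whose SELECT fails.

```shell
#!/bin/sh
# Sketch only: find which column(s) of one row cannot be read.
# TABLE and OFFSET default to the values found above; PSQL can be overridden.
PSQL="${PSQL:-/opt/gitlab/embedded/bin/psql}"
TABLE="${TABLE:-merge_request_diffs}"
OFFSET="${OFFSET:-2045}"

run_psql() {
  "$PSQL" --port 5432 -h /var/opt/gitlab/postgresql -d gitlabhq_production "$@"
}

# List the table's columns in their defined order, one per line.
list_columns() {
  run_psql -At -c "SELECT column_name FROM information_schema.columns
    WHERE table_schema = 'public' AND table_name = '$TABLE' ORDER BY ordinal_position"
}

# Select each column of the suspect row on its own; print the ones that fail.
find_corrupted_columns() {
  list_columns | while read -r column; do
    [ -n "$column" ] || continue
    run_psql -c "SELECT \"$column\" FROM public.\"$TABLE\" LIMIT 1 OFFSET $OFFSET" \
      </dev/null >/dev/null 2>&1 || echo "$column"
  done
}
```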

Create a copy of the merge_request_diffs table without the corrupted record, i.e. skip id 1933.

CREATE TABLE public.merge_request_diffs_copy AS
SELECT * FROM public.merge_request_diffs WHERE id != 1933;

-- Alternatively, make the copy using the offset value:
-- rows at offsets 0 to 2044, then 2046 onward (offset 2045, the corrupted row, is skipped)
CREATE TABLE public.merge_request_diffs_copy AS
(SELECT * FROM public.merge_request_diffs LIMIT 2045)
UNION ALL
(SELECT * FROM public.merge_request_diffs OFFSET 2046);

This creates a copy of the merge_request_diffs table without the corrupted record.

CREATE TABLE ... AS copies only the columns and the data. The constraints of merge_request_diffs (including its primary key and foreign keys), its indexes, and its column defaults are not copied to merge_request_diffs_copy.

Take a backup of the entire database without the data of the merge_request_diffs table; the backup fails if it has to read the corrupted records.

/opt/gitlab/embedded/bin/pg_dump --port 5432 -f git_lab_db_dump.sql -h /var/opt/gitlab/postgresql -d gitlabhq_production --exclude-table-data '*.merge_request_diffs'

The --exclude-table-data option skips the rows of the merge_request_diffs table while taking the backup. The table’s definition is still included in the dump.

Import database on virtual machine

We will set up the backup from the previous step on the Vagrant machine.

Create a test database

Create a new database on the Vagrant machine in which to fix the issues.

CREATE DATABASE gitlab_test ENCODING 'UTF8' TEMPLATE template1;

GitLab owner

Create a PostgreSQL role named gitlab before importing the GitLab database, because the backup taken earlier gives ownership of all the tables to the gitlab role.

CREATE ROLE gitlab;

Install pg_trgm extension

GitLab uses a few extensions, such as pg_trgm, that are not installed by default.

Connect to the gitlab_test database and run the following command to install the pg_trgm extension:

CREATE EXTENSION pg_trgm;

Import data to gitlab_test

psql gitlab_test < git_lab_db_dump.sql

Use the psql command to import the dump.

Note that git_lab_db_dump.sql is the name of the file that contains the database backup from the live instance.

Fix database relationship and constraints

All fixes to the database should be made on the Vagrant machine.

Get constraints of corrupted table

Go back to the GitLab server and get the table schema of the corrupted table.

Use the following command to get the table schema:

pg_dump -U user_name database_name -t table_name --schema-only

A sample schema is shown below:

-- No need to run the table creation query
CREATE TABLE merge_request_diffs (
    id integer NOT NULL,
    state character varying(255),
    st_commits text,
    st_diffs text,
    merge_request_id integer,
    created_at timestamp without time zone,
    updated_at timestamp without time zone,
    base_commit_sha character varying,
    real_size character varying,
    head_commit_sha character varying,
    start_commit_sha character varying
);

-- Need to run all the below queries
ALTER TABLE merge_request_diffs OWNER TO gitlab;

CREATE SEQUENCE merge_request_diffs_id_seq
    START WITH 11421
    INCREMENT BY 1
    NO MINVALUE
    NO MAXVALUE
    CACHE 1;

ALTER TABLE merge_request_diffs_id_seq OWNER TO gitlab;

ALTER SEQUENCE merge_request_diffs_id_seq OWNED BY merge_request_diffs.id;

ALTER TABLE ONLY merge_request_diffs ALTER COLUMN id SET DEFAULT nextval('merge_request_diffs_id_seq'::regclass);

ALTER TABLE ONLY merge_request_diffs ADD CONSTRAINT merge_request_diffs_pkey PRIMARY KEY (id);

CREATE INDEX index_merge_request_diffs_on_merge_request_id ON merge_request_diffs USING btree (merge_request_id);

Make sure that the merge_request_diffs_copy table has the same constraints as the original merge_request_diffs table.

Use the queries above, with merge_request_diffs_copy in place of the table name, to add the sequence, primary key, indexes, and any foreign keys.

Be very careful with the queries, especially with sequences:

CREATE SEQUENCE merge_request_diffs_id_seq
    START WITH 11421
    INCREMENT BY 1
    NO MINVALUE
    NO MAXVALUE
    CACHE 1;

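The START WITH 11421 clause sets the auto-increment primary key value of the next record to be inserted. It must be greater than the largest id already in the table; otherwise, new inserts will eventually fail with duplicate-key errors.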
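You can derive a safe START WITH value from the data instead of copying it by hand. The following is a minimal sketch. It assumes the script runs on the Vagrant machine against gitlab_test, with a psql client on the PATH. next_id is a hypothetical helper. It prints one more than the largest id currently in merge_request_diffs_copy, which is the smallest value that cannot collide with an existing row.

```shell
#!/bin/sh
# Sketch only: compute a safe START WITH value for merge_request_diffs_id_seq.
# PSQL and DB are assumptions; override them to match your setup.
PSQL="${PSQL:-psql}"
DB="${DB:-gitlab_test}"

# Print MAX(id) + 1 for the copied table (1 if the table is empty).
next_id() {
  max_id=$("$PSQL" -d "$DB" -At -c \
    "SELECT COALESCE(MAX(id), 0) FROM public.merge_request_diffs_copy") || return 1
  echo $((max_id + 1))
}
```

Use the printed value in the START WITH clause. Alternatively, after the sequence exists, PostgreSQL’s setval function can advance it beyond the existing ids. For example, after running SELECT setval('merge_request_diffs_id_seq', (SELECT MAX(id) FROM merge_request_diffs_copy)); the next nextval call returns MAX(id) + 1.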

Rename the table

Rename merge_request_diffs_copy to merge_request_diffs.

ALTER TABLE public.merge_request_diffs_copy RENAME TO merge_request_diffs;

We now have a copy of the database without any corrupted records.

Testing

Install the same version of GitLab on the Vagrant machine and configure it to use the gitlab_test database.

Trigger the backup script and confirm that the backups are now being taken properly.

Export database from virtual environment

Take the backup from your Vagrant instance:

pg_dump -U gitlab gitlab_test > dbexport.pgsql

Import database on live instance

Drop the database from the live server:

DROP DATABASE IF EXISTS gitlabhq_production;

Create the gitlabhq_production database again on the live server:

CREATE DATABASE gitlabhq_production ENCODING 'UTF8' TEMPLATE template1;

Install pg_trgm extension

GitLab uses the pg_trgm extension, which is not installed by default in a new PostgreSQL database. Run the following while connected to gitlabhq_production:

CREATE EXTENSION pg_trgm;

Import the data to gitlabhq_production

psql gitlabhq_production < dbexport.pgsql

Use the psql command to import all the records from the dump into the gitlabhq_production database.

Upgrade GitLab

You can find the documentation on how to update GitLab here.

Note that the upgrade process will automatically run the migrations over the database unless you decide to do it manually.

Finally run tests

After successfully upgrading the GitLab server, we should run a few tests to verify that everything works:

Verify that the server is up and running.

Confirm that the database integration works properly.

Verify that the database backup script takes backups successfully.

References

References were taken from the following blogs, forum posts and wikis.

https://www.stellarinfo.com/support/kb/index.php/article/common-sql-database-corruption-errors-causes-solutions

https://dba.stackexchange.com/questions/127846/dumping-database-with-compressed-data-is-corrupt-errors

http://dbmsmusings.blogspot.in/

https://www.freelancinggig.com/blog/2017/07/22/mariadb-vs-mysql-vs-postgresql-depth-comparison/

https://support.office.com/en-us/article/Compact-and-repair-a-database-6ee60f16-aed0-40ac-bf22-85fa9f4005b2


GitLab – Recovering from a corrupted database record

Introduction

One of the most terrifying things for a database administrator to come across is a corrupted database.

Database corruption can be defined as a problem with the improper storage of the 0s and 1s that have to be stored on disk for you to access your data.

Despite their usefulness, relational databases are prone to corruption, which can make some or all of the data in the database inaccessible.

More than 95% of corruption happens due to hardware failure. The remaining 5% includes:

Bugs in the software itself

Abrupt system shutdown while the database is open for reading or writing

Changes in SQL account

Virus infection

Software upgrades, which at times corrupt the database

This blog post describes one such case of database corruption and how we identified and solved the problem.

Problem understanding

Our organization has an on-premise GitLab server. We have configured GitLab to take daily backups, but due to database corruption the backups started failing with the following error.

For more details, please check the link here:

http://blog.osmosys.asia/gitlab-recovering-corrupted-database-record/

A blog from Osmosys Software Solutions


What is Project Management?

Project management is the planning, monitoring, and execution of any work or task that needs to be managed.

Project management can apply to any project, but it is often tailored to the specific needs of different, highly specialized industries. For example, the information technology industry has developed its own form of project management, referred to as software project management. It specializes in the delivery of technical assets and services that must pass through various lifecycle phases such as:

1. Planning (Requirements/analysis)

2. Design

3. Development

4. Testing

5. Deployment.

In small organisations, the manager can handle project management by setting goals and assigning tasks to employees, either manually or by email.

In medium and large enterprises, where projects require large amounts of human and technical resources, projects cannot be managed effectively without project management tools.

This is where our project management software comes in: it suggests the best available resource based on past performance data.

It uses artificial intelligence to make suggestions that can help improve project completion rates, reduce delays, and ultimately improve ROI.

Visit Best Project Management Tool for more information


What's the difference between a Project Manager and a Product Manager?

Project managers and product managers both hold leadership roles and perform similar duties.

The difference lies in the work they do. A person leading a team to complete a project is a project manager.

A person leading a team that is responsible for producing a product and releasing it, through the different stages of the product life cycle, is a product manager.

Product managers and project managers need to work in alliance with each other.

A product development life cycle may consist of many small projects, which are assigned to different project managers, and vice versa.

Both project managers and product managers need a project management tool that helps them assign tasks to their teams, allocate budgets, track project completion status, and much more.

Here is an intelligent, suggestive project management tool that can help you handle projects in the best way.

Most project management tools only report project completion status, budgeted hours per employee, and task completion status. PineStem goes further: it uses artificial intelligence to help allocate a specific resource to a project.

Visit: https://pinestem.com


Best Project Management Tool

A project management tool is an application that helps with assigning daily tasks, allocating budgets, and checking project completion status.

What if a project management tool could suggest the best available resource for a specific project based on past performance analytics?

This would ease the pressure on project managers, since they could easily find the best resource for a specific project and allocate resources accordingly.

This would improve project completion efficiency and bring more revenue to the company.

Try our project management tool, which thinks for you and suggests the best available resource based on past performance analytics.

PineStem : Your Intelligent Partner in project management

visit: https://pinestem.com


Project Management for New Product Development

New Product Development (NPD) for any organization is an exciting yet challenging task. While the prospect of new software product development opens the doors to future business development and an additional source of revenue, it also involves technical, marketing, and financial risks. Most of the decisions taken in this phase are with a degree of uncertainty and are therefore risky.

While some decisions can be reversed easily, others can cause huge cost overheads when altered. New product development is, therefore, a demanding exercise that needs effective project planning and control for informed decision-making.

The right Project Management Tool can help make this stage simpler. However, to make this stage less risky and more effective, the real requirement is an AI backed Project Management Tool (PMT).

New Product Development with an AI backed Project Management Tool

An AI-backed PMT uses machine learning to draw on past performance, take effective actions, and make the right recommendations for any new product development.

This blog examines how effectively AI-backed Project Management Tools support the three essentials for successful new product development:

Team Selection

Time Management

Quality Management

The following section explains these in detail.

Team Selection

It’s better to have a great team than a team of greats! – Simon Sinek

‘Choose wisely’ may be one of the best pieces of advice you are ever given while selecting a team. However, like all good advice, the trick lies in how to put it into practice.

AI-backed Project Management Tools (AI-PMTs) are a big aid in this process. They record and analyze a programmer’s or developer’s past performance, and this stored repository of knowledge gives you critical insights for decision-making.

Evaluating Efficiency Calculation

One parameter that can help in team selection is calculating an employee’s efficiency in a particular skill or competency. This helps in better decision-making while putting a team together.

To cite an example, our Project Management Tool PineStem offers a feature called efficiency calculation. The calculation is based on the budgeted hours and the actual hours spent by an employee: efficiency = budgeted hours ÷ actual hours × 100%.

Let’s say we have a task for which the budgeted hours are 10 and the actual hours spent by employee ‘A’ are 7. His efficiency for this task is then 10 ÷ 7 × 100 ≈ 142.9%. Now consider another employee, ‘B’, who takes 15 hours to complete the same task. His efficiency would be 10 ÷ 15 × 100 ≈ 66.7%.

Clearly, employee A is ideal for the assigned task.
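A few lines of shell can reproduce the efficiency arithmetic above. This sketch covers only the calculation: budgeted hours divided by actual hours, expressed as a percentage. It is not PineStem’s code, and the efficiency function name is hypothetical.

```shell
#!/bin/sh
# Sketch of the efficiency formula: budgeted / actual * 100, one decimal place.
# Prints "n/a" when no hours were logged, to avoid dividing by zero.
efficiency() {
  awk -v b="$1" -v a="$2" 'BEGIN {
    if (a + 0 == 0) { print "n/a"; exit }
    printf "%.1f\n", b / a * 100
  }'
}

efficiency 10 7    # employee A, prints 142.9
efficiency 10 15   # employee B, prints 66.7
```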

Apart from selecting between two employees, intelligent Project Management Tools also help in identifying the right skill or competency of an employee.

Efficiency can also be compared across tasks for the same employee. Suppose the two figures above belonged to one employee, on task 1 (requiring Java competency) and task 2 (requiring AngularJS). That employee’s competency clearly lies in Java.

Time Management

The second most important aspect of new product development is time management. Time management includes planning and scheduling detailed activities, estimating their duration, and aligning them.

It also includes allocation of resources and a number of other matters. During the control phase of new product development, it is absolutely imperative to monitor the degree to which the work is completed to identify if it’s as per schedule. A Project Management Tool can effectively control this aspect and assist in keeping the project on track.

For example, our AI-backed Project Management Tool PineStem sends warning messages when the budgeted hours for a particular task have been used up. This kind of intervention helps the project manager bring things under control and take corrective action.
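A budget check of this kind can be sketched as a simple comparison. This is a hypothetical illustration, not how PineStem implements its warnings. The check_budget function, its arguments, the task name, and the message text are all assumptions. A real tool would send a notification rather than print a line.

```shell
#!/bin/sh
# Hypothetical sketch: warn once the hours logged on a task reach its budget.
check_budget() {
  task="$1"; budgeted="$2"; spent="$3"
  # awk exits with status 0 (success) only when spent >= budgeted (numeric compare)
  if awk -v s="$spent" -v b="$budgeted" 'BEGIN { exit (s + 0 >= b + 0) ? 0 : 1 }'; then
    echo "WARNING: task '$task' has used $spent of $budgeted budgeted hours"
  fi
}

check_budget "Login page" 10 7     # within budget, prints nothing
check_budget "Login page" 10 10.5  # prints: WARNING: task 'Login page' has used 10.5 of 10 budgeted hours
```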

Quality Management

The third key aspect to ensure successful new product development is quality management. A bad piece of code can derail a project schedule and spoil customer experience. It is therefore critical that the project manager determines the components of quality assurance at the beginning of a project. These details help design critical quality gates throughout the project life cycle.

An important outcome of quality assurance is how to use the information gathered to improve processes in a way that will avoid failures of future tasks and other project work activities too. Here again, an intelligent Project Management Tool can help effectively.

To elaborate, some Project Management Tools offer bug tracking with detailed reports that detect bug patterns. This information is useful for evaluating the nature of the bugs being generated, and it helps eliminate repetitive bugs. This knowledge helps in all future projects, including new product development, and avoids the redundant work of fixing the same bugs again.

Conclusion

A Project Management Tool can help in many other aspects of new product development. If you’d like to know more, please write to us at [email protected].

To experience the benefits of an AI backed Project Management Tool take a free trial with us at https://pinestem.com/

A Quality product from http://osmosys.asia


Artificial Intelligence in Project Management

In our consulting work with clients across the globe, we’ve been asked countless times about best practices in project management. At every level of the management hierarchy, individuals want to know the key practices for project success. What’s the best project management mantra, the sure-shot winning formula?

While a whole lot can be said about effective project management practices and techniques, in our view the crucial aspect is the project management tool in question. The fundamental question really is whether the project management tool supports the new-age project manager.

The Times They Are A-Changin’

Remember one of Bob Dylan’s finest songs, The Times They Are A-Changin’? It fits today’s project management scenario perfectly.

The market today is extremely volatile, with innovation happening every second. Organizations are trying to deliver advanced, futuristic projects, yet they are using the same old traditional project management tools. Here lies the fundamental paradox, one that we feel is hurting many organizations and deserves attention: the tools that project managers use in a bid to drive growth are fundamentally impeding their ability to grow.

To sustain growth, deliver results, and outdo the competition, project managers need tools that enable quicker adaptation, sound decision-making, and rapid delivery at low operational cost. This is where the new-age Artificial Intelligence (AI) based project management tool fits the bill.

This blog explains how an AI backed Project management tool effectively assists a project manager.

Agile Project Management

The incremental development model and shorter release cycles of Agile help in the effective management of change. A shorter release cycle makes the system flexible, easy to change, and less prone to errors. It also makes it easier to include new changes without majorly affecting the cost or timelines of the project. Moreover, all stakeholders of the project are involved in every iteration of the development. This inclusion at every step helps a great deal in maintaining transparency and visibility within the teams. A good project management tool helps you deliver on Agile product development principles. These principles focus on early delivery of business value, continuous improvement of the project’s product and processes, scope flexibility, and team input. They also emphasize delivering well-tested products that reflect customer needs.

In short, an Agile Project management tool lets you actually be agile. It upholds all agile processes and practices ensuring that your development is as per the Agile manifesto.

Intuitive and Intelligent System

A project manager has to make many decisions around many complex variables throughout the life of a project. To make this task simpler, AI-backed project management systems bring artificial intelligence and machine learning to the rescue. Machine learning learns about a company, its systems, processes, and employees over the years, across different projects. It can therefore evaluate any decision from several perspectives. The result is a qualitatively new and more sophisticated understanding of the subject than any one-sided description can offer.

To cite an example of how machine learning can help, let us take a practical scenario. In the planning stage, associates usually tend to over-commit, and there is often a huge gap between projected and actual timelines. This can cause huge cost repercussions and jeopardize project schedules, budgets, and even customer relationships.

In such a scenario AI backed reports come to the rescue.

Project Management Tools that use Artificial intelligence learn over the years about different employees, their ability to do a task in a given time, their success and performance ratio.

This information further helps schedule new projects with fairly realistic baselines. Team planning, scheduling, and appraisals become more reliable when the project management tool analyzes this information.

Effective Reporting Dashboards

Business dashboards are the window of business intelligence. Displaying data and analysis is an important part of the data science process.

It is imperative that today’s project management tools bring the capabilities of machine learning, data science and AI to accessible dashboards that everyone can understand.

Using intelligent and intuitive dashboards project managers can gather together the data, understand the important considerations and provide the right answers while planning, executing and delivering a project. Moreover, dashboards can also streamline activities and reduce inefficiencies.

Basically, effective dashboards can help take the blinders off a manager, giving deep insights into the actual problems and pain areas.

These are some of the key requirements supported by AI-backed management tools. There is a lot more to these tools, which are a must for the new-age project manager to survive in today’s era of speed and innovation.

To know more about AI-backed project management tools, write to us today at [email protected] and visit our website https://pinestem.com/ to learn about our AI-based project management tool.


Measuring Bug Cost In Software Development

The pace at which the world turns today demands a culture of change and innovation on a day-to-day basis. Innovation is the key to catching up, teamed with the ability to respond quickly to emerging threats. However, the time in which we need to respond continues to decrease. From an information technology perspective, this means we need to make quality software changes more rapidly than ever before. Yet many programs devote more than 50 percent of the schedule to testing!

Not only does testing account for an increasingly large percentage of the time, it also consumes a large proportion of the cost of any new software development. A recent Statista study showed that the share of IT spend allocated to quality assurance and testing has been increasing from 2012 to 2019. The same study projects that this share will reach 26 percent of an organization’s IT budget. Both numbers are too high and too restrictive to achieve a winning time-to-market and a cost advantage.

Image: Proportion of budget allocated to quality assurance and testing as a percentage of IT spend from 2012 to 2019.

Let’s first identify how this figure adds up. What is the cost of a bug?

As per a study conducted by the Systems Sciences Institute at IBM,

The cost to fix an error found after product release was four to five times as much as one uncovered during design, and up to 100 times more than one identified in the maintenance phase.

So the cost of a bug grows with how far down the SDLC (Software Development Life Cycle) it is found: the later you find it, the costlier it is! Moreover, it gives rise to a ripple effect: a change made to accommodate the fix can in turn break other parts of the code.

So not only is the bug going to cost more to fix as it moves through a second round of SDLC, but a different code change could be delayed, which adds cost to that code change as well.

To cite an example, the cost of a bug found in the initial phase of requirements gathering could be $10. If the product owner doesn’t find that bug until the QA testing phase, the cost could be $150. If it’s not found until production, the cost could be $1000!

Testing is the key cost driver for software projects, and its cost goes up with application size. It is therefore pertinent for software companies to ensure that testing is done well. While there are many different ways to adopt effective testing strategies, this blog focuses on how to use a project management tool to measure the cost of bugs and take corrective actions.

Using Project Management tool to evaluate the Bug cost

Perhaps the most obvious place to find data that points to your team’s performance is your Project Management Tool. This is where tasks are defined and assigned, bugs are entered and commented on, and time is associated with estimates and real work. Essentially, your Project Management Tool is a storehouse of information that can help reduce costs.

An intelligent Project Management Tool can further help reduce testing costs and increase testing efficiencies while contributing to enhanced systems and software quality in terms of faster, broader, and more efficient defect detection.

Moreover, many contemporary project management tools like PineStem measure what is produced, along with the impact of the changes made to improve delivery.

Tools like PineStem are able to answer pressing questions like “Did this change improve the process?” and “Are we delivering better now?”

How is this done?

To cite an example, our Project Management Tool PineStem, has a very sophisticated performance-monitoring and tracking system which is used to manage tasks. Using PineStem a project manager can easily calculate the following numbers for a developer:

The total number of:

tasks he/she worked on

budgeted hours

hours spent by the employee

bugs assigned to the employee (assuming only one developer works on a given task)

Using these details, the project manager can calculate the number of bugs per hour worked. Other figures can be derived from this value, such as the hourly cost of the developer fixing the bugs and, finally, the total cost of the bugs.
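
The arithmetic above can be sketched in JavaScript, the language used elsewhere on this blog. This is a minimal sketch under stated assumptions and is not PineStem's actual formula. The field names, the average effort needed to fix one bug (`avgHoursPerFix`) and the developer's hourly rate (`hourlyRate`) are hypothetical inputs that a project manager would supply.

```javascript
// Minimal sketch of the bug-cost arithmetic. All field names and the
// avgHoursPerFix / hourlyRate inputs are hypothetical, not PineStem's API.
function bugCostMetrics(dev) {
  if (dev.hoursSpent <= 0 || dev.tasksWorkedOn <= 0) {
    throw new Error("hoursSpent and tasksWorkedOn must be positive");
  }
  // Bugs attributed to the developer per hour they actually worked
  const bugsPerHour = dev.bugsAssigned / dev.hoursSpent;
  // Bugs per task (assumes one developer per task, as in the text)
  const bugsPerTask = dev.bugsAssigned / dev.tasksWorkedOn;
  // Hours spent fixing those bugs, assuming a fixed average effort per bug
  const fixHours = dev.bugsAssigned * dev.avgHoursPerFix;
  // Total cost of the bugs at the developer's hourly rate
  const totalBugCost = fixHours * dev.hourlyRate;
  // Positive when actual hours exceeded the budgeted hours
  const hoursOverBudget = dev.hoursSpent - dev.budgetedHours;
  return { bugsPerHour, bugsPerTask, fixHours, totalBugCost, hoursOverBudget };
}

const metrics = bugCostMetrics({
  tasksWorkedOn: 12,
  budgetedHours: 160,
  hoursSpent: 180,
  bugsAssigned: 9,
  avgHoursPerFix: 2,
  hourlyRate: 25
});

console.log(metrics.bugsPerHour);  // 0.05
console.log(metrics.totalBugCost); // 450
```

A single average fix time keeps the example readable. In practice, the actual hours logged against each bug in the tool would give a more accurate cost.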

These data points give you a wealth of information for taking corrective action, adjusting your processes, improving quality, measuring teams and handling appraisals. Based on the reports generated, you can evaluate each employee's performance and the number of bugs they introduced. This helps you take corrective action so that the same problems are not repeated in future projects.

Thus, recording and retrieving data in a project management tool gives you vital information that can be used to improve performance.

If you'd like to know more about how to evaluate performance and how to calculate key quality metrics, do write to us today.

To learn more about PineStem, visit https://pinestem.com/ where you can also take a free trial with no time limit.

References

http://blog.celerity.com/the-true-cost-of-a-software-bug

https://www.statista.com/statistics/500641/worldwide-qa-budget-allocation-as-percent-it-spend/

http://blog.osmosys.asia/2017/12/22/measuring-bug-cost/

Is Your Project Management Tool Learning on the Job?

http://blog.osmosys.asia/2017/12/11/project-management-tool-learning-job/

You have probably heard a lot about big data. With the advent of the Internet and the explosion of smart devices with powerful processors, almost every process in our world now uses some kind of software, churning out huge amounts of data every minute.

It's all around us now. We consume it in one way or another, learn from it, apply it or use it to perform tasks. Think about social networks, where information is stored about people, their interests, and their interactions. Think about process-control devices, ranging from smart cars to electric water pumps, which continuously log data about their performance. Even scientific research initiatives, such as patient diary systems, have to analyze huge amounts of data about our DNA.

There are many things you can do with this data: examine it, summarize it, and even visualize it in several beautiful ways. However, there's another crucial use of this data: making our systems more intelligent. This data can act as a source of solid experience and can help improve an algorithm's performance. Algorithms that learn from previous data belong to the field of Machine Learning, a sub-field of Artificial Intelligence. Now imagine if you were to apply this solid inventory of intelligent data and experience to your PROJECT MANAGEMENT TOOL!

Project management experts and professionals constantly face complex and critical decisions. There has therefore been growing interest in using artificial intelligence (AI) techniques to enhance the project management cycle, the most crucial task being to optimize decision making.

From resource allocation to appraisals, project management can benefit greatly from the use of AI.

In this blog, let's look at three crucial areas where AI can effectively improve project management, and at how it helps decision makers tackle key problems in each.

Effective Resource Allocation in Project Management

AI is always learning on the job. It grows more intelligent every day from the data you enter and the experience you record, and this experience comes in handy while planning. For example, if you have given an employee 6 weeks to complete a task but their past turnaround time for similar tasks has been 4 weeks, you get a timely prompt to reschedule the calendar.
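
The rescheduling prompt in this example can be approximated with a plain heuristic. The sketch below is a hypothetical stand-in. It is not a machine-learning model and does not describe how PineStem works internally. It averages the assignee's past turnaround times for similar tasks and flags the plan when it differs from that average by more than a chosen tolerance. `checkSchedule` and its parameters are illustrative names.

```javascript
// Hypothetical heuristic (not an ML model, not PineStem's code): compare a
// planned duration with the assignee's average turnaround on similar tasks.
function checkSchedule(plannedWeeks, pastTurnaroundWeeks, toleranceWeeks) {
  if (pastTurnaroundWeeks.length === 0) {
    return null; // no history yet, so nothing to compare against
  }
  const total = pastTurnaroundWeeks.reduce((sum, w) => sum + w, 0);
  const averageWeeks = total / pastTurnaroundWeeks.length;
  const difference = plannedWeeks - averageWeeks;
  if (Math.abs(difference) <= toleranceWeeks) {
    return null; // plan is in line with past performance
  }
  return {
    averageWeeks,
    // A plan longer than history suggests the calendar can be tightened
    action: difference > 0 ? "shorten" : "extend",
    byWeeks: Math.abs(difference)
  };
}

// The scenario from the text: 6 weeks planned, about 4 weeks historically
const flag = checkSchedule(6, [3.5, 4, 4.5], 0.5);
console.log(flag);
```

Here `flag` suggests shortening the plan by 2 weeks. A learning system would refine this by weighting recent tasks or task similarity, but the comparison against history is the same idea.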

Moreover, project management tools with AI keep a complete record of your resources: their strengths and weaknesses, performance records and rankings. With this powerful source of data, the intelligent system can help you select the best candidate for any job.

For example, in our project management tool PineStem, this is achieved through a feature called Resource Recommendation. For a given task, the system recommends the best-suited candidate for the job, making it simpler to assess resources. This feature is available whenever you create or update a task.

Timely Warnings to the Project Manager

Time is money. If a project is off track or delayed, it can drain your resources. Corrective action can be taken if you are well prepared and are monitoring the tasks. Project management tools with AI do this day-to-day, minute-to-minute tracking for you, raising red flags and alerting you when things fall behind. PineStem, our intelligent agile-based project management tool, uses its Alert PM feature to alert project managers and help them take corrective action.

Through the Alert PM feature, the project manager can set warning thresholds for the hours spent on the project. Let's say the budget for a project is 500 hours. The project manager can set a warning to trigger once 400 hours have been billed to the project. When 400 hours are reached, the project management tool automatically sends an alert email to all the project managers, giving them ample time for corrections and revisions.
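
The threshold check described above is simple to express in code. This is a minimal sketch and not PineStem's implementation. The project fields and the `notify` callback, which stands in for sending the alert email, are hypothetical. The alert fires only once, the first time billed hours reach the warning threshold.

```javascript
// Minimal sketch of a budget-threshold alert. The project fields and the
// notify callback (a stand-in for sending email) are hypothetical.
function checkBudgetThreshold(project, notify) {
  if (project.alertSent || project.hoursBilled < project.warningHours) {
    return false; // already alerted, or still under the threshold
  }
  project.alertSent = true; // make sure each project alerts only once
  const remaining = project.budgetedHours - project.hoursBilled;
  for (const manager of project.managers) {
    notify(manager, `${project.name}: ${project.hoursBilled} of ` +
      `${project.budgetedHours} budgeted hours billed, ${remaining} remaining`);
  }
  return true;
}

const sent = [];
const project = {
  name: "Website revamp",
  budgetedHours: 500,
  warningHours: 400,
  hoursBilled: 380,
  managers: ["pm1@example.com", "pm2@example.com"],
  alertSent: false
};
const notify = (to, message) => sent.push({ to, message });

checkBudgetThreshold(project, notify); // 380 < 400: no alert
project.hoursBilled = 400;
checkBudgetThreshold(project, notify); // threshold reached: both managers notified
project.hoursBilled = 420;
checkBudgetThreshold(project, notify); // already alerted: no duplicate
console.log(sent.length); // 2
```

The `alertSent` flag prevents a fresh email every time more hours are logged. A real tool might instead support several thresholds, such as 80% and 95% of the budget.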

Bug Tracking and Bug Pattern Detection

Every software developer and IT professional understands the importance of effective debugging. Often, debugging consumes most of a developer's workday. That's where the debugging tools in project management systems come to the rescue. The bug tracking tools available in most project management tools, including PineStem, help programmers speed up debugging by systematically categorizing and reporting the different bugs found in the system.

AI takes debugging a step further with Bug Pattern Detection. Drawing on its experience, AI expands the debugging arsenal by helping project managers identify recurring bugs and the developers behind them. With this information, a project manager can take appropriate corrective action, such as training developers in that specific area. The information is also useful in review meetings and performance appraisals.
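
A very basic form of bug pattern detection can be sketched as a frequency count. The code below is a hypothetical illustration, not PineStem's AI. It groups bug records by developer and category and reports any combination that recurs at least `minCount` times. The record fields, names and category labels are assumptions made for the example.

```javascript
// Hypothetical sketch: find recurring (developer, category) bug pairs by
// simple counting. Real pattern detection would be far more sophisticated.
function findRecurringBugs(bugs, minCount) {
  const counts = new Map();
  for (const bug of bugs) {
    // A JSON key avoids collisions if names contain separator characters
    const key = JSON.stringify([bug.developer, bug.category]);
    counts.set(key, (counts.get(key) || 0) + 1);
  }
  const patterns = [];
  for (const [key, count] of counts) {
    if (count >= minCount) {
      const [developer, category] = JSON.parse(key);
      patterns.push({ developer, category, count });
    }
  }
  // Most frequent patterns first, e.g. to prioritise targeted training
  return patterns.sort((a, b) => b.count - a.count);
}

const bugs = [
  { id: 1, developer: "Asha", category: "input validation" },
  { id: 2, developer: "Ravi", category: "date handling" },
  { id: 3, developer: "Asha", category: "input validation" },
  { id: 4, developer: "Asha", category: "input validation" },
  { id: 5, developer: "Ravi", category: "date handling" },
  { id: 6, developer: "Ravi", category: "null checks" }
];

const patterns = findRecurringBugs(bugs, 2);
console.log(patterns);
```

With this data, the sketch reports three "input validation" bugs for Asha and two "date handling" bugs for Ravi, which is the kind of signal that could point to targeted training.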

AI-backed debugging is therefore an attractive option for organizations, as it helps get the job done more quickly, with less pain, and with considerable scope for future improvement.

Artificial Intelligence thus helps a project management tool deliver increased efficiency, cost savings, and faster development and delivery. AI is therefore undoubtedly an essential component of any project management tool in today's smart market.

If you'd like to experience the benefits of an intelligent, machine-learning-backed project management tool, take a free trial with us today at https://pinestem.com/

A quality product from Osmosys Software Solutions

Your Intelligent Partner In Project Management

Stay in tune with your team for better collaboration.

Best Project Management Software

Change your perception towards a project management tool.

The best project management software ever: it can suggest the best resource available for a specific project.

Visit: https://pinestem.com