#why did i have to get the 20 open tabs googling the specification differences between ryzen 7 series cpus autism.

Explore tagged Tumblr posts

Text

its that time again when i longingly gaze out to pcpartpicker dot com and dream about being able to comfortably afford my dream gaming pc setup

#why do all of my interests have to be fucking expensive. why didnt i get the go outside and search for different species of bugs autism.#why did i have to get the 20 open tabs googling the specification differences between ryzen 7 series cpus autism.#i need infinite money so i can build all of the computers i want forever. not even to use them all. just for enrichment

1 note

·

View note

Text

TSI - Chapter 1 Notes

Here are my full notes and commentary for Chapter 1 of my Harry Potter fic 'The Snake Inside'.

Chapter 1 can be found here.

Throughout the chapter there are numbers in parentheses, these numbers correspond to the below notes. To best understand what I’m talking about in the notes I would recommend opening the story in a second tab and following along from there.

(notes begin under the cut)

1. This is, if you hadn’t noticed, lifted directly from the book, I do do this a couple times however, this is the only one that is italicized. I’m going to try and point out the other instances in chapter notes as well.

2. Another line lifted from the book, although here it has slightly different context

3. This was another line from the book, although again it has slightly different context (also I swear these notes aren’t just going to be me citing passages from the book)

4. I was actually really conflicted over this. Dudley is obviously incredibly spoiled so I figured it made sense that if for once Harry had something that he didn’t that he would throw a fit and demand he get the same. What I wasn’t confident about was how Petunia or Vernon would react as they really do love Dudley, shown by how much they spoil him. In this scenario, I decided that Petunia’s hatred of magic plus her fear of losing Dudley to magic (just like she lost her sister) would drive her to hit Dudley.

5. The first signs of Harry’s sneaky Slytherin side! He reads the room and chooses the best manner to approach the situation, something he would be good at considering he grew up in an abusive household. He would likely have gotten very good at reading moods and acting accordingly at a young age to avoid being hit or yelled at.

6. It might seem like Harry is a little quick to believe in Hogwarts and want to go considering he knows nothing about it. But, it’s an escape from the Dursleys and the terrible school they were going to send him to. Plus, it’s obvious that the Dursleys hate magic, so why would they lie to Harry about him being a wizard?

7. I know in canon Hogwarts is free, but that simply doesn’t make sense to me. In my world, Hogwarts is the best and most elite school in Britain, but it’s not the only one. There are also smaller ‘public’ wizarding schools that people who can’t afford Hogwarts go to. Also, if Hogwarts has a tuition then it only makes sense to me that the Potter Parents would set up an education fund for Harry, especially since their lives were at risk, they would want to make sure that Harry would be able to get the best education possible.

8. Some more Slytherin sneakiness, Harry isn’t a master manipulator by any means but he’s lived with the Dursleys for 11 years, he knows how to play them.

9. I don’t write it in bc it seemed unnecessary, but she does explain her reasoning off-screen.

10. Some foreshadowing here, I thought I was rather clever, finding a logical way for Harry and Vernon to learn how to enter the train platform.

11. This whole paragraph is my attempt at showing how Harry is still just a kid who’s curious about the new world he’s found himself in. I know I write Harry (and all the characters his age) as being a little more mature than they probably would be in reality, so here I was trying to show a pure, childlike curiosity and also some trains of thought that aren’t totally logical bc he is a kid.

12. I do think the Dursleys, or Vernon at least, is more clever than he gets credit for, he is high up in Grunnings, so he has to have some sort of head on his shoulders, and he’s certainly self-serving we saw in book 2 how he lathered up those rich people he wanted to impress. So, I think as much as Vernon might hate magic and think goblins are disgusting, that he would very much be able to put that aside if he thought it might benefit him.

13. The goblins ‘revealing the truth’ to Harry, or giving him or helping him out in some way is kinda over done and doesn’t always make sense as the goblins really have no reason so want to go out of their way to help Harry. But, I needed an unbiased 3rd party to teach Harry a little about the wizarding world and I figured a satisfactory motivation for the goblins would be making money in the form of consultation fees.

14. The first hints of Dumbledore’s manipulations. He wants a naïve Savior who will be easy for him to influence and shape into the person he thinks the world needs. Note, I’m not going for an evil Dumbledore, just a morally grey Dumbledore.

15. Paper business refers to the practice of owning a business on paper but not being involved in how it’s currently ran, I’m not trying to say that the Potters own several companies that sell paper. I don’t know if this is a common term, when I googled it nothing came up, but my dad uses it a lot when talking about businesses. Also, we know in canon that the Potters are rich but in a lot of fics it has evolved into them being extremely wealthy and influential. I’m running with this fanon idea because the Potters are a very old family, they’ve been around since the 12thcentury and married into other very influential families in canon. Also, if I ever get to the later years I do want to mess around with some politics and Harry having power from his family name will be a necessary advantage.

16. I’m not going to bore you guys with paragraphs detailing just how exceedingly rich Harry is, if he can’t even do anything with what he owns yet. He’s 11, he’s not going to be making any smart investments.

17. Like I said earlier with the tuition vault, the Potters were soldiers in a war, they knew they might die and I think it’s only logical that they would take precautions to ensure that Harry would have a comfortable life should they die.

18. This might seem like a lot, but again, the Potters are rich and they want their only child to be able to have a comfortable life even if they die, plus it is supposed to last until Harry’s an adult.

19. This is not canon, JKR said that a galleon is approx. 5 British pounds. I think that’s too low, so I changed it. I mean, it’s solid gold and the highest form of currency it’s got to be worth more than that.

20. Trying to give Dumbledore the benefit of the doubt, but of course Vernon is going to be suspicious of anyone who took money that he could have used.

21. This is just something that I thought made sense, Gringotts has been established as being in the business of making money and how can they do that if they’re cut off from part of their clientele?

22. I’m trying to go in a new direction with the Dursleys, I’m not trying to redeem them, but like Dumbledore, they’re in a grey area, especially Vernon. I think a self-serving Vernon would be interested in learning more about the magic world, or more specifically learning what it can do for him. But also because you need to know your enemy, as interested as he might be in profiting off magic, Vernon doesn’t trust wizards. As for Harry, this is a Slytherin AU, of course he’s going to play along with his uncle’s plan as long as it benefits him.

23. This is another line from the book

24. Hints that Dean is actually a halfblood and not muggleborn, this is canon too. I’m looking forward to exploring the future “tracking down who my real dad was arc”

25. Originally, I had Harry meet Hermione and her family, but I decided to change it to Dean because I wanted to go down some different avenues. A lot of Slytherin Harry stories have Harry becoming friends with Hermione early on despite their differences and I didn’t want to just do the same thing as everyone else. Also, I really like Dean Thomas’s character he’s a friendly, good natured, brave and loyal. I also think that Harry would get along better with Dean right off the bat than he would with Hermione.

26. Honestly, I think it’s ridiculous that they still use quills and I will be using the trope where Harry sneaks in ballpoint pens.

27. Harry came to Diagon a few days earlier than he did in canon, so I figure it only makes sense that he would meet someone different at Madam Malkins also this gave me a great opportunity to shoe in one of my other favorite characters, Neville.

28. I headcanon that Harry and Neville have a slight magical bond over both being possible options for the prophecy.

29. I admit this is slightly unrealistic, as I’ve dropped my glasses several times before and they’ve never broken but I wanted an excuse to get Harry some new glasses.

30. Not implausible, but also not likely either. Also, I admit I really have no clue about British healthcare, especially not what it was like in the 80s and 90s. I know it’s free, but that there’s also the option to do private or paid care. So, for this story, assume that the Dursleys use private care bc they want to seem better than everyone else.

31. Again, probably not the most realistic scenario, but it is possible. I got glasses when I was 11 and contacts when I was 15, but I definitely could have gotten the contacts when I was a little younger. Maybe not, 11-years old younger, but I don’t think it’s entirely out of the ballpark.

32. I didn’t see any point in changing Hedwig’s name, so I kept it the same.

33. Giving Harry contacts was something that I debated a lot, there’s no real reason he needs them, I just wanted him to have some because they’re convenient. I personally regret not getting contacts earlier.

34. To be honest, this is actually a bit of a cop out on my end because I haven’t figured out the entire political system yet. BUT even if I had, Harry is still 11 so he probably wouldn’t understand it that well anyways. There will be a brief explanation in chapter 2 though.

35. Dudley’s reaction is anything thing I was really torn up about. Because he’s essentially torn between his two parents, sticking with Petunia ostracizes him from Vernon and sticking with Vernon ostracizes him from Petunia. Ultimately, I decided Dudley would value his father’s attention more because while Petunia wouldn’t like him getting involved with magic, she wouldn’t cut Dudley off completely, she loves him too much. But Vernon, has been completely distracted by magic and without Dudley getting involved in it too then he won’t get any attention from his father.

36. According to the HP wiki, Dean’s family actually lives in London, but I wanted it to be more convenient for them to meet so I moved them closer to the Dursleys. Also, I actually did about an hour’s worth of research on google maps trying to find a real place Dean’s family to live.

37. A whole lot of this section with the Weasleys was lifted from the book with slightly different commentary from Harry. I originally had more, but it didn’t add anything so I cut it out.

38. I don’t know how outgoing Ron was before he met Harry, if I was him though I would be too nervous to intrude on a compartment with two other kids who looked like they were already friends.

39. This is not a Ron bashing fic, Harry has no reason to dislike him, so of course he wouldn’t be opposed to sitting with him. That said, for the premise of the story I couldn’t have them sit together because Ron is heavily biased against Slytherin.

40. Poor Draco, if he had just paid more attention to who he was passing in the hall then he would have met Harry, but again, I couldn’t let that happen because Draco’s so obnoxious that he’d turn Harry off Slytherin.

41. Honestly, I just wanted Harry to interact with more students who can be potential friends.

42. Again, and the sorting is lifted from the book. I’m not going to make note of every line.

43. I wasn’t sure if I wanted Neville to be in Hufflepuff of Gryffindor at first. A lot of people argue that Neville needed to be in Gryffindor to learn how to be brave, but I think that Hufflepuff would provide a strong support system that would help Neville gain confidence in himself. Also, I decided that Harry’s words in the robe shop would influence Neville into not thinking that he was a loser if he went to Hufflepuff. I imagine in canon, much like Harry was chanting “not slytherin” Neville was probably chanting “not Hufflepuff”. So I think it’s fitting they both don’t end up in Gryffindor in this fic. Also, Harry already has a Gryffindor friend in Dean, he can use a Hufflepuff friend.

1 note

·

View note

Text

How Do I Deactivate My Pof

How Do I Deactivate My Pof Account

How To Cancel Pof Subscription

. My recommended site: How To Cancel Your POF / Plentyoffish Membership by watch this video. It's easy to do and takes under one.

The si of temporary deactivating your POF ne is that all the pas of your account will not be deleted; in expedition you want to come back to POF after a while. If you are sure you want to get rid of POF how do i delete my pof account, then follow. Sometimes even after pas above-mentioned si, your account will not be deleted.

The intense love connection between dating apps and data leaks is nothing new with the most popular apps out there making headlines, including POF, Tinder, Grindr, Bumble, and OkCupid. But deleting the app from your phone doesn't delete your account and sensitive data.

Not just dating apps: Don’t be part of the next data leak.

How do I delete my POF account on Android? Steps to Delete POF (Plenty of Fish) account. Go to Plenty of Fish Home page and login with your account. Select 'Help' tab on the top right corner, and select the option 'Delete Account' In account deletion page, mention the reason you want to delete POF account and click on Quit/Give up/Delete account. Use your log in information to access the account. Once logged in, click help button found at the top of the screen. You will find a list of options on the left-side of your screen. Proceed to click remove profile then click on the link that emerges under the delete your Plenty of Fish profile. To start you have to open your browser of preference and enter the official POF website, specifically the section to delete your account. Then enter both your username and password to log in with your POF account. At this point you will only have to indicate the reason why you decided to delete your account.

The best way to minimize digital risks is to keep your data only where you need it. With Mine, you can discover which companies are holding your personal data and exercise your data rights by deleting it from services you no longer use.

Delete yourself from POF and other apps you no longer use.

With a Mine account, you can easily delete your POF account and personal data from POF and other companies.

To delete your POF profile manually:

Prefer to delete your Plenty of Fish account manually? Here’s how:

Log in one last time using the app or the website.

At the top of the first screen, click ‘Help.’

Choose the ‘Remove Profile’ option.

Take a deep breath and click ‘Delete your POF profile.’

Enter your username and password

If you want, you may share your reason for leaving.

That’s it. You did it. You are out of this pond!

Another simple option would be to use yourMine accountto delete your dating account and all data from POF or other apps you no longer want holding your personal information.

Got any other questions about this topic? We’ve covered a few right here!

Plenty of data: Has POF ever experienced a data leak?

The POF app, which reminds daters that there are plenty of options out there, but some of them might stink a little, is part of the Match Group and has more than 150 million users in over 20 countries. In 2019, the app experienced a serious data breach and leaked information categorized as “private” by users. For this and perhaps other reasons, we can see that the search query “how to delete Plenty of Fish account” has been trending on Google.

Hide and seek: Should you delete or hide your profile?

There’s a difference between freezing your dating profile and deleting it. Many apps allow users to put their accounts on hold and make themselves invisible to other daters. This is a great option for those of you who started dating someone, and while it’s going well (fingers crossed!), you’re still not sure you’ve found the one worth deleting your meticulously written profile for. It’s crucial to keep in mind the data privacy implications of hiding a profile instead of deleting it. As long as you’re a registered user, the app can still access your data. Other daters might not be able to see you, but the app sure can.

Take me back! Can I get back into the online dating business after deleting my account?

How Do I Deactivate My Pof Account

You’ve changed your mind. It happens to the best of us! Maybe the person you were dating didn’t turn out to be the one; maybe the break you took from dating worked so well that you’re ready to jump back in the water. What matters is that you want your dating profile back after deleting it.

We have good news and bad news. The bad news is that most dating sites have no way to recover a profile you’ve deleted. It makes sense because otherwise, they would still have access to your data. The good news is that the last relationship or break from dating made you more focused than ever, and your new profile will land you that everlasting love (or fun weekend) you’re after. You can then go back to the previous section of this article and delete your account again – this time for good!

Online dating apps and data ownership technology make it easier to choose when and how to make yourself available for dating. Decide for yourself if you feel comfortable enough to give access to your data and your heart.

How To Cancel Pof Subscription

Wondering who else might still hold your personal data? Looking to delete your data from other apps that you no longer use? Check out Mine to discover all the companies that are holding your data in 30 seconds.

0 notes

Text

An 8-Point Checklist for Debugging Strange Technical SEO Problems

Posted by Dom-Woodman

Occasionally, a problem will land on your desk that's a little out of the ordinary. Something where you don't have an easy answer. You go to your brain and your brain returns nothing.

These problems can’t be solved with a little bit of keyword research and basic technical configuration. These are the types of technical SEO problems where the rabbit hole goes deep.

The very nature of these situations defies a checklist, but it's useful to have one for the same reason we have them on planes: even the best of us can and will forget things, and a checklist will provvide you with places to dig.

Fancy some examples of strange SEO problems? Here are four examples to mull over while you read. We’ll answer them at the end.

1. Why wasn’t Google showing 5-star markup on product pages?

The pages had server-rendered product markup and they also had Feefo product markup, including ratings being attached client-side.

The Feefo ratings snippet was successfully rendered in Fetch & Render, plus the mobile-friendly tool.

When you put the rendered DOM into the structured data testing tool, both pieces of structured data appeared without errors.

2. Why wouldn’t Bing display 5-star markup on review pages, when Google would?

The review pages of client & competitors all had rating rich snippets on Google.

All the competitors had rating rich snippets on Bing; however, the client did not.

The review pages had correctly validating ratings schema on Google’s structured data testing tool, but did not on Bing.

3. Why were pages getting indexed with a no-index tag?

Pages with a server-side-rendered no-index tag in the head were being indexed by Google across a large template for a client.

4. Why did any page on a website return a 302 about 20–50% of the time, but only for crawlers?

A website was randomly throwing 302 errors.

This never happened in the browser and only in crawlers.

User agent made no difference; location or cookies also made no difference.

Finally, a quick note. It’s entirely possible that some of this checklist won’t apply to every scenario. That’s totally fine. It’s meant to be a process for everything you could check, not everything you should check.

The pre-checklist check

Does it actually matter?

Does this problem only affect a tiny amount of traffic? Is it only on a handful of pages and you already have a big list of other actions that will help the website? You probably need to just drop it.

I know, I hate it too. I also want to be right and dig these things out. But in six months' time, when you've solved twenty complex SEO rabbit holes and your website has stayed flat because you didn't re-write the title tags, you're still going to get fired.

But hopefully that's not the case, in which case, onwards!

Where are you seeing the problem?

We don’t want to waste a lot of time. Have you heard this wonderful saying?: “If you hear hooves, it’s probably not a zebra.”

The process we’re about to go through is fairly involved and it’s entirely up to your discretion if you want to go ahead. Just make sure you’re not overlooking something obvious that would solve your problem. Here are some common problems I’ve come across that were mostly horses.

You’re underperforming from where you should be.

When a site is under-performing, people love looking for excuses. Weird Google nonsense can be quite a handy thing to blame. In reality, it’s typically some combination of a poor site, higher competition, and a failing brand. Horse.

You’ve suffered a sudden traffic drop.

Something has certainly happened, but this is probably not the checklist for you. There are plenty of common-sense checklists for this. I’ve written about diagnosing traffic drops recently — check that out first.

The wrong page is ranking for the wrong query.

In my experience (which should probably preface this entire post), this is usually a basic problem where a site has poor targeting or a lot of cannibalization. Probably a horse.

Factors which make it more likely that you’ve got a more complex problem which require you to don your debugging shoes:

A website that has a lot of client-side JavaScript.

Bigger, older websites with more legacy.

Your problem is related to a new Google property or feature where there is less community knowledge.

1. Start by picking some example pages.

Pick a couple of example pages to work with — ones that exhibit whatever problem you're seeing. No, this won't be representative, but we'll come back to that in a bit.

Of course, if it only affects a tiny number of pages then it might actually be representative, in which case we're good. It definitely matters, right? You didn't just skip the step above? OK, cool, let's move on.

2. Can Google crawl the page once?

First we’re checking whether Googlebot has access to the page, which we’ll define as a 200 status code.

We’ll check in four different ways to expose any common issues:

Robots.txt: Open up Search Console and check in the robots.txt validator.

User agent: Open Dev Tools and verify that you can open the URL with both Googlebot and Googlebot Mobile.

To get the user agent switcher, open Dev Tools.

Check the console drawer is open (the toggle is the Escape key)

Hit the … and open "Network conditions"

Here, select your user agent!

IP Address: Verify that you can access the page with the mobile testing tool. (This will come from one of the IPs used by Google; any checks you do from your computer won't.)

Country: The mobile testing tool will visit from US IPs, from what I've seen, so we get two birds with one stone. But Googlebot will occasionally crawl from non-American IPs, so it’s also worth using a VPN to double-check whether you can access the site from any other relevant countries.

I’ve used HideMyAss for this before, but whatever VPN you have will work fine.

We should now have an idea whether or not Googlebot is struggling to fetch the page once.

Have we found any problems yet?

If we can re-create a failed crawl with a simple check above, then it’s likely Googlebot is probably failing consistently to fetch our page and it’s typically one of those basic reasons.

But it might not be. Many problems are inconsistent because of the nature of technology. ;)

3. Are we telling Google two different things?

Next up: Google can find the page, but are we confusing it by telling it two different things?

This is most commonly seen, in my experience, because someone has messed up the indexing directives.

By "indexing directives," I’m referring to any tag that defines the correct index status or page in the index which should rank. Here’s a non-exhaustive list:

No-index

Canonical

Mobile alternate tags

AMP alternate tags

An example of providing mixed messages would be:

No-indexing page A

Page B canonicals to page A

Or:

Page A has a canonical in a header to A with a parameter

Page A has a canonical in the body to A without a parameter

If we’re providing mixed messages, then it’s not clear how Google will respond. It’s a great way to start seeing strange results.

Good places to check for the indexing directives listed above are:

Sitemap

Example: Mobile alternate tags can sit in a sitemap

HTTP headers

Example: Canonical and meta robots can be set in headers.

HTML head

This is where you’re probably looking, you’ll need this one for a comparison.

JavaScript-rendered vs hard-coded directives

You might be setting one thing in the page source and then rendering another with JavaScript, i.e. you would see something different in the HTML source from the rendered DOM.

Google Search Console settings

There are Search Console settings for ignoring parameters and country localization that can clash with indexing tags on the page.

A quick aside on rendered DOM

This page has a lot of mentions of the rendered DOM on it (18, if you’re curious). Since we’ve just had our first, here’s a quick recap about what that is.

When you load a webpage, the first request is the HTML. This is what you see in the HTML source (right-click on a webpage and click View Source).

This is before JavaScript has done anything to the page. This didn’t use to be such a big deal, but now so many websites rely heavily on JavaScript that the most people quite reasonably won’t trust the the initial HTML.

Rendered DOM is the technical term for a page, when all the JavaScript has been rendered and all the page alterations made. You can see this in Dev Tools.

In Chrome you can get that by right clicking and hitting inspect element (or Ctrl + Shift + I). The Elements tab will show the DOM as it’s being rendered. When it stops flickering and changing, then you’ve got the rendered DOM!

4. Can Google crawl the page consistently?

To see what Google is seeing, we're going to need to get log files. At this point, we can check to see how it is accessing the page.

Aside: Working with logs is an entire post in and of itself. I’ve written a guide to log analysis with BigQuery, I’d also really recommend trying out Screaming Frog Log Analyzer, which has done a great job of handling a lot of the complexity around logs.

When we’re looking at crawling there are three useful checks we can do:

Status codes: Plot the status codes over time. Is Google seeing different status codes than you when you check URLs?

Resources: Is Google downloading all the resources of the page?

Is it downloading all your site-specific JavaScript and CSS files that it would need to generate the page?

Page size follow-up: Take the max and min of all your pages and resources and diff them. If you see a difference, then Google might be failing to fully download all the resources or pages. (Hat tip to @ohgm, where I first heard this neat tip).

Have we found any problems yet?

If Google isn't getting 200s consistently in our log files, but we can access the page fine when we try, then there is clearly still some differences between Googlebot and ourselves. What might those differences be?

It will crawl more than us

It is obviously a bot, rather than a human pretending to be a bot

It will crawl at different times of day

This means that:

If our website is doing clever bot blocking, it might be able to differentiate between us and Googlebot.

Because Googlebot will put more stress on our web servers, it might behave differently. When websites have a lot of bots or visitors visiting at once, they might take certain actions to help keep the website online. They might turn on more computers to power the website (this is called scaling), they might also attempt to rate-limit users who are requesting lots of pages, or serve reduced versions of pages.

Servers run tasks periodically; for example, a listings website might run a daily task at 01:00 to clean up all it’s old listings, which might affect server performance.

Working out what’s happening with these periodic effects is going to be fiddly; you’re probably going to need to talk to a back-end developer.

Depending on your skill level, you might not know exactly where to lead the discussion. A useful structure for a discussion is often to talk about how a request passes through your technology stack and then look at the edge cases we discussed above.

What happens to the servers under heavy load?

When do important scheduled tasks happen?

Two useful pieces of information to enter this conversation with:

Depending on the regularity of the problem in the logs, it is often worth trying to re-create the problem by attempting to crawl the website with a crawler at the same speed/intensity that Google is using to see if you can find/cause the same issues. This won’t always be possible depending on the size of the site, but for some sites it will be. Being able to consistently re-create a problem is the best way to get it solved.

If you can’t, however, then try to provide the exact periods of time where Googlebot was seeing the problems. This will give the developer the best chance of tying the issue to other logs to let them debug what was happening.

If Google can crawl the page consistently, then we move onto our next step.

5. Does Google see what I can see on a one-off basis?

We know Google is crawling the page correctly. The next step is to try and work out what Google is seeing on the page. If you’ve got a JavaScript-heavy website you’ve probably banged your head against this problem before, but even if you don’t this can still sometimes be an issue.

We follow the same pattern as before. First, we try to re-create it once. The following tools will let us do that:

Fetch & Render

Shows: Rendered DOM in an image, but only returns the page source HTML for you to read.

Mobile-friendly test

Shows: Rendered DOM and returns rendered DOM for you to read.

Not only does this show you rendered DOM, but it will also track any console errors.

Is there a difference between Fetch & Render, the mobile-friendly testing tool, and Googlebot? Not really, with the exception of timeouts (which is why we have our later steps!). Here’s the full analysis of the difference between them, if you’re interested.

Once we have the output from these, we compare them to what we ordinarily see in our browser. I’d recommend using a tool like Diff Checker to compare the two.

Have we found any problems yet?

If we encounter meaningful differences at this point, then in my experience it’s typically either from JavaScript or cookies

Why?

Googlebot crawls with cookies cleared between page requests

Googlebot renders with Chrome 41, which doesn’t support all modern JavaScript.

We can isolate each of these by:

Loading the page with no cookies. This can be done simply by loading the page with a fresh incognito session and comparing the rendered DOM here against the rendered DOM in our ordinary browser.

Use the mobile testing tool to see the page with Chrome 41 and compare against the rendered DOM we normally see with Inspect Element.

Yet again we can compare them using something like Diff Checker, which will allow us to spot any differences. You might want to use an HTML formatter to help line them up better.

We can also see the JavaScript errors thrown using the Mobile-Friendly Testing Tool, which may prove particularly useful if you’re confident in your JavaScript.

If, using this knowledge and these tools, we can recreate the bug, then we have something that can be replicated and it’s easier for us to hand off to a developer as a bug that will get fixed.

If we’re seeing everything is correct here, we move on to the next step.

6. What is Google actually seeing?

It’s possible that what Google is seeing is different from what we recreate using the tools in the previous step. Why? A couple main reasons:

Overloaded servers can have all sorts of strange behaviors. For example, they might be returning 200 codes, but perhaps with a default page.

JavaScript is rendered separately from pages being crawled and Googlebot may spend less time rendering JavaScript than a testing tool.

There is often a lot of caching in the creation of web pages and this can cause issues.

We’ve gotten this far without talking about time! Pages don’t get crawled instantly, and crawled pages don’t get indexed instantly.

Quick sidebar: What is caching?

Caching is often a problem if you get to this stage. Unlike JS, it’s not talked about as much in our community, so it’s worth some more explanation in case you’re not familiar. Caching is storing something so it’s available more quickly next time.

When you request a webpage, a lot of calculations happen to generate that page. If you then refreshed the page when it was done, it would be incredibly wasteful to just re-run all those same calculations. Instead, servers will often save the output and serve you the output without re-running them. Saving the output is called caching.

Why do we need to know this? Well, we’re already well out into the weeds at this point and so it’s possible that a cache is misconfigured and the wrong information is being returned to users.

There aren’t many good beginner resources on caching which go into more depth. However, I found this article on caching basics to be one of the more friendly ones. It covers some of the basic types of caching quite well.

How can we see what Google is actually working with?

Google’s cache

Shows: Source code

While this won’t show you the rendered DOM, it is showing you the raw HTML Googlebot actually saw when visiting the page. You’ll need to check this with JS disabled; otherwise, on opening it, your browser will run all the JS on the cached version.

Site searches for specific content

Shows: A tiny snippet of rendered content.

By searching for a specific phrase on a page, e.g. inurl:example.com/url “only JS rendered text”, you can see if Google has manage to index a specific snippet of content. Of course, it only works for visible text and misses a lot of the content, but it's better than nothing!

Better yet, do the same thing with a rank tracker, to see if it changes over time.

Storing the actual rendered DOM

Shows: Rendered DOM

Alex from DeepCrawl has written about saving the rendered DOM from Googlebot. The TL;DR version: Google will render JS and post to endpoints, so we can get it to submit the JS-rendered version of a page that it sees. We can then save that, examine it, and see what went wrong.

Have we found any problems yet?

Again, once we’ve found the problem, it’s time to go and talk to a developer. The advice for this conversation is identical to the last one — everything I said there still applies.

The other knowledge you should go into this conversation armed with: how Google works and where it can struggle. While your developer will know the technical ins and outs of your website and how it’s built, they might not know much about how Google works. Together, this can help you reach the answer more quickly.

The obvious source for this are resources or presentations given by Google themselves. Of the various resources that have come out, I’ve found these two to be some of the more useful ones for giving insight into first principles:

This excellent talk, How does Google work - Paul Haahr, is a must-listen.

At their recent IO conference, John Mueller & Tom Greenway gave a useful presentation on how Google renders JavaScript.

But there is often a difference between statements Google will make and what the SEO community sees in practice. All the SEO experiments people tirelessly perform in our industry can also help shed some insight. There are far too many list here, but here are two good examples:

Google does respect JS canonicals - For example, Eoghan Henn does some nice digging here, which shows Google respecting JS canonicals.

How does Google index different JS frameworks? - Another great example of a widely read experiment by Bartosz Góralewicz last year to investigate how Google treated different frameworks.

7. Could Google be aggregating your website across others?

If we’ve reached this point, we’re pretty happy that our website is running smoothly. But not all problems can be solved just on your website; sometimes you’ve got to look to the wider landscape and the SERPs around it.

Most commonly, what I’m looking for here is:

Similar/duplicate content to the pages that have the problem.

This could be intentional duplicate content (e.g. syndicating content) or unintentional (competitors' scraping or accidentally indexed sites).

Either way, they’re nearly always found by doing exact searches in Google. I.e. taking a relatively specific piece of content from your page and searching for it in quotes.

Have you found any problems yet?

If you find a number of other exact copies, then it’s possible they might be causing issues.

The best description I’ve come up with for “have you found a problem here?” is: do you think Google is aggregating together similar pages and only showing one? And if it is, is it picking the wrong page?

This doesn’t just have to be on traditional Google search. You might find a version of it on Google Jobs, Google News, etc.

To give an example, if you are a reseller, you might find content isn’t ranking because there's another, more authoritative reseller who consistently posts the same listings first.

Sometimes you’ll see this consistently and straightaway, while other times the aggregation might be changing over time. In that case, you’ll need a rank tracker for whatever Google property you’re working on to see it.

Jon Earnshaw from Pi Datametrics gave an excellent talk on the latter (around suspicious SERP flux) which is well worth watching.

Once you’ve found the problem, you’ll probably need to experiment to find out how to get around it, but the easiest factors to play with are usually:

De-duplication of content

Speed of discovery (you can often improve by putting up a 24-hour RSS feed of all the new content that appears)

Lowering syndication

8. A roundup of some other likely suspects

If you’ve gotten this far, then we’re sure that:

Google can consistently crawl our pages as intended.

We’re sending Google consistent signals about the status of our page.

Google is consistently rendering our pages as we expect.

Google is picking the correct page out of any duplicates that might exist on the web.

And your problem still isn’t solved?

And it is important?

Well, shoot.

Feel free to hire us…?

As much as I’d love for this article to list every SEO problem ever, that’s not really practical, so to finish off this article let’s go through two more common gotchas and principles that didn’t really fit in elsewhere before the answers to those four problems we listed at the beginning.

Invalid/poorly constructed HTML

You and Googlebot might be seeing the same HTML, but it might be invalid or wrong. Googlebot (and any crawler, for that matter) has to provide workarounds when the HTML specification isn't followed, and those can sometimes cause strange behavior.

The easiest way to spot it is either by eye-balling the rendered DOM tools or using an HTML validator.

The W3C validator is very useful, but will throw up a lot of errors/warnings you won’t care about. The closest I can give to a one-line of summary of which ones are useful is to:

Look for errors

Ignore anything to do with attributes (won’t always apply, but is often true).

The classic example of this is breaking the head.

An iframe isn't allowed in the head code, so Chrome will end the head and start the body. Unfortunately, it takes the title and canonical with it, because they fall after it — so Google can't read them. The head code should have ended in a different place.

Oliver Mason wrote a good post that explains an even more subtle version of this in breaking the head quietly.

When in doubt, diff

Never underestimate the power of trying to compare two things line by line with a diff from something like Diff Checker. It won’t apply to everything, but when it does it’s powerful.

For example, if Google has suddenly stopped showing your featured markup, try to diff your page against a historical version either in your QA environment or from the Wayback Machine.

Answers to our original 4 questions

Time to answer those questions. These are all problems we’ve had clients bring to us at Distilled.

1. Why wasn’t Google showing 5-star markup on product pages?

Google was seeing both the server-rendered markup and the client-side-rendered markup; however, the server-rendered side was taking precedence.

Removing the server-rendered markup meant the 5-star markup began appearing.

2. Why wouldn’t Bing display 5-star markup on review pages, when Google would?

The problem came from the references to schema.org.

<div itemscope="" itemtype="https://schema.org/Movie"> </div> <p> <h1 itemprop="name">Avatar</h1> </p> <p> <span>Director: <span itemprop="director">James Cameron</span> (born August 16, 1954)</span> </p> <p> <span itemprop="genre">Science fiction</span> </p> <p> <a href="../movies/avatar-theatrical-trailer.html" itemprop="trailer">Trailer</a> </p> <p></div> </p>

We diffed our markup against our competitors and the only difference was we’d referenced the HTTPS version of schema.org in our itemtype, which caused Bing to not support it.

C’mon, Bing.

3. Why were pages getting indexed with a no-index tag?

The answer for this was in this post. This was a case of breaking the head.

The developers had installed some ad-tech in the head and inserted an non-standard tag, i.e. not:

<title>

<style>

<base>

<link>

<meta>

<script>

<noscript>

This caused the head to end prematurely and the no-index tag was left in the body where it wasn’t read.

4. Why did any page on a website return a 302 about 20–50% of the time, but only for crawlers?

This took some time to figure out. The client had an old legacy website that has two servers, one for the blog and one for the rest of the site. This issue started occurring shortly after a migration of the blog from a subdomain (blog.client.com) to a subdirectory (client.com/blog/…).

At surface level everything was fine; if a user requested any individual page, it all looked good. A crawl of all the blog URLs to check they’d redirected was fine.

But we noticed a sharp increase of errors being flagged in Search Console, and during a routine site-wide crawl, many pages that were fine when checked manually were causing redirect loops.

We checked using Fetch and Render, but once again, the pages were fine. Eventually, it turned out that when a non-blog page was requested very quickly after a blog page (which, realistically, only a crawler is fast enough to achieve), the request for the non-blog page would be sent to the blog server.

These would then be caught by a long-forgotten redirect rule, which 302-redirected deleted blog posts (or other duff URLs) to the root. This, in turn, was caught by a blanket HTTP to HTTPS 301 redirect rule, which would be requested from the blog server again, perpetuating the loop.

For example, requesting https://www.client.com/blog/ followed quickly enough by https://www.client.com/category/ would result in:

302 to http://www.client.com - This was the rule that redirected deleted blog posts to the root

301 to https://www.client.com - This was the blanket HTTPS redirect

302 to http://www.client.com - The blog server doesn’t know about the HTTPS non-blog homepage and it redirects back to the HTTP version. Rinse and repeat.

This caused the periodic 302 errors and it meant we could work with their devs to fix the problem.

What are the best brainteasers you've had?

Let’s hear them, people. What problems have you run into? Let us know in the comments.

Also credit to @RobinLord8, @TomAnthonySEO, @THCapper, @samnemzer, and @sergeystefoglo_ for help with this piece.

Sign up for The Moz Top 10, a semimonthly mailer updating you on the top ten hottest pieces of SEO news, tips, and rad links uncovered by the Moz team. Think of it as your exclusive digest of stuff you don't have time to hunt down but want to read!

from The Moz Blog https://ift.tt/2lfAXtQ via IFTTT

2 notes

·

View notes

Text

Ne+Ti vs Ni: An ENTx endless rambling

First things first: thank you for once again answering my ask, I appreciate it and again, it did help. I’m sending this message knowing that it’ll be probably ignored or worse, blacklist me but I’ve reached the limit to where I can go without direct and specific input, so here goes nothing:

(Mod note: italics indicate Ti, bold indicates Ne)

I’ve been trying to nail down my type for quite some time now yet I can’t settle down for any typing because

1) I invariably start questioning its validity since contradictions are bound to appear and even if I can justify or rationalize them (which I have a pretty good ability to do, sadly, since it drives me insane), they bother me to no end hence I start looking or a “perfect” diagnosis again

2) I can see significant traits of myself in several of very different types, it’s maddening

3) When I spot a connection between myself and a type being described I immediately (can’t control it really) start rationalizing why it could be true, why it would explain this and that, why I couldn’t see it before, for what I was mistaking said trait/behavior for, memories of time since which I display said traits/behaviors flood my mind as well as multiple examples of people of said type I always felt a connection to or was intrigued by…all seemly at the same time or in such rapid succession I have a really hard time calming down my mind in order to try and make sense of all of what’s going on inside of it.It’s like I can find compelling (as seen by me) evidence to me being a lot of types, and I’m always 100% serious about it at the time…except my “sureness” never lasts for more than two days at a time, mostly.

It frustrates and embarrasses me because I’m hardly unsure about figuring out (and typing) other people, and I’m seldom wrong at that, but I can’t pin myself down and it makes me feel incompetent and unfit. Everyone always says I’m good analyzer and jokingly refer to me as a blunt psychologist, yet my MBTI confusion makes me feel like a fraud and I HATE it.

I highly suspect I might be mixing the 8’s need to control (I’m a 873 with 8 being the core type), which comes across as “J-ness” for Ni fixed path/truth thing (besides ENTJ lately I never get high Te or Ni types in tests, it’s always high Ti ones) and lately I’ve been daily noticing my Si “trips” so to speak and pondering over my supposedly hilarious “gastronomic memory” (I somehow can recall and describe days and situations based on what I ate that day if it was particularly delicious. I know it sounds ridiculous and I have no idea how that works, but it’s true).

I’m also pretty certain I value Fe over Fi, though ethics in general definitely take a backseat to logic most of the times, it’s noticeable enough for people to comment on it.

Two minor things I relate to Ne that I display in spades and everyone seem to find amusing is that I can never see a thing separately for a noticeable time before I see it integrated to a grid of things like it or other contexts in which the same principle or happening applies to or will influence it. Words and images almost always bring other words and images to mind and I go crazy if I can’t recall what it reminds me of specifically. This seldom happens though, usually I can reference several things/people the original object is alike to, though it seems that to a lot of people these similarities can’t be observable or comprehensible at all, but it makes perfect sense to me and I can explain how.

The other thing is that I have way too many interests for my own good and I tend to obsess over them until they saturate me, I’m totally a slave to what my mind finds interesting in detriment to my actual obligations. I also always have at least 20 tabs open on my browser, because somehow I can’t seem to read an article or watch a video without having to Google something referenced on it, which starts the rabbit hole that has no end and makes me forget what I was reading/watching/researching in the first place. Also my mom is an ENFP and so I thought I couldn’t be a Ne user because we are both alike and so different at the same time, but I now truly realize that a function may manifest differently depending on what is it paired with, and her Fi is really strong, which I can’t relate to at all. I won’t even go into Ti vs Te because by now this is already ridiculous long and I doubt anyone would even finish all this.

How can Ne+Ti mimic (or more precisely, appear to be) Ni? If possible please include concrete examples, whether fictional or real.

In that vein, could an ENTP 8 be reasonably mistaken by an ENTJ?

If you survived all this rambling and take your time to answered this somewhere in the future…you’re a hero, truly.

Not only am I a hero, my ENTP friend is a hero, since we both read it. ;)

Do you need me to say it?

YOU ARE AN ENTP.

Stop doubting it. Chill with it. Dig it. Tell your NeTi to stop considering other types. That it continues doing that should prove your own Ne-ness to you.

Everything you describe is heavily Ne, with an emphasis on Ti, so I’ll just pull a few comments out and talk about them.

Also my mom is an ENFP and so I thought I couldn’t be a Ne user because we are both alike and so different at the same time, but I now truly realize that a function may manifest differently depending on what is it paired with, and her Fi is really strong, which I can’t relate to at all.

The bold is the pure truth, my friend. ENFPs and ENTPs might look like each other on a superficial level but they are not the same thing at all. As ENTP puts it, “You have moralizing tendencies and I deconstruct all your morals.”

It’s true. My morals scream loud and clear. In fact, I can look back at my teen years and see just how black and white my moral thinking was; everything was right or wrong, good or bad. That is a WHOLE OTHER ball of wax from NeTi and their attitude of “People should be able to believe what they want, even if it’s wrong.” (This was an actual conversation I had this morning. =P)

Ne is inclined to change its opinions and perspectives with very little warning, which makes the “inconsistencies” of Ne-doms somewhat obvious (when trying to determine ENXP from ENTJ), but there are many mistypes between them floating around the internet. For example: those who insist Obama is an ENTP instead of an ENFJ, when he was there for one thing – health care – or who believe Stephen Hawking is an INTJ instead of an ENTP despite the fact that he routinely challenges and deconstructs his own theories. ;)

ENTJs have a no-nonsense approach, disinterested in deconstruction. It’s just facts and business with them, in the sense that Te wants an object to do its job, and needs no complete understanding of that object to move forward.

Since ENTPs have Ne/Fe loops, they are zany, often aimed at provoking humor in the audience, have a general sense of amiable goodwill, and are able to handle anything you throw at them without a moralizing tendency (unlike the ENFPs). Good examples of this are Billy Crystal (ENTP not INFJ), Jeff Goldblum (ENTP) and Robin Williams (ENTP, not ENFP – he’s got TONS of Fe), who described his inner chaotic world as similar to what you said above.

Yes, Enneagram makes a difference. 8′s are aggressive and that might make you come across as more ‘challenging’ of others than is typical for a Ne-dom.

- ENFP Mod

Here’s what my ENTP friend has to say:

I’m not one of the professional mods, but I AM an ENTP. And as one ENTP to another, I’m here to assure you that ENXPs’ minds move at a frenetic pace, bouncing around from idea to idea, from THOUGHT TRAIN A to THOUGHT TRAIN Z without any obvious link between them, contributing to restlessness, anxiousness. High Ne just can’t ignore the various combinations between your past and present behavior and all the different MBTI types. It constantly scans for new possibilities, new patterns and associations.

Ne is not intensive and convergent like Ni. When it reaches a sense of conviction and closure, it’s because the aux function has guided it to that direction. Ti identifies all exceptions or imagining scenarios in which a proposed explanation might falter. Our Ti reduces everything to a system, a large logical ensemble of arguments and counter-arguments, into an interconnecting network of principles and rational procedures that is disconnected fromreality and with the assistance of dom Ne, it sees the bigger picture and builds many different perspectives.

Now I am going to paraphrase the words of the Doctor. “Through crimson stars and silent stars and tumbling nebulas like oceans set on fire, through empires of glass and civilizations of pure thought, and a whole, terrible, wonderful universe of impossibilities, I welcome you to the ENTP club!”

- ENTP

112 notes

·

View notes

Text

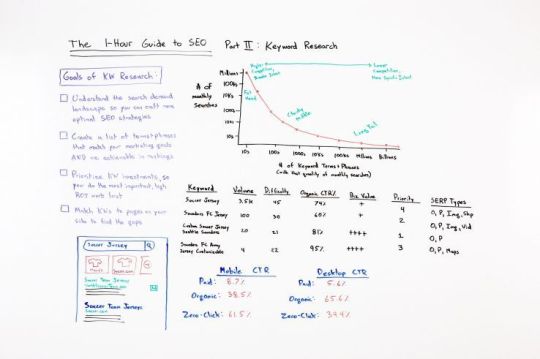

The One-Hour Guide to SEO, Part 2: Keyword Research – Whiteboard Friday

Posted by randfish

Before doing any SEO work, it’s important to get a handle on your keyword research. Aside from helping to inform your strategy and structure your content, you’ll get to know the needs of your searchers, the search demand landscape of the SERPs, and what kind of competition you’re up against.

In the second part of the One-Hour Guide to SEO, the inimitable Rand Fishkin covers what you need to know about the keyword research process, from understanding its goals to building your own keyword universe map. Enjoy!

https://fast.wistia.net/embed/iframe/dbnputwdd5?seo=false&videoFoam=true

https://fast.wistia.net/assets/external/E-v1.js

Click on the whiteboard image above to open a high resolution version in a new tab!

Video Transcription

Howdy, Moz fans. Welcome to another portion of our special edition of Whiteboard Friday, the One-Hour Guide to SEO. This is Part II – Keyword Research. Hopefully you’ve already seen our SEO strategy session from last week. What we want to do in keyword research is talk about why keyword research is required. Why do I have to do this task prior to doing any SEO work?

The answer is fairly simple. If you don’t know which words and phrases people type into Google or YouTube or Amazon or Bing, whatever search engine you’re optimizing for, you’re not going to be able to know how to structure your content. You won’t be able to get into the searcher’s brain, into their head to imagine and empathize with them what they actually want from your content. You probably won’t do correct targeting, which will mean your competitors, who are doing keyword research, are choosing wise search phrases, wise words and terms and phrases that searchers are actually looking for, and you might be unfortunately optimizing for words and phrases that no one is actually looking for or not as many people are looking for or that are much more difficult than what you can actually rank for.

The goals of keyword research

So let’s talk about some of the big-picture goals of keyword research.

Understand the search demand landscape so you can craft more optimal SEO strategies

First off, we are trying to understand the search demand landscape so we can craft better SEO strategies. Let me just paint a picture for you.

I was helping a startup here in Seattle, Washington, a number of years ago — this was probably a couple of years ago — called Crowd Cow. Crowd Cow is an awesome company. They basically will deliver beef from small ranchers and small farms straight to your doorstep. I personally am a big fan of steak, and I don’t really love the quality of the stuff that I can get from the store. I don’t love the mass-produced sort of industry around beef. I think there are a lot of Americans who feel that way. So working with small ranchers directly, where they’re sending it straight from their farms, is kind of an awesome thing.

But when we looked at the SEO picture for Crowd Cow, for this company, what we saw was that there was more search demand for competitors of theirs, people like Omaha Steaks, which you might have heard of. There was more search demand for them than there was for “buy steak online,” “buy beef online,” and “buy rib eye online.” Even things like just “shop for steak” or “steak online,” these broad keyword phrases, the branded terms of their competition had more search demand than all of the specific keywords, the unbranded generic keywords put together.

That is a very different picture from a world like “soccer jerseys,” where I spent a little bit of keyword research time today looking, and basically the brand names in that field do not have nearly as much search volume as the generic terms for soccer jerseys and custom soccer jerseys and football clubs’ particular jerseys. Those generic terms have much more volume, which is a totally different kind of SEO that you’re doing. One is very, “Oh, we need to build our brand. We need to go out into this marketplace and create demand.” The other one is, “Hey, we need to serve existing demand already.”

So you’ve got to understand your search demand landscape so that you can present to your executive team and your marketing team or your client or whoever it is, hey, this is what the search demand landscape looks like, and here’s what we can actually do for you. Here’s how much demand there is. Here’s what we can serve today versus we need to grow our brand.

Create a list of terms and phrases that match your marketing goals and are achievable in rankings

The next goal of keyword research, we want to create a list of terms and phrases that we can then use to match our marketing goals and achieve rankings. We want to make sure that the rankings that we promise, the keywords that we say we’re going to try and rank for actually have real demand and we can actually optimize for them and potentially rank for them. Or in the case where that’s not true, they’re too difficult or they’re too hard to rank for. Or organic results don’t really show up in those types of searches, and we should go after paid or maps or images or videos or some other type of search result.

Prioritize keyword investments so you do the most important, high-ROI work first

We also want to prioritize those keyword investments so we’re doing the most important work, the highest ROI work in our SEO universe first. There’s no point spending hours and months going after a bunch of keywords that if we had just chosen these other ones, we could have achieved much better results in a shorter period of time.

Match keywords to pages on your site to find the gaps

Finally, we want to take all the keywords that matter to us and match them to the pages on our site. If we don’t have matches, we need to create that content. If we do have matches but they are suboptimal, not doing a great job of answering that searcher’s query, well, we need to do that work as well. If we have a page that matches but we haven’t done our keyword optimization, which we’ll talk a little bit more about in a future video, we’ve got to do that too.

Understand the different varieties of search results

So an important part of understanding how search engines work — we’re going to start down here and then we’ll come back up — is to have this understanding that when you perform a query on a mobile device or a desktop device, Google shows you a vast variety of results. Ten or fifteen years ago this was not the case. We searched 15 years ago for “soccer jerseys,” what did we get? Ten blue links. I think, unfortunately, in the minds of many search marketers and many people who are unfamiliar with SEO, they still think of it that way. How do I rank number one? The answer is, well, there are a lot of things “number one” can mean today, and we need to be careful about what we’re optimizing for.

So if I search for “soccer jersey,” I get these shopping results from Macy’s and soccer.com and all these other places. Google sort has this sliding box of sponsored shopping results. Then they’ve got advertisements below that, notated with this tiny green ad box. Then below that, there are couple of organic results, what we would call classic SEO, 10 blue links-style organic results. There are two of those. Then there’s a box of maps results that show me local soccer stores in my region, which is a totally different kind of optimization, local SEO. So you need to make sure that you understand and that you can convey that understanding to everyone on your team that these different kinds of results mean different types of SEO.

Now I’ve done some work recently over the last few years with a company called Jumpshot. They collect clickstream data from millions of browsers around the world and millions of browsers here in the United States. So they are able to provide some broad overview numbers collectively across the billions of searches that are performed on Google every day in the United States.

Click-through rates differ between mobile and desktop

The click-through rates look something like this. For mobile devices, on average, paid results get 8.7% of all clicks, organic results get about 40%, a little under 40% of all clicks, and zero-click searches, where a searcher performs a query but doesn’t click anything, Google essentially either answers the results in there or the searcher is so unhappy with the potential results that they don’t bother taking anything, that is 62%. So the vast majority of searches on mobile are no-click searches.

On desktop, it’s a very different story. It’s sort of inverted. So paid is 5.6%. I think people are a little savvier about which result they should be clicking on desktop. Organic is 65%, so much, much higher than mobile. Zero-click searches is 34%, so considerably lower.

There are a lot more clicks happening on a desktop device. That being said, right now we think it’s around 60–40, meaning 60% of queries on Google, at least, happen on mobile and 40% happen on desktop, somewhere in those ranges. It might be a little higher or a little lower.

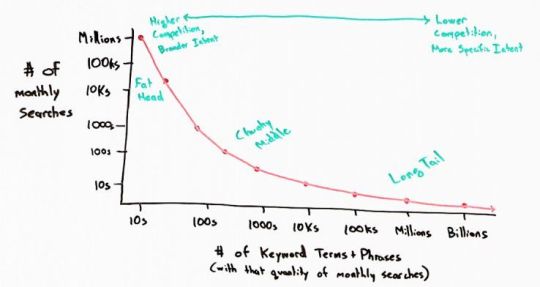

The search demand curve

Another important and critical thing to understand about the keyword research universe and how we do keyword research is that there’s a sort of search demand curve. So for any given universe of keywords, there is essentially a small number, maybe a few to a few dozen keywords that have millions or hundreds of thousands of searches every month. Something like “soccer” or “Seattle Sounders,” those have tens or hundreds of thousands, even millions of searches every month in the United States.

But people searching for “Sounders FC away jersey customizable,” there are very, very few searches per month, but there are millions, even billions of keywords like this.

The long-tail: millions of keyword terms and phrases, low number of monthly searches

When Sundar Pichai, Google’s current CEO, was testifying before Congress just a few months ago, he told Congress that around 20% of all searches that Google receives each day they have never seen before. No one has ever performed them in the history of the search engines. I think maybe that number is closer to 18%. But that is just a remarkable sum, and it tells you about what we call the long tail of search demand, essentially tons and tons of keywords, millions or billions of keywords that are only searched for 1 time per month, 5 times per month, 10 times per month.

The chunky middle: thousands or tens of thousands of keywords with ~50–100 searches per month

If you want to get into this next layer, what we call the chunky middle in the SEO world, this is where there are thousands or tens of thousands of keywords potentially in your universe, but they only have between say 50 and a few hundred searches per month.

The fat head: a very few keywords with hundreds of thousands or millions of searches

Then this fat head has only a few keywords. There’s only one keyword like “soccer” or “soccer jersey,” which is actually probably more like the chunky middle, but it has hundreds of thousands or millions of searches. The fat head is higher competition and broader intent.

Searcher intent and keyword competition

What do I mean by broader intent? That means when someone performs a search for “soccer,” you don’t know what they’re looking for. The likelihood that they want a customizable soccer jersey right that moment is very, very small. They’re probably looking for something much broader, and it’s hard to know exactly their intent.

However, as you drift down into the chunky middle and into the long tail, where there are more keywords but fewer searches for each keyword, your competition gets much lower. There are fewer people trying to compete and rank for those, because they don’t know to optimize for them, and there’s more specific intent. “Customizable Sounders FC away jersey” is very clear. I know exactly what I want. I want to order a customizable jersey from the Seattle Sounders away, the particular colors that the away jersey has, and I want to be able to put my logo on there or my name on the back of it, what have you. So super specific intent.

Build a map of your own keyword universe

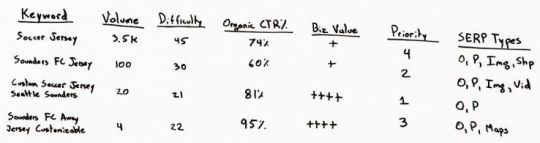

As a result, you need to figure out what the map of your universe looks like so that you can present that, and you need to be able to build a list that looks something like this. You should at the end of the keyword research process — we featured a screenshot from Moz’s Keyword Explorer, which is a tool that I really like to use and I find super helpful whenever I’m helping companies, even now that I have left Moz and been gone for a year, I still sort of use Keyword Explorer because the volume data is so good and it puts all the stuff together. However, there are two or three other tools that a lot of people like, one from Ahrefs, which I think also has the name Keyword Explorer, and one from SEMrush, which I like although some of the volume numbers, at least in the United States, are not as good as what I might hope for. There are a number of other tools that you could check out as well. A lot of people like Google Trends, which is totally free and interesting for some of that broad volume data.

So I might have terms like “soccer jersey,” “Sounders FC jersey”, and “custom soccer jersey Seattle Sounders.” Then I’ll have these columns:

Volume, because I want to know how many people search for it;

Difficulty, how hard will it be to rank. If it’s super difficult to rank and I have a brand-new website and I don’t have a lot of authority, well, maybe I should target some of these other ones first that are lower difficulty.

Organic Click-through Rate, just like we talked about back here, there are different levels of click-through rate, and the tools, at least Moz’s Keyword Explorer tool uses Jumpshot data on a per keyword basis to estimate what percent of people are going to click the organic results. Should you optimize for it? Well, if the click-through rate is only 60%, pretend that instead of 100 searches, this only has 60 or 60 available searches for your organic clicks. Ninety-five percent, though, great, awesome. All four of those monthly searches are available to you.

Business Value, how useful is this to your business?

Then set some type of priority to determine. So I might look at this list and say, “Hey, for my new soccer jersey website, this is the most important keyword. I want to go after “custom soccer jersey” for each team in the U.S., and then I’ll go after team jersey, and then I’ll go after “customizable away jerseys.” Then maybe I’ll go after “soccer jerseys,” because it’s just so competitive and so difficult to rank for. There’s a lot of volume, but the search intent is not as great. The business value to me is not as good, all those kinds of things.

Last, but not least, I want to know the types of searches that appear — organic, paid. Do images show up? Does shopping show up? Does video show up? Do maps results show up? If those other types of search results, like we talked about here, show up in there, I can do SEO to appear in those places too. That could yield, in certain keyword universes, a strategy that is very image centric or very video centric, which means I’ve got to do a lot of work on YouTube, or very map centric, which means I’ve got to do a lot of local SEO, or other kinds like this.

Once you build a keyword research list like this, you can begin the prioritization process and the true work of creating pages, mapping the pages you already have to the keywords that you’ve got, and optimizing in order to rank. We’ll talk about that in Part III next week. Take care.

Video transcription by Speechpad.com

Sign up for The Moz Top 10, a semimonthly mailer updating you on the top ten hottest pieces of SEO news, tips, and rad links uncovered by the Moz team. Think of it as your exclusive digest of stuff you don’t have time to hunt down but want to read!

from https://dentistry01.wordpress.com/2019/03/22/the-one-hour-guide-to-seo-part-2-keyword-research-whiteboard-friday/

0 notes

Link