Conversation

Cassie when Frank first offered for her to go undercover: Wait a minute! This is a very big decision. It might affect the course of my entire life. I shall have to think about it.

Cassie: *pauses for 1 second*

Cassie: I’ll do it.

#okay i am going to complain abt something#i have been trying to remove files from a fifteen year old computer for like three days#specifically ancient sims and minecraft files#and the way nothing fucking works#with the sims stuff i know how to remove files so it should be whatever like i did it when my laptop broke with no issues#so i opened the files and like ??? half of them are gone??? because apparently my mother was just deleting things#so i've given up on those because i don't know what's gone and i'm afraid of breaking my game by putting fucked up files on my computer#then there's the stupid fucking minecraft ones oh my god#so like. i can't log into it on the computer because apparently at some point in the last like decade they migrated their servers? so i have to#go through my phone because internet browsers don't function on the computer and i have to migrate it over that way#except it's my sister's account. not mine#and i don't know what the fucking password is#except naturally she doesn't either so i have to change the password. that finally happens and i go to log in#EXCEPT!!!! you have to answer the fucking security questions#so i have to try to get into the headspace of my sister when she was like 11 and try to work out what her favourite movie and author are#naturally she refuses to help with this and acts like it's ridiculous that i would even ask#eventually i just fucking give up and after like ten minutes of the website not functioning i managed to change the security questions to#stuff that will not change lmao#so i finally manage to log in and i try to migrate the server or whatever using my microsoft account. it tells me that my microsoft account#does not exist. i literally have it open in another tab#but whatever i'll just make a new one ig so i did that with my gmail and FINALLY everything got moved over#which, fucking fantastic! i can log into it on the computer now!!!#so like i do that and i dont know how this game works so i had looked up#how you remove the game files and it seemed really easy except none of the stuff that the internet says i should be looking at was there#but i was sorta confused about whether i was supposed to be in the launch window or like actually open the game so i decided to open the#game and see if i could find it there#except it loaded forever and ever and then i finally had to force quit it because it froze#and now nothing on the entire computer will open#like literally nothing#i dont know what the fuck to do#okay that's all sorry LMAO

6 notes


Note

How has the purge affected u?

[Apologies in advance for the Wall of Text™, I feel like longposting, sorry for the dash coverage, I didn’t think I had this much to say about this… And I probably shouldn’t do this, probably should have kept this to a flippant “It sucks!” with a VC meme, but I haven’t shared much publicly lately… now feels like a singularly poignant time to do so.]

NO CUTS WE LONGPOST LIKE MEN

It’s strange. I think running and participating in the @vcsecretgifts exchange (not finished yet!), and backing up that blog and this one for preservation (not finished yet!), helped take my mind off it! I’ve been busy with @wicked-felina coordinating substitute Santas, so I haven’t had much chance to indulge in it like a participant yet, but I did see that my recipient liked my gift, and that was heartwarming! I’ll reply properly when I have the peace of mind for it (yes I could be doing it now but this is the gear I want to be on right now), and I haven’t had a chance to read the gift from my own Santa, I’m saving that as a treat!

I did the #Log/ffProt/st, that helped. The purge is/was creatively stifling, somewhat, too, bc even though I don’t produce NS/FW stuff myself (I WANT TO, THO), I do reblog it, and support it, I see other artists and writers affected by it, and I felt and still feel helpless, unable to protect them. One of our VC fandom members who draws slash art has been shadowbanned, that I know of. It’s frustrating that the morality & purity police seem to have won this battle, but they haven’t won the war. We’ll take our garbage underground if we have to.

How crushing to wake up to one’s blog(s) just canceled w/o explanation? We were given 2 weeks’ notice? To pack up our “nasty” stuff and leave?


There’s nothing wrong with NS/FW stuff. Adult ppl should be able to talk about it, fantasize about it, make art and write fiction about it, have kinks and explore them. I never bought the “if you like it in fiction you support it in reality!” argument, any more than I buy it for all the other dangerous things we like in fiction but wouldn’t want in reality.

“… Fiction is how we both study and de-fang our monsters. To lock violent fiction away, or to close our eyes to it, is to give our monsters and our fears undeserved power and richer hunting grounds.” - Warren Ellis [X]

But I’ve fought those battles and there’s no point in engaging in unwinnable debate with ppl who are committed to misunderstanding me and twisting my words into a strawman they can easily knock over.

It’s baffling that it’s an unpopular opinion that minors should be allowed to learn about sex, just as they learn how to (eventually) drive a car, manage alcohol consumption, defend themselves against violence, and handle medication or recreational drugs. These are all things that are potentially, not inherently, dangerous to them, and that they’ll face in the Real World. I remember religious rituals in my youth where children could taste a little alcohol; it was exposure to an adult thing in a safe space, among other adults. Is this really all about Protecting the Children? Really? Or is it about mental domination? What it looks like to me is a self-proclaimed Particular Authority that wants to keep minors (and adults) submissive and reliant on that Particular Authority, because if you keep them submissive and reliant as minors, it’s so much easier to keep them that way as adults. It’s always been about power.

And I’m seeing that the communities most affected by the purge are AFAB ppl and LGBTQIA+. It’s misogynistic, LGBTQIA+-phobic. The fact that tungle reportedly blocked archivists from saving blogs before the NS/FW purge is just pouring salt in the wound.

I’ve started following these refugee/evicted tumblr ppl where they’ve migrated to. I’m trying to keep track of them. I’m in the @fiction-is-not-reality2 discord server, keeping my eye out for the next alternative platform.

Leading up to the purge I considered blasting a bunch of smut as a last hurrah, and I did reblog some Controversial™ stuff, just in case my blog was going to be deleted, but then, I lost steam on that. Why put in extra effort and get deleted anyway? Why poke the bear, and deliberately get deleted for it? Most of my blog is SFW, anyway.

I preserved my blog, the gifts blog, and just for archival purposes I should have been doing that all along, so it was good for my own historical safekeeping… so much good commentary and fanworks here, in the past 5+ years! Collecting the scraps just like I’d done in 1994, when there were articles about the IWTV movie and I wanted all of them, I especially wanted the illustrations and caricatures in the magazines (which was really validating of my interest in some way, fanart that was published, essentially!). And I had my folder of Deviantart I liked, of course. So I packed up my blog here to preserve it, it’s on wordpress now, iwantmyiwtv.com, with a lame layout, but I’ve got the tags showing, where fanart that’s blocked here can still be seen on WP.

I’m rambling.

The purge reminded me that all this, as we know it, could and will be gone someday. Purges have done that before, especially to our fandom, attacked by its own canon author. We’ve survived this before.

I’ve been on tungle since July ‘13. I’ve made and lost some wonderful friends here, some have moved on to other fandoms, or we’ve had partings of the ways. The fanart in this fandom, my memes, have been spread all over, I see them on Pinterest, Facebook, Twitter. When this blog is deleted, either by content flagging or by tumblr finally keeling over, our stuff is going to outlive us all.

Who even made this one? One of the vintage memes. Maybe their watermark was long ago cropped off, or maybe they hadn’t put it on:

^It was used in a meme here, but I don’t think that was the OP, it’s gotta be more than 4 yrs old. Pretty sure the “JUDGING YOU” in Impact font was around Twilight time, which came out in 2008. This meme is still floating around, it’s still amusing to ppl all these years later. Someone’s stroke of inspiration, and we may never know who it was, but we enjoy it, it’s part of the worn fabric of the fandom.

Will ppl remember me when/if I’m gone? I don’t need to be remembered, it’s enough that I was here at one point, and encouraged ppl to make fanworks, that I helped bring ppl together. I don’t need them to know it was me, specifically, or know much about me, this blog was never meant to be about me. Those I brought together might remember how they met. There are those who have seen behind the curtain and I hope to hang onto them as long as possible.

If/When this all disappears, I want ppl to know how much I enjoyed interacting with ppl through asks, the chat feature. I’ve missed answering asks, and I’ve missed the feeling of seeing new ask alerts without having to brace myself for Discourse. I’ve missed seeing that anon icon as a friendly, but shy, human being, rather than a living person who’s in pain, somewhere else in the world, throwing bricks through my window. Someone who’s suffering bc they’re not getting the attention they need, truly, someone who deserves to be loved, someone who needs validation for their opinions on things, and wanted mine, but I couldn’t give it. I’m only human, too. I made this blog for 15 year old me, who couldn’t find enough VC fanworks, so I set out to collect, make, and encourage them, but all in the spirit of optimism, bc that’s what I got out of canon. 15 year old me drew self esteem from those books. That’s the only person I ever wanted to please with this thing and that girl is still my priority.

We’ll survive this purge, we’ve done it before. Hold onto the ppl who you’ve made connections with. I’ll be here as long as I can.

Most importantly, I’m not letting the morality & purity police tell me what I’m allowed to learn about, make fanworks about, or enjoy in published or fan fiction, etc.

#Anonymous#ask#note from the addict#long post#NO CUTS WE LONGPOST LIKE MEN#vintage meme#problematic#darkfic#dark fiction#warren ellis#quote#adventures in tumblrland#advice#on fandom

24 notes


Text

Evernote Dropbox

Why Migrate from Dropbox to OneDrive?

Nowadays, cloud storage has become the most popular storage type. Many users have more than one cloud account, with the same or different cloud providers. Common cloud services include Dropbox, OneDrive, Google Drive, Amazon Drive, MEGA and so on. Some cloud users plan to switch from Dropbox to OneDrive for the following reasons:

OneDrive provides more free cloud space (5 GB) than Dropbox (2 GB).

OneDrive offers cheaper storage plans (50 GB: $1.99/month; 1 TB: $6.99/month; 5 TB: $9.99/month) than Dropbox (1 TB: $9.99/month; 2 TB: $19.99/month).

They have purchased an Office 365 product that includes OneDrive.

They have graduated and need to move their schoolwork from a Dropbox for Business account to their personal OneDrive.

They have left their previous job and need to transfer their working documents from a public Dropbox account to their own OneDrive cloud.

The Dropbox account is running out of space while there is plenty of storage left in OneDrive.

Their friends recommend OneDrive, and after trying it out they find that OneDrive suits them better.

……

How to Migrate Dropbox to OneDrive in Common Ways?


As we all know, neither the Dropbox app nor the Microsoft OneDrive app has a feature for migrating data to the other. So the question is how to transfer files from Dropbox to OneDrive directly. In the following parts, we offer you two traditional methods to achieve your goal.

Solution 1: Download and Upload

Step 1. Sign in to your Dropbox account.
Step 2. Create a new folder, select all the files in your Dropbox account, and move them to the new folder.
Step 3. Hover over the new folder, click the three-dot icon, click the “Download” button, and wait for the process to complete.

Step 4. Log in to your OneDrive account.
Step 5. Click the “Upload” button to upload the .zip file to your OneDrive account and wait for the process to complete.

Notes:

The new folder will become a .zip file after it’s downloaded to the local PC.

If you want to upload the folder to OneDrive directly, you need to extract the .zip file first.

Solution 2: Migrate Dropbox to OneDrive with Windows Explorer

Step 1. Download the Dropbox and OneDrive apps and install them on your PC.
Step 2. Once they are installed, you will find both folders in Windows File Explorer.

Step 3. Move your Dropbox files into the OneDrive folder with the “Cut” and “Paste” commands in Windows File Explorer.
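If you would rather script Solution 2 than drag folders around, a small Node.js script can do the copying. This is a minimal sketch, assuming both desktop apps are installed and syncing, so that each cloud is mirrored in a local folder. The paths in the usage example are placeholders, not guaranteed install locations. The script copies rather than cuts, so your Dropbox originals stay where they are until you have confirmed the upload.

```javascript
// Minimal sketch: copy the local Dropbox sync folder into the local OneDrive
// sync folder. Assumes both desktop apps are installed and syncing.
const fs = require('fs');
const path = require('path');

// Recursively copy every file and subfolder from `src` into `dest`.
// Files with the same name in `dest` are overwritten.
// Returns the number of files copied.
function copyFolder(src, dest) {
  let copied = 0;
  fs.mkdirSync(dest, { recursive: true });
  for (const entry of fs.readdirSync(src, { withFileTypes: true })) {
    const from = path.join(src, entry.name);
    const to = path.join(dest, entry.name);
    if (entry.isDirectory()) {
      copied += copyFolder(from, to);
    } else if (entry.isFile()) {
      fs.copyFileSync(from, to);
      copied += 1;
    }
    // Symlinks and other special entries are skipped in this sketch.
  }
  return copied;
}
```

Usage (placeholder paths): `copyFolder('C:\\Users\\you\\Dropbox', 'C:\\Users\\you\\OneDrive\\From Dropbox')` returns the number of files copied. Once the OneDrive app has finished syncing, you can delete the originals from Dropbox to get the equivalent of “Cut”.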

As you can see, either solution above can copy Dropbox to OneDrive, but both take time because you have to perform the operations manually. With solution 1, you cannot close the page until the process is complete. With solution 2, you need to install apps on your PC to complete the operations.

Actually, there is an effective way to move data from Dropbox to OneDrive quickly without running into the problems above. Please read on.

How to Migrate Dropbox to OneDrive in an Effective Way?

If you want to move files from Dropbox to OneDrive quickly while keeping your data secure, you can try MultCloud, a free, professional cloud-to-cloud transfer service. It works without logging in to both clouds, switching from one cloud to the other, or downloading and re-uploading. Follow the steps below to migrate files from Dropbox to OneDrive quickly and safely.

Step 1. Register MultCloud - Free

MultCloud is a free, web-based cloud file transfer manager. To use it, you first have to create an account.

Step 2. Add Dropbox and OneDrive Accounts to MultCloud

After creating an account, sign in to the platform. In the main panel, click the “Add Clouds” tab at the top and select the cloud brand you want to add. Then follow the simple guidance to finish adding the cloud.

Note: You can only add one cloud account at a time, so repeat the process to add the other cloud.

Step 3. Migrate Dropbox to OneDrive with “Cloud Transfer”


Now go to the “Cloud Transfer” tab and select Dropbox as the source and OneDrive as the destination. Finally, click “Transfer Now” and wait for the process to complete.

Tips:

The “Cloud Transfer” feature accepts an entire cloud or individual folders as the source. If you only want to transfer some files from Dropbox to OneDrive, you can use the “Copy” and “Paste” feature in “Cloud Explorer”.

If you want to delete the source files after migrating Dropbox to OneDrive, just tick “Delete all source files after transfer is complete” in the Options window.

Set OneDrive as source and Dropbox as destination if you want to migrate OneDrive to Dropbox.

If you have a very large amount of data to transfer, you can upgrade to a premium account for faster transfer speeds; MultCloud then uses 10 threads to transfer your files across clouds.

More about MultCloud

With either of the ways above, you can easily migrate Dropbox to OneDrive. If you choose the MultCloud method, you can also enjoy other advanced features. In addition to Dropbox and OneDrive, MultCloud currently supports more than 30 clouds, including G Suite, Google Photos, MEGA, Amazon S3, Flickr, Box, pCloud, etc.


Besides the “Cloud Transfer” feature, MultCloud can also sync, back up, or copy data from one cloud to another with “Cloud Sync”. If you need to migrate from one G Suite account to another because your domain has changed, you can make full use of this feature.


As a browser app, MultCloud requires no downloading or installation, so it saves local disk space. It can be used on all operating systems, including Windows PC & Server, Linux, Mac, iOS, Android and Chrome OS, and on all devices, including desktops, laptops, notebooks, iPads, cellphones, etc.

0 notes

Text

Building a Real-Time Webapp with Node.js and Socket.io

In this blog post, we showcase a project we recently finished for the National Democratic Institute, an NGO that supports democratic institutions and practices worldwide. NDI’s mission is to strengthen political and civic organizations, safeguard elections and promote citizen participation, openness and accountability in government.

Our assignment was to build an MVP of an application that supports the facilitators of a cybersecurity themed interactive simulation game. As this webapp needs to be used by several people on different machines at the same time, it needed real-time synchronization which we implemented using Socket.io.

In the following article, you can learn more about how we approached the project, how we structured the data access layer and how we solved the challenges of creating our websocket server, to mention just a few. The final code of the project is open-source, and you’re free to check it out on GitHub.

A Brief Overview of the CyberSim Project

Political parties are at extreme risk from hackers and other adversaries; however, they rarely understand the range of threats they face. When they do get cybersecurity training, it’s often in the form of dull, technically complicated lectures. To help parties and campaigns better understand the challenges they face, NDI developed a cybersecurity simulation (CyberSim) about a political campaign rocked by a range of security incidents. The goal of the CyberSim is to facilitate buy-in for and implementation of better security practices by helping political campaigns assess their own readiness and experience the potential consequences of unmitigated risks.

The CyberSim is broken down into three core segments: preparation, simulation, and an after action review. During the preparation phase, participants are introduced to a fictional (but realistic) game-play environment, their roles, and the rules of the game. They are also given an opportunity to select security-related mitigations from a limited budget, providing an opportunity to "secure their systems" to the best of their knowledge and ability before the simulation begins.

The simulation itself runs for 75 minutes, during which time the participants have the ability to take actions to raise funds, boost support for their candidate and, most importantly, respond to events that occur that may negatively impact their campaign's success. These events are meant to test the readiness, awareness and skills of the participants related to information security best practices. The simulation is designed to mirror the busyness and intensity of a typical campaign environment.

The after action review is in many ways the most critical element of the CyberSim exercise. During this segment, CyberSim facilitators and participants review what happened during the simulation, which events led to which problems, and what actions the participants took (or should have taken) to prevent security incidents from occurring. These lessons are closely aligned with the best practices presented in the Cybersecurity Campaigns Playbook, making the CyberSim an ideal opportunity to reinforce existing knowledge or introduce new best practices presented there.

Since data representation serves as the skeleton of every application, Norbert, who built part of the app, will first walk you through the data layer created using knex and Node.js. Then he will move on to the program's heart, the socket server that manages real-time communication.

This is going to be a series of articles, so in the next part, we will look at the frontend, which is built with React. Finally, in the third post, Norbert will present the muscle that is the project's infrastructure. We used Amazon's tools to create the CI/CD, host the webserver, the static frontend app, and the database.

Now that we're through with the intro, you can enjoy reading this Socket.io tutorial / Case Study from Norbert:

The Project's Structure

Before diving deep into the data access layer, let's take a look at the project's structure:

```
.
├── migrations
│   └── ...
├── seeds
│   └── ...
├── src
│   ├── config.js
│   ├── logger.js
│   ├── constants
│   │   └── ...
│   ├── models
│   │   └── ...
│   ├── util
│   │   └── ...
│   ├── app.js
│   └── socketio.js
└── index.js
```

As you can see, the structure is relatively straightforward, as we’re not really deviating from a standard Node.js project structure. To better understand the application, let’s start with the data model.

The Data Access Layer

Each game starts with a preprogrammed poll percentage and an available budget. Throughout the game, threats (called injections) occur at a predefined time (e.g., in the second minute) to which players have to respond. To spice things up, the staff has several systems required to make responses and take actions. These systems often go down as a result of injections. The game's final goal is simple: the players have to maximize their party's poll by answering each threat.
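The timing rule described above, where injections fire at predefined points in the game, can be sketched as two small pure functions. This is a hypothetical illustration, not code from the project. The game fields (startedAt, paused, millisTakenBeforeStarted) mirror column names used in the project's queries. The elapsed-time formula and the injection shape `{ id, triggerAtMinute }` are assumptions.

```javascript
// Hypothetical sketch, not code from the CyberSim project.
// Assumption: millisTakenBeforeStarted holds the game time accumulated before
// the most recent (re)start, and the clock stands still while paused.
function getElapsedMillis(game, now = Date.now()) {
  if (game.paused || !game.startedAt) {
    return game.millisTakenBeforeStarted;
  }
  const startedAtMillis = new Date(game.startedAt).getTime();
  return game.millisTakenBeforeStarted + (now - startedAtMillis);
}

// Assumption: each injection looks like { id, triggerAtMinute }.
// Returns the injections whose trigger minute has been reached and that
// are not in `firedIds` yet.
function dueInjections(injections, elapsedMillis, firedIds = new Set()) {
  const elapsedMinutes = elapsedMillis / 60000;
  return injections.filter(
    (injection) =>
      injection.triggerAtMinute <= elapsedMinutes && !firedIds.has(injection.id),
  );
}
```

Take a game that was started 90 seconds ago after 30 seconds of earlier play. `getElapsedMillis` returns 120000 ms for it, so an injection with `triggerAtMinute: 2` would be due.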

We used a PostgreSQL database to store the state of each game. Tables that make up the data model can be classified into two different groups: setup and state tables. Setup tables store data that are identical and constant for each game, such as:

injections - contains each threat players face during the game, e.g., Databreach

injection responses - a one-to-many table that shows the possible reactions for each injection

action - operations that have an immediate, one-time effect, e.g., Campaign advertisement

systems - tangible and intangible IT assets, which are prerequisites of specific responses and actions, e.g., HQ Computers

mitigations - tangible and intangible assets that mitigate upcoming injections, e.g., Create a secure backup for the online party voter database

roles - different divisions of a campaign party, e.g., HQ IT Team

curveball events - one-time events controlled by the facilitators, e.g., Banking system crash

On the other hand, state tables define the state of a game and change during the simulation. These tables are the following:

game - properties of a game like budget, poll, etc.

game systems - stores the condition of each system (is it online or offline) throughout the game

game mitigations - shows if players have bought each mitigation

game injection - stores information about injections that have happened, e.g., was it prevented, responses made to it

game log - records each event that happens during a game, e.g., a curveball event

To help you visualize the database schema, have a look at the following diagram. Please note that the game_log table was intentionally left out of the image, since it adds unnecessary complexity to the picture and doesn’t really help in understanding the core functionality of the game:

To sum up, the state tables always store the current state of every ongoing game. Each modification made by a facilitator must be saved and then sent back to every coordinator. To do so, we defined a function in the data access layer that returns the current state of the game, and we call it after every state update:

```javascript
// ./src/game.js
const db = require('./db');

// Returns the current state of a game: the game row itself plus every
// mitigation, system, injection and log entry belonging to it, each
// aggregated into a JSON array by a LEFT JOINed subquery.
const getGame = (id) =>
  db('game')
    .select(
      'game.id',
      'game.state',
      'game.poll',
      'game.budget',
      'game.started_at',
      'game.paused',
      'game.millis_taken_before_started',
      'i.injections',
      'm.mitigations',
      's.systems',
      'l.logs',
    )
    .where({ 'game.id': id })
    .joinRaw(
      `LEFT JOIN (SELECT gm.game_id, array_agg(to_json(gm)) AS mitigations
        FROM game_mitigation gm GROUP BY gm.game_id) m ON m.game_id = game.id`,
    )
    .joinRaw(
      `LEFT JOIN (SELECT gs.game_id, array_agg(to_json(gs)) AS systems
        FROM game_system gs GROUP BY gs.game_id) s ON s.game_id = game.id`,
    )
    .joinRaw(
      `LEFT JOIN (SELECT gi.game_id, array_agg(to_json(gi)) AS injections
        FROM game_injection gi GROUP BY gi.game_id) i ON i.game_id = game.id`,
    )
    .joinRaw(
      `LEFT JOIN (SELECT gl.game_id, array_agg(to_json(gl)) AS logs
        FROM game_log gl GROUP BY gl.game_id) l ON l.game_id = game.id`,
    )
    .first();
```

The const db = require('./db'); line returns a database connection established via knex, used for querying and updating the database. By calling the function above, the current state of a game can be retrieved, including each mitigation already purchased and still available for sale, online and offline systems, injections that have happened, and the game's log. Here is an example of how this logic is applied after a facilitator triggers a curveball event:

```javascript
// ./src/game.js
// logger and getTimeTaken are assumed to be imported/defined elsewhere in this file.
const performCurveball = async ({ gameId, curveballId }) => {
  try {
    // Current game values needed to compute the new state.
    const game = await db('game')
      .select(
        'budget',
        'poll',
        'started_at as startedAt',
        'paused',
        'millis_taken_before_started as millisTakenBeforeStarted',
      )
      .where({ id: gameId })
      .first();

    // Effect of the chosen curveball event.
    const { budgetChange, pollChange, loseAllBudget } = await db('curveball')
      .select(
        'lose_all_budget as loseAllBudget',
        'budget_change as budgetChange',
        'poll_change as pollChange',
      )
      .where({ id: curveballId })
      .first();

    // Budget never drops below 0; poll is clamped to the 0-100 range.
    await db('game')
      .where({ id: gameId })
      .update({
        budget: loseAllBudget ? 0 : Math.max(0, game.budget + budgetChange),
        poll: Math.min(Math.max(game.poll + pollChange, 0), 100),
      });

    // Record the event in the game log.
    await db('game_log').insert({
      game_id: gameId,
      game_timer: getTimeTaken(game),
      type: 'Curveball Event',
      curveball_id: curveballId,
    });
  } catch (error) {
    logger.error('performCurveball ERROR: %s', error);
    throw new Error('Server error on performing action');
  }
  // Return the full, updated state so it can be broadcast.
  return getGame(gameId);
};
```

As you can see, after the game's state is updated (this time, a change in budget and poll), the program calls the getGame function and returns its result. By applying this logic, we can manage the state easily. We have to group the coordinators of the same game together, map each possible event to a corresponding function in the models folder, and broadcast the game to everyone after someone makes a change. Let's see how we achieved this by leveraging WebSockets.
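One aside before moving on to WebSockets. The budget and poll arithmetic in performCurveball doesn't depend on the database, so it could be pulled out into a pure function and unit-tested on its own. The sketch below is a refactoring suggestion, not code from the project. `applyCurveball` is a hypothetical name; the clamping rules are copied from performCurveball's update call.

```javascript
// Refactoring sketch (hypothetical name): the same budget/poll rules as the
// update in performCurveball, without any database access.
function applyCurveball(game, { budgetChange = 0, pollChange = 0, loseAllBudget = false }) {
  return {
    ...game,
    // Losing all budget overrides any budgetChange; otherwise never go below 0.
    budget: loseAllBudget ? 0 : Math.max(0, game.budget + budgetChange),
    // Poll is a percentage, so keep it within 0-100.
    poll: Math.min(Math.max(game.poll + pollChange, 0), 100),
  };
}
```

performCurveball could then compute `const { budget, poll } = applyCurveball(game, curveball);` and pass those two values to `update`.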

Creating Our Real-Time Socket.io Server with Node.js

As the software we’ve created is a companion app to an actual tabletop game played at different locations, it is as real-time as it gets. For use cases like this, where the state of the UIs needs to be synchronized across multiple clients, WebSockets are the go-to solution. To implement the WebSocket server and client, we chose Socket.io. While Socket.io comes with a significant performance overhead, it freed us from a lot of the hassle that arises from the stateful nature of WebSocket connections. As the expected load was minuscule, the overhead Socket.io introduced was far outweighed by the development time it saved. One of Socket.io's killer features for our use case was that operators who join the same game can easily be separated using socket.io rooms. This way, after a participant updates the game, we can broadcast the new state to the entire room (everyone who has currently joined that particular game).
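To make the idea of rooms concrete, here is a conceptual, in-memory model of room-based broadcasting. It is not how Socket.io implements rooms internally and is not part of the project. `RoomRegistry` and the `send(message)` client interface are hypothetical. With Socket.io itself, the equivalent operations are `socket.join(room)`, `socket.leave(room)` and `io.to(room).emit(...)`.

```javascript
// Conceptual sketch of room-based broadcasting (not Socket.io's internals).
// A client is anything with a send(message) method.
class RoomRegistry {
  constructor() {
    this.rooms = new Map(); // room name -> Set of clients
  }

  join(room, client) {
    if (!this.rooms.has(room)) this.rooms.set(room, new Set());
    this.rooms.get(room).add(client);
  }

  leave(room, client) {
    const members = this.rooms.get(room);
    if (!members) return;
    members.delete(client);
    if (members.size === 0) this.rooms.delete(room); // drop empty rooms
  }

  // Sends the message to every client in the room and returns how many got it.
  broadcast(room, message) {
    const members = this.rooms.get(room) ?? new Set();
    for (const client of members) client.send(message);
    return members.size;
  }
}
```

Facilitators of one game share a room, so a broadcast reaches only them and never the players of another game running at the same time.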

To create a socket server, all we need is a Server instance created by the createServer method of Node.js's built-in http module. For maintainability, we organized the socket.io logic into a separate module (see: ./src/socketio.js). This module exports a factory function that takes one argument: an http Server object. Let's have a look at it:

```javascript
// ./src/socketio.js
const socketio = require('socket.io');
const SocketEvents = require('./constants/SocketEvents');

// Factory: attaches a Socket.io server to the given http.Server.
module.exports = (http) => {
  const io = socketio(http);

  io.on(SocketEvents.CONNECT, (socket) => {
    socket.on('EVENT', (input) => {
      // DO something with the given input
    });
  });
};
```

```javascript
// index.js
const { createServer } = require('http');
const app = require('./src/app'); // Express app
const createSocket = require('./src/socketio');
const logger = require('./src/logger');

const port = process.env.PORT || 3001;

// Express handles regular HTTP requests; Socket.io shares the same server.
const http = createServer(app);
createSocket(http);

const server = http.listen(port, () => {
  logger.info(`Server is running at port: ${port}`);
});
```

As you can see, the socket server logic is implemented inside the factory function, which index.js then calls with the http Server. We didn't have to implement authorization in this project, so there is no socket.io middleware that authenticates each client before the connection is established. Inside the socket.io module, we created an event handler for each action a facilitator can perform, including documenting responses to injections, buying mitigations, restoring systems, etc. Then we mapped the methods defined in the data access layer to these handlers.
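The mapping between data-access methods and socket events can also be expressed generically. The following is a hypothetical sketch, not the project's code. `registerActionHandlers`, the `actions` map and the `broadcast` hook are illustrative names. `socket` can be any object with an `on(event, listener)` method, such as a Socket.io socket or a Node.js EventEmitter. Each handler runs its data-access function, pushes the returned game to the room, and answers the emitting client through the acknowledgement callback.

```javascript
// Hypothetical sketch: register one handler per action.
// - socket:    anything with on(event, listener), e.g. a Socket.io socket
// - actions:   { eventName: async (input) => updatedGame }
// - broadcast: (game) => void, pushes the new state to the whole room
function registerActionHandlers(socket, actions, broadcast, logger = console) {
  for (const [eventName, action] of Object.entries(actions)) {
    // Default callback in case the client did not ask for an acknowledgement.
    socket.on(eventName, async (input, callback = () => {}) => {
      try {
        const game = await action(input);
        broadcast(game); // everyone in the room gets the new state
        callback({ game }); // the emitting client gets it as the ack
      } catch (error) {
        logger.error(`${eventName} ERROR: %s`, error);
        callback({ error: `Server error on ${eventName}` });
      }
    });
  }
}
```

With Socket.io, `broadcast` could be `(game) => io.to(gameId).emit('gameUpdated', game)`. The `'gameUpdated'` event name is made up for this example.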

Bringing together facilitators

I previously mentioned that rooms make it easy to group facilitators by the game they have currently joined. A facilitator can enter a room by either creating a brand-new game or joining an existing one. Translated into "WebSocket language", a client emits a createGame or joinGame event. Let's have a look at the corresponding implementation:

// ./src/socketio.js
const socketio = require('socket.io');
const SocketEvents = require('./constants/SocketEvents');
const logger = require('./logger');
const { createGame, getGame } = require('./models/game');

module.exports = (http) => {
  const io = socketio(http);

  io.on(SocketEvents.CONNECT, (socket) => {
    logger.info('Facilitator CONNECT');
    // The game this facilitator is currently in, shared by all handlers below
    let gameId = null;

    socket.on(SocketEvents.DISCONNECT, () => {
      logger.info('Facilitator DISCONNECT');
    });

    socket.on(SocketEvents.CREATEGAME, async (id, callback) => {
      logger.info('CREATEGAME: %s', id);
      try {
        const game = await createGame(id);
        if (gameId) {
          await socket.leave(gameId);
        }
        await socket.join(id);
        gameId = id;
        callback({ game });
      } catch (_) {
        callback({ error: 'Game id already exists!' });
      }
    });

    socket.on(SocketEvents.JOINGAME, async (id, callback) => {
      logger.info('JOINGAME: %s', id);
      try {
        const game = await getGame(id);
        if (!game) {
          // Stop here: don't join the room of a game that doesn't exist
          callback({ error: 'Game not found!' });
          return;
        }
        if (gameId) {
          await socket.leave(gameId);
        }
        await socket.join(id);
        gameId = id;
        callback({ game });
      } catch (error) {
        logger.error('JOINGAME ERROR: %s', error);
        callback({ error: 'Server error on join game!' });
      }
    });
  });
};

In the code snippet above, the gameId variable holds the id of the game the facilitator has currently joined. Thanks to JavaScript closures, declaring this variable inside the connect callback puts it in the scope of all the handlers that follow. If an organizer tries to create a game while already playing (which means that gameId is not null), the socket server first removes the facilitator from the previous game's room, then adds them to the new game's room. This is managed by the leave and join methods. The process flow of the joinGame handler is almost identical. The only key difference is that this time the server doesn't create a new game. Instead, it queries the existing one using the getGame method of the data access layer.
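The closure pattern can be isolated from Socket.io entirely. In this sketch, `createConnectionHandlers` is a hypothetical stand-in for the connect callback: every call gets its own private `gameId`, and `enterGame` leaves the previous room before joining the new one, mirroring the createGame and joinGame handlers. The fake socket only records `join`/`leave` calls; in the real code these are Socket.io's `socket.join` and `socket.leave`.

```javascript
// Hypothetical stand-in for a connection: records room joins and leaves.
function createFakeSocket() {
  return {
    calls: [],
    join(room) { this.calls.push(['join', room]); },
    leave(room) { this.calls.push(['leave', room]); },
  };
}

// Mirrors the shape of the connect callback: state lives in a closure per connection.
function createConnectionHandlers(socket) {
  let gameId = null; // private to this connection

  // Shared logic of createGame/joinGame: move the socket to a new game room.
  function enterGame(id) {
    if (gameId) socket.leave(gameId); // leave the previous game first
    socket.join(id);
    gameId = id;
  }

  return { enterGame, currentGame: () => gameId };
}

// Usage: two connections keep independent gameId values.
const socketA = createFakeSocket();
const socketB = createFakeSocket();
const a = createConnectionHandlers(socketA);
const b = createConnectionHandlers(socketB);
a.enterGame('alpha');
a.enterGame('beta'); // leaves alpha, joins beta
b.enterGame('alpha');
console.log(a.currentGame(), b.currentGame()); // beta alpha
```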

What Makes Up Our Event Handlers?

After we successfully brought together our facilitators, we had to create a different handler for each possible event. For the sake of completeness, let's look at all the events that occur during a game:

createGame, joinGame: these events' single purpose is to put the organizer in the correct game room.

startSimulation, pauseSimulation, finishSimulation: these events are used to start the game's timer, pause the timer, and stop the game entirely. Once someone emits a finishSimulation event, the game can't be restarted.

deliverInjection: using this event, facilitators trigger security threats, which should occur at a given time during the game.

respondToInjection, nonCorrectRespondToInjection: these events record the responses made to injections.

restoreSystem: this event is to restore any system which is offline due to an injection.

changeMitigation: this event is triggered when players buy mitigations to prevent injections.

performAction: when the playing staff performs an action, the client emits this event to the server.

performCurveball: this event occurs when a facilitator triggers unique injections.
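All handlers reference names such as `SocketEvents.CREATEGAME` from `./constants/SocketEvents`, a module that isn't shown. A plausible shape is a frozen map from constant names to wire-level event strings. The string values below are assumptions picked to match the event names listed above, not the project's actual values; `connection` and `disconnect` are Socket.io's built-in event names.

```javascript
// Hypothetical ./constants/SocketEvents.js: one place for every event name.
const SocketEvents = Object.freeze({
  CONNECT: 'connection', // built-in Socket.io server event
  DISCONNECT: 'disconnect', // built-in Socket.io socket event
  CREATEGAME: 'createGame',
  JOINGAME: 'joinGame',
  STARTSIMULATION: 'startSimulation',
  PAUSESIMULATION: 'pauseSimulation',
  FINISHSIMULATION: 'finishSimulation',
  DELIVERINJECTION: 'deliverInjection',
  RESPONDTOINJECTION: 'respondToInjection',
  NONCORRECTRESPONDTOINJECTION: 'nonCorrectRespondToInjection',
  RESTORESYSTEM: 'restoreSystem',
  CHANGEMITIGATION: 'changeMitigation',
  PERFORMACTION: 'performAction',
  PERFORMCURVEBALL: 'performCurveball',
  GAMEUPDATED: 'gameUpdated', // server -> room broadcast
});

module.exports = SocketEvents;
```

Sharing one constants module between server and client means a typo in an event name fails loudly as `undefined` instead of silently never firing.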

These event handlers implement the following rules:

They take up to two arguments: an optional input, which is different for each event, and a predefined callback. The callback is an exciting feature of Socket.io called acknowledgements. It lets the client pass a callback function that the server can call with either an error or a game object, and that call then runs on the client side. Without diving deep into how the front end works (since this is a topic for another day), this function pops up an alert with either an error or a success message. This message only appears for the facilitator who initiated the event.

They update the state of the game by the given inputs according to the event's nature.

They broadcast the new state of the game to the entire room. Hence we can update the view of all organizers accordingly.
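Because every game-changing handler follows the same three rules, they could be generated by one helper. The sketch below is a hypothetical refactoring, not code from the project: `makeGameHandler` takes a data-access function, and the resulting handler calls it with the current game id and input, broadcasts the returned game to the room, and acknowledges the caller with `{ game }` or `{ error }`. The `io` object is a minimal fake of the `io.in(gameId).emit(...)` call chain the real handlers use.

```javascript
// Hypothetical helper that bakes in the three handler rules.
function makeGameHandler({ io, getGameId, update, updatedEvent = 'gameUpdated' }) {
  // Rule 1: optional input first, acknowledgement callback last.
  return async (...args) => {
    const callback = args.pop();
    const input = args[0]; // undefined for events such as startSimulation
    try {
      const gameId = getGameId();
      // Rule 2: update the game through the data access layer.
      const game = await update({ gameId, ...input });
      // Rule 3: broadcast the new state to the whole room, then ack the sender.
      io.in(gameId).emit(updatedEvent, game);
      callback({ game });
    } catch (error) {
      callback({ error: error.message });
    }
  };
}

// Minimal fake of io.in(room).emit(event, payload) that records every emission.
const emitted = [];
const io = {
  in: (room) => ({
    emit: (event, payload) => emitted.push({ room, event, payload }),
  }),
};

// Hypothetical data-access function standing in for performCurveball.
const fakePerformCurveball = async ({ gameId, curveballId }) => {
  if (!curveballId) throw new Error('Missing curveballId');
  return { id: gameId, curveballs: [curveballId] };
};

const handler = makeGameHandler({ io, getGameId: () => 'alpha', update: fakePerformCurveball });
const acks = [];
// A successful call, then a failing one: only the first broadcasts.
const done = handler({ curveballId: 'cb1' }, (res) => acks.push(res))
  .then(() => handler({}, (res) => acks.push(res)));
```

With such a helper, registering an event becomes a one-liner like `socket.on(SocketEvents.PERFORMCURVEBALL, makeGameHandler({ ... }))`, though spelling each handler out, as the project does, keeps per-event logging and signatures explicit.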

First, let's build on our previous example and see how we implemented the handler for curveball events.

// ./src/socketio.js
const socketio = require('socket.io');
const SocketEvents = require('./constants/SocketEvents');
const logger = require('./logger');
const { performCurveball } = require('./models/game');

module.exports = (http) => {
  const io = socketio(http);

  io.on(SocketEvents.CONNECT, (socket) => {
    logger.info('Facilitator CONNECT');
    let gameId = null; // set by the createGame/joinGame handlers (omitted here)

    socket.on(
      SocketEvents.PERFORMCURVEBALL,
      async ({ curveballId }, callback) => {
        logger.info(
          'PERFORMCURVEBALL: %s',
          JSON.stringify({ gameId, curveballId }),
        );
        try {
          const game = await performCurveball({ gameId, curveballId });
          // Push the new state to everyone in the game's room...
          io.in(gameId).emit(SocketEvents.GAMEUPDATED, game);
          // ...and acknowledge the facilitator who triggered the event
          callback({ game });
        } catch (error) {
          callback({ error: error.message });
        }
      },
    );
  });
};

The curveball event handler takes one input, an object containing a curveballId, plus the callback mentioned earlier. The performCurveball method then updates the game's poll and budget and returns the new game object. If the update is successful, the socket server emits a gameUpdated event with the latest state to the game room, then calls the callback with the game object. If any error occurs, the callback is called with an object containing the error message instead.

After a facilitator creates a game, a preparation view is first loaded for the players. In this stage, staff members can spend a portion of their budget to buy mitigations before the game starts. Once the game begins, it can be paused, resumed, or even stopped permanently. Let's have a look at the corresponding implementation:

// ./src/socketio.js
const socketio = require('socket.io');
const SocketEvents = require('./constants/SocketEvents');
const logger = require('./logger');
const { startSimulation, pauseSimulation } = require('./models/game');

module.exports = (http) => {
  const io = socketio(http);

  io.on(SocketEvents.CONNECT, (socket) => {
    logger.info('Facilitator CONNECT');
    let gameId = null; // set by the createGame/joinGame handlers (omitted here)

    socket.on(SocketEvents.STARTSIMULATION, async (callback) => {
      logger.info('STARTSIMULATION: %s', gameId);
      try {
        const game = await startSimulation(gameId);
        io.in(gameId).emit(SocketEvents.GAMEUPDATED, game);
        callback({ game });
      } catch (error) {
        callback({ error: error.message });
      }
    });

    socket.on(SocketEvents.PAUSESIMULATION, async (callback) => {
      logger.info('PAUSESIMULATION: %s', gameId);
      try {
        const game = await pauseSimulation({ gameId });
        io.in(gameId).emit(SocketEvents.GAMEUPDATED, game);
        callback({ game });
      } catch (error) {
        callback({ error: error.message });
      }
    });

    // Finishing reuses pauseSimulation with a flag; a finished game can't be restarted
    socket.on(SocketEvents.FINISHSIMULATION, async (callback) => {
      logger.info('FINISHSIMULATION: %s', gameId);
      try {
        const game = await pauseSimulation({ gameId, finishSimulation: true });
        io.in(gameId).emit(SocketEvents.GAMEUPDATED, game);
        callback({ game });
      } catch (error) {
        callback({ error: error.message });
      }
    });
  });
};

The startSimulation method starts the game's timer, while pauseSimulation either pauses the game or, when called with finishSimulation: true, stops it for good. Timing matters because each injection's trigger time determines when facilitators can invoke it. After organizers trigger a threat, they hand over all the necessary assets to the players. Staff members can then choose how to respond to the injection by providing a custom response or choosing from the predefined options. Besides facing threats, staff members perform actions, restore systems, and buy mitigations. The corresponding events can be triggered at any time during the game. These event handlers follow the same pattern and implement the three fundamental rules. Please check the public GitHub repo if you would like to examine these callbacks.
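The link between the game timer and the injections that can be triggered can be sketched with a pausable clock. Everything here is hypothetical: `GameClock`, the `triggerTime` field (seconds since the game started) and the injection ids are illustrative, and the project handles timer state in its data access layer rather than in memory. Time is injected through a `now` function so the behavior is easy to test.

```javascript
// Hypothetical pausable game clock; all times are in milliseconds.
class GameClock {
  constructor(now = Date.now) {
    this.now = now;
    this.elapsedBeforeStart = 0; // time accumulated in earlier running periods
    this.startedAt = null; // null while paused or not yet started
    this.finished = false;
  }

  start() {
    if (this.finished) throw new Error('A finished game cannot be restarted');
    if (this.startedAt === null) this.startedAt = this.now();
  }

  pause() {
    if (this.startedAt !== null) {
      this.elapsedBeforeStart += this.now() - this.startedAt;
      this.startedAt = null;
    }
  }

  finish() {
    this.pause();
    this.finished = true;
  }

  elapsedMs() {
    const running = this.startedAt === null ? 0 : this.now() - this.startedAt;
    return this.elapsedBeforeStart + running;
  }
}

// Injections whose (hypothetical) triggerTime in seconds has already been reached.
function availableInjections(injections, clock) {
  const elapsedSeconds = clock.elapsedMs() / 1000;
  return injections.filter((injection) => injection.triggerTime <= elapsedSeconds);
}

// Usage with a fake clock that we advance by hand.
let fakeTime = 0;
const clock = new GameClock(() => fakeTime);
const injections = [
  { id: 'phishing', triggerTime: 60 },
  { id: 'ransomware', triggerTime: 300 },
];
clock.start();
fakeTime = 90 * 1000; // 90 s of play
clock.pause();
fakeTime = 500 * 1000; // time spent paused does not count
console.log(availableInjections(injections, clock).map((i) => i.id)); // [ 'phishing' ]
```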

Serving The Setup Data

In the chapter explaining the data access layer, I classified tables into two groups: setup and state tables. State tables contain the condition of ongoing games; this data is served and updated via the event-based socket server. Setup data, on the other hand, consists of the available systems, game mitigations, actions, and curveball events, the injections that occur during the game, and each possible response to them. This data is exposed via a simple HTTP server. After a facilitator joins a game, the React client requests this data, caches it, and uses it throughout the game. The HTTP server is implemented using the Express library. Let's have a look at our app.js.

// ./src/app.js
const helmet = require('helmet');
const express = require('express');
const cors = require('cors');
const expressPino = require('express-pino-logger');
const logger = require('./logger');
// Assumption: `db` is the query-builder instance used by the data access layer.
// The original snippet uses it without importing it; this path is hypothetical.
const db = require('./models/db');
const { getResponses } = require('./models/response');
const { getInjections } = require('./models/injection');
const { getActions } = require('./models/action');

const app = express();

app.use(helmet());
app.use(cors());
app.use(expressPino({ logger }));

// STATIC DB data is exposed via REST API
app.get('/mitigations', async (req, res) => {
  const records = await db('mitigation');
  res.json(records);
});

app.get('/systems', async (req, res) => {
  const records = await db('system');
  res.json(records);
});

app.get('/injections', async (req, res) => {
  const records = await getInjections();
  res.json(records);
});

app.get('/responses', async (req, res) => {
  const records = await getResponses();
  res.json(records);
});

app.get('/actions', async (req, res) => {
  const records = await getActions();
  res.json(records);
});

app.get('/curveballs', async (req, res) => {
  const records = await db('curveball');
  res.json(records);
});

module.exports = app;

As you can see, everything is pretty standard here. We didn't need to implement any method other than GET, since this data is only inserted and changed using seeds.

Final Thoughts On Our Socket.io Game

Now we can put together how the backend works. State tables store the games' state, and the data access layer returns the new game state after each update. The socket server organizes the facilitators into rooms, so each time someone changes something, the new game state is broadcast to the entire room. Hence we can make sure that everyone has an up-to-date view of the game. In addition to the dynamic game data, the static setup tables are accessible via the HTTP server.

Next time, we will look at how the React client manages all this, and after that I'll present the infrastructure behind the project. You can check out the code of this app in the public GitHub repo!


Building a Real-Time Webapp with Node.js and Socket.io published first on https://koresolpage.tumblr.com/


Let’s Create Our Own Authentication API with Nodejs and GraphQL

Authentication is one of the most challenging tasks for developers just starting with GraphQL. There are a lot of technical considerations, including what ORM would be easy to set up, how to generate secure tokens and hash passwords, and even what HTTP library to use and how to use it.

In this article, we’ll focus on local authentication. It’s perhaps the most popular way of handling authentication in modern websites, and it works by requesting the user’s email and password (as opposed to, say, using Google auth).

This article uses Apollo Server 2, JSON Web Tokens (JWT), and Sequelize ORM to build an authentication API with Node.

Handling authentication

As in, a login system:

Authentication identifies or verifies a user.

Authorization is validating the routes (or parts of the app) the authenticated user can have access to.

The flow for implementing this is:

The user registers using password and email

The user’s credentials are stored in a database

The user is redirected to the login page when registration is completed

The user is granted access to specific resources when authenticated

The user’s state is stored in any one of the browser storage mediums (e.g. localStorage, cookies, session) or JWT.

Pre-requisites

Before we dive into the implementation, here are a few things you’ll need to follow along.

Node 10 or higher (required by Sequelize 6)

Yarn (recommended) or NPM

GraphQL Playground

Basic Knowledge of GraphQL and Node

…an inquisitive mind!

Dependencies

This is a big list, so let’s get into it:

Apollo Server: An open-source GraphQL server that is compatible with any kind of GraphQL client. We won’t be using Express for our server in this project. Instead, we will use the power of Apollo Server to expose our GraphQL API.

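bcryptjs: We want to hash the user passwords in our database, which is why we will use bcryptjs, a pure-JavaScript implementation of bcrypt. In Node it obtains secure random numbers from the crypto module, and in the browser it relies on the Web Crypto API’s getRandomValues interface.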

dotenv: We will use dotenv to load environment variables from our .env file.

jsonwebtoken: Once the user is logged in, each subsequent request will include the JWT, allowing the user to access routes, services, and resources that are permitted with that token. We will use jsonwebtoken to generate the JWT that authenticates users.

nodemon: A tool that helps develop Node-based applications by automatically restarting the Node application when changes in the directory are detected. We don’t want to stop and start the server every time our code changes, so nodemon watches our app and restarts the server for us.

mysql2: A MySQL client for Node. Sequelize needs it to connect to our MySQL server so we can run migrations.

sequelize: Sequelize is a promise-based Node ORM for Postgres, MySQL, MariaDB, SQLite and Microsoft SQL Server. We will use Sequelize to automatically generate our migrations and models.

sequelize-cli: We will use Sequelize CLI to run Sequelize commands. Install it globally with yarn global add sequelize-cli in the terminal.

Setup directory structure and dev environment

Let’s create a brand-new project. Create a new folder and run this inside of it:

yarn init -y

The -y flag indicates we are selecting yes to all the yarn init questions and using the defaults.

That also creates a package.json file in the folder. Now let’s install the project dependencies:

yarn add apollo-server bcryptjs dotenv jsonwebtoken nodemon sequelize mysql2

Next, let’s add Babel to our development environment:

yarn add babel-cli babel-preset-env babel-preset-stage-0 --dev

Now, let’s configure Babel. Run touch .babelrc in the terminal. That creates an empty Babel config file; open it and add this:

{ "presets": ["env", "stage-0"] }

It would also be nice to have short commands for running migrations and starting the server. We can set those up by updating package.json with this:

"scripts": {
  "migrate": "sequelize db:migrate",
  "dev": "nodemon src/server --exec babel-node -e js",
  "start": "node src/server",
  "test": "echo \"Error: no test specified\" && exit 1"
},

Here’s our package.json file in its entirety at this point:

{
  "name": "graphql-auth",
  "version": "1.0.0",
  "main": "index.js",
  "scripts": {
    "migrate": "sequelize db:migrate",
    "dev": "nodemon src/server --exec babel-node -e js",
    "start": "node src/server",
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "dependencies": {
    "apollo-server": "^2.17.0",
    "bcryptjs": "^2.4.3",
    "dotenv": "^8.2.0",
    "jsonwebtoken": "^8.5.1",
    "mysql2": "^2.1.0",
    "nodemon": "^2.0.4",
    "sequelize": "^6.3.5"
  },
  "devDependencies": {
    "babel-cli": "^6.26.0",
    "babel-preset-env": "^1.7.0",
    "babel-preset-stage-0": "^6.24.1"
  }
}

Now that our development environment is set up, let’s turn to the database where we’ll be storing things.

Database setup

We will be using MySQL as our database and Sequelize ORM to manage our models and relationships. Run sequelize init (assuming you installed it globally earlier). The command creates four folders: /config, /models, /migrations and /seeders. At this point, our project directory structure is shaping up.

Let’s configure our database. First, create a .env file in the project root directory and paste this:

NODE_ENV=development
DB_HOST=localhost
DB_USERNAME=
DB_PASSWORD=
DB_NAME=

Then go to the /config folder we just created and rename the config.json file in there to config.js. Then, drop this code in there:

require('dotenv').config()

const dbDetails = {
  username: process.env.DB_USERNAME,
  password: process.env.DB_PASSWORD,
  database: process.env.DB_NAME,
  host: process.env.DB_HOST,
  dialect: 'mysql'
}

module.exports = {
  development: dbDetails,
  production: dbDetails
}

Here we are reading the database details we set in our .env file. process.env is an object Node provides that holds the environment variables of the current process, and require('dotenv').config() loads the values from .env into it.

Let’s update our database details with the appropriate data. Open your SQL server and create a database called graphql_auth. I use Laragon as my local server and phpMyAdmin to manage database tables.

Whatever you use, we’ll want to update the .env file with the latest information:

NODE_ENV=development
DB_HOST=localhost
DB_USERNAME=<your_db_username_here>
DB_PASSWORD=
DB_NAME=graphql_auth

Let’s configure Sequelize. Create a .sequelizerc file in the project’s root and paste this:

const path = require('path')

module.exports = { config: path.resolve('config', 'config.js') }

Now let’s integrate our config into the models. Go to the index.js in the /models folder and edit the config variable.

const config = require(__dirname + '/../../config/config.js')[env]

Finally, let’s write our model. For this project, we need a User model. Let’s use Sequelize to auto-generate the model. Here’s what we need to run in the terminal to set that up:

sequelize model:generate --name User --attributes username:string,email:string,password:string

Let’s edit the model that this command generates for us. Go to user.js in the /models folder and paste this:

'use strict';
module.exports = (sequelize, DataTypes) => {
  const User = sequelize.define('User', {
    username: {
      type: DataTypes.STRING,
    },
    email: {
      type: DataTypes.STRING,
    },
    password: {
      type: DataTypes.STRING,
    }
  }, {});
  return User;
};

Here, we created attributes and fields for username, email and password. Let’s run a migration to keep track of changes in our schema:

yarn migrate

Let’s now write the schema and resolvers.

Integrate schema and resolvers with the GraphQL server

In this section, we’ll define our schema, write resolver functions and expose them on our server.

The schema

In the src folder, create a new folder called /schema and create a file called schema.js. Paste in the following code:

const { gql } = require('apollo-server')

const typeDefs = gql`
  type User {
    id: Int!
    username: String
    email: String!
  }

  type AuthPayload {
    token: String!
    user: User!
  }

  type Query {
    user(id: Int!): User
    allUsers: [User!]!
    me: User
  }

  type Mutation {
    registerUser(username: String, email: String!, password: String!): AuthPayload!
    login(email: String!, password: String!): AuthPayload!
  }
`

module.exports = typeDefs

Here we’ve imported gql from apollo-server, which re-exports it from graphql-tag. Apollo Server requires wrapping our schema with gql.

The resolvers

In the src folder, create a new folder called /resolvers and create a file in it called resolvers.js (the server imports it as ./resolvers/resolvers). Paste in the following code:

const bcrypt = require('bcryptjs')
const jsonwebtoken = require('jsonwebtoken')
const models = require('../models')
require('dotenv').config()

const resolvers = {
  Query: {
    async me(_, args, { user }) {
      if (!user) throw new Error('You are not authenticated')
      return await models.User.findByPk(user.id)
    },
    async user(root, { id }, { user }) {
      try {
        if (!user) throw new Error('You are not authenticated!')
        return await models.User.findByPk(id)
      } catch (error) {
        throw new Error(error.message)
      }
    },
    async allUsers(root, args, { user }) {
      try {
        if (!user) throw new Error('You are not authenticated!')
        return await models.User.findAll()
      } catch (error) {
        throw new Error(error.message)
      }
    }
  },

  Mutation: {
    async registerUser(root, { username, email, password }) {
      try {
        const user = await models.User.create({
          username,
          email,
          password: await bcrypt.hash(password, 10)
        })
        const token = jsonwebtoken.sign(
          { id: user.id, email: user.email },
          process.env.JWT_SECRET,
          { expiresIn: '1y' }
        )
        // Return the shape the AuthPayload type expects: { token, user }
        return { token, user }
      } catch (error) {
        throw new Error(error.message)
      }
    },
    async login(_, { email, password }) {
      try {
        const user = await models.User.findOne({ where: { email } })
        if (!user) {
          throw new Error('No user with that email')
        }
        const isValid = await bcrypt.compare(password, user.password)
        if (!isValid) {
          throw new Error('Incorrect password')
        }
        // return jwt
        const token = jsonwebtoken.sign(
          { id: user.id, email: user.email },
          process.env.JWT_SECRET,
          { expiresIn: '1d' }
        )
        return { token, user }
      } catch (error) {
        throw new Error(error.message)
      }
    }
  }
}

module.exports = resolvers

That’s a lot of code, so let’s see what’s happening in there.

First, we imported our models, bcryptjs and jsonwebtoken, and then loaded our environment variables.

Next are the resolver functions. In the query resolver, we have three functions (me, user and allUsers):

me query fetches the details of the currently logged-in user. It reads the user object from the context argument (the decoded JWT payload the server puts there) and loads that user from the database by its id.

user query fetches the details of a user based on their ID. It takes id as a query argument and reads the user object from the context.

allUsers query returns the details of all the users.

The user in the context is an object when the request carries a valid token and null when it doesn't; each query throws an error if it is null. The users themselves are created by our mutations.
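Each protected query repeats the same `if (!user) throw` check. One way to factor that out is a small higher-order function. `requireAuth` is a hypothetical helper, not part of Apollo Server; it only relies on the standard resolver signature `(parent, args, context, info)`, and `findUser` stands in for `models.User.findByPk`.

```javascript
// Hypothetical guard: wraps a resolver so it only runs for authenticated requests.
const requireAuth = (resolver) => (parent, args, context, info) => {
  if (!context || !context.user) {
    throw new Error('You are not authenticated!');
  }
  return resolver(parent, args, context, info);
};

// Usage: the user query without its inline check.
const findUser = (id) => ({ id, username: 'Wizzy' }); // stand-in for models.User.findByPk
const resolvers = {
  Query: {
    user: requireAuth((_, { id }) => findUser(id)),
  },
};

console.log(resolvers.Query.user(null, { id: 15 }, { user: { id: 15 } })); // { id: 15, username: 'Wizzy' }
```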

In the Mutation resolvers, we have two functions (registerUser and login):

registerUser accepts the username, email and password of the user and creates a new row with these fields in our database. It’s important to note that we used the bcryptjs package to hash the user’s password with bcrypt.hash(password, 10), where 10 is the number of salt rounds. jsonwebtoken.sign synchronously signs the given payload (in this case the user id and email) into a JSON Web Token string. Finally, registerUser returns the JWT string and the user profile if successful, and throws an error if something goes wrong.

login accepts an email and password and checks them against the stored user record. First, we look up a user with that email in the database.

const user = await models.User.findOne({ where: { email } })
if (!user) {
  throw new Error('No user with that email')
}

Then, we use bcrypt’s bcrypt.compare method to check if the password matches.

const isValid = await bcrypt.compare(password, user.password)
if (!isValid) {
  throw new Error('Incorrect password')
}

Then, just like we did previously in registerUser, we use jsonwebtoken.sign to generate a JWT string. The login mutation returns the token and user object.
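The hash-then-compare pattern used by registerUser and login doesn't depend on bcrypt specifically. Since bcryptjs is a third-party package, this sketch swaps in Node's built-in `crypto.scryptSync`, a different key-derivation function, to show the same idea: store a random salt next to the derived hash, then re-derive and compare in constant time with `crypto.timingSafeEqual`. The `salt:hash` storage format is an assumption for illustration; bcrypt instead encodes its salt and cost factor into its own hash string.

```javascript
const crypto = require('crypto');

const KEY_LENGTH = 64; // bytes of derived key

// Hash a password with a fresh random salt; returns "salt:hash" in hex.
function hashPassword(password) {
  const salt = crypto.randomBytes(16);
  const hash = crypto.scryptSync(password, salt, KEY_LENGTH);
  return `${salt.toString('hex')}:${hash.toString('hex')}`;
}

// Re-derive the hash with the stored salt and compare without leaking timing info.
function verifyPassword(password, stored) {
  const [saltHex, hashHex] = stored.split(':');
  const expected = Buffer.from(hashHex, 'hex');
  const actual = crypto.scryptSync(password, Buffer.from(saltHex, 'hex'), expected.length);
  return crypto.timingSafeEqual(actual, expected);
}

const stored = hashPassword('wizzyekpot');
console.log(verifyPassword('wizzyekpot', stored)); // true
console.log(verifyPassword('wrong-password', stored)); // false
```

Because the salt is random, hashing the same password twice yields different strings, which is why login must compare through the verify step rather than by re-hashing and checking string equality.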

Now let’s add the JWT_SECRET to our .env file.

JWT_SECRET=somereallylongsecret
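JWT_SECRET is the key used to sign every token. To see what `jsonwebtoken.sign` produces with its default HS256 algorithm, here is a from-scratch sketch using only Node's `crypto` module: a base64url-encoded header and payload joined by a dot, followed by an HMAC-SHA256 signature over them. `signHS256` and `verifyHS256` are hypothetical names, and the sketch skips many checks the real library performs (for example `nbf`, audience and issuer), so use jsonwebtoken in practice.

```javascript
const crypto = require('crypto');

// base64url = base64 with URL-safe characters and no "=" padding.
const base64url = (data) =>
  Buffer.from(data).toString('base64').replace(/=+$/, '').replace(/\+/g, '-').replace(/\//g, '_');
const fromBase64url = (str) => Buffer.from(str.replace(/-/g, '+').replace(/_/g, '/'), 'base64');
const hmac = (data, secret) => crypto.createHmac('sha256', secret).update(data).digest();

// Sign a payload, adding iat and exp in seconds (like jwt.sign with expiresIn).
function signHS256(payload, secret, expiresInSeconds, nowSeconds = Math.floor(Date.now() / 1000)) {
  const header = base64url(JSON.stringify({ alg: 'HS256', typ: 'JWT' }));
  const body = base64url(JSON.stringify({ ...payload, iat: nowSeconds, exp: nowSeconds + expiresInSeconds }));
  return `${header}.${body}.${base64url(hmac(`${header}.${body}`, secret))}`;
}

// Return the payload if the signature matches and the token hasn't expired; otherwise throw.
function verifyHS256(token, secret, nowSeconds = Math.floor(Date.now() / 1000)) {
  const parts = token.split('.');
  if (parts.length !== 3) throw new Error('jwt malformed');
  const [header, body, signature] = parts;
  const expected = hmac(`${header}.${body}`, secret);
  const actual = fromBase64url(signature);
  if (actual.length !== expected.length || !crypto.timingSafeEqual(actual, expected)) {
    throw new Error('invalid signature');
  }
  const payload = JSON.parse(fromBase64url(body).toString('utf8'));
  if (typeof payload.exp === 'number' && nowSeconds >= payload.exp) throw new Error('jwt expired');
  return payload;
}

// Usage: sign a one-day token and read it back.
const secret = 'somereallylongsecret'; // same placeholder as the .env example
const demoToken = signHS256({ id: 15, email: 'ekpot@gmail.com' }, secret, 24 * 60 * 60);
console.log(verifyHS256(demoToken, secret).email); // ekpot@gmail.com
```

The payload is only encoded, not encrypted: anyone can decode it, but without JWT_SECRET nobody can produce a valid signature for a modified one.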

The server

Finally, the server! Create a server.js in the src folder (the dev and start scripts run src/server) and paste this:

require('dotenv').config()
const { ApolloServer } = require('apollo-server')
const jwt = require('jsonwebtoken')
const typeDefs = require('./schema/schema')
const resolvers = require('./resolvers/resolvers')

const { JWT_SECRET, PORT } = process.env

// Decode and verify the token; a missing, invalid or expired token means "no user"
const getUser = token => {
  try {
    if (token) {
      return jwt.verify(token, JWT_SECRET)
    }
    return null
  } catch (error) {
    return null
  }
}

const server = new ApolloServer({
  typeDefs,
  resolvers,
  context: ({ req }) => {
    const token = req.headers.authorization || ''
    // Strip an optional "Bearer " prefix, including the space
    return { user: getUser(token.replace(/^Bearer\s+/i, '')) }
  },
  introspection: true,
  playground: true
})

server.listen({ port: PORT || 4000 }).then(({ url }) => {
  console.log(`🚀 Server ready at ${url}`);
});

Here, we import the schema, resolvers and jsonwebtoken, and load our environment variables. The getUser helper verifies the token with jwt.verify, which takes the token and the JWT secret, returns the decoded payload, and throws if the token is invalid or expired; getUser turns any failure into null.

Next, we create our server with an ApolloServer instance that accepts typeDefs and resolvers, plus a context function that reads the Authorization header and passes the decoded user to every resolver.
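The context function is where the Authorization header turns into `context.user`. A common convention is to send the word Bearer followed by a space and the token, so the prefix has to be stripped together with the space; a bare token, as in the Playground headers example, should work too. This sketch keeps verification pluggable (in the server it would be `(token) => jwt.verify(token, JWT_SECRET)`) so it runs without any packages; `extractToken`, `buildContext` and the stub verifier are hypothetical.

```javascript
// Extract the raw token from an Authorization header value, with or without "Bearer ".
function extractToken(header) {
  if (typeof header !== 'string') return null;
  const token = header.replace(/^Bearer\s+/i, '').trim();
  return token || null;
}

// Hypothetical context builder: verify() returns the decoded payload or throws.
function buildContext(headers, verify) {
  const token = extractToken(headers.authorization); // Node lowercases incoming header names
  if (!token) return { user: null };
  try {
    return { user: verify(token) };
  } catch (error) {
    return { user: null }; // invalid or expired token: treat the request as anonymous
  }
}

// Usage with a stub verifier that accepts exactly one token.
const stubVerify = (token) => {
  if (token !== 'good-token') throw new Error('invalid token');
  return { id: 15, email: 'ekpot@gmail.com' };
};
console.log(buildContext({ authorization: 'Bearer good-token' }, stubVerify).user.id); // 15
console.log(buildContext({ authorization: 'good-token' }, stubVerify).user.id); // 15
console.log(buildContext({}, stubVerify).user); // null
```

Returning `{ user: null }` instead of throwing keeps public operations such as registerUser and login reachable without a token, while the protected queries reject the request themselves.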

We have a server! Let’s start it up by running yarn dev in the terminal.

Testing the API

Let’s now test the GraphQL API with GraphQL Playground. We should be able to register, log in, view all users, and fetch a single user by ID.

We’ll start by opening the GraphQL Playground app, or just open http://localhost:4000 in the browser to access it.

Mutation for register user

mutation { registerUser(username: "Wizzy", email: "ekpot@gmail.com", password: "wizzyekpot" ){ token } }

We should get something like this:

{ "data": { "registerUser": { "token": "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpZCI6MTUsImVtYWlsIjoiZWtwb3RAZ21haWwuY29tIiwiaWF0IjoxNTk5MjQwMzAwLCJleHAiOjE2MzA3OTc5MDB9.gmeynGR9Zwng8cIJR75Qrob9bovnRQT242n6vfBt5PY" } } }

Mutation for login

Let’s now log in with the user details we just created:

mutation { login(email:"ekpot@gmail.com" password:"wizzyekpot"){ token } }

We should get something like this:

{ "data": { "login": { "token": "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpZCI6MTUsImVtYWlsIjoiZWtwb3RAZ21haWwuY29tIiwiaWF0IjoxNTk5MjQwMzcwLCJleHAiOjE1OTkzMjY3NzB9.PDiBKyq58nWxlgTOQYzbtKJ-HkzxemVppLA5nBdm4nc" } } }

Awesome!

Query for a single user

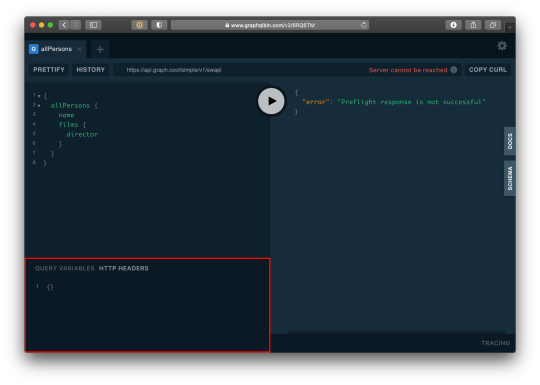

To query a single user, we need to pass the user’s token in the Authorization header. Go to the HTTP Headers tab.

…and paste this:

{ "Authorization": "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJpZCI6MTUsImVtYWlsIjoiZWtwb3RAZ21haWwuY29tIiwiaWF0IjoxNTk5MjQwMzcwLCJleHAiOjE1OTkzMjY3NzB9.PDiBKyq58nWxlgTOQYzbtKJ-HkzxemVppLA5nBdm4nc" }

Here’s the query:

query myself{ me { id email username } }

And we should get something like this:

{ "data": { "me": { "id": 15, "email": "ekpot@gmail.com", "username": "Wizzy" } } }

Great! Let’s now get a user by ID:

query singleUser{ user(id:15){ id email username } }

And here’s the query to get all users:

{ allUsers{ id username email } }

Summary

Authentication is one of the toughest tasks when it comes to building websites that require it. GraphQL enabled us to build an entire authentication API with just one endpoint. Sequelize ORM makes creating relationships with our SQL database so easy that we barely had to worry about our models. It’s also remarkable that we didn’t need an HTTP server library like Express with Apollo GraphQL as middleware: Apollo Server 2 lets us create our own standalone, library-independent GraphQL servers!

Check out the source code for this tutorial on GitHub.

The post Let’s Create Our Own Authentication API with Nodejs and GraphQL appeared first on CSS-Tricks.


Let’s Create Our Own Authentication API with Nodejs and GraphQL published first on https://deskbysnafu.tumblr.com/


WPvivid Backup Plugin For WordPress or ClassicPress

Backing up your WordPress or ClassicPress site is paramount for site owners and self-builders, and an essential part of the maintenance routine. Having a backup solution on your site is one of the most basic ways to ensure WordPress security, and the platform offers many different solutions for it. A relatively new contender in this area is Migrate & Backup WordPress by WPvivid, which calls itself “the only free all-in-one backup, restore and migration WordPress plugin”. In this review, I take an in-depth look at it to see what it can do for users and whether it brings anything new to the table. Below, I examine its standout features and go through all of its key functionality so you can decide whether it’s the right fit for your needs.

Stats, Numbers, and Features

Migrate & Backup WordPress is a free plugin available in the WordPress directory. At the time of this writing, it has over 20,000 installs and a solid 4.9/5-star rating. Here’s what it promises it can do for you:

Migrate your site to a new domain with a single click, e.g. from development to live production or vice versa

Create automatic (remote) backups of your WordPress site, including one-click restore or restore via upload

There is also a pro version with additional features that we will talk about later. To really get an impression, let’s take it for a spin.

How to Use the WPvivid Backup Plugin

Here are the basics of using the plugin on your WordPress site.

1. Install WPvivid Backup

As always, the first thing you want to do is install it. The quickest way is to go to Plugins > Add New and search for it by name (WPvivid is enough). Once you have located it in the list, hit the Install Now button and wait until the download has finished. Then, don’t forget to click Activate.

2. Create a Manual Backup

After the installation, you automatically land on the plugin’s main page.

Here, you have access to its most basic functionality – creating manual backups. For that, simply choose what to back up (database and files, only files, only database) and whether to save them locally or remotely (more on that soon). You can also check a box to determine that a backup should only be deleted manually and not automatically. When you are happy with your choices, hit the big Backup Now button and the plugin will go to work. You can watch the progress via a status bar at the top.

When you are done, the finished backup will appear at the bottom of the page.

This is where all of them show up. Here, you can also check the log, download the backup, start a restore (you will soon learn how), or delete an existing backup. So far, so good.

3. Create a Backup Schedule

Of course, with backups, it’s better to have a set-it-and-forget-it solution. That’s where scheduling comes into play. You find the options for that under the Schedule tab.

Setting one up is really easy. Just tick the Enable backup schedule box at the top to get started. Next, pick an interval (every 12 hours, daily, weekly, every fortnight, or every month). After that, choose what you want the WPvivid backup plugin to save in terms of files and database and, finally, whether to store it remotely or locally. Save the changes at the bottom when you are done. From now on, your site will automatically be backed up according to your settings. You can also see this on the dashboard, which shows your next backup along with other information.

4. Set up Remote Storage

If you store your backups in the same place as your site (i.e. on your server), in a worst-case scenario they might get lost together with your site and you are left with nothing. For that reason, under Remote Storage, WPvivid backup allows you to save your backups in many different off-site locations:

Google Drive

Dropbox

Microsoft OneDrive

Amazon S3

DigitalOcean Spaces

FTP/SFTP

Pick your favorite, enter an alias (so you recognize which storage it is on your own site), configure the settings, choose whether to use it as the default remote storage, and then hit the blue button to authenticate or test your settings.

Depending on your choice, you might have to authenticate with the storage provider in the next step and give the plugin permission to access it. Once you are done, you can choose remote storage for your manual and automated backups, and the new option will show up in your list of available storage spaces.

In my test, this worked without a hitch.

5. How to Do a Restore

Restoring from storage is pretty much as easy as backing up. Just hit the Restore button in your list of available backups. It will show you this page:

Click on Restore and confirm when asked if you want to continue. You will see the progress in the field below and get a message when it’s done. If the backup is stored remotely, the plugin will ask you to download it to your server first. In addition, on the main page, you can also upload backups to your site manually via Upload.

When you have done either, click the Scan uploaded backup or received backup button to see it in the list. You can then restore it from there as described.

6. Auto-Migration

Another option the WPvivid backup plugin offers is migrating your site from one server to another. This can be between two live servers, from live to staging, from local to live, and many other combinations. You can do this automatically or manually. For automatic migration, you first need to create a site key for authentication. For that, the same plugin needs to be installed on the site you want to migrate to. There, go to the Key tab, choose how long you want your key to be valid, and then hit Generate. The plugin will then display the generated key.

Copy it, then log into the site you want to migrate and go to Auto-Migration.

Here, paste the key from earlier and click Save at the bottom. The plugin will check if everything is alright and then tell you whether it’s fine to move from one place to the other.

Choose what you want to transfer and hit Clone, then Transfer at the bottom. Note that the plugin makers recommend disabling redirect, firewall, security, and caching plugins for the duration of the transfer. Other than that, that’s it. Alternatively, you can download a backup and restore it manually on the target site as described above.

7. Plugin Settings

The plugin offers a number of settings that you can find in the Settings tab (how fitting!).

They are divided into general and advanced settings. Here are the options under general:

- Determine the number of backups to keep (up to seven in the free version)
- Display options for access to the WPvivid backup plugin in the WordPress back end
- File merging options to save space
- Storage and naming options for backups
- Ability to remove older backup instances
- Setup options for email reporting when backing up
- Sizes of temporary files and logs, and the ability to delete them
- Export and import of settings for use on other websites

You also get a bunch of links to helpful resources. Here’s what you’ll find in the advanced settings:

- Optimization mode for shared hosting, which you can switch on in case there are problems
- Controls for file compression, file exclusion, script execution limit, memory limits, chunk size, and the number of retries before timing out

8. Other Options

Here are the remaining tabs in the main dashboard of WPvivid backup:

- Debug: if you are having problems, you can send debug information to WPvivid or download it to send manually
- Logs: records everything the plugin does, so you can see what happened during your backups
- MainWP: if you use MainWP to manage your WordPress sites, WPvivid backup has its own add-on for it

9. Export & Import

Besides its main functionality, the plugin also lets you transfer posts and pages (including images) between WordPress installations under WPvivid Backup > Export & Import.

Simply pick whether you want to transfer posts or pages. Then, in the next step, choose your filters (categories, authors, date).

This will give you a list from which you can pick individual posts and pages.

Add a comment if needed, otherwise hit Export and Download. This gives you a zip file. You can then upload it in another installation under Import or upload it to the import directory via FTP and scan from there.

Choose which author to assign the pages to during the process and that’s it.

WPvivid Backup & Restore – Pro Version

As mentioned, WPvivid backup also comes with a pro version, which you can find on their website. It gives you additional options and is currently in beta, so you can use it for free. If you do, you can get 40% off a lifetime license for the finished product. Here’s what it will include:

- Custom migrations: choose what to take with you in terms of core files, database tables, themes, plugins, and more.
- Advanced remote options: migration and restore become possible via remote storage, and you can create custom folders in your remote storage.
- More advanced scheduling options: time zones, custom start times, custom backup content, and storage locations.
- Staging and Multisite support: the ability to create staging environments and publish them to live in one click, and to use the plugin with WordPress Multisite (although I do not recommend using Multisite if it is avoidable).
- Better reporting: email reports sent to multiple recipients.
- More backups: store more than seven backups at a time.

Pricing will start at $49/year for annual plans and $99 for lifetime licenses. It gets more expensive depending on features and the number of websites you want to use the plugin on.

Evaluation of WPvivid

Overall, WPvivid backup is a solid plugin that is intuitive to work with. I didn’t run into any major problems while testing, except that I couldn’t get it to work in my local XAMPP installation. I also think some of the user interface could use some polishing. Aside from that, my only criticism is that WPvivid is one of those plugins that need an existing website, with the plugin installed, to migrate to. For site migrations, I prefer either a solution that lets you clone a website and deploy it on an empty server or, as in most of our migrations, doing the job by hand via FTP and a database export.

Conclusion

Having a backup solution is one of the most basic ways to keep your site safe. No WordPress website should go without one. With WPvivid Backup & Restore, users now have even more choices than before. Above, we have gone over the plugin’s main features and how it works. It comes with lots of functionality, is well made, and is easy to use. While there are few things to criticize, if you are looking for a new backup solution for WordPress, I can wholeheartedly recommend it. Have you tried out the WPvivid backup plugin? What was your first impression? Please share in the comments below.

Post by Xhostcom WordPress & Digital Services.

Writing A Multiplayer Text Adventure Engine In Node.js: Game Engine Server Design (Part 2)

Fernando Doglio

October 23, 2019

After some careful consideration and actual implementation of the module, some of the definitions I made during the design phase had to be changed. This should be a familiar scene for anyone who has ever worked with an eager client who dreams about an ideal product but needs to be restrained by the development team.

Once features have been implemented and tested, your team will start noticing that some characteristics might differ from the original plan, and that’s alright. Simply notify, adjust, and go on. So, without further ado, allow me to first explain what has changed from the original plan.

Battle Mechanics

This is probably the biggest change from the original plan. I know I said I was going to go with a D&D-esque implementation in which each PC and NPC involved would get an initiative value, after which we would run turn-based combat. It was a nice idea, but implementing it on a REST-based service is a bit complicated, since the server can’t initiate communication with the client, nor maintain state between calls.

So instead, I will take advantage of REST’s simple request/response model to simplify our battle mechanics. The implemented version will be player-based instead of party-based and will allow players to attack NPCs (Non-Player Characters). If a player’s attack succeeds, the NPC is killed; otherwise, the NPC attacks back, either damaging or killing the player.

Whether an attack succeeds or fails will be determined by the type of weapon used and the weaknesses an NPC might have. So basically, if the monster you’re trying to kill is weak against your weapon, it dies. Otherwise, it’ll be unaffected and — most likely — very angry.
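To make the rule concrete, here is a minimal sketch of how a single attack could be resolved. It assumes each NPC lists the weapon types it is weak against in a `weaknesses` array and deals a fixed `damage` value when it strikes back; `resolveAttack`, those field names, and the numbers are hypothetical illustrations, not the engine’s actual code.

```javascript
// Hypothetical sketch of the player-versus-NPC attack rule.
// Assumes NPCs carry a `weaknesses` array of weapon types and a `damage` value.
function resolveAttack(player, npc, weapon) {
  // The attack succeeds only if the NPC is weak against this weapon type.
  if (npc.weaknesses.includes(weapon.type)) {
    return { npcKilled: true, playerHp: player.hp, playerKilled: false };
  }
  // Otherwise the NPC is unaffected and strikes back.
  const playerHp = Math.max(0, player.hp - npc.damage);
  return { npcKilled: false, playerHp, playerKilled: playerHp === 0 };
}

// Usage: a troll that is weak against fire.
const troll = { name: "troll", weaknesses: ["fire"], damage: 40 };
console.log(resolveAttack({ hp: 100 }, troll, { type: "fire" }));
console.log(resolveAttack({ hp: 30 }, troll, { type: "blunt" }));
```

Returning a result object instead of mutating the player keeps the rule stateless, which fits a REST service where each request is handled on its own.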

Triggers

If you paid close attention to the JSON game definition from my previous article, you might’ve noticed the triggers defined on scene items. A particular one involved updating the game status (statusUpdate). During implementation, I realized that having it work as a toggle provided limited freedom. You see, in the way it was implemented (from an idiomatic point of view), you were able to set a status, but unsetting it wasn’t an option. So instead, I’ve replaced this trigger effect with two new ones: addStatus and removeStatus. These allow you to define exactly when these effects can take place, if at all. I feel this is a lot easier to understand and reason about.

This means that the triggers now look like this:

```json
"triggers": [
  {
    "action": "pickup",
    "effect": { "addStatus": "has light", "target": "game" }
  },
  {
    "action": "drop",
    "effect": { "removeStatus": "has light", "target": "game" }
  }
]
```

When picking up the item, we’re setting up a status, and when dropping it, we’re removing it. This way, having multiple game-level status indicators is completely possible and easy to manage.
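One way an engine could apply these effects is sketched below, using the triggers shown above. It assumes game-level statuses are kept in a `Set` on the game instance and only handles the `game` target; `applyTriggers` and the `statuses` field are hypothetical names, not the engine’s API.

```javascript
// Hypothetical sketch: apply an item's triggers when a player performs an action.
// Game-level statuses are assumed to live in a Set on the game instance.
function applyTriggers(item, action, game) {
  for (const trigger of item.triggers) {
    if (trigger.action !== action) continue; // only react to the matching action
    const { effect } = trigger;
    if (effect.target !== "game") continue; // other targets are out of scope here
    if (effect.addStatus) game.statuses.add(effect.addStatus);
    if (effect.removeStatus) game.statuses.delete(effect.removeStatus);
  }
  return game;
}

// Usage with an item carrying the pickup/drop triggers from the JSON above.
const lamp = {
  triggers: [
    { action: "pickup", effect: { addStatus: "has light", target: "game" } },
    { action: "drop", effect: { removeStatus: "has light", target: "game" } },
  ],
};
const game = { statuses: new Set() };
applyTriggers(lamp, "pickup", game);
console.log(game.statuses.has("has light")); // true
applyTriggers(lamp, "drop", game);
console.log(game.statuses.has("has light")); // false
```

Because adding and removing are separate effects, a `Set` naturally supports several independent game-level statuses at once.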

The Implementation

With those updates out of the way, we can start covering the actual implementation. From an architectural point of view, nothing changed; we’re still building a REST API that will contain the main game engine’s logic.

The Tech Stack

For this particular project, the modules I’m going to be using are the following:

| Module | Description |
|---|---|
| Express.js | Obviously, I’ll be using Express as the base for the entire engine. |
| Winston | Everything related to logging will be handled by Winston. |
| Config | Every constant and environment-dependent variable will be handled by the config.js module, which greatly simplifies the task of accessing them. |
| Mongoose | This will be our ODM (the document-database counterpart of an ORM). I will model all resources using Mongoose models and use them to interact directly with the database. |
| uuid | We’ll need to generate some unique IDs, and this module will help us with that task. |

As for other technologies used aside from Node.js, we have MongoDB and Redis. I like to use Mongo because it doesn’t require a schema. That simple fact allows me to think about my code and the data formats without having to worry about updating the structure of my tables, schema migrations, or conflicting data types.

Regarding Redis, I tend to use it as a support system as much as I can in my projects and this case is no different. I will be using Redis for everything that can be considered volatile information, such as party member numbers, command requests, and other types of data that are small enough and volatile enough to not merit permanent storage.

I’m also going to be using Redis’ key expiration feature to automatically manage some aspects of the flow (more on this shortly).

API Definition

Before moving into client-server interaction and data-flow definitions, I want to go over the endpoints defined for this API. There aren’t many of them; mostly, we need to cover the main features described in Part 1:

| Feature | Description |
|---|---|
| Join a game | A player will be able to join a game by specifying the game’s ID. |
| Create a new game | A player can also create a new game instance. The engine should return an ID so that others can use it to join. |
| Return scene | This feature should return the current scene where the party is located. Basically, it’ll return the description, with all of the associated information (possible actions, objects in it, etc.). |
| Interact with scene | This is going to be one of the most complex ones, because it will take a command from the client and perform that action: things like move, push, take, look, and read, to name just a few. |
| Check inventory | Although this is a way to interact with the game, it does not directly relate to the scene. So, checking the inventory for each player will be considered a different action. |
| Register client application | The above actions require a valid client to execute them. This endpoint will verify the client application and return a Client ID that will be used for authentication purposes on subsequent requests. |

The above list translates into the following list of endpoints:

| Verb | Endpoint | Description |
|---|---|---|
| POST | /clients | Client applications use this endpoint to obtain a Client ID key. |
| POST | /games | Client applications create new game instances using this endpoint. |
| POST | /games/:id | Once the game is created, this endpoint enables party members to join it and start playing. |
| GET | /games/:id/:playername | This endpoint returns the current game state for a particular player. |
| POST | /games/:id/:playername/commands | Finally, with this endpoint, the client application will be able to submit commands (in other words, this endpoint will be used to play). |
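In the engine itself, Express does the routing (for example, `app.post("/games/:id", handler)`). Purely to show how the five endpoints and their path parameters fit together without any dependencies, here is a hypothetical route table with a tiny matcher; the handler names are placeholders, not the engine’s code.

```javascript
// Hypothetical, dependency-free route table mirroring the endpoint list above.
// In the real engine Express does this matching; handler names are placeholders.
const routes = [
  { verb: "POST", path: "/clients", handler: "registerClient" },
  { verb: "POST", path: "/games", handler: "createGame" },
  { verb: "POST", path: "/games/:id", handler: "joinGame" },
  { verb: "GET", path: "/games/:id/:playername", handler: "getPlayerState" },
  { verb: "POST", path: "/games/:id/:playername/commands", handler: "submitCommand" },
];

// Match a verb and a path (without query string), extracting ":param" segments.
function matchRoute(verb, url) {
  const urlParts = url.split("/").filter(Boolean);
  for (const route of routes) {
    if (route.verb !== verb) continue;
    const routeParts = route.path.split("/").filter(Boolean);
    if (routeParts.length !== urlParts.length) continue;
    const params = {};
    const matches = routeParts.every((part, i) => {
      if (part.startsWith(":")) {
        params[part.slice(1)] = decodeURIComponent(urlParts[i]);
        return true;
      }
      return part === urlParts[i];
    });
    if (matches) return { handler: route.handler, params };
  }
  return null; // no endpoint matches: the server would answer 404
}

console.log(matchRoute("GET", "/games/abc123/alice"));
```

Note that `/games/:id` and `/games/:id/:playername` never collide, because they differ in segment count; only the verb and the number of segments decide which one applies.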

Let me go into a bit more detail about some of the concepts I described in the previous list.

Client Apps

The client applications will need to register with the system to start using it. All endpoints (except for the first one on the list) are secured and require a valid application key to be sent with the request. To obtain that key, client apps simply need to request one. Once issued, keys remain valid for as long as they are used and expire after a month without use. This behavior is controlled by storing the key in Redis with a one-month TTL.
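To make the expiration behavior concrete without requiring a Redis server, here is a hypothetical in-memory stand-in for keys with a TTL. It assumes that keys lasting "as long as they are used" means the TTL is reset on every successful validation (with Redis, that would be an `EXPIRE key <seconds>` call on each use, after an initial `SET key value EX <seconds>`). The class and method names are illustrative, "one month" is approximated as 30 days, and the current time is passed in so the behavior is easy to test.

```javascript
const { randomUUID } = require("node:crypto");

const ONE_MONTH_MS = 30 * 24 * 60 * 60 * 1000; // "one month" approximated as 30 days

// Hypothetical in-memory stand-in for Redis keys with a TTL.
class ClientKeyStore {
  constructor(ttlMs = ONE_MONTH_MS) {
    this.ttlMs = ttlMs;
    this.expiresAt = new Map(); // client key -> expiry timestamp (ms)
  }

  // POST /clients: issue a new key (like SET key value EX <ttl> in Redis).
  register(now = Date.now()) {
    const key = randomUUID();
    this.expiresAt.set(key, now + this.ttlMs);
    return key;
  }

  // Validate a key on a secured endpoint; a valid key gets its TTL reset
  // (like EXPIRE key <ttl>), so only a month of inactivity expires it.
  validate(key, now = Date.now()) {
    const expiry = this.expiresAt.get(key);
    if (expiry === undefined || expiry <= now) {
      this.expiresAt.delete(key); // lazily drop expired (or unknown) keys
      return false;
    }
    this.expiresAt.set(key, now + this.ttlMs);
    return true;
  }
}

// Usage
const DAY_MS = 24 * 60 * 60 * 1000;
const store = new ClientKeyStore();
const t0 = Date.now();
const key = store.register(t0);
console.log(store.validate(key, t0 + 20 * DAY_MS)); // true (TTL reset here)
console.log(store.validate(key, t0 + 45 * DAY_MS)); // true: 25 days since last use
console.log(store.validate(key, t0 + 80 * DAY_MS)); // false: 35 days idle
```

With a real Redis server, the expiry would be enforced by Redis itself rather than checked lazily as in this stand-in.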

Game Instance

Creating a new game basically means creating a new instance of a particular game. This new instance will contain a copy of all of the scenes and their content. Any modifications made to the game will only affect that party. This way, many groups can play the same game, each in their own way.
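A minimal sketch of creating an instance, assuming a game definition is a plain object with an `id` and a `scenes` array. The article lists the uuid module for IDs; to stay dependency-free, this sketch uses Node’s built-in `crypto.randomUUID()` instead, and `structuredClone` (Node.js 17+) for the deep copy. The function and field names are hypothetical.

```javascript
const { randomUUID } = require("node:crypto");

// Hypothetical sketch: create a game instance from a game definition.
// Each instance gets its own deep copy of the scenes, so one party's changes
// (items picked up, statuses set) never leak into another party's game.
function createGameInstance(definition) {
  return {
    id: randomUUID(), // returned to the client so other players can join
    gameId: definition.id,
    scenes: structuredClone(definition.scenes), // deep copy (Node.js 17+)
    statuses: new Set(), // game-level statuses for this party only
    players: new Map(), // player name -> player state
  };
}

// Usage: two parties playing the same game independently.
const cave = { id: "cave", scenes: [{ id: "entrance", items: ["lamp"] }] };
const partyA = createGameInstance(cave);
const partyB = createGameInstance(cave);
partyA.scenes[0].items.pop(); // party A picks up the lamp
console.log(partyA.scenes[0].items, partyB.scenes[0].items); // [] [ 'lamp' ]
```

The deep copy is what guarantees isolation: a shallow copy of `scenes` would still share the inner `items` arrays between parties.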

Player’s Game State

This is similar to the previous one, but unique to each player. While the game instance holds the game state for the entire party, the player’s game state holds the current status for one particular player. Mainly, this holds inventory, position, current scene and HP (health points).
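The following sketch shows what a per-player state could look like and how joining a game (POST /games/:id) and checking the inventory might use it. It assumes the instance stores players in a `Map` keyed by name and that its first scene is the starting scene; the field names, the starting HP of 100, and the function names are all hypothetical.

```javascript
// Hypothetical sketch of a player's game state and of joining a game
// (POST /games/:id). Field names and the starting HP are assumptions.
const STARTING_HP = 100;

function createPlayerState(name, startingSceneId) {
  return {
    name,
    hp: STARTING_HP, // health points
    currentScene: startingSceneId,
    position: null, // meaning depends on the game definition (assumption)
    inventory: [],
  };
}

// Add a player to a game instance; assumes `instance.players` is a Map
// and that the first scene in the instance is the starting scene.
function joinGame(instance, playerName) {
  if (instance.players.has(playerName)) {
    throw new Error(`Player "${playerName}" has already joined this game`);
  }
  const state = createPlayerState(playerName, instance.scenes[0].id);
  instance.players.set(playerName, state);
  return state;
}

// "Check inventory" only needs the player's own state, not the scene.
function checkInventory(instance, playerName) {
  const player = instance.players.get(playerName);
  if (!player) throw new Error(`Unknown player "${playerName}"`);
  return [...player.inventory]; // copy so callers cannot mutate the state
}

// Usage
const instance = { scenes: [{ id: "entrance" }], players: new Map() };
joinGame(instance, "alice");
console.log(checkInventory(instance, "alice")); // []
```

Keeping this state separate from the party-wide instance is what lets each player carry a different inventory and HP while sharing the same scenes.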

Player Commands