#nvidianemo

Explore tagged Tumblr posts

Text

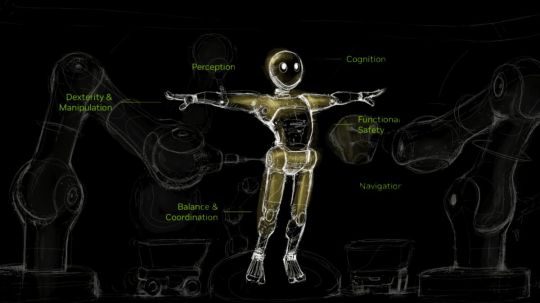

NVIDIA Project GR00T: Future of Robotics Like Human Machines

NVIDIA Develops New AI and Simulation Tools to Promote Humanoid Development and Robot Learning. Robot dexterity, control, manipulation, and mobility will be accelerated by new Project GR00T processes and AI world model building technologies.

At this week’s Conference for Robot Learning (CoRL) in Munich, Germany, NVIDIA unveiled new AI and simulation tools and processes that would significantly speed up robotics developers’ work on AI-enabled robots, including humanoids.

The lineup includes six new humanoid robot learning workflows for Project GR00T, an initiative to speed up the development of humanoid robots; new world-model development tools for video data curation and processing, such as the NVIDIA Cosmos tokenizer and NVIDIA NeMo Curator for video processing; and the general release of the NVIDIA Isaac Lab robot learning framework.

By decomposing photos and videos into premium tokens with very high compression rates, the open-source Cosmos tokenizer offers robotics developers superb visual tokenization. NeMo Curator offers video processing curation up to 7 times quicker than unoptimized pipelines, and it operates up to 12 times faster than existing tokenizers.

In conjunction with CoRL, NVIDIA offered developer workflow and training guidelines and presented 23 papers and nine workshops on robot learning. Hugging Face and NVIDIA also revealed that they would work together to help the developer community advance open-source robotics research via LeRobot, NVIDIA Isaac Lab, and NVIDIA Jetson.

Accelerating Robot Development With Isaac Lab

NVIDIA Omniverse, a platform for creating OpenUSD applications for industrial digitalization and physical AI simulation, serves as the foundation for Isaac Lab, an open-source robot learning framework.

Robot policies may be trained at scale by developers using Isaac Lab. Any embodiment, including humanoids, quadrupeds, and collaborative robots, may use this open-source unified robot learning framework to manage ever more intricate motions and interactions.

Prominent global robotics research organizations, robotics application developers, and commercial robot manufacturers, such as 1X, Agility Robotics, The AI Institute, Berkeley Humanoid, Boston Dynamics, Field AI, Fourier, Galbot, Mentee Robotics, Skild AI, Swiss-Mile, Unitree Robotics, and XPENG Robotics, are embracing Isaac Lab.

Project GR00T: Foundations for General-Purpose Humanoid Robots

Advanced humanoids are very challenging to build, requiring multidisciplinary and multilayer technical techniques to enable the robots to detect, move, and develop skills for interactions with humans and the environment.

An program called Project GR00T aims to speed up the worldwide ecosystem of humanoid robot developers by creating accelerated libraries, foundation models, and data pipelines.

Humanoid developers may use six new Project GR00T procedures as blueprints to achieve the most difficult humanoid robot skills. Among them are:

GR00T-Gen for creating OpenUSD-based 3D environments driven by generative AI

GR00T-Mimic for generating robot motion and trajectory

GR00T-Dexterity for manipulating robots with dexterity

Whole-body control using GR00T-Control

GR00T-Mobility for navigation and movement of robots

GR00T-Multimodal Sensing Perception

According to NVIDIA’s senior research manager of embodied AI, “Humanoid robots are the next wave of embodied AI.” “To further the advancement and development of humanoid robot developers worldwide, NVIDIA research and engineering teams are working together throughout the organization and our developer ecosystem to build Project GR00T.“

New Development Tools for World Model Builders

These days, world models AI representations of the world are being created by robot developers to forecast how settings and things will react to a robot’s movements. Thousands of hours of carefully selected, real-world picture or video data are needed to build these world models, which are very computationally and data-intensive.

NVIDIA Cosmos tokenizers make it easier to create these world models by offering effective, high-quality encoding and decoding. They enable high-quality video and picture reconstructions by setting a new benchmark for low distortion and temporal instability.

The Cosmos tokenizer opens the way for the scalable, reliable, and effective creation of generative applications across a wide range of visual domains by offering high-quality compression and up to 12x quicker visual reconstruction.

The Cosmos tokenizer has been included into the 1X World Model Challenge dataset by the humanoid robot firm 1X.

The NVIDIA Cosmos tokenizer is being used by other humanoid and general-purpose robot developers, such as XPENG Robotics and Hillbot, to handle high-resolution photos and movies.

A pipeline for video processing is now included of NeMo Curator. This makes it possible for robot makers to handle vast amounts of text, picture, and video data and increase the accuracy of their world-model.

Due to its enormous volume, curating video data presents difficulties that call for effective orchestration for load balancing across GPUs and scalable pipelines. To increase throughput, filtering, captioning, and embedding models must also be optimized.

By automating pipeline orchestration and simplifying data curation, NeMo Curator overcomes these obstacles and drastically cuts down on processing time. Over 100 petabytes of data may be handled effectively with its capability for linear scalability over multi-node, multi-GPU systems. This speeds up time to market, lowers costs, and streamlines AI development.

Advancing the Robot Learning Community at CoRL

The NVIDIA robotics team published almost two dozen research papers with CoRL that discuss advances in temporal robot navigation, integrating vision language models for better environmental understanding and task execution, generating long-term planning solutions for large multistep activities and learning skills via human examples.

HOVER, a robot foundation model for managing humanoid robot locomotion and manipulation, and Skill Gen, a method based on synthetic data production for teaching robots with minimum human demonstrations, are two groundbreaking articles for humanoid robot control and synthetic data generation.

Availability

NVIDIA Isaac Lab 1.2 is open source and is accessible on GitHub. The NVIDIA Cosmos tokenizer is now accessible on Hugging Face and GitHub. At the end of the month, NeMo Curator for video processing will be accessible.

The upcoming NVIDIA Project GR00T procedures will make it easier for robot manufacturers to develop humanoid robot capabilities. Developer tutorials and instructions, including a migration route from Isaac Gym to Isaac Lab, are now available to researchers and developers learning how to utilize Isaac Lab.

Read more on Govindhtech.com

#ProjectGR00T#Robotics#NVIDIAProjectGR00T#ArtificialIntelligence#RobotLearning#NVIDIANeMo#NVIDIAIsaacLab#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

0 notes

Text

Physical AI System Simulations For Industrial Applications

The Three Computer Solution: Driving the Upcoming AI Robotics Revolution.

Training, simulation, and inference are being used to speed up industrial, Physical AI-based systems, ranging from factories to humanoids.

For generative AI, ChatGPT was the big bang moment. Almost every inquiry may have an answer produced, revolutionizing digital work for knowledge workers in areas including software development, customer support, content production, and company management.

Artificial intelligence in the form of physical AI, which is found in factories, humanoids, and other industrial systems, has not yet reached a breakthrough.

This has slowed down sectors including manufacturing, logistics, robotics, and transportation and mobility. However, three computers that combine sophisticated training, simulation, and inference are about to alter that.

The Development of Multimodal, Physical AI

For sixty years, human programmers’ “Software 1.0” serial code operated on CPU-powered general-purpose computers.

Geoffrey Hinton and Ilya Sutskever helped Alex Krizhevsky win the 2012 ImageNet computer image identification competition using AlexNet, a pioneering deep learning model for picture categorization.

This was the first industrial AI usage. The advent of GPU-based machine learning neural networks sparked the Software 2.0 era.

Software now creates software. Moore’s law is being left far behind as the world’s computing workloads move from general-purpose computing on CPUs to accelerated computing on GPUs.

Diffusion and multimodal transformer models have been taught to provide responses using generative AI.

The next token in modes like letters or words may be predicted using large language models, which are one-dimensional. Two-dimensional models that can anticipate the next pixel are used to generate images and videos.

The three-dimensional reality is beyond the comprehension and interpretation of these models. Physical AI then enters the picture.

With generative AI, physical AI models are able to see, comprehend, engage with, and traverse the physical environment. The utility of physical AI via robotics is becoming more widely recognized because to advancements in multimodal physical AI, faster computation, and large-scale physically based simulations.

Any system that has the ability to see, think, plan, act, and learn is called a robot. Many people think of robots as humanoids, manipulator arms, or autonomous mobile robots (AMRs). However, there are several more kinds of robotic embodiments.

Autonomous robotic systems will soon be used for anything that moves or that keeps an eye on moving objects. These devices will be able to perceive their surroundings and react accordingly.

Physical AI will replace static, humanly run systems in a variety of settings, including data centers, factories, operating rooms, traffic management systems, and even whole smart cities.Image Credits To NVIDIA

Humanoids and Robots: The Next Frontier

Because they can function well in settings designed for people and need little modification for deployment and operation, humanoid robots are the perfect example of a general-purpose robotic manifestation.

Goldman Sachs estimates that the worldwide market for humanoid robots would grow to $38 billion by 2035, more than six times the $6 billion predicted for the same period only two years ago.

Globally, scientists and engineers are vying to create this next generation of robots.Image Credits To NVIDIA

Three Computers for Physical AI Development

Three accelerated computer systems are needed to manage physical AI and robot training, simulation, and runtime in order to create humanoid robots. The development of humanoid robots is being accelerated by two developments in computing: scalable, physically based simulations of robots and their environments, as well as multimodal foundation models.

Robots now have 3D vision, control, skill planning, and intelligence with to advancements in generative AI. Developers may hone, test, and perfect robot abilities in a virtual environment that replicates the laws of physics via large-scale robot simulation, which lowers the cost of real-world data collecting and guarantees that the robots can operate in safe, regulated environments.

To help developers build physical AI, NVIDIA has constructed three processors and faster development platforms.

First, models are trained on a supercomputer:NVIDIA NeMo on the NVIDIA DGX platform allows developers to train and optimize robust foundation and generative AI models. Additionally, they may use NVIDIA Project GR00T, which aims to create general-purpose foundation models for humanoid robots so that they can mimic human gestures and comprehend spoken language.

Second: using application programming interfaces and frameworks such as NVIDIA Isaac Sim, NVIDIA Omniverse, which runs on NVIDIA OVX servers, offers the simulation environment and development platform for testing and refining physical AI.

Developers may create vast quantities of physically based synthetic data to bootstrap robot model training, or they can utilize Isaac Sim to simulate and test robot models. To speed up robot policy training and improvement, researchers and developers may also use NVIDIA Isaac Lab, an open-source robot learning framework that underpins robot imitation learning and reinforcement learning.Image Credit to NVIDIA

Finally, a runtime computer receives taught AI models: For small, on-board computing requirements, NVIDIA created the Jetson Thor robotics processors. The robot brain is a collection of models that are installed on a power-efficient on-board edge computing system. These models include control policy, vision, and language models.

Robot manufacturers and foundation model developers may employ as many of the accelerated computing platforms and systems as necessary, depending on their workflows and areas of complexity.

Constructing the Upcoming Generation of Self-Sustained Facilities

All of these technologies come together to create robotic facilities.

Teams of autonomous robots may be coordinated by manufacturers like Foxconn or logistics firms like Amazon Robotics to assist human workers and keep an eye on manufacturing operations using hundreds or thousands of sensors.

Digital twins will be used in these self-sufficient industries, plants, and warehouses. The digital twins are used for operations simulation, layout design and optimization, and above all software-in-the-loop testing for robot fleets.

��Mega,” a factory digital twin blueprint built on Omniverse, allows businesses to test and improve their fleets of robots in a virtual environment before deploying them to actual plants. This promotes minimum disturbance, excellent performance, and smooth integration.Image Credit To NVIDIA

Mega enables developers to add virtual robots and their AI models the robots’ brains to their manufacturing digital twins. In the digital twin, robots carry out tasks by sensing their surroundings, using reasoning, deciding how to go next, and then carrying out the planned activities.

The world simulator in Omniverse simulates these activities in the digital environment, and Omniverse sensor simulation allows the robot brains to observe the outcomes.

While Mega painstakingly monitors the condition and location of each component inside the manufacturing digital twin, the robot brains use sensor simulations to determine the next course of action, and the cycle repeats.

Within the secure environment of the Omniverse digital twin, industrial firms may simulate and verify modifications using this sophisticated software-in-the-loop testing process. This helps them anticipate and mitigate possible difficulties to lower risk and costs during real-world deployment.Image Credits To NVIDIA

Using NVIDIA Technology to Empower the Developer Ecosystem With three computers, NVIDIA speeds up the work of the worldwide robotics development and robot foundation model building ecosystem.

Empowering the Developer Ecosystem With NVIDIA Technology

In order to create UR AI Accelerator, a ready-to-use hardware and software toolkit that helps cobot developers create applications, speed up development, and shorten the time to market of AI products, Universal Robots, a Teradyne Robotics company, used NVIDIA Isaac Manipulator, Isaac accelerated libraries, and AI models, as well as NVIDIA Jetson Orin.

The NVIDIA Isaac Perceptor was used by RGo Robotics to assist its wheel. Because AMRs have human-like vision and visual-spatial knowledge, they can operate anywhere, at any time, and make wise judgments.

NVIDIA’s robotics development platform is being used by humanoid robot manufacturers such as 1X Technologies, Agility Robotics, Apptronik, Boston Dynamics, Fourier, Galbot, Mentee, Sanctuary AI, Unitree Robotics, and XPENG Robotics.

Boston Dynamics is working with Isaac Sim and Isaac Lab to develop humanoid robots and quadrupeds to increase human productivity, address labor shortages, and put warehouse safety first.

Fourier is using Isaac Sim to teach humanoid robots to work in industries including manufacturing, healthcare, and scientific research that need a high degree of interaction and flexibility.

Galbot pioneered the creation of a simulation environment for assessing dexterous grasping models and a large-scale robotic dexterous grasp dataset called DexGraspNet that can be used to various dexterous robotic hands using Isaac Lab and Isaac Sim.

Using the Isaac platform and Isaac Lab, Field AI created risk-bounded multitask and multifunctional foundation models that allow robots to work safely in outside field conditions.

The physical AI age has arrived and is revolutionizing robotics and heavy industries worldwide.

Read more on Govindhtech.com

#physicalAI#AI#ArtificialIntelligence#Nvidia#NVIDIADGX#nvidianemo#govindhtech#news#Technology#technews#technologynews#technologytrends

0 notes

Text

NVIDIA NeMo Retriever Microservices Improves LLM Accuracy

NVIDIA NIM inference microservices

AI, Get Up! Businesses can unleash the potential of their business data with production-ready NVIDIA NIM inference microservices for retrieval-augmented generation, integrated into the Cohesity, DataStax, NetApp, and Snowflake platforms. The new NVIDIA NeMo Retriever Microservices Boost LLM Accuracy and Throughput.

Applications of generative AI are worthless, or even harmful, without accuracy, and data is the foundation of accuracy.

NVIDIA today unveiled four new NVIDIA NeMo Retriever NIM inference microservices, designed to assist developers in quickly retrieving the best proprietary data to produce informed responses for their AI applications.

NeMo Retriever NIM microservices, when coupled with the today-announced NVIDIA NIM inference microservices for the Llama 3.1 model collection, allow enterprises to scale to agentic AI workflow, where AI applications operate accurately with minimal supervision or intervention, while delivering the highest accuracy retrieval-augmented generation, or RAG.

Nemo Retriever

With NeMo Retriever, businesses can easily link bespoke models to a variety of corporate data sources and use RAG to provide AI applications with incredibly accurate results. To put it simply, the production-ready microservices make it possible to construct extremely accurate AI applications by enabling highly accurate information retrieval.

NeMo Retriever, for instance, can increase model throughput and accuracy for developers building AI agents and chatbots for customer support, identifying security flaws, or deriving meaning from intricate supply chain data.

High-performance, user-friendly, enterprise-grade inferencing is made possible by NIM inference microservices. The NeMo Retriever NIM microservices enable developers to leverage all of this while leveraging their data to an even greater extent.

Nvidia Nemo Retriever

These recently released NeMo Retriever microservices for embedding and reranking NIM are now widely accessible:

NV-EmbedQA-E5-v5, a well-liked embedding model from the community that is tailored for text retrieval questions and answers.

Snowflake-Arctic-Embed-L, an optimized community model;

NV-RerankQA-Mistral4B-v3, a popular community base model optimized for text reranking for high-accuracy question answering;

NV-EmbedQA-Mistral7B-v2, a well-liked multilingual community base model fine-tuned for text embedding for correct question answering.

They become a part of the group of NIM microservices that are conveniently available via the NVIDIA API catalogue.

Model Embedding and Reranking

The two model types that make up the NeMo Retriever microservices embedding and reranking have both open and commercial versions that guarantee dependability and transparency.

With the purpose of preserving their meaning and subtleties, an embedding model converts a variety of data types, including text, photos, charts, and video, into numerical vectors that can be kept in a vector database. Compared to conventional large language models, or LLMs, embedding models are quicker and less expensive computationally.

After ingesting data and a query, a reranking model ranks the data based on how relevant it is to the query. These models are slower and more computationally complex than embedding models, but they provide notable improvements in accuracy.Image Credit To Nvidia

NeMo Retriever microservices offers advantages over other options. Developers utilising NeMo Retriever microservices may create a pipeline that guarantees the most accurate and helpful results for their company by employing an embedding NIM to cast a wide net of data to be retrieved, followed by a reranking NIM to cut the results for relevancy.

Developers can create the most accurate text Q&A retrieval pipelines by using the state-of-the-art open, commercial models available with NeMo NIM Retriever. NeMo Retriever microservices produced 30% less erroneous responses for enterprise question answering when compared to alternative solutions.Image Credit To Nvidia

NeMo Retriever microservices Principal Use Cases

NeMo Retriever microservices drives numerous AI applications, ranging from data-driven analytics to RAG and AI agent solutions.

With the help of NeMo Retriever microservices, intelligent chatbots with precise, context-aware responses can be created. They can assist in the analysis of enormous data sets to find security flaws. They can help glean insights from intricate supply chain data. Among other things, they can improve AI-enabled retail shopping advisors that provide organic, tailored shopping experiences.

For many use cases, NVIDIA AI workflows offer a simple, supported beginning point for creating generative AI-powered products.

NeMo Retriever NIM microservices are being used by dozens of NVIDIA data platform partners to increase the accuracy and throughput of their AI models.

NIM microservices

With the integration of NeMo Retriever integrating NIM microservices in its Hyper-Converged and Astra DB systems, DataStax is able to provide customers with more rapid time to market with precise, generative AI-enhanced RAG capabilities.

With the integration of NVIDIA NeMo Retriever microservices with Cohesity Gaia, the AI platform from Cohesity will enable users to leverage their data to drive smart and revolutionary generative AI applications via RAG.

Utilising NVIDIA NeMo Retriever, Kinetica will create LLM agents that can converse naturally with intricate networks in order to react to disruptions or security breaches faster and translate information into prompt action.

In order to link NeMo Retriever microservices to exabytes of data on its intelligent data infrastructure, NetApp and NVIDIA are working together. Without sacrificing data security or privacy, any NetApp ONTAP customer will be able to “talk to their data” in a seamless manner to obtain proprietary business insights.

Services to assist businesses in integrating NeMo Retriever NIM microservices into their AI pipelines are being developed by NVIDIA’s global system integrator partners, which include Accenture, Deloitte, Infosys, LTTS, Tata Consultancy Services, Tech Mahindra, and Wipro, in addition to their service delivery partners, Data Monsters, EXLService (Ireland) Limited, Latentview, Quantiphi, Slalom, SoftServe, and Tredence.

Nvidia NIM Microservices

Utilize Alongside Other NIM Microservices

NVIDIA Riva NIM microservices, which boost voice AI applications across industries increasing customer service and enlivening digital humans, can be used with NeMo Retriever microservices.

The record-breaking NVIDIA Parakeet family of automatic speech recognition models, Fastpitch and HiFi-GAN for text-to-speech applications, and Metatron for multilingual neural machine translation are among the new models that will soon be available as Riva NIM microservices.

The modular nature of NVIDIA NIM microservices allows developers to create AI applications in a variety of ways. To give developers even more freedom, the microservices can be connected with community models, NVIDIA models, or users’ bespoke models in the cloud, on-premises, or in hybrid settings.

Businesses may use NIM to implement AI apps in production by utilising the NVIDIA AI Enterprise software platform.

NVIDIA-Certified Systems from international server manufacturing partners like Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro, as well as cloud instances from Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure, can run NIM microservices on customers’ preferred accelerated infrastructure.

Members of the NVIDIA Developer Program will soon have free access to NIM for

Read more on govindhtech.com

#NVIDIANeMo#RetrieverMicroservices#generativeAI#ImprovesLLMAccuracy#NVIDIANIMinferencemicroservices#Llama31model#AIapplications#AIagents#supplychain#NVIDIAAPI#llargelanguagemodels#text#NVIDIAAI#AmazonWebServices#MicrosoftAzure#NVIDIAmodels#GoogleCloud#technews#technology#news#govindhtech

0 notes

Text

NVIDIA AI Foundry Custom Models NeMo Retriever microservice

How Businesses Can Create Personalized Generative AI Models with NVIDIA AI Foundry.

NVIDIA AI Foundry

Companies looking to use AI need specialized Custom models made to fit their particular sector requirements.

With the use of software tools, accelerated computation, and data, businesses can build and implement unique models with NVIDIA AI Foundry, a service that may significantly boost their generative AI projects.

Similar to how TSMC produces chips made by other firms, NVIDIA AI Foundry offers the infrastructure and resources needed by other businesses to create and modify AI models. These resources include DGX Cloud, foundation models, NVIDIA NeMo software, NVIDIA knowledge, ecosystem tools, and support.

The product is the primary distinction: NVIDIA AI Foundry assists in the creation of Custom models, whereas TSMC manufactures actual semiconductor chips. Both foster creativity and provide access to a huge network of resources and collaborators.

Businesses can use AI Foundry to personalise NVIDIA and open Custom models models, such as NVIDIA Nemotron, CodeGemma by Google DeepMind, CodeLlama, Gemma by Google DeepMind, Mistral, Mixtral, Phi-3, StarCoder2, and others. This includes the recently released Llama 3.1 collection.

AI Innovation is Driven by Industry Pioneers

Among the first companies to use NVIDIA AI Foundry are industry leaders Amdocs, Capital One, Getty Images, KT, Hyundai Motor Company, SAP, ServiceNow, and Snowflake. A new era of AI-driven innovation in corporate software, technology, communications, and media is being ushered in by these trailblazers.

According to Jeremy Barnes, vice president of AI Product at ServiceNow, “organizations deploying AI can gain a competitive edge with Custom models that incorporate industry and business knowledge.” “ServiceNow is refining and deploying models that can easily integrate within customers’ existing workflows by utilising NVIDIA AI Foundry.”

The NVIDIA AI Foundry’s Foundation

The foundation models, corporate software, rapid computing, expert support, and extensive partner ecosystem are the main pillars that underpin NVIDIA AI Foundry.

Its software comprises the whole software platform for expediting model building, as well as AI foundation models from NVIDIA and the AI community.

NVIDIA DGX Cloud, a network of accelerated compute resources co-engineered with the top public clouds in the world Amazon Web Services, Google Cloud, and Oracle Cloud Infrastructure is the computational powerhouse of NVIDIA AI Foundry. Customers of AI Foundry may use DGX Cloud to grow their AI projects as needed without having to make large upfront hardware investments.

They can also create and optimize unique generative AI applications with previously unheard-of ease and efficiency. This adaptability is essential for companies trying to remain nimble in a market that is changing quickly.

NVIDIA AI Enterprise specialists are available to support customers of NVIDIA AI Foundry if they require assistance. In order to ensure that the models closely match their business requirements, NVIDIA experts may guide customers through every stage of the process of developing, optimizing, and deploying their models using private data.

Customers of NVIDIA AI Foundry have access to a worldwide network of partners who can offer a comprehensive range of support. Among the NVIDIA partners offering AI Foundry consulting services are Accenture, Deloitte, Infosys, and Wipro. These services cover the design, implementation, and management of AI-driven digital transformation initiatives. Accenture is the first to provide the Accenture AI Refinery framework, an AI Foundry-based solution for creating Custom models.

Furthermore, companies can get assistance from service delivery partners like Data Monsters, Quantiphi, Slalom, and SoftServe in navigating the challenges of incorporating AI into their current IT environments and making sure that these applications are secure, scalable, and in line with business goals.

Using AIOps and MLOps platforms from NVIDIA partners, such as Cleanlab, DataDog, Dataiku, Dataloop, DataRobot, Domino Data Lab, Fiddler AI, New Relic, Scale, and Weights & Biases, customers may create production-ready NVIDIA AI Foundry models.

Nemo retriever microservice

Clients can export their AI Foundry models as NVIDIA NIM inference microservices, which can be used on their choice accelerated infrastructure. These microservices comprise the Custom models, optimized engines, and a standard API.

NVIDIA TensorRT-LLM and other inferencing methods increase Llama 3.1 model efficiency by reducing latency and maximizing throughput. This lowers the overall cost of operating the models in production and allows businesses to create tokens more quickly. The NVIDIA AI Enterprise software bundle offers security and support that is suitable for an enterprise.

Along with cloud instances from Amazon Web Services, Google Cloud, and Oracle Cloud Infrastructure, the extensive array of deployment options includes NVIDIA-Certified Systems from worldwide server manufacturing partners like Cisco, Dell, HPE, Lenovo, and Supermicro.

Furthermore, Together AI, a leading cloud provider for AI acceleration, announced today that it will make Llama 3.1 endpoints and other open models available on DGX Cloud through the usage of its NVIDIA GPU-accelerated inference stack, which is accessible to its ecosystem of over 100,000 developers and businesses.

According to Together AI’s founder and CEO, Vipul Ved Prakash, “every enterprise running generative AI applications wants a faster user experience, with greater efficiency and lower cost.” “With NVIDIA DGX Cloud, developers and businesses can now optimize performance, scalability, and security by utilising the Together Inference Engine.”

NVIDIA NeMo

NVIDIA NeMo Accelerates and Simplifies the Creation of Custom Models

Developers can now easily curate data, modify foundation models, and assess performance using the capabilities provided by NVIDIA NeMo integrated into AI Foundry. NeMo technologies consist of:

A GPU-accelerated data-curation package called NeMo Curator enhances the performance of generative AI models by preparing large-scale, high-quality datasets for pretraining and fine-tuning.

NeMo Customizer is a scalable, high-performance microservice that makes it easier to align and fine-tune LLMs for use cases specific to a given domain.

On any accelerated cloud or data centre, NeMo Evaluator offers autonomous evaluation of generative AI models across bespoke and academic standards.

NeMo Guardrails is a dialogue management orchestrator that supports security, appropriateness, and correctness in large-scale language model smart applications, hence offering protection for generative AI applications.

Businesses can construct unique AI models that are perfectly matched to their needs by utilising the NeMo platform in NVIDIA AI Foundry.

Better alignment with strategic objectives, increased decision-making accuracy, and increased operational efficiency are all made possible by this customization.

For example, businesses can create models that comprehend industry-specific vernacular, adhere to legal specifications, and perform in unison with current processes.

According to Philipp Herzig, chief AI officer at SAP, “as a next step of their partnership, SAP plans to use NVIDIA’s NeMo platform to help businesses to accelerate AI-driven productivity powered by SAP Business AI.”

NeMo Retriever

NeMo Retriever microservice

Businesses can utilize NVIDIA NeMo Retriever NIM inference microservices to implement their own AI models in a live environment. With retrieval-augmented generation (RAG), these assist developers in retrieving private data to provide intelligent solutions for their AI applications.

According to Baris Gultekin, Head of AI at Snowflake, “safe, trustworthy AI is a non-negotiable for enterprises harnessing generative AI, with retrieval accuracy directly impacting the relevance and quality of generated responses in RAG systems.” “NeMo Retriever, a part of NVIDIA AI Foundry, is leveraged by Snowflake Cortex AI to further provide enterprises with simple, reliable answers using their custom data.”

Custom Models

Custom Models Provide a Competitive Edge

The capacity of NVIDIA AI Foundry to handle the particular difficulties that businesses encounter while implementing AI is one of its main benefits. Specific business demands and data security requirements may not be fully satisfied by generic AI models. On the other hand, Custom models are more flexible, adaptable, and perform better, which makes them perfect for businesses looking to get a competitive edge.

Read more on govindhtech.com

#NVIDIAAI#CustomModels#NeMoRetriever#microservice#GenerativeAI#NVIDIA#AI#projectsAI#DGXCloud#NVIDIANeMo#NVIDIAAIFoundry#GoogleDeepMind#Gemma#AmazonWebServices#NVIDIADGXCloud#AIapplications#MLOpsplatforms#NVIDIANIM#GoogleCloud#aimodel#technology#technews#news#govindhteh

0 notes

Text

Mistral NeMo: Powerful 12 Billion Parameter Language Model

Mistral NeMo 12B

Today, Mistral AI and NVIDIA unveiled Mistral NeMo 12B, a brand-new, cutting-edge language model that is simple for developers to customise and implement for enterprise apps that enable summarising, coding, a chatbots, and multilingual jobs.

The Mistral NeMo model provides great performance for a variety of applications by fusing NVIDIA’s optimised hardware and software ecosystem with Mistral AI‘s training data knowledge.

Guillaume Lample, cofounder and chief scientist of Mistral AI, said, “NVIDIA is fortunate to collaborate with the NVIDIA team, leveraging their top-tier hardware and software.” “With the help of NVIDIA AI Enterprise deployment, They have created a model with previously unheard-of levels of accuracy, flexibility, high efficiency, enterprise-grade support, and security.”

On the NVIDIA DGX Cloud AI platform, which provides devoted, scalable access to the most recent NVIDIA architecture, Mistral NeMo received its training.

The approach was further advanced and optimised with the help of NVIDIA TensorRT-LLM for improved inference performance on big language models and the NVIDIA NeMo development platform for creating unique generative AI models.

This partnership demonstrates NVIDIA’s dedication to bolstering the model-builder community.

Providing Unprecedented Precision, Adaptability, and Effectiveness

This enterprise-grade AI model performs accurately and dependably on a variety of tasks. It excels in multi-turn conversations, math, common sense thinking, world knowledge, and coding.

Mistral NeMo analyses large amounts of complicated data more accurately and coherently, resulting in results that are relevant to the context thanks to its 128K context length.

Mistral NeMo is a 12-billion-parameter model released under the Apache 2.0 licence, which promotes innovation and supports the larger AI community. The model also employs the FP8 data format for model inference, which minimises memory requirements and expedites deployment without compromising accuracy.

This indicates that the model is perfect for enterprise use cases since it learns tasks more efficiently and manages a variety of scenarios more skillfully.

Mistral NeMo provides performance-optimized inference with NVIDIA TensorRT-LLM engines and is packaged as an NVIDIA NIM inference microservice.

This containerised format offers improved flexibility for a range of applications and facilitates deployment anywhere.

Instead of taking many days, models may now be deployed anywhere in only a few minutes.

As a component of NVIDIA AI Enterprise, NIM offers enterprise-grade software with specialised feature branches, stringent validation procedures, enterprise-grade security, and enterprise-grade support.

It offers dependable and consistent performance and comes with full support, direct access to an NVIDIA AI expert, and specified service-level agreements.

Businesses can easily use Mistral NeMo into commercial solutions thanks to the open model licence.

With its compact design, the Mistral NeMo NIM can be installed on a single NVIDIA L40S, NVIDIA GeForce RTX 4090, or NVIDIA RTX 4500 GPU, providing great performance, reduced computing overhead, and improved security and privacy.

Cutting-Edge Model Creation and Personalisation

Mistral NeMo’s training and inference have been enhanced by the combined knowledge of NVIDIA engineers and Mistral AI.

Equipped with Mistral AI’s proficiencies in multilingualism, coding, and multi-turn content creation, the model gains expedited training on NVIDIA’s whole portfolio.

Its efficient model parallelism approaches, scalability, and mixed precision with Megatron-LM are designed for maximum performance.

3,072 H100 80GB Tensor Core GPUs on DGX Cloud, which is made up of NVIDIA AI architecture, comprising accelerated processing, network fabric, and software to boost training efficiency, were used to train the model using Megatron-LM, a component of NVIDIA NeMo.

Availability and Deployment

Mistral NeMo, equipped with the ability to operate on any cloud, data centre, or RTX workstation, is poised to transform AI applications on a multitude of platforms.

NVIDIA thrilled to present Mistral NeMo, a 12B model created in association with NVIDIA, today. A sizable context window with up to 128k tokens is provided by Mistral NeMo. In its size class, its logic, domain expertise, and coding precision are cutting edge. Because Mistral NeMo is based on standard architecture, it may be easily installed and used as a drop-in replacement in any system that uses Mistral 7B.

To encourage adoption by researchers and businesses, we have made pre-trained base and instruction-tuned checkpoints available under the Apache 2.0 licence. Quantization awareness was incorporated into Mistral NeMo’s training, allowing for FP8 inference without sacrificing performance.

The accuracy of the Mistral NeMo base model and two current open-source pre-trained models, Gemma 2 9B and Llama 3 8B, are compared in the accompanying table.Image Credit to Nvidia

Multilingual Model for the Masses

The concept is intended for use in multilingual, international applications. Hindi, English, French, German, Spanish, Portuguese, Chinese, Japanese, Korean, Arabic, and Spanish are among its strongest languages. It has a big context window and is educated in function calling. This is a fresh step in the direction of making cutting-edge AI models available to everyone in all languages that comprise human civilization.Image Credit to Nvidia

Tekken, a more efficient tokenizer

Tekken, a new tokenizer utilised by Mistral NeMo that is based on Tiktoken and was trained on more than 100 languages, compresses source code and natural language text more effectively than SentencePiece, the tokenizer used by earlier Mistral models. Specifically, it has about a 30% higher compression efficiency for Chinese, Italian, French, German, Spanish, and Russian source code. Additionally, it is three times more effective at compressing Arabic and Korean, respectively. For over 85% of all languages, Tekken demonstrated superior text compression performance when compared to the Llama 3 tokenizer.Image Credit to Nvidia

Instruction adjustment

Mistral NeMO went through a process of advanced alignment and fine-tuning. It is far more adept at reasoning, handling multi-turn conversations, following exact directions, and writing code than Mistral 7B.

Read more on govindhtech.com

#mistralnemo#parameter#mistrai#chatbots#nvidia#hardware#software#nvidiadgxcloud#nvidianemo#nvidiatensorrtllm#generativeai#aimodel#unprecedentedprecision#adaptability#nvidianim#nvidiartx#technology#technews#news#govindhtech

0 notes

Text

The NVIDIA Canvas App: An Introduction Guide to AI Art

NVIDIA Canvas App

This article is a part of the AI Decoded series, which shows off new RTX PC hardware, software, tools, and accelerations while demystifying AI by making the technology more approachable.

AI has totally changed as a result of generative models, which are highlighted by well-known apps like Stable Diffusion and ChatGPT.

Foundational AI models and generative adversarial networks (GANs) spurred a productivity and creative leap, paving the stage for this explosion.

One such model is NVIDIA’s GauGAN, which drives the NVIDIA Canvas app and use AI to turn crude sketches into stunning artwork.

NVIDIA Canvas

AI may be used to create realistic landscape photographs from basic brushstrokes. Make backgrounds fast, or explore concepts more rapidly so you may devote more time on idea visualisation.

Utilise AI’s Potential

Utilise a palette of realistic elements, such as grass or clouds, to paint basic forms and lines. Next, observe in real time how the screen is filled with jaw-dropping outcomes thanks to NVIDIA’s ground-breaking AI model. Dislike what you observe? Change the material from snow to grass, and observe how the whole scene transforms from a wintry paradise to a tropical paradise. There are countless innovative options.

Adaptable Styles

With NVIDIA Canvas app, you can alter your image to precisely what you require. Using nine Standard Mode styles, eight Panorama Mode styles, and a variety of materials from sky and mountains to rivers and stone you may alter the appearance and feel of your painting. Additionally, you can paint on many levels to distinguish distinct elements. One of the included sample scenarios can serve as inspiration, or you can start from scratch.

A Whole 360° Inspiration

With the addition of 360° panoramic functionality, artists can now quickly construct wraparound environments with Canvas and export them as equirectangular environment maps into any 3D application. These maps can be used by artists to alter the surrounding lighting in a 3D scene and add reflections for more realism.

Save as PSD or EXR

After you’ve produced the perfect image, Canvas allows you to upload it into Adobe Photoshop for further editing or blending with other pieces of art. Additionally, pictures can be imported into Blender, NVIDIA Omniverse USD Composer (previously Create), and other 3D programmes using Panorama.

How Everything Started

A generator and a discriminator are two complementing neural networks used in deep learning models called GANs.

There is competition between these neural networks. While the discriminator strives to distinguish between created and actual imagery, the generator aims to produce realistic, lifelike imagery. GANs get increasingly adept at producing realistic-looking samples as long as their neural networks continue to challenge one another.

GANs are excellent at deciphering intricate data patterns and producing output of the highest calibre. Applications for them include data augmentation, style transfer, image synthesis, and picture-to-image translation.

NVIDIA’s AI demonstration for creating lifelike images is called GauGAN, after the post-Impressionist painter Paul Gauguin. Developed by NVIDIA Research, it served as a direct inspiration for the creation of the NVIDIA Canvas app and is available for free download via the NVIDIA AI Playground.

NVIDIA AI Playground

Use NeVA to Unlock Image Insights

A multimodal vision-language model called NVIDIA NeMo Vision and Language Assistant (NeVA) can comprehend text and images and provide insightful answers.

Create Text-Based Images with SDXL

With shorter prompts, Stable Diffusion XL (SDXL) enables you to create expressive visuals and add text to photos.

Handle AI Models Real-Time

With the AI Playground on NGC, you can easily experiment with generative AI models like NeVA, SDXL, Llama 2, and CLIP right from your web browser thanks to its user-friendly interface.

Since its 2019 NVIDIA GTC premiere, GauGAN has gained enormous popularity and is utilised online by millions of people in addition to art educators, creative agencies, and museums.

Adding Sketch to Gogh’s Scenery

With the help of GauGAN and nearby NVIDIA RTX GPUs, NVIDIA Canvas app employs AI to convert basic brushstrokes into lifelike landscapes, with real-time outcomes displayed.

Using a palette of real-world objects like grass or clouds referred as in the app as “materials” users can begin by drawing basic lines and shapes.

The improved image is then produced in real time by the AI model on the other side of the screen. A few triangle shapes drawn with the “mountain” material, for instance, will appear as an amazing, lifelike range. Alternatively, users can choose the “cloud” material and change the weather from sunny to cloudy with a few mouse clicks.

There are countless innovative options. Draw a pond so that the water will reflect other visual features like the rocks and trees. When the material changes from snow to grass, the scene becomes a tropical paradise instead of a warm winter’s environment.

With Canvas’s Panorama mode, artists may produce 360-degree pictures that can be used in 3D applications. Greenskull AI, a YouTuber, painted a cove in the ocean to illustrate Panorama mode before importing it into Unreal Engine 5.

Read more on Govindhtech.com

#nvidiacanvasapp#aimodel#chatgpt#nvidia#NVIDIAOmniverse#nvidianemo#aiplayground#Llama2#nvidiagtc#nvidiartxgpus#artificialintelligence#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes

Text

SoftServe News on Continental Drive with OpenUSD AI

SoftServe News

This article details how NVIDIA’s Omniverse platform is being used for industrial applications through a partnership between technology company SoftServe and car parts manufacturer Continental.

The main conclusions are:

Generative AI for virtual factories:

“Industrial Co-Pilot” is a system being developed to increase industrial efficiency while maintenance is being performed. It makes use of 3D visualisation with generative AI. Envision a virtual engineer assisting engineers with problem-solving and troubleshooting on the production line.

OpenUSD for data interchange:

This is made possible in large part by OpenUSD, a format that facilitates easy data interchange between various software programmes. This implies that engineers can communicate information with ease and utilise a variety of applications.

Advantages of Omniverse usage:

These AI-powered applications are built on top of the Omniverse platform. It provides resources, tools, and features that speed up development, such as real-time rendering. All things considered, this partnership serves as an illustration of how businesses are utilising cutting-edge technologies to enhance and optimise industrial operations.

Automotive innovation is being propelled by industrial digitalization.

SoftServe, a top provider of digital services and IT consulting, partnered with Continental, a top German automaker, to create Industrial Co-Pilot, a generative AI-powered virtual agent that helps engineers optimise maintenance procedures, in response to the industry’s growing need for seamless, connected driving experiences.

By incorporating the Universal Scene Description, or OpenUSD, framework into virtual manufacturing solutions like Industrial Co-Pilot that are created on the NVIDIA Omniverse platform, SoftServe assists producers like Continental in further optimising their operations.

OpenUSD provides the adaptability and scalability businesses require to fully realise the benefits of digital transformation while optimising processes and boosting productivity. Developers may incorporate OpenUSD and NVIDIA RTX rendering technologies into their current software tools and simulation workflows with ease thanks to the Omniverse platform, which offers application programming interfaces, software development kits, and services.

Acknowledging the Advantages of OpenUSD

SoftServe and Continental’s Industrial Co-Pilot combines immersive 3D visualisation with generative AI to support manufacturing teams in boosting output during production line and equipment maintenance. Engineers can monitor the operation of individual stations or the shop floor, as well as manage production lines, with the help of the copilot.

In order to perform root cause analysis and obtain detailed work instructions and recommendations, they can also communicate with the copilot. This can result in streamlined documentation processes and enhanced maintenance protocols. It is anticipated that these developments would lead to higher output and a 10% decrease in maintenance effort and downtime.

Benjamin Huber, who oversees advanced automation and digitalization in Continental’s user experience business division, emphasised the importance of the company’s partnership with SoftServe and its implementation of Omniverse at a recent Omniverse community livestream.

Developers at SoftServe and Continental now have the resources at their disposal to usher in a new era of AI-powered industrial services and apps thanks to the Omniverse platform. Additionally, SoftServe and Continental developers enable engineers to collaborate effortlessly across disciplines and systems, promoting efficiency and innovation throughout their operations, by dismantling data silos and promoting multi-platform cooperation using OpenUSD.

Huber stated, “Any engineer, regardless of the tool they’re using, can transform their data into OpenUSD and then interchange data between tools and disciplines.”

Vasyl Boliuk, senior lead and test automation engineer at SoftServe, echoed this sentiment when he discussed how SoftServe and Continental teams were able to create custom large language models and integrate them with new 3D workflows thanks to OpenUSD and Omniverse, in addition to other NVIDIA technologies like NVIDIA Riva, NVIDIA NeMo, and NVIDIA NIM microservices.

“We can add any feature or piece of metadata to our applications using OpenUSD,” Boliuk said.

SoftServe and Continental are assisting in transforming the car production industry by embracing cutting-edge technologies and encouraging teamwork.

Connect With the NVIDIA OpenUSD World

View Continental and SoftServe’s on-demand content. NVIDIA GTC talks about their virtual factory solutions and their experiences using OpenUSD to develop on NVIDIA Omniverse.

Modernising Digital Mega Plants’ Factory Planning and Manufacturing Processes How to Begin Using OpenUSD and Generative AI for Industrial Metaverse Applications NVIDIA founder and CEO Jensen Huang’s 7 p.m. COMPUTEX speech on Sunday, June 2. Taiwan time will cover the latest advancements driving the next industrial revolution. View a recent series of videos that demonstrate how OpenUSD may enhance 3D workflows. Check out the Alliance for OpenUSD forum and the AOUSD website for additional resources on OpenUSD.

“Into the Omniverse: SoftServe and Continental Drive Digitalization With OpenUSD and Generative AI” is described in full below.

The Participants:

SoftServe:

It is a technology business that offers services for digital transformation and IT consultancy.

Continental:

A well-known producer of auto parts.

NVIDIA Omniverse:

A virtual world and real-time simulation development platform.

The Objective:

Using real-time visualisation and generative AI on the NVIDIA Omniverse platform, revolutionise factory maintenance.

Industrial Co-Pilot is the Solution

This artificial intelligence (AI) system combines captivating 3D visualisation with generative AI.

Picture a virtual helper inside a three-dimensional model of your factory.

Regarding Factory Teams:

Keep an eye on each station and oversee the production lines.

Communicate with the Co-Pilot to determine the source of equipment problems.

Obtain detailed work instructions and maintenance suggestions.

This means that fewer physical manuals will be needed, and maintenance procedures will be simplified.

OpenUSD is the secret ingredient

A data format called OpenUSD serves as a global translator.

It makes it possible for data to be seamlessly transferred between various engineering software programmes.

Engineers can use any software to convert their data to OpenUSD and share it with other engineers using other technologies.

This dismantles data silos and promotes disciplinary collaboration.

The Omniverse’s Power

The basis for developing AI applications like Industrial Co-Pilot and others is provided by Omniverse.

It provides materials and tools for developers such as:

API access: To combine NVIDIA’s RTX rendering technology with OpenUSD.

SDKs, or software development kits, are used to create unique features for the Omniverse platform.

Capabilities for real-time rendering: To build dynamic and lifelike 3D industrial settings.

Advantages:

Enhanced output as a result of quicker and more effective maintenance.

Enhanced cooperation between engineers specialising in different fields.

workflows that are more efficient since there are less paper manuals used.

Possibility of innovation via the creation of fresh industrial AI applications on the Omniverse platform.

All things considered, this partnership shows how cutting-edge technologies like real-time simulation platforms, generative AI, and data interchange formats are revolutionising industrial processes. It sets the stage for a day when artificial intelligence (AI) virtual assistants are frequently used on factory floors to aid human workers and maximise output.

Read more on Govindhtech.com

0 notes

Text

Google Kubernetes Engine adds NVIDIA NeMo for generative AI

Google Kubernetes Engine

Organizations of all sizes, from startups to major corporations, have acted to harness the power of generative AI by integrating it into their platforms, solutions, and applications ever since it became well-known in the AI space. Although producing new content by learning from existing content is where generative AI truly shines, it is increasingly crucial that the content produced has some level of domain- or area-specificity.

This blog post explains how to train models on Google Kubernetes Engine (GKE) using NVIDIA accelerated computing and the NVIDIA NeMo framework, illustrating how generative AI models can be customized to your use cases.

Constructing models for generative AI When building generative AI models, high-quality data (referred to as the “dataset”) is essential. The output of the model is minimized by processing, enhancing, and analyzing data in a variety of formats, including text, code, images, and others. To facilitate the model’s training process, this data is fed into a model architecture based on the model’s modality. For Transformers, this could be text; for GANs (Generative Adversarial Networks), it could be images.

The model modifies its internal parameters during training in order to align its output with the data’s patterns and structures. A decreasing loss on the training set and better predictions on a test set are indicators of the model’s learning progress. The model is deemed to have converged when the performance continues to improve. After that, it might go through additional improvement processes like reinforcement-learning with human feedback (RLHF). To accelerate the rate of model learning, more hyperparameters can be adjusted, such as batch size or learning rate. A framework that provides the necessary constructs and tooling can speed up the process of building and customizing a model, making adoption easier.

What is the relationship between Kubernetes and Google Kubernetes Engine? The open source container orchestration platform Kubernetes is implemented by Google under the management of Google Kubernetes Engine. Google built Kubernetes by using years of expertise running large-scale production workloads on our own cluster management system, Borg.

NVidia NeMo service NVIDIA NeMo is an end-to-end, open-source platform designed specifically for creating personalized, enterprise-class generative AI models. NeMo uses cutting-edge technology from NVIDIA to enable a full workflow, including large-scale bespoke model training, automated distributed data processing, and infrastructure deployment and serving through Google Cloud. NeMo can also be used with NVIDIA AI Enterprise software, which can be purchased on the Google Cloud Marketplace, for enterprise-level deployments.

The modular design of the NeMo framework encourages data scientists, ML engineers, and developers to combine and match these essential elements when creating AI models

Nvidia Nemo framework Data curation Taking information out of datasets, deduplicating it, and filtering it to produce high-quality training data

Distributed training Advanced parallelism in training models is achieved through which distributes workloads among tens of thousands of compute nodes equipped with NVIDIA graphics processing units (GPUs).

Model customization Apply methods like P-tuning, SFT (Supervised Fine Tuning), and RLHF (Reinforcement Learning from Human Feedback) to modify a number of basic, pre-trained models to particular domains.

Deployment

The deployment process involves a smooth integration with the NVIDIA Triton Inference Server, resulting in high throughput, low latency, and accuracy. The NeMo framework offers boundaries to respect the security and safety specifications.

In order to begin the journey toward generative AI, it helps organizations to promote innovation, maximize operational efficiency, and create simple access to software frameworks. To implement NeMo on an HPC system that might have schedulers such as the Slurm workload manager, they suggest utilizing the ML Solution that is accessible via the Cloud HPC Toolkit. Scalable training with GKE Massively parallel processing, fast memory and storage access, and quick networking are necessary for building and modifying models. Furthermore, there are several demands on the infrastructure, including fault tolerance, coordinating distributed workloads, leveraging resources efficiently, scalability for quicker iterations, and scaling large-scale models.

With just one platform to handle all of their workloads, Google Kubernetes Engine gives clients the opportunity to have a more reliable and consistent development process. With its unparalleled scalability and compatibility with a wide range of hardware accelerators, including NVIDIA GPUs, Google Kubernetes Engine serves as a foundation platform that offers the best accelerator orchestration available, helping to save costs and improve performance dramatically.

Let’s examine how Google Kubernetes Engine facilitates easy management of the underlying infrastructure with the aid of Figure 1:

Calculate A single NVIDIA H100 or A100 Tensor Core GPU can be divided into multiple instances, each of which has high-bandwidth memory, cache, and compute cores. These GPUs are known as multi-instance GPUs (MIG). GPUs that share time: A single physical GPU node is utilized by several containers to maximize efficiency and reduce operating expenses. Keepsake High throughput and I/O requirements for local SSD GCS Fuse: permits object-to-file operations Creating a network Network performance can be enhanced by using the GPUDirect-TCPX NCCL plug-in, a transport layer plugin that allows direct GPU to NIC transfers during NCCL communication. To improve network performance between GPU nodes, use a Google Virtual Network Interface Card (gVNIC). Queuing In an environment with limited resources, Kubernetes’ native job queueing system is used to coordinate task execution through completion.

Communities, including other Independent Software Vendors (ISVs), have embraced GKE extensively to land their frameworks, libraries, and tools. GKE democratizes infrastructure by enabling AI model development, training, and deployment for teams of various sizes.

Architecture for solutions According to industry trends in AI and ML, models get significantly better with increased processing power. With the help of NVIDIA GPUs and Google Cloud’s products and services, GKE makes it possible to train and serve models at a scale that leads the industry.

The Reference Architecture, shown in Figure 2 above, shows the main parts, common services, and tools used to train the NeMo large language model with Google Kubernetes Engine.

Nvidia Nemo LLM A managed node pool with the A3 nodes to handle workloads and a default node pool to handle common services like DNS pods and custom controllers make up a GKE Cluster configured as a regional or zonal location. Eight NVIDIA H100 Tensor Core GPUs, sixteen local SSDs, and the necessary drivers are included in each A3 node. The Cloud Storage FUSE allows access to Google Cloud Storage as a file system, and the CSI driver for Filestore CSI allows access to fully managed NFS storage in each node. Batching in Kueue for workload control. When using a larger setup with multiple teams, this is advised. Every node has a filestore installed to store outputs, interim, and final logs for monitoring training effectiveness. A bucket in Cloud Storage holding the training set of data. The training image for the NeMo framework is hosted by NVIDIA NGC. TensorBoard can be used to view training logs mounted on Filestore and analyze the training step times and loss. Common services include Terraform for setup deployment, IAM for security management, and Cloud Ops for log viewing. A GitHub repository at has an end-to-end walkthrough available in it. The walkthrough offers comprehensive instructions on how to pre-train NVIDIA’s NeMo Megatron GPT using the NeMo framework and set up the aforementioned solution in a Google Cloud Project.

Continue on BigQuery is frequently used by businesses as their primary data warehousing platform in situations where there are enormous volumes of structured data. To train the model, there are methods for exporting data into Cloud Storage. In the event that the data is not accessible in the desired format, BigQuery can be read, transformed, and written back using Dataflow.

In summary Organizations can concentrate on creating and implementing their models to expand their business by utilizing GKE, freeing them from having to worry about the supporting infrastructure. Custom generative AI model building is a great fit for NVIDIA NeMo. The scalability, dependability, and user-friendliness needed to train and serve models are provided by this combination

Read more on Govindhtech.com

#GoogleKubernetesEngine#NVIDIANeMo#nvidia#generativeAI#AImodels#googlecloud#llm#nemo#bigquery#gpu#technology#technews#govindhtech

0 notes

Text

NVIDIA Launches Generative AI Microservices for developers

In order to enable companies to develop and implement unique applications on their own platforms while maintaining complete ownership and control over their intellectual property, NVIDIA released hundreds of enterprise-grade generative AI microservices.

The portfolio of cloud-native microservices, which is built on top of the NVIDIA CUDA platform, includes NVIDIA NIM microservices for efficient inference on over two dozen well-known AI models from NVIDIA and its partner ecosystem. Additionally, NVIDIA CUDA-X microservices for guardrails, data processing, HPC, retrieval-augmented generation (RAG), and other applications are now accessible as NVIDIA accelerated software development kits, libraries, and tools. Additionally, approximately two dozen healthcare NIM and CUDA-X microservices were independently revealed by NVIDIA.

NVIDIA’s full-stack computing platform gains a new dimension with the carefully chosen microservices option. With a standardized method to execute bespoke AI models designed for NVIDIA’s CUDA installed base of hundreds of millions of GPUs spanning clouds, data centers, workstations, and PCs, this layer unites the AI ecosystem of model creators, platform providers, and organizations.

Prominent suppliers of application, data, and cybersecurity platforms, such as Adobe, Cadence, CrowdStrike, Getty Images, SAP, ServiceNow, and Shutterstock, were among the first to use the new NVIDIA generative AI microservices offered in NVIDIA AI Enterprise 5.0.

Jensen Huang, NVIDIA founder and CEO, said corporate systems have a treasure of data that can be turned into generative AI copilots. These containerized AI microservices, created with their partner ecosystem, enable firms in any sector to become AI companies.

Microservices for NIM Inference Accelerate Deployments From Weeks to Minutes

NIM microservices allow developers to cut down on deployment timeframes from weeks to minutes by offering pre-built containers that are driven by NVIDIA inference tools, such as TensorRT-LLM and Triton Inference Server.

For fields like language, voice, and medication discovery, they provide industry-standard APIs that let developers easily create AI apps utilizing their private data, which is safely stored in their own infrastructure. With the flexibility and speed to run generative AI in production on NVIDIA-accelerated computing systems, these applications can expand on demand.

For deploying models from NVIDIA, A121, Adept, Cohere, Getty Images, and Shutterstock as well as open models from Google, Hugging Face, Meta, Microsoft, Mistral AI, and Stability AI, NIM microservices provide the quickest and most efficient production AI container.

Today, ServiceNow revealed that it is using NIM to create and implement new generative AI applications, such as domain-specific copilots, more quickly and affordably.

Consumers will be able to link NIM microservices with well-known AI frameworks like Deepset, LangChain, and LlamaIndex, and access them via Amazon SageMaker, Google Kubernetes Engine, and Microsoft Azure AI.

Guardrails, HPC, Data Processing, RAG, and CUDA-X Microservices

To accelerate production AI development across sectors, CUDA-X microservices provide end-to-end building pieces for data preparation, customisation, and training.

Businesses may utilize CUDA-X microservices, such as NVIDIA Earth-2 for high resolution weather and climate simulations, NVIDIA cuOpt for route optimization, and NVIDIA Riva for configurable speech and translation AI, to speed up the adoption of AI.

NeMo Retriever microservices enable developers to create highly accurate, contextually relevant replies by connecting their AI apps to their business data, which includes text, photos, and visualizations like pie charts, bar graphs, and line plots. Businesses may improve accuracy and insight by providing copilots, chatbots, and generative AI productivity tools with more data thanks to these RAG capabilities.

Nvidia nemo

There will soon be more NVIDIA NeMo microservices available for the creation of bespoke models. These include NVIDIA NeMo Evaluator, which analyzes AI model performance, NVIDIA NeMo Guardrails for LLMs, NVIDIA NeMo Customizer, which builds clean datasets for training and retrieval, and NVIDIA NeMo Evaluator.

Ecosystem Uses Generative AI Microservices To Boost Enterprise Platforms

Leading application suppliers are collaborating with NVIDIA microservices to provide generative AI to businesses, as are data, compute, and infrastructure platform vendors from around the NVIDIA ecosystem.

NVIDIA microservices is collaborating with leading data platform providers including Box, Cloudera, Cohesity, Datastax, Dropbox, and NetApp to assist users in streamlining their RAG pipelines and incorporating their unique data into generative AI applications. NeMo Retriever is a tool that Snowflake uses to collect corporate data in order to create AI applications.

Businesses may use the NVIDIA microservices that come with NVIDIA AI Enterprise 5.0 on any kind of infrastructure, including popular clouds like Google Cloud, Amazon Web Services (AWS), Azure, and Oracle Cloud Infrastructure.

More than 400 NVIDIA-Certified Systems, including as workstations and servers from Cisco, Dell Technologies, HP, Lenovo, and Supermicro, are also capable of supporting NVIDIA microservices. HPE also announced today that their enterprise computing solution for generative AI is now available. NIM and NVIDIA AI Foundation models will be integrated into HPE’s AI software.

VMware Private AI Foundation and other infrastructure software platforms will soon support NVIDIA AI Enterprise microservices. In order to make it easier for businesses to incorporate generative AI capabilities into their applications while maintaining optimal security, compliance, and control capabilities, Red Hat OpenShift supports NVIDIA NIM microservices. With NVIDIA AI Enterprise, Canonical is extending Charmed Kubernetes support for NVIDIA microservices.

Through NVIDIA AI Enterprise, the hundreds of AI and MLOps partners that make up NVIDIA’s ecosystem such as Abridge, Anyscale, Dataiku, DataRobot, Glean, H2O.ai, Securiti AI, Scale.ai, OctoAI, and Weights & Biases are extending support for NVIDIA microservices.

Vector search providers like as Apache Lucene, Datastax, Faiss, Kinetica, Milvus, Redis, and Weaviate are collaborating with NVIDIA NeMo Retriever microservices to provide responsive RAG capabilities for businesses.

Accessible

NVIDIA microservices are available for free experimentation by developers at ai.nvidia.com. Businesses may use NVIDIA AI Enterprise 5.0 on NVIDIA-Certified Systems and top cloud platforms to deploy production-grade NIM microservices.

Read more on govindhtech.com

#nvidiaai#ai#aimodel#nim#rag#nvidianemo#amazon#aws#dell#nvidianim#technology#technews#news#govindhtech

1 note

·

View note

Text

Master Amazon Titan with NVIDIA Training

Amazon Titan Models System Requirements

The training of huge language models takes place on enormous datasets that are distributed across hundreds of NVIDIA GPUs. Nothing about large language models is little.

Companies who are interested in generative artificial intelligence may face a great deal of difficulty as a result of this. These issues can be overcome with the assistance of NVIDIA NeMo, which is a framework for constructing, configuring, and operating LLMs.

Over the course of the past few months, NVIDIA NeMo has been utilized by a group of highly skilled scientists and developers working at Amazon Web Services. These individuals are responsible for the creation of foundation models for Amazon Bedrock, which is a generative artificial intelligence service for foundation models.

According to Leonard Lausen, a senior applied scientist at Amazon Web Services (AWS), “One of the primary reasons for us to work with NeMo is that it is extensible, comes with optimizations that allow us to run with high GPU utilization, and also enables us to scale to larger clusters so that we can train and deliver models to our customers more quickly.”

Have a Big, Really Big Thought

NeMo’s parallelism approaches make it possible to use LLM training at scale in an efficient manner. For the purpose of accelerating training, it enabled the team to distribute its LLM across a large number of GPUs when used with the Elastic Fabric Adapter from Amazon Web Services.

Customers of Amazon Web Services are provided with an UltraCluster Networking infrastructure by EFA. This infrastructure has the capability to directly link over 10,000 GPUs and work around the operating system and CPU by utilizing NVIDIA GPUDirect.

The combination made it possible for the scientists working for Amazon Web Services to offer great model quality, which is something that is incapable of being accomplished at scale when depending exclusively on data parallelism approaches.

The Framework Is Adaptable to All Sizes

The adaptability of NeMo, according to Lausen, made it possible for Amazon Web Services to modify the training software to accommodate the particulars of the new Amazon Titan model, datasets, and infrastructure.

Among the advancements delivered by Amazon Web Services (AWS) is the effective streaming of data from Amazon Simple Storage Service (Amazon S3) to the GPU cluster. Lausen stated that it was simple to implement these enhancements because to the fact that NeMo is built atop well-known libraries such as PyTorch Lightning, which are responsible for standardizing LLM training pipeline components.

For the benefit of their respective clients, Amazon Web Services (AWS) and NVIDIA intend to incorporate the knowledge gained from their partnership into products such as NVIDIA NeMo and services such as Amazon Titan.

Read more on Govindhtech.com

#Amazon#Titan#NVIDIA#largelanguagemodels#NVIDIANeMo#AmazonBedrock#AmazonWebServices#technews#technology#govindhtech

0 notes