#national science foundation-ocean observatory initiative


Photo

Crabeater seal at Palmer Station

A crabeater seal lounging at Palmer Station, Antarctica. Despite their name, crabeater seals feed almost entirely on Antarctic krill, using their specially shaped teeth to strain the krill out of the seawater.

Credit: Mike Lucibella/NSF

#mike lucibella#photographer#national science foundation-ocean observatory initiative#crabeater seal#seal#animal#mammal#arctic national wildlife refuge#palmer station#antarctica#antarctic krill#nature


Text

Uncharismatic Fact of the Day

Blobfish, a group of fathead sculpins, have many adaptations that help them survive at the bottom of the ocean. The most notable is the lack of a swim bladder, the gas-filled sac that other fish use to control their buoyancy. Instead, these sculpins have a jelly-like layer of fat beneath their skin that makes them slightly less dense than the surrounding water, allowing them to bob along the ocean floor with minimal effort. Turns out being fat and lazy has its advantages!
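To make the buoyancy point concrete, here is a minimal Python sketch of the net upward force on a fully submerged body, (rho_water - rho_body) * V * g, from Archimedes' principle. The densities and volume are hypothetical round numbers chosen for illustration, not measurements of any blobfish; the sketch only shows that a small density difference produces a small force, so very little effort is needed to hover near the bottom.

```python
G = 9.81  # standard gravitational acceleration, m/s^2


def net_buoyant_force(rho_water: float, rho_body: float, volume_m3: float) -> float:
    """Net upward force in newtons on a fully submerged body.

    Archimedes' principle: buoyancy (rho_water * V * g) minus weight
    (rho_body * V * g). Positive means the body tends to rise; negative
    means it sinks.
    """
    return (rho_water - rho_body) * volume_m3 * G


# Hypothetical inputs: seawater at about 1030 kg/m^3, a gelatinous body
# 5 kg/m^3 lighter, and a 2-litre (0.002 m^3) fish.
force = net_buoyant_force(rho_water=1030.0, rho_body=1025.0, volume_m3=0.002)
print(f"Net upward force: {force:.3f} N")

# For comparison, a body 5 kg/m^3 denser than the water would sink.
print(f"Denser body: {net_buoyant_force(1030.0, 1035.0, 0.002):.3f} N")
```

A force of roughly a tenth of a newton on a fish of this size is tiny, which is the sense in which near-neutral buoyancy saves effort.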

(Image: A fathead sculpin (Psychrolutes phrictus) by the National Science Foundation/Ocean Observatories Initiative)

#blobfish#Scorpaeniformes#Psychrolutidae#sculpins#ray-finned fish#bony fish#fish#uncharismatic facts


Text

Gemini North Captures Galactic Archipelago Entangled In a Web Of Dark Matter

One century after astronomers proved the existence of galaxies beyond the Milky Way, enormous galaxy clusters are offering clues to today’s cosmic questions

One hundred years ago, Edwin Hubble found decisive evidence that other galaxies exist far beyond the Milky Way. This image, captured by the Gemini North telescope, one half of the International Gemini Observatory, features a portion of the enormous Perseus Cluster, showcasing its ‘island Universes’ in awe-inspiring detail. Observations of these objects continue to shed light not only on their individual characteristics, but also on cosmic mysteries such as dark matter.

Among the many views of the Universe that modern telescopes offer, some of the most breathtaking are images like this. Dotted with countless galaxies — each one of incomprehensible size — they make apparent the tremendous scale and richness of the cosmos. Taking center stage here, beguiling in its seeming simplicity, the elliptical galaxy NGC 1270 radiates an ethereal glow into the surrounding darkness. And although it may seem like an island adrift in the deep ocean of space, this object is part of something much larger than itself.

NGC 1270 is just one member of the Perseus Cluster, a group of thousands of galaxies that lies around 240 million light-years from Earth in the constellation Perseus. This image, taken with the Gemini Multi-Object Spectrograph (GMOS) on the Gemini North telescope, one half of the International Gemini Observatory — supported in part by the U.S. National Science Foundation and operated by NSF NOIRLab — captures a dazzling collection of galaxies in the central region of this enormous cluster.

Looking at such a diverse array, shown here in spectacular clarity, it’s astonishing to think that when NGC 1270 was first discovered in 1863 it was not widely accepted that other galaxies even existed. Many of the objects that are now known to be galaxies were initially described as nebulae, owing to their cloudy, amorphous appearance. The idea that they are entities of a similar size to our own Milky Way, or ‘island Universes’ as Immanuel Kant called them, was speculated on by several astronomers throughout history, but was not proven. Instead, many thought they were smaller objects on the outskirts of the Milky Way, which many believed to comprise most or all of the Universe.

The nature of these mysterious objects and the size of the Universe were the subjects of astronomy’s famous Great Debate, held in 1920 between astronomers Heber Curtis and Harlow Shapley. The question remained unsettled until 1924, when Edwin Hubble, using the Hooker Telescope at Mount Wilson Observatory, observed Cepheid variable stars within some of the nebulae and used them to calculate how far the nebulae were from Earth. The results were decisive: they lay far beyond the Milky Way. Astronomers’ picture of the cosmos shifted dramatically; it was now populated with innumerable strange, far-off galaxies as large and complex as our own.

As imaging techniques have improved, piercing ever more deeply into space, astronomers have been able to look closer and closer at these ‘island Universes’ to deduce what they might be like. For instance, researchers have observed powerful electromagnetic energy emanating from the heart of NGC 1270, suggesting that it harbors a frantically feeding supermassive black hole. This characteristic is seen in around 10% of galaxies and is detectable via the presence of an accretion disk — an intense vortex of matter swirling around and gradually being devoured by the central black hole.

It’s not only the individual galaxies that astronomers are interested in; hints at many ongoing mysteries lie in their relationship to and interactions with one another. For example, the fact that huge groups like the Perseus Cluster exist at all points to the presence of the enigmatic substance we call dark matter [1]. If there were no such invisible, gravitationally interactive material, then astronomers believe galaxies would be spread more or less evenly across space rather than collecting into densely populated clusters. Current theories suggest that an invisible web of dark matter draws galaxies together at the intersections between its colossal tendrils, where its gravitational pull is strongest.

Although dark matter is invoked to explain observed cosmic structures, the nature of the substance itself remains elusive. As we look at images like this one, and consider the strides made in our understanding over the past century, we can sense a tantalizing hint of just how much more might be discovered in the decades to come. Perhaps hidden in images like this are clues to the next big breakthrough. How much more will we know about our Universe in another century?

Notes

[1] The discovery of dark matter in galaxies is attributed in part to American astronomer Vera C. Rubin, who used the rotation of galaxies to infer the presence of an invisible, yet gravitationally interactive, material holding them together. She is also the namesake of the NSF–DOE Vera C. Rubin Observatory, currently under construction in Chile and scheduled to begin operations in 2025.

TOP IMAGE: NGC 1270 and neighboring galaxies in the central region of the Perseus Cluster, imaged with GMOS on Gemini North. Credit: International Gemini Observatory/NOIRLab/NSF/AURA/ Image Processing: J. Miller & M. Rodriguez (International Gemini Observatory/NSF NOIRLab), T.A. Rector (University of Alaska Anchorage/NSF NOIRLab), M. Zamani (NSF NOIRLab) Acknowledgements: PI: Jisu Kang (Seoul National University)


Text

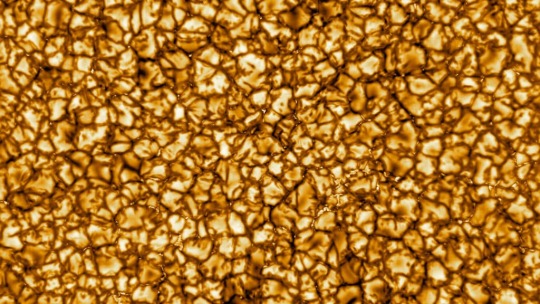

Newest solar telescope produces first images

The first images from the National Science Foundation's Daniel K. Inouye Solar Telescope, just released, reveal unprecedented detail of the sun's surface and preview the world-class products to come from this preeminent 4-meter solar telescope. NSF's Inouye Solar Telescope, on the summit of Haleakala, Maui, in Hawai'i, will enable a new era of solar science and a leap forward in understanding the sun and its impacts on our planet.

Activity on the sun, known as space weather, can affect systems on Earth. Magnetic eruptions on the sun can impact air travel, disrupt satellite communications and bring down power grids, causing long-lasting blackouts and disabling technologies such as GPS.

The first images from NSF's Inouye Solar Telescope show a close-up view of the sun's surface, which can provide important detail for scientists. The images show a pattern of turbulent "boiling" plasma that covers the entire sun. The cell-like structures -- each about the size of Texas -- are the signature of violent motions that transport heat from the inside of the sun to its surface. That hot solar plasma rises in the bright centers of "cells," cools, then sinks below the surface in dark lanes in a process known as convection.

"Since NSF began work on this ground-based telescope, we have eagerly awaited the first images," said France Córdova, NSF director. "We can now share these images and videos, which are the most detailed of our sun to date. NSF's Inouye Solar Telescope will be able to map the magnetic fields within the sun's corona, where solar eruptions occur that can impact life on Earth. This telescope will improve our understanding of what drives space weather and ultimately help forecasters better predict solar storms."

Expanding knowledge

The sun is our nearest star -- a gigantic nuclear reactor that burns about 5 million tons of hydrogen fuel every second. It has been doing so for about 5 billion years and will keep doing so for roughly another 4.5 billion years. All that energy radiates into space in every direction, and the tiny fraction that hits Earth makes life possible. In the 1950s, scientists figured out that a solar wind blows from the sun to the edges of the solar system. They also concluded for the first time that we live inside the atmosphere of this star. But many of the sun's most vital processes continue to confound scientists.

"On Earth, we can predict if it is going to rain pretty much anywhere in the world very accurately, and space weather just isn't there yet," said Matt Mountain, president of the Association of Universities for Research in Astronomy, which manages the Inouye Solar Telescope. "Our predictions lag behind terrestrial weather by 50 years, if not more. What we need is to grasp the underlying physics behind space weather, and this starts at the sun, which is what the Inouye Solar Telescope will study over the next decades."

The motions of the sun's plasma constantly twist and tangle solar magnetic fields. Twisted magnetic fields can lead to solar storms that can negatively affect our technology-dependent modern lifestyles. During 2017's Hurricane Irma, the National Oceanic and Atmospheric Administration reported that a simultaneous space weather event brought down radio communications used by first responders, aviation and maritime channels for eight hours on the day the hurricane made landfall.

Finally resolving these tiny magnetic features is central to what makes the Inouye Solar Telescope unique. It can measure and characterize the sun's magnetic field in more detail than ever seen before and determine the causes of potentially harmful solar activity.

"It's all about the magnetic field," said Thomas Rimmele, director of the Inouye Solar Telescope. "To unravel the sun's biggest mysteries, we have to not only be able to clearly see these tiny structures from 93 million miles away but very precisely measure their magnetic field strength and direction near the surface and trace the field as it extends out into the million-degree corona, the outer atmosphere of the sun."

Better understanding the origins of potential disasters will enable governments and utilities to better prepare for inevitable future space weather events. It is expected that notification of potential impacts could occur earlier -- as much as 48 hours ahead of time instead of the current standard, which is about 48 minutes. This would allow more time to secure power grids and critical infrastructure and to put satellites into safe mode.

The engineering

To achieve the proposed science, this telescope required important new approaches to its construction and engineering. Built by NSF's National Solar Observatory and managed by AURA, the Inouye Solar Telescope combines a 13-foot (4-meter) mirror -- the world's largest for a solar telescope -- with unparalleled viewing conditions at the 10,000-foot Haleakala summit.

Focusing 13 kilowatts of solar power generates enormous amounts of heat -- heat that must be contained or removed. A specialized cooling system provides crucial heat protection for the telescope and its optics. More than seven miles of piping distribute coolant throughout the observatory, partially chilled by ice created on site during the night.

The dome enclosing the telescope is covered by thin cooling plates that stabilize the temperature around the telescope, helped by shutters within the dome that provide shade and air circulation. The "heat-stop" (a high-tech, liquid-cooled, doughnut-shaped metal component) blocks most of the sunlight's energy from the main mirror, allowing scientists to study specific regions of the sun with unparalleled clarity.

The telescope also uses state-of-the-art adaptive optics to precisely focus the telescope and compensate for blurring created by Earth's atmosphere; this system is the most advanced solar application to date. In addition, the design of the optics ("off-axis" mirror placement) reduces bright, scattered light for better viewing.

"With the largest aperture of any solar telescope, its unique design, and state-of-the-art instrumentation, the Inouye Solar Telescope -- for the first time -- will be able to perform the most challenging measurements of the sun," Rimmele said. "After more than 20 years of work by a large team devoted to designing and building a premier solar research observatory, we are close to the finish line. I'm extremely excited to be positioned to observe the first sunspots of the new solar cycle just now ramping up with this incredible telescope."

New era of solar astronomy

NSF's new ground-based Inouye Solar Telescope will work with space-based solar observation tools such as NASA's Parker Solar Probe (currently in orbit around the sun) and the European Space Agency/NASA Solar Orbiter (soon to be launched). The three solar observation initiatives will expand the frontiers of solar research and improve scientists' ability to predict space weather.

"It's an exciting time to be a solar physicist," said Valentin Pillet, director of NSF's National Solar Observatory. "The Inouye Solar Telescope will provide remote sensing of the outer layers of the sun and the magnetic processes that occur in them. These processes propagate into the solar system where the Parker Solar Probe and Solar Orbiter missions will measure their consequences. Altogether, they constitute a genuinely multi-messenger undertaking to understand how stars and their planets are magnetically connected."

"These first images are just the beginning," said David Boboltz, a program director in NSF's Division of Astronomical Sciences who oversees the facility's construction and operations. "Over the next six months, the Inouye telescope's team of scientists, engineers and technicians will continue testing and commissioning the telescope to make it ready for use by the international solar scientific community. The Inouye Solar Telescope will collect more information about our sun during the first 5 years of its lifetime than all the solar data gathered since Galileo first pointed a telescope at the sun in 1612."


Text

Biden administration proposes $24.5 billion budget for NASA in 2022

https://sciencespies.com/space/biden-administration-proposes-24-5-billion-budget-for-nasa-in-2022/


WASHINGTON — The White House released a first look at its budget proposal for fiscal year 2022 that includes an increase in funding for NASA, particularly Earth science and space technology programs.

The 58-page budget document, released April 9, outlines the Biden administration’s discretionary spending priorities. It provides only high-level details, though, with a full budget proposal expected later in the spring.

For NASA, the White House is proposing an overall budget of approximately $24.7 billion in fiscal year 2022, an increase of about 6.3% from the $23.271 billion the agency received in the final fiscal year 2021 omnibus spending bill.

The document does not provide a full breakout of NASA spending across its various programs, but does highlight increases in several areas. NASA’s Earth science program, which received $2 billion in 2021, would get $2.3 billion in 2022, a 15% increase. The funding, the document states, would be used to “initiate the next generation of Earth-observing satellites to study pressing climate science questions.”

NASA’s space technology program, which received $1.1 billion in 2021, would get $1.4 billion in 2022, a 27% increase. That funding “would enhance the capabilities and reduce the costs of the full range of NASA missions and provide new technologies to help the commercial space industry grow,” the budget document states. That includes support for what the White House called “novel early-stage space technology research that would support the development of clean energy.”

NASA’s human exploration program would get $6.9 billion in fiscal year 2022, up about 5% from the $6.56 billion in 2021. The document didn’t break out that spending among programs such as the Space Launch System, Orion and Human Landing System (HLS), but said it would support the Artemis program and “the development of capabilities for sustainable, long-duration human exploration beyond Earth, and eventually to Mars.”
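The percentage changes quoted above can be rechecked from the article's own dollar figures with a few lines of Python. The only inputs are the FY2021 and FY2022 numbers given in this post. Because the NASA total is given only as "approximately $24.7 billion," the recomputed total increase comes out near 6.1% rather than the quoted 6.3%, a gap that rounding of the proposed figure could account for.

```python
def pct_change(old: float, new: float) -> float:
    """Percent change from old to new."""
    return (new - old) / old * 100.0


# Billions of USD, copied from the article: (FY2021 enacted, FY2022 proposed).
budget_lines = {
    "NASA total": (23.271, 24.7),
    "Earth science": (2.0, 2.3),
    "Space technology": (1.1, 1.4),
    "Human exploration": (6.56, 6.9),
}

for item, (fy21, fy22) in budget_lines.items():
    print(f"{item:18s} {fy21:7.3f} -> {fy22:5.1f}  {pct_change(fy21, fy22):+.1f}%")
```

The Earth science, space technology and human exploration figures reproduce the article's 15%, 27% and roughly 5% increases.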

The document offered no guidance on a schedule for the Artemis program, noting only that it included “a series of crewed exploration missions to the lunar surface and beyond.” The modest increase suggests that the administration doesn’t plan to ramp up spending on the HLS program as originally envisioned when the Trump administration set a goal of returning humans to the lunar surface by 2024.

The brief passage about NASA spending offered little else about funding levels and priorities the White House has for the agency. The document did note that the budget provides funding for a number of ongoing missions in development, including the Mars Sample Return program and the Nancy Grace Roman Space Telescope, an astrophysics mission targeted for cancellation in several previous budget requests by the Trump administration. However, the document does not spell out funding levels for those projects.

The administration also proposed a $20 million increase for NASA’s education programs, formally known as STEM Engagement. That received $127 million in 2021, but had been proposed for cancellation in all four of the Trump administration’s budget proposals. Congress, in each case, rejected the proposal and funded NASA’s education efforts.

Elsewhere in the budget, the White House proposed increasing funding for weather satellite programs at the National Oceanic and Atmospheric Administration by $500 million, to $2 billion in fiscal year 2022. That funding would support what it described as “the next generation of satellites, incorporating a diverse array of new technologies, which would improve data for weather and climate forecasts and provide critical information to the public.”

The proposal does not spell out funding for smaller space-related offices, like the Office of Space Commerce in the Department of Commerce or the Federal Aviation Administration’s Office of Commercial Space Transportation. The document does note that the administration will offer funding to support improvements to management of the national airspace system, including integration of commercial space launches into it.

The National Science Foundation would get $10.2 billion in the proposed budget, a 20% increase from 2021. That would include funding to continue work on the Vera C. Rubin Observatory in Chile, although the document does not mention how much funding would be provided for that or other astrophysics research.

#Space


Photo

Uncovering tsunami hazards

In 2011, the great Tohoku Earthquake struck Japan on a segment of a fault that a number of scientists had said could not generate great earthquakes and tsunami waves. Scientists were just beginning to revise those estimates at the time, but it was too late: seawalls in northern Japan were too low, and water poured across farmland and devastated a nuclear power plant on the coast.

The Tohoku quake showed scientists that great, tsunamigenic (causing tsunami waves) quakes can happen on faults where the hazard might not be fully recognized. There are major subduction zones around the world, particularly in the Pacific Ocean, and some areas of those faults had been thought to be unable to produce great earthquakes and tsunami. In light of the Tohoku quake, scientists are now looking again at those areas to see how the initial impressions hold up.

One new study looked at a section of the Aleutian subduction zone south of Alaska and asked that basic question: does this fault segment look more dangerous than we thought? The answer was “yes.”

The Shumagin Gap is a section of the Aleutian Subduction Zone below the Shumagin Islands. It lies between the sections of the megathrust fault that ruptured in the 1938 and 1946 earthquakes, both shown in orange on this map. This section of the fault was thought to be “creeping,” meaning that it slips slowly and steadily rather than staying locked. Scientists reasoned that this slow motion would relieve the stress in the area and prevent tsunamigenic earthquakes.
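The reasoning about creep can be illustrated with the standard slip-deficit idea: whatever fraction of plate motion is not taken up by slow creep accumulates as a deficit that an earthquake must eventually release. The Python sketch below assumes a hypothetical 6 cm/yr convergence rate, a hypothetical 200-year interval and a few illustrative creep fractions; none of these numbers come from the study.

```python
def slip_deficit_m(convergence_m_per_yr: float, creep_fraction: float, years: float) -> float:
    """Accumulated slip deficit in metres.

    creep_fraction is the share of plate motion accommodated by slow,
    aseismic creep (0 = fully locked, 1 = fully creeping). The remainder
    is stored as a deficit that an earthquake would have to release.
    """
    if not 0.0 <= creep_fraction <= 1.0:
        raise ValueError("creep_fraction must be between 0 and 1")
    return convergence_m_per_yr * (1.0 - creep_fraction) * years


# Hypothetical inputs: 6 cm/yr (0.06 m/yr) of convergence over 200 years.
rate = 0.06
for creep in (1.0, 0.9, 0.5):
    deficit = slip_deficit_m(rate, creep, 200)
    print(f"creep fraction {creep:.1f}: deficit {deficit:.1f} m")
```

A fully creeping segment stores nothing, which is the logic behind treating creeping segments as low-hazard; even a modest locked fraction, sustained over centuries, adds up to metres of potential slip.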

However, after Tohoku, a team of scientists led by Lamont-Doherty Earth Observatory’s Anne Bécel reassessed this conclusion after collecting modern seismic data on the structure of this subduction zone. They found it had a number of similarities with the segment of the Tohoku fault that broke.

Both faults have thin wedges of sediment accumulated at their tip, and only thin layers of sediment are being pushed down between the upper and lower plates. That alone is the start of a worrisome signal, but the seismic profiles revealed something else: a second fault within the structure, just like at Tohoku.

After the 2011 quake, scientists discovered a small second fault on the Japan side of the trench. As the Pacific plate forces its way downward, it drags part of the Japanese coast down with it. When the megathrust ruptures, the stress stored in the upper plate is released, and the upper plate snaps back along a small normal fault. That normal fault seems to be caused by motion during great earthquakes, so finding a similar fault in this Alaskan seismic gap could mean that it, too, regularly hosts great, tsunamigenic earthquakes.

There was some evidence that a much older earthquake, in 1788, might have ruptured into this zone, but because there were no marine geologists at the time, it's hard to say for certain how much of the fault broke in that quake. With this new data, it now seems likely that this portion of the Aleutian subduction zone is a much greater tsunami hazard than previously thought.

There are a number of other segments of creeping megathrust faults around the world. Few to none of them have been measured in the detail performed in this study. How many others might be hiding a similar tsunami risk?

This study was only possible because of the research vessel Marcus G. Langseth, owned by the U.S. National Science Foundation. With time and funding, this resource could investigate other potential sites in the U.S. for similar hazards, but that will take years and continued strong support of Earth science research in the U.S. And that, of course, only covers the U.S., when there are similar trenches worldwide.

-JBB

Image source and original paper: http://go.nature.com/2hrUJ6g

Press version: http://bit.ly/2hrRgVl


Text

Warning system delays add to deaths in Indonesian tsunami

MAKASSAR, Indonesia — An early warning system that could have prevented some deaths in the tsunami that hit an Indonesian island on Friday has been stalled in the testing phase for years.

The high-tech system of seafloor sensors, data-laden sound waves and fiber-optic cable was meant to replace a system set up after an earthquake and tsunami killed nearly 250,000 people in the region in 2004. But inter-agency wrangling and delays in getting just 1 billion rupiah ($69,000) to complete the project means the system hasn’t moved beyond a prototype developed with $3 million from the U.S. National Science Foundation.

It is too late for central Sulawesi, where walls of water up to 6 metres (20 feet) high and a magnitude 7.5 earthquake killed at least 832 people in the cities of Palu and Donggala, tragically highlighting the weaknesses of the existing warning system and low public awareness about how to respond to warnings.

“To me this is a tragedy for science, even more so a tragedy for the Indonesian people as the residents of Sulawesi are discovering right now,” said Louise Comfort, a University of Pittsburgh expert in disaster management who has led the U.S. side of the project, which also involves engineers from the Woods Hole Oceanographic Institution and Indonesian scientists and disaster experts.

“It’s a heartbreak to watch when there is a well-designed sensor network that could provide critical information,” she said.

After a 2004 tsunami killed 230,000 people in a dozen countries, more than half of them in the Indonesian province of Aceh, a concerted international effort was launched to improve tsunami warning capabilities, particularly in the Indian Ocean and for Indonesia, one of the world’s most earthquake- and tsunami-prone countries.

Part of that drive, using funding from Germany and elsewhere, included deploying a network of 22 buoys connected to seafloor sensors to transmit advance warnings.

A sizeable earthquake off Sumatra in 2016 that caused panic in the coastal city of Padang revealed that none of the buoys, which cost hundreds of thousands of dollars each, was working. They had been disabled by vandalism or theft, or had simply stopped working because there was no money for maintenance.

The backbone of Indonesia’s tsunami warning system today is a network of 134 tidal gauge stations augmented by land-based seismographs, sirens in about 55 locations and a system to disseminate warnings by text message.

When the 7.5 quake hit just after 6 p.m., the meteorology and geophysics agency issued a tsunami alert, warning of potential waves of 0.5 to 3 metres. It ended the warning at 6.36 p.m. That drew harsh online criticism, but the agency’s head said the warning was lifted after the tsunami hit. It’s unclear exactly what time the tsunami waves rushed into the narrow bay that Palu is built around.

“The tide gauges are operating, but they are limited in providing any advance warning. None of the 22 buoys are functioning,” Comfort said. “In the Sulawesi incident, BMKG (the meteorology and geophysics agency) cancelled the tsunami warning too soon, because it did not have data from Palu. This is the data the tsunami detection system could provide,” she said.

Adam Switzer, a tsunami expert at the Earth Observatory of Singapore, said it’s a “little unfair” to say the agency got it wrong.

“What it shows is that the tsunami models we have now are too simplistic,” he said. “They don’t take into account multiple events, multiple quakes within a short period of time. They don’t take into account submarine landslides.”

Whatever system is in use, he said, the priority after an earthquake in a coastal area should be to get to higher ground and stay there for a couple of hours.

Power outages after the earthquake struck Friday meant that the sirens intended to warn residents to evacuate did not work, said Harkunti P. Rahayu, an expert at the Institute of Technology in Bandung.

“Most people were shocked by the earthquake and did not pay any thought that a tsunami will come,” she said.

Experts say the prototype system deployed offshore from Padang — a city extremely vulnerable to tsunamis because it faces a major undersea fault overdue for a massive quake — can provide authoritative information about a tsunami threat within 1-3 minutes. That compares with 5-45 minutes from the now defunct buoys and the limited information provided by tidal gauges.

The system’s undersea seismometers and pressure sensors send data-laden sound waves to warm surface waters. From there they refract back into the depths, travelling 20-30 kilometres to the next node in the network and so on.
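As a rough check on the speed of this relay, the Python sketch below estimates acoustic travel time for the 20-30 km hops described above. It assumes a typical ocean sound speed of about 1,500 m/s and a hypothetical three-hop chain, and it ignores modem processing time and the extra path length of refracted rays, so the results are lower bounds rather than system specifications.

```python
# Assumed typical speed of sound in seawater (not a figure from the article).
SOUND_SPEED_M_S = 1500.0


def relay_delay_s(hop_km: float, hops: int, speed_m_s: float = SOUND_SPEED_M_S) -> float:
    """Total acoustic propagation time in seconds for `hops` straight-line hops."""
    return hop_km * 1000.0 * hops / speed_m_s


# Hop lengths from the article; the three-hop chain is hypothetical.
for hop_km in (20.0, 30.0):
    per_hop = relay_delay_s(hop_km, 1)
    three_hops = relay_delay_s(hop_km, 3)
    print(f"{hop_km:.0f} km hop: ~{per_hop:.0f} s per hop; 3 hops: ~{three_hops:.0f} s")
```

Even a few hops stay within tens of seconds of acoustic travel time, which is at least consistent with the 1-3 minute figure quoted for the prototype.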

The Padang network’s final undersea point needs just a few more kilometres of fiber optic cable to connect it to a station on an offshore island where the cascades of data would be transmitted by satellite to the geophysics agency, which issues tsunami warnings, and to disaster officials.

The Associated Press first reported on the system in January 2017, when the project was awaiting Indonesian funding to lay the cables. Since then, agencies involved have suffered budget cuts and the project bounced back and forth between them.

A December 2017 quake off the coast of Java close to Jakarta reignited interest, and the geophysics agency made getting funding a priority. In July, the Ministry of Finance approved funding to purchase and lay the cable.

But at an inter-agency meeting in September, the three major agencies involved failed to agree on their responsibilities and the project was “simply put on hold,” Comfort said.

Indonesian officials who’ve been supportive of the new early warning system did not immediately respond to requests for comment.

Since the 2004 tsunami, the mantra among disaster officials in Indonesia has been that the earthquake is the tsunami warning and signal for immediate evacuation. Not everyone is convinced a tsunami detection system is essential.

“What Indonesian colleagues have commented upon is that people were confused about what to do with the alert information,” said Gavin Sullivan, a Coventry University psychologist who works with the Indonesian Resilience Initiative on a disaster preparation project for the Indonesian city of Bandung.

The fact that people were still milling around Palu’s shoreline when waves were visibly approaching shows the lessons of earlier disasters haven’t been absorbed.

“This points to the failing to do appropriate training and to develop trust so that people know exactly what to do when an alert is issued,” he said. “In our project in Bandung we’re finding a similar unwillingness to prepare for something that seems unlikely.”

——

Associated Press writer Margie Mason in Jakarta contributed.

from Financial Post https://ift.tt/2OWGxyx


Photo

A blobfish, also known as a fathead sculpin (Psychrolutes phrictus), hovers in the caldera at Axial Seamount, approximately 300 miles off the Oregon coast. There are nine species of blobfish, all of them deep-sea members of the genus Psychrolutes.

PHOTOGRAPH BY NATIONAL SCIENCE FOUNDATION-OCEAN OBSERVATORY INITIATIVE/UNIVERSITY OF WASHINGTON/CSSF

#national science foundation-ocean observatory initiative#university of washington#cssf#photographer#blobfish#flathead sculpin#psychrolutes phrictus#axial seamount#marine#fish#nature#national geographic


Text

Researchers hope to learn more about quakes associated with Kilauea

Researchers from Rice University in Houston recently joined colleagues from Western Washington University and the University of Rhode Island in placing seismometers off the coast of Hawaii Island with the hopes of gaining new insight into the landscape under the ocean floor.

Julia Morgan, a professor of Earth, environmental and planetary sciences, and student David Blank were awarded a National Science Foundation grant to join a team of researchers and seed the seafloor with a dozen seismic detectors off the southeastern coast of the Big Island.

These efforts came in the wake of a 6.9-magnitude earthquake that shook the island in May, shortly after the start of eruption activity in Kilauea volcano’s lower East Rift Zone.

Data will be collected until September, and the information is expected to provide “an extensive record of earthquakes and aftershocks associated with the eruption of the world’s most active volcano over two months,” according to Rice University.

Morgan’s interest in geologic structures, particularly relating to volcano deformation and faulting, led her to study the ocean bed off the Big Island’s coast for years, according to an announcement Monday from Rice University.

In a 2003 paper, Morgan and her colleagues used marine seismic reflection data to look inside Kilauea’s underwater slope for the boundaries of an active landslide, the Hilina Slump, as well as signs of previous avalanches.

The researchers determined that the Hilina Slump is restricted to the upper slopes of the volcano, and the lower slopes consist of a large pile of ancient avalanche debris that was pushed by Kilauea’s sliding, gravity-driven flank into a massive, mile-high bench about 15 miles offshore, according to the university. This outer bench currently buttresses the Hilina Slump, preventing it from breaking away from the volcano slopes.

“Remarkably, after this earthquake, all the boundaries of the slump also lit up with small earthquakes. These clearly occurred on a different fault than the main earthquake, suggesting that the slump crept downslope during or after that event,” Morgan said in the announcement.

The risk of an imminent avalanche is slim, she said, but the eruption, earthquake and aftershocks present an opportunity to get a better look at the island’s hidden terrain, according to the university announcement. Every new quake that occurs along Kilauea’s rift zones and around the perimeter of the Hilina Slump and the bench helps the researchers understand the terrain.

Morgan said the U.S. Geological Survey, which operates the Hawaiian Volcano Observatory, has a host of ground-based seismometers but none in the ocean. Monitors at sea will reveal quakes under the bench that are too small for land seismometers to sense.

The seismometers were placed around the Hilina Slump, close to shore where lava is entering the ocean, and on the outer bench in line with the initial quake.

“If this outer bench is experiencing earthquakes, we want to know what surfaces are experiencing them,” Morgan said. “Along the base? Within the bench? Some new fault that we didn’t know about? This data will provide us the ability to determine what structures, or faults, are actually slipping.”

The post Researchers hope to learn more about quakes associated with Kilauea appeared first on Hawaii Tribune-Herald.

from Hawaii News – Hawaii Tribune-Herald https://ift.tt/2uZjCd1

Text

Science gets shut down right along with the federal government

by Angela K. Wilson

Ongoing wildlife studies are one kind of federally funded research that’s sidelined during a shutdown. USFWS, CC BY

When the U.S. government shuts down, much of the science that it supports is not spared. And there is no magic light switch that can be flipped to reverse the impact.

For instance, large-scale instruments like NASA’s Stratospheric Observatory for Infrared Astronomy – the “flying telescope” – have to stop operations. Eventually bringing such instrumentation back up to speed requires over a week. If the shutdown lingers, contingency funds provided to maintain large-scale instruments supported by agencies including NASA, the National Oceanic and Atmospheric Administration and the National Science Foundation will run out and operations will cease, adding to the list of closed facilities.

When I headed NSF’s Division of Chemistry from March 2016 to July 2018, I experienced firsthand two shutdowns like the one the country is weathering now. The 1,800 NSF staff would be sent home, without access to email and without even the option to work voluntarily, until eventually an end to the shutdown was negotiated. As we were unsure how long the shutdowns would run, a lot of time was spent developing contingency plans – and coordinating with many hundreds of researchers about them. Concerns about what will happen to researchers’ day-to-day projects are compounded by apprehension about interruptions to long-term funding.

What’s not happening?

Many federal agencies perform science. The Centers for Disease Control and Prevention and the National Institutes of Health are less affected by the shutdown this time since they already have their budgets for fiscal year 2019. But agencies including the NSF, the Fish and Wildlife Service, the National Park Service, the U.S. Geological Survey, the Environmental Protection Agency, the National Institute of Standards and Technology and NOAA have had to stop most work.

In some sensitive areas involving plants, animals, earth or space phenomena that are cyclical or seasonal, scientists may miss critical windows for research. If something happens only once a year and the moment is now – such as the pollination window for some drought-resistant plants – a researcher will miss out and must wait another year. Other data sets have long records of measurements that are taken daily or at other defined times. Now they’ll have holes in their data because federal workers can’t do their jobs during a shutdown.

Databases go dark. Many scientists and engineers across the country – indeed, across the globe – rely on the information in these databases, such as those offered by NIST, which is part of the Department of Commerce. When data can’t be accessed, projects are delayed.

Vital scientific meetings, such as those of the American Meteorological Society and the American Astronomical Society, which rely heavily on the expertise of federal scientists, have been affected by the shutdown, too. Federal scientists from the closed agencies cannot travel to conferences to learn about the most recent work in their fields, nor share their own findings.

And of course, federal scientists serve as journal editors, reviewers and collaborators on research projects. Their inability to work has an impact across the scientific community in moving science and technology forward for our nation.

Without a doubt, the government shutdown will delay, cancel or compress implementation timelines of initiatives to help drive development of new science and tech in the United States. This affects both U.S. research progress and the American STEM workforce. Missed (or delayed) opportunity costs are high, as some planned investments are in areas with fierce global competition and significant investments by other countries – think next-generation computers and communication – which are critical to the country’s national security.

Scientists are always hoping for a bigger piece of the federal budget. AP Photo/J. Scott Applewhite

Budget worries compound the shutdown’s effects

The shutdown is not just a long vacation. The amount of work that must be done at federal agencies isn’t reduced. In fact, while the employees are away, the work continues to build up.

For some divisions at the NSF, where I worked, the early part of the year is the peak period in terms of workload. Scientists submit around 40,000 research proposals annually, hoping to secure funding for their projects. The longer the shutdown, the more intense the workload will be once the government reopens, since decisions about the support of research still need to occur during the current fiscal year. Decisions – and projects – will be delayed.

It is important to note that the government shutdown is exacerbating the effect of mostly flat budgets (with the exception of the 2009 stimulus) that many federally funded scientific agencies have been dealing with for more than a decade.

Prior to the shutdown, contingency plans were made to ensure that some of the scientific facilities had spending authority for at least a month or so of operations. But if the shutdown continues, furloughs of facility staff may become necessary once the limits of obligated funding are reached.

And, with all of these negatives, for all of the agencies that are shut down, the biggest question is what will happen to their budgets. Here we are, four months into the fiscal year, and agencies do not know what will happen to them for what remains of FY 2019. It is difficult to plan, it is difficult to continue to function and it affects STEM workforce morale, retention and ability to attract quality personnel into vitally important scientific roles.

Now that we’re facing the longest government shutdown to date, national security, health and the economy continue to be jeopardized as STEM research is slowed or stopped.

About The Author:

Angela K. Wilson is a Professor of Physical, Theoretical and Computational Chemistry at Michigan State University.

This article is republished from our content partners at The Conversation under a Creative Commons license.

Text

Breakthrough Listen releases 2 petabytes of data from SETI survey of Milky Way

https://sciencespies.com/space/breakthrough-listen-releases-2-petabytes-of-data-from-seti-survey-of-milky-way/

Credit: CC0 Public Domain

The Breakthrough Listen Initiative today released data from the most comprehensive survey yet of radio emissions from the plane of the Milky Way Galaxy and the region around its central black hole, and it is inviting the public to search the data for signals from intelligent civilizations.

At a media briefing today in Seattle as part of the annual meeting of the American Association for the Advancement of Science (AAAS), Breakthrough Listen principal investigator Andrew Siemion of the University of California, Berkeley, announced the release of nearly 2 petabytes of data, the second data dump from the four-year-old search for extraterrestrial intelligence (SETI). A petabyte of radio and optical telescope data was released last June, the largest release of SETI data in the history of the field.

The data, most of it fresh from the telescope prior to detailed study from astronomers, comes from a survey of the radio spectrum between 1 and 12 gigahertz (GHz). About half of the data comes via the Parkes radio telescope in New South Wales, Australia, which, because of its location in the Southern Hemisphere, is perfectly situated and instrumented to scan the entire galactic disk and galactic center. The telescope is part of the Australia Telescope National Facility, owned and managed by the country’s national science agency, CSIRO.

The remainder of the data was recorded by the Green Bank Telescope in West Virginia, the world’s largest steerable radio dish, and by an optical telescope called the Automated Planet Finder, built and operated by UC Berkeley and located at Lick Observatory outside San Jose, California.

“Since Breakthrough Listen’s initial data release last year, we have doubled what is available to the public,” said Breakthrough Listen’s lead system administrator, Matt Lebofsky. “It is our hope that these data sets will reveal something new and interesting, be it other intelligent life in the universe or an as-yet-undiscovered natural astronomical phenomenon.”

The National Radio Astronomy Observatory (NRAO) and the privately funded SETI Institute in Mountain View, California, also announced today an agreement to collaborate on new systems to add SETI capabilities to radio telescopes operated by NRAO. The first project will develop a system to piggyback on the National Science Foundation’s Karl G. Jansky Very Large Array (VLA) in New Mexico and provide data to state-of-the-art digital backend equipment built by the SETI Institute.

“The SETI Institute will develop and install an interface on the VLA, permitting unprecedented access to the rich data stream continuously produced by the telescope as it scans the sky,” said Siemion, who, in addition to his UC Berkeley position, is the Bernard M. Oliver Chair for SETI at the SETI Institute. “This interface will allow us to conduct a powerful, wide-area SETI survey that will be vastly more complete than any previous such search.”

“As the VLA conducts its usual scientific observations, this new system will allow for an additional and important use for the data we’re already collecting,” said NRAO Director Tony Beasley. “Determining whether we are alone in the universe as technologically capable life is among the most compelling questions in science, and NRAO telescopes can play a major role in answering it.”

“For the whole of human history, we had a limited amount of data to search for life beyond Earth. So, all we could do was speculate. Now, as we are getting a lot of data, we can do real science and, with making this data available to general public, so can anyone who wants to know the answer to this deep question,” said Yuri Milner, the founder of Breakthrough Listen.

Earth transit zone survey

In releasing the new radio and optical data, Siemion highlighted a new analysis of a small subset of the data: radio emissions from 20 nearby stars that are aligned with the plane of Earth’s orbit such that an advanced civilization around those stars could see Earth pass in front of the sun (a “transit” like those focused on by NASA’s Kepler space telescope). Conducted by the Green Bank Telescope, the Earth transit zone survey observed in the radio frequency range between 4 and 8 gigahertz, the so-called C-band. The data were then analyzed by former UC Berkeley undergraduate Sofia Sheikh, now a graduate student at Pennsylvania State University, who looked for bright emissions at a single radio wavelength or a narrow band around a single wavelength. She has submitted the paper to the Astrophysical Journal.

“This is a unique geometry,” Sheikh said. “It is how we discovered other exoplanets, so it kind of makes sense to extrapolate and say that that might be how other intelligent species find planets, as well. This region has been talked about before, but there has never been a targeted search of that region of the sky.”

While Sheikh and her team found no technosignatures of civilization, the analysis and other detailed studies the Breakthrough Listen group has conducted are gradually putting limits on the location and capabilities of advanced civilizations that may exist in our galaxy.

“We didn’t find any aliens, but we are setting very rigorous limits on the presence of a technologically capable species, with data for the first time in the part of the radio spectrum between 4 and 8 gigahertz,” Siemion said. “These results put another rung on the ladder for the next person who comes along and wants to improve on the experiment.”

Sheikh noted that her mentor, Jason Wright at Penn State, estimated that if the world’s oceans represented every place and wavelength we could search for intelligent signals, we have, to date, explored only a hot tub’s worth of it.

“My search was sensitive enough to see a transmitter basically the same as the strongest transmitters we have on Earth, because I looked at nearby targets on purpose,” Sheikh said. “So, we know that there isn’t anything as strong as our Arecibo telescope beaming something at us. Even though this is a very small project, we are starting to get at new frequencies and new areas of the sky.”

Beacons in the galactic center?

The so-far unanalyzed observations from the galactic disk and galactic center survey were a priority for Breakthrough Listen because of the higher likelihood of observing an artificial signal from that region of dense stars. If artificial transmitters are not common in the galaxy, then searching for a strong transmitter among the billions of stars in the disk of our galaxy is the best strategy, Siemion said.

On the other hand, putting a powerful, intergalactic transmitter in the core of our galaxy, perhaps powered by the 4 million-solar-mass black hole there, might not be beyond the capabilities of a very advanced civilization. Galactic centers may be so-called Schelling points: likely places for civilizations to meet up or place beacons, given that they cannot communicate among themselves to agree on a location.

“The galactic center is the subject of a very specific and concerted campaign with all of our facilities because we are in unanimous agreement that that region is the most interesting part of the Milky Way galaxy,” Siemion said. “If an advanced civilization anywhere in the Milky Way wanted to put a beacon somewhere, getting back to the Schelling point idea, the galactic center would be a good place to do it. It is extraordinarily energetic, so one could imagine that if an advanced civilization wanted to harness a lot of energy, they might somehow use the supermassive black hole that is at the center of the Milky Way galaxy.”

Visit from an interstellar comet

Breakthrough Listen also released observations of the interstellar comet 2I/Borisov, which had a close encounter with the sun in December and is now on its way out of the solar system. The group had earlier scanned the interstellar rock ‘Oumuamua, which passed through the center of our solar system in 2017. Neither exhibited technosignatures.

“If interstellar travel is possible, which we don’t know, and if other civilizations are out there, which we don’t know, and if they are motivated to build an interstellar probe, then some fraction greater than zero of the objects that are out there are artificial interstellar devices,” said Steve Croft, a research astronomer with the Berkeley SETI Research Center and Breakthrough Listen. “Just as we do with our measurements of transmitters on extrasolar planets, we want to put a limit on what that number is.”

Regardless of the kind of SETI search, Siemion said, Breakthrough Listen looks for electromagnetic radiation that is consistent with a signal that we know technology produces, or some anticipated signal that technology could produce, and inconsistent with the background noise from natural astrophysical events. This also requires eliminating signals from cellphones, satellites, GPS, internet, Wi-Fi and myriad other human sources.

In Sheikh’s case, she turned the Green Bank telescope on each star for five minutes, pointed away for another five minutes and repeated that twice more. She then threw out any signal that didn’t disappear when the telescope pointed away from the star. Ultimately, she whittled an initial 1 million radio spikes down to a couple hundred, which she was able to eliminate as Earth-based human interference. The last four unexplained signals turned out to be from passing satellites.
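The ON/OFF cadence described above boils down to a simple set filter. The sketch below is a minimal illustration under assumed conventions, not Breakthrough Listen’s actual pipeline: each scan is modeled as a set of hypothetical integer frequency-channel numbers where a narrowband spike crossed some detection threshold, and the Doppler drift that real searches track from scan to scan is ignored. The function name `cadence_filter`, the channel numbers and the optional `require_all_on` criterion are illustrative inventions.

```python
from typing import Iterable, List, Set


def cadence_filter(scans: List[Set[int]], pattern: Iterable[str],
                   require_all_on: bool = False) -> Set[int]:
    """Keep channels seen while pointed ON the star and never while pointed OFF.

    scans: detections per scan (hypothetical channel numbers), in time order.
    pattern: an "ON"/"OFF" label for each scan.
    require_all_on: if True, a channel must appear in every ON scan
        (an assumed, stricter criterion not described in the article).
    """
    labels = list(pattern)
    if len(labels) != len(scans):
        raise ValueError("need exactly one ON/OFF label per scan")
    on_scans = [s for s, lab in zip(scans, labels) if lab == "ON"]
    off_scans = [s for s, lab in zip(scans, labels) if lab == "OFF"]
    if not on_scans:
        return set()
    # Anything detected while pointed away is treated as probable local interference.
    seen_off: Set[int] = set().union(*off_scans)
    if require_all_on:
        seen_on = set.intersection(*on_scans)
    else:
        seen_on = set().union(*on_scans)
    return seen_on - seen_off


# Five minutes on, five minutes off, three times: ON, OFF, ON, OFF, ON, OFF.
cadence = ["ON", "OFF"] * 3
scans = [
    {1200, 1450, 3001},  # ON
    {1450},              # OFF: 1450 persists off-target
    {1200, 1450},        # ON
    {1450, 2200},        # OFF
    {1200, 1450},        # ON
    {1450},              # OFF
]
print(sorted(cadence_filter(scans, cadence)))                       # [1200, 3001]
print(sorted(cadence_filter(scans, cadence, require_all_on=True)))  # [1200]
```

Channel 1450 shows up even when the telescope points away, so it is thrown out as probable terrestrial interference. The stricter `require_all_on` option also drops channel 3001, which appeared in only one ON scan. Surviving candidates would still need follow-up, much as Sheikh’s last four signals turned out to be passing satellites.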

Siemion emphasized that the Breakthrough Listen team intends to analyze all the data released to date and to do it systematically and often.

“Of all the observations we have done, probably 20% or 30% have been included in a data analysis paper,” Siemion said. “Our goal is not just to analyze it 100%, but 1000% or 2000%. We want to analyze it iteratively.”

Provided by University of California – Berkeley

Citation: Breakthrough Listen releases 2 petabytes of data from SETI survey of Milky Way (2020, February 14) retrieved 14 February 2020 from https://phys.org/news/2020-02-breakthrough-petabytes-seti-survey-milky.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.

#Space

Link

President Trump’s proposed U.S. fiscal 2018 budget, issued today, sharply cuts science spending while bolstering military spending, as he promised during the campaign. Among the big targets are the National Institutes of Health ($6 billion cut from its $34 billion budget), the Department of Energy ($900 million cut from the DOE Office of Science and elimination of the $300 million Advanced Research Projects Agency-Energy, or ARPA-E), the National Oceanic and Atmospheric Administration (five percent cut) and the Environmental Protection Agency ($2.6 billion cut, or 31.4 percent of its budget).

Perhaps surprisingly, the National Science Foundation – a key funding source for HPC research and infrastructure – was not mentioned in the budget. Science was hardly the only target. The Trump budget closely adhered to the administration’s “America First” tenets slashing $10 billion from the US Agency for International Development (USAID). Health and Human Services and Education are also targeted for cuts of 28.7 and 16.2 percent respectively.

One of the more thorough examinations of Trump’s proposed budget impact on science is presented in Science Magazine (NIH, DOE Office of Science face deep cuts in Trump’s first budget). The Wall Street Journal also offers a broad review of the full budget (Trump Budget Seeks Big Cuts to Environment, Arts, Foreign Aid) and noted the proposed budget faces bipartisan opposition and procedural hurdles:

“…Already, Republicans have voiced alarm over proposed funding cuts to foreign aid. In addition, Senate rules require 60 votes to advance the annual appropriations bills that set each department’s spending levels. Republicans control 52 Senate seats, meaning the new president will need support from Democrats to advance his domestic spending agenda.

“You don’t have 50 votes in the Senate for most of this, let alone 60,” said Steve Bell, a former GOP budget aide who is now a senior analyst at the Bipartisan Policy Center. “There’s as much chance that this budget will pass as there is that I’m going to have a date with Elle Macpherson.”

A broad chorus of concern is emerging. The Information Technology and Innovation Foundation (ITIF) posted its first take on President Trump’s budget. It cuts critical investment and eliminates vital programs, argues ITIF. The preliminary evidence suggests that the administration is taking its cues from a deeply flawed framework put forward by the Heritage Foundation.

Overall, ITIF says “The reality is that if the United States is going to successfully manage its growing financial problems and improve living standards for all Americans, it needs to increase its investment in the primary drivers of innovation, productivity, and competitiveness. The Trump budget goes in the opposite direction. If these cuts were to be enacted, they would signal the end of the American century as a global innovation leader.”

Nearly two years ago, in July 2015, the National Strategic Computing Initiative (NSCI) was established by an executive order from then-President Obama. It represents a grandly ambitious effort to nourish all facets of the HPC ecosystem in the U.S. That said, after the initial fanfare, NSCI has seemed to languish, although a major element, DOE’s Exascale Computing Project, continues marching forward. It’s not clear how the Trump Administration perceives NSCI, and to a large degree no additional funding has been funneled into the program since its announcement.

The budget arrives shortly after the release and media coverage of a December 2016 DOE-NSA Technical Meeting report declaring that underinvestment by the U.S. government in HPC and supercomputing puts U.S. computer technology leadership and national competitiveness at risk in the face of China’s steady ascent in HPC. (See HPCwire coverage, US Supercomputing Leaders Tackle the China Question)

The Department of Defense is one of the few winners in the proposed budget with a $53.2 billion jump (10 percent) in keeping with Trump campaign promises. At a top level, NASA is relatively unscathed but Science reports “At NASA, a roughly $100 million cut to the agency’s earth sciences program would be mostly achieved by canceling four climate-related missions, according to sources. They are the Orbiting Carbon Observatory-3; the Plankton, Aerosol, Cloud, ocean Ecosystem program; the Deep Space Climate Observatory; and the CLARREO Pathfinder. Overall, NASA receives a 1% cut.”

Obviously, it remains early days for the budget battle. There have been suggestions that the proposed cuts, some especially deep, are part of a broad strategy by the Administration to settle for lesser cuts but stronger buy-in from Congress on other Trump policy initiatives.

Link to Science Magazine coverage: http://ift.tt/2mvHCix

Link to WSJ article: http://ift.tt/2mvmqcA

Link to Nature coverage: http://ift.tt/2mw4TQx

The post Trump Budget Targets NIH, DOE, and EPA; No Mention of NSF appeared first on HPCwire.

via Government – HPCwire

Text

Big science receives $328-million boost from Ottawa

From The Globe and Mail: Some of Canada’s largest and most unique science labs and initiatives will share a $328-million top-up, federal Science Minister Kirsty Duncan announced on Monday. The funding, awarded through the Canada Foundation for Innovation, is intended to help keep the lights on at 17 key national facilities that cover a broad range of research disciplines. Major recipients include the Canadian Light Source ($48-million), a powerful X-ray beam based at the University of Saskatchewan that allows researchers to discern structures and processes at molecular scales; Ocean Networks Canada ($46.6-million), a University of Victoria-run series of sea-floor observatories that conduct ocean monitoring; and Canada’s Genomics Enterprise ($32-million), a three-institution collaboration based in Montreal, Toronto and Vancouver that sequences thousands of genomes a year.

MORE: http://www.theglobeandmail.com/news/national/big-science-receives-328-million-boost-from-ottawa/article33546056/

Photo

Pickering's Triangle image taken with the Mayall 4-meter Telescope

A wide-field image of Pickering's Triangle taken with the U.S. National Science Foundation's Mayall 4-meter Telescope at Kitt Peak National Observatory. Pickering's Triangle is part of the Cygnus Loop supernova remnant.

Credit: T.A. Rector/University of Alaska Anchorage, H. Schweiker/WIYN and NOIRLab/NSF/AURA (Available under Creative Commons Attribution 4.0 International)

#t a rector#university of alaska anchorage#h schweiker#wiyn#noirlab#national science foundation-ocean observatory initiative#aura#pickering's triangle#astronomy#space#mayall 4-meter telescope#cygnus loop supernova#nature
