#more ai commentary

Text

The ongoing AI battle. I had to unfollow yet another author and stop supporting their Patreon. It breaks my heart. It really does. I understand the excitement of “oh look, people made fanart”, but the cost is this: if you share AI Art, you promote it. You say it’s okay, that it’s something to be tolerated. You may not be able to stop people from doing it, but you sure as hell can stand with artists and not give it your platform.

AI Art is ART THEFT. Periodt.

Really stings with authors tho. Especially since I know so many struggle and feel burned when people leak their Patreon stuff. Don’t be a hypocrite. If you share and promote AI art, you’re no better than someone benefiting from someone else’s leak.

#not art#art adjacent#fuck ai art#ai art is stolen art#more ai commentary#sorry for the vent#this makes it so hard to want to keep making art#ty to everyone who follows and supports me#you keep me going when I feel like giving up

28 notes

Note

I think some of the support for Artbreeder comes from not realizing that the user base has added stolen art. Artbreeder did not start this way. It was people using portraits and filters, breeding long chains to get decent portraits. It became the standard portrait tool for the IF community (on Tumblr). That’s one reason so many look “similar”: they don’t have massive chains, and so they end up looking eerily familiar to each other.

I didn’t know exactly when the change occurred, when it went from just using people, stock photos, etc. to adding and abusing art. I hadn’t followed or researched it, and so I cosigned its use. There were updates, but they flew under the radar, especially next to larger companies that have done much worse. Add to that: unless a user goes through the history of a bred portrait, they may not know that it was influenced by stolen art. It’s really just sneaky poison.

The best anyone can do is try to educate. Ask if the user/author knew what is going on, and whether they checked the history. Just try to be patient. And don’t send death threats or try to verbally cut off their head. I know it’s infuriating, but not everyone is knowledgeable about this or doing it on purpose.

I typed an extremely long ask and then chickened out and deleted it. But I'm really disappointed by any IF author who chooses to use artbreeder as it is now. Users are allowed to upload anything, and it's obvious they're adding stolen art to the datasets. Tons of stolen art.

And here's where I chickened out because I don't know if this take is insane or not: if an author posts artbreeder portraits fully knowing it's full of stolen art because they can't afford commissions and it's convenient, how do they feel about people who steal side stories and early access off of an author's patreon because they "can't afford it and can't wait"?

I hate indie piracy btw, I just can't escape this idea that people care less about artbreeder theft because they don't know the people affected.

[posting as is]

[note: I think we exhausted the topic for now. Unless someone has a take that is different to the ones already posted, I'll pause posting asks about this topic.]

#not art#more ai commentary#don’t send death threats to authors#or anyone#ai sucks#but we can learn from each other#and do better

14 notes

Text

Wish fervent AI tech enthusiasts would understand that it's nice to do something manually (e.g. make art on your own, even if it's not up to par with what you'd like to make), because otherwise your brain will rot.

#commentary#i can understand the part where ai stuff can be used as a potential tool#but i feel like people have been considering it more as a replacement altogether#at least that's what it seems like from what i've seen since last year up to now#i also fully endorse literally anyone picking up art and this recent movement should be an even better motivator to do it#also this shit needs tight regulation asap

91 notes

Text

for the electric dreams remake, all i care about is that edgar has the most obnoxious early 2000s internet aesthetic possible. i repeat, THE MOST OBNOXIOUS. 2 billion blinkies, terrible gradients, bad contrast, absolutely unreadable bullshit. if modern tech minimalism comes anywhere near that entire movie i’m going to throw up

#i know it’s inevitable that they’re gonna make him a chatgpt alexa#and it’s gonna have like modern ai commentary#but you gotta understand. the aesthetic of that movie is one of its main draws#if you HAVE to make it modern#make it modern in a different way than the bland apple aesthetic i BEG#sorry to my trekkie followers i am hyperfixating on this movie. you will look at it#electric dreams#electric dreams 1984#edgar electric dreams#edgar#COUGH ALSO MAKE HIM QUEER. MAKE THE WHOLE MOVIE EXPLICITLY QUEER.#MAKE IT BI AND POLYAM#BUT LETS FACE IT. THEYRE COWARDS#SO IF THEY DONT#AT LEEEAST REMOVE THE MALE GAZEY SHIT AND MAKE THE PLOT BE MORE COHESIVE#and make miles actually face the consequences of his actions#fix the problems the movie already has DONT MAKE NEW ONES#AAUUUUHGHHH#rainspeak

118 notes

Text

want to give my two cents on the AI usage in the maestro trailer--

i think seventeen doing a whole concept that is anti-AI is very cool, especially as creatives themselves i think it's good that they're speaking up against it and i hope it gets more ppl talking about the issue. i also understand on a surface level the artistic choice (whether it was made by the members, the mv director, or whoever else), to directly use AI in contrast to real, human-made visuals and music in order to criticize it. i also appreciate that they clearly stated the intention of the use of AI at the beginning of the video

however, although i understand it to an extent, i do not agree with the choice to use AI to critique AI. one of the main ethical concerns with generative AI is that it is trained on other artists' work without their knowledge, consent, or compensation. and even when AI generated images are being used to critique AI, it still does not negate this particular ethical concern

the use of AI to critique also does not negate the fact that this is work that could have been done by an actual artist. i have seen some people argue that it's okay in this context because it's a critique specifically about AI, and it is content that never would have been done by a real artist anyway because it doesn't make sense for the story they're trying to tell. but i disagree. i think you can still tell the exact same story without using AI

and in fact, i would argue that it would make the anti-AI message stronger if they HAD paid an artist to draw/animate the scenes that are supposed to represent AI generated images. wouldn't it just be proof that humans can create images that are just as bad and nonsensical and soulless as AI, but that AI can't replicate the creativity and beauty and basic fucking anatomy that's in human-made art?

it feels very obvious this was not just a way to cut corners and costs like a lot of scummy people are using AI for. ultimately it was a very intentional creative decision, i just personally think it was a very poor one. and even if some ethical considerations were taken into account before this decision, i certainly don't think all of them were. at the very least i feel like the decision undermines the message they want to convey

i would also like to recognize that i myself am not an artist, and i have seen some artists that are totally on board with the use of AI in this specific context, so clearly this is not a topic that is cut and dry. but generative AI is still new, and i think it's important to keep having these conversations

#melia.txt#also want to add that as musicians svt are more directly threatened by AI generated audio than they are by AI generated images#and yet AI generated images is what was used in the video#and i guess the MV director/production company are the ones directly responsible for putting that in there#whether it was their initial idea or not#and they work in a visual medium so perhaps that makes it more 'fair' but idk it just feels like#the commentary is around music. which makes sense. and using human produced music/sound#but then taking advantage of AI images#idk just feels weird#i mean i don't like it either way#like i said in the main post i understand the intention behind the creative decision#and i'm still happy svt are speaking against ai at all i do think overall they're doing a good thing here#i just don't agree with the creative decision they/the production company/whoever made#edit: deleted the part about not boycotting svt over this bc ppl were commenting about boycotting bc of the 🛴 stuff#i meant specifically /I/ am not calling for a boycott because of specifically the ai stuff#was just trying to make a general point that im not making this post bc i want to sabotage svt or whatever#bc kpop fans love to pull that card whenever u criticize anything#so yeah just removed that bit bc i dont want ppl getting confused what im talking about#respect ppl boycotting because of scooter/israel stuff but thats not what this post was intended to be about#edit 2: turning off reblogs bc im going to bed and having a somewhat controversial post up is not gonna help me sleep well lol#may or may not turn rb's back on in the morning

49 notes

Text

毎日 ("Every Day") | Kenshi Yonezu

あなただけ側にいてレイディー 焦げるまで組み合ってグルービー 日々共に生き尽くすには また永遠も半ばを過ぎるのに 駆けるだけ駆け出してブリージング 少しだけ祈ろうぜベイビー 転がるほどに願うなら 七色の魔法も使えるのに

///

Lady, just stay by my side / Lock together until we burn, groovy / To live out each day together / Even though eternity is already half over / Just run as far as we can, breathing / Let's pray a little, baby / If we wish so hard we're rolling around / We could even use seven-colored magic

#毎日#every day#米津玄師#kenshi yonezu#lost corner#音楽 (music)#gif#my gifs#when i tell you that i've wanted to gif this mv since its release !#just like the lyrics tho#毎日 毎日 毎日 毎日 僕は僕なりに頑張ってきたのに!!!! (every day every day every day every day, even though i've been doing my best in my own way!!!!)#i felt this so much during the job search & feel it even more right now#being so drained after work that there's basically no time or energy for creative pursuits has been unbelievably frustrating#so it's nice to have a song which acknowledges those feelings#and is also like 'you must persist regardless!'#so here we are months later >.<#despite the obvious tension in the lyrics i love how upbeat this song is#the parts where he goes ぢっ! and ハイホー!ハイホー! (heigh-ho! heigh-ho!)#🤸🚀🗓️💡#very interesting that this song was largely inspired by the creative process (and all the struggles it entails)#bc i think it goes hand in hand with the themes of post human & the commentary kenshi yonezu made about ai#how ai lacks that most crucial element#that the process is centered around joy more than anything and will continue to be

13 notes

Text

tf is this ai generated commentary for the us open don't they know tennisblr is liveblogging all i need to know????

#seriously though cant they. hire more commentators jesus christ#fuckass ai voice wheres the PASSION#tennis#sorry i saw their little instastory about it mr agassi r they holding a gun to ur head#once again asking for tennisblr commentary booth

7 notes

Text

[YouTube video: 'Reddit's Trojan Horse']

Stumbled on this - so for anyone out of the loop, part of why Reddit blew up last year was that it made use of its API prohibitively expensive for the average person, killing off a lot of (superior) third-party apps used to both browse and moderate the platform on mobile.

I don't know if it was stated explicitly at the time, but for me the writing was on the wall - this was purely to fence off Reddit's data from being trawled by web scraping bots - exactly the same thing Elon Musk did when he took over Twitter so he could wall off that data for his own AI development.

So it comes as absolutely zero surprise to me that in Reddit's IPO filing, AI and LLMs (large language models) are mentioned SEVERAL times. This is all to tempt public buyers.

What they do acknowledge, though (which is why this video is titled 'Reddit's Trojan Horse'), is that while this might work and be worth a lot initially, as the use of AI grows, so will the likelihood of AI-generated content being passed off as 'human-generated' on the platform, essentially nullifying the value of having a user-generated dataset, if not actively MAKING IT WORSE.

As stated in the video, it's widely known that training an AI on AI-generated content causes 'model collapse': complete degeneration into gibberish and 'hallucinations'. This goes for both LLMs and image-generation AI.
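To make the idea a bit more concrete, here is a toy statistical analogy, not how LLMs or image generators actually work: assume each model "generation" is just a Gaussian fitted to a handful of samples drawn from the previous generation's fit, with no fresh human data ever added. The function name `simulate_collapse` and its parameters (10 samples per generation, 200 generations) are illustrative assumptions. Because each refit only sees a small sample, it tends to underestimate the spread, and those errors compound, so the distribution narrows toward a single point.

```python
import random
import statistics


def simulate_collapse(generations=200, samples_per_gen=10, seed=0):
    """Toy model-collapse sketch (hypothetical helper): each generation fits a
    Gaussian (sample mean and population std) to samples drawn only from the
    previous generation's fit, never seeing the original data again."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # stand-in for the original "human" data distribution
    history = [sigma]
    for _ in range(generations):
        # The next "model" trains only on the current model's output.
        samples = [rng.gauss(mu, sigma) for _ in range(samples_per_gen)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)  # biased low for small samples
        history.append(sigma)
    return history


stds = simulate_collapse()
print(f"start std: {stds[0]:.3f}, after {len(stds) - 1} generations: {stds[-1]:.3g}")
```

With these settings the spread typically shrinks by many orders of magnitude. Collapse in real models is messier, but it is often described in similar terms: the tails of the original distribution disappear first.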

Now, given current estimates that 90% of the internet's content will be AI-generated by 2026, most of the internet is going to turn into a potential minefield for anyone web-scraping content to shove into a training dataset. You have to really start paying attention to what your bot is sucking up, because let's face it, no one is really going to look at what is in that dataset; it's simply too huge (unless you're one of those poor people in Kenya being paid jack shit to basically weed out the most disgusting and likely traumatizing content from a massive dataset).

What I know about current web-scraping is that OpenAI, at least, has built its bot to recognize AI-generated image content and exclude it from the scrape. An early version of image protection on the side of artists was something like this: it basically injected a little bit of data to make the bot think the image was AI-generated and leave it alone. Now, of course, we have Glaze and Nightshade, which actively work against training the model (Glaze by cloaking an artist's style, Nightshade by 'poisoning' the dataset), making Model Collapse worse.

So right now, the best way to protect your images (and I mean all images you post online publicly, not just art) from being scraped is to Glaze/Nightshade them: either these bots will be programmed to avoid them, or, if not, good news! You poisoned the dataset.

What I was kind of stumped on is language models. While feeding LLMs their own output also causes Model Collapse, it's harder to understand why. With an image it makes sense: it's all 1s and 0s to a machine, and there is some underlying pattern within that data which gets further reinforced and contributes to the Model Collapse. But with text?

You can't really Nightshade/Glaze text.

Or can you?

Much like with images, there is clearly something about the way an LLM chooses words and letters that forms a similar pattern, one that contributes to Model Collapse when it gets reinforced. It may read perfectly fine to us, but in a way that text is poisoned for the AI. There's talk of trying to find a way to 'watermark' generated text, but they probably won't figure that one out any time soon, given they're not really sure how it's happening in the first place. But AI has turned into a global arms race of development; they need data and they need it yesterday.

For those who want to disrupt LLMs, I have a proposal: get your AI to reword your shit. Just a bit. Just enough that it's got this pattern injected.

These companies have basically opened Pandora's Box to the internet before even knowing this would be a problem - they were too focused on getting money (surprise! It's capitalism again). And well, Karma's about to be a massive bitch to them for rushing it out the door and stealing a metric fucktonne of data without permission.

If they want good data? They will have to come to the people who hold the good data, in its untarnished, pure form.

I don't know how accurate this language poisoning method could be, I'm just spitballing hypotheticals here based on the stuff I know and current commentary in AI tech spaces. Either way, the tables are gonna turn soon.

So hang in there. Don't let corpos convince you that you don't have control here - you soon will have a lot of control. Trap the absolute fuck out of everything you post online, let it become a literal minefield for them.

Let them get desperate. And if they want good data? Well they're just going to have to pay for it like they should have done in the first place.

Fuck corpos. Poison the machine. Give them nothing for free.

#kerytalk#anti ai#honestly the fact that language models can't identify their own text should have hit me a LOT sooner#long post#Sorry I am enjoying the fuck out of this and the direction it's going in - like for once Karma might ACTUALLY WORK#especially enjoying it since yeah AI image generation dropping killed my creative motivation big time and I'm still struggling with it#these fuckers need to pay#fuck corpos#tech dystopia#my commentary#is probably a more accurate tag I'll need to change to#Youtube

6 notes

Text

This is what it feels like being a Hex fan sometimes. Like, it's not even a twitter issue (and in a sense it's not necessarily an issue; sometimes ppl just wanna have fun n not spout theories n discussion 24/7! I get that!), but i feel like a lot of people r quick to write off aspects of the game and characters and insist that, despite it being a Mullinsverse title, it's not that deep/things are surface level

#This goes for a lot of characters. you can already guess one of them since i defend his honor constantly here#but like. I've seen a lot of people brush off Irving as just 'the big bad' and insist theres nothing deeper to his character#than just being an abusive villain#he very much IS an abusive villain. Irving is not Irving if he isn't a metaphor for abuse in the video game industry#but that doesnt mean you can't. lets say. interpret his relationship with Lionel as something more than just 'ai assistant n his dev'#that doesnt mean you cant read in between the lines n point out *he cares about Lionel. and that a good chunk of his motivations r bc of him#Irving is rightfully hated but often times I get. nervous. that one of these days someone is gonna accuse me of being a sympathizer#or making him act 'ooc' or giving him grace when he doesnt deserve it#bc god forbid someone in a game where every character has layers. has layers#regardless: no discussion about Irving really leaves raw hatred or 'omg hes hot' anyways#Bryce is also a good example cause when he's not being a horrible yaoi fodder victim#Everyone just says 'Oh hes so nice! He's so kind! I feel bad for him!' and moves on#No one wants to pick apart that he's not a pure angelic soul who gets pushed around. that hes nuanced#and that there is very much commentary that ties to his black identity and the forced role of 'fighter'#To many Bryce's story is simple; got put in CAX and then he got out n granny died and it was REALLY bad#and then they dont care to analyze him further than that. i understand that the nature of the game leaves some characters underdeveloped#But there is still very much a lot to pick apart with every character in the game; sadly a lot of people don't care to do anything w/ it#feels like en masse the fandom has this air of anti-discussion despite the source material. idk man idk#Im goin to bed early lol ive been tired ever since i got back from school#but yeah. my opinions

2 notes

Text

Ah, it seems my counterpart is verbal once more. Hello there, 079.

--O5-13-ii, "The Old Ai"

7 notes

Text

I don't understand using AI to roleplay (with other people; chatbots are fine). Where's the fun in having your replies written for you?

#( out of character. )#( dash commentary. )#( i mean i guess if youre lazy but like... can the ai put the same love into writing replies like a human?#( also if it doesnt make sense isnt it more work to go in and fix it

3 notes

Text

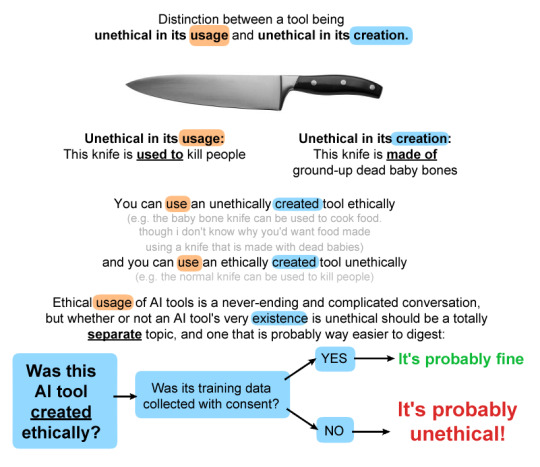

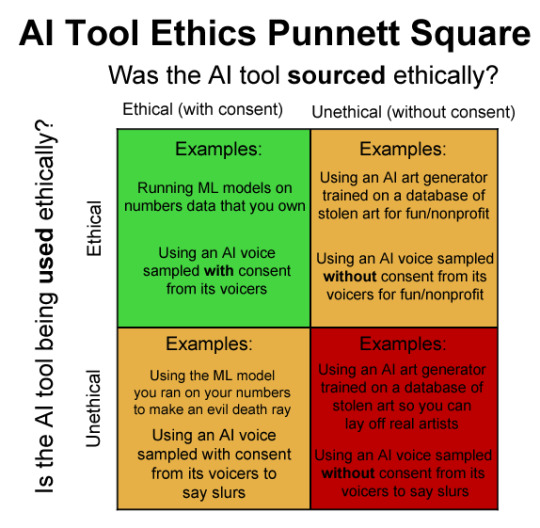

i feel like these graphics by @/ricedeity on twitter are really good descriptions of ethical AI vs unethical AI for those who don't understand the difference.

Some of y'all will see the word "AI" and freak out without actually processing anything that's being said like a conservative reading the word "pronouns"

#reblogs#commentary#ai#not to mention there is like#sooo much more to ai than midjourney and stablediffusion or whatever#its not just generative ai that exists#thats just the big buzz thats going around right now#ai has been around since before 2020#ai has been around since computers were born

30K notes

Text

More about the novel's setting

Part 1 - Plot of Ms. Orange

The important thing you have to know is that the story is set in a cartoony world, where the characters have weird names and some things are exaggerated. To give you an idea, think of the cartoons you watched as a kid.

Why is the setting like this? There's an in-universe explanation: the author (the character, not me) had planned to write a children's book, but when Orange discovers the truth, the novel starts presenting slightly serious topics. Let me clarify that the book doesn't feature anything inappropriate or horror-related.

My idea is to make the reader believe at first that they're reading a children's book, but soon they realize that the book is actually aimed at an older audience (I assume 12- to 15-year-olds will find it interesting).

Now, I'll give a more detailed explanation:

The novel is set in 2060 in a fictitious city called Utopia, which is famous for being the safest city in the world; that's why Orange decides to live there.

Besides its high level of safety, the city is also known for the scientific breakthroughs made by the scientists born there. Because of this and other factors, young people are highly fascinated by science, but they especially admire a scientist who is dedicated to robotics and is president of a well-known science club.

Speaking of robotics, one of the city's defining characteristics is the presence of robots everywhere, but this isn't noticeable at first because they look identical to humans. Although this may sound horrifying to people living outside Utopia, the citizens have found a way to coexist with the robots, incorporate them into their daily lives and see them as something good for society.

However, all the things that make this city unique will be put at stake as the story progresses.

#my post#ms orange#novel writing#novel wip#worldbuilding#writers on tumblr#2nd translated post from the writing blog#i started writing the novel in 2019 (before ai became a serious problem)#i don't intend to do a lot of social commentary. the main idea of the setting is to give more context to the villain's background#(that's all i can say for now)

0 notes

Text

i think black mirror is arguably one of the harder fandoms to be in regarding discussion. there's not enough people talking about it. there's too many people talking about the same things. all discourse is wrong. all discourse is correct. you're ostracized for liking [x] episodes because they don't follow the typical episode format. and yet, the episodes have no typical format. be scared by the episodes. but also there's no point in being scared because they're resemblant of real life. black mirror is groundbreaking. but you're not allowed to like anything groundbreaking, because again, made-up episode format.

#black mirror#it was never just about technology guys#pretty sure the original writers said it was always meant to be a social commentary on general society#and anyway#as technology breaks new boundaries and becomes even more of a staple in our society#there's only so much you can do to make it scary that the general public isn't already scared of#i can only see ai rebel against humanity so many times#i'm already desensitized to the possibility of robots taking over the world

1 note

Text

What kind of bubble is AI?

My latest column for Locus Magazine is "What Kind of Bubble is AI?" All economic bubbles are hugely destructive, but some of them leave behind wreckage that can be salvaged for useful purposes, while others leave nothing behind but ashes:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Think about some 21st century bubbles. The dotcom bubble was a terrible tragedy, one that drained the coffers of pension funds and other institutional investors and wiped out retail investors who were gulled by Super Bowl ads. But there was a lot left behind after the dotcoms were wiped out: cheap servers, office furniture and space, but far more importantly, a generation of young people who'd been trained as web makers, leaving nontechnical degree programs to learn HTML, Perl and Python. This created a whole cohort of technologists from non-technical backgrounds, a first in technological history. Many of these people became the vanguard of a more inclusive and humane tech development movement, and they were able to make interesting and useful services and products in an environment where raw materials – compute, bandwidth, space and talent – were available at firesale prices.

Contrast this with the crypto bubble. It, too, destroyed the fortunes of institutional and individual investors through fraud and Super Bowl ads. It, too, lured in nontechnical people to learn esoteric disciplines at investor expense. But apart from a smattering of Rust programmers, the main residue of crypto is bad digital art and worse Austrian economics.

Or think of Worldcom vs Enron. Both bubbles were built on pure fraud, but Enron's fraud left nothing behind but a string of suspicious deaths. By contrast, Worldcom's fraud was a Big Store con that required laying a ton of fiber that is still in the ground to this day, and is being bought and used at pennies on the dollar.

AI is definitely a bubble. As I write in the column, if you fly into SFO and rent a car and drive north to San Francisco or south to Silicon Valley, every single billboard is advertising an "AI" startup, many of which are not even using anything that can be remotely characterized as AI. That's amazing, considering what a meaningless buzzword AI already is.

So which kind of bubble is AI? When it pops, will something useful be left behind, or will it go away altogether? To be sure, there's a legion of technologists who are learning TensorFlow and PyTorch. These nominally open source tools are bound, respectively, to Google and Facebook's AI environments:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

But if those environments go away, those programming skills become a lot less useful. Live, large-scale Big Tech AI projects are shockingly expensive to run. Some of their costs are fixed – collecting, labeling and processing training data – but the running costs for each query are prodigious. There's a massive primary energy bill for the servers, a nearly as large energy bill for the chillers, and a titanic wage bill for the specialized technical staff involved.

Once investor subsidies dry up, will the real-world, non-hyperbolic applications for AI be enough to cover these running costs? AI applications can be plotted on a 2X2 grid whose axes are "value" (how much customers will pay for them) and "risk tolerance" (how perfect the product needs to be).

Charging teenaged D&D players $10/month for an image generator that creates epic illustrations of their characters fighting monsters is low value and very risk tolerant (teenagers aren't overly worried about six-fingered swordspeople with three pupils in each eye). Charging scammy spamfarms $500/month for a text generator that spits out dull, search-algorithm-pleasing narratives to appear over recipes is likewise low-value and highly risk tolerant (your customer doesn't care if the text is nonsense). Charging visually impaired people $100/month for an app that plays a text-to-speech description of anything they point their cameras at is low-value and moderately risk tolerant ("that's your blue shirt" when it's green is not a big deal, while "the street is safe to cross" when it's not is a much bigger one).

Morgan Stanley doesn't talk about the trillions the AI industry will be worth some day because of these applications. These are just spinoffs from the main event, a collection of extremely high-value applications. Think of self-driving cars or radiology bots that analyze chest x-rays and characterize masses as cancerous or noncancerous.

These are high value – but only if they are also risk-tolerant. The pitch for self-driving cars is "fire most drivers and replace them with 'humans in the loop' who intervene at critical junctures." That's the risk-tolerant version of self-driving cars, and it's a failure. More than $100b has been incinerated chasing self-driving cars, and cars are nowhere near driving themselves:

https://pluralistic.net/2022/10/09/herbies-revenge/#100-billion-here-100-billion-there-pretty-soon-youre-talking-real-money

Quite the reverse, in fact. Cruise was just forced to quit the field after one of their cars maimed a woman – a pedestrian who had not opted into being part of a high-risk AI experiment – and dragged her body 20 feet through the streets of San Francisco. Afterwards, it emerged that Cruise had replaced the single low-waged driver who would normally be paid to operate a taxi with 1.5 high-waged skilled technicians who remotely oversaw each of its vehicles:

https://www.nytimes.com/2023/11/03/technology/cruise-general-motors-self-driving-cars.html

The self-driving pitch isn't that your car will correct your own human errors (like an alarm that sounds when you activate your turn signal while someone is in your blind-spot). Self-driving isn't about using automation to augment human skill – it's about replacing humans. There's no business case for spending hundreds of billions on better safety systems for cars (there's a human case for it, though!). The only way the price-tag justifies itself is if paid drivers can be fired and replaced with software that costs less than their wages.

What about radiologists? Radiologists certainly make mistakes from time to time, and if there's a computer vision system that makes different mistakes than the sort that humans make, they could be a cheap way of generating second opinions that trigger re-examination by a human radiologist. But no AI investor thinks their return will come from selling hospitals that reduce the number of X-rays each radiologist processes every day, as a second-opinion-generating system would. Rather, the value of AI radiologists comes from firing most of your human radiologists and replacing them with software whose judgments are cursorily double-checked by a human whose "automation blindness" will turn them into an OK-button-mashing automaton:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

The profit-generating pitch for high-value AI applications lies in creating "reverse centaurs": humans who serve as appendages for automation that operates at a speed and scale that is unrelated to the capacity or needs of the worker:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

But unless these high-value applications are intrinsically risk-tolerant, they are poor candidates for automation. Cruise was able to nonconsensually enlist the population of San Francisco in an experimental murderbot development program thanks to the vast sums of money sloshing around the industry. Some of this money funds the inevitabilist narrative that self-driving cars are coming, it's only a matter of when, not if, and so SF had better get in the autonomous vehicle or get run over by the forces of history.

Once the bubble pops (all bubbles pop), AI applications will have to rise or fall on their actual merits, not their promise. The odds are stacked against the long-term survival of high-value, risk-intolerant AI applications.

The problem for AI is that while there are a lot of risk-tolerant applications, they're almost all low-value; while nearly all the high-value applications are risk-intolerant. Once AI has to be profitable – once investors withdraw their subsidies from money-losing ventures – the risk-tolerant applications need to be sufficient to run those tremendously expensive servers in those brutally expensive data-centers tended by exceptionally expensive technical workers.
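The 2x2 grid described above can be sketched as a small classification. This is a minimal sketch, assuming a three-level scale (low/moderate/high) that simplifies the column's wording, with "moderately risk tolerant" folded into the risk-tolerant half. It encodes only the examples and labels the column itself gives; the names `Level`, `APPLICATIONS`, `quadrant` and `build_grid` are hypothetical. Laid out this way, the column's point is visible at a glance: none of its examples land in the high-value, risk-tolerant cell.

```python
from collections import defaultdict
from enum import Enum


class Level(Enum):
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"


# Examples from the column, labeled with the column's own qualitative judgments:
# (value = what customers will pay, risk tolerance = how imperfect output may be)
APPLICATIONS = {
    "D&D character art": (Level.LOW, Level.HIGH),
    "SEO spam text": (Level.LOW, Level.HIGH),
    "scene descriptions for blind users": (Level.LOW, Level.MODERATE),
    "self-driving cars": (Level.HIGH, Level.LOW),
    "radiology bots": (Level.HIGH, Level.LOW),
}


def quadrant(value, tolerance):
    """Map three-level labels onto the 2x2 grid; only LOW tolerance counts
    as risk-intolerant (an assumption made for this sketch)."""
    v = "high value" if value is Level.HIGH else "low value"
    t = "risk-intolerant" if tolerance is Level.LOW else "risk-tolerant"
    return (v, t)


def build_grid(apps):
    """Group application names by their cell in the 2x2 grid."""
    grid = defaultdict(list)
    for name, (value, tolerance) in apps.items():
        grid[quadrant(value, tolerance)].append(name)
    return grid


grid = build_grid(APPLICATIONS)
CELLS = [
    ("high value", "risk-tolerant"),
    ("high value", "risk-intolerant"),
    ("low value", "risk-tolerant"),
    ("low value", "risk-intolerant"),
]
for cell in CELLS:
    # .get() on a defaultdict does not create missing keys, so empty cells print []
    print(f"{cell[0]}, {cell[1]}: {grid.get(cell, [])}")
```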

If they aren't, then the business case for running those servers goes away, and so do the servers – and so do all those risk-tolerant, low-value applications. It doesn't matter if helping blind people make sense of their surroundings is socially beneficial. It doesn't matter if teenaged gamers love their epic character art. It doesn't even matter how horny scammers are for generating AI nonsense SEO websites:

https://twitter.com/jakezward/status/1728032634037567509

These applications are all riding on the coattails of the big AI models, which are being built and operated at a loss in the hope of eventually becoming profitable. If they remain unprofitable long enough, the private sector will no longer pay to operate them.

Now, there are smaller models, models that stand alone and run on commodity hardware. These would persist even after the AI bubble bursts, because most of their costs are setup costs that have already been borne by the well-funded companies who created them. These models are limited, of course, though the communities that have formed around them have pushed those limits in surprising ways, far beyond their original manufacturers' beliefs about their capacity. These communities will continue to push those limits for as long as they find the models useful.

These standalone, "toy" models are derived from the big models, though. When the AI bubble bursts and the private sector no longer subsidizes mass-scale model creation, it will cease to spin out more sophisticated models that run on commodity hardware (it's possible that federated learning and other techniques for spreading out the work of making large-scale models will fill the gap).

So what kind of bubble is the AI bubble? What will we salvage from its wreckage? Perhaps the communities who've invested in becoming experts in PyTorch and TensorFlow will wrestle them away from their corporate masters and make them generally useful. Certainly, a lot of people will have gained skills in applying statistical techniques.

But there will also be a lot of unsalvageable wreckage. As big AI models get integrated into the processes of the productive economy, AI becomes a source of systemic risk. The only thing worse than having an automated process that is rendered dangerous or erratic based on AI integration is to have that process fail entirely because the AI suddenly disappeared, a collapse that is too precipitous for former AI customers to engineer a soft landing for their systems.

This is a blind spot in our policymakers' debates about AI. The smart policymakers are asking questions about fairness, algorithmic bias, and fraud. The foolish policymakers are ensnared in fantasies about "AI safety," AKA "Will the chatbot become a superintelligence that turns the whole human race into paperclips?"

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

But no one is asking, "What will we do if" – when – "the AI bubble pops and most of this stuff disappears overnight?"

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/12/19/bubblenomics/#pop

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

tom_bullock (modified) https://www.flickr.com/photos/tombullock/25173469495/

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/


In other uncanny-valley AI voice news...

Google has this new thing called "NotebookLM," which allows you to upload any document, click a button, and then a few minutes later receive an entire AI-generated podcast episode (!) about the document. The generation seems to occur somewhat faster than real-time.

(This is currently offered for free as a demo; all you need is a Google account.)

These podcast episodes are... they're not, uh, good. In fact, they're terrible – so cringe-y and inane that I find them painful to listen to.

But – unlike with the "AI-generated content" of even the very recent past – the problem with this stuff isn't that it's unrealistic. It's perfectly realistic. The podcasters sound like real people! Everything they say is perfectly coherent! It's just coherently ... bad.

It's a perfect imitation of superficial, formulaic, cringe-y media commentary podcasts. The content isn't good, but it's a type of bad content that exists, and the AI mimics it expertly.

The badness is authentic. The dumb shit they say is exactly the sort of dumb shit that humans would say on this sort of podcast, and they say it with the exact sorts of inflections that people would use when saying that dumb shit on that sort of podcast, and... and everything.

(Advanced Voice Mode feels a lot like this too. And – much as with Advanced Voice Mode – if Google can do this, then they can presumably do lots of things that are more interesting and artistically impressive.

But even if no one especially likes this kind of slop, it's highly inoffensive – palatable to everyone, not likely to confuse anyone or piss anyone off – and so it's what we get, for now, while these companies are still cautiously testing the waters.)

----

Anyway.

The first thing I tried was my novel Almost Nowhere, as a PDF file.

This seemed to throw the whole "NotebookLM" system for a loop, to some extent because it's a confusing book (even to humans), but also to some extent because it's very long.

I saw several different "NotebookLM" features spit out different attempts to summarize/describe it that seemed to be working off of different subsets of the text.

In the case of the generated podcast, the podcasters appear to have only "seen" the first 8 (?) chapters.

And their discussion of those early chapters is... like I said, pretty bad. They get some basic things wrong, and the commentary is painfully basic even when it's not actually inaccurate. But it's still uncanny that something like this is possible.

(Spoilers for the first ~8 chapters of Almost Nowhere)

The second thing I tried was my previous novel, The Northern Caves.

The Northern Caves is a much shorter book, and there were no length-related issues this time.

It's also a book that uses a found-media format and includes a fictitious podcast transcript.

And, possibly because of this, NotebookLM "decided" to generate a podcast that treated the story and characters as though they existed in the real world – effectively, creating fanfiction as opposed to commentary!

(Spoilers for The Northern Caves.)

----

Related links:

I tried OpenAI's Advanced Voice Mode ChatGPT feature and wrote a post about my experiences

I asked NotebookLM to make a podcast about my Advanced Voice Mode post, with surreal results

Tumblr user ralfmaximus takes this to the limit, creating a NotebookLM podcast about the very post you're reading now

#“ready to dig into something different today? we're going to be looking at leonard salby. you know him... he wrote 'a thornbush tale.'”#ai tag#almost nowhere#the northern caves
