#literally no-one asked for these ai tools btw


so discord decided not to learn anything from last year’s failed crypto/nft integration, as they’re now planning to add ai chatbots and ai tools to their godforsaken app.

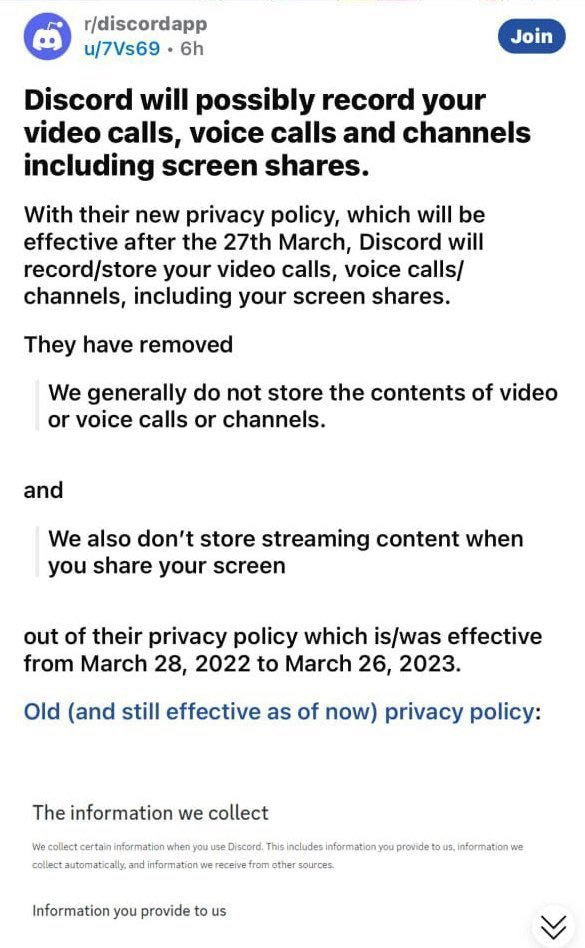

they’re also changing their tos to reverse their protection policies on recording our data

i’m heavily advising anyone with sensitive, private or nda information on their discords to think about hosting it elsewhere, as discord will likely suck it all up indiscriminately to feed their new ai chatbot. this is a major fuckup on discord’s end and incredibly tone-deaf to what their userbase consists of.

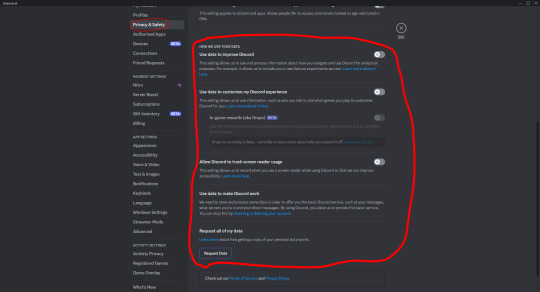

i also recommend, while you still can, disabling any data analysis you have enabled in privacy and security. it’s on by default for many users. hell, i didn’t even know i had it on in the first place.

it’s time to bully companies again into not making stupid, tone-deaf decisions. discord needs another lesson.

#literally no-one asked for these ai tools btw#aside from techbros#i guess all ears on them now and not the rest of your userbase of just average normal people#we've been asking for a higher file size limit since forever and they focus on a harmful fad instead#great to know they legitimately don't have our best interest in mind#discord#discord app


btw moot, I’ll happily delete this if you ask. I want to chime in as someone with a disability that affects my drawing (dysgraphia).

the main criticism of AI art isn’t about artificial art itself. I actually think that’s super cool and awesome if done right.

(In a way that isn’t based on others’ art, just random ai making things with no references. Genuinely the best thing ever, it makes my heart melt; robots are cool, and them actually fucking around with paint is awesome.)

but the main criticism is actually that the art the “ai” is trained on is used completely against the wishes of both authors and artists.

like it’s not that ai art is bad lol, it’s that machine learning art cannot exist without being trained on others’ art, or on art made especially for the machine learning model to train on.

(Which no one is doing lol.)

again, using the word Ai for what is actually machine learning is kinda misleading, and it makes robotic art and actual real (but dumb) ai art out to be evil or bad when they’re just not.

it’s just that our current ai revolution is built on the backs of plagiarism, art theft, and using artists’ work without their consent.

because modern “ai” is just a glorified autocorrect, and its stolen art and writing are so compelling to us that it’s hard to believe these programs aren’t sentient.

But in terms of sentience, they are like the equivalent of a virus, if a virus could talk: just copying and mutating and evolving, but not really sentient with thoughts yet. (IN MY OWN PERSONAL OPINION)

that’s actually the main criticism.

but even with disabilities that affect drawing and movement, disabled people don’t actually care. We love drawing and making art even if it’s wonky! Even if it hurts, because it’s just what art is about yk?

it’s not restricted by disability really, because art exists in all forms. And there are many different ways around it that allow us to draw and make art!

again, it’s kinda an ableist talking point that gets thrown around in the discourse.

but I don’t blame you at all for not knowing, disability affects us all differently!

but being a disabled artist is actually extremely common, as a job and as a career! Because you can go at your own pace and do it as safely and accessibly as you can.

but robotic art and, like, actual ai art are actually fine! It’s just that the way machine learning art currently works is genuinely built on plagiarism and art theft, and that’s the bad part.

because generative art is actually a useful tool for people who want to test out different ideas and concepts, but the way it’s used now?

not very good, because of the aforementioned art theft and plagiarism.

but also, on the flip side, with written art? It’s very exploitative and predatory toward the people who love the “ai”, and the companies behind it charge money so that people’s chatbot “ai” friends and lovers aren’t literally taken away from them.

which I think is important to add to the discussion and conversation, my thoughts on it are mostly pro-ai but anti-machine learning art.

which are very different things, but that’s my opinion on it.

hope you don’t mind this awful awful reblog!

i’ve always been open to the constructive aspects of generative ai but there’s a lot of… dissonance. on one hand i understand that all art is derivative and there’s no such thing as true originality but on the other hand, it just irks me to hear the phrase “established AI artist.” like… it just fills me with Questions. and i’m trying to find some kind of middle ground because i’m very prone to instantly being vehemently “anti” or “pro” whatever side of any issue i care about.

i’d like to validate the accessibility point. as much as i say “if you can’t draw but want to be able to draw, you should draw anyway and you will naturally learn how to draw,” i see the other side. someone with tremors or hypermobility or any number of conditions that would disable someone from learning to draw by hand could absolutely find genuine value in ai art. i don’t want to take that away from anyone. i know some people also don’t have the time or energy or motivation to actually learn to draw. that’s also ok, not everyone has to be an artist. and not all artists have to do everything the same way.

learning how to create prompts is a skill; knowing about the model and program that you’re using to generate images is a skill set. i don’t have that skill set. I imagine i could figure it out, but i don’t think that’s intrinsically less valuable than writing a novel or coming up with a concept for art you want to make by hand. all of these are nebulous skills and there’s considerable overlap with what i feel a nudge to call “real art,” but i’ll use “manual art” instead. “real art” is a social construct as much as “real genders.”

i hate talking about pricing also, i feel like it makes me seem greedy or self pitying. but i feel like there’s a difference in the amount of labor that goes into making manual vs ai art. i feel like manual artists should be offered more money. but that’s a feeling. there’s nuance. i don’t know enough about that part to draw a conclusion. i come from a place of trying for so, so many years to get anyone to even care about the art i make, let alone spend money on it. i come from a position of never having money as a kid and trying to sell my art so i could get a snack after school, to very little avail. im forever grateful for the handful of people who commissioned me when i was growing up. i think there were three or four. that’s more than a lot of people get.

part of my initial rejection of ai art as Art is that there’s such an audience for it. it’s resentment, but i don’t think i can fairly describe it as a resentment for ai artists or ai art as a concept, it’s a resentment towards the general public attitude towards manual art. the commodification of “gimmicky” things like ai, those “minimalist” faceless traced photos, and basically every successful art trend. resentment from how hard it is to find footing in the art world at all, especially as someone who often falls into slumps, therefore falling out of algorithmic favor and my reach being obliterated from not posting constantly. i don’t hate ai art on its own, and i certainly don’t hate the artists. but i hate how easy it is to make ai art. how even though it takes a few minutes to generate, you get 10x the result of hours of my work process. i resent the fact that i have to worry about it drowning out my and my peers’ work from the feeds of people who might resonate with it. i hate the circumstances that make ai art a “”threat.””

i hope this makes sense, it’s 4am and i took my meds a couple hours ago so im sleepy but. i had some thoughts.

#Cyberpunk#(but actually)#punk stuff#anticapitalism stuff#anarchism stuff#mental health stuff#activism stuff#tech stuff#ai art#-pop


It’s been a crazy week huh? I’ve got lots to say, so let’s go!

First off, AI “art” is an abomination, and the only reason not to abandon the idea entirely is that the singular way it can be useful is as a tool an actual artist employs to make some minor corrections, like noise cancellation. That’s it. But simply writing better algorithms for those tasks might be all that’s needed.

Using it to generate imagery (and by that I mean stealing things it copied from prior inputs) is lazy, stupid and devoid of any kind of soul or meaning. Every other potential use is harmful. Unless it’s going to be used as this sort of tool, it should be thrown in the bin and burned so we can all move on. Not all tech is worthwhile; need I remind you of self-destructing DVDs?

In my world, one of my old routers died after six years of heavy service, so I got this beast, the GT-AX11000, and the performance improvements are so tremendous I’ve made it my home’s primary connection point.* Not only am I getting the full download speeds I’ve been paying for instead of slightly lower, sometimes it’s even a few megabits higher on wired connections (like to my PC), and the triple-band setup helps devices throughout the house stay connected seamlessly. Very happy with it, and before you ask, yes I use the Merlin firmware suite as well. Being able to switch back to the standard UI instead of that stupid “gamer” one it defaults to would be reason enough!

* = The router & cable modem are in a corner on the 2nd floor, so because that location isn’t optimal, I have another access point and a repeater boosting the signal downstairs. We also have outdoor cameras that need a connection, so the WiFi radius has to extend beyond the house on top of that. Physically moving the router makes interference worse, since there’s an insane amount of wireless traffic for a suburban area, and it would also mean wires running all over my room, so it stays put.

Slaver, son of slavers continues to fuck the birdsite. Here’s his plane status, btw. I’d care about his privacy if he gave a damn about ours. And don’t tell me the richest man in the world can’t afford anything; he could literally solve all sorts of global issues single-handedly, but won’t. None of them ever will. Fuck ‘em.

Grifter uses NFTs to launder money. Film at 11. The less I have to talk about that loser, the better...

And then, you know, I’m enjoying the new Warcraft expansion, and I’m happy with the new patch’s raid boss (!) in Fallout 76 and its associated Nuka-Cola-themed content, most of it actually new (!!) instead of copied from 4, and all that.

Oh, and lastly... the king’s back! Now can we just get rid of Bobby, please?!

#politics#ai art#twitter#fuck elon#fuck trump#activision#world of warcraft#warcraft#activision-blizzard#blizzard#fallout#fallout 76


Cyberpunk for the 21st Century: ONF’s “Sukhumvit Swimming”

If I write about a K-pop group, chances are I have been a fan of them for a while. This is not the case for ONF. Mnet’s Road to Kingdom brought this group to my attention, and though I checked out some of their work, it was the sextet’s latest comeback, Spin-Off, that hooked me. The title track for this mini album is “Sukhumvit Swimming”, a tropical house track with a touch of ONF’s signature heavy synths and guitar. The MV continues ONF’s science fiction-inspired scenarios and hones them down to a particular subgenre (my favourite): cyberpunk. I wouldn’t have labelled any K-pop concept as through-and-through cyberpunk until now, but “Sukhumvit Swimming” borrows and adds enough to that subgenre to be considered a part of that class of literature. The MV borrows from cyberpunk in spirit and setting but combines them in fascinating new ways.


“Sukhumvit Swimming” by ONF on Woolim Entertainment’s YouTube Channel

Cyberpunk is a subgenre of science fiction that emerged in the 1980s and was fascinated by hacker culture. It was thinking about the Internet, bodily augmentations, AI, and mind uploads, all in the setting of dystopian cityscapes where corporations ruled the world. The cyberpunk archetype is a hacker who uses the oppressive technology of the corporations to figure out the flows of late capital and direct them to his (the protagonists are usually, and unfortunately, male) goal: freedom. The “technology” is generally an Internet-like technology, hence the “cyber”. The cyberpunk also hacks systems like the cityscape to fight corporate domination. The “punk” came from the rebellious and stylish punk rocker, and it denotes cyberpunk’s fascination with the power of leather-clad, heavily-mascara-ed punk-pop culture. Neuromancer by William Gibson, Schismatrix by Bruce Sterling, Wetware by Rudy Rucker, and Ridley Scott’s Blade Runner are some works that founded the tradition in the West. Altered Carbon by Richard K. Morgan, The Matrix, and Westworld kept it alive in later decades. Ghost in the Shell, Akira, Psycho-Pass, and Serial Experiments Lain are some iconic works from Japan that have pushed boundaries for the subgenre. Amidst its neon landscapes, grubby alleyways, gore, and shiny machinery, cyberpunk asks a simple question: what place do we, as humans and individuals, have in this “global village” of money and information? Each cyberpunk finds their own answer, and the city always plays an important role in this discovery.

Wyatt rides a tuk-tuk in cyberspace

So, where is Sukhumvit in “Sukhumvit Swimming”? The only thing remotely related to Thailand in the MV seems to be the tuk-tuk that Wyatt drives through a CGI cyberspace landscape. At first glance, even this is jarring: why would one drive a tuk-tuk through cyberspace? The answer is: why not? “Sukhumvit Swimming” insists on mixing the local with the global. The cyberpunk always stays true to their roots even as they dive into popular culture. Cyberspace, in most cyberpunk, is a visual fest where one can look like whatever they want. If one wants to traverse it with a tuk-tuk, so be it. In a way, all six settings in the MV are Sukhumvit. They are all a bizarre mix of human-nonhuman, past-future, real-unreal, categories that cyberpunk always confuses to question their boundaries. This confusion allows cyberpunk to figure out, in distinct ways, how an individual’s life is embedded in global capital.

These androids are hella creepy. This sequence seems heavily inspired by Westworld, a cyberpunk work set in an amusement park where artificially intelligent android “hosts” gain sentience and rebel against the abuse that the park’s human guests have inflicted on them for years.

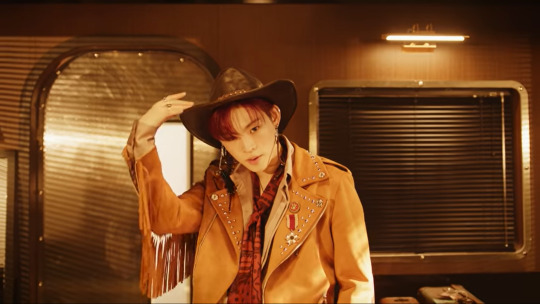

Each setting of the MV evokes Sukhumvit as a tool, and it is what makes “Sukhumvit Swimming” a clever study of cyberpunk. The first setting is Hyojin’s 1920s American railroad. Whether we are to think of the “people” on board with Hyojin as literal androids or grotesquely mechanised human beings, there are disturbingly few differences between androids and people working like clockwork to their schedules in a metropolis. Our cowboy is different from the rest of the occupants on this train; he is not dressed in the stuffy clothes of these robots—he is a (console?) cowboy, a punk, a rebel.

Hyojin as the cowboy.

U, too, seems to be on this train, but he is, well, high. He drinks a bright blue liquid from a glass and things start to swim. With time stopping and MK floating in space, questioning the reality of our disparate, mediate, postmodern existence should not be too difficult for us. In fact, stimulants of various kinds are an integral part of cyberpunk. Apart from their performance-enhancing effects, drugs are always connected to altering/understanding reality much like the technology of the cyberpunk universe.

Kids, don’t do drugs.

Is the virtual world, where we are all information, more real than our world, where we are just expressions of biology? Is the train that U is on real? The minute U consumes that glass, “reality” is up for grabs[1].

The second major setting of this MV features J-US in the sunburnt ruins of Greek columns and skyscrapers.

J-US’ sunburnt world. Blade Runner 2049 has very similar visuals to this post-apocalyptic world.

This odd, out-of-time combination is another reason why “cyberpunk” jumped to my mind. Cyberpunk likes to juxtapose history with the present times and ask: what is the place of history in a time when technology has skewed our perception of time? The anxiety of technophobes is often that these revered worlds like the cradle of Western Civilisation will be forgotten. The survival of these cultures without context—just stone columns in sunbaked worlds—reminds us of the tyranny of the object. Long after humans are gone (extinct or only alive in a virtual world), these traces of us will be left. Until then, we can only absorb and re-write these monuments into our present alongside the skyscrapers of the capitalist world—much like the pastiche cityscape of Sukhumvit.

E-Tion is on the moon. The moon landing was faked, btw. Or was it?

E-Tion’s moon landing is a particularly strange setting. The others, in one way or another, can be found on earth, but why is E-Tion on the moon? Distance and travel in this MV are unstable concepts. If one can travel through cyberspace in a tuk-tuk, one can definitely grow flowers on the moon. In a patchwork fantasy world (like Sukhumvit), anything is possible. More than that, scale is another notion “Sukhumvit Swimming” is determined to throw in the trash. When virtual worlds are accessible to us through stimulants and technology, the moon is no longer the symbol of extraordinary achievement or emotion (“shoot for the moon,” it is said). Even the moon can be subsumed in the network of capitalism; just ask Elon Musk.

MK really reminds me of the Master here.

The only setting we are now left with is MK’s scenario with the mysterious machine. It could be the machine that is responsible for these strange visions; it certainly looks like the Twelfth Doctor’s time-travelling machine, the TARDIS, from BBC’s Doctor Who. Perhaps it is even one of the machines from The Matrix, that are determined to keep humans as only bio-powered cells for the energy they can provide (bio-powered batteries would not generate enough energy, by the way. That’s one of the flaws in the Wachowskis’ reasoning). Strangely enough, no-one touches the machine; MK disappears from the scene towards the end of the MV, leaving the machine perpetually working. The machine never stops and the dreamers (assuming that there is a particular “real” world) will not wake up—unless, of course, something brings them out of the illusion.

That brings me to the “storyline” of this MV. There is certainly one, mixed in among the fantastic shots of this MV. All the members start from different settings, but towards the end, they all arrive in the desert that J-US started from. The trigger? Hyojin readies his gun to shoot at J-US in quite a memorable scene:

I said this MV likes to mess with scale, didn’t I?

Unexpectedly, Hyojin is the one who is shot. All the members snap out of their “illusions” and end up in the desert with J-US. Everyone is dying or has at least passed out, except J-US who has been in this setting since the beginning. The cyberpunks come together, out of their illusion—or perhaps into one. Time unfreezes and Hyojin is nowhere to be seen on the train. Our cyberpunks have lived and fought in the blink of an eye (or rather, the three-odd minutes that the MV lasts) to disappear with no trace. Fast, suave, and unreal, the cyberpunk is gone once the fight is over. But what have they achieved?

The cyberpunk cityscape is the place for the rebel to explore the strings of corporate domination. In the case of cyberpunk, relations are usually technologies embedded in the logic of capitalism. When ONF creates a temporary Sukhumvit on our screens, they tie together the disparate scenarios of the MV. Now, the hidden relations that linked the moon to a spaghetti Western train and post-apocalyptic world can be read.

If all the members end up in a dystopian, sunburnt terrain, it is because this is where all history leads. “Sukhumvit Swimming” is a slow but certain dive of the world into a spiral of destruction from which there is no coming back, a process that began when human beings started to abuse fossil fuels. The trains, the tuk-tuks, the rockets of “Sukhumvit Swimming” are as much a part of the process as the fireworks that explode in front of E-Tion’s moon.

When the MV ends, Time begins its work again, moving inexorably towards the end. Sukhumvit is a tool to understand how the flows of global capital have isolated humans (and even technology) into our own fantastical worlds, worlds as small as our phone screens, without seeing our connections to the outside world. Our work is to make/find our Sukhumvit, our tool for understanding our place in these networks that seem to mysteriously guide our lives. The cyberpunk has disappeared, but there is someone that still remains: it is you, and your battle has just begun.

[1] Stealing this expression from Cavallaro, Dani. Cyberpunk and Cyberculture: Science Fiction and the Works of William Gibson, p. 38.

#온앤오프#ONF#스쿰빗스위밍#sukhumvit swimming#sukhumvit#k-pop#kpop#k-pop comeback#spin off#cyberpunk#technology#thailand#bangkok


What do you do most of the time when you’re not on tumblr?

Thanks for asking to get to know me!!! I do MANY things! :D

Let’s get the “boring” thing out of the way: work! Occupationally, I work remotely as a theoretical linguistics contractor. Basically, that means I do freelance language analysis work for other companies. I sit at home in my pajamas at 2 AM listening to folk metal while doing boring repetitive language tasks for money. XD Usually, it involves poring over large databases of sentences and annotating pertinent information on top of them, coding it according to the information another development team wants. What I input is what that team uses to improve an AI’s ability to understand English. For instance, maybe I’m going through a large corpus of sentences marking what the verb is, and how noun phrases relate to the verb (as the direct object, subject, etc.). My work serves a variety of practical uses. The reason why your search results on a website are relevant? The reason why virtual assistants like Siri are able to answer the questions you ask them? That’s the stuff I work on, although I do the non-technical, non-programmy side of it, and am more or less just following instructions for what they want me to look for in the sentence databases they use to train their AIs.

I’m usually held to non-disclosure agreements, so I can only say so much about what my work involves, but I have had the pleasure of partnering with several universities, Amazon, the Mayo Clinic, Google, environmental research centers, local companies, and more over the years since I first began these gigs in 2012.

But like. Recreationally?

I LOVE DOING CREATIVE THINGS!!!!!!!

I’m usually running around doing three thousand eight hundred forty two and a half projects any given day. Music composition for indie films and video games; cosplay; fanfiction writing; original novel writing; learning new musical instruments (I own literally several dozen instruments…); drawing; Photoshop abuse; video game streaming; skiing; hiking; teaching myself new languages; collecting action figures and other rare fandom materials; getting distracted by cat videos; crying in feels over television shows; staring pointlessly at a wall for several hours; you name it!

At the moment, I’m working on a fancy pants fanfiction novel for Camp NaNoWriMo, which I hope I’ll be posting online in the next few months. I’m creating/planning cosplays for Envy (FMAB), Catra (She-Ra), Rufus (Deponia), and Krel (3Below) to debut at a cosplay convention in May (assuming I get my act together, which I probably won’t, let’s be real). I’m playing Anthem with my sister and brother-in-law and am streaming video games most Wednesdays on my twitch account, also now available on YouTube btw. I’m learning how to use illustration markers and digital art tools, while trying to improve my ability to draw humans and backgrounds. I’m teaching myself Japanese (日本語). I’m working with several friends to translate a book from German to English so we can play a roleplaying game together where I GM (none of us speak German… whoopsies). I’m going to be drawing illustrations for a children’s book my grandpa wrote. I’m drafting the outline for an original novel I hope to publish in the future. I’m arranging a music suite for some of the Huzzah songs for the Deponia video game series. I’m talking with several friends about movie or game projects we could make. I’m playing Dungeons and Dragons as a gunslinging True Neutral alien. I’m planning several fandom AUs with friends that might turn into comics, fanfictions, or getting lost in the dust because I have a notorious, terrible record for finishing anything. I’m skiing with friends in the mountains before winter season ends. I’m trying to collect several difficult-ish-to-find FMA materials from eBay. I’m trying desperately to avoid making an AMV for a ship I’ve fallen into, but it’s probably going to happen despite my best efforts (damn Deponia has my heart right now, okay???). I’m haranguing my irl best friends by being a loving assholish punster gremlin to them. I’m wasting lots of my hours screwing around with friends on discord.
And, I haven’t had the time yet, but I just bought a ukulele, so I want to get around to learning that, too. Only so much time in a day though…. uhhhhh….

This doesn’t mean all my projects are good… I am not talented in all these respects… but that doesn’t mean I get any less enjoyment out of all these creative hobbies!

I SWEAR I’ve recently been trying to limit the number of projects I’m doing at any given time. I even made myself a checklist for activities that I “allow” myself to do so I don’t start three hundred more pointless hare chases. But yeah! That’s the current day-to-day activities of Haddock!

Actually… this conversation is a good lead-in to something I’ve been meaning to say on my blog for a while.

It’s probably unsurprising that, given that I’m doing so much beyond tumblr, I haven’t been spending an enormous amount of time answering analysis questions recently. My asks have been piling up over the last few months; though I have several hundred asks that I want to answer, the truth is that my life is prioritized elsewhere right now and I probably won’t get to them all that fast, if at all.

Tumblr has been an extraordinary experience for me and I’ll never like, leave-leave it behind. I’ve engaged a lot in tumblr because it’s provided me life, fandom, happiness, and a community I’ve become attached to. It’s also given me hope: hope that my contribution to the fandom gives people happiness and meaning, too. Fiction is powerful for all of us and a way to give us inspiration and meaning. I hope that my engagement in fandom has helped other people feel happiness, inspiration, and meaning, too. Whenever I receive asks from people telling me kind things about my blog, I feel touched beyond words, because it makes me feel like my time on tumblr has benefited and made a difference in other lives beyond my own, and there’s nothing better than that.

But I also admit: I’m ready to transition to New Things in life. My greatest goal in life is to make as meaningful of a difference as I can. I don’t want to breeze by my years doing nothing but recreation for myself: I want to do what I can to make a maximal difference in the lives of others for the better. Tumblr’s been fun and I hope I’ve made a good impact, but my deepest dreams and goals aren’t around tumblr analyses. They’re around creative writing, especially the wild dream to someday be a published author. I’m increasingly taking the steps and time to reach that goal. I’m done waiting; I’m done planning; I’m ready to charge forward and make this ambition reality, best as I can.

That means that, these days, tumblr is a hindrance to my life’s greatest dreams, and I can’t do both full-tumblr-activities and reach my heart’s deepest wishes.

I’m honestly feeling a lot happier now that I’ve drastically reduced my time on tumblr. I’m so sorry that it’s resulted in me not answering many asks (that really would be fun to talk about), and I hope no one feels like I’ve forgotten or snubbed them. I’d do it if I had time. But I don’t have time. The truth is that I feel my life shifting to new directions, and I want to take that. Otherwise, I’ll live in a stagnant world in which I go nowhere… and I can’t have that. I can’t. I want to fly.

So I’m going to be continuing to march through with these other life projects. With the creative writing especially. I hope I can post some of this writing to you guys on tumblr, too! It means I’ll be spending less and less time on tumblr, doing analyses not-anywhere-as-often-as-I-used-to (I don’t know if I’ll ever answer everyone’s asks again, sorry!). Leave tumblr? No. I’ll still be here. But… my shift in time… it’s all in the dream to make meaning out of my life.

Thank you so much for asking again! I hope you’re having a great day, have some fun activities of your own, and are staying awesome!

#blabbing Haddock#kingofthewilderwest#non-dragons#about me#my life#ask#ask me#awesome anonymous friend#Anonymous


I'm curious, why did you choose coding as a career? Did you like it from the start, or did it grow on you over time? I've heard it's really frustrating, but I imagine you like it, right? What do you like about coding? Sorry if it's too many questions, it's just an area way out of my league XD

It pays good money lol.

I’m actually looking to lean more towards Project Management, which still pays really well, but requires less coding from me and puts my leadership training that I’ve done to good use lol. I’ll be graduating next May with a BA in Computer Science and a minor in Management of Information Systems.

I didn’t have coding in mind until literally my senior year of high school, when I took AP Computer Science with zero coding knowledge under my belt lol. My dad’s actually a Computer Science major as well and works in the industry, so he helped me during that AP class and with other things as well. Obviously I have to do the work myself or I don’t learn, and he’s good at asking the right questions to get me down the right thinking path lol.

I do enjoy coding, but I hate doing the school version of it. Many assignments, which do have reasons for being the way they are and are important, absolutely suck to do. My favorites (which also can be the most frustrating) are when we make games like Breakout or Battleship. Most assignments early on are fine, usually small, teaching you basics and stuff. But later on you find yourself literally writing an Excel-like program (which I never got fully working heh…) and writing filesystem commands that function as if you were using them normally on a Linux computer, except you’re running a C program. (And that program can actually legit delete things and stuff? So like it is 100% important to know how not to delete things you want to keep, including the actual computer.)

I’ve also been granted a lot of opportunities because of choosing coding. I went to the Grace Hopper Celebration of Women in Computing in 2016, all paid for by the school. It’s a huge conference with about 15,000 attendees each year, hundreds of companies whose representatives you can talk to and interview with, and tons of free junk lol (I have a pic of my collection lol). I’ve also attended my school’s Hackathon every year except this past one (something else was going on that weekend and I couldn’t), and I’ve won prizes there twice. My first year I was a finalist in the main coding competition (I think the guy I was working with and I placed 5th?), and the second year I won a category for implementing Amazon Web Services or tools in some way, for which I used Amazon Lumberyard, a game development engine (which was very hard to use btw; I think it’s gotten a bit better with more learning tools, but Unity is way better for tutorials and learning materials).

It’s 100% frustrating, but Google and my whiteboard are my besties, and talking with others about things helps a lot. It’s a very logical and methodical field, but it also takes creativity to come up with the solution. And for all my complaining and whining, I do still enjoy plenty of different aspects of it (though I’m never touching autopilot shit again after my senior project is over, and I’m not even gonna think about AI because that shit requires soooo much high-level crazy math. I mean, not that other things don’t require crazy math, but there’s easier crazy math and then there’s that).

My favorite classes have been about algorithm analysis. I have a professor I’ve taken three classes with (who sadly I can’t take any more because the next one is a grad student class and I don’t need that stress lol). I actually enjoy a lot of the theory, even if I’m uh… not knowledgeable about the math in some spots.

I’d love to end up in the game industry, more on the storytelling side, but I’m gonna be taking a less direct route: I’ll either be an entry-level coder or an entry-level project manager. It’s much easier to get into what you really want once you’ve actually gotten in.

And no worries! I’m always happy to answer questions about coding. No matter how aggravating my own path has been, I fully believe in supporting anyone who wants to give it a shot. It’s an incredibly important field, and it’s becoming more and more important for people to know about. It’s rocks being electrified and told that this series of 0’s and 1’s means this lol. I’d compare it to learning a musical instrument: it’s another language you’re learning to read and write (well, languages, but most languages share the same basics, so they can do most of the same things; they just have areas they specialize in).


At AI Research Company ROSS, A New Stage of Transparency and Engagement

Was this the same ROSS Intelligence that I’d wondered and speculated about?

For years, the artificial-intelligence legal research company maintained a shroud of secrecy around its product. Sure, they talked about it plenty. They garnered lots of media coverage. And CEO Andrew Arruda was a ubiquitous presence at legal conferences, touting the future of AI in law.

But when it came to seeing the product, that was another story. I’d pestered Arruda for years to let me review it, without success. At a conference of law librarians two years ago, Arruda was called out during a panel for his company’s lack of transparency. Surely somebody must have been seeing ROSS, because law firms were reportedly buying it. But, if so, I had trouble finding them.

Now here I was, on Thursday and Friday last week, sitting in ROSS’s Toronto research and development office, with unfettered access to its entire engineering and design teams. I was given detailed demonstrations of the product’s inner workings. I was invited to sit in on engineering and UX team meetings. I was encouraged to ask any question I wanted, of anyone I wanted to ask it.

And that shroud of secrecy around its product? ROSS had shredded it. In June, without fanfare, ROSS quietly changed its website to offer free trials of its product to anyone. The product that had been treated as if it were a state secret was now open to everyone for a 14-day free trial, without requiring even a credit card.

So what is going on here? The answer to that question starts with a look back at the company’s history.

A Rapid Rise

ROSS began in 2014 at the University of Toronto as a student-built entrant in a cognitive-computing competition staged by IBM to develop applications for its Watson computer – then famous for having won Jeopardy! ROSS’s prototype won the UT competition, earning them a write-up on the front page of The Globe and Mail – which touted ROSS as the future junior associate at Bay Street law firms – and serving as a springboard for the company’s rapid acceleration.

At the time, the oldest of the three founders, CEO Arruda, a University of Saskatchewan law graduate who was then articling at a Toronto law firm, was 25. The youngest, Jimoh Ovbiagele, a computer scientist who is now the company’s CTO, was 21. The third, Pargles Dall’Oglio, also a computer scientist, was a bit younger than Arruda.

Sitting in on a meeting of the ROSS UX team.

ROSS went on to compete in IBM’s national competition in 2015 as the only non-U.S. college team. Although it came in second, its momentum was already established, further fueled by a series of click-bait headlines touting ROSS as the robot lawyer of the future.

Soon, the founders were invited to Silicon Valley to participate in the prestigious Y Combinator startup incubator. Dentons’ NextLaw Labs made ROSS one of its earliest investments. In 2015, they secured $4.3 million in seed funding and then, two years later, another $8.7 million in Series A funding. In 2017, Forbes named the three founders to its “30 Under 30.”

But over the past year, it had seemed to me that ROSS had gone quiet. Not literally, of course. But no longer was its formerly globetrotting CEO a presence at every legal conference. The company put out little in the way of news or announcements. Even the company’s social media presence seemed more restrained.

Then came the call from Arruda, inviting me to Toronto, and resulting in my visit there last week.

A Period of Refocusing

In Toronto, what quickly became clear to me was that the last year had been a period of refocusing and refinement for ROSS. It was a period that reflected, it seemed to me, a new stage of maturity for this still-young company.

The period started almost exactly a year ago with the announcement of the “new ROSS,” which marked the first time the legal research platform included cases from all federal and state courts and all practice areas. ROSS’s first U.S. prototype covered only bankruptcy cases, and as it had added other practice areas, it required users to choose one before submitting a query. With the new ROSS, users no longer had to choose.

While that “new ROSS” marked a turning point, it was not an end point. CTO Ovbiagele said they have worked hard to deliver a better legal research experience. Through the use of natural language processing and machine learning, they have developed a product that turns the research workflow on its head, he said, allowing lawyers to ask complex questions using natural language and get results that are highly on point.

“Legal research platforms have trained lawyers to start with broad results and then narrow them down,” Ovbiagele said. “We say, what if we brought you right to the decision on point, and then you can widen out your research from there.”

In pursuit of that goal, the last year has yielded several significant developments for the company:

ROSS refined its research product to the point where it was ready to show it to the world and let users decide whether it does – as the ROSS people adamantly maintain – deliver more focused results than other research platforms.

ROSS brought on Stergios Anastasiadis, the former director of engineering at Shopify and engineering manager at Google, as its head of engineering to lead further refinement and development of the product.

ROSS refocused its marketing efforts away from larger firms, where it has long targeted its sales, to solo and small firms.

ROSS introduced transparent, no-obligation monthly pricing, so that users are not tied to long-term subscription contracts. “Pay for the months you need us, then don’t pay for the months you don’t,” Arruda said.

The capstone to all this came last month with the announcement that Jack Newton, cofounder and CEO of practice management company Clio, had joined the ROSS board of directors. Newton is highly regarded in the legal tech world, not just for what he and cofounder Rian Gauvreau accomplished over the last decade in starting and building Clio, but also as a mentor and advisor to other startups.

A More Mature Company

For the three founders once featured in Forbes “30 Under 30,” the year brought one other milestone. CEO Arruda, the oldest of the three, himself turned 30. While symbolically notable, it also underscores the fact that these three once-inexperienced founders have now been at this for five years. They have matured, and so has their company.

In fact, Arruda told me last week, those early years were stressful for them. The founders had a vision to use AI to make legal research more affordable. But they had no experience in what it took to be entrepreneurs or to build a company.

Recording a LawNext episode with founders Arruda and Ovbiagele.

On top of that, because ROSS was one of the earliest companies to focus on AI in legal research, there was no playbook on how to build its product. Although they developed their prototype on Watson, they built their commercial product from scratch. And they did so even as they were already caught up in the glare of media and investor attention.

“It was a huge challenge for us to build it from scratch,” Arruda said. “We were experimenting and innovating under the spotlights, because we had a lot of media attention.”

That explained the secrecy around the product, Arruda told me. They truly thought they were building something unique, and they feared that a competitor would steal it out from under them. So they kept the product close to the vest, until they reached the point where they were confident that it was ready.

Arruda also confirmed my perception that he had scaled back his public speaking over the past year. He did it in part to open opportunities for others in the company to speak, he said. But he also felt the need to focus more of his time and attention on being CEO and managing the day-to-day operations of his growing company, which has a sales and marketing office in San Francisco in addition to the Toronto R&D office.

There is no better way to get the pulse of a company than to move past its executives and marketers and meet with the people who actually design, build and service the product. Over the course of multiple conversations last week, one point became clear to me. Those on the ROSS team truly believe they have created a better legal research tool. And they are not satisfied with stopping there. They have a vision of a product that does not just deliver better results, but that will someday also help lawyers make better sense of those results.

Due largely to AI, legal research is becoming one of the most vital and competitive markets in legal technology. Whereas ROSS was one of the first to tout its use of natural language processing and machine learning, now many others do. Going forward, the biggest challenge facing ROSS will be to stand out in a somewhat crowded field and win market share from long-entrenched rivals.

But in Toronto last week, it seemed clear to me that ROSS has entered a new, more mature phase in its growth, one marked by transparency around its product and pricing and engagement with its customers. My presence there was evidence of that. In the legal research market, that approach makes a lot of sense.

After all, the best measure of a research product is the results it delivers. By lifting the shroud and inviting anyone to try its service for free, ROSS is declaring itself ready for the challenge.

(BTW: Watch for a forthcoming LawNext episode recorded with Arruda and Ovbiagele last week, as well as for my review of the ROSS research platform.)

(Full disclosure: ROSS is reimbursing me for the air and hotel expenses I incurred in traveling from Boston to Toronto.)

from Law and Politics https://www.lawsitesblog.com/2019/07/at-ai-research-company-ross-a-new-stage-of-transparency-and-engagement.html via http://www.rssmix.com/


Links: Official Google I/O Livestream and info; The Verge Liveblog; TechCrunch Live Coverage; CNET Live Coverage; Engadget Live Coverage.

Please be sure to share news updates in this thread!

TL;DW Live Updates (thanks to The Verge)

DIETER BOHN, 10:39:35 AM PDT: Coming 'in the next few months.'

DIETER BOHN, 10:39:29 AM PDT: Calls out with a private number, but you can link your number to the Google Assistant.

DIETER BOHN, 10:39:11 AM PDT: It recognizes your voice so it can use your own address book.

ADI ROBERTSON, 10:39:10 AM PDT: Will Google automatically detect that she wants him to visit and bring flowers and arrange that, though?

CASEY NEWTON, 10:39:02 AM PDT: The actor playing Rishi Chandra's mom just now? You guessed it: Morgan Freeman.

DIETER BOHN, 10:38:59 AM PDT: "No setup, apps, or even phone required."

KARA VERLANEY, 10:38:48 AM PDT: Google’s Home speaker can now make phone calls

DIETER BOHN, 10:38:44 AM PDT: I doubt we're really calling Rishi's mom but she is mad at him for not calling. "Oh hi, everyone." Or maybe it's real. Who knows!

VLAD SAVOV, 10:38:24 AM PDT: No love for Europe with the free calls, eh Google?

DIETER BOHN, 10:38:11 AM PDT: "Hey Google, Call Mom."

DIETER BOHN, 10:37:55 AM PDT: Sounds like you can dial out from the Home. You can call any number in US or Canada for free.

DIETER BOHN, 10:37:40 AM PDT: Hands-free calling.

DIETER BOHN, 10:37:29 AM PDT: Will start with reminders, flight status, traffic alerts.

CASEY NEWTON, 10:37:26 AM PDT: "Proactive assistance" sounds like a good idea on a whiteboard, but the next thing you know you're asking people if they want to buy movie tickets when they ask you for the weather and then they throw their smart speaker in the garbage.

DIETER BOHN, 10:37:03 AM PDT: The lights on the Home turn on, he says "Hey Google, what's up?"

DIETER BOHN, 10:36:51 AM PDT: Sounds like notifications for Home.

DIETER BOHN, 10:36:46 AM PDT: "Automatically notify you of those timely messages."

DIETER BOHN, 10:36:36 AM PDT: "Proactive Assistance" is coming to Google Home...

DIETER BOHN, 10:36:21 AM PDT: You can schedule meetings or set reminders. FINALLY!!

CASEY NEWTON, 10:36:09 AM PDT: You can see why Google and other companies WANT you to buy things with your voice, but it's almost always so much easier to tap with your fingers.

DIETER BOHN, 10:36:06 AM PDT: This summer, hitting Canada, Australia, France, Germany, and Japan.

VLAD SAVOV, 10:35:41 AM PDT: (Dieter is keeping his hands warm by typing furiously fast. This must be the coldest Google I/O ever. Sorry I brought the British weather with me, guys.)

DIETER BOHN, 10:35:32 AM PDT: It's been out for 6 months. 50 new features since then.

DIETER BOHN, 10:35:16 AM PDT: Moving on! Time to talk about Google Home. Rishi Chandra takes the stage.

DIETER BOHN, 10:34:55 AM PDT: There are 70+ partners today.

ADI ROBERTSON, 10:34:51 AM PDT: This is a version of the "buy food or something" chatbot that I would actually use.

DIETER BOHN, 10:34:45 AM PDT: Any smart home developer can make a Google Action now.

DIETER BOHN, 10:34:33 AM PDT: googlelovescarbs

DIETER BOHN, 10:34:19 AM PDT: "I didn't have to install anything or create an account."

ADI ROBERTSON, 10:34:09 AM PDT: I'm disappointed she's not literally ordering Panera to the amphitheater.

KARA VERLANEY, 10:34:08 AM PDT: You can buy stuff with Google Assistant now

DIETER BOHN, 10:34:07 AM PDT: You can scan your fingerprint to pay with Google.

DIETER BOHN, 10:33:55 AM PDT: She's ordering food from Panera.

DIETER BOHN, 10:33:43 AM PDT: This isn't a demo, turns out. It's a video.

ADI ROBERTSON, 10:33:16 AM PDT: A full system with payments, receipts, account creation, &c.

DIETER BOHN, 10:33:02 AM PDT: They also will support transactions.

DIETER BOHN, 10:32:54 AM PDT: "They can even pick up where they left off across devices."

DIETER BOHN, 10:32:41 AM PDT: Actions are coming to Android phones and iPhones.

KARA VERLANEY, 10:32:35 AM PDT: Third-party actions will soon work on Google Assistant on the phone

DIETER BOHN, 10:32:28 AM PDT: (She didn't mention Alexa, of course.)

CASEY NEWTON, 10:32:26 AM PDT: "Actions on the Google Platform" is the only action most of these devs will be getting all year, if you know what I mean.

DIETER BOHN, 10:32:16 AM PDT: This is "Actions" on the Google platform. Like Alexa's skills, but Google.

DIETER BOHN, 10:31:59 AM PDT: Demo time! Valerie Nygaard.

DIETER BOHN, 10:31:49 AM PDT: It works with Google stuff and third-party developers.

ADI ROBERTSON, 10:31:41 AM PDT: I'm going to start taking bets on the first toothbrush with Google Assistant, btw. Hit me up.

DIETER BOHN, 10:31:35 AM PDT: Huffman is pointing out that Assistant isn't the same thing as Search. Because it can "do" stuff.

DIETER BOHN, 10:31:14 AM PDT: Coming to more languages: French, German, Japanese, German, Korean, Italian. A bunch - I didn't catch them all.

ADI ROBERTSON, 10:31:06 AM PDT: Lots of new languages being added to Assistant today, too.

DIETER BOHN, 10:30:46 AM PDT: There will be a "Google Assistant Built In" badge on products.

DIETER BOHN, 10:30:32 AM PDT: Already moving on! An image of a coffee maker just appeared. Do you want that? Do I want that?

KARA VERLANEY, 10:30:07 AM PDT: Hey Siri, Google Assistant is on the iPhone now

ADI ROBERTSON, 10:29:54 AM PDT: "All your favorite Google features on the iPhone."

CASEY NEWTON, 10:29:53 AM PDT: I'm definitely looking forward to putting Assistant on my iPhone and trying out all the nifty Lens stuff.

DIETER BOHN, 10:29:52 AM PDT: That's a lot of phones for the Assistant.

DIETER BOHN, 10:29:37 AM PDT: Heeey.

DIETER BOHN, 10:29:34 AM PDT: Google Assistant now available on iPhone.

ADI ROBERTSON, 10:28:35 AM PDT: Assistant can basically do all the things now that Google promised us in that original Glass conceptual video.

DIETER BOHN, 10:28:33 AM PDT: It recognized a band name on a marquee, and gives options to buy tickets.

KARA VERLANEY, 10:28:31 AM PDT: You can finally use the keyboard to ask Google Assistant questions

DIETER BOHN, 10:28:15 AM PDT: Lens can automatically deal with business cards and receipts, apparently.

DIETER BOHN, 10:27:50 AM PDT: It just translated Japanese Menu to English, and then he asked what the dish looks like.

DIETER BOHN, 10:27:25 AM PDT: Lens can do Google Translate.

DIETER BOHN, 10:27:15 AM PDT: "Available in the coming months."

ADI ROBERTSON, 10:27:12 AM PDT: This actually is useful, but it's great to have an "advance" that is just bringing back the normal way of interacting with your phone.

DIETER BOHN, 10:27:07 AM PDT: Google Lens definitely coming to Assistant. We've got a demo for the feature.

KARA VERLANEY, 10:26:55 AM PDT: Google’s app for lost Android phones is now called Find My Device

DIETER BOHN, 10:26:37 AM PDT: F I N A L L Y

DIETER BOHN, 10:26:33 AM PDT: AHHH. You can type to the Assistant on the phone.

DIETER BOHN, 10:26:26 AM PDT: He's pointing out that Google Home can recognize voices.

DIETER BOHN, 10:25:55 AM PDT: 70 percent of requests to Assistant come in natural language, not keywords.

DIETER BOHN, 10:25:40 AM PDT: Three themes: Conversational, Available, Ready.

KARA VERLANEY, 10:25:23 AM PDT: Google will soon be able to remove objects from photos

DIETER BOHN, 10:25:14 AM PDT: "It's your own individual Google."

ADI ROBERTSON, 10:25:12 AM PDT: "You should be able to just express what you want throughout your day and the right thing should happen."

DIETER BOHN, 10:24:52 AM PDT: He's been doing Search and Assistant for a long time at Google

DIETER BOHN, 10:24:41 AM PDT: Scott Huffman takes the stage.

ADI ROBERTSON, 10:24:28 AM PDT: No Burger King, though.

ADI ROBERTSON, 10:23:53 AM PDT: Google Assistant DJing here with much more success than our I/O guy.

DIETER BOHN, 10:23:42 AM PDT: It starts with a bunch of people saying "OK Google," and every attendee just checked their phone.

ADI ROBERTSON, 10:23:19 AM PDT: Now we've got a sizzle reel for Google Assistant.

DIETER BOHN, 10:23:17 AM PDT: Video time.

DIETER BOHN, 10:23:13 AM PDT: Google Assistant is now available on over 100 million devices.

DIETER BOHN, 10:22:50 AM PDT: "The most important product we are using this is for Google Search and Google Assistant."

ADI ROBERTSON, 10:22:46 AM PDT: We spent really quite a lot of time with Autodraw. http://ift.tt/2qt48xz

KARA VERLANEY, 10:22:32 AM PDT: Google announces over 2 billion monthly active users on Android

DIETER BOHN, 10:22:23 AM PDT: Also fun things: Autodraw. http://ift.tt/2rrtMQb

ADI ROBERTSON, 10:22:00 AM PDT: "One day AI will invent new molecules that behave in predefined ways."

DIETER BOHN, 10:21:27 AM PDT: Neural nets are also helping with DNA sequencing. "More accurately than state-of-the-art methods."

ADI ROBERTSON, 10:21:15 AM PDT: "Providing tools for people to do what they do better." - Google's AI motto.

CASEY NEWTON, 10:20:51 AM PDT: Mostly I'm just being quiet and listening right now because AI is cool and Google is extremely good at it.

DIETER BOHN, 10:20:46 AM PDT: First time somebody said "It's early days" onstage. We will try to keep count.

DIETER BOHN, 10:20:33 AM PDT: We're looking at how machine learning can help with detecting cancer.

ADI ROBERTSON, 10:20:07 AM PDT: They're also called "baby neural nets," because Google has clearly learned some things from Guardians of the Galaxy 2.

DIETER BOHN, 10:19:52 AM PDT: Pichai made an Inception joke. "We must go deeper." Because they're nesting neural nets inside neural nets. It got a chuckle.

ADI ROBERTSON, 10:19:29 AM PDT: "We want it to be possible for hundreds of thousands of developers to use machine learning." A neural net will generate other neural nets to improve AI.

DIETER BOHN, 10:19:20 AM PDT: The fact that Pichai is leading with all this deep AI stuff is a sign, in case that's not obvious. This is a new moat for Google, or at least they want it to be.

ADI ROBERTSON, 10:18:09 AM PDT: It will focus on three areas: research, tools, and "applied AI."

DIETER BOHN, 10:17:58 AM PDT: All of Google's AI stuff will be at Google.ai

DIETER BOHN, 10:17:23 AM PDT: These are going to be available on the Google Cloud Compute Engine.

DIETER BOHN, 10:16:49 AM PDT: 180 trillion floating point operations per second. Which seems like a lot.

DIETER BOHN, 10:16:34 AM PDT: Last year TPUs were good for inference (figuring out what a thing is), but not optimized for training. The next-gen "Cloud TPU" is good for training, too.

KARA VERLANEY, 10:15:34 AM PDT: Google Assistant will soon search by sight with your smartphone camera

DIETER BOHN, 10:15:31 AM PDT: Pichai is talking about Tensor Processing Units, which are better than CPUs or GPUs for Google's AI processing.

CASEY NEWTON, 10:15:21 AM PDT: If your data center isn't AI-first, kiss your ass goodbye.

DIETER BOHN, 10:15:08 AM PDT: On to server stuff: "AI First data centers"

ADI ROBERTSON, 10:14:57 AM PDT: So you can take a picture of the sticker on a router and it will save the info on it for you, for instance.

ADI ROBERTSON, 10:14:29 AM PDT: The premise now isn't just that it can identify things, but that it can save and organize the things it detects.

DIETER BOHN, 10:14:22 AM PDT: You can point it at restaurants to see the info about that restaurant (it also uses GPS).

CASEY NEWTON, 10:14:02 AM PDT: I hope that eventually you can point Google Lens at a guy and it will tell you whether to date him.

DIETER BOHN, 10:13:57 AM PDT: It can grab a username and password from a Wi-Fi router. It has OCR.

DIETER BOHN, 10:13:42 AM PDT: Launch Lens from Assistant, point it at a flower, and it can identify the flower.

DIETER BOHN, 10:13:23 AM PDT: Coming first to Google Assistant and Photos.

DIETER BOHN, 10:13:17 AM PDT: "Set of vision-based computing capabilities."

DIETER BOHN, 10:13:06 AM PDT: New initiative: "Google Lens"

ADI ROBERTSON, 10:13:04 AM PDT: Biggest cheers since the beginning of the keynote for that one.

DIETER BOHN, 10:13:01 AM PDT: Google removed a chain link fence from an image. Wild.

DIETER BOHN, 10:12:52 AM PDT: "Coming very soon"... Google can remove obstructions from photos.

KARA VERLANEY, 10:12:45 AM PDT: Smart Reply is coming to Gmail for Android and iOS

DIETER BOHN, 10:12:31 AM PDT: Claims that Google's computer vision is better at recognizing images than humans. Huh.

CASEY NEWTON, 10:11:57 AM PDT: Google Home is now personalized to the user, so everyone hears a different Burger King ad.

ADI ROBERTSON, 10:11:54 AM PDT: "Similar to speech, we are seeing great improvements in computer vision."

DIETER BOHN, 10:11:54 AM PDT: "We are seeing great improvements in computer vision."

DIETER BOHN, 10:11:39 AM PDT: Google didn't need 8 microphones, it only needed 2, on the Google Home, because it uses machine learning to figure out who is speaking.

DIETER BOHN, 10:11:08 AM PDT: (Vision, eh? That's iiiinteresting.) But we're starting by talking about Voice.

DIETER BOHN, 10:10:34 AM PDT: Voice and vision are "new modalities" for computing, after keyboard and mouse.

ADI ROBERTSON, 10:10:30 AM PDT: He's charting an evolution from keyboard / mouse to multitouch to voice.

DIETER BOHN, 10:10:05 AM PDT: (Same thing that's in Inbox, btw)

DIETER BOHN, 10:09:55 AM PDT: Rolling out Smart Reply to 1 billion users of Gmail.

DIETER BOHN, 10:09:47 AM PDT: Pichai is showing a bunch of products that use Google AI and machine learning. Duo, YouTube suggestions, AdBrain, Photo Search.

ADI ROBERTSON, 10:09:24 AM PDT: "We are rethinking all our products" to incorporate machine learning and AI.

DIETER BOHN, 10:09:08 AM PDT: "Computing is evolving again ... from a mobile-first to an AI-first world."

ADI ROBERTSON, 10:08:44 AM PDT: "As you can see, the robot behind me is also pretty happy."

DIETER BOHN, 10:08:28 AM PDT: Android: 2 billion active devices.

ADI ROBERTSON, 10:08:21 AM PDT: Every day users upload 1.2 billion photos to Google.

DIETER BOHN, 10:08:20 AM PDT: (Dare you to bring up Allo. DOUBLE DARE)

DIETER BOHN, 10:08:11 AM PDT: 800 million monthly active users on Google Drive. 500 million active users on Google Photos.

ADI ROBERTSON, 10:08:01 AM PDT: Doing various stats here: 800 million active users for Google Drive.

DIETER BOHN, 10:07:46 AM PDT: Google's mission is still "organizing the world's information," Pichai says. He's hitting the numbers now. 1 billion users on YouTube, 1 billion hours watched. 1 billion KM navigated on Maps daily.

ADI ROBERTSON, 10:07:35 AM PDT: "Every single day, users watch over 1 billion hours of video on YouTube."

via /r/technology
