#kubernetes services training

How to Implement Security Best Practices in EKS

Implementing security best practices in Amazon EKS starts with configuring IAM roles and policies for fine-grained access control to resources. Use network policies (supported by the Amazon VPC CNI) to isolate pods, and enable logging and monitoring with CloudWatch and CloudTrail to detect and respond to threats. Secure container images by scanning them, for example with Amazon ECR image scanning. Finally, run regular security assessments and apply updates to maintain a robust security posture. For more information visit: https://www.webagesolutions.com/courses/WA3108-automation-with-terraform-and-aws-elastic-kubernetes-service
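As a minimal sketch of pod isolation, the Python below builds a default-deny ingress NetworkPolicy and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The namespace name `payments` is hypothetical, and the policy is only enforced if network policy support is enabled in the VPC CNI (or another policy engine is installed).

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Build a NetworkPolicy that blocks all inbound traffic to every pod in `namespace`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            # An empty podSelector selects every pod in the namespace.
            "podSelector": {},
            # Declaring Ingress without any ingress rules allows no inbound traffic.
            "policyTypes": ["Ingress"],
        },
    }


if __name__ == "__main__":
    # "payments" is a hypothetical namespace name.
    print(json.dumps(default_deny_ingress("payments"), indent=2))
```

Saved as, say, `deny.py`, the output can be applied with `python deny.py | kubectl apply -f -`; narrower policies that allow specific traffic are then layered on top of the default deny.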

Intelligence agencies 101: MI6

Dashing spies and deadly agents, from James Bond to Alex Rider and George Smiley. We have all heard of British Intelligence, but just how much do you know about MI6?

1.- It is the oldest secret service in the world.

If we want to get technical, spies have been working for the British crown since 1569, thanks to Queen Elizabeth I and her Secretary of State, Sir Francis Walsingham. But for now, we'll focus on the contemporary Secret Service.

Hear me out: back in 1909, in the midst of what we call the "armed peace", things were getting anything but peaceful. Countries developed and accumulated weapons like it was a sport, and most of them were unsatisfied with the territories they owned. Germany was going all Queen and screaming "I Want It All", which left the rest of Europe slightly concerned about its imperialistic ambitions.

Britain was the first to grow paranoid, and so Prime Minister Asquith had the Committee of Imperial Defence create a Secret Service Bureau.

However, it is worth mentioning that the agency's existence wasn't officially acknowledged until 1992, and it was only placed on a statutory footing by the Intelligence Services Act 1994, even though everyone had known about it for ages.

2.- They have very... diverse tasks

Officially, MI6 is tasked with the collection, analysis, and appropriate distribution of foreign intelligence (it is a common misconception that MI6 also handles domestic affairs; that's what its counterpart, MI5, is for).

Now, note that I said "officially", and that is because unofficially (it is kind of very illegal), MI6 has been known to carry out espionage activity overseas. But you already knew that, didn't you? Otherwise, why would you be here?

3.- Roles

As described by the SIS itself, there are several roles within the organisation:

Intelligence officers: candidates must be UK nationals aged 18 or over, with no drug use, and must pass a very intrusive security clearance. The jobs are divided into the following subcategories:

Operational Managers: planning and managing intelligence collection operations.

Targeters: turning information (data) into human intelligence operations.

Officers: link to Whitehall (government) as well as validating and testing intelligence.

Case Officers: managing and building relationships with agents.

Operational Data Analysts: same eligibility requirements as intelligence officers. Tech abilities are a must, and the training course lasts 2 years.

Tech Network Area: same eligibility requirements as intelligence officers. Skills in Go (GoLang), gRPC, Protobuf, Kubernetes & Docker, Python, Java, C#, C, C++, and React (+Redux).

Language Specialists: same eligibility requirements as intelligence officers. Russian, Arabic and Mandarin linguists are the most sought after, followed by translators.

4.- Their alphabet is a bit jumbled up

Anyone who has ever seen or read any 007 material knows that M is the head of MI6, whether that be Judi Dench, Bernard Lee or Ralph Fiennes.

But what if I told you that the head of MI6 is actually a certain C?

Back when the Secret Service Bureau was created, a 50-year-old Royal Navy officer called Mansfield Cumming (dubbed "C") was chosen to head its Foreign Section.

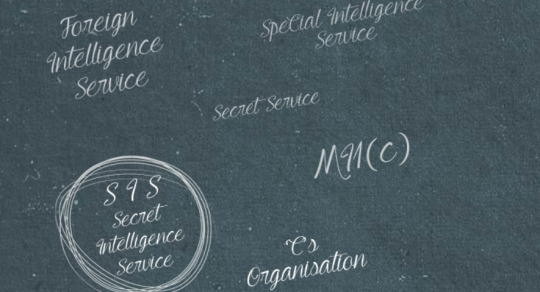

5.- MI6 or SIS?

Officially, the agency's current name (adopted in 1920) is Secret Intelligence Service, hence the acronym SIS, but it wasn't always that. We've established that it started its days as the Secret Service Bureau, and during WWI, the agency joined forces with Military Intelligence, even going as far as to adopt the cover name "MI1(c)".

The agency continued to acquire several names throughout the years, such as "Foreign Intelligence Service", "Secret Service", "Special Intelligence Service" and even "C's organisation". It wasn't until WWII started that the name MI6 was adopted, in reference to the agency being "section six" of Military Intelligence.

And I truly do hate to be the bearer of bad news but... the name MI6, as cool as it sounds, is no longer in use. Writers and journalists still use that name, but those within the organisation just call it SIS nowadays.

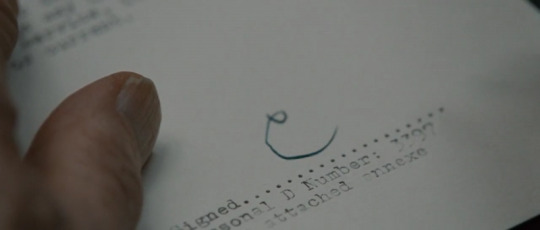

6.- They are fond of their traditions

Remember our dear Commander Mansfield? Well, turns out he started a thing. The man used to sign his letters in green ink, always with the letter "C", a tradition that proved sticky enough to be passed down to every Chief since. Another tradition worth mentioning is calling intelligence reports "CX reports", which... you guessed it, is still done to this day.

7.- Special friends

In 1949, the SIS began a formal collaboration with the CIA, even though the agency had already helped train the personnel of the CIA's predecessor, the U.S. Office of Strategic Services.

Even the CIA has admitted that MI6 has provided it with some of the most valuable information of all time, including information that helped during the Cuban Missile Crisis and key elements in the hunt for Osama bin Laden.

I hope this will be of some use to your future writings and do feel free to submit an ask if you happen to have a specific question regarding British intelligence, or any other International Relations subject!

Yours truly,

–The Internationalist

#writing advice#writing help#writing community#writing tips#writing resources#creative writing#james bond#george smiley#alex rider#mi6#spies

How To Use Llama 3.1 405B FP16 LLM On Google Kubernetes Engine

How to set up and use large open models for multi-host generative AI on GKE

Access to open models is more important than ever for developers, as generative AI grows rapidly thanks to developments in LLMs (Large Language Models). Open models are pre-trained foundational LLMs that are publicly available. Data scientists, machine learning engineers, and application developers already have easy access to open models through platforms like Hugging Face, Kaggle, and Google Cloud's Vertex AI.

How to use Llama 3.1 405B

Google is announcing today the ability to deploy and run open models like Llama 3.1 405B FP16 on GKE (Google Kubernetes Engine), since some of these models demand robust infrastructure and deployment capabilities. With 405 billion parameters, Llama 3.1, published by Meta, shows notable gains in general knowledge, reasoning, and coding ability. To store and compute 405 billion parameters at FP16 (16-bit floating point) precision, the model needs more than 750 GB of GPU memory for inference. The GKE approach discussed in this article reduces the difficulty of deploying and serving such large models.
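The "more than 750 GB" figure follows from simple arithmetic: FP16 uses 2 bytes per parameter, so the weights alone take 405 × 10⁹ × 2 bytes. The sketch below computes that in both decimal gigabytes and binary gibibytes; it counts weights only and assumes nothing about KV cache or activation memory, which come on top.

```python
PARAMS = 405e9        # Llama 3.1 405B parameter count
BYTES_PER_PARAM = 2   # FP16 stores each value in 16 bits = 2 bytes


def weight_memory(params: float, bytes_per_param: int) -> tuple[float, float]:
    """Return (GB, GiB) needed just to hold the model weights."""
    total_bytes = params * bytes_per_param
    return total_bytes / 1e9, total_bytes / 2**30


gb, gib = weight_memory(PARAMS, BYTES_PER_PARAM)
print(f"{gb:.0f} GB ({gib:.0f} GiB)")  # prints: 810 GB (754 GiB)
```

Passing `bytes_per_param=1` gives the FP8 case, roughly half the memory, which is why an FP8 variant can fit on smaller setups.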

Customer Experience

As a Google Cloud customer, you can find the Llama 3.1 LLM by selecting the Llama 3.1 model tile in Vertex AI Model Garden.

After clicking the deploy button, you can choose the Llama 3.1 405B FP16 model and select GKE.

This page provides the automatically generated Kubernetes YAML along with comprehensive deployment and serving instructions for Llama 3.1 405B FP16.

Multi-host deployment and serving

Llama 3.1 405B FP16 poses significant deployment and serving challenges and demands over 750 GB of GPU memory. The total memory requirement is influenced by several factors, including the memory used by model weights, support for longer sequence lengths, and KV (Key-Value) cache storage. A3 virtual machines, currently the most powerful GPU option on Google Cloud, each have eight NVIDIA H100 GPUs with 80 GB of HBM (High-Bandwidth Memory) apiece, for 640 GB per VM. Because that is less than the model needs, the only practical way to serve LLMs such as the FP16 Llama 3.1 405B model is to deploy them across several hosts. To deploy on GKE, Google uses LeaderWorkerSet with Ray and vLLM.

LeaderWorkerSet

LeaderWorkerSet (LWS) is a deployment API created specifically for the workload demands of multi-host inference. It makes it easier to shard and run a model across multiple devices on multiple nodes. Built as a Kubernetes deployment API, LWS supports both GPUs and TPUs and is accelerator- and cloud-agnostic. LWS uses the upstream StatefulSet API as its core building block.

Under the LWS architecture, a group of pods is managed as a single unit. Every pod in the group is given a unique index from 0 to n-1, and the pod with index 0 is the group leader. All pods in the group are created simultaneously and share the same lifecycle. LWS simplifies rollouts and rolling upgrades at the group level: for rolling updates, scaling, and topology-aware placement, each group is treated as a single unit.

Each group is upgraded as a single, cohesive entity, so every pod in the group is updated at the same time. Topology-aware placement is optional, but when it is used, all pods in a group can be co-located in the same topology. With optional all-or-nothing restart support, the group is also handled as a single entity when failures occur: when enabled, if one pod in the group fails or one container in any of the pods restarts, all of the pods in the group are recreated.
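The group semantics described above can be illustrated with a toy model. This is not the LWS controller or its API: the `Pod` and `Group` classes and their fields are hypothetical, and the model only mimics leader indexing and the optional all-or-nothing restart.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    index: int
    generation: int = 0  # incremented every time this pod is recreated


@dataclass
class Group:
    """Toy model of one LWS group of `size` pods; not the real LWS controller."""
    size: int
    all_or_nothing_restart: bool = True  # models the optional group-wide restart
    pods: list[Pod] = field(init=False)

    def __post_init__(self) -> None:
        # All pods are created together and indexed 0..n-1.
        self.pods = [Pod(i) for i in range(self.size)]

    @property
    def leader(self) -> Pod:
        # The pod with index 0 is the group leader.
        return self.pods[0]

    def on_pod_failure(self, index: int) -> None:
        if self.all_or_nothing_restart:
            # One failing pod causes every pod in the group to be recreated.
            for pod in self.pods:
                pod.generation += 1
        else:
            self.pods[index].generation += 1


group = Group(size=4)
group.on_pod_failure(2)
print(group.leader.index, [p.generation for p in group.pods])  # prints: 0 [1, 1, 1, 1]
```

Recreating the whole group matters for sharded inference: a worker holding one slice of the model cannot usefully rejoin peers that still hold stale state, so restarting everything together keeps the shards consistent.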

In LWS, a group consisting of one leader and a set of workers is called a replica. LWS supports two templates: one for the leader and one for the workers. By exposing a scale endpoint for the HPA (Horizontal Pod Autoscaler), LWS allows the number of replicas to be scaled dynamically.
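To show how replicas, group size, and the two templates fit together, here is a sketch that builds a skeleton LeaderWorkerSet manifest as a Python dict. The field names (`leaderWorkerTemplate.size`, `leaderTemplate`, `workerTemplate`, `restartPolicy: RecreateGroupOnPodRestart`) follow the LWS v1 API as we understand it and should be checked against the LWS documentation; the name and image are placeholders, and a real vLLM deployment also needs GPU requests, commands, and environment variables that are omitted here.

```python
import json


def lws_manifest(name: str, replicas: int, group_size: int, image: str) -> dict:
    """Skeleton LeaderWorkerSet: `replicas` groups, each one leader plus (group_size - 1) workers."""
    def pod_template() -> dict:
        # Real deployments also need GPU requests, commands and env vars; omitted here.
        return {"spec": {"containers": [{"name": "server", "image": image}]}}

    return {
        "apiVersion": "leaderworkerset.x-k8s.io/v1",
        "kind": "LeaderWorkerSet",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # number of groups; the value an HPA would scale
            "leaderWorkerTemplate": {
                "size": group_size,  # pods per group, leader included
                "restartPolicy": "RecreateGroupOnPodRestart",  # all-or-nothing restart
                "leaderTemplate": pod_template(),
                "workerTemplate": pod_template(),
            },
        },
    }


def total_pods(manifest: dict) -> int:
    """Pods the manifest asks for: replicas times pods per group."""
    spec = manifest["spec"]
    return spec["replicas"] * spec["leaderWorkerTemplate"]["size"]


if __name__ == "__main__":
    # "vllm-405b" and the image reference are placeholders.
    m = lws_manifest("vllm-405b", replicas=1, group_size=2, image="REGISTRY/IMAGE:TAG")
    print(json.dumps(m, indent=2))
    print("pods:", total_pods(m))
```

With `group_size=2`, each replica maps onto the two-node layout described later in this post: one leader pod and one worker pod per replica.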

Deploying across multiple hosts with vLLM and LWS

vLLM is a popular open source model server that supports multi-node, multi-GPU inference through tensor and pipeline parallelism. It implements distributed tensor parallelism using Megatron-LM's tensor-parallel technique, and it uses Ray to manage the distributed runtime for pipeline parallelism in multi-node inference.

Tensor parallelism splits the model horizontally across several GPUs, so the tensor parallel size typically equals the number of GPUs in each node. This method requires fast network connectivity between the GPUs.

Pipeline parallelism, by contrast, splits the model vertically by layer and does not require constant communication between GPUs. The pipeline parallel size usually equals the number of nodes used for multi-host serving.
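The difference between the two splits can be sketched as index bookkeeping: pipeline parallelism assigns contiguous blocks of layers to stages (nodes), while tensor parallelism gives each GPU a slice of every large weight matrix. This is only an illustration, not how vLLM or Megatron-LM partition tensors internally (real tensor parallelism also splits some matrices by rows and combines results with collective operations); the 126-layer and 16384-column values are illustrative assumptions.

```python
def pipeline_stages(num_layers: int, pp_size: int) -> list[range]:
    """Split layers vertically into pp_size contiguous stages, one per node."""
    base, extra = divmod(num_layers, pp_size)
    stages, start = [], 0
    for stage in range(pp_size):
        count = base + (1 if stage < extra else 0)  # spread any remainder over the first stages
        stages.append(range(start, start + count))
        start += count
    return stages


def tensor_shard(columns: int, tp_size: int, rank: int) -> range:
    """Columns of one weight matrix held by GPU `rank` under a horizontal (column) split."""
    if columns % tp_size:
        raise ValueError("columns must divide evenly across tensor-parallel ranks")
    width = columns // tp_size
    return range(rank * width, (rank + 1) * width)


# Illustrative values: 126 layers over 2 nodes, a 16384-column matrix over 8 GPUs.
print([len(s) for s in pipeline_stages(126, 2)])  # prints: [63, 63]
print(tensor_shard(16384, 8, 1))                  # prints: range(2048, 4096)
```

Each pipeline stage only hands activations to the next stage once per step, while every tensor-parallel rank must exchange partial results inside each layer, which is why the tensor-parallel group is kept within one node's fast interconnect.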

Serving the full Llama 3.1 405B FP16 model requires combining these parallelism techniques. Two A3 nodes with eight H100 GPUs each provide a combined 1280 GB of GPU memory, which covers the model's roughly 750 GB requirement. This setup also leaves buffer memory for the key-value (KV) cache and for long context lengths. In this LWS deployment, the pipeline parallel size is set to two and the tensor parallel size to eight.
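The layout arithmetic can be checked with a short sketch: tensor-parallel size times pipeline-parallel size gives the GPU count, and multiplying by 80 GB per H100 gives the capacity left over for KV cache after the roughly 750 GB model requirement. It also assembles a `vllm serve` command line using vLLM's `--tensor-parallel-size` and `--pipeline-parallel-size` flags; the model ID is an assumption, the `vllm serve` entry point assumes a recent vLLM release, and in a multi-node setup the command runs on the leader after the Ray cluster is up.

```python
import shlex

GPU_MEMORY_GB = 80    # HBM per H100, per the text
GPUS_PER_NODE = 8     # H100s per A3 VM
MODEL_NEED_GB = 750   # approximate FP16 requirement quoted in the text


def check_layout(tp_size: int, pp_size: int) -> dict:
    """Check a tensor-parallel x pipeline-parallel layout against the memory budget."""
    if tp_size > GPUS_PER_NODE:
        raise ValueError("keep tensor parallelism within one node's fast interconnect")
    gpus = tp_size * pp_size
    capacity = gpus * GPU_MEMORY_GB
    return {
        "gpus": gpus,
        "nodes": pp_size,  # one pipeline stage per node, as in the text
        "capacity_gb": capacity,
        "headroom_gb": capacity - MODEL_NEED_GB,  # left for KV cache and long contexts
    }


def vllm_args(model: str, tp_size: int, pp_size: int) -> list[str]:
    # --tensor-parallel-size and --pipeline-parallel-size are vLLM engine flags.
    return ["vllm", "serve", model,
            "--tensor-parallel-size", str(tp_size),
            "--pipeline-parallel-size", str(pp_size)]


print(check_layout(8, 2))  # prints: {'gpus': 16, 'nodes': 2, 'capacity_gb': 1280, 'headroom_gb': 530}
# The model ID below is an assumption; use the one from the generated deployment.
print(shlex.join(vllm_args("meta-llama/Llama-3.1-405B-Instruct", 8, 2)))
```

A single node (`check_layout(8, 1)`) comes out with negative headroom, which is the arithmetic behind needing two hosts.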

In brief

In this blog, we discussed how LWS provides the features needed for multi-host serving. This approach maximizes price-performance and can also be used with smaller models, such as Llama 3.1 405B FP8, on more affordable hardware. LWS is open source with an active community; check out its GitHub repository to learn more and contribute directly.

As Google Cloud helps customers adopt generative AI workloads, you can visit Vertex AI Model Garden to deploy and serve open models on managed Vertex AI backends or on GKE DIY (Do It Yourself) clusters. Multi-host deployment and serving is one example of how it aims to provide a seamless customer experience.

Read more on Govindhtech.com

#Llama3.1#Llama#LLM#GoogleKubernetes#GKE#405BFP16LLM#AI#GPU#vLLM#LWS#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

Is cPanel on Its Deathbed? A Tale of Technology, Profits, and a Slow-Moving Train Wreck

Ah, cPanel. The go-to control panel for many web hosting services since the dawn of, well, web hosting. Once the epitome of innovation, it’s now akin to a grizzled war veteran, limping along with a cane and wearing an “I Survived Y2K” t-shirt. So what went wrong? Let’s dive into this slow-moving technological telenovela, rife with corporate greed, security loopholes, and a legacy that may be hanging by a thread.

Chapter 1: A Brief, Glorious History (Or How cPanel Shot to Stardom)

Once upon a time, cPanel was the bee’s knees. Launched in 1996, this software was, for a while, the pinnacle of web management systems. It promised simplicity, reliability, and functionality. Oh, the golden years!

Chapter 2: The Tech Stack Tortoise

In the fast-paced world of technology, being stagnant is synonymous with being extinct. While newer tech stacks are integrating AI, machine learning, and all sorts of jazzy things, cPanel seems to be stuck in a time warp. Why? Because the tech stack is more outdated than a pair of bell-bottom trousers. No Docker, no Kubernetes, and don’t even get me started on the lack of robust API support.

Chapter 3: “The Corpulent Corporate”

In 2018, Oakley Capital, a private equity firm, acquired cPanel. For many, this was the beginning of the end. Pricing structures were jumbled, turning into a monetisation extravaganza. It’s like turning your grandma’s humble pie shop into a mass production line for rubbery, soulless pies. They’ve squeezed every ounce of profit from it, often at the expense of the end-users and smaller hosting companies.

Chapter 4: Security—or the Lack Thereof

Ah, the elephant in the room. cPanel has had its fair share of vulnerabilities. Whether it’s SQL injection flaws, privilege escalation, or simple, plain-text passwords (yes, you heard right), cPanel often appears in the headlines for all the wrong reasons. It’s like that dodgy uncle at family reunions who always manages to spill wine on the carpet; you know he’s going to mess up, yet somehow he’s always invited.

Chapter 5: The (Dis)loyal Subjects—The Hosting Companies

Remember those hosting companies that once swore by cPanel? Well, let’s just say some of them have been seen flirting with competitors at the bar. Newer, shinier control panels are coming to market, offering modern tech stacks and, gasp, lower prices! It’s like watching cPanel’s loyal subjects slowly turn their backs, one by one.

Chapter 6: The Alternatives—Not Just a Rebellion, but a Revolution

Plesk, Webmin, DirectAdmin, oh my! New players are rising, offering updated tech stacks, more customizable APIs, and—wait for it—better security protocols. They’re the Han Solos to cPanel’s Jabba the Hutt: faster, sleeker, and without the constant drooling.

Conclusion: The Twilight Years or a Second Wind?

The debate rages on. Is cPanel merely an ageing actor waiting for its swan song, or can it adapt and evolve, perhaps surprising us all? Either way, the story of cPanel serves as a cautionary tale: adapt or die. And for heaven’s sake, update your tech stack before it becomes a relic in a technology museum, right between floppy disks and dial-up modems.

This outline only scratches the surface, but it’s a start. If cPanel wants to avoid becoming the Betamax of web management systems, it better start evolving—stat. Cheers!

#hosting#wordpress#cpanel#webdesign#servers#websites#webdeveloper#technology#tech#website#developer#digitalagency#uk#ukdeals#ukbusiness#smallbussinessowner

DevOps for Beginners: Navigating the Learning Landscape

DevOps, a revolutionary approach in the software industry, bridges the gap between development and operations by emphasizing collaboration and automation. For beginners, entering the world of DevOps might seem like a daunting task, but it doesn't have to be. In this blog, we'll provide you with a step-by-step guide to learn DevOps, from understanding its core philosophy to gaining hands-on experience with essential tools and cloud platforms. By the end of this journey, you'll be well on your way to mastering the art of DevOps.

The Beginner's Path to DevOps Mastery:

1. Grasp the DevOps Philosophy:

Start with the Basics: DevOps is more than just a set of tools; it's a cultural shift in how software development and IT operations work together. Begin your journey by understanding the fundamental principles of DevOps, which include collaboration, automation, and delivering value to customers.

2. Get to Know Key DevOps Tools:

Version Control: One of the first steps in DevOps is learning about version control systems like Git. These tools help you track changes in code, collaborate with team members, and manage code repositories effectively.

Continuous Integration/Continuous Deployment (CI/CD): Dive into CI/CD tools like Jenkins and GitLab CI. These tools automate the building and deployment of software, ensuring a smooth and efficient development pipeline.

Configuration Management: Gain proficiency in configuration management tools such as Ansible, Puppet, or Chef. These tools automate server provisioning and configuration, allowing for consistent and reliable infrastructure management.

Containerization and Orchestration: Explore containerization using Docker and container orchestration with Kubernetes. These technologies are integral to managing and scaling applications in a DevOps environment.
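To make the CI/CD idea concrete, here is a toy fail-fast pipeline runner: it runs each stage's command in order and stops at the first non-zero exit code. It is not how Jenkins or GitLab CI are implemented; the stage names and commands are hypothetical, with `sys.executable` standing in for real build, test, and deploy tools. Real CI servers add commit triggers, isolated runners, caching, and artifacts.

```python
import subprocess
import sys


def run_pipeline(stages: list[tuple[str, list[str]]]) -> bool:
    """Run each stage's command in order and stop at the first failure (fail fast)."""
    for name, command in stages:
        print(f"--> {name}")
        result = subprocess.run(command)
        if result.returncode != 0:
            print(f"stage {name!r} failed with exit code {result.returncode}")
            return False
    print("all stages passed")
    return True


if __name__ == "__main__":
    # Hypothetical stages; each one is just a small Python command here.
    ok = run_pipeline([
        ("build", [sys.executable, "-c", "print('building')"]),
        ("test", [sys.executable, "-c", "assert 1 + 1 == 2"]),
        ("deploy", [sys.executable, "-c", "print('deploying')"]),
    ])
    print("pipeline ok:", ok)
```

Failing fast is the key design choice: a broken build or failing test never reaches the deploy stage.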

3. Learn Scripting and Coding:

Scripting Languages: DevOps engineers often use scripting languages such as Python, Ruby, or Bash to automate tasks and configure systems. Learning the basics of one or more of these languages is crucial.

Infrastructure as Code (IaC): Delve into Infrastructure as Code (IaC) tools like Terraform or AWS CloudFormation. IaC allows you to define and provision infrastructure using code, streamlining resource management.
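At the heart of IaC and configuration management is comparing the desired state declared in code with what actually exists and computing the changes needed, similar in spirit to a `terraform plan`. The sketch below is a toy version of that comparison with hypothetical resource names; it is not Terraform's algorithm, which also tracks state files, dependencies, and provider APIs.

```python
def diff_resources(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compute which resources to create, update, or delete to reach the desired state."""
    create = sorted(desired.keys() - actual.keys())
    delete = sorted(actual.keys() - desired.keys())
    update = sorted(name for name in desired.keys() & actual.keys()
                    if desired[name] != actual[name])
    return {"create": create, "update": update, "delete": delete}


# Hypothetical resources: what the code declares vs. what currently exists.
desired = {"web-vm": {"size": "small"}, "db": {"size": "large"}}
actual = {"web-vm": {"size": "tiny"}, "old-cache": {"size": "small"}}
print(diff_resources(desired, actual))
# prints: {'create': ['db'], 'update': ['web-vm'], 'delete': ['old-cache']}
```

Running the same comparison once the infrastructure matches the code yields an empty plan, which is what makes repeated runs safe (idempotent).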

4. Build Skills in Cloud Services:

Cloud Platforms: Learn about the main cloud providers, such as AWS, Azure, or Google Cloud, and how to create, configure, and manage cloud resources. These skills are essential as DevOps often involves deploying and managing applications in the cloud.

DevOps in the Cloud: Explore how DevOps practices can be applied within a cloud environment. Utilize services like AWS Elastic Beanstalk or Azure DevOps for automated application deployments, scaling, and management.

5. Gain Hands-On Experience:

Personal Projects: Put your knowledge to the test by working on personal projects. Create a small web application, set up a CI/CD pipeline for it, or automate server configurations. Hands-on practice is invaluable for gaining real-world experience.

Open Source Contributions: Participate in open source DevOps initiatives. Collaborating with experienced professionals and contributing to real-world projects can accelerate your learning and provide insights into industry best practices.

6. Enroll in DevOps Courses:

Structured Learning: Consider enrolling in DevOps courses or training programs to ensure a structured learning experience. Institutions like ACTE Technologies offer comprehensive DevOps training programs designed to provide hands-on experience and real-world examples. These courses cater to beginners and advanced learners, ensuring you acquire practical skills in DevOps.

In your quest to master the art of DevOps, structured training can be a game-changer. ACTE Technologies, a renowned training institution, offers comprehensive DevOps training programs that cater to learners at all levels. Whether you're starting from scratch or enhancing your existing skills, ACTE Technologies can guide you efficiently and effectively in your DevOps journey.

DevOps is a transformative approach in the world of software development, and it's accessible to beginners with the right roadmap. By understanding its core philosophy, exploring key tools, gaining hands-on experience, and considering structured training, you can embark on a rewarding journey to master DevOps and become an invaluable asset in the tech industry.

Microsoft Azure Fundamentals AI-900 (Part 5)

Microsoft Azure AI Fundamentals: Explore visual tools for machine learning

What is machine learning? A technique that uses math and statistics to create models that predict unknown values

Types of Machine learning

Regression - predict a continuous value, like a price, a sales total, a measure, etc

Classification - determine a class label.

Clustering - determine labels by grouping similar information into label groups

x = features

y = label
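As a small illustration of features (x) and labels (y) in regression, here is an ordinary least-squares fit of a straight line with one feature. The data points are made up, and this is a textbook method rather than anything specific to Azure ML.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Least-squares fit of y = slope * x + intercept for one feature x and label y."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


# Made-up training data: features x and known labels y.
slope, intercept = fit_line([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
print(slope, intercept)        # prints: 2.0 1.0
print(slope * 5 + intercept)   # predicted label for an unseen x = 5; prints: 11.0
```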

Azure Machine Learning Studio

You can use the workspace to develop solutions with the Azure ML service on the web portal or with developer tools

Web portal for ML solutions in Azure

Capabilities for preparing data, training models, publishing and monitoring a service.

The first step is to assign a workspace to the studio.

Compute targets are cloud-based resources which can run model training and data exploration processes

Compute Instances - Development workstations that data scientists can use to work with data and models

Compute Clusters - Scalable clusters of VMs for on demand processing of experiment code

Inference Clusters - Deployment targets for predictive services that use your trained models

Attached Compute - Links to existing Azure compute resources like VMs or Azure Databricks clusters

What is Azure Automated Machine Learning

Jobs have multiple settings

Provide information needed to specify your training scripts, compute target and Azure ML environment and run a training job

Understand the AutoML Process

ML model must be trained with existing data

Data scientists spend lots of time pre-processing and selecting data

This is time consuming and often makes inefficient use of expensive compute hardware

In Azure ML, data for model training and other operations is encapsulated in a dataset.

You create your own dataset.

Classification (predicting categories or classes)

Regression (predicting numeric values)

Time series forecasting (predicting numeric values at a future point in time)

Part of the data is used to train a model, and the rest is used to iteratively test or cross-validate it

The metric is calculated by comparing the actual known label or value with the predicted one

The differences between the actual known values and the predicted values are known as residuals; they indicate the amount of error in the model.

Root Mean Squared Error (RMSE) is a performance metric. The smaller the value, the more accurate the model’s prediction is

Normalized root mean squared error (NRMSE) standardizes the metric to be used between models which have different scales.

Residual histogram: shows the frequency of residual value ranges.

Residuals represent the variance between predicted and true values that can't be explained by the model, in other words, errors.

Most frequently occurring residual values (errors) should be clustered around zero.

You want small errors, with fewer errors at the extreme ends of the scale.

Predicted vs. true chart: should show a diagonal trend where the predicted value correlates closely with the true value

Dotted line shows a perfect model’s performance

The closer your model's average predicted values are to the dotted line, the better.
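The residual-based evaluation above can be sketched in a few lines: residuals, RMSE, a normalized RMSE, and the bin counts a residual histogram would plot. This sketch normalizes RMSE by the range of the true values, one common choice; Azure AutoML's exact normalization may differ. The predictions are made up.

```python
import math
from collections import Counter


def residuals(y_true: list[float], y_pred: list[float]) -> list[float]:
    """Residual = actual known value minus predicted value."""
    return [t - p for t, p in zip(y_true, y_pred)]


def rmse(y_true: list[float], y_pred: list[float]) -> float:
    res = residuals(y_true, y_pred)
    return math.sqrt(sum(r * r for r in res) / len(res))


def nrmse(y_true: list[float], y_pred: list[float]) -> float:
    """RMSE divided by the range of the true values, so differently scaled models compare."""
    return rmse(y_true, y_pred) / (max(y_true) - min(y_true))


def residual_histogram(y_true: list[float], y_pred: list[float], bin_width: float) -> Counter:
    """Count how many residuals fall into each bin; keys are bin start values."""
    return Counter(math.floor(r / bin_width) * bin_width for r in residuals(y_true, y_pred))


y_true = [10.0, 20.0, 30.0, 40.0]
y_pred = [12.0, 18.0, 33.0, 40.0]   # made-up predictions
print(residuals(y_true, y_pred))    # prints: [-2.0, 2.0, -3.0, 0.0]
print(rmse(y_true, y_pred), nrmse(y_true, y_pred))
print(residual_histogram(y_true, y_pred, bin_width=2.0))
```

A good model's histogram has most of its counts in the bins around zero.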

Services can be deployed as an Azure Container Instance (ACI) or to an Azure Kubernetes Service (AKS) cluster

For production, AKS is recommended.

Identify regression machine learning scenarios

Regression is a form of ML

Understands the relationships between variables to predict a desired outcome

Predicts a numeric label or outcome based on variables (features)

Regression is an example of supervised ML

What is Azure Machine Learning designer

Pipelines allow you to organize, manage, and reuse complex ML workflows across projects and users

Pipelines start with the dataset you want to use to train the model

Each time you run a pipeline, the context (history) is stored as a pipeline job

A component encapsulates one step in a machine learning pipeline.

Like a function in programming

In a pipeline project, you access data assets and components from the Asset Library tab

You can create data assets on the Data tab from local files, web files, open datasets, and a datastore

Data assets appear in the Asset Library

An Azure ML job executes a task against a specified compute target.

Jobs allow systematic tracking of your ML experiments and workflows.

Understand steps for regression

To train a regression model, your data set needs to include historic features and known label values.

Use the designer's Score Model component to generate the predicted label value

Connect all the components that will run in the experiment

Mean Absolute Error (MAE): the average absolute difference between predicted and true values

It is based on the same unit as the label

The lower the value is the better the model is predicting

Root Mean Squared Error (RMSE): the square root of the mean squared difference between predicted and true values

Metric based on the same unit as the label.

A larger difference between RMSE and MAE indicates greater variance in the individual label errors

Relative Squared Error (RSE): a relative metric between 0 and 1 based on the square of the differences between predicted and true values

The closer to 0, the better the model is performing.

Since the value is relative, it can compare different models with different label units

Relative Absolute Error (RAE): a relative metric between 0 and 1 based on the absolute differences between predicted and true values

The closer to 0, the better the model is performing.

Can be used to compare models where the labels are in different units

Coefficient of Determination, also known as R-squared

Summarizes how much of the variance in the true values is explained by the model's predictions

Closer to 1 means the model is performing better
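The five regression metrics described above (mean absolute error, root mean squared error, relative squared error, relative absolute error, and the coefficient of determination) can be computed directly. This sketch uses the standard textbook definitions, where the relative metrics divide by how far the true values spread around their own mean; Azure ML's implementation may differ in detail. The predictions are made up.

```python
import math


def regression_metrics(y_true: list[float], y_pred: list[float]) -> dict[str, float]:
    n = len(y_true)
    mean_true = sum(y_true) / n
    errors = [p - t for t, p in zip(y_true, y_pred)]
    sq_err = sum(e * e for e in errors)
    abs_err = sum(abs(e) for e in errors)
    # Spread of the true values around their mean, used by the relative metrics.
    sq_dev = sum((t - mean_true) ** 2 for t in y_true)
    abs_dev = sum(abs(t - mean_true) for t in y_true)
    rse = sq_err / sq_dev
    return {
        "MAE": abs_err / n,              # same unit as the label
        "RMSE": math.sqrt(sq_err / n),   # same unit as the label
        "RSE": rse,                      # relative, closer to 0 is better
        "RAE": abs_err / abs_dev,        # relative, closer to 0 is better
        "R2": 1 - rse,                   # closer to 1 is better
    }


print(regression_metrics([3.0, 5.0, 7.0, 9.0], [2.5, 5.0, 7.5, 9.0]))
```

Because RSE, RAE, and R² are ratios, they stay comparable between models whose labels use different units, while MAE and RMSE do not.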

Inference pipeline: remove the training components from your pipeline and replace them with web service inputs and outputs to handle the web requests

It applies the same data transformations as the first pipeline to new data

It then uses the trained model to infer/predict label values based on the features.

Create a classification model with Azure ML designer

Classification is a form of ML used to predict which category an item belongs to

Like regression this is a supervised ML technique.

Understand steps for classification

True Positive - The model predicts the label, and the data has the label

False Positive - The model predicts the label, but the data does not have the label

False Negative - The model does not predict the label, but the data does have the label

True Negative - The model does not predict the label, and the data does not have the label

For multi-class classification, same approach is used. A model with 3 possible results would have a 3x3 matrix.

The diagonal line of cells is where the predicted and actual labels match

Precision: the proportion of cases classified as positive that are actually positive

True positives divided by (true positives + false positives)

Recall: the fraction of positive cases correctly identified

Number of true positives divided by (true positives + false negatives)

F1 score: an overall metric that combines precision and recall
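The confusion-matrix counts and the precision, recall, and F1 formulas above can be written out for a binary classifier as follows. The labels are made up, and 1 is treated as the positive class; F1 is computed as the harmonic mean of precision and recall.

```python
def confusion_counts(y_true: list[int], y_pred: list[int]) -> dict[str, int]:
    """TP/FP/FN/TN for binary labels, where 1 is the positive class."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for t, p in zip(y_true, y_pred):
        if p == 1:
            counts["TP" if t == 1 else "FP"] += 1   # predicted positive
        else:
            counts["FN" if t == 1 else "TN"] += 1   # predicted negative
    return counts


def _ratio(num: int, den: int) -> float:
    return num / den if den else 0.0


def precision_recall_f1(c: dict[str, int]) -> tuple[float, float, float]:
    precision = _ratio(c["TP"], c["TP"] + c["FP"])
    recall = _ratio(c["TP"], c["TP"] + c["FN"])
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


counts = confusion_counts([1, 1, 1, 0, 0, 0, 1, 0], [1, 0, 1, 0, 1, 0, 1, 0])
print(counts)                       # prints: {'TP': 3, 'FP': 1, 'FN': 1, 'TN': 3}
print(precision_recall_f1(counts))  # prints: (0.75, 0.75, 0.75)
```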

Classification models predict probability for each possible class

For binary classification models, the probability is between 0 and 1

Setting the threshold defines when a probability is interpreted as 0 or 1. If it's set to 0.5, probabilities from 0.5 to 1.0 are interpreted as 1 and those below 0.5 as 0

Recall is also known as the True Positive Rate (TPR)

It has a corresponding False Positive Rate (FPR)

Plotting these two metrics against each other for every threshold value between 0 and 1 produces a curve.

This curve is the Receiver Operating Characteristic (ROC) curve.

In a perfect model, the curve would rise straight up the left side to the top-left corner

Area under the curve (AUC): the closer to 1, the better the model.
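The threshold sweep behind the ROC curve can be sketched as follows: for each threshold, a score at or above it is predicted as 1, which yields one (FPR, TPR) point, and the trapezoidal rule then approximates the area under the curve. Scores and labels are made up, and the thresholds include one above 1 so the curve starts at (0, 0). This is an illustration, not Azure ML's implementation.

```python
def roc_points(y_true: list[int], scores: list[float],
               thresholds: list[float]) -> list[tuple[float, float]]:
    """(FPR, TPR) per threshold; a score >= threshold is predicted as class 1."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    points = []
    for th in thresholds:
        tp = sum(1 for t, s in zip(y_true, scores) if s >= th and t == 1)
        fp = sum(1 for t, s in zip(y_true, scores) if s >= th and t == 0)
        points.append((fp / negatives, tp / positives))
    return sorted(points)  # order from (0, 0) towards (1, 1)


def auc(points: list[tuple[float, float]]) -> float:
    """Area under the ROC curve using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


thresholds = [i / 10 for i in range(11)] + [1.01]
labels = [0, 0, 1, 1, 0, 1]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.9]   # made-up predicted probabilities
print(auc(roc_points(labels, scores, thresholds)))
```

A model whose scores perfectly separate the classes reaches an AUC of 1.0, while one that gives every case the same score sits on the diagonal with an AUC of 0.5.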

Inference pipeline: remove the training components from your pipeline and replace them with web service inputs and outputs to handle the web requests

It applies the same data transformations as the first pipeline to new data

It then uses the trained model to infer/predict label values based on the features.

Create a Clustering model with Azure ML designer

Clustering is used to group similar objects together based on features.

Clustering is an example of unsupervised learning: you train a model simply to separate items based on their features, without known label values.

Understanding steps for clustering

Prebuilt components exist that allow you to clean the data, normalize it, join tables and more

Requires a dataset that includes multiple observations of the items you want to cluster

Requires numeric features that can be used to determine similarities between individual cases

K-Means clustering works by:

Initializing K coordinates as randomly selected points called centroids in an n-dimensional space (n is the number of dimensions in the feature vectors)

Plotting the feature vectors as points in the same space and assigning each point to its closest centroid

Moving each centroid to the middle (mean) of the points allocated to it

Reassigning each point to its closest centroid after the move

Repeating the last two steps until the cluster assignments stop changing (or a maximum number of iterations is reached).
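The K-means steps above translate into a short pure-Python sketch: pick K data points as initial centroids, assign each point to its closest centroid, move each centroid to the mean of its points, and repeat until no assignment changes. This is a plain textbook version for illustration; the Azure ML designer's K-Means component may use a different initialization and stopping rule, and the sample points are made up.

```python
import math
import random


def kmeans(points: list[tuple[float, ...]], k: int, max_iter: int = 100, seed: int = 0):
    """Plain K-means; returns (centroids, assignments)."""
    rng = random.Random(seed)
    # Initialize: pick k distinct data points as the starting centroids.
    centroids = rng.sample(points, k)
    assignments = None
    for _ in range(max_iter):
        # Assign (or reassign) each point to its closest centroid.
        new_assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Stop when no point changes cluster.
        if new_assignments == assignments:
            break
        assignments = new_assignments
        # Move each centroid to the mean of the points allocated to it.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignments


data = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
centroids, labels = kmeans(data, k=2)
print(labels)
```

Because the result depends on the random starting centroids, implementations commonly run K-means several times or use smarter initialization.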

Maximal Distance to Cluster Center: the maximum distance between each point and the centroid of that point's cluster.

If the value is high, it can mean that the cluster is widely dispersed.

With the Average Distance to Cluster Center, we can determine how spread out the cluster is
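The two spread metrics described above, the average and maximal distance from each point to its own cluster's center, can be computed per cluster as follows. The function name and return shape are illustrative, not the output format of the Azure ML designer's evaluation component; the points, centers, and assignments are made up.

```python
import math


def cluster_spread(points, centroids, assignments):
    """Per-cluster average and maximal distance from points to their own cluster center."""
    stats = {}
    for c, center in enumerate(centroids):
        dists = [math.dist(p, center) for p, a in zip(points, assignments) if a == c]
        if dists:
            stats[c] = {"avg": sum(dists) / len(dists), "max": max(dists), "points": len(dists)}
    return stats


pts = [(0.0, 0.0), (2.0, 0.0), (10.0, 0.0), (10.0, 4.0)]
print(cluster_spread(pts, centroids=[(1.0, 0.0), (10.0, 2.0)], assignments=[0, 0, 1, 1]))
# prints: {0: {'avg': 1.0, 'max': 1.0, 'points': 2}, 1: {'avg': 2.0, 'max': 2.0, 'points': 2}}
```

A maximal distance much larger than the average suggests a few outlying points stretching an otherwise tight cluster.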

Inference pipeline: remove the training components from your pipeline and replace them with web service inputs and outputs to handle the web requests

It applies the same data transformations as the first pipeline to new data

It then uses the trained model to infer/predict label values based on the features.

What is the best Azure online training?

The best Azure Online Training provides a comprehensive, hands-on learning experience covering core to advanced Azure topics. This training typically includes modules on Azure fundamentals, deployment, security, networking, storage, Azure DevOps, and multi-cloud management, enabling learners to develop cloud solutions using Azure's diverse services.

What is Microsoft Azure Online Training?

Microsoft Azure Online Training is a structured program designed to teach individuals and teams how to use Azure cloud services effectively. Azure is a cloud platform offering a range of services, including computing, analytics, storage, and networking. The training encompasses real-world scenarios, interactive labs, and expert-led sessions to help participants understand Azure’s cloud architecture and its applications in various industries.

How to Learn Azure through Online Training?

Start with Fundamentals: Begin with the basics like Azure's core services, architecture, and platform navigation.

Interactive Labs and Real-World Projects: Most courses provide practical labs, allowing learners to work on deploying resources, managing networks, configuring VMs, and securing environments.

Advanced Modules: After building a foundation, move to advanced modules like Azure DevOps, containerization with Kubernetes, Azure AI, and multi-cloud strategies.

Certification Preparation: Comprehensive training usually prepares learners for certifications like Azure Fundamentals (AZ-900), Azure Administrator (AZ-104), and Azure Solutions Architect (AZ-305).

Who Can Learn Microsoft Azure?

Azure Online Training is suitable for:

IT Professionals: System admins, network engineers, and DevOps professionals who want to upskill.

Developers: Software developers interested in creating and deploying applications on Azure.

Business Analysts and Architects: Those involved in designing and implementing cloud solutions.

Beginners and Cloud Enthusiasts: Anyone looking to start a career in cloud computing.

Prerequisites for Microsoft Azure Online Training

While beginners can start with foundational courses, the following prerequisites can be helpful:

Basic IT Knowledge: Understanding of computer systems, operating systems, and networking.

Experience with Virtualization and Networking: Familiarity with concepts like virtual machines, IP addressing, and DNS.

Programming Knowledge (Optional): While not mandatory, knowledge of scripting or programming can be beneficial for advanced Azure functions and automation.

Promote Microsoft Azure Online Training

If you're looking to dive into the world of cloud computing, Microsoft Azure Online Training is an ideal choice! Our training at Naresh I Technologies (NiT) provides an engaging learning environment with certified instructors, 24/7 support, and real-world projects. Join our Microsoft Azure Online Training to gain the skills necessary for in-demand certifications and excel in your career with cloud expertise. Start today and become an Azure professional with Naresh IT!


Azure AI-102 Training in Hyderabad | Visualpath

Creating and Managing Machine Learning Experiments in Azure AI

Introduction:

AI 102 Certification is a significant milestone for professionals aiming to design and implement intelligent AI solutions using Azure AI services. This certification demonstrates proficiency in key Azure AI functionalities, including building and managing machine learning models, automating model training, and deploying scalable AI solutions. A critical area covered in the Azure AI Engineer Training is creating and managing machine learning experiments. Understanding how to streamline experiments using Azure's tools ensures AI engineers can develop models efficiently, manage their iterations, and deploy them in real-world scenarios.

Introduction to Azure Machine Learning

Azure AI is a cloud-based platform that provides comprehensive tools for developing, training, and deploying machine learning models. It simplifies the process of building AI applications by offering pre-built services and flexible APIs. Azure Machine Learning (AML), a core component of Azure AI, plays a vital role in managing the entire machine learning lifecycle, from data preparation to model monitoring.

Creating machine learning experiments in Azure involves designing workflows, training models, and tuning hyperparameters. The platform offers both no-code and code-first experiences, allowing users of various expertise levels to build AI models. For those preparing for the AI 102 Certification, learning to navigate Azure Machine Learning Studio and its features is essential. The Studio's drag-and-drop interface enables users to build models without writing extensive code, while more advanced users can take advantage of Python and R programming support for greater flexibility.

Setting Up Machine Learning Experiments in Azure AI

The process of setting up machine learning experiments in Azure begins with defining the experiment's objective, whether it's classification, regression, clustering, or another machine learning task. After identifying the problem, the next step is gathering and preparing the data. Azure AI supports various data formats, including structured, unstructured, and time-series data. Azure’s integration with services like Azure Data Lake and Azure Synapse Analytics provides scalable data storage and processing capabilities, allowing engineers to work with large datasets effectively.

Once the data is ready, it can be imported into Azure Machine Learning Studio. This environment offers several tools for pre-processing data, such as cleaning, normalization, and feature engineering. Pre-processing is a critical step in any machine learning experiment because the quality of the input data significantly affects the performance of the resulting model. Through Azure AI Engineer Training, professionals learn the importance of preparing data effectively and how to use Azure's tools to automate and optimize this process.
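Two of the pre-processing steps mentioned above, cleaning and normalization, can be sketched in a few lines of plain Python. This is a minimal illustration, assuming missing values are represented as `None` and that min-max scaling to the range [0, 1] is the desired normalization. It is not the Azure Machine Learning Studio implementation; the helper names are hypothetical.

```python
# A minimal sketch of cleaning + min-max normalization.
# Assumes missing values are None; helper names are hypothetical.

def clean(values):
    """Drop missing entries (None) before scaling."""
    return [v for v in values if v is not None]


def min_max_normalize(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: map everything to 0.0 to avoid dividing by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


raw = [10, None, 20, 30, None, 50]
print(min_max_normalize(clean(raw)))  # → [0.0, 0.25, 0.5, 1.0]
```

Dropping rows is only one cleaning strategy; imputing a mean or median is a common alternative when data is scarce.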

Training Machine Learning Models in Azure

Training models is the heart of any machine learning experiment. Azure Machine Learning provides multiple options for training models, including automated machine learning (AutoML) and custom model training using frameworks like TensorFlow, PyTorch, and Scikit-learn. AutoML is particularly useful for users who are new to machine learning, as it automates many of the tasks involved in training a model, such as algorithm selection, feature selection, and hyperparameter tuning. This capability is emphasized in the AI 102 Certification as it allows professionals to efficiently create high-quality models without deep coding expertise.
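To make "hyperparameter tuning" concrete, here is a sketch of plain grid search, one of the simplest tuning strategies. It is not how AutoML works internally; it only shows the core idea of training one model per candidate setting and keeping the one with the best validation score. The model is a hypothetical one-feature ridge regression without an intercept, and the training and validation data are made up.

```python
# A minimal grid-search sketch over the ridge penalty lambda.
# The model and data are hypothetical; AutoML uses more advanced search.

def fit_ridge(xs, ys, lam):
    """Closed-form 1-D ridge regression without intercept: w = sum(xy) / (sum(x^2) + lambda)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def mse(w, xs, ys):
    """Mean squared error of predictions w * x against labels y."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grid_search(train, valid, grid):
    """Train one model per candidate lambda; return the best lambda and all scores."""
    results = {}
    for lam in grid:
        w = fit_ridge(*train, lam)
        results[lam] = mse(w, *valid)
    best = min(results, key=results.get)  # lowest validation error wins
    return best, results


train = ([1, 2, 3], [3, 5, 7])   # noisy training labels
valid = ([4, 5], [8, 10])        # held-out validation data
best, results = grid_search(train, valid, [0, 1, 3, 10])
print(best)  # → 3
```

Scoring on held-out validation data rather than training data is what lets the penalty term win here: the unregularized fit matches the noisy training labels more closely but generalizes worse.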

For those pursuing the AI 102 Certification, it's crucial to understand how to configure training environments and choose appropriate compute resources. Azure offers scalable compute options, such as Azure Kubernetes Service (AKS), Azure Machine Learning Compute, and even GPUs for deep learning models. Engineers can scale their compute resources up or down based on the complexity of the experiment, optimizing both cost and performance.

Managing and Monitoring Machine Learning Experiments

After training a machine learning model, managing the experiment's lifecycle is essential for ensuring the model performs as expected. Azure Machine Learning provides robust experiment management features, including experiment tracking, version control, and model monitoring. These capabilities are crucial for professionals undergoing Azure AI Engineer Training, as they ensure transparency, reproducibility, and scalability in AI projects.

Experiment tracking in Azure allows data scientists to log metrics, parameters, and outputs from their experiments. This feature is particularly important when running multiple experiments simultaneously or iterating on the same model over time. With experiment tracking, engineers can compare different models and configurations, ultimately selecting the model that offers the best performance.
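The log-then-compare workflow described above can be sketched with a small in-memory tracker. The `ExperimentTracker` class below is hypothetical and is not the Azure Machine Learning or MLflow API; it only mirrors the idea of recording parameters and metrics per run and then selecting the best run. The accuracy values are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    run_id: str
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)


class ExperimentTracker:
    """Hypothetical in-memory tracker; not the Azure ML or MLflow API."""

    def __init__(self, name):
        self.name = name
        self.runs = []

    def start_run(self, run_id, **params):
        run = Run(run_id, dict(params))  # record hyperparameters with the run
        self.runs.append(run)
        return run

    def log_metric(self, run, key, value):
        run.metrics[key] = value

    def best_run(self, metric, higher_is_better=True):
        """Compare all runs that logged `metric` and return the best one."""
        scored = [r for r in self.runs if metric in r.metrics]
        pick = max if higher_is_better else min
        return pick(scored, key=lambda r: r.metrics[metric])


tracker = ExperimentTracker("churn-classifier")
# Made-up results for three runs with different learning rates
for run_id, lr, acc in [("run-1", 0.1, 0.81), ("run-2", 0.01, 0.86), ("run-3", 0.001, 0.84)]:
    run = tracker.start_run(run_id, learning_rate=lr)
    tracker.log_metric(run, "accuracy", acc)

best = tracker.best_run("accuracy")
print(best.run_id, best.params)  # → run-2 {'learning_rate': 0.01}
```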

Version control in Azure Machine Learning enables data scientists to manage different versions of their datasets, code, and models. This feature ensures that teams can collaborate on experiments while maintaining a history of changes. It is also crucial for auditability and compliance, especially in industries such as healthcare and finance where regulations require a detailed history of AI model development. For those pursuing the AI 102 Certification, mastering version control in Azure is vital for managing complex AI projects efficiently.
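One common way to version datasets and models is to fingerprint their contents, so that a new version is created only when something actually changes. The `VersionRegistry` below is a hypothetical sketch of that idea using SHA-256 content hashes; it is not Azure Machine Learning's registry, which also stores metadata, lineage, and the artifacts themselves.

```python
import hashlib


class VersionRegistry:
    """Hypothetical registry: assigns incrementing versions to named assets,
    using a content hash so re-registering unchanged content is a no-op."""

    def __init__(self):
        self._versions = {}  # name -> list of (version, sha256 digest)

    def register(self, name, content: bytes) -> int:
        digest = hashlib.sha256(content).hexdigest()
        history = self._versions.setdefault(name, [])
        # Only the latest version is compared; reverting to older content
        # therefore creates a new version, preserving a linear audit trail.
        if history and history[-1][1] == digest:
            return history[-1][0]
        version = len(history) + 1
        history.append((version, digest))
        return version

    def history(self, name):
        return list(self._versions.get(name, []))


reg = VersionRegistry()
v1 = reg.register("training-data", b"a,b\n1,2\n")
v_same = reg.register("training-data", b"a,b\n1,2\n")    # unchanged content
v2 = reg.register("training-data", b"a,b\n1,2\n3,4\n")   # new rows added
print(v1, v_same, v2)  # → 1 1 2
```

Keeping the full history, rather than overwriting, is what supports the auditability requirement mentioned above.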

Deploying and Monitoring Models

Once a model has been trained and selected, the next step is deployment. Azure AI simplifies the process of deploying models to various environments, including cloud, edge, and on-premises infrastructure. Through Azure AI Engineer Training, professionals learn how to deploy models using Azure Kubernetes Service (AKS), Azure Container Instances (ACI), and Azure IoT Edge, ensuring that models can be used in a variety of scenarios.

After deployment, monitoring lets engineers track how a model performs in production and set up automated alerts when its performance falls below a certain threshold, so that corrective action can be taken promptly. For example, engineers can retrain a model with new data to ensure that it continues to perform well in production environments. The ability to manage model deployment and monitoring is a key skill covered in Azure AI Engineer Training, and it is a critical area of focus for the AI 102 Certification.
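The threshold-based alert described above can be sketched as a simple health check. This is a hypothetical helper, not an Azure Monitor or Azure Machine Learning API. It assumes accuracy readings arrive as a list of recent values, and it averages over a small window so that a single noisy dip does not trigger retraining.

```python
def check_model_health(recent_accuracy, threshold=0.80, window=3):
    """Return True (i.e. trigger an alert / retraining) when the average of the
    last `window` accuracy readings falls below `threshold`.
    Hypothetical helper; threshold and window values are assumptions."""
    if len(recent_accuracy) < window:
        return False  # not enough data to judge yet
    recent = recent_accuracy[-window:]
    return sum(recent) / window < threshold


readings = [0.88, 0.86, 0.79, 0.77, 0.76]  # made-up production accuracy readings
if check_model_health(readings):
    print("Accuracy below threshold: schedule retraining with fresh data")
```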

Best Practices for Managing Machine Learning Experiments

To succeed in creating and managing machine learning experiments, Azure AI engineers must follow best practices that ensure efficiency and scalability. One such practice is implementing continuous integration and continuous deployment (CI/CD) for machine learning models. Azure AI integrates with DevOps tools, enabling teams to automate the deployment of models, manage experiment lifecycles, and streamline collaboration.

Moreover, engineers should optimize the use of compute resources. Azure provides a wide range of virtual machine sizes and configurations, and choosing the right one for each experiment can significantly reduce costs while maintaining performance. Through Azure AI Engineer Training, individuals gain the skills to select the best compute resources for their specific use cases, ensuring cost-effective machine learning experiments.

Conclusion

In conclusion, creating and managing machine learning experiments in Azure AI is a key skill for professionals pursuing the AI 102 Certification. Azure provides a robust platform for building, training, and deploying models, with tools designed to streamline the entire process. From defining the problem and preparing data to training models and monitoring their performance, Azure AI covers every aspect of the machine learning lifecycle.

By mastering these skills through Azure AI Engineer Training, professionals can efficiently manage their AI workflows, optimize model performance, and ensure the scalability of their AI solutions. With the right training and certification, AI engineers are well-equipped to drive innovation in the rapidly growing field of artificial intelligence, delivering value across various industries and solving complex business challenges with cutting-edge technology.

Visualpath is the best software online training institute in Hyderabad, offering the complete Azure AI (AI-102) course worldwide at an affordable cost.

Attend Free Demo

Call: +91-9989971070

WhatsApp: https://www.whatsapp.com/catalog/919989971070/

Visit: https://www.visualpath.in/online-ai-102-certification.html


Transforming Infrastructure with Automation: The Power of Terraform and AWS Elastic Kubernetes Service Training

In the digital age, organizations are modernizing their infrastructure and shifting to cloud-native solutions. Terraform automates infrastructure provisioning across multiple cloud providers, while AWS Elastic Kubernetes Service (EKS) orchestrates containers, enabling businesses to manage scalable, high-availability applications. Together, these technologies form a foundation for managing dynamic systems at scale. To fully leverage them, professionals need practical, hands-on skills. This is where Elastic Kubernetes Services training becomes essential, offering expertise to automate and manage containerized applications efficiently, ensuring smooth operations across complex cloud infrastructures.

Why Automation Matters in Cloud Infrastructure

As businesses scale, manual infrastructure management becomes inefficient and prone to errors, especially in large, multi-cloud environments. Terraform, as an infrastructure-as-code (IaC) tool, automates provisioning, networking, and deployments, eliminating repetitive manual tasks and saving time. When paired with AWS Elastic Kubernetes Service (EKS), automation improves reliability and scalability, optimizes resource use, minimizes downtime, and significantly enhances deployment velocity for businesses operating in a cloud-native ecosystem.

The Role of Terraform in Automating AWS

Terraform simplifies cloud infrastructure by codifying resources into reusable, version-controlled configuration files, ensuring consistency and reducing manual effort across environments. In AWS, Terraform automates critical services such as EC2 instances, VPCs, and RDS databases. Integrated with Elastic Kubernetes Service (EKS), Terraform automates the lifecycle of Kubernetes clusters, from creating clusters to scaling them across availability zones, allowing seamless cloud deployment and more efficient automation across diverse environments.
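The core idea behind infrastructure as code is declarative reconciliation: you describe the desired state, and the tool computes what must change to get there. The Python sketch below illustrates that idea in the spirit of `terraform plan`; it is a conceptual model, not Terraform's implementation, and it ignores dependencies, replace-vs-update decisions, and provider APIs. The resource names and versions are hypothetical.

```python
def plan(desired: dict, current: dict) -> dict:
    """Compare desired vs. current resource configurations and return the
    actions needed to reconcile them (conceptual sketch only)."""
    actions = {"create": [], "update": [], "delete": []}
    for name, config in desired.items():
        if name not in current:
            actions["create"].append(name)      # declared but missing
        elif current[name] != config:
            actions["update"].append(name)      # exists but drifted
    for name in current:
        if name not in desired:
            actions["delete"].append(name)      # exists but no longer declared
    return actions


# Hypothetical resources: a VPC, an EKS cluster, and a GPU node group
desired = {
    "vpc.main": {"cidr": "10.0.0.0/16"},
    "eks.cluster": {"version": "1.30"},
    "eks.nodegroup.gpu": {"min": 1, "max": 4},
}
current = {
    "vpc.main": {"cidr": "10.0.0.0/16"},
    "eks.cluster": {"version": "1.29"},
    "ec2.legacy": {"type": "t3.micro"},
}
print(plan(desired, current))
# → {'create': ['eks.nodegroup.gpu'], 'update': ['eks.cluster'], 'delete': ['ec2.legacy']}
```

Because the plan is computed from version-controlled configuration, rerunning it against unchanged infrastructure produces no actions, which is what makes the approach repeatable.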

How AWS Elastic Kubernetes Service Elevates Cloud Operations

AWS Elastic Kubernetes Service (EKS) simplifies deploying, managing, and scaling Kubernetes applications by offering a fully managed control plane that takes the complexity out of Kubernetes management. When combined with Terraform, this automation extends even further, allowing infrastructure to be defined, deployed, and updated with minimal manual intervention. Elastic Kubernetes services training equips professionals to master this level of automation, from scaling clusters dynamically to managing workloads and applying security best practices in a cloud environment.

Benefits of Elastic Kubernetes Services Training for Professionals

Investing in Elastic Kubernetes Services training goes beyond managing Kubernetes clusters; it’s about gaining the expertise to automate and streamline cloud infrastructure efficiently. This training enables professionals to:

Increase Operational Efficiency: Automating repetitive tasks allows teams to focus on innovation rather than managing infrastructure manually, improving productivity across the board.

Scale Applications Seamlessly: Understanding how to leverage EKS ensures that applications can scale with demand, handling traffic spikes without sacrificing performance or reliability.

Stay Competitive: With cloud technologies evolving rapidly, staying up-to-date on tools like Terraform and EKS gives professionals a significant edge, allowing them to meet modern business demands effectively.
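Scaling with demand on EKS is typically handled by the Kubernetes Horizontal Pod Autoscaler (HPA). The Kubernetes documentation gives its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping changes when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces only that calculation plus min/max clamping; the real controller also handles stabilization windows, missing metrics, and pod readiness. The replica bounds used here are assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA scaling rule: scale replicas in proportion to how far
    the observed metric is from its target, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target → scale out to 6
print(desired_replicas(4, 90, 60))   # → 6
# A traffic spike (200 vs. target 50) would ask for 16, clamped to max 10
print(desired_replicas(4, 200, 50))  # → 10
```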

Driving Innovation with Automation

Automation is essential for businesses seeking to scale and remain agile in a competitive digital landscape. Terraform and AWS Elastic Kubernetes Service (EKS) enable organizations to automate infrastructure management and deploy scalable, resilient applications. Investing in Elastic Kubernetes Services training with Web Age Solutions equips professionals with technical proficiency and practical skills, positioning them as key innovators in their organizations while building scalable cloud environments that support long-term growth and future technological advancements.

For more information visit: https://www.webagesolutions.com/courses/WA3108-automation-with-terraform-and-aws-elastic-kubernetes-service


Breaking into the Cloud Computing and DevOps Field: Where to Start?

The tech landscape is rapidly transforming, with cloud computing and DevOps at the forefront of this evolution. As businesses increasingly migrate their operations to the cloud and adopt agile practices, the demand for skilled professionals in these areas continues to rise. If you’re looking to break into this exciting field, one of the best first steps is to enroll in a cloud computing institute in Hyderabad. Here’s how to get started on your journey.

Understanding the Basics

Before diving into a career in cloud computing and DevOps, it’s essential to understand what these fields entail. Cloud computing involves delivering various services, such as storage, databases, and software, over the internet, enabling businesses to operate more efficiently. DevOps is a set of practices that integrates software development (Dev) and IT operations (Ops), fostering collaboration and streamlining workflows.

Learning these concepts at a cloud computing institute in Hyderabad lays the foundation for your career. Once you grasp the basics, you can focus on acquiring the necessary skills and knowledge.

Choosing the Right Cloud Computing Institute in Hyderabad

Selecting a reputable cloud computing institute in Hyderabad is crucial for your education and future career. Look for institutes that offer:

Comprehensive Curriculum: Ensure the program covers essential topics such as cloud service models (IaaS, PaaS, SaaS), deployment models, cloud security, and fundamental DevOps practices.

Hands-On Training: Practical experience is vital. Choose institutes that offer labs, projects, and internships to apply theoretical knowledge in real-world scenarios.

Industry Recognition: Look for programs that are well-regarded in the industry, ideally with partnerships or collaborations with leading tech companies.

Experienced Instructors: Learn from professionals with real-world experience who can provide valuable insights and mentorship.

Certifications: Opt for institutes that prepare you for industry-recognized certifications, such as AWS Certified Solutions Architect or Microsoft Certified: Azure Fundamentals. These credentials enhance your employability and credibility.

Building Your Skill Set

Once you’ve enrolled in a cloud computing institute in Hyderabad, focus on building your skill set. Key areas to concentrate on include:

Cloud Platforms: Familiarize yourself with major cloud service providers like AWS, Microsoft Azure, and Google Cloud. Understanding their services and offerings will give you a competitive edge.

Networking and Security: Learn about network configurations, security protocols, and compliance standards relevant to cloud environments.

DevOps Tools: Gain proficiency in popular DevOps tools such as Docker, Kubernetes, Jenkins, and Git. These tools are essential for automating processes and enhancing collaboration.

Scripting and Automation: Basic programming and scripting skills (Python, Bash, etc.) can significantly enhance your ability to automate tasks and manage cloud resources efficiently.
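As a small example of the kind of scripting the list above refers to, here is a sketch that decides which development VMs to stop outside business hours, a common cost-saving automation. The inventory format, tag names, and business hours are all hypothetical; a real script would fetch the inventory from, and send stop requests to, a cloud provider's SDK, which is not shown here.

```python
from datetime import datetime


def instances_to_stop(inventory, now, start_hour=8, end_hour=19):
    """Return IDs of running instances tagged env=dev when `now` falls outside
    business hours (weekdays, start_hour <= hour < end_hour).
    Inventory format and hours are hypothetical assumptions."""
    is_weekday = now.weekday() < 5  # Monday=0 ... Friday=4
    in_hours = is_weekday and start_hour <= now.hour < end_hour
    if in_hours:
        return []
    return [i["id"] for i in inventory
            if i["state"] == "running" and i["tags"].get("env") == "dev"]


inventory = [
    {"id": "vm-dev-1", "state": "running", "tags": {"env": "dev"}},
    {"id": "vm-dev-2", "state": "stopped", "tags": {"env": "dev"}},
    {"id": "vm-prod-1", "state": "running", "tags": {"env": "prod"}},
]
print(instances_to_stop(inventory, datetime(2024, 6, 14, 22, 0)))  # Friday 22:00 → ['vm-dev-1']
```

Keeping the decision logic separate from the SDK calls makes the script easy to test without touching real infrastructure.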

Gaining Practical Experience

Practical experience is crucial for solidifying your understanding and enhancing your resume. Participate in internships or collaborative projects during your training. Many cloud computing institutes in Hyderabad offer internship placements that can provide invaluable experience.

Additionally, consider contributing to open-source projects or creating your own projects. This hands-on experience not only helps you apply your skills but also demonstrates your initiative and problem-solving capabilities to potential employers.

Networking and Building Connections

Networking is essential in the tech industry. Attend workshops, webinars, and local meetups to connect with professionals and peers. Joining online forums and social media groups focused on cloud computing and DevOps can also help you stay updated on industry trends and job opportunities.

Leverage platforms like LinkedIn to showcase your skills, share your projects, and engage with industry leaders. Building a strong professional network can lead to mentorship opportunities and job referrals.

Exploring Career Opportunities

Once you’ve completed your training at a cloud computing institute in Hyderabad and gained practical experience, it’s time to explore career opportunities. The job market for cloud computing and DevOps professionals is robust, with various roles available, including:

Cloud Engineer: Responsible for managing and implementing cloud services and solutions.

DevOps Engineer: Focuses on integrating development and operations to improve deployment efficiency and reliability.

Cloud Architect: Designs and oversees cloud infrastructure and strategy.

Site Reliability Engineer (SRE): Ensures systems' reliability and performance through proactive monitoring and incident management.

Many organizations are actively seeking talent in these roles, offering competitive salaries and benefits.

Continuous Learning and Development

The tech landscape is ever-evolving, making continuous learning vital. After starting your career, consider pursuing additional certifications or advanced training programs to keep your skills updated. This commitment to learning will enhance your career trajectory and keep you relevant in a competitive job market.

Conclusion

Breaking into the cloud computing and DevOps field may seem daunting, but with the right approach, it can be an exciting and rewarding journey. Start by enrolling in a reputable cloud computing institute in Hyderabad to gain the foundational knowledge and skills necessary for success. Focus on building your expertise, gaining practical experience, and networking within the industry. By taking these steps, you’ll be well on your way to establishing a fulfilling career in these dynamic and in-demand fields. Embrace the challenge, and you’ll find a world of opportunities waiting for you!


Google Cloud Professional Cloud Architect: Your Pathway to a Cloud Future

In the evolving landscape of technology, cloud computing has become the bedrock of innovation, transformation, and scalability for businesses worldwide. A Google Cloud Professional Cloud Architect holds the knowledge, experience, and technical prowess to lead companies into the cloud era, optimizing resources and driving efficiency. For those aiming to master Google Cloud Platform (GCP), this certification opens doors to designing and implementing solutions that stand out for their effectiveness and resilience.

Why Pursue a Google Cloud Professional Cloud Architect Certification?

The demand for cloud architects is growing rapidly, and a Google Cloud Professional Cloud Architect certification equips you with both foundational and advanced cloud skills. This certification isn't just about learning GCP – it's about understanding how to make strategic, well-informed decisions that impact the entire organization's infrastructure. For anyone looking to validate their cloud expertise and secure a career in a high-growth industry, this is the credential to pursue.

Understanding the Role of a Google Cloud Professional Cloud Architect

A Google Cloud Professional Cloud Architect is responsible for designing, developing, and managing scalable, secure, and high-performing solutions on the Google Cloud Platform (GCP). They ensure that the architecture aligns with the organization’s business goals, compliance requirements, and security measures. This certification enables you to gain expertise in cloud fundamentals and Google’s ecosystem of tools, including BigQuery, Compute Engine, and Kubernetes.

Key Benefits of Becoming a Google Cloud Professional Cloud Architect

High Earning Potential: Cloud architects are in demand, and those certified in Google Cloud enjoy a competitive edge in salary negotiations.

Career Advancement: As cloud adoption becomes mainstream, roles like Cloud Architect, Cloud Engineer, and Cloud Consultant are increasingly valuable.

Real-World Application: The skills you gain from this certification apply to real-world scenarios, preparing you to handle complex architectural challenges.

The Core Skills You’ll Develop as a Google Cloud Professional Cloud Architect

The Google Cloud Professional Cloud Architect certification curriculum covers several areas to ensure that candidates can manage the full spectrum of cloud-based tasks:

Designing and Planning a Cloud Solution Architecture: Learn to define infrastructure requirements and plan for solution lifecycles.

Managing and Provisioning a Cloud Solution Infrastructure: Develop skills in Google Kubernetes Engine (GKE), Cloud SQL, and Compute Engine.

Security and Compliance: Master the security protocols and policies to safeguard your organization’s data and assets.

Analysis and Optimization: Gain insights into maximizing resource efficiency through cost-optimization techniques.

How to Prepare for the Google Cloud Professional Cloud Architect Exam

Preparing for the Google Cloud Professional Cloud Architect exam requires a mix of theoretical knowledge, hands-on practice, and strategy. Here’s a step-by-step approach to get you ready:

Start with the Basics: Begin by reviewing the official Google Cloud documentation on core services like BigQuery, Cloud Storage, and VPC networking.

Enroll in GCP Training Programs: Courses, such as those on Udemy or Coursera, are designed to provide a structured learning path.

Practice with Google Cloud’s Free Tier: Experiment with Compute Engine, App Engine, and Cloud Pub/Sub to get a feel for their functions.

Take Mock Exams: Practice exams will help you get comfortable with the question format, timing, and content areas.

Recommended Learning Resources for Aspiring Google Cloud Architects

Official Google Cloud Training: Google Cloud offers training specifically designed to prepare candidates for the Professional Cloud Architect exam.

Hands-On Labs: Platforms like Qwiklabs provide interactive labs that are ideal for gaining hands-on experience.

Community Forums: Joining GCP forums and Slack communities can be beneficial for sharing insights and resources with other learners.

Key Google Cloud Professional Cloud Architect Services to Master

As a Google Cloud Professional Cloud Architect, you'll be expected to understand and implement various services. Here are some of the primary ones to focus on:

Compute Engine: GCP’s Compute Engine is a flexible infrastructure-as-a-service (IaaS) offering that lets you run scalable, customizable virtual machines.

BigQuery: An integral part of data analytics, BigQuery lets you manage and analyze large datasets with SQL queries.

Google Kubernetes Engine (GKE): Kubernetes has become the go-to for containerized applications, and GKE provides a fully managed Kubernetes service for easier deployment and scaling.

Cloud SQL: A fully managed relational database service for MySQL, PostgreSQL, and SQL Server, Cloud SQL is well suited to storing structured data.

Real-Life Applications of Google Cloud Architect Skills

E-commerce: Design scalable infrastructure to support high-traffic demands and secure transaction processing.

Healthcare: Implement solutions that adhere to strict data privacy and compliance requirements, ensuring patient data is securely stored.

Finance: Develop high-performance solutions capable of processing real-time data to enable better decision-making.

Tips to Succeed in Your Role as a Google Cloud Professional Cloud Architect

Keep Abreast of GCP Updates: Google Cloud evolves constantly. As an architect, staying updated with the latest services and features is crucial.

Engage with the GCP Community: Attend Google Cloud events and join forums to connect with other professionals and learn from their experiences.

Master Cost Management: Controlling costs in the cloud is crucial. Learn to leverage GCP’s cost management tools to optimize resource usage and prevent unnecessary expenses.


Conclusion: Your Future as a Google Cloud Professional Cloud Architect

Earning a Google Cloud Professional Cloud Architect certification is more than just a credential – it’s a statement of your commitment to mastering cloud architecture. As businesses across the globe embrace cloud technology, the role of cloud architects becomes central to success. With the skills and knowledge gained from this certification, you’ll be equipped to design, deploy, and manage solutions that are robust, scalable, and in alignment with modern business needs.


How Nscale Strengthens AI Cloud Infrastructure in Europe

Nscale Technology

The AI-engineered hyperscaler. Use the AI cloud platform to access thousands of GPUs customized to your needs.

Features of Nscale

A fully integrated suite of AI services and compute

Use a fully integrated platform to manage your AI workloads more effectively, reduce costs, and increase revenue. The platform is designed to ease the path from development to production, whether you use your own AI/ML tools or those integrated into Nscale.

Turnkey AI creation and implementation

Users may access a variety of AI/ML tools and resources via the Nscale Marketplace, making model building and deployment effective and scalable.

Dedicated training clusters ready to go

Nscale’s optimized GPU clusters are designed to increase efficiency and shorten model training times. Make the most of Slurm and Kubernetes for a stable infrastructure that makes it easy to deploy, manage, and scale containerized workloads.

Setting a new standard for inference

Get access to fast, inexpensive, self-scaling AI inference infrastructure. Using fast GPUs and sophisticated orchestration tools, Nscale has optimized each tier of the stack for batch and streaming workloads, allowing you to scale your AI inference processes while preserving optimal performance.

Scalable, flexible AI Compute

With the help of cutting-edge cooling technologies, Nscale’s GPU Nodes provide high-performance processing designed for AI and high-performance computing (HPC) workloads.

Nscale Enhances AI Cloud Infrastructure in Europe

Nscale’s Glomfjord data center in Norway has showcased its latest GPU cluster, powered by AMD Instinct MI250X accelerators. Some of the world’s top supercomputers use AMD Instinct MI250X GPUs to accelerate HPC workloads and to meet AI inference, training, and fine-tuning needs.

Nscale’s Glomfjord data center, near the Arctic Circle in Norway, improves energy efficiency by using local cooling. Innovative adiabatic cooling and 100% renewable energy enable efficient operations, scalable solutions, and an environmentally friendly footprint for the data center.

Nscale is one of the least expensive AI training centers because it has access to some of the most affordable renewable electricity in the world. Vertical integration and inexpensive electricity allow it to provide high-performance, sustainable AI infrastructure faster and more affordably than its rivals.

Experts in moving from CUDA to ROCm

Switching your workload from CUDA to ROCm can be difficult. The staff at Nscale offers the assistance and guidance required to ensure a seamless and effective migration. Its live cluster is ready for you to experience the capabilities of AMD Instinct accelerators, whether you want to investigate the performance advantages of the MI250X or are preparing for the upcoming release of the MI325X.

Built for AI Workloads

The vertically integrated platform readily supports the most complex AI workloads. It offers sophisticated inference services, SLURM-powered AI task scheduling, bare-metal and virtualized GPU nodes, and Kubernetes-native services (NKS).

GPU Nodes

Nscale’s virtualized and bare-metal GPU nodes are designed for users who want high-performance computing without sacrificing quality. One-click deployment lets you set up your infrastructure in a matter of minutes, so your teams can concentrate on creativity while Nscale takes care of the complexity.

For both short-term and long-term projects, whether in AI training, deep learning, or data processing, its solution guarantees optimal performance with little overhead.

Nscale’s Kubernetes (NKS)

Nscale’s Kubernetes Service (NKS) lets you deploy containerized applications in a managed environment backed by Nscale’s GPU-powered compute. The service offers a highly available control plane and lets you rapidly provision worker nodes for growing AI workloads.

As a result, cloud-native AI applications may be deployed, scaled, and managed without the hassle of maintaining the supporting infrastructure.

Nscale SLONK

Nscale SLONK lets you run and administer your own high-performance computing cluster in the cloud. Its batch environment and extensive portfolio of AI/ML and HPC tools let you schedule, execute, and monitor a wide variety of scientific workloads, including large-scale simulations and AI/ML training.

The AI Workload Scheduler is powered by Nscale's Infrastructure Services, and the SLURM batch job scheduler provides access to the compute resources. The software environment combines open-source and proprietary software.
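
For readers new to SLURM, a batch job is a shell script whose #SBATCH comment lines tell the scheduler what to allocate; you submit it with sbatch, and SLURM queues it until the resources are free. The sketch below renders such a script for a multi-node GPU job. It assumes a generic SLURM setup with GPUs configured as a gres resource; the node count, GPU count, time limit, and the helper sbatch_script itself are hypothetical, not Nscale SLONK specifics.

```python
def sbatch_script(job_name: str, command: str, nodes: int = 1,
                  gpus_per_node: int = 8, time_limit: str = "04:00:00") -> str:
    """Render a minimal SLURM batch script for a multi-node GPU job."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --gres=gpu:{gpus_per_node}",  # GPUs requested on each node
        f"#SBATCH --time={time_limit}",         # wall-clock limit (HH:MM:SS)
        f"#SBATCH --output={job_name}-%j.out",  # %j expands to the job ID
        "",
        # srun launches the command across the allocated nodes.
        f"srun {command}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Save the output as train.sbatch and submit it with: sbatch train.sbatch
    print(sbatch_script("llm-train", "python train.py", nodes=2, gpus_per_node=4))
```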

Nscale Inference Service

The Nscale Inference Service is a single platform for deploying AI and ML models. Built on Kubernetes and KServe, it uses state-of-the-art horizontal and vertical scaling strategies to reduce costs and use hardware efficiently. Its goal is to make putting models into production as efficient as possible.

By supporting both serverless and managed compute deployments, it offers flexibility and control over cost and performance.
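
Horizontal scaling for inference usually means adding or removing model replicas as load changes. KServe can delegate this to Knative, which by default scales on in-flight requests per replica. The sketch below shows only the core arithmetic of that concurrency-based approach, with a floor of zero replicas standing in for the serverless mode and a floor of one or more for a managed deployment. Real autoscalers also smooth the metric over time windows, and the target and bounds here are assumptions, not Nscale settings.

```python
import math


def desired_replicas(in_flight_requests: float, target_per_replica: float,
                     min_replicas: int = 0, max_replicas: int = 10) -> int:
    """Concurrency-based horizontal scaling decision.

    With min_replicas=0 (serverless style), an idle model scales to zero;
    a managed deployment would instead keep min_replicas >= 1.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    # Enough replicas that each handles at most target_per_replica requests.
    needed = math.ceil(in_flight_requests / target_per_replica)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    for load in (0, 3, 25, 500):
        print(load, desired_replicas(load, target_per_replica=10))
```

The upper bound matters as much as the lower one: it caps GPU spend during traffic spikes, which is where the cost side of the cost-versus-performance trade-off shows up.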

Expanding Its Reach

Thanks to its expanding pipeline of 1GW+ greenfield facilities in North America and Europe, Nscale can provide custom GPU clusters at any size. This capacity, together with its strategic partnerships with leading companies in the sector, including AMD, allows Nscale to deliver top-notch infrastructure that meets the demands of AI training, fine-tuning, and inference at reasonable cost and scale.

With Nscale, you can satisfy sustainability objectives, save expenses, and have a strong, scalable AI infrastructure that expands to match business demands.

Read more on Govindhtech.com


Top 10 Advantages of Choosing Google Cloud Platform

Choosing a cloud computing platform is like choosing the right vehicle for a journey. If you are going off-roading, you would not pick a compact sedan; you would choose a sturdy & reliable 4x4 that can handle tough terrain with ease. The same logic applies to cloud platforms. With so many options on the market, how do you choose the right one?

For many, the answer is Google Cloud Platform (GCP), not because it is the biggest or flashiest, but because it is designed to take you on a smooth & efficient ride toward your digital goals, whether you are a student, a tech professional, or a decision maker in a company. Let's dive into what Google Cloud Platform is, why GCP stands out, & how its advantages simplify your cloud journey.

Top Advantages of Choosing Google Cloud Platform

1. Unmatched Scalability: Growing With You

Imagine building a Lego structure. At first it is small, but as you need more, you snap on additional pieces. GCP works the same way. Whether you are running a small project or need infrastructure for a global enterprise, Google Cloud scales with you. The infrastructure is elastic, which means you can scale up when traffic spikes or down when things slow, without worrying about performance dips or downtime.

2. Top-Tier Security: Peace of Mind

In today's digital world, security is non-negotiable. It is like putting a high-end security system in your home: you would not settle for just a lock on the door. Google takes security seriously, with measures like encryption of data at rest & in transit as well as advanced AI-based threat detection. Google's resources allow it to invest in the latest security technology, with a team of experts monitoring & responding to threats around the clock.

3. Industry-Leading AI & Machine Learning Capabilities

AI & machine learning are no longer futuristic ideas but tools used today to optimize everything from customer service to medical diagnoses. With Google Cloud's AI & machine learning services, you can build smarter apps & automate tasks with ease. GCP offers pre-trained models as well as the ability to train your own, giving you flexibility depending on your specific needs.

4. Seamless Integration With Other Google Services

Chances are you already use some Google services, like Gmail, Google Docs, or YouTube. Google Cloud integrates effortlessly with these familiar tools, allowing you to unify business operations. Whether you are looking to enhance collaboration through Google Workspace or streamline ad operations with Google Ads, GCP's deep integration with the Google suite makes it a natural fit for many businesses.

5. Competitive Pricing: Pay for What You Use

When it comes to pricing, Google Cloud does not make you buy an annual ticket when you only need a day pass. GCP uses a pay-as-you-go model, so you are billed for the compute power, storage, & other resources you actually use. This flexible approach, along with sustained use discounts, makes it attractive for companies looking to manage costs efficiently.
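
To make the pay-as-you-go and sustained use discount idea concrete: for N1 machine types, Google has documented sustained use discounts as incremental tiers, where each successive quarter of the month a VM runs is billed at 100%, 80%, 60%, and 40% of the base rate. The sketch assumes those N1 tiers, a 730-hour month, and a hypothetical hourly rate; other machine families use different tiers, so check current pricing before relying on the numbers. The helper monthly_cost is hypothetical.

```python
# Assumption: incremental multipliers for each quarter of the month, as
# documented for N1 machine types; other machine families differ.
N1_SUD_TIERS = (1.0, 0.8, 0.6, 0.4)
HOURS_PER_MONTH = 730  # assumption: a 730-hour billing month


def monthly_cost(hours_used: float, hourly_rate: float,
                 tiers=N1_SUD_TIERS, hours_per_month: float = HOURS_PER_MONTH) -> float:
    """Pay-as-you-go cost for one VM with tiered sustained use discounts."""
    quarter = hours_per_month / len(tiers)
    # Usage beyond one month is capped in this sketch.
    remaining = min(hours_used, hours_per_month)
    cost = 0.0
    for multiplier in tiers:
        block = min(remaining, quarter)  # hours that fall into this tier
        cost += block * hourly_rate * multiplier
        remaining -= block
        if remaining <= 0:
            break
    return cost


if __name__ == "__main__":
    for hours in (100, 365, 730):
        print(hours, round(monthly_cost(hours, hourly_rate=1.00), 2))
```

At a hypothetical $1.00 per hour, a VM that runs the full month comes to $511 instead of $730, a 30% saving, while a VM that runs 100 hours pays the full $100.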

6. Global Network: Fast & Reliable Performance

Imagine a road trip where you always have access to the fastest lanes wherever you go. GCP runs on one of the largest & most advanced networks in the world, the same infrastructure Google uses for its own products like Search & YouTube. This global network provides low-latency performance & high availability regardless of where your users are located.

7. Open-Source Friendly: Flexibility & Freedom

In the tech world, many developers prefer open-source tools because they allow more customization & control. Google Cloud is a strong advocate for open-source technology, supporting Kubernetes (originally developed at Google) & other open-source initiatives. With GCP, you have the freedom to build on the tools you prefer without being locked into proprietary systems.

8. Sustainability: Green Cloud Computing

Sustainability is not just a buzzword; it is a growing priority for businesses worldwide. Google is committed to operating the cleanest cloud in the industry. The company has reported being carbon neutral since 2007 & aims to run on carbon-free energy around the clock by 2030. By choosing Google Cloud, you can advance your own sustainability goals, knowing your data is stored & processed with minimal environmental impact.

9. Powerful Data Analytics: Turning Data into Insights

Businesses today collect vast amounts of data, but raw data is like unmined treasure: it is valuable only if you know how to process it. GCP offers powerful data analytics services such as BigQuery, which let you sift through large datasets to find actionable insights. These tools are scalable & fast, enabling you to make data-driven decisions with ease.

10. Reliable Customer Support & Documentation

When your cloud infrastructure is critical to operations, timely support can be the difference between smooth sailing & disaster. GCP offers a range of support options, from basic to premium plans. With extensive documentation & user-friendly dashboards, even less experienced users can get the help they need to solve problems efficiently.

Wrap Up

Choosing the right cloud platform may feel daunting, but Google Cloud Platform simplifies that choice by offering agile, secure, & scalable infrastructure that grows with you. Whether you are a student experimenting with machine learning or a company CTO streamlining operations, GCP's robust offerings cater to everyone.

Think of GCP as a reliable SUV that carries you along the smooth highways & the bumpy off-road stretches of digital transformation. It is flexible, dependable, & packed with features that make your journey efficient & secure. As organizations continue moving to the cloud, the need for a forward-thinking platform becomes more critical. This is where GCP truly shines.


What Are the Key Outcomes of Elastic Kubernetes Services Training?

Amazon Elastic Kubernetes Service (EKS) training equips teams with the skills to manage and scale containerized applications efficiently on AWS. Key outcomes include mastering EKS architecture, automating deployments, enhancing security, and optimizing resource utilization. The training helps organizations deploy resilient, scalable applications, reduce operational overhead, and accelerate cloud-native transformation, ultimately driving business growth and innovation.

For more information visit: https://www.webagesolutions.com/courses/WA3108-automation-with-terraform-and-aws-elastic-kubernetes-service


Vultr Welcomes AMD Instinct MI300X Accelerators to Enhance Its Cloud Platform

The partnership between Vultr's flexible cloud infrastructure and AMD's cutting-edge silicon technology paves the way for groundbreaking GPU-accelerated workloads, extending from data centers to edge computing.

“Innovation thrives in an open ecosystem,” stated J.J. Kardwell, CEO of Vultr. “The future of enterprise AI workloads lies in open environments that promote flexibility, scalability, and security. AMD accelerators provide our customers with unmatched cost-to-performance efficiency. The combination of high memory with low power consumption enhances sustainability initiatives and empowers our customers to drive innovation and growth through AI effectively.”

With the AMD ROCm open-source software and Vultr's cloud platform, businesses can utilize a premier environment tailored for AI development and deployment. The open architecture of AMD combined with Vultr’s infrastructure grants companies access to a plethora of open-source, pre-trained models and frameworks, facilitating a seamless code integration experience and creating an optimized setting for speedy AI project advancements.

“We take great pride in our strong partnership with Vultr, as their cloud platform is specifically designed to handle high-performance AI training and inferencing tasks while enhancing overall efficiency,” stated Negin Oliver, corporate vice president of business development for the Data Center GPU Business Unit at AMD. “By implementing AMD Instinct MI300X accelerators and ROCm open software for these latest deployments, Vultr customers will experience a truly optimized system capable of managing a diverse array of AI-intensive workloads.”

Tailored for next-generation workloads, the AMD architecture on Vultr's infrastructure enables genuine cloud-native orchestration of all AI resources. The integration of AMD Instinct accelerators and ROCm software management tools with the Vultr Kubernetes Engine for Cloud GPU allows the creation of GPU-accelerated Kubernetes clusters capable of powering the most resource-demanding workloads globally. Such platform capabilities empower developers and innovators with the tools necessary to create advanced AI and machine learning solutions to address complex business challenges.

Additional advantages of this collaboration include:

Vultr is dedicated to simplifying high-performance cloud computing so that it is user-friendly, cost-effective, and readily accessible for businesses and developers worldwide. Having served over 1.5 million customers across 185 nations, Vultr offers flexible, scalable global solutions including Cloud Compute, Cloud GPU, Bare Metal, and Cloud Storage. Established by David Aninowsky and fully bootstrapped, Vultr has emerged as the largest privately-held cloud computing enterprise globally without ever securing equity financing.

LowEndBox is a go-to resource for those seeking budget-friendly hosting solutions. This editorial focuses on syndicated news articles, delivering timely information and insights about web hosting, technology, and internet services that cater specifically to the LowEndBox community. With a wide range of topics covered, it serves as a comprehensive source of up-to-date content, helping users stay informed about the rapidly changing landscape of affordable hosting solutions.

Read the full article
