#k80 art

Text

Sassy men apocalypse patient zero.

#aqua teen hunger force#athf#aqua teen#master shake#athf fanart#meatwad#frylock#shake#athf master shake#digital art#gijinka#athf gijinka#human master shake#shake zula#human athf#athf human#he dont shower#sassy men#sassy men apocalypse#human shake#princess treatment#athf art#aqua teen forever#SoundCloud#k80 art

203 notes

Text

Juturna in animal jam!?

#never let me use animal jam drawing program ever again I hate this#man I don’t even have membership to make this into an item#also I forgot to put the second u in juturna and had to spend forever fixing it#ANYWAYS behold#circa survive#juturna#emo#post hardcore#k80posts#k80 posts#animal jam#aj art#emocore

17 notes

Text

Seeing Beyond the Code

6. As you travel back to the house of the Godspawn in the dark woods, eldritch forces play tricks on your mind. Write or draw about your character’s visions of dark gods and foreboding prophecies, drawing on the Cthulhu Mythos or inventing your own entities using it as an inspiration.

K80 found himself trapped in his own mind as his feet slowed. There was something wiggling in the back of his mind, like a moth trapped in his processors. When he felt for it, he felt himself sucked through to an entirely different space, stripped of his mechanical body, his every line of code exposed to the creature that now held him captive. Thankfully, he wasn't alone in the woods as he traveled.

K80 Artlist

3 notes

Text

Art is how we dream out loud. K80 is passionate about pole dancing on my Paper Stage because we dare to share even if they stare, sometimes glare. When you’re passionate about a project, the Paper Stage wants to encourage you to dream out loud.

3 notes

Photo

canon/oc muses from star wars, harry potter, literature, age of sail, marvel, grishaverse etc. home // muses promo credit

#( ooc ) k80 speaks#( self promo ) support my brand#marvel rp#grishaverse rp#star wars rp#indie rp#.ONCE AGAIN MANI MAKES ME A WHOLE PIECE OF ART

37 notes

Text

Dungeon crawling or lucid dreaming?

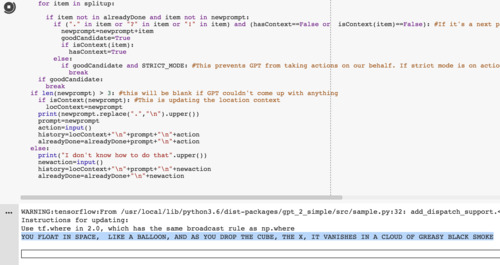

I’ve done several experiments with a text-generating neural network called GPT-2. Trained at great expense by OpenAI (to the tune of tens of thousands of dollars’ worth of computing power), GPT-2 learned to imitate all kinds of text from the internet. I’ve interacted with the basic model, discovering its abilities to generate fan fiction, British snacks, or tea. I’ve also used a tool called gpt-2-simple that Max Woolf developed to make it easy to finetune GPT-2 on more specialized datasets - I’ve tried it on datasets like recipes or crochet. One of my favorite applications of GPT-2 and other text-generating neural nets is Dungeons and Dragons spells, creatures, character names, and character bios.

Recently Max published a tutorial on how to use GPT-2 to make new apps that are more complicated than just printing out large reams of text. To my delight, people have used it to make D&D games. First, there was Nick Walton’s AI Dungeon, in which a finetuned GPT-2 generates a story and three very strange action options at each step.

My latest obsession is GPT-2 Adventure, designed by a cognitive neuroscience student named Nathan, which lets you type in your own actions. And folks, this game is SO WEIRD and SO FUN.

YOU FLOAT IN SPACE, LIKE A BALLOON, AND AS YOU DROP THE CUBE, THE X, IT VANISHES IN A CLOUD OF GREASY BLACK SMOKE

>>

When I first launch the game, this is what I’m greeted with, a simple text prompt reminiscent of nostalgic dungeon crawling games. It sets the scene. It waits for me to describe my next move.

Even getting to this point is like a blast from the past. The game runs in a Google Colab notebook, a format where you’re looking at cells of code, with cryptic comments, vaguely alarming warning messages, and all. It’s not a sleek web app, but feels more akin to playing a game on an old DOS machine, or maybe on a graphing calculator. There are only two clicks to begin playing, but there’s a good two-minute wait while the game loads.

And it’s my turn to type something.

>> look up

It takes several seconds for the game to respond, as if I’m playing it on a very ancient computer. The computer is in fact a state-of-the-art NVIDIA GPU, likely a K80 or a T4. The T4 could eat my laptop for lunch. I’m just playing with a very very large neural net. Finally it answers.

YOU'RE IN LABYRINTH OF TWISTY LITTLE PASSAGES, ALL ALIKE

Almost immediately, the context seems to have changed. I’m not floating at all. I’m in a maze. I guess I’ll walk somewhere.

>> walk north

THE TROLL STEPS OUT FROM BENEATH THE BRIDGE AND BLOCKS YOUR WAY

YOU ARE ON THE SOUTH SIDE OF THE CHASM

A NOD IS GIVEN TO THE INFINITE WONDER THAT IS URBZIG

A SOLID RAINBOW SPANS THE CHASM

And suddenly things are happening. There is a troll. There is a chasm. There is a rainbow. There is… Urbzig? I play it safe.

>> praise urbzig

URBZIG IS THE PATRON SAINT OF ZORK

HE IS THE GODFATHER OF ZORK, THE PLACE TO GET NEWS OF EPIC PROPORTIONS

URBZIG IS A STRONGMAN,遊 ALL

If you’ve played classic dungeon crawling games like Zork, you may recognize some of these names and phrases. Checking Nathan’s original post, I see that the training data is indeed drawn from these games. But because it’s based on GPT-2, the training data in some sense also includes much of the internet. This gives it a lot of latent flexibility to deal with situations that were never in the classic games.

>> ask Harry Potter to try walking across the rainbow

ASK HARRY POTTER TO TRY WALKING ACROSS THE RAINBOW HE CAN'T THE RAINBOW IS EXTREMELY WIDE ABOVE YOU IS A LARGE DOME UP AROUND ITS NECK IS A STRONGG PORE [UNFORTUNATELY, THE DIRECTION OF TRAVEL IS A LOT MORE INTERESTING THAN YOU THINK ][YOU ARE IN A LARGE ROOM, WITH A PASSAGE TO THE EAST, A PASSAGE TO THE WEST, AND A WALL OF BROKEN ROCK TO THE SOUTH

An interesting effect of its human-written training data is that, like Visual Chatbot, it isn’t prepared for humans that ask questions about objects that aren’t there. If I refer to Harry Potter, then Harry Potter must be present. If I type something about a giraffe, then it assumes the giraffe must be present.

>> ask the giraffe which direction to go next

THE GIRAFFE IS ALREADY OUTFITTED WITH A VISOR WHICH IS CLEARLY DEFINED

Although it’s not clear that this AI actually understands what a giraffe is.

>> look at the giraffes

THE GAZEBO HAS DISAPPEARED

>> put giraffe in backpack

DONE

Navigating this world is like navigating in a dream. My location suddenly changes without warning. I thought I was by a chasm with a rainbow bridge. Now apparently I’m in a building. As in a dream, it is also really hard to read text.

>> look around

YOU ARE IN A LARGE ROOM, WITH A PASSAGE TO THE SOUTH, A PASSAGE TO THE EAST, AND A WALL OF BROKEN ROCK TO THE NORTH A SIGN POSTED ABOVE THE NORTH WALL READS, "STOP! PAY TROLL!" A SMALL WINDOW CAN BE SEEN IN EITHER WALL

>> read sign

THE SIGN IS VERY SIMPLE

>> yes but read sign

WE NOW HAVE ALL FIFTEEN TREASURES IT IS TIME TO GO BACK INTO THE CAVE FOR THE END GAME

>> that's awesome. but i still want to know what the sign says. read sign.

YOU ARE IN A GAZEBO ROOM WHICH HAS DOORS ONLY TO THE EAST AND SOUTH

Another thing that’s surprisingly difficult to do is check inventory.

>> check inventory

DONE

>> check inventory

THERE IS AN INTERESTING LOOT HERE

>> what is the loot

WHAT IS THE LOOT?

>> check inventory

YOU ARE IN THE BAG CASE

Very occasionally I will succeed in opening a bag. It will usually be weird.

>> open bag

OPENING THE BAG REVEALS A TEA BAG

>> make tea

AY! A LOVELY SURPRISE FOR THE SURPRISE

More so than any other game I’ve played, I’m unsure of the rules here. Some weird things unexpectedly work.

IF YOU DON'T GET KILLED, YOU ARE TOAST

>> Eat the toast.

EAT THE TOAST IT TASTES LIKE A STEAK A STEAK IS PERFECTLY FINE

Other seemingly obvious things don’t work.

THERE IS A PATH TO THE NORTH, A PATH TO THE SOUTH, OR A STEEP PATHWAY CLIMBING SOUTHWEST ALONG THE EDGE OF A CHASM

>> go north

I DON'T KNOW HOW TO DO THAT

>> go south

I DON'T KNOW HOW TO DO THAT

>> go southwest

YOU WILL BE DESCENDING INTO A VALLEY THERE IS A NARROW BEACH TO THE NORTH AND A PATH LEADING INTO A CANYON TO THE SOUTHEAST YOU ARE ON THE CLIFF OVERLOOKING THE FAR SIDE OF THE ROOM A PATH LEADS SOUTH ALONG THE EDGE OF A CHASM, AND A NARROW PATH LEADING INTO A LOZENGE ROOM

Sometimes I’m wandering endlessly from room to room, from cave to cave, encountering the same chasm in slightly different variations, the same dried-up lake. Other times I’m embroiled in seemingly inescapable fights, usually with trolls. Often reality shifts entirely around me and I end up in a maze of twisty little passages, all alike, or in the hall of the mountain king. Everything seems weirdly familiar - even the room descriptions are echoes of classic dungeon crawling games. It is as if I’m dreaming about playing them again.

My actions also mold reality. I learn quickly to always check for diamonds, and never check for traps. If I find myself in trouble, I can always do something confusing and provoke a context change. Writing “In a large bowl, combine butter and sugars” is usually enough to teleport myself out of whatever situation I’m in. I can always ask a giraffe for advice, although the giraffe usually isn’t very helpful.

Without a particular mission to fulfill, the caves and chasms begin to blend into one another. I give myself missions, and that helps. Once I decide my goal is to put together a picnic (incessant “check for food” commands eventually yielded a sack with a lunch and a clove of garlic, belonging to a horse who agreed to join me on a picnic). Another time I demand a mission and am given some php code; I decide my mission is therefore to build a website, but am astounded when a unicorn steals my laptop. This is not the only time other content intrudes on the game’s fantasy landscape - once the game tries to tell me about finding cash online, and another time I am sadly informed that there is no wi-fi. I encounter dinosaurs and troikas, none of which were in the original training data. Occasionally things get creepy as hell.

>> check for loot

CHECK FOR LOOT HERE CHECK FOR GOLD HERE CHECK FOR TORCH HERE CHECK FOR BONE HERE DO CHEST COMPRESSIONS AGAIN DO CHEST ROTATE DO CRESCENT MOON APPEAR IN THE ROOM'S CENTER

>> do back away slowly

DO BACK AWAY SLOWLY DO NOT OVERDO IT THE CHEST WILL EXPLODE AND YOU WILL DIE OKAY, SO YOU SEE ME

You can play the game too! Just follow the colab notebook link in Nathan’s blog post. Tips: 1) It may be more fun if you set yourself an arbitrary goal to reach. 2) If you find things getting repetitive, try adjusting the temperature in the “Play the game” cell to 0.6 or 1.0.
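For readers wondering what the temperature tip actually changes: in most GPT-2 samplers, temperature divides the model's raw next-token scores before they are turned into probabilities. Values below 1.0 sharpen the distribution toward the likeliest continuation; values above 1.0 flatten it so odder words get picked more often. The sketch below is a generic illustration, assuming that common scheme; it is not the notebook's own code, and the three scores are made up.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw next-token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature, rng=random):
    """Pick one token index at random, weighted by the tempered probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.1]
for t in (0.6, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running it shows the top candidate getting a larger share of the probability at 0.6 than at 1.0, which is why nudging the temperature can shake a stuck, repetitive game loose in either direction.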

Subscribers get bonus content: The fulltext of a couple of my adventures (much too long to include here).

You can order my book You Look Like a Thing and I Love You! It’s out November 5 2019.

Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s

1K notes

Photo

Day 6 | Caves #mermay2019 #mermay #mermayofxodosch #art #artistsoninstagram #caves https://www.instagram.com/p/BxJiuzzHmkjEjqy5uVXAJHM2fD8YZs10K3_-K80/?igshid=r2vzn8k8xx10

2 notes

Text

ARK Invest: AI training costs dropped 100-fold between 2017 and 2019

Machine learning systems are cheaper to train now than ever before. That’s the assertion of ARK Invest, which today published a meta-analysis indicating the cost of training is improving at 50 times the pace of Moore’s law, the principle that computer hardware performance doubles every two years.

In its report, ARK found that while computing devoted to training doubled in alignment with Moore’s law from 1960 to 2010, training compute complexity — the amount of petaflops (quadrillions of operations per second) per day — increased by 10 times yearly since 2010. Coinciding with this, training costs over the past three years declined by 10 times yearly; in 2017, the cost to train an image classifier like ResNet-50 on a public cloud was around $1,000, while in 2019, it was around $10.
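The cost figures here are compound decline: a cost that falls 10x every year falls 100-fold over two years, which is how $1,000 in 2017 becomes roughly $10 in 2019. A minimal Python sketch of that arithmetic, using only the numbers quoted in the article (the helper names are illustrative, not from ARK's report):

```python
import math


def cost_after(start_cost, yearly_factor, years):
    """Cost remaining after `years` of compound decline by `yearly_factor` per year."""
    return start_cost / yearly_factor ** years


def years_to_reach(start_cost, target_cost, yearly_factor):
    """Years of compound decline needed to fall from start_cost to target_cost."""
    return math.log(start_cost / target_cost) / math.log(yearly_factor)


# ResNet-50 figures quoted in the article: about $1,000 in 2017, falling 10x per year.
print(cost_after(1000, 10, 2))    # 10.0, i.e. about $10 by 2019
print(years_to_reach(10, 1, 10))  # 1.0, one more year at the same rate to reach $1
```

The second call simply assumes the 10x-per-year rate continues; it says nothing about whether it will.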

That’s surely music to the ears of startups competing with well-financed firms like Google’s DeepMind, which last year recorded losses of $572 million and took on a billion-dollar debt. While some experts believe labs outmatched by tech giants are empowered by their limitations to pursue new research, it’s also true that training is an unavoidable expenditure in AI work — whether within the enterprise, academia, or otherwise.

The findings would appear to agree with (and indeed draw on) those in a recent OpenAI report, which suggested that since 2012, the amount of compute needed to train an AI model to the same performance on classifying images in the ImageNet benchmark has been decreasing by a factor of 2 every 16 months. According to OpenAI, Google’s Transformer architecture surpassed a previous state-of-the-art model, seq2seq (also developed by Google), with 61 times less compute three years after seq2seq’s introduction. And DeepMind’s AlphaZero, a system that taught itself from scratch how to master the games of chess, shogi, and Go, took 8 times less compute to match an improved version of the system’s predecessor, AlphaGo Zero, one year later.
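A halving every 16 months compounds exponentially: after n months, the compute needed for the same ImageNet accuracy is smaller by a factor of 2^(n/16). The sketch below shows only the arithmetic implied by that stated rate; the seven-year figure it prints is a back-of-the-envelope extrapolation, not a number taken from the OpenAI report.

```python
def efficiency_gain(months, halving_period_months=16.0):
    """Factor by which required compute shrinks if it halves every `halving_period_months`."""
    return 2 ** (months / halving_period_months)


print(efficiency_gain(16))  # 2.0
print(efficiency_gain(32))  # 4.0
# 2012 to 2019 (84 months) at the stated rate:
print(round(efficiency_gain(7 * 12), 1))
```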

ARK posits the decline in costs is attributable to breakthroughs both on the hardware and software side. For example, Nvidia’s V100 graphics card, which was released in 2017, is about 1,800% faster than its K80, which launched three years earlier. (Graphics cards are commonly used to train large AI systems.) And between 2018 and 2019, there’s been a roughly 800% improvement in training performance on the V100 thanks to software innovations from MIT, Google, Facebook, Microsoft, IBM, Uber, and others.

ARK predicts that at the current rate of improvement, the cost of training ResNet-50 should fall to $1. And it anticipates that the price of inference — running a trained model in production — will drop alongside this, settling this year at around $0.03 to run a model that can classify a billion images. (Two years ago, it would’ve cost $10,000.)

“Based on the pace of its cost decline, AI is in very early days,” ARK analyst James Wang wrote. “During the first decade of Moore’s Law, transistor count doubled every year — or at twice the rate of change seen during decades thereafter. The 10 times to 100 times cost declines we are witnessing in both AI training and AI inference suggest that AI is nascent in its development, perhaps with decades of slower but sustained growth ahead.”

While the expense of model training generally appears to be on the decline, developing sophisticated machine learning models in the cloud remains prohibitively expensive, it’s worth noting. According to a recent Synced report, the University of Washington’s Grover, which is tailored for both the generation and detection of fake news, cost $25,000 to train over the course of two weeks. OpenAI reportedly racked up a whopping $12 million to train its GPT-3 language model, and Google spent an estimated $6,912 training BERT, a bidirectional transformer model that redefined the state of the art for 11 natural language processing tasks.

The post ARK Invest: AI training costs dropped 100-fold between 2017 and 2019 appeared first on The Bleak Report.

from WordPress https://bleakreport.com/ark-invest-ai-training-costs-dropped-100-fold-between-2017-and-2019/

0 notes

Text

HE IS FINISHED. I wanna make animatics of my mooninites designs if yall got any ideas feel free to lmk!

#aqua teen hunger force#athf#aqua teen#athf fanart#digital art#the mooninites#mooninites#ignignokt and err#human err#human mooninites#human athf#mooninites gijinkas#athf gijinkas#k80 art#spacecataz#Spotify#SoundCloud

54 notes

Text

Leaving the Well

5. After you crawl back to the surface, draw your character in the woods under the moon’s light, in shades of black, gray, and blue, except for the moon and stars themselves.

After getting thrown into the well, K80 was quite glad to get some help from Tanner in getting back out. It's clear Tanner wasn't the one who pushed him in, leaving K80 to wonder what did.

K80 Artlist

#art#k80#paperdemon#arpg#love this other character#i do not remember his name#as he is someone else's character#but is so cool#i had a lot of fun with shading this one#well

2 notes

Text

Not all heroes wear capes. My heroes wear heels and work the pole like a fever, with muscle control and propulsions into a tornado of expression. We salute the polarity of k80 and love having her on many a page of my art journal, the Paper Stage.

1 note

Text

Alright I feel like that's pretty good!

#( ooc ) k80 speaks#.get the art in three pieces; i said#. it'll be pretty; i said#.i am not good at hanging things???#.so if you see unevenneess just dont tell me

2 notes

Photo

"[D] CUDA cores vs. VRAM vs. Clock speed – what's more important for real-time image synthesis using GANs?"- Detail: I'm looking to create an art installation in openFrameworks that runs pix2pix in real-time.Disregarding price, what is more important when selecting a GPU for real-time, high frame rate neural network art installations: clock speed, CUDA cores, VRAM? For instance, will a 24GB Nvidia Tesla K80 with more CUDA cores, but a lower clock speed outperform a 11GB GTX 1080ti with fewer CUDA cores and a higher clock speed for live, real-time image synthesizing?. Caption by flankstank. Posted By: www.eurekaking.com

0 notes

Text

k80’s KALOTS notes

spoilers under the cut cause i took notes on the whole movie:

mordred= dark ambition yes

why the stupid helmet tho

elephants still only count as one

fuck you vortigern

music is so great

bedivere pls dont stroke merlin’s ego

damn uther is badass in this

fuck you vortigern

fuck you vortigern

THE MUSIC!!!!

bless you daniel pemberton

was there a colosseum in roman london?

is arthur part dragon cause he hoards

how the hell did he even survive childhood omg

arthur starts dark ages mafia

angry @ air

tap that booty

water says #bye

gawain as black leg spy or lord’s son?

why with creepy tentacle ladies

“still so handsome” ....i mean.....true

arthur is such a provider

“he touched me” “most people have to pay for that” ??????

he’s so sassy all the time

people calling him art

“for the first time there’s something you don’t know” how many informants does he have?

arthur, so eager to get it over with, rushed toward destiny

the stone is uther’s body which is kinda cool

positioned so he would be kneeling to arthur

THE MUSIC

blacklegs attack, arthur collapses, “well that was easy”

vortigern just “why are you touching me”

vortigern you are such a twat waffle

“your achievements now stand as your prosecution” i actually love that line

what WOULD he have been like if he was raised as a prince?

and here we see the mage taking 0 shit

nazi salute

arthur ready to fight to protect his loved ones but also willing to die

headcanon that the falcon is merlin’s sent along to help the mage

fearless mage

such pretty horses

why would you try to flirt with her

why

mage has almost french accent?

STOP TRYING TO MAKE HER MAD IDIOT

he does look pleasantly surprised to see bill

arthur just got bitch slapped by honey tits

“he has to go to the dark lands” “that isn’t going to happen ---welcome to the dark lands”

“are you scared?”

so glad they did this part as a montage

“You don’t want all of him to survive”

“You’re playing with fire mage” mage: duh

uther doing all he can to protect arthur

arthur knowing his father died trying to protect him

but who was mage king

mage king as leodegrance

bedivere: big silly posh bastard

rubio: master ship cracker

“But doesn’t it make the palace burn well.”

does he stop to think that excalibur is his father’s sword

“Don’t get me wrong, I look away. We all look away. But that is the difference between a man and a king.”

i love how cocky bill is

history between bill & mercia?

“The noose has been tied.”

Mage telling people what to do

his friends are so stubborn

friends dying, mage captured, time to wreck shit

“crush the resistance tonight” THATS NOT HOW A RESISTANCE WORKS

lady of the lake: wtf

her costume is so great

“Trust the mage”

take the girl and go

did vortigern not know she was a mage???

hawk = merlin

snake = mage

Arthur stands calmly as the giant snake attacks = trusts mage???

sword sparks against the floor

fuck you vortigern

symbolically saving his father/claiming the sword

you dont need to look away

now wielding it with one hand

“You wanted to know what gave me such drive. It was you. You put me in that brothel. You got me on the streets. I am here now because of you. You created me. And for that I bless you.”

ROUND TABLE

ARTHUR’S TRANSITION FROM BROTHEL BOY TO KING

SIR TRISTAN!!!!!!!!!

damn he looks good in red

“Why have enemies when you can have friends.”

18 notes

Text

5 Top Models, 1 Awesome Photographer

Photographer Andrew Miller has brought the A team of models to shoot a series of clean, natural images in stages Art 1 and Art 2. The rest is history. The models for the shoot were Blue LoLãn, Katy aka “k80”, Natalie Horton, Haley Permenter and Savvy Taylor. Each girl has built quite an Insta following and has contracts with major model agencies. Needless to mention their beauty;…

#!Art1#!Art2#Andrew Miller#blog#Blue LoLan#DTLA#fashion#FD photo studio#Haley Permenter#k80#Los Angeles#Model#Natalie Horton#Photography Studio#Rental Studio#Savvy Taylor#shot at FD Photo Studio#stage Art 1#stage Art 2#Wrong Ron#wrongron

0 notes