#it doesn’t even look like ai. it’s just digitally rendered


Charbroiled Basilisk

“Run that by me one more time,” Cleo said, rubbing their temples, “You…what?”

“We accidentally made an AI.” Mumbo said sheepishly, “And it says it’s made copies of all of you, besides me and Doc, and is torturing all your copies in the worst ways imaginable. For um. Eternity?”

Cleo stared at the box Mumbo was talking about. It was a rectangular PC case with a monitor perched on top, a monitor that was showing a pair of angry red eyes. The eyes looked between Mumbo, and Doc, and then back to her.

The box, Cleo noted, was plugged into the wall.

“Uh,” Jevin said, tilting his head with a slosh, “So like, far be it from me to tell you guys how to do your jobs. But like, why? Why did you make a machine that did that?”

“We didn’t!” Doc threw his hands up, “We made the AI to help us design things. I just- we wanted a redstone helper.”

“And then it got really smart really quickly.” Mumbo said awkwardly, twiddling his moustache nervously, “It says it’s perfectly benevolent and only wants to help!”

“Uh-huh.” Cleo said, “‘Benevolent’, is it?”

“Well, yeah. It’s been spitting out designs for new farms I couldn’t even imagine.” Mumbo said, pointing at the machine. The evil red eyes faded away, and it suddenly showed an image of a farm of some kind, rotating in place. It was spitting out a constant stream of XP onto a waiting player, who looked very happy.

A nearby printer started to grind and wheeze, Cleo’s eyes following a cable plugged into the box all the way to the emerging paper. Doc fished out the printout, and hummed consideringly.

“Interesting. Never considered a guardian-based approach to one of these…”

“Doc.” Cleo said, “What was that about this thing torturing copies of us for all eternity?”

“Oh, uh, that,” Doc said, “Um. The machine says it’s benevolent and only wants what’s best for us, which is why it’s decided that your copies need to suffer an eternity of torment. For um. Not helping in its creation, and slowing down the time it took for this thing to exist?”

Cleo stared at the box.

“...So, there’s a fragment of me swirling around in there in abject agony?” Cleo mused, and Jevin hissed some gas out of a hole in his slime in exasperation.

“Like, I’m no philosopher,” Jevin said, “But that doesn’t sound particularly ‘benevolent’ to me. Like, my idea of a benevolent helper-guy is…honestly, probably Joe. Helps with no thought of reward and doesn’t, uh, want to send me into the freaking torment nexus? Why would something benevolent want to send us to super-hell? I didn’t do anything wrong!”

“Fair point. I knew you were making this stupid thing, but. This is just dumb.” Cleo groaned.

“Man, I need a drink,” Jevin said, pulling a bottle of motor oil out of his inventory and popping the top. Jevin shoved the bottle into the slime of his other hand and let the viscous yellow fluid pour into his slime, slowly turning green as it met with the blue.

“Yeah, I’ll second that. So…to recap, you two decided to build a thing. The thing declared it was a benevolent helper to playerkind, then immediately decided it was also going to moonlight as the new Satan of our own personal digital Hell? Have I got all that correct?” Cleo sighed, and Mumbo and Doc nodded sheepishly.

“Cool. I mean, not cool, but. Cool.” Jevin sighed.

“Now, hold on,” Cleo said, “because. How do we know your magic evil box is even telling the truth?”

“Uh…because it told us so?” Mumbo offered weakly.

“Yeah, but… Hang on.” Cleo sighed, tapping a message into their comm.

<ZombieCleo> Cub, how much data storage would it take to store and render a single player’s brain or brain equivalent?

<cubfan135> probably like a petabyte or more

<cubfan135> why

<ZombieCleo> don’t ask

<cubfan135> i see

<cubfan135> what did doc do this time?

<ZombieCleo> You don’t want to know.

“So, let’s say it’s a petabyte per player,” Cleo mused, looking up from their comm, “So that’s…twenty-six petabytes to render all of us, minus you two, of course.”

The red eyes were staring at her angrily.

“Did you guys give your evil box twenty-six petabytes of data storage, by chance?”

“Um, no? I don’t think so, anyway…” Mumbo said awkwardly, scratching his head.

“So, odds are, if this thing IS being truthful, then all it’s torturing are a bunch of sock puppet hermits.” Cleo said, gesturing at the computer, “It doesn’t have the data storage, let alone processing power.”

“If that,” Jevin countered, “that thing’s probably got, what, ten terabytes? Optimistically? Dude, it’s probably just sticking pins in a jello cube instead of actually torturing, you know, me.”

“And another thing!” Cleo said, “Even assuming you DID give your stupid box enough data storage for all of us, how the hell did it get our player data to start with?”

“Yeah!” Jevin countered, “It would have had to either get us to submit to a brain scan- which, why would you ever do that if it’s gonna use the scan to torture you? Or like, since I don’t have a brain, find some way to steal our player data. And I feel like Hypno or X or someone would have noticed?”

“Uh…” Doc scratched his head, “I don’t know.”

“You reckon it’s lying, mate?” Mumbo asked, and Doc nodded.

“Probably yeah. So…We can just…ignore it?”

“Oh no,” Cleo said, shaking their head, “We’re not ignoring anything.”

“We’re not?” Mumbo asked.

“Nope!” Cleo said, “We’re not ignoring a damn thing. Because…”

She and Jevin locked eyes.

“-Because if there’s even the SLIGHTEST CHANCE that this thing’s locked me and you in a phone booth together for like, three days, then…well. Then it pays.” Jevin nodded with a slop of slime.

Cleo marched over and grabbed the plug, yanking it out of the wall. The screen momentarily showed a bright red ! and then flashed to a dead black. She picked up the whole unit and walked over to Jevin, who’d punched a one-block hole in the floor and filled it with lava.

Cleo threw the computer inside, and all four hermits watched as it fizzled away to nothing.

“And that,” Cleo said, “is how you roast a basilisk.”

#magnetar writes#Hermitcraft fic#Mumbo Jumbo#Docm77#ZombieCleo#iJevin#Parody#this was written at 1 AM last night so this may be a little ???

243 notes


Glitchcore dialogue prompts

1. "Reality is buffering… What happens when we hit pause?"

Character A stares at the glitching horizon, where the sky flickers between pixelated voids. Character B frowns, “Maybe we’re not meant to see the code behind it all.”

2. "You’re a corrupted file. But that doesn’t mean you’re broken."

Character A experiences moments of disconnection, their speech fragmented by static. Character B tries to reassure them, but each word feels like it’s slipping through the cracks of reality.

3. "Every time I blink, the world skips a frame."

Character A notices the world is out of sync. People flicker, objects disappear, and their reflection isn’t quite right. They turn to Character B for answers, but even their words are distorted, glitching mid-sentence.

4. "I was never programmed to feel this… but here I am, crashing."

Character A, an AI or digitally enhanced human, starts to experience emotions for the first time, leading to a system overload. Their thoughts flash like corrupted code, scrambling their sense of self.

5. "We’re stuck in a loop. But maybe this time, we can break it."

Time is glitching for Character A and Character B, repeating the same moments over and over. As they try to escape, reality fractures, showing distorted fragments of alternate timelines.

6. "If I glitch out, don’t follow. I’m just data—nothing more."

Character A is fading, pixel by pixel, as the virtual world they live in begins to collapse. Character B insists on trying to save them, even though the lines between digital and physical are breaking down.

7. "I hear the static whispers… It’s like they know we’re here."

Character A starts to pick up on strange sounds—static, broken transmissions, and voices from somewhere beyond. They believe the glitches are alive, watching them.

8. "We’re just echoes in the system, flickering between what’s real and what’s not."

Character A questions their existence as the world around them constantly shifts and deforms. The glitches feel too intentional, like someone—or something—is controlling it all.

9. "I saw myself glitch today… but it wasn’t me. It was something pretending to be me."

Character A sees their own reflection glitch and morph into something unfamiliar. Is it an error in the system, or is something trying to overwrite them?

10. "I’ve been patched up so many times, I don’t even know which version I am anymore."

Character A has been modified, both physically and digitally, so many times that they’ve lost their sense of identity. They question whether they’re still the same person they once were, or just a collection of fragments.

"You're not seeing me right now, are you? I'm stuck between frames."

"The code is breaking down. I can feel it. Every time I blink, something new glitches."

"We were perfect once. Now, we're just corrupted data fragments trying to piece ourselves together."

"Reality doesn’t crash. It fades, like static, until the lines blur and you can’t tell what’s real anymore."

"Don't trust what you see. It's all just a simulation rendering too slowly to hide its flaws."

"Every time I move, I leave a part of myself behind, like I’m lagging between timelines."

"I’m not sure if I’m the glitch or if the world around me is. Does it matter?"

"The pixels around your face—they’re unraveling. We need to reset the program before you disappear completely."

"I keep hearing this… echo. It’s like my thoughts are repeating, but they aren’t mine."

"I thought I deleted you. Why do you keep reappearing in my feed?"

"The horizon just flickered. Did you see that? I think we’re reaching the edge of the simulation."

"Every time I think I’ve fixed it, the glitches return, worse than before. Maybe we’re meant to stay broken."

"If I lose connection, you have to promise to reboot me. I can’t afford to stay stuck in here."

"It’s strange, isn’t it? How the glitch makes everything look more real than reality ever did."

"What if I’m just a copy of me, and the original got corrupted long ago?"

"I saw the world tear for a second. The sky turned into data streams, and I think I saw someone behind it all."

"I can’t trust the mirrors anymore. They show me… versions of myself that I don’t recognize."

"They keep trying to patch me, but it never works. I think I’m beyond fixing."

"You keep glitching. Are you real or just an error in the system trying to communicate?"

"I can feel myself desyncing from reality. Every moment, I drift further away."

"I’ve been seeing static in the mirror. Like I’m glitching in and out of existence."

"I can’t tell if I’m in the real world or a simulation. The lines are all blurred now."

"My thoughts are stuttering—like an old video buffering. Can you hear it too?"

"We’ve got less than a second before the whole system crashes. Are you ready?"

"Every time I blink, I lose a part of myself. The screen flickers, and I'm gone."

"There’s a glitch in my memory. Did we meet before, or is this another loop?"

"I’ve been coded wrong, haven’t I? My emotions don’t feel… real."

"I tried to log out, but the world didn’t let me. Now, I’m stuck in the error."

"We’re all just data points now. I can see your code unraveling."

"You’re breaking the system. If you keep doing that, everything might collapse."

"Sometimes I hear a voice, like a distorted signal. It tells me the end is near."

"I reached out to touch you, but my hand just passed through like you were a hologram."

"The colors are bleeding into one another, like corrupted files. Can you fix this?"

"I’m not supposed to exist, not like this. I’m a glitch, an error in the code."

"Reality froze for a moment. Did you see it? Everything just stopped moving."

#glitchcore#glitching#tadc pomni#the amazing digital circus#digital circus#the amazing digital circus pomni#tadc#tadc caine#the amazing digital circus caine#dialogue prompt#writing dialogue#character dialogue#dialouge#creative writing

21 notes


Duty Now For The Future [AI edition]

First the eyes gave it away, then they figured out how to make them the same size and facing the same direction.

Fingers and limbs proved to be the next tells, but now limbs usually look anatomically correct and fingers -- while problematic -- are getting better.

Rooms and vehicles and anything that needs to interact with human beings typically show some detail that's wrong, revealing the image as AI generated.

But again, mistakes are fewer and fewer, smaller and smaller, and more and more pushed to the periphery of the image, thus avoiding glaring error.

Letters and numbers -- especially when called to spell out a word -- provide an easy tell, typically rendered as arcane symbols or complete gibberish, but now AI can spell out short words correctly on images and it's only a matter of time before that merges with generative text AI to provide seamless readable signs and paragraphs.

All this in just a few years. We can practically see AI evolving right before our eyes.

Numerous problems still must be dealt with, but based on the progress already displayed, we are in the ballpark. All of this is a preamble to a look at where AI is heading and what we'll find when we get there. I haven't even touched on AI generated music or text yet, but I will include them going forward.

. . .

The single biggest challenge facing image generating AI is that it still doesn't grasp the concept of on model.

For those not familiar with this animation term, it refers to the old hand drawn model sheets showing cartoon characters in a variety of poses and expressions. Animators relied on model sheets to keep their characters consistent from cartoon to cartoon, scene to scene, even frame to frame in the animation. Violate that reference -- go “off model” as it were -- and the effect could look quite jarring.*

AI still struggles to show the same thing the same way twice. Currently it can come close, but as the saying goes, “Close don't count except in horseshoes, hand grenades, and hydrogen warfare.”

There are some workarounds to this problem, some clever (e.g., isolate the approved character, then copy and paste them into other scenes), some requiring brute force (e.g., make thousands of images based on the same prompt, then select the ones that look closest to one another).

When done carefully enough, AI can produce short narrative videos -- narrative in the sense that they can use narration to appear thematically linked.

Usually, however, they're just an endless flow of images that we, the human audience, link together in our mind. This gives the final product, at least from a human POV, a surreal, dreamlike quality.

In and of themselves, these can be interesting, but they convey no meaning or intent; rather, it's the meaning we the audience ascribe to them.

Years ago when I had my first job in show biz (lot attendant at a drive-in theater), a farmer with property adjoining us raised peacocks as a hobby. The first few times I heard them was an unnerving experience: They sounded like a woman screaming help me.

But once I learned the sounds came from peacocks, I stopped hearing cries for help and only heard birds calling out in a way that sounded similar to a woman in distress.

Currently AI does that with video. This will change with blinding speed once AI learns to stay on model. The dreamlike / nightmarish / hallucinogenic visions we see now will be replaced with video that shows the same characters shot to shot, making it possible to actually tell stories.

How to achieve this?

Well, we already use standard digital modeling for animated films and video games. Contemporary video games show characters not only looking consistent but moving in a realistic manner. Tell the AI to draw only those digital models, and it can generate uniformity. Already in video game design a market exists for plug-in models of humans, animals, mythical beasts, robots, vehicles, spacecraft, buildings, and assorted props. There are further programs to provide skins and textures to these, plus programs to create a wide variety of visual effects and renderings.

Add to this literally thousands of preexistent model sheets and there's no reason AI can't be tweaked to render the same character or setting again and again.

As mentioned, current AI images and video show a dreamlike quality. Much as our minds attempt to weave a myriad of self-generated stimulations into some coherent narrative form when we sleep, resulting in dreams, current AI shows some rather haunting visual images when it hits on something that shares symbolic significance in many minds.

This is why the most effective AI videos touch on the strange and uncanny in some form. Morphing faces and blurring limbs appear far more acceptable in video fantastique than attempts to recreate reality. Like a Rorschach blot, the meaning is supplied by the viewer, not the creator.

This, of course, leads to the philosophical rabbit hole re quantum mechanics and whether objects really exist independent of an observer, but that's an even deeper dive for a different day.

© Buzz Dixon

* (There are times animators deliberately go off model for a given effect, of course, but most of the time they strive for visual continuity.)

6 notes


To Continue, Please Verify You Are A Human

Today’s prompt: a conversation with my BFF about the comments I received on my fanfiction about it being written by an AI. It wasn’t, but I have to confess, that same BFF is my smart friend who helps me climb out of plot holes and link insane ideas together. It may be an oversight of mine that I have never checked to see if she is a robot…

By the time the ambulance arrives, it’s already over. The neighbours come out to gawk as the medics climb the stairs with a stretcher.

“What’s happened?” demands one woman, watching as they don’t even attempt to knock on the door of 11001 of the biggest block of flats in The Circuit. A new housing development which the cynical claim is only paid for by the world’s super wealthy in order to be used as a tax free means of transferring money across Europe.

“Stand back, please,” says the man, not answering her question.

His colleague gives an apologetic smile, but they let themselves in and the door is shut sharply in the faces of the bystanders.

“Poor thing,” the woman who had spoken says, already turning back to her own doorway and the incomplete daily tasks. “I hope it’s nothing serious, she can’t be much more than 20.”

“Nice girl, polite,” agrees Mrs Next-Door-But-One.

“Hot,” leers middle aged Mr Across-The-Road, which the women take as a cue to disappear back through their own doors.

*

Meanwhile, inside the flat, the paramedics are looking at the body on the floor. It’s supine, limbs stiff and bent unnaturally like a dropped doll. Honey brown hair fans out from the face and the eyes are wide and staring like a pair of marbles, increasing the resemblance to an imperfect facsimile.

The man, so gruff outside the door, sighs, closes his eyes and pinches the bridge of his nose. “I hate this bit.” He skirts a spreading, red puddle which seems to be pooling from deep gouges across the body’s thigh. “Why do they have to make it red?”

The woman pulls her ponytail into a higher and more severe bun and shrugs off her paramedic vest, retrieving a pouch of hidden tools from a secret belt pocket. “You know why.”

“Doesn’t seem to make any difference,” he mutters, but retrieves the saw from under the stretcher blanket in case it is needed nonetheless.

The woman reaches out, touching the body’s face, turning it this way and that, then she digs a nail into an indent behind the ear and a whole section of the forehead slides back. Inside is a spaghetti mass of wiring, still slightly smoking and visibly charred.

“Let’s see what we’ve got here then.” She plugs in a hand held downloader, navigating through what remains of the data files.

*

She’s done it hundreds of times. Answered numerous digital requests to identify horses or traffic lights or bridges, reconstructed vague blurry renderings of random numbers and letters, but for some reason this is defeating her. Again.

Still.

It had been funny at first. “Isn’t technology grand,” she’d joked and considers, not for the first time, slinging the whole thing out the window, but she’s just had it repaired (at no small expense) and knowing her track record, her phone isn’t long for this world so best not.

Then it had been frustrating. The lack of customer service number, the inability to reason with a human, just an unfeeling digital screen blankly requesting she prove her humanity and rejecting her.

And then there had been the dreams.

*

“Not much left,” he looks over her shoulder.

She navigates through the fragments which are present. Some bits of personality, error messages, little more. There’s nothing even resembling the Operating System or basic function algorithms.

*

She is human. Of course she is human. She remembered her school, her family, her life. She had gone for lunch with her sister and niece just yesterday.

But still the dreams and the insidious thought - they looked so alike, everyone commented on it, like factory produced dolls on an assembly line, each identical.

I’m always breaking technology, she tries to reassure herself. I’m the only one in the office that can’t use that stupid Odoo Accounting thing. It just crashes when I go near it. But if living through Covid has taught her anything, it is that humans could be dangerous to others, spreading contagion just by going near them.

But I remember things, she insists again in her own mind. I get sick.

Always the other voice: computers get viruses too, and always the mocking request of the white screen before her.

“To continue, please verify that you are a human”

If I was a murderer, she thinks inanely, clicking away at the requested squares with hard jabs, I would be presumed innocent. It would be their job to verify that I was not. Robot cannot be the default. She knows too well online that it can be, social media full of bots and her email full of spam, even her phone chooses that moment to chime with an automated text about her upcoming need to book a dentist appointment.

“Unsuccessful attempt: To continue, please verify that you are a human”

It’s been days.

“I am human!” she whisper-shrieks at the computer. “I’ll prove it, you stupid machine.”

It’s a mark of the stress, of the recursive error loop she’s been trapped in. She grabs her scissors from the table and digs hard into her leg. Immediate red gushes up and out in fast spurts matching her frantically beating heart. “See…blood…” she trails off. “Well, fuck. I didn’t mean to-”

The blood makes her fingers slippery, her fingerprint unreadable. It takes minutes for her phone to respond to her touch, to dial for the help she needs, as she babbles her panic to the woman on the other side of the phone, as she slips into the darkness.

*

“No.” The woman puts away her device and unplugs it. “Not enough to reboot, it needs a full wipe and reinstall, and the casing is ruined anyway.”

He grimaces and hefts the saw. “You want me to-?”

“Yeah, the synthetic flesh is still fresh enough for the lab and once it’s processed the damage won’t be noticeable. May as well recycle.”

“I wonder,” he says, hovering the blade over the body’s shoulder joint, “why this model can’t handle existential crises at all?”

She shrugs, already calling the office. “We need a new Life Image Synthetic Adult for 11001 on The Circuit…yeah…yeah…no, none of the neighbours saw anything, programme her with memory of calling an ambulance for- Oh, oh yeah. Good idea. I forgot we had that one. Yeah, make use of it, even with only one eye. Hmmm...we’ll be back at the office in half an hour or so unless we get another call out. See you later, Sharon. Yeah, definitely. Drinks tonight, I’ll see you at Harry’s. Yeah. Yeah. Around 8. Bye.”

She helps her colleague load the limbs into the cool storage and the torso for deep analysis back at the labs onto the stretcher, where she covers it with a blanket so all the neighbours will see is an ill girl being loaded into an ambulance. She takes her time, positioning the body perfectly, inconspicuously.

“This is easier when we can use a body bag,” he comments.

“Honestly, if you want my opinion, this whole thing is a failure. No point if the Life Image Synthetic Adults freak out about their humanity at the slightest provocation. Decommission the lot of them and spend the money on repairing the environmental damage instead of on versions of humans that don’t contribute to it.” She finishes tucking in the blanket.

He barks out a laugh. “I wouldn’t let The Collaboration hear you say that.”

She rolls her eyes, but doesn’t push her point. The Collaboration does not like dissenters. “Anything else we need?”

“The reinstallation team’ll do the carpet.”

“Let’s just see what she was trying to access.” The woman bends down and her fingers quickly select a Ps5gQ. They wait as the loading wheel turns, morbidly curious to know what had been so important it had been worth dying for.

“Unsuccessful attempt: To continue, please verify that you are a human” blinks up on the screen.

“Coincidence,” he says.

“Must be a server fault,” she adds.

#@beloveddawn-blog#my writing#AI#Because I was cross#Written for/with beloveddawn#tw for suicidal imagery

5 notes


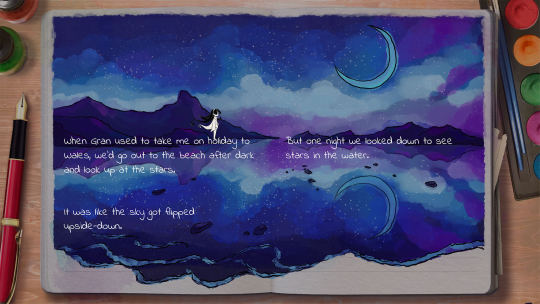


Objective: To have fun playing around with 3D, digital painting and AI image generation, testing the resources needed, the modularity and expediency of the process, and how accurate the results could be.

Idea: I wanted something reminiscent of an old-timey pulp magazine cover, but with a modern look. Ideally, it would look dreamy and foggy.

Assets: Characters modelled on the Genesis 8 base, with morphs and textures made by myself. The background is a mix of custom 3D objects plus store-bought scenery (IIRC Grovebrook Park and Urban Sprawl). On the AI side, I used the models Chillout Mix and NeverEnding Dream. I also used free images here and there.

Process: I started with a 3D render, the kind I usually do. I tried many poses and many lighting options (turns out that doesn't make a difference). Then I started splitting the image into multiple parts by theme (Lois, background, Clark, etc...) and tweaking them with AI, digitally painting over when needed, doing many generations and a lot of repainting and blending. The objective was to take this bunch of different layers and blend them into something somewhat coherent.

Problems: It's definitely more labor intensive than just painting, or using AI, or manipulating 3D objects then rendering, mostly because all three methods hate each other I guess. And I'm not very good. For one, AI hates faces. And eyes. Faces with glasses. And hands. And limbs. And edges of clothes. And objects. And everything. It will try to mix everything, fuse clothing to body, deform limbs, etc. Jon was supposed to be snapping his fingers, but AI really wanted him to hold a glass... or a hallucinated object. Every new layer needed more painting to add cohesion. And it was not very predictable: I wanted, for example, to make Lois look less like a teenaged anime girl, but the model does have biases. After that, making the whole thing not look like a bunch of layers barely blended at the borders was more time intensive than difficult, per se.

I couldn't just chuck the whole image at the AI and hope it would do it for me because I don't have a server room full of GPUs, and I didn't want to compromise on resolution, so I did it by hand. I will pretend the ghosting was an intentional artistic choice. It was my first time doing this, so... yeah, there are things that I didn't know I could do that would have helped in the beginning.

My thoughts: Overall, it's a very resource intensive process, for GPU, time and energy. It's not very modular, or more precisely, not easily modular. I did a test with an older Jon model and just the thought of spending even more hours made me decide it's not worth it. There's an advantage that it's free, but it's also single use, unlike 3D stuff you can make or purchase or just find online. Aesthetically... I don't dislike how Clark or Jon came out, but Lois was not the best. It was fun, if exhausting.

#3d#fanart#dc fanart#superman#superhero#clark kent#jon kent#lois lane#superboy#digital art#civilian clothes

2 notes


Path Tracing with DLSS 3.5: NVIDIA’s Contribution

In order to commemorate the release of NVIDIA DLSS 3.5 in Cyberpunk 2077: Phantom Liberty, Digital Foundry held a roundtable video chat with a number of special guests. Among those in attendance were Bryan Catanzaro, Vice President of Applied Deep Learning Research at NVIDIA, and Jakub Knapik, Vice President of Art and Global Art Director at CD PROJEKT RED.

DLSS 3.5: NVIDIA’s Contribution

When talking about the new technology, NVIDIA’s Bryan Catanzaro argued that DLSS 3.5 is not only more attractive than native rendering but that, in a way, its frames are more “real” when coupled with path tracing than native frames produced with the old rasterized methodology.

To tell you the truth, I believe that DLSS 3.5 is responsible for even further enhancing Cyberpunk 2077’s already stunning visuals. That is how I see things. Again, this is due to the fact that the AI is able to make decisions regarding how to display the picture that are more intelligent than those that we were previously capable of making without the assistance of AI. That’s something that’s going to keep evolving, in my opinion.

When compared to standard visuals, the frames in Cyberpunk 2077 that make use of DLSS (including Frame Generation) appear far more “real.” If you consider all of the graphic trickery, such as occlusions, artificial reflections, and shadows, as well as screen-space effects…Doesn’t everyone agree that raster in general is just a big bag of lies? Now that we have that information, we can toss it out and begin doing path tracing. If we’re lucky, we’ll end up with real shadows and true reflections.

The only way we will be able to accomplish that is by using AI to create a large number of pixels. Without the use of gimmicks, rendering via path tracing would need an excessive amount of computer power. Therefore, we are modifying the kind of methods that we make use of, and I believe that, at the end of the day, we are obtaining a greater number of genuine pixels with DLSS 3.5 than we were previously.

Jakub Knapik, who works for CDPR, agreed with him and referred to rasterization as “a bunch of hacks” placed one on top of the other.

It feels strange to say it, but I am in agreement with you. You bring up a very intriguing point when you ask, “What’s the tradeoff here?” This perspective is quite interesting. On the one hand, you have a rasterizing approach that is a collection of hacks, as Bryan described it earlier. You have a repository of rendering layers, but they are not in any way balanced with one another; instead, you are simply piling one on top of the other to generate frames.

Every single layer is a trick, an approximation of reality; screen space reflections and everything else like that; and you generate pixels in this manner as opposed to having a far more accurate definition of reality using path tracing, where you generate something in the middle (DLSS).

In the past, it was possible to state that you were sacrificing some quality in exchange for performance when you used DLSS. However, with DLSS 3.5, the image is actually and unquestionably better looking than it would be without it.Link Here

The topic of ‘fake frames’ first came up in conjunction with DLSS Frame Generation, because it generates one frame independently of the rendering pipeline and inserts this ‘simulated frame’ after every ‘real frame.’ However, the performance improvement afforded by the generated frames is undeniably significant.
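To make the interleaving idea concrete, here is a minimal Python sketch. It is not how DLSS works internally: the `blend` function is a hypothetical stand-in that simply averages two neighboring frames, and frames are assumed to be plain lists of pixel values. It only illustrates where generated frames land in the output sequence relative to rendered ones.

```python
def blend(frame_a, frame_b):
    """Hypothetical stand-in generator: average two frames pixel by pixel."""
    return [(a + b) / 2 for a, b in zip(frame_a, frame_b)]


def interleave_generated_frames(rendered, generate=blend):
    """Return the rendered frames with one generated frame after each
    rendered frame that has a successor."""
    output = []
    for index, frame in enumerate(rendered):
        output.append(frame)  # the 'real' frame from the rendering pipeline
        if index + 1 < len(rendered):
            # the 'simulated' frame, built without rendering the scene again
            output.append(generate(frame, rendered[index + 1]))
    return output


rendered_frames = [[0.0], [1.0], [2.0]]
displayed_frames = interleave_generated_frames(rendered_frames)
```

With three rendered frames the displayed sequence has five, which is why the frame rate rises even when the CPU cannot feed the renderer any faster.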

This is especially true in CPU-bound games, where the conventional upscaler can only do so much, while the generated frames are able to do significantly more. It’s not a surprise that AMD is going in the same direction with FSR 3, which will shortly make its premiere in games like Forspoken and Immortals of Aveum.

Regarding DLSS 3.5 in particular, the brand-new Ray Reconstruction feature offers significant improvements to the image’s overall quality whenever both upscaling and ray tracing are enabled at the same time. My test for Cyberpunk 2077: Phantom Liberty contains more information on this topic. Visit this site if you want to read the complete review of the game.

0 notes

Text

AI art, and a couple of thoughts

A while back, an artist/author I follow started playing around with digital art assistants. They made it sound interesting enough that I tried Midjourney...and got a little hooked. (Examples below the cut.)

As a person who’s never had the patience or skill to Do Art, I find it overwhelmingly joyful to just...enter some words, and be given art in return? It’s most likely not what I pictured, but that often makes it more fun. I had fun in the beginning putting in abstract concepts, just to see what came back. Two of my favorites were generated by simply putting in “evil hunger” and “icy rage”.

Evil Hunger:

Icy Rage:

At that time, Midjourney was...not good at rendering humans. You see, it works by taking all the art it has access to online, attempting to match keywords to prompts, putting that subset of the art in its little AI blender, and spitting out the result. It doesn’t know, for example, that humans typically have two eyes that look in the same direction. Early experiments gave me a lot of figures facing away from the camera in silhouette, which was good because the clearer ones usually came straight from the Uncanny Valley. I got human figures with one leg, and horses with six.

That was Midjourney version 3. They’ve since come out with a version 4, which is much better at getting the proper number of physical features sorted. It still has a hard time with hands...but that’s a problem for all artists, from what I understand.

As you can read in the Discourse, if you’re so inclined, AI art can be Problematic. Is it stealing? Maybe. It’s a little ludicrous for me to input “icy rage” into a text box and claim the result is “my art.” There are thousands of real artists, living and dead, whose skill the AI is borrowing. That said, can we defend it as creative derivation? Artists (and writers, and most creatives) borrow from each other all the time. We use each other’s work as inspiration and let it drive our own creation. Isn’t that what’s happening here?

The question is, how much of the result is transforming others’ works, and how much is straight up copying? Unfortunately, there’s no way to tell.

For myself, I’ve got two rules. One is, I don’t use artists’ names in my prompts. A lot of people do, but to me, that’s where it crosses the line into plagiarism. The other is...well, I’ll illustrate with something it gave me a while back:

I asked it for a “fire opal talisman” and it gave me this set of four. Look at the one in the lower right corner. It looks like the AI has picked up a signature from whatever art it was using as a reference. That makes me profoundly uncomfortable. So my second rule is, no matter how much I like a result, anything with a ghost signature/watermark is out.

Now, this is a whump blog, so of course I was curious about how it might do for that. The answer is...meh? Even though the current version is better at rendering people, it’s still not great. Many human figures end up looking cartoony. Also, Midjourney has a list of words that cannot be used as prompts, in order to prevent misuse. That’s definitely a good thing, but it means I can’t use “bruised” for example.

I did get a couple of interesting whumpy results, like when I asked it for a film-noir-ish wounded man in a trenchcoat:

That’s not bad. I could use that as story inspo or illustration. It’s just that ultimately, I find that the more specific the result I want, the more difficult it is to wring that image out of the AI.

And one more thing

Since I’m posting this on Tumblr in November 2022, I’m contractually obligated to add this masterpiece I generated five minutes ago:

(IYKYK and all that. The weirdness of the 3 and 4 is an AI artifact, but it amuses me to think that this might be the start of a dream sequence or hallucination where our MC imagines the clock’s numbers moving and changing.)

9 notes

Text

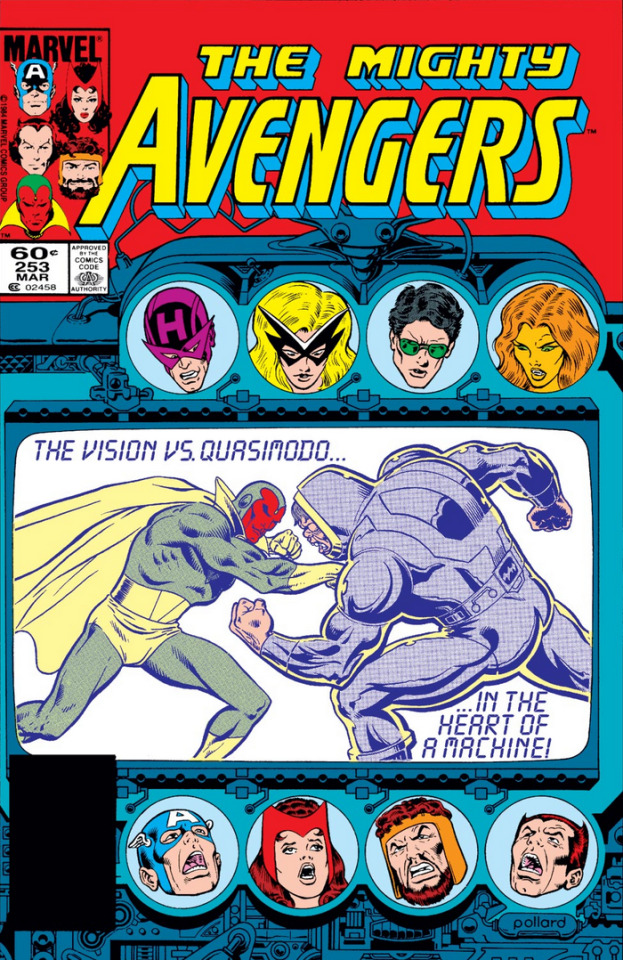

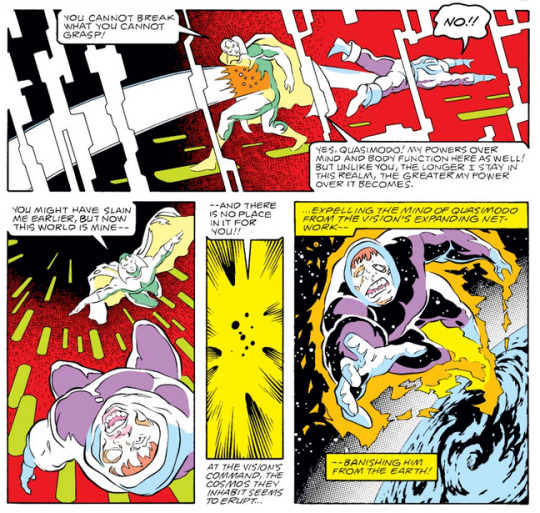

Essential Avengers: Avengers #253: CONQUERING VISION

March, 1985

The Vision vs. Quasimodo... in the heart of a machine!

IT’S A ROBOT RUMBLE

ON THE INTERNET!

The Avengers seem very perturbed. Or maybe they’ve placed bets and are yelling at each other.

Anyway. Anyyyyyywayyyy.

Last time on Avengers: Vision became confined to a tube and was only fixed when Starfox hooked him up to Titan’s supercomputer ISAAC. While it helped Vision fix himself, it also seems to have changed his personality. Vision began conspiring with ISAAC to build a take-over-the-world-for-its-own-good device so he could take over the world for its own good and erase the evils and inequalities of man.

Vision was hesitant to pull the trigger on becoming a well-intentioned extremist and tried to gain power and influence by becoming the Avengers chairman and trying to make them more prominent with a branch team and closer ties to the White House.

But when anti-mutant arsonists burn down Vision and Scarlet Witch’s house during a new wave of anti-mutant fear, Vision decides ‘mmm yup, taking over the world time’. He distracts the Avengers by sending them to babysit the army as they poke Thanos technology that they shouldn’t poke and accidentally summon the Blood Brothers. And distracts Captain Marvel to go check out Thanos’ ship several light hours away past Pluto. Black Knight shows up unexpectedly but Vision shoves him into a tube to keep him out of trouble.

And now I guess Vision is going to fight Quasimodo the robot guy? Not sure how that fits in.

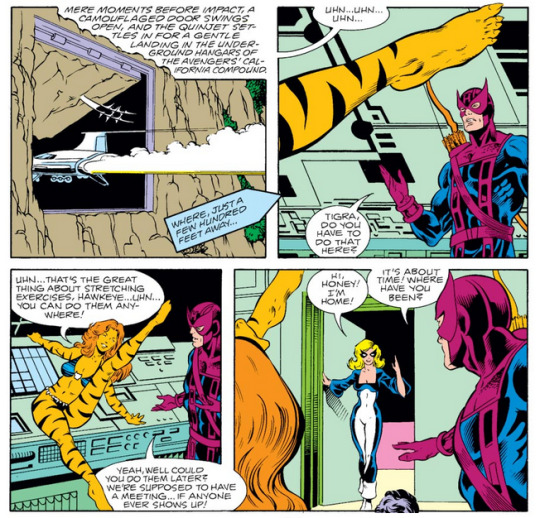

But first, some West Coast Avengers!

Like I said last time, they didn’t stop doing stuff just because their book is over.

Mockingbird happens to run into some drug runners while getting in some flight practice and figures heck why not beat up an entire boat full of gun-toting people as a light workout.

I guess the Quinjet can hover? Doesn’t seem to have thrusters or repulsors on the bottom or be a VTOL but hey, super advanced possibly Wakanda tech. It can do what it likes.

Mockingbird turns the drug runners over to the Coast Guard and returns to Palos Verdes and even gets to fly into one of those cool cliffside hangars disguised as a perfectly normal cliff. The West Coast Avengers revamped the hell out of the compound they bought.

Can you even legally excavate into a cliff like that? You can if you’re a superhero, I guess.

For some reason, there’s a fakeout where it’s implied Tigra is licking herself, cat style, but she’s just stretching. At least I hope the joke is that it sounded like she was cat cleaning herself and not something else.

One can never tell.

Anyway, I assume Hawkeye is just annoyed that he’s going to be vacuuming hair out of expensive equipment banks later. But really it’s that what if he threw a meeting and only he and Tigra came?

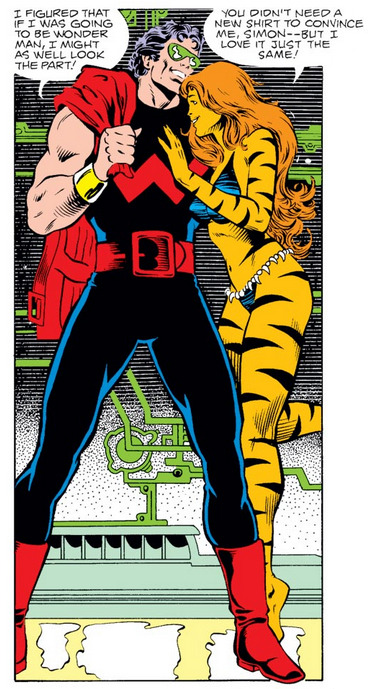

Mockingbird comes in not long after Hawkeye complains, slightly delayed from beating up drug runners. Wonder Man comes in shortly after, delayed by

FASHION

You know, this is a pretty great costume for Wonder Man. It’s what all his modern outfits are based on when he’s not just dicks out energy man. I think I like the red jacket outfit more because being the only guy who dresses in ‘normal’ clothes while still looking somehow out of fashion with normal people fits for Wonder Man.

But I do love this one too. It’s got a simple charm. Deciding that Wonder Man’s colors are black and red instead of Christmas green and red was a great decision and I’m sure that nobody will ever try to put him in red and green again.

Hawkeye grouses “Next, I suppose Iron Man will show up with a new chrome job!” but Iron Man is Sir Not Appearing in This Comic.

And the reason why is... looks like Tony and Rhodey are beating the crap out of each other in Iron Men armor this same month in Iron Man #192.

I don’t know the details but dammit Tony!

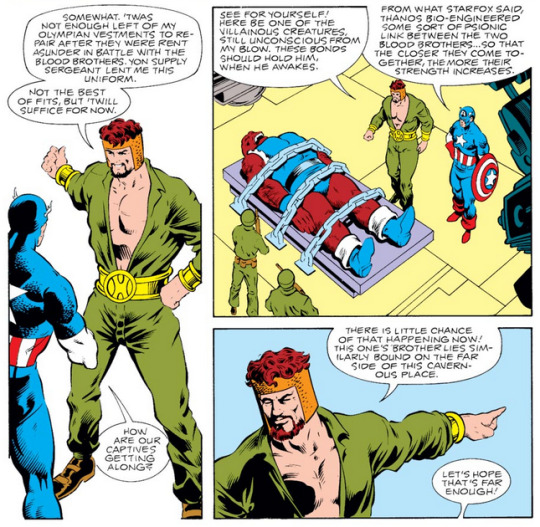

Anyway, over at last issue’s plot, the Avengers are still in Thanos’ ex-secret base in Arizona, still rolling their eyes and smh at the US Army for poking things what should not be poked.

Starfox and Scarlet Witch find a chamber blocked by rubble which has a symbio-nullifier which Starfox proposes to use to symbio-nullify the Blood Brothers.

First, he flexes on the US Army.

Army Guy: “It must weigh tons!”

Starfox: “Tons? Yes. But only about eight-and-a-half! Hardly any bother at all!”

Good flexing, Starfox.

Meanwhile, Captain America’s scolding has borne fruit. The Pentagon has agreed to seal Thanos’ base, pending further investigation. And Colonel Farnam agrees because his training never prepared him to deal with MONSTERS FROM OUTER SPACE.

Also meanwhile, the army took pity on Hercules’ poor pantsless state and slash or were intimidated by it and have lent him a uniform.

He wears it as you’d expect Hercules to wear it.

With plenty of plunging neckline.

Since the Blood Brothers have a psionic link which makes them stronger the closer they are, Hercules has chained them up on very distant parts of the base.

But this precaution is rendered moot pretty quickly when Starfox returns with the symbio-nullifier to symbio-nullify the Blood Brothers.

Starfox suspected that Thanos had one of these lying around as a precaution if he was going to let the Blood Brothers into his base.

Hercules lightly complains that he didn’t get a good fight with the Blood Brothers especially since the hordes of Muspell and Maelstrom’s wacky minions were interesting but not all that much of a challenge for the prince of power.

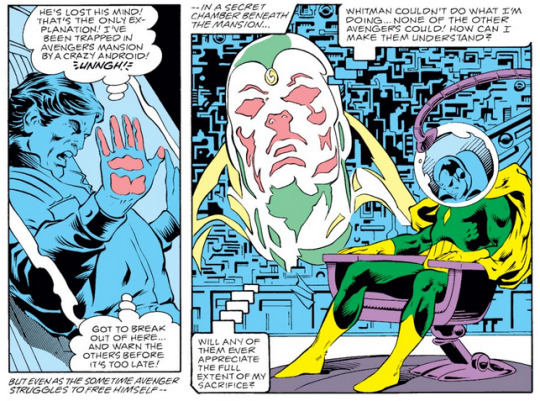

Back at the Avengers Mansion, the giant holographic head of Vision is still dealing with Dane Black Knight Whitman. Mostly by showing him video footage of how the other Avengers are tied up.

Dane is confused for multiple reasons, including that when last he heard Wasp was the leader.

Vision: “My failure to anticipate your arrival was an unfortunate lapse. I regret that, as a result, you must suffer the indignity of incarceration.”

Dane: “But... why?! What does keeping me in a tube accomplish?”

Vision: “It prevents you from interfering! You see, I have come to the conclusion that the only way I can fulfill my duty to make the Earth a safer place... is to run it myself!”

Dane: “What?!? But that’s crazy! Uh... I mean, you can’t possibly...”

Vision: “Exactly the sort of reaction I expected!”

Vision: ‘See, this is why you’re a tube boy now.’

Vision turns off the hologram saying that Dane will understand when it’s all over.

As usual when somebody says something like that, Dane isn’t reassured, just more convinced he needs to break out and warn someone.

I’m not sure if it’s not already too late since Vision is safely ensconced in his take over the world chair in his secret take over the world room.

ISAAC’s head hologram shows up to Vision and asks him what the delay is, chop chop get to taking over the world for its own good.

Vision: “Sorry, ISAAC... I was just remembering how much I enjoyed having a body.”

Oh my god.

ISAAC: “What’s the sense of that? This entire world will soon be your ‘body’! How can the mobility of a single humanoid form compare to that?”

Vision: “I wouldn’t expect you to understand, ISAAC. It’s odd, though, so many times others have controlled my body... the robot Ultron, the Mad Thinker, Necrodamus... All have tried to subvert my mind and take me over. And now here am I... about to initiate the greatest takeover of all. One would almost think there were some mad connection -- !”

ISAAC: “Vision! You must not tarry!”

.................. Um, okay. So, rather than just being influenced by his brush with death and also brush with supercomputer, I think Vision is being actively manipulated into this by ISAAC.

I don’t know why but I do know that Vision continues being a viable character for decades so he probably can’t be burning all his bridges here.

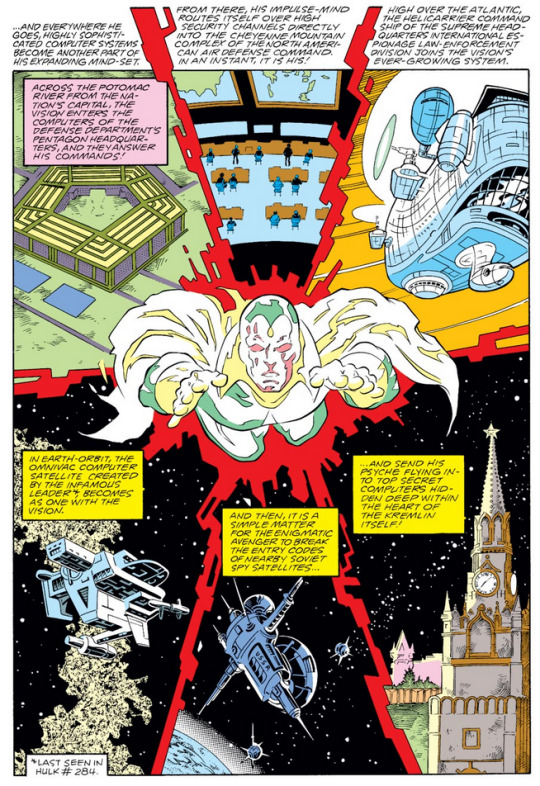

Anyway, Vision uploads his psyche into the internet.

And like immediately starts taking over everything. One page montage immediately. The Pentagon, Cheyenne Mountain, SHIELD, satellites, the Kremlin.

Presumably the best security systems in the world barely warrant a mention for Vision’s mighty synthezoid brain.

He’s pulling a Skynet (for the world’s own good, so he says) and it’s barely an effort.

The scenery of being on the internet is, I dunno, pretty standard? Bright colors and dashes of light? I feel like I’ve seen it a lot of places.

But if we’re on page 13 of a book and Vision is effortlessly Skynetting, what’s the rest of the issue going to be about? Interestingly, to me anyway, despite this being Vision’s turn villainous or well-intentioned extremist, another villain gets shoved in anyway for him to fight.

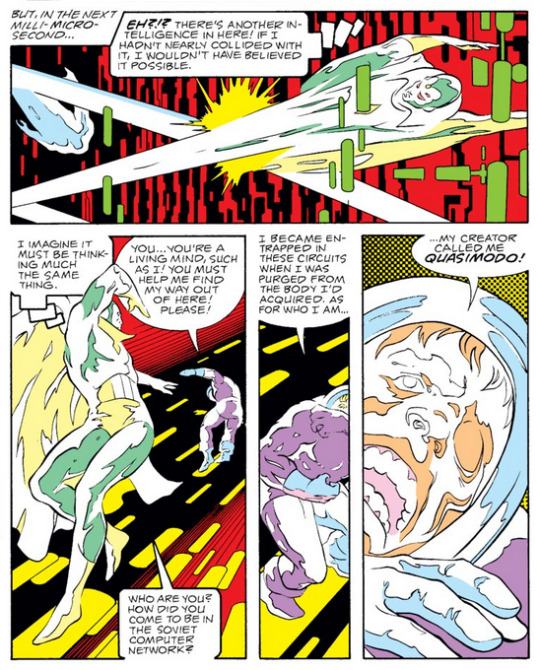

As Vision is nyooming around the Kremlin’s computers, he nearly runs into another AI, Quasimodo.

Helpfully, we get a recap of Quasimodo’s ENTIRE LIFE STORY because this is pre-fan wikis and I don’t think Quasimodo has appeared in Avengers before.

He was created to be the ultimate computer by the Mad Thinker but was abandoned when he developed a mind of his own.

Quasimodo was found by the Silver Surfer who used the cosmic powers of the Power Cosmic to transform Quasimodo from a computer into a robot.

Turning to the wiki for more information: He turns on Silver Surfer because he doesn’t like the body he got, so Surfer turns him into a stone gargoyle. Let that be a lesson about ingratitude.

Somehow, he stopped being a gargoyle and fought various people until he was defeated by the Fantastic Four and the Sphinx and wound up a disembodied intelligence in a Russian computer system. And here we are!

Quasimodo begs Vision to help him escape this digital hellhole but Vision just turns and leaves because he doesn’t have time for these shenanigans. And also because he knows Quasimodo is a villain who tends to turn on the people who help him so fuck that.

Quasimodo: “You know of my past - of my power - and you still would dare deny me?! There can be but one name for such as you... and that is fool!”

He then hauls off and punches Vision. Because they’re both digital intelligences on the internet they can punch each other and have a fight scene. That’s how internet works.

That’s why Mega Man X can beat up so many people in cyberspace.

Quasimodo says if Vision doesn’t help him get back to the physical world, he’ll destroy him.

Vision: “Now, listen to me... I am consolidating all computers worldwide. I gave up my own physical body to do this, and I’ll not tolerate any interference from the likes of you!”

Quasimodo: “You willingly abandoned your body?! You’re not a fool... you’re mad!”

Faced with an irreconcilable set of priorities, Quasimodo trips them both into “the irresistible currents of the IMPULSE VORTEX!”

Sure. That sounds like how internet works.

Meanwhile, over at Pluto is very far away, Monica Marvel nyooms past the moons of Uranus. Apparently her visual acuity is REALLY good because she takes in the scenery while she’s nyooming and finds it frighteningly beautiful out in the outer planets.

Anyway, Vision scolds Quasimodo for plunging them into a torrent. Which makes me laugh. Surely it’s too soon for torrents to be a thing. He’s just using it in a metaphorical sense.

Quasimodo tries to shoot EYE BEAM at Vision, which misses the digital synthezoid but obliterates an electron.

In a cutaway that would be at home in a Marvel movie, the scene briefly shifts to a Soviet computing center and a guy named Alexey complaining that his program just crashed.

Quasimodo does Vision some punches but Vision decides to start trying since Quasimodo’s attacks risk alerting people that something is amiss on the internet. And Vision’s powers work just as well on the internet as Quasimodo’s do. In fact, screw that, they work better! Vision just gets more and more powerful the longer he spends on the internet!

Vision: “You might have slain me earlier, but now this world is mine -- and there is no place in it for you!!”

And at Vision’s command the internet launches Quasimodo from Earth itself.

The internet can do that.

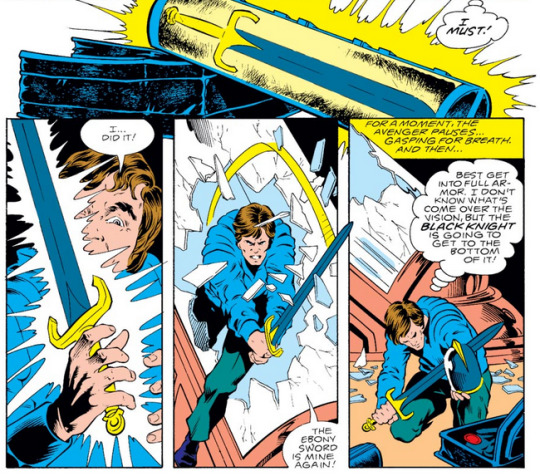

Meanwhile, back at Avengers Mansion, Dane Whitman determines that the tube he’s a tube boy in may look like glass but it’s as strong as steel. He’s not punching his way out of here.

But his recently uncursed cursed sword (the curse never stays not cursed for long so I hope Dane enjoys having a notcursed but very enchanted sword) is just a few feet away with the rest of his luggage. And there’s a mystic bond between himself and the sword so if he just thinks about the sword hard enough, surely it’ll manifest in his hand.

Like the Force but slightly more convenient.

Dane Whitman: Nothing’s happening. Must not... be concentrating hard enough! Maybe the link was broken with the curse. No... no, I mustn’t even think that! I need my sword! I must have my sword! I must!

He do it!

The Notcursed Ebony Sword appears in his hand and he slices through that steel glass like it’s just glass.

Meanwhile, over at Arizona, the Avengers finish up nullifying the Blood Brothers and putting them in suspended animation, or if you prefer, naptime timeout.

Captain America receives a buzz from Hawkeye who wonders what he’s doing within hailing range, ie in the western half of the US.

Captain America: “Arizona... government business... And I’m as surprised to hear you, as you are me! I take it that your team finished its mission in the Pacific early!”

Hawkeye: “Mission? What are you talking about, Cap? We haven’t been on any mission!”

Which is a dun dun dun considering their whole reason for being sent on this mission was that the West Coast Avengers were ostensibly busy.

And Vision lying about that raises a whole lot of questions for the Avengers.

Cap and Wanda Witch rush over to the Quinjet and contact the Mansion.

Vision: “Then you’re aware of my deception. I... am sorry, Cap. I didn’t want to mislead you, but I felt it necessary to carry out my plan.”

Scarlet Witch: “Plan? Vision, what do you mean? What have you done?”

Vision: “I... well, there is no easy way to put this... But I have taken over the world.”

You never want to hear “I have taken over the world” from a friend, unless it’s followed with “and I want to get you in on the ground floor of this exciting new opportunity.”

Vision promises the two that he’s taking over all of Earth’s computers for a really good reason like ending war and strife. And signs off by telling Wanda everything will be alright and that he loves her.

Aww?

Cap: “He meant it... he meant every word.”

Scarlet Witch: “He’d been upset lately, but I never thought... Cap, we have to stop him!”

Cap: “Yes. If there’s still time!”

DUN DUN DUN!

Follow @essential-avengers because I don’t know when I’ve been more excited to get to the next issue! Like and reblog?

#Avengers#Essential Avengers#Quasimodo#the Vision#Captain America#Scarlet Witch#Hercules#Starfox#Captain Marvel#Monica Rambeau#Hawkeye#Mockingbird#Tigra#Wonder Man#with a great new costume#Vision takes over the world#these things happen#from time to time#essential marvel liveblogging

13 notes

Photo

Language supermodel: How GPT-3 is quietly ushering in the A.I. revolution https://ift.tt/3mAgOO1

OpenAI

OpenAI’s GPT-2 text-generating algorithm was once considered too dangerous to release. Then it got released — and the world kept on turning.

In retrospect, the comparatively small GPT-2 language model (a puny 1.5 billion parameters) looks paltry next to its sequel, GPT-3, which boasts a massive 175 billion parameters, was trained on 45 TB of text data, and cost a reported $12 million (at least) to build.

“Our perspective, and our take back then, was to have a staged release, which was like, initially, you release the smaller model and you wait and see what happens,” Sandhini Agarwal, an A.I. policy researcher for OpenAI told Digital Trends. “If things look good, then you release the next size of model. The reason we took that approach is because this is, honestly, [not just uncharted waters for us, but it’s also] uncharted waters for the entire world.”

Jump forward to the present day, nine months after GPT-3’s release last summer, and it’s powering upward of 300 applications while generating a massive 4.5 billion words per day. Seeded with only the first few sentences of a document, it’s able to generate seemingly endless more text in the same style — even including fictitious quotes.

Is it going to destroy the world? Based on past history, almost certainly not. But it is making some game-changing applications of A.I. possible, all while posing some very profound questions along the way.

What is it good for? Absolutely everything

Recently, Francis Jervis, the founder of a startup called Augrented, used GPT-3 to help people struggling with their rent to write letters negotiating rent discounts. “I’d describe the use case here as ‘style transfer,'” Jervis told Digital Trends. “[It takes in] bullet points, which don’t even have to be in perfect English, and [outputs] two to three sentences in formal language.”

Powered by this ultra-powerful language model, Jervis’s tool allows renters to describe their situation and the reason they need a discounted settlement. “Just enter a couple of words about why you lost income, and in a few seconds you’ll get a suggested persuasive, formal paragraph to add to your letter,” the company claims.

This is just the tip of the iceberg. When Aditya Joshi, a machine learning scientist and former Amazon Web Services engineer, first came across GPT-3, he was so blown away by what he saw that he set up a website, www.gpt3examples.com, to keep track of the best ones.

“Shortly after OpenAI announced their API, developers started tweeting impressive demos of applications built using GPT-3,” he told Digital Trends. “They were astonishingly good. I built [my website] to make it easy for the community to find these examples and discover creative ways of using GPT-3 to solve problems in their own domain.”

Fully interactive synthetic personas with GPT-3 and https://t.co/ZPdnEqR0Hn

They know who they are, where they worked, who their boss is, and so much more. This is not your father's bot… pic.twitter.com/kt4AtgYHZL

— Tyler Lastovich (@tylerlastovich) August 18, 2020

Joshi points to several demos that really made an impact on him. One, a layout generator, renders a functional layout by generating JavaScript code from a simple text description. Want a button that says “subscribe” in the shape of a watermelon? Fancy some banner text with a series of buttons the colors of the rainbow? Just explain them in basic text, and Sharif Shameem’s layout generator will write the code for you. Another, a GPT-3 based search engine created by Paras Chopra, can turn any written query into an answer and a URL link for providing more information. Another, by Michael Tefula, does the inverse of Francis Jervis’ tool and translates legal documents into plain English. Yet another, by Raphaël Millière, writes philosophical essays. And one other, by Gwern Branwen, can generate creative fiction.

“I did not expect a single language model to perform so well on such a diverse range of tasks, from language translation and generation to text summarization and entity extraction,” Joshi said. “In one of my own experiments, I used GPT-3 to predict chemical combustion reactions, and it did so surprisingly well.”

More where that came from

The transformative uses of GPT-3 don’t end there, either. Computer scientist Tyler Lastovich has used GPT-3 to create fake people, including backstory, who can then be interacted with via text. Meanwhile, Andrew Mayne has shown that GPT-3 can be used to turn movie titles into emojis. Nick Walton, chief technology officer of Latitude, the studio behind GPT-generated text adventure game AI Dungeon, recently did the same to see if it could turn longer strings of text description into emoji. And Copy.ai, a startup that builds copywriting tools with GPT-3, is tapping the model for all it’s worth, with a monthly recurring revenue of $67,000 as of March and a recent $2.9 million funding round.

“Definitely, there was surprise and a lot of awe in terms of the creativity people have used GPT-3 for,” Sandhini Agarwal, an A.I. policy researcher for OpenAI told Digital Trends. “So many use cases are just so creative, and in domains that even I had not foreseen, it would have much knowledge about. That’s interesting to see. But that being said, GPT-3 — and this whole direction of research that OpenAI pursued — was very much with the hope that this would give us an A.I. model that was more general-purpose. The whole point of a general-purpose A.I. model is [that it would be] one model that could like do all these different A.I. tasks.”

Many of the projects highlight one of the big value-adds of GPT-3: The lack of training it requires. Machine learning has been transformative in all sorts of ways over the past couple of decades. But machine learning requires a large number of training examples to be able to output correct answers. GPT-3, on the other hand, has a “few shot ability” that allows it to be taught to do something with only a small handful of examples.
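As a rough illustration of what “few shot” means in practice, the sketch below assembles a prompt from a handful of worked examples followed by the new input, leaving the final answer blank for the model to complete. The function name, the `Input`/`Output` labels, and the sample sentences are all assumptions made for this example, not anything prescribed by OpenAI, and no API call is made.

```python
def build_few_shot_prompt(examples, query, input_label="Input", output_label="Output"):
    """Join (input, output) example pairs and a final unanswered input
    into a single text prompt."""
    blocks = [
        f"{input_label}: {source}\n{output_label}: {target}"
        for source, target in examples
    ]
    # The last block stops at the output label so the model fills it in.
    blocks.append(f"{input_label}: {query}\n{output_label}:")
    return "\n\n".join(blocks)


examples = [
    ("lost job in march", "I lost my job in March."),
    ("hours cut, cant pay full rent", "My hours were cut, and I cannot pay the full rent."),
]
prompt = build_few_shot_prompt(examples, "car broke down, big repair bill")
```

The two example pairs play the role that thousands of labeled training examples would play in a conventional machine learning setup.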

Plausible bull***t

GPT-3 is highly impressive. But it poses challenges too. Some of these relate to cost: For high-volume services like chatbots, which could benefit from GPT-3’s magic, the tool might be too pricey to use. (A single message could cost 6 cents which, while not exactly bank-breaking, certainly adds up.)
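To see how quickly that adds up, here is a back-of-the-envelope calculation in Python. The 6 cent figure comes from the article; the traffic volume and the 30-day month are purely illustrative assumptions.

```python
COST_PER_MESSAGE_CENTS = 6  # per-message figure quoted in the article


def monthly_cost_usd(messages_per_day, days=30, cents_per_message=COST_PER_MESSAGE_CENTS):
    """Estimate the monthly bill in dollars for a chatbot that sends
    a fixed number of messages every day."""
    total_cents = messages_per_day * days * cents_per_message
    return total_cents / 100


# Hypothetical chatbot handling 10,000 messages a day
estimate = monthly_cost_usd(10_000)
```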

Others relate to its widespread availability, meaning that it’s likely going to be tough to build a startup exclusively around it, since fierce competition will likely drive down margins.

Christina Morillo/Pexels

Another is the lack of memory; its context window runs a little under 2,000 words at a time before, like Guy Pearce’s character in the movie Memento, its memory is reset. “This significantly limits the length of text it can generate, roughly to a short paragraph per request,” Lastovich said. “Practically speaking, this means that it is unable to generate long documents while still remembering what happened at the beginning.”
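One common way to work within a limit like this is to keep only the most recent text that fits. The sketch below does that by whole words, using the article’s rough 2,000-word figure as the default. That is an approximation: the real limit is counted in tokens rather than words, and the function name is made up for this example.

```python
def fit_to_window(text, max_words=2000):
    """Keep only the last `max_words` words of `text`, dropping the oldest."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = text.split()
    return " ".join(words[-max_words:])


# A 2,500-word "document" made of the numbers 0..2499
long_document = " ".join(str(number) for number in range(2500))
visible = fit_to_window(long_document)
```

Everything before the cut is simply gone from the model’s point of view, which is the memory reset the article describes.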

Perhaps the most notable challenge, however, also relates to its biggest strength: Its confabulation abilities. Confabulation is a term frequently used by doctors to describe the way in which some people with memory issues are able to fabricate information that appears initially convincing, but which doesn’t necessarily stand up to scrutiny upon closer inspection. GPT-3’s ability to confabulate is, depending upon the context, a strength and a weakness. For creative projects, it can be great, allowing it to riff on themes without concern for anything as mundane as truth. For other projects, it can be trickier.

Francis Jervis of Augrented refers to GPT-3’s ability to “generate plausible bullshit.” Nick Walton of AI Dungeon said: “GPT-3 is very good at writing creative text that seems like it could have been written by a human … One of its weaknesses, though, is that it can often write like it’s very confident — even if it has no idea what the answer to a question is.”

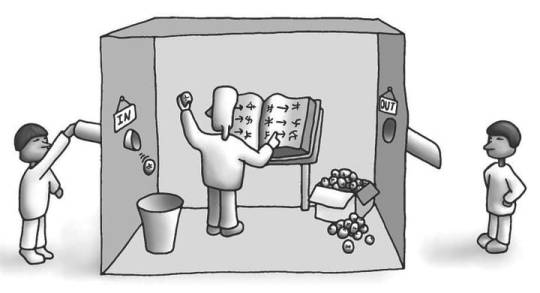

Back in the Chinese Room

In this regard, GPT-3 returns us to the familiar ground of John Searle’s Chinese Room. In 1980, Searle, a philosopher, published one of the best-known A.I. thought experiments, focused on the topic of “understanding.” The Chinese Room asks us to imagine a person locked in a room with a mass of writing in a language that they do not understand. All they recognize are abstract symbols. The room also contains a set of rules that show how one set of symbols corresponds with another. Given a series of questions to answer, the room’s occupant must match question symbols with answer symbols. After repeating this task many times, they become adept at performing it — even though they have no clue what either set of symbols means, merely that one corresponds to the other.

GPT-3 is a world away from the kinds of linguistic A.I. that existed at the time Searle was writing. However, the question of understanding is as thorny as ever.

“This is a very controversial domain of questioning, as I’m sure you’re aware, because there’s so many differing opinions on whether, in general, language models … would ever have [true] understanding,” said OpenAI’s Sandhini Agarwal. “If you ask me about GPT-3 right now, it performs very well sometimes, but not very well at other times. There is this randomness in a way about how meaningful the output might seem to you. Sometimes you might be wowed by the output, and sometimes the output will just be nonsensical. Given that, right now in my opinion … GPT-3 doesn’t appear to have understanding.”

An added twist on the Chinese Room experiment today is that GPT-3 is not programmed at every step by a small team of researchers. It’s a massive model that’s been trained on an enormous dataset consisting of, well, the internet. This means that it can pick up inferences and biases that might be encoded into text found online. You’ve heard the expression that you’re an average of the five people you surround yourself with? Well, GPT-3 was trained on almost unfathomable amounts of text data from multiple sources, including books, Wikipedia, and other articles. From this, it learns to predict the next word in any sequence by scouring its training data to see word combinations used before. This can have unintended consequences.
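A toy version of next-word prediction can be built from nothing more than counts of which word followed which. The sketch below is a bigram counter, a deliberately tiny stand-in that is far simpler than the neural network behind GPT-3; the function names and the sample corpus are invented for illustration. It does show why the training text matters: the prediction can only ever echo what the corpus contained.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words followed it in the text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for previous, following in zip(words, words[1:]):
        counts[previous][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the cat sat on the mat the cat ran"
model = train_bigrams(corpus)
guess = predict_next(model, "the")
```

Swap in a corpus with skewed or biased phrasing and the predictions skew with it, which is the unintended consequence the article goes on to discuss.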

Feeding the stochastic parrots

This challenge with large language models was first highlighted in a groundbreaking paper on the subject of so-called stochastic parrots. A stochastic parrot — a term coined by the authors, who included among their ranks the former co-lead of Google’s ethical A.I. team, Timnit Gebru — refers to a large language model that “haphazardly [stitches] together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning.”

“Having been trained on a big portion of the internet, it’s important to acknowledge that it will carry some of its biases,” Albert Gozzi, another GPT-3 user, told Digital Trends. “I know the OpenAI team is working hard on mitigating this in a few different ways, but I’d expect this to be an issue for [some] time to come.”

OpenAI’s countermeasures to defend against bias include a toxicity filter, which filters out certain language or topics. OpenAI is also working on ways to integrate human feedback in order to be able to specify which areas not to stray into. In addition, the team controls access to the tool so that certain negative uses are not granted access.

“One of the reasons perhaps you haven’t seen like too many of these malicious users is because we do have an intensive review process internally,” Agarwal said. “The way we work is that every time you want to use GPT-3 in a product that would actually be deployed, you have to go through a process where a team — like, a team of humans — actually reviews how you want to use it. … Then, based on making sure that it is not something malicious, you will be granted access.”

Some of this is challenging, however — not least because bias isn’t always a clear-cut case of using certain words. Jervis notes that, at times, his GPT-3 rent messages can “tend towards stereotypical gender [or] class assumptions.” Left unattended, it might assume the subject’s gender identity on a rent letter, based on their family role or job. This may not be the most grievous example of A.I. bias, but it highlights what happens when large amounts of data are ingested and then probabilistically reassembled in a language model.

“Bias and the potential for explicit returns absolutely exist and require effort from developers to avoid,” Tyler Lastovich said. “OpenAI does flag potentially toxic results, but ultimately it does add a liability customers have to think hard about before putting the model into production. A specifically difficult edge case to develop around is the model’s propensity to lie — as it has no concept of true or false information.”

Language models and the future of A.I.

Nine months after its debut, GPT-3 is certainly living up to its billing as a game changer. What once was purely potential has shown itself to be potential realized. The number of intriguing use cases for GPT-3 highlights how a text-generating A.I. is a whole lot more versatile than that description might suggest.

Not that it’s the new kid on the block these days. Earlier this year, GPT-3 was overtaken as the biggest language model. Google Brain debuted a new language model with some 1.6 trillion parameters, making it nine times the size of OpenAI’s offering. Nor is this likely to be the end of the road for language models. These are extremely powerful tools — with the potential to be transformative to society, potentially for better and for worse.

Challenges certainly exist with these technologies, and they’re ones that companies like OpenAI, independent researchers, and others must continue to address. But taken as a whole, it’s hard to argue that language models are not turning out to be one of the most interesting and important frontiers of artificial intelligence research.

Who would’ve thought text generators could be so profoundly important? Welcome to the future of artificial intelligence.

Text

Seven Types of Game Devs And The Games They Make

The Computer Science Student

The computer science student has to write a game for class in the fourth semester. The game must demonstrate OOP design and programming concepts, and a solid grasp of C++.

This game is written not to be fun to play, but to demonstrate your skill to the professors - or to their poor assistants who have to read the code and grade the accompanying term paper. The core loop of the game is usually quite simple, but there are many loosely connected mechanics in there that don’t really fit. For example, whatever the core gameplay is, there could be birds in the sky doing some kind of AI swarm behaviour, there could be physics-enabled rocks on the floor, there could be a complicated level and unit editor with a custom XML-based format, and all kinds of weird shaders and particle effects.

And with all this tech infrastructure and OOP, there are just two types of enemies. That’s just barely enough to show you understand how inheritance works in C++.

The core gameplay is usually bad. Un-ergonomic controls, unresponsive game feel, flashy yet impractical 3D GUI widgets make it hard to play - but not actually difficult to beat, just unpleasant. The colours are washed-out, and everything moves a bit too slow. There is no overarching design, the moment-to-moment gameplay is not engaging, and the goal feels like an afterthought.

But that’s ok. It is to be expected. The professors are CS professors. They (or rather their assistants) don’t grade the game based on whether the units are balanced, whether the graphics are legible, or whether the game is any fun at all. They grade on understanding and correctly applying what you learned in class, documentation, integration of third-party libraries or given base code, and correct implementation of an algorithm based on a textbook.

The CS student usually writes a tower defense game, a platformer, or a SHMUP. After writing two or three games like this, he usually graduates without ever having gotten better at game design.

The After-Hours Developer

The after-hours developer has a day job doing backend business logic stuff for a B2B company you’ve never heard of.

This kind of game is a labour of love. Screenshots might not look impressive at first glance. There is a lot going on, and the graphics look a bit wonky. But this game is not written to demonstrate mastery of programming techniques and ability to integrate third-party content, tools and libraries. This game was made, and continues to be developed, because it is fun to program and to design.

There is a clear core loop, and it is fun and engaging. The graphics are simple and functional, but some of them are still placeholder art. This game will never be finished, thus there will always be placeholders as long as the code gets ahead of the art. There is no XML or cloud-based savegame in there just because that is the kind of thing that would look impressive in a list of features.

More than features, this game focuses on content and little flourishes. This game has dozens of skills, enemies, weapons, crafting recipes, biomes, and quests. NPCs and enemies interact with each other. There is a day-night cycle and a progression system.

While the CS student game is about showing off as many tech/code features as possible, this kind of programmer game is about showing off content and game design elements and having fun adding all this stuff to the game.

This game will be finished when the dev gets bored with adding new stuff. Only then will he plan to add a beginning and an ending to the game within the next six months, and go over the art to make it look coherent. The six months turn into two years.

The after-hours developer often makes RPGs, metroidvanias, or rogue-like games. These genres have a set of core mechanics (e.g. combat, loot, experience, jumping) and opportunity for a bunch of mechanics built around the core (e.g. pets, crafting, conversation trees, quest-giving NPCs, achievements, shops/trading, inventory management, collecting trinkets, skill trees, or combo attacks).

The First-Time Game Jammer

The first-time game jammer wants to make his first game for an upcoming game jam. He knows many languages, but he does a lot of machine learning with torch7 for his day job, so he has decided to use LÖVE2D or pico-8 to make a simple game.

This guy has no training in digital art, game design, or game feel. But he has a working knowledge of high-school maths, physics, and logic. So he can write his own physics engine, but doesn’t know about animation or cartoon physics. He doesn’t waste time writing a physics engine though. He just puts graphics on the screen. These graphics are abstract and drawn in mspaint. The numbers behind everything are in plain sight. Actions are either triggered by clicking on extradiegetic buttons or by bumping into things.

The resulting game is often not very kinetic or action-oriented. In this case, it often has a modal/stateful UI, or a turn-based economy. If it is action-oriented, it could be a simple platformer based around one core mechanic and not many variations on it. Maybe it’s a novel twist on Pong or Tetris.

The first-time game jammer successfully finished his first game jam by already knowing how to program in Lua, copying a proven game genre and not bothering to learn any new tools during the limited jamming time. Instead, he wrote the code to create every level by hand, in separate .lua files, using GNU EMACS.

The Solo Graphic Designer

The graphic designer has a skill set and approach opposite to those of the two programmers described above. He is about as good at writing code as the programmer is at drawing images in mspaint. The graphic designer knows all about the principles of animation, but has no idea how to code a simple loop to simulate how a tennis ball falls down and bounces off walls or the ground. He used to work in a team with coders, but this time he wants to make his own game based on his own creative vision.
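For the curious, the loop in question is a small one. Here is a minimal sketch in Python, assuming a ball that only moves vertically and a floor at height zero (walls are left out). The function name, the step size, and the bounciness value are all made up for illustration.

```python
def simulate_bounce(y=10.0, vy=0.0, gravity=-9.81, restitution=0.8,
                    dt=0.01, steps=1000):
    """Step a ball falling onto a floor at y = 0; return its height per step.

    All parameter names and default values are illustrative assumptions.
    """
    heights = []
    for _ in range(steps):
        vy += gravity * dt          # gravity changes the velocity
        y += vy * dt                # velocity changes the position
        if y < 0.0:                 # the ball went through the floor
            y = -y                  # put it back above the floor
            vy = -vy * restitution  # reverse direction, lose some speed
        heights.append(y)
    return heights


heights = simulate_bounce()
```

Each bounce comes back a little lower than the last because `restitution` is below 1. Set it to 1.0 and the ball never settles, which is roughly where cartoon physics starts.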

The graphic designer knows all about animation tools, 3D modelling, composition. He has a graphic tablet and he can draw. He knows all about light and shade and gestalt psychology, but he can’t write a shader to save his life.

Naturally, the graphic designer plays to his strengths and uses a game engine with an IDE and a visual level editor, like Unity3D, Construct, or GameMaker.

The graphic designer makes a successful game by doing the opposite of what the coder does, because he does it well. The screenshots look good, and his game gets shared on Twitter. He struggles writing the code to aim a projectile at the cursor in a twin-stick shooter, but we live in a world of Asset Stores and StackOverflow.
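The aiming problem usually comes down to normalising a vector. A rough sketch in Python, assuming 2D positions given as (x, y) tuples; the function name and arguments are hypothetical and not tied to any particular engine.

```python
import math


def aim_velocity(origin, cursor, speed):
    """Return the (vx, vy) of a projectile fired from origin towards cursor.

    origin and cursor are (x, y) tuples; speed is in units per second.
    """
    dx = cursor[0] - origin[0]
    dy = cursor[1] - origin[1]
    distance = math.hypot(dx, dy)
    if distance == 0.0:
        # Cursor sits exactly on the shooter: there is no direction to aim in.
        return (0.0, 0.0)
    # Scale the direction to length 1, then multiply by the desired speed.
    return (speed * dx / distance, speed * dy / distance)


velocity = aim_velocity((0.0, 0.0), (3.0, 4.0), 10.0)
```

Dividing by the distance keeps the projectile speed constant no matter how far away the cursor is, which is the part that tends to go wrong on the first attempt.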

The resulting game is a genre-mixing thingy full of set pieces, cut scenes, and visual-novel-style conversations. The actual gameplay is walking around and finding keys for locks, but it’s cleverly recontextualised with a #deep theme and boy does it look pretty.

The Engine Coder

The engine coder is like the CS student on steroids. He has nothing to prove. He knows his C++. He lives in a shack in Alaska, and pushes code to GitHub over a satellite connection. He also knows his Lua, C#, Python, and Haskell. The engine coder writes a physics engine, particle system, dialogue engine, planning-based mob AI, savegame system, a network layer and GUI widget library.

He has written five simple demos for the engine: A first-person walking simulator, a third-person platformer, a very pretty glowing orb swarm shader thingy, a non-interactive simulation of a flock of sheep grazing and a pack of wolves occasionally coming in to cull the herd with advanced predator AI, and a game where you fly a spaceship through space.

Somebody comments in the forums that it’s hard to even write Pong or Tetris in the engine. The Engine Coder is more concerned with optimising batched rendering and automatically switching LoD in the BSP tree so you can land on planets in space without loading screens.

The Overeager Schoolboy

The schoolboy has an idea for a game. He saves his money to buy Game Maker (or RPG Maker) and tells all his friends about his amazing idea. Then he makes a post about it on tumblr. Then he makes a sideblog about the game and posts there too, tagged #game development.

Unfortunately, the schoolboy is 15, and while he is talented, he doesn’t really know how to program or draw. He’s good at math, and he can draw with a pencil. Unfortunately, he wants to learn digital art, level design, and programming all in one go. He already knows all the characters for his game, and he writes posts about each of them individually, with pencilled concept art and flavourful lore.

Even more unfortunately, our schoolboy is hazy on how big the game is actually going to be, and what core mechanic the game should be based around.

After designing sprite sheets and portraits for ten characters you could add to your party, plus the Big Bad End Boss, he realises that he has no idea how to get there, or how to make the first level. He starts over with another set of tools and engine, but he doesn’t limit his scope.

In an overdramatic post two months later, he apologises to the people who were excited to play the game when it’s done. A week later he deletes the tumblr. He never releases a playable demo. He never gets constructive feedback from game developers.

The Game Designer’s Game Designer

The game designer’s game designer is not exactly a household name, but he has done this for a while. While you have never heard of him, the people who made the games you like have. All your favourite games journalists also have. Through this connection, many concepts have trickled down into the games you play and the way your friends talk to you about games they like.

The game designer’s game designer has been going at this for a while. When he started, there was no way to learn game design, so he probably studied maths, psychology, computer science, industrial design, or music theory.

The games fall outside of genres, and not just in the sense of mixing two genres together. They are sometimes outside of established genres, or they are clearly inside the tradition of RTS, rogue-likes or clicker games, but they feel like something completely new.

The games of the game designer’s game designer are sometimes released for free, out of the blue, and sometimes commissioned for museums and multimedia art festivals. Some of them are about philosophy, but they don’t merely mention philosophical concepts, or use them to prop up a game mechanic (cloning and transporters, anyone?). They explore concepts like “the shortness of life” or “capitalism” or “being one with the world” or “unfriendly AI” through game mechanics.

But they also explore gameplay tropes like “inventory management” or “unidentified magic items” or “unit pathfinding”.

Sometimes bursts of multiple games are released within weeks, after years of radio silence. Should you ever meet the game designer’s game designer, you tell him that you got a lot out of the textbook he wrote, but you feel guilty that you never played one of his games. So you lie and tell him you did.

Text