#ifacialmocap


Text

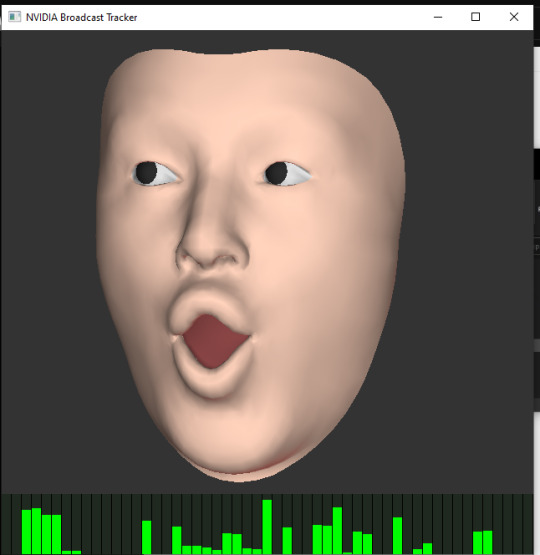

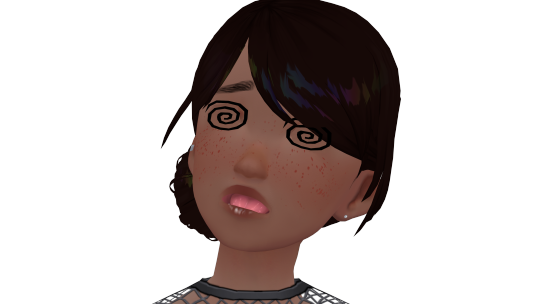

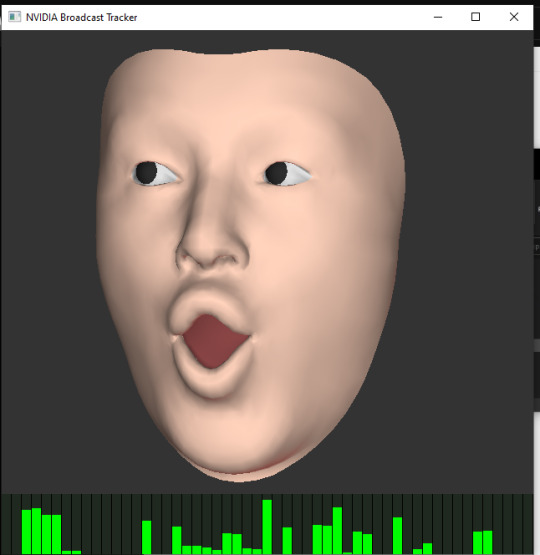

i was trying something new: there's a facial tracker in NVIDIA Broadcast now, and a program that can translate its output into the iFacialMocap protocol

it looks really cool and promising but when i connected it with VSeeFace it was lagging like hell and could only track the general facing direction. no expressions whatsoever. but that might be a me / my model problem

buuut it's cool that an iPhone might not be required in the future for detailed tracking!!!

5 notes


Text

Hand tracking!!

#xabane #Vtuber #3d vtuber #vtube model #vtuber uprising #vtubers #xabanevt #Twitch #Vtuber clips #hand tracking #ifacialmocap

2 notes


Text

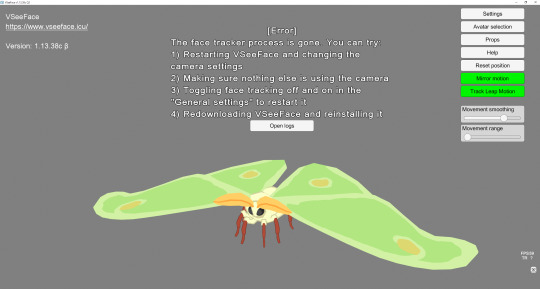

Any vtubers know how to resolve this error in VSeeFace? :<

I've been working on this avatar all week and hadn't had any issues until the end of my day yesterday: I was using VSeeFace all day when this error message popped up out of nowhere, and it hasn't gone away since.

I've followed all the troubleshooting steps outlined in the error message multiple times, tried running the program as an administrator, updated my iFacialMocap app, checked that my iPhone/iFacialMocap work in Warudo, etc., but the error still persists.

It's so frustrating bc I was hoping to finish this project today, but I really can't do any more work on it until this issue is fixed.

I'm scared bc all the threads I can find about this error message on Reddit seem to end with the OP not finding a solution, even months later oaifjd

UPDATE: Solved! It was some combination of changing the camera in my general settings a few times, resetting motion weights in iFacialMocap, updating the app, and restarting my iPhone.

17 notes


Text

COMMISSION FAQ AND NOTICES!

FREQUENTLY ASKED QUESTIONS!

What kind of vtubers do you sell? VROIDS MODIFIED IN BLENDER! It's the cheapest and fastest option!

Price? Thanks for your interest! The price of a full avatar depends a lot on how complex your design is!

What are blendshapes? In short: facial muscle movements that avatars DON'T have on their own; they have to be made in Blender. By default, a VRoid has the vowels A, E, I, O, U and expressions that are triggered MANUALLY. With blendshapes you can control lots of extra facial muscles, so you can express emotions and intentions more effectively using only your face! I also ALWAYS add manual expressions, in case you feel the need to exaggerate the moment.
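As a rough illustration of what a blendshape does under the hood: in the usual linear model (Blender calls these shape keys), each blendshape stores per-vertex offsets from the base mesh, and the tracker supplies a weight from 0 to 1 for each one. The sketch below is a generic, minimal version of that model with a hypothetical two-vertex "mesh"; `jawOpen` and `mouthSmileLeft` are ARKit-style names used only as examples, not anything taken from a specific commission.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(
    base: List[Vec3],
    deltas: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Linear blendshape model: vertex = base + sum(weight * delta).

    `deltas[name][i]` is the offset of vertex i when blendshape `name`
    is fully active (weight 1.0). Shapes with weight 0 are skipped.
    """
    result = [list(v) for v in base]  # copy so the base mesh is untouched
    for name, weight in weights.items():
        if weight == 0.0:
            continue
        for i, (dx, dy, dz) in enumerate(deltas[name]):
            result[i][0] += weight * dx
            result[i][1] += weight * dy
            result[i][2] += weight * dz
    return [tuple(v) for v in result]


# Hypothetical two-vertex example: vertex 0 is on the chin, vertex 1 at a mouth corner.
base_mesh = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
shape_deltas = {
    "jawOpen": [(0.0, -1.0, 0.0), (0.0, 0.0, 0.0)],
    "mouthSmileLeft": [(0.0, 0.0, 0.0), (0.0, 0.5, 0.0)],
}
print(apply_blendshapes(base_mesh, shape_deltas, {"jawOpen": 0.5}))
```

A VRoid's built-in A/E/I/O/U and expression presets fit the same model; adding blendshapes in Blender just gives the tracker more individually weighted shapes to drive.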

What style can I choose? That's up to you! You should have as many references as possible before commissioning!

Examples:

The overall final style of your character

The overall final style of the CLOTHES

General body shape and height

Face shape FROM THE FRONT AND IN PROFILE

Final hair shape FROM THE FRONT, IN PROFILE AND FROM THE BACK

The EXACT colors you want for EVERYTHING

What kind of shading, and whether it has (2D-style) outlines or not.

Can I just buy blendshapes and textures for an existing VRoid?

Of course!

How long does it take you to deliver a commission? Two weeks at most for a full character! It depends a lot on how many changes you want me to make along the way, but I'm usually quick.

Three days at most if it's only work on an existing VRoid.

How many changes can I make? You can start requesting changes once I show you the final texture progress! You're entitled to:

3 changes to the face shape

2 changes to the hair

2 changes to the clothes

Each new change after you've used up your included changes costs an extra $5 USD.

Where can I pay? If you're in Mexico, I accept deposits and bank transfers! If you're anywhere else in the world, PayPal!

Do I have to pay everything up front? No! I start your work once I receive 50% of the final price! When it's time to deliver the final file, you'll need to pay the remaining 50% in full.

Do you give refunds? No. You need to be completely sure about this investment before making the deposit; I start working the same day you pay.

What do you use your vtubers with? Face tracking: an iPhone 12. Tracking app on the phone: iFacialMocap. PC program: VSeeFace.

Can I use my vtuber with Android? Yes, it's possible! Tracking isn't as precise as with an iPhone, but it's definitely functional!

Can I use my vtuber with a webcam? Yes! Programs such as:

Webcam Motion Capture

VUP

read MOST of the blendshapes even with just a webcam. Try out different tracking options at home!

NOTICE:

When you commission, 8l00my has permission to use the character's image and name to add it to a work portfolio, and to make a NON-MONETIZED SHOWCASE of the model on YouTube (see THIS VIDEO for an example). 8L00MY commits to NOT USING YOUR MODEL maliciously and to NOT RESELLING OR SHARING the character file.

7 notes


Text

youtube

Vtuber Cinnameg Chibi Live2d Model

Recorded using VTube Studio, iFacialMocap, and VBridger

Vtuber: Cinnameg

• https://www.twitch.tv/Cinnamegart_

• https://twitter.com/Cinnamegart_

Model Illustration and L2D Rig: Cinnameg_

• https://twitter.com/Cinnamegart_

Art Programs: CSP, Live2D, Davinci Resolve

BGM: Waiting For You composed by Kei Morimoto https://www.youtube.com/watch?v=sHPwO...

2 notes


Video

youtube

I was in charge of Live2D Rigging for Abyzab! Full Body Rigging only - check credits for the wonderful Artist / Video Editor / Composer!

Vtuber is: https://www.twitch.tv/abyzab https://twitter.com/Abyzab_ https://linktr.ee/Abyzab

Video Editor: https://twitter.com/KaeVeo Model Artist: https://twitter.com/KANADE_616 Music Composer: https://twitter.com/BonesNoize

Tracking: VBridger/VTube Studio/iFacialMocap

6 notes


Text

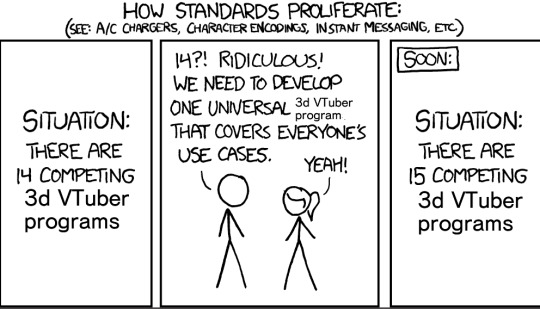

Hey have you tried VNyan? Warudo? Live3D? Luppet? Tracking World? VMagicMirror? Oh you use VSeeFace, so you need to install iFacialMocap or Waidayo or Facemotion3d or etc so you can track your face from your phone, then you need to use a program like VRM Posing Desktop to position your character, but then they'll be stiff so you better use a different program. Also you're going to need a different one if you want TTS, and oh you want throwable objects or chat interaction? Well you better install a 3rd or 4th program, don't forget you need OBS open so you can stream everything, what do you MEAN you want to play a modern game, have you tried turning all settings to low or maybe buying a better computer?

original x

1K notes


Text

and that's our stream! It feels good to be back to my base model! We got some good progress on the ear rigging and physics, and a few other things. Also got a chance to test out iFacialMocap, but I think I need to tune my model a bit better to take advantage of it

2 notes


Text

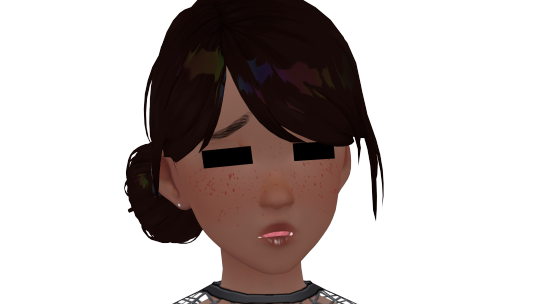

testing a new hair, emotes installed, base created

just need to adjust the ARKit blendshapes, they're a little too heavy, but that's intentional so you can adjust them.

the emotes

the blendshapes

pics taken using VSeeFace and iFacialMocap

4 notes


Text

Vsee for linux

Vsee for linux update

Old versions can be found in the release archive here. If necessary, V4 compatibility can be enabled from VSeeFace's advanced settings.

VSeeFace is a free, highly configurable face and hand tracking VRM and VSFAvatar avatar puppeteering program for virtual youtubers with a focus on robust tracking and high image quality. VSeeFace offers functionality similar to Luppet, 3tene, Wakaru and similar programs. VSeeFace runs on Windows 8 and above (64 bit only).

Face tracking, including eye gaze, blink, eyebrow and mouth tracking, is done through a regular webcam. For the optional hand tracking, a Leap Motion device is required. Perfect sync is supported through iFacialMocap/FaceMotion3D/VTube Studio/MeowFace. VSeeFace can send, receive and combine tracking data using the VMC protocol, which also allows support for tracking through Virtual Motion Capture, Tracking World, Waidayo and more. Capturing with native transparency is supported through OBS's game capture, Spout2 and a virtual camera.

You can see a comparison of the face tracking performance compared to other popular vtuber applications here. In this comparison, VSeeFace is still listed under its former name OpenSeeFaceDemo; the comparison runs four face tracking programs (OpenSeeFaceDemo, Luppet, Wakaru, Hitogata) at once with the same camera input.

Please note that Live2D models are not supported. For those, please check out VTube Studio or PrprLive.

If you have any questions or suggestions, please first check the FAQ. If that doesn't help, feel free to contact me, Emiliana_vt!

To update VSeeFace, just delete the old folder or overwrite it when unpacking the new version. If you use a Leap Motion, update your Leap Motion software to V5.2 or newer! Just make sure to uninstall any older versions of the Leap Motion software first.
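To make "send, receive and combine tracking data using the VMC protocol" a little more concrete: as far as we understand the VMC protocol, it is OSC (Open Sound Control) messages sent over UDP, and blendshape values go out as `/VMC/Ext/Blend/Val` messages (a blendshape name plus a float) followed by a `/VMC/Ext/Blend/Apply` message. The sketch below encodes those OSC messages by hand with only the standard library. Assumptions to check before relying on it: port 39539 is our guess at a common VMC receiver default (use whatever port your receiver's settings show), and the blendshape names depend on your model.

```python
import socket
import struct


def _osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode a minimal OSC message that supports str and float arguments only."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, str):
            tags += "s"
            payload += _osc_pad(arg.encode("utf-8"))
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # OSC floats are big-endian float32
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_pad(address.encode("ascii")) + _osc_pad(tags.encode("ascii")) + payload


def send_blendshapes(weights: dict, host: str = "127.0.0.1", port: int = 39539) -> None:
    """Send one frame of blendshape values, then ask the receiver to apply them.

    The default port is an assumption; match it to your VMC receiver's settings.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for name, value in weights.items():
            sock.sendto(osc_message("/VMC/Ext/Blend/Val", name, float(value)), (host, port))
        sock.sendto(osc_message("/VMC/Ext/Blend/Apply"), (host, port))
```

For example, `send_blendshapes({"Joy": 1.0})` would ask a listening receiver to set a hypothetical `Joy` blendshape to full strength. A real sender would do this every frame, and a production setup would more likely use an existing OSC library than hand-rolled encoding.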

0 notes

Video

youtube

Blender Facial tracking with iFacialMocap (PC+Android)

0 notes

Note

If you don't mind my asking, how do you find working in the vtuber program you use? I have an ancient copy of FaceRig, but when I saw I had to make like over 50 animations for it to blend between, I was like "well I don't have time for that" and never really looked around any further, because I don't stream often At All and it was a passing "it'd be fun to make something like that" curiosity. But man, I'm thinking about it again.

howdy! :D

I use VSeeFace on my PC and iFacialMocap on my iPhone for vtuber tracking and streaming! I really like it, but it's lots and lots of trial and error haha- that's partly because all my avatars are non-humanoid/are literally animals with few to no human features, and vtuber tracking programs are designed to measure the movements of a human face and translate them onto a human vtuber avatar, so I have to make lots of little workarounds. If you're modeling a human/humanoid avatar, I would imagine things are more straightforward

I think as long as you're comfortable with 3D modeling and are ready to be patient, it's definitely worth exploring! I really like using my model for streaming and narration of YouTube videos, Tiktoks, etc. For me it's just a lot of fun! :D It's a bit like being a puppeteer haha

If you're looking to get started making vtubers, I really recommend this series of tutorials by MakoRay on YouTube, it's super informative and goes step-by-step:

youtube

Best of luck! :D

17 notes


Text

The only way we've found to minimize (not prevent) the clipping and unwanted reactions in this case is to manually edit the values of each problematic movement inside Meow Face or, in the most extreme case, to make certain facial movements manually triggerable with the keyboard (like sticking out the tongue or puffing the cheeks).

There isn't much I can do on my end at this point.

For now, I recommend using Apple products to avoid these problems.

Note: A more recent Android phone would probably work better than the one in this example, but I can't guarantee anything.
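The post doesn't say which values Meow Face exposes, so the following is only a generic sketch of what "editing the values of each problematic movement" usually amounts to: per-blendshape remapping of the tracked weight. The parameter names `gain`, `threshold` and `ceiling` are hypothetical, not Meow Face settings. Setting `ceiling` to 0 effectively removes a movement from tracking, which is the same idea as leaving it to a keyboard hotkey instead.

```python
from typing import Dict


def remap_weight(raw: float, gain: float = 1.0, threshold: float = 0.0, ceiling: float = 1.0) -> float:
    """Remap one tracked blendshape weight in the 0..1 range.

    Values at or below `threshold` are treated as noise and zeroed; the rest
    are rescaled so the output still starts at 0, multiplied by `gain`,
    and finally clamped to the range [0, ceiling].
    """
    if not 0.0 <= threshold < 1.0:
        raise ValueError("threshold must be in [0, 1)")
    if raw <= threshold:
        return 0.0
    scaled = (raw - threshold) / (1.0 - threshold) * gain
    return min(max(scaled, 0.0), ceiling)


def remap_frame(frame: Dict[str, float], settings: Dict[str, dict]) -> Dict[str, float]:
    """Apply per-blendshape settings; shapes without settings pass through unchanged."""
    return {name: remap_weight(raw, **settings.get(name, {})) for name, raw in frame.items()}


# Hypothetical tuning: tongueOut is disabled (keyboard-only), cheekPuff is toned down
# and needs a stronger signal before it triggers. Names follow ARKit conventions.
tuning = {
    "tongueOut": {"ceiling": 0.0},
    "cheekPuff": {"gain": 0.5, "threshold": 0.3},
}
print(remap_frame({"tongueOut": 0.7, "cheekPuff": 0.2, "jawOpen": 0.3}, tuning))
```

Zeroing weights below a threshold is a simple way to stop small tracking jitter from triggering a shape, while `gain` covers blendshapes that are "a little too heavy" on a given model.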

3 notes


Text

youtube

Vtuber: ClaricalVT

• https://www.twitch.tv/Claricalvt

• https://twitter.com/Claricalvt

Model Illustration and L2D Rig: Cinnamegart_

• https://twitter.com/cinnamegart_

Art Programs: CSP, Live2D, VTS, VBridger, iFacialMocap, Davinci Resolve

________________________

Cinnameg’s Commission Info:

•Website: https://cinnmegl2d.carrd.co

Cinnameg’s Socials:

•Twitter: https://twitter.com/Cinnamegart_

0 notes

Text

also, here's the video tutorial i was following :3 (it only works if you have an RTX card btw!!!)


5 notes
