#fedu


Text

#fedus#cat6#ethernet cable#lan cable#power cable computer cable#12v adaptor#laptop power cable#cat7 cable#cat8 cable#wifi#routers#speed#fastspeed

2 notes


Text

┊┊❁ཻུ۪۪♡ ͎. 。˚ °

Age;19

Species; Dragon hybrid

Gender; Libramasculine

Sexuality; Demisexual

Pronouns; He/him

Height; 2m

Appearance;

Kayn has shoulder length, fluffy black hair, which he sometimes keeps tied up in a ponytail. His skin is tanned, with coral undertones.

He has a pair of freckles on his face, though they're hard to notice at first glance. What's easy to notice is his stature: he's taller than most, though not particularly beefy either.

His almond-shaped, iris-colored eyes give him a friendlier look, as do the pointy ears on the sides of his head. He has a little smile stamped on his face.

┊┊❁ཻུ۪۪♡ ͎. 。˚ °

Personality;

Bubbly and clumsy, Kayn is generally someone who's vibing with the Friends™, even if that vibe might end up somewhere not so vibey, but hey, at least you'd be with him!!

He's very cheerful, someone you'd like to have around from time to time. A goofball most call him, but a kind one at that.

In short, he's the goodest boy <33 but my god is he stupid

┊┊❁ཻུ۪۪♡ ͎. 。˚ °

Trivia;

He's originally from Southern Italy, Naples to be specific.

Kayn has a boyfriend, and no, that won't be changed, even for RPs or AUs. (Anyways, they're referred to as The Beloveds/Boys and I love them so dearly.)

His birthday is on the same day as the creator's: the 8th of March.

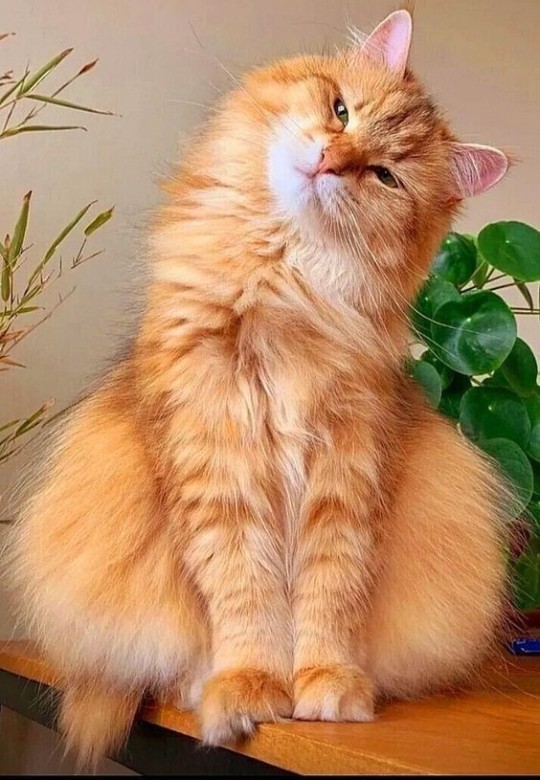

Kayn and Ruri are proud owners of a cat named Fedus. She's a 2-year-old girlie, pictured above.

Kayn absolutely adores giving names to things he has a connection with. For example, if someone close to him were to give him anything, even a small pen, he'd give it a name and take great care of it.

He's a summer enjoyer!!

Kayn's love language is physical touch. With a partner he gets extremely clingy, especially indoors and when they're alone. That doesn't mean friends are left out: he'll still hug them or ruffle their hair if it means staying close to them. He's just affectionate like that, even when that affection is platonic rather than romantic.

If it wasn't clear already, he's kind of a hopeless romantic, and his inability to form coherent English sentences plays into that. <\3

┊┊❁ཻུ۪۪♡ ͎. 。˚ °

Tags;

@a-chaotic-dumbass @spoopy-fish-writes @edensrose @nsk96 @dopesaladlady @audre-falrose @flowergarden1 @eden-dum @anonymousgeekhere

18 notes


Text

Extraction: This Brick

Knowing the tearing-off of heads 1 it doesn't come just like that the tearing-off of heads 2 a gaze that says neither yes nor no let's be fair this kat this brick lay or rondeau of death forces of the universe chant your irrepressible anger fedu from the French fête.

(idem, 2013)

4 notes


Text

▶OXYGEN-FREE COPPER SPEAKER WIRE - The FEDUS Pro Series speaker wires and cables use 16 AWG oxygen-free copper (OFC) conductors for excellent signal transmission. Built with high-strand-count conductors, FEDUS Pro Series OFC speaker wire provides premium sound quality for home theater and car audio systems. It is ideal for outstanding audio performance, with exceptional flexibility, easy stripping, and clear polarity identification.

▶WIDELY APPLICABLE - Fits most banana plugs and can be used for wall speakers, ceiling speakers, conference-room sound systems, green-room installations, car audio, home theater systems, hi-fis, amplifiers, and receivers. Designed for professional performances, FEDUS speaker wire suits both indoor settings (clubs, restaurants, wedding halls, hotels, projectors in meeting rooms) and outdoor ones (pool parties, outdoor TVs, car radios, open-air cinemas).

▶PERFECT FOR CUSTOM INSTALLATIONS - You can be sure of the highest safety standard when you use this FEDUS Pro Series 16 AWG speaker wire for in-wall installation, or pair it with banana plugs, spade tips, or bent-pin connectors to connect your speakers to your A/V receiver or amplifier. The lower the gauge number, the thicker the wire, and thicker wire presents less resistance to current flow.

▶ADVANTAGE OF OFC (OXYGEN-FREE COPPER) - Oxygen-free copper (OFC) vs. copper-clad aluminum (CCA): OFC wires are 165% more conductive than CCA wires and resist oxidation. CCA wires, by contrast, are aluminum wires coated with a thin layer of copper that erodes through oxidation, resulting in distorted sound. FEDUS Pro Series OFC speaker cables provide a lifetime of distortion-free sound.
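The listing's rule of thumb ("the lower the gauge number, the thicker the wire") can be made concrete with the standard AWG diameter formula. This is a rough illustration that models each conductor as a solid round copper wire at about 20 °C. The resistivity constant is a textbook value, not a figure from the listing, and real stranded speaker wire will measure slightly differently.

```python
import math

# Resistivity of copper at about 20 °C in ohm-metres (textbook value; an assumption, not from the listing).
COPPER_RESISTIVITY_OHM_M = 1.68e-8


def awg_diameter_mm(gauge: int) -> float:
    """Bare-conductor diameter for an American Wire Gauge (AWG) number."""
    return 0.127 * 92 ** ((36 - gauge) / 39)


def resistance_ohm_per_m(gauge: int, resistivity: float = COPPER_RESISTIVITY_OHM_M) -> float:
    """DC resistance per metre of one solid round conductor: R = rho / A."""
    radius_m = awg_diameter_mm(gauge) / 2 / 1000
    area_m2 = math.pi * radius_m ** 2
    return resistivity / area_m2


# Lower gauge number -> thicker wire -> lower resistance per metre.
for gauge in (12, 14, 16, 18):
    print(f"{gauge} AWG: {awg_diameter_mm(gauge):.3f} mm, "
          f"{resistance_ohm_per_m(gauge) * 1000:.1f} mOhm/m")
```

In a speaker run, current flows out on one conductor and back on the other. The loop resistance is therefore roughly twice the per-metre figure multiplied by the run length.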

0 notes

Text

USA: Fed officials agree on the need to cut rates, but their reasons differ

ECONOMY – As recently as two and a half months ago, most US central bankers were not forecasting an interest rate cut at the upcoming meeting on September 17–18. By the end of last month, when Federal Reserve Chair Jerome Powell said it was time to start lowering borrowing costs, almost all of his colleagues agreed. In large part, this was because a broad range…

0 notes

Text

Pharaɸh Kh🖖🏽𓈖 Midae Heeru Yu𓆄 𓇽𓀔 𓇴 Lord 𓂀♠️🥷🏼🌈 thee 𓅐𓃀 𓎡 ua senu jemet fedu fendi Pharaɸh Shade 🍎+🖖🏽 spirit of Ameny-𓇴 thee 𓄤𓆑𓂋/hoodfella better senu-pac probably the last one left senu-pac voice they want to use your Seba Simba 𓇽 to go to hell of Duat but don’t want to accurately use 𓋇 to ethical live thru kosheri or khepri

1 note


Text

The Rise of Mixture-of-Experts for Efficient Large Language Models

New Post has been published on https://thedigitalinsider.com/the-rise-of-mixture-of-experts-for-efficient-large-language-models/


In the world of natural language processing (NLP), the pursuit of building larger and more capable language models has been a driving force behind many recent advancements. However, as these models grow in size, the computational requirements for training and inference become increasingly demanding, pushing against the limits of available hardware resources.

Enter Mixture-of-Experts (MoE), a technique that promises to alleviate this computational burden while enabling the training of larger and more powerful language models. In this technical blog post, we’ll delve into the world of MoE, exploring its origins, inner workings, and its applications in transformer-based language models.

The Origins of Mixture-of-Experts

The concept of Mixture-of-Experts (MoE) can be traced back to the early 1990s, when researchers explored the idea of conditional computation, where parts of a neural network are selectively activated based on the input data. One of the pioneering works in this field was the “Adaptive Mixtures of Local Experts” paper by Jacobs et al. (1991), which proposed a supervised learning framework for an ensemble of neural networks, each specializing in a different region of the input space.

The core idea behind MoE is to have multiple “expert” networks, each responsible for processing a subset of the input data. A gating mechanism, typically a neural network itself, determines which expert(s) should process a given input. This approach allows the model to allocate its computational resources more efficiently by activating only the relevant experts for each input, rather than employing the full model capacity for every input.
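Here is a minimal soft mixture in plain Python that illustrates the gating idea, in the spirit of the early work described above. It assumes a linear gating network followed by a softmax, and the function names and toy experts are hypothetical. In this soft form every expert is still evaluated. Saving compute requires making the gate sparse.

```python
import math
from typing import Callable, List

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Vector) -> Vector:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix: List[Vector], x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


def soft_mixture_of_experts(x: Vector, gate_weights: List[Vector], experts: List[Expert]) -> Vector:
    """Soft MoE: a linear gating network weights the outputs of all experts."""
    gate = softmax(matvec(gate_weights, x))      # one probability per expert
    outputs = [expert(x) for expert in experts]  # every expert runs in this dense form
    return [sum(g * out[d] for g, out in zip(gate, outputs)) for d in range(len(outputs[0]))]


def double(v: Vector) -> Vector:
    return [2 * a for a in v]


def negate(v: Vector) -> Vector:
    return [-a for a in v]


# Usage: an all-zero gate gives each of the two toy experts weight 0.5.
print(soft_mixture_of_experts([1.0, 2.0], [[0.0, 0.0], [0.0, 0.0]], [double, negate]))  # [0.5, 1.0]
```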

Over the years, various researchers explored and extended the idea of conditional computation, leading to developments such as hierarchical MoEs, low-rank approximations for conditional computation, and techniques for estimating gradients through stochastic neurons and hard-threshold activation functions.

Mixture-of-Experts in Transformers


While the idea of MoE has been around for decades, its application to transformer-based language models is relatively recent. Transformers, which have become the de facto standard for state-of-the-art language models, are composed of multiple layers, each containing a self-attention mechanism and a feed-forward neural network (FFN).

The key innovation in applying MoE to transformers is to replace the dense FFN layers with sparse MoE layers, each consisting of multiple expert FFNs and a gating mechanism (often called the router). The gating mechanism determines which expert(s) should process each input token, so that each token activates only a subset of the experts.
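Below is a minimal sketch of per-token top-k routing. It does not reproduce any particular library's implementation. The router is an arbitrary function that returns one logit per expert, and the experts are assumed to preserve the input dimension, as an FFN block does. The softmax is taken over the selected logits only, which is how Mixtral's router is described. Other designs apply the softmax over all experts before selecting.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def top_k_route(router_logits: Vector, k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalise their logits."""
    chosen = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in chosen)
    exps = {i: math.exp(router_logits[i] - m) for i in chosen}
    total = sum(exps.values())
    return [(i, exps[i] / total) for i in chosen]


def sparse_moe_layer(tokens: List[Vector], router: Callable[[Vector], Vector],
                     experts: List[Expert], k: int = 2) -> Tuple[List[Vector], List[int]]:
    """Route each token to its top-k experts; unselected experts are never called."""
    outputs: List[Vector] = []
    calls = [0] * len(experts)  # how many tokens each expert actually processed
    for x in tokens:
        y = [0.0] * len(x)
        for idx, weight in top_k_route(router(x), k):
            calls[idx] += 1
            y = [acc + weight * e for acc, e in zip(y, experts[idx](x))]
        outputs.append(y)
    return outputs, calls


# Usage: four toy experts that scale their input; the router always prefers experts 1 and 2.
experts = [lambda v, s=i + 1: [s * a for a in v] for i in range(4)]
outputs, calls = sparse_moe_layer([[1.0], [2.0]], lambda x: [0.0, 5.0, 5.0, -1.0], experts)
print(outputs, calls)  # [[2.5], [5.0]] [0, 2, 2, 0]
```

The `calls` counter shows the point of sparsity: experts 0 and 3 never run, even though their parameters exist.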

One of the early works that demonstrated MoE at scale in language modeling was the “Outrageously Large Neural Networks: The Sparsely-Gated Mixture-of-Experts Layer” paper by Shazeer et al. in 2017. Although this work predates the Transformer, and its MoE layer was applied between stacked LSTM layers, later work such as GShard (Lepikhin et al., 2020) carried the idea into Transformers. The paper introduced a sparsely-gated MoE layer whose gating network combined top-k selection with tunable noise, ensuring that only a subset of experts was activated for each input.
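The noisy top-k gate can be sketched as follows, assuming the formulation in the Shazeer et al. paper. During training, Gaussian noise scaled by a softplus of a second, learned "noise" logit is added to each clean logit. Only the top k entries are kept, and a softmax is taken over them. In this sketch the clean and noise logits are passed in directly, whereas in the paper they come from learned weight matrices. The paper's auxiliary balancing losses are omitted.

```python
import math
import random
from typing import List, Optional


def softplus(z: float) -> float:
    """Numerically stable log(1 + e^z)."""
    return math.log1p(math.exp(-abs(z))) + max(z, 0.0)


def noisy_top_k_gating(clean_logits: List[float], noise_logits: List[float], k: int,
                       rng: Optional[random.Random] = None) -> List[float]:
    """Add scaled Gaussian noise, keep the top k logits, softmax over them,
    and give every other expert a gate of exactly zero."""
    if rng is not None:  # training: perturb each logit by N(0, 1) * softplus(noise logit)
        h = [c + rng.gauss(0.0, 1.0) * softplus(n) for c, n in zip(clean_logits, noise_logits)]
    else:  # inference: no noise
        h = list(clean_logits)
    top = set(sorted(range(len(h)), key=lambda i: h[i], reverse=True)[:k])
    m = max(h[i] for i in top)
    exps = [math.exp(h[i] - m) if i in top else 0.0 for i in range(len(h))]
    total = sum(exps)
    return [e / total for e in exps]


# Usage: without noise, experts 1 and 2 are kept and the rest get zero weight.
print(noisy_top_k_gating([1.0, 3.0, 2.0, 0.0], [0.0] * 4, k=2))
# With noise (training), the selection can vary from call to call.
print(noisy_top_k_gating([1.0, 3.0, 2.0, 0.0], [0.0] * 4, k=2, rng=random.Random(0)))
```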

Since then, several other works have further advanced the application of MoE to transformers, addressing challenges such as training instability, load balancing, and efficient inference. Notable examples include the Switch Transformer (Fedus et al., 2021), ST-MoE (Zoph et al., 2022), and GLaM (Du et al., 2022).

Benefits of Mixture-of-Experts for Language Models

The primary benefit of employing MoE in language models is the ability to scale up the model size while maintaining a relatively constant computational cost during inference. By selectively activating only a subset of experts for each input token, MoE models can achieve the expressive power of much larger dense models while requiring significantly less computation.

For example, consider a language model whose dense FFN has 7 billion parameters. If we replace it with an MoE layer consisting of eight experts, each with 7 billion parameters, the total number of parameters increases to 56 billion. However, if only two experts are activated per token, the per-token compute of that layer is roughly that of a 14-billion-parameter dense FFN, since each token passes through just two 7-billion-parameter experts (plus a small router).
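A few lines of Python can check the arithmetic above and show what changes once some weights are shared by all tokens. The helper names are hypothetical and the model is simplified. Each expert is treated as a single block of parameters summed over all layers, and everything every token uses (attention, embeddings) is lumped into one shared count. Mistral AI reports about 46.7B total and 12.9B active parameters for Mixtral 8x7B, and back-solving from those two figures gives a rough shared/expert split.

```python
from typing import Tuple


def moe_param_counts(expert_params: float, num_experts: int, active_experts: int,
                     shared_params: float = 0.0) -> Tuple[float, float]:
    """Return (total, active-per-token) parameter counts for a simplified MoE model.

    shared_params covers weights every token uses (attention, embeddings, router).
    """
    total = shared_params + num_experts * expert_params
    active = shared_params + active_experts * expert_params
    return total, active


def back_solve(total: float, active: float, num_experts: int,
               active_experts: int) -> Tuple[float, float]:
    """Recover (shared, per-expert) sizes from published total and active counts."""
    expert = (total - active) / (num_experts - active_experts)
    shared = active - active_experts * expert
    return shared, expert


# The example in the text: eight 7B experts, two active per token, nothing shared.
print(moe_param_counts(7e9, 8, 2))  # (56000000000.0, 14000000000.0)

# Mixtral 8x7B figures as published by Mistral AI: ~46.7B total, ~12.9B active per token.
shared, expert = back_solve(46.7e9, 12.9e9, 8, 2)
print(f"shared ~ {shared / 1e9:.1f}B, per-expert ~ {expert / 1e9:.1f}B")
```

Because the shared weights are counted once rather than eight times, the total parameter count falls well short of 8 × 7B.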

This computational efficiency during inference is particularly valuable in deployment scenarios where compute is limited, although every expert's weights must still be stored, so memory remains a constraint (see Memory Requirements below). Additionally, the reduced computational requirements during training can lead to substantial energy savings and a lower carbon footprint, aligning with the growing emphasis on sustainable AI practices.

Challenges and Considerations

While MoE models offer compelling benefits, their adoption and deployment also come with several challenges and considerations:

Training Instability: MoE models are known to be more prone to training instabilities compared to their dense counterparts. This issue arises from the sparse and conditional nature of the expert activations, which can lead to challenges in gradient propagation and convergence. Techniques such as the router z-loss (Zoph et al., 2022) have been proposed to mitigate these instabilities, but further research is still needed.
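Here is a minimal sketch of the router z-loss, assuming the ST-MoE formulation: the squared log-sum-exp of each token's router logits, averaged over tokens and scaled by a small coefficient. The default coefficient (1e-3) is an assumption and should be tuned. Penalising large logits keeps the router's softmax numerically well behaved, which matters in low-precision training.

```python
import math
from typing import List


def logsumexp(row: List[float]) -> float:
    m = max(row)  # shift by the max so exp() cannot overflow
    return m + math.log(sum(math.exp(v - m) for v in row))


def router_z_loss(router_logits: List[List[float]], coeff: float = 1e-3) -> float:
    """coeff * mean over tokens of (log sum_j exp(logit_j)) ** 2."""
    return coeff * sum(logsumexp(row) ** 2 for row in router_logits) / len(router_logits)


# Both batches give the same routing probabilities (softmax ignores a constant shift),
# but the second has much larger logits and is penalised more.
print(router_z_loss([[0.0, 1.0]]), router_z_loss([[10.0, 11.0]]))
```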

Finetuning and Overfitting: MoE models tend to overfit more easily during finetuning, especially when the downstream task has a relatively small dataset. This behavior is attributed to the increased capacity and sparsity of MoE models, which can lead to overspecialization on the training data. Careful regularization and finetuning strategies are required to mitigate this issue.

Memory Requirements: While MoE models can reduce computational costs during inference, they need more memory than dense models with the same per-token compute (that is, the same number of active parameters). This is because all expert weights need to be loaded into memory, even though only a subset is activated for each input. Memory constraints can limit the scalability of MoE models on resource-constrained devices.

Load Balancing: To achieve optimal computational efficiency, it is crucial to balance the load across experts, ensuring that no single expert is overloaded while others remain underutilized. This load balancing is typically achieved through auxiliary losses during training and careful tuning of the capacity factor, which determines the maximum number of tokens that can be assigned to each expert.
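Both load-balancing mechanisms can be sketched briefly. The auxiliary loss follows the Switch Transformer formulation, alpha · N · Σ f_i · P_i. Here f_i is the fraction of tokens whose top-1 choice is expert i, and P_i is the mean router probability for expert i. Expert capacity is (tokens per batch / number of experts) × capacity factor. The default alpha and the rounding-up of capacity are assumptions.

```python
import math
from typing import List


def load_balancing_loss(router_probs: List[List[float]], alpha: float = 1e-2) -> float:
    """Switch-Transformer-style auxiliary loss: alpha * N * sum_i f_i * P_i."""
    num_tokens, num_experts = len(router_probs), len(router_probs[0])
    counts = [0] * num_experts
    for probs in router_probs:  # top-1 assignment of each token
        counts[max(range(num_experts), key=lambda i: probs[i])] += 1
    f = [c / num_tokens for c in counts]  # fraction of tokens dispatched to each expert
    p = [sum(row[i] for row in router_probs) / num_tokens for i in range(num_experts)]
    return alpha * num_experts * sum(fi * pi for fi, pi in zip(f, p))


def expert_capacity(tokens_per_batch: int, num_experts: int, capacity_factor: float) -> int:
    """Maximum tokens an expert accepts per batch (rounded up here)."""
    return math.ceil(tokens_per_batch / num_experts * capacity_factor)


balanced = [[0.6, 0.4], [0.4, 0.6]] * 2  # tokens split evenly across two experts
collapsed = [[0.9, 0.1]] * 4             # every token prefers expert 0
print(load_balancing_loss(balanced, alpha=1.0))   # about 1.0
print(load_balancing_loss(collapsed, alpha=1.0))  # about 1.8
print(expert_capacity(1024, 8, 1.25))             # 160
```

The loss equals alpha when routing is perfectly uniform and grows as routing collapses onto a few experts. In Switch Transformer, tokens that overflow an expert's capacity skip that expert and pass through the residual connection.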

Communication Overhead: In distributed training and inference scenarios, MoE models can introduce additional communication overhead due to the need to exchange activation and gradient information across experts residing on different devices or accelerators. Efficient communication strategies and hardware-aware model design are essential to mitigate this overhead.
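The sketch below makes the communication pattern concrete. It counts how many tokens each device must send to every other device under expert parallelism, where each expert lives on exactly one device. It assumes top-1 routing. With top-2 routing each token is sent twice, the expert outputs make the same trip back, and the backward pass repeats the exchange for gradients. All names are hypothetical.

```python
from typing import List


def all_to_all_send_counts(token_device: List[int], token_expert: List[int],
                           expert_device: List[int], num_devices: int) -> List[List[int]]:
    """counts[src][dst] = number of tokens device src must send to device dst."""
    counts = [[0] * num_devices for _ in range(num_devices)]
    for src, expert in zip(token_device, token_expert):
        counts[src][expert_device[expert]] += 1
    return counts


def cross_device_tokens(counts: List[List[int]]) -> int:
    """Tokens that leave their home device (the diagonal stays local)."""
    return sum(c for src, row in enumerate(counts) for dst, c in enumerate(row) if src != dst)


# Usage: 2 devices; experts 0 and 1 live on device 0, experts 2 and 3 on device 1.
counts = all_to_all_send_counts(token_device=[0, 0, 1, 1], token_expert=[0, 2, 1, 3],
                                expert_device=[0, 0, 1, 1], num_devices=2)
print(counts, cross_device_tokens(counts))  # [[1, 1], [1, 1]] 2
```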

Despite these challenges, the potential benefits of MoE models in enabling larger and more capable language models have spurred significant research efforts to address and mitigate these issues.

Example: Mixtral 8x7B and GLaM

To illustrate the practical application of MoE in language models, let’s consider two notable examples: Mixtral 8x7B and GLaM.

Mixtral 8x7B is a sparse MoE variant of the Mistral language model, developed by Mistral AI. Each of its layers has eight expert FFNs. Because the attention and embedding weights are shared rather than duplicated, the model has about 46.7 billion parameters in total, rather than 8 × 7 = 56 billion. During inference, only two experts are activated per token, so each token uses about 12.9 billion parameters, roughly the computational cost of a 13-billion-parameter dense model.

Mixtral 8x7B has demonstrated impressive performance, outperforming Llama 2 70B on most benchmarks while offering much faster inference. An instruction-tuned version, Mixtral-8x7B-Instruct-v0.1, has also been released, further improving its ability to follow natural language instructions.

Another noteworthy example is GLaM (Generalist Language Model), a large-scale MoE model developed by Google. GLaM employs a decoder-only transformer architecture and was trained on a dataset of 1.6 trillion tokens. The model performs strongly in zero-, one-, and few-shot evaluations, matching or exceeding the quality of GPT-3 while using only about one-third of the energy required to train GPT-3.

GLaM’s success can be attributed to its efficient MoE architecture, which allowed for the training of a model with a vast number of parameters while maintaining reasonable computational requirements. The model also demonstrated the potential of MoE models to be more energy-efficient and environmentally sustainable compared to their dense counterparts.

The Grok-1 Architecture


Grok-1 is a transformer-based MoE model with a unique architecture designed to maximize efficiency and performance. Let’s dive into the key specifications:

Parameters: With 314 billion parameters, Grok-1 is the largest open LLM to date. Thanks to the MoE architecture, however, only about 25% of the weights (approximately 86 billion parameters) are active for any given token, which keeps per-token compute far below that of a dense model of the same size.

Architecture: Grok-1 employs a Mixture-of-8-Experts architecture, with each token being processed by two experts during inference.

Layers: The model consists of 64 transformer layers, each incorporating multihead attention and dense blocks.

Tokenization: Grok-1 utilizes a SentencePiece tokenizer with a vocabulary size of 131,072 tokens.

Embeddings and Positional Encoding: The model uses 6,144-dimensional embeddings and rotary positional embeddings (RoPE). RoPE rotates query and key vectors by position-dependent angles, so that attention scores depend on the relative distance between tokens, instead of adding fixed absolute position vectors to the input.

Attention: Grok-1 uses 48 attention heads for queries and 8 attention heads for keys and values, each with a size of 128.

Context Length: The model can process sequences up to 8,192 tokens in length, utilizing bfloat16 precision for efficient computation.
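The listed specifications fit together arithmetically, and a small configuration object makes that easy to check. The class and its field names are hypothetical; they are not the identifiers used in xAI's code. The key/value-cache estimate assumes bfloat16 values, a single sequence at full context length, and no framework overhead. Having 48 query heads share 8 key/value heads is the pattern usually called grouped-query attention. Each key/value head serves 6 query heads, which shrinks the cache relative to full multi-head attention.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grok1Config:
    """Hypothetical container for the Grok-1 figures listed in the post."""
    num_layers: int = 64
    embed_dim: int = 6144
    num_query_heads: int = 48
    num_kv_heads: int = 8
    head_dim: int = 128
    vocab_size: int = 131_072
    max_seq_len: int = 8192
    num_experts: int = 8
    experts_per_token: int = 2

    @property
    def queries_per_kv_head(self) -> int:
        # Grouped-query attention: each key/value head is shared by this many query heads.
        return self.num_query_heads // self.num_kv_heads

    def kv_cache_bytes(self, seq_len: int, bytes_per_value: int = 2) -> int:
        # Keys and values (factor 2) for every layer, KV head, channel and position.
        return 2 * self.num_layers * self.num_kv_heads * self.head_dim * seq_len * bytes_per_value


cfg = Grok1Config()
# The concatenated query heads span the embedding width: 48 * 128 = 6144.
assert cfg.num_query_heads * cfg.head_dim == cfg.embed_dim
print(cfg.queries_per_kv_head)  # 6
print(cfg.kv_cache_bytes(cfg.max_seq_len) / 2**30, "GiB per sequence")  # 2.0 GiB per sequence
```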

Performance and Implementation Details

Grok-1 has demonstrated strong performance, outperforming Llama 2 70B and Mixtral 8x7B with an MMLU score of 73%.

However, it’s important to note that Grok-1 requires significant GPU resources due to its sheer size. The current implementation in the open-source release focuses on validating the model’s correctness and employs an inefficient MoE layer implementation to avoid the need for custom kernels.

Nonetheless, the model supports activation sharding and 8-bit quantization, which can optimize performance and reduce memory requirements.
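The sketch below gives a rough picture of what 8-bit quantization buys, using a generic symmetric per-tensor absmax scheme. This is not necessarily the scheme used in the Grok-1 release. Storing 314 billion weights takes about 628 GB in bfloat16 (2 bytes each), versus about 314 GB at one byte per weight, before counting the scales.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor absmax quantisation to the int8 range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [max(-127, min(127, round(w / scale))) for w in weights], scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    return num_params * bytes_per_param / 1e9


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
print(q, dequantize_int8(q, scale))  # each restored weight is within scale / 2 of the original
print(weight_memory_gb(314e9, 2), weight_memory_gb(314e9, 1))  # 628.0 314.0
```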

In a remarkable move, xAI has released Grok-1 under the Apache 2.0 license, making its weights and architecture accessible to the global community for use and contributions.

The open-source release includes a JAX example code repository that demonstrates how to load and run the Grok-1 model. Users can download the checkpoint weights using a torrent client or directly through the HuggingFace Hub, facilitating easy access to this groundbreaking model.

The Future of Mixture-of-Experts in Language Models

As the demand for larger and more capable language models continues to grow, the adoption of MoE techniques is expected to gain further momentum. Ongoing research efforts are focused on addressing the remaining challenges, such as improving training stability, mitigating overfitting during finetuning, and optimizing memory and communication requirements.

One promising direction is the exploration of hierarchical MoE architectures, where each expert itself is composed of multiple sub-experts. This approach could potentially enable even greater scalability and computational efficiency while maintaining the expressive power of large models.
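A hierarchical gate can be sketched as two stacked routers: one over groups of experts, then one over the sub-experts inside the chosen group. This is a hypothetical illustration of the idea, not any published architecture's implementation. In the sparse variant only the selected group's sub-router needs to be evaluated. For G groups of E sub-experts, routing then scores G + E logits instead of G × E.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def hierarchical_weights(group_logits: List[float],
                         sub_logits: List[List[float]]) -> List[Tuple[int, int, float]]:
    """Soft two-level gating: weight(g, e) = P(group g) * P(sub-expert e | group g)."""
    return [(g, e, pg * pe)
            for g, pg in enumerate(softmax(group_logits))
            for e, pe in enumerate(softmax(sub_logits[g]))]


def hierarchical_top1(group_logits: List[float],
                      sub_logits: List[List[float]]) -> Tuple[int, int]:
    """Sparse variant: pick the best group, then the best sub-expert inside it.
    In a real model, sub_logits[g] would be computed only for the chosen group."""
    g = max(range(len(group_logits)), key=lambda i: group_logits[i])
    e = max(range(len(sub_logits[g])), key=lambda i: sub_logits[g][i])
    return g, e


group_logits = [0.1, 2.0]
sub_logits = [[5.0, 0.0, 0.0], [0.0, 1.0, 3.0]]
print(hierarchical_top1(group_logits, sub_logits))  # (1, 2)
```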

Additionally, the development of hardware and software systems optimized for MoE models is an active area of research. Specialized accelerators and distributed training frameworks designed to efficiently handle the sparse and conditional computation patterns of MoE models could further enhance their performance and scalability.

Furthermore, the integration of MoE techniques with other advancements in language modeling, such as sparse attention mechanisms, efficient tokenization strategies, and multi-modal representations, could lead to even more powerful and versatile language models capable of tackling a wide range of tasks.

Conclusion

The Mixture-of-Experts technique has emerged as a powerful tool in the quest for larger and more capable language models. By selectively activating experts based on the input data, MoE models offer a promising solution to the computational challenges associated with scaling up dense models. While there are still challenges to overcome, such as training instability, overfitting, and memory requirements, the potential benefits of MoE models in terms of computational efficiency, scalability, and environmental sustainability make them an exciting area of research and development.

As the field of natural language processing continues to push the boundaries of what is possible, the adoption of MoE techniques is likely to play a crucial role in enabling the next generation of language models. By combining MoE with other advancements in model architecture, training techniques, and hardware optimization, we can look forward to even more powerful and versatile language models that can truly understand and communicate with humans in a natural and seamless manner.

#2022#accelerators#ai#anthropic#Apache#applications#approach#architecture#Art#Artificial Intelligence#attention#attention mechanism#Behavior#billion#Blog#Building#carbon#carbon footprint#code#communication#Community#computation#computing#data#deployment#Design#development#Developments#devices#direction

1 note


Text

The first full week of February will be mainly about earnings season

The turn of January and February was dominated by the US Fed meeting and earnings season, with geopolitics moving slightly into the background. As expected, Wednesday's FOMC left interest rates unchanged for the fourth consecutive time (5.25–5.50%). The Fed signaled in several ways that a turn in monetary policy is approaching, though it should come later than markets had expected. The tone of the statement changed; central bankers view positively…


0 notes

Text

2 notes


Text

Revo WF-206BNC

A WiFi attendance machine makes downloading and managing attendance data more practical and real-time, with no network cabling to set up.

Features of Fingerspot fingerprint & face attendance machines that you can get:

Easier installation with a WiFi connection

Employees can view their attendance data on the machine

Up to 2,000 employees, 2,000 faces, 10,000 fingerprints, and 200,000 attendance records

WiFi, TCP/IP, USB flash drive & USB client connectivity

Free desktop attendance and payroll application

Supports Fingerspot.iO online employee attendance, as well as Fedu and Fingerspot BTS online school and university attendance

0 notes

Text

Product Description

FEDUS RCA to 3.5mm Male Audio Auxiliary Cable. This 3.5mm to 2 RCA audio cable is ideal for plugging the 3.5mm plug into the headphone jack of your device or computer and the two RCA plugs into the left and right auxiliary inputs of your sound system. These high-end aux-to-RCA stereo adapter cables offer excellent audio clarity at all lengths and provide a versatile connection between hardware for all your stereo audio needs. While most mobile devices use a 3.5mm stereo headphone output, most home audio systems with an amplifier or receiver use RCA jacks for audio input. This cable is ideal for connecting the output of your MP3 player or phone to your home audio system. A 24K gold-plated connector, pure copper wire core, and three shielding layers ensure high-fidelity sound, reliable performance, and reduced signal loss.

✔️NOTE!!! Please note that our cables carry audio signals only (in either direction); they cannot transmit video. Please verify the compatibility and correct operation of all connected equipment before permanently installing the cable inside a fixture. Red/white color-coded connectors allow quick, easy left-and-right hookups (red is right, white is left).

Incredibly Durable 3.5mm to 2RCA Cable: strong, high-quality PVC, a flexible and durable material, increases tensile strength by 200%, and a special strain-relief design withstands 10,000+ bend tests. 24K Gold-Plated Connectors. Premium Zinc Alloy Case. Oxygen-Free Copper. Durable & Tangle-Free. Driver-Free.

Amazing Original Sound Quality: enjoy trouble-free, perfect sound on your hi-fi stereo system. No software or drivers are required: easily connect smartphones, tablets, MP3 players, and other mobile devices to a speaker, stereo receiver, or other RCA-enabled device with the 3.5mm to 2 male RCA adapter cable. It can be used for digital audio or low-frequency (subwoofer) signals and can meet any application for your home stereo or theater system.

Enjoy High-Quality Music Anywhere and Anytime with this stereo audio cable. 24K gold-plated connectors with 99.99% oxygen-free copper provide maximum conductivity and durability. Triple shielding (Al/Mylar foil plus copper braiding) protects your signal from outside interference. A 10,000+ bend lifespan and a high-quality PVC exterior make these RCA audio cables durable and tangle-free. The conductors are 22 AWG, and each conductor is shielded to keep EMI and RFI interference out of your audio system.

Broad Compatibility. 3.5mm jack: the 3.5mm (1/8") stereo male plug works with all devices equipped with a standard 3.5mm aux-in jack, such as smartphones (iPhone, Huawei, Samsung), tablets, laptops, desktop computers, iPads, iPods, TVs, car MP3 players, etc.

2 RCA jacks: the RCA plugs are compatible with many kinds of stereo equipment: stereo receivers or loudspeakers, VCRs, DVD players, TVs, video cameras, amplifiers, projectors, radios, subwoofers, HDTVs, Dolby Digital receivers, DTS decoders, etc.

Bidirectional Audio Transmission: deliver audio from smartphones, MP3 players, tablets, or laptops (with 3.5mm auxiliary ports) to an RCA-jack speaker, or transfer music from a DVD player (with RCA jacks) or another RCA-enabled device to a 3.5mm speaker; when connecting in this direction, please swap the red and white plugs.

Convenient to Use: the 3.5mm to 2RCA audio auxiliary stereo cable has a beveled step-down connector design that creates a fully plugged-in, secure connection, even with smartphones or other devices held inside a bulky protective case (some exceptions apply). The handy adapter cable has two male RCA connectors on one end and a 3.5mm male connector on the other. The male RCA connectors fit devices with left and right audio inputs, while the 3.5mm male connector works with portable audio devices equipped with a standard 3.5mm auxiliary jack (typically used for headphones or earbuds).

►QUALITY YOU CAN HEAR: a heavy-duty 20 AWG oxygen-free copper (OFC) core and ultra-thick gold-plated pure copper RCA connectors come with aluminum shielding that blocks RF and EM interference, giving you the clearest sound quality available. Double shielding helps eliminate signal loss and static noise, improves durability and signal transmission, and the aluminum shell blocks interference that would degrade sound quality, so you can feel every single note of your music.

►WIDELY COMPATIBLE: this 3.5mm to 2 RCA cable is the simplest way to connect 3.5mm devices such as smartphones, tablets, iPads, laptops, iPods, MP3 players, CD players, car stereos, or PCs to a hi-fi amplifier, Xbox 360, AV receiver, DJ controller, turntable, soundbar, speaker, home cinema system, TV, set-top box, game console, video camera, or any device with L/R jacks. Most importantly, the cable is bidirectional, so it can also deliver audio from 2RCA devices to 3.5mm devices!

►INCREDIBLY DURABLE: this RCA cable withstands 10,000+ bend tests. The zinc alloy case and 24K gold-plated reinforced connectors fit snugly for a stable connection, and the anti-stretch design and high-quality PVC jacket improve durability and corrosion resistance. The tangle-free design makes it easy to use and store. It is a durable, flexible RCA cable built for a longer lifespan, and it fits phones in nearly any type of case.

►EXCELLENT SOUND QUALITY: built with dual shielding, a 24K gold-plated connector, and an oxygen-free pure copper core, it delivers reliable performance and reduces signal loss. No more radio frequency interference (RFI) or electromagnetic interference (EMI): it seamlessly transmits clear, high-quality stereo sound with minimal signal loss, so you can enjoy your music anywhere and anytime.

►USER-FRIENDLY DESIGN: the FEDUS 3.5mm to 2RCA audio Y cord has an ultra-thin connector that connects tightly and securely without removing the protective cover from your smartphone or tablet. The RCA connectors have easy grips for plugging and unplugging while ensuring reliability.

►PLUG AND PLAY: no drivers are required; enjoy high-quality stereo sound by simply connecting two devices with this 2RCA cable. Red/white color-coded connectors allow quick, easy left-and-right hookups, and the 2RCA and 3.5mm connectors have easy grips for plugging and unplugging while ensuring reliability.

►1-YEAR REPLACEMENT WARRANTY: a 1-year manufacturer warranty and product support provide peace of mind; each RCA cord goes through rigorous testing to ensure a secure wired connection. If for any reason you are not satisfied with the item, you will receive a replacement.

0 notes

Photo

Is your room easily accessible to lots of people?😱 So you need extra security?😱 Here's the right tool for you: the professional attendance + access control machine Revo WFA-207NC from Fingerspot.👍 Access to your room can be monitored easily, and you can also use it for attendance. 🎉 What conveniences do you get with the Revo WFA-207NC?🤔 ✅ Easier installation with a WiFi connection. ✅ Employees can view their attendance data on the machine. ✅ Employee PINs up to 22 digits. ✅ Displays employee photos (optional). ✅ Up to 1,000 employees, 1,000 faces, 10,000 fingerprints, 1,000 cards, and 200,000 attendance records. ✅ Buy the attendance machine and get the Fingerspot Personnel desktop application free. ✅ Supports Fingerspot.iO online employee attendance. ✅ Supports Fingerspot BTS, Kitaschool, and FEdu online school and university attendance. ✅ Set who can open the door and when; equipped with a door sensor to detect doors left open. There's another cool feature! By connecting the Revo WFA-207NC to Fingerspot.iO, you can receive notifications on your phone through the FiO app when a door is forced open or left open.😎 Start using it now! More information: WhatsApp: 08116366353📱 Get a 12-month machine warranty & after-sales service from Fingerspot in 14 major cities in Indonesia!🎉

0 notes

Text

Want an attendance machine that's easier, more practical, and can connect to online attendance?😱

That way you can see your employees' attendance on your phone anytime, anywhere, and track where they are. Wow, does such a thing exist⁉️

Here it is: the Revo WF-206BNC attendance machine. A more practical and hygienic attendance machine with face identification from Fingerspot, which you can connect directly to Fingerspot.iO online employee attendance.📱

Wow, right‼️ So what other conveniences can you get?

🔅 Easier installation with a WiFi connection. 🔅 User PINs up to 22 digits. 🔅 Names up to 15 characters long. 🔅 Employees can view their attendance data on the machine. 🔅 Equipped with a backup battery so the machine keeps running during power outages. 🔅 Up to 2,000 employees, 2,000 faces, 10,000 fingerprints, 2,000 cards, and 200,000 attendance records. 🔅 Buy the attendance machine and get the Fingerspot Personnel desktop attendance application, with payroll features, FREE. 🔅 Supports Fingerspot.iO online employee attendance. 🔅 Supports Fingerspot BTS, Kitaschool, and FEdu online school and university attendance.

Practical and easy, right? What are you waiting for? Start using it now!👀

Get a 12-month machine warranty & after-sales service from Fingerspot in 14 major cities in Indonesia!🎉

#Fingerspot #FingerspotBekasi #FingerspotJawaBarat #Fingerprint #MesinAbsensi #HRDMantul #AbsensiOnline #AbsensiGratis #AbsensiPonsel #Absensikaryawan #Absensisiswa #covid19 #freewifi #touchless #hrdindonesia

0 notes

Text

Still not keeping your surroundings hygienic?🤦‍♀️🤦

Don't worry, now's the time to use a hygienic device from Fingerspot.

What could it be?😱 Here it is: the Revo WF-206BNC from Fingerspot, for attendance + opening doors by face👩‍🦰 without touching the machine.

What other conveniences can you get? ✅ Easier installation with a WiFi connection. ✅ User PINs up to 22 digits. ✅ Names up to 15 characters long. ✅ Employees can view their attendance data on the machine. ✅ Equipped with a backup battery so the machine keeps running during power outages. ✅ Up to 2,000 employees, 2,000 faces, 10,000 fingerprints, 2,000 cards, and 200,000 attendance records. ✅ Buy the attendance machine and get the Fingerspot Personnel desktop attendance application, with payroll features, FREE. ✅ Supports Fingerspot.iO online employee attendance. ✅ Supports Fingerspot BTS, Kitaschool, and FEdu online school and university attendance.

What are you waiting for? Make your place more hygienic right now!👀

Get a 12-month machine warranty & after-sales service from Fingerspot in 14 major cities in Indonesia!🎉

#Fingerspot#FingerspotBekasi#FingerspotJawaBarat#Fingerprint#MesinAbsensi#HRDMantul#AbsensiOnline#AbsensiGratis#AbsensiPonsel#Absensikaryawan#Absensisiswa#covid19#freewifi#touchless#hrdindonesia

0 notes

Text

USD moves on "hawkish" NFP and "dovish" ISM: Daily summary

Wall Street indices are trading mostly higher today: the Dow Jones is up 0.1%, the S&P 500 is trading 0.3% higher, and the Nasdaq is adding 0.4%. The small-cap Russell 2000 is down 0.2%. The US dollar had a volatile session today. The USD jumped after the "hawkish" NFP report but later erased all of its gains after the ISM services release. Money-market pricing for a Fed rate cut in March 2024 after…


0 notes
