#fabrex

Explore tagged Tumblr posts

Text

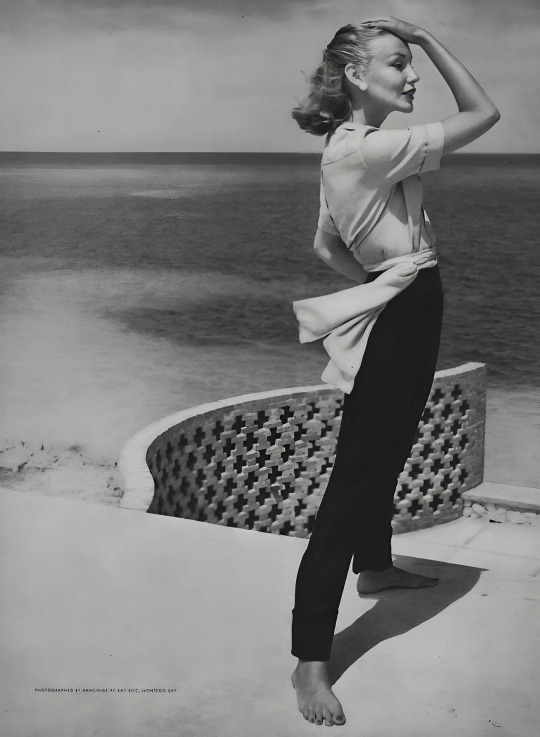

US Vogue May 1, 1953

Liz Pringle wears navy blue Moygashel linen and nylon pants, pale blue Fabrex spun Du Pont rayon shirt with chiffon belt, all by, B. H. Wragge.

Liz Pringle porte un pantalon en lin et nylon Moygashel bleu marine, une chemise en rayonne Du Pont filée Fabrex bleu pâle avec une ceinture en mousseline, l'ensemble par, B. H. Wragge.

Photo John Rawlings vogue archive

#us vogue#may 1953#fashion 50s#spring/summer#printemps/été#b.h.wragge#du pont#fabrex#liz pringle#john rawlings#vintage vogue#vintage fashion#montego bay#bay roc#jamaica

18 notes

·

View notes

Text

AMD Instinct MI210’s 2nd Gen AMD CDNA Architecture Uses AI

GigaIO

GigaIO & AMD: Facilitating increased computational effectiveness, scalability, and quicker AI workload deployments.

It always find it interesting to pick up knowledge from those that recognise the value of teamwork in invention. GigaIO CEO Alan Benjamin is one of those individuals. GigaIO is a workload-defined infrastructure provider for technical computing and artificial intelligence.

GigaIO SuperNODE

They made headlines last year when they configured 32 AMD Instinct MI210 accelerators to a single-node server known as the SuperNODE. Previously, in order to access 32 GPUs, four servers with eight GPUs each were needed, along with the additional costs and latency involved in connecting all of that additional hardware. Alan and myself had a recent conversation for the AMD EPYC TechTalk audio series, which you can listen to here. In the blog article below, They’ve shared a few highlights from the interview.

Higher-performance computing (HPC) is in greater demand because to the emergence of generative AI at a time when businesses are routinely gathering, storing, and analysing enormous volumes of data. Data centres are therefore under more pressure to implement new infrastructures that meet these rising demands for performance and storage.

However, setting up larger HPC systems is more complicated, takes longer, and can be more expensive. There’s a chance that connecting or integrating these systems will result in choke points that impede response times and solution utilisation.

A solution for scaling accelerator technology is offered by Carlsbad, California-based GigaIO, which does away with the increased expenses, power consumption, and latency associated with multi-CPU systems. GigaIO provides FabreX, the dynamic memory fabric that assembles rack-scale resources, in addition to SuperNode. Data centres can free up compute and storage resources using GigaIO and share them around a cluster by using a disaggregated composable infrastructure (DCI).

GigaIO has put a lot of effort into offering something that may be even more beneficial than superior performance, in addition to assisting businesses in getting the most out of their computer resources.

GigaIO Networks Inc

“Easy setup and administration of rapid systems may be more significant than performance “Alan said. “Many augmented-training and inferencing companies have approached us for an easy way to enhance their capabilities. But assure them that their ideas will function seamlessly. You can take use of more GPUs by simply dropping your current container onto a SuperNODE.”

In order to deliver on the “it just works” claim, GigaIO and AMD collaborated to design the TensorFlow and PyTorch libraries into the SuperNODE’s hardware and software stack. SuperNODE will function with applications that have not been modified.

“Those optimised containers that are optimised literally for servers that have four or eight GPUs, you can drop them onto a SuperNODE with 32 GPUs and they will just run,” Alan stated. “In most cases you will get either 4x or close to 4x, the performance advantage.”

The necessity for HPC in the scientific and engineering communities gave rise to GigaIO. These industries’ compute needs were initially driven by CPUs and were just now beginning to depend increasingly on GPUs. That started the race to connect bigger clusters of GPUs, which has resulted in an insatiable appetite for more GPUs.

Alan stated that there has been significant increase in the HPC business due to the use of AI and huge language models. However, GigaIO has recently witnessed growth in the augmentation space, where businesses are using AI to improve human performance.

GigaIO Networks

In order to accomplish this, businesses require foundational models in the first place, but they also want to “retrain and fine-tune” such models by adding their own data to them.

Alan looks back on his company’s accomplishment of breaking the 8-GPU server restriction, which many were doubtful could be accomplished. He believes GigaIO’s partnership with AMD proved to be a crucial component.

Alan used the example of Dr. Moritz Lehmann’s testing SuperNODE on a computational fluid dynamic program meant to replicate airflow over the Concord’s wings at landing speed last year to highlight his points. Lehmann created his model in 32 hours without changing a single line of code after gaining access to SuperNODE. Alan calculated that the task would have taken more than a year if he had relied on eight GPUs and conventional technology.

“A great example of AMD GPUs and CPUs working together “Alan said. This kind of cooperation has involved several iterations. [Both firms have] performed admirably in their efforts to recognise and address technological issues at the engineering level.”

AMD Instinct MI210 accelerator

The Exascale-Class Technologies for the Data Centre: AMD INSTINCT MI210 ACCELERATOR

With the AMD Instinct MI210 accelerator, AMD continues to lead the industry in accelerated computing for double precision (FP64) on PCIe form factors for workloads related to mainstream HPC and artificial intelligence.

The 2nd Gen AMD CDNA architecture of the AMD Instinct MI210, which is based on AMD Exascale-class technology, empowers scientists and researchers to address today’s most critical issues, such as vaccine research and climate change. By utilising the AMD ROCm software ecosystem in conjunction with MI210 accelerators, innovators can leverage the capabilities of AI and HPC data centre PCIe GPUs to expedite their scientific and discovery endeavours.

Specialised Accelerators for AI & HPC Tasks

With up to a 2.3x advantage over Nvidia Ampere A100 GPUs in FP64 performance, the AMD Instinct MI210 accelerator, powered by the 2nd Gen AMD CDNA architecture, delivers HPC performance leadership over current competitive PCIe data centre GPUs today, delivering exceptional performance for a broad range of HPC & AI applications.

With an impressive 181 teraflops peak theoretical FP16 and BF16 performance, the MI210 accelerator is designed to speed up deep learning training. It offers an extended range of mixed-precision capabilities based on the AMD Matrix Core Technology and gives users a strong platform to drive the convergence of AI and HPC.

New Ideas Bringing Performance Leadership

Through the unification of the CPU, GPU accelerator, and most significant processors in the data centre, AMD’s advances in architecture, packaging, and integration are pushing the boundaries of computing. Using AMD EPYC CPUs and AMD Instinct MI210 accelerators, AMD is delivering performance, efficiency, and overall system throughput for HPC and AI thanks to its cutting-edge double-precision Matrix Core capabilities and the 3rd Gen AMD Infinity Architecture.

2nd Gen AMD CDNA Architecture

The computing engine chosen for the first U.S. Exascale supercomputer is now available to commercial HPC & AI customers with the AMD Instinct MI210 accelerator. The 2nd Generation AMD CDNA architecture powers the MI210 accelerator, which offers exceptional performance for AI and HPC. With up to 22.6 TFLOPS peak FP64|FP32 performance, the MI210 PCIe GPU outperforms the Nvidia Ampere A100 GPU in double and single precision performance for HPC workloads.

This allows scientists and researchers worldwide to process HPC parallel codes more efficiently across several industries. For any mix of AI and machine learning tasks you need to implement, AMD’s Matrix Core technology offers a wide range of mixed precision operations that let you work with huge models and improve memory-bound operation performance.

With its optimised BF16, INT4, INT8, FP16, FP32, and FP32 Matrix capabilities, the MI210 can handle all of your AI system requirements with supercharged compute performance. For deep learning training, the AMD Instinct MI210 accelerator provides 181 teraflops of peak FP16 and bfloat16 floating-point performance, while also handling massive amounts of data with efficiency.

AMD Fabric Link Technology

AMD Instinct MI210 GPUs, with their AMD Infinity Fabric technology and PCIe Gen4 support, offer superior I/O capabilities in conventional off-the-shelf servers. Without the need of PCIe switches, the MI210 GPU provides 64 GB/s of CPU to GPU bandwidth in addition to 300 GB/s of Peer-to-Peer (P2P) bandwidth performance over three Infinity Fabric links.

The AMD Infinity Architecture provides up to 1.2 TB/s of total theoretical GPU capacity within a server design and allows platform designs with two and quad direct-connect GPU hives with high-speed P2P connectivity. By providing a quick and easy onramp for CPU codes to accelerated platforms, Infinity Fabric contributes to realising the potential of accelerated computing.

Extremely Quick HBM2e Memory

Up to 64GB of high-bandwidth HBM2e memory with ECC support can be found in AMD Instinct MI210 accelerators, which operate at 1.6 GHz. and provide an exceptionally high memory bandwidth of 1.6 TB/s to accommodate your biggest data collections and do rid of any snags when transferring data in and out of memory. Workload can be optimised when you combine this performance with the MI210’s cutting-edge Infinity Fabric I/O capabilities.

AMD Instinct MI210 Price

AMD Instinct MI210 GPU prices vary by retailer and area. It costs around $16,500 in Japan.. In the United States, Dell offers it for about $8,864.28, and CDW lists it for $9,849.99. These prices reflect its high-end specifications, including 64GB of HBM2e memory and a PCIe interface, designed for HPC and AI server applications.

Read more on Govindhtech.com

#GigaIO#amd#amdinstinct#amdinstinctmi210#amdcdna#amdepyc#govindhtech#news#technews#technology#technologytrends#technologynews

0 notes

Text

Supercomputador portátil tem 4 GPUs e 246 TB de armazenamento

Por Vinicius Torres Oliveira

Supercomputador portátil pode ser personalizado e é perfeito para tarefas que fazem uso de inteligência artificial

A GigaIO, em colaboração com a SourceCode, anunciou o lançamento do Gryf, um supercomputador portátil desenvolvido para atender às necessidades de inteligência artificial. O dispositivo, com peso inferior a 25 kg, cabe em uma mala de mão aprovada pela TSA, podendo ser levado para qualquer lugar.

Ele é capaz de coletar dados em grande escala, uma tarefa que tradicionalmente exigiria o envio dos dados para locais externos. O equipamento permite a configuração em tempo real de seu hardware no local de uso, adaptando-se rapidamente a diferentes tipos de carga de trabalho sem necessidade de transporte para um centro de dados.

Este supercomputador em tamanho de mala possui seis compartimentos modulares. Eles podem ser configurados com diferentes combinações de módulos de computação, armazenamento, aceleração e rede, dependendo das necessidades específicas do projeto.

Por exemplo, para tarefas de inteligência artificial ou aprendizado de máquina, é possível configurar o Gryf com dois módulos de computação, um módulo de aceleração, dois de armazenamento e um de rede.

Configurações e módulos do supercomputador portátil

O módulo de computação é equipado com um processador AMD EPYC 7003 series, 16 núcleos, memória de 256 GB e suporte para diversos sistemas operacionais como Linux e Ubuntu. Além disso, o supercomputador conta com opções robustas de conectividade, incluindo portas QSFP56 e QSFP+ de 100GbE.

No que diz respeito ao armazenamento, o Gryf oferece até 246 TB através de oito SSDs NVMe-E1.L de 30 TB cada. A flexibilidade é ainda maior com o uso da tecnologia de memória FabreX da GigaIO, que permite interligar até cinco Gryfs para processar mais de um petabyte de informação e realizar tarefas mais exigentes.

Além de suas capacidades técnicas, o supercomputador é projetado para ser durável e eficiente, com uma estrutura de fibra de carbono reforçada, filtros de ventilação removíveis e unidades substituíveis em campo, facilitando a manutenção e a longevidade do equipamento.

0 notes

Text

vScaler Announces SLURM integration with GigaIO FabreX

vScaler Announces SLURM integration with GigaIO FabreX

LONDON, United Kingdom. – Aug 13th, 2020 – vScaler, an opensource Private Cloud offering, built and designed by HPC experts for HPC workloads, today announced the integration of SLURM with GigaIO’s FabreX offering within its Cloud platform, enabling elastic scaling of PCI devices and true HPC disaggregation.

As the industry’s first in-memory network, FabreX supports vScaler’s private cloud…

View On WordPress

0 notes

Photo

You don’t know it yet, but your GPUs are mostly left untouched even with finely tuned models and servers, so you end up wasting space, energy, and well.. your power bills. GigaIO™ solves this problem with FabreX – the highest performance, lowest latency rack-scale network you can get.GigaIO™ provides Composable Disaggregated Infrastructure solutions, which comes in both hardware and software. Check full post to know more.

0 notes

Photo

Fabrex - Business and Corporate Template http://bit.ly/2C3qGcj

0 notes

Text

Fabrex - Business Multipurpose and Corporate Template (Corporate)

Fabrex – Business Multipurpose and Corporate Template developed specifically for all types of businesses like consulting financial adviser, medical, construction, tour, travels, interior design, agency, accountant, startup company, finance business , consulting firms, insurance, loan, tax... download link => Fabrex - Business Multipurpose and Corporate Template (Corporate)

0 notes

Text

GigaIO Fabrex™: The Highest Performance, Lowest Latency Rack-Scale Network on the Planet

Imagine this…

You just spent a lot on a rack of servers and multiple GPUs to solve your computational problems.

You don’t know it yet, but your GPUs are mostly left untouched even with finely tuned models and servers, so you end up wasting space, energy, and well. your power bills.

GigaIO™ solves this problem with FabreX – the highest performance, lowest latency rack-scale network you can get.

With FabreX, you can now increase utilisation and eliminate over provisioning of resources, which helps reduce cooling expenses. On top of that, you’re also able to save space with fewer power-consuming servers and accelerators for the exact same performance.

You must be wondering…

What is GigaIO™?

GigaIO™ provides Composable Disaggregated Infrastructure solutions, which comes in both hardware and software.

The hardware consists of:

· FabreX Top of Rack (TOR) Switch

· FabreX PCIe Gen4 Adapter

GigaIO™ FabreX™ Network Adapter Card enables non-blocking low-latency PCIe Gen4 which gives AI/ML, HPC, and Data Analytics users the flexibility to create exactly the system they need for optimised performance and reduced total cost of ownership.

· Accelerator Pooling Appliance

The GigaIO™ Gen4 Accelerator Pooling Appliance is the industry’s highest performing PCIe accelerator appliance fully supporting PCIe Gen4 with up to 1Tb/sec bandwidth into and out of the box. Support up to 8 double-width PCIe Gen 4.0 x16 accelerator cards with up to 300W delivered to every slot; and 2 PCIe Gen 4.0 x16 low-profile slots.

Besides GPUs, it also supports FPGAs, IPUs, DPUs, thin-NVMe-servers and specialty AI chips.

The software offered:

· FabreX Software

A Linux-based, resource-efficient software layers engine that drives the performance and dynamic composability of GigaIO’s Software-Defined HardwareTM (SDH).

It integrates with Bright Cluster Manager that supports popular HPC workload schedulers such as SLURM, PBS Pro & OpenPBS, LSF, Altair Grid Engine, Kubernetes.

For infrastructure integration it supports Bright Cluster Manager VMware vSphere integration and Supermicro SuperCloud Composer.

What solution does GigaIO™ offer?

Through an all-new architecture, GigaIO™ offers a hyper-performance network that enables a unified, software-driven composable infrastructure.

In other words, GigaIO™ allows CPU, GPU Accelerator, and NVMe drives that are installed in any server or in an external chassis to be shared among multiple servers. As a result, you no longer have to detach drives from the initial physical server to be reinstalled in another server.

Namely, GigaIO™ FabreX enables you to connect 32 GPUs with only 1 server. Not only does this reduce the total cost of ownership (TCO), the infrastructure management is simpler too. Additionally, the low latency interconnection also provides superior performance.

What do we mean by reducing TCO?

By implementing GigaIO solution, you can:

1. Spend less to get the same performance and capacity as compared to other solutions.

2. Get ROI (Return on Investment) faster because it allows the customer to do exactly the same things but with lower cost. The GigaIO solution enables you to achieve ROI in half the standard time.

3. Do more things with GigaIO, for example, GigaIO supports orchestration of ANY Compute, Acceleration (CPUs, GPUs, FPGAs, ASICs), Storage, Memory (3D-XPoint) or Networking resource for any workload using an Enterprise-Class, Easy-To-Use and Open Standards high-performance network.

How does it work?

FabreX is the only fabric which enables complete disaggregation and composition of all the resources in your server rack. Besides composing resources to servers, FabreX can also compose your servers over PCIe (and CXL in the future), without the cost, complexity and latency hit from having to switch to Ethernet or InfiniBand within the rack.

With any workload that needs more than one server and more resources (storage, GPUs, FPGAs, etc.), FabreX is exactly what you need. Typical workloads centre around the use of GPU and FPGA accelerators, including AI/ML/DL, visualisation, High Performance Computing and Data Analytics.

For more use cases visit our solutions page.

In Robust HPC, we use GigaIO’s FabreX universal dynamic fabric to enable true rack-scale computing, breaking the limits of the server box to enable the entire rack the unit of compute.

Resources such as GPU, FGPAs, ASICs and NVMe are connected via low latency FabreX switches (less than 110ns with non-blocking ports) and can be configured in various ways depending on your needs.

Long story short, you get the flexibility and agility of the cloud, but with the security and cost control of your own on-prem infrastructure.

Get in touch with Robust HPC – an authorised reseller of GigaIO in Southeast Asia, to know more about how organisations use GigaIO and find the right use case to address your computing needs.

Article Source: https://www.robusthpc.com/gigaio-fabrex/

0 notes