#energy efficient data centers


Decarbonizing Data Centers for a Data-driven Sustainable Society

The need for data center sustainability

Digital services such as streaming, email, and online shopping can reach a virtually unlimited number of people without occupying physical space. However, there is an urgent need to decarbonize data storage, management, access, and distribution: data center electricity use alone is likely to rise to 8 percent of projected global electricity demand by 2030, 15 times what it is today.^ Energy-efficient data centers are therefore a key climate priority.

Hitachi helps reduce the data center carbon footprint

Hitachi is focused on a sustainable future and contributing to society through the development of superior, original technology and products. Hence, it aims to reduce the environmental impact of data centers through technology and data-driven approaches.

According to research by Stanford Energy, with the right innovative solutions, data center carbon emissions could decrease by 88 percent.^^ But understanding the carbon footprint of a data center is not enough. Tools that help teams collaborate across infrastructure, building, energy, and corporate management, and that give visibility into consumption and emissions, can make a difference.

Data centers are also increasing their use of renewable power sources. But many of these are purchasing carbon credits instead of generating energy on-site or nearby. Data centers also need to reduce their energy consumption. They can use carbon footprint analytics to optimize their equipment. This involves analyzing how much space the equipment requires, how much heat is being generated, and how that can be controlled more efficiently. Meanwhile, software developers can create sustainable applications to minimize their environmental impact as well.

If done right, all these measures can enhance the environmental performance of both existing data centers and future facilities.

Working together to incorporate data center sustainability

Data center sustainability and energy optimization strategies involve multifaceted approaches that include:

Investing in conversations and analyzing an organization’s current position and where it needs progress.

Creating a pilot project and replicating it later, if successful.

Defining milestones to build momentum for the overall sustainability journey.

At Hitachi, we develop green data center solutions that span technological, organizational, training, and regulatory challenges to help organizations prepare for future green data center certifications and emerging audit standards.

Hitachi has set a goal of carbon neutrality at its business sites by 2030 and across the entire value chain by 2050. The data center emissions challenge is an opportunity to lead by example through green and digital innovation and to contribute towards a sustainable society.

The dawn of a new, sustainable era has begun…

Discover how Hitachi is unlocking value for society with Social Innovation in Energy:

Sources:

^ https://corporate.enelx.com/en/stories/2021/12/data-center-industry-sustainability

^^ https://energy.stanford.edu/news/data-centers-can-slash-co2-emissions-88-or-more

#green data center#sustainable data center#energy efficient data centers#green data center solutions#data center energy efficiency#data center power consumption#data center impact on environment#data center carbon footprint#data center carbon emissions#data center optimization#decarbonization#sustainability


Impact and innovation of AI in energy use with James Chalmers

New Post has been published on https://thedigitalinsider.com/impact-and-innovation-of-ai-in-energy-use-with-james-chalmers/


In the very first episode of our monthly Explainable AI podcast, hosts Paul Anthony Claxton and Rohan Hall sat down with James Chalmers, Chief Revenue Officer of Novo Power, to discuss one of the most pressing issues in AI today: energy consumption and its environmental impact.

Together, they explored how AI’s rapid expansion is placing significant demands on global power infrastructures and what leaders in the tech industry are doing to address this.

The conversation covered various important topics, from the unique power demands of generative AI models to potential solutions like neuromorphic computing and waste heat recapture. If you’re interested in how AI shapes business and global energy policies, this episode is a must-listen.

Why this conversation matters for the future of AI

The rise of AI, especially generative models, isn’t just advancing technology; it’s consuming power at an unprecedented rate. Understanding these impacts is crucial for AI enthusiasts who want to see AI development continue sustainably and ethically.

As James explains, AI’s current reliance on massive datasets and intensive computational power has given it the fastest-growing energy footprint of any technology in history. For those working in AI, understanding how to manage these demands can be a significant asset in building future-forward solutions.

Main takeaways

AI’s power consumption problem: Generative AI models, which require vast amounts of energy for training and generation, consume ten times more power than traditional search engines.

Waste heat utilization: Nearly all power in data centers is lost as waste heat. Solutions like those at Novo Power are exploring how to recycle this energy.

Neuromorphic computing: This emerging technology, inspired by human neural networks, promises more energy-efficient AI processing.

Shift to responsible use: AI can help businesses address inefficiencies, but organizations need to integrate AI where it truly supports business goals rather than simply following trends.

Educational imperative: For AI to reach its potential without causing environmental strain, a broader understanding of its capabilities, impacts, and sustainable use is essential.

Meet James Chalmers

James Chalmers is a seasoned executive and strategist with extensive international experience guiding ventures through fundraising, product development, commercialization, and growth.

As the Founder and Managing Partner at BaseCamp, he has reshaped traditional engagement models between startups, service providers, and investors, emphasizing a unique approach to creating long-term value through differentiation.

Rather than merely enhancing existing processes, James champions transformative strategies that set companies apart, strongly emphasizing sustainable development.

Numerous accolades validate his work, including recognition from Forbes and Inc. Magazine as a leader of one of the Fastest-Growing and Most Innovative Companies, as well as B Corporation’s Best for The World and MedTech World’s Best Consultancy Services.

He’s also a LinkedIn ‘Top Voice’ on Product Development, Entrepreneurship, and Sustainable Development, reflecting his ability to drive substantial and sustainable growth through innovation and sound business fundamentals.

At BaseCamp, James applies his executive expertise to provide hands-on advisory services in fundraising, product development, commercialization, and executive strategy.

His commitment extends beyond addressing immediate business challenges; he prioritizes building competency and capacity within each startup he advises. Focused on sustainability, his work is dedicated to supporting companies that address one or more of the United Nations’ 17 Sustainable Development Goals through AI, DeepTech, or Platform Technologies.

About the hosts:

Paul Anthony Claxton – Q1 Velocity Venture Capital | LinkedIn

www.paulclaxton.io – Managing General Partner at Q1 Velocity Venture Capital… · Experience: Q1 Velocity Venture Capital · Education: Harvard Extension School · Location: Beverly Hills

Rohan Hall – Code Genie AI | LinkedIn

Are you ready to transform your business using the power of AI? With over 30 years of… · Experience: Code Genie AI · Location: Los Angeles Metropolitan Area


#ai#AI development#AI models#approach#Artificial Intelligence#bank#basecamp#billion#Building#Business#business goals#code#Community#Companies#computing#content#data#Data Centers#datasets#development#education#Emerging Technology#energy#energy consumption#Energy-efficient AI#engines#Environmental#environmental impact#Explainable AI#extension


#Data centers#energy efficiency#AI-driven#Consegic Business Intelligence#ThermalManagement#SustainableTech#electronicsnews#technologynews


Cipher Mining Acquires 300 MW Data Center Site in West Texas

Cipher Mining secures a 300 MW data center in Texas, growing its portfolio to over 2.5 GW.

Cipher Mining has announced the acquisition of a new 300-megawatt (MW) data center site in West Texas, a move aimed at significantly scaling up its Bitcoin mining operations in the region. The company has agreed to purchase the site for $67.5 million, with an additional variable fee of $3 per megawatt-hour (MWh) for the first five years after the site is…

#300 MW data center#Bitcoin#bitcoin mining#Cipher Mining#crypto#cryptocurrency infrastructure#data center acquisition#energy capacity#energy-efficient mining#finance#high-performance computing#technology#Texas Bitcoin#West Texas


Exploring the Growing $21.3 Billion Data Center Liquid Cooling Market: Trends and Opportunities

In an era marked by rapid digital expansion, data centers have become essential infrastructures supporting the growing demands for data processing and storage. However, these facilities face a significant challenge: maintaining optimal operating temperatures for their equipment. Traditional air-cooling methods are becoming increasingly inadequate as server densities rise and heat generation intensifies. Liquid cooling is emerging as a transformative solution that addresses these challenges and is set to redefine the cooling landscape for data centers.

What is Liquid Cooling?

Liquid cooling systems utilize liquids to transfer heat away from critical components within data centers. Unlike conventional air cooling, which relies on air to dissipate heat, liquid cooling is much more efficient. By circulating a cooling fluid—commonly water or specialized refrigerants—through heat exchangers and directly to the heat sources, data centers can maintain lower temperatures, improving overall performance.

Market Growth and Trends

The data center liquid cooling market is on an impressive growth trajectory. According to industry analysis, this market is projected to grow to USD 21.3 billion by 2030, at a compound annual growth rate (CAGR) of 27.6%. This upward trend is fueled by several key factors, including the increasing demand for high-performance computing (HPC), advancements in artificial intelligence (AI), and a growing emphasis on energy-efficient operations.
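To make the CAGR figure concrete, here is a minimal Python sketch of how a compound annual growth rate relates a starting and an ending market size. The USD 21.3 billion end value and the 27.6% rate come from the text above; the six-year forecast window is an assumption for illustration only, since the base year is not stated here, and the function names are hypothetical.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Back out the starting value implied by an end value and a CAGR."""
    return end_value / (1.0 + cagr) ** years


def compute_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


END_VALUE_USD_BN = 21.3  # from the text
CAGR = 0.276             # from the text
YEARS = 6                # assumption: the forecast window is not given in the text

start = implied_start_value(END_VALUE_USD_BN, CAGR, YEARS)
print(f"Implied starting market size: USD {start:.2f} billion")
print(f"Round-trip CAGR: {compute_cagr(start, END_VALUE_USD_BN, YEARS):.1%}")
```

Changing `YEARS` shows how sensitive the implied starting size is to the length of the forecast window, which is why a CAGR is hard to interpret without its base year.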

Key Factors Driving Adoption

1. Rising Heat Density

The trend toward higher power density in server configurations poses a significant challenge for cooling systems. With modern servers generating more heat than ever, traditional air cooling methods are struggling to keep pace. Liquid cooling effectively addresses this issue, enabling higher density server deployments without sacrificing efficiency.

2. Energy Efficiency Improvements

A standout advantage of liquid cooling systems is their energy efficiency. Studies indicate that these systems can reduce energy consumption by up to 50% compared to air cooling. This not only lowers operational costs for data center operators but also supports sustainability initiatives aimed at reducing energy consumption and carbon emissions.

3. Space Efficiency

Data center operators often grapple with limited space, making it crucial to optimize cooling solutions. Liquid cooling systems typically require less physical space than air-cooled alternatives. This efficiency allows operators to enhance server capacity and performance without the need for additional physical expansion.

4. Technological Innovations

The development of advanced cooling technologies, such as direct-to-chip cooling and immersion cooling, is further propelling the effectiveness of liquid cooling solutions. Direct-to-chip cooling channels coolant directly to the components generating heat, while immersion cooling involves submerging entire server racks in non-conductive liquids, both of which push thermal management to new heights.

Overcoming Challenges

While the benefits of liquid cooling are compelling, the transition to this technology presents certain challenges. Initial installation costs can be significant, and some operators may be hesitant due to concerns regarding complexity and ongoing maintenance. However, as liquid cooling technology advances and adoption rates increase, it is expected that costs will decrease, making it a more accessible option for a wider range of data center operators.

The Competitive Landscape

The data center liquid cooling market is home to several key players, including established companies like Schneider Electric, Vertiv, and Asetek, as well as innovative startups committed to developing cutting-edge thermal management solutions. These organizations are actively investing in research and development to refine the performance and reliability of liquid cooling systems, ensuring they meet the evolving needs of data center operators.


The outlook for the data center liquid cooling market is promising. As organizations prioritize energy efficiency and sustainability in their operations, liquid cooling is likely to become a standard practice. The integration of AI and machine learning into cooling systems will further enhance performance, enabling dynamic adjustments based on real-time thermal demands.

The evolution of liquid cooling in data centers represents a crucial shift toward more efficient, sustainable, and high-performing computing environments. As the demand for advanced cooling solutions rises in response to technological advancements, liquid cooling is not merely an option—it is an essential element of the future data center landscape. By embracing this innovative approach, organizations can gain a significant competitive advantage in an increasingly digital world.

#Data Center#Liquid Cooling#Energy Efficiency#High-Performance Computing#Sustainability#Thermal Management#AI#Market Growth#Technology Innovation#Server Cooling#Data Center Infrastructure#Immersion Cooling#Direct-to-Chip Cooling#IT Solutions#Digital Transformation


Our new article in Joule, "To better understand AI’s growing energy use, analysts need a data revolution," was published online today

Our new article in Joule on data needs for understanding AI electricity use came out online today (link will be good until October 8, 2024). Here's the summary section:

As the famous quote from George Box goes, “All models are wrong, but some are useful.” Bottom-up AI data center models will never be a perfect crystal ball, but energy analysts can soon make them much more useful for decisionmakers if our identified critical data needs are met. Without better data, energy analysts may be forced to take several shortcuts that are more uncertain, less explanatory, less defensible, and less useful to policymakers, investors, the media, and the public. Meanwhile, all of these stakeholders deserve greater clarity on the scales and drivers of the electricity use of one of the most disruptive technologies in recent memory. One need only look to the history of cryptocurrency mining as a cautionary tale: after a long initial period of moderate growth, mining electricity demand rose rapidly. Meanwhile, energy analysts struggled to fill data and modeling gaps to quantify and explain that growth to policymakers—and to identify ways of mitigating it—especially at local levels where grids were at risk of stress. The electricity demand growth potential of AI data centers is much larger, so energy analysts must be better prepared. With the right support and partnerships, the energy analysis community is ready to take on the challenges of modeling a fast moving and uncertain sector, to continuously improve, and to bring much-needed scientific evidence to the table. Given the rapid growth of AI data center operations and investments, the time to act is now."

I worked with my longtime colleagues Eric Masanet and Nuoa Lei on this article.


Green Data Center Solutions by Radiant Info Solutions

Discover how Radiant Info Solutions leads the way in green data center solutions. Sustainable, energy-efficient, and cost-effective data center designs.

#Green data center solutions#Radiant Info Solutions#data center consultancy#energy-efficient data centers#sustainable data centers


Reimagining the Energy Landscape: AI's Growing Hunger for Computing Power #BlogchatterA2Z


Navigating the Energy Conundrum: AI’s Growing Hunger for Computing Power

In the ever-expanding realm of artificial intelligence (AI), the voracious appetite for computing power threatens to outpace our energy sources, sparking urgent calls for a shift in approach. According to Rene Haas, Chief Executive Officer of Arm Holdings Plc, by the year 2030, data centers worldwide are projected to…


#AI Development#Arm Holdings#Custom-built chips#Data center infrastructure#Edge computing#Energy consumption#energy efficiency#Regulatory measures#Renewable Energy#Sustainable technology


Who Holds the Keys? Ethical Considerations for AI Training and Data Storage

As artificial intelligence (AI) evolves, so do the complex questions surrounding its development and use. One particularly critical issue: who owns the data used to train and store AI models? This seemingly simple question sparks a web of ethical and legal considerations that demand careful analysis.

Fueling the AI Engine

AI thrives on data. Vast amounts of data are used to train and refine models, from text and images to personal information and even medical records. But who, ultimately, owns this data?

Individuals: The data often originates from individuals, raising questions about their consent and control over its use. Should they have a say in how their data is used for AI development?

Companies: Companies collecting and utilizing data for AI development claim ownership, arguing it's part of their intellectual property. But does this outweigh individual rights and interests?

Governments: In some cases, government agencies collect and hold vast amounts of data, further complicating the ownership picture. What role should they play in regulating and overseeing AI data use?

Beyond Ownership: Ethical Implications

The question of ownership is just one part of the equation. Ethical considerations abound:

Bias and Discrimination: AI models trained on biased data can perpetuate discrimination, harming individuals and communities. How can we ensure data used for AI is fair and inclusive?

Privacy Concerns: When personal data is used for AI development, privacy concerns are paramount. How can we balance innovation with individual privacy rights?

Security and Transparency: Data breaches and misuse pose significant risks. How can we ensure secure storage and transparent use of AI training data?

Navigating the Maze

So, how do we move forward? There's no one-size-fits-all solution, but here are some steps:

Clear and informed consent: Individuals should have clear and easily understood ways to consent to their data being used for AI development.

Robust data protection laws: Strong legislation is crucial to ensure responsible data collection, storage, and use, protecting individual rights and privacy.

Ethical AI development: Developers and companies must adopt ethical frameworks and principles to guide data collection and model training.

Independent oversight bodies: Establishing independent bodies to monitor and advise on AI data practices can offer much-needed transparency and accountability.

The path forward demands collaboration: individuals, companies, governments, and researchers must work together to establish ethical guidelines and frameworks for AI training and data storage. Only then can we ensure that AI truly benefits humanity, respecting individual rights and building a fairer, more equitable future.

#artificial intelligence#machine learning#deep learning#technology#robotics#autonomous vehicles#robots#collaborative robots#business#energy efficiency#energy consumption#sustainable energy#energy#data center


Green energy is in its heyday.

Renewable energy sources now account for 22% of the nation’s electricity, and solar has skyrocketed eight times over in the last decade. This spring in California, wind, water, and solar power energy sources exceeded expectations, accounting for an average of 61.5 percent of the state's electricity demand across 52 days.

But green energy has a lithium problem. Lithium batteries control more than 90% of the global grid battery storage market.

That’s not just cell phones, laptops, electric toothbrushes, and tools. Scooters, e-bikes, hybrids, and electric vehicles all rely on rechargeable lithium batteries to get going.

Fortunately, this past week, Natron Energy launched its first-ever commercial-scale production of sodium-ion batteries in the U.S.

“Sodium-ion batteries offer a unique alternative to lithium-ion, with higher power, faster recharge, longer lifecycle and a completely safe and stable chemistry,” said Colin Wessells — Natron Founder and Co-CEO — at the kick-off event in Michigan.

The new sodium-ion batteries charge and discharge at rates 10 times faster than lithium-ion, with an estimated lifespan of 50,000 cycles.

Wessells said that using sodium as a primary mineral alternative eliminates industry-wide issues of worker negligence, geopolitical disruption, and the “questionable environmental impacts” inextricably linked to lithium mining.

“The electrification of our economy is dependent on the development and production of new, innovative energy storage solutions,” Wessells said.

Why are sodium batteries a better alternative to lithium?

The birth and death cycle of lithium is shadowed in environmental destruction. The process of extracting lithium pollutes the water, air, and soil, and when it’s eventually discarded, the flammable batteries are prone to bursting into flames and burning out in landfills.

There’s also a human cost. Lithium-ion materials like cobalt and nickel are not only harder to source and procure, but their supply chains are also overwhelmingly attributed to hazardous working conditions and child labor law violations.

Sodium, on the other hand, is estimated to be 1,000 times more abundant in the earth’s crust than lithium.

“Unlike lithium, sodium can be produced from an abundant material: salt,” engineer Casey Crownhart wrote in the MIT Technology Review. “Because the raw ingredients are cheap and widely available, there’s potential for sodium-ion batteries to be significantly less expensive than their lithium-ion counterparts if more companies start making more of them.”

What will these batteries be used for?

Right now, Natron has its focus set on AI models and data storage centers, which consume hefty amounts of energy. In 2023, the MIT Technology Review reported that one AI model can emit more than 626,000 pounds of carbon dioxide equivalent.

“We expect our battery solutions will be used to power the explosive growth in data centers used for Artificial Intelligence,” said Wendell Brooks, co-CEO of Natron.

“With the start of commercial-scale production here in Michigan, we are well-positioned to capitalize on the growing demand for efficient, safe, and reliable battery energy storage.”

The fast-charging energy alternative also has limitless potential on a consumer level, and Natron is eying telecommunications and EV fast-charging once it begins servicing AI data storage centers in June.

On a larger scale, sodium-ion batteries could radically change the manufacturing and production sectors — from housing energy to lower electricity costs in warehouses, to charging backup stations and powering electric vehicles, trucks, forklifts, and so on.

“I founded Natron because we saw climate change as the defining problem of our time,” Wessells said. “We believe batteries have a role to play.”

-via GoodGoodGood, May 3, 2024

--

Note: I wanted to make sure this was legit (scientifically and in general), and I'm happy to report that it really is! x, x, x, x

#batteries#lithium#lithium ion batteries#lithium battery#sodium#clean energy#energy storage#electrochemistry#lithium mining#pollution#human rights#displacement#forced labor#child labor#mining#good news#hope


Parallel Generator Set Controllers Market Outlook, Demand, Overview Analysis, Trends, Key Growth, Opportunity by 2032

Market Overview:

The parallel generator set controllers market refers to the segment of the power generation industry that deals with the control and synchronization of multiple generator sets operating in parallel. These controllers play a crucial role in ensuring efficient and reliable power generation by monitoring and managing the synchronization, load sharing, and other operational parameters of parallel generator sets.
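As a rough illustration of the load-sharing role described above, the sketch below splits a demand across paralleled generator sets in proportion to their rated capacity, so that each unit runs at the same fraction of its rating. Proportional sharing is assumed here for illustration; it is not taken from the report, real controllers also handle synchronization, protection, and dynamics, and the `Generator` class, `share_load` function, and all ratings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Generator:
    name: str
    rated_kw: float  # hypothetical nameplate rating


def share_load(generators: list[Generator], demand_kw: float) -> dict[str, float]:
    """Split demand across generators in proportion to rated capacity."""
    if demand_kw < 0:
        raise ValueError("demand must be non-negative")
    total_rated = sum(g.rated_kw for g in generators)
    if demand_kw > total_rated:
        raise ValueError("demand exceeds combined rated capacity")
    # Each unit carries the same fraction of its own rating.
    return {g.name: demand_kw * g.rated_kw / total_rated for g in generators}


# Illustrative values only.
fleet = [Generator("G1", 500.0), Generator("G2", 300.0), Generator("G3", 200.0)]
shares = share_load(fleet, demand_kw=600.0)
for name, kw in shares.items():
    print(f"{name}: {kw:.1f} kW")
```

With these example ratings, every unit ends up at 60% of its rating, which is the property proportional sharing is meant to preserve as demand changes.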

Key Factors Driving the Parallel Generator Set Controllers Market:

The demand for parallel generator set controllers is rising in response to the expanding requirement for dependable power supply in a variety of commercial settings, industrial settings, and essential infrastructure. These controllers make it possible for several generators to synchronise and share loads with ease, resulting in a steady supply of electricity.

An increase in the use of renewable energy sources: Parallel generator set controllers are necessary to combine renewable energy sources with traditional generators to build hybrid power systems as solar and wind energy sources gain popularity. With this integration, renewable energy may be used more efficiently while still ensuring grid stability.

Expansion of Data Centres and Telecom Infrastructure: Robust backup power solutions are required due to the swift expansion of data centres and the telecommunications sector. Using parallel generator set controllers, generator sets can be synchronised and managed effectively for continuous operation.

Growth of the manufacturing sector and industrial process automation: As the manufacturing industry expands, there is a greater need for dependable and efficient power generation. The best power distribution is achieved with the use of parallel generator set controllers, which synchronise the generators to meet changing load needs.

Governments all across the world are enforcing strict laws to reduce emissions from the production of electricity. To comply with environmental requirements, parallel generator set controllers can help generator sets operate more efficiently, which results in lower fuel usage and emissions.

Trends:

Smart and Digital Solutions: The industry was moving towards more intelligent and digitally connected solutions. Advanced control systems with remote monitoring, predictive maintenance, and data analytics capabilities were gaining traction.

Renewable Energy Integration: With the increasing focus on sustainability, there was a trend towards integrating parallel generator sets with renewable energy sources such as solar and wind, creating hybrid power solutions.

Energy Storage Integration: Battery storage systems were being integrated with generator sets to provide seamless power during transient events and enhance load management.

Microgrid Development: Parallel generator set controllers were being used in microgrid applications, allowing localized power generation and distribution in remote or critical areas.

Load Flexibility: Controllers were becoming more adept at managing variable and dynamic loads, optimizing fuel consumption and power distribution based on real-time demand.

Remote Monitoring and Control: The ability to monitor and control parallel generator sets remotely through IoT technology was becoming increasingly important, enabling efficient operation and maintenance.

Here are some of the key benefits:

Enhanced Power Management

Increased Power Availability

Flexibility and Scalability

Load Sharing and Efficiency

Redundancy and Reliability

Remote Monitoring and Control

Compliance and Environmental Benefits

Safety and Protection Features

Cost-Effective Solution

Integration with Smart Grids

We recommend referring to Stringent Datalytics, industry publications, and websites that specialize in providing market reports. These sources often offer comprehensive analysis, market trends, growth forecasts, competitive landscape, and other valuable insights into this market.

By visiting our website or contacting us directly, you can explore the availability of specific reports related to this market. These reports often require a purchase or subscription, but we provide comprehensive and in-depth information that can be valuable for businesses, investors, and individuals interested in this market.

“Remember to look for recent reports to ensure you have the most current and relevant information.”

Click here to get a free sample report: https://stringentdatalytics.com/sample-request/parallel-generator-set-controllers-market/10331/

Market Segmentations:

Global Parallel Generator Set Controllers Market: By Company

• CRE TECHNOLOGY

• SmartGen

• KOHLER

• S.I.C.E.S.

• Bruno generators

• VISA GROUP

• Alfred Kuhse GmbH

• DEIF Group

• Mechtric Group

Global Parallel Generator Set Controllers Market: By Type

• Automatic

• Manual

Global Parallel Generator Set Controllers Market: By Application

• Industrial

• Chemical

• Power Industry

Global Parallel Generator Set Controllers Market: Regional Analysis

North America: The North America region includes the U.S., Canada, and Mexico. The U.S. is the largest market for Muscle Wire in this region, followed by Canada and Mexico. The market growth in this region is primarily driven by the presence of key market players and the increasing demand for the product.

Europe: The Europe region includes Germany, France, U.K., Russia, Italy, Spain, Turkey, Netherlands, Switzerland, Belgium, and Rest of Europe. Germany is the largest market for Muscle Wire in this region, followed by the U.K. and France. The market growth in this region is driven by the increasing demand for the product in the automotive and aerospace sectors.

Asia-Pacific: The Asia-Pacific region includes Singapore, Malaysia, Australia, Thailand, Indonesia, Philippines, China, Japan, India, South Korea, and Rest of Asia-Pacific. China is the largest market for Muscle Wire in this region, followed by Japan and India. The market growth in this region is driven by the increasing adoption of the product in various end-use industries, such as automotive, aerospace, and construction.

Middle East and Africa: The Middle East and Africa region includes Saudi Arabia, U.A.E, South Africa, Egypt, Israel, and Rest of Middle East and Africa. The market growth in this region is driven by the increasing demand for the product in the aerospace and defense sectors.

South America: The South America region includes Argentina, Brazil, and Rest of South America. Brazil is the largest market for parallel generator set controllers in this region, followed by Argentina. The market growth in this region is primarily driven by the increasing demand for the product in the automotive sector.

Visit Report Page for More Details: https://stringentdatalytics.com/reports/parallel-generator-set-controllers-market/10331/

Reasons to Purchase Parallel Generator Set Controllers Market Report:

• To gain insights into market trends and dynamics: these reports provide valuable insights into industry trends and dynamics, including market size, growth rates, and key drivers and challenges.

• To identify key players and competitors: these research reports can help businesses identify key players and competitors in their industry, including their market share, strategies, and strengths and weaknesses.

• To understand consumer behavior: these research reports can provide valuable insights into consumer behavior, including preferences, purchasing habits, and demographics.

• To evaluate market opportunities: these research reports can help businesses evaluate market opportunities, including potential new products or services, new markets, and emerging trends.

About Us:

Stringent Datalytics offers both custom and syndicated market research reports. Custom market research reports are tailored to a specific client's needs and requirements. These reports provide unique insights into a particular industry or market segment and can help businesses make informed decisions about their strategies and operations.

Syndicated market research reports, on the other hand, are pre-existing reports that are available for purchase by multiple clients. These reports are often produced on a regular basis, such as annually or quarterly, and cover a broad range of industries and market segments. Syndicated reports provide clients with insights into industry trends, market sizes, and competitive landscapes. By offering both custom and syndicated reports, Stringent Datalytics can provide clients with a range of market research solutions that can be customized to their specific needs.

Contact Us:

Stringent Datalytics

Contact No - +1 346 666 6655

Email Id - [email protected]

Web - https://stringentdatalytics.com/

#Hybrid Power Systems#Remote Monitoring#Technological Advancements#Energy Security#Backup Solutions#Power Distribution#Microgrids#Smart Control Systems#Energy Efficiency#Sustainability#Data Centers.

0 notes

Text

Impact and innovation of AI in energy use with James Chalmers

New Post has been published on https://thedigitalinsider.com/impact-and-innovation-of-ai-in-energy-use-with-james-chalmers/

In the very first episode of our monthly Explainable AI podcast, hosts Paul Anthony Claxton and Rohan Hall sat down with James Chalmers, CEO of Novo Power, to discuss one of the most pressing issues in AI today: energy consumption and its environmental impact.

Together, they explored how AI’s rapid expansion is placing significant demands on global power infrastructures and what leaders in the tech industry are doing to address this.

The conversation covered various important topics, from the unique power demands of generative AI models to potential solutions like neuromorphic computing and waste heat recapture. If you’re interested in how AI shapes business and global energy policies, this episode is a must-listen.

Why this conversation matters for the future of AI

The rise of AI, especially generative models, isn’t just advancing technology; it’s consuming power at an unprecedented rate. Understanding these impacts is crucial for AI enthusiasts who want to see AI development continue sustainably and ethically.

As James explains, AI’s current reliance on massive datasets and intensive computational power has given it the fastest-growing energy footprint of any technology in history. For those working in AI, understanding how to manage these demands can be a significant asset in building future-forward solutions.

Main takeaways

AI’s power consumption problem: Generative AI models, which require vast amounts of energy for training and generation, consume ten times more power than traditional search engines.

Waste heat utilization: Nearly all power in data centers is lost as waste heat. Solutions like those at Novo Power are exploring how to recycle this energy.

Neuromorphic computing: This emerging technology, inspired by human neural networks, promises more energy-efficient AI processing.

Shift to responsible use: AI can help businesses address inefficiencies, but organizations need to integrate AI where it truly supports business goals rather than simply following trends.

Educational imperative: For AI to reach its potential without causing environmental strain, a broader understanding of its capabilities, impacts, and sustainable use is essential.

Meet James Chalmers

James Chalmers is a seasoned executive and strategist with extensive international experience guiding ventures through fundraising, product development, commercialization, and growth.

As the Founder and Managing Partner at BaseCamp, he has reshaped traditional engagement models between startups, service providers, and investors, emphasizing a unique approach to creating long-term value through differentiation.

Rather than merely enhancing existing processes, James champions transformative strategies that set companies apart, strongly emphasizing sustainable development.

Numerous accolades validate his work, including recognition from Forbes and Inc. Magazine as a leader of one of the Fastest-Growing and Most Innovative Companies, as well as B Corporation’s Best for The World and MedTech World’s Best Consultancy Services.

He’s also a LinkedIn ‘Top Voice’ on Product Development, Entrepreneurship, and Sustainable Development, reflecting his ability to drive substantial and sustainable growth through innovation and sound business fundamentals.

At BaseCamp, James applies his executive expertise to provide hands-on advisory services in fundraising, product development, commercialization, and executive strategy.

His commitment extends beyond addressing immediate business challenges; he prioritizes building competency and capacity within each startup he advises. Focused on sustainability, his work is dedicated to supporting companies that address one or more of the United Nations’ 17 Sustainable Development Goals through AI, DeepTech, or Platform Technologies.

About the hosts:

Paul Anthony Claxton – Q1 Velocity Venture Capital | LinkedIn

www.paulclaxton.io – am a Managing General Partner at Q1 Velocity Venture Capital… · Experience: Q1 Velocity Venture Capital · Education: Harvard Extension School · Location: Beverly Hills · 500+ connections on LinkedIn. View Paul Anthony Claxton’s profile on LinkedIn, a professional community of 1 billion members.

Rohan Hall – Code Genie AI | LinkedIn

Are you ready to transform your business using the power of AI? With over 30 years of… · Experience: Code Genie AI · Location: Los Angeles Metropolitan Area · 500+ connections on LinkedIn. View Rohan Hall’s profile on LinkedIn, a professional community of 1 billion members.

#ai#AI development#AI models#approach#Artificial Intelligence#bank#basecamp#billion#Building#Business#business goals#CEO#code#Community#Companies#computing#content#data#Data Centers#datasets#development#education#Emerging Technology#energy#energy consumption#Energy-efficient AI#engines#Environmental#environmental impact#Explainable AI

0 notes

Text

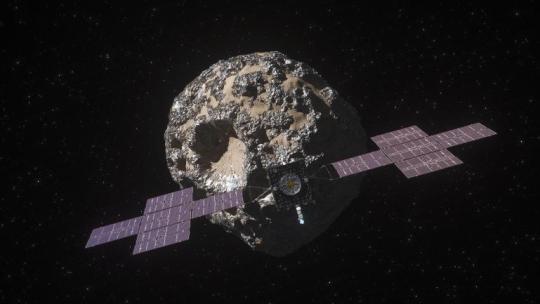

Let's Explore a Metal-Rich Asteroid 🤘

Between Mars and Jupiter, there lies a unique, metal-rich asteroid named Psyche. Psyche’s special because it looks like it is part or all of the metallic interior of a planetesimal—an early planetary building block of our solar system. For the first time, we have the chance to visit a planetary core and possibly learn more about the turbulent history that created terrestrial planets.

Here are six things to know about the mission that’s a journey into the past: Psyche.

1. Psyche could help us learn more about the origins of our solar system.

After studying data from Earth-based radar and optical telescopes, scientists believe that Psyche collided with other large bodies in space and lost its outer rocky shell. This leads scientists to think that Psyche could have a metal-rich interior, which is a building block of a rocky planet. Since we can’t pierce the core of rocky planets like Mercury, Venus, Mars, and our home planet, Earth, Psyche offers us a window into how other planets are formed.

2. Psyche might be different than other objects in the solar system.

Rocks on Mars, Mercury, Venus, and Earth contain iron oxides. From afar, Psyche doesn’t seem to feature these chemical compounds, so it might have a different history of formation than other planets.

If the Psyche asteroid is leftover material from a planetary formation, scientists are excited to learn about the similarities and differences from other rocky planets. The asteroid might instead prove to be a never-before-seen solar system object. Either way, we’re prepared for the possibility of the unexpected!

3. Three science instruments and a gravity science investigation will be aboard the spacecraft.

The three instruments aboard will be a magnetometer, a gamma-ray and neutron spectrometer, and a multispectral imager. Here’s what each of them will do:

Magnetometer: Detect evidence of a magnetic field, which will tell us whether the asteroid formed from a planetary body

Gamma-ray and neutron spectrometer: Help us figure out what chemical elements Psyche is made of, and how it was formed

Multispectral imager: Gather and share information about the topography and mineral composition of Psyche

The gravity science investigation will allow scientists to determine the asteroid’s rotation, mass, and gravity field and to gain insight into its interior by analyzing the radio waves the spacecraft uses to communicate. From those signals, scientists can measure how Psyche affects the spacecraft’s orbit.

4. The Psyche spacecraft will use a super-efficient propulsion system.

Psyche’s solar electric propulsion system harnesses energy from large solar arrays that convert sunlight into electricity, creating thrust. For the first time ever, we will be using Hall-effect thrusters in deep space.

5. This mission runs on collaboration.

To make this mission happen, we work together with universities, industry, and other NASA centers to draw in resources and expertise.

NASA’s Jet Propulsion Laboratory manages the mission and is responsible for system engineering, integration, and mission operations, while NASA’s Kennedy Space Center’s Launch Services Program manages launch operations and procured the SpaceX Falcon Heavy rocket.

Working with Arizona State University (ASU) offers opportunities for students to train as future instrument or mission leads. Mission leader and Principal Investigator Lindy Elkins-Tanton is also based at ASU.

Finally, Maxar Technologies is a key commercial participant and delivered the main body of the spacecraft, as well as most of its engineering hardware systems.

6. You can be a part of the journey.

Everyone can find activities to get involved on the mission’s webpage. There's an annual internship to interpret the mission, capstone courses for undergraduate projects, and age-appropriate lessons, craft projects, and videos.

You can join us for a virtual launch experience, and, of course, you can watch the launch with us on Oct. 12, 2023, at 10:16 a.m. EDT!

For official news on the mission, follow us on social media and check out NASA’s and ASU’s Psyche websites.

Make sure to follow us on Tumblr for your regular dose of space!

#Psyche#Mission to Psyche#asteroid#NASA#exploration#technology#tech#spaceblr#solar system#space#not exactly#metalcore#but close?

2K notes

Text

How to Build Your Own Bitcoin Mining Data Center (Bitaxe Edition) + Spreadsheet Included

Embarking on the journey to create my own Bitcoin mining data center has been an exciting endeavor, involving precise planning, careful equipment selection, and a focus on efficiency. Here’s a breakdown of the components I’m using and why each one is essential for achieving a streamlined mining operation. The cornerstone of my setup is the Bitaxe Gamma 601 SOLO Miner. With a hash rate of 1.2…

#2.4 GHz frequency#Bitaxe Gamma 601#Bitaxe HEX#Bitcoin#bitcoin mining#bitcoin mining data center#Bitcoin portfolio#blockchain#crypto#cryptocurrency#data center#energy efficiency#Ethereum#hash rate#mining efficiency#mining operation#mining setup#power backup#power consumption#science#solar power#technology#WiFi connectivity

0 notes