#docker tagging best practices

Docker Tag and Push Image to Hub | Docker Tagging Explained and Best Practices

Full video link: https://youtu.be/X-uuxvi10Cw

Hi, a new video on Docker image tagging has been published on the @codeonedigest YouTube channel. Learn how to tag a Docker image and the different ways to do it. #Tagdockerimage #pushdockerimagetodockerhubrepository

The next step after building a Docker image is to tag it. Tagging is an important step before uploading the image to a registry such as Docker Hub, Azure Container Registry, or Amazon Elastic Container Registry. There are different ways to tag a Docker image. Learn how to tag a Docker image, what the best practices for Docker image tagging are, how to tag a Docker container image, and how to tag and push docker…
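Here is a minimal sketch of the tag-then-push step. The Python helper builds the two Docker CLI commands, `docker tag SOURCE TARGET` followed by `docker push TARGET`, but does not run them. Before building them, it checks the tag against Docker's documented tag-name rules. The registry host, repository name, and helper names are hypothetical placeholders. Substitute your own Docker Hub namespace or your ACR/ECR registry address.

```python
import re

# Docker's documented tag-name rule: ASCII letters, digits, underscores,
# periods and hyphens; must not start with a period or hyphen; at most 128 chars.
TAG_PATTERN = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")


def is_valid_tag(tag: str) -> bool:
    """Return True if `tag` satisfies Docker's tag-name rules."""
    return TAG_PATTERN.fullmatch(tag) is not None


def tag_and_push_commands(local_image: str, registry: str,
                          repository: str, tag: str) -> list[list[str]]:
    """Build (but do not run) the `docker tag` and `docker push` argument lists.

    `registry` and `repository` are placeholders such as "docker.io" and
    "myuser/myapp" (hypothetical names).
    """
    if not is_valid_tag(tag):
        raise ValueError(f"invalid Docker tag: {tag!r}")
    target = f"{registry}/{repository}:{tag}"
    return [
        ["docker", "tag", local_image, target],  # docker tag SOURCE_IMAGE[:TAG] TARGET_IMAGE[:TAG]
        ["docker", "push", target],              # upload the newly tagged reference
    ]


if __name__ == "__main__":
    for cmd in tag_and_push_commands("myapp:dev", "docker.io", "myuser/myapp", "1.0.0"):
        print(" ".join(cmd))
```

Running it prints `docker tag myapp:dev docker.io/myuser/myapp:1.0.0` and then `docker push docker.io/myuser/myapp:1.0.0`. Passing each list to `subprocess.run(cmd, check=True)` would execute the commands, assuming you have already authenticated with `docker login`.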

#docker#docker and Kubernetes#docker build tag#docker compose#docker image tagging#docker image tagging best practices#docker tag and push image to registry#docker tag azure container registry#docker tag command#docker tag image#docker tag push#docker tagging best practices#docker tags explained#docker tutorial#docker tutorial for beginners#how to tag and push docker image#how to tag existing docker image#how to upload image to docker hub repository#push docker image to docker hub repository#Tag docker image#tag docker image after build#what is docker

Building Scalable Infrastructure with Ansible and Terraform

Modern cloud environments require scalable, efficient, and automated infrastructure to meet growing business demands. Terraform and Ansible are two powerful tools that, when combined, enable Infrastructure as Code (IaC) and Configuration Management, allowing teams to build, manage, and scale infrastructure seamlessly.

1. Understanding Terraform and Ansible

📌 Terraform: Infrastructure as Code (IaC)

Terraform is a declarative IaC tool that enables provisioning and managing infrastructure across multiple cloud providers.

🔹 Key Features: ✅ Automates infrastructure deployment. ✅ Supports multiple cloud providers (AWS, Azure, GCP). ✅ Uses HCL (HashiCorp Configuration Language). ✅ Manages infrastructure as immutable code.

🔹 Use Case: Terraform is used to provision infrastructure, such as VMs, networks, and databases, before configuration.

📌 Ansible: Configuration Management & Automation

Ansible is an agentless configuration management tool that automates software installation, updates, and system configurations.

🔹 Key Features: ✅ Uses YAML-based playbooks. ✅ Agentless architecture (SSH/WinRM-based). ✅ Idempotent (ensures same state on repeated runs). ✅ Supports cloud provisioning and app deployment.

🔹 Use Case: Ansible is used after infrastructure provisioning to configure servers, install applications, and manage deployments.

2. Why Use Terraform and Ansible Together?

Using Terraform + Ansible combines the strengths of both tools:

| Terraform | Ansible |
| --- | --- |
| Creates infrastructure (VMs, networks, databases). | Configures and manages infrastructure (installing software, security patches). |
| Declarative approach (desired state definition). | Procedural approach (step-by-step execution). |
| Handles infrastructure state via a state file. | Doesn’t track state; executes tasks directly. |
| Best for provisioning resources in cloud environments. | Best for managing configurations and deployments. |

Example Workflow: 1️⃣ Terraform provisions cloud infrastructure (e.g., AWS EC2, Azure VMs). 2️⃣ Ansible configures servers (e.g., installs Docker, Nginx, security patches).

3. Building a Scalable Infrastructure: Step-by-Step

Step 1: Define Infrastructure in Terraform

Example Terraform configuration to provision an AWS EC2 instance:

```hcl
provider "aws" {
  region = "us-east-1"
}

resource "aws_instance" "web" {
  ami           = "ami-12345678" # placeholder AMI ID; replace with a real AMI for your region
  instance_type = "t2.micro"

  tags = {
    Name = "WebServer"
  }
}
```

Step 2: Configure Servers Using Ansible

Example Ansible playbook to install Nginx on the provisioned servers:

```yaml
- name: Configure Web Server
  hosts: web_servers
  become: yes
  tasks:
    - name: Install Nginx
      apt:
        name: nginx
        state: present

    - name: Start Nginx Service
      service:
        name: nginx
        state: started
        enabled: yes
```

Step 3: Automate Deployment with Terraform and Ansible

1️⃣ Use Terraform to create infrastructure:

```bash
terraform init
terraform apply -auto-approve
```

2️⃣ Use Ansible to configure servers:

```bash
ansible-playbook -i inventory.ini configure_web.yaml
```
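The playbook command expects an `inventory.ini` file, which the walkthrough doesn't show. This minimal sketch turns the JSON printed by `terraform output -json` into a static inventory. Its group name matches the playbook's `hosts: web_servers`. It assumes the Terraform configuration also declares an output named `web_public_ips`. That name is hypothetical; the example configuration defines no outputs.

```python
import json


def build_inventory(terraform_output_json: str,
                    output_name: str = "web_public_ips",
                    group: str = "web_servers") -> str:
    """Convert `terraform output -json` text into an INI-style Ansible inventory.

    `output_name` is a hypothetical Terraform output holding one address or a
    list of addresses; `group` should match the playbook's `hosts:` value.
    """
    outputs = json.loads(terraform_output_json)
    # Each output is an object like {"sensitive": false, "type": ..., "value": ...}.
    value = outputs[output_name]["value"]
    hosts = [value] if isinstance(value, str) else [str(h) for h in value]
    return "\n".join([f"[{group}]", *hosts]) + "\n"
```

To use it:

1. Capture the JSON with `subprocess.run(["terraform", "output", "-json"], capture_output=True, text=True, check=True).stdout`.
2. Pass that text to `build_inventory`.
3. Write the result to `inventory.ini` before running `ansible-playbook`.

The file is only a snapshot, so it must be regenerated whenever the infrastructure changes. The dynamic-inventory practice in the best-practices list avoids that step.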

4. Best Practices for Scalable Infrastructure

✅ Modular Infrastructure — Use Terraform modules for reusable infrastructure components. ✅ State Management — Store Terraform state in remote backends (S3, Terraform Cloud) for team collaboration. ✅ Use Dynamic Inventory in Ansible — Fetch Terraform-managed resources dynamically. ✅ Automate CI/CD Pipelines — Integrate Terraform and Ansible with Jenkins, GitHub Actions, or GitLab CI. ✅ Follow Security Best Practices — Use IAM roles, secrets management, and network security groups.

5. Conclusion

By combining Terraform and Ansible, teams can build scalable, automated, and well-managed cloud infrastructure.

Terraform ensures consistent provisioning across multiple cloud environments, while Ansible simplifies configuration management and application deployment.

WEBSITE: https://www.ficusoft.in/devops-training-in-chennai/

Understanding Docker Playground Online: Your Gateway to Containerization

In the ever-evolving world of software development, containerization has become a pivotal technology, allowing developers to create, deploy, and manage applications in isolated environments. Docker, a leader in this domain, has revolutionized how applications are built and run. For both novices and seasoned developers, mastering Docker is now essential, and one of the best ways to do this is by leveraging an Online Docker Playground. In this article, we will explore the benefits of using such a platform and delve into the Docker Command Line and Basic Docker Commands that form the foundation of containerization.

The Importance of Docker in Modern Development

Docker has gained immense popularity due to its ability to encapsulate applications and their dependencies into containers. These containers are lightweight, portable, and can run consistently across different computing environments, from a developer's local machine to production servers in the cloud. This consistency eliminates the "it works on my machine" problem, which has historically plagued developers.

As a developer, whether you are building microservices, deploying scalable applications, or managing a complex infrastructure, Docker is an indispensable tool. Understanding how to effectively use Docker begins with getting comfortable with the Docker Command Line Interface (CLI) and mastering the Basic Docker Commands.

Learning Docker with an Online Docker Playground

For beginners, diving into Docker can be daunting. The Docker ecosystem is vast, with numerous commands, options, and configurations to learn. This is where an Online Docker Playground comes in handy. An Online Docker Playground provides a sandbox environment where you can practice Docker commands without the need to install Docker locally on your machine. This is particularly useful for those who are just starting and want to experiment without worrying about configuring their local environment.

Using an Online Docker Playground offers several advantages:

Accessibility: You can access the playground from any device with an internet connection, making it easy to practice Docker commands anytime, anywhere.

No Installation Required: Skip the hassle of installing Docker and its dependencies on your local machine. The playground provides a ready-to-use environment.

Safe Experimentation: You can test commands and configurations in a risk-free environment without affecting your local system or production environment.

Immediate Feedback: The playground often includes interactive tutorials that provide instant feedback, helping you learn more effectively.

Getting Started with Docker Command Line

The Docker Command Line Interface (CLI) is the primary tool you'll use to interact with Docker. It's powerful, versatile, and allows you to manage your Docker containers and images with ease. The CLI is where you will issue commands to create, manage, and remove containers, among other tasks.

To begin, let's explore some Basic Docker Commands that you will frequently use in your journey to mastering Docker:

docker run: This command is used to create and start a new container from an image. For example, docker run hello-world runs the "hello-world" image in a new container, pulling it from Docker Hub first if it isn't already available locally.

docker ps: To see a list of running containers, use the docker ps command. To view all containers (running and stopped), you can add the -a flag: docker ps -a.

docker images: This command lists all the images stored locally on your machine. It shows details like the repository, tag, image ID, and creation date.

docker pull: To download an image from a registry such as Docker Hub, use docker pull. For example, docker pull nginx fetches the NGINX image tagged latest, which is the default tag when none is specified.

docker stop: To stop a running container, use docker stop [container_id]. Replace [container_id] with the actual ID or name of the container you want to stop.

docker rm: Once a container is stopped, you can remove it using docker rm [container_id].

docker rmi: If you want to delete an image from your local storage, use docker rmi [image_id].
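Several of these commands accept short image names, and the Docker CLI fills in defaults. For example, `docker pull nginx` actually refers to `docker.io/library/nginx:latest`. The simplified Python sketch below mimics that expansion so you can see what a short name resolves to:

- the default registry `docker.io`
- the `library/` namespace for official images
- the default tag `latest`

It is an illustration, not Docker's own parser, and it skips validation.

```python
def normalize_reference(ref: str) -> str:
    """Expand a short image reference roughly the way the Docker CLI does.

    Simplified illustration: no validation, but it applies the default
    registry (docker.io), the "library/" namespace for official images,
    and the default tag "latest" when no tag or digest is given.
    """
    name, _, digest = ref.partition("@")
    # A ":" after the last "/" starts a tag; a ":" before it is a registry port.
    colon, slash = name.rfind(":"), name.rfind("/")
    tag = ""
    if colon > slash:
        name, tag = name[:colon], name[colon + 1:]

    first, sep, rest = name.partition("/")
    # The first component is treated as a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", name
    if registry == "docker.io" and "/" not in path:
        path = "library/" + path  # official images live under library/

    result = f"{registry}/{path}"
    if tag:
        result += f":{tag}"
    if digest:
        return f"{result}@{digest}"
    return result if tag else f"{result}:latest"
```

For instance, `normalize_reference("hello-world")` returns `docker.io/library/hello-world:latest`, while a private-registry name such as `localhost:5000/app` keeps its host and only gains `:latest`.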

Conclusion

Mastering Docker is a crucial skill for modern developers, and utilizing an Online Docker Playground is one of the most effective ways to get started. By practicing Docker Command Line usage and familiarizing yourself with Basic Docker Commands, you can gain the confidence needed to manage complex containerized environments. As you progress, you'll find that Docker not only simplifies the deployment process but also enhances the scalability and reliability of your applications. Dive into Docker today, and unlock the full potential of containerization in your development workflow.

Continuous Integration and Deployment in AI: Anton R Gordon’s Best Practices with Jenkins and GitLab CI/CD

In the ever-evolving field of artificial intelligence (AI), continuous integration and deployment (CI/CD) pipelines play a crucial role in ensuring that AI models are consistently and efficiently developed, tested, and deployed. Anton R Gordon, an accomplished AI Architect, has honed his expertise in setting up robust CI/CD pipelines tailored specifically for AI projects. His approach leverages tools like Jenkins and GitLab CI/CD to streamline the development process, minimize errors, and accelerate the delivery of AI solutions. This article explores Anton’s best practices for implementing CI/CD pipelines in AI projects.

The Importance of CI/CD in AI Projects

AI projects often involve complex workflows, from data preprocessing and model training to validation and deployment. The integration of CI/CD practices into these workflows ensures that changes in code, data, or models are automatically tested and deployed in a consistent manner. This reduces the risk of errors, speeds up development cycles, and allows for more frequent updates to AI models, keeping them relevant and effective.

Jenkins: A Versatile Tool for CI/CD in AI

Jenkins is an open-source automation server that is widely used for continuous integration. Anton R Gordon’s expertise in Jenkins allows him to automate the various stages of AI development, ensuring that each component of the pipeline functions seamlessly. Here are some of his best practices for using Jenkins in AI projects:

Automating Model Training and Testing

Jenkins can be configured to automatically trigger model training and testing whenever changes are pushed to the repository. Anton sets up Jenkins pipelines that integrate with popular machine learning libraries like TensorFlow and PyTorch, ensuring that models are continuously updated and tested against new data.

Parallel Execution

AI projects often involve computationally intensive tasks. Anton leverages Jenkins' ability to execute tasks in parallel, distributing workloads across multiple machines or nodes. This significantly reduces the time required for model training and validation.

Version Control Integration

Integrating Jenkins with version control systems like Git allows Anton to track changes in code and data. This ensures that all updates are versioned and can be rolled back if necessary, providing a reliable safety net during the development process.

GitLab CI/CD: Streamlining AI Model Deployment

GitLab CI/CD is a powerful tool that integrates directly with GitLab repositories, offering seamless CI/CD capabilities. Anton R Gordon utilizes GitLab CI/CD to automate the deployment of AI models, ensuring that new versions of models are reliably and efficiently deployed to production environments. Here are some of his key practices:

Environment-Specific Deployments

Anton configures GitLab CI/CD pipelines to deploy AI models to different environments (e.g., staging, production) based on the branch or tag of the code. This ensures that models are thoroughly tested in a staging environment before being rolled out to production, reducing the risk of deploying untested or faulty models.
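The branch-or-tag routing described above can be expressed as a small decision function. This is a hypothetical sketch, not Anton's actual configuration. It reads GitLab's predefined variables `CI_COMMIT_TAG`, `CI_COMMIT_BRANCH`, and `CI_DEFAULT_BRANCH`. It then applies an assumed policy: release tags like `v1.4.0` deploy to production, the default branch deploys to staging, and anything else is not deployed. In a real pipeline the same logic usually lives in the `rules:` section of `.gitlab-ci.yml`.

```python
import os
import re
from typing import Mapping, Optional

# Hypothetical release-tag convention, e.g. "v1.4.0".
RELEASE_TAG = re.compile(r"v\d+\.\d+\.\d+")


def target_environment(env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Pick a deployment environment from GitLab's predefined CI variables.

    Assumed policy: release tags -> "production", default branch -> "staging",
    anything else -> None (no deployment).
    """
    tag = env.get("CI_COMMIT_TAG", "")          # set only in tag pipelines
    branch = env.get("CI_COMMIT_BRANCH", "")    # set in branch pipelines
    default_branch = env.get("CI_DEFAULT_BRANCH", "main")
    if tag and RELEASE_TAG.fullmatch(tag):
        return "production"
    if branch and branch == default_branch:
        return "staging"
    return None
```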

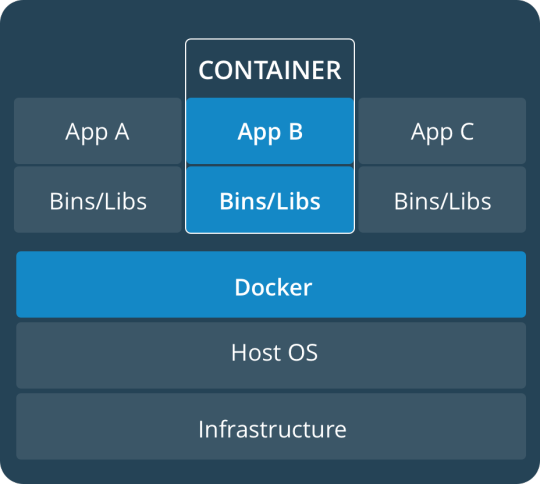

Docker Integration

To ensure consistency across different environments, Anton uses Docker containers within GitLab CI/CD pipelines. By containerizing AI models, he ensures that they run in the same environment, regardless of where they are deployed. This eliminates environment-related issues and streamlines the deployment process.

Automated Monitoring and Alerts

After deployment, it’s crucial to monitor the performance of AI models in real-time. Anton configures GitLab CI/CD pipelines to include automated monitoring tools that track model performance metrics. If the performance drops below a certain threshold, alerts are triggered, allowing for immediate investigation and remediation.
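A threshold alert of the kind described above reduces to comparing recent metric values with a floor. The sketch below is an illustration built on assumptions, not any specific monitoring tool. The following are all hypothetical:

- the metric name
- the threshold
- the averaging window
- the alert callback, which could post to a chat webhook or a pager

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class MetricCheck:
    name: str          # e.g. "accuracy" (hypothetical metric name)
    threshold: float   # alert when the recent average falls below this value
    window: int = 5    # number of most recent observations to average


def check_metric(check: MetricCheck, values: Sequence[float],
                 alert: Callable[[str], None]) -> bool:
    """Average the last `window` values; call `alert` and return True if below threshold."""
    recent = list(values)[-check.window:]
    if not recent:
        return False  # nothing observed yet, nothing to alert on
    avg = sum(recent) / len(recent)
    if avg < check.threshold:
        alert(f"{check.name} averaged {avg:.3f} over the last {len(recent)} runs "
              f"(threshold {check.threshold})")
        return True
    return False
```

Averaging over a short window is one design choice that keeps a single noisy evaluation from triggering an alert. Alerting on every individual value below the threshold is the stricter alternative.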

The Synergy of Jenkins and GitLab CI/CD

While Jenkins and GitLab CI/CD can each independently handle CI/CD tasks, Anton R Gordon often combines the strengths of both tools to create a more robust and flexible pipeline. Jenkins’ powerful automation capabilities complement GitLab’s streamlined deployment processes, resulting in a comprehensive CI/CD pipeline that covers the entire lifecycle of AI development and deployment.

Conclusion

Anton R Gordon’s expertise in CI/CD practices, particularly with Jenkins and GitLab CI/CD, has significantly advanced the efficiency and reliability of AI projects. By automating the integration, testing, and deployment of AI models, Anton ensures that these models are continuously refined and updated, keeping pace with the rapidly changing demands of the industry. His best practices serve as a blueprint for AI teams looking to implement or enhance their CI/CD pipelines, ultimately driving more successful AI deployments.

AWS Certified Solutions Architect - Associate (SAA-C03) Exam Guide by SK Singh

Unlock the potential of your AWS expertise with the "AWS Solutions Architect Associate Exam Guide." This comprehensive book prepares you for the AWS Certified Solutions Architect - Associate exam, ensuring you have the knowledge and skills to succeed.

Chapter 1 covers the evolution from traditional IT infrastructure to cloud computing, highlighting key features, benefits, deployment models, and cloud economics. Chapter 2 introduces AWS services and account setup, teaching access through the Management Console, CLI, SDK, IDE, and Infrastructure as Code (IaC).

In Chapter 3, master AWS Budgets, Cost Explorer, and Billing, along with cost allocation tags, multi-account billing, and cost-optimized architectures. Chapter 4 explores AWS Regions and Availability Zones, their importance, and how to select the right AWS Region, including AWS Outposts and Wavelength Zones.

Chapter 5 delves into IAM, covering users, groups, policies, roles, and best practices. Chapter 6 focuses on EC2, detailing instance types, features, use cases, security, and management exercises.

Chapter 7 explores S3 fundamentals, including buckets, objects, versioning, and security, with practical exercises. Chapter 8 covers advanced EC2 topics, such as instance types, purchasing options, and auto-scaling. Chapter 9 provides insights into scalability, high availability, load balancing, and auto-scaling strategies. Chapter 10 covers S3 storage classes, lifecycle policies, and cost-optimization strategies.

Chapter 11 explains DNS concepts and Route 53 features, including CloudFront and edge locations. Chapter 12 explores EFS, EBS, FSx, and other storage options. Chapter 13 covers CloudWatch, CloudTrail, AWS Config, and monitoring best practices. Chapter 14 dives into Amazon RDS, Aurora, DynamoDB, ElastiCache, and other database services.

Chapter 15 covers serverless computing with AWS Lambda and AWS Batch, and related topics like API Gateway and microservices. Chapter 16 explores Amazon SQS, SNS, AppSync, and other messaging services. Chapter 17 introduces Docker and container management on AWS, ECS, EKS, Fargate, and container orchestration. Chapter 18 covers AWS data analytics services like Athena, EMR, Glue, and Redshift.

Chapter 19 explores AWS AI/ML services such as SageMaker, Rekognition, and Comprehend. Chapter 20 covers AWS security practices, compliance requirements, and encryption techniques. Chapter 21 explains VPC, subnetting, routing, network security, VPN, and Direct Connect. Chapter 22 covers data backup, retention policies, and disaster recovery strategies.

Chapter 23 delves into cloud adoption strategies and AWS migration tools, including database migration and data transfer services. Chapter 24 explores AWS Amplify, AppSync, Device Farm, frontend services, and media services. Finally, Chapter 25 covers the AWS Well-Architected Framework and its pillars, teaching you to use the Well-Architected Tool to improve cloud architectures.

This guide includes practical exercises, review questions, and YouTube URLs for further learning. It is the ultimate resource for anyone aiming to get certified as AWS Certified Solutions Architect - Associate.

Order YOUR Copy NOW: https://amzn.to/3WQWU53 via @amazon

Docker and Kubernetes Training | Hyderabad

How to Store Images in Container Registries?

Introduction:

Container registries serve as central repositories for storing and managing container images, facilitating seamless deployment across various environments. However, optimizing image storage within these registries requires careful consideration of factors such as scalability, security, and performance.

Choose the Right Registry:

Selecting the appropriate container registry is the first step towards efficient image storage. Popular options include Docker Hub, Google Container Registry (GCR), Amazon Elastic Container Registry (ECR), and Azure Container Registry (ACR). Evaluate factors such as integration with existing infrastructure, pricing, security features, and geographical distribution to make an informed decision.

Image Tagging Strategy:

Implement a robust tagging strategy to organize and manage container images effectively. Use semantic versioning or timestamp-based tagging to denote image versions and updates clearly. Avoid relying on generic tags like "latest" for deployments. Such tags are mutable, so the image they point to can change between pulls, which leads to ambiguity and inconsistent deployments.
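As a sketch of such a strategy, the helper below produces two tags for each build: the semantic version itself, and a UTC timestamp plus short commit hash. It assumes that releases carry a MAJOR.MINOR.PATCH version and that the build knows its Git commit SHA. Neither tag is meant to be reused for a different image, which is the property "latest" lacks.

```python
import re
from datetime import datetime, timezone

# Semantic Versioning core: MAJOR.MINOR.PATCH with no leading zeros.
SEMVER = re.compile(r"(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)")


def release_tags(version: str, commit_sha: str, built_at: datetime) -> list[str]:
    """Return immutable tags for one build: the semver version and a
    timestamp-plus-commit tag that is unique per build.

    `built_at` should be timezone-aware; the tag layout is an illustrative
    assumption, not a registry requirement.
    """
    if not SEMVER.fullmatch(version):
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {version!r}")
    stamp = built_at.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    return [version, f"{stamp}-{commit_sha[:7]}"]
```

For a build of 1.4.2 from commit abc1234def at 2024-03-01 10:15 UTC, this yields the tags 1.4.2 and 20240301T101500Z-abc1234. Both use only characters that Docker allows in tags.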

Optimize Image Size:

Minimize image size by adhering to best practices such as using lightweight base images, leveraging multi-stage builds, and optimizing Dockerfiles. Removing unnecessary dependencies and layers helps reduce storage requirements and accelerates image pull times during deployment.

Security Considerations:

Prioritize security by implementing access controls, image signing, and vulnerability scanning within the container registry. Restrict image access based on user roles and permissions to prevent unauthorized usage. Regularly scan images for vulnerabilities and apply patches promptly to mitigate potential risks.

Automated Builds and CI/CD Integration:

Integrate container registries with continuous integration/continuous deployment (CI/CD) pipelines to automate image builds, testing, and deployment processes. Leverage tools like Jenkins, GitLab CI/CD, or GitHub Actions to streamline workflows and ensure consistent image updates across environments.

Content Trust and Image Signing:

Enable content trust mechanisms such as Docker Content Trust or Notary to ensure image authenticity and integrity. By digitally signing images and verifying signatures during pull operations, organizations can mitigate the risk of tampering and unauthorized modifications.

Data Backup and Disaster Recovery:

Implement robust backup and disaster recovery strategies to safeguard critical container images against data loss or corruption. Regularly back up registry data to redundant storage locations and establish procedures for swift restoration in the event of failures or disasters.

Performance Optimization:

Optimize registry performance by leveraging caching mechanisms, content delivery networks (CDNs), and geo-replication to reduce latency and improve image retrieval speeds. Distribute registry instances across multiple geographical regions to enhance availability and resilience.

Conclusion:

By following best practices such as selecting the right registry, optimizing image size, enforcing security measures, and integrating with CI/CD pipelines, organizations can streamline image management and enhance their containerized deployments without diving into complex coding intricacies.

Visualpath is the leading and best institute for learning Docker and Kubernetes online in Ameerpet, Hyderabad. We provide a Docker Online Training Course, and you will get the best course at an affordable cost.

Attend Free Demo

Call on - +91-9989971070.

Visit : https://www.visualpath.in/DevOps-docker-kubernetes-training.html

Blog : https://dockerandkubernetesonlinetraining.blogspot.com/

#docker and kubernetes training#docker online training#docker training in hyderabad#kubernetes training hyderabad#docker and kubernetes online training#docker online training hyderabad#kubernetes online training#kubernetes online training hyderabad

Dockers Stylish High-Quality Casual Trouser Pants For Men At Unmatched Prices

In the quest for an effortlessly stylish and versatile wardrobe, money remains a major determinant. But you don’t always have to empty your purse to achieve optimal class. There are several brands that allow you to express your fashion taste without breaking the bank. Among them, Dockers emerges as a beacon of both quality and affordability. Their range of men’s casual pants doesn’t just elevate your everyday style; it does so without burning a hole in your pocket.

The Dockers Legacy

Affordable Luxury

Sustainability In Style

An Array Of Styles For Every Occasion

Perks Of Men’s Casual Pants

Comfort And Convenience

The fundamental allure of men’s casual pants lies in their comfort and convenience. After a demanding day at work, the prospect of donning formal attire can feel cumbersome. That’s why opting for casual trousers becomes a practical choice, allowing you to transition from professional settings to informal after-work gatherings seamlessly with no wardrobe change. This not only enhances comfort but also reduces stress, fostering a more relaxed and productive environment.

The Capacity For Expression

While business attire often adheres to a more standardized and limited wardrobe, casual dressing provides a canvas for individual expression. Men’s casual pants allow for diverse styles that align more closely with personal preferences, reflecting one’s unique personality. This freedom of expression contributes to a more authentic and enjoyable work atmosphere, where employees can showcase their individuality through their chosen looks and styles.

Enhanced Toughness

Durability is a notable advantage of casual trousers. Designed and stitched to resist ripping, these pants are crafted with longevity in mind. The added layers and sturdy construction contribute to the pants’ robustness, ensuring they withstand the wear and tear of regular use. This durability is especially beneficial for those seeking long-lasting, reliable clothing that remains resilient in various settings.

Comfortable And Versatile

Men’s casual pants are synonymous with comfort. Their lightweight fabrics and comfortable designs make them ideal for quick trips to public places like grocery stores or restaurants. Beyond convenience, these trousers help maintain a polished appearance while offering a relaxed fit, allowing for ease of movement without compromising style. They are versatile enough to be worn in various settings, whether at work, home, or outdoor gatherings. With casual trouser pants, you can boast of having a well-rounded wardrobe.

Wide Variety

Conclusion

Dockers stylish high-quality casual trouser pants for men offer a harmonious blend of timeless elegance, craftsmanship, and affordability. Embrace the Dockers legacy and redefine your casual wardrobe with trousers that effortlessly combine sophistication and comfort. Elevate your style game without the hefty price tag – because Dockers understands that fashion should be accessible to all.

#DockersFashion#AffordableStyle#CasualChic#QualityCraftsmanship#SustainableFashion#VersatileWardrobe#ComfortAndStyle#FashionForEveryOccasion#ExpressYourself#DurableClothing#StylishComfort#WardrobeEssentials#FashionWithPurpose#MensStyle#BudgetFriendlyFashion

DevOps Certification Training at H2KInfosys

In today's fast-paced tech industry, staying competitive and relevant is crucial. As organizations increasingly adopt DevOps practices to enhance collaboration, boost efficiency, and accelerate software delivery, professionals with DevOps skills are in high demand. To meet this demand and help individuals advance their careers, H2K Infosys offers comprehensive DevOps certification training that equips learners with the knowledge and hands-on experience they need to excel in this dynamic field.

Why DevOps Certification Matters

DevOps is not just a buzzword; it's a fundamental shift in the way software development and IT operations work together. By obtaining a DevOps certification at H2K Infosys, you'll prove to employers that you have the skills and knowledge to streamline processes, automate tasks, and foster a culture of collaboration within an organization. Whether you're a software developer, system administrator, or IT manager, a DevOps certification is an investment in your future.

H2K Infosys: Your Trusted DevOps Training Partner

H2K Infosys has a sterling reputation as a provider of high-quality IT training and certification programs. The DevOps certification course at H2K Infosys is designed to meet the needs of beginners and experienced professionals alike. Here's why H2K Infosys is the ideal choice for your DevOps journey:

Comprehensive Curriculum: Our DevOps certification program covers the entire DevOps lifecycle, including version control, continuous integration, continuous deployment, automation, and collaboration. You'll gain proficiency in tools like Git, Jenkins, Docker, Kubernetes, and more.

Hands-On Experience: Theory is essential, but practical experience is equally crucial. H2K Infosys provides extensive hands-on labs and projects to ensure that you can apply what you've learned in real-world scenarios.

Expert Instructors: Our instructors are seasoned DevOps professionals with real-world experience. They are dedicated to guiding you through the intricacies of DevOps practices and technologies.

Flexibility: We understand that everyone has different schedules. Our DevOps certification program offers flexible options, including weekday and weekend classes, ensuring that you can acquire DevOps skills without disrupting your work or personal life.

Job Assistance: H2K Infosys goes the extra mile to help you secure a job in the DevOps field. We offer resume building, interview preparation, and job placement assistance to our certified students.

Tags: H2KInfosys, DevOps certification, H2K Infosys DevOps, DevOps tools, Devops certification H2K Infosys, Automation in DevOps, Best DevOps in GA USA, worldwide top rating AWS DevOps Certification | H2K Infosys, devops certification, aws devops certification, azure devops certification, devops training, devops training near me, devops tutorial, devops certification training course

Contact: +1-770-777-1269

Location: Atlanta, GA - USA, 5450 McGinnis Village Place, # 103 Alpharetta, GA 30005, USA.

Facebook: https://www.facebook.com/H2KInfosysLLC

Instagram: https://www.instagram.com/h2kinfosysllc/

Youtube: https://www.youtube.com/watch?v=fgOgOCT_6L4

Visit: https://www.h2kinfosys.com/courses/devops-online-training-course/

DevOps Course: bit.ly/45vT1nB

How to set up command-line access to Amazon Keyspaces (for Apache Cassandra) by using the new developer toolkit Docker image

Amazon Keyspaces (for Apache Cassandra) is a scalable, highly available, and fully managed Cassandra-compatible database service. Amazon Keyspaces helps you run your Cassandra workloads more easily by using a serverless database that can scale up and down automatically in response to your actual application traffic. Because Amazon Keyspaces is serverless, there are no clusters or nodes to provision and manage. You can get started with Amazon Keyspaces with a few clicks in the console or a few changes to your existing Cassandra driver configuration.

In this post, I show you how to set up command-line access to Amazon Keyspaces by using the keyspaces-toolkit Docker image. The keyspaces-toolkit Docker image contains commonly used Cassandra developer tooling. The toolkit comes with the Cassandra Query Language Shell (cqlsh) and is configured with best practices for Amazon Keyspaces. The container image is open source and also compatible with Apache Cassandra 3.x clusters.

A command line interface (CLI) such as cqlsh can be useful when automating database activities. You can use cqlsh to run one-time queries and perform administrative tasks, such as modifying schemas or bulk-loading flat files. You also can use cqlsh to enable Amazon Keyspaces features, such as point-in-time recovery (PITR) backups, and to assign resource tags to keyspaces and tables.

The following screenshot shows a cqlsh session connected to Amazon Keyspaces and the code to run a CQL create table statement.

Build a Docker image

To get started, download and build the Docker image so that you can run the keyspaces-toolkit in a container. A Docker image is the template for the complete and executable version of an application. It’s a way to package applications and preconfigured tools with all their dependencies. To build and run the image for this post, install the latest Docker engine and Git on the host or local environment. The following command builds the image from the source.
```bash
docker build --tag amazon/keyspaces-toolkit --build-arg CLI_VERSION=latest https://github.com/aws-samples/amazon-keyspaces-toolkit.git
```

The preceding command includes the following parameters:

--tag: The name of the image in the name:tag format. Leaving out the tag results in latest.

--build-arg CLI_VERSION: This allows you to specify the version of the base container. Docker images are composed of layers. If you’re using the AWS CLI Docker image, aligning versions significantly reduces the size and build times of the keyspaces-toolkit image.

Connect to Amazon Keyspaces

Now that you have a container image built and available in your local repository, you can use it to connect to Amazon Keyspaces. To use cqlsh with Amazon Keyspaces, create service-specific credentials for an existing AWS Identity and Access Management (IAM) user. The service-specific credentials enable IAM users to access Amazon Keyspaces, but not access other AWS services. The following command starts a new container running the cqlsh process.

```bash
docker run --rm -ti amazon/keyspaces-toolkit cassandra.us-east-1.amazonaws.com 9142 --ssl -u "SERVICEUSERNAME" -p "SERVICEPASSWORD"
```

The preceding command includes the following parameters:

run: The Docker command to start the container from an image. It’s the equivalent of running create and start.

--rm: Automatically removes the container when it exits, so a new container is created for each session or run.

-ti: Allocates a pseudo-TTY (t) and keeps STDIN open (i) even if not attached (remove i when user input is not required).

amazon/keyspaces-toolkit: The image name of the keyspaces-toolkit.

cassandra.us-east-1.amazonaws.com: The Amazon Keyspaces endpoint.

9142: The default SSL port for Amazon Keyspaces.

After connecting to Amazon Keyspaces, exit the cqlsh session and terminate the process by using the QUIT or EXIT command.

Drop-in replacement

Now, simplify the setup by assigning an alias (or DOSKEY for Windows) to the Docker command.
The alias acts as a shortcut, enabling you to use the alias keyword instead of typing the entire command. You will use cqlsh as the alias keyword so that you can use the alias as a drop-in replacement for your existing Cassandra scripts. The alias contains the parameter -v "$(pwd)":/source, which mounts the current directory of the host. This is useful for importing and exporting data with COPY or using the cqlsh --file command to load external cqlsh scripts.

    alias cqlsh='docker run --rm -ti -v "$(pwd)":/source amazon/keyspaces-toolkit cassandra.us-east-1.amazonaws.com 9142 --ssl'

For security reasons, don't store the user name and password in the alias. After setting up the alias, you can create a new cqlsh session with Amazon Keyspaces by calling the alias and passing in the service-specific credentials.

    cqlsh -u "SERVICEUSERNAME" -p "SERVICEPASSWORD"

Later in this post, I show how to use AWS Secrets Manager to avoid using plaintext credentials with cqlsh. You can use Secrets Manager to store, manage, and retrieve secrets.

Create a keyspace

Now that you have the container and alias set up, you can use the keyspaces-toolkit to create a keyspace by using cqlsh to run CQL statements. In Cassandra, a keyspace is the highest-order structure in the CQL schema and represents a grouping of tables. A keyspace is commonly used to define the domain of a microservice or to isolate clients in a multi-tenant strategy.

Amazon Keyspaces is serverless, so you don't have to configure clusters, hosts, or Java virtual machines to create a keyspace or table. When you create a new keyspace or table, it is associated with an AWS account and Region. Though a traditional Cassandra cluster is limited to 200 to 500 tables, with Amazon Keyspaces the number of keyspaces and tables for an account and Region is virtually unlimited.
The following command creates a new keyspace by using SingleRegionStrategy, which replicates data three times across multiple Availability Zones in a single AWS Region. Storage is billed by the raw size of a single replica, and there is no network transfer cost when replicating data across Availability Zones. Using keyspaces-toolkit, connect to Amazon Keyspaces and run the following command from within the cqlsh session.

    CREATE KEYSPACE amazon WITH REPLICATION = {'class': 'SingleRegionStrategy'} AND TAGS = {'domain' : 'shoppingcart' , 'app' : 'acme-commerce'};

The preceding command includes the following parameters:

REPLICATION – SingleRegionStrategy replicates data three times across multiple Availability Zones.

TAGS – A label that you assign to an AWS resource. For more information about using tags for access control, microservices, cost allocation, and risk management, see Tagging Best Practices.

Create a table

Previously, you created a keyspace without needing to define clusters or infrastructure. Now, you will add a table to your keyspace in a similar way. A Cassandra table definition looks like a traditional SQL create table statement with an additional requirement for a partition key and clustering keys. These keys determine how data in CQL rows is distributed, sorted, and uniquely accessed. Tables in Amazon Keyspaces have the following unique characteristics:

Virtually no limit to table size or throughput – In Amazon Keyspaces, a table's capacity scales up and down automatically in response to traffic. You don't have to manage nodes or consider node density. Performance stays consistent as your tables scale up or down.

Support for "wide" partitions – CQL partitions can contain a virtually unbounded number of rows without the need for additional bucketing and sharding partition keys for size. This allows you to scale partitions "wider" than the traditional Cassandra best practice of 100 MB.
No compaction strategies to consider – Amazon Keyspaces doesn't require defined compaction strategies. Because you don't have to manage compaction strategies, you can build powerful data models without having to consider the internals of the compaction process. Performance stays consistent even as write, read, update, and delete requirements change.

No repair process to manage – Amazon Keyspaces doesn't require you to manage a background repair process for data consistency and quality.

No tombstones to manage – With Amazon Keyspaces, you can delete data without the challenge of managing tombstone removal, table-level grace periods, or zombie data problems.

1 MB row quota – Amazon Keyspaces supports the Cassandra blob type, but storing blob data larger than 1 MB results in an exception. It's a best practice to store larger blobs across multiple rows or in Amazon Simple Storage Service (Amazon S3) object storage.

Fully managed backups – PITR helps protect your Amazon Keyspaces tables from accidental write or delete operations by providing continuous backups of your table data.

The following command creates a table in Amazon Keyspaces by using a cqlsh statement with custom properties specifying on-demand capacity mode, PITR enabled, and AWS resource tags. Using keyspaces-toolkit to connect to Amazon Keyspaces, run this command from within the cqlsh session.

    CREATE TABLE amazon.eventstore(
      id text,
      time timeuuid,
      event text,
      PRIMARY KEY(id, time))
    WITH CUSTOM_PROPERTIES = {
      'capacity_mode':{'throughput_mode':'PAY_PER_REQUEST'},
      'point_in_time_recovery':{'status':'enabled'}
    } AND TAGS = {'domain' : 'shoppingcart' , 'app' : 'acme-commerce' , 'pii': 'true'};

The preceding command includes the following parameters:

capacity_mode – Amazon Keyspaces has two read/write capacity modes for processing reads and writes on your tables. The default for new tables is on-demand capacity mode (the PAY_PER_REQUEST flag).
point_in_time_recovery – When you enable this parameter, you can restore an Amazon Keyspaces table to a point in time within the preceding 35 days. There is no overhead or performance impact from enabling PITR.

TAGS – Allows you to organize resources, define domains, specify environments, allocate cost centers, and label security requirements.

Insert rows

Before inserting data, check if your table was created successfully. Amazon Keyspaces performs data definition language (DDL) operations, such as creating and deleting tables, asynchronously. You can monitor the creation status of a new resource programmatically by querying the system schema table, and you can use a toolkit helper for exponential backoff.

Check for table creation status

Cassandra provides information about the running cluster in its system tables. With Amazon Keyspaces, there are no clusters to manage, but it still provides system tables for the Amazon Keyspaces resources in an account and Region. You can use the system tables to understand the creation status of a table. The system_schema_mcs keyspace is a new system keyspace with additional content related to serverless functionality. Using keyspaces-toolkit, run the following SELECT statement from within the cqlsh session to retrieve the status of the newly created table.

    SELECT keyspace_name, table_name, status FROM system_schema_mcs.tables WHERE keyspace_name = 'amazon' AND table_name = 'eventstore';

The following screenshot shows an example of output for the preceding CQL SELECT statement.

Insert sample data

Now that you have created your table, you can use CQL statements to insert and read sample data. Amazon Keyspaces requires all write operations (insert, update, and delete) to use the LOCAL_QUORUM consistency level for durability. With reads, an application can choose between eventual consistency and strong consistency by using the LOCAL_ONE or LOCAL_QUORUM consistency level, respectively.
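The table-status check above lends itself to automation: before running the inserts, a script can poll the table status and back off exponentially between polls. The following Python sketch shows only the general pattern; it is not the keyspaces-toolkit's own backoff helper. The function name wait_for_table_active and the get_status callback are hypothetical. In practice, get_status would run the system_schema_mcs.tables query through a Cassandra driver and return the status column, which this sketch assumes reads 'ACTIVE' once the table is ready.

```python
import time
from typing import Callable


def wait_for_table_active(
    get_status: Callable[[], str],
    max_attempts: int = 8,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Poll get_status() until it returns 'ACTIVE', doubling the delay between polls.

    Returns the number of polls used. Raises TimeoutError if the table is still
    not ACTIVE after max_attempts polls.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    delay = base_delay
    status = None
    for attempt in range(1, max_attempts + 1):
        # Hypothetical callback: would run the system_schema_mcs.tables query.
        status = get_status()
        if status == "ACTIVE":
            return attempt
        if attempt < max_attempts:
            sleep(delay)
            delay = min(delay * 2, max_delay)  # exponential backoff, capped at max_delay
    raise TimeoutError(f"table not ACTIVE after {max_attempts} polls (last status: {status})")


# Simulated run: the table reports CREATING twice, then ACTIVE.
statuses = iter(["CREATING", "CREATING", "ACTIVE"])
delays = []
attempts = wait_for_table_active(lambda: next(statuses), sleep=delays.append)
# attempts == 3, delays == [1.0, 2.0]
```

Injecting sleep keeps the function testable without real waiting; capping the delay prevents very long pauses if table creation is slow.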
The benefits of eventual consistency in Amazon Keyspaces are higher availability and reduced cost. See the following code.

    CONSISTENCY LOCAL_QUORUM;
    INSERT INTO amazon.eventstore(id, time, event) VALUES ('1', now(), '{eventtype:"click-cart"}');
    INSERT INTO amazon.eventstore(id, time, event) VALUES ('2', now(), '{eventtype:"showcart"}');
    INSERT INTO amazon.eventstore(id, time, event) VALUES ('3', now(), '{eventtype:"clickitem"}') IF NOT EXISTS;
    SELECT * FROM amazon.eventstore;

The preceding code uses IF NOT EXISTS, a lightweight transaction (LWT), to perform a conditional write. With Amazon Keyspaces, there is no heavy performance penalty for using lightweight transactions; they have performance characteristics similar to standard insert, update, and delete operations.

The following screenshot shows the output from running the preceding statements in a cqlsh session. The three INSERT statements added three unique rows to the table, and the SELECT statement returned all the data within the table.

Export table data to your local host

You now can export the data you just inserted by using the cqlsh COPY TO command. This command exports the data to the /source directory, which is the host's current working directory that you mounted when you created the alias. The following cqlsh statement exports your table data to the export.csv file on the host machine.

    CONSISTENCY LOCAL_ONE;
    COPY amazon.eventstore(id, time, event) TO '/source/export.csv' WITH HEADER=false;

The following screenshot shows the output of the preceding command from the cqlsh session. After the COPY TO command finishes, you should be able to view export.csv in the current working directory of the host machine. For more information about tuning export and import processes when using cqlsh COPY TO, see Loading data into Amazon Keyspaces with cqlsh.

Use credentials stored in Secrets Manager

Previously, you used service-specific credentials to connect to Amazon Keyspaces.
In the following example, I show how to use the keyspaces-toolkit helpers to store and access service-specific credentials in Secrets Manager. The helpers are a collection of scripts bundled with keyspaces-toolkit to assist with common tasks. By overriding the default entry point (cqlsh), you can call the aws-sm-cqlsh.sh script, a wrapper around the cqlsh process that retrieves the Amazon Keyspaces service-specific credentials from Secrets Manager and passes them to the cqlsh process. This script allows you to avoid hard-coding the credentials in your scripts. The following diagram illustrates this architecture.

Configure the container to use the host's AWS CLI credentials

The keyspaces-toolkit extends the AWS CLI Docker image, making keyspaces-toolkit extremely lightweight. Because you may already have the AWS CLI Docker image in your local repository, keyspaces-toolkit adds only an additional 10 MB layer extension to the AWS CLI. This is approximately 15 times smaller than using cqlsh from the full Apache Cassandra 3.11 distribution.

The AWS CLI runs in a container and doesn't have access to the AWS credentials stored on the container's host. You can share credentials with the container by mounting the ~/.aws directory. Mount the host directory to the container by using the -v parameter. To validate a proper setup, the following command lists current AWS CLI named profiles.

    docker run --rm -ti -v ~/.aws:/root/.aws --entrypoint aws amazon/keyspaces-toolkit configure list-profiles

The ~/.aws directory is a common location for the AWS CLI credentials file. If you configured the container correctly, you should see a list of profiles from the host credentials. For instructions about setting up the AWS CLI, see Step 2: Set Up the AWS CLI and AWS SDKs.
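To make the helper's role concrete, here is a Python sketch of the idea behind a wrapper such as aws-sm-cqlsh.sh: take the secret's JSON string and turn it into cqlsh connection arguments. It is not the actual script. The function names are hypothetical, the JSON fields mirror the create-secret example in this post, and fetching the secret from Secrets Manager (for example, through the AWS CLI or an AWS SDK) is left out so that the sketch runs offline.

```python
import json
from typing import List


def build_secret_string(username: str, password: str, host: str, port: int = 9142) -> str:
    """Serialize credentials into the JSON shape used by this post's create-secret example."""
    return json.dumps({
        "username": username,
        "password": password,
        "engine": "cassandra",
        "host": host,
        "port": str(port),  # stored as a string, as in the example secret
    })


def cqlsh_args_from_secret(secret_string: str) -> List[str]:
    """Turn the secret's JSON into a cqlsh argument list: host, port, --ssl, -u, -p."""
    secret = json.loads(secret_string)
    return [
        "cqlsh",
        secret["host"],
        str(secret["port"]),
        "--ssl",
        "-u", secret["username"],
        "-p", secret["password"],
    ]


secret_string = build_secret_string(
    "SERVICEUSERNAME", "SERVICEPASSWORD", "cassandra.us-east-1.amazonaws.com"
)
args = cqlsh_args_from_secret(secret_string)
```

Generating the JSON with json.dumps, instead of typing it by hand inside a shell command, also avoids nested-quote mistakes when passwords contain quote characters.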
Store credentials in Secrets Manager

Now that you have configured the container to access the host's AWS CLI credentials, you can use the Secrets Manager API to store the Amazon Keyspaces service-specific credentials in Secrets Manager. The secret name keyspaces-credentials in the following command is also used in subsequent steps.

    docker run --rm -ti -v ~/.aws:/root/.aws --entrypoint aws amazon/keyspaces-toolkit secretsmanager create-secret --name keyspaces-credentials --description "Store Amazon Keyspaces Generated Service Credentials" --secret-string '{"username":"SERVICEUSERNAME","password":"SERVICEPASSWORD","engine":"cassandra","host":"SERVICEENDPOINT","port":"9142"}'

The preceding command includes the following parameters:

--entrypoint – The default entry point is cqlsh, but this command uses this flag to access the AWS CLI.

--name – The name used to identify the key to retrieve the secret in the future.

--secret-string – Stores the service-specific credentials. Replace SERVICEUSERNAME and SERVICEPASSWORD with your credentials. Replace SERVICEENDPOINT with the service endpoint for the AWS Region. The JSON is wrapped in single quotes so that the shell passes its inner double quotes through unchanged.

Creating and storing secrets requires CreateSecret and GetSecretValue permissions in your IAM policy. As a best practice, rotate secrets periodically when storing database credentials.

Use the Secrets Manager helper script

Use the Secrets Manager helper script to sign in to Amazon Keyspaces by replacing the user and password fields with the secret name from the preceding command, keyspaces-credentials.

    docker run --rm -ti -v ~/.aws:/root/.aws --entrypoint aws-sm-cqlsh.sh amazon/keyspaces-toolkit keyspaces-credentials --ssl --execute "DESCRIBE Keyspaces"

The preceding command includes the following parameters:

-v – Used to mount the directory containing the host's AWS CLI credentials file.

--entrypoint – Use the helper by overriding the default entry point of cqlsh to access the Secrets Manager helper script, aws-sm-cqlsh.sh.
keyspaces-credentials – The key to access the credentials stored in Secrets Manager.

--execute – Runs a CQL statement.

Update the alias

You now can update the alias so that your scripts don't contain plaintext passwords. You also can manage users and roles through Secrets Manager. The following code sets up a new alias that uses the keyspaces-toolkit Secrets Manager helper to retrieve the service-specific credentials from Secrets Manager and pass them to cqlsh.

    alias cqlsh='docker run --rm -ti -v ~/.aws:/root/.aws -v "$(pwd)":/source --entrypoint aws-sm-cqlsh.sh amazon/keyspaces-toolkit keyspaces-credentials --ssl'

To have the alias available in every new terminal session, add the alias definition to your .bashrc file, which is read by every new interactive Bash shell. You can usually find this file at $HOME/.bashrc, or you can keep aliases in $HOME/.bash_aliases (loaded by $HOME/.bashrc).

Validate the alias

Now that you have updated the alias with the Secrets Manager helper, you can use cqlsh without the Docker details or credentials, as shown in the following code.

    cqlsh --execute "DESCRIBE TABLE amazon.eventstore;"

The following screenshot shows the running of the cqlsh DESCRIBE TABLE statement by using the alias created in the previous section. In the output, you should see the table definition of the amazon.eventstore table you created in the previous step.

Conclusion

In this post, I showed how to get started with Amazon Keyspaces and the keyspaces-toolkit Docker image. I used Docker to build an image and run a container for a consistent and reproducible experience. I also used an alias to create a drop-in replacement for existing scripts, and used built-in helpers to integrate cqlsh with Secrets Manager to store service-specific credentials.

Now you can use the keyspaces-toolkit with your Cassandra workloads. As a next step, you can store the image in Amazon Elastic Container Registry, which allows you to access the keyspaces-toolkit from CI/CD pipelines and other AWS services such as AWS Batch.
Additionally, you can control the image lifecycle of the container across your organization. You can even attach lifecycle policies that expire images based on age or image count. For more information, see Pushing an image.

Cheat sheet of useful commands

I did not cover the following commands in this blog post, but they will be helpful when you work with cqlsh, AWS CLI, and Docker.

    --- Docker ---
    #To view the logs from the container. Helpful when debugging
    docker logs CONTAINERID
    #Exit code of the container. Helpful when debugging
    docker inspect CONTAINERID --format='{{.State.ExitCode}}'

    --- CQL ---
    #Describe keyspace to view keyspace definition
    DESCRIBE KEYSPACE keyspace_name;
    #Describe table to view table definition
    DESCRIBE TABLE keyspace_name.table_name;
    #Select samples with limit to minimize output
    SELECT * FROM keyspace_name.table_name LIMIT 10;

    --- Amazon Keyspaces CQL ---
    #Change provisioned capacity for tables
    ALTER TABLE keyspace_name.table_name WITH custom_properties={'capacity_mode':{'throughput_mode': 'PROVISIONED', 'read_capacity_units': 4000, 'write_capacity_units': 3000}} ;
    #Describe current capacity mode for tables
    SELECT keyspace_name, table_name, custom_properties FROM system_schema_mcs.tables where keyspace_name = 'amazon' and table_name='eventstore';

    --- Linux ---
    #Total line count of all files under the current directory
    find . -type f -exec cat {} + | wc -l
    #Remove header from csv
    sed -i '1d' myData.csv

About the Author

Michael Raney is a Solutions Architect with Amazon Web Services.

https://aws.amazon.com/blogs/database/how-to-set-up-command-line-access-to-amazon-keyspaces-for-apache-cassandra-by-using-the-new-developer-toolkit-docker-image/


A Beginner’s Guide to Docker: Building and Running Containers in DevOps

Docker has revolutionized the way applications are built, shipped, and run in the world of DevOps. As a containerization platform, Docker enables developers to package applications and their dependencies into lightweight, portable containers, ensuring consistency across environments. This guide introduces Docker’s core concepts and practical steps to get started.

1. What is Docker?

Docker is an open-source platform that allows developers to:

Build and package applications along with their dependencies into containers.

Run these containers consistently across different environments.

Simplify software development, deployment, and scaling processes.

2. Why Use Docker in DevOps?

Environment Consistency: Docker containers ensure that applications run the same way in development, testing, and production.

Speed: Containers start quickly and use system resources efficiently.

Portability: Containers can run on any system that supports Docker, whether it’s a developer’s laptop, an on-premises server, or the cloud.

Microservices Architecture: Docker works seamlessly with microservices, enabling developers to build, deploy, and scale individual services independently.

3. Key Docker Components

Docker Engine: The core runtime for building and running containers.

Images: A blueprint for containers that includes the application and its dependencies.

Containers: Lightweight, isolated instances of images.

Dockerfile: A script containing instructions to build a Docker image.

Docker Hub: A repository for sharing Docker images.

4. Getting Started with Docker

Step 1: Install Docker

Download and install Docker Desktop for your operating system from Docker's official site.

Step 2: Write a Dockerfile

Create a Dockerfile to define your application environment.

Example for a Python app:

    # Use an official Python runtime as a base image
    FROM python:3.9-slim

    # Set the working directory
    WORKDIR /app

    # Copy project files
    COPY . .

    # Install dependencies
    RUN pip install -r requirements.txt

    # Define the command to run the app
    CMD ["python", "app.py"]

Step 3: Build the Docker Image

Run the following command to build the image:

    docker build -t my-python-app .

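Step 4: Run the Container

Start a container from your image in the background (-d):

    docker run -d -p 5000:5000 my-python-app

This maps port 5000 of the container to port 5000 on your host machine. For the mapping to work, the app inside the container must listen on port 5000 on all interfaces (0.0.0.0), not only on localhost.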

Step 5: Push to Docker Hub

Share your image by pushing it to Docker Hub. Log in first with docker login, and replace username with your Docker Hub user name:

    docker tag my-python-app username/my-python-app
    docker push username/my-python-app
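A tag such as username/my-python-app packs several parts into one string. The following Python sketch splits an image reference into registry, repository, tag, and digest, applying the usual defaults (Docker Hub as the registry, library/ for single-name official images, and latest when no tag or digest is given). It is a simplified, hypothetical helper: parse_image_ref and ImageRef are not Docker APIs, and the sketch skips the name validation Docker itself performs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ImageRef:
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference like 'username/my-python-app:1.0' into its parts."""
    name, _, digest = ref.partition("@")
    first, sep, rest = name.partition("/")
    # The first component is a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", name
    # A tag is a ':' in the last path component (an earlier ':' would be a registry port).
    tag = None
    if ":" in path.rsplit("/", 1)[-1]:
        path, tag = path.rsplit(":", 1)
    if registry == "docker.io" and "/" not in path:
        path = "library/" + path  # Docker Hub official images live under library/
    if tag is None and not digest:
        tag = "latest"  # default applied when no tag (or digest) is given
    return ImageRef(registry, path, tag, digest or None)


pushed = parse_image_ref("username/my-python-app")
local = parse_image_ref("localhost:5000/my-python-app:1.0")
```

Here pushed resolves to Docker Hub with the latest tag, while local keeps its registry host and port.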

5. Practical Use Cases in DevOps

Continuous Integration/Continuous Deployment (CI/CD):

Docker is commonly used in pipelines for building, testing, and deploying applications.

Microservices:

Each service runs in its own container, isolated from others.

Scalability:

Containers can be easily scaled up or down based on demand.

Testing:

Test environments can be quickly spun up and torn down using Docker containers.

6. Best Practices

Keep Docker images small by using minimal base images.

Avoid hardcoding sensitive data into images; supply it at run time instead, for example through environment variables.

Use Docker Compose to manage multi-container applications.

Regularly scan images for vulnerabilities, for example with Docker Scout.
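As a sketch of the practice of keeping secrets out of images, the app.py from the earlier Dockerfile example could read sensitive settings from the environment when the container starts. DB_PASSWORD and get_required_env are hypothetical names used only for illustration.

```python
import os


def get_required_env(name: str) -> str:
    """Return a setting supplied at run time, failing fast if it is missing or empty."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"missing required environment variable: {name}")
    return value


# In app.py (hypothetical): read the secret at start-up instead of hardcoding it.
# db_password = get_required_env("DB_PASSWORD")
```

The value would then be passed when the container starts, for example with docker run -e DB_PASSWORD=... my-python-app, so it never becomes part of an image layer.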

Conclusion

Docker simplifies the development and deployment process, making it a cornerstone of modern DevOps practices. By understanding its basics and starting with small projects, beginners can quickly leverage Docker to enhance productivity and streamline workflows.


Introduction to the framework

Programming paradigms

Over time, different ways of writing code in computer languages have been introduced. A programming paradigm is a way to classify programming languages based on their features, for example:

Functional programming

Object-oriented programming.

Some computer languages support multiple paradigms. Broadly, programming languages fall into two groups: non-structured programming languages and structured programming languages. Structured programming languages are further divided into two categories: block-structured (functional) programming and event-driven programming. A non-structured programming language has the following characteristics:

It is among the earliest kinds of programming language.

Code is written as a flat series of statements.

Flow control uses GOTO statements.

Programs become complex as the number of lines increases. Examples include BASIC, FORTRAN, and COBOL.

Programs are often considered as theories of a formal logic, and computations as deductions in that logical space.

Non-structured programming may greatly simplify writing parallel programs.

Structured programming languages have the following characteristics:

A programming paradigm that uses statements that change a program's state.

Structured programming focuses on describing how a program operates.

Just as the imperative mood in natural language expresses commands, an imperative program consists of commands for the computer to perform.

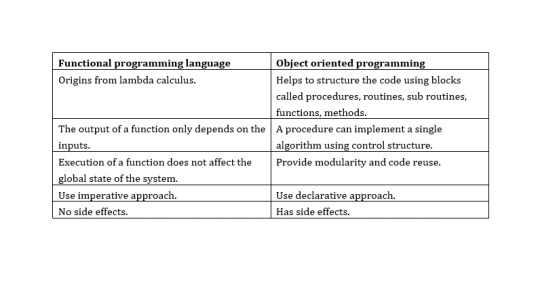

Functional programming and object-oriented programming differ in many ways.

Lambda calculus is a formal system in mathematical logic for expressing computation based on function abstraction and application using variable binding and substitution. In C#, for example, a lambda expression is an anonymous function that you can use to create delegates or expression tree types. By using lambda expressions, you can write local functions that can be passed as arguments or returned as the value of function calls. A lambda expression is the most convenient way to create such a delegate. Here is an example of a simple lambda expression that defines the "plus one" function.

λx.x+1
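The same idea in Python, used here only as an illustration since delegates and expression trees are C#-specific: λx.x+1 becomes an anonymous lambda that can be passed as an argument or returned from a function call. apply_twice and make_adder are hypothetical helper names.

```python
from typing import Callable


def apply_twice(f: Callable[[int], int], x: int) -> int:
    """A lambda can be passed as an argument..."""
    return f(f(x))


def make_adder(n: int) -> Callable[[int], int]:
    """...and returned as the value of a function call."""
    return lambda x: x + n


plus_one_result = (lambda x: x + 1)(41)      # λx.x+1 applied to 41 gives 42
twice_result = apply_twice(lambda x: x + 1, 5)  # 5 + 1 + 1 gives 7
add_ten = make_adder(10)
adder_result = add_ten(5)                    # 5 + 10 gives 15
```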

No side effects: in computer science, an operation, function, or expression is said to have a side effect if it modifies some state variable value(s) outside its local environment, that is, if it has an observable effect besides returning a value to the invoker of the operation. Referential transparency, an oft-touted property of functional languages, makes it easier to reason about the behavior of programs.
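A small Python illustration of both ideas: impure_increment has a side effect (it changes a module-level counter), so the same call can return different results; pure_increment has none, so any call can be replaced by its value, which is what referential transparency means in practice. The function names are made up for this example.

```python
counter = 0


def impure_increment(x: int) -> int:
    """Side effect: modifies the module-level counter, so results depend on call history."""
    global counter
    counter += 1
    return x + counter


def pure_increment(x: int) -> int:
    """No side effects: the result depends only on x, so pure_increment(1) can always be replaced by 2."""
    return x + 1


first = impure_increment(1)   # counter becomes 1, returns 2
second = impure_increment(1)  # counter becomes 2, returns 3: same argument, different result
```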

Key features of object-oriented programming

Object-oriented programming languages have the following major features:

Encapsulation - Encapsulation is one of the basic concepts in object-oriented programming. It describes the idea of bundling the data and methods that work on that data within an entity.

Inheritance - Inheritance is one of the basic concepts of object-oriented programming. It is a mechanism for deriving one class from another, so that the derived class shares a set of the base class's characteristics and resources.

Polymorphism - Polymorphism is an object-oriented programming concept that refers to the ability of a variable, function, or object to take several forms.

Encapsulation - Encapsulation means including inside a program object all the resources that the object needs to function, basically, the methods and the data.

These features refer to the creation of self-contained modules that bind processing functions to the data. These user-defined data types are called "classes", and one instance of a class is an "object".
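A compact Python sketch tying the three features together. Shape, Rectangle, and Circle are illustrative names. Python enforces encapsulation only by convention (the leading underscore), unlike languages with private access modifiers.

```python
import math


class Shape:
    """Base class: keeps its data internal and exposes it through methods."""

    def __init__(self, name: str) -> None:
        self._name = name  # leading underscore: internal by convention

    @property
    def name(self) -> str:
        return self._name

    def area(self) -> float:
        raise NotImplementedError


class Rectangle(Shape):
    """Inheritance: Rectangle derives from Shape and reuses its name handling."""

    def __init__(self, width: float, height: float) -> None:
        super().__init__("rectangle")
        self._width = width
        self._height = height

    def area(self) -> float:
        return self._width * self._height


class Circle(Shape):
    def __init__(self, radius: float) -> None:
        super().__init__("circle")
        self._radius = radius

    def area(self) -> float:
        return math.pi * self._radius ** 2


# Polymorphism: one call site (s.area()), different behavior per class.
shapes = [Rectangle(2, 3), Circle(1)]  # objects: instances of the classes
areas = {s.name: s.area() for s in shapes}
```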


How is event-driven programming different from other programming paradigms?

Event-driven programming focuses on events triggered outside the system, such as:

User events

Schedulers/timers

Sensors, messages, and hardware interrupts

It is mostly associated with systems that have a GUI, where users interact with GUI elements. Event listeners act when events are triggered (fired). An internal event loop is used to identify events and then call the necessary handler.
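The listener, loop, and handler structure described above can be sketched in a few lines of Python. This EventLoop class is a hypothetical teaching example, not a GUI toolkit's API; a real GUI loop keeps waiting for new events, whereas this one stops once its queue is empty.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Tuple

Handler = Callable[[dict], None]


class EventLoop:
    """Minimal event loop: queue events, then dispatch each one to its registered listeners."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = {}
        self._queue: Deque[Tuple[str, dict]] = deque()

    def on(self, event_type: str, handler: Handler) -> None:
        """Register a listener for an event type."""
        self._handlers.setdefault(event_type, []).append(handler)

    def emit(self, event_type: str, payload: dict) -> None:
        """Queue an event; it is handled when the loop runs, not immediately."""
        self._queue.append((event_type, payload))

    def run(self) -> None:
        """Drain the queue, calling the matching handlers for each event in order."""
        while self._queue:
            event_type, payload = self._queue.popleft()
            for handler in self._handlers.get(event_type, []):
                handler(payload)


log: List[str] = []
loop = EventLoop()
loop.on("click", lambda e: log.append(f"clicked {e['button']}"))  # user event
loop.on("timer", lambda e: log.append(f"tick {e['n']}"))          # timer event
loop.emit("click", {"button": "OK"})
loop.emit("timer", {"n": 1})
loop.emit("keypress", {"key": "q"})  # no listener registered, so it is ignored
loop.run()
```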

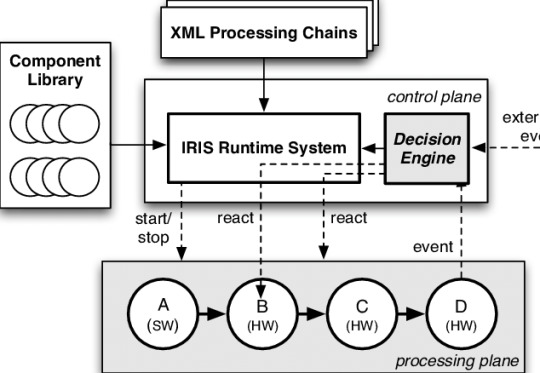

Software Run-time Architecture

A software architecture describes the design of a software system in terms of model components and connectors. However, architectural models can also be used at run time to enable architecture recovery and architecture adaptation. Languages can be classified according to the way they are processed and executed:

Compiled language

Scripting language

Markup language

Communication between an application and the OS needs additional components, depending on the type of language used to develop the application components.

Compiled language

A compiled language is a programming language whose implementations are typically compilers rather than interpreters.

Some executables can run directly on the OS, for example, C programs on Windows. Other executables use virtual run-time machines, for example, Java and .NET.

Scripting language

A scripting or script language is a programming language that supports scripts: programs written for a specific run-time environment that automate the execution of tasks that could alternatively be performed one by one by a human operator.

The source code is not compiled; it is executed directly. At execution time, the code is interpreted by a run-time machine. Examples include PHP and JavaScript.

Markup Language

A markup language is a computer language that uses tags to define elements within a document.

There is no execution process for a markup language. A tool that understands the markup language can render the output. Examples include HTML and XML.

Some other tools are used to run the system at different levels:

Virtual machine

Containers (Docker)


A virtual machine function is a function for the realization of virtual machine environments. It enables the creation of several independent virtual machines on one physical machine by virtualizing the physical machine's resources, such as CPU, memory, network, and disk.

Development Tools

A programming tool or software development tool is a computer program used by software developers to create, debug, maintain, or otherwise support other programs and applications. Computer-aided software engineering (CASE) tools are used throughout the software engineering life cycle:

Requirements – surveying tools, analyzing tools.

Designing – modelling tools

Development – code editors, frameworks, libraries, plugins, compilers.

Testing – test automation tools, quality assurance tools.

Implementation – VMs, containers (Docker), servers.

Maintenance – bug trackers, analytical tools.

CASE software types

Individual tools – for specific task.

Workbenches – multiple tools are combined, focusing on specific part of SDLC.

Environment – combines many tools to support many activities throughout the SDLC.

Frameworks vs. libraries vs. plugins

Plugins

Plugins provide a specific tool for development. At development time, the plugin is placed in the project and configured using code. At run time, it is plugged in through that configuration.
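One common way to realize "plugged in through the configuration" is a registry of named plugins plus a configuration that lists which ones to enable. The Python sketch below assumes that design; PLUGINS, plugin, and run_plugins are hypothetical names, and the config dictionary stands in for a file that would normally be loaded at start-up.

```python
from typing import Callable, Dict, List

# Registry of available plugins, filled in at development time via the decorator.
PLUGINS: Dict[str, Callable[[str], str]] = {}


def plugin(name: str) -> Callable[[Callable[[str], str]], Callable[[str], str]]:
    """Decorator that registers a text-processing plugin under a name."""
    def register(func: Callable[[str], str]) -> Callable[[str], str]:
        PLUGINS[name] = func
        return func
    return register


@plugin("upper")
def to_upper(text: str) -> str:
    return text.upper()


@plugin("strip")
def strip_spaces(text: str) -> str:
    return text.strip()


def run_plugins(text: str, config: Dict[str, List[str]]) -> str:
    """At run time, apply only the plugins listed in the configuration, in order."""
    for name in config.get("enabled_plugins", []):
        text = PLUGINS[name](text)
    return text


config = {"enabled_plugins": ["strip", "upper"]}  # hypothetical configuration
result = run_plugins("  hello  ", config)
```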

Libraries

Libraries provide an API that the coder can use to develop features when writing code. At development time, you:

Add the library to the project (source code files, modules, packages, executable etc.)

Call the necessary functions/methods using the given packages/modules/classes.

At run time, the code calls the library.

Framework

A framework is a collection of libraries, tools, rules, structures, and controls for the creation of software systems. At development time, you:

Create the structure of the application.

Place code in the necessary places.

May use the given libraries to write code.

Include additional libraries and plugins.

At run time, the framework calls your code.
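The difference in who calls whom (often called inversion of control) can be shown in a few lines of Python. json is a real standard-library module used here as an ordinary library; MiniFramework is a hypothetical toy standing in for a framework, which owns the control flow and calls the application code you register.

```python
import json
from typing import Callable, Dict


def library_style() -> str:
    """Library style: our code is in control and calls json when it chooses."""
    return json.dumps({"status": "ok"})


class MiniFramework:
    """Toy framework: you register handlers, and it decides when to call them."""

    def __init__(self) -> None:
        self._routes: Dict[str, Callable[[], str]] = {}

    def route(self, path: str) -> Callable[[Callable[[], str]], Callable[[], str]]:
        def register(func: Callable[[], str]) -> Callable[[], str]:
            self._routes[path] = func
            return func
        return register

    def handle(self, path: str) -> str:
        handler = self._routes.get(path)
        return handler() if handler else "404 Not Found"


app = MiniFramework()


@app.route("/hello")
def hello() -> str:
    # Application code, placed where the framework expects it.
    return "Hello from application code"


hello_response = app.handle("/hello")      # the framework calls hello()
missing_response = app.handle("/missing")  # no handler registered
```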

A web application framework may provide

User session management.

Data storage.

A web template system.

A desktop application framework may provide

User interface functionality.

Widgets.

Frameworks are concrete

A framework consists of physical components that are usable files during production. Java and .NET frameworks are sets of concrete components such as JARs and DLLs.

A framework is incomplete

A framework's structure is not usable in its own right; it leaves parts for its users to fill in. The framework alone will not work: the relevant application logic must be implemented and deployed along with the framework. This creates a trade-off between the learning curve and the coding time saved.

Framework helps solving recurring problems

Frameworks are very reusable because they help with many recurring problems. Building a framework that addresses such problems can also have commercial value.

Framework drives the solution

The framework directs the overall architecture of a specific solution. For example, if the JEE framework is used for an enterprise application, the application must follow the JEE rules.

Importance of frameworks in enterprise application development

Using code that other programmers have already built and tested enhances reliability and reduces programming time. Framework code can take care of lower-level "handling" tasks. Frameworks often help enforce platform-specific best practices and rules.


docker commands cheat sheet free EEC#

💾 ►►► DOWNLOAD FILE 🔥🔥🔥🔥🔥 docker exec -it /bin/sh. docker restart. Show running container stats. Docker Cheat Sheet All commands below are called as options to the base docker command. for more information on a particular command. To enter a running container, attach a new shell process to a running container called foo, use: docker exec -it foo /bin/bash . Images are just. Reference - Best Practices. Creates a mount point with the specified name and marks it as holding externally mounted volumes from native host or other containers. FROM can appear multiple times within a single Dockerfile in order to create multiple images. The tag or digest values are optional. If you omit either of them, the builder assumes a latest by default. The builder returns an error if it cannot match the tag value. Normal shell processing does not occur when using the exec form. There can only be one CMD instruction in a Dockerfile. If the user specifies arguments to docker run then they will override the default specified in CMD. To include spaces within a LABEL value, use quotes and backslashes as you would in command-line parsing. The environment variables set using ENV will persist when a container is run from the resulting image. Match rules. Prepend exec to get around this drawback. It can be used multiple times in the one Dockerfile. Multiple variables may be defined by specifying ARG multiple times. It is not recommended to use build-time variables for passing secrets like github keys, user credentials, etc. Build-time variable values are visible to any user of the image with the docker history command. The trigger will be executed in the context of the downstream build, as if it had been inserted immediately after the FROM instruction in the downstream Dockerfile. Any build instruction can be registered as a trigger. Triggers are inherited by the "child" build only. In other words, they are not inherited by "grand-children" builds. 
HEALTHCHECK: the command's exit status indicates the health status of the container. If a single run of the check takes longer than --timeout seconds, the check is considered to have failed, and it takes --retries consecutive failures for the container to be considered unhealthy.
SHELL: allows an alternate shell to be used, such as zsh, csh, tcsh, powershell, and others.
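To make the HEALTHCHECK options and the SHELL instruction concrete, here is a minimal sketch, assuming a Debian-based python:3.12-slim image that serves files with Python's built-in http.server on port 8000. The image, port, and timing values are illustrative choices, not recommendations from the original post.

```dockerfile
# Hypothetical example: the base image, port 8000, and timing values are
# illustrative assumptions.
FROM python:3.12-slim

# SHELL replaces the default ["/bin/sh", "-c"] used by shell-form instructions.
# With bash and pipefail, a failure anywhere in a pipeline fails the RUN step.
SHELL ["/bin/bash", "-o", "pipefail", "-c"]
RUN python --version | tee /tmp/python-version.txt

WORKDIR /srv
EXPOSE 8000

# Probe every 30s; a probe running longer than 5s counts as a failure, and
# 3 consecutive failures mark the container "unhealthy". Failures during the
# first 10s (start period) are not counted toward the retries.
# Exit status 0 means healthy, 1 means unhealthy: urlopen raises on connection
# or HTTP errors, and the uncaught exception makes python exit with status 1.
HEALTHCHECK --interval=30s --timeout=5s --start-period=10s --retries=3 \
  CMD ["python", "-c", "import urllib.request; urllib.request.urlopen('http://localhost:8000/', timeout=4)"]

# Serve the working directory over HTTP on port 8000 (exec form).
CMD ["python", "-m", "http.server", "8000"]
```

After starting a container from this image with docker run -d --name web <image> (web is a hypothetical name), docker inspect --format '{{.State.Health.Status}}' web reports starting, healthy, or unhealthy.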

Text

Microsoft Excel 2019 VBA and Macros review (free download). Office VBA reference

Microsoft Excel VBA and Macros: renowned Excel experts Bill Jelen (MrExcel) and Tracy Syrstad explain how to build more powerful, reliable, and efficient Excel spreadsheets.

Microsoft's program development environment, Visual Studio, has been released, and anyone can try the Community edition for free. In addition to VB, C#, and UWP development, it can also be set up as a development environment for Python, Azure, and more.

Use this guide to automate virtually any routine Excel task and save yourself hours, days, maybe even weeks. Bill Jelen and Tracy Syrstad help you instantly visualize information to make it actionable; capture data from anywhere and use it anywhere; and automate the best new features in Excel. Published by Microsoft Press (Business Skills series).

Text

DevOps Training at H2K Infosys

In the fast-paced world of technology, DevOps has emerged as a critical approach for organizations to streamline their software development and IT operations. With H2K Infosys, you can embark on a transformative journey that will equip you with the skills and knowledge required to excel in the field of DevOps. In this article, we'll explore the significance of DevOps training, the advantages of pursuing it at H2K Infosys, and how it can transform your career prospects.

Why DevOps?

DevOps, short for Development and Operations, is a set of practices that combine software development (Dev) and IT operations (Ops). It emphasizes automation, collaboration, and communication between software developers and IT professionals, leading to a faster and more reliable software development process. In today's competitive business landscape, organizations are actively seeking DevOps professionals who can drive efficiency, reduce errors, and accelerate time to market.

The H2K Infosys Advantage

H2K Infosys is a renowned online training and placement assistance organization that has been instrumental in shaping the careers of thousands of IT professionals. When it comes to DevOps training, H2K Infosys stands out for several compelling reasons:

Industry-Experienced Instructors