#devops automation model

Explore tagged Tumblr posts

Text

DevOps Automation Model: Accelerate Success

Discover the power of the DevOps automation model and DevOps testing. Learn how they boost software delivery, enhance collaboration, and ensure scalability. Dive into a strategic approach with expert insights on tools and integration for business success.

0 notes

Text

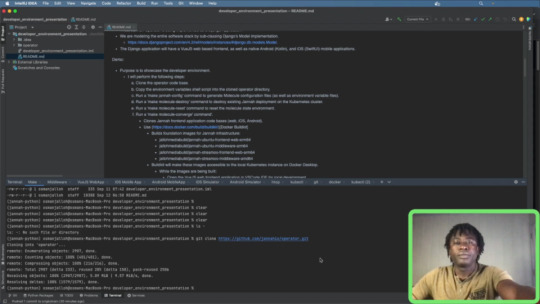

Developer Environment Presentation 1 Part 9: Mobile Applications Preview (iOS, Android)

Preview of the Jannah iOS and Android mobile applications, showcasing a WorkflowList query and the response data structure.

Developer Environment Presentation 1 Part 9: Mobile Applications Preview (iOS, Android). In the previous video, we left off showcasing the web frontend application pulling data from the Django-based middleware application. I had shown pulling data from the GraphQL API at http://0.0.0.0:8080/graphql. The mobile apps perform the same GraphQL queries to get data from the middleware. The iOS…
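As a rough illustration of what "the same GraphQL query" looks like from any client, here is a minimal Python sketch that builds the HTTP request without sending it. The endpoint is the one quoted above; the field names inside `workflowList` are hypothetical placeholders, since the post does not show the real schema.

```python
import json
import urllib.request

# Endpoint quoted in the post; it is only reachable on the presenter's own machine.
GRAPHQL_URL = "http://0.0.0.0:8080/graphql"

# Hypothetical query: the field names are placeholders, not the real Jannah schema.
WORKFLOW_LIST_QUERY = """
query {
  workflowList {
    id
    name
  }
}
"""


def build_graphql_request(url: str, query: str) -> urllib.request.Request:
    """Build (but do not send) an HTTP POST that carries a GraphQL query."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_graphql_request(GRAPHQL_URL, WORKFLOW_LIST_QUERY)
# Sending it would look like: urllib.request.urlopen(request).read()
```

A web, iOS, or Android client would send the same JSON body; only the HTTP library differs.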


#ansible#automation#boot#Developer#devOps#DX#Environment#Feedback#github#IDE#jannahio#Jannah_io#middleware#model#molecule#Network#Sites#software#Stack#storage#Variables

0 notes

Text

Exciting developments in MLOps await in 2024! 🚀 DevOps-MLOps integration, AutoML acceleration, Edge Computing rise – shaping a dynamic future. Stay ahead of the curve! #MLOps #TechTrends2024 🤖✨

#MLOps#Machine Learning Operations#DevOps#AutoML#Automated Pipelines#Explainable AI#Edge Computing#Model Monitoring#Governance#Hybrid Cloud#Multi-Cloud Deployments#Security#Forecast#2024

0 notes

Text

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).
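To make the unit testing and automated testing entries above concrete, here is a small sketch using Python's built-in `unittest` module. The `apply_discount` function and its rules are invented purely for illustration.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Return price reduced by percent; reject percentages outside 0-100."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Unit tests: each method checks one behavior of one small unit of code."""

    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)


def run_suite() -> unittest.TestResult:
    """Run the tests in-process, as a CI job might do on every commit."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Re-running the same suite after every change is what turns these unit tests into regression tests, and wiring `run_suite()` into a pipeline is one simple form of continuous testing.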

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems
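The latency, jitter, and packet loss items above can be computed from packet timestamps. The sketch below assumes you already have (send time, receive time) pairs in milliseconds. The jitter smoothing follows the running estimate described in RFC 3550 (each new difference moves the estimate by 1/16), while the function names and sample data are illustrative.

```python
from typing import List, Tuple


def interarrival_jitter(packets: List[Tuple[float, float]]) -> float:
    """Running jitter estimate from (send_ms, receive_ms) pairs, smoothed by 1/16."""
    jitter = 0.0
    previous_transit = None
    for sent, received in packets:
        transit = received - sent
        if previous_transit is not None:
            delta = abs(transit - previous_transit)
            jitter += (delta - jitter) / 16
        previous_transit = transit
    return jitter


def mean_latency(packets: List[Tuple[float, float]]) -> float:
    """Average one-way transit time in milliseconds."""
    return sum(received - sent for sent, received in packets) / len(packets)


def packet_loss_percent(expected: int, received: int) -> float:
    """Share of expected packets that never arrived, as a percentage."""
    if expected <= 0:
        raise ValueError("expected must be positive")
    return 100.0 * (expected - received) / expected


# Illustrative sample: three packets, the last one delayed by an extra 16 ms.
sample = [(0.0, 40.0), (20.0, 60.0), (40.0, 96.0)]
```

One-way latency measured this way assumes the sender and receiver clocks are synchronized; jitter does not, because it only uses differences between transit times.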

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration

2 notes


Text

Quality Assurance (QA) Analyst - Tosca

Model-Based Test Automation (MBTA):

Tosca uses a model-based approach to automate test cases, which allows for greater reusability and easier maintenance.

Scriptless Testing:

Tosca offers a scriptless testing environment, enabling testers with minimal programming knowledge to create complex test cases using a drag-and-drop interface.

Risk-Based Testing (RBT):

Tosca helps prioritize testing efforts by identifying and focusing on high-risk areas of the application, improving test coverage and efficiency.

Continuous Integration and DevOps:

Integration with CI/CD tools like Jenkins, Bamboo, and Azure DevOps enables automated testing within the software development pipeline.

Cross-Technology Testing:

Tosca supports testing across various technologies, including web, mobile, APIs, and desktop applications.

Service Virtualization:

Tosca allows the simulation of external services, enabling testing in isolated environments without dependency on external systems.
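The risk-based testing idea above can be illustrated with a generic sketch. This is not Tosca's own algorithm or API: it simply assumes each application area gets a hypothetical 1-5 score for how likely it is to fail and how much a failure would hurt, and orders testing effort by the product of the two.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AppArea:
    name: str
    failure_likelihood: int  # 1 (rare) to 5 (frequent); hypothetical scale
    business_impact: int     # 1 (minor) to 5 (severe); hypothetical scale

    @property
    def risk_score(self) -> int:
        # Common likelihood-times-impact heuristic, assumed here for illustration.
        return self.failure_likelihood * self.business_impact


def prioritize(areas: List[AppArea]) -> List[AppArea]:
    """Order areas so the highest-risk ones are tested first."""
    return sorted(areas, key=lambda area: area.risk_score, reverse=True)


areas = [
    AppArea("profile page styling", 2, 1),
    AppArea("payment checkout", 3, 5),
    AppArea("login", 4, 4),
]
ordered = [area.name for area in prioritize(areas)]
```

With these made-up scores, login (16) and payment checkout (15) are tested before profile page styling (2).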

Tosca Testing Process

Requirements Management:

Define and manage test requirements within Tosca, linking them to test cases to ensure comprehensive coverage.

Test Case Design:

Create test cases using Tosca’s model-based approach, focusing on functional flows and data variations.

Test Data Management:

Manage and manipulate test data within Tosca to support different testing scenarios and ensure data-driven testing.

Test Execution:

Execute test cases automatically or manually, tracking progress and results in real-time.

Defect Management:

Identify, log, and track defects through Tosca’s integration with various bug-tracking tools like JIRA and Bugzilla.

Reporting and Analytics:

Generate detailed reports and analytics on test coverage, execution results, and defect trends to inform decision-making.

Benefits of Using Tosca for QA Analysts

Efficiency: Automation and model-based testing significantly reduce the time and effort required for test case creation and maintenance.

Accuracy: Reduces human error by automating repetitive tasks and ensuring consistent execution of test cases.

Scalability: Easily scales to accommodate large and complex testing environments, supporting continuous testing in agile and DevOps processes.

Integration: Seamlessly integrates with various tools and platforms, enhancing collaboration across development, testing, and operations teams.

Skills Required for QA Analysts Using Tosca

Understanding of Testing Principles: Fundamental knowledge of manual and automated testing principles and methodologies.

Technical Proficiency: Familiarity with Tosca and other testing tools, along with basic understanding of programming/scripting languages.

Analytical Skills: Ability to analyze requirements, design test cases, and identify potential issues effectively.

Attention to Detail: Keen eye for detail to ensure comprehensive test coverage and accurate defect identification.

Communication Skills: Strong verbal and written communication skills to document findings and collaborate with team members.

2 notes


Text

Note to Self: DON'T USE UNITY ENGINE

Unity takes a FEE from developers for every installed copy of every game.

FREE GAMES: the fees still apply, estimated at more than $25,000,000

Declare bankruptcy to the bank, lenders, and users. (??? Does Unity apply the same charges to casino machines, slot websites, Jackpot Party? It's legally gaming, ain't it?)

I get a FREE GAME, while the DEVELOPER is CHARGED for MY FREE COPY

So if I, a gamer, become a developer, I will be CHARGED for FREE GAMES, even for multiple copies installed by one user

"That's bad." I feel bad for the studios' situation :(

*Edit Updates (September 13, 2023)

- Unity "regrouped" and now says ONLY the initial installation of a game triggers a fee ($0.20 per install) {I hope there are no glitches concerning installation}

- Demos mostly won't trigger fees (Keyword: MOSTLY. What does that mean?)

- Devs are not charged a fee for Game Pass. Thank God for the indie developers

- Charity games/bundles exempted from fees

Is Xbox on the hook for Game Pass?

*Edit Updates (September 13, 2023)

Unity:

-Who is impacted by this price increase: The price increase is very targeted. In fact, more than 90% of our customers will not be affected by this change. Customers who will be impacted are generally those who have found a substantial scale in downloads and revenue and have reached both our install and revenue thresholds. This means a low (or no) fee for creators who have not found scale success yet and a modest one-time fee for those who have. (How big a scale of success before you're charged?)

-Fee on new installs only: Once you meet the two install and revenue thresholds, you only pay the runtime fee on new installs after Jan 1, 2024. It’s not perpetual: You only pay once for an install, not an ongoing perpetual license royalty like a revenue share model. (???) (How do they know that from the device?)

-How we define and count installs: Assuming the install and revenue thresholds are met, we will only count net new installs on any device starting Jan 1, 2024. Additionally, developers are not responsible for paying a runtime fee on:

- Re-install charges - we are not going to charge a fee for re-installs.

- Fraudulent installs charges - we are not going to charge a fee for fraudulent installs. We will work directly with you on cases where fraud or botnets are suspected of malicious intent.

- Trials, partial play demos, & automation installs (devops) charges - we are not going to count these toward your install count. Early access games are not considered demos.

- Web and streaming games - we are not going to count web and streaming games toward your install count either.

- Charity-related installs - the pricing change and install count will not be applied to your charity bundles/initiatives. (Good)

•If I make an expansion pack, does it count as an install? What if I make a sequel?

•Fee applies at $200,000 USD (How does that work for other countries?)

So if I charge $60 per ONE videogame, I will be charged fees once I sell about 3,400 copies ($204,000)

I then sell, say, 10,000 copies (new sequels as well)

(If I download the game onto my computer twice, they get charged $0.20; however, if I redownload it onto another device, say an Xbox, would they get charged again? Charges may vary depending on how many games.)
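For anyone who wants the arithmetic spelled out, here is a quick sketch using only the numbers quoted in this post: a $60 price, the $200,000 threshold, and a flat $0.20 per install. The flat rate is an assumption taken from the note above; Unity's actual fee schedule may differ by plan and volume.

```python
import math

PRICE_PER_COPY_USD = 60          # price used in the post
REVENUE_THRESHOLD_USD = 200_000  # threshold quoted in the post
FEE_PER_INSTALL_CENTS = 20       # the $0.20 figure quoted in the post, assumed flat


def copies_to_reach(threshold_usd: int, price_usd: int) -> int:
    """Smallest number of copies whose total revenue reaches the threshold."""
    return math.ceil(threshold_usd / price_usd)


def install_fee_usd(new_installs: int, fee_cents: int = FEE_PER_INSTALL_CENTS) -> float:
    """Total fee for a number of new installs at a flat per-install rate."""
    return new_installs * fee_cents / 100


copies_needed = copies_to_reach(REVENUE_THRESHOLD_USD, PRICE_PER_COPY_USD)
fee_for_10k = install_fee_usd(10_000)
```

So the threshold is crossed at 3,334 copies (the "about 3,400" above is a round-up), and 10,000 further installs at $0.20 each would come to $2,000 under these assumptions.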

OVERALL

Seems to force companies to charge customers higher prices on videogames to avoid a loss of profit.

*Edit Updates as of (September 22, 2023)

- Your Game is made using a Unity Pro or Unity Enterprise plan.

- Your Game is created or will be upgraded to the next major Unity version releasing in 2024.

- Your Game meets BOTH thresholds of $1,000,000 (USD) gross revenue (GROSS = before deductions & taxes) on a trailing 12-month basis (?) AND 1,000,000 *lifetime initial engagements.

As for counting the number of *initial engagements, it will depend on your game and distribution platforms.

Some example metrics that we recommend are number of units sold or first-time user downloads.

This list is not comprehensive, but you can submit an estimate based on these metrics. Hope this helps! You can also find more information here: https://unity.com/pricing-updates

I'm sorry, did that user say the runtime fee is still tied to the number of installations? (WTF, runtime fee)

•You qualify (ew) for the runtime fee if you:

1) are on Pro or Enterprise plans

2) have upgraded to the Long Term Support (LTS) version releasing in 2024 (or later)

3) have crossed $1,000,000 (USD) in gross revenue (GROSS = before deductions & taxes) (trailing 12 months)

4) have reached 1,000,000 initial engagements

(I noticed that it doesn't seem to mention international revenue, only USD.)
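The four conditions above all have to hold at once, which is easy to lose track of. A small sketch of that check, with hypothetical field names and with "crossed" read as "greater than or equal to" (an assumption, since the quoted text does not say):

```python
from dataclasses import dataclass

REVENUE_THRESHOLD_USD = 1_000_000   # trailing 12 months, gross
ENGAGEMENT_THRESHOLD = 1_000_000    # lifetime initial engagements
FEE_PLANS = {"pro", "enterprise"}


@dataclass
class Game:
    plan: str                          # hypothetical field names throughout
    on_2024_lts_or_later: bool
    trailing_12m_gross_usd: int
    lifetime_initial_engagements: int


def qualifies_for_runtime_fee(game: Game) -> bool:
    """True only when all four conditions listed above are met."""
    return (
        game.plan.lower() in FEE_PLANS
        and game.on_2024_lts_or_later
        and game.trailing_12m_gross_usd >= REVENUE_THRESHOLD_USD
        and game.lifetime_initial_engagements >= ENGAGEMENT_THRESHOLD
    )


big_hit = Game("Pro", True, 1_200_000, 1_500_000)
under_revenue = Game("Pro", True, 900_000, 5_000_000)
personal_plan = Game("Personal", True, 5_000_000, 5_000_000)
```

Here only `big_hit` qualifies: `under_revenue` misses the revenue threshold and `personal_plan` is not on a Pro or Enterprise plan.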

•Delete Unity

•Delete Game before it makes a million

•Make $900,000 then make Game Free

•Make Game Free and implore people for their generosity

•Change Game Engine

Too tired to do the math...

8 notes


Text

Exploring Python: Features and Where It's Used

Python is a versatile programming language that has gained significant popularity in recent times. It's known for its ease of use, readability, and adaptability, making it an excellent choice for both newcomers and experienced programmers. In this article, we'll delve into the specifics of what Python is and explore its various applications.

What is Python?

Python is a high-level, interpreted, general-purpose programming language. Created by Guido van Rossum and first released in 1991, Python is designed to prioritize code readability and simplicity, with a clean and minimalistic syntax. Indentation is part of the syntax: it marks where blocks of code begin and end, which keeps programs visually consistent and easier to write and comprehend.

Key Traits of Python:

Simplicity and Readability: Python code is structured in a way that's easy to read and understand. This reduces the time and effort required for both creating and maintaining software.

Python code example: print("Hello, World!")

Versatility: Python is applicable across various domains, from web development and scientific computing to data analysis, artificial intelligence, and more.

Python code example: import numpy as np

Extensive Standard Library: Python ships with a large standard library of modules for tasks such as file handling, networking, and working with data formats, and third-party packages such as NumPy and Matplotlib extend it further. These resources provide developers with ready-made tools and functions to tackle complex tasks efficiently.

Python code example: import json

Compatibility Across Platforms: Python is available on multiple operating systems, including Windows, macOS, and Linux. This allows programmers to create and run code seamlessly across different platforms.

Strong Community Support: Python boasts an active community of developers who contribute to its growth and provide support through online forums, documentation, and open-source contributions. This community support makes Python an excellent choice for developers seeking assistance or collaboration.

Where is Python Utilized?

Due to its versatility, Python is utilized in various domains and industries. Some key areas where Python is widely applied include:

Web Development: Python is highly suitable for web development tasks. It offers powerful frameworks like Django and Flask, simplifying the process of building robust web applications. The simplicity and readability of Python code enable developers to create clean and maintainable web applications efficiently.

Data Science and Machine Learning: Python has become the go-to language for data scientists and machine learning practitioners. Its extensive libraries such as NumPy, Pandas, and SciPy, along with specialized libraries like TensorFlow and PyTorch, facilitate a seamless workflow for data analysis, modeling, and implementing machine learning algorithms.

Scientific Computing: Python is extensively used in scientific computing and research due to its rich scientific libraries and tools. Libraries like SciPy, Matplotlib, and NumPy enable efficient handling of scientific data, visualization, and numerical computations, making Python indispensable for scientists and researchers.

Automation and Scripting: Python's simplicity and versatility make it a preferred language for automating repetitive tasks and writing scripts. Its comprehensive standard library empowers developers to automate various processes within the operating system, network operations, and file manipulation, making it popular among system administrators and DevOps professionals.

Game Development: Python's ease of use and availability of libraries like Pygame make it an excellent choice for game development. Developers can create interactive and engaging games efficiently, and the language's simplicity allows for quick prototyping and development cycles.

Internet of Things (IoT): Lightweight implementations of Python, such as MicroPython and CircuitPython, run on microcontrollers, which makes the language suitable for developing Internet of Things applications. They let developers work with sensors, create interactive hardware projects, and connect devices to the internet.
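As one small illustration of the automation and scripting use case described above, here is a sketch that tidies a folder by moving each file into a subfolder named after its extension. It uses only the standard library; the function name and the "no_extension" label are choices made for this example.

```python
import shutil
from pathlib import Path


def sort_files_by_extension(folder: Path) -> dict:
    """Move each file in folder into a subfolder named after its extension.

    Returns a mapping of subfolder name to the number of files moved there.
    """
    moved = {}
    # sorted() takes a snapshot first, so new subfolders do not disturb the loop.
    for item in sorted(folder.iterdir()):
        if not item.is_file():
            continue
        label = item.suffix.lstrip(".").lower() or "no_extension"
        target_dir = folder / label
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(item), str(target_dir / item.name))
        moved[label] = moved.get(label, 0) + 1
    return moved
```

Calling `sort_files_by_extension(Path("downloads"))` on a folder of your own would group its files by type; try it on a copy first, since the files really are moved.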

Python's versatility and simplicity have made it one of the most widely used programming languages across diverse domains. Its clean syntax, extensive libraries, and cross-platform compatibility make it a powerful tool for developers. Whether for web development, data science, automation, or game development, Python proves to be an excellent choice for programmers seeking efficiency and user-friendliness. If you're considering learning a programming language or expanding your skills, Python is undoubtedly worth exploring.

9 notes


Text

Going Over the Cloud: An Investigation into the Architecture of Cloud Solutions

Because the cloud offers unprecedented levels of scale, flexibility, and accessibility, it has fundamentally altered the way we approach technology in the present digital era. As more and more businesses shift their infrastructure to the cloud, it is imperative that they understand the architecture of cloud solutions. Join me as we examine the core concepts, industry best practices, and transformative impacts on modern enterprises.

The Basics of Cloud Solution Architecture

A well-designed architecture that balances reliability, performance, and cost-effectiveness is the foundation of any successful cloud deployment. A cloud solution's architecture spans many components, including networking, computing, storage, security, and scalability. By creating solutions that are tailored to the requirements of each workload, organizations can optimize return on investment and make full use of the cloud.

Flexibility and Resilience in Design

One of the distinguishing characteristics of cloud computing is the ability to scale resources on demand to meet varying workloads and maintain consistent performance. Cloud solution architects create resilient systems that can withstand failures and sustain uptime by using fault-tolerant design principles, load balancing, and auto-scaling. Workloads can be distributed across several availability zones and regions to increase fault tolerance and lessen the effect of outages.
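To show what an auto-scaling decision can look like, here is a minimal sketch of a proportional scaling rule: scale the replica count by the ratio of observed to target utilization, round up, and clamp to configured limits. This is the same shape of rule the Kubernetes Horizontal Pod Autoscaler documents; the parameter names and limits here are illustrative assumptions, not any provider's API.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Proportional scaling: replicas grow or shrink with observed/target load."""
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    # Clamp so a spike cannot scale past the budgeted maximum or below the floor.
    return max(min_replicas, min(max_replicas, raw))


scale_out = desired_replicas(4, 90, 60)   # overloaded: add capacity
scale_in = desired_replicas(4, 30, 60)    # underused: remove capacity
capped = desired_replicas(8, 95, 50)      # would need 16, limited to max
```

Real autoscalers add cooldown periods and tolerance bands on top of a rule like this so that brief spikes do not cause constant resizing.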

Protection of Data in the Cloud and Security by Design

As data breaches become more common, security becomes a top priority in cloud solution architecture. Architects build identity management, access controls, encryption, and monitoring into their designs using a multi-layered security strategy. By adhering to industry standards and best practices, such as the shared responsibility model and compliance frameworks, organizations can safeguard confidential information and support regulatory compliance in the cloud.

Using Managed Services to Increase Productivity

Cloud service providers offer a variety of managed services that streamline operations and reduce the burden of maintaining infrastructure. These services, which include serverless computing, machine learning, databases, and analytics, allow firms to focus on innovation instead of infrastructure maintenance. By selecting the right mix of managed services for their cloud-native applications, architects can reduce costs, shorten time-to-market, and optimize performance.

Cost Control and Ongoing Optimization

Cost optimization is essential since inefficient resource use can quickly drive up costs. Architects monitor resource utilization, analyze cost trends, and identify opportunities for optimization with the aid of cost management tools. Businesses can cut waste and reduce their cloud spending by using spot instances, reserved instances, and cost allocation tags.
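Cost allocation tags are useful mainly because they let you total spending per team or project. The sketch below assumes a simplified list of billing line items (resource, tag, monthly cost in cents); the data and names are invented, and real billing exports have many more fields.

```python
from collections import defaultdict

# Hypothetical billing line items: (resource id, cost allocation tag, monthly cost in cents)
line_items = [
    ("vm-1", "team:web", 12_000),
    ("vm-2", "team:data", 30_000),
    ("db-1", "team:web", 8_000),
    ("vm-3", None, 5_000),
]


def cost_by_tag(items) -> dict:
    """Total cost per tag; untagged resources are grouped so they stay visible."""
    totals = defaultdict(int)
    for _resource, tag, cents in items:
        totals[tag or "untagged"] += cents
    return dict(totals)


def largest_cost_center(totals: dict) -> str:
    """Tag with the highest total, a natural first place to look for savings."""
    return max(totals, key=totals.get)


totals = cost_by_tag(line_items)
```

Keeping an explicit "untagged" bucket is a deliberate choice: spend that nobody owns is often where waste hides.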

Embracing Automation and DevOps

Automation and DevOps practices are important elements of cloud solution design, enabling companies to develop software more rapidly, reliably, and efficiently. Architects create pipelines for continuous integration, delivery, and deployment, which speed up the software development process and allow for rapid iterations. By provisioning and managing infrastructure programmatically with Infrastructure as Code (IaC) and configuration management systems, teams can minimize manual work and keep environments consistent.

Multi-Cloud and Hybrid Strategies

In an increasingly interconnected world, many firms employ hybrid and multi-cloud strategies to combine the benefits of several cloud providers with on-premises infrastructure. Cloud solution architects have to design systems that integrate several environments while ensuring interoperability, data consistency, and regulatory compliance. By implementing hybrid connectivity options such as VPNs, AWS Direct Connect, or Azure ExpressRoute, organizations can build hybrid cloud deployments that combine the best aspects of public cloud and on-premises data centers.

Analytics and Data Management

Modern organizations depend on data because it fosters innovation and informed decision-making. With the data management and analytics solutions that cloud solution architects design, organizations can gather, store, process, and analyze large volumes of data. By leveraging cloud-native data services like data warehouses, data lakes, and real-time analytics platforms, organizations can extract valuable insights and gain a competitive advantage in their respective industries. Architects also implement data governance frameworks and privacy-enhancing technologies to ensure adherence to data protection rules and safeguard sensitive information.

Computing Without a Server

Serverless computing, a significant shift in cloud architecture, frees organizations to focus on creating applications rather than maintaining infrastructure or managing servers. Cloud solution architects develop serverless applications using event-driven architectures and Function-as-a-Service (FaaS) platforms such as AWS Lambda, Azure Functions, or Google Cloud Functions. By abstracting away the underlying infrastructure, serverless architectures offer automatic scaling and pay-per-use pricing, helping companies innovate swiftly and change course without paying for idle capacity.
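To give a feel for the FaaS model, here is a minimal function in the `handler(event, context)` shape that AWS Lambda uses for Python. The response layout (status code, headers, JSON body) assumes an HTTP-style trigger such as an API gateway; the event contents and greeting are made up for illustration.

```python
import json


def lambda_handler(event, context):
    """Entry point called by the platform once per event; no server code needed."""
    name = (event or {}).get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation with a hand-made event; on the platform the trigger supplies it.
response = lambda_handler({"name": "cloud"}, None)
```

Because the handler is a plain function, it can be exercised locally like this before deployment, while the platform takes care of provisioning and scaling.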

Conclusion

As we come to the close of our investigation into cloud solution architecture, it is evident that the cloud is more than just a platform for technology; it is a force for innovation and transformation. By embracing the ideas of scalability, resilience, security, and efficiency, organizations can take advantage of new opportunities, drive business expansion, and preserve their competitive edge in today's rapidly evolving digital market. Thus, remember to apply sound cloud solution architecture when developing a new cloud-native application or initiating a cloud migration.

1 note


Text

Python's Age: Unlocking the Potential of Programming

Introduction:

Python has become a powerful force in the ever-changing world of programming languages, influencing how developers approach software development. The Python era is distinguished by the language's adaptability, ease of use, and vast ecosystem that supports a wide range of applications. Python has established itself as a top choice for developers globally, in fields spanning from web programming to artificial intelligence. This post examines the traits that characterize the Python era and its influence on the programming community. Learn Python from Uncodemy, which offers a Python course in Noida, and become part of this powerful force.

Versatility and Simplicity:

Python stands out due in large part to its adaptability. Because it is a general-purpose language with many applications, Python is a great option for developers in a variety of fields. Its syntax is straightforward, concise, and close to English, which makes it easy to learn and comprehend. A thriving and diverse community has been fostered by Python's simplicity, which has drawn both novice and experienced developers.

Community and Collaboration:

It is well known that the Python community is open-minded and cooperative. Python is growing because of the libraries, frameworks, and tools that developers from all around the world create to make it better. Because the Python community is collaborative by nature, a large ecosystem has grown up around it, full of resources that developers can easily access. The community offers a helpful atmosphere for all users, whether you are a novice seeking advice or an expert developer searching for answers.

Web Development with Django and Flask:

Frameworks such as Django and Flask have helped Python become a major force in the web development space. The "batteries-included" design of the high-level web framework Django speeds up development. In contrast, Flask is a lightweight, modular framework that allows developers to select the components that best suit their needs. Because of these frameworks, creating dependable and scalable web applications has become easier, which has helped Python gain traction in the web development industry.

Data Science and Machine Learning:

Python has strong capabilities in data science and machine learning. The data science toolkit would be incomplete without libraries like NumPy, pandas, and matplotlib, which make data manipulation, analysis, and visualization possible. Two potent machine learning frameworks, TensorFlow and PyTorch, have cemented Python's place in the artificial intelligence field. Data scientists and machine learning engineers can concentrate on the nuances of their models instead of wrangling with complicated code thanks to Python's simple syntax.

Automation and Scripting:

Python is a great choice for activities ranging from straightforward scripts to intricate automation workflows because of its adaptability in automation and scripting. The readable and succinct syntax of the language makes it easier to write automation scripts that are both effective and simple to comprehend. Python has evolved into a vital tool for optimizing operations, used by DevOps engineers to manage deployment pipelines and system administrators to automate repetitive processes.

Education and Python Courses:

The popularity of Python has also raised the demand for Python classes from people who want to learn programming. For both novices and experts, Python courses offer an organized learning path that covers a variety of subjects, including syntax, data structures, algorithms, web development, and more. Many educational institutions in the Noida area provide Python classes that give a thorough and practical learning experience for anyone who wants to learn more about the language.

Open Source Development:

A main reason for Python's broad usage has been its dedication to open-source development. The Python Software Foundation (PSF) is responsible for managing the language's advancement and upkeep, guaranteeing that programmers everywhere can continue to use it without restriction. This collaborative and transparent approach encourages creativity and lets developers make improvements to the language. Because Python is open-source, it has been possible for developers to actively shape the language's development in a community-driven ecosystem.

Cybersecurity and Ethical Hacking:

Python has emerged as a standard language in the fields of ethical hacking and cybersecurity. It's a great option for creating security tools and penetration testing because of its ease of use and large library ecosystem. Because of Python's adaptability, cybersecurity experts can effectively handle a variety of security issues. Python plays an increasingly large part in system and network security as cybersecurity becomes more and more important.

Startups and Entrepreneurship:

Python is a great option for startups and business owners due to its flexibility and rapid development cycles. Small teams can quickly prototype and create products thanks to the language's ease of learning, which reduces time to market. Additionally, companies may create complex solutions without having to start from scratch thanks to Python's large library and framework ecosystem. Python's ability to fuel creative ideas has been leveraged by numerous successful firms, adding to the language's standing as an engine for entrepreneurship.

Remote Collaboration and Cloud Computing:

Python's heyday aligns with a paradigm shift towards cloud computing and remote collaboration. Python is a good choice for creating cloud-based apps because of its smooth integration with cloud services and support for asynchronous programming. Its readable, simple syntax also makes it easier for developers in remote or distributed teams to collaborate effectively. The language's position in the changing cloud computing landscape is further cemented by its compatibility with key cloud providers.

Continuous Development and Enhancement:

Python is still being developed; new features, enhancements, and optimizations are added on a regular basis. The maintainers of the language regularly solicit community input to keep Python current and adaptable to the changing needs of developers. Python's longevity and ability to stay at the forefront of technical breakthroughs can be attributed to this dedication to ongoing development.

The Future of Python:

The future of Python seems more promising than it has ever been. With improvements in concurrency, performance optimization, and support for future technologies, the language is still developing. Industry demand for Python expertise is rising, suggesting that the language's heyday is still very much alive. Python is positioned to be a key player in determining the direction of software development as emerging technologies like edge computing, quantum computing, and artificial intelligence continue to gain traction.

Conclusion:

To sum up, Python is a versatile language that is widely used in a variety of sectors and is developed by the community. Python is now a staple of contemporary programming, used in everything from artificial intelligence to web development. The language is a favorite among developers of all skill levels because of its simplicity and strong capabilities. The Python era invites you to a vibrant and constantly growing community, whatever your experience level with programming. Python courses in Noida offer a great starting place for anybody looking to start a learning journey into the broad and fascinating world of Python programming.

Source Link: https://teletype.in/@vijay121/Wj1LWvwXTgz


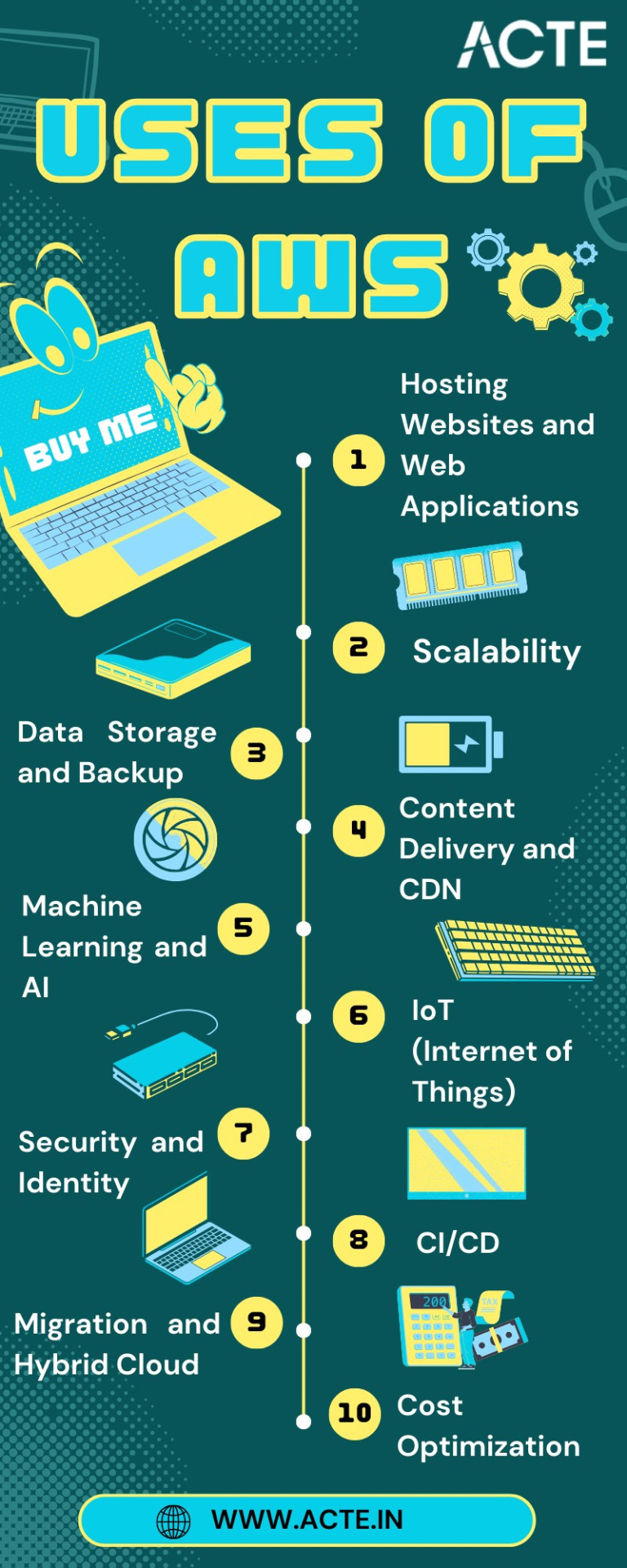

From Flexibility to Security: Unraveling the AWS Advantage

In the ever-evolving landscape of cloud computing, Amazon Web Services (AWS) stands out as a trailblazer, offering a robust and versatile platform that has redefined the way businesses and individuals leverage computing resources. AWS Training in Bangalore further enhances the accessibility and proficiency of individuals and businesses in leveraging the full potential of this powerful cloud platform. With AWS training in Bangalore, professionals can gain the skills and knowledge needed to harness the capabilities of AWS for diverse applications and industries.

Let's take a closer look at the key aspects that make AWS a cornerstone in the world of cloud computing.

1. Cloud Computing Services for Every Need

At its core, AWS is a comprehensive cloud computing platform that provides a vast array of services. These services encompass Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS), offering users a flexible and scalable approach to computing.

2. Scalability and Flexibility

A defining feature of AWS is its scalability. Users have the ability to scale their resources up or down based on demand. This flexibility is particularly advantageous for businesses with varying workloads, allowing them to optimize costs while ensuring optimal performance.

3. Global Infrastructure for Enhanced Performance

AWS operates a global network of Regions, each containing multiple isolated Availability Zones made up of one or more data centers. This geographical diversity enables users to deploy applications and services close to end users, enhancing performance and ensuring high availability.

4. Emphasis on Security and Compliance

Security is a top priority for AWS. The platform offers robust security features, including data encryption, identity and access management, and compliance with various industry standards and regulations. This commitment to security instills confidence in users, especially those handling sensitive data.

5. Cost-Efficiency at Its Core

AWS follows a pay-as-you-go pricing model, allowing users to pay only for the resources they consume. This cost-efficient approach makes AWS accessible to startups, small businesses, and enterprises alike, eliminating the need for significant upfront investments.

6. Comprehensive Service Offerings

AWS boasts an extensive portfolio of services, covering computing, storage, databases, machine learning, analytics, IoT, security, and more. This diversity empowers users to build, deploy, and manage applications for virtually any purpose, making AWS a one-stop-shop for a wide range of computing needs.

7. Vibrant Ecosystem and Community

The AWS ecosystem is vibrant and dynamic, supported by a large community of users, developers, and partners. This ecosystem includes a marketplace for third-party applications and services, as well as a wealth of documentation, tutorials, and forums that foster collaboration and support.

8. Enterprise-Grade Reliability

The reliability of AWS is paramount, attracting the trust of many large enterprises, startups, and government organizations. Its redundant architecture and robust infrastructure contribute to high availability and fault tolerance, crucial for mission-critical applications.

9. Continuous Innovation

Innovation is ingrained in the AWS DNA. The platform consistently introduces new features and services to address evolving industry needs and technological advancements. Staying at the forefront of innovation ensures that AWS users have access to cutting-edge tools and capabilities.

10. Facilitating DevOps and Automation

AWS supports DevOps practices, empowering organizations to automate processes and streamline development workflows. This emphasis on automation contributes to faster and more efficient software delivery, aligning with modern development practices.

In conclusion, Amazon Web Services (AWS) stands as a powerhouse in the cloud computing arena. Its scalability, security features, and extensive service offerings make it a preferred choice for organizations seeking to harness the benefits of cloud technology. Whether you're a startup, a small business, or a large enterprise, AWS provides the tools and resources to propel your digital initiatives forward. As the cloud computing landscape continues to evolve, AWS remains a stalwart, driving innovation and empowering users to build and scale with confidence. To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the Best AWS Training Institute. This training ensures that professionals gain the expertise needed to navigate the complexities of AWS, empowering them to contribute effectively to their organizations' digital transformation and success.


Developer Environment Presentation 1 Part 7: Run Jannah's Middleware Application Continues

Run Jannah's Middleware application in development mode, and add sample Feedback, and Workflows Models using the Django Web Admin.

Video Highlights: A run of the Jannah middleware application (continues). I performed the following steps: python manage.py runserver. Continue on entering sample data via the Django Web admin. Feedback Layer Logs Model: All data related to user feedback, including error ticket trackers. Workflow…


#ansible#automation#boot#Developer#devOps#DX#Environment#Feedback#github#IDE#jannahio#Jannah_io#middleware#model#molecule#Network#Sites#software#Stack#storage#ux#Variables


Kanban, Waterfall, and DevOps are three different approaches to project management and software development. Here's an overview of each concept:

1. Kanban

Definition: Kanban is a visual management method for software development and knowledge work. It originated in Toyota's manufacturing processes and has been adapted for use in software development to improve efficiency and flow.

Key Concepts:

Visualization: Work items are represented on a visual board, usually with columns such as "To Do," "In Progress," and "Done."

Work in Progress (WIP) Limits: Limits are set on the number of items allowed in each column to optimize flow and avoid bottlenecks.

Continuous Delivery: Focus on delivering work continuously without distinct iterations.

Advantages:

Flexibility in responding to changing priorities.

Continuous delivery of value.

Visual representation of work enhances transparency.

Use Case: Kanban is often suitable for teams with variable and unpredictable workloads, where tasks don't follow a fixed iteration cycle.
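To make the WIP-limit idea concrete, here is a minimal Python sketch of a board that refuses a move when the target column is already full. The `KanbanBoard` class, its method names, and the limit of 2 for "In Progress" are illustrative assumptions, not part of any particular Kanban tool.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


class WipLimitExceeded(Exception):
    """Raised when a move would put too many items in a column."""


@dataclass
class KanbanBoard:
    # Column name -> maximum number of items allowed (None means unlimited).
    wip_limits: Dict[str, Optional[int]]
    columns: Dict[str, List[str]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Create an empty list for every configured column.
        for name in self.wip_limits:
            self.columns.setdefault(name, [])

    def add(self, item: str, column: str = "To Do") -> None:
        self._check_limit(column)
        self.columns[column].append(item)

    def move(self, item: str, source: str, target: str) -> None:
        if item not in self.columns[source]:
            raise ValueError(f"{item!r} is not in {source!r}")
        # Check the target first so a rejected move leaves the board unchanged.
        self._check_limit(target)
        self.columns[source].remove(item)
        self.columns[target].append(item)

    def _check_limit(self, column: str) -> None:
        limit = self.wip_limits[column]
        if limit is not None and len(self.columns[column]) >= limit:
            raise WipLimitExceeded(f"{column!r} is at its WIP limit of {limit}")


# Hypothetical board: at most two items may be in progress at once.
board = KanbanBoard({"To Do": None, "In Progress": 2, "Done": None})
for task in ("write tests", "fix login bug", "update docs"):
    board.add(task)
board.move("write tests", "To Do", "In Progress")
board.move("fix login bug", "To Do", "In Progress")
```

With this board, a third move into "In Progress" raises `WipLimitExceeded` until one of the two items there moves on to "Done", which is the bottleneck signal WIP limits are meant to surface.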

2. Waterfall

Definition: The Waterfall model is a traditional and sequential approach to software development. It follows a linear and rigid sequence of phases, with each phase building upon the outputs of the previous one.

Phases:

Requirements: Define and document project requirements.

Design: Create the system architecture and design.

Implementation: Code the system based on the design.

Testing: Conduct testing to identify and fix defects.

Deployment: Deploy the completed system to users.

Maintenance: Provide ongoing support and maintenance.

Advantages:

Clear structure and well-defined phases.

Documentation at each stage.

Predictable timelines and costs.

Disadvantages:

Limited flexibility for changes after the project starts.

Late feedback on the final product.

Risk of customer dissatisfaction if initial requirements are misunderstood.

Use Case: Waterfall is suitable for projects with well-defined requirements and stable environments where changes are expected to be minimal.

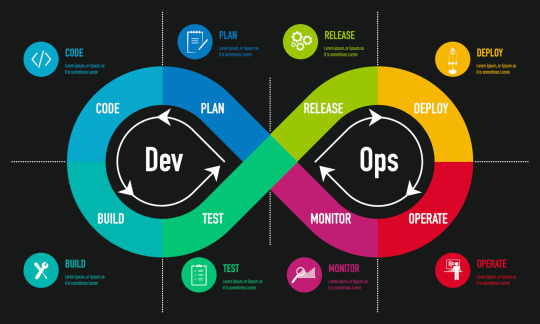

3. DevOps

Definition: DevOps (Development and Operations) is a set of practices that aim to automate and improve the collaboration between software development and IT operations. The goal is to shorten the development lifecycle, deliver high-quality software, and foster a culture of continuous integration and delivery.

Key Practices:

Continuous Integration (CI): Merge code changes frequently and automatically test them.

Continuous Delivery/Deployment (CD): Automate the release and deployment processes.

Collaboration: Promote collaboration and communication between development and operations teams.

Advantages:

Faster delivery of software.

Reduced manual errors through automation.

Improved collaboration and communication.

Use Case: DevOps is suitable for organizations aiming to achieve faster and more reliable delivery of software through the automation of development, testing, and deployment processes.

We also left a video about Lean vs Agile vs Waterfall (What is Lean, and the difference between Waterfall and Agile) that could help you. Later we will post one about Kanban.


Your Journey Through the AWS Universe: From Amateur to Expert

In the ever-evolving digital landscape, cloud computing has emerged as a transformative force, reshaping the way businesses and individuals harness technology. At the forefront of this revolution stands Amazon Web Services (AWS), a comprehensive cloud platform offered by Amazon. AWS is a dynamic ecosystem that provides an extensive range of services, designed to meet the diverse needs of today's fast-paced world.

This guide is your key to unlocking the boundless potential of AWS. We'll embark on a journey through the AWS universe, exploring its multifaceted applications and gaining insights into why it has become an indispensable tool for organizations worldwide. Whether you're a seasoned IT professional or a newcomer to cloud computing, this comprehensive resource will illuminate the path to mastering AWS and leveraging its capabilities for innovation and growth. Join us as we clarify AWS and discover how it is reshaping the way we work, innovate, and succeed in the digital age.

Navigating the AWS Universe:

Hosting Websites and Web Applications: AWS provides a secure and scalable environment for hosting websites and web applications. Services like Amazon EC2 and Amazon S3 let businesses deploy and manage their online presence with high reliability and performance.

Scalability: At the core of AWS lies its remarkable scalability. Organizations can seamlessly adjust their infrastructure according to the ebb and flow of workloads, ensuring optimal resource utilization in today's ever-changing business environment.

Data Storage and Backup: AWS offers a suite of robust data storage solutions, including Amazon S3 and Amazon EBS. These services cater to a wide range of data types and are designed for strong data security and high availability.

Databases: AWS presents a panoply of database services such as Amazon RDS, DynamoDB, and Redshift, each tailored to meet specific data management requirements. Whether it's a relational database, a NoSQL database, or data warehousing, AWS offers a solution.

Content Delivery and CDN: Amazon CloudFront, AWS's content delivery network (CDN) service, ushers in global content distribution with minimal latency and blazing data transfer speeds. This ensures an impeccable user experience, irrespective of geographical location.

Machine Learning and AI: AWS boasts a rich repertoire of machine learning and AI services. Amazon SageMaker simplifies the development and deployment of machine learning models, while pre-built AI services cater to natural language processing, image analysis, and more.

Analytics: In the heart of AWS's offerings lies a robust analytics and business intelligence framework. Services like Amazon EMR enable the processing of vast datasets using popular frameworks like Hadoop and Spark, paving the way for data-driven decision-making.

IoT (Internet of Things): AWS IoT services provide the infrastructure for the seamless management and data processing of IoT devices, unlocking possibilities across industries.

Security and Identity: With an unwavering commitment to data security, AWS offers robust security features and identity management through AWS Identity and Access Management (IAM). Users wield precise control over access rights, ensuring data integrity.

DevOps and CI/CD: AWS simplifies DevOps practices with services like AWS CodePipeline and AWS CodeDeploy, automating software deployment pipelines and enhancing collaboration among development and operations teams.

Content Creation and Streaming: AWS Elemental Media Services facilitate the creation, packaging, and efficient global delivery of video content, empowering content creators to reach a global audience seamlessly.

Migration and Hybrid Cloud: For organizations seeking to migrate to the cloud or establish hybrid cloud environments, AWS provides a suite of tools and services to streamline the process, ensuring a smooth transition.

Cost Optimization: AWS's commitment to cost management and optimization is evident through tools like AWS Cost Explorer and AWS Trusted Advisor, which empower users to monitor and control their cloud spending effectively.

In this comprehensive journey through the expansive landscape of Amazon Web Services (AWS), we've embarked on a quest to unlock the power and potential of cloud computing. AWS, standing as a colossus in the realm of cloud platforms, has emerged as a transformative force that transcends traditional boundaries.

As we bring this odyssey to a close, one thing is abundantly clear: AWS is not merely a collection of services and technologies; it's a catalyst for innovation, a cornerstone of scalability, and a conduit for efficiency. It has revolutionized the way businesses operate, empowering them to scale dynamically, innovate relentlessly, and navigate the complexities of the digital era.

In a world where data reigns supreme and agility is a competitive advantage, AWS has become the bedrock upon which countless industries build their success stories. Its versatility, reliability, and ever-expanding suite of services continue to shape the future of technology and business.

Yet, AWS is not a solitary journey; it's a collaborative endeavor. Institutions like ACTE Technologies play an instrumental role in helping individuals master AWS. Through comprehensive training and education, learners are not merely equipped with knowledge; they become skilled professionals ready to navigate the AWS universe with confidence.

As we contemplate the future, one thing is certain: AWS is not just a destination; it's an ongoing journey. It's a journey toward greater innovation, deeper insights, and boundless possibilities. AWS has not only transformed the way we work; it's redefining the very essence of what's possible in the digital age. So, whether you're a seasoned cloud expert or a newcomer to the cloud, remember that AWS is not just a tool; it's a gateway to a future where technology knows no bounds, and success knows no limits.


The Future of Testing: How Selenium Automation Testing is transforming the Industry

Introduction

Quality assurance and testing are central to software delivery. The increasing complexity of modern applications has made manual testing difficult, as it is both time-consuming and inefficient. This is where Selenium automation testing is making a difference in the industry, offering a reliable, scalable, and fast way to test software.

What is Selenium automation testing?

Selenium is an open-source framework for automating web-based applications across various browsers and platforms. Selenium runs tests automatically, so they execute much more efficiently and effectively than manual testing. Selenium is an extremely versatile and flexible solution, as developers and testers can write scripts in various programming languages, including Java, Python, C#, Ruby, and JavaScript.

Opening New Avenues in Software Testing with Selenium

1. Cross-Browser Compatibility

A major advantage of Selenium automation testing is that it supports multiple browsers such as Google Chrome, Mozilla Firefox, Safari, Edge, and Internet Explorer. This helps ensure that web applications behave consistently across environments and reduces browser-specific problems.

2. Integrate with CI/CD Pipelines

As organizations embrace DevOps and CI/CD at scale, Selenium works with popular tools such as Jenkins, Bamboo, and GitHub Actions. This enables tests to run automatically as part of the build and deployment pipeline.

3. Parallel Test Execution for Speed and Efficiency

Manual testing requires significant time and resources. Selenium Grid, an advanced feature of Selenium, allows parallel test execution across multiple machines and browsers. This drastically reduces the time needed for testing, ensuring rapid feedback and improved software quality.
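The benefit of running suites side by side can be sketched in plain Python. This is not Selenium Grid itself: `run_suite_on` is a hypothetical stand-in that sleeps instead of driving a browser, and the browser names are only labels. It shows the general pattern of fanning the same suite out across several targets with a thread pool.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

# Hypothetical list of browser targets for the same test suite.
BROWSERS = ["chrome", "firefox", "edge"]


def run_suite_on(browser: str) -> Tuple[str, bool]:
    """Stand-in for running a test suite against one browser."""
    time.sleep(0.1)  # placeholder for real test work
    return browser, True


def run_in_parallel(browsers: List[str]) -> Dict[str, bool]:
    # One worker per browser, so the suites run concurrently
    # instead of one after another.
    with ThreadPoolExecutor(max_workers=len(browsers)) as pool:
        return dict(pool.map(run_suite_on, browsers))


results = run_in_parallel(BROWSERS)
```

Run sequentially, the three placeholder suites would take roughly three times as long as one; run concurrently, the total is close to the duration of the slowest suite.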

4. Cost-Effectiveness and Open-Source Advantage

Because Selenium is entirely free and open-source, in contrast to many commercial testing tools, it is a great option for start-ups, small businesses, and major companies. Updates, bug fixes, and new features are continuously accessible because of the strong community support.

5. Flexible Language Support

Selenium supports a wide array of programming languages, including:

Java

Python

C#

Ruby

JavaScript

Test script development is made easier and more efficient by this flexibility, which enables test automation engineers to work with a language they are familiar with.

Essential Elements of Selenium Automation

1. Selenium WebDriver

WebDriver, the core component of Selenium, works directly with web browsers to perform user actions including text input, button clicks, and page scrolling. It offers faster execution and enables headless browser testing for better performance.

2. Selenium IDE

Selenium IDE (Integrated Development Environment) is a record-and-playback tool whose main purpose is to make it quick to build test scripts. It is well suited to novices who want to begin test automation without extensive programming experience.

3. Selenium Grid

Selenium Grid drastically cuts down on test execution time by enabling parallel test execution across several computers and settings. Large-scale enterprise applications that need a lot of regression testing will find it especially helpful.

Selenium Automation Testing Best Practices

1. Use the Page Object Model (POM)

A design pattern called the Page Object Model (POM) improves the reusability and maintainability of test scripts. Teams can readily alter test cases without compromising the main framework by keeping UI components and test logic separate.
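A minimal Python sketch of the pattern follows. The `LoginPage` class and its locators describe an imaginary login form, and `FakeDriver` and `FakeElement` are stubs written only so the example runs without a browser. With real Selenium you would pass a WebDriver instance instead, since it exposes the same `find_element(by, value)` call used here.

```python
class LoginPage:
    """Page object: the only place that knows the login page's locators."""

    # Hypothetical locators for an imaginary login form.
    USERNAME = ("id", "username")
    PASSWORD = ("id", "password")
    SUBMIT = ("css selector", "button[type='submit']")

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username, password):
        # Tests call this one method and never touch locators directly.
        self.driver.find_element(*self.USERNAME).send_keys(username)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.SUBMIT).click()


class FakeElement:
    """Stub element that records actions instead of driving a browser."""

    def __init__(self, log, locator):
        self.log = log
        self.locator = locator

    def send_keys(self, text):
        self.log.append(("type", self.locator, text))

    def click(self):
        self.log.append(("click", self.locator))


class FakeDriver:
    """Stub driver exposing only the find_element call the page object uses."""

    def __init__(self):
        self.log = []

    def find_element(self, by, value):
        return FakeElement(self.log, (by, value))


driver = FakeDriver()
LoginPage(driver).log_in("alice", "s3cret")
```

If the form's markup changes, only the three locator constants in `LoginPage` need updating; every test that calls `log_in` stays the same.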

2. Implement Data-Driven Testing

Using frameworks like TestNG and JUnit, testers can implement data-driven testing, allowing them to run test scripts with multiple sets of input data. This ensures broader test coverage and better validation of application functionality.
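The same idea can be sketched in Python with the standard `unittest` module rather than TestNG or JUnit. The function under test, `is_valid_username`, and its rules are hypothetical. The point is that one test body runs once per data row, and `subTest` reports each failing row separately.

```python
import unittest


def is_valid_username(name: str) -> bool:
    """Hypothetical function under test: 3-12 alphanumeric characters."""
    return 3 <= len(name) <= 12 and name.isalnum()


# Each row is one test case: (input, expected result).
CASES = [
    ("alice", True),
    ("ab", False),          # too short
    ("a" * 13, False),      # too long
    ("bob_smith", False),   # underscore is not alphanumeric
    ("user42", True),
]


class UsernameTest(unittest.TestCase):
    def test_usernames(self):
        for name, expected in CASES:
            # subTest keeps going after a failure and labels it with the row.
            with self.subTest(name=name):
                self.assertEqual(is_valid_username(name), expected)


def run_suite() -> unittest.TestResult:
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(UsernameTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_suite()
```

Adding coverage then means adding a row to `CASES`, not writing another test method.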

3. Use Headless Browser Testing

Running tests in headless mode (without a GUI), for example with headless Chrome, speeds up test execution, which makes it a good fit for CI/CD pipelines. PhantomJS was once a common choice for this, but it is no longer actively maintained.

4. Include Exception Handling

Testers should use explicit waits, implicit waits, and try-catch blocks to make test scripts more resilient and to avoid failures caused by small problems such as network delays or element loading times.

5. Continuous Monitoring and Reporting

Integrating test reporting solutions such as Extent Reports, Allure, or TestNG Reports lets teams examine test results and track issues over time more efficiently.
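The explicit waits mentioned above come down to polling a condition until it holds or a timeout expires. Selenium's Python bindings provide this through `WebDriverWait`. The sketch below is a simplified, library-free illustration of the same idea, and `wait_until` and `element_is_loaded` are hypothetical names.

```python
import time


def wait_until(condition, timeout=5.0, poll_interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass.

    Exceptions raised by `condition` are treated as "not ready yet", similar in
    spirit to how an explicit wait tolerates a missing element while polling.
    """
    deadline = time.monotonic() + timeout
    last_error = None
    while True:
        try:
            result = condition()
            if result:
                return result
        except Exception as exc:  # deliberately broad for this sketch
            last_error = exc
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s") from last_error
        time.sleep(poll_interval)


# Simulated element that only becomes available on the third poll.
attempts = {"count": 0}


def element_is_loaded():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise LookupError("element not found yet")
    return "element"


found = wait_until(element_is_loaded, timeout=2.0, poll_interval=0.01)
```

Compared with a fixed `sleep`, this returns as soon as the condition holds and fails with a clear timeout error when it never does.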

Selenium Automation Testing's Future

Thanks to advances in artificial intelligence (AI) and machine learning (ML), Selenium automation testing appears to have a bright future.

Emerging AI-driven self-healing test automation frameworks enable scripts to dynamically adjust to UI changes, minimizing maintenance requirements. Furthermore, scalable, on-demand test execution is made possible by the integration of cloud-based testing platforms such as Sauce Labs, BrowserStack, and LambdaTest, which helps ensure high performance across international environments.

Conclusion

By increasing productivity, reducing expenses, and raising software quality, Selenium automation testing is rapidly transforming the software testing sector. It is an essential tool for modern software development teams due to its adaptability, cross-browser compatibility, and integration capabilities. Businesses can take advantage of Selenium automation and maintain their competitive edge in the current digital environment by putting best practices into effect, utilizing parallel execution, and integrating with CI/CD pipelines. Advanto Software in Pune offers the best Selenium Automation Testing Course at an affordable price with 100% placement assistance.

Join us today: www.profitmaxacademy.com/


Empower Your Business with Microsoft Azure Consulting Services by Goognu

Cloud computing is revolutionizing the way businesses operate, offering scalability, security, and efficiency. Microsoft Azure Consulting Services help organizations transition seamlessly to the cloud, unlocking new possibilities for innovation and growth. At Goognu, we specialize in providing expert Azure Consulting Services to optimize your cloud strategy and ensure a successful transformation.

Why Choose Microsoft Azure?

Microsoft Azure is a trusted cloud platform with a vast ecosystem of tools and services that cater to businesses of all sizes. Here’s why Azure stands out:

Scalability & Flexibility: Adjust resources as needed to match your business growth.

Advanced Security & Compliance: Protect your data with industry-leading security frameworks.

Cost-Effective Solutions: Optimize cloud spending with a pay-as-you-go model.

Seamless Integration: Easily integrate with Microsoft 365, Dynamics 365, and enterprise applications.

AI & Data Analytics: Gain insights through AI-powered analytics and big data processing.

Goognu’s Comprehensive Azure Consulting Services

Goognu provides end-to-end Microsoft Azure Consulting Services, ensuring your cloud journey is smooth and result-driven. Our key offerings include:

1. Azure Strategy & Planning

We assess your business requirements and design a custom Azure adoption roadmap to align with your goals.

2. Azure Migration & Deployment

Seamlessly transition your IT infrastructure, applications, and databases to Azure with minimal disruption.

3. Cloud Architecture Design

Build a secure, scalable, and high-performance Azure environment tailored to your business needs.

4. Security & Compliance Management

Implement robust security controls, identity management, and compliance frameworks to safeguard your data.

5. Azure DevOps & Automation

Enhance productivity with CI/CD pipelines, infrastructure as code (IaC), and workflow automation for seamless development and deployment.

6. Cost Optimization & Performance Tuning

Improve efficiency and reduce expenses with strategic resource allocation and continuous performance monitoring.

7. Managed Azure Services

Enjoy hassle-free cloud management with 24/7 monitoring, maintenance, and technical support from our experts.

8. Business Continuity & Disaster Recovery

Ensure high availability with backup solutions and disaster recovery strategies to prevent downtime.

Why Partner with Goognu?

With Goognu’s Azure Consulting Services, businesses gain an experienced cloud partner dedicated to driving success. Here’s why clients trust us:

Certified Azure Experts: Skilled consultants with deep expertise in Azure technologies.

Tailored Solutions: Customized cloud strategies to meet unique business objectives.

Proven Industry Experience: Successfully transforming businesses with innovative cloud solutions.

24/7 Support & Monitoring: Round-the-clock technical assistance to ensure smooth cloud operations.

Agile & Future-Ready Approach: Leverage cutting-edge Azure innovations for continuous improvement.

Take Your Cloud Strategy to the Next Level

Unlock the full potential of the cloud with Goognu’s Microsoft Azure Consulting Services. Whether you need migration, optimization, security, or management support, our team is ready to assist you every step of the way.

Don't wait—contact us today and accelerate your cloud transformation with Azure!


Advanced DevOps Strategies: Optimizing Software Delivery and Operations

Introduction

DevOps bridges the gap between software development and IT operations, with the aim of delivering software faster and more dependably. By combining automation, continuous integration (CI), continuous deployment (CD), and monitoring, businesses can increase productivity and reduce deployment errors. Adopting DevOps has become crucial for businesses looking to improve the scalability, security, and efficiency of their software development lifecycle. To optimize development workflows, DevOps practices rely on tools such as Docker, Kubernetes, Jenkins, Terraform, and cloud platforms. As AI and machine learning become more integrated into DevOps, businesses are discovering new ways to automate, anticipate, and optimize their infrastructure for performance.

Infrastructure as Code (IaC): Automating Deployments

Infrastructure as Code (IaC), one of the fundamental tenets of DevOps, allows teams to automate infrastructure administration. Developers may describe infrastructure declaratively with tools like Terraform, Ansible, and CloudFormation, doing away with the need for manual setups. By guaranteeing repeatable and uniform conditions and lowering human error, IaC speeds up software delivery. Scalable and adaptable deployment models result from the automated provisioning of servers, databases, and networking components. Businesses may achieve version-controlled infrastructure, quicker disaster recovery, and effective resource use in both on-premises and cloud settings by implementing IaC in DevOps processes.
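The declarative model described above can be illustrated with a small Python sketch: you state the desired infrastructure, and a tool works out which changes are needed. This is a toy under stated assumptions, not how Terraform, Ansible, or CloudFormation are implemented. The `plan` and `apply` functions and the resource names are hypothetical.

```python
def plan(desired: dict, actual: dict) -> list:
    """Compute the actions needed to turn `actual` into `desired`."""
    actions = []
    for name, spec in desired.items():
        if name not in actual:
            actions.append(("create", name, spec))
        elif actual[name] != spec:
            actions.append(("update", name, spec))
    for name in actual:
        if name not in desired:
            actions.append(("delete", name, None))
    return actions


def apply(actions: list, actual: dict) -> dict:
    """Return the state that results from carrying out `actions`."""
    result = dict(actual)
    for verb, name, spec in actions:
        if verb == "delete":
            del result[name]
        else:
            result[name] = spec
    return result


# Desired state, as it might be declared in version-controlled configuration.
desired = {
    "web-server": {"size": "small", "count": 2},
    "database": {"engine": "postgres", "storage_gb": 50},
}
# What currently exists (hypothetical).
actual = {
    "web-server": {"size": "small", "count": 1},
    "old-cache": {"engine": "redis"},
}

actions = plan(desired, actual)
new_state = apply(actions, actual)
```

Planning again against `new_state` yields no actions, which is the repeatability the paragraph refers to: applying the same declaration twice leaves the infrastructure unchanged.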

The Role of Microservices in DevOps

DevOps is revolutionized by microservices architecture, which makes it possible to construct applications in a modular and autonomous manner. Microservices encourage flexibility in contrast to conventional monolithic designs, enabling teams to implement separate services without impacting the program as a whole. The administration of containerized microservices is made easier by DevOps automation technologies like Docker and Kubernetes, which provide fault tolerance, scalability, and high availability. Organizations may improve microservices-based systems' observability, traffic management, and security by utilizing service mesh technologies like Istio and Consul. Microservices integration with DevOps is a recommended method for contemporary software development as it promotes quicker releases, less downtime, and better resource usage.

CI/CD Pipelines: Enhancing Speed and Reliability

Continuous Integration (CI) and Continuous Deployment (CD) are the foundation of DevOps automation, allowing software changes to ship quickly with little or no interruption. Tools like Jenkins, GitLab CI/CD, and GitHub Actions automate code integration, testing, and deployment, which improves software dependability. CI/CD pipelines reduce production failures, accelerate development cycles, and minimize manual intervention. Blue-green deployments, rollback procedures, and automated testing all enhance deployment security and stability. Businesses that use CI/CD best practices see improved time-to-market, smooth upgrades, and high-performance apps in both on-premises and cloud settings.
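As a rough illustration of blue-green deployment and rollback, the Python sketch below keeps two environments and switches traffic only after a health check passes. The `BlueGreenRouter` class, the version strings, and the health checks are all hypothetical. A real setup would switch a load balancer or DNS entry instead of an attribute.

```python
class BlueGreenRouter:
    """Tracks two environments and which one currently receives live traffic."""

    def __init__(self, live_version: str):
        self.environments = {"blue": live_version, "green": None}
        self.live = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    @property
    def live_version(self):
        return self.environments[self.live]

    def deploy(self, version: str, health_check) -> bool:
        # Install the new release on the idle environment only.
        target = self.idle
        self.environments[target] = version
        if not health_check(version):
            return False  # live traffic never reached the bad release
        self.live = target  # switch traffic in one step
        return True

    def rollback(self) -> None:
        # Point traffic back at the other environment. This assumes it
        # still holds the previous good release.
        self.live = self.idle


router = BlueGreenRouter("1.0")
first_ok = router.deploy("1.1", health_check=lambda version: True)
version_after_deploy = router.live_version
router.rollback()
version_after_rollback = router.live_version
second_ok = router.deploy("1.2-broken", health_check=lambda v: "broken" not in v)
```

Because the previous release stays installed on the other environment, rolling back is a traffic switch and not a redeploy, and a release that fails its health check never receives live traffic.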

Conclusion

Businesses may achieve agility, efficiency, and security in contemporary software development by mastering DevOps principles. Innovation and operational excellence are fueled by the combination of IaC, microservices, CI/CD, and automation. A DevOps internship may offer essential industry exposure and practical understanding of sophisticated DevOps technologies and processes to aspiring individuals seeking to obtain practical experience.

#devOps#devOps mastery#Devops mastery course#devops mastery internship#devops mastery training#devops internship in pune#e3l#e3l.co
