#data centers cooling systems


Future Applications of Cloud Computing: Transforming Businesses & Technology

Cloud computing is revolutionizing industries by offering scalable, cost-effective, and highly efficient solutions. From AI-driven automation to real-time data processing, the future applications of cloud computing are expanding rapidly across various sectors.

Key Future Applications of Cloud Computing

1. AI & Machine Learning Integration

Cloud platforms are increasingly being used to train and deploy AI models, enabling businesses to harness data-driven insights. The future applications of cloud computing will further enhance AI's capabilities by offering more computational power and storage.

2. Edge Computing & IoT

With IoT devices generating massive amounts of data, cloud computing provides scalable processing and storage for it. Edge computing, which processes data closer to where it is generated and complements centralized cloud resources, will minimize latency and improve performance.
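Latency is largely a matter of distance. The sketch below estimates the minimum round-trip propagation delay over optical fiber, assuming signals travel at roughly 200,000 km/s in glass. The distances are hypothetical, and real networks add routing, queuing, and processing delays on top of this floor.

```python
# Light in optical fiber covers roughly 200,000 km/s, i.e. about
# 200 km per millisecond (roughly two-thirds of its vacuum speed).
FIBER_SPEED_KM_PER_MS = 200.0

def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay over fiber, ignoring routing,
    queuing, and processing time (which add to this in practice)."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS

# Hypothetical distances: an edge site in the same city versus a
# cloud region in another part of the country.
for label, km in [("edge site", 20), ("remote cloud region", 1500)]:
    print(f"{label:>20}: {round_trip_ms(km):.1f} ms minimum round trip")
```

Because the delay scales linearly with distance, moving processing from a far-away region to a nearby edge site cuts this floor proportionally, which is the core latency argument for edge computing.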

3. Blockchain & Cloud Security

Cloud-based blockchain solutions will offer enhanced security, transparency, and decentralized data management. As cybersecurity threats evolve, the future applications of cloud computing will focus on advanced encryption and compliance measures.

4. Cloud Gaming & Virtual Reality

With high-speed internet and powerful cloud servers, cloud gaming and VR applications will grow rapidly. The future applications of cloud computing in entertainment and education will provide immersive experiences with minimal hardware requirements.

Conclusion

The future applications of cloud computing are poised to redefine business operations, healthcare, finance, and more. As cloud technologies evolve, organizations that leverage these innovations will gain a competitive edge in the digital economy.

🔗 Learn more about cloud solutions at Fusion Dynamics! 🚀

#Keywords#services on cloud computing#edge network services#available cloud computing services#cloud computing based services#cooling solutions#cloud backups for business#platform as a service in cloud computing#platform as a service vendors#hpc cluster management software#edge computing services#ai services providers#data centers cooling systems#https://fusiondynamics.io/cooling/#server cooling system#hpc clustering#edge computing solutions#data center cabling solutions#cloud backups for small business#future applications of cloud computing


Data Center Cooling System: Air Cooled Chillers vs Water Cooled Chillers, Which One Is Right?

If you manage or design data centers, you already know this: heat is the invisible threat. With servers running round the clock, cooling isn’t just a background function—it’s core to performance, uptime, and reliability.

And when it comes to cooling large-scale infrastructure, two technologies dominate the conversation: Air Cooled Chillers and Water Cooled Chillers.

They both serve the same purpose—removing heat—but how they do it, and what that means for your operations, is where the difference lies.

So, let’s break it down. But first, let’s understand what a Data Center Cooling System is and why a data center needs one.

What is a Data Center Cooling System?

A Data Center Cooling System is the infrastructure and technology used to remove excess heat generated by servers, storage units, and networking equipment within a data center. It maintains optimal temperature and humidity to ensure reliable operation and longevity of IT infrastructure.

Key Goals of a Data Center Cooling System:

Maintain safe operating temperatures (typically 18–27°C or 64–81°F)

Control humidity to prevent static and condensation

Improve energy efficiency (PUE – Power Usage Effectiveness)

Minimize environmental impact
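PUE itself is a simple ratio: total facility power divided by the power delivered to IT equipment, so a value of 1.0 would mean zero overhead for cooling, power distribution, and lighting. The sketch below computes it for a hypothetical facility; the kW figures are illustrative assumptions, not measurements.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness = total facility power / IT equipment power.
    1.0 is the theoretical ideal (every watt goes to IT equipment)."""
    if it_equipment_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_equipment_kw

def overhead_kw(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power spent on non-IT loads such as cooling, UPS losses, and lighting."""
    return total_facility_kw - it_equipment_kw

# Hypothetical facility: 1,000 kW of IT load and 1,500 kW drawn in total.
print(pue(1500, 1000))          # 1.5
print(overhead_kw(1500, 1000))  # 500

# Same IT load after a (hypothetical) cooling upgrade trims 200 kW of overhead.
print(pue(1300, 1000))          # 1.3
```

Since cooling is usually the largest share of the non-IT overhead, it is the main lever for pushing PUE toward 1.0.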

Why Do Data Centers Need a Cooling System?

Data centers are built to run nonstop. Whether handling cloud applications, transactions, or machine learning workloads, the servers inside never sleep—and neither does the heat they produce.

Without an efficient Data Center Cooling System, all that heat buildup can lead to:

Hardware failures

Slower processing speeds

Reduced lifespan of IT equipment

Costly downtime

An effective Data Center Cooling System keeps temperatures within optimal limits, improves energy efficiency, and ensures that critical operations remain uninterrupted.

What is an Air Cooled Chiller?

Air cooled chillers are pretty straightforward. They use ambient air to remove heat from a system. Fans pull in outside air, push it across condenser coils, and carry heat away from the refrigerant. That’s it: no condenser water loop, no cooling towers, no complex installation.

These systems are self-contained and typically placed on rooftops or open outdoor areas. Because they’re compact and easy to install, they’re a go-to for smaller facilities and fast-track projects.

Why they’re popular:

Easy to deploy and maintain

Don’t require access to a water source

Lower initial setup costs

Ideal for moderate loads and less complex sites

But there’s a trade-off. Air cooled chillers depend on outside air temperature, so in hot climates they may struggle to perform efficiently. They’re also noisier because of the large fans used for airflow.

What is a Water Cooled Chiller?

Water cooled chillers use a different approach. Instead of ambient air, they rely on water to absorb and dissipate heat. These systems circulate condenser water through the chiller’s condenser and then reject that heat to the atmosphere via a cooling tower.

This method is significantly more efficient, especially in larger facilities that operate 24/7. It also offers more stable cooling, because it is less sensitive to outdoor air temperature than air cooled systems.
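Both the hot-weather weakness of air cooled chillers and the steadier performance of water cooled ones follow from thermodynamics: a chiller works harder the hotter the medium it rejects heat into. An air cooled condenser rejects heat to the outdoor dry-bulb temperature, while a cooling tower lets heat go toward the wet-bulb temperature, which is typically lower. The sketch below uses the ideal (Carnot) limit with hypothetical temperatures; real chillers reach only a fraction of these figures, but the trend is the same.

```python
def carnot_cop_cooling(evap_c: float, cond_c: float) -> float:
    """Ideal (Carnot) cooling COP: heat removed per unit of compressor work,
    T_evap / (T_cond - T_evap), with temperatures converted to kelvin."""
    t_evap = evap_c + 273.15
    t_cond = cond_c + 273.15
    if t_cond <= t_evap:
        raise ValueError("condensing temperature must exceed evaporating temperature")
    return t_evap / (t_cond - t_evap)

EVAP_C = 5.0       # hypothetical refrigerant evaporating temperature (°C)
APPROACH_K = 10.0  # hypothetical gap between heat sink and condensing temperature,
                   # applied to both designs for simplicity

# Air cooled: heat is rejected to outdoor air at its dry-bulb temperature.
for dry_bulb_c in (25.0, 35.0, 45.0):
    cop = carnot_cop_cooling(EVAP_C, dry_bulb_c + APPROACH_K)
    print(f"air cooled,   {dry_bulb_c:.0f} °C dry bulb: ideal COP {cop:.1f}")

# Water cooled: a cooling tower lets heat go toward the lower wet-bulb temperature.
WET_BULB_C = 26.0  # hypothetical wet bulb on the same 45 °C day (dry air)
cop = carnot_cop_cooling(EVAP_C, WET_BULB_C + APPROACH_K)
print(f"water cooled, {WET_BULB_C:.0f} °C wet bulb: ideal COP {cop:.1f}")
```

Even in this idealized form, moving the heat sink from a 45 °C dry bulb to a 26 °C wet bulb raises the COP ceiling from about 5.6 to about 9.0, which is the physical root of the efficiency gap described above.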

Why they’re preferred in mission-critical setups:

Higher energy efficiency

Quieter operation (since cooling towers can be located remotely)

Better suited for high-density environments

Long-term savings despite higher upfront investment

However, water cooled systems require more space, more infrastructure (like pumps, piping, and water treatment), and consistent upkeep.

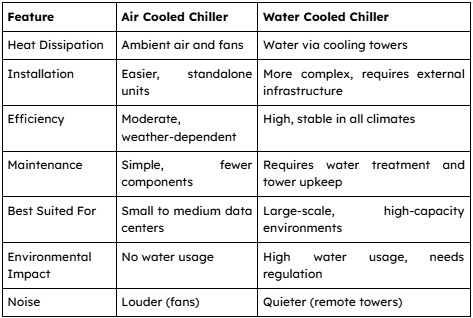

Key Differences Between Air Cooled Chillers and Water Cooled Chillers

Air Cooled Chillers or Water Cooled Chillers: Which is the Best for a Data Center Cooling System?

Every Data Center Cooling System has to balance efficiency, reliability, and cost. The choice between Air Cooled Chillers and Water Cooled Chillers depends on your specific operational needs.

Choose Air Cooled Chillers if:

Your data center is small or medium-sized

You need a fast, straightforward installation

You want minimal maintenance and lower water usage

You’re dealing with moderate workloads

Choose Water Cooled Chillers if:

You operate a large or hyperscale facility

Energy efficiency is a high priority

Your infrastructure supports cooling towers

You can invest in long-term operational savings
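The two checklists can be encoded as a simple rule of thumb. The sketch below is a hypothetical decision helper, not a sizing method: it treats cooling tower support as a hard requirement (water cooled chillers reject heat through towers) and otherwise leans water cooled when most of the remaining criteria apply. The field names and the "two out of three" threshold are assumptions; a real selection rests on load calculations, climate data, and lifecycle cost analysis.

```python
from dataclasses import dataclass

@dataclass
class SiteProfile:
    """Hypothetical yes/no answers to the water cooled checklist above."""
    large_or_hyperscale: bool
    efficiency_high_priority: bool
    supports_cooling_towers: bool
    can_invest_long_term: bool

def suggest_chiller(site: SiteProfile) -> str:
    """Treat cooling tower support as a hard requirement for water cooled
    chillers, then go water cooled only if most remaining criteria hold."""
    if not site.supports_cooling_towers:
        return "air cooled"
    score = sum([site.large_or_hyperscale,
                 site.efficiency_high_priority,
                 site.can_invest_long_term])
    return "water cooled" if score >= 2 else "air cooled"

print(suggest_chiller(SiteProfile(True, True, True, False)))   # water cooled
print(suggest_chiller(SiteProfile(False, False, True, True)))  # air cooled
```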

Wrap Up

The demand for data—and the infrastructure to support it—is only growing. That means Data Center Cooling Systems must evolve too, offering efficiency, resilience, and flexibility.

While Air Cooled Chillers may not always match the efficiency of water-based systems, they shine in scenarios where simplicity, speed, and space constraints matter most. As cooling technology continues to advance, air cooled solutions are becoming smarter, quieter, and more efficient—making them an increasingly attractive option for modern data centers.

Still unsure which chiller system fits your needs best? We at Climaveneta India help facilities assess, design, and implement custom cooling strategies tailored for today’s digital demands and tomorrow’s energy expectations.


Hybrid Small Modular Reactors (SMRs): Pioneering the Future of Energy and Connectivity

SolveForce is proud to announce the release of a groundbreaking new book, “Hybrid Small Modular Reactors (SMRs): From Design to Future Technologies,” co-authored by Ronald Joseph Legarski, Jr., President & CEO of SolveForce and Co-Founder of Adaptive Energy Systems. This publication stands at the convergence of next-generation nuclear energy, telecommunications infrastructure, and digital…

#Adaptive Energy Systems#AI in Energy#Cybersecurity#Data Center Energy Solutions#Digital twin#Energy and Telecom Integration#Energy Storage#Energy Sustainability#Fiber Optics#Fusion-Fission Hybrids#Grid Optimization#Hybrid SMRs#Hydrogen from SMRs#Lead-Cooled Reactor#Modular Energy#Next-Gen Reactors#Nuclear Book Release#Nuclear Energy#Nuclear Innovation#Reactor Safety#Recycled Nuclear Fuel#Ron Legarski#Small Modular Reactors#Smart Grid#smart infrastructure#SMR Deployment Strategies#SMR Design#SolveForce#Telecommunications#Yash Patel


Data Center Liquid Cooling Market Size, Forecast & Growth Opportunities

In 2025 and beyond, the data center liquid cooling market size is poised for significant growth, reshaping the cooling landscape of hyperscale and enterprise data centers. As data volumes surge due to cloud computing, AI workloads, and edge deployments, traditional air-cooling systems are struggling to keep up. Enter liquid cooling—a next-gen solution gaining traction among CTOs, infrastructure heads, and facility engineers globally.

Market Size Overview: A Surge in Demand

The global data center liquid cooling market is projected to reach USD 21.14 billion by 2030, growing at a CAGR of over 33.2% between 2025 and 2030. This growth is fueled by escalating energy costs, rising server rack density, and the drive for energy-efficient and sustainable operations.

This growth is also spurred by tech giants like Google, Microsoft, and Meta aggressively investing in high-density AI data centers, where air cooling simply cannot meet the thermal requirements.

What’s Driving the Market Growth?

AI & HPC Workloads: Artificial intelligence (AI), deep learning, and high-performance computing (HPC) applications demand massive processing power and generate heat loads that exceed air cooling thresholds.

Edge Computing Expansion: With 5G and IoT adoption, edge data centers are becoming mainstream. These compact centers often lack space for elaborate air-cooling systems, making liquid cooling ideal.

Sustainability Mandates: Governments and corporations are pushing toward net-zero carbon goals. Liquid cooling can lower power usage effectiveness (PUE) and reduce water usage, aligning with green data center goals.

Space and Energy Efficiency: Liquid cooling systems allow for greater rack density, reducing the physical footprint and optimizing cooling efficiency, which directly translates to lower operational costs.

Key Technology Trends Reshaping the Market

Direct-to-Chip (D2C) Cooling: Coolant circulates directly to the heat source, offering precise thermal management.

Immersion Cooling: Servers are submerged in thermally conductive dielectric fluid, offering superior heat dissipation.

Rear Door Heat Exchangers: These allow retrofitting of existing setups with minimal disruption.

Modular Cooling Systems: Plug-and-play liquid cooling solutions that reduce deployment complexity in edge and micro-data centers.
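To get a feel for direct-to-chip sizing, the basic heat balance Q = m·c_p·ΔT gives the coolant flow a rack needs. The sketch assumes plain water as the coolant and a hypothetical 20 kW rack with a 10 K temperature rise across the cold plates; glycol mixtures, which are common in practice, have a lower specific heat and need somewhat more flow.

```python
WATER_CP_J_PER_KG_K = 4186.0   # specific heat of water near room temperature
WATER_DENSITY_KG_PER_L = 1.0   # approximation; warm water is slightly less dense

def coolant_flow_l_per_min(heat_kw: float, delta_t_k: float) -> float:
    """Flow needed to carry away heat_kw with a coolant temperature rise of
    delta_t_k, from Q = m_dot * c_p * dT (water assumed as the coolant)."""
    mass_flow_kg_s = heat_kw * 1000.0 / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0

# Hypothetical 20 kW rack with a 10 K rise across the cold plates.
print(f"{coolant_flow_l_per_min(20, 10):.1f} L/min")
```

Allowing a larger temperature rise halves the required flow for each doubling of ΔT, which is one reason warm-water liquid cooling designs are attractive.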

Regional Insights: Where the Growth Is Concentrated

North America leads the market, driven by early technology adoption and hyperscale investments.

Asia-Pacific is witnessing exponential growth, especially in India, China, and Singapore, where government-backed digitalization and smart city projects are expanding rapidly.

Europe is catching up fast, with sustainability regulations pushing enterprises to adopt liquid cooling for energy-efficient operations.


Key Players in the Liquid Cooling Space

Some of the major players influencing the data center liquid cooling market size include:

Vertiv Holdings

Schneider Electric

LiquidStack

Submer

Iceotope Technologies

Asetek

Midas Green Technologies

These innovators are offering scalable and energy-optimized solutions tailored for the evolving data center architecture.

Forecast Outlook: What CTOs Need to Know

CTOs must now factor in thermal design power (TDP) thresholds, AI-driven workloads, and sustainability mandates in their IT roadmap. Liquid cooling is no longer experimental—it is a strategic infrastructure choice.

By 2027, more than 40% of new data center builds are expected to integrate liquid cooling systems, according to recent industry forecasts. This shift will dramatically influence procurement strategies, energy models, and facility designs.


Conclusion:

The data center liquid cooling market is set for a paradigm shift in the coming years. With its ability to handle intense compute loads, reduce energy consumption, and offer environmental benefits, liquid cooling is becoming a must-have for forward-thinking organizations. Those organizations should evaluate and invest in liquid cooling infrastructure now, not just to stay competitive, but to future-proof their data center operations for the AI era.

#data center cooling#liquid cooling market#data center liquid cooling#market forecast#cooling technology trends#data center infrastructure#thermal management#liquid cooling solutions#data center growth#edge computing#HPC cooling#cooling systems market#future of data centers#liquid immersion cooling#server cooling technologies


Future-Ready Data Centers: Cooling Innovations Shaping Tomorrow

In the rapidly evolving world of technology, data centers have become the backbone of modern business operations. However, as these centers grow larger and more powerful, managing their heat output has become a significant challenge. Revolutionizing data center cooling is not just a trend—it’s a necessity for sustainability, efficiency, and performance. In Hyderabad, a hub of technological innovation, several companies are stepping up with cutting-edge solutions.

The Growing Need for Advanced Data Center Cooling

Data centers are high-density environments that generate enormous amounts of heat. Traditional cooling methods, such as simple air conditioning, are no longer sufficient to handle the demands of modern servers and storage units. This has given rise to a new wave of specialized solutions designed to optimize energy consumption and maintain ideal operating conditions.

In Hyderabad, the demand for efficient data center cooling solutions is rising. Businesses are increasingly relying on data center cooling system manufacturers in Hyderabad to provide systems that ensure maximum uptime and reliability.

Innovative Cooling Technologies Transforming Data Centers

Modern cooling technologies are transforming the way data centers operate. Some of the most promising innovations include:

Liquid Cooling: By circulating a coolant directly to critical components, liquid cooling provides better thermal management than traditional air-based systems.

Immersion Cooling: Servers are submerged in a non-conductive liquid that absorbs heat efficiently, drastically reducing the need for air cooling.

AI-driven Climate Control: Artificial intelligence monitors temperature, humidity, and airflow, making real-time adjustments for maximum efficiency.

Free Cooling: Using the outside air during cooler months to assist in data center cooling, significantly reducing energy costs.

Companies offering data center cooling solutions in Hyderabad are quickly adopting these technologies to stay ahead of the curve and help businesses reduce their carbon footprint.

Choosing the Right Data Center Cooling Solutions Company in Hyderabad

When selecting a data center cooling solutions company in Hyderabad, businesses must consider several critical factors:

Experience and Expertise: Look for companies with a proven track record in installing and maintaining advanced cooling systems.

Customization: Each data center has unique requirements; a good provider should offer customized solutions based on specific needs.

Energy Efficiency: Opt for providers that prioritize green solutions and help lower operational costs through innovative cooling techniques.

24/7 Support: Continuous monitoring and quick response are vital to preventing system failures.

With several experienced data center cooling solution providers in Hyderabad, businesses have plenty of options to choose from when building or upgrading their data centers.

Why Hyderabad is Emerging as a Leader in Data Center Cooling

Hyderabad has emerged as a stronghold for IT and data center infrastructure in India. With the presence of numerous multinational corporations, tech parks, and start-ups, the city has witnessed a sharp rise in demand for efficient data center management.

As a result, Hyderabad is now home to some of the top data center cooling system manufacturers who provide state-of-the-art solutions tailored to the region's climate and industry needs. These manufacturers are playing a crucial role in making Hyderabad a preferred location for data center operations.

Future Trends in Data Center Cooling

Looking ahead, the future of data center cooling is centered around sustainability and automation. Some upcoming trends include:

Hybrid Cooling Systems: Combining liquid and air cooling for maximum flexibility and performance.

Smart Cooling Solutions: IoT-enabled sensors that provide real-time analytics and predictive maintenance.

Renewable Energy Integration: Using renewable energy sources to power cooling systems, further reducing environmental impact.
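As a rough illustration of how sensor analytics can flag trouble early, the sketch below applies a rolling z-score check to a hypothetical series of rack inlet temperatures. This is a basic statistical technique, not a machine-learning model, and the window size, threshold, and readings are all assumptions.

```python
from statistics import mean, stdev

def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices whose reading sits more than `threshold` standard
    deviations from the mean of the preceding `window` readings."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip perfectly flat history, where sigma is zero.
        if sigma > 0 and abs(readings[i] - mu) > threshold * sigma:
            flagged.append(i)
    return flagged

# Hypothetical inlet temperatures (°C) from one rack sensor, one per minute.
temps = [24.1, 24.3, 24.0, 24.2, 24.1, 24.2, 24.3, 27.9, 24.2, 24.1]
print(flag_anomalies(temps))  # [7]
```

The spike at index 7 stands out against the quiet history before it; in a real deployment, such a flag would trigger an inspection before the problem escalates into a failure.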

As these innovations continue to develop, data center cooling systems in Hyderabad will only become more efficient, sustainable, and intelligent.

Conclusion

The revolution in data center cooling is not only enhancing the efficiency of modern data centers but also contributing significantly to environmental sustainability. Hyderabad, with its robust ecosystem of data center cooling system manufacturers, solution providers, and cutting-edge technologies, is leading this transformation in India. Businesses investing in innovative cooling solutions today are not just future-proofing their operations—they are setting the stage for a greener, smarter future.

#data center cooling solutions in hyderabad#data center cooling solution providers in hyderabad#data center cooling system in hyderabad


Eco-Friendly and Efficient Data Center Cooling Systems in Chennai

In the fast-evolving digital landscape, data centers play a crucial role in ensuring seamless IT operations. However, maintaining optimal temperatures within these facilities is a significant challenge due to the immense heat generated by high-performance computing equipment. This is where data center cooling solutions in Chennai come into play. With advanced cooling technologies, these solutions help maintain efficiency, reduce energy consumption, and enhance the longevity of critical infrastructure.

Importance of Data Center Cooling Systems

Data centers house thousands of servers, network equipment, and storage devices that generate substantial heat. Without proper cooling, excessive heat can lead to equipment failures, downtime, and increased operational costs. Data center cooling system manufacturers in Chennai provide innovative and energy-efficient solutions that ensure optimal temperature control, contributing to the overall sustainability of data centers.

Leading Data Center Cooling Solutions Companies in Chennai

Chennai is home to several reputed data center cooling solutions companies that specialize in designing, manufacturing, and installing state-of-the-art cooling systems. These companies offer a range of solutions, including:

Precision Air Conditioning: Ensures stable temperature and humidity levels, essential for sensitive IT equipment.

Liquid Cooling Solutions: Uses advanced techniques like direct-to-chip cooling to improve energy efficiency.

In-Row Cooling Systems: Placed between server racks to provide targeted cooling and reduce hotspots.

Cold Aisle & Hot Aisle Containment: Improves airflow management and minimizes energy wastage.

Key Features of Data Center Cooling Systems

Data center cooling system providers in Chennai focus on integrating innovative technologies to enhance efficiency and sustainability. Some key features include:

Energy Efficiency: Advanced cooling systems reduce power consumption and operational costs.

Scalability: Modular cooling solutions can be scaled as per the data center’s growing needs.

Smart Monitoring & Automation: IoT-enabled cooling systems offer real-time monitoring and predictive maintenance.

Eco-Friendly Solutions: Adoption of green cooling technologies minimizes the carbon footprint.

Choosing the Right Data Center Cooling Solution Provider in Chennai

Selecting the best data center cooling system manufacturers in Chennai requires careful consideration of factors such as experience, technology expertise, energy efficiency, and customer support. The right provider ensures not only effective cooling but also cost savings and improved operational efficiency.

Why is Chennai a Hub for Data Center Cooling Solutions?

Chennai has emerged as a significant player in the data center industry due to its robust IT infrastructure and favorable climatic conditions. The city boasts several data center cooling solutions companies offering cutting-edge technologies that cater to both large-scale enterprises and small businesses. These companies focus on sustainable cooling practices, ensuring compliance with global standards.

Conclusion

With the rising demand for efficient and eco-friendly data center cooling solutions, Chennai has become a key hub for innovative cooling technologies. Whether you are looking for precision cooling, liquid cooling, or energy-efficient air cooling solutions, data center cooling system manufacturers in Chennai offer a wide range of options tailored to your needs. For high-quality data center cooling system manufacturers in Chennai, visit Refroid, a leading provider specializing in cutting-edge cooling technologies that enhance data center performance and efficiency.

#data center cooling solutions in chennai#data center cooling solution providers in chennai#data center cooling system in chennai


The New Pillar of Infra: Data Centers Can Deliver More Computing Power While Improving Sustainability

The pandemic marked a pivotal point for many data centers and digital infrastructure providers; more was expected of them, and they delivered. To meet the rising demand for computing power and digital services, data centers had to expand. India’s data center capacity has grown from a few megawatts to over 800 megawatts today, and it is expected to double in the next 4-5 years. Mumbai and Chennai are considered DC hubs because of their existing infrastructure, while NCR and Hyderabad are emerging hotspots thanks to their data infrastructure policies.

Many new data centers are being built today as shared facilities where customers can rent space as well as computing power. New technologies like 5G mobile networks, artificial intelligence (AI), and high-performance computing have increased demand for data centers. However, meeting this demand is just the tip of the iceberg.

Customers, as well as government and industry regulatory bodies, put constant pressure on data centers to deliver more while consuming less: more computing power, with less energy and a smaller carbon footprint. Data centers will be a pillar of the Indian economy in the coming years, and India has a unique opportunity to learn from other countries’ experiences. Designers, DC providers, regulatory bodies, and suppliers will bear a significant share of the responsibility. Efficient and effective cooling systems are the key to resolving the dilemma of delivering more computing power while reducing energy consumption and maintaining sustainability.

Data Center Trends

Adopting Best Cooling Practices: Back in the day, traditional air-cooling systems could keep a safe, controlled environment for low rack densities of 2 kW to 5 kW per rack. Today’s operators, on the other hand, aim for densities of up to 20 kW, which are beyond the capabilities of traditional air-cooling systems. Modern cooling systems, such as rear door heat exchangers, precision air conditioning (PAC), and liquid cooling, may be a good fit. Chillers can now operate at chilled water temperatures of up to 22 degrees Celsius, and this limit is constantly being pushed.
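Why does 20 kW per rack strain air cooling? A rough heat balance shows how the required airflow scales with rack power: quadrupling the density quadruples the volume of air that must move through the rack. The air properties are approximate, and the 12 K inlet-to-exhaust temperature rise is an assumption.

```python
AIR_DENSITY_KG_M3 = 1.2     # approximate, near sea level around 20 °C
AIR_CP_J_PER_KG_K = 1005.0  # specific heat of air at constant pressure
M3S_TO_CFM = 2118.88        # cubic metres per second -> cubic feet per minute

def required_airflow_cfm(rack_kw: float, delta_t_k: float) -> float:
    """Airflow needed to remove rack_kw of heat with a delta_t_k rise
    from server inlet to exhaust, from Q = rho * V_dot * c_p * dT."""
    volume_m3_s = rack_kw * 1000.0 / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_k)
    return volume_m3_s * M3S_TO_CFM

for kw in (5, 20):  # the legacy and modern densities mentioned above
    print(f"{kw:>2} kW rack: {required_airflow_cfm(kw, 12):,.0f} CFM at a 12 K rise")
```

Moving that much air through a single rack takes large fans and careful containment, which is why higher densities push operators toward rear door heat exchangers and liquid cooling.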

Plugging Into the Smart Grid: There are ways to make your data center more efficient while maintaining sustainability, thanks to daily innovations and technological advancements. Smart grids create an automated power delivery network by allowing energy and information to flow in both directions. Operators of data centers can draw clean power from the grid and integrate renewable energy sources, making them occasional power suppliers.

Minimizing Unused IT Equipment: Distributed computing, which pools several computers so they work as a single system, is a great way to reduce unused IT equipment. By combining the processing power of multiple data centers, operators can increase available processing power while eliminating the need for separate facilities for different applications. More effort must go into designing data centers that address cooling, energy consumption, and waste to create truly green data centers that benefit the environment.

● Installing energy-efficient servers
● Opting for more effective cooling solutions
● Optimizing hardware refresh cycles

Focusing on these key areas can help the global IT industry achieve, if not exceed, the reduction in carbon footprint that cloud computing has enabled. The industry is at an inflexion point, and this is a golden opportunity to build that foundation on the latest trends and best practices.


Data Center Cooling Systems – Benefits, Differences, and Comparisons

Data centers generate massive amounts of heat due to high-performance computing equipment running 24/7. Efficient cooling systems are essential to prevent overheating, downtime, and hardware failure. This guide explores the benefits, differences, and comparisons of various data center cooling solutions.

Why Is Data Center Cooling Important?

🔥 Prevents overheating and extends server lifespan.
💡 Ensures optimal performance and reduces downtime.
💰 Lowers energy costs by improving efficiency.
🌱 Supports green IT initiatives by reducing carbon footprint.

Types of Data Center Cooling Systems

1. Air-Based Cooling Systems 🌬️

a) Traditional CRAC (Computer Room Air Conditioning) Units
✔ Uses chilled air circulation to cool the room.
✔ Lower upfront cost, but higher long-term energy use.
✔ Best for smaller data centers with low-density racks.

b) CRAH (Computer Room Air Handler) Units
✔ Uses chilled water from a central chiller plant instead of a built-in refrigerant compressor.
✔ More energy-efficient than CRAC units.
✔ Suitable for medium to large-scale data centers.

c) Hot & Cold Aisle Containment
✔ Separates hot & cold air to improve cooling efficiency.
✔ Reduces energy consumption by up to 40%.
✔ Ideal for high-density data centers.

2. Liquid-Based Cooling Systems 💧

a) Chilled Water Cooling
✔ Uses chilled water loops to remove heat.
✔ Highly efficient but requires extensive plumbing.
✔ Best for large-scale data centers.

b) Immersion Cooling
✔ Submerges servers in non-conductive liquid.
✔ Extreme efficiency – reduces cooling energy by up to 95%.
✔ Ideal for high-performance computing (HPC) and AI workloads.

c) Direct-to-Chip Liquid Cooling
✔ Coolant circulates through cold plates mounted directly on server components such as CPUs and GPUs.
✔ Prevents hotspots and boosts efficiency.
✔ Great for compact, high-density server racks.

3. Free Cooling (Economization) ❄️

✔ Uses outside air or water sources to cool servers.
✔ Low operating costs but depends on climate conditions.
✔ Ideal for data centers in cooler geographic regions.
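How much free cooling a site gets depends on how many hours the outdoor air is cold enough. The sketch below counts such hours under hypothetical assumptions: an 18 °C supply-air setpoint, a 4 K approach between outdoor air and supply air, and one invented day of temperatures. Real economizer controls also weigh humidity and air quality.

```python
def free_cooling_hours(hourly_temps_c, supply_setpoint_c=18.0, approach_k=4.0):
    """Count hours when outdoor air alone can meet the supply setpoint,
    i.e. when outdoor temperature + approach <= setpoint."""
    return sum(1 for t in hourly_temps_c if t + approach_k <= supply_setpoint_c)

# Hypothetical 24 hours of outdoor temperatures (°C) for one day.
day = [9, 8, 8, 7, 7, 8, 10, 12, 14, 16, 18, 19,
       20, 21, 21, 20, 18, 16, 14, 13, 12, 11, 10, 9]
hours = free_cooling_hours(day)
print(f"{hours} of {len(day)} hours could use free cooling")
```

Run over a full year of local weather data, the same count gives a first estimate of how much mechanical cooling an economizer could displace, which is why climate matters so much for this option.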

Comparison: Air Cooling vs. Liquid Cooling

| Feature | Air Cooling (CRAC/CRAH) | Liquid Cooling (Chilled Water, Immersion) |
|---|---|---|
| Efficiency | Moderate | High (immersion can cut cooling energy by up to 95%) |
| Energy Cost | Higher | Lower |
| Space Requirement | Large footprint | Compact |
| Upfront Cost | Lower | Higher |
| Maintenance | Simple | More complex |
| Best For | Small/Medium data centers | High-density, HPC, AI, Large data centers |

Key Benefits of Advanced Cooling Solutions

✅ Reduced energy consumption lowers operational costs.
✅ Enhanced server lifespan due to controlled temperatures.
✅ Improved efficiency for high-performance computing.
✅ Lower environmental impact with green cooling solutions.

Final Thoughts: Choosing the Right Cooling System

The best cooling solution depends on factors like data center size, energy efficiency goals, and budget. Traditional air cooling works for smaller setups, while liquid cooling and free cooling are better for high-density or large-scale operations.

Need Help Choosing the Best Cooling System for Your Data Center?

Let me know your data center size, power requirements, and efficiency goals, and I’ll help find the best solution!


Data Center Liquid Cooling Market - Forecast(2024 - 2030) - IndustryARC

The Data Center Liquid Cooling Market size is estimated at USD 4.48 billion in 2024 and is expected to reach USD 12.76 billion by 2029, growing at a CAGR of 23.31% during the forecast period (2024-2029). The increasing adoption of liquid cooling strategies such as dielectric cooling over air cooling to manage equipment temperature is boosting the Data Center Liquid Cooling Market. In addition, growing demand for room-level cooling for cloud computing applications is strongly driving growth in the data center cooling systems market during the forecast period 2022-2027.
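The quoted figures can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below uses only the 2024 and 2029 values given above; the implied rate lands close to the stated 23.31%, with the small gap consistent with rounding in the published figures.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years

# Figures quoted above: USD 4.48 billion (2024) to USD 12.76 billion (2029).
rate = cagr(4.48, 12.76, 2029 - 2024)
print(f"implied CAGR: {rate:.2%}")
print(f"4.48 compounded at 23.31% for 5 years: {project(4.48, 0.2331, 5):.2f}")
```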

#data#data centers#liquid cooling systems market#liquidcooling#datacenter#market#trends#markettrends#cloudcomputing


Available Cloud Computing Services at Fusion Dynamics

We Fuel The Digital Transformation Of Next-Gen Enterprises!

Fusion Dynamics provides future-ready IT and computing infrastructure that delivers high performance while being cost-efficient and sustainable. We envision, plan and build next-gen data and computing centers in close collaboration with our customers, addressing their business’s specific needs. Our turnkey solutions deliver best-in-class performance for all advanced computing applications such as HPC, Edge/Telco, Cloud Computing, and AI.

With over two decades of expertise in IT infrastructure implementation and an agile approach that matches the lightning-fast pace of new-age technology, we deliver future-proof solutions tailored to the niche requirements of various industries.

Our Services

We decode and optimise the end-to-end design and deployment of new-age data centers with our industry-vetted services.

System Design

When designing a cutting-edge data center from scratch, we follow a systematic and comprehensive approach. First, our front-end team connects with you to draw up a set of requirements based on your intended application, workload, and physical space. Following that, our engineering team defines the architecture of your system and dives deep into component selection to meet all your computing, storage, and networking requirements. With our highly configurable solutions, we help you formulate a system design with the best CPU-GPU configurations to match the desired performance, power consumption, and footprint of your data center.

Why Choose Us

We bring a potent combination of over two decades of experience in IT solutions and a dynamic approach to continuously evolve with the latest data storage, computing, and networking technology. Our team consists of domain experts who liaise with you throughout the end-to-end journey of setting up and operating an advanced data center.

With a profound understanding of modern digital requirements, backed by decades of industry experience, we work closely with your organisation to design the most efficient systems to catalyse innovation. From sourcing cutting-edge components from leading global technology providers to seamlessly integrating them for rapid deployment, we deliver state-of-the-art computing infrastructures to drive your growth!

What We Offer

The Fusion Dynamics Advantage!

At Fusion Dynamics, we believe that our responsibility goes beyond providing a computing solution: it extends to helping you build a high-performance, efficient, and sustainable digital-first business. Our offerings are carefully configured not only to fulfil your current organisational requirements but also to future-proof your technology infrastructure, with an emphasis on the following parameters:

Performance density

Rather than focusing solely on absolute processing power and storage, we strive to achieve the best performance-to-space ratio for your application. Our next-generation processors outperform the competition on processing as well as storage metrics.

Flexibility

Our solutions are configurable at practically every design layer, even down to the choice of processor architecture – ARM or x86. Our subject matter experts are here to assist you in designing the most streamlined and efficient configuration for your specific needs.

Scalability

We prioritise your current needs with an eye on your future targets. Deploying a scalable solution ensures operational efficiency as well as smooth and cost-effective infrastructure upgrades as you scale up.

Sustainability

Our focus on future-proofing your data center infrastructure includes the responsibility to manage its environmental impact. Our power- and space-efficient compute elements offer the highest core density and performance/watt ratios. Furthermore, our direct liquid cooling solutions help you minimise your energy expenditure. Therefore, our solutions allow rapid expansion of businesses without compromising on environmental footprint, helping you meet your sustainability goals.

Stability

Your compute and data infrastructure must operate at optimal performance levels irrespective of fluctuations in data payloads. We design systems that can withstand extreme fluctuations in workloads to guarantee operational stability for your data center.

Leverage our prowess in every aspect of computing technology to build a modern data center. Choose us as your technology partner to ride the next wave of digital evolution!

#Keywords#services on cloud computing#edge network services#available cloud computing services#cloud computing based services#cooling solutions#hpc cluster management software#cloud backups for business#platform as a service vendors#edge computing services#server cooling system#ai services providers#data centers cooling systems#integration platform as a service#https://www.tumblr.com/#cloud native application development#server cloud backups#edge computing solutions for telecom#the best cloud computing services#advanced cooling systems for cloud computing#c#data center cabling solutions#cloud backups for small business#future applications of cloud computing

Text

Efficient Cooling Solutions for Modern Data Centers

#cooling solutions for data centers#data center cooling solutions company#immersion cooling solutions for data centers#server immersion cooling solutions in india#data center cooling system manufacturers in hyderabad#data center cooling solutions company in mumbai#immersion cooling system for data centers in chennai#ecopod immersion cooling solutions for data centers in delhi#isopod immersion cooling system manufacturers in bangalore

Text

If anyone wants to know why every tech company in the world right now is clamoring for AI like drowned rats scrabbling to board a ship, I decided to make a post to explain what's happening.

(Disclaimer to start: I'm a software engineer who's been employed full time since 2018. I am not a historian nor an overconfident YouTube essayist, so this post is my working knowledge of what I see around me and the logical bridges between pieces.)

Okay anyway. The explanation starts further back than what's going on now. I'm gonna start with the year 2000. The Dot Com Bubble just spectacularly burst. The model of "we get the users first, we learn how to profit off them later" went out in a no-money-having bang (remember this, it will be relevant later). A lot of money was lost. A lot of people ended up out of a job. A lot of startup companies went under. Investors left with a sour taste in their mouth and, in general, investment in the internet stayed pretty cooled for that decade. This was, in my opinion, very good for the internet as it was an era not suffocating under the grip of mega-corporation oligarchs and was, instead, filled with Club Penguin and I Can Has Cheezburger websites.

Then around the 2010-2012 years, a few things happened. Interest rates got low, and then lower. Facebook got huge. The iPhone took off. And suddenly there was a huge new potential market of internet users and phone-havers, and the cheap money was available to start backing new tech startup companies trying to hop on this opportunity. Companies like Uber, Netflix, and Amazon either started in this time, or hit their ramp-up in these years by shifting focus to the internet and apps.

Now, every start-up tech company dreaming of being the next big thing has one thing in common: they need to start off by getting themselves massively in debt. Because before you can turn a profit you need to first spend money on employees and spend money on equipment and spend money on data centers and spend money on advertising and spend money on scale and and and

But also, everyone wants to be on the ship for The Next Big Thing that takes off to the moon.

So there is a mutual interest between new tech companies, and venture capitalists who are willing to invest $$$ into said new tech companies. Because if the venture capitalists can identify a prize pig and get in early, that money could come back to them 100-fold or 1,000-fold. In fact it hardly matters if they invest in 10 or 20 total bust projects along the way to find that unicorn.

But also, becoming profitable takes time. And that might mean being in debt for a long long time before that rocket ship takes off to make everyone onboard a gazillionaire.

But luckily, for tech startup bros and venture capitalists, being in debt in the 2010s was cheap, and it only got cheaper between 2010 and 2020. If people could secure loans for ~3% or 4% annual interest, well then a $100,000 loan only really costs $3,000 of interest a year to keep afloat. And if inflation is higher than that or at least similar, you're still beating the system.
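The numbers in that paragraph can be checked with a few lines of Python. This is an illustrative sketch. `annual_interest` treats the loan as simple interest on the full principal. `real_rate` uses the standard Fisher relation, (1 + nominal) / (1 + inflation) - 1, to show what "beating the system" means when inflation matches or exceeds the loan rate.

```python
def annual_interest(principal: float, rate: float) -> float:
    """Simple interest owed for one year on the full principal."""
    return principal * rate


def real_rate(nominal: float, inflation: float) -> float:
    """Inflation-adjusted (real) interest rate via the Fisher relation."""
    return (1 + nominal) / (1 + inflation) - 1


if __name__ == "__main__":
    print(annual_interest(100_000, 0.03))  # yearly interest on a $100,000 loan at 3%
    print(real_rate(0.03, 0.03))           # inflation equal to the loan rate
    print(real_rate(0.03, 0.04))           # inflation above the loan rate
```

A real rate of zero or below means the debt is not growing in inflation-adjusted terms, or is actually shrinking. That is the situation the post describes for the 2010s.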

So from 2010 through early 2022, times were good for tech companies. Startups could take off with massive growth, showing massive potential for something, and venture capitalists would throw infinite money at them in the hopes of pegging just one winner who will take off. And supporting the struggling investments or the long-haulers remained pretty cheap to keep funding.

You hear constantly about "Such and such app has 10-bazillion users gained over the last 10 years and has never once been profitable", yet the thing keeps chugging along because the investors backing it aren't stressed about the immediate future, and are still banking on that "eventually" when it learns how to really monetize its users and turn that profit.

The pandemic in 2020 took a magnifying-glass-in-the-sun effect to this, as EVERYTHING was forcibly turned online which pumped a ton of money and workers into tech investment. Simultaneously, money got really REALLY cheap, bottoming out with historic lows for interest rates.

Then the tide changed with the massive inflation that struck late 2021. Because this all-gas no-brakes state of things was also contributing to off-the-rails inflation (along with your standard-fare greedflation and price gouging, given the extremely convenient excuses of pandemic hardships and supply chain issues). The Federal Reserve whipped out interest rate hikes to try to curb this huge inflation, which is like a fire extinguisher dousing and suffocating your really-cool, actively-on-fire party where everyone else is burning but you're in the pool. And then they did this more, and then more. And the financial climate followed suit. And suddenly money was not cheap anymore, and new loans became expensive, because loans that used to compound at 2% a year are now compounding at 7 or 8% which, in the language of compounding, is a HUGE difference. A $100,000 loan at a 2% interest rate, if not repaid a single cent in 10 years, accrues to $121,899. A $100,000 loan at an 8% interest rate, if not repaid a single cent in 10 years, more than doubles to $215,892.
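The two loan figures above follow from annual compounding: balance = principal × (1 + rate)^years. The sketch below assumes once-a-year compounding with no payments, as the post describes. Real loans may compound monthly or daily, which would give slightly higher balances.

```python
def compound_balance(principal: float, annual_rate: float, years: int) -> float:
    """Balance after `years` of annual compounding with no repayments."""
    return principal * (1 + annual_rate) ** years


if __name__ == "__main__":
    for rate in (0.02, 0.08):
        balance = compound_balance(100_000, rate, 10)
        print(f"{rate:.0%}: ${balance:,.0f}")
```

Running it reproduces the $121,899 and $215,892 figures quoted in the post.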

Now it is scary and risky to throw money at "could eventually be profitable" tech companies. Now investors are watching companies burn through their current funding and, when the companies come back asking for more, investors are tightening their coin purses instead. The bill is coming due. The free money is drying up and companies are under compounding pressure to produce a profit for their waiting investors who are now done waiting.

You get enshittification. You get quality going down and price going up. You get "now that you're a captive audience here, we're forcing ads or we're forcing subscriptions on you." Don't get me wrong, the plan was ALWAYS to monetize the users. It's just that it's come earlier than expected, with way more feet-to-the-fire than these companies were expecting. ESPECIALLY with Wall Street as the other factor in funding (public) companies, where Wall Street exhibits roughly the same temperament as a baby screaming crying upset that it's soiled its own diaper (maybe that's too mean a comparison to babies), and now companies are being put through the wringer for anything LESS than infinite growth that Wall Street demands of them.

Internal to the tech industry, you get MASSIVE wide-spread layoffs. You get an industry that used to be easy to land multiple job offers shriveling up and leaving recent graduates in a desperately awful situation where no company is hiring and the market is flooded with laid-off workers trying to get back on their feet.

Because those coin-purse-clutching investors DO love virtue-signaling efforts from companies that say "See! We're not being frivolous with your money! We only spend on the essentials." And this is true even for MASSIVE, PROFITABLE companies, because those companies' value is based on the Rich Person Feeling Graph (their stock) rather than the literal profit money. A company making a genuine gazillion dollars a year still tears through layoffs and freezes hiring and removes the free batteries from the printer room (totally not speaking from experience, surely) because the investors LOVE when you cut costs and take away employee perks. The "beer on tap, ping pong table in the common area" era of tech is drying up. And we're still unionless.

Never mind that last part.

And then in early 2023, AI (more specifically, ChatGPT which is OpenAI's Large Language Model creation) tears its way into the tech scene with a meteor's amount of momentum. Here's Microsoft's prize pig, which it invested heavily in and is gallivanting around the pig-show with, to the desperate jealousy and rapture of every other tech company and investor wishing it had that pig. And for the first time since the interest rate hikes, investors have dollar signs in their eyes, both venture capital and Wall Street alike. They're willing to restart the hose of money (even with the new risk) because this feels big enough for them to take the risk.

Now all these companies, who were in varying stages of sweating as their bill came due, or wringing their hands as their stock prices tanked, see a single glorious gold-plated rocket up out of here, the likes of which haven't been seen since the free money days. It's their ticket to buy time, and buy investors, and say "see THIS is what will wring money forth, finally, we promise, just let us show you."

To be clear, AI is NOT profitable yet. It's a money-sink. Perhaps a money-black-hole. But everyone in the space is so wowed by it that there is a wide-spread and powerful conviction that it will become profitable and earn its keep. (Let's be real, half of that profit "potential" is the promise of automating away jobs of pesky employees who peskily cost money.) It's a tech-space industrial revolution that will automate away skilled jobs, and getting in on the ground floor is the absolute best thing you can do to get your pie slice's worth.

It's the thing that will win investors back. It's the thing that will get the investment money coming in again (or, get it second-hand if the company can be the PROVIDER of something needed for AI, which other companies with venture backing will pay handsomely for). It's the thing companies are terrified of missing out on, lest it leave them utterly irrelevant in a future where not having AI-integration is like not having a mobile phone app for your company or not having a website.

So I guess to reiterate on my earlier point:

Drowned rats. Swimming to the one ship in sight.

Text

Data Center Cooling Systems Market - Forecast (2024-2030)

Data Center Cooling Systems Market Overview

The data center cooling market size was valued at USD 13.51 billion in 2022 and is projected to grow from USD 14.85 billion in 2023 to USD 30.31 billion by 2030. The increasing adoption of cooling strategies such as free cooling, air containment and closed-loop cooling to manage equipment temperature is boosting the data center cooling system market. In addition, growing demand for room-level cooling using down-flow computer-room air conditioners (CRACs) is strongly driving the data center cooling system market size during the forecast period 2021-2026. Outsourcing data center services to a colocation facility frees up valuable IT capacity, letting a company concentrate on research and development instead of continually managing the ins and outs of its own network. Money that would otherwise be spent on running a data center can go into market analysis or product creation, giving companies additional ways to make the most of their current capital and achieve their business objectives.
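The forecast figures imply a compound annual growth rate (CAGR), computed as (end / start)^(1 / years) - 1. The sketch below applies that standard formula to the market sizes quoted above. It is a back-of-the-envelope check, not a growth rate stated in the report.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Market sizes in USD billions, as quoted in the overview above.
    print(f"2023-2030: {cagr(14.85, 30.31, 7):.1%}")
    print(f"2022-2023: {cagr(13.51, 14.85, 1):.1%}")
```

With these inputs, the implied CAGR for 2023 to 2030 comes out at roughly 10.7% per year.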

In an era dominated by digital transformation, the demand for robust and efficient data center cooling systems has never been more critical. The Global Data Center Cooling Systems Market is witnessing a paradigm shift towards sustainability, as businesses recognize the need for energy-efficient solutions to support their growing digital infrastructure.

Report Coverage

The report: “Data Center Cooling Systems Market Forecast (2021-2026)”, by IndustryARC, covers an in-depth analysis of the following segments of the Data Center Cooling Systems Market.

By Cooling Strategies: Free Cooling (Air-Side Economization, Water-Side Economization), Air Containment (Cold-Aisle Containment and Hot-Aisle Containment), Closed Loop Cooling.

By End-use Types: Data Center Type (Tier 1, Tier 2, Tier 3 and Tier 4).

By Industry Verticals: Telecommunication (Outdoor Cabin, Mobile network computer rooms and Railway switchgear), Oil and Gas/Energy/Utilities, Healthcare, IT/ITES/Cloud Service Providers, Colocation, Content & Content Delivery Network, Education, Banking and Financial Services, Government, Food & Beverages, Manufacturing/Mining, Retail and others.

By Cooling Technique: Rack/Row Based and Room Based

By Service: Installation/Deployment Services, Maintenance Services and Monitoring Software (DCIM and Remote Climate Monitoring Services).

By Geography: North America (U.S., Canada, Mexico), South America (Brazil, Argentina, Ecuador, Peru, Colombia, Costa Rica and others), Europe (Germany, UK, France, Italy, Spain, Russia, Netherlands, Denmark and others), APAC (China, Japan, India, South Korea, Australia, Taiwan, Malaysia and others), and RoW (Middle East and Africa).

Key Takeaways

In 2020, the Data Center Cooling System market was dominated by the North American region, owing to the adoption of environmentally friendly solutions in data centers.

The integration of artificial intelligence (AI) algorithms that predict how equipment settings raise or lower energy usage in data centers is further accelerating market growth.

The growing demand for environmentally friendly solutions that reduce data centers' carbon footprints is fueling demand for data center cooling systems.

Inefficient power performance can force cooling systems in data centers to shut down, creating financial risk for businesses. This factor is hampering the growth of the market.

Data Center Cooling Systems Market Segment Analysis - By Industry Vertical

The telecommunication segment held the largest share of the Data Center Cooling System market in 2020, at 34.1%. Demand for effective data centers is growing rapidly, pushing the telecommunication sector to keep its facilities operational, which in turn puts pressure on reliability, energy consumption and maintenance. The need to efficiently manage thermal loads in telecom facilities and electronic enclosures is boosting the data center cooling systems market. In addition, the growing use of data center cooling systems allows telecom customers to install more communication equipment.

Data Center Cooling Systems Market Segment Analysis – By End User

The Data Center Cooling Market is segmented into Tier 1, Tier 2 and Tier 3 on the basis of organization size. The Tier 1 segment is anticipated to hold the highest market share, at 49.4% in 2020. Commoditization and ever-increasing changes in data center architecture have tilted the balance in favor of outsourced colocation. Colocation providers have facilities-construction experience and pricing power through economies of scale. This lets them provide electricity, energy and cooling at rates that individual businesses building their own data centers cannot access. Consequently, colocation service providers operate their facilities considerably more efficiently, and the return-on-investment model no longer supports businesses that develop their own mission-critical facilities. Another major driver for the new IT network is the drastic rise in demand for higher power densities. Virtualization and the continuing push to accommodate more workloads within the same footprint have created problems for existing purpose-built data centers. From a TCO (total cost of ownership) viewpoint, retrofitting an existing building with the electricity and cooling systems required to meet network demands is significantly more expensive than using new colocation facilities. These two considerations have tilted the scales in favor of colocation for all but the very biggest installations, such as those of Amazon, Apple, Google and Microsoft.

Data Center Cooling Systems Market Segment Analysis - By Geography

In 2020, North America dominated the Data Center Cooling System market with a share of more than 38.1%, followed by Europe and APAC. The adoption of data center cooling technologies such as calibrated vectored cooling and chilled water systems, among others, by mid-to-large-sized organizations in the US is driving market growth in this region. Additionally, US-based data centers and companies are focusing mainly on cost-effective and environmentally friendly solutions, which creates demand for efficient data center cooling systems. Furthermore, the growing number of data centers in the US is propelling the growth of the data center cooling system market in this region.

Data Center Cooling Systems Market Drivers

Rising need for Environmental-Friendly Solutions

Growing demand for money-saving, eco-friendly solutions that reduce energy consumption in the IT and telecom industries is boosting demand for data center cooling systems. The development of ultra-low-carbon-footprint data centers by key players such as Schneider Electric is further supporting market growth. The push to reduce the carbon footprint of data centers is therefore escalating the need for environmentally friendly solutions, which will in turn drive the data center cooling system market.

Integration of Artificial Intelligence in the Cooling Systems

The deployment of advanced technology has greatly enhanced various facilities and systems, including data center cooling systems. It has also led to the integration of artificial intelligence (AI) into data center cooling, with sensors gathering data every five minutes. AI-based algorithms have become a major factor, predicting how different combinations of settings in a data center will raise or lower energy use. As companies look for ideal ways to maintain temperatures in their data centers, they are increasingly embracing AI, which is driving market growth.
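To make the five-minute sensor loop concrete, here is a deliberately simple stand-in for the prediction step: a least-squares straight-line fit over recent temperature readings, extrapolated forward. This is a hypothetical sketch. Commercial AI cooling systems use far richer models, and the function name and readings here are illustrative assumptions.

```python
from typing import Sequence

SAMPLE_INTERVAL_MIN = 5  # sensors report every five minutes, per the text


def linear_forecast(readings: Sequence[float], steps_ahead: int = 1) -> float:
    """Fit y = a + b*x by least squares (x = sample index) and extrapolate.

    `steps_ahead` is counted in samples, so 1 step = SAMPLE_INTERVAL_MIN minutes.
    """
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(readings) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(readings))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + steps_ahead)


if __name__ == "__main__":
    # Hypothetical rack inlet temperatures (deg C), one reading every 5 minutes.
    inlet_temps = [22.0, 22.5, 23.0, 23.5]
    steps = 60 // SAMPLE_INTERVAL_MIN  # look one hour ahead
    predicted = linear_forecast(inlet_temps, steps_ahead=steps)
    print(f"Predicted inlet temperature in 1 hour: {predicted:.1f} C")
```

A straight-line trend is the simplest possible predictor. It shows the shape of the loop (sample, fit, extrapolate, act) without claiming to match any vendor's algorithm.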

Data Center Cooling Systems Market Challenges

Inefficient Power Performance

Data centers require a huge amount of power to run effectively, so inefficient power performance is a critical issue for smooth operation. Poor power performance can slow down or shut down the cooling systems, which in turn forces the data center to close to avoid damage to equipment. Such a shutdown disrupts business operations and creates financial risk for the business. This key factor is therefore hampering the growth of the data center cooling system market.

Data Center Cooling Systems Market Landscape

Technology launches, acquisitions and R&D activities are key strategies adopted by players in the Data Center Cooling Systems market. In 2020, the Data Center Cooling Systems market was consolidated among major players including Emerson Network Power, APC, Rittal Corporation, Airedale International, Degree Controls Inc., Schneider Electric, Equinix, Cloud Dynamics Inc., KyotoCooling BV and Simon, among others.

Acquisitions/Technology Launches

In July 2020, Green Revolution Cooling (GRC), a major provider of single-phase immersion cooling for data centers, announced the closing of a $7 million Series B investment. The funding will allow GRC to raise additional capital to support new product development and strategic partnerships. It is also expected to build on GRC's OEM agreement with Dell, which offers warranty coverage for Dell servers in GRC immersion systems. Other milestones listed alongside the funding include an OEM agreement with HPE, pilot projects leading to production installations, expansion at many existing customer locations, and winning phase one of the Air Force's AFWERX initiative.

In May 2020, Schneider Electric partnered with EcoDataCenter to develop an ultra-low-carbon-footprint HPC colocation data center in Falun, Sweden. The facility is expected to be among the most sustainable data centers in the Nordics. Schneider's EcoStruxure Building Operation, Galaxy VX UPS with lithium-ion batteries, and MasterPact MTZ are just some of the solutions involved.

#Data Center Cooling Systems Market#Data Center Cooling Systems Market Share#Data Center Cooling Systems Market Size#Data Center Cooling Systems Market Forecast#Data Center Cooling Systems Market Report#Data Center Cooling Systems Market Growth

Text

ISOPod by Refroid: Revolutionizing Ice Storage and Cooling Solutions

Introduction:

In the world of food preservation and beverage service, the importance of reliable ice storage cannot be overstated. Whether for commercial use in restaurants, bars, and hotels or personal use in homes, a dependable ice storage solution is crucial. Enter the ISOPod by Refroid, a cutting-edge innovation that is set to transform how we store and utilize ice. This article delves into the features, benefits, and applications of the ISOPod, showcasing why it stands out in the market.

Innovative Design and Technology:

The ISOPod by Refroid represents a leap forward in ice storage technology. At its core, the ISOPod boasts a sleek, ergonomic design that maximizes storage efficiency while minimizing the space it occupies. As in ISOPod cooling units for data centers in Mumbai, this compact footprint makes it an ideal choice for both small-scale and large-scale operations. The use of high-grade, insulated materials ensures that the ice remains solid for extended periods, reducing the frequency of refills and thereby enhancing convenience.

Efficiency and Sustainability:

In today’s world, sustainability is a key consideration for any product, and the ISOPod does not disappoint. Refroid has incorporated eco-friendly components and energy-efficient technology to minimize the environmental impact. As with ISOPod cooling systems for data centers in Bangalore, the ISOPod uses a low-energy cooling system that not only reduces electricity consumption but also decreases operational costs. This dual benefit of cost-effectiveness and environmental responsibility makes the ISOPod an attractive option for eco-conscious businesses and individuals.

Applications Across Industries:

Beyond the realm of ice storage, Refroid also excels as one of the premier ISOPod immersion cooling system manufacturers in India. This innovative technology is pivotal for ISOPod cooling units for data centers in Mumbai and ISOPod cooling systems for data centers in Bangalore. These systems provide reliable and efficient cooling, essential for maintaining the optimal performance of data centers.

Versatility and Customization:

Furthermore, ISOPod liquid cooling solutions for data centers in Hyderabad highlight the adaptability of Refroid’s technology to various environments, ensuring that critical infrastructure remains operational under optimal conditions. This adaptability makes ISOPod a valuable asset in hospitals, clinics, and tech hubs where maintaining the integrity of stored items and equipment is crucial.

Conclusion:

The ISOPod by Refroid transcends the traditional boundaries of ice storage, offering a comprehensive solution that meets the diverse needs of modern users. With its innovative design, superior temperature regulation, and eco-friendly components, the ISOPod stands out as a leading choice for both personal and commercial applications. Moreover, Refroid’s expertise extends beyond ice storage to advanced cooling solutions, positioning them as a key player among ISOPod immersion cooling system manufacturers in India. Their ISOPod cooling units for data centers in Mumbai, Bangalore, and Hyderabad underscore their versatility and commitment to quality.

Text

Eco-Friendly and Efficient Data Center Cooling Systems in Chennai

In the fast-evolving digital landscape, data centers play a crucial role in ensuring seamless IT operations. However, maintaining optimal temperatures within these facilities is a significant challenge due to the immense heat generated by high-performance computing equipment. This is where data center cooling solutions in Chennai come into play. With advanced cooling technologies, these solutions help maintain efficiency, reduce energy consumption, and enhance the longevity of critical infrastructure.

Importance of Data Center Cooling Systems

Data centers house thousands of servers, network equipment, and storage devices that generate substantial heat. Without proper cooling, excessive heat can lead to equipment failures, downtime, and increased operational costs. Data center cooling system manufacturers in Chennai provide innovative and energy-efficient solutions that ensure optimal temperature control, contributing to the overall sustainability of data centers.

Leading Data Center Cooling Solutions Companies in Chennai

Chennai is home to several reputed data center cooling solutions companies that specialize in designing, manufacturing, and installing state-of-the-art cooling systems. These companies offer a range of solutions, including:

Precision Air Conditioning: Ensures stable temperature and humidity levels, essential for sensitive IT equipment.

Liquid Cooling Solutions: Uses advanced techniques like direct-to-chip cooling to improve energy efficiency.

In-Row Cooling Systems: Placed between server racks to provide targeted cooling and reduce hotspots.

Cold Aisle & Hot Aisle Containment: Improves airflow management and minimizes energy wastage.
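One way to see why containment and targeted in-row cooling matter is the basic heat-balance relation for air: airflow = heat load / (air density × specific heat × temperature rise). Keeping hot and cold air from mixing lets the cooling system work with a larger temperature rise across the servers, so less air has to be moved for the same load. The sketch below uses typical textbook values for air (about 1.2 kg/m³ and 1005 J/(kg·K)). The 10 kW rack and the temperature rises are hypothetical.

```python
AIR_DENSITY = 1.2            # kg/m^3, approximate for air near room temperature
AIR_SPECIFIC_HEAT = 1005.0   # J/(kg*K), approximate for dry air


def required_airflow_m3s(heat_load_w: float, delta_t_k: float) -> float:
    """Volumetric airflow (m^3/s) needed to carry away `heat_load_w` watts
    when the air warms by `delta_t_k` kelvin passing through the equipment."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_k)


if __name__ == "__main__":
    rack_load_w = 10_000  # hypothetical 10 kW rack
    for delta_t in (10, 15):
        flow = required_airflow_m3s(rack_load_w, delta_t)
        print(f"dT = {delta_t} K -> {flow:.2f} m^3/s")
```

In this example, raising the temperature rise from 10 K to 15 K cuts the required airflow by a third, which reduces the fan work needed.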

Key Features of Data Center Cooling Systems

Data center cooling system providers in Chennai focus on integrating innovative technologies to enhance efficiency and sustainability. Some key features include:

Energy Efficiency: Advanced cooling systems reduce power consumption and operational costs.

Scalability: Modular cooling solutions can be scaled as per the data center’s growing needs.

Smart Monitoring & Automation: IoT-enabled cooling systems offer real-time monitoring and predictive maintenance.

Eco-Friendly Solutions: Adoption of green cooling technologies minimizes the carbon footprint.
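The smart-monitoring feature above often comes down to comparing live sensor readings against a target envelope. As a minimal sketch, the code below flags racks whose inlet temperature falls outside 18-27 °C, the recommended range in ASHRAE's thermal guidelines for data processing environments. The rack names and readings are hypothetical, and a real IoT system would pull readings from its own sensor API.

```python
from typing import Dict, List, Tuple

# ASHRAE recommended inlet temperature envelope (deg C).
RECOMMENDED_MIN_C = 18.0
RECOMMENDED_MAX_C = 27.0


def out_of_range(readings: Dict[str, float],
                 low: float = RECOMMENDED_MIN_C,
                 high: float = RECOMMENDED_MAX_C) -> List[Tuple[str, float, str]]:
    """Return (rack, temperature, status) for each rack outside [low, high],
    sorted by rack name; status is 'too cold' or 'too hot'."""
    alerts = []
    for rack, temp in sorted(readings.items()):
        if temp < low:
            alerts.append((rack, temp, "too cold"))
        elif temp > high:
            alerts.append((rack, temp, "too hot"))
    return alerts


if __name__ == "__main__":
    # Hypothetical latest inlet readings from IoT sensors.
    latest = {"rack-A1": 22.4, "rack-A2": 28.1, "rack-B1": 17.2, "rack-B2": 25.9}
    for rack, temp, status in out_of_range(latest):
        print(f"ALERT {rack}: {temp:.1f} C ({status})")
```

Predictive maintenance would extend this by feeding the same readings into a trend model, so that operators are warned before a threshold is crossed rather than after.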

Choosing the Right Data Center Cooling Solution Provider in Chennai

Selecting the best data center cooling system manufacturers in Chennai requires careful consideration of factors such as experience, technology expertise, energy efficiency, and customer support. The right provider ensures not only effective cooling but also cost savings and improved operational efficiency.

Why is Chennai a Hub for Data Center Cooling Solutions?

Chennai has emerged as a significant player in the data center industry due to its robust IT infrastructure and favorable climatic conditions. The city boasts several data center cooling solutions companies offering cutting-edge technologies that cater to both large-scale enterprises and small businesses. These companies focus on sustainable cooling practices, ensuring compliance with global standards.

Conclusion

With the rising demand for efficient and eco-friendly data center cooling solutions, Chennai has become a key hub for innovative cooling technologies. Whether you are looking for precision cooling, liquid cooling, or energy-efficient air cooling solutions, data center cooling system manufacturers in Chennai offer a wide range of options tailored to your needs. For a high-quality data center cooling system manufacturer in Chennai, visit Refroid, a leading provider specializing in cutting-edge cooling technologies that enhance data center performance and efficiency.

#data center cooling solutions in chennai#data center cooling solution providers in chennai#data center cooling system in chennai

0 notes

Text

Eco-Friendly and Efficient Data Center Cooling Systems in Chennai,

In the fast-evolving digital landscape, data centers play a crucial role in ensuring seamless IT operations. However, maintaining optimal temperatures within these facilities is a significant challenge due to the immense heat generated by high-performance computing equipment. This is where data center cooling solutions in Chennai come into play. With advanced cooling technologies, these solutions help maintain efficiency, reduce energy consumption, and enhance the longevity of critical infrastructure.

Importance of Data Center Cooling Systems

Data centers house thousands of servers, network equipment, and storage devices that generate substantial heat. Without proper cooling, excessive heat can lead to equipment failures, downtime, and increased operational costs. Data center cooling system manufacturers in Chennai provide innovative and energy-efficient solutions that ensure optimal temperature control, contributing to the overall sustainability of data centers.

Leading Data Center Cooling Solutions Companies in Chennai

Chennai is home to several reputed data center cooling solutions companies that specialize in designing, manufacturing, and installing state-of-the-art cooling systems. These companies offer a range of solutions, including:

Precision Air Conditioning: Ensures stable temperature and humidity levels, essential for sensitive IT equipment.

Liquid Cooling Solutions: Use techniques such as direct-to-chip cooling, in which coolant flows through cold plates mounted on processors, to remove heat more efficiently than air alone.

In-Row Cooling Systems: Placed between server racks to provide targeted cooling and reduce hotspots.

Cold Aisle & Hot Aisle Containment: Separates cold supply air from hot exhaust air so the two streams do not mix, improving airflow management and reducing wasted cooling energy.

Key Features of Data Center Cooling Systems

Data center cooling system providers in Chennai focus on integrating innovative technologies to enhance efficiency and sustainability. Some key features include:

Energy Efficiency: Advanced cooling systems reduce power consumption and operational costs.

Scalability: Modular cooling solutions can be expanded as the data center grows.

Smart Monitoring & Automation: IoT-enabled cooling systems offer real-time monitoring and predictive maintenance.

Eco-Friendly Solutions: Adopting green cooling technologies reduces the facility's carbon footprint.
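To make the "smart monitoring" idea more concrete, here is a minimal sketch of how a monitoring system might flag sustained overheating from rack inlet temperature readings. It assumes readings arrive one at a time in °C and averages them over a short rolling window so a single spike does not trigger an alert. The class name InletMonitor, the window size, and the 27 °C limit (the upper end of the commonly cited ASHRAE recommended inlet range) are illustrative assumptions, not taken from any specific vendor's product.

```python
from collections import deque
from statistics import mean

# Illustrative alert limit in °C (assumption: upper end of the commonly
# cited ASHRAE recommended inlet range); real systems set this per site.
INLET_LIMIT_C = 27.0


class InletMonitor:
    """Hypothetical monitor: flags when the rolling average inlet temperature exceeds a limit."""

    def __init__(self, window: int = 5, limit_c: float = INLET_LIMIT_C):
        # Keep only the most recent `window` readings.
        self.readings = deque(maxlen=window)
        self.limit_c = limit_c

    def add(self, temp_c: float) -> bool:
        """Record one reading; return True if the rolling average is above the limit."""
        self.readings.append(temp_c)
        return mean(self.readings) > self.limit_c


if __name__ == "__main__":
    monitor = InletMonitor(window=3)
    for t in [24.0, 26.5, 28.0, 29.5, 30.0]:
        print(t, monitor.add(t))
```

Averaging over a window trades a little reaction time for fewer false alarms; a production system would typically combine this with per-sensor limits and trend-based predictive maintenance.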

Choosing the Right Data Center Cooling Solution Provider in Chennai

Selecting the right data center cooling system manufacturer in Chennai requires careful consideration of factors such as experience, technical expertise, energy efficiency, and customer support. The right provider delivers not only effective cooling but also cost savings and improved operational efficiency.

Why is Chennai a Hub for Data Center Cooling Solutions?

Chennai has emerged as a significant player in the data center industry thanks to its robust IT infrastructure, and its hot, humid climate creates strong local demand for effective cooling. The city is home to several data center cooling solutions companies offering modern technologies for both large enterprises and small businesses. These companies focus on sustainable cooling practices and compliance with global standards.

Conclusion

With the rising demand for efficient and eco-friendly data center cooling solutions, Chennai has become a key hub for innovative cooling technologies. Whether you are looking for precision cooling, liquid cooling, or energy-efficient air cooling solutions, data center cooling system manufacturers in Chennai offer a wide range of options tailored to your needs. For high-quality data center cooling systems in Chennai, visit Refroid, a leading provider specializing in cooling technologies that enhance data center performance and efficiency.

#data center cooling system manufacturers in chennai#data center cooling solutions company in chennai#data center cooling solutions in chennai
