#data appending services

Explore tagged Tumblr posts

Text

What Are Data Appending Services & Why Your B2B Business Needs Them?

Many education and training businesses struggle with outdated or incomplete contact lists. Emails bounce back, calls go unanswered, and promising leads slip away. This happens because databases often have missing or incorrect details.

That’s where data appending services help. They add phone numbers, job titles, emails, and other important information to your records, updating and enriching them. This helps your marketing and sales teams reach the right people, whether HR managers seeking corporate training or educational institutions interested in new programs.

With precise data, email marketing works better, outreach becomes simpler, and lead generation improves. You reach actual decision-makers rather than wasting time on faulty contact lists.

Keeping data current is essential for education organizations that depend on strong business-to-business relationships. Accurate data is associated with more opportunities, better engagement, and higher conversions. This post explains how data appending works, why it's crucial for generating business-to-business leads, and how to pick the best provider for your company.

What Are Data Appending Services?

Data appending services help businesses fix and improve their contact lists. They fill in missing details like emails, phone numbers, job titles, and company names. This makes it easier to reach the right people.

Over time, business data becomes outdated. People change jobs, companies switch emails, and phone numbers get disconnected. If your database isn’t updated, your emails bounce, calls go unanswered, and marketing campaigns fail.

Data appending solves this problem. It compares your old records with reliable databases, finds missing or incorrect details, and updates them. This keeps your contact list accurate and useful.
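The matching step described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular provider works: the `REFERENCE` table, the field names, and the `append_record` helper are all hypothetical, and the sketch only fills in missing fields (it does not try to detect or correct wrong ones).

```python
# Hypothetical reference data, keyed by a normalized (name, company) pair.
REFERENCE = {
    ("jane doe", "acme training"): {
        "email": "jane.doe@example.com",
        "phone": "+1-555-0100",
        "job_title": "HR Manager",
    },
}


def match_key(record: dict) -> tuple:
    """Build a case- and whitespace-insensitive key used to match records."""
    name = " ".join((record.get("name") or "").lower().split())
    company = " ".join((record.get("company") or "").lower().split())
    return (name, company)


def append_record(record: dict, reference: dict = REFERENCE) -> dict:
    """Return a copy of `record` with empty fields filled from the reference."""
    enriched = dict(record)
    match = reference.get(match_key(record))
    if match is None:
        return enriched  # no match found: leave the record as it was
    for field, value in match.items():
        if not enriched.get(field):  # only fill missing or empty fields
            enriched[field] = value
    return enriched
```

Calling `append_record({"name": "Jane  Doe", "company": "ACME Training", "email": ""})` would fill in the email, phone, and job title from the reference entry, while any value the record already holds is left alone.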

There are different types of data appending:

Email appending – Adds or updates email addresses.

Phone appending – Finds correct phone numbers.

Demographic appending – Adds details like job title and company size.

Social media appending – Links contacts to their LinkedIn or Twitter profiles.

For education and training businesses, this means better outreach to HR managers, schools, and corporate clients. Instead of wasting time on bad data, you connect with real decision-makers.

Why Your Education & Training Business Needs Data Appending Services?

Reaching the right people is the key to growing any education or training business. But if your contact list is outdated, your emails won’t be read, and your calls won’t be answered. Data appending services help fix this by updating missing or incorrect information.

Better Lead Generation

If you’re targeting schools, universities, or companies for training programs, you need accurate contacts. Data appending ensures you have the right emails and phone numbers of decision-makers like HR managers and training coordinators.

Improved Email Marketing

Email campaigns only work if they reach the inbox. With updated emails, you reduce bounce rates and improve open rates. This means better engagement and more leads.

Stronger Sales Outreach

Sales teams waste time on bad data. With correct phone numbers and job titles, they connect with the right people faster, leading to higher conversions.

Saves Time and Resources

Instead of manually searching for updated contacts, your team can focus on closing deals and building relationships.

Keeps You Compliant

Outdated databases can put you at odds with data privacy rules. A reputable provider sources and handles appended data in line with laws such as GDPR and CCPA, which helps keep your outreach safe and ethical.

For education businesses, clean data means more enrollments, stronger partnerships, and better marketing results.

How to Choose the Right Data Appending Service for Your Education Business?

Not all data appending services are the same. Choosing the right one ensures your contact lists stay accurate, helping you connect with schools, universities, and corporate training clients. Here’s what to look for:

1. Accuracy and Reliability

The service should use trusted sources to update your data. Ask how they verify information to avoid errors.

2. Industry Experience

A provider that works with education businesses understands your needs better. They can help you find the right contacts, such as training managers and HR decision-makers.

3. CRM and Email Integration

Updating contacts should be simple. Choose a service that works with your CRM and email marketing tools. This saves time and reduces manual work.

4. Data Privacy Compliance

Ensure the service follows data protection laws like GDPR and CCPA. This keeps your outreach legal and ethical.

5. Customer Support

A good provider offers help when you need it. Look for one that answers questions quickly and provides ongoing support.

The right data appending service helps your education business reach more prospects, improve marketing, and boost enrollments.

The Future of Data Appending in the Education & Training Industry

As education and training businesses rely more on digital outreach, keeping contact lists updated will become even more important. Data appending services are changing to meet this demand, making it easier to reach the right people.

1. AI and Automation

New tools powered by artificial intelligence (AI) are making data appending faster and more accurate. These systems scan large databases in seconds, ensuring your contact list stays fresh without manual work.

2. Real-Time Data Updates

Instead of updating records once in a while, businesses will soon have access to real-time data updates. This means fewer bounced emails and faster connections with decision-makers.

3. Predictive Analytics

Predictive tools will help businesses find the best leads by analyzing past data. This can help education providers focus on contacts most likely to enroll or invest in training programs.

4. Stronger Privacy Measures

As data protection laws grow stricter, data appending services will need to follow even tighter security rules. Choosing a provider that values privacy will be key.

In the future, businesses that keep their data clean and updated will have a big advantage. Staying ahead with the right tools can mean better outreach, more leads, and stronger relationships.

Conclusion & Call to Action

Having the right contact information can make a big difference in your education or training business. Outdated data leads to missed opportunities, but a B2B data company offering data appending services can keep your records up to date. With current phone numbers, email addresses, and job titles, you can reach decision-makers more quickly and improve your marketing results.

As technology advances, data appending will become even more accurate and effective. Businesses that invest in clean data will see higher engagement, better conversions, and stronger connections with potential customers.

If you're ready to improve outreach, update your contact lists today. Find a trusted B2B data company that meets your needs and start targeting the right people now. Accurate data leads to better results. Don’t let outdated information hold your business back.

0 notes

Text

Enhance your customer insights with data appending services. By enriching existing records with updated details, businesses can achieve a complete, accurate view of their customers. This leads to improved personalization, better decision-making, and stronger relationships. Optimize your marketing and engagement strategies by leveraging data appending for a competitive edge.

0 notes

Text

Boost Your Marketing Efforts with Data Appending Services

Improve the quality of your customer data with IBC Connect's top-notch data appending services. Our expert team utilizes advanced techniques to enrich your database, adding missing or outdated information.

0 notes

Text

BizKonnect works in the Actionable Sales Intelligence space. It provides intelligence to sales and marketing people, such as lists of companies using specific technologies, and also assists in personalized campaigns. BizKonnect can be your data partner for cleaning up existing CRM data.

#Qualified and Personalized Campaign#CRM Data refresh#Data Services#contact list#data append#lead generation network#Lead Qualification Services#virtual sales assistant#Sales Research#Sales Analysis#HR#Hospitality#e commerce#Digitial Media#eLearning#List of companies using specific technologies#database marketing

0 notes

Text

by Luke Rosiak

More than 80 media outlets were forced to issue a correction after running an Associated Press story that alleged that 40,000 civilians had been killed in the Gaza Strip.

An August 18 AP story said that Vice President Kamala Harris had been “vocal” about the need to protect civilians in the Israel-Hamas war, and that the “civilian death toll has now exceeded 40,000.”

But “not even Hamas has alleged that more than 40,000 Palestinian civilians in Gaza have been killed in the war between Israel and the terror organization,” according to the Committee for Accuracy in Middle East Reporting and Analysis (CAMERA), which monitors Arab media.

“While the Hamas-controlled Ministry of Health in Gaza has reported over 40,000 total deaths among Gaza’s residents, its data does not distinguish between civilians and combatants. Israeli sources, meanwhile, estimate that over 17,000 of those killed are Hamas combatants,” it said.

AP appended a correction to its story on August 19.

The outlet also revised the article’s text to say “More than 40,000 Palestinians have been killed in the Israel-Hamas war in Gaza, the territory’s Hamas-controlled Health Ministry says, but how many are civilians is unknown. The ministry does not distinguish between civilians and militants in its count. Israel says it has killed more than 17,000 militants in the war.”

Media outlets who carried the wire service’s article also issued corrections.

But CAMERA said that NBC affiliates in Los Angeles, Chicago, and New York had still not made the correction three days after being notified.

83 notes

Text

TARGET DECISION MAKER DATABASE FOR YOUR BUSINESS

Does your marketing team require a defined target audience?

At Navigant, our Target Decision Maker (TDM) Database Services are administered by a distinct, specialized team of professionals. This team excels in various data-related tasks, including data sourcing, organizational data profiling, data cleansing, data verification, data validation, and data append.

Our backend team goes beyond mere data extraction. They possess the experience to grasp the campaign's objectives, target audience, and more. They strategize and plan campaign inputs, share market insights to refine the approach, depth, and reach, and even forecast potential campaign outcomes.

Book A Meeting: https://meetings.hubspot.com/sonal-arora

Contact us Web: https://www.navigant.in Email us at: [email protected] Cell: +91 9354739641

#Navigant#database#dataprofiling#generation#demandgeneration#marketing#socialmediamarketing#business#seo#emailmarketing#marketingstrategy#leads#sales#socialmedia#contentmarketing#onlinemarketing#marketingtips#branding#leadgenerationstrategy#entrepreneur#smallbusiness#salesfunnel#leadgen#advertising

2 notes

Text

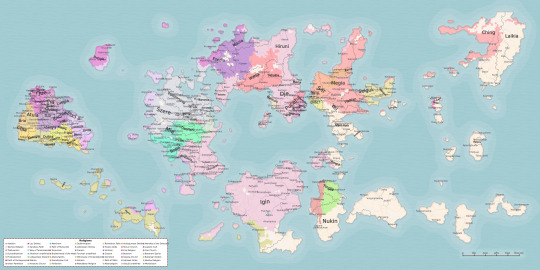

“Karalis” clef / keymap between 16^12 Angora & real-life Earth (Second Edition Preview, 11496 HE eq.)

PREFACE

Derived from the previous posts on the 16^12 constructed universe, soon to append additional content derived from books I own... (Looking especially towards Mark Rosenfelder's works but got a bunch more useful ones that aren't of his hand)

Yet to refine a bunch of specific details to emulate, to avoid / differentiate, and correlating the whole mess with a bunch of additional sources, files and documents to reach a satisfactory conclusion to this article.

Some fundamental key parameters to remember

Reason: Worldbuilding for filling in the lore behind my art and also for manifestation purposes

Motivation: Mental health, wish-fulfillment, feelings of accomplishment, historical exploration, personal improvement at the craft, nuanced political advocacy?;

Genre: Alternate history / parallel universe, adventure/mystery, far future, medium magick / psionics users, adult audience (Millennials + Zillennials + Gen Z);

Scale: Planet (focus points, smallest scale?)

Mood: Noble [Grim to Noble agency scale], Neutral-Bright [Dark to Bright lively comfort scale]

Theme: Coming of Age [cycles of constructive renewal every so often] (storytelling motifs)

Conflict: Political intrigue, ethics of knowledge, adventure exploration, data processing, progress vs preservation, internal + external conflicts;

Workflow: From blob map to detailed flat map to GIS+OSM-enabled map

Name: Try using meaningful, nuanced or at least representative names

Magic: Yes it exists, but high technology and other meta-physical matters take priority and by far.

Timescale: From ~16 billion years after the big bang to the late iron stars age of the universe (mostly focused onto the later time periods)

Civilization 5 & its planetary geography: A classical terrestrial planet (144x72 hexagonal tiles -> 288x144 hexagonal tiles? -> 2880x1440p basemap), Got Lakes? map script (to be expanded upon later), 5 Billion Years, Normal temperate, Normal rainfall, High Sea level, Abundant resources, Globe World Wrap, Tectonic Plates Mountains, 'Tectonic and chains' Extras, Small Continents Landmasses, Evergreen & Crop Ice Age & Full Ice Age, Tilted Axis Crop & Tilted Axis Full & Two Suns, enraged barbarians, random personalities, new random seed, complete kills, randomize resources & goodies, (planet with its associated divine realms heavily intertwined with the living's physical plane?)

Mainline sapient species: Humans (Traditional humans yet with several more sub-species & ancestries, such as Otterfolk, Bearfolk, Mothfolk, Elkfolk, Selkie, Sabertoothfolk, Salukifolk, Hyenafolk, Tigerfolk, Boarfolk, Karibufolk, Glyptodonfolk, [insert up to four additional Cenozoic-inspired sub-species here]…), Automaton (constructs, robots, droids, synthetics…), Izki (butterfly folk), Evandari (rodent folk), Urzo (jellyfish-like molluscoid), Akurites (individualistic sapient anthropods), Ganlarev (sapient fungoid species), DAAR Hive Awareness (Rogue Servitor divided / disparate service grids)

Sideline sapient species: Devils, Hellhounds, Mariliths, Imps, Daemons, Valkyrie, Sphinx-folk, Angels, Cherubs, Thrones, Seraphims, Devas & Devi & Asuras, Fairy, Sprites, Dryads, Nymphs, Skinwalkers…

2D Ethos: [ Transparency - Mystery, Instrument - Agency; ] scales Threeway Ethical "Ancestral" Lineages: [ Harmony - Liberty - Progress; ] scale

Stellaris parameters: 4x Habitable worlds, 3x primitive civilizations, 4500s as contemporary present day, 4800s as Stellaris starting point, 5200 early game start, 5600 mid-game, 6000 late game, 6400 endgame / victory, all crisis types, tweaks to Stellaris' Nemesis system for extremely long-term lore (Neue Pangea Sol-3, Dying Sol-3, Undead Sol-3, Red Dwarves, Black Holes, Iron Stars, Heat Death;)…

FreeCiv parameters: [?]

SimCity 4 parameters: [?]

Life Simulation 'toybox' parameters: [?]

CRPG parameters: [?]

Computer mini-FS: [?]

Immersion and reality shifting feelies: [?]

Atlas parameters: [?]

[...]

CIVILIZATIONS

(12 majors, 32-48 minors, but it is a fairly flexible system as to leave room for many game scenarios and variations)

(Civ_1 to Civ_12) Shoshones (as the eponymous Shoshoni, also somewhat similar to the Western US of A + Cascadia + British Columbia to be frank), Maya (as the Atepec), Morocco (as the Tatari), Celts < Scotland < Gaelic Picts (as the Aberku), Brazil (as the March+Burgund+Hugues cultural co-federated group), Persia (as the Taliyan), Poland (as the Rzhev), Incas (as the Palche), Assyria (as the Syriac), Babylon (as the Ishtar), Polynesia < Samoa (as the Sama),

(Civ_13 to Civ_16) Korea (as the Hwatcha), Sweden (as the Mersuit), Japan ≈ Austria < Portugal (as the Arela), China ≈ Siam < Vietnam (as the Cao),

(Civ_17 to Civ_20) Indonesia < Inuit (as the Eqalen), Carthage (as the Eyn), Mongols < Angola (as the Temu), Netherlands (as the Treano);

(Civ_21 to Civ_36) Hungary (as the Uralic & Caucasus peoples, including Avars & Hungarians) Aremorica (as a different, more inner continental Gaulish Breton [or Turkey's Galatians], flavor of Aberku druidic Celts, from which the Angora names derives from) Sumer (some additional mesopotamian civilization into the mixture) Burgundy (as a releasable Occitan cultural state from Brazil) Lithuania (as the Chunhau cantonese seafarers) Carib (Classical Nahuatl / Nubian civilization of darkest skin cultures, integral part of a major human labor market before it got shutdown) Austria (as a releasable March cultural state from Brazil with some exiled cities) England (as a releasable Hugues cultural state from Brazil) Spain ≈ Castille ≈ Aragon (as the Medran) Nippur ≈ Nibru ≈ Elam (as another, east-ward mesopotamian state) Myceneans < Minoans (as a seafarers aggressive culture) Ethiopia < Kilwa ≈ Oman (as a Ibadi Islam outspot of trade) Venice < Tuscany (as another Treano state) Byzantium ≈ Classical Greece (as the pious religious orthodox Zapata government akin to tsarist Russia dynasty & Vatican Papal States during the late 18th century) Ottomans < Turks (as the Turchian turkic culture group) Hittites (as the Hatris / Lydians culture group)

CULTURES

(Ranges from ~36 to 48 total)

Eyn = Levantine

Ibrad = Hungarian

Zebie = Basque

Tatari = Berber

Cao = Vietnamese

Shoshoni

Turchian = Turkish

Eqalen = Inuit

Tersun = Ruthenian

Temu = Nigerian

Hugues = English

Lueur = Mongolian

March = German

Teotlan = Nahuatl

Hwatcha = Korean

Ishtar = Mesopotamian

Taliyan = Iranian

Palche = Quechua

Aberku = Celtic

Sama = Polynesian

Medran = Castillian

Burgund = French

Bantnani = Karnataka

Syriac = Mesopotamian

Atepec = Mayan

Rzhev = Ruthenian

Matwa = Swahili

Hangzhou = Chinese

Chunhau = Cantonese

Mersuit = Inuit

Treano = Italian

Arela = Portuguese

Hatris = Hittites / Lydians

Zapata = Byzantines / Mycenean Greeks

Nippir = Elam / Far-Eastern Mesopotamia

Irena = Minoan Greeks

STATES

(most likely much more than 96, and not yet decided either)

RELIGIONS

(~24 majors, 48 minors...)

Pohakantenna renamed as Utchwe (Shoshoni pantheon)

Confucianism tradition (and Shinto...)

Al-Asnam (Celtic druidic pantheon)

Ba'hai (monotheistic non-exclusive syncretism)

Arianism (iterated from the defunct Christianity dialect)

Chaldeanism (Mesopotamian pantheon)

Calvinism (derived from the Protestant Reformation's Huguenot Southern French, monotheism)

Tala-e-Fonua (Samoan pantheon)

Hussitism (central slavic dialect of monotheism)

Jainism (communal humility & individualized ki monks culture)

Buddhism tradition (inner way reincarnation & large monasteries)

Judaism

Zoroastrianism

Ibadiyya (Islam)

Shia (Islam)

Canaanism (Carthaginian belief system)

Pesedjet (Numidan Hieroglyphics belief system)

Mwari (Carib religion)

Inti pantheon

Mayan pantheon

Political Ideologies

Harmony (right-wing preservationist / "conservative" party, with very limited Wilsonism involved due to historical failings, so like a mixture of Democrats and Republicans as a Unionist Party)

Progress (think of the Theodore Roosevelt progressives party...)

Liberty (political center party)

Syndicalism (alternate development & continuation of IRL marxism, leninism, maoism, trotskyist "new-left" and the other left-wing doctrines of the socialism / communism types)

Georgism / ( "One Tax" + Ecological movement )

Classical Liberalism (aka open-choice Libertarians with brutal constructivist modular views of the world perhaps?)

Philosophies

[ Yet to be really researched and decided ]

Historical equivalences & differences

Mersuit emulating the history of Sweden.

Shoshoni as something somewhat similar to a developed amerindian old westerns' United States of America...

Widespread appeal of Asetism (Monasteries, humility and introspection, likewise to Jains and Buddhists) & Taizhou (Tala-e-Fonua equivalent) as key major worldly religions

No Woodrow Wilson, progressive major successes in the 1910-1945 equivalent.

More long-term sustainable and successful generational pathway in the 1960s-2000 period, still leading to a slow partial ecological collapse like in the Incatena reality just about around the mid 2045-2050 period with signs of decay arising from the 2020s. So the sapient peoples are more cooperative and empowered with the people that era and won't see as much of the managerial crisis sparks until the mid-2020s.

The global pandemic hit during the early 2000s alongside the dawn of ecological issues coming ahead (giving a slight headstart to fully figure problems coming not that far ahead), just around the time of nanotech synthetic autonomous androids emergence and a handful of alternatively successful technical progressions making them a slight bit ahead of ours on a couple fields. No mainstream autonomous governance AI service grids or really crazy Sci-fi innovations just yet, but a fair share of orphaned developments we did not have continue in this world.

A couple of benevolent worker cooperatives like Pflaumen (DEC+ZuseKG), EBM (IBM+ICL) & Utalics (Symbolics+Commodore+GNU Foundation)... continue well into the 21st century and persist as major computation players in the tech industry, averting the immediate rise of Macroware (Microsoft), Avant (Google) & Maynote (Meta) by the ill-conceived social medium strategy.

[ More to be written... ]

POSTFACE

All may be subject to heavy changes still (but especially everything global map related), so take it with a large pinch of salt, please. Thanks for reading btw and farewell to soon!

3 notes

Text

Boost your marketing efforts with email appending services that help you connect with your target audience effectively. This powerful solution enhances outdated or incomplete databases by adding valid email addresses, increasing campaign reach, and driving better engagement. Discover how smart businesses get a competitive edge through precise, data-driven communication strategies. Stay ahead — embrace smarter outreach today!

0 notes

Text

2025’s Leading Address Validation Software: Features, Reviews & Comparison

Address validation software has become a cornerstone of operational excellence in 2025. From customer onboarding to order fulfillment and direct marketing, it helps businesses avoid costly errors while enriching databases. But with dozens of tools on the market, choosing the right one can be overwhelming.

In this guide, we review and compare the top address validation software of 2025 based on performance, usability, integration, support, and user feedback.

What Is Address Validation Software?

Address validation software ensures that addresses entered into a system exist and are deliverable. It corrects misspellings, formats addresses to postal standards, and, in many cases, appends geolocation data.

Key functions include:

Syntax correction

Postal format standardization

Verification against postal databases

Geocoding and reverse geocoding

Real-time and batch processing
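To make the first three functions above concrete, here is a small Python sketch of syntax cleanup, format standardization, and a lookup against a known-address set. It is only an illustration under stated assumptions: the `SUFFIXES` table is a tiny hand-picked subset of common street-suffix abbreviations, the `known` set stands in for a real postal database, and none of this reflects the internals of the products reviewed below.

```python
import re

# Illustrative subset of street-suffix abbreviations (assumption: not a full postal table).
SUFFIXES = {
    "street": "ST",
    "avenue": "AVE",
    "road": "RD",
    "boulevard": "BLVD",
    "drive": "DR",
}


def standardize_street(line: str) -> str:
    """Drop periods and commas, collapse whitespace, abbreviate a trailing suffix, uppercase."""
    cleaned = re.sub(r"[.,]", "", line)
    words = cleaned.split()
    if not words:
        return ""
    last = words[-1].lower()
    if last in SUFFIXES:
        words[-1] = SUFFIXES[last]
    return " ".join(word.upper() for word in words)


def is_known(line: str, known: set) -> bool:
    """Check the standardized line against a set of already-standardized addresses."""
    return standardize_street(line) in known
```

With this sketch, `standardize_street("123  main street.")` yields `"123 MAIN ST"`, so two differently typed versions of the same street line compare equal before the lookup.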

Top Features in 2025’s Leading Address Validation Software

Real-Time Validation

Autocomplete & Suggestion Engines

Global Postal Database Access

CASS, SERP, PAF Compliance

Data Enrichment & Analytics

Multichannel Support (Web, Mobile, API)

Security (SOC-2, GDPR, HIPAA compliance)

The Best Address Validation Software of 2025 (With Reviews)

Here are the best tools ranked based on feature set, customer reviews, and overall performance:

1. Loqate

Rating: ★★★★★

Strengths: Global data reach, geocoding, real-time validation, ease of integration

Integrations: Shopify, WooCommerce, Salesforce, BigCommerce

Ideal For: Global retailers and logistics

User Review: “Loqate is our go-to for international orders. Never had a failed shipment since we integrated it.”

2. Smarty

Rating: ★★★★☆

Strengths: Fast API, easy to use, affordable pricing, developer-friendly

Integrations: Native APIs, third-party tools via Zapier

Ideal For: SMBs, developers

User Review: “Smarty is blazing fast and easy to plug in. Our form abandonment dropped by 22%.”

3. Melissa

Rating: ★★★★★

Strengths: Data quality services, enrichment, compliance support

Integrations: HubSpot, Microsoft Dynamics, NetSuite

Ideal For: Data-driven teams, marketing departments

User Review: “Melissa helped us clean a 500k-record database. The difference in deliverability was immediate.”

4. PostGrid

Rating: ★★★★☆

Strengths: Print/mail integration, compliance, fast support

Integrations: CRMs, EHRs, and Zapier workflows

Ideal For: Healthcare, finance, law firms

User Review: “We love the HIPAA compliance and ability to automate physical mailings.”

5. AddressFinder

Rating: ★★★★☆

Strengths: Excellent for Australia & NZ, fast & accurate suggestions

Integrations: Shopify, Magento, WooCommerce

Ideal For: Regional eCommerce platforms

User Review: “A must-have for businesses in Australia. Address accuracy is spot-on.”

6. Experian Address Validation

Rating: ★★★★★

Strengths: Enterprise-grade, high data accuracy, trusted brand

Integrations: Enterprise CRMs, ERPs

Ideal For: Fortune 500 and multinational companies

User Review: “No-brainer for enterprise-level address hygiene. Support is world-class.”

SITES WE SUPPORT

Verify Postcards Online – Wix

0 notes

Text

What is USPS Address Validation API?

The USPS Address Validation API is a web service provided by the United States Postal Service to verify and standardize mailing addresses. It ensures that addresses entered into a system are accurate, properly formatted, and deliverable by USPS.

Features of USPS Address Validation API

1. Address Standardization

The API formats addresses according to USPS guidelines, correcting abbreviations and ensuring consistency.

2. Address Verification

It checks whether an address exists in the USPS database and is deliverable.

3. ZIP+4 Code Lookup

The API appends the correct ZIP+4 code to an address, improving mail sorting and delivery efficiency.

4. Residential and Business Address Identification

It differentiates between residential and business addresses, helping businesses optimize delivery strategies.

5. Real-Time Validation

The API can be integrated into websites and applications for real-time address validation during customer checkout.
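Before sending anything to a validation service, it can help to check locally that a ZIP code is at least well formed. The Python sketch below does only that: it accepts five digits with an optional hyphen and four more digits. The `parse_zip` helper is a hypothetical name, it does not call the USPS API, and a well-formed code is not necessarily a deliverable one.

```python
import re

# Five digits, optionally followed by a hyphen and four digits (ZIP or ZIP+4).
ZIP_RE = re.compile(r"(\d{5})(?:-(\d{4}))?")


def parse_zip(value: str):
    """Return (zip5, plus4 or None) for a well-formed ZIP code, else None."""
    match = ZIP_RE.fullmatch(value.strip())
    if match is None:
        return None
    return match.group(1), match.group(2)
```

A form handler could call `parse_zip` on user input and only pass records with a non-`None` result on to the real validation step, which saves a round trip for obviously malformed entries.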

How to Use USPS Address Validation API

1. Obtain API Access

Businesses must register for a USPS Web Tools account and obtain API credentials.

2. Integrate the API

Developers can integrate the API using programming languages like Python, JavaScript, or PHP. The API sends requests to USPS servers and retrieves validated addresses.

3. Process Address Data

Once validated, the API returns a standardized address with ZIP+4 codes and formatting corrections.

Benefits of USPS Address Validation API

Reduces Undeliverable Mail: Ensures that only valid addresses are used.

Saves Time and Money: Eliminates errors that lead to costly reshipments.

Enhances Customer Experience: Improves order fulfillment by preventing address-related delays.

Compliance with USPS Standards: Ensures addresses adhere to USPS formatting rules.

Conclusion

The USPS Address Validation API is a powerful tool for businesses looking to improve address accuracy, reduce undelivered mail, and enhance operational efficiency. By integrating this API, companies can ensure smooth and error-free shipping processes.

SITES WE SUPPORT

Mail Patient Statements – Wix

0 notes

Text

Data Appending Services | Outsource B2B Data Appending

Unlock enhanced business insights with Damco’s data appending services. Elevate your data quality and completeness, ensuring accurate customer information. Seamlessly integrate missing data such as email addresses and phone numbers. Maximize your outreach and engagement. Explore how our services can optimize your data strategy. Know more: https://www.damcogroup.com/data-appending-services

0 notes

Text

Enhance Your Marketing Strategy with Data Appending Services: Tips and Best Practices!

In today's highly competitive business landscape, effective marketing strategies are essential for success. And when it comes to marketing, data is king.

0 notes

Text

This paper gives a brief introduction to Information Technology and Systems and their role in turning corporate strategy into action. Views on the application of Information Technology, and the various areas in which organizations use it in a competitive business environment, are enumerated together with the details of the sources of the schemes. As for the suggestion of recommending Information Technology for developing countries, the answer is indicated as "YES", with a detailed justification appended to the statement. A detailed example is quoted for better appreciation of the Paper. The Paper concludes with a recommendation for implementation of Information Technology. 16 References are listed on a separate page at the end, as prescribed.

Role of Information Technology

Information Technology is for every day and for everyone, more so for an organization doing business. But technology is only a means to achieve a business end through service-centric system landscapes. As the market becomes competitive, organizations need to focus on stronger and more effective communication, by way of transmission of voice, video and data. Timely access to relevant critical data is crucial in today's competitive world. Proper document imaging, capture, indexing, searching, retrieval, archiving and record management are necessary. The system should be robust, simple, reliable, flexible, cost-effective, scalable, interoperable, sharable on a need basis, secure and easy to use. Information generated by IT/IS has to be used for speedy, suitable decisions for cost-effectiveness.

This exercise will help the organization to reach its break-even point earlier through early realization of fixed costs, and even to reduce the marginal cost, increasing the profitability of the organization. The means are standardization, effective budgetary control, early visualization of future needs and threats, risk analysis, guiding the management to address vulnerability of opportunities, and control of IT sources to proactively manage risk and keep the business up and running. The outcomes are optimal solutions and integrity of business-critical operations, reduced storage costs, increased productivity, lower transaction time, reduced business operating costs, reduced document retrieval time, faster and easier access to information across the enterprise, improved workflow efficiency, and access to the database by management for effective decisions.

"Information Technology involves computers, software, and services, but good IT synthesizes these elements with a concentration on how your organization can best meet its goals. Increasingly, the IT department is the hub of any company - and companies expect IT managers to accomplish a variety of tasks with limited resources." Thus, IT must use organizational and managerial skills to run the most effective program possible. (The Power of IT, Jan de Suttter)

"The role of IT will differ depending on their organization's current business context: fighting for survival, successfully competing, or leading its industry by creating a vision for how technology can improve the business to creating clear and appropriate IT governance to building a leaner, more focused IS organization, to deliver on the promise of IT to yield real, measurable, and bankable results." (The New CIO Leader, by Marianne Broadbent and Ellen S. Kitzis)

"IT leveling the playing field for smaller-size businesses: It is precisely because of evolving IT and business process-processing that mid-sized firms from all over the world compete now on a more level playing field. Suddenly, mid-sized and even small businesses have access to the same advantages that were once held exclusively by the larger, vertically integrated firms." (Farmer-owned ethanol and the role of information technology, Anthony Crooks and John Dunn)

With the above views in the background, the areas of utilization of Information Technology by organizations in a competitive business environment are enumerated below, together with the details of the authors of the schemes.

"Systems Analysis and Design and management of production planning and control systems at advanced level. This includes forecasting, aggregate and master planning, capacity planning and management, inventory and replenishment systems, MRP/MRPII, JIT and OPT/TOC, systems design, implementation and design, customized to the complex manufacturing systems. This includes ascertaining Customer expectations, service and orientation, the management of resources and operations, the development, management and exploitation of information systems and their impact upon organizations, the development of appropriate business policies and strategies to meet stakeholder needs within a changing environment, effective qualitative problem solving and decision making skills, the ability to create,


How to Choose the Right Data Quality Tool for Your Organization

In today's data-driven world, organizations rely heavily on accurate, consistent, and reliable data to make informed decisions. However, ensuring the quality of data is a daunting task due to the vast volumes and complexities of modern datasets. To address this challenge, businesses turn to Data Quality Tools—powerful software solutions designed to identify, cleanse, and manage data inconsistencies. Selecting the right tool for your organization is crucial to maintaining data integrity and achieving operational efficiency.

This blog will guide you through choosing the perfect Data Quality Tool, highlighting key factors to consider, features to look for, and steps to evaluate your options.

Understanding the Importance of Data Quality

Before diving into the selection process, it's vital to understand why data quality matters. Poor data quality can lead to:

Inefficient Operations: Erroneous data disrupts workflows, leading to wasted time and resources.

Faulty Decision-Making: Decisions based on incorrect data can result in missed opportunities and financial losses.

Regulatory Compliance Issues: Many industries require adherence to strict data governance standards. Low-quality data can lead to non-compliance and penalties.

Customer Dissatisfaction: Inaccurate data affects customer service and satisfaction, harming your reputation.

Investing in a reliable Data Quality Tool helps mitigate these risks, ensuring data accuracy, completeness, and consistency.

Key Factors to Consider When Choosing a Data Quality Tool

Selecting the right Data Quality Tool involves evaluating various factors to ensure it aligns with your organization's needs. Here are the essential considerations:

Identify Your Data Challenges

Understand the specific data quality issues your organization faces. Common challenges include duplicate records, incomplete data, inconsistent formatting, and outdated information. A thorough analysis will help you choose a tool that addresses your unique requirements.

Scalability

As your organization grows, so does your data. The tool you select should be scalable and capable of handling increased data volumes and complexity without compromising performance.

Integration Capabilities

A robust Data Quality Tool must seamlessly integrate with your existing IT infrastructure, including databases, data warehouses, and other software applications. Compatibility ensures smooth workflows and efficient data processing.

Ease of Use

The tool should have an intuitive interface, making it easy for your team to adopt and use effectively. Complex tools with steep learning curves can hinder productivity.

Automation Features

Look for automation capabilities such as data cleansing, deduplication, and validation. Automation saves time and reduces human error, enhancing overall data quality.

Cost and ROI

Evaluate the total cost of ownership, including licensing, implementation, and maintenance. Ensure the tool delivers a measurable return on investment (ROI) through improved data accuracy and operational efficiency.

Vendor Support and Updates

Choose a tool from a vendor with a solid track record of customer support and regular updates. This ensures the tool remains up to date with evolving technology and regulatory requirements.

Must-Have Features in a Data Quality Tool

When evaluating options, ensure the Data Quality Tool includes the following key features:

Data Profiling

Data profiling analyses datasets to identify patterns, anomalies, and inconsistencies. This feature is essential for understanding the current state of your data and pinpointing problem areas.
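As a rough illustration of what a profiling pass reports, the sketch below counts missing and distinct values per field using only the Python standard library. The `profile` function, the field names, and the sample records are hypothetical and are not taken from any particular Data Quality Tool.

```python
from collections import Counter

def profile(records, fields):
    """Return per-field counts of missing and distinct values (illustrative only)."""
    report = {}
    for field in fields:
        # Treat None, empty strings, and whitespace-only strings as missing.
        values = [(r.get(field) or "").strip() for r in records]
        present = [v for v in values if v]
        report[field] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "most_common": Counter(present).most_common(1),
        }
    return report

# Hypothetical sample data with a missing email and inconsistent country codes.
records = [
    {"name": "Ana Ruiz", "email": "ana@example.com", "country": "ES"},
    {"name": "Ben Cole", "email": "", "country": "US"},
    {"name": "Ana Ruiz", "email": "ana@example.com", "country": "es"},
]
report = profile(records, ["name", "email", "country"])
```

Here the `country` field shows three distinct values for what are really two countries, which is the kind of inconsistency profiling is meant to surface before cleansing begins.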

Data Cleansing

Data cleansing automates the correction of errors such as typos, duplicate records, and invalid values, ensuring your datasets are accurate and reliable.
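A minimal sketch of a cleansing step, assuming records are plain dictionaries keyed by an `email` field: it normalizes the email, drops values that fail a deliberately loose format check, and removes duplicates. The `cleanse` function and the regular expression are illustrative assumptions, and real tools apply far more thorough validation.

```python
import re

# Deliberately loose format check (an assumption for this sketch, not a full validator).
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def cleanse(records):
    """Normalize emails, drop invalid ones, and remove duplicate records."""
    seen = set()
    cleaned = []
    for record in records:
        email = (record.get("email") or "").strip().lower()
        if not EMAIL_RE.match(email):
            continue  # invalid value: skipped here; a real tool might quarantine it
        if email in seen:
            continue  # duplicate of an earlier record
        seen.add(email)
        cleaned.append({**record, "email": email})
    return cleaned

# Hypothetical input: one duplicate (after normalization) and one malformed address.
raw = [
    {"name": "Ana Ruiz", "email": " Ana@Example.com "},
    {"name": "Ana Ruiz", "email": "ana@example.com"},
    {"name": "Ben Cole", "email": "ben[at]example.com"},
]
cleaned = cleanse(raw)
```

Normalizing before comparing matters: without the strip and lowercase step, the first two records would not be recognized as duplicates.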

Data Enrichment

Some tools enhance data by appending missing information from external sources, improving its completeness and relevance.

Real-Time Processing

Real-time data processing is a critical feature for organizations handling dynamic datasets. It ensures that data is validated and cleansed as it enters the system.

Customizable Rules and Workflows

Every organization has unique data governance policies. A good Data Quality Tool allows you to define and implement custom rules and workflows tailored to your needs.
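One way to picture custom rules is as a set of named checks applied to each record. The sketch below is an assumption about how such rules could be expressed in Python; the rule names, the `violations` function, and the two-letter uppercase country convention are hypothetical choices, not a feature of any specific product.

```python
def has_email(record):
    """Rule: the record must carry a non-empty email."""
    return bool((record.get("email") or "").strip())

def country_is_two_uppercase_letters(record):
    """Rule: country must be a two-letter uppercase code (a hypothetical house convention)."""
    country = record.get("country") or ""
    return len(country) == 2 and country.isalpha() and country.isupper()

# Each organization would define its own set of named rules.
RULES = {
    "email_present": has_email,
    "country_code_format": country_is_two_uppercase_letters,
}

def violations(record, rules=RULES):
    """Return the names of all rules the record fails, in rule order."""
    return [name for name, check in rules.items() if not check(record)]

bad = violations({"email": "", "country": "es"})
good = violations({"email": "ana@example.com", "country": "ES"})
```

Keeping rules as data rather than hard-coded logic makes it straightforward to add, remove, or report on individual rules as governance policies change.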

Reporting and Visualization

Comprehensive reporting and visualization tools provide insights into data quality trends and metrics, enabling informed decision-making and progress tracking.

Compliance Features

To avoid legal complications, ensure the tool supports compliance with the regulations and standards that apply to your industry, such as GDPR, HIPAA, or PCI DSS.

Steps to Evaluate and Choose the Right Data Quality Tool

Define Your Objectives

Set clear goals for what you want to achieve with a Data Quality Tool. These can range from improving customer data accuracy to ensuring compliance with data governance standards.

Create a Checklist of Requirements

Based on your objectives, create a checklist of essential features and capabilities. Use this as a reference when comparing tools.

Research and Shortlist Tools

Research available tools, read reviews, and seek recommendations from industry peers. Then, shortlist tools that meet your requirements and budget.

Request Demos and Trials

Most vendors offer free demos or trial periods. Use this opportunity to explore the tool's features, usability, and compatibility with your systems.

Evaluate Vendor Reputation

Check the vendor's reputation in the market. Look for case studies, customer testimonials, and third-party reviews to gauge their reliability and support quality.

Involve Stakeholders

Include key stakeholders, such as IT teams and data managers, in the evaluation process. Their insights are invaluable in assessing the tool's practicality and effectiveness.

Make an Informed Decision

After a thorough evaluation, choose the Data Quality Tool that best meets your organization's needs, budget, and long-term goals.

Benefits of Implementing the Right Data Quality Tool

Investing in the right Data Quality Tool offers numerous benefits:

Improved Decision-Making: With accurate and reliable data, decision-makers can confidently strategize and plan.

Enhanced Operational Efficiency: Automation reduces manual effort, allowing your team to focus on strategic initiatives.

Regulatory Compliance: Robust tools ensure your data governance practices align with industry regulations.

Cost Savings: High-quality data minimizes financial losses caused by errors or inefficiencies.

Customer Satisfaction: Accurate customer data improves personalization and service quality, boosting satisfaction and loyalty.

Top Trends in Data Quality Tools

The field of Data Quality Tools is constantly evolving. Here are some emerging trends to watch:

AI and Machine Learning Integration

Advanced tools leverage AI and machine learning to predict and resolve data quality issues proactively.

Cloud-Based Solutions

Cloud-based Data Quality Tools offer scalability, flexibility, and cost-efficiency, making them popular among businesses of all sizes.

Data Quality as a Service (DQaaS)

Many vendors now offer DQaaS, providing data quality management as a subscription-based service.

Focus on Real-Time Data Quality

As businesses rely on real-time analytics, tools that ensure streaming data quality are gaining traction.

Conclusion

Choosing the right Data Quality Tool is a strategic decision that impacts your organization's success. By understanding your data challenges, evaluating essential features, and involving key stakeholders, you can select a tool that ensures data accuracy, compliance, and efficiency. Investing in a reliable tool pays off by enabling better decision-making, enhancing operations, and improving customer satisfaction.

As data's importance continues to grow, adopting a comprehensive Data Quality Tool is no longer optional—it's necessary for organizations striving to stay competitive in a data-driven landscape.


The Ultimate Guide to Data Enrichment

In today’s data-driven world, businesses rely heavily on accurate, complete, and actionable data to make informed decisions. However, raw data often lacks depth and context, limiting its effectiveness. This is where data enrichment comes into play. Data enrichment enhances existing datasets by supplementing them with additional, relevant information, leading to more valuable insights and improved decision-making.

In this comprehensive guide, we will explore the concept of data enrichment, its importance, types, benefits, challenges, and best practices to implement it successfully.

What is Data Enrichment?

Data enrichment is the process of enhancing, refining, and improving raw data by integrating additional external or internal data sources. It helps organizations obtain a more detailed and accurate picture of their customers, prospects, and business operations.

For example, a company may have a basic customer database containing only names and email addresses. By enriching this data with demographic information, purchase history, or behavioral insights, businesses can create more personalized and targeted marketing strategies.
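The example above can be sketched as a lookup-and-merge step. The code below assumes a hypothetical in-memory `reference` dictionary keyed by email; in practice the additional data would come from an internal system or an external provider. Existing values are never overwritten, only gaps are filled.

```python
def enrich(records, reference, key="email"):
    """Fill missing fields in each record from a reference source matched on `key`."""
    enriched = []
    for record in records:
        lookup = (record.get(key) or "").strip().lower()
        extra = reference.get(lookup, {})
        merged = dict(record)
        for field, value in extra.items():
            if not merged.get(field):  # only fill gaps, keep existing values
                merged[field] = value
        enriched.append(merged)
    return enriched

# Hypothetical customer records holding only names and emails (plus one known city).
customers = [
    {"name": "Ana Ruiz", "email": "ana@example.com"},
    {"name": "Ben Cole", "email": "ben@example.com", "city": "Austin"},
]
# Hypothetical reference data standing in for a demographic source.
reference = {
    "ana@example.com": {"city": "Madrid", "age_band": "25-34"},
    "ben@example.com": {"city": "Dallas", "age_band": "35-44"},
}
result = enrich(customers, reference)
```

Whether the reference source or the existing record should win on conflict is a policy decision; this sketch keeps the existing value, on the assumption that first-party data is more trustworthy.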

Types of Data Enrichment

There are various types of data enrichment, depending on the kind of information being enhanced. Some of the most common types include:

1. Demographic Enrichment

Adding details such as age, gender, income level, education, and occupation to customer records can help businesses tailor their offerings to specific audience segments.

2. Geographic Enrichment

This involves appending location-based data, such as addresses, zip codes, or GPS coordinates, to customer profiles. Businesses can use this information for targeted advertising, regional promotions, or logistics optimization.

3. Behavioral Enrichment

By integrating browsing history, social media activity, and purchase patterns, companies can gain insights into customer preferences and buying habits, enabling more personalized engagement.

4. Firmographic Enrichment

For B2B companies, enriching data with firmographic details like company size, industry, revenue, and employee count can improve lead segmentation and sales targeting.

5. Contact Enrichment

This involves updating and verifying customer contact details, such as phone numbers, email addresses, and social media profiles, ensuring more effective communication.

6. Financial Enrichment

Financial institutions and fintech companies enrich data with credit scores, transaction histories, and risk assessments to make better lending and investment decisions.

Why is Data Enrichment Important?

Data enrichment is essential for businesses across all industries for the following reasons:

Improved Customer Insights – Enriched data helps businesses understand their customers better, leading to more effective marketing and sales strategies.

Enhanced Personalization – Personalized communication based on enriched data improves customer engagement and loyalty.

Increased Data Accuracy – Keeping data up-to-date reduces errors and ensures reliable decision-making.

Better Lead Scoring – Businesses can prioritize leads based on enriched data, improving conversion rates.

Stronger Fraud Detection – Enriched data helps detect suspicious activities and reduce fraudulent transactions.

Optimized Business Operations – Companies can use enriched data for demand forecasting, inventory management, and supply chain optimization.

Challenges in Data Enrichment

Despite its advantages, data enrichment comes with its challenges:

Data Quality Issues – If external sources provide incorrect or outdated data, it can lead to inaccuracies.

Privacy and Compliance – Ensuring compliance with regulations like GDPR and CCPA is critical when handling enriched data.

Integration Complexities – Merging enriched data with existing systems can be technically challenging.

Cost and Resources – High-quality data enrichment services often require significant investment.

Data Overload – Too much data can make it difficult to extract actionable insights and may lead to analysis paralysis.

Best Practices for Effective Data Enrichment

To maximize the benefits of data enrichment, businesses should follow these best practices:

Define Clear Objectives – Understand why you need data enrichment and what insights you hope to gain.

Ensure Data Quality – Use reliable sources and implement regular data validation checks.

Maintain Compliance – Always adhere to data protection regulations to avoid legal risks.

Automate the Process – Utilize AI and machine learning to streamline data enrichment.

Integrate Seamlessly – Ensure enriched data is effectively integrated into your CRM, marketing automation, and analytics tools.

Monitor and Update Data Regularly – Keep your data fresh by continuously updating and enriching it.

Data Enrichment Tools and Services

Several tools and platforms can help businesses enrich their data efficiently. Some popular ones include:

Clearbit – Provides real-time enrichment for B2B businesses.

ZoomInfo – A powerful tool for firmographic data enrichment.

FullContact – Specializes in contact and social media data enrichment.

Experian Data Quality – Ensures data accuracy and validation.

Google BigQuery – Enables large-scale data enrichment and analytics.

Real-World Applications of Data Enrichment

Marketing and Sales

Companies enrich data to create targeted marketing campaigns, segment audiences, and personalize customer interactions.

E-commerce and Retail

Retailers use data enrichment to recommend products based on purchase history and browsing behavior.

Finance and Banking

Financial institutions enrich data for risk assessment, credit scoring, and fraud detection.

Healthcare

Healthcare providers use data enrichment to enhance patient records and improve treatment recommendations.

The Future of Data Enrichment

With advancements in AI, machine learning, and big data analytics, data enrichment is becoming more automated and intelligent. Predictive analytics, real-time data processing, and advanced data visualization will further enhance its effectiveness. Businesses that invest in robust data enrichment strategies will gain a competitive edge in understanding their customers and making informed decisions.

Conclusion

Data enrichment is a powerful process that transforms raw data into a valuable asset for businesses. By integrating additional information, companies can gain deeper insights, enhance customer experiences, and optimize operations. Despite challenges, following best practices and leveraging the right tools can ensure a successful data enrichment strategy.

As businesses continue to generate vast amounts of data, enriching that data will remain essential for staying ahead in today’s competitive market. Investing in data enrichment today can lead to more intelligent decision-making and better business outcomes in the future.


Target Decision Maker (TDM) Database Services

Does your marketing team require a defined target audience?

The Target Decision Maker (TDM) Database Services at Navigant are delivered by a separate, dedicated team of professionals who specialize in data sourcing, organizational data profiling, data cleansing, data verification, data validation, data appending, and related tasks.

The back-end team members are not just list pullers. They have the experience to understand the objective of the campaign and its target audience, and they use that understanding to plan the inputs for the campaign, share market insights on approach, depth, and reach, and predict the probable campaign results.

Web: https://www.navigant.in Email us at: [email protected] Cell: +91 9354739641

#Navigant#database#dataprofiling#generation#demandgeneration#marketing#socialmediamarketing#business#seo#emailmarketing#marketingstrategy#leads#sales#socialmedia#contentmarketing#onlinemarketing#marketingtips#branding#leadgenerationstrategy#entrepreneur#smallbusiness#salesfunnel#leadgen#advertising
