#azure ai and ml services


Everything you wanted to know about Azure OpenAI Pricing

Azure OpenAI brings OpenAI's models to the Azure platform, giving businesses access to advanced AI capabilities such as natural language processing and image recognition. With these capabilities, businesses can automate tasks, improve operations, and work more efficiently.

In this blog, we provide detailed insights into Azure OpenAI pricing, helping you understand the costs associated with each service. Our expert guidance ensures you can navigate these options effectively, optimizing your AI investment.
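As a rough illustration of how usage-based pricing adds up, the sketch below estimates a monthly bill from token counts. It assumes billing per 1,000 tokens with separate input and output rates. The rates and the function name `estimate_monthly_cost` are placeholders for illustration, not actual Azure OpenAI prices, so check the official pricing page for current figures.

```python
def estimate_monthly_cost(requests_per_day, input_tokens, output_tokens,
                          input_rate_per_1k, output_rate_per_1k, days=30):
    """Estimate monthly spend for a token-billed model.

    All rates are hypothetical placeholders (currency units per 1,000 tokens).
    """
    per_request = ((input_tokens / 1000) * input_rate_per_1k
                   + (output_tokens / 1000) * output_rate_per_1k)
    return per_request * requests_per_day * days


if __name__ == "__main__":
    # Placeholder rates chosen only to make the arithmetic easy to follow.
    cost = estimate_monthly_cost(
        requests_per_day=1000, input_tokens=500, output_tokens=200,
        input_rate_per_1k=0.01, output_rate_per_1k=0.03)
    print(f"Estimated monthly cost: {cost:.2f}")
```

Varying the request volume or the average prompt length in a sketch like this gives a quick feel for which factor dominates the bill.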

Feel free to reach out if you have questions or need clarification on using Azure OpenAI services, including their use cases. Contact us for detailed pricing information and personalized support.

📞 Phone: +1(702) 780-7900

✉️ Email: [email protected]


Artificial Intelligence & Machine Learning Solutions | AI/ML Services

As we step into a new era of AI/ML, businesses can unlock unprecedented advantages, leveraging the power of data-driven insights, automated processes, intelligent decision-making, and transformative innovation to gain a competitive edge and drive exponential growth.

#Artificial Intellligence & Machine Learning Solutions#AI/ML Services#cloud based quantum machine learning services#cloud machine learning services#google machine learning services#ai and ml development company#aws nlp services#nlp services#azure nlp services


AI Agent Development: How to Create Intelligent Virtual Assistants for Business Success

In today's digital landscape, businesses are increasingly turning to AI-powered virtual assistants to streamline operations, enhance customer service, and boost productivity. AI agent development is at the forefront of this transformation, enabling companies to create intelligent, responsive, and highly efficient virtual assistants. In this blog, we will explore how to develop AI agents and leverage them for business success.

Understanding AI Agents and Virtual Assistants

AI agents, or intelligent virtual assistants, are software programs that use artificial intelligence, machine learning, and natural language processing (NLP) to interact with users, automate tasks, and make decisions. These agents can be deployed across various platforms, including websites, mobile apps, and messaging applications, to improve customer engagement and operational efficiency.

Key Features of AI Agents

Natural Language Processing (NLP): Enables the assistant to understand and process human language.

Machine Learning (ML): Allows the assistant to improve over time based on user interactions.

Conversational AI: Facilitates human-like interactions.

Task Automation: Handles repetitive tasks like answering FAQs, scheduling appointments, and processing orders.

Integration Capabilities: Connects with CRM, ERP, and other business tools for seamless operations.

Steps to Develop an AI Virtual Assistant

1. Define Business Objectives

Before developing an AI agent, it is crucial to identify the business goals it will serve. Whether it's improving customer support, automating sales inquiries, or handling HR tasks, a well-defined purpose ensures the assistant aligns with organizational needs.

2. Choose the Right AI Technologies

Selecting the right technology stack is essential for building a powerful AI agent. Key technologies include:

NLP models and frameworks: OpenAI's GPT models, Google's Dialogflow, or Rasa.

Machine Learning Platforms: TensorFlow, PyTorch, or Scikit-learn.

Speech Recognition: Amazon Lex, IBM Watson, or Microsoft Azure Speech.

Cloud Services: AWS, Google Cloud, or Microsoft Azure.

3. Design the Conversation Flow

A well-structured conversation flow is crucial for user experience. Define intents (what the user wants) and responses to ensure the AI assistant provides accurate and helpful information. Tools like chatbot builders or decision trees help streamline this process.
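To make the idea of intents and responses concrete, here is a minimal sketch of a keyword-based intent matcher. The intent names, keywords, and replies are invented for illustration, and real assistants typically use a trained NLU model rather than keyword overlap.

```python
import re

# Hypothetical intents: each maps trigger keywords to a canned response.
INTENTS = {
    "order_status": {
        "keywords": {"order", "tracking", "shipped", "delivery"},
        "response": "I can help with that. What is your order number?",
    },
    "book_appointment": {
        "keywords": {"appointment", "schedule", "book", "reschedule"},
        "response": "Sure, which day works best for you?",
    },
}
FALLBACK = "Sorry, I did not understand. Could you rephrase that?"


def detect_intent(message):
    """Return the intent whose keywords overlap most with the message, or None."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    best_intent, best_score = None, 0
    for name, spec in INTENTS.items():
        score = len(words & spec["keywords"])
        if score > best_score:
            best_intent, best_score = name, score
    return best_intent


def respond(message):
    """Map a user message to the matching intent's response, or a fallback."""
    intent = detect_intent(message)
    return INTENTS[intent]["response"] if intent else FALLBACK
```

The explicit fallback matters as much as the intents themselves: it is what the user sees whenever the flow has no answer.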

4. Train the AI Model

Training an AI assistant involves feeding it with relevant datasets to improve accuracy. This may include:

Supervised Learning: Using labeled datasets for training.

Reinforcement Learning: Allowing the assistant to learn from interactions.

Continuous Learning: Updating models based on user feedback and new data.
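As a small illustration of supervised learning on labeled data, the sketch below trains a multinomial naive Bayes intent classifier using only the Python standard library. The training utterances and labels are made up, and naive Bayes is just one simple choice of model, not a recommendation for production assistants.

```python
import math
from collections import Counter, defaultdict


def train(examples):
    """Fit a multinomial naive Bayes model from (text, label) pairs."""
    word_counts = defaultdict(Counter)   # label -> word frequencies
    label_counts = Counter()             # label -> number of examples
    vocab = set()
    for text, label in examples:
        tokens = text.lower().split()
        word_counts[label].update(tokens)
        label_counts[label] += 1
        vocab.update(tokens)
    return word_counts, label_counts, vocab


def predict(model, text):
    """Return the label with the highest log-probability for the text."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in label_counts.items():
        score = math.log(n / total)                       # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for token in text.lower().split():
            # Laplace smoothing so unseen words do not zero out the score.
            score += math.log((word_counts[label][token] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


TRAINING_DATA = [  # made-up labeled utterances
    ("where is my order", "order_status"),
    ("track my package", "order_status"),
    ("has my order shipped", "order_status"),
    ("book an appointment", "booking"),
    ("schedule a meeting for tomorrow", "booking"),
    ("i want to book a slot", "booking"),
]
model = train(TRAINING_DATA)
```

Continuous learning, in this toy setting, would amount to appending newly labeled utterances to the training data and calling `train` again.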

5. Test and Optimize

Before deployment, rigorous testing is essential to refine the AI assistant's performance. Conduct:

User Testing: To evaluate usability and responsiveness.

A/B Testing: To compare different versions for effectiveness.

Performance Analysis: To measure speed, accuracy, and reliability.
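For the A/B testing step, one common way to check whether two assistant versions really differ is a two-proportion z-test on a success metric such as resolved conversations. The sketch below is a minimal version using only the standard library. The choice of metric and any numbers you pass in are assumptions for illustration.

```python
import math


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    # Pooled rate under the null hypothesis that both versions perform equally.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: resolved conversations out of total conversations.
    z, p = two_proportion_z_test(420, 1000, 465, 1000)
    print(f"z = {z:.3f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be noise, but it says nothing about whether the difference is large enough to matter for the business.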

6. Deploy and Monitor

Once the AI assistant is live, continuous monitoring and optimization are necessary to enhance user experience. Use analytics to track interactions, identify issues, and implement improvements over time.
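A simple starting point for monitoring is to summarize interaction logs into a few numbers. The sketch below assumes a hypothetical log format in which each record has an `intent` field that is `None` when the assistant fell back to a default reply. The field name and the choice of metrics are illustrative.

```python
from collections import Counter


def summarize_interactions(log):
    """Compute the fallback rate and most frequent intents from interaction records."""
    total = len(log)
    if total == 0:
        return {"total": 0, "fallback_rate": 0.0, "top_intents": []}
    fallbacks = sum(1 for rec in log if rec["intent"] is None)
    intents = Counter(rec["intent"] for rec in log if rec["intent"] is not None)
    return {
        "total": total,
        "fallback_rate": fallbacks / total,   # share of messages not understood
        "top_intents": intents.most_common(3),
    }
```

A rising fallback rate is often the first sign that users are asking for something the assistant was never designed to handle.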

Benefits of AI Virtual Assistants for Businesses

1. Enhanced Customer Service

AI-powered virtual assistants provide 24/7 support, instantly responding to customer queries and reducing response times.

2. Increased Efficiency

By automating repetitive tasks, businesses can save time and resources, allowing employees to focus on higher-value tasks.

3. Cost Savings

AI assistants reduce the need for large customer support teams, leading to significant cost reductions.

4. Scalability

Unlike human agents, AI assistants can handle multiple conversations simultaneously, making them highly scalable solutions.

5. Data-Driven Insights

AI assistants gather valuable data on customer behavior and preferences, enabling businesses to make informed decisions.

Future Trends in AI Agent Development

1. Hyper-Personalization

AI assistants will leverage deep learning to offer more personalized interactions based on user history and preferences.

2. Voice and Multimodal AI

The integration of voice recognition and visual processing will make AI assistants more interactive and intuitive.

3. Emotional AI

Advancements in AI will enable virtual assistants to detect and respond to human emotions for more empathetic interactions.

4. Autonomous AI Agents

Future AI agents will not only respond to queries but also proactively assist users by predicting their needs and taking independent actions.

Conclusion

AI agent development is transforming the way businesses interact with customers and streamline operations. By leveraging cutting-edge AI technologies, companies can create intelligent virtual assistants that enhance efficiency, reduce costs, and drive business success. As AI continues to evolve, embracing AI-powered assistants will be essential for staying competitive in the digital era.


Exploring DeepSeek and the Best AI Certifications to Boost Your Career

Understanding DeepSeek: A Rising AI Powerhouse

DeepSeek is an emerging player in the artificial intelligence (AI) landscape, specializing in large language models (LLMs) and cutting-edge AI research. As a significant competitor to OpenAI, Google DeepMind, and Anthropic, DeepSeek is pushing the boundaries of AI by developing powerful models tailored for natural language processing, generative AI, and real-world business applications.

With the AI revolution reshaping industries, professionals and students alike must stay ahead by acquiring recognized certifications that validate their skills and knowledge in AI, machine learning, and data science.

Why AI Certifications Matter

AI certifications offer several advantages, such as:

Enhanced Career Opportunities: Certifications validate your expertise and make you more attractive to employers.

Skill Development: Structured courses ensure you gain hands-on experience with AI tools and frameworks.

Higher Salary Potential: AI professionals with recognized certifications often command higher salaries than non-certified peers.

Networking Opportunities: Many AI certification programs connect you with industry experts and like-minded professionals.

Top AI Certifications to Consider

If you are looking to break into AI or upskill, consider the following AI certifications:

1. AICerts – AI Certification Authority

AICerts is a recognized certification body specializing in AI, machine learning, and data science.

It offers industry-recognized credentials that validate your AI proficiency.

Suitable for both beginners and advanced professionals.

2. Google Professional Machine Learning Engineer

Offered by Google Cloud, this certification demonstrates expertise in designing, building, and productionizing machine learning models.

Best for those who work with TensorFlow and Google Cloud AI tools.

3. IBM AI Engineering Professional Certificate

Covers deep learning, machine learning, and AI concepts.

Hands-on projects with TensorFlow, PyTorch, and SciKit-Learn.

4. Microsoft Certified: Azure AI Engineer Associate

Designed for professionals using Azure AI services to develop AI solutions.

Covers cognitive services, machine learning models, and NLP applications.

5. DeepLearning.AI TensorFlow Developer Certificate

Best for those looking to specialize in TensorFlow-based AI development.

Ideal for deep learning practitioners.

6. AWS Certified Machine Learning – Specialty

Focuses on AI and ML applications in AWS environments.

Includes model tuning, data engineering, and deep learning concepts.

7. MIT Professional Certificate in Machine Learning & Artificial Intelligence

A rigorous program by MIT covering AI fundamentals, neural networks, and deep learning.

Ideal for professionals aiming for academic and research-based AI careers.

Choosing the Right AI Certification

Selecting the right certification depends on your career goals, experience level, and preferred AI ecosystem (Google Cloud, AWS, or Azure). If you are a beginner, starting with AICerts, IBM, or DeepLearning.AI is recommended. For professionals looking for specialization, cloud-based AI certifications like Google, AWS, or Microsoft are ideal.

With AI shaping the future, staying certified and skilled will give you a competitive edge in the job market. Invest in your learning today and take your AI career to the next level.


The Future of Jobs in IT: Which Skills You Should Learn.

As technology reshapes industries, the demand for IT professionals keeps evolving. Automation, artificial intelligence, and cloud computing are increasingly integrated into core business operations, so IT jobs will soon be less about coding alone and more about mastering new technologies and developing versatile skills. Here, we cover the fields set to shape the IT landscape and how you can prepare for them.

1. Artificial Intelligence (AI) and Machine Learning (ML):

AI and ML are revolutionizing industries by enabling machines to learn from data, automate processes, and predict outcomes. Many future jobs will center on these fields, with roles such as AI engineer, data scientist, and automation specialist.

2. Cloud Computing:

As more operations move online, cloud architects, developers, and security experts are in high demand. Skills in platforms such as AWS, Microsoft Azure, and Google Cloud are essential for anyone who wants to work on cloud infrastructure and services.

3. Cybersecurity:

As dependence on digital systems grows, so does the need for cybersecurity. Skills in cybersecurity, ethical hacking, and network security are needed to protect data and systems from constant threats.

4. Data Science and Analytics:

Data is often called the new oil. Organisations therefore need professionals who can analyze very large datasets and draw actionable insights from them. Skills in data science, data engineering, and advanced analytics tools will help you thrive across industries in the near future.

5. DevOps and Automation:

DevOps engineers ensure that continuous integration and deployment run as smoothly and automatically as possible. Knowledge of both development and business operations will help you find your footing in this area.

Conclusion

IT job prospects rely heavily on AI, cloud computing, cybersecurity, and automation. IT professionals must therefore keep innovating and updating their skills to stay competitive. Whether you are an expert with years of experience or a newcomer, focusing on these in-demand skills will help you succeed in the evolving IT landscape.


How can you optimize the performance of machine learning models in the cloud?

Optimizing machine learning models in the cloud involves several strategies to enhance performance and efficiency. Here’s a detailed approach:

Choose the Right Cloud Services:

Managed ML Services:

Use managed services like Amazon SageMaker, Google Cloud Vertex AI (the successor to AI Platform), or Azure Machine Learning, which offer built-in tools for training, tuning, and deploying models.

Auto-scaling:

Enable auto-scaling features to adjust resources based on demand, which helps manage costs and performance.
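To show the kind of decision an autoscaler makes, here is a minimal sketch of a proportional scaling rule: scale the replica count so that the observed per-replica metric moves toward a target. The function name, parameters, and limits are hypothetical, and the exact behavior of any particular cloud provider's autoscaler is not modeled here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional rule: scale so the per-replica metric approaches the target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to configured bounds to cap both cost and minimum availability.
    return max(min_replicas, min(max_replicas, raw))
```

For example, with 4 replicas at 90% average CPU and a 60% target, the rule asks for 6 replicas. The upper bound is what keeps a traffic spike from turning into an unbounded bill.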

Optimize Data Handling:

Data Storage:

Use scalable cloud storage solutions like Amazon S3, Google Cloud Storage, or Azure Blob Storage for storing large datasets efficiently.

Data Pipeline:

Implement efficient data pipelines with tools like Apache Kafka or AWS Glue to manage and process large volumes of data.

Select Appropriate Computational Resources:

Instance Types:

Choose the right instance types based on your model’s requirements. For example, use GPU or TPU instances for deep learning tasks to accelerate training.

Spot Instances:

Utilize spot instances or preemptible VMs to reduce costs for non-time-sensitive tasks.
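Whether spot capacity actually saves money depends on how much work is redone after interruptions. The sketch below compares the two options under stated assumptions: the hourly rates are placeholders, and `interruption_overhead` is a hypothetical fraction of extra runtime caused by restarts from the last checkpoint.

```python
def training_cost(hours, hourly_rate, interruption_overhead=0.0):
    """Cost of a job, inflating runtime by the fraction of work redone after interruptions."""
    return hours * (1 + interruption_overhead) * hourly_rate


def spot_savings(hours, on_demand_rate, spot_rate, interruption_overhead):
    """Savings from running on spot capacity instead of on-demand (negative if spot costs more)."""
    on_demand = training_cost(hours, on_demand_rate)
    spot = training_cost(hours, spot_rate, interruption_overhead)
    return on_demand - spot


if __name__ == "__main__":
    # Placeholder rates: a 10-hour job with 20% of the work redone on spot.
    print(spot_savings(hours=10, on_demand_rate=3.0, spot_rate=1.0,
                       interruption_overhead=0.2))
```

Frequent checkpointing keeps the overhead term small, which is why it pairs naturally with interruptible instances.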

Optimize Model Training:

Hyperparameter Tuning:

Use cloud-based hyperparameter tuning services to automate the search for optimal model parameters. Services like Google Cloud AI Platform’s HyperTune or AWS SageMaker’s Automatic Model Tuning can help.
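Managed tuning services automate a search loop much like the one sketched below. This example uses plain random search over two hyperparameters, with a toy `validation_loss` function standing in for a real training run. The search ranges, function names, and objective are assumptions for illustration, and managed services often use more sophisticated strategies such as Bayesian optimization.

```python
import math
import random


def validation_loss(learning_rate, batch_size):
    """Toy stand-in for a training run; lowest near learning_rate=0.01, batch_size=64."""
    return (math.log10(learning_rate) + 2) ** 2 + ((batch_size - 64) / 64) ** 2


def random_search(objective, n_trials=50, seed=0):
    """Sample hyperparameters at random and keep the best-scoring configuration."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-4, -1),   # log-uniform sample
            "batch_size": rng.choice([16, 32, 64, 128, 256]),
        }
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss
```

Sampling the learning rate on a log scale is a deliberate choice: useful values often span several orders of magnitude, so a uniform sample would waste most trials on the largest values.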

Distributed Training:

Distribute model training across multiple instances or nodes to speed up the process. Frameworks like TensorFlow and PyTorch support distributed training and can take advantage of cloud resources.
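The core idea behind data-parallel training can be shown without any framework: each worker computes a gradient on its own shard of the data, the gradients are averaged, and every worker applies the same update. The sketch below simulates this sequentially for a one-parameter linear model on synthetic data. It is a conceptual illustration, not how TensorFlow or PyTorch implement distributed training internally.

```python
def shard_gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def data_parallel_step(w, shards, lr):
    """One synchronous step: per-shard gradients are computed, then averaged."""
    grads = [shard_gradient(w, shard) for shard in shards]  # would run in parallel
    avg_grad = sum(grads) / len(grads)                      # the all-reduce step
    return w - lr * avg_grad


data = [(x, 3.0 * x) for x in range(1, 9)]   # synthetic data with true w = 3
shards = [data[0::2], data[1::2]]            # two equally sized "workers"
w = 0.0
for _ in range(200):
    w = data_parallel_step(w, shards, lr=0.01)
```

With equally sized shards, the averaged gradient equals the gradient over the full batch, so the result matches single-machine training while the per-step work is split across workers.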

Monitoring and Logging:

Monitoring Tools:

Implement monitoring tools to track performance metrics and resource usage. AWS CloudWatch, Google Cloud Monitoring, and Azure Monitor offer real-time insights.

Logging:

Maintain detailed logs for debugging and performance analysis, using tools like Amazon CloudWatch Logs or Google Cloud Logging.

Model Deployment:

Serverless Deployment:

Use serverless options to simplify scaling and reduce infrastructure management. Services like AWS Lambda or Google Cloud Functions can handle inference tasks without managing servers.

Model Optimization:

Optimize models by compressing them or using model distillation techniques to reduce inference time and improve latency.
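One widely used compression technique is quantization, which stores weights as small integers plus a scale factor. The sketch below shows symmetric 8-bit quantization of a list of weights in plain Python. It illustrates the idea only. Quantization is one compression option among several, and production toolchains handle details such as per-channel scales and calibration that are omitted here.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of a non-empty list of floats to ints in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate float weights from quantized integers."""
    return [q * scale for q in q_weights]
```

Each weight then needs one byte instead of four, at the price of a rounding error of at most half the scale per weight.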

Cost Management:

Cost Analysis:

Regularly analyze and optimize cloud costs to avoid overspending. Tools like AWS Cost Explorer, Google Cloud’s Cost Management, and Azure Cost Management can help monitor and manage expenses.
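Cost tools ultimately aggregate billing records and compare them with budgets. The sketch below does the same on a hypothetical list of records. The record fields (`service`, `cost`) and the budget figures are invented for illustration and do not correspond to any provider's export format.

```python
from collections import defaultdict


def cost_by_service(records):
    """Sum cost per service from records shaped like {'service': str, 'cost': float}."""
    totals = defaultdict(float)
    for rec in records:
        totals[rec["service"]] += rec["cost"]
    return dict(totals)


def over_budget(totals, budgets):
    """Return the services whose total exceeds their budget, in alphabetical order."""
    return sorted(s for s, t in totals.items() if t > budgets.get(s, float("inf")))
```

Services without a budget entry are never flagged in this sketch, so in practice it helps to define a budget for every service you expect to use.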

By carefully selecting cloud services, optimizing data handling and training processes, and monitoring performance, you can efficiently manage and improve machine learning models in the cloud.


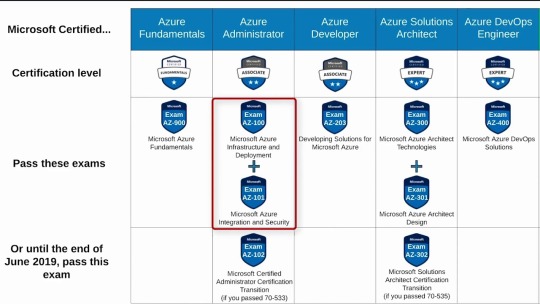

Navigating the Future with Azure Certifications in 2024

In the ever-evolving landscape of cloud technology, Azure certifications continue to be instrumental in shaping the careers of IT professionals and influencing the strategies of organizations worldwide. As we step into 2024, it's essential to explore the current trends, advancements, and the transformative impact that Azure certifications are poised to have in the coming year.

The Continued Relevance of Azure Certifications

Azure certifications are not mere credentials; they are gateways to expertise and recognition in the dynamic world of cloud computing. As businesses increasingly rely on cloud solutions, the demand for skilled Azure professionals continues to grow. In 2024, Azure certifications stand as key assets, ensuring that professionals possess the knowledge and skills needed to navigate the complexities of cloud technology effectively.

Azure Certification Paths in 2024

Azure certifications are structured into comprehensive paths, catering to individuals at various stages of their cloud journey. From foundational certifications for beginners to advanced tracks tailored for specialized roles like Azure Solutions Architect or Azure DevOps Engineer, the certification paths have evolved to align with the diverse needs of IT professionals. The year 2024 sees an increased focus on role-based certifications, allowing individuals to hone specific skills relevant to their job roles.

Key Benefits of Azure Certifications in 2024

Career Advancement:

Azure certifications are a proven catalyst for career growth. In 2024, as businesses seek skilled professionals, holding an Azure certification becomes a valuable asset for those aiming to advance their careers in cloud technology.

Industry Recognition:

Globally recognized, Azure certifications validate one's expertise in Microsoft's cloud services. Employers across industries acknowledge and value these certifications as a mark of proficiency in handling Azure-based solutions.

Continuous Learning Culture:

In 2024, Azure certifications are not just about achieving a one-time qualification; they embody a commitment to continuous learning. Microsoft regularly updates its certification paths to align with emerging technologies, encouraging professionals to stay abreast of the latest industry trends.

Increased Employability:

As the job market becomes more competitive, possessing Azure certifications enhances employability. In 2024, businesses are actively seeking candidates with practical Azure skills, making certification holders highly sought after.

Emerging Trends in Azure Certifications for 2024

Micro-Certifications:

2024 witnesses a rise in micro-certifications, focusing on specific, targeted skills. These bite-sized certifications allow professionals to demonstrate expertise in niche areas, providing a more granular approach to skill validation.

Scenario-Based Assessments:

Azure certification exams in 2024 are increasingly incorporating scenario-based questions. This shift aims to evaluate not just theoretical knowledge but the ability to apply that knowledge in practical, real-world situations.

Integration of AI and ML:

With the growing importance of artificial intelligence (AI) and machine learning (ML), Azure certifications in 2024 are placing a greater emphasis on these technologies. Certification tracks dedicated to AI and ML applications within Azure are gaining prominence.

Focus on Security:

In response to the heightened concern for cybersecurity, Azure certifications in 2024 place a significant focus on security-related tracks. Azure Security Engineer certifications are expected to be in high demand as organizations prioritize securing their cloud environments.

Tips for Success in Azure Certifications 2024

Stay Updated: Given the evolving nature of technology, staying updated with the latest Azure services and features is crucial. Regularly check Microsoft's official documentation and announcements for any updates.

Hands-On Experience: Practical experience is invaluable. Utilize Azure's sandbox environments, participate in real-world projects, and engage with the Azure portal to reinforce your theoretical knowledge.

Leverage Learning Resources: Microsoft provides a wealth of learning resources, including online courses, documentation, and practice exams. Take advantage of these resources to supplement your preparation.

Join the Azure Community: Engage with the Azure community through forums, webinars, and social media. Networking with professionals in the field can provide insights, tips, and support during your certification journey.

Conclusion

As we venture into 2024, Azure certifications stand as pivotal tools for IT professionals aiming to thrive in the dynamic world of cloud technology. Whether you are starting your journey with foundational certifications or advancing your skills with specialized tracks, Azure certifications in 2024 represent more than just qualifications – they symbolize a commitment to excellence, continuous learning, and a future shaped by innovation in the cloud. Embrace the opportunities, stay ahead of the curve, and let Azure certifications be your guide to success in the ever-evolving realm of cloud proficiency.

Frequently Asked Questions (FAQs)

What are the new Azure certifications introduced in 2024?

As of 2024, Microsoft has introduced several new certifications to align with emerging technologies. Notable additions include specialized tracks focusing on AI, ML, and advanced security.

How has the exam format changed for 2024?

The exam format in 2024 has evolved to include more scenario-based questions. This change is aimed at assessing practical application skills in addition to theoretical knowledge.

Are there any prerequisites for Azure certifications in 2024?

Prerequisites vary based on the specific certification. While some foundational certifications may have no prerequisites, advanced certifications often require prior experience or the completion of specific lower-level certifications.

Can I still take exams for older Azure certifications in 2024?

Microsoft often provides a transition period for older certifications, allowing candidates to complete them even as new certifications are introduced. However, it's advisable to check Microsoft's official documentation for specific details.

How frequently are Azure certifications updated?

Azure certifications are regularly updated to stay aligned with the latest technologies and industry trends. Microsoft recommends that candidates stay informed about updates through official communication channels.


How To Get An Online Internship In the IT Sector (Skills And Tips)

Internships provide invaluable opportunities to gain practical skills, build professional networks, and get your foot in the door with top tech companies.

With remote tech internships growing rapidly in IT, online internships are now more accessible than ever. Whether you are a college student or a career changer seeking hands-on IT experience, virtual internships allow you to work from anywhere.

However, competition can be fierce, and simply applying is often insufficient. Follow this comprehensive guide to develop the right technical abilities.

After reading this, you can effectively showcase your potential, and maximize your chances of securing a remote tech internship.

Understand In-Demand IT Skills

The first step is gaining a solid grasp of the most in-demand technical and soft skills. While specific requirements vary by company and role, these competencies form a strong foundation:

Technical Skills:

Proficiency in programming languages like Python, JavaScript, Java, and C++

Experience with front-end frameworks like React, Angular, and Vue.js

Back-end development skills - APIs, microservices, SQL databases

Cloud platforms such as AWS, Azure, Google Cloud

IT infrastructure skills - servers, networks, security

Data science abilities like SQL, R, Python

Web development and design

Mobile app development - Android, iOS, hybrid

Soft Skills:

Communication and collaboration

Analytical thinking and problem-solving

Leadership and teamwork

Creativity and innovation

Fast learning ability

Detail and deadline-oriented

Flexibility and adaptability

Obtain Relevant Credentials

While hands-on skills hold more weight, relevant academic credentials and professional IT certifications can strengthen your profile. Consider pursuing:

Bachelor’s degree in Computer Science, IT, or related engineering fields

Internship-specific courses teaching technical and soft skills

Certificates like CompTIA, AWS, Cisco, Microsoft, Google, etc.

Accredited boot camp programs focusing on applied skills

MOOCs to build expertise in trending technologies like AI/ML, cybersecurity

Open source contributions on GitHub to demonstrate coding skills

The right credentials display a work ethic and supplement practical abilities gained through projects.

Build An Impressive Project Portfolio

Nothing showcases skills better than real-world examples of your work. Develop a portfolio of strong coding, design, and analytical projects related to your target internship field.

Mobile apps - publish on app stores or use GitHub project pages

Websites - deploy online via hosting services

Data science - showcase Jupyter notebooks, visualizations

Open source code - contribute to public projects on GitHub

Technical writing - blog posts explaining key concepts

Automation and scripts - record demo videos

Choose projects demonstrating both breadth and depth. Align them to skills required for your desired internship roles.

Master Technical Interview Skills

IT internship interviews often include challenging technical questions and assessments. Be prepared to:

Explain your code and projects clearly. Review them beforehand.

Discuss concepts related to key technologies on your resume. Ramp up on fundamentals.

Solve coding challenges focused on algorithms, data structures, etc. Practice online judges like LeetCode.

Address system design and analytical problems. Read case interview guides.

Show communication and collaboration skills through pair programming tests.

Ask smart, well-researched questions about the company’s tech stack, projects, etc.

Schedule dedicated time for technical interview practice daily. Learn to think aloud while coding and get feedback from peers.
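As an example of the kind of problem to practice, here is a classic warm-up question: find two numbers in a list that add up to a target. The sketch below uses a hash map for a single-pass solution, with comments written the way you might think aloud in an interview. The problem choice is illustrative and not tied to any particular company.

```python
def two_sum(nums, target):
    """Return indices (i, j), i < j, with nums[i] + nums[j] == target, or None."""
    seen = {}  # value -> index where it was first seen
    for j, value in enumerate(nums):
        # For each number, check whether its complement appeared earlier.
        complement = target - value
        if complement in seen:
            return seen[complement], j
        if value not in seen:
            seen[value] = j
    return None
```

Being able to explain why this runs in linear time, and what it costs in extra memory compared with the nested-loop version, is usually worth as much as the code itself.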

Show Passion and Curiosity

Beyond raw skills, demonstrating genuine passion and curiosity for technology goes a long way.

Take online courses and certifications beyond the college curriculum

Build side projects and engage in hackathons for self-learning

Stay updated on industry news, trends, and innovations

Be active on forums like StackOverflow to exchange knowledge

Attend tech events and conferences

Participate in groups like coding clubs and prior internship programs

Follow tech leaders on social media

Listen to tech podcasts while commuting

Show interest in the company’s mission, products, and culture

This passion shines through in interviews and applications, distinguishing you from other candidates.

Promote Your Personal Brand

In the digital age, your online presence and personal brand are make-or-break. Craft a strong brand image across:

LinkedIn profile - showcase achievements, skills, recommendations

GitHub - display coding activity and quality through clean repositories

Portfolio website - highlight projects and share valuable content

Social media - post career updates and useful insights, but avoid oversharing

Blogs/videos - demonstrate communication abilities and thought leadership

Online communities - actively engage and build relationships

Ensure your profiles are professional and consistent. Let your technical abilities and potential speak for themselves.

Optimize Your Internship Applications

Applying isn’t enough. You must optimize your internship applications to get a reply:

Ensure you apply to openings that strongly match your profile

Customize your resume and cover letters using keywords in the job description

Speak to skills gained from coursework, online learning, and personal projects

Quantify achievements rather than just listing responsibilities

Emphasize passion for technology and fast learning abilities

Ask insightful questions that show business understanding

Follow up respectfully if you don’t hear back in 1-2 weeks

Show interest in full-time conversion early and often

Apply early since competitive openings close quickly

Leverage referrals from your network if possible

This is how you apply meaningfully. If you want a good internship, focus on the quality of your applications. The hard work will pay off.

Succeed in Your Remote Internship

The hard work pays off when you secure that long-awaited internship! Continue standing out through the actual internship by:

Overcommunicating in remote settings - proactively collaborate

Asking smart questions and owning your learning

Finding mentors and building connections remotely

Absorbing constructive criticism with maturity

Shipping quality work on or before deadlines

Clarifying expectations frequently

Going above and beyond prescribed responsibilities sometimes

Getting regular feedback and asking for more work

Leaving with letters of recommendation and job referrals

Follow these tips and you will be well placed to succeed in your remote internship. Remember, soft skills can take you far in a company, sometimes further than core technical skills can.

Conclusion

With careful preparation, tenacity, and a passion for technology, you will be able to land internship jobs in the USA that suit your needs in the thriving IT sector.

Use this guide to build the right skills, create an impressive personal brand, ace the applications, and excel in your internship.

Additionally, you can browse some good job portals. For instance, GrandSiren can help you get remote tech internships, with internship listings in India and the USA. The effort you invest will pay dividends throughout your career in this digital age. Wishing you the best of luck! Let me know in the comments about your internship hunt journey.

#itjobs#internship opportunities#internships#interns#entryleveljobs#gradsiren#opportunities#jobsearch#careeropportunities#jobseekers#ineffable interns#jobs#employment#career


How To Get An Online Internship In the IT Sector (Skills And Tips)

Internships provide invaluable opportunities to gain practical skills, build professional networks, and get your foot in the door with top tech companies.

With remote tech internships exploding in IT, online internships are now more accessible than ever. Whether a college student or career changer seeking hands-on IT experience, virtual internships allow you to work from anywhere.

However, competition can be fierce, and simply applying is often insufficient. Follow this comprehensive guide to develop the right technical abilities.

After reading this, you can effectively showcase your potential, and maximize your chances of securing a remote tech internship.

Understand In-Demand IT Skills

The first step is gaining a solid grasp of the most in-demand technical and soft skills. While specific requirements vary by company and role, these competencies form a strong foundation:

Technical Skills:

>> Proficiency in programming languages like Python, JavaScript, Java, and C++ >> Experience with front-end frameworks like React, Angular, and Vue.js >> Back-end development skills - APIs, microservices, SQL databases >> Cloud platforms such as AWS, Azure, Google Cloud >> IT infrastructure skills - servers, networks, security >> Data science abilities like SQL, R, Python >> Web development and design >> Mobile app development - Android, iOS, hybrid

Soft Skills:

>> Communication and collaboration >> Analytical thinking and problem-solving >> Leadership and teamwork >> Creativity and innovation >> Fast learning ability >> Detail and deadline-oriented >> Flexibility and adaptability

Obtain Relevant Credentials

While hands-on skills hold more weight, relevant academic credentials and professional IT certifications can strengthen your profile. Consider pursuing:

>> Bachelor’s degree in Computer Science, IT, or related engineering fields.
>> Internship-specific courses teaching technical and soft skills.
>> Certificates like CompTIA, AWS, Cisco, Microsoft, Google, etc.
>> Accredited boot camp programs focusing on applied skills.
>> MOOCs to build expertise in trending technologies like AI/ML, cybersecurity.
>> Open source contributions on GitHub to demonstrate coding skills.

The right credentials demonstrate work ethic and supplement practical abilities gained through projects.

Build An Impressive Project Portfolio

Nothing showcases skills better than real-world examples of your work. Develop a portfolio of strong coding, design, and analytical projects related to your target internship field.

>> Mobile apps - publish on app stores or use GitHub project pages
>> Websites - deploy online via hosting services
>> Data science - showcase Jupyter notebooks, visualizations
>> Open source code - contribute to public projects on GitHub
>> Technical writing - blog posts explaining key concepts
>> Automation and scripts - record demo videos

Choose projects demonstrating both breadth and depth. Align them to skills required for your desired internship roles.

Master Technical Interview Skills

IT internship interviews often include challenging technical questions and assessments. Be prepared to:

>> Explain your code and projects clearly. Review them beforehand.
>> Discuss concepts related to key technologies on your resume. Ramp up on fundamentals.
>> Solve coding challenges focused on algorithms, data structures, etc. Practice on online judges like LeetCode.
>> Address system design and analytical problems. Read case interview guides.
>> Show communication and collaboration skills through pair programming tests.
>> Ask smart, well-researched questions about the company’s tech stack, projects, etc.

Schedule dedicated time for technical interview practice daily. Learn to think aloud while coding and get feedback from peers.

Show Passion and Curiosity

Beyond raw skills, demonstrating genuine passion and curiosity for technology goes a long way.

>> Take online courses and certifications beyond the college curriculum
>> Build side projects and engage in hackathons for self-learning
>> Stay updated on industry news, trends, and innovations
>> Be active on forums like StackOverflow to exchange knowledge
>> Attend tech events and conferences
>> Participate in groups like coding clubs and prior internship programs
>> Follow tech leaders on social media
>> Listen to tech podcasts while commuting
>> Show interest in the company’s mission, products, and culture

This passion shines through in interviews and applications, distinguishing you from other candidates.

Promote Your Personal Brand

In the digital age, your online presence and personal brand are make-or-break. Craft a strong brand image across:

>> LinkedIn profile - showcase achievements, skills, recommendations
>> GitHub - display coding activity and quality through clean repositories
>> Portfolio website - highlight projects and share valuable content
>> Social media - post career updates and useful insights, but avoid oversharing
>> Blogs/videos - demonstrate communication abilities and thought leadership
>> Online communities - actively engage and build relationships

Ensure your profiles are professional and consistent. Let your technical abilities and potential speak for themselves.

Optimize Your Internship Applications

Applying isn’t enough. You must optimize your internship applications to get a reply:

>> Ensure you apply to openings that strongly match your profile
>> Customize your resume and cover letters using keywords in the job description
>> Speak to skills gained from coursework, online learning, and personal projects
>> Quantify achievements rather than just listing responsibilities
>> Emphasize passion for technology and fast learning abilities
>> Ask insightful questions that show business understanding
>> Follow up respectfully if you don’t hear back in 1-2 weeks
>> Show interest in full-time conversion early and often
>> Apply early since competitive openings close quickly
>> Leverage referrals from your network if possible

This is how you apply meaningfully. If you want a good internship, focus on the quality of your applications. The hard work will pay off.

Succeed in Your Remote Internship

The hard work pays off when you secure that long-awaited internship! Continue standing out through the actual internship by:

>> Overcommunicating in remote settings - proactively collaborate
>> Asking smart questions and owning your learning
>> Finding mentors and building connections remotely
>> Absorbing constructive criticism with maturity
>> Shipping quality work on or before deadlines
>> Clarifying expectations frequently
>> Going above and beyond prescribed responsibilities sometimes
>> Getting regular feedback and asking for more work
>> Leaving with letters of recommendation and job referrals

Following these tips will help you succeed in your remote internship. Remember, soft skills can take you far in a company, sometimes further than core technical skills alone.

Conclusion

With careful preparation, tenacity, and a passion for technology, you will be able to land internship jobs in the USA that suit your needs in the thriving IT sector.

Use this guide to build the right skills, create an impressive personal brand, ace the applications, and excel in your internship.

Additionally, you can browse some good job portals. For instance, GradSiren can help you get remote tech internships. The portal lists some of the best internship jobs in India and the USA.

The investment will pay dividends throughout your career in this digital age. Wishing you the best of luck! Let me know in the comments about your internship hunt journey.

#internship#internshipopportunity#it job opportunities#it jobs#IT internships#jobseekers#jobsearch#entryleveljobs#employment#gradsiren#graduation#computer science#technology#engineering#innovation#information technology#remote jobs#remote work#IT Remote jobs


Maximize Success: Transform Your Life with Azure OpenAI

Artificial Intelligence (AI) has evolved significantly. It transitioned from fiction to an integral part of our daily lives and business operations. In business, AI has shifted from a luxury to an essential tool. It helps analyze data, automate tasks, improve customer experiences, and strategize decisions.

McKinsey’s report suggests AI could contribute $13 trillion to the global economy by 2030. Amidst the ever-changing tech landscape, Azure OpenAI stands out as an unstoppable force.

In this blog, we’ll delve into the life-changing impact of Azure OpenAI features. We’ll explore how its integration can improve workflows, enhance decision-making, and drive unparalleled innovation. Join us on a journey to uncover how Azure OpenAI can reshape business operations in the modern era.

#azure ai#azure open ai desktop#azure open ai pricing#Azure open ai services#azure ai and ml services


Microsoft Azure Fundamentals AI-900 (Part 5)

Microsoft Azure AI Fundamentals: Explore visual tools for machine learning

What is machine learning? A technique that uses math and statistics to create models that predict unknown values

Types of Machine learning

Regression - predict a continuous value, like a price, a sales total, a measure, etc

Classification - determine a class label.

Clustering - determine labels by grouping similar information into label groups

x = features

y = label

Azure Machine Learning Studio

You can use the workspace to develop solutions with the Azure ML service on the web portal or with developer tools

Web portal for ML solutions in Azure

Capabilities for preparing data, training models, publishing and monitoring a service.

The first step is to assign a workspace to the studio.

Compute targets are cloud-based resources which can run model training and data exploration processes

Compute Instances - Development workstations that data scientists can use to work with data and models

Compute Clusters - Scalable clusters of VMs for on demand processing of experiment code

Inference Clusters - Deployment targets for predictive services that use your trained models

Attached Compute - Links to existing Azure compute resources like VMs or Azure Databricks clusters

What is Azure Automated Machine Learning

Jobs have multiple settings

Provide information needed to specify your training scripts, compute target and Azure ML environment and run a training job

Understand the AutoML Process

ML model must be trained with existing data

Data scientists spend lots of time pre-processing and selecting data

This is time consuming and often makes inefficient use of expensive compute hardware

In Azure ML, data for model training and other operations is encapsulated in a dataset.

You create your own dataset.

Classification (predicting categories or classes)

Regression (predicting numeric values)

Time series forecasting (predicting numeric values at a future point in time)

After part of the data is used to train a model, then the rest of the data is used to iteratively test or cross validate the model

The metric is calculated by comparing the actual known label or value with the predicted one

The differences between the actual known values and the predicted values are known as residuals; they indicate the amount of error in the model.

Root Mean Squared Error (RMSE) is a performance metric. The smaller the value, the more accurate the model’s prediction is

Normalized root mean squared error (NRMSE) standardizes the metric to be used between models which have different scales.
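The residual and RMSE notes above can be made concrete with a small sketch. It is an illustration only, not Azure ML's own code: the function names and sample numbers are made up, and dividing RMSE by the range of the true values is just one common way to normalize (assumed here).

```python
import math

def residuals(y_true, y_pred):
    """Per-sample differences between actual and predicted values."""
    return [t - p for t, p in zip(y_true, y_pred)]

def rmse(y_true, y_pred):
    """Root mean squared error: square root of the mean squared residual."""
    res = residuals(y_true, y_pred)
    return math.sqrt(sum(r * r for r in res) / len(res))

def nrmse(y_true, y_pred):
    """RMSE divided by the range of the true values (an assumed normalization)."""
    return rmse(y_true, y_pred) / (max(y_true) - min(y_true))

# Hypothetical labels and predictions, for illustration only
actual = [10.0, 20.0, 30.0, 40.0]
predicted = [12.0, 18.0, 33.0, 37.0]

print(residuals(actual, predicted))
print(rmse(actual, predicted))
print(nrmse(actual, predicted))
```

Because NRMSE is scaled by the label range, it can be compared between models whose labels are measured in different units.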

Shows the frequency of residual value ranges.

Residuals represent the variance between predicted and true values that can’t be explained by the model, that is, its errors

Most frequently occurring residual values (errors) should be clustered around zero.

You want small errors with fewer errors at the extreme ends of the scale

Should show a diagonal trend where the predicted value correlates closely with the true value

Dotted line shows a perfect model’s performance

The closer the line of your model’s average predicted value is to the dotted line, the better.

Services can be deployed as an Azure Container Instance (ACI) or to an Azure Kubernetes Service (AKS) cluster

For production AKS is recommended.

Identify regression machine learning scenarios

Regression is a form of ML

Understands the relationships between variables to predict a desired outcome

Predicts a numeric label or outcome based on variables (features)

Regression is an example of supervised ML

What is Azure Machine Learning designer

Allow you to organize, manage, and reuse complex ML workflows across projects and users

Pipelines start with the dataset you want to use to train the model

Each time you run a pipeline, the context (history) is stored as a pipeline job

Encapsulates one step in a machine learning pipeline.

Like a function in programming

In a pipeline project, you access data assets and components from the Asset Library tab

You can create data assets on the Data tab from local files, web files, open datasets, and a datastore

Data assets appear in the Asset Library

Azure ML job executes a task against a specified compute target.

Jobs allow systematic tracking of your ML experiments and workflows.

Understand steps for regression

To train a regression model, your data set needs to include historic features and known label values.

Use the designer’s Score Model component to generate the predicted class label value

Connect all the components that will run in the experiment

Mean Absolute Error (MAE): average difference between predicted and true values

It is based on the same unit as the label

The lower the value is the better the model is predicting

Root Mean Squared Error (RMSE): the square root of the mean squared difference between predicted and true values

Metric based on the same unit as the label.

Compared with the MAE, a larger difference indicates greater variance in the individual label errors

Relative Squared Error (RSE): relative metric between 0 and 1 based on the square of the differences between predicted and true values

Closer to 0 means the better the model is performing.

Since the value is relative, it can compare different models with different label units

Relative Absolute Error (RAE): relative metric between 0 and 1 based on the absolute differences between predicted and true values

Closer to 0 means the better the model is performing.

Can be used to compare models where the labels are in different units

Coefficient of Determination, also known as R-squared

Summarizes how much variance exists between predicted and true values

Closer to 1 means the model is performing better
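As a rough sketch of how the regression metrics above relate to each other, the hypothetical function below computes them with the usual textbook formulas. It is not the designer's Evaluate Model implementation, and the sample data is invented. Note that RSE and RAE are measured against a baseline that always predicts the mean label, so a model worse than that baseline can score above 1.

```python
def regression_metrics(y_true, y_pred):
    """Compute MAE, RMSE, RSE, RAE and R-squared with standard formulas."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    abs_err = sum(abs(t - p) for t, p in zip(y_true, y_pred))
    sq_err = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Errors of a baseline that always predicts the mean label
    abs_dev = sum(abs(t - mean_true) for t in y_true)
    sq_dev = sum((t - mean_true) ** 2 for t in y_true)
    return {
        "mae": abs_err / n,               # same unit as the label
        "rmse": (sq_err / n) ** 0.5,      # same unit as the label
        "rse": sq_err / sq_dev,           # relative, unit-free
        "rae": abs_err / abs_dev,         # relative, unit-free
        "r2": 1 - sq_err / sq_dev,        # closer to 1 is better
    }

# Hypothetical labels and predictions, for illustration only
metrics = regression_metrics([10.0, 20.0, 30.0, 40.0], [12.0, 18.0, 33.0, 37.0])
for name, value in metrics.items():
    print(name, round(value, 4))
```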

Remove training components from your pipeline and replace them with web service inputs and outputs to handle the web requests

It does the same data transformations as the first pipeline for new data

It then uses the trained model to infer/predict label values based on the features.

Create a classification model with Azure ML designer

Classification is a form of ML used to predict which category an item belongs to

Like regression this is a supervised ML technique.

Understand steps for classification

True Positive - Model predicts the label, and the data has the label

False Positive - Model predicts the label, but the data does not have the label

False Negative - Model does not predict the label, but the data does have the label

True Negative - Model does not predict the label, and the data does not have the label

For multi-class classification, same approach is used. A model with 3 possible results would have a 3x3 matrix.

Diagonal line of cells where the predicted and actual labels match
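A minimal sketch of how these counts and the confusion matrix can be tallied from true and predicted labels. The function names and sample labels are hypothetical, and rows are assumed to hold actual labels while columns hold predicted labels.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, FN and TN for a binary problem."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1          # predicted the label, data has it
        elif p == positive:
            fp += 1          # predicted the label, data does not have it
        elif t == positive:
            fn += 1          # missed a label the data does have
        else:
            tn += 1          # correctly predicted absence of the label
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn}

def confusion_matrix(y_true, y_pred, labels):
    """Build an N x N matrix: rows are actual labels, columns are predictions."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix

# Hypothetical binary example
binary = confusion_counts([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0, 1, 0])

# Hypothetical three-class example producing a 3x3 matrix
multi = confusion_matrix(
    ["a", "b", "c", "a", "b", "c"],
    ["a", "b", "b", "a", "c", "c"],
    labels=["a", "b", "c"],
)
print(binary)
print(multi)
```

In the 3x3 result, the correct predictions sit on the diagonal, and every off-diagonal cell is a specific kind of misclassification.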

Precision: fraction of cases classified as positive that are actually positive

True positives divided by (true positives + false positives)

Recall: fraction of positive cases correctly identified

Number of true positives divided by (true positives + false negatives)

F1 Score: overall metric that essentially combines precision and recall
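The formulas above translate directly into code. In this sketch the function name and the counts are hypothetical, and the combined metric is computed as the harmonic mean of precision and recall (the usual F1 definition).

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical counts: 8 true positives, 2 false positives, 4 false negatives
precision, recall, f1 = precision_recall_f1(tp=8, fp=2, fn=4)
print(precision, recall, f1)
```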

Classification models predict probability for each possible class

For binary classification models, the probability is between 0 and 1

Setting the threshold defines when a probability is interpreted as 0 or 1. If it’s set to 0.5, then probabilities of 0.5 and above are interpreted as 1 and probabilities below 0.5 as 0
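A short sketch of thresholding, assuming probabilities at or above the threshold count as class 1. The function name and the probabilities are illustrative only.

```python
def apply_threshold(probabilities, threshold=0.5):
    """Turn predicted probabilities into 0/1 class labels."""
    return [1 if p >= threshold else 0 for p in probabilities]

# Hypothetical predicted probabilities
probs = [0.1, 0.4, 0.5, 0.75, 0.9]

default_labels = apply_threshold(probs)       # threshold 0.5
strict_labels = apply_threshold(probs, 0.8)   # higher threshold, fewer positives
print(default_labels)
print(strict_labels)
```

Raising the threshold typically trades recall for precision, because fewer cases are labeled positive.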

Recall is also known as the True Positive Rate

It has a corresponding False Positive Rate

Plotting these two metrics against each other for all threshold values between 0 and 1 shows how the model performs across thresholds.

The resulting curve is the Receiver Operating Characteristic (ROC) curve.

In a perfect model, this curve would go high up into the top left corner

The area under the curve (AUC) summarizes this as a single value; the closer to 1, the better.
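To illustrate how the ROC curve and AUC come out of the threshold sweep, here is a minimal sketch. It uses every distinct score as a threshold and the trapezoid rule for the area. The function names and data are hypothetical, and the input is assumed to contain at least one positive and one negative case.

```python
def roc_points(y_true, scores):
    """Return (false positive rate, true positive rate) pairs, one per threshold."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for t, s in zip(y_true, scores) if s >= threshold and t == 1)
        fp = sum(1 for t, s in zip(y_true, scores) if s >= threshold and t == 0)
        points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under the curve using the trapezoid rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area

# Hypothetical true labels and predicted probabilities
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

curve = roc_points(labels, scores)
area = auc(curve)
print(curve)
print(area)
```

A model that guesses at random gives points near the diagonal and an AUC near 0.5, which is why values closer to 1 indicate a better classifier.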

Remove training components from your pipeline and replace them with web service inputs and outputs to handle the web requests

It does the same data transformations as the first pipeline for new data

It then uses the trained model to infer/predict label values based on the features.

Create a Clustering model with Azure ML designer

Clustering is used to group similar objects together based on features.

Clustering is an example of unsupervised learning: you train a model to separate items based only on their features, without known labels.

Understanding steps for clustering

Prebuilt components exist that allow you to clean the data, normalize it, join tables and more

Requires a dataset that includes multiple observations of the items you want to cluster

Requires numeric features that can be used to determine similarities between individual cases

Initializing K coordinates as randomly selected points called centroids in an n-dimensional space (n is the number of dimensions in the feature vectors)

Plotting feature vectors as points in the same space and assigning each point to its closest centroid

Moving each centroid to the middle of the points allocated to it (based on the mean distance)

Reassigning points to their closest centroids after the move

Repeating the last two steps until done.
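The K-Means steps above can be sketched in plain Python. This is a teaching sketch under stated assumptions, not the designer's K-Means Clustering component: the function name and sample points are hypothetical, the initial centroids are drawn from the data with a fixed seed, and "done" is taken to mean that the assignments stop changing.

```python
import math
import random

def kmeans(points, k, seed=0, max_iter=100):
    """Cluster points (tuples of floats) into k groups; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)          # step 1: pick K initial centroids
    labels = None
    for _ in range(max_iter):
        # steps 2 and 4: assign every point to its closest centroid
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:               # step 5: stop when nothing changes
            break
        labels = new_labels
        # step 3: move each centroid to the mean of the points assigned to it
        for c in range(k):
            members = [p for p, l in zip(points, labels) if l == c]
            if members:
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, labels

# Hypothetical 2-D feature vectors forming two obvious groups
data = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centers, assignments = kmeans(data, k=2)
print(centers)
print(assignments)
```

Which cluster is numbered 0 or 1 depends on the random starting centroids, so only the grouping is meaningful.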

Maximal Distance to Cluster Center: the maximum distance between each point and the centroid of that point’s cluster.

If the value is high it can mean that cluster is widely dispersed.

Together with the Average Distance to Cluster Center, it helps determine how spread out the cluster is
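A small sketch of these two spread measures, computed per cluster from points, their assignments, and the centroids. The function and dictionary key names are hypothetical, and the data is invented.

```python
import math

def cluster_spread(points, labels, centroids):
    """Return average and maximal distance to the cluster center for each cluster."""
    stats = {}
    for c, center in enumerate(centroids):
        dists = [math.dist(p, center) for p, l in zip(points, labels) if l == c]
        if dists:
            stats[c] = {
                "average_distance": sum(dists) / len(dists),
                "maximal_distance": max(dists),
            }
    return stats

# Hypothetical clustered data: cluster 0 is tight, cluster 1 is more dispersed
pts = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 3.0), (10.0, 9.0)]
lbl = [0, 0, 1, 1, 1]
ctr = [(0.0, 1.0), (10.0, 4.0)]

spread = cluster_spread(pts, lbl, ctr)
print(spread)
```

A maximal distance much larger than the average suggests a few outlying points rather than a uniformly wide cluster.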

Remove training components from your pipeline and replace them with web service inputs and outputs to handle the web requests

It does the same data transformations as the first pipeline for new data

It then uses the trained model to infer/predict label values based on the features.


How Do Emerging Technologies Influence AI Agent Development?

The world of artificial intelligence (AI) is advancing at a rapid pace, and AI agents — autonomous systems capable of making decisions and performing tasks — are at the forefront of this transformation. AI agents are revolutionizing industries, from healthcare and finance to manufacturing and transportation. However, the development of these intelligent systems is closely tied to the evolution of emerging technologies.

In this blog, we will explore how various emerging technologies are influencing the development of AI agents, propelling their capabilities, efficiency, and potential for real-world applications.

1. Machine Learning and Deep Learning Advancements

Influence on AI Agent Development:

Machine learning (ML) and deep learning (DL) are core to the functioning of AI agents. These technologies allow AI agents to learn from vast amounts of data, identify patterns, and make predictions or decisions autonomously. The continuous evolution of ML and DL algorithms is enhancing the capabilities of AI agents, making them smarter and more adaptable.

Key Impact:

Improved Decision-Making: Advancements in machine learning algorithms, such as reinforcement learning and supervised learning, allow AI agents to make more informed and accurate decisions in complex environments.

Self-Learning: Deep learning models, particularly neural networks, enable AI agents to continuously learn from new data. With improved architectures, such as transformer models, AI agents can adapt to new scenarios without needing explicit retraining.

Natural Language Processing (NLP): With improvements in NLP models (e.g., GPT, BERT, and T5), AI agents can now better understand, process, and generate human language. This is crucial for applications like chatbots, virtual assistants, and AI-driven customer service systems.

2. Reinforcement Learning and Autonomous Systems

Influence on AI Agent Development:

Reinforcement learning (RL) is a subfield of machine learning that has played a significant role in making AI agents more autonomous. In RL, AI agents learn by interacting with their environment and receiving feedback based on their actions. This allows them to optimize their decision-making over time, ultimately leading to more efficient and intelligent behaviors.

Key Impact:

Autonomous Navigation: AI agents, especially in robotics and autonomous vehicles, leverage RL to navigate environments, make decisions, and interact with their surroundings without human intervention. This technology is essential in areas like self-driving cars, drones, and robotics used in manufacturing and logistics.

Real-Time Decision Making: RL helps AI agents operate in dynamic, real-time environments where they need to adapt to new information on the fly. This is particularly useful in high-stakes industries like trading or healthcare, where agents must make real-time decisions based on changing variables.

Simulated Environments: RL enables AI agents to be trained in simulated environments before being deployed in the real world. For instance, simulated driving environments allow self-driving cars to train without the risks of real-world testing.
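The idea of an agent learning from interaction and feedback can be illustrated with tabular Q-learning, one classic RL algorithm, on a toy simulated environment. Everything here is an assumption made for illustration: the five-cell corridor, the reward of 1.0 at the goal, the hyperparameters, and the function names. Real agents use far richer environments and models.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # action 0 moves left, action 1 moves right

def step(state, action_index):
    """Toy environment: move, stay inside the corridor, reward 1.0 at the goal."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a Q-table by trial and error with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.randrange(2)                 # explore
            else:
                best = max(q[state])                      # exploit, random tie-break
                action = rng.choice([a for a in range(2) if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Feedback: nudge the estimate toward reward plus discounted future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q_table = train()
# Greedy policy for the non-goal cells: 1 means "move right"
policy = [max(range(2), key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

The agent is never told that moving right is correct; it infers this from the rewards it receives, which is the same feedback loop that simulated driving or robotics environments apply at much larger scale.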

3. Cloud Computing and Edge Computing

Influence on AI Agent Development:

Both cloud computing and edge computing have a profound impact on the performance and scalability of AI agents. Cloud computing allows for the storage and processing of massive amounts of data, enabling AI agents to access powerful computational resources. Edge computing, on the other hand, enables real-time data processing closer to where the data is generated, reducing latency and improving efficiency.

Key Impact:

Scalability: Cloud computing platforms, like Amazon Web Services (AWS) and Microsoft Azure, provide the infrastructure necessary for scaling AI agent applications. Cloud resources are essential for training AI models on vast datasets, running complex computations, and providing AI-powered services to users across the globe.

Real-Time Performance: Edge computing is especially important in scenarios where low-latency decisions are crucial, such as in autonomous vehicles or industrial robotics. By processing data at the edge (near the source), AI agents can respond more quickly and reduce the burden on centralized servers.

Cost-Effectiveness: Cloud solutions make AI agent development more accessible to startups and smaller businesses by providing pay-per-use models and eliminating the need for extensive in-house computational infrastructure. Similarly, edge computing reduces the need for continuous cloud connections, which can be costly and bandwidth-intensive.

4. 5G Technology

Influence on AI Agent Development:

The advent of 5G technology is set to revolutionize AI agent development by providing faster internet speeds, lower latency, and increased connectivity. These benefits enable AI agents to interact with the world in real-time and make faster decisions.

Key Impact:

Low-Latency Communication: 5G's ultra-low latency capabilities are particularly important for AI agents in mission-critical systems such as autonomous vehicles, smart cities, and remote healthcare. These agents need to process and communicate data in near-real-time to make timely decisions.

Massive Connectivity: With 5G, AI agents can connect to an even larger number of devices, facilitating the growth of the Internet of Things (IoT). For example, in smart cities, AI agents can manage traffic flow, energy distribution, and public safety by connecting to IoT devices across the urban landscape.

Enhanced Mobile AI: 5G enables AI agents to function seamlessly on mobile devices, enhancing the experience for users interacting with virtual assistants, augmented reality (AR) applications, and AI-powered apps. With 5G, these agents can provide more personalized and responsive experiences.

5. Blockchain and Decentralized Technologies

Influence on AI Agent Development:

Blockchain and decentralized technologies are opening up new opportunities for AI agents, particularly when it comes to transparency, trust, and security. Blockchain can enable AI agents to interact with decentralized networks in a secure and verifiable manner.

Key Impact:

Trust and Accountability: Blockchain's transparent and immutable ledger can be used to record and track the decisions made by AI agents. This helps ensure accountability and trust, particularly in industries where decision-making is under scrutiny (e.g., finance, healthcare, and insurance).

Decentralized AI: AI agents can leverage decentralized technologies to operate in distributed networks, allowing them to make decisions in a decentralized manner without the need for central control. This could lead to the development of decentralized autonomous organizations (DAOs), where AI agents play a role in governance and decision-making.

Secure Data Sharing: Blockchain can facilitate secure and private data sharing, enabling AI agents to interact with sensitive information (e.g., health records or financial transactions) while maintaining user privacy. This is particularly important in sectors like healthcare, where data security is paramount.
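The "immutable ledger" idea behind tracking agent decisions can be sketched with a simple hash chain, where each record stores the hash of the previous one. This is a simplified stand-in and not a real blockchain: there is no network, consensus, or signing, and the record fields and agent name are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first record

def record_hash(decision, prev_hash):
    """Hash a decision together with the hash of the previous record."""
    payload = json.dumps({"decision": decision, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def add_record(chain, decision):
    """Append a decision, linking it to the previous record's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    record = {"decision": decision, "prev_hash": prev_hash,
              "hash": record_hash(decision, prev_hash)}
    chain.append(record)
    return record

def verify(chain):
    """Recompute every hash; any edited record breaks the chain."""
    prev_hash = GENESIS_HASH
    for record in chain:
        if record["prev_hash"] != prev_hash:
            return False
        if record_hash(record["decision"], prev_hash) != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True

# Hypothetical decisions made by an AI agent
ledger = []
add_record(ledger, {"agent": "loan-bot", "action": "approve", "amount": 5000})
add_record(ledger, {"agent": "loan-bot", "action": "reject", "amount": 90000})

ok_before = verify(ledger)
ledger[0]["decision"]["action"] = "reject"   # tamper with an earlier decision
ok_after = verify(ledger)
print(ok_before, ok_after)
```

Because every hash depends on the record before it, changing one past decision invalidates the chain from that point on, which is what makes after-the-fact edits detectable.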

6. Quantum Computing

Influence on AI Agent Development:

Although still in the early stages, quantum computing has the potential to drastically change the landscape of AI agent development. Quantum computers leverage quantum bits (qubits) to perform computations at speeds far beyond the capabilities of classical computers, which could accelerate AI training and decision-making processes.

Key Impact:

Accelerated AI Model Training: Quantum computing could speed up the training of complex AI models, especially those that require vast amounts of data and computational power, such as deep learning networks. This could reduce the time it takes to develop advanced AI agents and make them more effective in real-world applications.

Optimization Problems: Quantum algorithms could be used to solve complex optimization problems, improving the decision-making capabilities of AI agents. This could be particularly beneficial for applications like logistics, finance, and resource allocation, where finding the optimal solution is often computationally intensive.

Simulating Complex Environments: Quantum computers could simulate complex environments with much greater efficiency than classical computers, enabling AI agents to test and train in more realistic scenarios. This could lead to breakthroughs in areas like autonomous vehicles, robotics, and drug discovery.

7. Augmented Reality (AR) and Virtual Reality (VR)

Influence on AI Agent Development:

Augmented reality (AR) and virtual reality (VR) technologies are transforming how AI agents interact with the world, offering immersive environments where agents can understand and respond to visual and spatial data.

Key Impact:

Enhanced Human-AI Interaction: AI agents integrated with AR and VR can create more interactive and immersive user experiences. Virtual assistants, for instance, can use AR to overlay useful information onto the real world, providing contextual guidance in industries like retail or healthcare.

Training and Simulation: VR provides an ideal platform for training AI agents in simulated environments. AI agents can practice real-world tasks in virtual spaces before being deployed, allowing for faster and safer learning.

Spatial Awareness: AI agents powered by AR and VR can better understand and interact with their physical surroundings. This is especially useful for autonomous robots, drones, and other AI-driven systems that require spatial awareness to navigate and make decisions.

Conclusion

Emerging technologies are not only enabling new possibilities for AI agent development but also reshaping how these intelligent systems operate and interact with the world. As machine learning algorithms become more powerful, cloud and edge computing provide scalable resources, 5G enhances real-time decision-making, blockchain ensures transparency, quantum computing promises faster learning, and AR/VR revolutionizes human-AI interaction — AI agents are becoming increasingly sophisticated.

The continuous advancements in these technologies hold immense potential to unlock the next generation of AI agents, capable of solving complex problems, adapting to dynamic environments, and making more accurate and ethical decisions. As these technologies continue to mature, the development of AI agents will undoubtedly play a pivotal role in shaping the future of intelligent systems across all industries.


Comprehensive Fintech Development Services: Innovating the Future of Digital Finance

Fintech Development has transformed the global financial landscape, offering businesses and consumers innovative digital solutions for payments, banking, lending, and investment. As technology advances, financial institutions, startups, and enterprises require specialized Fintech Development Services to stay competitive and meet growing customer demands. In this article, we will explore the significance of fintech development services and their role in shaping the future of digital finance.

1. Understanding Fintech Development Services

Fintech Development Services encompass the design, development, and deployment of financial technology solutions tailored to modern business needs. These services integrate advanced technologies such as artificial intelligence (AI), blockchain, cloud computing, and data analytics to optimize financial transactions and enhance user experience.

Key areas of fintech development services include:

Digital banking and mobile banking applications

Payment gateways and digital wallets

Blockchain and decentralized finance (DeFi) solutions

AI-powered financial analytics and risk assessment

Peer-to-peer lending and crowdfunding platforms

Regtech (Regulatory Technology) solutions for compliance

2. The Role of AI and Machine Learning in Fintech Development

Artificial Intelligence (AI) and Machine Learning (ML) play a vital role in fintech development services. AI-powered chatbots provide customer support, while ML algorithms enhance fraud detection, credit scoring, and personalized financial recommendations. By integrating AI, fintech companies can automate processes, reduce human errors, and improve efficiency.

For instance, AI-driven robo-advisors help customers make informed investment decisions by analyzing market trends and user preferences. Similarly, AI-based fraud detection systems monitor transactions in real-time to prevent suspicious activities, ensuring a secure financial ecosystem.

3. Blockchain and Decentralized Finance (DeFi) Solutions

Blockchain technology is revolutionizing the financial industry by enhancing security, transparency, and efficiency. Fintech development services now incorporate blockchain solutions for secure transactions, smart contracts, and decentralized finance (DeFi) applications.

DeFi platforms eliminate intermediaries, enabling users to access financial services such as lending, borrowing, and trading directly through blockchain networks. This innovation promotes financial inclusion by providing banking services to the unbanked population and reducing transaction costs.

4. Payment Gateways and Digital Wallet Development

The rise of digital payments has made payment gateway and digital wallet development essential components of fintech development services. Businesses require seamless, secure, and scalable payment solutions to cater to a global audience.

Fintech development services offer:

Multi-currency payment processing

Contactless and QR-code-based payments

Cryptocurrency payment integrations

AI-powered fraud prevention systems

These digital payment solutions enhance customer convenience and streamline financial transactions across various platforms, including e-commerce, retail, and subscription-based services.

5. Cloud Computing and Scalable Fintech Solutions

Cloud computing has become a cornerstone of fintech development, providing secure and scalable infrastructure for financial applications. Cloud-based fintech solutions enable businesses to manage vast amounts of data, process transactions in real-time, and ensure regulatory compliance.

With hybrid and multi-cloud strategies, fintech firms can optimize performance, ensure data redundancy, and enhance security. Leading cloud service providers such as AWS, Google Cloud, and Microsoft Azure offer tailored fintech solutions that support high availability and seamless integrations.

6. Regtech: Ensuring Compliance and Security

Regulatory compliance is a significant concern for financial institutions. Regtech solutions, a crucial component of fintech development services, leverage technology to automate compliance processes and mitigate risks.

These solutions include:

AI-driven anti-money laundering (AML) and Know Your Customer (KYC) verification

Automated risk assessment and fraud detection

Secure data encryption and privacy measures

By implementing regtech solutions, businesses can ensure adherence to financial regulations, reduce operational risks, and enhance customer trust.

7. Mobile-First Approach in Fintech Development

With the increasing reliance on smartphones for financial transactions, fintech development services focus on mobile-first solutions. Mobile banking apps, peer-to-peer payment platforms, and financial management applications are designed with intuitive user interfaces and enhanced security features.

Key mobile-first fintech solutions include:

Biometric authentication (fingerprint, facial recognition)

AI-driven personal finance management tools

Instant peer-to-peer transfers and bill payments

Cross-border remittance services

Ensuring a seamless mobile experience enhances user engagement and provides financial services at users’ fingertips.

8. The Role of Xettle Technologies in Fintech Development

Xettle Technologies is among the companies driving innovation in fintech development. By leveraging AI, blockchain, and cloud computing, Xettle Technologies is reshaping financial services and enabling businesses to offer secure and scalable digital solutions. Their contributions highlight the importance of cutting-edge fintech development services in revolutionizing the financial industry.

9. Future Trends in Fintech Development

As fintech continues to evolve, several trends will shape the industry:

Embedded Finance: Financial services will be integrated into non-financial platforms, such as e-commerce and ride-sharing apps.

Central Bank Digital Currencies (CBDCs): Governments worldwide are exploring digital currencies to enhance financial accessibility.

Quantum Computing: Future fintech solutions may leverage quantum computing for faster and more secure transactions.

Green Fintech: Sustainable finance solutions will emerge to support eco-friendly investment and banking initiatives.

Conclusion

Fintech development services are at the forefront of digital transformation, enabling businesses to offer secure, efficient, and user-friendly financial solutions. From AI-powered analytics to blockchain-driven security, fintech development continues to reshape the financial landscape. Companies that invest in fintech development services can leverage emerging technologies to enhance customer experiences, drive financial inclusion, and stay ahead in a competitive market.

As digital finance expands, businesses must partner with fintech development experts to create innovative, scalable, and compliant solutions. By adopting cutting-edge technologies and user-centric designs, fintech firms can pave the way for a more inclusive and technologically advanced financial ecosystem.


What is the latest technology in software development (2024)

The latest technology in software development is characterized by rapid advancements in various areas, aiming to improve development speed, enhance security, and increase efficiency. Below we discuss some of the most notable recent technologies making waves in the industry.

What is the latest technology in software development

AI and ML are transforming the field by enabling predictive analytics, automating testing, and improving decision-making processes. Tools like GitHub Copilot, powered by OpenAI models, help developers write code faster by suggesting code snippets and automating repetitive tasks. These technologies are not only improving development productivity but also enabling more robust, intelligent software applications.

Low-code and no-code development platforms have gained significant traction because they enable users with minimal coding experience to build applications easily. These platforms use drag-and-drop interfaces and pre-built components, reducing the need for extensive hand-coding. This trend democratizes software development, empowering businesses to create custom applications quickly and at a lower cost.

As cyber threats become more sophisticated, integrating security directly into the development pipeline is critical. DevSecOps incorporates security practices within the DevOps process, ensuring that code is secure from the beginning of the development lifecycle. This shift-left approach reduces vulnerabilities and enhances compliance, making software more robust against potential attacks.
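As a rough illustration of the shift-left idea, the sketch below shows the kind of check a pipeline could run on every commit before any code is built or deployed. It is a minimal example and not a real scanner: the rule names, the three patterns, and the `scan_source` and `pipeline_gate` functions are all hypothetical, and production tools ship far larger rule sets.

```python
import re

# Hypothetical rules for illustration only; real scanners use many more patterns.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_password": re.compile(r"(?i)\bpassword\s*=\s*['\"][^'\"]+['\"]"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}


def scan_source(text):
    """Return a list of (line_number, rule_name) findings for one file's text."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, rule_name))
    return findings


def pipeline_gate(files):
    """Return True when no file has findings, meaning the build may proceed."""
    return all(not scan_source(text) for text in files.values())
```

A CI job could call `pipeline_gate` with a mapping of file names to contents and fail the build when it returns `False`, so that a leaked credential is caught at commit time and not after release.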

Microservices have been around for some time, but they continue to evolve to make software more scalable and maintainable. Combined with serverless platforms like AWS Lambda and Azure Functions, they let developers deploy code without managing the underlying infrastructure. These technologies support greater flexibility, allowing teams to scale individual services independently and optimize resource usage, resulting in cost-effective solutions.

Progressive Web Apps (PWAs) bridge the gap between web and mobile applications by delivering an app-like experience directly through the web browser. PWAs are reliable, fast, and capable of working offline, enhancing user engagement without the complexity of traditional app stores. This technology is particularly beneficial for businesses looking to provide a seamless user experience across devices.

Blockchain is no longer limited to cryptocurrencies. It is now finding applications in software development for creating secure, transparent, and tamper-resistant solutions. Smart contracts and decentralized apps (dApps) are being used in various industries such as supply chain, finance, and identity management to foster trust and reduce reliance on intermediaries.

Although still in its early stages, quantum computing promises to solve certain complex problems that are impractical for traditional computers. Companies like IBM and Google are making significant strides in this area. Although it has not been integrated into mainstream software development, the potential for breakthroughs in data analysis and optimization is significant.

Cloud-native development, built on containers (Docker) and container orchestration platforms like Kubernetes, continues to reshape how applications are built and deployed. These technologies facilitate better resource management, scalability, and seamless deployment across multiple cloud environments, supporting the growing demand for flexible and resilient infrastructure.

Edge computing complements cloud computing by processing data closer to the source, reducing latency and bandwidth usage. This technology is especially important for IoT applications and real-time analytics, as it ensures quicker response times and a more efficient use of resources.

AR and VR are enhancing user experiences in various domains, from gaming to training simulations and e-commerce. With advancements in hardware and software, developers now have more tools to create immersive applications that merge the physical and digital.

The latest technologies in software development are driven by the need for greater efficiency, security, and scalability. Whether it’s AI-enhanced coding tools, cloud-native solutions, or quantum computing, these innovations are reshaping the development landscape.


5 Revolutionary Trends Shaping the Future of Computer Science

The field of computer science is evolving rapidly, introducing cutting-edge technologies that are transforming industries and reshaping the future of technology. From Artificial Intelligence (AI) to Quantum Computing, these advancements are opening doors to exciting career opportunities, including for students at a Java Coaching Centre in Yamuna Vihar.

If you're enrolled in a Computer Science Course in Yamuna Vihar or pursuing a Diploma in Computer Application in Uttam Nagar, staying updated on these trends will help you gain a competitive edge in the industry. Let’s explore five game-changing developments shaping the future of computer science.

1. Artificial Intelligence and Machine Learning: The Power of Automation

Artificial Intelligence (AI) and Machine Learning (ML) are transforming the world by enabling automation, enhancing decision-making, and optimizing business processes.

Key AI & ML Advancements:

AI is driving progress in natural language processing (NLP), robotics, and predictive analytics.

Machine Learning powers personalized recommendations in e-commerce, entertainment, and healthcare.

AI-powered chatbots and virtual assistants are improving customer interactions.

AI-driven cybersecurity tools are helping businesses detect and prevent cyber threats.

Students pursuing a Java Course in Yamuna Vihar or a Java Training Institute in Uttam Nagar can explore how AI integrates with Java-based applications to build intelligent systems.

2. Quantum Computing: The Next Evolution in Processing Power

Quantum computing is set to revolutionize problem-solving by delivering unparalleled computational power beyond traditional computers.

Why Quantum Computing is Important:

It can solve complex problems in cryptography, drug discovery, and climate modeling.

Google, IBM, and Microsoft are leading advancements in quantum algorithms.

Quantum encryption is expected to redefine data security and could make some conventional attack methods far less effective.

Industries relying on massive data processing, such as finance and healthcare, are exploring quantum solutions.

For students in C++ Training in Yamuna Vihar or C++ Coaching in Uttam Nagar, understanding quantum computing principles can provide a deeper insight into the future of programming and data security.

3. Cybersecurity and Data Protection: Safeguarding the Digital World

With the increasing number of cyber threats, cybersecurity has become a crucial part of computer science. Organizations are investing heavily in data protection and ethical hacking to counter evolving threats.

Major Cybersecurity Trends:

AI-powered security systems detect and respond to cyber threats in real time.

Blockchain technology is transforming secure transactions and digital identities.

Ethical hacking and penetration testing are in high demand for securing sensitive data.

Businesses are adopting Zero Trust security models to prevent unauthorized access.
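To make the Zero Trust idea above a little more concrete, here is a minimal sketch in which every request is verified and authorized on its own, with access denied by default. The secret key, the `PERMISSIONS` table, and the `sign` and `authorize` functions are all hypothetical and chosen only for illustration; a real deployment would rely on an identity provider, short-lived credentials, and device and context checks.

```python
import hashlib
import hmac

# Hypothetical shared secret and policy table, for illustration only.
SECRET_KEY = b"demo-secret-do-not-use"
PERMISSIONS = {"alice": {"reports:read"}, "bob": {"reports:read", "reports:write"}}


def sign(user):
    """Issue a token that binds the user name to an HMAC-SHA256 signature."""
    digest = hmac.new(SECRET_KEY, user.encode(), hashlib.sha256).hexdigest()
    return f"{user}.{digest}"


def authorize(token, action):
    """Verify identity and permission on every request; deny by default."""
    user, _, digest = token.rpartition(".")
    expected = hmac.new(SECRET_KEY, user.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how much of the signature matched.
    if not hmac.compare_digest(digest.encode(), expected.encode()):
        return False  # identity could not be verified
    return action in PERMISSIONS.get(user, set())
```

The point of the design is that nothing is trusted because of where a request comes from: a valid signature only proves identity, and the action must still be explicitly allowed for that user.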

Students interested in cybersecurity should consider Data Structure Coaching in Yamuna Vihar or SQL Classes in Uttam Nagar, as knowledge of data structures and databases is essential for securing systems.

4. Cloud Computing and Edge Computing: Enabling Smart Technologies

Cloud computing has already revolutionized data storage and software deployment, but edge computing is enhancing real-time data processing.

How Cloud & Edge Computing Are Changing Technology:

Cloud services like AWS, Google Cloud, and Microsoft Azure offer scalable computing solutions.

Edge computing improves performance for IoT devices, autonomous cars, and smart cities by reducing latency.

Organizations are adopting hybrid cloud models to enhance efficiency.

Serverless computing is allowing developers to focus on coding without managing infrastructure.
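As a small illustration of the serverless point in the list above, the sketch below shows what a function-as-a-service handler can look like. It follows the `handler(event, context)` convention used by AWS Lambda for Python, and the response shape mirrors an HTTP-style proxy response; the event layout and the greeting logic are assumptions made for this example.

```python
import json


def handler(event, context):
    """Entry point the platform invokes per request; no server code is written here."""
    # Assumed event shape: an HTTP-style payload carrying a JSON string in "body".
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

The developer writes only this function. Provisioning, scaling, and routing requests to it are left to the platform, which is the sense in which infrastructure no longer needs to be managed.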

Students in MySQL Training in Yamuna Vihar or MySQL Training in Uttam Nagar should focus on cloud database management, as cloud computing skills are essential in today’s job market.

5. The Evolution of Web Development: Modern Technologies & Frameworks

Web development is evolving rapidly with new frameworks, programming languages, and user-centric designs. JavaServer Pages (JSP) and UI/UX Designing continue to play a critical role in building modern applications.

Latest Trends in Web Development:

React, Angular, and Vue.js are leading the front-end development landscape.

Progressive Web Apps (PWAs) are bridging the gap between web and mobile applications.

JSP technology is widely used for developing secure and scalable enterprise applications.

Full-stack development is becoming an essential skill in the IT industry.

Students enrolled in JSP Coaching in Yamuna Vihar or a UI/UX Designer Course in Uttam Nagar should explore modern frameworks and user experience (UX) design principles to stay competitive in the industry.

Conclusion: The Future of Computer Science is Now

The future of computer science is filled with endless opportunities, driven by advancements in AI, cybersecurity, quantum computing, cloud technology, and web development.

Whether you're studying at a Computer Science Coaching Centre in Yamuna Vihar, attending C++ Training in Uttam Nagar, or pursuing a Diploma in Computer Application in Uttam Nagar, staying informed about these trends will boost your career prospects.

By embracing these technological innovations today, you can position yourself at the forefront of the digital revolution.

Suggested Links:

C++ Programming Language

Database Management System