#azizan

Explore tagged Tumblr posts

Text

Developing Unstoppable SMEs: How To Navigate and Conquer Economic Turbulence

By Datuk Wira Dr. Azizan Osman, Founder of Richworks International Sdn. Bhd In the ASEAN region, one of the fastest-growing regions of the global economy, SMEs make up over 97% of all businesses. In fact, for both Singapore and Malaysia, SMEs contribute between 40-50% to their respective national gross domestic products (GDP) alone. However, these businesses already struggle with many obstacles,…

0 notes

Text

Azizan-Yulli, Kang Nong Kabupaten Serang 2024

SERANG – Pemerintah Kabupaten Serang melalui Dinas Pemuda Olahraga dan Pariwisata (Disporapar) telah selesai menggelar pemilihan Kang-Nong Kabupaten Serang 2024. Pada ajang tersebut, terpilih satu pasang putra putri terbaik Kabupaten Serang yakni Rizky Azizan Ghofur dan Yulli Lestariyani, sebagai Kang dan Nong Kabupaten Serang 2024. Keduanya terpilih melalui seleksi yang ketat sejak pendaftaran…

View On WordPress

#Desa Wisata#Dispar Kabupaten Serang#Kang Nong Banten#Kang Nong Kabupaten Serang 2024#Rizky Azizan Ghofur#Wisata di Kabupaten Serang#Yulli Lestariyani

0 notes

Text

Lilian's "mini" pig

Just a random little idea I had. SPOILERS for future LG stories under the cut:

Pigs are a recurring theme in Lily's story. I won't get into them or what they represent in this post (if you've read her saga then you already know) I will just mention that Dario kept domestic pigs to help him dispose of his victim's corpses. Lily was often in charge of caring for the pigs, she made friends with them and especially loved the baby ones.

One day Lily tells Zov about the baby pigs, speaking about them with such fondness that it gives him an idea. He goes to the market in Taybiya, where ranchers sell their livestock, and sure enough he finds a man selling piglets.

He asks the man how big these babies will get. The man boasts that they will surely grow to a thousand pounds at least, and provide lots of meat! Zov is disappointed, mentioning that he was hoping to find something smaller to give his girlfriend as a pet.

As Zov leaves, the man suddenly stops him. He picks up a random piglet from his stock and tells Zov that this particular one is a "mini pig". It will stay small and cute forever, he promises! Zov buys the animal, puts a bow on it, and gives it to Lily.

Of course Lily falls in love with the adorable little piglet and names it Pinky.

1 year later, Pinky is a 1500lb behemoth. Zov was ready to drag her back to that conman and demand a refund 1300 pounds ago, but Lily had grown too attached by then, so now he's stuck with a giant farm animal sleeping at the foot of his bed every night like a dog.

Pinky is a sweet and intelligent beast...as long as she gets her daily PB&J sandwich. If she does not get that sandwich, Gaia help everyone in the temple...she goes berserk, breaking down doors, tearing up floorboards, and flipping people like pancakes until she gets what she wants.

Lily trains her to guard the temple from Crescent Cultists. Pinky can identify their wretched stink and she will gore them to death in seconds when she does.

Zov comes to begrudgingly accept the beast. She becomes Jennie's childhood pet and companion, and she's so big that Jennie can even ride her like a horse. The Karenzans use Pinky's cultist-detecting ability to find local hideouts and destroy them. This pig seems to have the unique ability to sense evil.

With Pinky's help, the Karenzans are able to weed out all the hidden cult hives in Taybiya. This becomes crucial later when they turn Taybiya into Azizaland, the kingdom of Etherea's second territory (the first is the Ethereal City).

They erect a big pig statue in Azizaland's plaza to honor Pinky for centuries to come. 🐖 Some of Pinky's offspring inherit her ability, and Karenzan beastmasters breed them to create a new breed of cultist-detecting war mounts.

These beasts become known as Azizan Crescent-Crunchers, or just "Crunchers" for short. They look like a burly-ass cross between a domestic pig, a wild boar, and a rhinoceros. They are highly motivated by peanut butter.

8 notes

·

View notes

Text

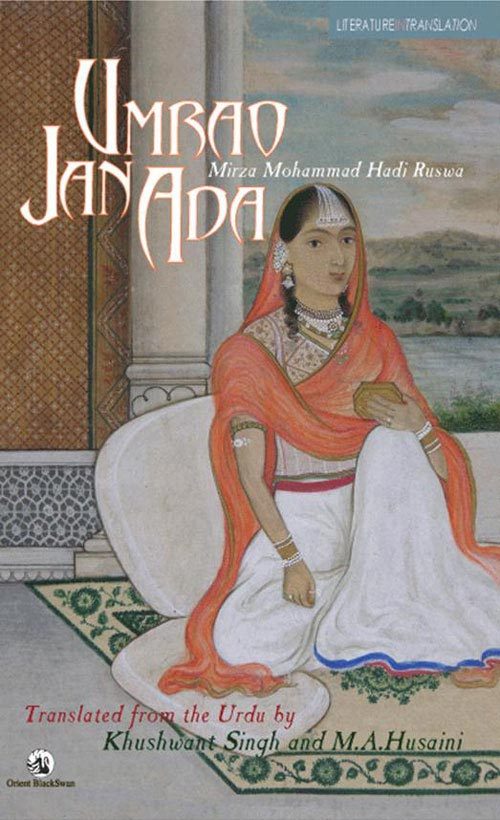

Umrao Jaan Ada: The Courtesan from Lucknow.

An Indian Classic Novel About Exploitation and Double Standards.

Considered by many to be the first Urdu novel, "Umrao Jaan Ada" was published in 1899. This novel narrates the life story of a courtesan and poet from 19th century Lucknow. It was penned by Mirza Hadi Ruswa, an Urdu language poet and writer known for his works in fiction and treatises, particularly on topics like religion, philosophy, and astronomy. Ruswa was a polyglot, proficient in several languages, including Urdu, Greek, and English.

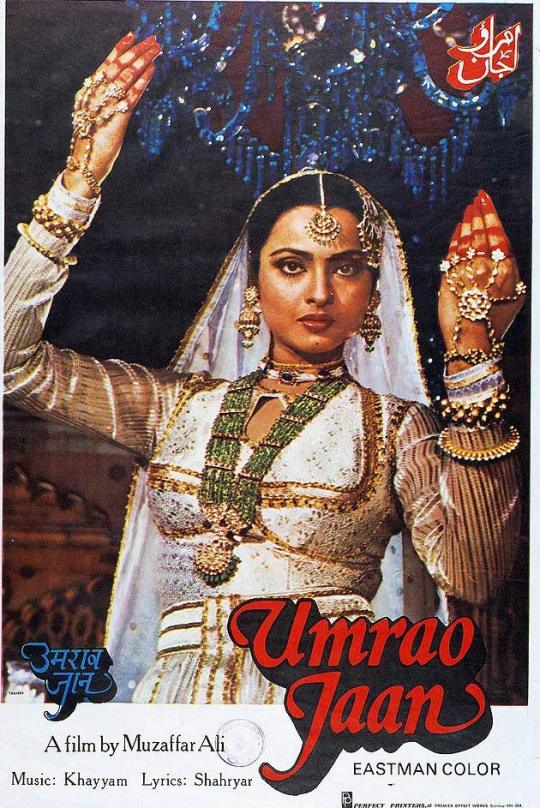

Ruswa was born in Lucknow around the time of the 1857 'Indian Mutiny,' which marked a significant turning point not only in the lives of Princely States like Awadh (also known as Avadh or Oudh) but also in the history of India as a whole. According to the novel, Umrao Jaan's story was recounted by her to the author during a poetry gathering in Lucknow. However, the actual existence of Umrao Jaan is a subject of dispute among scholars. There are few mentions of her outside Ruswa's novel, although there are records of the existence of an Uttar Pradesh dacoit named Fazal Ali. British documents also mention a courtesan named Azizan Bai, who claimed to have been taught by Umrao Jaan.

Rekha as Umra Jaan Ada in the 1981 adaptation of the novel.

The dark secrets of mid 19th Century Lucknow.

Umrao Jaan Ada provides an intricate portrayal of mid-19th century Lucknow, depicting both its decadent and morally hypocritical society. Some argue that it serves as a metaphor for India itself—a nation that had long attracted numerous suitors, many of whom were only seeking to exploit her.

#Literature#Made in India#urdu literature#Indian literature#Umrao jan ada#urudu classic#classic literature#lucknow

3 notes

·

View notes

Text

Kerja korek tanah dekat kawasan letupan paip gas dapat permit MBSJ- Datuk Bandar

SUBANG JAYA – Kerja pengorekan tanah berhampiran lokasi insiden letupan paip gas bawah tanah di Putra Heights, mendapat permit yang sah daripada Majlis Bandaraya Subang Jaya (MBSJ) dan PETRONAS. Selain itu, kerja yang dilakukan di kawasan berkenaan juga mematuhi prosedur operasi standard (SOP) yang ditetapkan. Datuk Bandar Subang Jaya, Datuk Amirul Azizan Abd Rahim, yang mengesahkan perkara itu…

0 notes

Text

Listen And Download New Music By Reza Azizan Called Ay Omrum With 2 Quality 320 And 128 On TrackMelody Media New Persian Music Reza Azizan – Ay Omrum Download New Music By Reza Azizan Called Ay Omrum With Best Quality And Play Online On TrackMelody Media . . #TrackMelody #persian_music #Persian_music_download #Iranian_music_download #New_Persian_Music #New_Music #Arabic_music #Turkish_music #pop #rock #rap #jazz #download_music #trend_music

0 notes

Text

Not the weirdest Lore deep dive I have ever done

#Note: We are not doing the “voodoo is demonic” thing on my blog#anyone that tries to start stuff is getting blocked on sight#anyway#I love learning more about African creatures 🤗#aziza#azizan#west african mythology#black fairy#fairy#fairy lore#benin#dahomey#voodoo#vodun more accurately#vodun#“aziza are closely associated with gbo charms”#look inside#gbo is another name for a Ju-Ju#african fairy#african mythology

5 notes

·

View notes

Text

EPF CEO Tipped to be Finance Minister II

EPF CEO Datuk Seri Amir Hamzah Azizan is tipped to be finance minister II in a Cabinet reshuffle that sources say will take place tomorrow. Reports on X say he is now sworn in as a Senator and will be given a portfolio in the much-expected cabinet reshuffle today. Social Media Links Follow us on: Instagram Threads Facebook Twitter YouTube DailyMotion Read More News #latestmalaysia EPF CEO as…

View On WordPress

0 notes

Text

Merabak Sembah (2024)

Antologi sajak berlima, membariskan 4 lagi rakan yang disayangi; Jack Malik, Azizan Afi, Meor Hailree dan Tulangkata. Memuatkan 10 sajak Aliff Awan selepas hiatus sejak Julai 2022.

0 notes

Text

How to assess a general-purpose AI model’s reliability before it’s deployed

New Post has been published on https://sunalei.org/news/how-to-assess-a-general-purpose-ai-models-reliability-before-its-deployed/

How to assess a general-purpose AI model’s reliability before it’s deployed

Foundation models are massive deep-learning models that have been pretrained on an enormous amount of general-purpose, unlabeled data. They can be applied to a variety of tasks, like generating images or answering customer questions.

But these models, which serve as the backbone for powerful artificial intelligence tools like ChatGPT and DALL-E, can offer up incorrect or misleading information. In a safety-critical situation, such as a pedestrian approaching a self-driving car, these mistakes could have serious consequences.

To help prevent such mistakes, researchers from MIT and the MIT-IBM Watson AI Lab developed a technique to estimate the reliability of foundation models before they are deployed to a specific task.

They do this by training a set of foundation models that are slightly different from one another. Then they use their algorithm to assess the consistency of the representations each model learns about the same test data point. If the representations are consistent, it means the model is reliable.

When they compared their technique to state-of-the-art baseline methods, it was better at capturing the reliability of foundation models on a variety of classification tasks.

Someone could use this technique to decide if a model should be applied in a certain setting, without the need to test it on a real-world dataset. This could be especially useful when datasets may not be accessible due to privacy concerns, like in health care settings. In addition, the technique could be used to rank models based on reliability scores, enabling a user to select the best one for their task.

“All models can be wrong, but models that know when they are wrong are more useful. The problem of quantifying uncertainty or reliability gets harder for these foundation models because their abstract representations are difficult to compare. Our method allows you to quantify how reliable a representation model is for any given input data,” says senior author Navid Azizan, the Esther and Harold E. Edgerton Assistant Professor in the MIT Department of Mechanical Engineering and the Institute for Data, Systems, and Society (IDSS), and a member of the Laboratory for Information and Decision Systems (LIDS).

He is joined on a paper about the work by lead author Young-Jin Park, a LIDS graduate student; Hao Wang, a research scientist at the MIT-IBM Watson AI Lab; and Shervin Ardeshir, a senior research scientist at Netflix. The paper will be presented at the Conference on Uncertainty in Artificial Intelligence.

Counting the consensus

Traditional machine-learning models are trained to perform a specific task. These models typically make a concrete prediction based on an input. For instance, the model might tell you whether a certain image contains a cat or a dog. In this case, assessing reliability could simply be a matter of looking at the final prediction to see if the model is right.

But foundation models are different. The model is pretrained using general data, in a setting where its creators don’t know all downstream tasks it will be applied to. Users adapt it to their specific tasks after it has already been trained.

Unlike traditional machine-learning models, foundation models don’t give concrete outputs like “cat” or “dog” labels. Instead, they generate an abstract representation based on an input data point.

To assess the reliability of a foundation model, the researchers used an ensemble approach by training several models which share many properties but are slightly different from one another.

“Our idea is like counting the consensus. If all those foundation models are giving consistent representations for any data in our dataset, then we can say this model is reliable,” Park says.

But they ran into a problem: How could they compare abstract representations?

“These models just output a vector, comprised of some numbers, so we can’t compare them easily,” he adds.

They solved this problem using an idea called neighborhood consistency.

For their approach, the researchers prepare a set of reliable reference points to test on the ensemble of models. Then, for each model, they investigate the reference points located near that model’s representation of the test point.

By looking at the consistency of neighboring points, they can estimate the reliability of the models.

Aligning the representations

Foundation models map data points in what is known as a representation space. One way to think about this space is as a sphere. Each model maps similar data points to the same part of its sphere, so images of cats go in one place and images of dogs go in another.

But each model would map animals differently in its own sphere, so while cats may be grouped near the South Pole of one sphere, another model could map cats somewhere in the Northern Hemisphere.

The researchers use the neighboring points like anchors to align those spheres so they can make the representations comparable. If a data point’s neighbors are consistent across multiple representations, then one should be confident about the reliability of the model’s output for that point.

When they tested this approach on a wide range of classification tasks, they found that it was much more consistent than baselines. Plus, it wasn’t tripped up by challenging test points that caused other methods to fail.

Moreover, their approach can be used to assess reliability for any input data, so one could evaluate how well a model works for a particular type of individual, such as a patient with certain characteristics.

“Even if the models all have average performance overall, from an individual point of view, you’d prefer the one that works best for that individual,” Wang says.

However, one limitation comes from the fact that they must train an ensemble of large foundation models, which is computationally expensive. In the future, they plan to find more efficient ways to build multiple models, perhaps by using small perturbations of a single model.

This work is funded, in part, by the MIT-IBM Watson AI Lab, MathWorks, and Amazon.

0 notes

Text

How to assess a general-purpose AI model’s reliability before it’s deployed

New Post has been published on https://thedigitalinsider.com/how-to-assess-a-general-purpose-ai-models-reliability-before-its-deployed/

How to assess a general-purpose AI model’s reliability before it’s deployed

Foundation models are massive deep-learning models that have been pretrained on an enormous amount of general-purpose, unlabeled data. They can be applied to a variety of tasks, like generating images or answering customer questions.

But these models, which serve as the backbone for powerful artificial intelligence tools like ChatGPT and DALL-E, can offer up incorrect or misleading information. In a safety-critical situation, such as a pedestrian approaching a self-driving car, these mistakes could have serious consequences.

To help prevent such mistakes, researchers from MIT and the MIT-IBM Watson AI Lab developed a technique to estimate the reliability of foundation models before they are deployed to a specific task.

They do this by training a set of foundation models that are slightly different from one another. Then they use their algorithm to assess the consistency of the representations each model learns about the same test data point. If the representations are consistent, it means the model is reliable.

When they compared their technique to state-of-the-art baseline methods, it was better at capturing the reliability of foundation models on a variety of classification tasks.

Someone could use this technique to decide if a model should be applied in a certain setting, without the need to test it on a real-world dataset. This could be especially useful when datasets may not be accessible due to privacy concerns, like in health care settings. In addition, the technique could be used to rank models based on reliability scores, enabling a user to select the best one for their task.

“All models can be wrong, but models that know when they are wrong are more useful. The problem of quantifying uncertainty or reliability gets harder for these foundation models because their abstract representations are difficult to compare. Our method allows you to quantify how reliable a representation model is for any given input data,” says senior author Navid Azizan, the Esther and Harold E. Edgerton Assistant Professor in the MIT Department of Mechanical Engineering and the Institute for Data, Systems, and Society (IDSS), and a member of the Laboratory for Information and Decision Systems (LIDS).

He is joined on a paper about the work by lead author Young-Jin Park, a LIDS graduate student; Hao Wang, a research scientist at the MIT-IBM Watson AI Lab; and Shervin Ardeshir, a senior research scientist at Netflix. The paper will be presented at the Conference on Uncertainty in Artificial Intelligence.

Counting the consensus

Traditional machine-learning models are trained to perform a specific task. These models typically make a concrete prediction based on an input. For instance, the model might tell you whether a certain image contains a cat or a dog. In this case, assessing reliability could simply be a matter of looking at the final prediction to see if the model is right.

But foundation models are different. The model is pretrained using general data, in a setting where its creators don’t know all downstream tasks it will be applied to. Users adapt it to their specific tasks after it has already been trained.

Unlike traditional machine-learning models, foundation models don’t give concrete outputs like “cat” or “dog” labels. Instead, they generate an abstract representation based on an input data point.

To assess the reliability of a foundation model, the researchers used an ensemble approach by training several models which share many properties but are slightly different from one another.

“Our idea is like counting the consensus. If all those foundation models are giving consistent representations for any data in our dataset, then we can say this model is reliable,” Park says.

But they ran into a problem: How could they compare abstract representations?

“These models just output a vector, comprised of some numbers, so we can’t compare them easily,” he adds.

They solved this problem using an idea called neighborhood consistency.

For their approach, the researchers prepare a set of reliable reference points to test on the ensemble of models. Then, for each model, they investigate the reference points located near that model’s representation of the test point.

By looking at the consistency of neighboring points, they can estimate the reliability of the models.

Aligning the representations

Foundation models map data points in what is known as a representation space. One way to think about this space is as a sphere. Each model maps similar data points to the same part of its sphere, so images of cats go in one place and images of dogs go in another.

But each model would map animals differently in its own sphere, so while cats may be grouped near the South Pole of one sphere, another model could map cats somewhere in the Northern Hemisphere.

The researchers use the neighboring points like anchors to align those spheres so they can make the representations comparable. If a data point’s neighbors are consistent across multiple representations, then one should be confident about the reliability of the model’s output for that point.

When they tested this approach on a wide range of classification tasks, they found that it was much more consistent than baselines. Plus, it wasn’t tripped up by challenging test points that caused other methods to fail.

Moreover, their approach can be used to assess reliability for any input data, so one could evaluate how well a model works for a particular type of individual, such as a patient with certain characteristics.

“Even if the models all have average performance overall, from an individual point of view, you’d prefer the one that works best for that individual,” Wang says.

However, one limitation comes from the fact that they must train an ensemble of large foundation models, which is computationally expensive. In the future, they plan to find more efficient ways to build multiple models, perhaps by using small perturbations of a single model.

This work is funded, in part, by the MIT-IBM Watson AI Lab, MathWorks, and Amazon.

#ai#ai model#algorithm#Algorithms#Amazon#Animals#approach#Art#artificial#Artificial Intelligence#author#cats#chatGPT#Computer science and technology#concrete#conference#creators#dall-e#data#datasets#dog#dogs#driving#Edgerton#engineering#Foundation#Future#Giving#Health#Health care

0 notes

Text

Business coaching oleh Azizan Osman dan marketing oleh Najib Asaddok

Dapatkan nasihat kewangan & pelaburan : https://forms.gle/K7Peiq4YynpBB9BEA

0 notes

Text

Malaysia proposes to only allow locally-produced sealed e-liquids for sale

According to a report by The Star on May 8, 2023, the Malaysian National Anti-Drug Agency (Masac) has proposed to only allow locally-produced vape e-liquids sold in sealed glass bottles in the country. Masac Secretary-General Raja Azizan Suhaimi stated that the use of glass bottles can minimize the risk of adding harmful substances. A joint study by Masac, the Asian Centre for Drug Policy and the International Islamic University Malaysia found that more and more teenage girls are using e-liquids containing illicit substances. Raja Azizan also proposed raising the age limit for vapes from 18 to 21 to minimize the abuse of e-liquids that may contain drugs. So far, only 10 manufacturers of liquid nicotine have registered with the customs department, even though the registration deadline is April 30, 2023. Prime Minister Datuk Seri Anwar Ibrahim revealed in the revised 2023 budget in February that e-liquids containing nicotine are still illegally sold in the country, with estimated sales of RM2 billion. Meanwhile, Health Minister Zaliha Mustafa stated that the revision of the "Tobacco Control and Smoking Bill," which aims to gradually raise the smoking age to cover the entire population, will be accelerated and redeveloped. Read the full article

0 notes

Text

Caruman KWSP pekerja asing dimulakan suku keempat tahun ini

KUALA LUMPUR – Skim caruman Kumpulan Wang Simpanan Pekerja (KWSP) kepada pekerja bukan warganegara akan mula dilaksana suku keempat tahun ini. Menteri Kewangan II, Datuk Seri Amir Hamzah Azizan, berkata pelaksanaan skim caruman itu akan menambah dana pelaburan kepada KWSP sekaligus memberi pulangan baik kepada ekonomi negara. Beliau berkata, caruman ini akan dilaksana dengan pendekatan lunak,…

0 notes