#also i used lens blur in camera raw filter not bad not bad

Explore tagged Tumblr posts

Text

𝔄𝔫𝔡 𝔪𝔶 𝔩𝔬𝔳𝔢 𝔦𝔰 𝔫𝔬 𝔤𝔬𝔬𝔡 𝔄𝔤𝔞𝔦𝔫𝔰𝔱 𝔱𝔥𝔢 𝔣𝔬𝔯𝔱𝔯𝔢𝔰𝔰 𝔱𝔥𝔞𝔱 𝔦𝔱 𝔪𝔞𝔡𝔢 𝔬𝔣 𝔶𝔬𝔲 𝔅𝔩𝔬𝔬𝔡 𝔦𝔰 𝔯𝔲𝔫𝔫𝔦𝔫𝔤 𝔡𝔢𝔢𝔭 𝔖𝔬𝔯𝔯𝔬𝔴 𝔱𝔥𝔞𝔱 𝔶𝔬𝔲 𝔨𝔢𝔢𝔭

#as flo rider once said... it twas only just a dream#me: actually freakin cryin during the editing process#also i used lens blur in camera raw filter not bad not bad#i was struggling with dof and transparencies so it was a nice fix#anyways if any of u have 45 min to watch the masterpiece that is this visual album pls do <3#oc: taryn#oc: atlas#ts4#simblr#sims 4#show us your sims

303 notes


Text

Landscape Photography Research

To create our persona’s content, we must be able to work as they do. To that end, I researched how I could become a better landscape photographer. I found these tips useful and insightful, as they cover things I would not have picked up on my own. Reading them has made me excited to experiment, especially with lens filters, which I didn't even know existed.

Location

You should always have a clear idea of where you are planning to go, and at what time of the day you will be able to capture the best photograph.

Have patience

The key is to always allow yourself enough time at a location, so that you are able to wait if you need to. Forward planning can also help you hugely, so make sure to check weather forecasts before leaving, maximizing your opportunity for the weather you require.

Don't be lazy

Don’t rely on easily accessible viewpoints, that everyone else can just pull up to and see. Instead, look for those unique spots (providing they are safe to get to) that offer amazing scenes, even if they require determination to get there.

Use the best light

The best light for landscape photography is early in the morning or late in the afternoon, with the midday sun offering the harshest light. But part of the challenge of landscape photography is being able to adapt to and cope with different lighting conditions; for example, great landscape photos can be captured even on stormy or cloudy days. The key is to use the best light as much as possible, and to adapt the look and feel of your photos to it.

Carry a tripod

Photography in low light conditions (e.g. early morning or early evening) without a tripod would require an increase in ISO to be able to avoid camera shake, which in turn means more noise in your images. If you want to capture a scene using a slow shutter speed or long exposure (for example, to capture the movement of clouds or water) then without a tripod you simply won’t be able to hold the camera steady enough, to avoid blurred images from camera shake.

Maximize your depth of field

Usually landscape photos require the vast majority of the photo to be sharp (the foreground and background) so you need a deeper depth of field (f/16-f/22) than if you are taking a portrait of someone. A shallower depth of field can also be a powerful creative tool if used correctly, as it can isolate the subject by keeping it sharp, while the rest of the image is blurred. As a starting point, if you are looking to keep the majority of the photo sharp, set your camera to Aperture Priority (A or Av) mode, so you can take control of the aperture. Start at around f/8 and work up (f/11 or higher) until you get the desired effect.
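To get a feel for how the aperture changes what stays sharp, here is a minimal sketch of the standard hyperfocal distance formula, H = f² / (N · c) + f. The 24 mm focal length and the 0.03 mm circle of confusion (a common full-frame convention) are assumptions for illustration, not values from the tips above.

```python
def hyperfocal_mm(focal_mm, f_number, coc_mm=0.03):
    """Hyperfocal distance in millimetres: H = f^2 / (N * c) + f."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


# Assumed example lens: 24 mm on a full-frame body.
for n in (8, 11, 16, 22):
    h = hyperfocal_mm(24, n)
    # Focusing at H keeps everything from H/2 to infinity acceptably sharp.
    print(f"f/{n}: focus at {h / 1000:.2f} m, sharp from {h / 2000:.2f} m to infinity")
```

The smaller the aperture (higher f-number), the closer the hyperfocal distance, which is why stopping down from f/8 brings more of the foreground into focus.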

Think about composition

There are several techniques that you can use to help your composition (such as the rule of thirds), but ultimately you need to train yourself to be able to see a scene, and analyze it in your mind. With practice this will become second nature, but the important thing is to take your time.

Use neutral density and polarizing filters

A polarizing filter can help by minimizing the reflections and also enhancing the colours (greens and blues). But remember, polarizing filters often have little or no effect on a scene if you’re directly facing the sun, or it’s behind you. For best results position yourself between 45° and 90° to the sun.

One of the other big challenges is getting a balanced exposure between the foreground, which is usually darker, and a bright sky. Graduated ND filters help to compensate for this by darkening the sky, while keeping the foreground brighter. This can be replicated in post-production, but it is always best to try and capture the photo as well as possible in-camera.

Use the histogram

A histogram is a simple graph that shows the different tonal distribution in your image. The left side of the graph is for dark tones and the right side of the graph represents bright tones.

If you find that the majority of the graph is shifted to one side, this is an indication that your photo is too light or dark (overexposed or underexposed). This isn’t always a bad thing, and some images work perfectly well either way. However, if you find that your graph extends beyond the left or right edge, this shows that you have parts of the photo with lost detail (pure black areas if the histogram extends beyond the left edge and pure white if it extends beyond the right edge).
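The histogram check described above can be sketched in a few lines. This is only an illustration: it assumes 8-bit brightness values (0 to 255) in a flat list, and the 1% threshold for calling an image "clipped" is an arbitrary choice, not a standard.

```python
def histogram(pixels, bins=256):
    """Count how many pixels fall on each brightness level (0 = black, 255 = white)."""
    counts = [0] * bins
    for p in pixels:
        counts[p] += 1
    return counts


def clipping_report(pixels, threshold=0.01):
    """Flag lost detail: too many pixels piled up at the left or right edge."""
    counts = histogram(pixels)
    total = len(pixels)
    shadows = counts[0] / total
    highlights = counts[255] / total
    return {
        "shadow_fraction": shadows,
        "highlight_fraction": highlights,
        "shadows_clipped": shadows > threshold,
        "highlights_clipped": highlights > threshold,
    }


# Assumed toy image: mostly mid-grey with some pure black and pure white.
sample = [0] * 50 + [128] * 900 + [255] * 50
print(clipping_report(sample))
```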

Shoot in RAW format

RAW files contain much more detail and information, and give far greater flexibility in post-production without losing quality.

Use a wide angle lens

Wide-angle lenses are preferred for landscape photography because they can show a broader view, and therefore give a sense of wide open space. They also tend to give a greater depth of field, and because camera shake is less visible at short focal lengths, they let you handhold at slower shutter speeds. Taking an image at f/16 will make both the foreground and background sharp.

Capture movement

If you are working with moving water, you can create a stunning white-water effect by choosing a long exposure. In bright daylight you will need an ND filter to reduce the amount of light hitting the sensor, so that the camera can use a longer shutter time. Always use a tripod for this kind of shot so that the rest of your image remains sharp.
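As a rough guide to how much an ND filter lengthens the exposure: each stop of density doubles the shutter time. The sketch below assumes a metered exposure of 1/250 s and a 10-stop filter purely as example numbers.

```python
def nd_shutter_time(base_shutter_s, nd_stops):
    """Shutter time needed once an ND filter of `nd_stops` stops is fitted."""
    return base_shutter_s * 2 ** nd_stops


# Assumed example: 1/250 s metered without the filter.
for stops in (3, 6, 10):
    print(f"{stops}-stop ND: {nd_shutter_time(1 / 250, stops):.3f} s")
```

With these numbers a 10-stop filter turns 1/250 s into roughly 4 s, which is long enough to smooth moving water.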

______________

References:

Dadfar, Kav. "12 Tips to Help You Capture Stunning Landscape Photos." Digital Photography School. Last modified June 13, 2016. https://digital-photography-school.com/12-tips-to-help-you-capture-stunning-landscape-photos/.

Kun, Attila. "Landscape Photography Tips." ExposureGuide.com. Last modified November 25, 2019. https://www.exposureguide.com/landscape-photography-tips/.

0 notes

Text

Discover Why You Shouldn't Consider Noise Your Enemy, and Add It to Your Pictures

Whenever we talk about noise we usually do so in pejorative terms: we consider noise our enemy, something ugly that we should avoid. However, this hatred of noise sometimes makes us obsess over it, and we want shots that are completely free of noise. I'm sorry to put it so bluntly, but a shot without noise does not exist. Noise is an inherent part of photography.

But there is no need to worry! What we must learn is to control the noise, to handle it as we please and, why not, to add it as an aesthetic motif in our photographs.

A Reminder of What Noise Is

We already explained what noise is in our article "ISO in Photography: What it is and How It Is Used", but let's refresh our memory a bit.

As a general rule, the higher the ISO you shoot at, the greater the amount of noise that appears in your picture. But what exactly is noise? Noise is that kind of grain that appears mostly in the darkest areas of the photo. To understand the concept of noise and how it is generated, we must first understand how image capture works in our camera.

The sensor of our camera is composed of a mesh of thousands of photosensitive cells that receive the light that enters through the lens. Upon receiving the light, these cells generate an electric current, which will be processed by the camera and converted into digital data. Each of these cells will generate a pixel of the final photograph.

However, this electrical signal not only possesses the data of the captured image, but also generates random data, the result of the electric current itself. This random data will be reflected in the image as noise.

When we increase the ISO we are digitally amplifying the electrical signal received by the photosensitive cells, but at the same time we are also amplifying that random data. That's why the more we raise the ISO, the more noise appears in our picture.
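The idea that amplification lifts the signal and the random data together can be shown with a deliberately simplified model. Everything here is an assumption for illustration: the photon counts, the read-noise figure of 3 electrons, and the noise formula (shot noise plus read noise) are a textbook approximation, not a description of any particular camera.

```python
import math


def snr(photons, read_noise=3.0):
    """Signal-to-noise ratio for a cell that collected `photons` electrons."""
    noise = math.sqrt(photons + read_noise ** 2)  # shot noise plus read noise
    return photons / noise


def amplify(photons, gain, read_noise=3.0):
    """Apply ISO-style gain: signal and noise are multiplied alike."""
    noise = math.sqrt(photons + read_noise ** 2)
    return gain * photons, gain * noise


bright = snr(1000)                     # plenty of light at base ISO
dim = snr(125)                         # three stops less light
signal, noise = amplify(125, gain=8)   # raise the ISO three stops to compensate
print(f"bright SNR {bright:.1f}, dim SNR {dim:.1f}, after 8x gain {signal / noise:.1f}")
```

In this model the gain restores the brightness of the dim shot but leaves its signal-to-noise ratio untouched, which is why the higher-ISO picture looks noisier than the one taken with more light.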


Learning to Control Noise

You might think then, after knowing how the noise is generated in our camera, that it would be worth underexposing a photograph rather than increasing the ISO, so as not to generate noise. Well, you are very wrong.

A poorly exposed photograph will always have lower quality than a well-exposed one. Trying to brighten it later in editing programs like Photoshop or Lightroom will always generate more noise than exposing it correctly when shooting, even if that meant raising the ISO. So raising the ISO is not bad; you just have to control it and know how far your camera can go.

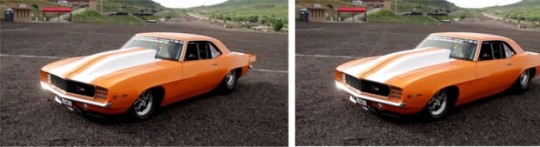

The photo that was underexposed and then brightened in editing has more noise, despite having been shot at a lower ISO

Each camera handles noise differently, and you should find out how far you can raise the ISO on your camera and still get pictures with acceptable noise. We can always reduce the noise a bit in editing programs, but we will lose definition, and if we go too far we can blur the picture so much that it ends up looking like an oil painting. So, unfortunately, noise is an enemy that we will always have to deal with.

However, we will not always consider it "our enemy". Today's vintage nostalgia has led many photographers to add noise to their photographs. Adding noise can help you emulate the characteristic grain of analog photographs, or it can give your photographs a cinematic look. So you will be the one who judges what amount of noise is best for your photographs.

Remember that noise tolerance is subjective, so we cannot speak of a universal "ISO limit". In addition, as I said before, each camera handles noise differently. Therefore, it should be you who sets the maximum ISO for your camera at which the noise is still acceptable to you. Test your camera at different ISOs and in different situations to get to know it thoroughly and learn how far you can go.

Adding Noise to Your Photographs for Aesthetic Reasons

Look at any award-winning analog photograph and you will discover that it has noise. Or grain, as it is also called. Noise and grain are the same thing; it is just that the term "noise" has acquired such a negative connotation that when we add it on purpose for aesthetic reasons we usually call it grain.

Whatever you call it, the truth is that grain does not harm the photograph; rather, it is that analog, vintage or cinematic touch that makes us look at the image in a different way. That attractive touch is what many photographers look for in their work, and that is why many of them add noise to their shots on purpose.

You can choose to add the noise at the time of shooting, of course. You only have to raise the ISO and compensate for the extra sensitivity by using a faster shutter speed, closing the diaphragm, or both, following the law of reciprocity.
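The reciprocity trade-off can be worked out with simple arithmetic: doubling the ISO is one stop, which is cancelled by halving the shutter time or by multiplying the f-number by √2. The function names and the example settings below are assumptions made for this sketch.

```python
import math


def shutter_for_iso(shutter_s, iso_old, iso_new):
    """Shutter time that keeps the same exposure after changing only the ISO."""
    return shutter_s * iso_old / iso_new


def aperture_for_iso(f_number, iso_old, iso_new):
    """f-number that keeps the same exposure after changing only the ISO."""
    return f_number * math.sqrt(iso_new / iso_old)


# Assumed starting point: 1/60 s, f/4, ISO 100.
print(f"ISO 800 needs 1/{1 / shutter_for_iso(1 / 60, 100, 800):.0f} s at f/4")
print(f"ISO 400 needs f/{aperture_for_iso(4, 100, 400):.0f} at 1/60 s")
```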

However, if you are still experimenting with this style and are not sure whether you will like the result, or if you prefer finer control over how much noise you add, it is best to shoot as cleanly as possible and add the grain later in processing.

How to Add Grain to your Photos in Lightroom

Adding grain to your photos in Lightroom is very easy. In the Develop module, go to the Effects panel. In the Grain section you will find 3 sliders that let you configure the amount and appearance of the noise to your liking:

Amount: The amount of noise you want to add.

Size: The size of the grain. The bigger it is, the more noticeable it will be.

Roughness: The distribution of the grain. With low roughness the grain is distributed more evenly, while raising the roughness distributes it more randomly.

How to Add Grain to your Photos in Photoshop

To add grain to your photos from Photoshop you can do it in two different ways.

One is to add it from Adobe Camera Raw, which opens automatically when you open a RAW file in Photoshop, or which you can launch from the menu Filter / Camera Raw Filter. As this tool is a "mini Lightroom" integrated into Photoshop, the way to add noise is exactly the same as in the previous section. You will find those same sliders in the Effects tab (fx).

The other way is to add it with Photoshop's own noise tool. You will find it in the menu Filter / Noise / Add Noise. In this window you will find 3 options to customize your noise:

Amount: The amount of noise you want to add.

Distribution: You can choose between Uniform, which adds a tidier noise, or Gaussian, which adds a more randomly distributed noise.

Monochromatic: If you do not check this box, the noise you add will be chromatic noise (that is, it will have color), while if you check it, it will simply be grain.
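To see what these three options mean in practice, here is a small sketch that adds noise to a list of RGB pixels. It is not Photoshop's actual algorithm; the function name, the parameters and the way `amount` is interpreted (half-width for uniform, standard deviation for Gaussian) are all assumptions made for illustration.

```python
import random


def add_noise(pixels, amount, distribution="gaussian", monochromatic=False, seed=None):
    """Return a noisy copy of `pixels`, a list of (r, g, b) tuples in 0..255."""
    rng = random.Random(seed)

    def sample():
        if distribution == "uniform":
            return rng.uniform(-amount, amount)  # every offset equally likely
        return rng.gauss(0, amount)              # small offsets common, large ones rare

    noisy = []
    for r, g, b in pixels:
        if monochromatic:
            d = sample()
            offsets = (d, d, d)                  # same offset on all channels: grey grain
        else:
            offsets = (sample(), sample(), sample())  # independent offsets: colour noise
        noisy.append(tuple(min(255, max(0, round(c + o)))
                           for c, o in zip((r, g, b), offsets)))
    return noisy


grey = [(128, 128, 128)] * 4
print(add_noise(grey, 10, monochromatic=True, seed=1))
print(add_noise(grey, 10, monochromatic=False, seed=1))
```

With the monochromatic option each pixel stays neutral grey and only its brightness changes, while without it the three channels drift apart and produce colored speckles.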

0 notes

Text

The future of photography is code

New Post has been published on https://photographyguideto.com/must-see/the-future-of-photography-is-code-2/


What’s in a camera? A lens, a shutter, a light-sensitive surface and, increasingly, a set of highly sophisticated algorithms. While the physical components are still improving bit by bit, Google, Samsung and Apple are increasingly investing in (and showcasing) improvements wrought entirely from code. Computational photography is the only real battleground now.

The reason for this shift is pretty simple: Cameras can’t get too much better than they are right now, or at least not without some rather extreme shifts in how they work. Here’s how smartphone makers hit the wall on photography, and how they were forced to jump over it.

Not enough buckets

An image sensor one might find in a digital camera

The sensors in our smartphone cameras are truly amazing things. The work that’s been done by the likes of Sony, OmniVision, Samsung and others to design and fabricate tiny yet sensitive and versatile chips is really pretty mind-blowing. For a photographer who’s watched the evolution of digital photography from the early days, the level of quality these microscopic sensors deliver is nothing short of astonishing.

But there’s no Moore’s Law for those sensors. Or rather, just as Moore’s Law is now running into quantum limits at sub-10-nanometer levels, camera sensors hit physical limits much earlier. Think about light hitting the sensor as rain falling on a bunch of buckets; you can place bigger buckets, but there are fewer of them; you can put smaller ones, but they can’t catch as much each; you can make them square or stagger them or do all kinds of other tricks, but ultimately there are only so many raindrops and no amount of bucket-rearranging can change that.

Sensors are getting better, yes, but not only is this pace too slow to keep consumers buying new phones year after year (imagine trying to sell a camera that’s 3 percent better), but phone manufacturers often use the same or similar camera stacks, so the improvements (like the recent switch to backside illumination) are shared amongst them. So no one is getting ahead on sensors alone.


Perhaps they could improve the lens? Not really. Lenses have arrived at a level of sophistication and perfection that is hard to improve on, especially at small scale. To say space is limited inside a smartphone’s camera stack is a major understatement — there’s hardly a square micron to spare. You might be able to improve them slightly as far as how much light passes through and how little distortion there is, but these are old problems that have been mostly optimized.

The only way to gather more light would be to increase the size of the lens, either by having it A: project outwards from the body; B: displace critical components within the body; or C: increase the thickness of the phone. Which of those options does Apple seem likely to find acceptable?

In retrospect it was inevitable that Apple (and Samsung, and Huawei, and others) would have to choose D: none of the above. If you can’t get more light, you just have to do more with the light you’ve got.

Isn’t all photography computational?

The broadest definition of computational photography includes just about any digital imaging at all. Unlike film, even the most basic digital camera requires computation to turn the light hitting the sensor into a usable image. And camera makers differ widely in the way they do this, producing different JPEG processing methods, RAW formats and color science.

For a long time there wasn’t much of interest on top of this basic layer, partly from a lack of processing power. Sure, there have been filters, and quick in-camera tweaks to improve contrast and color. But ultimately these just amount to automated dial-twiddling.

The first real computational photography features were arguably object identification and tracking for the purposes of autofocus. Face and eye tracking made it easier to capture people in complex lighting or poses, and object tracking made sports and action photography easier as the system adjusted its AF point to a target moving across the frame.

These were early examples of deriving metadata from the image and using it proactively, to improve that image or feeding forward to the next.

In DSLRs, autofocus accuracy and flexibility are marquee features, so this early use case made sense; but outside a few gimmicks, these “serious” cameras generally deployed computation in a fairly vanilla way. Faster image sensors meant faster sensor offloading and burst speeds, some extra cycles dedicated to color and detail preservation and so on. DSLRs weren’t being used for live video or augmented reality. And until fairly recently, the same was true of smartphone cameras, which were more like point and shoots than the all-purpose media tools we know them as today.

The limits of traditional imaging

Despite experimentation here and there and the occasional outlier, smartphone cameras are pretty much the same. They have to fit within a few millimeters of depth, which limits their optics to a few configurations. The size of the sensor is likewise limited: a DSLR might use an APS-C sensor 23 by 15 millimeters across, making an area of 345 mm²; the sensor in the iPhone XS, probably the largest and most advanced on the market right now, is 7 by 5.8 mm or so, for a total of 40.6 mm².

Roughly speaking, it’s collecting an order of magnitude less light than a “normal” camera, but is expected to reconstruct a scene with roughly the same fidelity, colors and such — around the same number of megapixels, too. On its face this is sort of an impossible problem.
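The "order of magnitude" figure follows directly from the two sensor sizes quoted above. A quick check, assuming the light collected scales with sensor area (same scene, same f-number, same exposure time):

```python
import math


def area_mm2(width_mm, height_mm):
    """Sensor area in square millimetres."""
    return width_mm * height_mm


aps_c = area_mm2(23, 15)     # 345 mm², the APS-C example from the text
phone = area_mm2(7, 5.8)     # about 40.6 mm², the iPhone XS figure from the text

ratio = aps_c / phone
stops = math.log2(ratio)     # each stop is a doubling of light
print(f"APS-C gathers about {ratio:.1f}x the light, roughly {stops:.1f} stops more")
```

So the phone sensor is working with a bit less than a tenth of the light, or about three stops.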

Improvements in the traditional sense help out — optical and electronic stabilization, for instance, make it possible to expose for longer without blurring, collecting more light. But these devices are still being asked to spin straw into gold.

Luckily, as I mentioned, everyone is pretty much in the same boat. Because of the fundamental limitations in play, there’s no way Apple or Samsung can reinvent the camera or come up with some crazy lens structure that puts them leagues ahead of the competition. They’ve all been given the same basic foundation.

All competition therefore comprises what these companies build on top of that foundation.

Image as stream

The key insight in computational photography is that an image coming from a digital camera’s sensor isn’t a snapshot, the way it is generally thought of. In traditional cameras the shutter opens and closes, exposing the light-sensitive medium for a fraction of a second. That’s not what digital cameras do, or at least not what they can do.

A camera’s sensor is constantly bombarded with light; rain is constantly falling on the field of buckets, to return to our metaphor, but when you’re not taking a picture, these buckets are bottomless and no one is checking their contents. But the rain is falling nevertheless.

To capture an image the camera system picks a point at which to start counting the raindrops, measuring the light that hits the sensor. Then it picks a point to stop. For the purposes of traditional photography, this enables nearly arbitrarily short shutter speeds, which isn’t much use to tiny sensors.

Why not just always be recording? Theoretically you could, but it would drain the battery and produce a lot of heat. Fortunately, in the last few years image processing chips have gotten efficient enough that they can, when the camera app is open, keep a certain duration of that stream — limited resolution captures of the last 60 frames, for instance. Sure, it costs a little battery, but it’s worth it.
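Keeping "the last 60 frames" is a classic ring buffer. The sketch below uses integers as stand-ins for frames and a hypothetical `FrameBuffer` class; it shows the data structure only, not how any phone's imaging pipeline is actually implemented.

```python
from collections import deque


class FrameBuffer:
    """Keeps only the most recent `capacity` frames; older ones fall off the front."""

    def __init__(self, capacity=60):
        self._frames = deque(maxlen=capacity)

    def push(self, frame):
        self._frames.append(frame)

    def snapshot(self):
        """Frames available at the moment the shutter button is pressed."""
        return list(self._frames)


buffer = FrameBuffer(capacity=60)
for frame_id in range(100):      # the stream keeps running while the app is open
    buffer.push(frame_id)

recent = buffer.snapshot()
print(len(recent), recent[0], recent[-1])  # prints: 60 40 99
```

Because memory use is fixed at 60 frames, the cost of "always recording" stays bounded no matter how long the camera app is open.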

Access to the stream allows the camera to do all kinds of things. It adds context.

Context can mean a lot of things. It can be photographic elements like the lighting and distance to subject. But it can also be motion, objects, intention.

A simple example of context is what is commonly referred to as HDR, or high dynamic range imagery. This technique uses multiple images taken in a row with different exposures to more accurately capture areas of the image that might have been underexposed or overexposed in a single exposure. The context in this case is understanding which areas those are and how to intelligently combine the images together.

This can be accomplished with exposure bracketing, a very old photographic technique, but it can be accomplished instantly and without warning if the image stream is being manipulated to produce multiple exposure ranges all the time. That’s exactly what Google and Apple now do.
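A toy version of combining bracketed exposures: each frame's pixel is divided by its exposure time to estimate scene brightness, and the estimates are averaged with weights that favor well-exposed (mid-tone) values and ignore clipped ones. The weighting function, the pixel values and the exposure times are assumptions for this sketch; it is not the method Google or Apple use.

```python
def merge_exposures(frames, times):
    """Merge bracketed frames (pixel values in 0..1) into per-pixel brightness estimates."""
    merged = []
    for values in zip(*frames):
        # Hat-shaped weight: 1 at mid-grey, 0 at pure black or pure white.
        weights = [1.0 - abs(2.0 * v - 1.0) for v in values]
        estimates = [v / t for v, t in zip(values, times)]
        total = sum(weights)
        if total == 0:
            # Every frame is clipped here; fall back to a plain average.
            merged.append(sum(estimates) / len(estimates))
        else:
            merged.append(sum(w * e for w, e in zip(weights, estimates)) / total)
    return merged


scene = [0.1, 2.0, 10.0]                 # assumed true brightness: shadow, midtone, highlight
times = [0.05, 0.4]                      # a short and a long exposure
frames = [[min(1.0, r * t) for r in scene] for t in times]  # the sensor clips at 1.0

print(merge_exposures(frames, times))
```

The long exposure clips the highlight, so the merge relies on the short exposure there, while the shadow estimate leans on the long exposure where the signal is stronger.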

Something more complex is of course the “portrait mode” and artificial background blur or bokeh that is becoming more and more common. Context here is not simply the distance of a face, but an understanding of what parts of the image constitute a particular physical object, and the exact contours of that object. This can be derived from motion in the stream, from stereo separation in multiple cameras, and from machine learning models that have been trained to identify and delineate human shapes.

These techniques are only possible, first, because the requisite imagery has been captured from the stream in the first place (an advance in image sensor and RAM speed), and second, because companies developed highly efficient algorithms to perform these calculations, trained on enormous data sets and immense amounts of computation time.

What’s important about these techniques, however, is not simply that they can be done, but that one company may do them better than the other. And this quality is entirely a function of the software engineering work and artistic oversight that goes into them.


DxOMark did a comparison of some early artificial bokeh systems; the results, however, were somewhat unsatisfying. It was less a question of which looked better, and more of whether they failed or succeeded in applying the effect. Computational photography is in such early days that it is enough for the feature to simply work to impress people. Like a dog walking on its hind legs, we are amazed that it occurs at all.

But Apple has pulled ahead with what some would say is an almost absurdly over-engineered solution to the bokeh problem. It didn’t just learn how to replicate the effect — it used the computing power it has at its disposal to create virtual physical models of the optical phenomenon that produces it. It’s like the difference between animating a bouncing ball and simulating realistic gravity and elastic material physics.

Why go to such lengths? Because Apple knows what is becoming clear to others: that it is absurd to worry about the limits of computational capability at all. There are limits to how well an optical phenomenon can be replicated if you are taking shortcuts like Gaussian blurring. There are no limits to how well it can be replicated if you simulate it at the level of the photon.

Similarly the idea of combining five, 10, or 100 images into a single HDR image seems absurd, but the truth is that in photography, more information is almost always better. If the cost of these computational acrobatics is negligible and the results measurable, why shouldn’t our devices be performing these calculations? In a few years they too will seem ordinary.

If the result is a better product, the computational power and engineering ability has been deployed with success; just as Leica or Canon might spend millions to eke fractional performance improvements out of a stable optical system like a $2,000 zoom lens, Apple and others are spending money where they can create value: not in glass, but in silicon.

Double vision

One trend that may appear to conflict with the computational photography narrative I’ve described is the advent of systems comprising multiple cameras.

This technique doesn’t add more light to the sensor — that would be prohibitively complex and expensive optically, and probably wouldn’t work anyway. But if you can free up a little space lengthwise (rather than depthwise, which we found impractical) you can put a whole separate camera right by the first that captures photos extremely similar to those taken by the first.


Now, if all you want to do is re-enact Wayne’s World at an imperceptible scale (camera one, camera two… camera one, camera two…) that’s all you need. But no one actually wants to take two images simultaneously, a fraction of an inch apart.

These two cameras operate either independently (as wide-angle and zoom) or one is used to augment the other, forming a single system with multiple inputs.

The thing is that taking the data from one camera and using it to enhance the data from another is — you guessed it — extremely computationally intensive. It’s like the HDR problem of multiple exposures, except far more complex as the images aren’t taken with the same lens and sensor. It can be optimized, but that doesn’t make it easy.

So although adding a second camera is indeed a way to improve the imaging system by physical means, the possibility only exists because of the state of computational photography. And it is the quality of that computational imagery that results in a better photograph — or doesn’t. The Light camera with its 16 sensors and lenses is an example of an ambitious effort that simply didn’t produce better images, though it was using established computational photography techniques to harvest and winnow an even larger collection of images.

Light and code

The future of photography is computational, not optical. This is a massive shift in paradigm and one that every company that makes or uses cameras is currently grappling with. There will be repercussions in traditional cameras like SLRs (rapidly giving way to mirrorless systems), in phones, in embedded devices and everywhere that light is captured and turned into images.

Sometimes this means that the cameras we hear about will be much the same as last year’s, as far as megapixel counts, ISO ranges, f-numbers and so on. That’s okay. With some exceptions these have gotten as good as we can reasonably expect them to be: Glass isn’t getting any clearer, and our vision isn’t getting any more acute. The way light moves through our devices and eyeballs isn’t likely to change much.

What those devices do with that light, however, is changing at an incredible rate. This will produce features that sound ridiculous, or pseudoscience babble on stage, or drained batteries. That’s okay, too. Just as we have experimented with other parts of the camera for the last century and brought them to varying levels of perfection, we have moved onto a new, non-physical “part” which nonetheless has a very important effect on the quality and even possibility of the images we take.

Read more: https://techcrunch.com

0 notes

Text

Sony A7RIII Review: Officially The Best Pro Full-Frame Mirrorless Camera On The Market

When I test a new camera, I usually have an idea of how the review might go, but there are always some things that are a complete unknown, and a few things that totally surprise me.

I know better than to pass judgment on the day a camera is announced. The images, and the user experience are what matter. If a camera has all the best specs but lacks reliability or customizability, it’s a no-deal for me.

So, you can probably guess how this review of the Sony A7RIII is going to turn out. Released in October of 2017 at $3200, the camera has already had well over a year of real-world field use, by working professionals and hobbyists alike. Also, it now faces full-frame competition from both Canon and Nikon, in the form of Canon’s new RF mount and Nikon’s Z mount, although these have only two bodies and 3-4 native lenses each.

As a full-time wedding and portrait photographer, however, I can’t just jump on a new camera the moment it arrives. Indeed, the aspects of reliability and sheer durability are always important. And, considering the track record for reliability (buggy-ness) of the Sony A7-series as a whole since its birth in October of 2013, I waited patiently for there to be a general consensus about this third generation of cameras.

Indeed, the consensus has been loud: third time’s a charm.

Although, I wouldn’t exactly call the A7RIII a charming little camera. Little, sure, professional, absolutely! But, it has taken a very long time to become truly familiar and comfortable with it.

Before you comment, “oh no, not another person complaining about how hard it is to ‘figure out’ a Sony camera” …please give me a chance to thoroughly describe just how incredibly good of a camera the A7RIII is, and tell you why you should (probably) get one, in spite of (or even because of) its complexity.

Sony A7RIII (mk3) Specs

42-megapixel full-frame BSI CMOS sensor (7952 x 5304 pixels)

ISO 100-32000 (expandable to ISO 50 and ISO 102400)

5-axis sensor-based image stabilization (IBIS)

Hybrid autofocus, 399 phase-detect, 425 contrast-detect AF points

3.6M dot Electronic Viewfinder, 1.4M dot 3″ rear LCD

10 FPS (frames per second) continuous shooting, with autofocus

Dual SD card slots (one UHS-II)

4K @ 30, 24p video, 1080 @ 120, 60, 30, 24p

Metal frame, weather-sealed body design

Sony A7R3, Sony 16-35mm f/2.8 GM | 1/80 sec, f/11, ISO 100

100% crop from the above image, fine-radius sharpening applied

Sony A7R3 Pros

The pros are going to far outweigh the cons for this camera, and that should come as no surprise to anyone who has paid attention to Sony’s mirrorless development over the last few years. Still, let’s break things down so that we can discuss each one as it actually relates to the types of photography you do.

Because, even though I’ve already called the A7RIII the “best pro full-frame mirrorless camera”, there may still be at least a couple other great choices (spoiler: they’re also Sony) for certain types of working pro photographers.

Sony A7R3, Sony 16-35mm f/2.8 GM, 3-stop Polar Pro NDPL Polarizer filter | 2 sec, f/10, ISO 100

Image Quality

The A7RIII’s image quality is definitely a major accomplishment. The 42-megapixel sensor was already a milestone in overall image quality when it first appeared in the A7RII, with its incredible resolution and impressive high ISO image quality. This next-generation sensor is yet another (incremental) step forward.

Compared to its predecessor, by the way, at the lowest ISOs (mostly at 100) you can expect slightly lower noise and higher dynamic range. At the higher ISOs, you can expect roughly the same (awesome) image quality.

Also, when scaled back down to the resolution of its 12-24 megapixel Sony siblings, let alone the competition, it’s truly impressive to see what the A7R3 can output.

Speaking of its competitors’ sensors, the Sony either leaves them in the dust, (for example, versus a Canon sensor’s low-ISO shadow recovery) …or roughly matches their performance. (For example, versus Nikon’s 36 and 45-megapixel sensor resolution and dynamic range.)

Sony A7R3, 16-35mm f/2.8 GM | 31mm, 1/6 sec, f/10, ISO 100, handheld

100% crop from the above image – IBIS works extremely well!
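To put that handheld frame in perspective, here is a rough sketch in Python. It assumes the classic "1/focal length" rule of thumb as the unstabilized handheld limit, which is an illustrative assumption and not something measured in this review; the function name is hypothetical.

```python
import math

def stops_beyond_reciprocal_rule(focal_length_mm: float, shutter_s: float) -> float:
    """Stops of extra exposure time relative to the 1/focal-length rule of thumb."""
    # Assumed handheld limit without stabilization: 1 / focal length, in seconds
    rule_of_thumb_s = 1.0 / focal_length_mm
    return math.log2(shutter_s / rule_of_thumb_s)

# Values from the caption above: 31mm, 1/6 sec, handheld
stops = stops_beyond_reciprocal_rule(31, 1 / 6)
print(f"{stops:.1f} stops slower than the rule of thumb")
```

By that yardstick, the sample shot is a bit more than two stops slower than the rule of thumb would suggest for 31mm.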

I’ll be honest, though: I reviewed this camera with the perspective of a serious landscape photographer. If you’re a very casual photographer, whether you do nature, portraits, or travel or action sports, casually, then literally any camera made in the last 5+ years will deliver more image quality than you’ll ever need.

The Sony A7R3’s advantages come into play when you start to push the envelope of what is possible in extremely difficult conditions. Printing huge. Shooting single exposures in highly dynamic lighting conditions, especially when you have no control over the light/conditions. Shooting in near-pitch-dark conditions, by moonlight or starlight… You name it; the A7RIII will match, or begin to pull ahead of, the competition.

Personally, as a landscape, nightscape, and time-lapse photographer, I couldn’t ask for a better all-around sensor and image quality than this. Sure, I would love to have a native ISO 50, and I do appreciate the three Nikon sensors which offer a base ISO of 64, when I’m shooting in conditions that benefit from it.

Still, as a sensor that lets me go from ISO 100 to ISO 6400 without thinking twice about whether or not I can make a big print, the A7RIII’s images have everything I require.

Sony Color Science

Before we move on, we must address the common stereotype about dissatisfaction with “Sony colors”. Simply put, it takes two…Actually, it takes three! The camera manufacturer, the raw processing software, and you, the artist who wields those two complex, advanced tools.

Sony A7R3, Sony 16-35mm f/2.8 GM | 6 sec, f/3.5, ISO 100 | Lightroom CCC

The truth is that, in my opinion, Adobe is the most guilty party when it comes to getting colors “right”, or having them look “off”, or having muddy tones in general.

Why do I place blame on what is by far the most ubiquitous, and in popular opinion the absolute best, raw processing software? My feelings are indeed based on facts, and not the ambiguous “je ne sais quoi” that some photographers try to complain about:

1.) If you shoot any camera in JPG, whether Sony, Nikon, or Canon, they are all capable of beautiful skin tones and other colors. Yes, I know, serious photographers all shoot RAW. However, looking at a JPG is the only way to fairly judge the manufacturer’s intended color science. And in that regard, Sony’s colors are not bad at all.

2.) If you use another raw processing engine, such as Capture One Pro, you get a whole different experience with Sony .ARW raw files, both with regard to tonal response and color science. The contrast and colors both look great. Different, yes, but still great.

I use Adobe’s camera profiles when looking for punchy colors from raw files

Again, I’ll leave it up to you to decide which is truly better in your eyes as an artist. In some lighting conditions, I absolutely love Canon, Nikon, and Fuji colors too. However, in my experience, it is mostly the raw engine, and the skill of the person using it, that is to blame when someone vaguely claims, “I just don’t like the colors”…

Disclaimer: I say this as someone who worked full-time in post-production for many years, and who has post-produced over 2M Canon CR2 files, 2M Nikon NEF files, and over 100,000 Sony ARW files.

Features

There is no question that the A7R3 shook up the market with its feature set, regardless of the price point. This is a high-megapixel full-frame mirrorless camera with enough important features that any full-time working pro could easily rely on the camera to get any job done.

Flagship Autofocus

The first problem that most professional photographers had with mirrorless technology was that it just couldn’t keep up with the low-light autofocus reliability of a DSLR’s phase-detect AF system.

This line has been blurred quite a bit from the debut of the Sony A7R2 onward, however, and with this current-generation, hybrid on-sensor phase+contrast detection AF system, I am happy to report that I’m simply done worrying about autofocus. Period. I’m done counting the number of in-focus frames from side-by-side comparisons between a mirrorless camera and a DSLR competitor.

In other words, yes, there could be a small percent difference in tack-sharp keepers between the A7R3’s autofocus system and that of, say, a Canon 5D4 or a Nikon D850. In certain light, with certain AF point configurations, the Canon/Nikon do deliver a few more in-focus shots, on a good day. But, I don’t care.

Sony A7R3, 16-35mm f/2.8 GM | 1/160 sec, f/2.8, ISO 100

Why? Because the A7R3 is giving me a less frustrating experience overall due to the fact that I’ve completely stopped worrying about AF microadjustment, and having to check for front-focus or back-focus issues on this-or-that prime lens. If anything, the faster the aperture, the better the lens is at nailing focus in low light. That wasn’t the case with DSLRs; usually, it was the 24-70 and 70-200 2.8’s that were truly reliable at focusing in terrible light, and most f/1.4 or f/1.8 DSLR primes were hit-or-miss. I am so glad those days are over.

Now, the A7R3 either nails everything perfectly or, when the light gets truly terrible, it still manages to deliver about the same number of in-focus shots as I’d be getting out of my DSLRs anyways.

Eye Autofocus and AF customization

Furthermore, the A7R3 offers a diverse variety of focus point control and operation. And, with new technologies such as face-detection and Eye AF, the controls really do need to be flexible! Thanks to the level of customizability offered in the A7R3, I can do all kinds of things, such as:

Quickly change from a designated, static AF point to a dynamic, adaptable AF point. (C1 or C2 button, you pick which based on your own dexterity and muscle memory)

Easily switch face-detection on and off. (I put this in the Fn menu)

Designate the AF-ON button to perform traditional autofocus, while the AEL button performs Eye-AF autofocus. (Or vice-versa, again depending on your own muscle memory and dexterity)

Switch between AF-S and AF-C using any number of physical customizations. (I do wish there was a physical switch for this, though, like the Sony A9 has.)

Oh, and it goes without saying that a ~$3,000 camera gets a dedicated AF point joystick, although I must say I’m preferential to touchscreen AF point control now that there are literally hundreds of AF points to choose from.

In short, this is one area where Sony did almost everything right. They faced a daunting challenge of offering ways to implement all these useful technologies, and they largely succeeded.

This is not just professional-class autofocus, it’s a whole new generation of autofocus, a new way of thinking about how we ensure that each shot, whether portrait or not, is perfectly focused exactly how we want it to be, even with ultra-shallow apertures or in extremely low light.

Dual Card Slots

Like professional autofocus, dual card slots are nothing new in a ~$3,000 camera. Both the Nikon D850 (and D810, etc.) and the Canon 5D4 (and 5D3) have had these features for years. (Although, notably, the $3400 Nikon Z7 does not; it opted for a single XQD slot instead. Read our full Nikon Z7 review here.)

Unlike those DSLRs, however, the Sony A7R3 combines the professional one-two punch of pro AF and dual card slots with other things such as the portability and other general benefits of mirrorless, as well as great 4K video specs and IBIS. (By the way, no, IBIS and 4K video aren’t exclusive to mirrorless; many DSLRs have 4K video now, and Pentax has had IBIS in its traditional DSLRs for many years too.)

One of my favorite features: Not only can the camera be charged via USB, it can operate directly from USB power!

Sony A7R3 Mirrorless Battery Life

One of the last major drawbacks of mirrorless systems, and the nemesis of Sony’s earlier A7-series in particular, was battery life. The operative word being, WAS. Now, the Sony NP-FZ100 battery allows the A7R3 to last just as long as, or in some cases even longer than, a DSLR with comparable specs. (Such as lens-based stabilization, or 4K video.)

Oh, and Sony’s is the only full-frame mirrorless platform that allows you to directly run a camera off USB power without a “dummy” battery, as of March 2019. This allows you to shoot video without ever interrupting your clip/take to swap batteries, and capture time-lapses for innumerable hours, or, just get through a long wedding day without having to worry about carrying more than one or two fully charged batteries.

By the way, for all you marathon-length event photographers and videographers out there: A spare Sony NP-FZ100 battery will set you back $78, while an Anker 20,100 mAh USB battery goes for just $49. So, no matter your budget, your battery life woes are officially over.
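For a rough sense of what those two prices buy, the sketch below compares them per watt-hour. The prices are the ones quoted above; the NP-FZ100 capacity (2280 mAh at 7.2 V) and the power bank's 3.6 V nominal cell voltage are assumed figures that do not come from this review, and USB conversion losses are ignored, so treat the output as a ballpark only.

```python
def watt_hours(capacity_mah: float, nominal_voltage: float) -> float:
    """Convert a capacity in mAh at a nominal voltage into watt-hours."""
    return capacity_mah / 1000 * nominal_voltage

# Assumed nominal ratings (not from the review)
np_fz100_wh = watt_hours(2280, 7.2)      # camera battery: 2280 mAh at 7.2 V
power_bank_wh = watt_hours(20100, 3.6)   # power bank: 20,100 mAh at 3.6 V cells

# Prices as quoted in the paragraph above
for name, wh, price in [("NP-FZ100", np_fz100_wh, 78),
                        ("USB power bank", power_bank_wh, 49)]:
    print(f"{name}: {wh:.1f} Wh, ${price / wh:.2f} per Wh")
```

Under those assumptions the power bank stores several times the energy of a single camera battery for less money, which is why running the camera from USB power is so attractive for long days.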

Durability

This is one thing I don’t like to speak about until the gear I’m reviewing has been out in the real world for a long time. I’ve been burned before, by cameras that I rushed to review as soon as they were released, and I gave some of them high praise even, only to discover a few weeks/months later that there’s a major issue with durability or functionality, sometimes even on the scale of a mass recall. (*cough*D600*cough*)

Thankfully, we don’t have that problem here, since the A7RIII has been out in the real world for well over a year now. I can confidently report, based on both my own experience and the general consensus from all those who I’ve talked to directly, that this camera is a rock-solid beast. It is designed and built tough, with good overall strength and extensive weather sealing.

It does lack one awesome feature that the Canon EOS R offers, which is the simple but effective use of the mechanical shutter to protect the sensor whenever the camera is off, or when changing lenses. Because, if I’m honest, the Sony A7R3 sensor is a dust magnet, and the sensor cleaner doesn’t usually do more than shake off one or two of the three or five specks of dust that are always landing on the sensor after just a half-day of swapping lenses periodically, especially in drier, static-y environments.

Value

Currently, at just under $3200 and sometimes on sale for less than $2800, there’s no dispute: we have the best value around, if you actually need the specific things that the A7RIII offers compared to your other options.

But, could there be an even better camera out there, for you and your specific needs?

If you don’t plan to make giant prints, and you rarely ever crop your images very much, then you just don’t need 42 megapixels. In fact, it’s actually going to be quite a burden on your memory cards, hard drives, and computer CPU/RAM, especially if you decide to shoot uncompressed raw and rack up a few thousand images each time you take the camera out.

Indeed, the 24 megapixels of the A7III is currently (and will likely remain) the goldilocks resolution for almost all amateurs and many types of working pros. Personally, as a wedding and portrait photographer, I would much rather have “just” 24 megapixels for the long 12-14+ hour weddings that I shoot. It adds up to many terabytes at the end of the year. Especially if you shoot the camera any faster than its slowest continuous drive mode. (You better buy some 128GB SD cards!)
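To see how quickly that adds up, here is a back-of-the-envelope estimate. It uses the pixel dimensions from the spec list and assumes roughly 16 bits stored per pixel for uncompressed raw and roughly 8 for compressed raw, ignoring headers and embedded previews; both bit depths and the 3,000-frame shoot are illustrative assumptions, not figures from this review.

```python
WIDTH, HEIGHT = 7952, 5304  # A7RIII pixel dimensions from the spec list

def raw_size_mb(bits_per_pixel: int) -> float:
    """Approximate raw file size in MB, ignoring headers and embedded previews."""
    return WIDTH * HEIGHT * bits_per_pixel / 8 / 1e6

def shoot_size_gb(frames: int, bits_per_pixel: int) -> float:
    """Approximate storage for a whole shoot, in GB."""
    return frames * raw_size_mb(bits_per_pixel) / 1000

# Assumed storage cost per pixel: ~16 bits uncompressed, ~8 bits compressed
for label, bpp in [("uncompressed", 16), ("compressed", 8)]:
    print(f"{label}: {raw_size_mb(bpp):.0f} MB per frame, "
          f"{shoot_size_gb(3000, bpp):.0f} GB for 3000 frames")
```

Even with these rough numbers, a single long event shot in uncompressed raw would overflow a 128GB card, which is the practical argument for a lower-resolution body on high-volume jobs.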

As a landscape photographer, of course, I truly appreciate the A7RIII’s extra resolution. I would too if I were a fashion, commercial, or any other type of photographer whose priority involved delivering high-res imagery.

We’ll get deeper into which cameras are direct competition or an attractive alternative to the A7RIII later. Let’s wrap up this discussion of value with a quick overview of the closest sibling to the A7RIII, which is of course the A7III.

The differences between them go beyond just a sensor. The A7III has a slightly newer AF system, with just a little bit more borrowed technology from the Sony A9. But, it also has a slightly lower resolution EVF and rear LCD, making the viewfinder shooting experience just a little bit more digital looking. Lastly, partly thanks to its lower megapixel count, and lower resolution screens, the A7III gets even better battery life than the A7RIII. (It goes without saying that you’ll save space on your memory cards and hard drives, too.)

So, it’s not cut-and-dried at all. You might even decide that the A7III is actually a better camera for you and what you shoot. Personally, I certainly might prefer the $1998 A7III if I shot action sports, wildlife, journalism, weddings, and certainly nightscapes, especially if I wasn’t going to be making huge prints of any of those photography genres.

Or, if you’re a serious pro, you need a backup camera anyway, and since they’re physically identical, buy both! The A7III and A7RIII are the best two-camera kit ever conceived. Throw one of your 2.8 zooms on the A7III, and your favorite prime on the A7RIII. As a bonus, you can program “Super-35 Mode” onto one of your remaining customization options, (I like C4 for this) and you’ve got two primes in one!
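The "two primes in one" trick is simple arithmetic, sketched below. It assumes a 1.5x crop factor for Super-35 / APS-C mode and uses a hypothetical 35mm prime as the example lens; neither number comes from this review.

```python
WIDTH, HEIGHT = 7952, 5304  # full-frame pixel dimensions from the spec list
CROP_FACTOR = 1.5           # assumed Super-35 / APS-C crop factor

def super35_view(focal_length_mm: float) -> tuple[float, float]:
    """Return (equivalent focal length in mm, remaining megapixels) in crop mode."""
    equivalent_mm = focal_length_mm * CROP_FACTOR
    # The crop keeps 1/CROP_FACTOR of the pixels along each side of the frame
    cropped_mp = (WIDTH / CROP_FACTOR) * (HEIGHT / CROP_FACTOR) / 1e6
    return equivalent_mm, cropped_mp

eq, mp = super35_view(35)  # hypothetical 35mm prime
print(f"35mm prime in crop mode: ~{eq:.1f}mm equivalent at ~{mp:.1f} MP")
```

In other words, the crop mode still leaves a usable file on a 42-megapixel body, which is what makes the custom-button shortcut worthwhile.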

Sony A7R3, Sony FE 16-35mm f/2.8GM | 1/4000 sec, f/10, ISO 100 (Extreme dynamic range processing applied to this single file)

Cons

This is going to be a short list. In fact, I’ll spoil it for you right now: If you’re already a (happy) Sony shooter, or if you have tried a Sony camera and found it easy to operate, there are essentially zero cons about this camera, aside from the few aforementioned reasons which might incline certain photographers to get an A7III instead.

A very not-so-helpful notification that is often seen on Sony cameras. I really do wish they could have taken the time to write a few details for all function incompatibilities, not just some of them!

Ergonomics & Menus

I’ll get right to the point: as someone who has tested and/or reviewed almost every DSLR camera (and lots of mirrorless cameras) from the last 15 years, from some of the earliest Canon Rebels to the latest 1D and D5 flagships, I have never encountered a more complex camera than the A7R3.

Sony, I suspect in their effort to make the camera attractive to both photographers and videographers alike, has made the camera monumentally customizable.

We’ll get to the sheer learning curve and customizations of the camera in a bit, but first, a word on the physical ergonomics: Basically, Sony has made it clear that they are going to stay focused on compactness and portability, even if it’s just not a comfortable grip for anyone with slightly larger hands.

The argument seems to be clearly divided among those who prefer the compact design, and those who dislike it.

The dedicated AF-ON button is very close to three other main controls, the REC button for video, the rear command dial, and the AF point joystick. With large thumbs, AF operation just isn’t as effortless and intuitive as it could be. Which is a shame, because I definitely love the customizations that have given me instant access to multiple AF modes. I just wish the AF-ON button, and that whole thumb area, was designed better. My already minor fumbling will wane even further with familiarity, but that doesn’t mean it is an optimal design.

By the way, I’m not expecting Sony to make a huge camera that totally defeats one of the main purposes of the mirrorless format. In fact, in my Nikon D850 review, I realized that the camera was in fact too big and that I’m already accustomed to a smaller camera, something along the size of a Nikon D750, or a Canon EOS R.

Speaking of the Canon EOS R, I think all full-frame cameras ought to have a grip like that one. It is a perfect balance between portability and grip comfort. After you hold the EOS R, or even the EOS RP, you’ll realize that there’s no reason for a full-frame mirrorless camera not to have a perfect, deep grip.

As another example, while I applaud Sony for putting the power switch in the right spot, (come on, Canon!) …I strongly dislike their placement of the lens release button. If the lens release button were on the other side, where it normally is on Canon and Nikon, then maybe we could have custom function buttons similar to Nikon’s. These buttons are perfectly positioned for my grip fingers while holding the camera naturally, so I find them effortless to reach compared to Sony’s C1 and C2 buttons.

As I hinted earlier, I strongly suspect that a lot of this ergonomic design is meant to be useful to both photographers and videographers alike. And videographers, more often than not, simply aren’t shooting with the camera hand-held up to their eye; instead, the camera is on a tripod, monopod, slider, or gimbal. In this shooting scenario, buttons are accessed in a totally different way, and in fact, the controls of the latest Sony bodies all make more sense.

It’s a shame, because, for this reason I feel compelled to disclaim that if you absolutely don’t shoot video, you may find that Nikon and/or Canon ergonomics are significantly more user-friendly, whether you’re working with their DSLRs or their mirrorless bodies. (And yes, I actually like the Canon “touch dial”. Read my full Canon EOS R review here.)

Before we move on, though, I need to make one thing clear: if a camera is complicated, but it’s the best professional option on the market, then the responsible thing for a pro to do is to suck it up and master the camera. I actually love such a challenge, because it’s my job and because I’m a camera geek, but I absolutely don’t hold it against even a professional landscape photographer for going with a Nikon Z7, or a professional portrait photographer for going with a Canon EOS R. (Single SD card slot aside.)

Yet another quick-access menu. However, this one cannot be customized.

Customizability

This is definitely the biggest catch-22 of the whole story. The Sony A7R3 is very complex to operate, and even more complex to customize. Of course, it has little choice in the matter, as a pioneer of so many new features and functions. For example, I cannot fault a camera for offering different bitrates for video compression, just because it adds one more item to a menu page. In fact, this is a huge bonus, just like the ability to shoot both uncompressed and compressed .ARW files.

By the way, the “Beep” settings are called “Audio signals”

There are 35 pages of menu items with nearly 200 items total, plus five pages available for custom menu creation, a 2×6 grid of live/viewfinder screen functions, and approximately a dozen physical buttons that can be re-programmed or totally customized.

I actually love customizing cameras, and it’s the very first thing I do whenever I pick up a new camera. I go over every single button, and every single menu item, to see how I can set up the camera so that it is perfect for me. This is a process I’ve always thoroughly enjoyed, that is until the Sony A7-series came along. When I first saw how customizable the camera was, I was grinning. However, it took literally two whole weeks to figure out which button ought to perform which function, and which arrangement was best for the Fn menu, and then last but not least, how to categorize the remaining five pages of menu items I needed to access while shooting. Because even if I memorized all 35 pages, it still wouldn’t be practical to go digging through them to access the various things I need to access in an active scenario.

Then, I started to notice that not every function or setting could be programmed to just any button or Fn menu. Despite offering extensive customization options, (some customization options have 22 pages of options,) there are still a few things that just can’t be done.

“Shoot Mode” is how you change the exposure when the camera’s exposure mode dial is set to video mode. Which is useful if you shoot a lot of video…

For example, it’s not easy to change the exposure mode when the camera’s mode dial itself is set to video mode. You can’t just program “Shooting Mode” to one of the C1/C2 buttons; it can only go in the Fn or custom menu.

As another example, for some reason, you can’t program both E-shutter and Silent Shooting to the Fn menu, even though these functions are so similar that they belong next to each other in any shooter’s memory.

Lastly, because the camera relies so heavily on customization, you may find that you run out of buttons when trying to figure out where to put common things that used to have their own buttons, such as Metering, White Balance, AF Points. Not to mention the handful of new bells and whistles that you might want to program to a physical button, such as switching IBIS on/off, or activating Eye AF.

All in all, the camera is already extremely complex, and yet I feel like it could also use an extra 2-3 buttons, and even more customization for the existing buttons. Which, again, leads me to the conclusion that if you’re looking for an intuitive camera that is effortless to pick up and shoot with, you may have nightmares about the user manual for this thing. And if you don’t even shoot video at all, then like I said, you’re almost better off going with something simpler.

But again, just to make sure we’re still on the same page here: If you’re a working professional, or a serious hobbyist even, you make it work. It’s your job to know your tools! (The Apollo astronauts didn’t say, “ehh, no thanks” just because their capsule was complicated to operate!)

Every camera has quirks. But, not every camera offers the images and a feature set like the A7R3 does. As a camera geek, and as someone who does shoot a decent amount of both photo and video, I’d opt for the A7R3 in a heartbeat.

Sony A7R3, Sony FE 16-35mm f/2.8 GM, PolarPro ND1000 filter | 15 sec, f/14, ISO 100

The A7RIII’s Competition & Alternatives

Now that it’s early 2019, we finally have Canon and Nikon competition in the market of full-frame mirrorless camera platforms. (Not to mention Panasonic, Sigma, Leica…)

So, where does that put this mk3 version of the Sony A7R, a third-generation camera which is part of a system that is now over 5 years old?

Until more competition enters the market, this section of our review can be very simple, thankfully. I’ll be blunt and to the point…

First things first: the Sony A7R3 has them all beat, in terms of overall features and value. You just can’t get a full-frame mirrorless body with this many features, for this price, anywhere else. Not only does the Sony have the market cornered, they have three options with roughly the same level of professional features, when you count the A7III and the A9.

Having said that, here’s the second thing you should know: Canon and Nikon’s new full-frame mirrorless mounts are going to try as hard as they can to out-shine Sony’s FE lens lineup, as soon as possible. Literally the first thing Canon did for its RF mount was a jaw-droppingly good 50mm f/1.2, and of course the massive beast that is the 28-70mm f/2. Oh, and Nikon announced that they’d be resurrecting their legendary “Noct” lens nomenclature, for an absurdly fast 58mm f/0.95.

If you’re at all interested in this type of exotic, high-end glass, the larger size diameters and shorter flange distances of Canon and Nikon’s new FF mounts may prove to have a slight advantage over Sony’s relatively modest E mount.

However, as Sony has already proven, its mount is nothing to scoff at, and is entirely capable of amazing glass with professional results. Two of their newest fast-aperture prime lenses, the 135mm f/1.8 G-Master and the 24mm f/1.4 G-Master, prove this. Both lenses are almost optically flawless, and ready to easily resolve the 42 megapixels of this generation A7R-series camera, and likely the next generation too even if it has a 75-megapixel sensor.

This indicates that although Canon and Nikon may have an advantage when it comes to the upper limits of what is possible with new optics, Sony’s FE lens lineup will be more than enough for most pros.

Sony A7R3, Sony FE 70-300mm G OSS | 128mm, 1/100 sec, f/14, ISO 100

Sony A7RIII Review Verdict & Conclusion

There is no denying that Sony has achieved a huge milestone with the A7R mk3, in every single way. From its price point and feature set to its image quality and durable body, it is quite possibly the biggest threat that its main competitors, Canon and Nikon, face.

So, the final verdict for this review is very simple: If you want the most feature-rich full-frame camera (and system) that $3,200 can buy you, (well, get you started in) …the best investment you can make is the Sony A7RIII.

(By the way, it is currently just $2798, as of March 2019, and if you missed this particular sale price, just know that the camera might go on sale for $400 off, sooner or later.)

Sony A7R3, Sony FE 70-300mm G OSS | 1/400 sec, f/10, ISO 100

Really, the only major drawback for the “average” photographer is the learning curve, which even after three generations still feels like a sheer cliff when you first pick up the camera and look through its massive menu interface and customizations. The A7R3 body (like the A9 and A7III, for that matter) is not for the “casual” shooter who wants to just leave the camera in “green box mode”, and expect it to be simple to operate. I’ve been testing and reviewing digital cameras for over 15 years now, and the A7RIII is by far the most complex camera I’ve ever picked up.

That shouldn’t be a deterrent for the serious pro, because these cameras are literally the tools of our trade. We don’t have to get a degree in electrical engineering or mechanical engineering in order to be photographers, we just have to master our camera gear, and of course the creativity that happens after we’ve mastered that gear.

However, a serious pro who is considering switching from Nikon or Canon should still be aware that not everything you’re used to with those camera bodies is possible, let alone effortless feeling, on this Sony. The sheer volume of functionality related to focusing alone will require you to spend many hours learning how the camera works, and then customizing its different options to the custom buttons and custom menus so that you can achieve something that mimics simplicity, and effortless operation.

Sony A7R3, Sony 16-35mm f/2.8 GM | 1/4 sec, f/14, ISO 100

Personally, I’m always up for a challenge. It took me a month of learning, customizing, and re-customizing this mk3-generation of Sony camera bodies, but I got it the way I want it, and now I get the benefits of things like having both the witchcraft/magic that is Eye-AF, and the traditional “oldschool” AF methods, at my fingertips. As a working pro who shoots in active conditions, from portraits and weddings to action sports and stage performance, it has been absolutely worth it to tackle the steepest learning curve of my entire career. I have confidence that you’re up to the task, too.

from SLR Lounge https://www.slrlounge.com/sony-a7riii-review-best-full-frame-pro-mirrorless-camera/ via IFTTT


What’s in a camera? A lens, a shutter, a light-sensitive surface and, increasingly, a set of highly sophisticated algorithms. While the physical components are still improving bit by bit, Google, Samsung and Apple are increasingly investing in (and showcasing) improvements wrought entirely from code. Computational photography is the only real battleground now.

The reason for this shift is pretty simple: Cameras can’t get too much better than they are right now, or at least not without some rather extreme shifts in how they work. Here’s how smartphone makers hit the wall on photography, and how they were forced to jump over it.

Not enough buckets

An image sensor one might find in a digital camera

The sensors in our smartphone cameras are truly amazing things. The work that’s been done by the likes of Sony, OmniVision, Samsung and others to design and fabricate tiny yet sensitive and versatile chips is really pretty mind-blowing. For a photographer who’s watched the evolution of digital photography from the early days, the level of quality these microscopic sensors deliver is nothing short of astonishing.

But there’s no Moore’s Law for those sensors. Or rather, just as Moore’s Law is now running into quantum limits at sub-10-nanometer levels, camera sensors hit physical limits much earlier. Think about light hitting the sensor as rain falling on a bunch of buckets; you can place bigger buckets, but there are fewer of them; you can put smaller ones, but they can’t catch as much each; you can make them square or stagger them or do all kinds of other tricks, but ultimately there are only so many raindrops and no amount of bucket-rearranging can change that.
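The bucket trade-off can be sketched numerically. The example below assumes an idealized sensor limited only by photon shot noise, where a pixel that catches N photons has a signal-to-noise ratio of about the square root of N; the total photon count is a hypothetical round number chosen for easy arithmetic, not a measurement of any real sensor.

```python
import math

def per_pixel_snr(total_photons: float, pixel_count: float) -> float:
    """Shot-noise-limited SNR of one pixel: sqrt of the photons it catches."""
    photons_per_pixel = total_photons / pixel_count
    return math.sqrt(photons_per_pixel)

# Hypothetical number of photons reaching the whole sensor in one exposure
TOTAL_PHOTONS = 1.2e10

for megapixels in (12, 48):
    pixels = megapixels * 1e6
    snr = per_pixel_snr(TOTAL_PHOTONS, pixels)
    print(f"{megapixels} MP: {TOTAL_PHOTONS / pixels:.0f} photons per pixel, "
          f"SNR ~{snr:.0f}")
```

Quadrupling the number of buckets on the same area quarters the rain each one catches and halves its per-pixel signal-to-noise ratio, while the total amount of rain stays the same.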

Sensors are getting better, yes, but not only is this pace too slow to keep consumers buying new phones year after year (imagine trying to sell a camera that’s 3 percent better), but phone manufacturers often use the same or similar camera stacks, so the improvements (like the recent switch to backside illumination) are shared amongst them. So no one is getting ahead on sensors alone.


Perhaps they could improve the lens? Not really. Lenses have arrived at a level of sophistication and perfection that is hard to improve on, especially at small scale. To say space is limited inside a smartphone’s camera stack is a major understatement — there’s hardly a square micron to spare. You might be able to improve them slightly as far as how much light passes through and how little distortion there is, but these are old problems that have been mostly optimized.

The only way to gather more light would be to increase the size of the lens, either by having it A: project outwards from the body; B: displace critical components within the body; or C: increase the thickness of the phone. Which of those options does Apple seem likely to find acceptable?

In retrospect it was inevitable that Apple (and Samsung, and Huawei, and others) would have to choose D: none of the above. If you can’t get more light, you just have to do more with the light you’ve got.

Isn’t all photography computational?

The broadest definition of computational photography includes just about any digital imaging at all. Unlike film, even the most basic digital camera requires computation to turn the light hitting the sensor into a usable image. And camera makers differ widely in the way they do this, producing different JPEG processing methods, RAW formats and color science.

For a long time there wasn’t much of interest on top of this basic layer, partly from a lack of processing power. Sure, there have been filters, and quick in-camera tweaks to improve contrast and color. But ultimately these just amount to automated dial-twiddling.

The first real computational photography features were arguably object identification and tracking for the purposes of autofocus. Face and eye tracking made it easier to capture people in complex lighting or poses, and object tracking made sports and action photography easier as the system adjusted its AF point to a target moving across the frame.

These were early examples of deriving metadata from the image and using it proactively, either to improve that image or to feed forward to the next.

In DSLRs, autofocus accuracy and flexibility are marquee features, so this early use case made sense; but outside a few gimmicks, these “serious” cameras generally deployed computation in a fairly vanilla way. Faster image sensors meant faster sensor offloading and burst speeds, some extra cycles dedicated to color and detail preservation and so on. DSLRs weren’t being used for live video or augmented reality. And until fairly recently, the same was true of smartphone cameras, which were more like point and shoots than the all-purpose media tools we know them as today.

The limits of traditional imaging

Despite experimentation here and there and the occasional outlier, smartphone cameras are pretty much the same. They have to fit within a few millimeters of depth, which limits their optics to a few configurations. The size of the sensor is likewise limited: a DSLR might use an APS-C sensor 23 by 15 millimeters across, making an area of 345 mm²; the sensor in the iPhone XS, probably the largest and most advanced on the market right now, is 7 by 5.8 mm or so, for a total of 40.6 mm².

Roughly speaking, it’s collecting an order of magnitude less light than a “normal” camera, but is expected to reconstruct a scene with roughly the same fidelity, colors and such — around the same number of megapixels, too. On its face this is sort of an impossible problem.
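
As a rough check of that "order of magnitude" figure, the two sensor areas quoted above can be compared directly. This is only a sketch: it uses the approximate dimensions given in the text and assumes that, at the same f-number and exposure time, the light gathered scales with sensor area.

```python
# Approximate sensor dimensions in millimetres, as quoted in the text.
APS_C_MM = (23.0, 15.0)
IPHONE_XS_MM = (7.0, 5.8)


def sensor_area(dims_mm):
    """Return the sensor area in square millimetres."""
    width, height = dims_mm
    return width * height


aps_c_area = sensor_area(APS_C_MM)      # 345 mm^2
phone_area = sensor_area(IPHONE_XS_MM)  # about 40.6 mm^2

# Assumption: total light gathered is proportional to sensor area.
ratio = aps_c_area / phone_area
print(f"APS-C: {aps_c_area:.1f} mm^2, phone: {phone_area:.1f} mm^2, ratio: {ratio:.1f}x")
```

The ratio comes out at about 8.5, so "an order of magnitude" is a fair rounding rather than an exact figure.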

Improvements in the traditional sense help out — optical and electronic stabilization, for instance, make it possible to expose for longer without blurring, collecting more light. But these devices are still being asked to spin straw into gold.

Luckily, as I mentioned, everyone is pretty much in the same boat. Because of the fundamental limitations in play, there’s no way Apple or Samsung can reinvent the camera or come up with some crazy lens structure that puts them leagues ahead of the competition. They’ve all been given the same basic foundation.

All competition therefore comprises what these companies build on top of that foundation.

Image as stream

The key insight in computational photography is that an image coming from a digital camera’s sensor isn’t a snapshot, the way it is generally thought of. In traditional cameras the shutter opens and closes, exposing the light-sensitive medium for a fraction of a second. That’s not what digital cameras do, or at least not what they can do.

A camera’s sensor is constantly bombarded with light; rain is constantly falling on the field of buckets, to return to our metaphor, but when you’re not taking a picture, these buckets are bottomless and no one is checking their contents. But the rain is falling nevertheless.

To capture an image the camera system picks a point at which to start counting the raindrops, measuring the light that hits the sensor. Then it picks a point to stop. For the purposes of traditional photography, this enables nearly arbitrarily short shutter speeds, which isn’t much use to tiny sensors.

Why not just always be recording? Theoretically you could, but it would drain the battery and produce a lot of heat. Fortunately, in the last few years image processing chips have gotten efficient enough that they can, when the camera app is open, keep a certain duration of that stream — limited resolution captures of the last 60 frames, for instance. Sure, it costs a little battery, but it’s worth it.
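
Keeping "the last 60 frames" is essentially a ring buffer. The sketch below is a hypothetical illustration, not how any particular phone implements it: the `FrameBuffer` class and the integer frame IDs are stand-ins invented for the example.

```python
from collections import deque

BUFFER_FRAMES = 60  # matches the "last 60 frames" example in the text


class FrameBuffer:
    """Keeps only the most recent frames from a continuous sensor stream."""

    def __init__(self, capacity=BUFFER_FRAMES):
        # A deque with maxlen discards the oldest item once it is full.
        self._frames = deque(maxlen=capacity)

    def push(self, frame):
        self._frames.append(frame)

    def recent(self, count):
        """Return up to `count` of the newest frames, oldest first."""
        if count <= 0:
            return []
        return list(self._frames)[-count:]

    def __len__(self):
        return len(self._frames)


buffer = FrameBuffer()
for frame_id in range(200):  # stand-in for frames arriving from the sensor
    buffer.push(frame_id)

print(len(buffer))       # 60
print(buffer.recent(3))  # [197, 198, 199]
```

Memory use stays fixed no matter how long the camera app is open, which is why the cost is "a little battery" rather than unbounded storage.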

Access to the stream allows the camera to do all kinds of things. It adds context.

Context can mean a lot of things. It can be photographic elements like the lighting and distance to subject. But it can also be motion, objects, intention.

A simple example of context is what is commonly referred to as HDR, or high dynamic range imagery. This technique uses multiple images taken in a row with different exposures to more accurately capture areas of the image that might have been underexposed or overexposed in a single exposure. The context in this case is understanding which areas those are and how to intelligently combine the images together.

This can be accomplished with exposure bracketing, a very old photographic technique, but it can be accomplished instantly and without warning if the image stream is being manipulated to produce multiple exposure ranges all the time. That’s exactly what Google and Apple now do.
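
To make the bracketing idea concrete, here is a minimal sketch of merging bracketed exposures. It is not Google's or Apple's pipeline: the clipping sensor model, the "hat" weighting and all function names are assumptions chosen for illustration. Each frame's pixel value is divided by its exposure time to estimate scene brightness, and pixels near the clipped ends are trusted least.

```python
def simulate_exposure(radiances, seconds):
    """Hypothetical sensor model: linear response that clips at 1.0."""
    return [min(1.0, r * seconds) for r in radiances]


def hat_weight(value):
    """Trust mid-tones most; give fully clipped pixels zero weight."""
    return 1.0 - abs(2.0 * value - 1.0)


def merge_bracket(frames, exposure_times):
    """Merge bracketed frames (lists of 0..1 linear values) into brightness estimates."""
    merged = []
    for pixel_values in zip(*frames):
        weighted_sum = 0.0
        weight_total = 0.0
        for value, seconds in zip(pixel_values, exposure_times):
            weight = hat_weight(value)
            weighted_sum += weight * (value / seconds)
            weight_total += weight
        if weight_total == 0.0:
            # Every frame is clipped at this pixel: fall back to a plain average.
            estimates = [v / t for v, t in zip(pixel_values, exposure_times)]
            merged.append(sum(estimates) / len(estimates))
        else:
            merged.append(weighted_sum / weight_total)
    return merged


scene = [2.0, 20.0, 80.0]   # made-up brightness values: shadow, mid-tone, highlight
times = [0.01, 0.04, 0.16]  # short, medium and long exposures in seconds
frames = [simulate_exposure(scene, t) for t in times]

merged = merge_bracket(frames, times)
print([round(value, 3) for value in merged])
```

In this toy scene the long exposure clips the mid-tone and the highlight, and the medium exposure clips the highlight, yet the merged result recovers all three values because the clipped samples receive zero weight.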

Something more complex is of course the “portrait mode” and artificial background blur or bokeh that is becoming more and more common. Context here is not simply the distance of a face, but an understanding of what parts of the image constitute a particular physical object, and the exact contours of that object. This can be derived from motion in the stream, from stereo separation in multiple cameras, and from machine learning models that have been trained to identify and delineate human shapes.

These techniques are only possible, first, because the requisite imagery has been captured from the stream in the first place (an advance in image sensor and RAM speed), and second, because companies developed highly efficient algorithms to perform these calculations, trained on enormous data sets and immense amounts of computation time.

What’s important about these techniques, however, is not simply that they can be done, but that one company may do them better than the other. And this quality is entirely a function of the software engineering work and artistic oversight that goes into them.

A system to tell good fake bokeh from bad

DxOMark did a comparison of some early artificial bokeh systems; the results, however, were somewhat unsatisfying. It was less a question of which looked better, and more of whether they failed or succeeded in applying the effect. Computational photography is in such early days that it is enough for the feature to simply work to impress people. Like a dog walking on its hind legs, we are amazed that it occurs at all.

But Apple has pulled ahead with what some would say is an almost absurdly over-engineered solution to the bokeh problem. It didn’t just learn how to replicate the effect — it used the computing power it has at its disposal to create virtual physical models of the optical phenomenon that produces it. It’s like the difference between animating a bouncing ball and simulating realistic gravity and elastic material physics.

Why go to such lengths? Because Apple knows what is becoming clear to others: that it is absurd to worry about the limits of computational capability at all. There are limits to how well an optical phenomenon can be replicated if you are taking shortcuts like Gaussian blurring. There are no limits to how well it can be replicated if you simulate it at the level of the photon.

Similarly the idea of combining five, 10, or 100 images into a single HDR image seems absurd, but the truth is that in photography, more information is almost always better. If the cost of these computational acrobatics is negligible and the results measurable, why shouldn’t our devices be performing these calculations? In a few years they too will seem ordinary.

If the result is a better product, the computational power and engineering ability have been deployed with success; just as Leica or Canon might spend millions to eke fractional performance improvements out of a stable optical system like a $2,000 zoom lens, Apple and others are spending money where they can create value: not in glass, but in silicon.

Double vision

One trend that may appear to conflict with the computational photography narrative I’ve described is the advent of systems comprising multiple cameras.

This technique doesn’t add more light to the sensor — that would be prohibitively complex and expensive optically, and probably wouldn’t work anyway. But if you can free up a little space lengthwise (rather than depthwise, which we found impractical) you can put a whole separate camera right by the first that captures photos extremely similar to those taken by the first.

Now, if all you want to do is re-enact Wayne’s World at an imperceptible scale (camera one, camera two… camera one, camera two…) that’s all you need. But no one actually wants to take two images simultaneously, a fraction of an inch apart.

These two cameras operate either independently (as wide-angle and zoom) or one is used to augment the other, forming a single system with multiple inputs.

The thing is that taking the data from one camera and using it to enhance the data from another is — you guessed it — extremely computationally intensive. It’s like the HDR problem of multiple exposures, except far more complex as the images aren’t taken with the same lens and sensor. It can be optimized, but that doesn’t make it easy.

So although adding a second camera is indeed a way to improve the imaging system by physical means, the possibility only exists because of the state of computational photography. And it is the quality of that computational imagery that results in a better photograph — or doesn’t. The Light camera with its 16 sensors and lenses is an example of an ambitious effort that simply didn’t produce better images, though it was using established computational photography techniques to harvest and winnow an even larger collection of images.

Light and code

The future of photography is computational, not optical. This is a massive shift in paradigm and one that every company that makes or uses cameras is currently grappling with. There will be repercussions in traditional cameras like SLRs (rapidly giving way to mirrorless systems), in phones, in embedded devices and everywhere that light is captured and turned into images.

Sometimes this means that the cameras we hear about will be much the same as last year’s, as far as megapixel counts, ISO ranges, f-numbers and so on. That’s okay. With some exceptions these have gotten as good as we can reasonably expect them to be: Glass isn’t getting any clearer, and our vision isn’t getting any more acute. The way light moves through our devices and eyeballs isn’t likely to change much.

What those devices do with that light, however, is changing at an incredible rate. This will produce features that sound ridiculous, or pseudoscience babble on stage, or drained batteries. That’s okay, too. Just as we have experimented with other parts of the camera for the last century and brought them to varying levels of perfection, we have moved onto a new, non-physical “part” which nonetheless has a very important effect on the quality and even possibility of the images we take.

via TechCrunch

How I Created a 16-Gigapixel Photo of Quito, Ecuador

A few years ago, I flew out to Ecuador to create a high-resolution image of the capital city of Quito. The final image turned out to be 16 gigapixels in size, and at a printed size of over 25 meters (~82 feet), it allows people to see jaw-dropping detail even when viewed from a few inches away.

I’ve thought that gigapixel technology was amazing ever since I first saw it around 8 or 9 years ago. It combines everything that I like about photography: the adventure of trying to capture a complex image in challenging conditions as well as using high tech equipment, powerful computers, and advanced image processing software to create the final image.

I’ve been doing this for a while now, so I thought that I would share some of my experiences with you all so that you can make your own incredible gigapixel image as well.

The Gist

The picture was made with the 50-megapixel Canon 5DSR and a 100-400mm lens at 400mm. It consists of 912 photos, each with a RAW file size of over 60MB. A robotic camera mount was used to capture the frames, and digital stitching software was then used to combine them into a uniform high-resolution picture.

With a resolution of 300,000×55,313 pixels, the picture is the highest resolution photo of Quito ever taken. This allows you to instantly view and explore high-resolution images that are several gigabytes in size.
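
The headline numbers can be sanity-checked with a little arithmetic. This is a rough sketch using only the figures quoted above; the print width is treated as exactly 25 meters and the per-frame values as round numbers, so the results are approximate. Attributing the gap between captured and final pixels to overlap and cropping is an assumption, not something measured.

```python
WIDTH_PX = 300_000
HEIGHT_PX = 55_313
PRINT_WIDTH_M = 25.0   # "over 25 meters" in the text; treated as exactly 25 here
FRAME_COUNT = 912
FRAME_MEGAPIXELS = 50  # nominal camera resolution as quoted
RAW_FILE_MB = 60       # "over 60MB" per frame; treated as a lower bound

# Final stitched image size.
gigapixels = WIDTH_PX * HEIGHT_PX / 1e9

# Print density if the full width is printed 25 m wide (1 inch = 0.0254 m).
pixels_per_inch = WIDTH_PX / (PRINT_WIDTH_M / 0.0254)

# Pixels captured in total versus pixels kept after stitching.
captured_gigapixels = FRAME_COUNT * FRAME_MEGAPIXELS / 1000
kept_fraction = gigapixels / captured_gigapixels

# Minimum RAW storage for the whole shoot.
raw_storage_gb = FRAME_COUNT * RAW_FILE_MB / 1000

print(f"Final image: {gigapixels:.2f} gigapixels")
print(f"Print density at 25 m wide: {pixels_per_inch:.1f} ppi")
print(f"Captured: {captured_gigapixels:.1f} gigapixels, kept: {kept_fraction:.0%}")
print(f"RAW storage: at least {raw_storage_gb:.1f} GB")
```

At roughly 300 pixels per inch, the 25-meter print sits at a common photo-print density, which is consistent with the claim that detail holds up from a few inches away. Only about a third of the captured pixels end up in the final image, presumably because neighbouring frames overlap so the stitching software can align them.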

Site Selection

The first step in taking the photo is site selection. I went around Quito and viewed several different sites. Some of the sites I felt were too low to the ground and didn’t give a wide enough panorama. Other sites were difficult to access, or were high up but still not able to give the wide panoramic view that I was looking for.

I finally settled on taking the image from near the top of the Pichincha Volcano. Pichincha is classified as a stratovolcano and its peak is over 15,000ft high. I was able to access the spot via a cable car and it gave a huge panoramic vista of the entire city as well as all the volcanoes that surround Quito.

The only drawback that I saw to the site is that I felt that it was a little too far away from the city and I didn’t think that people would be able to see any detail in the city when they zoomed in. To fix this I decided to choose a site a bit further down from the visitor center. That meant that we would have to carry all the equipment there (which isn’t easy at high altitudes) but I felt that it would give the best combination of a great panoramic view and a position close enough to the city for detail to be captured.

The Setup

The site was surrounded by very tall grass as well as a little bit of a hill that could block the complete view so I decided to set up three levels of scaffolding and shoot from the top of that. There wasn’t any power at the site since it was on the side of a volcano so we had to bring a small generator with us.