#ai and facial recognition

Text

Artificial Intelligence-Based Face Recognition

Modern technology keeps producing innovations that make life easier and more pleasant. Face recognition is often described as one of the least intrusive and fastest forms of biometric verification. To verify a person's identity, the software uses deep learning techniques to compare a live image with a previously stored facial print. The technology is built on image processing and machine learning. Face recognition has attracted significant research interest because of its use in many security applications, such as airports, criminal detection, face tracking, and forensics. Unlike palm prints, iris scans, and fingerprints, face biometrics can be collected non-intrusively.

Faces can be captured without the user's knowledge and then used for security-related applications such as criminal detection, face tracking, airport security, and forensic surveillance systems. Face recognition starts by extracting facial images from video or surveillance camera footage and comparing them with a stored database. Building that database involves training on known photos, labeling them with known classes (identities), and storing them. When a test image is sent to the system, it is classified and compared with the stored database.
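A minimal sketch of this enroll-then-classify workflow, in Python. It assumes each photo has already been converted into a fixed-length list of numbers (that step is not shown), and it uses a simple nearest-centroid rule: each person's enrolled vectors are averaged, and a test vector is assigned to the closest average. The function names and the classification rule are illustrative assumptions, not a description of any particular product.

```python
import math

# Hypothetical in-memory "database": identity label -> enrolled feature vectors.
Database = dict[str, list[list[float]]]


def enroll(db: Database, label: str, vector: list[float]) -> None:
    """Store one known photo's feature vector under its class (the person's label)."""
    db.setdefault(label, []).append(vector)


def centroid(vectors: list[list[float]]) -> list[float]:
    """Average an identity's enrolled vectors component by component."""
    return [sum(component) / len(vectors) for component in zip(*vectors)]


def classify(db: Database, test_vector: list[float]) -> str:
    """Return the label whose centroid is nearest (Euclidean) to the test vector."""
    return min(db, key=lambda label: math.dist(centroid(db[label]), test_vector))


# Usage with made-up two-dimensional vectors.
db: Database = {}
enroll(db, "alice", [1.0, 2.0])
enroll(db, "alice", [1.2, 2.2])
enroll(db, "bob", [5.0, 5.0])
print(classify(db, [1.1, 2.0]))  # alice
```

Averaging per identity keeps the comparison cheap (one distance per person). The trade-off is that a single average can blur together very different photos of the same person.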

Face recognition

Face recognition with artificial intelligence (AI) is a computer vision technique that identifies a person from their face in an image or video. It combines deep learning, computer vision algorithms, and image processing, which together allow a system to detect, recognize, and verify faces in digital photos or videos. The technology has become popular across a wide range of applications, including smartphone unlocking, door unlocking, passport verification, security systems, and medical applications. Some models can also recognize emotions from facial expressions.

Difference between Face recognition & Face detection

Face detection is the process of finding a face within an image or video feed, whereas face recognition is the process of identifying whose face it is, distinguishing people based on their facial characteristics. Face detection is considerably simpler and is the first phase of the face recognition process. On its own, it can be used for applications such as image labeling or adjusting a camera shot around a detected face. Face recognition uses more advanced processing, such as feature point extraction and comparison algorithms, to determine a person's identity, and can be employed in applications such as automatic attendance systems or security screenings.

Image Processing and Machine learning

Computer vision is the field concerned with processing and interpreting images using computers. It focuses on a high-level understanding of digital images or videos. The goal is to automate tasks that the human visual system can perform, so a computer should be able to distinguish items like a human face, a lamppost, or even a statue.

OpenCV is an open-source library for computer vision problems, written mainly in C++ and usable from Python through its Python bindings. OpenCV was developed by Intel in 1999 and later sponsored by Willow Garage.

Machine learning

A machine learning algorithm takes a dataset as input and learns from it. In other words, it learns the relationship between the input data and the output data by recognizing patterns in the input, and produces a model from them. For example, to determine whose face is present in a given photograph, several factors might be treated as a pattern: the height and width of the face, the color of the face, and the width of other facial features, such as the nose. Raw height and width measurements may be unreliable, since the image could be rescaled so that the face appears smaller. However, rescaling leaves ratios unchanged: the ratio of the face's height to its width stays the same.
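The rescaling argument can be checked with a few lines of Python. Scaling an image multiplies the face's height and width by the same factor, so their ratio is unchanged. The pixel values below are invented for illustration.

```python
import math


def aspect_ratio(height: float, width: float) -> float:
    """Height-to-width ratio of the face region."""
    return height / width


def rescale(height: float, width: float, factor: float) -> tuple[float, float]:
    """Scale both dimensions by the same factor, as resizing an image does."""
    return height * factor, width * factor


# Made-up measurements in pixels, for illustration only.
original = (240.0, 160.0)
smaller = rescale(*original, factor=0.25)

print(smaller)                  # (60.0, 40.0): the absolute sizes changed
print(aspect_ratio(*original))  # 1.5
print(aspect_ratio(*smaller))   # 1.5: the ratio did not
print(math.isclose(aspect_ratio(*rescale(*original, factor=0.3)), 1.5))  # True
```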

There is a pattern here: different faces have different dimensions, and similar faces have similar dimensions. The difficulty is that machine learning algorithms can only work with numbers, so a "face" (or any other element of the training set) must be represented numerically. This numerical representation is known as a feature vector: a list of numbers arranged in a specified order. As a simple example, we can map a "face" into a feature vector containing features such as the height of the face (cm), the width of the face (cm), the average color of the face (R, G, B), the lip width (cm), and the height of the nose (cm).

Essentially, given a picture, we can turn it into a feature vector by measuring each of these values in the same fixed order.
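As a sketch, the feature vector described above could be modelled as a small Python data class whose field order defines the order of the numbers in the vector. The field names and the sample values are hypothetical; a real system would measure them from the image rather than hard-code them.

```python
from dataclasses import astuple, dataclass


@dataclass(frozen=True)
class FaceFeatures:
    """The example features listed above; field order fixes the vector order."""
    face_height_cm: float
    face_width_cm: float
    avg_red: float    # average color of the face, each channel on a 0-255 scale
    avg_green: float
    avg_blue: float
    lip_width_cm: float
    nose_height_cm: float

    def to_vector(self) -> list[float]:
        """Flatten the measurements into a feature vector, in field order."""
        return list(astuple(self))


# Made-up measurements for one photograph.
face = FaceFeatures(23.1, 15.2, 224.0, 172.0, 140.0, 5.1, 4.8)
print(face.to_vector())  # [23.1, 15.2, 224.0, 172.0, 140.0, 5.1, 4.8]
```

Because every face goes through the same class, position 0 is always face height, position 2 is always the red channel, and so on. This is what makes two vectors comparable number by number.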

Many other features could be obtained from the photograph, such as hair color, facial hair, and spectacles. Face recognition technology relies on machine learning for two primary functions:

1. Deriving the feature vector: It is impossible to enumerate all of the features manually because there are so many. A machine learning system can learn many of these features automatically. For example, a complex feature could be the ratio of nose height to forehead width.

2. Matching algorithms: Once the feature vectors have been produced, a machine learning algorithm must match a new image against the collection of feature vectors in the corpus.
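The matching step can be sketched as a nearest-neighbour search over the corpus. This Python sketch assumes the corpus is a list of (name, feature vector) pairs. Because the features use different units (centimetres vs. 0-255 color values), it first standardizes each feature to a z-score so that no single feature dominates the Euclidean distance. Both the standardization and the 1-nearest-neighbour rule are illustrative choices, not the method of any specific system.

```python
import math
import statistics


def feature_stats(vectors: list[list[float]]) -> tuple[list[float], list[float]]:
    """Mean and (population) standard deviation of each feature across the corpus."""
    columns = list(zip(*vectors))
    means = [statistics.fmean(col) for col in columns]
    # A feature with zero spread gets divisor 1.0 to avoid dividing by zero.
    stdevs = [statistics.pstdev(col) or 1.0 for col in columns]
    return means, stdevs


def standardize(vector: list[float], means: list[float], stdevs: list[float]) -> list[float]:
    """Convert each feature to a z-score so cm and 0-255 color values are comparable."""
    return [(x - m) / s for x, m, s in zip(vector, means, stdevs)]


def match(corpus: list[tuple[str, list[float]]], query: list[float]) -> str:
    """Return the name of the corpus entry closest to the query (1-nearest neighbour)."""
    means, stdevs = feature_stats([vec for _, vec in corpus])
    q = standardize(query, means, stdevs)
    name, _vec = min(
        corpus, key=lambda entry: math.dist(standardize(entry[1], means, stdevs), q)
    )
    return name


# Made-up corpus: [face height in cm, average red value on a 0-255 scale].
corpus = [("alice", [23.0, 200.0]), ("bob", [25.0, 120.0]), ("carol", [21.0, 160.0])]
print(match(corpus, [23.2, 195.0]))  # alice
```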

Face Recognition Operations

Facial recognition systems differ, and different software uses different methods to achieve face recognition, but the general stepwise procedure is as follows.

Face Detection: First, the camera detects and locates a face. A face is detected most reliably when the subject looks directly at the camera. With technological improvements, detection now also works when the subject's pose differs slightly from facing the camera head-on.

Face Analysis: A snapshot of the face is captured and analyzed. Most facial recognition systems use 2D images rather than 3D, since 2D images are easier to compare with those in a database. The software measures features such as the distance between the eyes and the curve of the cheekbones.

Image to Data Conversion: The facial traits are then converted into a mathematical representation, a set of numbers. This numerical code is called a face print. Just as every person has a unique fingerprint, each person has a distinct face print.
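The image-to-data step can be illustrated with hand-picked geometry. This hypothetical Python sketch assumes that 2D landmark points (eyes, nose tip, chin) have already been located by some detector, which is not shown. It turns them into a list of pairwise distances divided by the distance between the eyes, so the same face photographed larger or smaller produces the same numbers. Systems built on deep learning, as described earlier in this post, typically learn the numeric representation with a neural network instead of computing fixed distances like this.

```python
import itertools
import math

Point = tuple[float, float]


def face_print(landmarks: dict[str, Point]) -> list[float]:
    """Turn named 2D landmarks into a scale-invariant list of distances.

    Every pairwise distance is divided by the inter-eye distance. Requires
    'left_eye' and 'right_eye' keys (hypothetical landmark names).
    """
    eye_gap = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    names = sorted(landmarks)  # fixed order so prints from different photos line up
    return [
        math.dist(landmarks[a], landmarks[b]) / eye_gap
        for a, b in itertools.combinations(names, 2)
    ]


# Made-up pixel coordinates, and the same face at twice the size.
face = {"left_eye": (30.0, 40.0), "right_eye": (70.0, 40.0),
        "nose_tip": (50.0, 60.0), "chin": (50.0, 100.0)}
bigger = {name: (2 * x, 2 * y) for name, (x, y) in face.items()}

print(len(face_print(face)))  # 6 (one distance per pair of the 4 landmarks)
print(all(math.isclose(a, b) for a, b in zip(face_print(face), face_print(bigger))))  # True
```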

Match Finding: Next, the face print is compared with a database of other face prints. The database contains photographs with identifying information attached. The system then searches the database for a face print that matches yours. If it finds one, it returns the match together with any linked information, such as a name and address, depending on what is stored in the database.
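Match finding can be sketched as a search that returns the stored record (face print plus linked information) most similar to the new face print. The record is returned only if the similarity clears a threshold, so an unknown face is reported as "no match" rather than being matched to a stranger. This Python sketch uses cosine similarity. The similarity measure, the threshold value, and the record layout are assumptions for illustration.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means the vectors point the same way; assumes neither is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def find_match(database: list[dict], probe: list[float],
               threshold: float = 0.9) -> Optional[dict]:
    """Return the most similar record, or None if even the best is below threshold.

    The 0.9 default is an arbitrary illustrative value, not a recommended setting.
    """
    if not database:
        return None
    best = max(database, key=lambda record: cosine_similarity(record["print"], probe))
    if cosine_similarity(best["print"], probe) < threshold:
        return None  # report "no match" instead of forcing the closest face
    return best


# Hypothetical records: a face print plus the linked information.
database = [
    {"name": "Person A", "print": [0.9, 0.1, 0.3]},
    {"name": "Person B", "print": [0.1, 0.8, 0.5]},
]
result = find_match(database, [0.88, 0.12, 0.31])
print(result["name"] if result else "no match")  # Person A
print(find_match(database, [0.0, 0.0, 1.0]))      # None
```

Where the threshold is set governs the trade-off between false matches and missed matches. A threshold that is too low is how a system ends up pointing at the wrong person.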

Conclusion

In conclusion, the evolution of facial recognition technology powered by artificial intelligence has paved the way for groundbreaking innovations in various industries. From enhancing security measures to enabling seamless user experiences, AI-based face recognition has proven to be a versatile and invaluable tool.

#ai face identification#ai face match#ai face recognition#ai face recognition online#ai and facial recognition

0 notes

Text

Parts of Alberta’s personal information protection legislation have been ruled unconstitutional. But the ruling from Court of King’s Bench Justice Colin Feasby also upheld an order to stop an American facial recognition company from collecting images of Albertans. Clearview AI scrapes the internet and social media for images of people and adds them to a database, which it markets to law enforcement agencies as a facial recognition tool.

Tagging: @newsfromstolenland @abpoli

#ai scraping#personal protection#clearview ai#facial recognition#personal information#cdnpoli#canada#canadian politics#canadian news#canadian#alberta

67 notes


Text

Hypothetical AI election disinformation risks vs real AI harms

I'm on tour with my new novel The Bezzle! Catch me TONIGHT (Feb 27) in Portland at Powell's. Then, onto Phoenix (Changing Hands, Feb 29), Tucson (Mar 9-12), and more!

You can barely turn around these days without encountering a think-piece warning of the impending risk of AI disinformation in the coming elections. But a recent episode of This Machine Kills podcast reminds us that these are hypothetical risks, and there is no shortage of real AI harms:

https://soundcloud.com/thismachinekillspod/311-selling-pickaxes-for-the-ai-gold-rush

The algorithmic decision-making systems that increasingly run the back-ends to our lives are really, truly very bad at doing their jobs, and worse, these systems constitute a form of "empiricism-washing": if the computer says it's true, it must be true. There's no such thing as racist math, you SJW snowflake!

https://slate.com/news-and-politics/2019/02/aoc-algorithms-racist-bias.html

Nearly 1,000 British postmasters were wrongly convicted of fraud on the strength of evidence from Horizon, the faulty AI fraud-hunting system that Fujitsu provided to the Royal Mail. They had their lives ruined by this faulty AI, many went to prison, and at least four of the AI's victims killed themselves:

https://en.wikipedia.org/wiki/British_Post_Office_scandal

Tenants across America have seen their rents skyrocket thanks to RealPage's landlord price-fixing algorithm, which deployed the time-honored defense: "It's not a crime if we commit it with an app":

https://www.propublica.org/article/doj-backs-tenants-price-fixing-case-big-landlords-real-estate-tech

Housing, you'll recall, is pretty foundational in the human hierarchy of needs. Losing your home – or being forced to choose between paying rent or buying groceries or gas for your car or clothes for your kid – is a non-hypothetical, widespread, urgent problem that can be traced straight to AI.

Then there's predictive policing: cities across America and the world have bought systems that purport to tell the cops where to look for crime. Of course, these systems are trained on policing data from forces that are seeking to correct racial bias in their practices by using an algorithm to create "fairness." You feed this algorithm a data-set of where the police had detected crime in previous years, and it predicts where you'll find crime in the years to come.

But you only find crime where you look for it. If the cops only ever stop-and-frisk Black and brown kids, or pull over Black and brown drivers, then every knife, baggie or gun they find in someone's trunk or pockets will be found in a Black or brown person's trunk or pocket. A predictive policing algorithm will naively ingest this data and confidently assert that future crimes can be foiled by looking for more Black and brown people and searching them and pulling them over.

Obviously, this is bad for Black and brown people in low-income neighborhoods, whose baseline risk of an encounter with a cop turning violent or even lethal is already elevated. But it's also bad for affluent people in affluent neighborhoods – because they are underpoliced as a result of these algorithmic biases. For example, domestic abuse that occurs in fully detached single-family homes is systematically underrepresented in crime data, because the majority of domestic abuse calls originate with neighbors who can hear the abuse take place through a shared wall.

But the majority of algorithmic harms are inflicted on poor, racialized and/or working class people. Even if you escape a predictive policing algorithm, a facial recognition algorithm may wrongly accuse you of a crime, and even if you were far away from the site of the crime, the cops will still arrest you, because computers don't lie:

https://www.cbsnews.com/sacramento/news/texas-macys-sunglass-hut-facial-recognition-software-wrongful-arrest-sacramento-alibi/

Trying to get a low-waged service job? Be prepared for endless, nonsensical AI "personality tests" that make Scientology look like NASA:

https://futurism.com/mandatory-ai-hiring-tests

Service workers' schedules are at the mercy of shift-allocation algorithms that assign them hours that ensure that they fall just short of qualifying for health and other benefits. These algorithms push workers into "clopening" – where you close the store after midnight and then open it again the next morning before 5AM. And if you try to unionize, another algorithm – that spies on you and your fellow workers' social media activity – targets you for reprisals and your store for closure.

If you're driving an Amazon delivery van, an algorithm watches your eyeballs and tells your boss that you're a bad driver if it doesn't like what it sees. If you're working in an Amazon warehouse, an algorithm decides if you've taken too many pee-breaks and automatically dings you:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

If this disgusts you and you're hoping to use your ballot to elect lawmakers who will take up your cause, an algorithm stands in your way again. "AI" tools for purging voter rolls are especially harmful to racialized people – for example, they assume that two "Juan Gomez"es with a shared birthday in two different states must be the same person and remove one or both from the voter rolls:

https://www.cbsnews.com/news/eligible-voters-swept-up-conservative-activists-purge-voter-rolls/

Hoping to get a solid education, the sort that will keep you out of AI-supervised, precarious, low-waged work? Sorry, kiddo: the ed-tech system is riddled with algorithms. There's the grifty "remote invigilation" industry that watches you take tests via webcam and accuses you of cheating if your facial expressions fail its high-tech phrenology standards:

https://pluralistic.net/2022/02/16/unauthorized-paper/#cheating-anticheat

All of these are non-hypothetical, real risks from AI. The AI industry has proven itself incredibly adept at deflecting interest from real harms to hypothetical ones, like the "risk" that the spicy autocomplete will become conscious and take over the world in order to convert us all to paperclips:

https://pluralistic.net/2023/11/27/10-types-of-people/#taking-up-a-lot-of-space

Whenever you hear AI bosses talking about how seriously they're taking a hypothetical risk, that's the moment when you should check in on whether they're doing anything about all these longstanding, real risks. And even as AI bosses promise to fight hypothetical election disinformation, they continue to downplay or ignore the non-hypothetical, here-and-now harms of AI.

There's something unseemly – and even perverse – about worrying so much about AI and election disinformation. It plays into the narrative that kicked off in earnest in 2016, that the reason the electorate votes for manifestly unqualified candidates who run on a platform of bald-faced lies is that they are gullible and easily led astray.

But there's another explanation: the reason people accept conspiratorial accounts of how our institutions are run is because the institutions that are supposed to be defending us are corrupt and captured by actual conspiracies:

https://memex.craphound.com/2019/09/21/republic-of-lies-the-rise-of-conspiratorial-thinking-and-the-actual-conspiracies-that-fuel-it/

The party line on conspiratorial accounts is that these institutions are good, actually. Think of the rebuttal offered to anti-vaxxers who claimed that pharma giants were run by murderous sociopath billionaires who were in league with their regulators to kill us for a buck: "no, I think you'll find pharma companies are great and superbly regulated":

https://pluralistic.net/2023/09/05/not-that-naomi/#if-the-naomi-be-klein-youre-doing-just-fine

Institutions are profoundly important to a high-tech society. No one is capable of assessing all the life-or-death choices we make every day, from whether to trust the firmware in your car's anti-lock brakes, the alloys used in the structural members of your home, or the food-safety standards for the meal you're about to eat. We must rely on well-regulated experts to make these calls for us, and when the institutions fail us, we are thrown into a state of epistemological chaos. We must make decisions about whether to trust these technological systems, but we can't make informed choices because the one thing we're sure of is that our institutions aren't trustworthy.

Ironically, the long list of AI harms that we live with every day is the most important contributor to disinformation campaigns. It's these harms that provide the evidence for belief in conspiratorial accounts of the world, because each one is proof that the system can't be trusted. The election disinformation discourse focuses on the lies told – and not why those lies are credible.

That's because the subtext of election disinformation concerns is usually that the electorate is credulous, fools waiting to be suckered in. By refusing to contemplate the institutional failures that sit upstream of conspiracism, we can smugly locate the blame with the peddlers of lies and assume the mantle of paternalistic protectors of the easily gulled electorate.

But the group of people who are demonstrably being tricked by AI is the people who buy the horrifically flawed AI-based algorithmic systems and put them into use despite their manifest failures.

As I've written many times, "we're nowhere near a place where bots can steal your job, but we're certainly at the point where your boss can be suckered into firing you and replacing you with a bot that fails at doing your job":

https://pluralistic.net/2024/01/15/passive-income-brainworms/#four-hour-work-week

The most visible victims of AI disinformation are the people who are putting AI in charge of the life-chances of millions of the rest of us. Tackle that AI disinformation and its harms, and we'll make conspiratorial claims about our institutions being corrupt far less credible.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/02/27/ai-conspiracies/#epistemological-collapse

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#disinformation#algorithmic bias#elections#election disinformation#conspiratorialism#paternalism#this machine kills#Horizon#the rents too damned high#weaponized shelter#predictive policing#fr#facial recognition#labor#union busting#union avoidance#standardized testing#hiring#employment#remote invigilation

146 notes


Text

Been traveling a lot lately, and I love how, in US TSA security lines, they always make sure the big sign saying the facial recognition photo is optional is turned sideways, or set so the Spanish-translation side faces the line and the English-translation side faces a wall or something.

Anyway, TSA facial recognition photos are 100% not mandatory and if you don't feel like helping a company develop its facial recognition AI software (like, say, Clearview AI), you can just politely tell the TSA agent that you don't want to participate in the photo and instead show an ID or your boarding pass. Like we've been doing for years and years.

#privacy#anti facial recognition#clearwater AI#you need to protect yourself#its dishonest is what it is#the signs are always there#as per law#but they are hidden/turned/set way to the side

39 notes


Text

Honestly, it's a lil ironic that the closest thing to the circuit-board markings on sci-fi androids' faces is makeup designed to resist AI facial recognition

3 notes


Text

the article

world is a boring dystopia

#dystopia#dystopian#bbc#ai#facial recognition#shoplifting#artificial intelligence#news#cyberpunk#tech#dystopian society

15 notes


Text

#Far-Right Agenda#Facial Recognition Tech#ICE#FBI#AI#Surveillance#Clearview AI#immigrants#political left#the Trump administration#News

3 notes


Text

Facial Recognition That Tracks Suspicious Friendliness Is Coming to a Store Near You

Corsight AI has released a new product that sends alerts to store security when customers and staff have anomalous interactions.

A brand new way of being surveilled could be coming to a store near you—a facial recognition system designed to detect when retail workers have anomalous interactions with customers. About a month ago, Israel-based Corsight AI began offering its global clients access to a new service aimed at rooting out what the retail industry calls “sweethearting,”—instances of store employees giving people they know discounts or free items. Traditional facial recognition systems, which have proliferated in the retail industry thanks to companies like Corsight, flag people entering stores who are on designated blacklists of shoplifters. The new sweethearting detection system takes the monitoring a step further by tracking how each customer interacts with different employees over long periods of time.

btw "Israel-based" means yet another piece of dystopian horror tech tried and tested on captive genocide victims

4 notes


Text

Gone are the days when “Robocop” and “Skynet” were just dystopian ideas for Hollywood blockbusters. The dark, distant future those films portrayed is now a present reality.

Read More: https://thefreethoughtproject.com/government-surveillance/growing-the-surveillance-state-drones-facial-recognition-ai-enlisted-to-fight-crime-but-at-what-cost

#TheFreeThoughtProject

#the free thought project#tftp#facial recognition#ai#drones#biometrics#robocop#skynet#dystopian#orwell#1984#surveillance

5 notes


Text

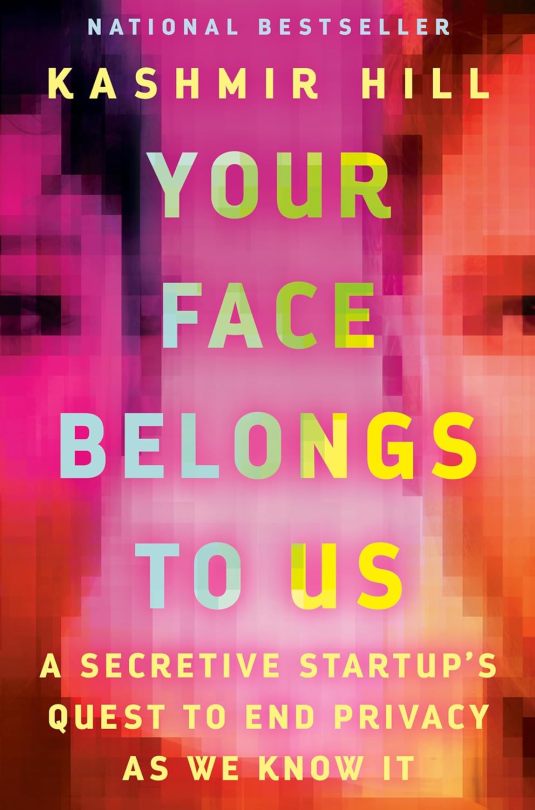

My Book Review

"If you're not paying for it, you're the product."

Your Face Belongs to Us is a terrifying yet interesting journey through the world of invasive surveillance, artificial intelligence, facial recognition, and biometric data collection, told through the birth and rise of a company called Clearview AI, whose software is used by law enforcement and government agencies in the US yet banned in various countries. Its database grows by 75 million images per day.

The writing is easy-flowing investigative journalism, but the information (as expected) is...chile 👀. Lawsuits and court cases to boot. This book reads somewhat like one of my favorite books of all time, How Music Got Free by Stephen Witt (my review's here), which delves into its subject's history from birth to present while introducing the key players along the way.

Here's an excerpt that keeps you seated for this wild ride:

“I was in a hotel room in Switzerland, six months pregnant, when I got the email. It was the end of a long day and I was tired but the email gave me a jolt. My source had unearthed a legal memo marked “Privileged & Confidential” in which a lawyer for Clearview had said that the company had scraped billions of photos from the public web, including social media sites such as Facebook, Instagram, and LinkedIn, to create a revolutionary app. Give Clearview a photo of a random person on the street, and it would spit back all the places on the internet where it had spotted their face, potentially revealing not just their name but other personal details about their life. The company was selling this superpower to police departments around the country but trying to keep its existence a secret.”

#your face belongs to us#kashmir hill#thechanelmuse reviews#book recommendations#articifial intelligence#facial recognition#hoan ton that#clearview ai

7 notes


Text

The technology, which marries Meta’s smart Ray Ban glasses with the facial recognition service Pimeyes and some other tools, lets someone automatically go from face, to name, to phone number, and home address.

#404 media#meta#ray ban#pimeyes#harvard#facial recognition#privacy#surveillance#surveillance capitalism#technology#ai#artificial intelligence#doxxing

3 notes


Text

i actually think celebrities should be able to mail a bill to your house for $500 a minute if you bother them in public

#listen if we're going to have ai facial recognition let's start putting it to good use#'thanks for asking for a photo with ms. roan in the airport. she declines. please check your mailing address in 2 - 3 weeks for the invoice#fuck bodyguards let's give 'em traveling accountants

5 notes


Text

Behbeh

#apple#apple iphone#iphone#face id#ai#facial recognition#non discriminatory#new iOS#iOS#technology#big brother#mac#cartoon#teddy bear#illustration#dailybehbeh#behbeh#cute#stuffed animal#art#funny#comedy

10 notes


Text

#Facial recognition#pride month#tiktok#privacy#security#technology news#technology#anti capitalism#anti ai

4 notes


Text

EU passes artificial intelligence act

#ai#artificial intelligence#computer#data#facial recognition#information#machine learning#meme#memes#safety#security

3 notes
