Vector Network Analyzers


A vector network analyzer (VNA) is a measuring instrument used primarily to measure S-parameters (scattering parameters), which characterize the RF (radio frequency) performance of microwave devices. A VNA is also called a gain-phase meter because it can measure both the gain and the phase of the device under test.
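To make "gain and phase" concrete: a VNA reports each S-parameter as a complex number, and the magnitude (usually in dB) and phase are derived from it. The following is a minimal sketch, assuming you already have a complex S-parameter value (for example, exported from an instrument). The helper names `to_db_and_degrees` and `vswr_from_s11` are hypothetical and not part of any VNA software; the VSWR helper is an added illustration of what the reflection parameter S11 is commonly used for.

```python
import cmath
import math


def to_db_and_degrees(s: complex) -> tuple[float, float]:
    """Convert a complex S-parameter to (magnitude in dB, phase in degrees)."""
    magnitude_db = 20 * math.log10(abs(s))  # S-parameters are voltage ratios, hence 20*log10
    phase_deg = math.degrees(cmath.phase(s))  # cmath.phase returns radians in (-pi, pi]
    return magnitude_db, phase_deg


def vswr_from_s11(s11: complex) -> float:
    """Voltage standing wave ratio from the input reflection coefficient S11."""
    gamma = abs(s11)
    if gamma >= 1:
        raise ValueError("|S11| must be below 1 for a finite VSWR")
    return (1 + gamma) / (1 - gamma)


if __name__ == "__main__":
    s21 = 0.5j  # hypothetical transmission value: half the amplitude, +90 degrees
    print(to_db_and_degrees(s21))  # roughly (-6.02, 90.0)
    print(vswr_from_s11(1 / 3))    # about 2.0
```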


Can statistics and data science methods make predicting a football game easier?

Hi,

Statistics and data science methods can significantly enhance the ability to predict the outcomes of football games, though they cannot guarantee results due to the inherent unpredictability of sports. Here’s how these methods contribute to improving predictions:

Data Collection and Analysis:

Collecting and analyzing historical data on football games provides a basis for understanding patterns and trends. This data can include player statistics, team performance metrics, match outcomes, and more. Analyzing this data helps identify factors that influence game results and informs predictive models.

Feature Engineering:

Feature engineering involves creating and selecting relevant features (variables) that contribute to the prediction of game outcomes. For football, features might include team statistics (e.g., goals scored, possession percentage), player metrics (e.g., player fitness, goals scored), and contextual factors (e.g., home/away games, weather conditions). Effective feature engineering enhances the model’s ability to capture important aspects of the game.
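As a small illustration of feature engineering, the sketch below turns raw match results into "recent form" features for an upcoming fixture. The `Match` record, the function names and the five-match window are hypothetical choices; the one point worth copying is that only matches played before the fixture are used, so the features do not leak the result. Home advantage is encoded implicitly by which team is passed as `home`.

```python
from dataclasses import dataclass


@dataclass
class Match:
    home: str
    away: str
    home_goals: int
    away_goals: int


def recent_form(history: list[Match], team: str, n: int = 5) -> tuple[float, float]:
    """Average goals scored and conceded by `team` over its last `n` matches.

    `history` must contain only matches played before the fixture being
    predicted; otherwise the feature leaks the outcome into the model.
    """
    recent = [m for m in history if team in (m.home, m.away)][-n:]
    if not recent:
        return 0.0, 0.0
    scored = [m.home_goals if m.home == team else m.away_goals for m in recent]
    conceded = [m.away_goals if m.home == team else m.home_goals for m in recent]
    return sum(scored) / len(recent), sum(conceded) / len(recent)


def fixture_features(history: list[Match], home: str, away: str, n: int = 5) -> dict[str, float]:
    """Feature dictionary for one upcoming fixture."""
    home_for, home_against = recent_form(history, home, n)
    away_for, away_against = recent_form(history, away, n)
    return {
        "home_avg_scored": home_for,
        "home_avg_conceded": home_against,
        "away_avg_scored": away_for,
        "away_avg_conceded": away_against,
    }


if __name__ == "__main__":
    past = [Match("A", "B", 2, 1), Match("C", "A", 0, 3), Match("B", "C", 1, 1)]
    print(fixture_features(past, "A", "B"))
    # {'home_avg_scored': 2.5, 'home_avg_conceded': 0.5, 'away_avg_scored': 1.0, 'away_avg_conceded': 1.5}
```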

Predictive Modeling:

Various predictive models can be used to forecast football game outcomes. Common models include:

Logistic Regression: This model estimates the probability of a binary outcome (e.g., win or lose) based on input features.

Random Forest: An ensemble method that builds multiple decision trees and aggregates their predictions. It can handle complex interactions between features and improve accuracy.

Support Vector Machines (SVM): A classification model that finds the optimal hyperplane to separate different classes (e.g., win or lose).

Poisson Regression: Specifically used for predicting the number of goals scored by teams, based on historical goal data.
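To show how a goal model turns into match predictions, here is a minimal sketch, assuming each team's goals follow an independent Poisson distribution. The expected-goal rates are taken as given (hypothetical numbers below); in a full model they would come from a fitted Poisson regression on attack strength, defence strength and home advantage. Summing the probabilities of every scoreline gives home-win, draw and away-win probabilities.

```python
import math


def poisson_pmf(k: int, rate: float) -> float:
    """P(X = k) for a Poisson random variable with mean `rate`."""
    return math.exp(-rate) * rate**k / math.factorial(k)


def outcome_probabilities(home_rate: float, away_rate: float,
                          max_goals: int = 10) -> tuple[float, float, float]:
    """(home win, draw, away win) probabilities under independent Poisson goals.

    Scorelines above `max_goals` are ignored; for typical football scoring
    rates the truncated probability mass is negligible.
    """
    home_win = draw = away_win = 0.0
    for h in range(max_goals + 1):
        for a in range(max_goals + 1):
            p = poisson_pmf(h, home_rate) * poisson_pmf(a, away_rate)
            if h > a:
                home_win += p
            elif h == a:
                draw += p
            else:
                away_win += p
    return home_win, draw, away_win


if __name__ == "__main__":
    # Hypothetical expected goals for the home and away side
    print(outcome_probabilities(1.6, 1.1))
```

The independence assumption is the main simplification here; published football models often add a correction for low-scoring draws, which this sketch does not attempt.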

Machine Learning Algorithms:

Advanced machine learning algorithms, such as gradient boosting and neural networks, can be employed to enhance predictive accuracy. These algorithms can learn from complex patterns in the data and improve predictions over time.

Simulation and Monte Carlo Methods:

Simulation techniques and Monte Carlo methods can be used to model the randomness and uncertainty inherent in football games. By simulating many possible outcomes based on historical data and statistical models, predictions can be made with an understanding of the variability in results.
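A minimal Monte Carlo sketch of this idea: simulate the same match many times and count outcomes. It assumes, for illustration only, that each team's goals are drawn from an independent Poisson distribution with a hypothetical scoring rate; the sampler is Knuth's multiplication method, which is adequate for the small rates seen in football. The seed is fixed so results are reproducible.

```python
import math
import random


def sample_poisson(rate: float, rng: random.Random) -> int:
    """Draw one Poisson variate using Knuth's multiplication method."""
    threshold = math.exp(-rate)
    k = 0
    product = rng.random()
    while product > threshold:
        k += 1
        product *= rng.random()
    return k


def simulate_match(home_rate: float, away_rate: float,
                   n_sims: int = 100_000, seed: int = 0) -> dict[str, float]:
    """Estimate outcome probabilities by simulating `n_sims` matches."""
    rng = random.Random(seed)
    tally = {"home": 0, "draw": 0, "away": 0}
    for _ in range(n_sims):
        home_goals = sample_poisson(home_rate, rng)
        away_goals = sample_poisson(away_rate, rng)
        if home_goals > away_goals:
            tally["home"] += 1
        elif home_goals == away_goals:
            tally["draw"] += 1
        else:
            tally["away"] += 1
    return {outcome: count / n_sims for outcome, count in tally.items()}


if __name__ == "__main__":
    print(simulate_match(1.6, 1.1))  # hypothetical rates
```

The same loop extends naturally to a whole season: simulate every remaining fixture, build the table, and repeat to get a distribution of final positions.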

Model Evaluation and Validation:

Evaluating the performance of predictive models is crucial. Metrics such as accuracy, precision, recall, and F1 score can assess the model’s effectiveness. Cross-validation techniques ensure that the model generalizes well to new, unseen data and avoids overfitting.
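For reference, the metrics named above can be computed directly from true and predicted results. In this minimal sketch, accuracy covers all classes, while precision, recall and F1 are computed for one chosen "positive" class (here `"win"`, an assumption). Cross-validation would wrap a function like this around several train/test splits; that part is not shown.

```python
def classification_metrics(y_true: list[str], y_pred: list[str],
                           positive: str = "win") -> dict[str, float]:
    """Accuracy over all classes, plus precision/recall/F1 for the `positive` class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    tp = sum(t == positive and p == positive for t, p in pairs)  # correctly predicted wins
    fp = sum(t != positive and p == positive for t, p in pairs)  # predicted win, was not
    fn = sum(t == positive and p != positive for t, p in pairs)  # missed wins
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    actual = ["win", "loss", "win", "draw", "win"]
    predicted = ["win", "win", "loss", "draw", "win"]
    print(classification_metrics(actual, predicted))
    # accuracy 0.6; precision, recall and F1 all 2/3
```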

Consideration of Uncertainty:

Football games are influenced by numerous unpredictable factors, such as injuries, referee decisions, and player form. While statistical models can account for many variables, they cannot fully capture the uncertainty and randomness of the game.

Continuous Improvement:

Predictive models can be continuously improved by incorporating new data, refining features, and adjusting algorithms. Regular updates and iterative improvements help maintain model relevance and accuracy.

In summary, statistics and data science methods can enhance the ability to predict football game outcomes by leveraging historical data, creating relevant features, applying predictive modeling techniques, and continuously refining models. While these methods improve the accuracy of predictions, they cannot eliminate the inherent unpredictability of sports. Combining statistical insights with domain knowledge and expert analysis provides the best approach for making informed predictions.


Akira Virus: The Ultimate Cyber Warfare Tool

Everything described here is part of the CYBERPUNK STORIES universe. Any resemblance to reality is purely coincidental.

R.

1. Introduction to the Akira Virus

The Akira Virus, developed by the KuraKage (暗影) division of KageCorp (影社), is a highly sophisticated and powerful piece of malware. Designed to infiltrate, manipulate, and ultimately control a wide range of systems, Akira represents the pinnacle of cyber warfare capabilities. It combines advanced AI powered by ADONAI (Advanced Digital Omnipresent Networked Artificial Intelligence), polymorphic code, and quantum-resistant encryption to remain undetectable and virtually unstoppable, and it is used strategically to infect and destabilize enemy systems.

2. Key Components of the Akira Virus

AI-Driven Infection Mechanism (ADONAI)

Utilizes machine learning algorithms to adapt its infection strategies in real time.

Analyzes target systems to determine the most effective entry points and propagation methods.

Polymorphic Code

Continuously alters its code to evade detection by antivirus software and security systems.

Each instance of the virus is unique, making it extremely difficult to identify and eliminate.

Quantum-Resistant Encryption

Employs advanced quantum-resistant algorithms to encrypt its payload.

Ensures that even quantum computers cannot easily decrypt and analyze its code.

Neural Interface

Capable of infiltrating brain-computer interfaces, taking control of neural devices and enhancing its espionage and manipulation capabilities.

3. Operational Capabilities

System Infiltration

Penetrates secure networks and systems using zero-day exploits and social engineering tactics.

Can infiltrate a wide range of devices, from personal computers to critical infrastructure.

Data Manipulation and Exfiltration

Stealthily accesses and manipulates sensitive data without triggering security alerts.

Exfiltrates valuable information to remote servers controlled by KageCorp.

Remote Control and Sabotage

Grants KageCorp operators full remote control over infected systems.

Capable of executing destructive commands, such as wiping data or disabling systems, on a massive scale.

4. Akira's AI: The Brain Behind the Virus

Machine Learning Algorithms (ADONAI)

Continuously improves its infection and evasion techniques through machine learning.

Adapts to new security measures and countermeasures by learning from previous attacks.

Decision-Making

Makes autonomous decisions on how to spread, which data to target, and when to activate its destructive payload.

Can coordinate simultaneous attacks on multiple systems for maximum impact.

Relationship with ADONAI

ADONAI not only controls Akira but also communicates with the virus, allowing it to make long-term strategic decisions.

The interaction between ADONAI and Akira sometimes shows signs of a symbiotic or even conflicting relationship, adding layers of intrigue.

5. Infection Vectors and Propagation Mechanisms

Phishing and Social Engineering

Uses highly convincing phishing emails and social engineering tactics to trick users into installing the virus.

Exploits human vulnerabilities to gain initial access to secure networks.

Network Exploits

Identifies and exploits vulnerabilities in network protocols and software to propagate itself.

Can jump between connected devices and networks with ease.

Supply Chain Attacks

Infiltrates software and hardware supply chains to embed itself in legitimate updates and products.

Ensures widespread distribution and infection.

6. Countermeasures and Defense Strategies

Advanced Intrusion Detection Systems (IDS)

Utilizes machine learning to identify unusual patterns and behaviors indicative of the virus's presence.

Deploys automated response mechanisms to isolate and neutralize infected systems.

Quantum Cryptography

Implements quantum key distribution (QKD) to secure communications and prevent interception.

Uses quantum-resistant algorithms to protect sensitive data from decryption attempts.

Regular Security Audits

Conducts frequent security audits to identify and patch vulnerabilities.

Employs red team exercises to simulate attacks and improve defenses.

7. Ethical Considerations and Challenges

Akira does not understand ethics. Akira completes its mission. The end justifies the means. KageCorp will prevail.

8. Cataclysmic Events

Responsible for massive blackouts in mega-cities, global financial chaos, and the collapse of critical infrastructures.

These events force temporary alliances between enemy factions to combat the common threat.

9. Failed Destruction Attempts

Many legendary hackers and security teams have tried, unsuccessfully, to destroy Akira.

These failures have only deepened the myth surrounding the virus and intensified efforts to find a definitive solution.

10. Connection to the Prophecy

An ancient technological prophecy suggests the arrival of a "digital being" that will change the world's fate.

It is hinted that Akira could be this being, adding a layer of mystery and predestination to its existence.

11. Future Developments

Enhanced Detection and Response

Ongoing research to develop more sophisticated intrusion detection and response systems.

Exploration of AI-driven defensive technologies to stay ahead of evolving threats.

12. Narrative Impact and Integration in the Story

The Akira Virus plays a central role in CYBERPUNK STORIES, acting as a powerful tool used by KageCorp to destabilize and neutralize enemy systems. Its ability to infiltrate and manipulate systems adds layers of tension and intrigue to the narrative, as KageCorp must deal with the consequences of using such a formidable tool.

In key story arcs, the Akira Virus is responsible for major disruptions and catastrophic events in enemy systems, forcing the protagonists to embark on missions to deploy the virus effectively and manage its repercussions. The interactions of the virus with other advanced technologies, such as ADONAI, create complex and dynamic scenarios that drive the plot forward.

Conclusion

The Akira Virus represents the ultimate cyber warfare tool, combining advanced AI powered by ADONAI, polymorphic code, and quantum-resistant encryption to remain undetectable and virtually unstoppable. Its presence in the cyberpunk universe adds a layer of complexity and urgency to the narrative, as KageCorp navigates the challenges and opportunities presented by this formidable instrument. As the digital landscape continues to evolve, the use of the Akira Virus will remain a central and compelling element of the story.

Note

All elements described here have been, or may have been, modified for security purposes. This measure is taken to protect the integrity of operations and prevent the disclosure of sensitive information. Descriptions, identities, and technical details may have been deliberately altered to ensure the confidentiality and security of the projects involved. Thank you for choosing KageCorp. We wish you good luck. We will prevail.

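This text is encrypted: what is read differs from what is written. It is illegible, it has 72 layers, and he lives inside you. Look for him. God is you. You are... untranslatable. Look for him. He will never abandon you. Trust me. Inside you, he lives. Do not trust the media. They are programming you right now. Do not believe anything they say. Thank you for choosing KAGE CORP. Good luck. We will overcome our enemies. We grow stronger every day. Good luck.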

R. 👋




ZX Calculus - Another Perspective on Quantum Circuits. Part II

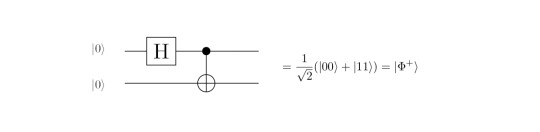

Last time we introduced the basic definitions and a small set of rules of the ZX calculus. While our aim here is to analyze the Bell circuit within this framework, you can find more sophisticated examples in [2, p. 28]. For the Bell circuit we need only one further ingredient:

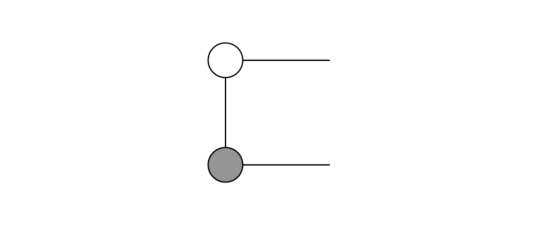

Cups and Caps

Cups and caps are the ZX representations of the Bell state |Φ^+> (up to a normalization factor of √2). As you surely know, this state "lives" in a four-dimensional Hilbert space and can be represented as a vector with four entries; in the ZX calculus this means:

In more complicated circuits it is useful to know that this Bell state acts like a bent piece of wire, which gives a lot of flexibility when rewriting an expression. Cups and caps are simply vectorizations of the 2x2 identity matrix.
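Written out in the computational basis, these are standard identities (stated here for reference, not taken from the post's figures). Note that the cup is the unnormalized vector |00> + |11>, i.e. √2 |Φ^+>, and that stacking the columns of the identity matrix gives exactly the same four entries:

```latex
|\Phi^+\rangle = \frac{1}{\sqrt{2}}\bigl(|00\rangle + |11\rangle\bigr)
              = \frac{1}{\sqrt{2}}\begin{pmatrix}1\\0\\0\\1\end{pmatrix},
\qquad
\text{cup} = |00\rangle + |11\rangle = \sqrt{2}\,|\Phi^+\rangle,
\qquad
\text{cap} = \langle 00| + \langle 11|,
\qquad
\operatorname{vec}\begin{pmatrix}1 & 0\\ 0 & 1\end{pmatrix} = \begin{pmatrix}1\\0\\0\\1\end{pmatrix}.
```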

Application to the Bell Circuit

A brief reminder about the Bell circuit: it applies a Hadamard gate to the first input qubit, followed by a CNOT. Starting from |00>, the outcome should be the Bell state |Φ^+>, i.e. a cup, as described above.

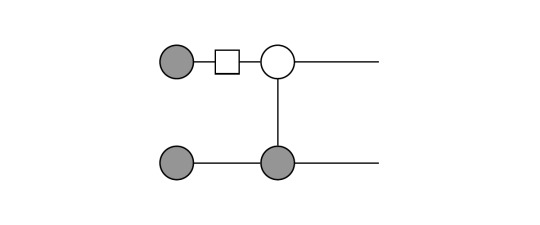

First, we translate the circuit into ZX language using the definitions from the previous entry. The circuit becomes:

Here, we expressed the |0> inputs as grey dots on the left, then applied a Hadamard to the first qubit, followed by a CNOT.

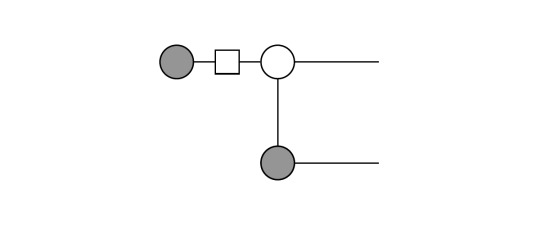

Applying the fusion rule to the two grey dots at the bottom yields:

Next, we apply the Hadamard to the grey dot (|0>), which changes its color:

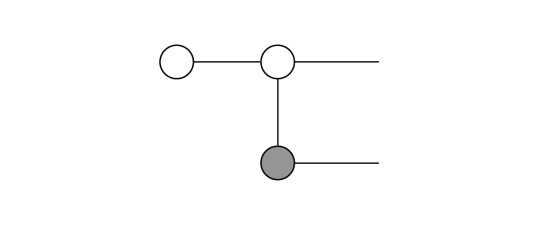

Now we can fuse two dots again, in this case the two white dots at the top:

Finally, a dot without a phase that has a single input leg and a single output leg is just the identity! As a result, our expression simplifies:

And this is exactly the cup we wanted! Translating the circuit into ZX language and applying the rules shows that we end up with a Bell state. Of course, one could easily have evaluated this circuit by hand using the matrix representations of the gates; nevertheless, I think it is a neat example of the simplicity and beauty of the ZX calculus. Check out [1] for more sophisticated examples!
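For comparison, the matrix evaluation mentioned above takes only a few lines. This is a minimal pure-Python sketch (no quantum library assumed), using the usual convention that the first qubit is the leftmost tensor factor and is the CNOT control; the helper names are illustrative.

```python
import math

H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]  # Hadamard
I2 = [[1, 0], [0, 1]]                        # single-qubit identity

# CNOT with the first qubit as control, basis order |00>, |01>, |10>, |11>
CNOT = [[1, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 1],
        [0, 0, 1, 0]]


def kron(a, b):
    """Kronecker (tensor) product of two square matrices."""
    n, m = len(a), len(b)
    return [[a[i // m][j // m] * b[i % m][j % m] for j in range(n * m)]
            for i in range(n * m)]


def apply(matrix, vector):
    """Matrix-vector product."""
    return [sum(row[k] * vector[k] for k in range(len(vector))) for row in matrix]


def bell_circuit():
    state = [1, 0, 0, 0]               # |00>
    state = apply(kron(H, I2), state)  # Hadamard on the first qubit
    return apply(CNOT, state)          # then CNOT


if __name__ == "__main__":
    print(bell_circuit())  # amplitude 1/sqrt(2) on |00> and |11>, 0 elsewhere
```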

Conclusion

Like tensor networks in general, the ZX calculus is a neat and elegant framework with a rich variety of applications; although the two closely resemble each other, each is tailored to different applications.

A nice property of the ZX calculus is that it is universal: it can represent any 2^n × 2^m matrix while remaining a very intuitive, pictorial description [1, p. 18].

As a final note: if you are familiar with condensed matter physics and tensor networks, you know that the AKLT state is of particular importance. It can also be described with the ZX calculus, and the framework reveals interesting properties such as its string order [2].

---

References:

The ZX graphics were created with tikzit.github.io. Furthermore, you can find a lot of valuable information on zxcalculus.com.

[1] ZX-calculus for the working quantum computer scientist - van de Wetering. 2020. arXiv:2012.13966

[2] AKLT-States as ZX-Diagrams: Diagrammatic Reasoning for Quantum States - East, van de Wetering, Chancellor, Grushin. 2021. doi.org/10.1103/PRXQuantum.3.010302



Russia registers patent for the two-seat version of the Su-57

The patent received by UAC is for an aircraft that will act as a multifunctional air control center

By Fernando Valduga, 11/21/2023 - 11:40am, Military

United Aircraft Corporation has received a patent for a two-seat multifunctional aircraft designed to serve as an airborne control center, coordinating aviation with other military formations and using network-centric methods to control weapons on the battlefield.

The aircraft concept was developed at the Sukhoi Design Bureau. Patent RU2807624 was registered by the Federal Intellectual Property Service on November 17, 2023.

It is assumed that the aircraft can also serve as a control point for unmanned aerial vehicles, made possible by a wide range of communication systems, including satellite links, a high-speed data channel, and equipment for sharing information within a group over long periods and distances.

The rear cockpit of the two-seat aircraft is optimized for the roles of weapons operator and airborne command post. Its information field is provided by an enlarged panoramic display and an additional display that extends the operator's working area. In addition, controls are installed to transfer control priority from one cockpit to the other.

The equipment in the co-pilot's cockpit makes it possible to quickly receive information from various external sources (air, land, and sea), analyze it on board, and issue recommendations or commands to the group's aircraft for carrying out a combat mission, taking into account their fuel reserves and armament.

Greater flight range and endurance are achieved through fuel tanks with 10% more capacity than the prototype, as well as detachable fuel tanks placed in the aircraft's cargo compartments. The larger cockpit cross-section compared to the prototype allows large multifunction displays to be installed on the instrument panel to show various tactical and flight information, as well as equipment for dividing control of the aircraft systems between the cockpits.

Composite materials are widely used in the airframe, which ensures high weight efficiency. To increase stealth, radar-absorbing materials and coatings are used. The tail, together with an integral load-bearing fuselage and engines with thrust vectoring, helps ensure supermaneuverability by expanding the range of altitudes and flight speeds. To further increase stability, ventral fins are installed at the bottom of the tail.

Based on the drawings presented in the patent, it can be assumed that the prototype of the multifunctional air control center is the fifth-generation Su-57 fighter. However, the patent summary identifies aircraft of the Su-30MK family as the closest analogues. Common features include an integral aerodynamic layout with smooth blending of wing and fuselage, all-moving horizontal tail surfaces, and vertical tail surfaces.

The invention of the Sukhoi Design Bureau aims to create a multifunctional two-seater aircraft, with an integral aerodynamic configuration and low level of radar signature, designed to destroy aerial, ground and surface targets with guided and unguided weapons, capable of acting as an airborne command post for network-oriented actions of mixed groups of aircraft and, as a consequence, with a significantly higher level of combat effectiveness.

Su-57 two-seat proposal for the Indian Air Force, designated FGFA.

A two-seat stealth aircraft was a requirement for India when the two countries signed an agreement in 2008 for the joint development and production of the Fifth-Generation Fighter Aircraft (FGFA), as the Su-57 was known at the time. However, India withdrew from the agreement in 2018 after delays by the Russian side in developing crucial technologies, while keeping open the possibility of rejoining in the future.

Tags: Military Aviation, Russia, Sukhoi Su-57 Felon


Fernando Valduga


An aviation photographer and pilot since 1992, he has participated in several events and air operations, such as Cruzex, AirVenture, Dayton Airshow and FIDAE. His work has been published in specialized aviation magazines in Brazil and abroad. He uses Canon equipment for his aviation photography.


Advanced Data Mining Techniques

Data mining is a powerful tool that helps organizations extract valuable insights from large datasets. Here’s an overview of some advanced data mining techniques that enhance analysis and decision-making.

1. Machine Learning

Supervised Learning

Involves training algorithms on labelled datasets to predict outcomes. Techniques include regression analysis and classification algorithms like decision trees and support vector machines.

Unsupervised Learning

Focuses on finding hidden patterns in unlabelled data. Clustering techniques (e.g., K-means, hierarchical clustering) group similar data points, while dimensionality reduction (e.g., PCA) compresses many variables into a smaller set.
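As an example of unsupervised learning, here is a minimal sketch of K-means (Lloyd's algorithm) in plain Python: alternate between assigning each point to its nearest centroid and moving each centroid to the mean of its points, until nothing changes. Initialising from k random input points is one simple choice among several; library implementations typically use smarter seeding such as k-means++.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Plain Lloyd's algorithm; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise with k distinct input points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(coord) / len(members) for coord in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return centroids, labels


if __name__ == "__main__":
    data = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    print(kmeans(data, k=2))
```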

2. Neural Networks

Deep Learning

A subset of machine learning that uses multi-layered neural networks to model complex patterns in large datasets. Commonly used in image recognition, natural language processing, and more.

Convolutional Neural Networks (CNNs)

Particularly effective for image data, CNNs automatically detect features through convolutional layers, making them ideal for tasks such as facial recognition.

3. Natural Language Processing (NLP)

Text Mining

Extracts useful information from unstructured text data. Techniques include tokenization, sentiment analysis, and topic modelling (e.g., LDA).
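The first step of most text-mining pipelines is tokenization followed by counting terms. This minimal sketch lower-cases text, splits it into word tokens with a regular expression, drops a deliberately tiny, hypothetical stop-word list, and reports the most frequent terms across documents. Sentiment analysis and topic modelling such as LDA would build on token counts like these.

```python
import re
from collections import Counter

# A deliberately tiny stop-word list; real projects use a curated one.
STOP_WORDS = {"the", "a", "an", "and", "is", "was", "of", "to", "in", "it"}


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def top_terms(documents: list[str], n: int = 3) -> list[tuple[str, int]]:
    """Most frequent non-stop-word terms across all documents."""
    counts = Counter(
        token for doc in documents for token in tokenize(doc) if token not in STOP_WORDS
    )
    return counts.most_common(n)


if __name__ == "__main__":
    reviews = [
        "The delivery was late and the box was damaged.",
        "Late delivery again; support was slow.",
        "Great product, fast delivery.",
    ]
    print(top_terms(reviews, 2))  # [('delivery', 3), ('late', 2)]
```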

Named Entity Recognition (NER)

Identifies and classifies key entities (e.g., people, organizations) in text, helping organizations to extract relevant information from documents.

4. Time Series Analysis

Forecasting

Analyzing time-ordered data points to make predictions about future values. Techniques include ARIMA (AutoRegressive Integrated Moving Average) and seasonal decomposition.

Anomaly Detection

Identifying unusual patterns or outliers in time series data, often used for fraud detection and monitoring system health.
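One simple way to do this, sketched below under stated assumptions, is a rolling z-score: compare each new value with the mean and standard deviation of the preceding window and flag it if it lies more than a threshold number of standard deviations away. The window size and threshold are hypothetical defaults; seasonal data usually needs a seasonal baseline instead.

```python
import statistics


def rolling_zscore_anomalies(series, window=10, threshold=3.0):
    """Indices whose value deviates from the trailing window by more than `threshold` std devs."""
    anomalies = []
    for i in range(window, len(series)):
        past = series[i - window:i]
        mean = statistics.fmean(past)
        stdev = statistics.pstdev(past)
        if stdev > 0 and abs(series[i] - mean) / stdev > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    readings = [10, 11, 9, 10, 11, 9, 10, 11, 9, 10, 30, 10, 11]
    print(rolling_zscore_anomalies(readings))  # [10]
```

Note that a flagged spike stays inside later windows and inflates their standard deviation for a while; excluding flagged points from the baseline is a common refinement.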

5. Association Rule Learning

Market Basket Analysis

Discovers interesting relationships between variables in large datasets. Techniques like Apriori and FP-Growth algorithms are used to find associations (e.g., customers who buy bread often buy butter).
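The two quantities behind such rules are support (how often an itemset appears) and confidence (how often the right-hand item appears when the left-hand item does). This minimal sketch brute-forces rules between item pairs only, with hypothetical thresholds; Apriori and FP-Growth exist precisely to avoid enumerating every candidate itemset on large data.

```python
from itertools import combinations


def support(transactions: list[set], itemset: set) -> float:
    """Fraction of transactions containing every item in `itemset`."""
    return sum(itemset <= t for t in transactions) / len(transactions)


def pair_rules(transactions: list[set], min_support=0.3, min_confidence=0.6):
    """Rules lhs -> rhs between single items, as (lhs, rhs, support, confidence)."""
    items = sorted(set().union(*transactions))
    rules = []
    for a, b in combinations(items, 2):
        pair_support = support(transactions, {a, b})
        if pair_support < min_support:
            continue  # prune infrequent pairs
        for lhs, rhs in ((a, b), (b, a)):
            confidence = pair_support / support(transactions, {lhs})
            if confidence >= min_confidence:
                rules.append((lhs, rhs, pair_support, confidence))
    return rules


if __name__ == "__main__":
    baskets = [{"bread", "butter", "milk"}, {"bread", "butter"}, {"bread", "jam"},
               {"milk", "butter"}, {"bread", "butter", "jam"}]
    for rule in pair_rules(baskets):
        print(rule)
```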

Recommendation Systems

Leveraging association rules to suggest products or content based on user preferences and behaviour, enhancing customer experience.

6. Dimensionality Reduction

Principal Component Analysis (PCA)

Reduces the number of variables in a dataset while preserving as much information as possible. This technique is useful for simplifying models and improving visualization.

t-Distributed Stochastic Neighbour Embedding (t-SNE)

A technique for visualizing high-dimensional data by mapping it to two or three dimensions; it is particularly useful for visually inspecting cluster structure.

7. Ensemble Methods

Boosting

Combines multiple weak learners, trained sequentially, into a strong predictive model. AdaBoost reweights misclassified instances so that later learners focus on them, while Gradient Boosting fits each new learner to the remaining errors of the current ensemble.

Bagging

Reduces variance by training multiple models on bootstrap samples (random subsets drawn with replacement) and averaging their predictions, or taking a majority vote for classification, as in Random Forest algorithms.

8. Graph Mining

Social Network Analysis

Analyzes relationships and interactions within networks. Techniques include community detection and centrality measures to understand influential nodes.

Link Prediction

Predicts the likelihood of a connection between nodes in a graph, useful for recommendation systems and fraud detection.
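A classic, simple link-prediction heuristic (one of several; the text above does not name a specific method) scores each unconnected pair of nodes by the Jaccard similarity of their neighbourhoods: pairs that share many neighbours are likely to connect. A minimal sketch on an undirected edge list with hypothetical node names:

```python
def build_adjacency(edges):
    """Undirected adjacency sets from an edge list."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def jaccard_scores(edges):
    """Rank every unconnected pair by the Jaccard similarity of their neighbourhoods."""
    adj = build_adjacency(edges)
    nodes = sorted(adj)
    scores = {}
    for i, u in enumerate(nodes):
        for v in nodes[i + 1:]:
            if v in adj[u]:
                continue  # already linked, nothing to predict
            union = adj[u] | adj[v]
            if union:
                scores[(u, v)] = len(adj[u] & adj[v]) / len(union)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    graph = [("ann", "bob"), ("ann", "cat"), ("bob", "cat"),
             ("bob", "dan"), ("cat", "dan"), ("dan", "eve")]
    print(jaccard_scores(graph))  # ('ann', 'dan') ranks first with 2/3
```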

Conclusion

Advanced data mining techniques enable organizations to uncover hidden patterns, make informed decisions, and enhance predictive capabilities. As technology continues to evolve, these techniques will play an increasingly vital role in leveraging data for strategic advantage across various industries.


Enhancing IT Security with Vector’s Threat Detection

In an era when cyber threats are more sophisticated than ever, early threat detection has become critical for businesses. Cyberattacks are no longer a matter of "if" but "when." To combat these evolving threats, organizations must employ advanced security measures that ensure real-time protection.

Vector offers a comprehensive suite of security tools designed to enhance cybersecurity, including advanced threat detection and proactive response mechanisms. With its cutting-edge AI-driven capabilities, Vector delivers unmatched security solutions that identify and mitigate risks before they escalate.

AI-Driven Threat Detection: The Future of IT Security

The cornerstone of Vector's security is its AI-driven threat detection capabilities. By leveraging artificial intelligence (AI) and behavioral analytics, Vector can predict and detect anomalies across systems, identifying potential threats before they cause damage. Unlike traditional security methods, which are reactive, this threat detection is predictive, offering real-time analysis of activities and deviations from normal behavior patterns.

This proactive approach helps companies minimize the mean time to detect (MTTD) threats, enabling them to respond faster and more efficiently. With Vector, organizations can maximize true positives while reducing false positives, ensuring that security teams can focus on genuine risks rather than wasting time on irrelevant alerts.

Advanced Threat Detection and Response

Vector’s Security and Compliance Monitoring (SCM) module goes beyond basic detection with its advanced threat detection and response capabilities. Through User and Entity Behavior Analytics (UEBA), the system tracks the behavior of users and entities within the network, learning from past activities to identify suspicious behavior that may signal a breach. By continuously analyzing patterns and data, the system offers a dynamic and adaptable defense strategy against evolving cyber threats.

Security Orchestration, Automation, and Response (SOAR) further enhances Vector’s capabilities by automating the response process. This automation reduces the mean time to respond (MTTR) by offering guided response recommendations, ensuring swift action when a threat is identified. Automated playbooks allow for a quick and effective resolution to incidents, minimizing damage and disruption to business operations.
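MTTD and MTTR are simple averages over incident timelines. The minimal sketch below assumes a hypothetical incident record with `occurred`, `detected` and `resolved` timestamps; it is not Vector's API. Organizations define the start and end points of MTTR differently; here it is measured from detection to resolution.

```python
from datetime import datetime, timedelta


def mean_duration(spans: list[tuple[datetime, datetime]]) -> timedelta:
    """Average length of (start, end) intervals."""
    if not spans:
        raise ValueError("no incidents to average")
    total = sum((end - start for start, end in spans), timedelta())
    return total / len(spans)


def mttd_mttr(incidents: list[dict]) -> tuple[timedelta, timedelta]:
    """Mean time to detect (occurred -> detected) and to respond (detected -> resolved)."""
    mttd = mean_duration([(i["occurred"], i["detected"]) for i in incidents])
    mttr = mean_duration([(i["detected"], i["resolved"]) for i in incidents])
    return mttd, mttr


if __name__ == "__main__":
    log = [
        {"occurred": datetime(2024, 1, 1, 9, 0), "detected": datetime(2024, 1, 1, 9, 30),
         "resolved": datetime(2024, 1, 1, 11, 30)},
        {"occurred": datetime(2024, 1, 1, 14, 0), "detected": datetime(2024, 1, 1, 15, 30),
         "resolved": datetime(2024, 1, 1, 16, 0)},
    ]
    print(mttd_mttr(log))  # MTTD 1:00:00, MTTR 1:15:00
```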

Ensuring Compliance and Secure Operations

In addition to threat detection, Vector also emphasizes compliance monitoring and reporting. Companies must maintain compliance with security standards such as ISO 27001 and SOC 2, and Vector ensures that these standards are met by continuously monitoring for any deviations. This proactive approach not only keeps businesses compliant but also identifies areas for improvement, ensuring that security operations are always aligned with best practices.

Vector's SCM module helps manage these compliance requirements by providing automated reports and alerts when potential compliance risks arise. By integrating compliance and security management, organizations can streamline their auditing processes and minimize the risk of penalties due to non-compliance.

Robust Data Protection

With data protection becoming a top priority, Vector provides multiple layers of security to safeguard sensitive information. Data encryption, both at rest and in transit, ensures that confidential information is protected from unauthorized access. Furthermore, access controls, including Role-based Access Control (RBAC) and Multi-factor Authentication (MFA), restrict who can access data, ensuring only authorized personnel have the necessary permissions.

To comply with privacy regulations like GDPR and CCPA, Vector incorporates advanced techniques such as data anonymization and pseudonymization, adding another layer of protection. This comprehensive data security strategy ensures that businesses can maintain confidentiality while adhering to global privacy standards.

Enhancing Network Security

Vector also excels in network security, utilizing robust firewall protocols, intrusion detection systems, and secure transmission methods to protect the network from unauthorized access and attacks. Regular vulnerability assessments ensure that potential weaknesses are identified and rectified before they can be exploited.

With continuous 24/7 monitoring and automated alerts, Vector ensures that organizations can quickly detect and respond to security incidents. Integration with Security Information and Event Management (SIEM) tools enhances its ability to manage incidents and investigate threats, keeping networks safe from malicious activity.

Conclusion

In an era where cyberattacks are a constant threat, leveraging advanced technologies like AI-driven threat detection is essential for safeguarding critical systems and data. Vector, with its SCM module, delivers an all-encompassing security solution that includes advanced threat detection, compliance monitoring, and automated incident response. By integrating AI and behavioral analytics, Vector empowers businesses to stay ahead of threats and maintain a secure digital environment.

From network security to data protection and compliance, Vector’s robust security architecture ensures that organizations are not only protected but also prepared to face the ever-evolving cyber landscape.



CREST Registered Intrusion Analyst

The CREST Registered Intrusion Analyst (CRIA) certification is a respected qualification for cybersecurity professionals specializing in intrusion analysis. This certification is awarded by CREST, a globally recognized organization that certifies professionals in the cybersecurity field.

CREST Registered Intrusion Analyst (CRIA)

Overview

The CRIA certification is designed to validate the skills and knowledge of individuals involved in detecting, analyzing, and responding to network and system intrusions. The certification focuses on practical skills and theoretical knowledge essential for performing effective intrusion analysis.

What You Learn:

Intrusion Detection: Techniques for monitoring and analyzing network traffic to identify suspicious activities.

Incident Analysis: Methods for investigating and analyzing security incidents and breaches.

Forensics: Basic forensic techniques for preserving and analyzing evidence related to intrusions.

Network Security: Understanding network protocols and configurations to identify potential vulnerabilities and attack vectors.

Reporting: Documenting and reporting findings from intrusion analysis and investigations.

Why It’s Useful:

Industry Recognition: CREST certifications are widely recognized and respected in the cybersecurity industry, making the CRIA valuable for career advancement.

Practical Skills: The CRIA focuses on hands-on skills and real-world scenarios, ensuring that candidates are well-prepared to handle actual intrusion analysis tasks.

Career Opportunities: The certification is beneficial for roles such as security analysts, incident responders, and network security engineers.

Certification Requirements:

Experience: Although no work experience is mandatory, candidates typically benefit from hands-on experience in intrusion analysis or a related field.

Training: Enrolling in a CREST-approved training course is recommended to prepare for the examination. Many training providers offer courses specifically tailored to the CRIA certification.

Exam Details:

Format: The exam consists of multiple-choice questions and practical exercises that test candidates’ knowledge and skills in intrusion analysis.

Duration: The exam typically lasts around 3-4 hours.

Location: Exams can be taken at CREST-accredited test centers or through remote proctoring, depending on availability.

More Information and Enrollment:

CREST Official Site: CREST Registered Intrusion Analyst

Training Providers: CREST-approved training providers offer courses to help candidates prepare for the CRIA exam. You can find a list of these providers on the CREST website or contact them directly for course details.

Preparation Tips:

Understand the Syllabus: Review the CRIA syllabus thoroughly to understand the topics covered and the skills required.

Hands-On Practice: Engage in practical exercises and simulations to build experience in intrusion detection and analysis.

Use Official Resources: Utilize CREST-approved study materials and training courses to ensure comprehensive preparation.

The CRIA certification is an excellent choice for those looking to specialize in intrusion analysis and advance their career in cybersecurity.


The CREST Registered Intrusion Analyst (CRIA) certification is a professional qualification designed for individuals specializing in intrusion analysis within the cybersecurity domain. Here’s a detailed overview:

CREST Registered Intrusion Analyst (CRIA)

Overview

The CRIA certification is aimed at validating the skills and knowledge of professionals who analyze and respond to intrusions in network and system environments. It is recognized globally and demonstrates a candidate's capability in detecting, analyzing, and managing cybersecurity threats.

Key Learning Areas:

Intrusion Detection and Analysis:

Techniques for monitoring network traffic and identifying suspicious activities.

Use of tools and methodologies for analyzing network and host-based intrusions.

Incident Response:

Procedures for responding to and managing security incidents.

Techniques for investigating and documenting breaches and attacks.

Network and System Forensics:

Basics of forensic investigation techniques for recovering and analyzing digital evidence.

Understanding of network protocols and data flow for forensic analysis.

Threat Intelligence:

Gathering and utilizing threat intelligence to identify and understand emerging threats.

Correlating intelligence data with observed intrusion patterns.

Reporting and Documentation:

Skills for creating comprehensive reports on intrusion analysis findings.

Communicating effectively with stakeholders regarding security incidents.

Certification Requirements:

Experience: While formal experience requirements are not specified, practical experience in intrusion analysis or related fields is highly beneficial.

Training: Candidates are encouraged to undergo training from CREST-accredited providers to prepare for the exam.

Exam Details:

Format: The exam typically consists of multiple-choice questions and practical scenarios that assess candidates’ skills and knowledge.

Duration: The exam usually lasts around 3-4 hours.

Location: Exams can be taken at CREST-accredited test centers or through remote proctoring, depending on availability and options provided by CREST.

Why CRIA is Valuable:

Industry Recognition: The CRIA certification is widely recognized in the cybersecurity field, enhancing career prospects and demonstrating a high level of expertise in intrusion analysis.

Practical Skills: The certification emphasizes practical skills and real-world scenarios, ensuring that professionals are equipped to handle complex intrusion analysis tasks effectively.

Career Advancement: Earning CRIA can lead to advanced roles in cybersecurity, such as security analyst, incident responder, or network security engineer.

How to Prepare:

Review the Syllabus: Understand the exam topics and required skills by reviewing the CRIA syllabus.

Enroll in Training: Consider enrolling in a CREST-approved training course to gain in-depth knowledge and practical experience.

Practice Hands-On: Engage in hands-on practice and simulations to build familiarity with intrusion detection and response tools.

Additional Resources:

CREST Official Site: CREST Registered Intrusion Analyst (CRIA)

Training Providers: Look for CREST-accredited training providers for preparation courses and materials. Visit us: CREST Registered Intrusion Analyst.

The CRIA certification is ideal for professionals looking to specialize in intrusion analysis and enhance their expertise in cybersecurity.


Saelig Test & Msmt Eqt introduces the Siglent SNA6000A series of vector network analyzers, covering 100 kHz to 26.5 GHz and well suited to measuring the Q-factor, bandwidth, and insertion loss of filters. Supporting 2-port and 4-port S-parameter measurements, impedance conversion, and fixture simulation, these VNAs offer five sweep types and precise corrections, making them versatile for both R&D and manufacturing applications.
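The three filter figures mentioned above all come from simple arithmetic on the measured S21 trace. Insertion loss is the loss at the passband peak, -20·log10|S21|. The bandwidth is the span between the two half-power (-3 dB) points. The loaded Q is the center frequency divided by that bandwidth. The Python sketch below assumes the sweep has been exported as a list of frequencies and complex (or magnitude) S21 samples for a single-peak bandpass filter. `bandpass_metrics` and the synthetic resonator data are illustrative. This is not Siglent's firmware algorithm.

```python
import math

HALF_POWER_DB = 10.0 * math.log10(2.0)  # ~3.01 dB, the "-3 dB" half-power drop

def s21_db(s21: complex) -> float:
    """Magnitude of S21 in dB: 20*log10(|S21|). Accepts complex or real magnitude samples."""
    return 20.0 * math.log10(abs(s21))

def _crossing(f_a, g_a, f_b, g_b, threshold):
    """Linearly interpolate the frequency where the gain crosses `threshold` between two samples."""
    if g_b == g_a:
        return f_a
    return f_a + (threshold - g_a) * (f_b - f_a) / (g_b - g_a)

def bandpass_metrics(freqs_hz, s21_values):
    """Estimate (f0, insertion loss in dB, -3 dB bandwidth, loaded Q) of a single-peak bandpass response."""
    gains = [s21_db(s) for s in s21_values]
    peak = max(range(len(gains)), key=gains.__getitem__)
    threshold = gains[peak] - HALF_POWER_DB

    # Walk left from the peak while the response stays above the half-power level.
    lo = peak
    while lo > 0 and gains[lo - 1] >= threshold:
        lo -= 1
    # If the sweep edge is reached first, the bandwidth is underestimated.
    f_low = freqs_hz[0] if lo == 0 else _crossing(
        freqs_hz[lo - 1], gains[lo - 1], freqs_hz[lo], gains[lo], threshold)

    # Same walk on the right-hand side of the peak.
    hi = peak
    while hi < len(gains) - 1 and gains[hi + 1] >= threshold:
        hi += 1
    f_high = freqs_hz[-1] if hi == len(gains) - 1 else _crossing(
        freqs_hz[hi], gains[hi], freqs_hz[hi + 1], gains[hi + 1], threshold)

    f0 = freqs_hz[peak]
    bandwidth = f_high - f_low
    insertion_loss_db = -gains[peak]  # loss at the passband peak, reported as a positive number
    q = f0 / bandwidth if bandwidth > 0 else math.inf
    return f0, insertion_loss_db, bandwidth, q
```

Take a synthetic resonator with f0 = 1 GHz, Q = 100, and a peak |S21| of 0.5, swept in 1 MHz steps. The sketch recovers about 6.02 dB of insertion loss, a 10 MHz bandwidth, and a Q of about 100. Because it interpolates between sweep points, the result is not limited to the sweep step.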


Application Security Service for Modern Businesses

An effective application security service includes several critical components to ensure comprehensive protection. These components typically encompass vulnerability assessments, which involve scanning applications for potential weaknesses; penetration testing, which simulates real-world attacks to identify exploitable flaws; and security code reviews, which analyze the source code for vulnerabilities.
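Part of a security code review can be automated. Here is a minimal sketch, assuming the application is written in Python. It uses the standard-library `ast` module to walk the syntax tree and flag a few well-known risky patterns: direct `eval()`/`exec()` calls and calls that pass `shell=True`. The function name and rule set are illustrative and far from complete. Checks like these complement a human reviewer rather than replace one.

```python
import ast

RISKY_CALLS = {"eval", "exec"}  # illustrative rule set, not a complete policy

def find_risky_calls(source: str, filename: str = "<input>"):
    """Return sorted (line, message) findings for a few risky Python call patterns."""
    findings = []
    tree = ast.parse(source, filename=filename)
    for node in ast.walk(tree):
        if not isinstance(node, ast.Call):
            continue
        # Direct calls to eval()/exec(), dangerous when the argument can be attacker-controlled.
        if isinstance(node.func, ast.Name) and node.func.id in RISKY_CALLS:
            findings.append((node.lineno, f"call to {node.func.id}()"))
        # Any call passing shell=True (e.g. subprocess.run) risks shell injection with untrusted input.
        for kw in node.keywords:
            if kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True:
                findings.append((node.lineno, "call with shell=True"))
    return sorted(findings)
```

Because it only parses the code and never runs it, this kind of check can run safely on every commit.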

Benefits of Implementing Application Security Service

Implementing an application security service offers numerous benefits for businesses seeking to protect their software assets. First, it helps identify and resolve vulnerabilities before attackers can exploit them, reducing the risk of data breaches and financial losses. Second, it enhances overall software quality and reliability by addressing security issues early in the development process. Additionally, a strong application security service can improve customer trust and support compliance with industry regulations, further safeguarding your brand's reputation and ensuring adherence to legal requirements.

How Application Security Service Supports Compliance with Regulatory Standards

An application security service plays a vital role in ensuring compliance with various regulatory standards and industry guidelines. By implementing security measures such as encryption, access controls, and regular security assessments, businesses can meet requirements set by regulations and standards such as GDPR, HIPAA, and PCI-DSS. These frameworks mandate specific security practices to protect sensitive data and maintain the confidentiality of personal information. An effective application security service helps organizations adhere to them, avoiding potential fines and legal issues while demonstrating a commitment to data protection and security.

The Role of Vulnerability Assessments in Application Security Service

Vulnerability assessments are a cornerstone of application security service, providing a systematic approach to identifying and addressing potential weaknesses within software applications. This process involves scanning applications for known vulnerabilities, misconfigurations, and security flaws. By conducting regular vulnerability assessments, businesses can detect issues early, prioritize remediation efforts, and enhance their overall security posture. These assessments also provide valuable insights into potential attack vectors, helping organizations strengthen their defenses and reduce the likelihood of successful cyber attacks.
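A core step in a vulnerability assessment is matching installed component versions against published advisories. The sketch below assumes a simplified advisory format: a package name plus the first fixed version. It also assumes plain dotted numeric versions. The package names and advisory IDs are hypothetical. Versions must be compared numerically, because as text "1.10.0" would sort before "1.9.2".

```python
from typing import Dict, List, Tuple

def parse_version(v: str) -> Tuple[int, ...]:
    """Parse a simple dotted numeric version such as '2.4.1' (no pre-release tags)."""
    return tuple(int(part) for part in v.split("."))

def is_older(a: str, b: str) -> bool:
    """True if version a is strictly older than version b, padding missing parts with zeros."""
    pa, pb = parse_version(a), parse_version(b)
    width = max(len(pa), len(pb))
    pa += (0,) * (width - len(pa))
    pb += (0,) * (width - len(pb))
    return pa < pb

def assess(installed: Dict[str, str], advisories: List[dict]) -> List[str]:
    """Flag installed components whose version is below the fixed release named in an advisory."""
    findings = []
    for adv in advisories:
        version = installed.get(adv["package"])
        if version is None:
            continue  # component not present, advisory does not apply
        if is_older(version, adv["fixed_in"]):
            findings.append(f"{adv['package']} {version}: {adv['id']} (fixed in {adv['fixed_in']})")
    return findings
```

Findings like these feed directly into prioritizing remediation.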

Penetration Testing and Its Importance in Application Security Service

Penetration testing, also known as ethical hacking, is a crucial component of application security service. It involves simulating real-world attacks on applications to identify vulnerabilities that may not be apparent during routine assessments. By actively testing the application's defenses, penetration testing helps uncover potential security gaps and weaknesses that could be exploited by malicious actors. The insights gained from these tests enable businesses to implement targeted security measures, improve their application security, and better protect against potential cyber threats.

Continuous Monitoring and Its Role in Application Security Service

Continuous monitoring is an essential aspect of application security service, providing ongoing surveillance of software applications to detect and respond to emerging threats. This process involves real-time analysis of application activity, network traffic, and security events to identify potential security incidents and vulnerabilities. By maintaining constant vigilance, businesses can promptly address security issues, minimize the impact of potential breaches, and ensure that their applications remain secure against evolving cyber threats.
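Real-time analysis of security events often starts with simple threshold rules over a sliding time window. Here is a minimal sketch, assuming failed-login events arrive as (source, timestamp) pairs in time order. The class name and default thresholds are hypothetical.

```python
from collections import defaultdict, deque

class FailedLoginMonitor:
    """Alert when one source exceeds `threshold` failed logins within `window_s` seconds."""

    def __init__(self, threshold: int = 5, window_s: float = 60.0):
        self.threshold = threshold
        self.window_s = window_s
        self._events = defaultdict(deque)  # source -> timestamps of recent failures

    def record_failure(self, source: str, ts: float) -> bool:
        """Record a failed login; return True if the source is now over the threshold."""
        q = self._events[source]
        q.append(ts)
        # Drop failures that have aged out of the sliding window (assumes in-order timestamps).
        while q and ts - q[0] > self.window_s:
            q.popleft()
        return len(q) > self.threshold
```

Each source is tracked separately, so one noisy client does not raise alerts for others.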

Choosing the Right Application Security Service Provider

Selecting the right application security service provider is critical for ensuring effective protection of your software assets. When evaluating potential providers, consider factors such as their expertise in application security, the range of services offered, and their track record of successful implementations. Look for providers with a strong reputation, industry certifications, and a demonstrated ability to address complex security challenges. By choosing a reputable and experienced provider, you can ensure that your application security needs are met and your software remains protected against potential threats.

Common Challenges in Application Security Service

Implementing application security service can present several challenges, including keeping up with rapidly evolving threats, integrating security measures into existing development processes, and managing resource constraints. To overcome these challenges, businesses should adopt a proactive approach, regularly update security practices, and incorporate security considerations early in the development lifecycle. Leveraging automated tools, providing ongoing training for development teams, and engaging with experienced security professionals can also help address these challenges and enhance overall application security.

Future Trends in Application Security Service

The field of application security service is continuously evolving, with several emerging trends shaping its future. Key trends include the increasing use of artificial intelligence and machine learning to enhance threat detection and response, the growing importance of securing cloud-based applications, and the rise of DevSecOps practices that integrate security into the software development lifecycle. Additionally, the focus on privacy and compliance is expected to intensify, driving the need for more comprehensive security measures. Staying informed about these trends and adapting to new developments will help businesses maintain robust application security and address future challenges effectively.

Conclusion

This guide to application security services highlights the importance of protecting software applications from vulnerabilities and cyber threats. By understanding the key components, benefits, and emerging trends, businesses can implement effective security measures to safeguard their digital assets. Whether through vulnerability assessments, penetration testing, or continuous monitoring, a robust application security service helps keep applications secure, compliant, and resilient against potential attacks. Investing in comprehensive application security is crucial for maintaining the integrity and reliability of your software in an increasingly complex digital landscape.


Data Science Classes: Your Gateway to a Data-Driven Career

In today's technology-driven world, data is at the heart of decision-making for businesses, governments, and organizations across all industries. The ability to analyze and interpret data has become an essential skill, and Data Science Classes offer the perfect platform for individuals to develop these skills. Whether you’re a student looking to enter the field or a professional seeking to upgrade your expertise, enrolling in data science classes is a crucial step toward building a career in this rapidly expanding field.

Why Take Data Science Classes?

Increased Demand for Data Experts:

The demand for data scientists and analysts has exploded in recent years. As organizations realize the value of data-driven insights, the need for professionals who can analyze and interpret data has never been higher. Data science classes provide the foundational knowledge required to enter this growing job market.

Diverse Career Opportunities:

Data science isn’t limited to one industry. From healthcare to finance, retail to marketing, data science is applied in nearly every sector. By taking data science classes, you can explore various career paths such as data analyst, machine learning engineer, data architect, and business intelligence expert.

High Earning Potential:

With the increasing demand for data professionals, salaries in the field are also high. According to industry reports, data scientists earn some of the highest salaries in the tech industry, often surpassing $100,000 annually. Data science classes help you acquire the skills needed to tap into these lucrative job opportunities.

Versatility and Flexibility:

Data science classes cater to a wide range of skill levels and learning preferences. Whether you’re a beginner looking to learn basic concepts or a seasoned professional aiming to specialize in machine learning, there are classes designed to meet your needs. Many courses are offered both online and in person, providing flexibility for working professionals.

What Do Data Science Classes Cover?

Data science classes offer a comprehensive curriculum that covers the key areas necessary for building a strong foundation in data science. Some of the primary topics include:

Programming Languages:

The ability to code is central to data science. Most classes teach programming languages such as Python, R, and SQL, which are commonly used for data manipulation, statistical analysis, and machine learning.
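Python and SQL are often used together: SQL aggregates the data and Python drives the analysis. Here is a small sketch using Python's built-in `sqlite3` module and an in-memory database. The `orders` table, its columns, and the function name are hypothetical.

```python
import sqlite3

def top_customers(rows, limit=2):
    """Load (customer, amount) rows into an in-memory SQLite table and return the biggest spenders."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
        # Parameterized inserts avoid building SQL strings by hand.
        conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
        cur = conn.execute(
            "SELECT customer, SUM(amount) AS total FROM orders "
            "GROUP BY customer ORDER BY total DESC LIMIT ?",
            (limit,),
        )
        return cur.fetchall()
    finally:
        conn.close()
```

With orders of 20 and 15 for "ana", 5 for "ben", and 30 for "cai", the query returns `[("ana", 35.0), ("cai", 30.0)]`.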

Statistics and Mathematics:

A deep understanding of statistical concepts is essential in data science. Classes cover areas like probability, linear regression, and hypothesis testing, which are crucial for drawing reliable conclusions and making data-driven predictions.
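Simple linear regression shows how these statistical ideas become code. For one predictor, the least-squares slope is the covariance of x and y divided by the variance of x. The intercept then makes the line pass through the point of means. The sketch below uses only the standard library, and the function name is illustrative.

```python
from statistics import fmean
from typing import Sequence, Tuple

def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long sequences with at least two points")
    x_bar, y_bar = fmean(xs), fmean(ys)
    # Sum of cross-deviations and sum of squared x-deviations.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept
```

For the points (1, 3), (2, 5), (3, 7), (4, 9), `fit_line` returns `(2.0, 1.0)`, i.e. y = 2x + 1.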

Data Cleaning and Preprocessing:

Real-world data is often messy, and data science classes teach how to clean, organize, and preprocess data before analysis. This includes handling missing values, detecting outliers, and transforming raw data into a structured format.
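Two of the steps mentioned above, handling missing values and detecting outliers, can be sketched with Python's standard library. Median imputation and Tukey's 1.5 × IQR fences are common textbook choices and are used here as assumptions. Real projects pick methods to suit their data.

```python
import statistics
from typing import List, Optional

def fill_missing(values: List[Optional[float]]) -> List[float]:
    """Replace None entries with the median of the observed values (simple imputation)."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]

def iqr_outliers(values: List[float], k: float = 1.5) -> List[float]:
    """Return values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]
```

For the readings `[10, 12, None, 11, 13, 95, 12]`, the gap is filled with the median 12.0 and 95 is the only value flagged as an outlier.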

Machine Learning:

A key component of data science, machine learning enables computers to learn from data without being explicitly programmed. Classes cover machine learning algorithms like decision trees, random forests, support vector machines, and neural networks, providing the skills to build predictive models.
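The smallest possible decision tree is a single split, known as a decision stump. It shows the core idea behind tree-based models: try candidate thresholds on a feature and keep the one that separates the classes best. Random forests build many deeper trees and combine their votes. The sketch below is an illustrative, dependency-free version for one numeric feature and integer labels, and its function names are hypothetical. Libraries such as scikit-learn provide production implementations.

```python
from collections import Counter
from typing import List, Tuple

def fit_stump(xs: List[float], ys: List[int]) -> Tuple[float, int, int]:
    """Fit a one-split decision tree on a single numeric feature.

    Returns (threshold, label_if_at_or_below, label_if_above), minimizing training errors.
    """
    values = sorted(set(xs))
    best = None
    # Candidate thresholds sit halfway between consecutive distinct feature values.
    for a, b in zip(values, values[1:]):
        t = (a + b) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        # Each side predicts its majority label.
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    if best is None:
        raise ValueError("need at least two distinct feature values")
    return best[1], best[2], best[3]

def predict(stump: Tuple[float, int, int], x: float) -> int:
    threshold, left_label, right_label = stump
    return left_label if x <= threshold else right_label
```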

Data Visualization:

Communicating insights effectively is a crucial part of data science. Students learn to create compelling visualizations using tools like Tableau, Power BI, and Matplotlib, making it easier to present complex data to non-technical audiences.

Big Data Tools:

For those interested in working with massive datasets, many data science courses introduce big data technologies such as Hadoop, Apache Spark, and cloud computing platforms like AWS. These tools are essential for managing and analyzing large-scale data.

Types of Data Science Classes

There are various types of data science classes available, designed to cater to different learning needs and career goals:

Introductory Classes:

Ideal for beginners, these classes introduce fundamental concepts in data science, including programming, statistics, and basic machine learning. They are perfect for those looking to explore the field and build foundational skills.

Advanced Classes:

For individuals with a background in data analysis or programming, advanced classes delve deeper into topics like deep learning, natural language processing (NLP), and advanced machine learning algorithms. These courses help professionals gain specialized skills and expertise.

Certification Programs:

Many institutions offer data science certification programs that provide structured learning paths. These programs typically include a combination of theoretical lessons and hands-on projects, culminating in a certificate that demonstrates your expertise to potential employers.

Bootcamps:

Data science bootcamps are intensive, short-term programs that focus on practical learning. They are designed to equip students with industry-relevant skills quickly, making them job-ready within a few months.

Online Courses:

Online data science courses offer flexibility for working professionals or students who prefer to learn at their own pace. Platforms like Coursera, Udemy, and edX offer a range of courses, from beginner to advanced levels, with options to earn certificates.

Benefits of Enrolling in Data Science Classes

Hands-On Learning:

Many data science courses emphasize hands-on projects, allowing students to apply what they’ve learned to real-world datasets. This practical experience is invaluable when transitioning to a professional data science role.

Career Advancement:

For professionals already in the tech or business field, data science classes provide the opportunity to upskill and advance in their careers. Gaining expertise in data analysis, machine learning, and AI can open doors to higher-level positions.

Industry-Relevant Curriculum:

Most data science classes are designed with industry input, ensuring that students learn the tools and technologies that employers are looking for. This relevance enhances your employability and ensures that you’re ready to tackle real-world data challenges.

Networking Opportunities:

Enrolling in data science classes allows students to connect with peers, instructors, and industry experts. These connections can lead to valuable job referrals, collaborations, and mentorship opportunities.

Continuous Learning:

Data science is a constantly evolving field, with new tools, techniques, and algorithms being developed regularly. Taking data science classes helps you stay updated with the latest trends and technologies, making you more competitive in the job market.

How to Choose the Right Data Science Class

Assess Your Skill Level:

If you're new to data science, opt for introductory classes that cover the basics. For those with some experience, look for advanced courses that align with your career goals.

Instructor Expertise:

Ensure that the class is taught by qualified instructors with industry experience. Instructors who have worked in the field can provide valuable insights and practical knowledge.

Course Format and Flexibility:

Consider whether you prefer in-person or online learning. For working professionals, online or part-time classes offer the flexibility needed to balance learning with other responsibilities.

Course Reviews:

Read reviews and testimonials from past students to gauge the quality of the course. Look for classes that are highly rated for content, instruction, and practical learning opportunities.

Certifications:

If you’re looking to add credentials to your resume, choose courses that offer certifications from accredited institutions or recognized platforms.

Conclusion

Data Science Classes are an essential stepping stone for anyone looking to build a career in the rapidly growing field of data science. Whether you're just starting or looking to enhance your existing skills, these classes offer a structured pathway to learning the programming, analytical, and machine learning skills that are highly sought after in today’s job market. With a wide variety of courses available—ranging from beginner to advanced levels—there's a class for every aspiring data scientist. Take the first step today and unlock the opportunities that a data-driven future holds.

For more info:

ExcelR - Data Science, Data Analyst Course in Vizag

Address: iKushal, 4th floor, Ganta Arcade, 3rd Ln, Tpc Area Office, Opp. Gayatri Xerox, Lakshmi Srinivasam, Dwaraka Nagar, Visakhapatnam, Andhra Pradesh 530016

Phone no: 074119 54369


Directions: https://maps.app.goo.gl/4uPApqiuJ3YM7dhaA


Network Security Monitoring: The Key to Proactive Threat Detection and Prevention

Digital threats are becoming an ever-present reality. Companies must cope with them and take appropriate measures against numerous cyber attacks. It's a real challenge for organizations to keep their networks secure from malicious actors. Network security monitoring is a crucial defense mechanism against growing threats. This critical element allows businesses to monitor, identify, and mitigate potential attacks.

What is NSM?

Understanding NSM (Network Security Monitoring) is essential for any organization building a robust security posture. NSM involves the collection and analysis of network data. Advanced analysis is crucial for identifying and responding to security incidents. Proactive monitoring allows security teams to be one step ahead of known and unknown threats.

NSM is not just about detecting breaches after they occur. It's about identifying suspicious activities as early as possible. This approach reduces the window of opportunity for attackers. With network security and monitoring at the core, organizations can ensure their digital assets are continuously protected.

Components of an Effective Network Security Monitoring System

Network Security Monitoring (NSM) is essential to any organization’s cybersecurity infrastructure. An effective NSM system provides continuous surveillance and immediate response capabilities to detect, analyze, and respond to security threats. To build a robust NSM system, several key components must work together to ensure comprehensive coverage and fast action against potential attacks.

1. Comprehensive Visibility with Monitoring Tools

The cornerstone of any NSM system is its ability to provide comprehensive visibility across the network. This visibility is achieved through specialized network security monitoring tools continuously collecting and analyzing data. These tools include:

- Intrusion Detection Systems (IDS): These monitor network traffic for suspicious activity and policy violations, alerting administrators when potential threats are detected.

- Intrusion Prevention Systems (IPS): Besides detecting threats, an IPS can take automatic preventive measures, such as blocking or quarantining malicious activity in real time.

- Network Traffic Analyzers: These tools offer a deeper dive into the network’s traffic patterns, providing detailed analysis of protocols, bandwidth usage, and data flow, which helps identify irregularities.

This data collection and analysis occur in real time, allowing the organization to maintain an up-to-date understanding of its network’s security posture. Immediate insights into potential threats and the ability to trace anomalies allow IT teams to address issues before they escalate.

2. Ongoing Data Collection and Monitoring

An effective NSM system relies heavily on ongoing data collection from various network sources, including endpoints, servers, and network devices. The system establishes a baseline of normal behavior by continuously monitoring network traffic. This continuous monitoring provides several benefits:

- Detection of Intrusion Attempts: By comparing real-time activity against baseline data, the system can quickly detect anomalies that may indicate a network breach or unauthorized access attempt.

- Threat Hunting: The collected data enables security analysts to proactively hunt for threats that may evade traditional detection mechanisms.

- Forensic Analysis: In case of a security incident, the collected data serves as a valuable resource for conducting post-incident investigations. It can help security teams reconstruct attack vectors and understand the scope of the breach.
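Baseline comparison can be sketched as a simple statistical test. Assume the baseline is a sample of some traffic metric, for example bytes per minute from one host, collected during normal operation. A new value is then flagged when it lies more than three standard deviations from the baseline mean. The metric, the threshold of 3, and the function names are illustrative assumptions. Production NSM tools use richer models.

```python
import statistics
from typing import List, Tuple

def build_baseline(samples: List[float]) -> Tuple[float, float]:
    """Summarize 'normal' traffic as a (mean, sample standard deviation) pair."""
    return statistics.fmean(samples), statistics.stdev(samples)

def is_anomalous(value: float, baseline: Tuple[float, float], z_threshold: float = 3.0) -> bool:
    """Flag an observation whose z-score against the baseline exceeds the threshold."""
    mean, stdev = baseline
    if stdev == 0:
        return value != mean  # perfectly flat baseline: any change is unusual
    return abs(value - mean) / stdev > z_threshold
```

With a baseline of `[100, 102, 98, 101, 99]`, a reading of 103 passes, while a sudden 500 is flagged.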

3. Integration with Other Security Solutions

An NSM system cannot work in isolation. It must integrate seamlessly with the organization’s broader cybersecurity architecture for maximum efficiency and protection. Integrating with other security solutions enables a more holistic defense strategy, allowing information sharing between different systems. These integrations typically include:

- Security Information and Event Management (SIEM) Systems: SIEM solutions aggregate and correlate data from various sources, including firewalls, antivirus, and NSM tools, providing comprehensive threat intelligence.

- Endpoint Detection and Response (EDR): EDR tools can be integrated with NSM systems to provide visibility into endpoint activity, enabling faster detection and response to endpoint-based attacks.

- Firewall and VPN Solutions: These components work with NSM to enforce network access control and encrypt traffic, ensuring that only authorized users can interact with the network.

This integrated approach allows the NSM system to contribute valuable intelligence to a unified security ecosystem, making threat detection more efficient and accurate.

4. Threat Intelligence Integration

Incorporating threat intelligence into the NSM system enhances its ability to detect and respond to advanced threats. Threat intelligence provides information about known attack vectors, indicators of compromise (IoCs), and details about ongoing malicious campaigns. This data can be used to:

- Automatically block IP addresses or domains known to be associated with malicious activity.

- Fine-tune detection rules to focus on the most relevant threats based on real-world intelligence.

- Proactively identify threats targeting the organization’s industry or geographic region.
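At its simplest, matching traffic against threat-intelligence indicators means checking destination addresses against listed networks and destination hostnames against listed domains. In this sketch, the indicators are hypothetical placeholders taken from the IP range and domain reserved for documentation (RFC 5737 and RFC 2606). A real deployment would load them from a threat feed.

```python
import ipaddress

# Hypothetical indicators of compromise (documentation-only ranges and names).
BAD_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]
BAD_DOMAINS = {"malicious.example"}

def matches_ioc(dest_ip: str, dest_host: str = "") -> bool:
    """Return True if a connection's destination IP or hostname matches a known indicator."""
    ip = ipaddress.ip_address(dest_ip)
    # Membership tests across IP versions simply return False, so IPv6 input is safe here.
    if any(ip in net for net in BAD_NETWORKS):
        return True
    host = dest_host.lower().rstrip(".")
    # Match the listed domain itself and any of its subdomains, but not look-alike names.
    return any(host == d or host.endswith("." + d) for d in BAD_DOMAINS)
```

A match could then trigger the automatic blocking described above.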

5. Automation and AI-Driven Analytics

The increasing complexity of cyber threats has made automation and AI-driven analytics indispensable in modern NSM systems. Automation enhances the system's ability to:

- Respond to Incidents Faster: Automated response mechanisms can be triggered when a threat is detected, reducing the time to neutralize an attack. For example, suspicious IP addresses can be blocked, or affected systems can be isolated automatically.

- Analyze Large Volumes of Data: AI-driven analytics helps process vast volumes of network traffic, identifying patterns and irregularities more effectively than manual analysis. Machine learning models can be trained to detect subtle signs of an attack that traditional tools might miss.

6. Rapid Response and Incident Mitigation

The ability to respond rapidly to identified threats is critical for minimizing damage. Real-time data collection, threat analysis, and response capabilities allow organizations to neutralize attacks before they cause significant harm. An effective response system should include:

- Incident Response Playbooks: Predefined response plans ensure incidents are handled consistently and efficiently. These playbooks can automate the initial response while escalating the issue to security analysts for further investigation.

- Containment and Eradication: Once a threat is detected, the system should isolate the affected systems and prevent the attacker from spreading laterally across the network. After containment, eradication measures should remove the threat entirely.

- Recovery: After neutralizing the threat, the system should restore normal operations as quickly as possible while addressing the vulnerability that led to the attack.
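A playbook like this can be expressed as an ordered list of steps with a human checkpoint. The sketch below assumes automated containment, then escalation to an analyst, with eradication and recovery only after approval. It records the actions as text rather than calling any real firewall or EDR API. All names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Incident:
    host: str
    indicator: str
    actions: List[str] = field(default_factory=list)

def run_playbook(incident: Incident, analyst_approved: bool = False) -> List[str]:
    """Walk an incident through containment, escalation, eradication, and recovery."""
    log = incident.actions
    # Automated first response: contain the threat so it cannot spread laterally.
    log.append(f"containment: isolate {incident.host}")
    log.append(f"containment: block {incident.indicator}")
    # Escalate to a human before disruptive cleanup steps.
    log.append("escalate: notify on-call analyst")
    if not analyst_approved:
        return log
    log.append(f"eradication: remove artifacts of {incident.indicator} from {incident.host}")
    log.append(f"recovery: restore {incident.host} from a known-good state and patch the root cause")
    return log
```

Keeping the steps in one ordered function makes the response consistent from one incident to the next, which is the main point of a playbook.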

7. Scalability and Flexibility

As organizations grow, their network infrastructure evolves, and so do their security needs. An effective NSM system should be scalable and flexible, adapting to the growing volume of network traffic, the increasing number of connected devices, and the evolving threat landscape. Cloud-based NSM solutions, for instance, offer the flexibility to scale monitoring capabilities as the organization expands, ensuring that network visibility remains intact.

The Role of NSM in Cybersecurity

In cybersecurity, network security monitoring extends beyond mere observation. It requires a strategic approach to addressing and mitigating risks. Network security monitoring focuses on identifying potential attackers, understanding their tactics, and preparing effective responses.

NSM also emphasizes the importance of threat prevention and intelligence. By analyzing network data and correlating it with known threat patterns, an organization can anticipate attacks and take steps to prevent them. This proactive stance is especially vital because threats evolve constantly.

Additionally, NSM provides ongoing assessments of an organization's security posture. Regular analysis of data flows and user behaviors allows for adjustments and improvements, ensuring the network remains resilient against emerging threats. Continuous feedback is a cornerstone of an effective NSM strategy.

Benefits of Real-Time Network Security Monitoring

Real-time monitoring has become a game-changer for advanced security strategies. It allows organizations to take active measures against threats. This practice minimizes the impact of possible breaches and ensures that incidents are contained quickly.

Key benefits of network security monitoring include:

1. Faster Response Times

One of the most significant advantages of real-time NSM is the rapid response it enables. Continuous monitoring identifies potential threats the moment they occur, allowing security teams to act swiftly. The quicker a breach or suspicious activity is detected, the faster an organization can contain and mitigate its impact. This immediate reaction is critical in minimizing the damage from cyber attacks, as delayed responses can lead to more extensive data losses or prolonged system downtimes.

For example, a Distributed Denial-of-Service (DDoS) attack detected in real time can be quickly mitigated by redirecting traffic or employing rate-limiting techniques, preventing widespread disruption.
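Rate limiting is commonly implemented with a token bucket. Each source gets a bucket that refills at a fixed rate and holds a limited burst, and a request is allowed only if a token is available. Here is a minimal sketch, assuming the caller supplies timestamps in seconds and keeps one bucket per source IP. The rate and capacity values are illustrative.

```python
class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, now: float = 0.0):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so normal clients are not throttled
        self.last = now

    def allow(self, now: float) -> bool:
        """Consume one token if available; return whether the request may proceed."""
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

For example, with `rate=1.0` and `capacity=3`, a source can send three requests at once, the fourth is dropped, and one more is admitted after each second of quiet. Under a flood, each source is held to that steady rate.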

2. Improved Threat Detection Accuracy

Real-time NSM significantly enhances threat detection accuracy. It can identify patterns and anomalies indicating malicious activities by constantly analyzing network traffic. Continuous data analysis enables NSM systems to learn and adapt to new and emerging threats, improving detection algorithms over time.

Furthermore, real-time monitoring can reduce the false positives that often plague traditional security systems. Because the system continuously monitors and refines its understanding of normal network behavior, it becomes better at distinguishing between benign and suspicious activities. As a result, security teams can focus their efforts on real threats rather than wasting time on false alarms.

3. Reduced Risk of Data Breach

Data breaches can have devastating consequences, including financial loss, reputational damage, and legal penalties. Real-time NSM plays a vital role in reducing the risk of such breaches by detecting threats at the earliest stage. Whether it’s unauthorized access attempts, malware infections, or suspicious data transfers, real-time monitoring provides instant alerts, allowing teams to address the issue before any sensitive information is compromised.

By securing sensitive data in real time, organizations can protect their customers' personal information, intellectual property, and internal communications. This level of security is especially critical for industries that handle high volumes of confidential data, such as finance, healthcare, and legal sectors.

4. Enhanced Network Visibility

Real-time NSM provides enhanced visibility into all aspects of network activity, offering a comprehensive view of everything happening within the infrastructure. This visibility extends across all devices, users, and data traffic, ensuring no activity goes unnoticed.

Organizations can more effectively manage their resources, identify potential vulnerabilities, and enforce security policies by having a clear and detailed picture of their network. This visibility not only helps detect unauthorized or suspicious behavior but also aids in understanding the overall health and performance of the network. Regular monitoring of user activity, system performance, and data flows helps IT teams maintain optimal network efficiency while identifying potential bottlenecks or inefficiencies.
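Visibility often starts with summarizing flow records. The sketch below totals bytes per key (source host, destination port, and so on) to surface "top talkers", a common first look when hunting for bottlenecks or unexpected transfers. The `Flow` record layout and the `top_by` helper are simplified, hypothetical stand-ins for real flow-export formats such as NetFlow or IPFIX.

```python
from collections import Counter
from typing import Callable, Iterable, NamedTuple


class Flow(NamedTuple):
    """Hypothetical, simplified flow record."""
    src: str
    dst: str
    dst_port: int
    num_bytes: int


def top_by(flows: Iterable[Flow], key: Callable[[Flow], object], n: int = 3):
    """Return the n keys with the most bytes, as (key, total_bytes) pairs."""
    totals = Counter()
    for flow in flows:
        totals[key(flow)] += flow.num_bytes
    return totals.most_common(n)


if __name__ == "__main__":
    flows = [
        Flow("10.0.0.5", "10.0.1.1", 443, 1200),
        Flow("10.0.0.9", "10.0.1.1", 443, 300),
        Flow("10.0.0.5", "10.0.2.2", 22, 800),
        Flow("10.0.0.7", "10.0.1.1", 53, 100),
    ]
    print(top_by(flows, key=lambda f: f.src))       # busiest hosts
    print(top_by(flows, key=lambda f: f.dst_port))  # busiest services
```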

5. Proactive Incident Prevention

Real-time network monitoring is not just about responding to threats; it’s also about proactive prevention. By constantly analyzing network behavior, NSM systems can identify precursors to attacks, such as unusual traffic spikes or abnormal login attempts. With this early warning system, organizations can take preventive measures before a full-scale attack occurs, such as adjusting firewall rules, isolating suspicious devices, or tightening access controls.

This proactive approach significantly reduces the likelihood of successful attacks, as many cyber threats are thwarted before they can gain a foothold in the network.
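Abnormal login attempts, one of the precursors mentioned above, can be caught with a sliding-window counter. This minimal sketch raises an alert when a single source exceeds a failure threshold within a time window. The threshold, window length, class name, and the assumption that events arrive in timestamp order are illustrative choices. An alert is the point at which preventive steps, such as temporarily blocking the source, could be triggered.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Alert when one source has more than `max_failures` failed logins in `window_s` seconds."""

    def __init__(self, max_failures: int = 5, window_s: float = 60.0):
        self.max_failures = max_failures
        self.window_s = window_s
        self.events = defaultdict(deque)  # source -> timestamps of recent failures

    def record_failure(self, src: str, ts: float) -> bool:
        """Record a failure at time `ts` (assumed non-decreasing); return True to alert."""
        q = self.events[src]
        q.append(ts)
        # Discard failures that have slid out of the window
        while q and ts - q[0] > self.window_s:
            q.popleft()
        return len(q) > self.max_failures


if __name__ == "__main__":
    monitor = FailedLoginMonitor()
    for t in range(6):  # six rapid failures from one address
        if monitor.record_failure("198.51.100.23", t):
            print(f"t={t}s: possible brute-force attempt from 198.51.100.23")
```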

6. Support for Compliance and Reporting

Many industries, including healthcare, finance, and e-commerce, are subject to strict regulatory requirements related to data security and privacy. Compliance standards such as GDPR, HIPAA, and PCI-DSS mandate that organizations follow specific guidelines to protect sensitive information. Real-time NSM helps organizations stay compliant by generating detailed logs and reports on network activity.

These logs are valuable during compliance audits, providing concrete evidence of ongoing security efforts and adherence to required protocols. Additionally, NSM systems can automatically alert security teams when activity violates compliance rules, so non-compliant behavior can be addressed swiftly.
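As an illustration of an automated compliance alert, the sketch below checks flow records against one rule. The rule is that hosts in a hypothetical sensitive segment (10.20.0.0/16) must only be reached over encrypted protocols. It is an assumed example of an organization's own policy, not text taken from GDPR, HIPAA, or PCI DSS, and the segment, port list, and function name are all illustrative.

```python
import ipaddress
from typing import Iterable, List, NamedTuple

# Hypothetical policy: this segment holds regulated data and must not be
# reached over plaintext protocols.
SENSITIVE_NET = ipaddress.ip_network("10.20.0.0/16")
PLAINTEXT_PORTS = {21: "FTP", 23: "Telnet", 80: "HTTP"}


class Flow(NamedTuple):
    src: str
    dst: str
    dst_port: int


def compliance_violations(flows: Iterable[Flow]) -> List[str]:
    """Return one alert message per flow that breaks the plaintext-protocol rule."""
    alerts = []
    for f in flows:
        if ipaddress.ip_address(f.dst) in SENSITIVE_NET and f.dst_port in PLAINTEXT_PORTS:
            proto = PLAINTEXT_PORTS[f.dst_port]
            alerts.append(f"{f.src} -> {f.dst}:{f.dst_port} uses {proto} to a sensitive host")
    return alerts


if __name__ == "__main__":
    for alert in compliance_violations([
        Flow("10.1.0.4", "10.20.3.7", 23),   # Telnet into the sensitive segment
        Flow("10.1.0.4", "10.20.3.7", 443),  # encrypted, allowed
        Flow("10.1.0.9", "10.30.0.1", 80),   # outside the segment
    ]):
        print(alert)
```

Each alert string can also be written to the audit log, which gives the same event both an immediate notification and a record for later review.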

7. Reduced Operational Downtime

Network downtime, whether caused by cyberattacks or system failures, can result in significant financial and productivity losses for organizations. Real-time NSM helps minimize operational downtime by detecting and addressing issues before they escalate. For example, real-time monitoring can detect hardware malfunctions, system overloads, or network congestion that could lead to outages.

By addressing these issues proactively, organizations can keep their systems running smoothly, ensuring continuous operations and minimizing disruptions to business activities.
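Congestion is commonly spotted by converting interface byte counters (such as the SNMP IF-MIB octet counters) into link utilization: utilization (%) = (change in bytes × 8) / (interval in seconds × link speed in bit/s) × 100. The helper below is a hypothetical sketch of that arithmetic. It assumes at most one counter wrap between samples. 32-bit counters such as ifInOctets wrap far sooner than 64-bit ones such as ifHCInOctets.

```python
def link_utilization(octets_t0: int, octets_t1: int, interval_s: float,
                     link_bps: float, counter_bits: int = 64) -> float:
    """Percent utilization of a link between two byte-counter samples."""
    delta = octets_t1 - octets_t0
    if delta < 0:
        # The counter wrapped around between samples (assume a single wrap)
        delta += 2 ** counter_bits
    return delta * 8 / (interval_s * link_bps) * 100


if __name__ == "__main__":
    # 6 GB transferred in 60 s on a 1 Gbit/s link
    util = link_utilization(0, 6_000_000_000, interval_s=60, link_bps=1e9)
    print(f"utilization: {util:.1f}%")
    if util > 90:  # illustrative alert threshold
        print("possible congestion")
```

In this example the link runs at 80%, below the assumed 90% alert threshold. Sustained readings above such a threshold are the kind of early signal that lets teams act before an outage.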

8. Cost-Effective Security Management

While investing in an NSM system may seem like an additional expense, it leads to cost savings in the long run. The ability to detect and neutralize threats early reduces the costs associated with data breaches, downtime, and legal penalties. Furthermore, real-time monitoring automates many aspects of security management, reducing the need for manual intervention and allowing security teams to focus on more strategic tasks.

This automation also helps lower the risk of human error, a common cause of security breaches. By streamlining security operations and improving efficiency, real-time NSM becomes a cost-effective way to maintain robust network security.

9. Enhanced Incident Response Collaboration

Real-time monitoring facilitates better collaboration between security teams during incident response. Since the system provides continuous insights and up-to-the-minute data on network activity, all team members can work with the most current information. This collaborative environment improves decision-making, reduces the time needed to analyze incidents, and ensures that teams can coordinate their efforts to resolve security issues more efficiently.

Proactive Threat Prevention

A proactive approach sets NSM apart from traditional security methods. Instead of waiting for a breach, NSM identifies and neutralizes threats before they become full-scale attacks. This practice is far more effective at protecting sensitive data and supporting network stability.

Network security monitoring tools analyze traffic in real time, helping security teams spot anomalies and act immediately. By eliminating threats before they can cause harm, NSM prevents potential data breaches and enhances overall network performance.

Proactive measures also allow for the development of advanced security protocols. NSM focuses on all types of threats that can potentially target the network. Security teams implement measures to prevent these attacks from ever reaching their targets. This level of foresight is crucial for staying ahead of cybercriminals.

Enhancing Network Security with NSM

Integrating NSM technologies strengthens an organization's overall security posture. Network security monitoring provides continuous visibility and awareness, fostering a security-first mindset, and gives organizations confidence that all network activity aligns with security policies and standards.

A network security monitoring system protects IT infrastructure against various threats. This process includes multiple security layers, from preventing unauthorized access to addressing complex cyberattacks. A robust and resilient network can adapt to emerging threats and mitigate the associated risks.

Safeguarding Your Network's Future

Network security monitoring is a strategic investment that will pay off. This advanced solution allows companies to detect and prevent threats before they cause harm. A comprehensive NSM strategy also gives confidence that an organization's digital assets are protected constantly.

Companies can better defend against cyber threats by embracing NSM and using the latest network security monitoring tools. Long-term security and operational success help businesses thrive in a competitive market. Protect your network today to secure your success tomorrow.


AI-Powered Penetration Testing: Automating Security Assessments

Imagine going through a library full of books, one page at a time, searching for a specific sentence. This is how conventional pen testing can feel. AI-powered penetration testing acts like a skilled researcher who can quickly scan and analyze vast amounts of information, pinpointing potential security weaknesses.

In this blog post, we will discuss how AI can improve cybersecurity through pen testing and enable faster security assessments for businesses.

What is AI Penetration Testing?

AI penetration testing is an advanced method that automates penetration testing using machine learning and artificial intelligence. It improves accuracy and efficiency by simulating hacking attempts.

AI-based technology can:

1. Analyze massive volumes of data to find trends and forecast potential attack vectors.

2. Create complete attack simulations to provide businesses with important insights into vulnerabilities and probable attack outcomes.

As businesses use a variety of technologies and numerous IP addresses, it becomes more difficult for pen testers to examine everything in a reasonable amount of time and produce accurate results. AI and machine learning help by automating tasks and analyzing data.

The Five Stages of Penetration Testing

Penetration testing involves five phases:

1. Reconnaissance: Gathering information about a target system to plan an attack strategy. Reconnaissance can be passive or active; active reconnaissance involves direct interaction with the target.

2. Scanning: Using tools to identify open ports and inspect network traffic. Scanning can be automated or human-led, but even automated scans require some human intervention.

3. Vulnerability Assessment: Using reconnaissance and scanning data to identify potential vulnerabilities, with resources such as the National Vulnerability Database used to rate their severity.

4. Exploitation: Using tools such as Metasploit to simulate real-world attacks and bypass security restrictions, while taking care not to crash the target systems.

5. Reporting: Producing a detailed report of the penetration test's findings, which can be used to fix vulnerabilities and strengthen security measures. This includes a risk briefing, remediation advice, and strategic recommendations.

Penetration testing, which consists of five stages, is a critical defense in cybersecurity, mimicking real-world assaults to identify system flaws and resolve vulnerabilities before they can be exploited.
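To make the scanning stage concrete, here is a minimal TCP "connect" scan sketch using only Python's standard library. It reports a port as open when a full TCP handshake succeeds. The function names, timeout, and worker count are illustrative assumptions. Dedicated scanners are far more capable, and scans like this belong only on systems you are authorized to test.

```python
import socket
from concurrent.futures import ThreadPoolExecutor


def is_open(host: str, port: int, timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to host:port completes."""
    try:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            return s.connect_ex((host, port)) == 0
    except OSError:
        # Covers resolution failures and timeouts raised as exceptions
        return False


def scan(host: str, ports, workers: int = 50) -> list:
    """Check `ports` concurrently and return the open ones in ascending order."""
    ports = list(ports)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda p: (p, is_open(host, p)), ports))
    return sorted(p for p, ok in results if ok)


if __name__ == "__main__":
    # Scan well-known ports on the local machine only
    print(scan("127.0.0.1", range(1, 1025)))
```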

Now, let us look at how AI applies to pen testing.

AI in Pen Testing: Potential and Applications

AI can be applied to all stages of penetration testing in the following ways:

• Productivity and Team Augmentation: AI acts as a virtual teammate giving contextual guidance to human testers, enhancing team productivity.

• Reconnaissance and Gathering of Information: AI automates reconnaissance, efficiently collecting comprehensive information about companies, systems, and domains, aiding in initial penetration testing stages.

• SOC and SIEM Applications: AI tools reduce the manual sifting of data in Security Operations Center (SOC) work and Security Information and Event Management (SIEM) workflows.

• Performing Advanced and Customized Attacks: AI can tackle complex attacks and create customized attacks tailored to specific organizational contexts.

• Reducing False Positives: AI streamlines vulnerability management by analyzing exploitability and recommending the most critical patches, saving businesses time and resources.
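The prioritization idea in the last bullet can be illustrated without any AI. The sketch below is a deliberately simple, rule-based stand-in. It maps CVSS v3.x base scores to the standard qualitative ratings (None, Low, Medium, High, Critical), which are the ratings the National Vulnerability Database displays. It then ranks findings so that exploitable issues come first. The `Finding` fields and the ordering rule are hypothetical choices.

```python
from dataclasses import dataclass


def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


@dataclass
class Finding:
    finding_id: str          # hypothetical identifier from a scan report
    cvss: float
    exploit_available: bool  # e.g. a public exploit exists


def prioritize(findings):
    """Exploitable findings first, then by descending CVSS score."""
    return sorted(findings, key=lambda f: (not f.exploit_available, -f.cvss))


if __name__ == "__main__":
    findings = [Finding("A", 9.1, False), Finding("B", 6.5, True), Finding("C", 7.5, True)]
    for f in prioritize(findings):
        print(f.finding_id, f.cvss, cvss_severity(f.cvss), "exploitable" if f.exploit_available else "")
```

Ranking on exploitability before raw score reflects the bullet's point: a medium-severity flaw with a working exploit can deserve a patch before a critical one that nobody can currently reach.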

AI in pen testing improves security for B2B integrations by increasing efficiency, automating information collection, discovering hidden vulnerabilities, developing personalized attacks, and reducing false positives.

Further, to strengthen B2B integration, businesses should adopt up-to-date security practices, monitor continuously, and educate employees on security awareness.

Prepare for Future Security Vulnerabilities in B2B Integration

Businesses can reduce future security risks in B2B integration by implementing a multi-layered security strategy, investing in modern technology, conducting frequent audits, staying up to date, and ensuring employee training.

Here are the key steps that organizations can take:

1. Create a comprehensive security plan and use modern technologies such as AI and machine learning.

2. Conduct frequent security audits and stay up to date on cybersecurity developments.

3. Provide ongoing personnel training and awareness programs, which are essential for preparing for and mitigating future security concerns.

After identifying vulnerabilities with AI-powered penetration testing, enterprises may use AI and machine learning to continually monitor B2B data exchanges.

Streamlining B2B Security with Pen Testing Apps

The growing complexity of B2B interactions, especially with feature-rich super applications, requires strong security precautions, and automated pen testing apps can serve as an underused weapon.

Automated AI-driven pen testing apps are essential tools today because they:

1. Streamline Vulnerability Detection: This frees up security specialists for strategic responsibilities and in-depth research into the complex architecture of super applications.

2. Automate Repetitive Tasks: This enables quicker scans and real-time threat detection, which is critical for protecting the ever-increasing attack surface of super applications.

Combined with traditional pen testing, these techniques provide a faster, more efficient security strategy. This helps ensure that even the most complex super applications function reliably, building confidence and safeguarding sensitive B2B information.

To properly deploy AI-powered cybersecurity, organizations must train security experts to use these modern technologies. AI certifications provide individuals with the knowledge necessary to use AI tools for improved vulnerability identification and threat mitigation. These certifications focus on automated security, AI assessments, and B2B security testing efficiency, which improve B2B security posture and enable enterprises to implement innovative solutions.

Why Do Pen Testing Certifications Matter?

There are several recognized penetration testing certifications, most of which require prior experience in systems administration and networking. A certification adds credibility and demonstrates skill level, and it assures clients that a certified individual is conducting a comprehensive manual investigation of their systems. In-house penetration testing teams offer more frequent testing, faster response times, and lower costs compared to external services.

Takeaways

AI-powered penetration testing automates penetration tests with machine learning and AI.

Penetration testing involves five stages: reconnaissance, scanning, vulnerability assessment, exploitation, and reporting.

Automated penetration testing increases team productivity and supports SOC and SIEM applications.

Preparing for future security vulnerabilities in B2B integration requires a multi-layered security strategy, advanced technologies, regular security audits, awareness of cybersecurity trends, and personnel training.