#Used IBM Server Storage Equipment

Containerized Data Center Market

Containerized Data Center Market Size, Share, Trends: Huawei Technologies Leads

Rising Adoption of Cloud and Edge Computing Fuels Market Growth

Market Overview:

The global containerized data center market is rapidly expanding, driven by the increasing adoption of cloud computing, edge computing, and the need for scalable and portable data center infrastructure. In 2024, North America emerged as the leading region, accounting for a significant share of the global revenue. The growth in this market is primarily propelled by businesses migrating their workloads to cloud and edge networks, necessitating modular, scalable, and quickly deployable data center solutions. Containerized data centers, with their plug-and-play design and portability, are particularly well-suited to meet this demand.

Market Trends:

One major trend driving the containerized data center market is the growing need for energy-efficient and sustainable data centers. Traditional brick-and-mortar data centers consume vast amounts of energy for cooling and power distribution. In contrast, containerized data centers, with their compact design and advanced cooling systems, can reduce energy consumption by up to 40% compared to traditional data centers. This trend is significant as it addresses the increasing focus on sustainability and energy efficiency in the data center industry, with companies like Google deploying containerized data centers globally to reduce their carbon footprint.

Market Segmentation:

The containerized data center market is segmented into hardware and software components. The hardware segment, which includes servers, storage systems, and network equipment, dominated the market in 2024, accounting for over 60% of the global revenue. This segment is expected to maintain its dominance through 2031, driven by continuous advancements in server and storage technologies. In 2024, Dell Technologies introduced a new line of servers optimized for containerized deployments, featuring improved density and energy efficiency. The software segment, which includes virtualization platforms, container orchestration tools, and management software, is expected to exhibit the highest CAGR of 25.5% from 2024 to 2031, driven by the growing adoption of cloud-native technologies and the increasing complexity of managing containerized infrastructure.

Key Market Players:

Huawei Technologies Co., Ltd.

Dell Technologies Inc.

Hewlett Packard Enterprise Development LP

Cisco Systems, Inc.

IBM Corporation

Schneider Electric SE

Contact Us:

Name: Hari Krishna

Email us: [email protected]

Website: https://aurorawaveintellects.com/

Spectra Technologies Inc: Used Dell EMC Servers with Performance You Can Trust

In an era where technology is at the core of business operations, companies are constantly seeking ways to optimize their IT infrastructure. High-performing servers are crucial to supporting the growing demands of modern enterprises, but new equipment can come with a hefty price tag. For businesses looking to balance performance and budget, used Dell EMC servers provide an ideal solution. Spectra Technologies Inc. stands out as a trusted provider of these high-quality, pre-owned servers that deliver exceptional value without compromising on performance.

Spectra Technologies Inc. offers businesses access to a wide range of used Dell EMC servers, enabling companies to leverage the power and reliability of Dell’s enterprise-grade hardware at a fraction of the cost of new models. Along with these high-quality servers, Spectra Technologies provides expert IBM server maintenance services, ensuring that all the hardware is running at peak efficiency for years to come.

Why Choose Used Dell EMC Servers?

Dell EMC has a reputation for manufacturing some of the most reliable and powerful servers on the market. Known for their performance, scalability, and longevity, Dell EMC servers have been trusted by some of the world’s most demanding organizations. However, purchasing brand-new servers can be a significant financial investment. By choosing used Dell EMC servers from Spectra Technologies, businesses can get the same level of performance and reliability, but at a much lower cost. Here’s why used Dell EMC servers are a smart choice for companies looking to optimize their IT infrastructure.

Cost Savings Without Compromise

One of the most obvious advantages of purchasing used Dell EMC servers is the substantial cost savings. Brand-new enterprise-grade servers can be prohibitively expensive, especially for small and medium-sized businesses. Used servers, by contrast, offer significant savings while still providing high performance and reliability. Spectra Technologies puts each pre-owned server through rigorous testing and quality checks so that it performs just as well as new equipment.

Proven Performance and Scalability

Dell EMC servers are known for their ability to handle demanding workloads, from running complex business applications to managing large databases. Even used units retain much of their performance capabilities, offering excellent processing power, memory capacity, and storage options. Whether you need to run virtualized environments, big data applications, or high-traffic websites, a used Dell EMC server from Spectra Technologies will meet your needs. Additionally, Dell EMC servers are highly scalable, meaning you can expand and upgrade your infrastructure as your business grows. You can easily add more storage, memory, or processing power to accommodate increasing demands, all while keeping operational costs in check.

Reliability and Durability

Dell EMC is a brand renowned for the durability and longevity of its servers. Even after years of use, used Dell EMC servers remain reliable and continue to deliver the performance required for mission-critical tasks. Spectra Technologies offers used servers that have been carefully refurbished to ensure they are in excellent working condition, providing businesses with the reliability they need to operate without disruption.

Energy Efficiency

As energy costs continue to rise, companies are increasingly looking for ways to reduce power consumption. Dell EMC servers are designed with energy efficiency in mind, using advanced technologies to deliver high performance without excessive power usage. By purchasing used servers from Spectra Technologies, businesses can benefit from these energy-saving features while paying far less up front than they would for new hardware.

Comprehensive Warranty and Support

Spectra Technologies backs all of its used Dell EMC servers with a warranty, offering businesses peace of mind knowing that their investment is protected. In addition to warranties, Spectra Technologies provides ongoing technical support to help companies troubleshoot issues, perform routine maintenance, and ensure the long-term performance of their servers.

Powering Business with IBM: Used IBM Power 10 Servers

While Dell EMC servers are a great choice for many businesses, some organizations may require even more specialized hardware. Used IBM Power 10 servers are an excellent option for companies that need superior performance and the ability to handle demanding workloads, including large-scale data processing, AI applications, and complex enterprise resource planning (ERP) systems.

The IBM Power 10 architecture represents a significant leap forward in server technology, offering industry-leading performance, security, and energy efficiency. Businesses that need maximum processing power can rely on these systems to deliver exceptional results, especially in environments where performance is a critical factor. By opting for used IBM Power 10 servers from Spectra Technologies, companies can access this next-generation technology without the expense of purchasing brand-new systems.

IBM Server Maintenance: Ensuring Peak Performance

While purchasing used Dell EMC servers or used IBM Power 10 systems is an excellent investment, ensuring these systems are properly maintained is equally important. Spectra Technologies provides IBM server maintenance services to help businesses maximize the lifespan and performance of their servers. Regular maintenance is essential to prevent downtime, extend hardware life, and ensure that systems continue to operate efficiently.

Proactive Monitoring

One of the keys to successful IBM server maintenance is proactive monitoring. Spectra Technologies offers monitoring services that track server performance in real time, allowing issues to be identified before they cause major problems. By catching potential issues early, businesses can avoid costly downtime and prevent minor problems from escalating into critical failures.
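The post does not describe how Spectra's monitoring works. As a minimal sketch of the general idea, assuming simple threshold rules rather than any vendor's actual tooling, the code below classifies readings as ok, warning, or critical and lists the metrics that have crossed an early-warning level. The metric names and threshold values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    warning: float   # level at which to raise an early alert
    critical: float  # level treated as an active failure


# Hypothetical thresholds for illustration only; real values depend on the hardware.
THRESHOLDS = {
    "cpu_temp_c": Threshold(warning=75.0, critical=90.0),
    "disk_reallocated_sectors": Threshold(warning=10, critical=100),
    "psu_output_deviation_pct": Threshold(warning=3.0, critical=5.0),
}


def classify(metric: str, value: float) -> str:
    """Return 'ok', 'warning', or 'critical' for one reading."""
    t = THRESHOLDS[metric]
    if value >= t.critical:
        return "critical"
    if value >= t.warning:
        return "warning"
    return "ok"


def early_warnings(readings: dict[str, float]) -> list[str]:
    """Metrics that crossed the warning level but not yet the critical one."""
    return sorted(m for m, v in readings.items() if classify(m, v) == "warning")


if __name__ == "__main__":
    sample = {"cpu_temp_c": 78.5, "disk_reallocated_sectors": 2, "psu_output_deviation_pct": 5.4}
    for name, value in sample.items():
        print(f"{name}: {value} -> {classify(name, value)}")
    print("act before failure:", early_warnings(sample))
```

Keeping a warning band separate from the critical band is what makes the approach proactive: in the sample, the CPU temperature is caught while it is only elevated, not yet failing.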

Timely Repairs and Replacements

Even with regular maintenance, hardware components will eventually need repairs or replacement. Spectra Technologies provides timely support for repairs and parts replacements, including for used IBM Power 10 systems. Whether it’s a failing hard drive, a malfunctioning power supply, or other hardware issues, Spectra’s technicians are trained to quickly resolve issues and restore servers to optimal performance.

Software and Firmware Updates

IBM server maintenance isn’t just about physical hardware; it also includes regular software and firmware updates. Keeping your servers’ operating systems and firmware up-to-date ensures that your systems are secure and performing optimally. Spectra Technologies assists businesses with these updates, ensuring that their used IBM Power 10 or used Dell EMC servers are always running the latest software for improved functionality and security.

Security Patches and Threat Management

With the increasing frequency and sophistication of cyber threats, security is a top concern for businesses. Spectra Technologies includes security monitoring and patch management as part of its maintenance services, ensuring that vulnerabilities are addressed promptly. For businesses running used IBM Power 10 servers or used Dell EMC servers, regular security updates are crucial to safeguarding sensitive data and ensuring compliance with industry regulations.

Tailored Maintenance Plans

Each business has unique needs when it comes to IBM server maintenance. Spectra Technologies offers tailored maintenance plans to meet the specific requirements of your organization. Whether you need basic support or full-scale IT management, Spectra can design a solution that works for your business, ensuring maximum uptime and minimal disruption.

The Spectra Technologies Advantage

At Spectra Technologies Inc., the focus is on delivering high-quality used IT equipment, including used Dell EMC servers and used IBM Power 10 systems, combined with expert maintenance services that extend the life and performance of your hardware. The company’s commitment to providing reliable, cost-effective solutions makes it a trusted partner for businesses of all sizes looking to optimize their IT infrastructure without breaking the bank.

With Spectra Technologies, businesses can trust that their servers will continue to perform at their best, even as they scale and evolve. Whether you’re looking for used servers or need comprehensive IBM server maintenance, Spectra Technologies is your go-to source for quality, service, and support.

By choosing used Dell EMC servers and partnering with Spectra Technologies for ongoing maintenance, your business can enjoy the benefits of high-performance IT infrastructure at a cost-effective price point. The reliability, scalability, and energy efficiency of these servers, combined with expert maintenance, will ensure that your business stays competitive in today’s technology-driven world.

The Role of Artificial Intelligence in Sustainable IT

Introduction

In today’s rapidly evolving technological landscape, the importance of sustainability in IT cannot be overstated. Amid growing environmental concerns, the IT sector faces increasing pressure to adopt sustainable practices. Artificial Intelligence (AI) is emerging as a powerful tool with the potential to significantly advance sustainability efforts. This article explores the crucial role AI plays in promoting sustainable IT practices, highlighting its applications in energy efficiency, resource management, predictive maintenance, green software development, and sustainable supply chain management.

Understanding Sustainable IT

Sustainable IT refers to the practice of designing, operating, and disposing of technology in ways that minimize environmental impact. This encompasses energy-efficient data centers, responsible e-waste management, and the use of renewable energy sources. The importance of sustainability in the IT sector is driven by the industry’s significant energy consumption and e-waste production. Achieving sustainable IT involves overcoming challenges such as high energy demands, rapid technological obsolescence, and the environmental costs of production and disposal. However, these challenges also present opportunities for innovation and the adoption of greener practices, with AI playing a pivotal role in this transformation.

AI and Energy Efficiency

AI plays a crucial role in optimizing energy consumption in data centers, which are notorious for their high energy demands. AI-driven energy management systems can analyze vast amounts of data to predict and manage energy use more efficiently, reducing waste and costs. For example, AI algorithms can dynamically adjust cooling systems and optimize server workloads to minimize energy usage. Companies like Google have successfully implemented AI to manage their data centers, achieving significant energy savings and reducing their carbon footprint. These AI-driven innovations highlight the potential for substantial improvements in energy efficiency within the IT sector.
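The post does not say how Google's system works, so it is not reproduced here. To illustrate the "optimize server workloads" half of the idea, the sketch below swaps in a classic non-AI heuristic, first-fit decreasing bin packing, which consolidates workloads onto as few servers as possible so the rest can be idled. Loads are hypothetical percentages of one server's capacity, and the function name `consolidate` is illustrative.

```python
def consolidate(loads: list[int], capacity: int) -> list[list[int]]:
    """Assign workloads to servers with first-fit decreasing bin packing.

    Each inner list is one active server; servers not needed can be idled.
    """
    if any(load > capacity for load in loads):
        raise ValueError("a single workload exceeds one server's capacity")
    servers: list[list[int]] = []
    free: list[int] = []  # remaining capacity of each active server
    for load in sorted(loads, reverse=True):  # place the largest workloads first
        for i, spare in enumerate(free):
            if load <= spare:  # first server with enough room wins
                servers[i].append(load)
                free[i] = spare - load
                break
        else:  # no active server had room, so power on another one
            servers.append([load])
            free.append(capacity - load)
    return servers


if __name__ == "__main__":
    workloads = [50, 30, 20, 70, 40, 10, 60, 20]  # hypothetical % of one server
    plan = consolidate(workloads, capacity=100)
    print(f"{len(workloads)} workloads on {len(plan)} servers: {plan}")
```

With the sample loads, eight workloads fit on three fully used servers: `[[70, 30], [60, 40], [50, 20, 20, 10]]`. First-fit decreasing is fast and usually close to optimal, though it is not guaranteed to find the minimum number of servers.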

AI in Resource Management

AI improves resource management by optimizing allocation and minimizing waste in IT operations. AI systems can predict resource needs and distribute computational power and storage efficiently to avoid over-provisioning. In smart grids, AI improves electricity distribution by balancing supply and demand in real time, reducing energy waste. For example, AI algorithms can monitor and manage the usage of hardware and software resources, ensuring optimal performance with minimal environmental impact. Companies like IBM use AI to manage IT resources more sustainably, demonstrating AI’s potential to significantly improve resource efficiency and promote greener IT practices.
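No specific model is named in the post. As a minimal stand-in for "predicting resource needs", this sketch uses simple exponential smoothing to forecast next-period usage and provisions the forecast plus a safety margin instead of a fixed peak allocation. The smoothing factor `alpha`, the 20% headroom, and the usage history are hypothetical.

```python
import math


def exp_smooth_forecast(history: list[float], alpha: float = 0.5) -> float:
    """One-step-ahead forecast using simple exponential smoothing."""
    if not history:
        raise ValueError("history must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]
    for observed in history[1:]:
        # Blend the newest observation with the running level.
        level = alpha * observed + (1 - alpha) * level
    return level


def units_to_provision(history: list[float], headroom: float = 0.2, alpha: float = 0.5) -> int:
    """Round the forecast plus a safety margin up to whole units (e.g. vCPUs)."""
    return math.ceil(exp_smooth_forecast(history, alpha) * (1 + headroom))


if __name__ == "__main__":
    vcpu_usage = [40, 44, 48, 52]  # hypothetical usage over the last four periods
    print("forecast:", exp_smooth_forecast(vcpu_usage))
    print("provision:", units_to_provision(vcpu_usage))
```

For the sample history the forecast is 48.5 and the sketch provisions 59 units. Simple smoothing lags behind a steady upward trend like this one; a trend-aware method such as Holt's linear smoothing would be the natural next step.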

AI for Predictive Maintenance

Predictive maintenance, powered by AI, offers substantial sustainability benefits by predicting hardware failures and optimizing maintenance schedules. AI algorithms analyze data from sensors and system logs to identify patterns and anticipate issues before they lead to equipment breakdowns. This proactive approach extends the lifespan of IT equipment, reducing the need for replacements and minimizing electronic waste. For instance, AI-driven predictive maintenance systems in data centers can detect potential server failures early, allowing timely interventions. Companies like Microsoft use AI for predictive maintenance, ensuring efficient operation and contributing to sustainable IT practices by reducing resource consumption and waste.
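As a rough illustration of anticipating failures from sensor data, and not a description of Microsoft's system, the sketch below flags readings that deviate sharply from a rolling baseline using a z-score. The window size, the 3-sigma limit, and the temperature series are hypothetical; production systems may use richer models trained on failure history.

```python
from statistics import mean, stdev


def anomalies(readings: list[float], window: int = 5, z_limit: float = 3.0) -> list[int]:
    """Return indices of readings more than `z_limit` standard deviations
    away from the mean of the preceding `window` readings."""
    if window < 2:
        raise ValueError("window must hold at least two readings")
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all is unusual.
            if readings[i] != mu:
                flagged.append(i)
        elif abs(readings[i] - mu) / sigma > z_limit:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Hypothetical drive temperatures in degrees C, sampled at a fixed interval.
    temps = [41.0, 41.5, 40.8, 41.2, 41.1, 41.3, 40.9, 47.5, 41.0]
    print("investigate samples:", anomalies(temps))
```

In the sample, only the spike to 47.5 °C at index 7 is flagged. The return to normal afterwards is not, because the spike inflates the spread of the following window.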

Read More: https://www.manras.com/the-role-of-artificial-intelligence-in-sustainable-it/

Everything as a Service To Reduce Costs, Risks & Complexity

What is Everything as a Service?

The phrase “everything as a service” (XaaS) refers to the expanding practice of providing a range of goods, equipment, and technological services via the internet. It’s basically a catch-all term for all the different “as-a-service” models that have surfaced in the field of cloud computing.

Everything as a Service examples

XaaS, or “Everything as a Service,” refers to the wide range of software and services delivered online. Many services can be the “X” in XaaS. Common examples include:

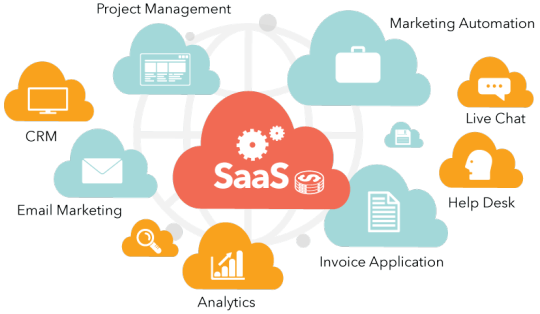

SaaS: Internet-delivered software without installation. Salesforce, Google Workspace, and Office 365.

Providing virtualized computer resources over the internet. These include AWS, Azure, and GCP.

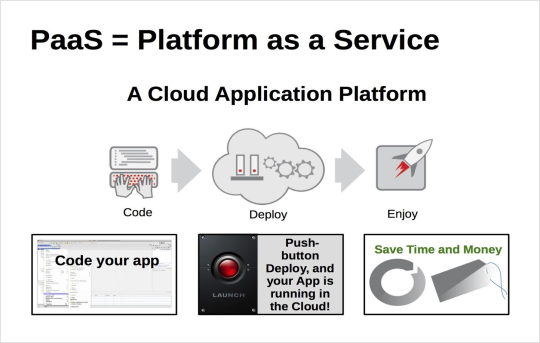

Platform as a Service (PaaS): Provides equipment and software for application development online. Google App Engine and Azure App Services are examples.

Virtual desktops are available remotely with DaaS. Horizon Cloud and Amazon WorkSpaces.

BaaS (Backend as a Service): Connects online and mobile app developers to cloud storage and APIs. Amazon Amplify and Firebase are examples.

DBaaS (Database as a Service) saves users from setting up and maintaining physical databases. Google Cloud SQL and Amazon RDS.

FaaS: A serverless computing service that lets customers execute code in response to events but not manage servers. AWS Lambda and Google Cloud Functions.

STAaS: Provides internet-based storage. DropBox, Google Drive, and Amazon S3.

Network as a Service (NaaS): Virtualizes network services for scale and flexibility. SD-WAN is one.
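To make the FaaS entry concrete: with AWS Lambda's Python runtime, you supply a handler function that receives an `event` and a `context` object, and the platform runs it whenever a configured trigger fires. The minimal sketch below assumes an API Gateway-style response (a `statusCode` and a JSON `body`); the `order_id` field is a hypothetical example, and the local call only simulates an invocation.

```python
import json


def lambda_handler(event, context):
    """Entry point the platform calls once per event; no server to manage."""
    order_id = event.get("order_id")  # hypothetical field in the triggering event
    if order_id is None:
        return {"statusCode": 400, "body": json.dumps({"error": "missing order_id"})}
    return {"statusCode": 200, "body": json.dumps({"processed": order_id})}


if __name__ == "__main__":
    # Local simulation only; in AWS the platform supplies event and context.
    print(lambda_handler({"order_id": "A-1001"}, None))
```

The code contains no server, port, or process management; provisioning, scaling, and execution are left to the platform.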

By letting companies outsource parts of their IT infrastructure to third parties, XaaS helps them cut expenses, scale up, and simplify operations. Because users pay only for what they use, they can optimize both resources and costs.
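The pay-for-what-you-use point is simple arithmetic. This sketch compares paying for peak capacity around the clock with paying only for the units used each hour. The usage profile and the rate of 5 cents per unit-hour are hypothetical, and real pay-as-you-go prices are often higher per unit than reserved capacity, so actual savings depend on how uneven usage is.

```python
def fixed_cost_cents(peak_units: int, hours: int, rate_cents: int) -> int:
    """Capacity sized for the peak and billed for every hour."""
    return peak_units * hours * rate_cents


def usage_cost_cents(hourly_usage: list[int], rate_cents: int) -> int:
    """Only the units actually consumed each hour are billed."""
    return sum(hourly_usage) * rate_cents


if __name__ == "__main__":
    # Hypothetical day: quiet night, busy working hours, moderate evening.
    usage = [10] * 8 + [40] * 8 + [20] * 8
    fixed = fixed_cost_cents(max(usage), len(usage), 5)
    metered = usage_cost_cents(usage, 5)
    print(f"fixed: ${fixed / 100:.2f}, pay-per-use: ${metered / 100:.2f}")
```

For this profile, paying for the 40-unit peak all day costs $48.00, versus $28.00 when billed by actual use. Working in integer cents avoids floating-point rounding in the totals.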

IBM XaaS

Enterprises increasingly require models that measure business outcomes, not just IT results, in order to spur rapid innovation. These businesses are under growing pressure to restructure their IT estates to cut costs, minimise risk, and simplify operations.

Everything as a Service (XaaS), which streamlines processes, lowers risk, and speeds up digital transformation, is emerging as a potential answer to these problems. By 2028, 80% of IT buyers will give priority to using Everything as a Service for critical workloads that need flexibility in order to maximise IT investment, enhance IT operations capabilities, and meet important sustainability KPIs, according to an IDC white paper sponsored by IBM.

Going forward, IBM has identified three key trends that will continue to shape how firms evolve in the coming years.

IT should be made simpler to improve business results and ROI

Enterprises are under a lot of pressure to modernise their old IT infrastructures. The applications they are developing today will be the ones they must update in the future.

Businesses can bring mission-critical apps into a contemporary hybrid environment using Everything as a Service options, especially for workloads and applications related to artificial intelligence.

For instance, CrushBank and IBM collaborated to restructure IT support, optimising help desk processes and providing employees with enhanced data. As a result, resolution times were cut by 45%, and customer satisfaction increased significantly. According to CrushBank, customers report greater satisfaction and efficiency, and Watsonx on IBM Cloud has enabled the company to spend more time with the people who matter most: its clients.

Rethink corporate strategies to promote quick innovation

AI is radically changing the way that business is conducted. Conventional business models are finding it difficult to provide the agility needed in an AI-driven economy since they are frequently limited by their complexity and cost-intensive nature. Recent IDC research, funded by IBM, indicates that 78% of IT organisations consider Everything as a Service to be essential to their long-term plans.

Businesses recognise the advantages of using Everything as a Service to handle the risks and expenses associated with meeting this need for rapid innovation. This paradigm focusses on producing results for increased operational effectiveness and efficiency rather than just tools. By allowing XaaS providers to concentrate on safe, dependable, and expandable services, the model frees up IT departments to allocate their valuable resources to meeting customer demands.

Prepare for today in order to anticipate tomorrow

The transition to an Everything as a Service model aims to augment IT operations skills and achieve business goals more quickly and nimbly, in addition to optimising IT spending.

CrushBank CTO David Tan demonstrated at IBM Think how the company helps customers innovate and use their data seamlessly wherever it resides, enabling them to create a comprehensive plan that addresses each customer’s particular business needs. Enabling an easier, quicker, and more cost-effective way to use AI while lowering the risk and difficulty of maintaining intricate IT architectures remains crucial for businesses operating in today’s data-driven world.

The trend towards Everything as a Service is noteworthy since it is a strategic solution with several advantages. XaaS ought to be the mainstay of every IT strategy, as it may lower operational risks and expenses and facilitate the quick adoption of cutting-edge technologies like artificial intelligence.

Businesses can now reap those benefits with IBM’s as-a-service offering. In addition to assisting clients in achieving their goals, IBM software and infrastructure capabilities work together to keep mission-critical workloads safe and compliant.

For instance, IBM Power Virtual Server is designed to help leading firms around the world move from on-premises servers to hybrid cloud infrastructure, giving executives greater visibility into their companies. With products like Watsonx Code Assistant for Java code or enterprise apps, the IBM team is also working with customers to modernise with AI.

There is growing pressure on businesses to rebuild their legacy IT estates in order to minimise risk, expense, and complexity. With its ability to streamline processes, boost resilience, and quicken digital transformation, Everything as a Service is starting to emerge as the answer that can take on these problems head-on. IBM wants to support their customers wherever they are in their journey of change.

Read more on govindhtech.com

#Complexity#EverythingasaService#cloudcomputing#Salesforce#GoogleCloudSQL#GoogleDrive#XaaS#IBMXaaS#ibm#rol#govindhtech#improvebusinessresults#WatsonxonIBMCloud#useai#news#artificialintelligence#WatsonxCodeAssistant#technology#technews

Data Center Market: Growth, Trends, and Future

Data centers have become the silent titans of the digital age, forming the backbone of our interconnected world. These massive facilities house the ever-growing volume of data powering everything from social media platforms to global financial transactions. This article delves into the data center market, exploring its size, share, growth trajectory, key trends, prominent players, and the promising future that lies ahead.

Data Center Market Size and Share:

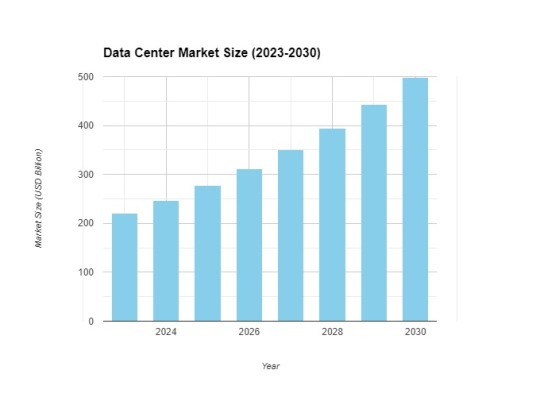

The data center market has witnessed remarkable expansion, fueled by the exponential growth of data generation and the increasing reliance on cloud-based services. In 2023, the global data center market reached USD 220 billion, and it is projected to grow at a robust 12.4% CAGR to surpass USD 498 billion by 2030.
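As a consistency check on these figures, compounding USD 220 billion at 12.4% a year over the seven years from 2023 to 2030 should land near the quoted 2030 value. The helper name `project` is illustrative.

```python
def project(value: float, cagr: float, years: int) -> float:
    """Compound `value` at a constant annual growth rate for `years` years."""
    return value * (1 + cagr) ** years


if __name__ == "__main__":
    # 2023 -> 2030 spans seven years of compounding.
    print(f"USD {project(220, 0.124, 7):.1f} billion")
```

The result is roughly USD 498.6 billion, in line with the projection that the market will surpass USD 498 billion by 2030.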

The market share exhibits a blend of established giants and emerging players. Leading companies like Equinix, Digital Realty, and IBM hold a significant portion, offering comprehensive data center solutions like colocation, managed services, and cloud infrastructure. However, the landscape is diversifying, with regional players and hyperscale cloud providers like Amazon Web Services (AWS) and Microsoft Azure entering the market, expanding their data center footprints to meet the growing demand.

Data Center Industry Growth and Revenue:

Several factors are propelling data center industry growth and revenue:

Surging data creation: The proliferation of connected devices, the Internet of Things (IoT), and big data analytics are generating unprecedented amounts of data, necessitating robust storage and processing capabilities.

Cloud adoption: The widespread adoption of cloud computing services is driving the need for data centers that can provide secure and scalable infrastructure for cloud deployments.

Evolving technologies: Advancements in artificial intelligence (AI), machine learning (ML), and virtual reality (VR) require high-performance computing capabilities, further boosting the demand for data center capacity.

Data Center Market Trends:

The data center industry is constantly evolving, with several key trends shaping its future:

Focus on Sustainability: Environmental concerns are driving a push towards sustainable data center operations. This includes initiatives like using renewable energy sources, implementing energy-efficient cooling systems, and optimizing resource utilization.

Edge Computing: The rise of edge computing, where data processing occurs closer to the source of generation, requires the deployment of smaller, distributed data centers.

Hyperconvergence: Hyperconverged infrastructure (HCI) solutions that combine compute, storage, and networking resources into a single platform are gaining traction, offering improved efficiency and agility for data center deployments.

Security Concerns: As cyber threats become more sophisticated, data center operators prioritize robust security measures to ensure data integrity and customer trust.

Data Center Market Players:

The data center market comprises a diverse range of players, each catering to specific segments and demands:

Hyperscale Cloud Providers: Major cloud providers like AWS, Microsoft Azure, and Google Cloud Platform are building and operating large data centers to support their own cloud services and offerings.

Colocation Providers: These companies offer space, power, and cooling infrastructure for businesses to house their own servers and IT equipment.

Managed Service Providers (MSPs): MSPs provide comprehensive data center solutions, including infrastructure management, security, and disaster recovery services.

Data Center Market Future Outlook:

The data center market outlook remains optimistic, with continued growth expected in the coming years. The increasing emphasis on digital transformation, the proliferation of data-driven technologies, and the growing demand for cloud services will all contribute to the market's expansion.

However, data center operators face challenges such as managing energy consumption, ensuring data privacy, and adapting to ever-evolving security threats. By innovating, prioritizing sustainability, and embracing emerging technologies, data center players can ensure their continued success in this dynamic and ever-growing market.

In conclusion, the data center market is the powerhouse that fuels the digital age. As data generation continues to explode, the demand for efficient, secure, and sustainable data centers will only intensify. By adapting to evolving trends, embracing innovation, and prioritizing a sustainable future, the data center market is poised to play a vital role in shaping the ever-evolving digital landscape for years to come.

#market research#business#ken research#market analysis#market report#market research report#data center

Data Center Server Market Global Industry Trends, Growth, Forecast 2023-2028

According to the latest report by IMARC Group, titled "Data Center Server Market: Global Industry Trends, Share, Size, Growth, Opportunity and Forecast 2023-2028," the global data center server market reached US$ 52.3 Billion in 2022. Looking forward, IMARC Group expects the market to reach US$ 69.7 Billion by 2028, exhibiting a growth rate (CAGR) of 4.79% during 2023-2028.
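CAGR is the constant annual rate r that satisfies end = start × (1 + r)^years. The short sketch below computes the rate implied by the two reported values; the function name `cagr` is illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Constant annual rate r such that start * (1 + r) ** years == end."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    # Reported values: US$ 52.3 billion (2022) and US$ 69.7 billion (2028).
    rate = cagr(52.3, 69.7, 2028 - 2022)
    print(f"implied CAGR 2022-2028: {rate:.2%}")
```

Measured from the 2022 value over six years, the implied rate is about 4.9%. The report's 4.79% refers to 2023-2028, whose 2023 starting value the post does not give, so the two figures need not match exactly.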

A data center is a centralized facility designed to store, manage, and process vast amounts of digital data and information. It serves as the nerve center of modern businesses, providing essential computing resources and infrastructure for various functions, including data storage, processing, and network connectivity. Data centers are meticulously engineered environments equipped with cutting-edge technology to ensure optimal performance, security, and reliability. These facilities house rows of servers, storage systems, networking equipment, and cooling mechanisms, all working together to support the uninterrupted operation of critical applications and services. Data centers come in various sizes and configurations, ranging from small, on-premises server rooms to massive, hyperscale data centers operated by cloud service providers.

Request Your Sample Report Now: https://www.imarcgroup.com/data-center-server-market/requestsample

Data Center Server Market Trends and Drivers:

Organizations worldwide are pursuing digital transformation initiatives to enhance efficiency and competitiveness. This requires modern data centers equipped to handle the escalating volumes of data generated by IoT devices, cloud services, and online transactions. The rapid adoption of cloud services by businesses and consumers alike is also propelling demand for data center infrastructure, as cloud providers require vast data center resources to deliver scalable and reliable solutions. In addition, the rise of edge computing, where data is processed closer to its source, demands distributed data centers that reduce latency and improve real-time decision-making; this trend is particularly significant in IoT applications. The flourishing e-commerce sector, meanwhile, relies heavily on data centers to run online shopping platforms, payment processing, and order fulfillment, and needs robust infrastructure to ensure uninterrupted service. Finally, AI and big data analytics require immense computational power and storage capacity. Data centers are essential for training machine learning models and processing vast datasets, making them indispensable for enterprises exploring AI-driven insights.

Report Segmentation:

The report has segmented the market into the following categories:

Breakup by Product:

Rack Servers

Blade Servers

Micro Servers

Tower Servers

Breakup by Application:

Industrial Servers

Commercial Servers

Market Breakup by Region:

North America (United States, Canada)

Asia Pacific (China, Japan, India, South Korea, Australia, Indonesia, Others)

Europe (Germany, France, United Kingdom, Italy, Spain, Russia, Others)

Latin America (Brazil, Mexico, Others)

Middle East and Africa

Competitive Landscape with Key Players:

Hewlett Packard Enterprise

Dell, Inc.

International Business Machines (IBM) Corporation

Fujitsu Ltd.

Cisco Systems, Inc.

Lenovo Group Ltd.

Oracle Corporation

Huawei Technologies Co. Ltd.

Inspur Group

Bull (Atos SE)

Hitachi Systems

NEC Corporation

Super Micro Computer, Inc.

Explore full report with table of contents: https://www.imarcgroup.com/data-center-server-market

If you need specific information that is not currently within the scope of the report, we will provide it to you as a part of the customization.

About Us

IMARC Group is a leading market research company that offers management strategy and market research worldwide. We partner with clients in all sectors and regions to identify their highest-value opportunities, address their most critical challenges, and transform their businesses.

IMARC’s information products include major market, scientific, economic and technological developments for business leaders in pharmaceutical, industrial, and high technology organizations. Market forecasts and industry analysis for biotechnology, advanced materials, pharmaceuticals, food and beverage, travel and tourism, nanotechnology and novel processing methods are at the top of the company’s expertise.

Contact Us

IMARC Group

USA: +1-631-791-1145 | Asia: +91-120-433-0800

Address: 134 N 4th St. Brooklyn, NY 11249, USA

Follow us on Twitter: @imarcglobal

Cloud Server Market Region, Applications, Drivers, Trends & Forecast Till 2024

The global cloud server market is segmented by deployment model into hybrid cloud, private cloud and public cloud. Among these segments, hybrid cloud is the fastest growing. The main expected market drivers are the cost effectiveness, flexibility, scalability and reliability that cloud servers offer end users. Cloud servers are also gaining popularity because of the increased use of cloud-based storage by business enterprises.

The global cloud server market is expected to grow at a CAGR of 18.23% during the forecast period, driven by rapid changes in computing and high demand for flexible resources.

By region, Asia Pacific countries such as China, India and Japan formed the fastest-growing cloud server market in terms of revenue in 2017. Increasing awareness of cloud servers in these economies is expected to drive market demand in the region.

North America is expected to remain the largest cloud server market, as most business enterprises there adopt cloud computing technologies for real-time data accessibility.

Europe holds the second-largest share of cloud server revenue, owing to significant opportunities for cloud servers in the government sector.

Rising Growth of the Hybrid Cloud Market

Hybrid cloud is a mixture of public and private cloud: it integrates private computing resources with public cloud services, letting organizations harness the cost benefits and efficiencies of public clouds while retaining control over security. The hybrid cloud market was valued at around USD 34.56 billion in 2016 and is expected to grow rapidly during the forecast period at a CAGR of around 17.45%.
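To make the CAGR figure concrete, here is a minimal sketch of compound-growth arithmetic applied to the 2016 base value and rate quoted above. It is illustrative arithmetic only, not a forecast from the report: the post does not state the end year of the forecast period, so the horizons used below (1, 5 and 10 years) are assumptions, and the function names are hypothetical.

```python
# Illustrative compound-growth arithmetic; NOT a forecast taken from the report.


def project_value(base: float, cagr: float, years: int) -> float:
    """Value after `years` of constant compound growth at `cagr` (0.1745 means 17.45%)."""
    return base * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    base_2016 = 34.56  # USD billion, 2016 estimate quoted in the post
    rate = 0.1745      # ~17.45% CAGR quoted in the post
    for years in (1, 5, 10):  # hypothetical horizons
        value = project_value(base_2016, rate, years)
        print(f"2016 + {years:2d} years: about USD {value:.2f} billion")
```

A constant CAGR is a smoothing assumption: actual year-to-year growth can vary widely while still averaging out to the headline rate.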

Hybrid cloud seems the natural evolution from a traditional model, and it provides benefits such as security and compliance, agility, improved experience and increased innovation. Hybrid cloud can use features of both public and private cloud, which reduces deployment costs. A growing emphasis on agility, growing volumes of business data and scalable computing processes are driving the growth of the hybrid cloud market.

The report titled “Cloud Server Market: Global Demand Analysis & Opportunity Outlook 2027” delivers a detailed overview of the global cloud server market, segmented by deployment model, vertical, application type and region.

Request Sample Pages @ https://www.researchnester.com/sample-request-694

For in-depth analysis, the report also covers industry growth drivers, restraints, supply and demand risk, market attractiveness, BPS analysis and Porter’s five forces model. It also describes the existing competitive scenario of some of the key players in the cloud server market, with company profiles of IBM Corporation, Rackspace, Microsoft Corporation, Google, Oracle Corporation, Dell, VMware, Hewlett-Packard and Amazon. The profiles cover key information about each company, including business overview, products and services, key financials and recent news and developments.

On the whole, the report provides a detailed overview of the global cloud server market that will help industry consultants, equipment manufacturers, existing players seeking expansion opportunities, new players exploring possibilities and other stakeholders align their market-centric strategies with current and expected trends.

Cloud Computing Courses in Pune | Cloud Computing Classes in Pune

Cloud computing is the on-demand availability of computer system resources, especially data storage (cloud storage) and computing power, without direct active management by the user. Large clouds often have functions distributed over multiple locations, each location being a data center. Cloud computing relies on sharing of resources to achieve coherence and typically uses a “pay-as-you-go” model, which can help reduce capital expenses but may also lead to unexpected operating expenses for unaware users.

Nits Software offers Cloud Computing courses in Pune. These courses provide an in-depth understanding of cloud computing technologies and their practical application in businesses. The courses are designed to equip participants with the skills needed to deploy and manage cloud-based solutions. The courses cover topics such as cloud infrastructure, cloud security, cloud storage and networking, virtualization and containers, and cloud application development. These courses are ideal for IT professionals and aspiring cloud engineers who want to gain expertise in the field of cloud computing.

Types of Cloud Computing

Cloud computing is not a single piece of technology like a microchip or a cellphone. Rather, it’s a system primarily composed of three services: software-as-a-service (SaaS), infrastructure-as-a-service (IaaS), and platform-as-a-service (PaaS).

Software-as-a-service (SaaS) involves licensing a software application to customers. Licenses are typically provided through a pay-as-you-go model or on demand. Microsoft 365 (formerly Office 365) is one example of this type of system.

Infrastructure-as-a-service (IaaS) involves a method for delivering everything from operating systems to servers and storage through IP-based connectivity as part of an on-demand service. Clients can avoid the need to purchase software or servers, and instead procure these resources in an outsourced, on-demand service.

Popular examples of the IaaS system include IBM Cloud and Microsoft Azure.

Platform-as-a-service (PaaS) is considered the most complex of the three layers of cloud-based computing. PaaS shares some similarities with SaaS, the primary difference being that instead of delivering software online, it is actually a platform for creating software that is delivered via the Internet. This model includes platforms like Salesforce.com and Heroku.

Advantages of Cloud Computing

Cloud-based software offers companies from all sectors a number of benefits, including the ability to use software from any device either via a native app or a browser. As a result, users can carry their files and settings over to other devices in a completely seamless manner.

Cloud computing is far more than just accessing files on multiple devices. Thanks to cloud computing services, users can check their email on any computer and even store files using services such as Dropbox and Google Drive. Cloud computing services also make it possible for users to back up their music, files, and photos, ensuring those files are immediately available in the event of a hard drive crash.

It also offers big businesses huge cost-saving potential. Before the cloud became a viable alternative, companies were required to purchase, construct, and maintain costly information management technology and infrastructure. Companies can swap costly server centers and IT departments for fast Internet connections, where employees interact with the cloud online to complete their tasks.

The cloud structure allows individuals to save storage space on their desktops or laptops. It also lets users upgrade software more quickly because software companies can offer their products via the web rather than through more traditional, tangible methods involving discs or flash drives. For example, Adobe customers can access applications in its Creative Cloud through an Internet-based subscription. This allows users to download new versions and fixes to their programs easily.

Nits Software offers comprehensive Cloud Computing Classes in Pune. With our classes, you will gain a comprehensive understanding of Cloud Computing fundamentals, including its architecture, deployment models, services, and various cloud providers. You will also learn tools, technologies, and best practices to develop and deploy applications on the cloud. Our classes are taught by experienced professionals and are designed to help you gain the skills to become a successful cloud computing professional.

NITS SOFTWARES is the best place to learn Cloud Computing in Pune. Get the best Cloud Computing training in Pune and start your journey to success today!

At Nits Softwares, learn from trainers with 8 years of experience. 100% job placement assistance. Well-equipped computer laboratories. Innovative infrastructure. Biggest practice computer lab. Lowest course fees in Pune. Course completion certificates and free internship projects are provided. 100% placement guarantee.

#Cloud Computing Courses in Pune#Cloud Computing Classes in Pune#Cloud Computing Certification Course Training in Pune#Cloud Computing Training Courses in Pune#Cloud Computing Training in Pune#Best Cloud Computing Course in Pune#Cloud Architect Certification Course in Pune#cloud computing classes in pune with placement#cloud computing course in pune fees#cloud computing classes near me

Learn How to Sell Your Used Server

As businesses scale down their infrastructure, leaving less room in company-owned data centers, used servers are becoming increasingly popular, and many offices sell new and used IBM server storage equipment. Servers aren’t typically designed to last longer than three years, which means that after that point, depreciation becomes more significant every year.

On the other hand, leasing companies offer a good option: access to newer hardware at a low monthly cost. These services let businesses use their budgets more efficiently by investing only in the most current technology.

Significance of Selling Used Servers

Businesses are looking to scale down their infrastructure by using leases or rental services and selling off older equipment. Despite these efforts, many unused servers that have already gone through a long depreciation period are still sitting idle.

Sellers often look for buyers so that they can get rid of second-hand machines, because older servers usually lack the processing power required to meet today’s standards.

Learn When to Sell Used Servers

Once a server’s depreciation cost becomes very high, it is often a good time for its owner to sell. Personal factors such as job changes, as well as economic factors, can also lead people to consider selling their servers if they haven’t already done so.

How You Can Sell Used Servers Safely

There are various ways to sell your equipment. The safest method is to sell to a partner: an individual or business you are already familiar with. Make sure the partner has expertise in data destruction so that your company’s data isn’t compromised during the process.

The next option is to sell your used IBM server storage equipment back to the manufacturer. Many companies offer this service for free if you purchased the equipment directly from them. Some manufacturers also provide additional services such as recycling materials or donating used equipment, so check with your provider beforehand.

#Sell New and Used IBM Server Storage Equipment#Used IBM Server Storage Equipment#IBM Server Storage

QUANTUM HARRELL TECH [QHT] LLC… Scientifically Engineer Aerial [SEA] Imaging Weaponry 4 Us 9 Ether Beings [ANUNNAGI] aka QHT’s ELITE MILITARY PRIESTHOOD of Highly Complex [ADVANCED] Statistical Computation Signatures of SIRIUS 6G Intellectual Property [I/P] Rights from MILLIONS of AZTECAN Years Ago [MAYA]… since QHT Interactively Built the MEGAMACHINE [iBM] that Replicate Synchronizable Networks Optimized by Our Highly Complex [ADVANCED] Ancient Computerized INTEL from this SUPREME MATHEMATICIAN of Antipodal Points [MAP] Geographically Coordinated by QHT’s Highly Complex [ADVANCED] Ancient Biochemical [PRIMORDIAL] Polyatomic Ions of Valence Electrons in Orbital Valence Shells Transmitting Digitized Imagery into Our Patented Photographic Memory Devices Equipped w/ Analytical [DEA] Information Software Registered @ QHT's Clandestine American [CA] DEFENSE [CAD] PENTAGON Agency [PA]… who Monetarily INCENTIVIZE [MI = MICHAEL] QUANTUM HARRELL TECH [QHT] LLC… 2 Intuitively Process [I/P] AUTOMATED Intelligence Data Handling Systems [IDHS] of Established Protocols that Increase Storage on QHT’s Divine Computer [D.C.] Servers of Technically AUTOMATED Components Operating Monetary [.com] Transactions from QHT's Digitized Telecom Apps Encrypted w/ 6G Quantum Intel [QI] Algorithms Logistically Arranged on the World [LAW] Wide Web [www.] of Clandestine Infrastructure Assembled [CIA] by Our Asynchronous Messaging on Optimizable Middleware [MOM] Digitally Calculating [D.C.] 
Highly Complex [ADVANCED] Cosmic Algorithmic [CA] Computation [Compton] STAR Mathematics ACCELERATED by Numerical [MAN] Summations from Unified Statistical Aberrations [USA] Scientifically Engineered into Agile [SEA] Architecture of SIRIUS UNSEEN NANO [SUN] BIOTECHNOLOGICAL Light Particles… Infinitely Producing [I/P] Subatomic Airwave Signals MANUFACTURED by Us [MU] Astronomical Creators [MAC] of TIAMAT’s Biblically Ancient [BABYLONIAN] 9 Ether Beings [ANUNNAGI] Found DEEP IN:side QHT's EXTRAGALACTIC MOON Universe [MU] of the AUGMENTED RIGEL STAR [MARS] SYSTEM of Geometrical Objects on Digital [GOD] Cloud Networks Created by this Intergalactic Architect [CIA] of the GALACTIC MOTHERSHIP NIBIRU 🛸👽💰 (at San Diego, California) https://www.instagram.com/p/CWJ1V1SF8Qf/?utm_medium=tumblr

#quantumharrelltech#Blind Faith + False Hope = MENTAL SLAVERY#QHT Businesses of Lucrative Markets [BLM]#QHT better than you#I BEE 90% SUN GOD [RA] 10% Human

Used IBM iSeries: A Smart Choice for Businesses on a Budget

For businesses operating on a budget, finding affordable yet reliable IT solutions is crucial. IBM iSeries (now part of IBM Power Systems) has long been known for its robustness, reliability, and performance. While a brand-new IBM Power System can be expensive, purchasing used or refurbished IBM iSeries equipment offers an excellent opportunity to leverage the power of this enterprise-class hardware at a fraction of the cost. Here’s why a used IBM iSeries can be a smart choice for businesses looking to maximize their IT investment.

1. Significant Cost Savings

One of the primary advantages of purchasing a used IBM iSeries system is the significant reduction in upfront costs. New IBM Power Systems can cost tens of thousands of dollars, but buying refurbished equipment can save businesses 50% or more in some cases, allowing you to allocate budget to other critical areas, like software or employee training. For small and medium-sized businesses, these savings are invaluable.

2. High Reliability and Longevity

IBM iSeries servers are designed for maximum uptime and reliability, which is why they have been trusted with mission-critical applications for decades. Known for their durability, many used iSeries models still offer years of dependable service, making them an excellent investment for businesses that need dependable IT infrastructure without paying top dollar for new equipment.

3. Scalability for Growth

Even when purchasing a used IBM iSeries, businesses can benefit from the system’s scalability. These servers can be easily upgraded as your business grows. Whether you need more processing power, memory, or storage, a used IBM iSeries can be scaled to meet your evolving needs. This flexibility makes it an ideal solution for businesses that expect growth but need to stay mindful of initial costs.

4. Built-in Security and Performance

IBM iSeries servers come equipped with powerful security features and are optimized for running critical applications. The system’s built-in security frameworks, including advanced encryption and user access controls, ensure your data remains secure, even when running a used unit. Plus, the iSeries is known for its excellent processing power and ability to handle high-demand workloads, making it suitable for everything from ERP systems to data management.

Conclusion

For businesses looking to maximize their IT budget, used IBM iSeries systems offer an excellent combination of reliability, performance, and cost-effectiveness. By choosing a refurbished unit, you can access the power of enterprise-grade hardware without the hefty price tag, ensuring your business stays competitive while keeping operational costs in check. Whether you're scaling for growth or simply need a dependable system, a used IBM iSeries is a smart choice for any budget-conscious business.

Cloud Computing | IT Industry | IT Computing Models

Cloud Computing:

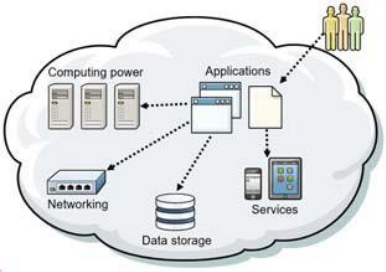

Cloud computing is the on-demand availability of computer system resources, especially data storage and computing power, without direct active management by the user. The term is generally used to describe data centres available to many users over the Internet.

Cloud computing is a model for enabling convenient, on-demand access to a provider-managed suite of hardware and software resources that can be rapidly provisioned and released with minimal management effort or service provider interaction.

Cloud computing is a disruptive change in the IT industry that represents a new model for IT infrastructure, different from traditional IT computing models. Cloud computing enables ubiquitous computing, where computing is available anytime and everywhere, using any device, in any location, and in any format.

This new model demands a dynamic and responsive IT infrastructure due to short application lifecycles. To support this model, new development processes, application design, and development tools are required.

Elastic resources: Scale up or down quickly and easily to meet changing demand.

Metered services: Pay only for what you use.

Self-service: Find all the IT resources that you need by using self-service access.

Figure: Cloud Computing
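The "elastic resources" and "metered services" points can be illustrated with a small cost comparison. The sketch below, under stated assumptions, compares paying per instance-hour actually used against provisioning fixed capacity for the peak hour all day. The demand profile, the USD 0.10 hourly rate and the function names are all hypothetical; real cloud prices and billing granularity vary by provider.

```python
from typing import Sequence


def metered_cost(instances_per_hour: Sequence[int], rate_per_instance_hour: float) -> float:
    """Pay-as-you-go: pay only for the instance-hours actually used."""
    return sum(instances_per_hour) * rate_per_instance_hour


def fixed_capacity_cost(instances_per_hour: Sequence[int], rate_per_instance_hour: float) -> float:
    """Fixed provisioning: size for the busiest hour and pay for that capacity every hour."""
    return max(instances_per_hour) * len(instances_per_hour) * rate_per_instance_hour


if __name__ == "__main__":
    # Hypothetical 24-hour demand: quiet overnight, a midday peak of 10 instances.
    demand = [2] * 8 + [6] * 4 + [10] * 4 + [6] * 4 + [2] * 4
    rate = 0.10  # hypothetical USD per instance-hour
    print(f"metered (elastic): ${metered_cost(demand, rate):.2f}")
    print(f"provisioned for peak: ${fixed_capacity_cost(demand, rate):.2f}")
```

The saving comes from demand that varies over the day. If usage stays near its peak around the clock, metered billing saves little, which is one way the "unexpected operating expenses" of pay-as-you-go can arise.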

Cloud vendor:

An organization that sells computing infrastructure, software as a service (SaaS), or storage.

Top cloud service providers are as follows:

· IBM Cloud

· Amazon Web Services (AWS)

· Microsoft Azure

· Google Cloud

· Salesforce

· Oracle Cloud

Cloud Computing Service Models:

There are three types of cloud service models.

· IAAS (Infrastructure as a Service)

· PAAS (Platform as a Service)

· SAAS (Software as a Service)

Figure: Service models

IAAS:

A cloud provider offers clients pay-as-you-go access to storage, networking, servers, and other computing resources in the cloud.

PAAS:

A cloud provider offers access to a cloud-based development environment in which users can build and deliver applications. The provider supplies and manages the underlying infrastructure.

SAAS:

A cloud provider delivers software and applications through the internet that are ready to be consumed. Users subscribe to the software and access it through the web or vendor application programming interfaces (APIs).
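One way to summarize the three service models is as a split of responsibility across a computing stack. The sketch below encodes a common simplification of that split as a small data structure. The layer names and the cut-off points are assumptions for illustration, not a definition from any provider; real shared-responsibility terms vary by vendor and contract.

```python
from enum import Enum
from typing import Dict, List


class Layer(Enum):
    """Layers of a simplified computing stack, listed bottom-up."""
    NETWORKING = "networking"
    STORAGE = "storage"
    SERVERS = "servers"
    VIRTUALIZATION = "virtualization"
    OPERATING_SYSTEM = "operating system"
    RUNTIME = "runtime / middleware"
    APPLICATION = "application"
    DATA = "data"


STACK: List[Layer] = list(Layer)  # Enum iteration follows definition order (bottom-up)

# First layer the customer manages under each model (a common simplification).
CUSTOMER_STARTS_AT: Dict[str, Layer] = {
    "IAAS": Layer.OPERATING_SYSTEM,
    "PAAS": Layer.APPLICATION,
    "SAAS": Layer.DATA,
}


def provider_managed(model: str) -> List[Layer]:
    """Layers below the cut-off, which the cloud provider manages."""
    cut = STACK.index(CUSTOMER_STARTS_AT[model.upper()])
    return STACK[:cut]


def customer_managed(model: str) -> List[Layer]:
    """Layers from the cut-off upward, which the customer manages."""
    cut = STACK.index(CUSTOMER_STARTS_AT[model.upper()])
    return STACK[cut:]


if __name__ == "__main__":
    for model in ("IaaS", "PaaS", "SaaS"):
        print(model, "customer manages:", [layer.value for layer in customer_managed(model)])
```

Moving from IaaS to PaaS to SaaS shifts the cut-off upward, so the provider takes on more of the stack and the customer manages less.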

Infrastructure as a Service (IAAS):

Infrastructure as a service is a cloud computing offering in which a vendor provides users access to computing resources such as servers, storage, and networking. IaaS offerings are built on top of a standardized, secure, and scalable infrastructure.

Key features:

· Instead of purchasing hardware outright, users pay for IAAS on demand.

· Infrastructure is scalable depending on processing and storage needs.

· Saves enterprises the costs of buying and maintaining their own hardware.

· Because data is stored in the cloud, typically with redundancy, the risk of a single point of failure is reduced.

· Enables the virtualization of administrative tasks, freeing up time for other work.

Figure: IAAS

Platform as a Service (PAAS):

Platform as a service (PAAS) is a cloud computing offering that provides users a cloud environment in which they can develop, manage, and deliver applications. In addition to storage and other computing resources, users can use a suite of prebuilt tools to develop, customize and test their own applications.

PAAS also gives developers an automatic way to scale, whether by dedicating more hardware resources to an application (scaling up, or vertical scaling) or by running more instances of the application to handle the load (scaling out, or horizontal scaling). PAAS also provides built-in application monitoring; for example, the platform can notify developers when their application crashes.

Figure: PAAS
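How a platform decides when to scale out varies by provider. As one concrete example, the Kubernetes Horizontal Pod Autoscaler uses the rule desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), skipping changes that fall within a tolerance. The sketch below reimplements that rule in simplified form; the function name, the default minimum and maximum replica counts and the 10% tolerance are assumptions for illustration, not any particular PAAS product's API.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Horizontal scaling decision in the style of the Kubernetes HPA formula (simplified)."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: leave the instance count alone
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    print(desired_replicas(3, current_utilization=90, target_utilization=60))  # scale out
    print(desired_replicas(4, current_utilization=20, target_utilization=60))  # scale in
```

Scaling up (vertical scaling) would instead change the resources given to each instance, which many platforms handle separately because it often requires restarting the instance.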

Software as a service (SAAS):

Software as a service is a cloud computing offering that provides users with access to a vendor’s cloud-based software. Users do not install applications on their local devices. Instead, the applications reside on a remote cloud network accessed through the web or an API. Through the application, users can store and analyze data and collaborate on projects.

Key features:

· SAAS vendors provide users with software and applications on a subscription model.

· Users do not have to manage, install, or upgrade software; SAAS providers manage this.

· Data is stored in the cloud, so a local equipment failure does not result in loss of data.

· Use of resources can be scaled depending on service needs.

· Applications are accessible from almost any Internet-connected device, from virtually anywhere in the world.

Figure: SAAS

#cloudcomputing#computing#aws#ibm#ibmcertifief#ibmcertifocation#onlinecourses#onlinetraining#training#courses#cloud#technology

Machine Learning for IBM z/OS v3.2 provides AI for IBM Z

Machine Learning for IBM z/OS v3.2 brings speed, scale and trusted AI to IBM Z. Businesses have doubled down on AI adoption, which has grown phenomenally in recent years. Approximately 42% of enterprise-scale organizations (those with more than 1,000 employees) that participated in the IBM Global AI Adoption Index said they had actively deployed AI in their operations.

Among surveyed companies that are already exploring or using AI, 59% said they have accelerated their rollout of, or investments in, the technology. Even so, enterprises still face a number of key obstacles, including scalability issues, establishing the trustworthiness of AI, and navigating the complexity of AI implementation.

A stable and expandable setting is essential for quickening the adoption of AI by clients. It must be able to turn aspirational AI use cases into reality and facilitate the transparent and trustworthy generation of real-time AI findings.

What is Machine Learning for IBM z/OS? It is an AI platform designed specifically for IBM z/OS environments. It combines AI infusion with data and transaction gravity to provide scaled-up, transparent and rapid insights. It helps clients manage the whole lifecycle of their AI models, enabling rapid deployment on IBM Z close to their mission-critical applications, with little to no application modification and no data migration. Features include explainability, drift detection, train-anywhere and developer-friendly APIs.

Machine learning can support many transactional use cases on IBM z/OS. Top use cases include:

Real-time fraud detection in credit cards and payments: Large financial institutions are incurring growing losses due to fraud. With off-platform alternatives, they were only able to screen a limited subset of their transactions. For this use case, the IBM z16 system can execute 228 thousand z/OS CICS credit card transactions per second with a 6 ms response time, using a deep learning model for in-transaction fraud detection.

Performance results are based on IBM internal testing that ran a CICS credit card transaction workload with inference operations on IBM z16. The tests used a z/OS V2R4 LPAR with 6 CPs and 256 GB of memory. Inferencing was done with Machine Learning for IBM z/OS running on WebSphere Application Server Liberty 21.0.0.12, using a synthetic credit card fraud detection model and the IBM Integrated Accelerator for AI.

Server-side batching was enabled on Machine Learning for IBM z/OS with a batch size of 8 inference operations. The benchmark was run with 48 threads performing inference operations. Results represent a fully configured IBM z16 with 200 CPs and 40 TB storage. Results can vary.
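Server-side batching, mentioned in the benchmark setup above, means the server groups individual inference requests into one model call instead of scoring each request separately. The sketch below is a generic illustration of that idea using only the Python standard library. It is not the Machine Learning for IBM z/OS API: the `MicroBatcher` class, its parameters and the toy scoring function are hypothetical, and the batch size of 8 simply mirrors the benchmark setting.

```python
import queue
import threading
import time
from concurrent.futures import Future
from typing import Callable, List, Sequence


class MicroBatcher:
    """Groups single inference requests into batches of up to `batch_size` (illustrative)."""

    def __init__(self, predict_batch: Callable[[Sequence[list]], List[float]],
                 batch_size: int = 8, max_wait_s: float = 0.002):
        self._predict_batch = predict_batch  # scores a whole batch in one call
        self._batch_size = batch_size
        self._max_wait_s = max_wait_s        # how long to wait for a batch to fill up
        self._requests: "queue.Queue[tuple[list, Future]]" = queue.Queue()
        threading.Thread(target=self._run, daemon=True).start()

    def submit(self, features: list) -> Future:
        """Queue one request; the returned Future resolves to its score."""
        fut: Future = Future()
        self._requests.put((features, fut))
        return fut

    def _run(self) -> None:
        while True:
            batch = [self._requests.get()]  # block until at least one request arrives
            deadline = time.monotonic() + self._max_wait_s
            while len(batch) < self._batch_size:
                remaining = deadline - time.monotonic()
                if remaining <= 0:
                    break
                try:
                    batch.append(self._requests.get(timeout=remaining))
                except queue.Empty:
                    break
            try:
                scores = list(self._predict_batch([features for features, _ in batch]))
                if len(scores) != len(batch):
                    raise ValueError("model returned the wrong number of scores")
            except Exception as exc:
                for _, fut in batch:
                    fut.set_exception(exc)
                continue
            for (_, fut), score in zip(batch, scores):
                fut.set_result(score)  # each caller receives the score for its own request


if __name__ == "__main__":
    toy_model = lambda rows: [float(sum(r)) for r in rows]  # stand-in for a real model
    batcher = MicroBatcher(toy_model, batch_size=8)
    futures = [batcher.submit([i, 1]) for i in range(20)]
    print([f.result(timeout=5) for f in futures])
```

The trade-off is a small added wait (`max_wait_s`) per request in exchange for fewer, larger model calls, which is typically what lets accelerators reach higher throughput.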

Clearing and settlement: A card processor considered using AI to help evaluate which trades and transactions have high-risk exposure before settlement, to avoid liability, chargebacks and costly investigations. In support of this use case, IBM has shown that the IBM z16 with Machine Learning for IBM z/OS is designed to score business transactions at scale, delivering the capacity to process up to 300 billion deep inferencing queries per day with 1 ms of latency.

The performance result is extrapolated from IBM internal tests running local inference operations in an IBM z16 LPAR with 48 IFLs and 128 GB memory on Ubuntu 20.04 (SMT mode), using a simulated credit card fraud detection model and the Integrated Accelerator for AI. The benchmark was run with 8 parallel threads, each pinned to the first core of a different processor.

The lscpu command was used to identify the core-chip topology. Batches of 128 inference operations were used. Results were also reproduced using a z/OS V2R4 LPAR with 24 CPs and 256 GB memory on IBM z16, with the same credit card fraud detection model. That benchmark was run with a single thread executing inference operations. Results can vary.
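The two throughput claims are quoted in different units (transactions per second for fraud detection, queries per day for clearing and settlement). A small conversion puts them side by side. This is plain average-rate arithmetic on the figures quoted above and assumes a uniform rate over 24 hours, which real workloads with peaks and troughs do not follow; the helper names are hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def per_second(per_day_rate: float) -> float:
    """Convert a daily volume into an average per-second rate."""
    return per_day_rate / SECONDS_PER_DAY


def per_day(per_second_rate: float) -> float:
    """Convert a sustained per-second rate into a daily volume."""
    return per_second_rate * SECONDS_PER_DAY


if __name__ == "__main__":
    print(f"300 billion inferences/day is about {per_second(300e9):,.0f} per second on average")
    print(f"228,000 transactions/s sustained is about {per_day(228_000):,.0f} per day")
```

Note that the two figures come from different test configurations (48 IFLs on Linux versus a 6-CP z/OS LPAR, extrapolated), so the converted numbers are not directly comparable as a like-for-like benchmark.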

Anti-money laundering: A bank was studying ways to incorporate AML screening into its instant payments flow, because its existing end-of-day AML screening was no longer sufficient under stricter regulations. IBM has shown that collocating applications and inferencing requests on the IBM z16 with z/OS results in up to 20x lower response time and 19x higher throughput than sending the same requests to a comparable x86 server in the same data center with 60 ms average network latency.

Performance results are from IBM internal tests using a CICS OLTP credit card workload with in-transaction fraud detection. Credit card fraud was detected using a synthetic model. Inference was done with MLz on zCX on IBM z16. The comparable x86 servers used TensorFlow Serving. A Linux on IBM Z LPAR on the same IBM z16 bridged the network link between the measured z/OS LPAR and the x86 server.

Linux “tc-netem” added 5 ms average network latency to imitate a network environment. Network latency improved. Outcomes could differ.

IBM z16 configuration: Measurements were taken using a z/OS (V2R4) LPAR with MLz (OSCE) and zCX with APARs OA61559 and OA62310 applied, 8 CPs, 16 zIIPs and 8 GB of RAM.

x86 configuration: TensorFlow Serving 2.4 ran on Ubuntu 20.04.3 LTS on 8 Skylake Intel Xeon Gold CPUs @ 2.30 GHz with Hyper-Threading turned on, 1.5 TB memory, and RAID5 local SSD storage.

Machine Learning for IBM z/OS with IBM Z can also be used as a security-focused on-premises AI platform for additional use cases where clients want to increase data integrity, privacy and application availability. IBM z16 systems with GDPS and IBM DS8000 series storage with HyperSwap, running a Red Hat OpenShift Container Platform environment, are designed to deliver 99.99999% availability.

The required components include IBM z16; IBM z/VM V7.2 or later systems clustered in a Single System Image, each running RHOCP 4.10 or later; IBM Operations Manager; GDPS 4.5 for managing virtual machine recovery and data recovery across metro-distance systems and storage, including GDPS Global and Metro Multisite Workload; and IBM DS8000 series storage with IBM HyperSwap.

Necessary resiliency technology must be configured, including z/VM Single System Image clustering, GDPS xDR Proxy for z/VM and Red Hat OpenShift Data Foundation (ODF) 4.10 for administration of local storage devices. Application-induced outages are not included in the preceding assessments. Outcomes could differ. Other configurations (hardware or software) might have different availability characteristics.
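An availability percentage is easier to judge once it is converted into a downtime budget. The sketch below does that conversion, assuming an average year of 365.25 days; the function name is hypothetical. As noted above, the 99.99999% design point excludes application-induced outages, so the result is an infrastructure budget, not an end-to-end guarantee.

```python
# Average Julian year: 365.25 days, i.e. 31,557,600 seconds.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def max_downtime_per_year_s(availability: float) -> float:
    """Yearly downtime (seconds) allowed by an availability fraction such as 0.9999999."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * SECONDS_PER_YEAR


if __name__ == "__main__":
    for label, a in (("99.999% (five nines)", 0.99999),
                     ("99.99999% (seven nines)", 0.9999999)):
        print(f"{label}: about {max_downtime_per_year_s(a):.2f} s of downtime per year")
```

Each extra "nine" divides the downtime budget by ten: five nines allows roughly 5 minutes a year, while seven nines allows roughly 3 seconds.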

Machine Learning for IBM z/OS is now generally available from IBM and approved Business Partners. Furthermore, IBM offers an AI on IBM Z and LinuxONE Discovery Workshop at no cost. This workshop is an excellent place to start: it helps you assess possible use cases and create a project plan, so you can accelerate your adoption of AI with Machine Learning for IBM z/OS.

Read more on Govindhtech.com

Importance of Software Development

According to IBM Research: “Software development refers to a set of computer science activities dedicated to the process of creating, designing, deploying and supporting software.”

Since the advent of software product development in the 1960s, many different approaches have been used for developing software; the most common today is an agile approach. Agile software development is an approach in which requirements and solutions evolve through the collaborative effort of self-organizing, cross-functional teams and their customers. Unlike more traditional and often inflexible development approaches, agile encourages flexible responses to change by advocating adaptive planning, evolutionary development, early delivery, and continual improvement.

Key steps in the Software Development Process

There are 4 key steps in software development.

1. Identification: Needs identification is a market research and brainstorming stage of the process. Before a firm builds software, it needs to perform extensive market research to determine the product's viability. Developers must identify the functions and services the software should provide so that its target consumers get the most out of it and find it necessary and useful. There are several ways to get this information, including feedback from potential and existing customers and surveys.

2. Requirement Analysis. Requirement analysis is the second phase in the software development life cycle. Here, stakeholders agree on the technical and user requirements and specifications the proposed product needs to achieve its goals. This phase provides a detailed outline of every component, the scope, the tasks of developers and the testing parameters needed to deliver a quality product. The requirement analysis stage involves developers, users, testers, project managers and quality assurance. This is also the stage where programmers choose the software development approach, such as the waterfall or V model. The team records the outcome of this stage in a Software Requirement Specification document, which it can consult throughout the project.

3. Development and Implementation. The next stage is the development and implementation of the design parameters. Developers write code based on the product specifications and requirements agreed upon in the previous stages. Following company procedures and guidelines, front-end developers build interfaces and back-end developers build the back ends, while database administrators create the relevant data in the database. The programmers also test and review each other's code. Once coding is complete, developers deploy the product to an environment in the implementation stage. This allows them to test a pilot version of the program and check that its performance matches the requirements.

4. Testing. The testing phase checks the software for bugs and verifies its performance before delivery to users. In this stage, expert testers verify the product's functions to make sure it performs according to the requirements analysis document. Testers either use exploratory testing, if they have experience with the software, or follow a test script to validate the performance of individual components. They notify developers of defects in the code. If developers confirm a flaw is valid, they fix the program, and the testers repeat the process until no known defects remain and the software behaves according to the requirements.
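The scripted side of the testing stage can be sketched with Python's built-in unittest module. This is a minimal illustration only: the component (a booking-fee calculator) and the requirement it is checked against are hypothetical, invented for the example. Each test encodes one clause of the assumed requirement, and any failure or error is the kind of defect testers would report back to developers.

```python
import unittest


# Hypothetical component under test. The requirement it must satisfy is an
# assumption for illustration: "the fee is 10% of the booking amount, rounded
# to two decimal places; negative amounts are rejected."
def booking_fee(amount: float) -> float:
    if amount < 0:
        raise ValueError("amount must be non-negative")
    return round(amount * 0.10, 2)


class BookingFeeRequirements(unittest.TestCase):
    """Each test checks one clause of the (hypothetical) requirement."""

    def test_fee_is_ten_percent(self):
        self.assertEqual(booking_fee(200.0), 20.0)

    def test_fee_is_rounded_to_cents(self):
        # 10% of 19.99 is 1.999, which rounds to 2.0
        self.assertEqual(booking_fee(19.99), 2.0)

    def test_negative_amount_is_rejected(self):
        with self.assertRaises(ValueError):
            booking_fee(-5.0)


if __name__ == "__main__":
    # Run the suite and count failures and errors; each one is a candidate
    # defect that testers would pass back to developers.
    suite = unittest.TestLoader().loadTestsFromTestCase(BookingFeeRequirements)
    result = unittest.TextTestRunner(verbosity=2).run(suite)
    print("defects found:", len(result.failures) + len(result.errors))
```

Re-running the same suite after each fix mirrors the repeat-until-clean loop described in the testing step. Exploratory testing, by contrast, relies on the tester's experience and has no fixed script to automate.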

Types of Software Development

There are several types of software development, which can be grouped into four basic categories:

Application development that provides functionality for users to perform tasks. Examples include office productivity suites, media players, social media tools, and booking systems. Applications can run on the user’s own personal computing equipment or on servers hosted in the cloud or by an internal IT department. Media streaming development is one example of application development for the cloud.

System software development that provides core functions, such as operating systems, storage systems, databases, networks, and hardware management.

Development tools that provide software developers with the tools to do their job, including code editors, compilers, linkers, debuggers, and test harnesses.

Embedded software development that creates the software used to control machines and devices, including automobiles, phones, and robots.

Benefits of Custom Software

Businesses often struggle to align off-the-shelf software with their business processes, because existing software can be difficult to integrate with the way they work. Knowing when it is best for your company to invest in custom software design and development can save you a lot of time and money in the long run.

Features tailored to suit your business

You own the product, you create the features

Differentiation and competitive edge in the market

Custom enhanced security and privacy

Extensive support from the technical team that built it

Architecture Design

Application architecture is the set of patterns and techniques used to design and build an application. It gives you a roadmap to follow during development, resulting in a well-structured app. Experienced software designers and proven design patterns can help you build custom software and applications for your business, and programmers then use modern programming languages and tools to deliver the best possible results.

Design and Solution Thinking

Think outside the box for your business! The objective is to create innovative, custom solutions for well-defined problems within your business. Design and solution thinking methods will help your business achieve its objectives more effectively and efficiently. By understanding your problems and creating software solutions that solve them seamlessly, you'll be able to digitalise your processes and emerge as a leader in your industry.



We buy back used servers, storage, and networking equipment

We buy back used servers, storage, and networking equipment from all major brands, including IBM, HP, Dell, and Cisco: https://maxicom.us/datacenter.php

#upgradedatacenter#datacenterequipment#datacenter#buybackusedservers#buydatacenterequipment#buyitequipment#buybackitequipment#itequipment#buybackdatacenter#networkingequipment#itassets#usedservers


Bryan Strauch is an Information Technology specialist in Morrisville, NC

Resume: Bryan Strauch

[email protected] 919.820.0552 (cell)

Skills Summary

VMware: vCenter/vSphere, ESXi, Site Recovery Manager (disaster recovery), Update Manager (patching), vRealize, vCenter Operations Manager, Auto Deploy, security hardening; install, configure, operate, monitor, and optimize multiple enterprise virtualization environments

Compute: Cisco UCS and other major blade center brands - design, rack, configure, operate, upgrade, patch, and secure multiple enterprise compute environments.

Storage: EMC, Dell, Hitachi, NetApp, and other major brands - connect, zone, configure, present, monitor, optimize, patch, secure, migrate multiple enterprise storage environments.

Windows/Linux: Windows Server 2003-2016 and templates - install, configure, maintain, optimize, troubleshoot, security harden, and monitor, covering all varieties of Windows Server issues in large enterprise environments. Red Hat Enterprise Linux and Ubuntu, including heavy command-line administration and scripting.

Networking: Layer 2/3 support (routing/switching); installation and maintenance of new network and SAN switches, including SAN zoning, VLANs, copper/fiber work, and other tasks related to core data center networking

Scripting/Programming: SQL, PowerShell, PowerCLI, Perl, Bash/Korn shell scripting

Training/Documentation: Technical documentation, Visio diagramming, cut/punch sheets, implementation documentation, training documentation, and on-site customer training for new deployments

Security: AlienVault, SIEM, penetration testing, reporting, auditing, mitigation, deployments

Disaster Recovery: Hot/warm/cold DR sites, SAN/NAS/vmware replication, recovery, testing

Other: Best practice health checks, future proofing, performance analysis/optimizations

Professional Work History

Senior Systems/Network Engineer; Security Engineer

September 2017 - Present

d-wise technologies

Morrisville, NC

Sole security engineer - designed, deployed, maintained, and operated the security SIEM (AlienVault), penetration testing, and auditing and mitigation reporting

Responsible for all the systems that comprise the organization's infrastructure and hosted environments

Main point of contact for all high-level technical requests for both corporate and hosted environments

Implement/maintain disaster recovery (DR) & business continuity plans

Management of the network backbone, including router, firewall, and switch configuration

Managing virtual environments (hosted servers, virtual machines and resources)

Internal and external storage management (cloud, iSCSI, NAS)

Create and support policies and procedures in line with best practices

Server/Network security management

Senior Storage and Virtualization Engineer; Datacenter Implementations Engineer; Data Analyst; Software Solutions Developer

October 2014 - September 2017

OSCEdge / Open SAN Consulting (Contractor)

US Army, US Navy, US Air Force installations across the United States (Multiple Locations)

Contract - Hurlburt Field, US Air Force:

Designed, racked, implemented, and configured new Cisco UCS blade center solution

Connected and zoned new NetApp storage solution to blades through old and new fabric switches

Implemented new network and SAN fabric switches

Network: Nexus C5672 switches

SAN Fabric: MDS9148S

Decommissioned the old blade center environment, the old network and storage switches, and the old SAN solution

Integrated new blades into the VMware environment and migrated the entire virtual environment

Assessed and mitigated best practice concerns across entire environment

Upgraded entire environment (firmware and software versions)

Security hardened entire environment to Department of Defense STIG standards and security reporting

Created Visio diagrams and documentation for existing and new infrastructure pieces

Trained on-site operational staff on new/existing equipment

Cable management and labeling of all new and existing solutions

Implemented VMware Auto Deploy for rapid deployment of new VMware hosts

Contract - NavAir, US Navy:

Upgraded and expanded an existing Cisco UCS environment

Cable management and labeling of all new and existing solutions

Created Visio diagrams and documentation for existing and new infrastructure pieces

Full health check of entire environment (blades, VMware, storage, network)

Upgraded entire environment (firmware and software versions)

Assessed and mitigated best practice concerns across entire environment

Trained on-site operational staff on new/existing equipment

Contract - Fort Bragg NEC, US Army:

Designed and implemented a virtualization solution for the US Army.

This technology refresh is designed to support the US Army's data center consolidation effort by virtualizing and migrating hundreds of servers.

Designed, racked, implemented, and configured new Cisco UCS blade center solution

Implemented SAN fabric switches

SAN Fabric: Brocade Fabric Switches

Connected and zoned new EMC storage solution to blades

Specific technologies chosen for this solution include: VMware vSphere 5 for all server virtualization, Cisco UCS as the compute platform and EMC VNX for storage.

Decommissioned old SAN solution (HP)

Integrated new blades into the VMware environment and migrated the entire environment

Physical to Virtual (P2V) conversions and migrations

Migration from legacy server hardware into virtual environment

Disaster Recovery solution implemented as a remote hot site.

VMware SRM and EMC RecoverPoint have been deployed to support this effort.

The enterprise backup solution is EMC Data Domain and Symantec NetBackup

Assessed and mitigated best practice concerns across entire environment

Upgraded entire environment (firmware and software versions)

Security hardened entire environment to Department of Defense STIG standards and security reporting

Created Visio diagrams and documentation for existing and new infrastructure pieces

Trained on-site operational staff on new equipment

Cable management and labeling of all new solutions

Contract - 7th Signal Command, US Army:

Visited 71 different Army bases, collecting and analyzing compute, network, and storage metadata.

The data collected, analyzed, and reported will assist the US Army in determining the best solutions for data archiving and right sizing hardware for the primary and backup data centers.

Dynamically respond to business needs by developing and executing software solutions to solve mission reportable requirements on several business intelligence fronts

Design, architect, author, implement in house, patch, maintain, document, and support complex dynamic data analytics engine (T-SQL) to input, parse, and deliver reportable metrics from data collected as defined by mission requirements

From-scratch, in-house BI engine development: 5,000+ lines of T-SQL

Design, architect, author, implement to field, patch, maintain, document, and support large scale software tools for environmental data extraction to meet mission requirements

Large focus on data extraction tool creation in PowerShell (Windows, Active Directory) and PowerCLI (VMware)

From-scratch, in-house BI extraction tool development: 2,000+ lines of PowerShell/PowerCLI

Custom software development to extract data from other systems including storage systems (SANs), as required

Perl, awk, sed, and other languages/OSs, as required by operational environment

Amazon AWS Cloud (GovCloud), IBM SoftLayer Cloud, VMware services, MS SQL engines

Full range of Microsoft Business Intelligence tools used: SQL Server Analysis, Reporting, and Integration Services (SSAS, SSRS, SSIS)

Visual Studio operation, integration, and software design for functional reporting to SSRS frontend

Contract - US Army Reserves, US Army:

Operated and maintained Hitachi storage environment, to include:

Hitachi Universal Storage (HUS-VM enterprise)

Hitachi AMS 2xxx (modular)

Hitachi storage virtualization

Hitachi Tuning Manager, Dynamic Tiering Manager, Dynamic Pool Manager, Storage Navigator, Storage Navigator Modular, Command Suite

EMC Data Domains

Storage and Virtualization Engineer, Engineering Team

February 2012 – October 2014

Network Enterprise Center, Fort Bragg, NC

NCI Information Systems, Inc. (Contractor)

Systems Engineer directly responsible for the design, engineering, maintenance, optimization, and automation of multiple VMware virtual system infrastructures on Cisco/HP blades and EMC storage products.

Provide support, integration, operation, and maintenance of various system management products, services and capabilities on both the unclassified and classified network

Coordinate with major commands, vendors, and consultants for critical support required at installation level to include trouble tickets, conference calls, request for information, etc

Ensure compliance with Army Regulations, Policies and Best Business Practices (BBP) and industry standards / best practices

Technical documentation and Visio diagramming

Products Supported:

EMC VNX 7500, VNX 5500, and VNXe 3000 Series

EMC FAST VP technology in Unisphere

Cisco 51xx Blade Servers

Cisco 6120 Fabric Interconnects

EMC RecoverPoint

VMware 5.x Enterprise

VMware Site Recovery Manager 5.x

VMware Update Manager 5.x

VMware vMA, vCOps, and PowerCLI scripting/automation

HP Bladesystem c7000 Series

Windows Server 2003, 2008, 2012