#Tensorflow Lite

TensorFlow Lite

The rapid emergence of low-power embedded devices and modern machine learning (ML) algorithms has created a new Internet of Things (IoT) era where lightweight ML frameworks such as TinyML have created new opportunities for ML algorithms running within edge devices.

youtube

Lesson 2

Adding audio classification to your mobile app: simple tracking of waves by window size and, at the end, adding the TensorFlow Lite Task Library.

*NB: in this take, the microphone tool still captures a dog.
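Audio classifiers usually score fixed-length chunks of the incoming signal rather than a whole recording. The windowing step can be sketched in plain Python. This sketch assumes a 16 kHz mono input and uses hypothetical window and hop sizes. It is not the TensorFlow Lite Task Library's API, which provides its own audio buffering, and it only illustrates the idea.

```python
from typing import List, Sequence


def frame_audio(samples: Sequence[float], window_size: int, hop: int) -> List[List[float]]:
    """Split a mono waveform into fixed-size, possibly overlapping windows.

    window_size: samples per window (hypothetical; use whatever your model expects).
    hop: samples to advance between consecutive windows (hop < window_size => overlap).
    Trailing samples that cannot fill a whole window are dropped.
    """
    if window_size <= 0 or hop <= 0:
        raise ValueError("window_size and hop must be positive")
    windows: List[List[float]] = []
    start = 0
    while start + window_size <= len(samples):
        windows.append(list(samples[start:start + window_size]))
        start += hop
    return windows


if __name__ == "__main__":
    sample_rate = 16_000                    # assumed 16 kHz mono microphone input
    waveform = [0.0] * (3 * sample_rate)    # 3 s of silence as placeholder audio
    # 1-second windows advanced by half a second (50% overlap)
    windows = frame_audio(waveform, window_size=sample_rate, hop=sample_rate // 2)
    print(len(windows))  # 5
```

Each window would then be passed to the classifier separately. Overlapping windows make it less likely that a short sound, such as a bark, is split across two chunks and missed.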

#tensorflow lite#Audio text collaboration#pre-trained model#adding task library#google#tracking wawes#by size windows#Youtube

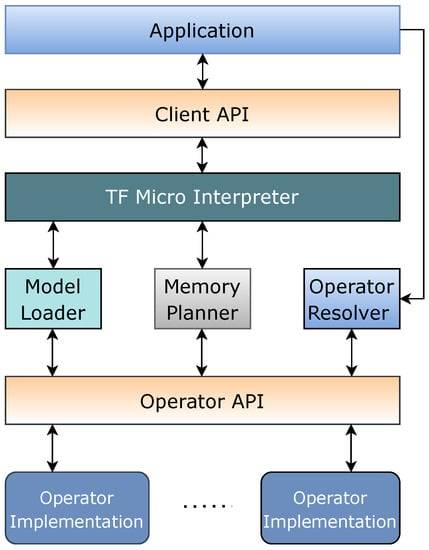

Tensorflow Lite for Microcontrollers | Bermondsey Electronics Limited

Explore the future of embedded AI with Bermondsey Electronics Limited. Harness the power of TensorFlow Lite for Microcontrollers to bring intelligence to your devices. Elevate your products with advanced machine learning capabilities.

Flutter's Most In-Demand Skills in 2025

As Flutter continues to dominate the cross-platform development landscape, the skill set required to excel in Flutter development is rapidly evolving. By 2025, the most in-demand skills for Flutter developers will reflect the growing complexity and scope of applications being built using this framework.

Mastery of Flutter 3.0 and Beyond

Flutter's updates in recent years have brought significant improvements, especially with version 3.0 and beyond. Developers need to be proficient in utilizing these newer features like desktop and embedded system support, web improvements, and enhanced performance optimizations.

Expertise in State Management (Bloc, Riverpod, GetX)

As apps become more complex, efficient state management is crucial. Developers who master modern state management solutions like Bloc, Riverpod, and GetX will stand out. GetX, in particular, is gaining traction for its minimal boilerplate and ease of use, making it a must-know for future projects.

Advanced UI/UX Design

With Flutter’s focus on building beautiful UIs, a deep understanding of custom widget design, animations, and responsive layouts will be essential. Developers should be skilled in creating dynamic, adaptive, and user-friendly designs that seamlessly translate across multiple platforms (mobile, web, desktop).

Integration with AI and ML APIs

Flutter developers who can integrate machine learning and AI functionalities into their apps using TensorFlow Lite, ML Kit, or cloud-based AI services will be in high demand. As AI continues to reshape industries, developers must be adept at making their apps smarter and more intuitive.

Proficiency in Firebase and Backend Integration

Firebase remains the go-to for many Flutter applications. Skills in Firebase Authentication, Firestore, and real-time databases will continue to be sought after. However, knowledge of other backend integrations (Node.js, GraphQL, AWS Amplify) will also be crucial as apps require more powerful cloud solutions.

Cross-Platform Testing and CI/CD Pipeline

Ensuring apps are bug-free across platforms will become more challenging. Developers proficient in automated testing (for example, with the integration_test package, the recommended successor to Flutter Driver) and in setting up CI/CD pipelines will be highly valued, especially for enterprise-grade applications.

Native Code Integration (Platform Channels)

Even though Flutter is a cross-platform framework, many projects still require platform-specific features. Understanding how to integrate native Kotlin/Swift code into Flutter using platform channels will remain a valuable skill for hybrid developers.

WebAssembly and Performance Optimization

With Flutter's growing capabilities on the web, knowledge of WebAssembly and optimizing app performance for web deployments will be a crucial skill. Developers should be able to create web applications that are lightweight yet offer native-level performance.

Flutter for IoT and Embedded Devices

The next frontier for Flutter is embedded systems and IoT devices. Developers with the ability to build applications that interact with hardware using Flutter, especially with support for Linux and embedded systems, will find themselves at the cutting edge of app development.

Security and Data Privacy Best Practices

As data privacy concerns continue to rise, Flutter developers must be well-versed in implementing secure authentication, data encryption, and ensuring GDPR and CCPA compliance. Secure app development practices will be a top priority.

By 2025, Flutter developers with these skills will be in high demand across industries, from mobile and web applications to embedded systems and beyond. Mastering these skills will ensure that developers stay competitive in an increasingly sophisticated development landscape.

#flutter app development#mobile app development#mobile application development#mobile app company#EmbeddedSystems#FlutterIoT#SecureFlutterApps#MobileAppDevelopment#CrossPlatformDevelopment#Flutter2025#FlutterExperts#InDemandSkills

#flutter app development#flutter app development company#Instant Object Recognition in Live Camera Streams#Real-time Object Detection in Flutter#top flutter app development companies

Embedded AI Market | Future Growth Aspect Analysis to 2030

The Embedded AI Market was valued at USD 8.9 billion in 2023 and is projected to surpass USD 21.5 billion by 2030, growing at a CAGR of 13.5% during 2024-2030. Embedded AI refers to the integration of artificial intelligence algorithms and processing capabilities directly into hardware devices. Unlike traditional AI, which often requires a connection to powerful cloud computing systems, embedded AI operates locally on edge devices such as sensors, microcontrollers, or other hardware components. This enables real-time decision-making and data analysis with reduced latency and power consumption.
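You can check the quoted figures against each other. CAGR is (end / start) ** (1 / years) - 1. Treating 2023 to 2030 as seven compounding years gives about 13.4%, which is close to the stated 13.5%. The small gap may come from rounding, or from the report using a 2024 starting value that the excerpt does not give.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # USD 8.9 billion (2023) -> USD 21.5 billion (2030): seven annual compounding steps
    rate = cagr(8.9, 21.5, years=2030 - 2023)
    print(f"{rate:.1%}")  # 13.4%
```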

This convergence of AI and embedded systems is unlocking new possibilities for smarter, autonomous, and responsive devices that can analyze and act upon data instantly without needing to send it to remote servers for processing.

Market Growth and Key Drivers

The global embedded AI market is expanding rapidly, driven by several key factors:

Advancements in Edge Computing

The proliferation of edge computing has played a pivotal role in the growth of embedded AI. Edge devices with built-in AI capabilities are able to process data locally, reducing the need for constant communication with cloud servers. This is particularly crucial for applications requiring immediate decision-making, such as autonomous vehicles, drones, and industrial automation.

Increased Demand for IoT Devices

The Internet of Things (IoT) is a major contributor to the growth of embedded AI. IoT devices are embedded in everyday objects like smart home appliances, wearable devices, and industrial equipment, gathering data in real time. By integrating AI, these devices can offer predictive maintenance, enhanced user experiences, and optimized operational efficiency.

Enhanced AI Algorithms

AI algorithms have become more efficient and powerful, enabling them to operate in low-power, resource-constrained environments like embedded systems. With advancements in AI frameworks, such as TensorFlow Lite and PyTorch Mobile, the ability to deploy AI models on edge devices is now more accessible than ever.
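Much of the efficiency gain on embedded hardware comes from quantization. Weights and activations are stored as 8-bit integers instead of 32-bit floats, which cuts the weight storage roughly fourfold. TensorFlow Lite's integer scheme represents a real value as `scale * (q - zero_point)`. The sketch below shows a simplified per-tensor, asymmetric version of that mapping in plain Python. It is an illustration under those assumptions, not the converter's implementation, which differs in details (for example, it can use per-channel scales for weights).

```python
from typing import List, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit integer range


def choose_qparams(values: List[float]) -> Tuple[float, int]:
    """Pick a per-tensor scale and zero point mapping [min, max] onto [QMIN, QMAX]."""
    lo = min(min(values), 0.0)  # include 0.0 so it is exactly representable
    hi = max(max(values), 0.0)
    if hi == lo:  # all-zero tensor: any scale works
        return 1.0, 0
    scale = (hi - lo) / (QMAX - QMIN)
    zero_point = int(round(QMIN - lo / scale))
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(values: List[float], scale: float, zero_point: int) -> List[int]:
    # q = round(x / scale) + zero_point, clamped to the int8 range
    return [max(QMIN, min(QMAX, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(qvalues: List[int], scale: float, zero_point: int) -> List[float]:
    # x is approximately scale * (q - zero_point)
    return [scale * (q - zero_point) for q in qvalues]


if __name__ == "__main__":
    weights = [-0.8, -0.3, 0.0, 0.45, 1.2]
    scale, zp = choose_qparams(weights)
    q = quantize(weights, scale, zp)
    restored = dequantize(q, scale, zp)
    print(q)
    # Rounding error stays within half a quantization step (scale / 2)
    print(max(abs(a - b) for a, b in zip(weights, restored)))
```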

Industry 4.0 and Smart Manufacturing

Industry 4.0 emphasizes automation, smart factories, and connected machinery. Embedded AI plays a critical role in optimizing processes in manufacturing, such as predictive maintenance, quality control, and energy management. Machines equipped with AI can autonomously monitor their own performance, identify inefficiencies, and make adjustments in real time.

Rise of Autonomous Systems

The push toward autonomous systems, especially in the automotive industry, is driving embedded AI adoption. Self-driving cars, drones, and robots rely on embedded AI to process vast amounts of sensor data, make real-time decisions, and navigate complex environments without human intervention.

Key Sectors Driving Embedded AI Adoption

Automotive Industry

The automotive industry is at the forefront of embedded AI adoption. AI-driven features like autonomous driving, advanced driver-assistance systems (ADAS), and predictive maintenance are all powered by embedded AI systems. These technologies enable cars to analyze real-time road conditions, detect potential hazards, and make instant decisions, enhancing safety and efficiency.

Healthcare

In healthcare, embedded AI is transforming medical devices and diagnostic tools. AI-powered wearables can monitor patients' vital signs in real time, providing healthcare professionals with actionable insights for early diagnosis and personalized treatment plans. Moreover, embedded AI systems in medical imaging devices can assist in detecting diseases like cancer with higher accuracy.

Consumer Electronics

From smart speakers to home security systems, embedded AI is driving innovation in the consumer electronics space. Devices are becoming more intuitive, offering personalized experiences through voice recognition, gesture control, and facial recognition technologies. These AI-driven enhancements have revolutionized how consumers interact with their devices.

Industrial Automation

Embedded AI in industrial automation is enabling smarter, more efficient factories. AI-powered sensors and controllers can optimize production processes, predict equipment failures, and reduce downtime. As industries move toward fully autonomous operations, embedded AI will play an integral role in managing complex industrial systems.

Challenges in the Embedded AI Market

Despite its rapid growth, the embedded AI market faces several challenges. Developing AI algorithms that can operate efficiently in resource-constrained environments is complex. Power consumption, heat generation, and the limited processing capabilities of embedded devices must all be carefully managed. Moreover, there are concerns around data privacy and security, particularly in industries handling sensitive information, such as healthcare and finance.

Another challenge is the lack of standardization across embedded AI platforms, which can hinder widespread adoption. To address this, industry stakeholders are collaborating on developing open standards and frameworks to streamline AI deployment in embedded systems.

The Future of Embedded AI

The future of embedded AI looks promising, with continued advancements in hardware, AI algorithms, and edge computing technologies. As AI capabilities become more efficient and affordable, their integration into everyday devices will become increasingly ubiquitous. In the coming years, we can expect to see even greater adoption of embedded AI in smart cities, autonomous transportation systems, and advanced robotics.

Moreover, the convergence of 5G technology with embedded AI will further accelerate innovation. With faster, more reliable connectivity, edge devices equipped with AI will be able to process and transmit data more efficiently, unlocking new use cases across various industries.

Conclusion

The embedded AI market is revolutionizing industries by enabling devices to think, analyze, and act autonomously. As the demand for smarter, more responsive technology grows, embedded AI will continue to transform sectors such as automotive, healthcare, industrial automation, and consumer electronics. With its ability to provide real-time insights and decision-making at the edge, embedded AI is set to play a central role in the next wave of technological innovation.

TensorFlow Mastery: Build Cutting-Edge AI Models

In the realm of artificial intelligence and machine learning, TensorFlow stands out as one of the most powerful and widely used frameworks. Developed by Google, TensorFlow provides a comprehensive ecosystem for building and deploying machine learning models. For those looking to master this technology, a well-structured TensorFlow course for deep learning can be a game-changer. In this blog post, we will explore the benefits of mastering TensorFlow, the key components of a TensorFlow course for deep learning, and how it can help you build cutting-edge AI models. Whether you are a beginner or an experienced practitioner, this guide will provide valuable insights into the world of TensorFlow.

1. Understanding TensorFlow

1.1 What is TensorFlow?

TensorFlow is an open-source machine learning framework that allows developers to build and deploy machine learning models with ease. It provides a flexible and comprehensive ecosystem that includes tools, libraries, and community resources. TensorFlow supports a wide range of tasks, from simple linear regression to complex deep learning models. This versatility makes it an essential tool for anyone looking to delve into the world of AI.

1.2 Why Choose TensorFlow?

There are several reasons why TensorFlow is a popular choice among data scientists and AI practitioners. First, it offers a high level of flexibility, allowing users to build custom models tailored to their specific needs. Second, TensorFlow’s extensive documentation and community support make it accessible to both beginners and experts. Finally, the TensorFlow ecosystem includes tools such as TensorFlow Extended (TFX) and TensorFlow Lite, which provide a seamless workflow for deploying models in production environments.

2. Key Components of a TensorFlow Course for Deep Learning

2.1 Introduction to Deep Learning

A comprehensive TensorFlow course for deep learning typically begins with an introduction to deep learning concepts. This includes understanding neural networks, activation functions, and the basics of forward and backward propagation. By grasping these foundational concepts, learners can build a solid base for more advanced topics.
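A single sigmoid neuron is enough to show forward and backward propagation. The plain-Python sketch below uses no TensorFlow, and its dataset and hyperparameters are illustrative choices. It runs a forward pass, computes the gradient of the binary cross-entropy loss by hand, and applies gradient descent. In TensorFlow, the same gradients would be computed automatically, for example with `tf.GradientTape`.

```python
import math
import random
from typing import List, Sequence, Tuple

# Logical OR: a tiny, linearly separable toy dataset
OR_DATA = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    # Forward pass: weighted sum plus bias, then sigmoid activation
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_neuron(data, epochs: int = 2000, lr: float = 0.5,
                 seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    w = [rng.uniform(-0.5, 0.5) for _ in range(len(data[0][0]))]
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            y_hat = predict(w, b, x)  # forward pass
            dz = y_hat - y            # backward pass: dLoss/dz for sigmoid + binary cross-entropy
            w = [wi - lr * dz * xi for wi, xi in zip(w, x)]  # dLoss/dw_i = dz * x_i
            b -= lr * dz              # dLoss/db = dz
    return w, b


if __name__ == "__main__":
    w, b = train_neuron(OR_DATA)
    print([round(predict(w, b, x)) for x, _ in OR_DATA])  # [0, 1, 1, 1]
```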

2.2 Building Neural Networks with TensorFlow

The next step in a TensorFlow course for deep learning is learning how to build neural networks using TensorFlow. This involves understanding TensorFlow’s core components, such as tensors, operations, and computational graphs. Learners will also explore how to create and train neural networks using TensorFlow’s high-level APIs, such as Keras.

2.3 Advanced Deep Learning Techniques

As learners progress through the TensorFlow course for deep learning, they will encounter more advanced techniques. This includes topics such as convolutional neural networks (CNNs) for image recognition, recurrent neural networks (RNNs) for sequence data, and generative adversarial networks (GANs) for generating new data. These advanced techniques enable learners to tackle complex AI challenges and build cutting-edge models.
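The core operation of a convolutional layer is to slide a small kernel over the input and take a weighted sum at each position. The plain-Python sketch below uses "valid" padding and stride 1. Like most deep learning libraries, including TensorFlow's convolution ops, it computes cross-correlation, meaning the kernel is not flipped. The image and edge-detecting kernel are illustrative.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """2D cross-correlation, stride 1, no padding ("valid")."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    if kh > ih or kw > iw:
        raise ValueError("kernel must fit inside the image")
    out: Matrix = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # Weighted sum of the kernel-sized patch whose top-left corner is (r, c)
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


if __name__ == "__main__":
    # A dark-to-bright vertical edge between columns 1 and 2
    image = [[0, 0, 1, 1],
             [0, 0, 1, 1],
             [0, 0, 1, 1]]
    kernel = [[-1, 1]]  # responds where brightness increases from left to right
    print(conv2d_valid(image, kernel))  # [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]
```

In a real CNN, the kernel values are learned during training rather than hand-picked, and many kernels run in parallel to produce multiple feature maps.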

2.4 Model Optimization and Deployment

A crucial aspect of any TensorFlow course for deep learning is learning how to optimize and deploy models. This includes techniques for hyperparameter tuning, regularization, and model evaluation. Additionally, learners will explore how to deploy models using TensorFlow Serving, TensorFlow Lite, and TensorFlow.js. These deployment tools ensure that models can be efficiently integrated into real-world applications.

3. Practical Applications of TensorFlow

3.1 Computer Vision

One of the most popular applications of TensorFlow is in the field of computer vision. By leveraging TensorFlow’s powerful libraries, developers can build models for image classification, object detection, and image segmentation. A TensorFlow course for deep learning will typically include hands-on projects that allow learners to apply these techniques to real-world datasets.
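Object detection pipelines commonly measure how well two bounding boxes overlap using intersection over union (IoU). They then use it in non-maximum suppression (NMS) to drop duplicate detections of the same object. The plain-Python sketch below assumes boxes given as `(x_min, y_min, x_max, y_max)` and an illustrative 0.5 threshold.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: Sequence[Box], scores: Sequence[float],
                        threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the best-scoring box, drop boxes overlapping a kept box above threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1/7, about 0.142857
    detections = [(0, 0, 2, 2), (0.1, 0.1, 2, 2), (3, 3, 4, 4)]
    print(non_max_suppression(detections, [0.9, 0.8, 0.7]))  # [0, 2]
```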

3.2 Natural Language Processing

Another key application of TensorFlow is in natural language processing (NLP). TensorFlow provides tools for building models that can understand and generate human language. This includes tasks such as sentiment analysis, language translation, and text generation. By mastering TensorFlow, learners can develop sophisticated NLP models that can be used in various applications, from chatbots to language translation services.

3.3 Reinforcement Learning

Reinforcement learning is a branch of machine learning that focuses on training agents to make decisions by interacting with their environment. TensorFlow provides a robust framework for building and training reinforcement learning models. A TensorFlow course for deep learning will often cover the basics of reinforcement learning and provide practical examples of how to implement these models using TensorFlow.
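Many reinforcement learning methods are built on the Q-learning update Q(s, a) ← Q(s, a) + α [r + γ max_a' Q(s', a') - Q(s, a)]. The sketch below applies it to a tiny, made-up corridor environment using a lookup table in plain Python. In deep reinforcement learning, a neural network (which TensorFlow could train) replaces the table. The environment, rewards, and hyperparameters are illustrative assumptions.

```python
import random
from typing import List, Tuple

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal (terminal)
MOVES = (-1, +1)      # action 0 = step left, action 1 = step right


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Move within the corridor; reward 1.0 only when the goal is reached."""
    nxt = min(max(state + MOVES[action], 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def greedy(q_row: List[float]) -> int:
    # Ties are broken toward action 1 (right)
    return 0 if q_row[0] > q_row[1] else 1


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.2, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy exploration
            action = rng.randrange(2) if rng.random() < epsilon else greedy(q[state])
            nxt, reward, done = step(state, action)
            # Bootstrap from the best next action unless the episode ended
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


if __name__ == "__main__":
    q = q_learning()
    print([greedy(q[s]) for s in range(N_STATES - 1)])  # learned policy for cells 0..3: [1, 1, 1, 1]
```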

4. Benefits of Mastering TensorFlow

4.1 Career Advancement

Mastering TensorFlow can significantly enhance your career prospects. As one of the most widely used machine learning frameworks, TensorFlow skills are in high demand across various industries. By completing a TensorFlow course for deep learning, you can demonstrate your expertise and open up new career opportunities in AI and machine learning.

4.2 Personal Growth

Beyond career advancement, mastering TensorFlow offers personal growth and intellectual satisfaction. The ability to build and deploy cutting-edge AI models allows you to tackle complex problems and contribute to innovative solutions. Whether you are working on personal projects or collaborating with a team, TensorFlow provides the tools and resources needed to bring your ideas to life.

4.3 Community and Support

One of the key benefits of learning TensorFlow is the vibrant community and support network. TensorFlow’s extensive documentation, tutorials, and community forums provide valuable resources for learners at all levels. By engaging with the TensorFlow community, you can gain insights, share knowledge, and collaborate with other AI enthusiasts.

Conclusion

In conclusion, mastering TensorFlow through a well-structured TensorFlow course for deep learning can open up a world of possibilities in the field of artificial intelligence. From understanding the basics of neural networks to building and deploying advanced models, a comprehensive course provides the knowledge and skills needed to excel in AI. This deep dive into TensorFlow not only enhances your career prospects but also offers personal growth and intellectual satisfaction.

Primate Labs launches Geekbench AI benchmarking tool

New Post has been published on https://thedigitalinsider.com/primate-labs-launches-geekbench-ai-benchmarking-tool/

Primate Labs has officially launched Geekbench AI, a benchmarking tool designed specifically for machine learning and AI-centric workloads.

The release of Geekbench AI 1.0 marks the culmination of years of development and collaboration with customers, partners, and the AI engineering community. The benchmark, previously known as Geekbench ML during its preview phase, has been rebranded to align with industry terminology and ensure clarity about its purpose.

Geekbench AI is now available for Windows, macOS, and Linux through the Primate Labs website, as well as on the Google Play Store and Apple App Store for mobile devices.

Primate Labs’ latest benchmarking tool aims to provide a standardised method for measuring and comparing AI capabilities across different platforms and architectures. The benchmark offers a distinctive approach by providing three overall scores (single precision, half precision, and quantized), reflecting the complexity and heterogeneity of AI workloads.

“Measuring performance is, put simply, really hard,” explained Primate Labs. “That’s not because it’s hard to run an arbitrary test, but because it’s hard to determine which tests are the most important for the performance you want to measure – especially across different platforms, and particularly when everyone is doing things in subtly different ways.”

The three-score system accounts for the varied precision levels and hardware optimisations found in modern AI implementations. This multi-dimensional approach allows developers, hardware vendors, and enthusiasts to gain deeper insights into a device’s AI performance across different scenarios.

A notable addition to Geekbench AI is the inclusion of accuracy measurements for each test. This feature acknowledges that AI performance isn’t solely about speed but also about the quality of results. By combining speed and accuracy metrics, Geekbench AI provides a more holistic view of AI capabilities, helping users understand the trade-offs between performance and precision.

Geekbench AI 1.0 introduces support for a wide range of AI frameworks, including OpenVINO on Linux and Windows, and vendor-specific TensorFlow Lite delegates like Samsung ENN, ArmNN, and Qualcomm QNN on Android. This broad framework support ensures that the benchmark reflects the latest tools and methodologies used by AI developers.

The benchmark also utilises more extensive and diverse datasets, which not only enhance the accuracy evaluations but also better represent real-world AI use cases. All workloads in Geekbench AI 1.0 run for a minimum of one second, allowing devices to reach their maximum performance levels during testing while still reflecting the bursty nature of real-world applications.
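The "minimum of one second" rule works like a loop that repeats a workload until a time budget has elapsed and then reports throughput. The plain-Python sketch below illustrates this. The workload and function name are hypothetical, and this is not Geekbench AI's actual harness.

```python
import time
from typing import Callable, Dict


def run_for_min_duration(workload: Callable[[], object],
                         min_seconds: float = 1.0) -> Dict[str, float]:
    """Call `workload` repeatedly until at least `min_seconds` of wall-clock time has passed."""
    iterations = 0
    start = time.perf_counter()
    while True:
        workload()
        iterations += 1
        elapsed = time.perf_counter() - start
        if elapsed >= min_seconds:
            break
    return {
        "iterations": float(iterations),
        "elapsed_s": elapsed,
        "per_second": iterations / elapsed,
    }


if __name__ == "__main__":
    # Hypothetical stand-in for an inference call: a small arithmetic loop
    result = run_for_min_duration(lambda: sum(i * i for i in range(10_000)), min_seconds=1.0)
    print(result)
```

Running for a minimum time rather than a fixed number of iterations keeps short workloads from finishing before the device ramps up to its full performance level. Slower devices simply complete fewer iterations in the same budget.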

Primate Labs has published detailed technical descriptions of the workloads and models used in Geekbench AI 1.0, emphasising their commitment to transparency and industry-standard testing methodologies. The benchmark is integrated with the Geekbench Browser, facilitating easy cross-platform comparisons and result sharing.

The company anticipates regular updates to Geekbench AI to keep pace with market changes and emerging AI features. However, Primate Labs believes that Geekbench AI has already reached a level of reliability that makes it suitable for integration into professional workflows, with major tech companies like Samsung and Nvidia already utilising the benchmark.

(Image Credit: Primate Labs)

Tags: ai, artificial intelligence, benchmark, geekbench, geekbench ai, machine learning, primate labs, tools

#Accounts#ai#ai & big data expo#AI Engineering#ai use cases#amp#android#app#app store#apple#applications#approach#Articles#artificial#Artificial Intelligence#automation#benchmark#benchmarking#Big Data#browser#challenge#Cloud#Collaboration#Community#Companies#complexity#comprehensive#conference#cyber#cyber security

The Ultimate Guide to Integrating AI Features in Your Flutter Apps

As technology quickly evolves, incorporating artificial intelligence (AI) into mobile applications has become essential for providing superior user experiences, unique content, and cutting-edge features. Flutter, with its robust ecosystem and cross-platform capabilities, is an ideal framework for developing AI-enhanced apps. This guide will walk you through the steps and best practices for integrating AI features into your Flutter applications.

1. Introduction to AI in Mobile Apps: Artificial intelligence is transforming how mobile applications interact with users. AI enables apps to offer intelligent features such as voice recognition, image processing, personalized recommendations, and predictive analytics, all of which enhance user experience and engagement.

2. Why Choose Flutter for AI Integration?: Flutter, Google's open-source UI software development toolkit, is well known for its fast development, expressive UI, and native performance on both iOS and Android. Here are a few reasons why Flutter is well suited to AI integration:

Cross-Platform Development: Develop once, deploy anywhere.

Rich Ecosystem: Access a wide array of plugins and packages.

Hot Reload: Instantly see the results of your code changes.

Strong Community Support: Benefit from an active developer community and extensive documentation.

3. Getting Started with AI in Flutter: Before diving into AI integration, it's crucial to have a solid understanding of Flutter development. Here’s a quick checklist:

Set up your Flutter development environment.

Get familiar with the Dart programming language.

Explore Flutter’s widget tree and state management.

4. Popular AI Features in Flutter Apps

Here are some common AI features you can integrate into your Flutter apps:

Voice Recognition: Convert speech to text and vice versa.

Image Processing: Recognize objects, faces, and scenes.

Natural Language Processing (NLP): Sentiment analysis, language translation, and chatbots.

Predictive Analytics: Provide personalized recommendations and forecasts.

5. Setting Up Your Development Environment

To begin integrating AI into your Flutter app, you need the following:

Flutter SDK: Install the latest version from the Flutter website.

Dart SDK: Included with Flutter.

IDE: Use Visual Studio Code, Android Studio, or IntelliJ IDEA.

AI Libraries and Packages: TensorFlow Lite, Google ML Kit, etc.

6. Integrating Pre-built AI Solutions

For rapid development, consider using pre-built AI solutions:

Google ML Kit: A collection of machine learning APIs for mobile apps.

TensorFlow Lite: A lightweight solution for deploying ML models on mobile devices.

IBM Watson: Advanced AI and Machine Learning APIs.

7. Testing and Deployment

Thoroughly test your AI features before deployment:

Unit Testing: Test individual components and functions.

Integration Testing: Ensure AI features work seamlessly with other app components.

Performance Testing: Monitor the performance impact of AI features.

Deploy your app using Flutter’s built-in tools for both iOS and Android.

8. Future Trends in AI and Flutter

Stay ahead by keeping an eye on emerging trends:

Edge AI: Running AI models directly on mobile devices for real-time performance.

AutoML: Automated machine learning tools that simplify the model training process.

Explainable AI: Making AI decision-making processes transparent and understandable.

Conclusion

Embrace the Future of Mobile App Development with Flutter

Flutter continues to improve the mobile app development landscape, offering outstanding benefits that businesses can't afford to ignore. As more companies shift towards this impressive cross-platform architecture, now is the perfect time to join them. For your next project, trust XcelTec, the leading Flutter app development company, to bring your vision to life.

#flutter app development#flutter app development company#flutter app#flutter development#flutter development services#best flutter app development services

edge computing

The rapid emergence of low-power embedded devices and modern machine learning (ML) algorithms has created a new Internet of Things (IoT) era where lightweight ML frameworks such as TinyML have created new opportunities for ML algorithms running within edge devices.

https://www.mdpi.com/1999-5903/14/12/363

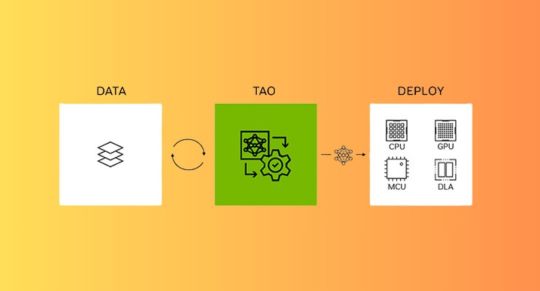

MediaTek NeuroPilot SDK Develops AI Faster with NVIDIA TAO

MediaTek NeuroPilot SDK

As part of its edge inference silicon plan, MediaTek stated at COMPUTEX 2024 that it will integrate NVIDIA TAO with MediaTek’s NeuroPilot SDK. With MediaTek’s support for NVIDIA TAO, developers will have an easy time integrating cutting-edge AI capabilities into a wide range of IoT applications that utilise the company’s state-of-the-art silicon. This will assist businesses of all sizes in realising edge AI’s promise in a variety of IoT verticals, such as smart manufacturing, smart retail, smart cities, transportation, and healthcare.

MediaTek’s NeuroPilot SDK Edge AI Applications

MediaTek created the extensive NeuroPilot SDK, a software toolkit and set of APIs, to simplify the creation and deployment of AI applications on hardware that uses its chipsets. Here is what NeuroPilot SDK provides:

Emphasis on Edge AI

NeuroPilot SDK is built around the idea of “Edge AI,” which means processing AI data locally on the device rather than on external servers. This enables better privacy, faster response times, and less dependence on internet access.

Efficiency is Critical

MediaTek’s NeuroPilot SDK is designed to maximise the efficiency of AI applications running on its hardware platforms, especially its mobile System-on-Chip (SoC) processors. This means longer battery life and smoother operation for AI-powered features on devices.

Framework Agnostic

Although it may include features unique to MediaTek hardware, the NeuroPilot SDK is designed to be independent of the underlying AI framework. This lets developers use custom models or well-known frameworks such as TensorFlow Lite in their apps.

Tools and Resources for Development

The NeuroPilot SDK offers a wide range of tools and resources to developers, such as:

APIs for accessing the AI hardware in MediaTek-powered smartphones.

Tutorials and sample code to help developers get started.

Integration with popular IDEs for a smooth development workflow.

Support and documentation to help developers along the way.

Latest Developments

In June 2024, MediaTek announced the integration of the NeuroPilot SDK with NVIDIA’s TAO Toolkit. The collaboration aims to speed up development further through:

Pre-trained AI models

A large library of pre-trained AI models for image recognition and object detection, which saves developers time and effort.

Model optimization tools

Tools for fine-tuning pre-trained models for particular use cases and maximising their performance on MediaTek hardware.

Overall, MediaTek’s NeuroPilot SDK enables developers to build powerful, efficient AI apps for a variety of edge devices, such as wearables, smart home appliances, industrial machinery, and smartphones. By emphasising efficiency, ease of development, and a growing tool ecosystem, NeuroPilot aims to become a major driving force behind Edge AI.

NVIDIA TAO Tutorial

The NVIDIA TAO Toolkit: What Is It?

Transfer learning lets you speed up development and produce AI/machine learning models without a large team of data scientists or huge amounts of data. This technique transfers learned features from an existing neural network model to a new, customised one.

Built on TensorFlow and PyTorch, the open-source NVIDIA TAO Toolkit uses transfer learning to streamline model training and to optimise models for inference throughput on nearly any platform. The result is a highly efficient workflow: start from pre-trained or custom models, adapt them to your own real or synthetic data, and then optimise them for inference throughput, all without large training datasets or deep AI expertise.
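To make the transfer-learning idea concrete, here is a minimal pure-Python sketch, not TAO’s implementation: a hypothetical “pre-trained” feature extractor is kept frozen, and only a new logistic-regression head is trained on a small new dataset. The frozen weights, the toy dataset, and all function names are assumptions made for illustration.

```python
import math

# Hypothetical "pre-trained" backbone: fixed weights mapping a 2-D input to
# 3 features. In transfer learning these weights stay frozen.
FROZEN_W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

def extract_features(x):
    """Frozen feature extractor: ReLU(W @ x). Never updated during training."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(data, epochs=500, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    head_w = [0.0, 0.0, 0.0]
    head_b = 0.0
    for _ in range(epochs):
        for x, y in data:
            f = extract_features(x)                 # reuse the frozen features
            p = sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)
            err = p - y                             # d(log loss)/d(logit)
            head_w = [w - lr * err * fi for w, fi in zip(head_w, f)]
            head_b -= lr * err
    return head_w, head_b

def predict(head, x):
    head_w, head_b = head
    f = extract_features(x)
    score = sum(w * fi for w, fi in zip(head_w, f)) + head_b
    return 1 if sigmoid(score) >= 0.5 else 0

# Small new task: label 1 only when both inputs are "large".
small_dataset = [([0.0, 0.0], 0), ([1.0, 0.0], 0), ([0.0, 1.0], 0),
                 ([1.0, 1.0], 1), ([2.0, 2.0], 1), ([0.2, 0.1], 0)]
head = train_head(small_dataset)
print([predict(head, x) for x, _ in small_dataset])
```

Only four parameters (three head weights and a bias) are learned here, which is why a handful of examples suffices; a real backbone would supply far richer features.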

Key Benefits

Train Models Efficiently

Use the TAO Toolkit’s AutoML feature to save the time and effort of manual tuning.

Build Highly Accurate AI

Leverage NVIDIA pretrained models and state-of-the-art (SOTA) vision transformers to build highly accurate, custom AI models for your use case.

Optimise for Inference

Beyond customisation, optimising the model for inference can deliver up to 4X higher performance.
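The post doesn’t say how TAO reaches its “up to 4X” figure. One common inference optimisation, assumed here purely for illustration, is INT8 quantization, which TensorFlow Lite also supports. The pure-Python sketch below shows symmetric per-tensor quantization; the function names and sample weights are hypothetical.

```python
def quantize_int8_symmetric(values):
    """Quantize floats to int8 with one scale per tensor and a zero-point of 0."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [qi * scale for qi in q]

weights = [0.5, -1.27, 0.0, 1.27, 0.01]
q, scale = quantize_int8_symmetric(weights)
print(q, scale)
print(dequantize(q, scale))
```

Storing int8 instead of float32 cuts weight storage by a factor of 4; whether inference also speeds up by a similar amount depends on the target hardware’s int8 support.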

Deploy on Any Device

Deploy optimised models on MCUs, CPUs, GPUs, and other hardware.

An Enterprise-Grade Solution for Mission-Critical AI

As part of NVIDIA AI Enterprise, an enterprise-ready AI software platform, NVIDIA TAO accelerates time to value while reducing the potential risks of open-source software. It provides security, stability, manageability, and support. NVIDIA AI Enterprise includes three unique foundation models trained on commercially viable datasets:

NV-DINOv2, billed as the only commercially viable foundation model for vision, was trained on more than 100 million images using self-supervised learning. With only a small amount of training data, the model can be quickly fine-tuned for a variety of vision AI tasks.

PCB classification, built on NV-DINOv2, identifies missing components on a printed circuit board (PCB) with high accuracy.

Retail recognition, also built on NV-DINOv2, can identify a large number of retail SKUs.

Starting with TAO 5.1, foundation models based on NV-DINOv2 can be fine-tuned for specific vision AI tasks.

Get a complimentary 90-day trial licence for NVIDIA AI Enterprise.

Use NVIDIA LaunchPad to explore TAO Toolkit and NVIDIA AI Enterprise.

Image credit: NVIDIA

Why It Matters for AI Development

Customise your application with generative AI

Generative AI is a disruptive force that will transform many industries. It is powered by foundation models trained on vast corpora of text, images, sensor readings, and other data. With TAO, you can now build domain-specific generative AI applications by fine-tuning and customising these foundation models. TAO supports fine-tuning multi-modal models such as NV-DINOv2, NV-CLIP, and Grounding-DINO.

Deploy Models on Any Platform

The NVIDIA TAO Toolkit can power AI on billions of devices. For better interoperability, the recently released NVIDIA TAO Toolkit 5.0 supports exporting models in ONNX, an open standard. This makes it possible to deploy a model trained with the NVIDIA TAO Toolkit on any computing platform.

AI-Powered Data Labelling

New AI-assisted annotation features let you label segmentation masks faster and more cheaply. Mask Auto Labeler (MAL), a weakly supervised, transformer-based segmentation architecture, can assist with segmentation annotation as well as with tightening and adjusting bounding boxes for object detection.
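MAL itself is a learned model; the sketch below illustrates only the geometric step assumed here, deriving a tight bounding box from a predicted binary mask. The function name and the toy mask are hypothetical, and this is not MAL’s code.

```python
def tight_bbox(mask):
    """Return (x_min, y_min, x_max, y_max) of the nonzero pixels in a 2-D mask,
    or None if the mask is empty."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not ys:
        return None
    xs = [x for row in mask for x, v in enumerate(row) if v]
    return min(xs), min(ys), max(xs), max(ys)

mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(tight_bbox(mask))
```

A loose, hand-drawn box can be replaced by this tight box once a good mask is available, which is the kind of refinement the paragraph describes.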

Use REST APIs to Integrate TAO Toolkit Into Your Application

With REST APIs and Kubernetes, you can now more easily integrate TAO Toolkit into your application and deploy it in a modern cloud-native architecture. Build a new AI service, or add TAO Toolkit to your existing offering to enable automation across various technologies.

Image credit: NVIDIA

AutoML Makes AI Easier

Training and optimising AI models takes a lot of time and requires in-depth knowledge of which model to use and which hyperparameters to tune. AutoML now makes it simple to train high-quality models without manually adjusting hundreds of parameters.

Image credit: NVIDIA
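TAO’s AutoML internals aren’t described in the post. As a generic illustration (an assumption, not TAO’s algorithm), random search is one of the simplest AutoML strategies: sample hyperparameters, score each set, keep the best. The objective below is a hypothetical stand-in for a real training run.

```python
import math
import random

def validation_loss(lr, batch_size):
    """Hypothetical stand-in for 'train a model, return its validation loss'.

    This toy objective is smallest at lr=0.01 and batch_size=32.
    """
    return (math.log10(lr) + 2) ** 2 + ((batch_size - 32) / 32) ** 2

def random_search(objective, trials=200, seed=0):
    """Sample hyperparameters at random and keep the best-scoring set."""
    rng = random.Random(seed)
    best_loss, best_params = float("inf"), None
    for _ in range(trials):
        params = {
            "lr": 10 ** rng.uniform(-4, -1),                 # log-uniform learning rate
            "batch_size": rng.choice([8, 16, 32, 64, 128]),
        }
        loss = objective(**params)
        if loss < best_loss:
            best_loss, best_params = loss, params
    return best_loss, best_params

best_loss, best_params = random_search(validation_loss)
print(best_loss, best_params)
```

Production AutoML systems typically use smarter strategies than pure random sampling, but the loop structure (propose, evaluate, keep the best) is the same.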

Run on Your Preferred Cloud

TAO’s cloud-native technology offers the agility, scalability, and portability needed to manage and deploy AI applications more effectively. TAO services can be deployed on virtual machines (VMs) from any leading cloud provider and used with managed Kubernetes services such as Amazon EKS, Google GKE, or Azure AKS. To simplify infrastructure management and scaling, TAO can also be used with cloud machine learning services such as Azure Machine Learning, Google Vertex AI, and Google Colab.

TAO also integrates with a number of cloud-based and third-party MLOps services, giving developers and businesses an optimised AI workflow. With the W&B or ClearML platform, developers can now monitor and manage their TAO experiments and models.

Inference Performance

Achieve maximum inference performance on every platform, from the cloud with NVIDIA Ampere architecture GPUs to the edge with NVIDIA Jetson solutions.

MediaTek powers more than two billion connected devices every year. Its edge silicon portfolio is designed to run edge AI applications as efficiently as possible while maximising their performance, and it incorporates MediaTek’s advanced multimedia and connectivity technologies. With chipsets for the premium, mid-range, and entry tiers, MediaTek’s edge inference silicon roadmap enables marketers to deliver compelling AI experiences on devices across different price points.

With the NeuroPilot SDK’s integration with NVIDIA TAO, which offers an intuitive UI and performance optimisation features, developers can advance vision AI on MediaTek devices of every size. NVIDIA TAO also unlocks customised vision AI capabilities for a variety of solutions and use cases with more than 100 ready-to-use pretrained models. It speeds up fine-tuning of AI models and reduces development time and complexity, even for developers without in-depth AI knowledge.

CK Wang, General Manager of MediaTek’s IoT business unit, stated, “Integrating NVIDIA TAO with MediaTek NeuroPilot will further broaden NVIDIA TAO aim of democratising access to AI, helping generate a new wave of AI-powered devices and experiences.” “With MediaTek’s Genio product line and these increased resources, it’s easier than ever for developers to design cutting-edge Edge AI products that differentiate themselves from the competition.”

The Vice President of Robotics and Edge Computing at NVIDIA, Deepu Talla, stated that “generative AI is turbocharging computer vision with higher accuracy for AIoT and edge applications.” “Billions of IoT devices will have access to the newest and best vision AI models thanks to the combination of NVIDIA TAO and MediaTek NeuroPilot SDK.”

Read more on Govindhtech.com

#NVIDIATAO#mediatek#AIapplications#AIframework#AIhardware#smartphones#AImodels#pytorch#VertexAI#MachineLearning#nvidia#IoT#news#technews#technology#technologynews#technologytrends#govindhtech

Text

Arduino Nano 33 BLE Sense:

Overview:

The Arduino Nano 33 BLE Sense is a small development board with a Cortex-M4 microcontroller, motion sensors, a microphone, and BLE, and it is fully supported by Edge Impulse. You can sample raw data, build models, and deploy trained machine learning models directly from Edge Impulse Studio.

Description:

“Explore the endless possibilities of Arduino Nano 33 BLE Sense on Blogsintra. Discover tutorials, projects, and insights into harnessing the power of this innovative board for your IoT, wearable, and sensor-based creations. Start your journey into the world of Arduino innovation today!”

Overview:

Nano 33 BLE Sense (no header) is Arduino’s 3.3V AI-compatible board in the smallest form factor available: 45 x 18 mm!

The Arduino Nano 33 BLE Sense is a brand-new board with a familiar form factor. It has a number of integrated sensors:

9-axis inertial sensor: makes this board ideal for portable devices.

Humidity and temperature sensor: for high-precision measurements of environmental conditions.

Barometric sensor: lets you build a simple weather station.

Microphone: to capture and analyze sound in real time.

Gesture, proximity, light color, and light intensity sensor: estimates the brightness of the room, and also whether someone is approaching the board.

Arduino Nano 33 BLE Sense processor:

The Arduino Nano 33 BLE Sense is a further development of the traditional Arduino Nano, but with a much more powerful processor: the nRF52840 from Nordic Semiconductor, a 32-bit ARM® Cortex®-M4 CPU running at 64 MHz. This lets you write larger programs than on the Arduino Uno (it has 1 MB of program memory, 32 times more) and use many more variables (its RAM is 128 times larger). The main processor also has other useful features, such as Bluetooth® pairing via NFC and ultra-low-power modes.
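The “32 times” and “128 times” figures can be checked with simple arithmetic. The sketch assumes the Arduino Uno’s ATmega328P has 32 KB of flash and 2 KB of SRAM and that the nRF52840 has 256 KB of RAM; the post itself only states the 1 MB of program memory.

```python
UNO_FLASH_KB, UNO_SRAM_KB = 32, 2         # ATmega328P (Arduino Uno), assumed specs
NRF_FLASH_KB, NRF_RAM_KB = 1024, 256      # nRF52840 (Nano 33 BLE Sense), 1 MB flash

flash_ratio = NRF_FLASH_KB // UNO_FLASH_KB
ram_ratio = NRF_RAM_KB // UNO_SRAM_KB
print(f"flash: {flash_ratio}x, RAM: {ram_ratio}x")  # prints "flash: 32x, RAM: 128x"
```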

Built-in artificial intelligence:

The main feature of this board, apart from its impressive array of sensors, is the ability to run edge AI applications on it using TinyML. You can build machine learning models with TensorFlow™ Lite and upload them to your board using the Arduino IDE.
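In the usual TensorFlow Lite for Microcontrollers workflow, the converted `.tflite` model is compiled into the sketch as a C byte array, commonly generated with `xxd -i`. A minimal pure-Python equivalent is sketched below, assuming you already have a `.tflite` file; the names `to_c_array`, `convert_file`, and `g_model` are hypothetical, and the demo bytes are placeholders rather than a real model.

```python
def to_c_array(model_bytes: bytes, var_name: str = "g_model") -> str:
    """Render raw .tflite bytes as C/C++ source, similar to `xxd -i` output."""
    lines = [f"const unsigned char {var_name}[] = {{"]
    for i in range(0, len(model_bytes), 12):            # 12 bytes per line, like xxd
        chunk = model_bytes[i:i + 12]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    lines.append("};")
    lines.append(f"const unsigned int {var_name}_len = {len(model_bytes)};")
    return "\n".join(lines)

def convert_file(tflite_path: str, out_path: str, var_name: str = "g_model") -> None:
    """Read a .tflite file and write the C array to a source file for the sketch."""
    with open(tflite_path, "rb") as f:
        source = to_c_array(f.read(), var_name)
    with open(out_path, "w") as f:
        f.write(source + "\n")

# Demo with placeholder bytes instead of a real model.
print(to_c_array(bytes([0x1c, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4c, 0x33])))
```

The generated file is then added to the Arduino sketch alongside the TensorFlow Lite Micro interpreter code that loads the array.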

Arduino developer Sandeep Mistry and Arduino consultant Dominic Pajak have prepared an introductory AI tutorial on the Nano 33 BLE Sense as well as an advanced guide to color recognition.

An improved Arduino Nano:

If you have used the Arduino Nano in your projects before, the Nano 33 BLE Sense is a pin-compatible alternative. Your code will still work, but remember that the board runs at 3.3V, so you will have to revise your original design if it is not 3.3V compatible. The other main differences from the classic Nano are a better processor, a micro-USB port, and all the sensors listed above.

You can get the board with or without headers, so you can embed the Nano in all sorts of inventions, including wearables. The board has castellated connectors and no components on the B-side, so you can solder it directly onto your own design, minimizing the height of your entire prototype.

Oh, and did we mention the improved price? Thanks to a redesigned production process, the Arduino Nano 33 BLE Sense is truly cost-effective.

#blog#blogs#blogsintra#dean winchester#wei wuxian#pedrostories#pedro pascal#mdzs#greek mythology#outdoors#arduino#arduino nano#software#programming#computer#innovation#technology#circuits#circuit#the amazing digital circus#product design#100 days of productivity#film production in 2024#glitch productions#kristen applebees#advertising#trending#viralpost#viral#viral trends

Text

Hailo-10 M.2 module delivers up to 40 TOPS of AI performance

Hailo has announced a dedicated Hailo-10 module designed for generative AI. This highly energy-efficient accelerator can be installed in a workstation system.

The product comes in the M.2 Key M 2242/2280 form factor with a PCIe 3.0 x4 interface. It contains a Hailo-10H chip and 8 GB of LPDDR4 memory. It is said to be compatible with computers whose CPUs are based on the x86 and Aarch64 (Arm64) architectures. It supports Windows 11 as well as the TensorFlow, TensorFlow Lite, Keras, PyTorch, and ONNX AI frameworks.

According to Hailo, the new product delivers up to 40 TOPS of AI performance, with power consumption under 3.5 W. The AI module is said to handle inference workloads in real time. For example, running the Llama2-7B large language model, it can reach up to 10 tokens per second (TPS). With Stable Diffusion 2.1, a single image can be generated from a text prompt in under 5 seconds.

The Hailo-10 lets you move certain AI workloads from the cloud or data center to the edge. This reduces latency and makes it possible to handle tasks offline. The new product will initially be positioned for PCs and automotive infotainment systems, supporting chatbots, autopilots, personal assistants, and voice-controlled systems.

Hailo-10 samples will ship in the second quarter of 2024. The company’s product range also includes the Hailo-8 accelerator in M.2 format: it delivers up to 26 TOPS with an energy efficiency of 3 TOPS/W.
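The quoted figures allow a quick back-of-the-envelope estimate. Assuming the 40 TOPS peak is reached within the 3.5 W budget (the article gives both only as limits), the Hailo-10’s efficiency is at least about 11.4 TOPS/W, compared with the 3 TOPS/W stated for the Hailo-8; the peak rate of 10 tokens per second corresponds to roughly 100 ms per token.

```python
HAILO10_PEAK_TOPS = 40          # "up to 40 TOPS"
HAILO10_MAX_WATTS = 3.5         # "under 3.5 W"
HAILO8_TOPS_PER_WATT = 3        # stated for the Hailo-8
LLAMA2_PEAK_TOKENS_PER_S = 10   # "up to 10 tokens per second" with Llama2-7B

efficiency_lower_bound = HAILO10_PEAK_TOPS / HAILO10_MAX_WATTS   # TOPS per watt
ms_per_token = 1000 / LLAMA2_PEAK_TOKENS_PER_S                    # at the peak rate

print(f"Hailo-10: >= {efficiency_lower_bound:.1f} TOPS/W "
      f"(Hailo-8: {HAILO8_TOPS_PER_WATT} TOPS/W)")
print(f"Llama2-7B: ~{ms_per_token:.0f} ms per token at the peak rate")
```

Both inputs are best-case vendor figures, so real workloads will likely land below these numbers.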

Text

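Coral AI: an edge AI platform developed by Google

What is Coral AI?

Coral AI is a hardware and software platform for edge AI developed and sold by Google. It is built around a dedicated chip called the Edge TPU, which delivers fast, low-power machine learning inference, and it provides software development kits and APIs based on TensorFlow Lite.

Hardware lineup

Coral AI offers hardware in a variety of forms, covering everything from prototype development to mass production. The Dev Board is a single-board computer with an Edge TPU on which you can develop applications in Python or C++. The USB Accelerator is a USB device that makes it easy to add the Edge TPU’s machine learning capabilities to an existing system. For mass production, there are accelerator modules with M.2 or PCIe connections, as well as Edge…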

Text

Leveraging Machine Learning in Python with TensorFlow 2 and PyTorch

In the vast and ever-evolving landscape of machine learning (ML), Python stands as a beacon for developers and researchers alike, offering an intuitive syntax coupled with a robust ecosystem of libraries and frameworks. Among these, TensorFlow 2 and PyTorch have emerged as frontrunners, each with its unique strengths and community of supporters. This blog post, “Leveraging Machine Learning in Python with TensorFlow 2 and PyTorch,” explores how TensorFlow 2 and PyTorch can be harnessed to drive innovation and efficiency in ML projects, offering a practical guide for practitioners using these powerful tools.

Introduction to TensorFlow 2

Developed by Google, TensorFlow 2 is an open-source library for research and production. It offers an ecosystem of tools, libraries, and community resources that allow developers to build and deploy ML-powered applications. TensorFlow 2 made significant improvements over its predecessor, focusing on simplicity and ease of use. Eager execution, enabled by default, gives developers more intuitive coding and immediate feedback, which is essential for debugging and experimentation.

Key Features of TensorFlow 2

Eager Execution: TensorFlow 2 executes operations immediately, which makes it easier to get started with and to debug, and gives it a more Pythonic feel.

Keras Integration: Tight integration with Keras, a high-level neural networks API, written in Python and capable of running on top of TensorFlow. This simplifies model creation and experimentation.

Distributed Training: TensorFlow 2 supports distributed training strategies out of the box, enabling models to be trained on multiple CPUs, GPUs, or TPUs without significant code changes.

Model Deployment: TensorFlow offers various tools like TensorFlow Serving, TensorFlow Lite, and TensorFlow.js for deploying models across different platforms easily.

Introduction to PyTorch

PyTorch, developed by Facebook's AI Research lab, has rapidly gained popularity for its ease of use, efficiency, and dynamic computation graph that offers flexibility in ML model development. It is particularly favored for academic research and prototyping, where its dynamic nature allows for iterative and exploratory approaches to model design and testing.

Key Features of PyTorch

Dynamic Computation Graphs: PyTorch uses dynamic computation graphs, meaning the graph is built on the fly as operations are performed. This makes it easy to change how your network behaves at runtime with minimal code.

Pythonic Nature: PyTorch is deeply integrated with Python, making it more intuitive for developers who are already familiar with Python.

Extensive Libraries: It has a rich ecosystem of libraries and tools, such as TorchVision for computer vision tasks, making it easier to implement complex models.

Strong Support for CUDA: PyTorch offers seamless CUDA integration, ensuring efficient use of GPUs for training and inference, making it highly scalable and fast.
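To illustrate what a dynamic (“define-by-run”) computation graph means, here is a toy scalar autograd in pure Python, loosely in the spirit of PyTorch’s autograd but not its API: the graph is recorded as operations execute, so ordinary Python control flow can change its shape on every call. The `Value` class and `forward` function are hypothetical.

```python
class Value:
    """A scalar that records how it was computed, so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None   # leaf nodes have nothing to propagate

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))
        def backward():
            self.grad += out.grad
            other.grad += out.grad
        out._backward = backward
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))
        def backward():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad
        out._backward = backward
        return out

    def backward(self):
        # Topologically order the graph that was recorded during the forward pass.
        order, seen = [], set()
        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)
        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            node._backward()

def forward(x, w, use_square):
    # Plain Python control flow decides the graph's shape on each call.
    y = x * w
    if use_square:
        y = y * y
    return y

x, w = Value(3.0), Value(2.0)
out = forward(x, w, use_square=True)   # (x * w) ** 2 = 36
out.backward()
print(out.data, w.grad)                # prints 36.0 36.0 (d/dw = 2 * x * w * x)
```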

Comparing TensorFlow 2 and PyTorch

While both TensorFlow 2 and PyTorch are powerful in their own right, they cater to different preferences and project requirements.

Ease of Use: PyTorch is often praised for its more intuitive and straightforward syntax, making it a favorite among researchers and those new to ML. TensorFlow 2, with its integration of Keras, has significantly closed the gap, offering a much simpler API for model development.

Performance and Scalability: TensorFlow 2 tends to have an edge in deployment and scalability, especially in production environments. Its comprehensive suite of tools for serving models and performing distributed training is more mature.

Community and Support: Both top Python frameworks boast large and active communities. TensorFlow, being older, has a broader range of resources, tutorials, and support. However, PyTorch has seen rapid growth in its community, especially in academic circles, due to its flexibility and ease of use.

Practical Applications

Implementing ML projects with TensorFlow 2 or PyTorch involves several common steps: data preprocessing, model building, training, evaluation, and deployment. Here, we’ll briefly outline how a typical ML project could be approached with both frameworks, focusing on a simple neural network for image classification.

TensorFlow 2 Workflow

Data Preprocessing: Utilize TensorFlow’s tf.data API to load and preprocess your dataset efficiently.

Model Building: Leverage Keras to define your model. You can use a sequential model with convolutional layers for a simple image classifier.

Training: Compile your model with an optimizer, loss function, and metrics. Use the model.fit() method to train it on your data.

Evaluation and Deployment: Evaluate your model’s performance with model.evaluate(). Deploy it using TensorFlow Serving or TensorFlow Lite for mobile devices.
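tf.data builds input pipelines from chained transformations such as `shuffle`, `map`, and `batch`. The generator-based sketch below is a pure-Python analogue of that idea, not TensorFlow code. It includes the fixed-size buffer that `Dataset.shuffle(buffer_size)` uses and keeps the final partial batch, as `batch` does by default; the function names and toy data are hypothetical.

```python
import random

def buffered_shuffle(stream, buffer_size, seed=None):
    """Shuffle a stream with a fixed-size buffer, in the spirit of Dataset.shuffle."""
    rng = random.Random(seed)
    buffer = []
    for item in stream:
        buffer.append(item)
        if len(buffer) >= buffer_size:
            # Swap a randomly chosen element to the end and emit it.
            idx = rng.randrange(len(buffer))
            buffer[idx], buffer[-1] = buffer[-1], buffer[idx]
            yield buffer.pop()
    rng.shuffle(buffer)              # drain what is left, in random order
    yield from buffer

def map_stream(stream, fn):
    """Apply a preprocessing function to every element (cf. Dataset.map)."""
    for item in stream:
        yield fn(item)

def batch_stream(stream, batch_size):
    """Group elements into lists of batch_size; keep the final partial batch."""
    batch = []
    for item in stream:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch

# Toy "pixels" 0..9 normalised to [0, 1], shuffled, then batched.
pipeline = batch_stream(
    map_stream(buffered_shuffle(range(10), buffer_size=4, seed=0), lambda x: x / 9),
    batch_size=4,
)
for batch in pipeline:
    print(batch)
```

Because each stage is a generator, elements flow through one at a time instead of the whole dataset being materialised, which is the same motivation behind streaming pipelines like tf.data.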

PyTorch Workflow

Data Preprocessing: Use torchvision.transforms to preprocess your images. torch.utils.data.DataLoader is handy for batching and shuffling.

Model Building: Define your neural network class by extending torch.nn.Module. Implement the forward method to specify the network's forward pass.

Training: Prepare your loss function and optimizer from torch.nn and torch.optim, respectively. Iterate over your dataset, and use backpropagation to train your model.

Evaluation and Deployment: Evaluate the model on a test set. For deployment, you can export your model using TorchScript or convert it for use with ONNX for cross-platform compatibility.
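The build, train, and evaluate loop described above can be sketched without any framework. The class below mimics the shape of a `torch.nn.Module` subclass (trainable parameters plus a `forward` method) but is plain Python with hand-derived gradients; it illustrates the loop’s structure, not PyTorch code. The model, dataset, and names are hypothetical, and a one-variable linear regression stands in for an image classifier to keep it short.

```python
import random

class LinearModel:
    """Plays the role of an nn.Module subclass: parameters plus forward()."""

    def __init__(self):
        self.w = 0.0
        self.b = 0.0

    def forward(self, x):
        return self.w * x + self.b

def mse_and_grads(model, batch):
    """Mean squared error over a batch and its gradients w.r.t. w and b."""
    n = len(batch)
    loss = dw = db = 0.0
    for x, y in batch:
        err = model.forward(x) - y
        loss += err * err / n
        dw += 2 * err * x / n      # dL/dw, derived by hand (autograd's job in PyTorch)
        db += 2 * err / n          # dL/db
    return loss, dw, db

def train(model, data, epochs=300, lr=0.2, batch_size=4, seed=0):
    rng = random.Random(seed)
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)                                  # shuffle each epoch
        for i in range(0, len(data), batch_size):          # iterate over mini-batches
            _, dw, db = mse_and_grads(model, data[i:i + batch_size])
            model.w -= lr * dw                             # plain SGD update
            model.b -= lr * db

def evaluate(model, data):
    loss, _, _ = mse_and_grads(model, data)
    return loss

# Toy data following y = 2x + 1.
train_set = [(x / 10, 2 * (x / 10) + 1) for x in range(10)]
test_set = [(0.15, 1.3), (0.85, 2.7)]

model = LinearModel()
train(model, train_set)
print(f"w={model.w:.3f}, b={model.b:.3f}, test MSE={evaluate(model, test_set):.2e}")
```

In PyTorch, the hand-written gradient lines would be replaced by `loss.backward()` and the manual updates by an optimizer from `torch.optim`.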

Conclusion

Both TensorFlow 2 and PyTorch offer unique advantages and have their place in the ML ecosystem. TensorFlow 2 stands out for its extensive deployment tools and scalability, making it ideal for production environments. PyTorch, with its dynamic computation graph and intuitive design, excels in research and rapid prototyping.

Your choice between TensorFlow 2 and PyTorch may depend on your specific project needs, your comfort with Python, and the ecosystem you’re most aligned with. Whichever you choose, both frameworks are continuously evolving, driven by their vibrant communities and the shared goal of making ML more accessible and powerful.

In leveraging these frameworks, practitioners are equipped with the tools necessary to push the boundaries of what's possible with ML, driving innovation and creating solutions that were once deemed futuristic. As we continue to explore the potential of ML, TensorFlow 2 and PyTorch will undoubtedly play pivotal roles in shaping the future of technology.

#software development#mobile app development#web development#python#python development company#python programming
