#Supervised Learning Vs Unsupervised Learning



Supervised Learning Vs Unsupervised Learning in Machine Learning

Summary: Supervised learning uses labeled data for predictive tasks, while unsupervised learning explores patterns in unlabeled data. Both methods have unique strengths and applications, making them essential in various machine learning scenarios.

Introduction

Machine learning is a branch of artificial intelligence that focuses on building systems capable of learning from data. In this blog, we explore two fundamental types: supervised learning and unsupervised learning. Understanding the differences between these approaches is crucial for selecting the right method for various applications.

Comparing supervised and unsupervised learning comes down to how each one uses labeled data and which types of problems each one solves. This blog aims to provide a clear comparison, highlight their advantages and disadvantages, and guide you in choosing the appropriate technique for your specific needs.

What is Supervised Learning?

Supervised learning is a machine learning approach where a model is trained on labeled data. In this context, labeled data means that each training example pairs an input with its correct output (the label).

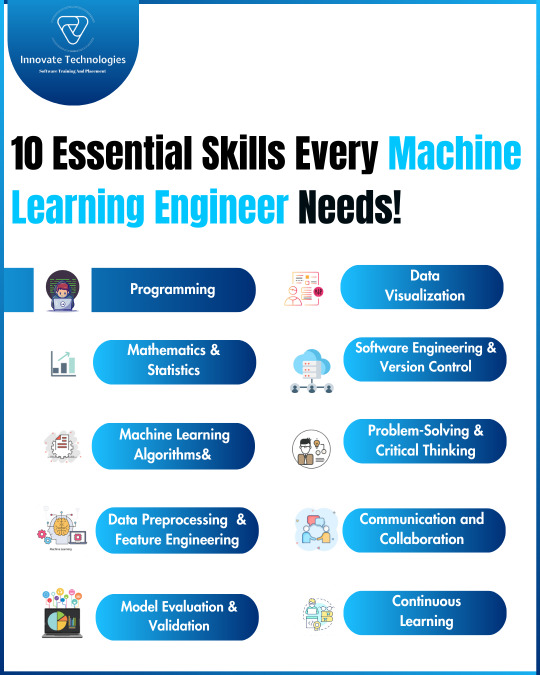

The model learns to map inputs to the correct outputs based on this training. The goal of supervised learning is to enable the model to make accurate predictions or classifications on new, unseen data.

Key Characteristics and Features

Supervised learning has several defining characteristics:

Labeled Data: The model is trained using data that includes both the input features and the corresponding output labels.

Training Process: The algorithm iteratively adjusts its parameters to minimize the difference between its predictions and the actual labels.

Predictive Accuracy: The success of a supervised learning model is measured by its ability to predict the correct label for new, unseen data.
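To make the training process concrete, here is a minimal sketch of batch gradient descent on mean squared error for a one-feature linear model. It uses plain Python rather than a library like Scikit-Learn. The function name `fit_linear`, the toy data, the learning rate, and the epoch count are all illustrative assumptions.

```python
def fit_linear(xs, ys, lr=0.01, epochs=5000):
    """Fit y ≈ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors with the current parameters
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(err^2) with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient to shrink the prediction error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical labeled data generated from y = 3x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 4.0, 7.0, 10.0, 13.0]
w, b = fit_linear(xs, ys)
print(round(w, 2), round(b, 2))
```

Each pass compares predictions with the labels and nudges the parameters to reduce the gap. This is the "iteratively adjusts its parameters" loop in its simplest form.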

Types of Supervised Learning Algorithms

There are two primary types of supervised learning algorithms:

Regression: This type of algorithm is used when the output is a continuous value. For example, predicting house prices based on features like location, size, and age. Common algorithms include linear regression, decision trees, and support vector regression.

Classification: Classification algorithms are used when the output is a discrete label. These algorithms are designed to categorize data into predefined classes. For instance, spam detection in emails, where the output is either "spam" or "not spam." Popular classification algorithms include logistic regression, k-nearest neighbors, and support vector machines.
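As an illustration of classification, the sketch below implements k-nearest neighbors, one of the algorithms named above, for the spam example. The two features per email (number of links, count of the word "free") and the training examples are hypothetical. A real spam filter would use far richer features.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest labeled neighbors."""
    # Sort labeled examples by Euclidean distance to the query point
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    # Count the labels of the k closest examples and return the most common one
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical features per email: (number of links, count of the word "free")
train = [
    ((8, 5), "spam"), ((6, 4), "spam"), ((7, 6), "spam"),
    ((0, 0), "not spam"), ((1, 0), "not spam"), ((0, 1), "not spam"),
]
print(knn_predict(train, (7, 5)))  # spam
print(knn_predict(train, (1, 1)))  # not spam
```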

Examples of Supervised Learning Applications

Supervised learning is widely used in various fields:

Image Recognition: Identifying objects or people in images, such as facial recognition systems.

Natural Language Processing (NLP): Sentiment analysis, where the model classifies the sentiment of text as positive, negative, or neutral.

Medical Diagnosis: Predicting diseases based on patient data, like classifying whether a tumor is malignant or benign.

Supervised learning is essential for tasks that require accurate predictions or classifications, making it a cornerstone of many machine learning applications.

What is Unsupervised Learning?

Unsupervised learning is a type of machine learning where the algorithm learns patterns from unlabelled data. Unlike supervised learning, there is no target or outcome variable to guide the learning process. Instead, the algorithm identifies underlying structures within the data, allowing it to make sense of the data's hidden patterns and relationships without prior knowledge.

Key Characteristics and Features

Unsupervised learning is characterized by its ability to work with unlabelled data, making it valuable in scenarios where labeling data is impractical or expensive. The primary goal is to explore the data and discover patterns, groupings, or associations.

Unsupervised learning can handle a wide variety of data types and is often used for exploratory data analysis. It helps in reducing data dimensionality and improving data visualization, making complex datasets easier to understand and analyze.

Types of Unsupervised Learning Algorithms

Clustering: Clustering algorithms group similar data points together based on their features. Popular clustering techniques include K-means, hierarchical clustering, and DBSCAN. These methods are used to identify natural groupings in data, such as customer segments in marketing.

Association: Association algorithms find rules that describe relationships between variables in large datasets. The most well-known association algorithm is the Apriori algorithm, often used for market basket analysis to discover patterns in consumer purchase behavior.

Dimensionality Reduction: Techniques like Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) represent a dataset with fewer features while retaining as much of its essential structure as possible. PCA is often used to simplify models and reduce computational costs, while t-SNE is mainly used to visualize high-dimensional data in two or three dimensions.
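Clustering is the easiest of these to show in a few lines. Below is a minimal sketch of K-means using Lloyd's algorithm (alternate between assigning points and moving centroids), in plain Python. The customer data and the `kmeans` helper are hypothetical, and library implementations add smarter initialization such as k-means++.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Group points into k clusters with Lloyd's algorithm (the classic K-means loop)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen data points
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of the points assigned to it
        new_centroids = [
            tuple(sum(coords) / len(cluster) for coords in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # nothing moved, so the clustering has converged
        centroids = new_centroids
    return centroids, clusters

# Hypothetical customers described by (visits per month, average spend in $10s)
customers = [(1, 2), (1.5, 1.8), (1, 1), (8, 8), (9, 9), (8.5, 9.5)]
centroids, clusters = kmeans(customers, k=2)
for c in clusters:
    print(c)
```

No labels are involved at any point. The two customer segments emerge only from distances between the feature vectors.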

Examples of Unsupervised Learning Applications

Unsupervised learning is widely used in various fields. In marketing, it segments customers based on purchasing behavior, allowing personalized marketing strategies. In biology, it helps in clustering genes with similar expression patterns, aiding in the understanding of genetic functions.

Additionally, unsupervised learning is used in anomaly detection, where it identifies unusual patterns in data that could indicate fraud or errors.
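A very simple unsupervised way to flag unusual values is a z-score test: mark any value that lies far from the mean, measured in standard deviations. This sketch is a basic statistical baseline, not a specific library's method. The transaction amounts and the 2.5 threshold are assumptions chosen for illustration.

```python
import statistics

def zscore_anomalies(values, threshold=2.5):
    """Return values more than `threshold` standard deviations away from the mean."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)  # population standard deviation
    if stdev == 0:
        return []  # all values identical: nothing stands out
    return [v for v in values if abs(v - mean) / stdev > threshold]

# Hypothetical daily transaction amounts with one unusual spike
amounts = [52, 48, 50, 47, 53, 49, 51, 50, 46, 500]
print(zscore_anomalies(amounts))  # [500]
```

The outlier inflates the standard deviation it is measured against. With only ten points, no value can reach a z-score above 3, which is why this sketch uses 2.5 rather than the more common cutoff of 3.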

This approach's flexibility and exploratory nature make unsupervised learning a powerful tool in data science and machine learning.

Advantages and Disadvantages

Understanding the strengths and weaknesses of both supervised and unsupervised learning is crucial for selecting the right approach for a given task. Each method offers unique benefits and challenges, making them suitable for different types of data and objectives.

Supervised Learning

Pros: Supervised learning can achieve high accuracy on well-defined tasks, and many supervised models are interpretable, making it a preferred choice for many applications. It involves training a model using labeled data, where the desired output is known. This enables the model to learn the mapping from input to output, which is crucial for tasks like classification and regression.

The interpretability of supervised models, especially simpler ones like decision trees, allows for better understanding and trust in the results. Additionally, supervised learning models can be highly efficient, especially when dealing with structured data and clearly defined outcomes.

Cons: One significant drawback of supervised learning is the requirement for labeled data. Gathering and labeling data can be time-consuming and expensive, especially for large datasets.

Moreover, supervised models are prone to overfitting, where the model performs well on training data but fails to generalize to new, unseen data. This occurs when the model becomes too complex and starts learning noise or irrelevant patterns in the training data. Overfitting can lead to poor model performance and reduced predictive accuracy.
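Overfitting is easiest to see with a model that simply memorizes its training set. The hypothetical sketch below uses a 1-nearest-neighbor regressor on noisy samples of y = 2x. The data and noise values are made up, and a real workflow would use a proper train/validation split.

```python
def one_nn_predict(train, x):
    """Return the label of the closest training input: pure memorization."""
    _, nearest_y = min(train, key=lambda pair: abs(pair[0] - x))
    return nearest_y

def mse(train, pairs):
    """Mean squared error of the memorizing model on (x, y) pairs."""
    return sum((one_nn_predict(train, x) - y) ** 2 for x, y in pairs) / len(pairs)

# Hypothetical noisy samples of y = 2x (noise fixed by hand for reproducibility)
train = [(0, 0.5), (1, 1.6), (2, 4.4), (3, 5.7), (4, 8.3)]
held_out = [(0.5, 1.1), (1.5, 2.9), (2.5, 5.2), (3.5, 6.8)]

print(mse(train, train))     # 0.0: every training point is reproduced exactly
print(mse(train, held_out))  # clearly above zero on data the model never saw
```

A perfect training score paired with a noticeably worse held-out score is the gap described above. The model has learned the noise in the training points rather than the underlying trend.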

Unsupervised Learning

Pros: Unsupervised learning does not require labeled data, making it a valuable tool for exploratory data analysis. It is particularly useful in scenarios where the goal is to discover hidden patterns or groupings within data, such as clustering similar items or identifying associations.

This approach can reveal insights that may not be apparent through supervised learning methods. Unsupervised learning is often used in market segmentation, customer profiling, and anomaly detection.

Cons: However, unsupervised results are harder to validate than supervised ones, because there is no ground-truth label to check them against. Evaluating the output is also challenging. Internal measures such as the silhouette score exist, but no single metric tells you whether the discovered groups are actually meaningful.

The lack of labeled data means that interpreting the results requires more effort and domain expertise, making it difficult to assess the effectiveness of the model.
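One of those internal measures, the silhouette score, can be computed without any labels from the data itself. For each point it compares a, the mean distance to its own cluster, with b, the mean distance to the nearest other cluster, as (b - a) / max(a, b). This is a plain-Python sketch that assumes at least two clusters. The points and the two candidate labelings are hypothetical.

```python
import math

def silhouette_score(points, labels):
    """Mean silhouette coefficient: near 1 means tight, well-separated clusters."""
    scores = []
    for i, p in enumerate(points):
        same = [math.dist(p, q) for j, q in enumerate(points) if labels[j] == labels[i] and j != i]
        if not same:
            scores.append(0.0)  # common convention for singleton clusters
            continue
        a = sum(same) / len(same)  # mean distance within the point's own cluster
        # Mean distance to each other cluster; b is the closest of those clusters
        b = min(
            sum(math.dist(p, q) for j, q in enumerate(points) if labels[j] == other)
            / labels.count(other)
            for other in set(labels) if other != labels[i]
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom else 0.0)
    return sum(scores) / len(scores)

points = [(1, 1), (1, 2), (8, 8), (8, 9)]
print(silhouette_score(points, [0, 0, 1, 1]))  # sensible grouping: close to 1
print(silhouette_score(points, [0, 1, 0, 1]))  # mixed-up grouping: negative
```

A high score says the clusters are compact and well separated. It does not say they correspond to anything meaningful in the domain, which is where the expertise mentioned above comes in.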

Frequently Asked Questions

What is the main difference between supervised learning and unsupervised learning?

Supervised learning uses labeled data to train models, allowing them to predict outcomes based on input data. Unsupervised learning, on the other hand, works with unlabeled data to discover patterns and relationships without predefined outputs.

Which is better for clustering tasks: supervised or unsupervised learning?

Unsupervised learning is better suited for clustering tasks because it can identify and group similar data points without predefined labels. Techniques like K-means and hierarchical clustering are commonly used for such purposes.
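For a feel of how hierarchical clustering differs from K-means, here is a minimal sketch of bottom-up (agglomerative) clustering with single linkage. It starts with every point in its own cluster and repeatedly merges the two clusters whose closest members are nearest. The points and the `single_linkage` helper are hypothetical, and this brute-force version is only practical for small datasets.

```python
import math

def single_linkage(points, n_clusters):
    """Agglomerative clustering: repeatedly merge the two closest clusters."""
    clusters = [[p] for p in points]  # every point starts in its own cluster
    while len(clusters) > n_clusters:
        best = None
        # Single linkage: cluster distance = distance between their closest members
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = min(math.dist(p, q) for p in clusters[i] for q in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i].extend(clusters.pop(j))  # j > i, so index i stays valid
    return clusters

groups = single_linkage([(1, 1), (1.2, 1.1), (5, 5), (5.3, 5.3), (9, 1)], n_clusters=3)
print(groups)
```

Stopping at different values of `n_clusters` corresponds to cutting the merge hierarchy (the dendrogram) at different heights.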

Can supervised learning be used for anomaly detection?

Yes, supervised learning can be used for anomaly detection, particularly when labeled data is available. However, unsupervised learning is often preferred in cases where anomalies are not predefined, allowing the model to identify unusual patterns autonomously.

Conclusion

Supervised learning and unsupervised learning are fundamental approaches in machine learning, each with distinct advantages and limitations. Supervised learning excels in predictive accuracy with labeled data, making it ideal for tasks like classification and regression.

Unsupervised learning, meanwhile, uncovers hidden patterns in unlabeled data, offering valuable insights in clustering and association tasks. Choosing the right method depends on the nature of the data and the specific objectives.

#Supervised Learning Vs Unsupervised Learning in Machine Learning#Supervised Learning Vs Unsupervised Learning#Supervised Learning#Unsupervised Learning#Machine Learning#ML#AI#Artificial Intelligence


9 Anime to Watch to Feel Like This 👇🏾

The alternative title/concept for this list was "Anime Featuring The Zaddiests of Daddies" but, you know. Consistency or whatever. When I saw the gif, however, I cackled so loud that I figured it still captured The Vibe ✨ (that being #fatherless). Considering this context, some recommendations are slightly longer than the usual bite-size serving of 12-24 episodes, but you won't regret indulging. Each show is recommended for the plot, which is very evident from the teaser gifs. Happy Father's Day, you degenerates. And remember, you don't have to have kids to be a Daddy. 😈❤️

Spy x Family (series) - There is something so wholesome about the fate of the world as he knows it relying on how convincingly he can portray a Good Father despite his own origin story. Loid Forger, on a mission to maintain peace, creates the perfect family through any means necessary. Doubt he realized that in doing so he'd create a home for himself and the oddballs helping to keep up the ruse.

Sub/Dub | Crunchyroll, Hulu

Buddy Daddies (series) - The lives of hitmen Rei Suwa and Kazuki Kurusu get a bit messier when one of their hits leaves them with a pretty sizable loose end. Regardless of their occupation, their lives come to revolve around a little girl and trying to provide as good a home as two single twenty-something men can. The rest, they'll figure out.

Sub/Dub | Crunchyroll

My Senpai is Annoying (series) - Very capable working woman finds herself kohai to an older, overly chummy colleague who DEFINITELY does not know how to PDF documents unsupervised. This is a show about their day to day interactions. If you're wondering if Takeda is the only contender in this series, hold out for Futaba's grandfather. Just trust me.

Sub/Dub | Crunchyroll

Jujutsu Kaisen (series + movie) - *Gestures vaguely to my blog* This show has plenty of compelling reasons to become obsessed. Trying to train strong child soldiers to protect the balance of humans vs curses so they don’t see a grisly demise is just one of them. Not your average shonen, not your average found families.

Sub/Dub | Crunchyroll, Netflix, Hulu

My Hero Academia (series + movies) - Something about kids needing guidance so as not to die while in the pursuit of some great civic duty really creates an environment for some skrunkly father figures. 😘👌🏾 Never mind that the climax of this story is one that tangles generations of families as society adapts to the advent of super powers.

Sub/Dub | Crunchyroll, Hulu, Netflix

Fire Force (series) - In a world where humans can spontaneously combust, Shinra, who is blamed for the fire that killed his mother and younger brother, seeks to overcome the stigma of his power and joins Fire Force Company 8. In training to fight Infernals, he learns to control his pyrokinesis under the guidance of many talented fighters while trying to understand the world around him and the invisible hand that manipulates everything.

Sub/Dub | Crunchyroll, Hulu

Attack on Titan (series) - Facing unpredictable violence at the vicious jaws of larger-than-life monsters, the last remaining humans seek refuge behind hallowed walls. Until one day the first wall fell, and what once provided security began to feel more like a holding pen ahead of the slaughter. As resources dwindle and the indomitable curiosity of humans persists, a brave minority pushes past the walls that house them, seeking to lift the shroud of mystery over how they became prisoners to titans in the first place.

Sub/Dub | Crunchyroll, Hulu, Sling TV

Chainsaw Man (series) - Human fears strengthen devils which threaten to overrun the world. Enter the Public Safety Devil Hunters responsible for exterminating devils before they become bigger problems and keeping a bead on larger threats, namely, the Gun Devil. The titular character eventually falls under the supervision of Aki Hayakawa (and later Kishibe *swoon*) who has a strong single-dad-who-works-two-jobs-who-loves-his-kids-and-never-stops type vibe.

Sub/Dub | Crunchyroll, Hulu

Blue Exorcist (series + movie) - When your dad is Satan, the bar is literally in hell for the man who steps up to raise you. Even so, LOOK AT THE DRIP. There's a narrow line to walk when trying to overcome your own parentage, and twin brothers Rin and Yukio seek to do so by following in their adoptive father Shiro Fujimoto's footsteps despite obvious adversity. Just remember to skip to Season 2 after episode 17 or Google the proper order to watch.

Sub/Dub | Crunchyroll, Hulu

#neon recs#fatherless behavior#father figures#anime#manga#loid forger#spy x family#rei suwa#kazuki kurusu#buddy daddies#my senpai is annoying#hirumi takeda#jujutsu kaisen#jjk#nanami kento#toji fushiguro#satoru gojo#suguru geto#my hero academia#boku no hero academia#shouta aizawa#fire force#benimaru shinmon#akitaru obi#attack on titan#levi ackerman#chainsaw man#aki hayakawa#kishibe#blue exorcist


Tech AI Certifications vs. Traditional Tech Degrees: What’s Right for You?

In the fast-paced world of technology, the debate between pursuing traditional tech degrees and enrolling in specialized AI certification programs has gained momentum. With advancements in artificial intelligence (AI) driving industry transformation, professionals and students alike face a critical choice: Should they stick to conventional degree paths or opt for focused certifications?

This article explores the advantages and drawbacks of both options, helping you determine which is the best fit for your career goals. We’ll also highlight some standout AI certification programs, including AI+ Tech™ by AI Certs, and compare them to traditional academic degrees.

Traditional Tech Degrees: The Tried-and-Tested Route

The Benefits of Traditional Degrees

Comprehensive Knowledge: Degree programs provide a broad understanding of technology, including programming, databases, and systems architecture.

Networking Opportunities: Universities often facilitate connections with industry professionals, alumni, and peers.

Recognition: Degrees from accredited institutions carry significant weight globally.

Challenges with Degrees

Time-Consuming: Most degree programs take 3–4 years to complete, which may delay entry into the workforce.

High Costs: Tuition fees for tech degrees can run into tens of thousands of dollars.

Lack of Specialization: Many degrees provide a generalist approach, which may not fully prepare students for niche fields like AI.

AI Certifications: The Agile Alternative

Why AI Certifications Are Gaining Popularity

Focused Learning: Certifications target specific skills, such as machine learning, natural language processing, or AI ethics.

Cost-Effective: They are generally more affordable than traditional degrees.

Faster Turnaround: Most certifications can be completed in months, allowing professionals to upskill quickly.

Industry-Relevant Skills: Certification programs often collaborate with industry leaders, ensuring their curriculum aligns with market demands.

Challenges with Certifications

Limited Networking Opportunities: Certification programs don’t typically provide the same networking ecosystem as universities.

Less Comprehensive: While focused, certifications may not cover broader tech concepts.

Some top tech certifications are listed below:

AI+ Data™ by AI Certs

The AI+ Data™ certification by AI Certs is an industry-tailored course that focuses on combining data science foundations with advanced AI concepts. This program equips professionals to handle data-driven projects, interpret data insights, and apply machine learning models effectively, making it ideal for tech professionals who aim to build a data-oriented AI skillset.

Key Learning Areas:

Foundations of Data Science: Covers essential data science concepts, including data cleaning, visualization, and statistical analysis.

Machine Learning Models and Applications: Teaches practical applications of supervised and unsupervised learning, as well as advanced techniques like deep learning.

Real-World Data Storytelling: Helps participants use data storytelling to communicate complex findings, making it relevant for business, analytics, and technical teams.

The AI+ Data™ course provides hands-on experience with industry-standard AI tools and data science methods. It’s a great fit for those looking to strengthen their data handling and AI skills, positioning them well for roles in data science and AI development.

Use the coupon code NEWCOURSE25 to get 25% OFF on AI CERTS’ certifications. Don’t miss out on this limited-time offer!

Google Professional Certificate in Artificial Intelligence

Google’s certification program is ideal for those looking to leverage AI in areas like automation, analytics, and development.

Key Highlights

Comprehensive lessons on AI fundamentals and advanced tools.

Hands-on projects for real-world learning.

Focus on ethical AI and automation.

IBM AI Engineering Professional Certificate

IBM’s offering focuses on developing and deploying AI solutions, making it a great option for aspiring AI engineers.

Key Highlights

Modules on machine learning, deep learning, and AI engineering.

Uses tools like TensorFlow and PyTorch.

Recognized by leading tech companies.

Who Should Choose What?

When to Opt for a Traditional Degree

If you’re just starting out and need a broad understanding of technology.

If you value the campus experience and long-term academic networking.

If your career goals include research or teaching in academia.

When to Choose AI Certifications

If you’re a working professional looking to upskill quickly.

If you want to transition into a specialized role, such as AI engineer or data analyst.

If you’re seeking a cost-effective way to gain industry-recognized skills.

The Future of Tech Education

As industries evolve, the lines between degrees and certifications are blurring. Many professionals are now opting for a hybrid approach — starting with a degree and later augmenting their skills with certifications.

Moreover, companies are increasingly valuing practical experience and specialized knowledge over traditional credentials. Certifications like AI+ Everyone™, Google’s Professional Certificate, and IBM’s AI Engineering Certificate are leading this change.

Conclusion

The choice between traditional tech degrees and AI certifications boils down to your career goals, current skill level, and resources. While degrees offer a comprehensive foundation, certifications provide targeted, industry-relevant skills that can fast-track your career.

For those eager to innovate and stay ahead in the tech world, certifications like AI+ Everyone™ by AI Certs and programs from Google and IBM are excellent starting points. Whether you’re beginning your journey or enhancing your expertise, investing in AI education ensures you remain competitive in a rapidly evolving landscape.


Supervised and Unsupervised Learning

Supervised and Unsupervised Learning are two primary approaches in machine learning, each used for different types of tasks. Here’s a breakdown of their differences:

Definition and Purpose

Supervised Learning: In supervised learning, the model is trained on labeled data, meaning each input is paired with a correct output. The goal is to learn the mapping between inputs and outputs so that the model can predict the output for new, unseen inputs. Example: Predicting house prices based on features like size, location, and number of bedrooms (where historical prices are known).

Unsupervised Learning: In unsupervised learning, the model is given data without labeled responses. Instead, it tries to find patterns or structure in the data. The goal is often to explore data, find groups (clustering), or detect outliers. Example: Grouping customers into segments based on purchasing behavior without predefined categories.

Types of Problems Addressed

Supervised Learning:

Classification: Categorizing data into classes (e.g., spam vs. not spam in emails).

Regression: Predicting continuous values (e.g., stock prices or temperature).

Unsupervised Learning:

Clustering: Grouping similar data points (e.g., market segmentation).

Association: Finding associations or relationships between variables (e.g., market basket analysis in retail).

Dimensionality Reduction: Reducing the number of features while retaining essential information (e.g., principal component analysis for visualizing data in 2D).
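As a concrete look at dimensionality reduction, the sketch below projects 2-D rows onto their first principal component, going from two features to one. It centers the data, builds the covariance matrix, and finds the top eigenvector by power iteration. Power iteration is a swapped-in simplification, since libraries typically use an SVD. The data rows and the `pca_first_component` helper are hypothetical.

```python
def pca_first_component(rows, iterations=200):
    """Project rows onto their first principal component (direction of greatest variance)."""
    n, d = len(rows), len(rows[0])
    # Center each feature at zero mean
    means = [sum(r[k] for r in rows) / n for k in range(d)]
    centered = [[r[k] - means[k] for k in range(d)] for r in rows]
    # Sample covariance matrix (d x d)
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)] for a in range(d)]
    # Power iteration converges to the eigenvector with the largest eigenvalue
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    # One coordinate per row: the reduced representation
    projected = [sum(r[k] * v[k] for k in range(d)) for r in centered]
    return v, projected

# Hypothetical data lying roughly along the diagonal y = x
rows = [(1, 1.1), (2, 1.9), (3, 3.2), (4, 3.9), (5, 5.1)]
v, projected = pca_first_component(rows)
print(v)          # roughly equal components: the diagonal direction
print(projected)  # one number per row instead of two
```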

Example Algorithms

Supervised Learning Algorithms: Linear Regression, Logistic Regression, Decision Trees and Random Forests, Support Vector Machines (SVM), Neural Networks (when trained with labeled data)

Unsupervised Learning Algorithms: K-Means Clustering, Hierarchical Clustering, Principal Component Analysis (PCA), Association Rule Mining (like the Apriori algorithm)
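To show what association rule mining looks like in practice, here is a compact sketch of the Apriori idea. It finds frequent itemsets level by level, pruning any candidate that has an infrequent subset, and then computes a rule's confidence as support(A ∪ B) / support(A). The baskets and the 0.4 minimum support are hypothetical, and production implementations are considerably more optimized.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return {itemset: support} for itemsets found in at least `min_support` of baskets."""
    baskets = [frozenset(t) for t in transactions]
    n = len(baskets)

    def support(itemset):
        return sum(itemset <= b for b in baskets) / n

    # Level 1: frequent single items
    items = {item for b in baskets for item in b}
    current = {frozenset([i]) for i in items if support(frozenset([i])) >= min_support}
    frequent = {s: support(s) for s in current}
    k = 2
    while current:
        # Join step: combine frequent (k-1)-itemsets into k-item candidates
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must itself be frequent
        candidates = {c for c in candidates
                      if all(frozenset(s) in current for s in combinations(c, k - 1))}
        current = {c for c in candidates if support(c) >= min_support}
        frequent.update({s: support(s) for s in current})
        k += 1
    return frequent

transactions = [{"bread", "milk"}, {"bread", "butter"}, {"bread", "milk", "butter"},
                {"milk", "eggs"}, {"bread", "milk"}]
freq = apriori(transactions, min_support=0.4)
confidence = freq[frozenset({"bread", "milk"})] / freq[frozenset({"bread"})]
print(f"bread -> milk: confidence {confidence:.2f}")  # 0.75
```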

Training Data Requirements

Supervised Learning: Requires a labeled dataset, which can be costly and time-consuming to collect and label.

Unsupervised Learning: Works with unlabeled data, which is often more readily available, but the insights are less straightforward without predefined labels.

Evaluation Metrics

Supervised Learning: Can be evaluated with standard metrics like accuracy, precision, recall, F1 score (for classification), and mean squared error (for regression), since we have labeled outputs.

Unsupervised Learning: Harder to evaluate directly. Techniques like silhouette score or Davies–Bouldin index (for clustering) are used, or qualitative analysis may be required.
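The supervised metrics are simple to compute once labels are available. Here is a plain-Python sketch of precision, recall, and F1 for a binary classifier, plus mean squared error for regression. The example labels and predictions are made up.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Precision, recall, and F1 score for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false alarms
    fn = sum(t == positive and p != positive for t, p in pairs)  # misses
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def mean_squared_error(y_true, y_pred):
    """Average squared difference between true and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical spam labels (1 = spam) and a classifier's predictions
print(classification_metrics([1, 1, 1, 0, 0, 0, 0, 1], [1, 1, 0, 0, 1, 0, 0, 1]))
# Hypothetical temperatures and a regressor's predictions
print(mean_squared_error([3.0, 5.0, 2.5], [2.5, 5.0, 3.0]))
```

All of these depend on knowing the true label for every example, which is exactly what unsupervised settings lack.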

Use Cases

Supervised Learning: Fraud detection, email classification, medical diagnosis, sales forecasting, and image recognition.

Unsupervised Learning: Customer segmentation, anomaly detection, topic modeling, and data compression.

In summary:

Supervised learning requires labeled data and is primarily used for prediction or classification tasks where the outcome is known. Unsupervised learning doesn’t require labeled data and is mainly used for data exploration, clustering, and finding patterns where the outcome is not predefined.


Top Strategies Every AI Agent Developer Should Know

Introduction

As the field of Artificial Intelligence (AI) continues to evolve, AI agents have become integral in industries such as healthcare, finance, autonomous vehicles, and customer service. For AI agent developers, creating sophisticated, adaptive, and efficient agents is key. In this blog, we'll explore the top strategies every AI agent developer should know to excel in the competitive world of AI development.

1. Master the Fundamentals of Machine Learning (ML)

A deep understanding of machine learning is foundational for AI agents. Since AI agents often rely on learning from data, mastering supervised, unsupervised, and reinforcement learning techniques is critical. This includes understanding neural networks, decision trees, clustering algorithms, and deep learning architectures. Developers should also be familiar with common ML frameworks, such as TensorFlow, PyTorch, and Scikit-Learn, to efficiently build and test their agents.

2. Design for Flexibility and Adaptability

AI agents must be adaptable to changing environments. This requires designing systems that can learn from new data, adjust behaviors, and improve performance over time. Incorporating techniques like online learning and continuous model training is essential for ensuring that the agent can adapt in real-world scenarios. Furthermore, developers should focus on building flexible architectures that can handle a variety of tasks, as AI agents often need to switch between different objectives.
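Online learning means updating a model one example at a time as data arrives, rather than retraining from scratch. The sketch below applies stochastic gradient descent to a linear model on a simulated stream whose underlying relationship changes halfway through. The `OnlineLinearModel` class, the learning rate, and the drifting stream are hypothetical illustrations, not a specific framework's API.

```python
class OnlineLinearModel:
    """Linear model y ≈ w·x + b, updated one example at a time with SGD."""

    def __init__(self, n_features, lr=0.05):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def update(self, x, y):
        # Gradient of the squared error for this single example
        error = self.predict(x) - y
        self.w = [wi - self.lr * 2 * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * 2 * error

# Simulated stream whose true slope drifts from 2.0 to -1.0 halfway through
model = OnlineLinearModel(n_features=1)
for step in range(2000):
    x = (step % 10) / 10  # inputs cycle through 0.0 .. 0.9
    slope = 2.0 if step < 1000 else -1.0
    model.update([x], slope * x)
print(model.w, model.b)  # close to the new slope of -1.0 and intercept 0.0
```

Because every example nudges the weights, the model tracks the drift without a separate retraining job. The trade-off is sensitivity to noisy or adversarial inputs.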

3. Prioritize Explainability and Transparency

In many applications, especially in high-stakes domains like healthcare or finance, the decisions made by AI agents need to be transparent and explainable. Developers should consider building models that are interpretable, ensuring that stakeholders can understand how and why certain decisions are made. Techniques such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) are useful for providing insights into complex models, improving trust and accountability.
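SHAP is built on Shapley values from game theory. For a tiny model they can be computed exactly by averaging each feature's marginal contribution over every subset of the other features, with "absent" features set to baseline values. The sketch below does exactly that in plain Python. It is not the `shap` library's API, and the credit-scoring model, input, and zero baseline are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley value per feature; absent features take their baseline value."""
    n = len(x)

    def value(subset):
        # Model output when only features in `subset` use their real values
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                # Shapley weight: |S|! * (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis

# Hypothetical credit-scoring model with an income x years interaction term
def model(z):
    income, debt, years = z
    return 0.5 * income - 0.8 * debt + 0.1 * income * years

x, baseline = [4.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phis = shapley_values(model, x, baseline)
print([round(p, 3) for p in phis])
print(round(sum(phis), 3), round(model(x) - model(baseline), 3))
```

The contributions add up to the difference between the prediction and the baseline prediction. This is the Shapley efficiency property, which SHAP calls local accuracy. Exact enumeration grows exponentially with the number of features, which is why practical tools approximate it.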

4. Leverage Reinforcement Learning (RL)

Reinforcement Learning is a powerful method for developing AI agents capable of learning through interaction with their environment. By employing RL, developers can create agents that continuously improve their performance based on feedback from the environment. It’s especially useful for tasks like game playing, robotics, and autonomous systems. Understanding concepts like reward signals, exploration vs. exploitation, and Markov Decision Processes (MDPs) will allow developers to create agents that can perform in dynamic, real-world scenarios.
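To tie together reward signals, exploration vs. exploitation, and MDPs, here is a minimal tabular Q-learning sketch on a toy five-state chain. The agent starts at the left end and earns a reward only on reaching the right end. The environment, hyperparameters, and function names are assumptions for illustration, and Q-learning is just one of many RL algorithms.

```python
import random

N_STATES = 5        # states 0..4; reaching state 4 ends the episode with reward 1
ACTIONS = (-1, +1)  # move left or right along the chain

def step(state, action):
    """Toy MDP dynamics: reward 1.0 only on reaching the right end."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward, nxt == N_STATES - 1

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore: random action
            else:
                # Exploit: best known action, breaking ties at random
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            nxt, reward, done = step(state, action)
            # Bootstrap from the best next action unless the episode has ended
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # learned action in each non-terminal state
```

The discount factor `gamma` makes nearer rewards worth more, so the learned policy heads straight for the goal. `epsilon` controls how often the agent tries something other than its current best guess.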

5. Utilize Multi-Agent Systems

AI agents don’t always operate in isolation. Many complex systems require collaboration between multiple agents. Multi-agent systems (MAS) are designed to simulate such environments, where agents must cooperate, negotiate, or even compete with each other. For developers, designing agents that can work within a multi-agent system requires understanding concepts such as coordination, communication protocols, and conflict resolution. This is particularly important for AI agents working in team-based environments, such as in simulation or game theory.

6. Focus on Data Quality and Ethics

The quality of data fed to AI agents significantly impacts their performance. It’s vital to ensure that the data is clean, diverse, and free from biases. Developers should also integrate ethical considerations into the design of AI agents. This includes avoiding biased decision-making, ensuring fairness, and addressing potential issues around data privacy. Ethical AI practices not only improve performance but also help mitigate risks associated with the deployment of AI systems.

7. Optimize for Efficiency and Scalability

AI agents must be able to operate efficiently in real-time applications. This involves optimizing algorithms to reduce computational costs, storage requirements, and memory usage. It’s also essential to design scalable systems that can handle large datasets or support numerous concurrent users without compromising performance. Developers should explore techniques such as model compression, distributed computing, and parallel processing to achieve high scalability and efficiency.
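One common model-compression technique is quantization: storing weights as 8-bit integers plus a scale factor instead of 32-bit floats. The sketch below shows symmetric int8 quantization and dequantization in plain Python. It illustrates the arithmetic only, since Python lists do not actually save memory. The weights and helper names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric 8-bit quantization: int codes in [-127, 127] plus one float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]

weights = [0.42, -1.27, 0.05, 0.9, -0.33]  # hypothetical layer weights
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(codes)
print(max_error <= scale / 2)  # rounding error stays within half a quantization step
```

An int8 value takes a quarter of the space of a float32, at the cost of a small, bounded rounding error per weight.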

Conclusion

AI agent development is a complex and multi-faceted field that demands a deep understanding of machine learning, system design, and ethical considerations. By mastering these strategies—flexibility, explainability, reinforcement learning, multi-agent systems, data ethics, and efficiency—developers can create AI agents that are not only powerful but also reliable, transparent, and adaptable to evolving real-world environments. These strategies form the bedrock for building successful AI systems capable of transforming industries across the globe.


Data Science vs. Machine Learning vs. Artificial Intelligence: What’s the Difference?

In today’s tech-driven world, terms like Data Science, Machine Learning (ML), and Artificial Intelligence (AI) are often used interchangeably. However, each plays a unique role in technology and has a distinct scope and purpose. Understanding these differences is essential to see how each contributes to business and society. Here’s a breakdown of what sets them apart and how they work together.

What is Artificial Intelligence?

Artificial Intelligence (AI) is the broadest concept among the three, referring to machines designed to mimic human intelligence. AI involves systems that can perform tasks usually requiring human intelligence, such as reasoning, problem-solving, and understanding language. AI is often divided into two categories:

Narrow AI: Specialized to perform specific tasks, like virtual assistants (e.g., Siri) and facial recognition software.

General AI: A theoretical form of AI that could understand, learn, and apply intelligence to multiple areas, similar to human intelligence. General AI remains largely a goal for future developments.

Examples of AI Applications:

Chatbots that can answer questions and hold simple conversations.

Self-driving cars using computer vision and decision-making algorithms.

What is Data Science?

Data Science is the discipline of extracting insights from large volumes of data. It involves collecting, processing, and analyzing data to find patterns and insights that drive informed decisions. Data scientists use various techniques from statistics, data engineering, and domain expertise to understand data and predict future trends.

Data Science uses tools like SQL for data handling, Python and R for data analysis, and visualization tools like Tableau. It encompasses a broad scope, including everything from data cleaning and wrangling to modeling and presenting insights.

Examples of Data Science Applications:

E-commerce companies use data science to recommend products based on browsing behavior.

Financial institutions use it for fraud detection and credit scoring.

What is Machine Learning?

Machine Learning (ML) is a subset of AI that enables systems to learn from data and improve their accuracy over time without being explicitly programmed. ML models analyze historical data to make predictions or decisions. Unlike traditional programming, where a programmer provides rules, ML systems create their own rules by learning from data.

ML is classified into different types:

Supervised Learning: Where models learn from labeled data (e.g., predicting house prices based on features like location and size).

Unsupervised Learning: Where models find patterns in unlabeled data (e.g., customer segmentation).

Reinforcement Learning: Where models learn by interacting with their environment, receiving rewards or penalties (e.g., game-playing AI).

Examples of Machine Learning Applications:

Email providers use ML to detect and filter spam.

Streaming services use ML to recommend shows and movies based on viewing history.

How Do They Work Together?

While these fields are distinct, they often intersect. For example, data scientists may use machine learning algorithms to build predictive models, which in turn are part of larger AI systems.

To illustrate, consider a fraud detection system in banking:

Data Science helps gather and prepare the data, exploring patterns that might indicate fraudulent behavior.

Machine Learning builds and trains the model to recognize patterns and flag potentially fraudulent transactions.

AI integrates this ML model into an automated system that monitors transactions, making real-time decisions without human intervention.
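The three stages of the fraud example can be sketched in Python as below. This is a toy built on stated assumptions. The record layout, the function names `prepare`, `train`, and `monitor`, and the "mean plus three standard deviations" rule are all hypothetical stand-ins. A production system would train a real ML model on many features, not a single per-account threshold.

```python
import statistics


def prepare(raw_rows):
    """Data science step: drop malformed rows and group amounts by account."""
    history = {}
    for row in raw_rows:
        account, amount = row.get("account"), row.get("amount")
        if account is None or not isinstance(amount, (int, float)) or amount < 0:
            continue  # skip incomplete or invalid records
        history.setdefault(account, []).append(float(amount))
    return history


def train(history, k=3.0):
    """Modeling step: learn a per-account limit of mean + k * standard deviation."""
    limits = {}
    for account, amounts in history.items():
        if len(amounts) >= 2:  # a sample standard deviation needs at least two values
            limits[account] = statistics.mean(amounts) + k * statistics.stdev(amounts)
    return limits


def monitor(transaction, limits):
    """Automation step: flag a new transaction that exceeds its account's limit."""
    limit = limits.get(transaction["account"])
    return limit is not None and transaction["amount"] > limit


raw = [
    {"account": "A", "amount": 20}, {"account": "A", "amount": 25},
    {"account": "A", "amount": 22}, {"account": "A", "amount": 18},
    {"account": "A", "amount": None},  # malformed, dropped by prepare()
]
limits = train(prepare(raw))
print(monitor({"account": "A", "amount": 500}, limits))  # True
print(monitor({"account": "A", "amount": 24}, limits))   # False
```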

Conclusion

Data Science, Machine Learning, and Artificial Intelligence are closely related but have unique roles. Data Science is the broad field of analyzing data for insights. Machine Learning, a branch of AI, focuses on algorithms that learn from data. AI, the most comprehensive concept, involves creating systems that exhibit intelligent behavior. Together, they are transforming industries, powering applications from recommendation systems to autonomous vehicles, and pushing the boundaries of what technology can achieve.

Artificial Intelligence Course in Nagercoil

Dive into the Future with Jclicksolutions’ Artificial Intelligence Course in Nagercoil

Artificial Intelligence (AI) is rapidly transforming industries, opening up exciting opportunities for anyone eager to explore its vast potential. From automating processes to enhancing decision-making, AI technologies are changing how we live and work. If you're looking to gain a foothold in this field, the Artificial Intelligence course at Jclicksolutions in Nagercoil is the ideal starting point. This course is carefully designed to cater to both beginners and those with a tech background, offering comprehensive training in the concepts, tools, and applications of AI.

Why Study Artificial Intelligence?

AI is no longer a concept of the distant future. It is actively shaping industries such as healthcare, finance, automotive, retail, and more. Skills in AI can lead to careers in data science, machine learning engineering, robotics, and even roles as AI strategists and consultants. By learning AI, you’ll be joining one of the most dynamic and impactful fields, making you a highly valuable asset in the job market.

Course Overview at Jclicksolutions

The Artificial Intelligence course at Jclicksolutions covers foundational principles as well as advanced concepts, providing a balanced learning experience. It is designed to ensure that students not only understand the theoretical aspects of AI but also gain hands-on experience with its practical applications. The curriculum includes modules on machine learning, data analysis, natural language processing, computer vision, and neural networks.

1. Comprehensive and Structured Curriculum

The course covers every critical aspect of AI, starting from the basics and gradually moving into advanced topics. Students begin by learning fundamental concepts like data pre-processing, statistical analysis, and supervised vs. unsupervised learning. As they progress, they delve deeper into algorithms, decision trees, clustering, and neural networks. The course also includes a segment on deep learning, enabling students to explore areas like computer vision and natural language processing, which are essential for applications in image recognition and AI-driven communication.

2. Hands-On Learning with Real-World Projects

One of the standout features of the Jclicksolutions AI course is its emphasis on hands-on learning. Rather than just focusing on theoretical knowledge, the course is structured around real-world projects that allow students to apply what they’ve learned. For example, students might work on projects that involve creating machine learning models, analyzing large datasets, or designing AI applications for specific business problems. By working on these projects, students gain practical experience, making them job-ready upon course completion.

3. Experienced Instructors and Personalized Guidance

The instructors at Jclicksolutions are industry experts with years of experience in AI and machine learning. They provide invaluable insights, sharing real-life case studies and offering guidance based on current industry practices. With small class sizes, each student receives personalized attention, ensuring they understand complex topics and receive the support needed to build confidence in their skills.

4. Cutting-Edge Tools and Software

The AI course at Jclicksolutions also familiarizes students with the latest tools and platforms used in the industry, including Python, TensorFlow, and Keras. These tools are essential for building, training, and deploying AI models. Students learn how to use Jupyter notebooks for coding, experiment with datasets, and create data visualizations that reveal trends and patterns. By the end of the course, students are proficient in these tools, positioning them well for AI-related roles.

Career Opportunities after Completing the AI Course

With the knowledge gained from this AI course, students can pursue various roles in the tech industry. AI professionals are in demand across sectors such as healthcare, finance, retail, and technology. Here are some career paths open to graduates of the Jclicksolutions AI course:

Machine Learning Engineer: Design and develop machine learning systems and algorithms.

Data Scientist: Extract meaningful insights from data and help drive data-driven decision-making.

AI Consultant: Advise businesses on implementing AI strategies and solutions.

Natural Language Processing Specialist: Work on projects involving human-computer interaction, such as chatbots and voice recognition systems.

Computer Vision Engineer: Focus on image and video analysis for industries like automotive and healthcare.

To support students in their career journey, Jclicksolutions also offers assistance in building portfolios, resumes, and interview preparation, helping students transition from learning to employment.

Why Choose Jclicksolutions?

Located in Nagercoil, Jclicksolutions is known for its commitment to delivering high-quality tech education. The institute stands out for its strong focus on practical training, a supportive learning environment, and a curriculum that aligns with industry standards. Students benefit from a collaborative atmosphere, networking opportunities, and mentorship that goes beyond the classroom. This hands-on, project-based approach makes Jclicksolutions an excellent choice for those looking to make a mark in AI.

Enroll Today and Join the AI Revolution

AI is a transformative field that is reshaping industries and creating new opportunities. By enrolling in the Artificial Intelligence course at Jclicksolutions in Nagercoil, you’re setting yourself up for a promising future in tech. This course is more than just an educational program; it's a gateway to a career filled with innovation and possibilities. Whether you’re a beginner or an experienced professional looking to upskill, Jclicksolutions offers the resources, knowledge, and support to help you succeed.

Unlocking Insights: A Comprehensive Guide to Data Science

Introduction

Overview of Data Science: Define data science and its importance in today’s data-driven world. Explain how it combines statistics, computer science, and domain expertise to extract meaningful insights from data.

Purpose of the Guide: Outline what readers can expect to learn, including key concepts, tools, and applications of data science.

Chapter 1: The Foundations of Data Science

What is Data Science?: Delve into the definition and scope of data science.

Key Concepts: Introduce core concepts like big data, data mining, and machine learning.

The Data Science Lifecycle: Describe the stages of a data science project, from data collection to deployment.

Chapter 2: Data Collection and Preparation

Data Sources: Discuss various sources of data (structured vs. unstructured) and the importance of data quality.

Data Cleaning: Explain techniques for handling missing values, outliers, and inconsistencies.

Data Transformation: Introduce methods for data normalization, encoding categorical variables, and feature selection.
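Here is a minimal Python sketch of three common preparation steps. It fills missing values with the column mean (one of several imputation strategies), rescales numbers to [0, 1] with min-max normalization, and one-hot encodes a categorical column. The sample values are invented. Libraries such as pandas and scikit-learn provide production versions of these operations.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


def min_max_scale(values):
    """Rescale numbers linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: avoid division by zero
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(categories):
    """Encode each category as a 0/1 vector with one position per distinct value."""
    levels = sorted(set(categories))
    return levels, [[1 if c == level else 0 for level in levels] for c in categories]


ages = impute_mean([20, None, 40, 30])
print(ages)                              # [20, 30.0, 40, 30]
print(min_max_scale(ages))               # [0.0, 0.5, 1.0, 0.5]
print(one_hot(["red", "blue", "red"]))   # (['blue', 'red'], [[0, 1], [1, 0], [0, 1]])
```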

Chapter 3: Exploratory Data Analysis (EDA)

Importance of EDA: Highlight the role of EDA in understanding data distributions and relationships.

Visualization Tools: Discuss tools and libraries (e.g., Matplotlib, Seaborn, Tableau) for data visualization.

Statistical Techniques: Introduce basic statistical methods used in EDA, such as correlation analysis and hypothesis testing.
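Correlation analysis usually starts with the Pearson coefficient r. It measures how strongly two variables move together linearly, on a scale from -1 to 1. Below is a minimal from-scratch Python sketch with invented sample values. On Python 3.10 and later, `statistics.correlation` computes the same quantity.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient r, between -1 and 1."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


xs = [1, 2, 3, 4]
print(pearson(xs, [2, 4, 6, 8]))  # 1.0  (perfect positive linear relationship)
print(pearson(xs, [8, 6, 4, 2]))  # -1.0 (perfect negative linear relationship)
```

A strong r only indicates a linear association. Deciding whether it is statistically meaningful is where the hypothesis testing mentioned above comes in.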

Chapter 4: Machine Learning Basics

What is Machine Learning?: Define machine learning and its categories (supervised, unsupervised, reinforcement learning).

Key Algorithms: Provide an overview of popular algorithms, including linear regression, decision trees, clustering, and neural networks.

Model Evaluation: Discuss metrics for evaluating model performance (e.g., accuracy, precision, recall) and techniques like cross-validation.
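Here is a minimal Python sketch of the evaluation metrics named above, plus the index bookkeeping behind k-fold cross-validation. The labels are invented, and folds are taken in order without shuffling to keep things simple. In practice, scikit-learn's `precision_score`, `recall_score`, and `KFold` cover the same ground.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    return tp, fp, fn, tn


def metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many were found
    return accuracy, precision, recall


def k_fold_indices(n, k):
    """Yield (train, test) index lists for k-fold cross-validation (no shuffling)."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, test
        start += size


y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
print(metrics(y_true, y_pred))     # accuracy = 4/6, precision = 2/3, recall = 2/3
print(list(k_fold_indices(5, 2)))  # [([3, 4], [0, 1, 2]), ([0, 1, 2], [3, 4])]
```

Each data point lands in exactly one test fold. Averaging a metric over the k folds therefore gives a less noisy estimate than a single train/test split.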

Chapter 5: Advanced Topics in Data Science

Deep Learning: Introduce deep learning concepts and frameworks (e.g., TensorFlow, PyTorch).

Natural Language Processing (NLP): Discuss the applications of NLP and relevant techniques (e.g., sentiment analysis, topic modeling).

Big Data Technologies: Explore tools and frameworks for handling large datasets (e.g., Hadoop, Spark).

Chapter 6: Applications of Data Science

Industry Use Cases: Highlight how various industries (healthcare, finance, retail) leverage data science for decision-making.

Real-World Projects: Provide examples of successful data science projects and their impact.

Chapter 7: Tools and Technologies for Data Science

Programming Languages: Discuss the significance of Python and R in data science.

Data Science Libraries: Introduce key libraries (e.g., Pandas, NumPy, Scikit-learn) and their functionalities.

Data Visualization Tools: Overview of tools used for creating impactful visualizations.

Chapter 8: The Future of Data Science

Trends and Innovations: Discuss emerging trends such as AI ethics, automated machine learning (AutoML), and edge computing.

Career Pathways: Explore career opportunities in data science, including roles like data analyst, data engineer, and machine learning engineer.

Conclusion

Key Takeaways: Summarize the main points covered in the guide.

Next Steps for Readers: Encourage readers to continue their learning journey, suggest resources (books, online courses, communities), and provide tips for starting their own data science projects.

AI Uncovered: A Comprehensive Guide

Machine Learning (ML)

ML is a subset of AI that focuses on developing algorithms and statistical models that enable machines to learn from data without being explicitly programmed. ML involves training models on data to make predictions, classify objects, or make decisions.

Key characteristics:
- Subset of AI
- Focuses on learning from data
- Involves training models using algorithms and statistical techniques
- Can be supervised, unsupervised, or reinforcement learning

Artificial Intelligence (AI)

AI refers to the broader field of research and development aimed at creating machines that can perform tasks that typically require human intelligence. AI involves a range of techniques, including rule-based systems, decision trees, and optimization methods.

Key characteristics:
- Encompasses various techniques beyond machine learning
- Focuses on solving specific problems or tasks
- Can be rule-based, deterministic, or probabilistic

Generative AI (Gen AI)

Gen AI is a subset of ML that focuses on generating new, synthetic data that resembles existing data. Gen AI models, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), learn to create new data samples by capturing patterns and structures in the training data.

Key characteristics:
- Subset of ML
- Focuses on generating new, synthetic data
- Involves learning patterns and structures in data
- Can be used for data augmentation, synthetic data generation, and creative applications

Distinctions
- AI vs. ML: AI is a broader field that encompasses various techniques, while ML is a specific subset of AI that focuses on learning from data.
- ML vs. Gen AI: ML is a broader field that includes various types of learning, while Gen AI is a specific subset of ML that focuses on generating new, synthetic data.
- AI vs. Gen AI: AI is a broader field that encompasses various techniques, while Gen AI is a specific subset of ML that focuses on generating new data.
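To illustrate the "learn patterns, then generate new samples" idea behind Gen AI at the smallest possible scale, the Python sketch below fits a one-dimensional Gaussian to some made-up height measurements and then samples synthetic values from it. This is a deliberately simple stand-in, not a GAN or VAE. Those use neural networks to learn far more complex distributions, but the two steps (fit to real data, then sample new data) are the same in spirit.

```python
import random
import statistics


def fit_gaussian(samples):
    """'Training': estimate the mean and standard deviation of the real data."""
    return statistics.mean(samples), statistics.stdev(samples)


def generate(mu, sigma, n, seed=0):
    """'Generation': draw new synthetic samples from the fitted distribution."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Hypothetical "real" data: heights in centimeters.
real_heights_cm = [162.0, 170.5, 168.2, 175.1, 159.8, 172.4, 166.9, 169.3]
mu, sigma = fit_gaussian(real_heights_cm)
synthetic = generate(mu, sigma, n=1000)

# The synthetic data should share the real data's statistics without copying any point.
print(round(mu, 1), round(statistics.mean(synthetic), 1))
```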
Example Use Cases
- AI: Virtual assistants (e.g., Siri, Alexa), expert systems, and decision support systems.
- ML: Image classification, natural language processing, recommender systems, and predictive maintenance.
- Gen AI: Data augmentation, synthetic data generation, image and video generation, and creative applications (e.g., art, music).

AI Terms
- ANN (Artificial Neural Network): A computational model inspired by the human brain's neural structure.
- API (Application Programming Interface): A set of rules and protocols that lets software applications communicate with each other.
- Bias: A systematic error or distortion in an AI model's performance.
- Chatbot: A computer program that simulates human-like conversation.
- Computer Vision: The field of AI that enables computers to interpret and understand visual data.
- DL (Deep Learning): A subset of ML that uses neural networks with multiple layers.
- Expert System: A computer program that mimics human decision-making in a specific domain.
- Human-in-the-Loop (HITL): A design approach where humans are involved in AI decision-making.
- Intelligent Agent: A computer program that can perceive, reason, and act autonomously.
- Knowledge Graph: A database that stores relationships between entities.
- NLP (Natural Language Processing): The field of AI that enables computers to understand human language.
- Robotics: The field of AI that deals with the design and development of robots.
- Symbolic AI: A type of AI that uses symbols and rules to represent knowledge.

ML Terms
- Activation Function: A mathematical function used to introduce non-linearity in neural networks.
- Backpropagation: An algorithm that computes how much each weight in a neural network contributed to the error (the gradients), so the weights can be updated during training.
- Batch Normalization: A technique that normalizes the inputs to a layer across each mini-batch to stabilize and speed up training.
- Classification: The process of assigning labels to data points.
- Clustering: The process of grouping similar data points.
- Convolutional Neural Network (CNN): A type of neural network for image processing.
- Data Augmentation: Techniques used to artificially increase the size of a dataset.
- Decision Tree: A tree-like model used for classification and regression.
- Dimensionality Reduction: Techniques used to reduce the number of features in a dataset.
- Ensemble Learning: A method that combines multiple models to improve performance.
- Feature Engineering: The process of selecting and transforming data features.
- Gradient Boosting: A technique that combines many weak models (usually shallow decision trees), training each new model to correct the errors of the previous ones.
- Hyperparameter Tuning: The process of choosing the best hyperparameters, the settings (such as learning rate or tree depth) that are fixed before training rather than learned.
- K-Means Clustering: An unsupervised algorithm that groups data points into k clusters around their centroids.
- Linear Regression: A type of regression analysis that models a linear relationship between input variables and a continuous output.
- Model Selection: The process of choosing the best model for a problem.
- Neural Network: A type of ML model inspired by the human brain.
- Overfitting: When a model fits its training data too closely (often because it is too complex) and performs poorly on new data.
- Precision: The ratio of true positives to the sum of true positives and false positives.
- Random Forest: An ensemble learning algorithm that combines many decision trees.
- Regression: The process of predicting continuous outcomes.
- Regularization: Techniques used to prevent overfitting.
- Supervised Learning: A type of ML where the model is trained on labeled data.
- Support Vector Machine (SVM): A type of supervised learning algorithm.
- Unsupervised Learning: A type of ML where the model is trained on unlabeled data.

Gen AI Terms
- Adversarial Attack: A technique used to manipulate input data to mislead a model.
- Autoencoder: A type of neural network used for dimensionality reduction and generative modeling.
- Conditional Generative Model: A type of Gen AI model that generates data based on conditions.
- Data Imputation: The process of filling missing values in a dataset.
- GAN (Generative Adversarial Network): A type of Gen AI model in which two networks compete: a generator creates samples and a discriminator tries to tell them apart from real data.
- Generative Model: A type of ML model that generates new data samples.
- Latent Space: A lower-dimensional representation of data used in Gen AI models.
- Reconstruction Loss: A measure of the difference between original and reconstructed data.
- VAE (Variational Autoencoder): A type of Gen AI model that generates data through probabilistic encoding.

Other Terms
- Big Data: Large datasets that require specialized processing techniques.
- Cloud Computing: A model of delivering computing services over the internet.
- Data Science: An interdisciplinary field that combines data analysis, ML, and domain expertise.
- DevOps: A set of practices that combines software development and operations.
- Edge AI: The deployment of AI models on edge devices, such as smartphones or smart home devices.
- Explainability: The ability to understand and interpret AI model decisions.
- Fairness: The absence of bias in AI model decisions.
- IoT (Internet of Things): A network of physical devices embedded with sensors and software.
- MLOps: A set of practices that combines ML and DevOps.
- Transfer Learning: A technique used to adapt pre-trained models to new tasks.

This list is not exhaustive, but it covers many common terms and acronyms used in AI, ML, and Gen AI. I hope this helps you learn and navigate the field!

Large Language Models (LLMs)

Overview

LLMs are a type of artificial intelligence (AI) designed to process and generate human-like language. They're a subset of Deep Learning (DL) models, specifically transformer-based neural networks, trained on vast amounts of text data. LLMs aim to understand the structure, syntax, and semantics of language, enabling applications like language translation, text summarization, and chatbots.

Key Characteristics
- Massive Training Data: LLMs are trained on enormous text corpora, often billions of tokens or more, and the largest models themselves have billions of parameters.
- Transformer Architecture: LLMs utilize transformer models, which excel at handling sequential data like text.
- Self-Supervised Learning: LLMs learn from unlabeled text by predicting missing words or the next token.
- Contextual Understanding: LLMs capture context, nuances, and relationships within language.

How LLMs Work
- Tokenization: Text is broken into smaller units (tokens) for processing.
- Embeddings: Tokens are converted into numerical vectors (embeddings).
- Transformer Layers: Self-attention layers turn the embeddings into contextualized representations. In encoder-only models such as BERT, this output is used directly for tasks like classification.
- Decoder: In generative models, a decoder produces output text one token at a time, with each step conditioned on the tokens so far (and, in encoder-decoder models such as T5, on the encoder's output).
- Training: LLMs are pretrained with self-supervised objectives. These are either masked language modeling (predicting hidden tokens, as in BERT) or causal language modeling (predicting the next token, as in GPT-style models).

Types of LLMs
- Autoregressive LLMs (e.g., GPT-2, GPT-3, LLaMA): Generate text one token at a time.
- Masked LLMs (e.g., BERT, RoBERTa, DistilBERT): Predict missing tokens in a sequence.
- Encoder-Decoder LLMs (e.g., T5, BART): Use separate encoder and decoder components.

Applications
- Language Translation: LLMs enable accurate machine translation.
- Text Summarization: LLMs summarize long documents into concise summaries.
- Chatbots: LLMs power conversational AI, responding to user queries.
- Language Generation: LLMs create coherent, context-specific text.
- Question Answering: LLMs answer questions based on context.

Relationship to Other AI Types
- NLP: LLMs are an NLP technique, focusing on language understanding and generation.
- DL: LLMs are a type of DL model, utilizing transformer architectures.
- ML: LLMs are a type of ML model, trained using self-supervised learning.
- Gen AI: LLMs can be used for generative tasks, like text generation.

Popular LLMs
- BERT (Bidirectional Encoder Representations from Transformers)
- RoBERTa (Robustly Optimized BERT Pretraining Approach)
- T5 (Text-to-Text Transfer Transformer)
- BART (Bidirectional and Auto-Regressive Transformers)
- LLaMA (Large Language Model Meta AI)

LLMs have revolutionized NLP and continue to advance the field of AI.
Their applications are vast, and ongoing research aims to improve their performance, efficiency, and interpretability.

Types of Large Language Models (LLMs)

Overview

LLMs are a class of AI models designed to process and generate human-like language. Different types of LLMs cater to various applications, tasks, and requirements.

Key Distinctions

1. Architecture
- Transformer-based: Most LLMs use transformer architectures (e.g., BERT, RoBERTa).
- Recurrent Neural Network (RNN)-based: Earlier language models used RNNs such as LSTMs or GRUs.
- Hybrid: Combining transformer and RNN architectures.

2. Training Objectives
- Masked Language Modeling (MLM): Predicting masked tokens (e.g., BERT).
- Next Sentence Prediction (NSP): Predicting whether one sentence follows another (e.g., BERT).
- Causal Language Modeling (CLM): Predicting the next token (e.g., GPT-style models, Transformer-XL).

3. Model Size
- Small: under 1B parameters (e.g., DistilBERT, BERT, RoBERTa).
- Medium: 1B-5B parameters (e.g., GPT-2 XL at 1.5B).
- Large: 10B-50B parameters (e.g., T5-11B, LLaMA-13B).
- Extra Large: 100B+ parameters (e.g., GPT-3 at 175B).

4. Training Data
- General-purpose: Trained on diverse datasets (e.g., Wikipedia, books).
- Domain-specific: Trained on specialized datasets (e.g., medical, financial).
- Multilingual: Trained on multiple languages.

Notable Models

1. BERT (Bidirectional Encoder Representations from Transformers)
- Architecture: Transformer (encoder-only)
- Training Objective: MLM, NSP
- Model Size: Small (110M-340M parameters)
- Training Data: General-purpose

2. RoBERTa (Robustly Optimized BERT Pretraining Approach)
- Architecture: Transformer (encoder-only)
- Training Objective: MLM
- Model Size: Small (125M-355M parameters)
- Training Data: General-purpose

3. DistilBERT (Distilled BERT)
- Architecture: Transformer (encoder-only)
- Training Objective: MLM, with knowledge distillation from BERT
- Model Size: Small (66M parameters)
- Training Data: General-purpose

4. T5 (Text-to-Text Transfer Transformer)
- Architecture: Transformer (encoder-decoder)
- Training Objective: Span corruption (a denoising objective that masks spans of text and reconstructs them)
- Model Size: Small to Large (60M-11B parameters, depending on the variant)
- Training Data: General-purpose

5. Transformer-XL
- Architecture: Transformer with segment-level recurrence for longer context
- Training Objective: CLM
- Model Size: Small (under 1B parameters in its published configurations)
- Training Data: General-purpose

6. LLaMA (Large Language Model Meta AI)
- Architecture: Transformer (decoder-only)
- Training Objective: CLM
- Model Size: Medium to Large (7B-65B parameters in the first release)
- Training Data: General-purpose

Choosing an LLM

Selection Criteria
- Task Requirements: Consider specific tasks (e.g., sentiment analysis, text generation).
- Model Size: Balance model size with computational resources and latency.
- Training Data: Choose models trained on relevant datasets.
- Language Support: Select models supporting desired languages.
- Computational Resources: Consider model computational requirements.
- Pre-trained Models: Leverage pre-trained models for faster development.

Why Use One Over Another?

Key Considerations
- Performance: Larger models often perform better, but require more resources.
- Efficiency: Smaller models are cheaper and faster to run, but may sacrifice some performance.
- Specialization: Domain-specific models excel in specific tasks.
- Multilingual Support: Choose models supporting multiple languages.
- Development Time: Pre-trained models save development time.

LLMs have revolutionized NLP. Understanding their differences and strengths helps developers choose the best model for their specific applications.

Parameters in Large Language Models (LLMs)

Overview

Parameters are the internal variables of an LLM, learned during training, that define its behavior and performance.

What are Parameters?

Definition

Parameters are the numerical values a model learns, including:
- Weight matrices: Representing connections between neurons.
- Bias terms: Influencing neuron activations.
- Embeddings: Mapping words or tokens to numerical representations.

Types of Parameters

1. Model Parameters
Learned values that determine the model's behavior:
- Weight matrices
- Bias terms
- Embeddings

2. Hyperparameters
Settings chosen before training (not learned) that control the training process:
- Learning rate
- Batch size
- Number of epochs

Parameter Usage

How Parameters are Used
- Forward Pass: Parameters are applied to the input to compute outputs, such as next-token probabilities.
- Backward Pass: During training, the gradient of the loss with respect to each parameter is computed, and the parameters are updated accordingly.
- Inference: The trained parameters are held fixed and used to generate text or predictions.

Parameter Count

Model Size

Parameter count affects:
- Model Complexity: Larger models can capture more nuances.
- Computational Resources: Larger models require more memory and processing power.
- Training Time: Larger models take longer to train.

Common Parameter Counts - Model Sizes
1. Small: under 1B parameters (e.g., DistilBERT, BERT, RoBERTa)
2. Medium: 1B-5B parameters (e.g., GPT-2 XL)
3. Large: 10B-50B parameters (e.g., T5-11B, LLaMA-13B)
4. Extra Large: 100B+ parameters (e.g., GPT-3)

Parameter Efficiency

Optimizing Parameters
- Pruning: Removing redundant parameters.
- Quantization: Reducing parameter precision.
- Knowledge Distillation: Transferring knowledge to smaller models.

Parameter Count vs. Performance
- Overfitting: Too many parameters can lead to overfitting.
- Underfitting: Too few parameters can lead to underfitting.
- Optimal Parameter Count: Balancing complexity and generalization.

Popular LLMs by Parameter Count
1. BERT-large (340M parameters)
2. RoBERTa-large (355M parameters)
3. DistilBERT (66M parameters)
4. T5-base (220M parameters)
5. Transformer-XL (under 1B parameters in its published configurations)

Understanding parameters is crucial for developing and optimizing LLMs. By balancing parameter count, model complexity, and computational resources, developers can create efficient and effective language models.

AI Models Overview

What are AI Models?

AI models are mathematical representations of relationships between inputs and outputs, enabling machines to make predictions, classify data, or generate new information. Models are the core components of AI systems, learned from data through machine learning (ML) or deep learning (DL) algorithms.

Types of AI Models

1. Statistical Models
Simple models using statistical techniques (e.g., linear regression, decision trees) for prediction and classification.

2. Machine Learning (ML) Models
Trained on data to make predictions or classify inputs (e.g., logistic regression, support vector machines).

3. Deep Learning (DL) Models
Complex neural networks for tasks like image recognition, natural language processing (NLP), and speech recognition.

4. Neural Network Models
Inspired by the human brain, using layers of interconnected nodes (neurons) for complex tasks.

5. Graph Models
Representing relationships between objects or entities (e.g., graph neural networks, knowledge graphs).

6. Generative Models
Producing new data samples, like images, text, or music (e.g., GANs, VAEs).

7. Reinforcement Learning (RL) Models
Learning through trial and error, maximizing rewards or minimizing penalties.

Common Use Cases for Different Model Types

1. Regression Models
Predicting continuous values (e.g., stock prices, temperatures)
- Linear Regression
- Decision Trees
- Random Forest

2. Classification Models
Assigning labels to inputs (e.g., spam vs. non-spam emails)
- Logistic Regression
- Support Vector Machines (SVMs)
- Neural Networks

3. Clustering Models
Grouping similar data points (e.g., customer segmentation)
- K-Means
- Hierarchical Clustering
- DBSCAN

4. Dimensionality Reduction Models
Reducing feature space (e.g., image compression)
- PCA (Principal Component Analysis)
- t-SNE (t-Distributed Stochastic Neighbor Embedding)
- Autoencoders

5. Generative Models
Generating new data samples (e.g., image generation)
- GANs (Generative Adversarial Networks)
- VAEs (Variational Autoencoders)

6. NLP Models
Processing and understanding human language
- Language Models (e.g., BERT, RoBERTa)
- Sentiment Analysis
- Text Classification

7. Computer Vision Models
Processing and understanding visual data
- Image Classification
- Object Detection
- Segmentation

Model Selection
- Problem Definition: Identify the problem type (regression, classification, clustering, etc.).
- Data Analysis: Explore data characteristics (size, distribution, features).
- Model Complexity: Balance model complexity with data availability and computational resources.
- Evaluation Metrics: Choose relevant metrics (accuracy, precision, recall, F1-score, etc.).
- Hyperparameter Tuning: Optimize hyperparameters for best performance.

Model Deployment
- Model Serving: Deploy models in production environments.
- Model Monitoring: Track model performance and data drift.
- Model Updating: Re-train or fine-tune models as needed.
- Model Interpretability: Understand model decisions and feature importance.

AI models are the backbone of AI systems. Understanding the different types of models, along with their strengths and weaknesses, is crucial for building effective AI solutions.

Resources Required to Use Different Types of AI

AI Types and Resource Requirements

1. Rule-Based Systems
Simple, deterministic AI requiring minimal resources:
* Computational Power: Low
* Memory: Small
* Data: Minimal
* Expertise: Domain-specific knowledge

2. Machine Learning (ML)
Trained on data, requiring moderate resources:
* Computational Power: Medium
* Memory: Medium
* Data: Moderate (labeled datasets)
* Expertise: ML algorithms, data preprocessing

3. Deep Learning (DL)
Complex neural networks requiring significant resources:
* Computational Power: High
* Memory: Large
* Data: Massive (labeled datasets)
* Expertise: DL architectures, optimization techniques

4. Natural Language Processing (NLP)
Specialized AI for text and speech processing:
* Computational Power: Medium-High
* Memory: Medium-Large
* Data: Large (text corpora)
* Expertise: NLP techniques, linguistics

5. Computer Vision
Specialized AI for image and video processing:
* Computational Power: High
* Memory: Large
* Data: Massive (image datasets)
* Expertise: CV techniques, image processing

Resources Required to Create AI

Read the full article

Advanced Machine Learning Techniques for IoT Sensors

As we explore the realm of advanced machine learning techniques for IoT sensors, it’s clear that the integration of sophisticated algorithms can transform the way we analyze and interpret data. We’ve seen how deep learning and ensemble methods offer powerful tools for pattern recognition and anomaly detection in the massive datasets generated by these devices. But what implications do these advancements hold for real-time monitoring and predictive maintenance? Let’s consider the potential benefits and the challenges that lie ahead in harnessing these technologies effectively.

Overview of Machine Learning in IoT

In today’s interconnected world, machine learning plays a crucial role in optimizing the performance of IoT devices. It enhances our data processing capabilities, allowing us to analyze vast amounts of information in real time. By leveraging machine learning algorithms, we can make informed decisions quickly, which is essential for maintaining operational efficiency.

These techniques also facilitate predictive analytics, helping us anticipate issues before they arise. Moreover, machine learning automates routine tasks, significantly reducing the need for human intervention. This automation streamlines processes and minimizes errors.

As we implement these advanced techniques, we notice that they continue to learn from new data patterns, enabling us to improve our systems over time. Resource optimization is another critical aspect. Model optimization techniques such as quantization and pruning make models smaller and faster, so they run efficiently on lightweight, resource-constrained devices.
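The paragraph above does not name a specific optimization technique. As one hedged illustration, the Python below sketches symmetric 8-bit quantization, which stores each weight as a small integer plus one shared scale factor. Compared with 32-bit floats, this cuts storage roughly fourfold at the cost of a small rounding error. The weights are made up, and real toolchains (for example TensorFlow Lite or PyTorch) have their own, more elaborate schemes.

```python
def quantize_int8(weights):
    """Symmetric linear quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # one float shared by all weights
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Approximately recover the original floats."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.05, 0.9]
q, scale = quantize_int8(weights)
print(q)                     # [42, -127, 5, 90]
print(dequantize(q, scale))  # close to the original weights
```

Because values are rounded to the nearest step, each recovered weight is within half a step (`scale / 2`) of the original. That is usually a small enough loss for inference on constrained IoT hardware.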

Anomaly Detection Techniques

IoT deployments are growing rapidly, and detecting anomalies across these large networks remains a critical challenge. Anomaly detection is an important line of defense against threats such as brute-force attacks, SQL injection, and DDoS attacks. By identifying deviations from expected system behavior, we can improve the security and reliability of IoT environments.

To implement anomaly detection, we use Intrusion Detection Systems (IDS), which can be signature-based, anomaly-based, or based on stateful protocol analysis. Anomaly-based systems need substantial amounts of IoT data to establish profiles of normal behavior, which is where advanced machine learning techniques come into play.
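Anomaly-based detection starts from a profile of normal behavior. The sketch below is a deliberately simple statistical baseline rather than a machine learning IDS: it learns a per-feature mean and standard deviation from traffic assumed to be benign and flags samples whose z-score exceeds a threshold. The feature names (packets per second, average packet size), the sample values, and the threshold of 3 are all illustrative assumptions.

```python
from statistics import mean, stdev

# Hypothetical features per sample: (packets_per_second, avg_packet_size_bytes).
# Made-up traffic that we assume is benign.
normal_traffic = [(100, 500), (110, 520), (95, 480), (105, 510), (98, 495)]


def fit_profile(samples):
    """Learn a (mean, standard deviation) pair for each feature from benign samples."""
    return [(mean(column), stdev(column)) for column in zip(*samples)]


def is_anomalous(sample, profile, threshold=3.0):
    """Flag the sample if any feature lies more than `threshold` std devs from its mean."""
    for value, (mu, sigma) in zip(sample, profile):
        if sigma == 0:
            if value != mu:  # any change in a constant feature counts as a deviation
                return True
        elif abs(value - mu) / sigma > threshold:
            return True
    return False


profile = fit_profile(normal_traffic)
print(is_anomalous((102, 505), profile))  # close to the learned profile
print(is_anomalous((5000, 60), profile))  # flood of tiny packets, DDoS-like
```

ML-based detectors replace the fixed mean/standard-deviation profile with a learned model of normal behavior, but the idea of scoring deviations from a baseline is the same.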

Machine learning (ML) and deep learning (DL) algorithms help us analyze complex relationships in the data and detect anomalies by distinguishing normal from abnormal behavior. Training these algorithms requires comprehensive datasets that reflect real-world conditions.

Datasets like IoT-23, DS2OS, and Bot-IoT provide a foundation for developing effective detection systems. By leveraging these advanced techniques, we can significantly improve our ability to safeguard IoT networks against emerging threats and vulnerabilities.

Supervised vs. Unsupervised Learning

Detecting anomalies in IoT environments often leads us to consider the types of machine learning approaches available, particularly supervised and unsupervised learning.

Supervised learning relies on labeled datasets to train algorithms, allowing us to categorize data or predict numerical outcomes. This method is excellent for tasks like spam detection or credit card fraud identification, where outcomes are well-defined.
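To make the supervised case concrete, here is a minimal nearest-centroid classifier, one of the simplest supervised methods, chosen for brevity rather than taken from the article. It learns one average feature vector per label from labeled examples and assigns each new sample to the closest one. The two features and the "normal"/"attack" labels are made-up illustrative data.

```python
from math import dist


def train_nearest_centroid(features, labels):
    """Average the feature vectors of each labeled class into one centroid per class."""
    sums, counts = {}, {}
    for x, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, value in enumerate(x):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, x):
    """Return the label whose centroid is closest to x (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], x))


# Made-up labeled training data: each row is (feature_1, feature_2) with a known outcome.
X = [[1.0, 0.9], [0.8, 1.1], [5.0, 4.8], [5.2, 5.1]]
y = ["normal", "normal", "attack", "attack"]

centroids = train_nearest_centroid(X, y)
print(predict(centroids, [0.9, 1.0]))
print(predict(centroids, [4.9, 5.0]))
```

The labels are what make this supervised: without `y`, the training function would have nothing to average per class.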

On the other hand, unsupervised learning analyzes unlabeled data to uncover hidden patterns, making it ideal for anomaly detection and customer segmentation. It autonomously identifies relationships in data without needing predefined outcomes, which can be especially useful in real-time monitoring of IoT sensors.
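For the unsupervised side, k-means clustering is a common way to group unlabeled data. The sketch below is a plain-Python illustration, not a production implementation: it groups made-up two-dimensional sensor readings into k clusters without using any labels. The readings and the choice of k=2 are assumptions for the example.

```python
import random
from math import dist


def kmeans(points, k, iterations=20, seed=0):
    """Cluster points into k groups by alternating assignment and centroid updates."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # start from k random points
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        centroids = [
            [sum(axis) / len(cluster) for axis in zip(*cluster)] if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    # Final cluster index for every point, using the last centroids.
    labels = [min(range(k), key=lambda i: dist(p, centroids[i])) for p in points]
    return centroids, labels


# Made-up unlabeled sensor readings forming two loose groups.
readings = [(1.0, 1.1), (0.9, 1.0), (1.2, 0.8), (8.0, 8.2), (7.9, 8.1), (8.3, 7.7)]
centroids, labels = kmeans(readings, k=2)
print(labels)
```

The algorithm discovers the two groups on its own; interpreting what each cluster means (for example, normal versus unusual operation) is still up to us.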

Both approaches have their advantages and disadvantages. Supervised learning can achieve high accuracy on well-defined tasks, but labeling data is time-consuming and often requires domain expertise.

Unsupervised learning can handle vast amounts of data and discover unknown patterns but may yield less transparent results.

Ultimately, our choice between these methods depends on the nature of our data and the specific goals we aim to achieve. Understanding these distinctions helps us implement effective machine learning strategies tailored to our IoT security needs.

Ensemble Methods for IoT Security

Ensemble methods strengthen our approach to IoT security by combining multiple machine learning algorithms to improve predictive performance. They help us cope with the growing complexity of intrusion detection systems (IDS) for interconnected devices. Methods such as voting and stacking merge several models and often achieve better accuracy, precision, and recall than any single learning algorithm.
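Hard (majority) voting is the simpler of the two combination schemes. In this minimal sketch the three "models" are hypothetical threshold rules standing in for trained classifiers, and the feature names and thresholds are invented for illustration. Stacking, which instead trains a meta-model on the base models' predictions, is not shown.

```python
from collections import Counter


# Hypothetical base models: each maps a traffic record to "attack" or "normal".
# In practice these would be trained classifiers (e.g. a decision tree, an SVM, a k-NN model).
def rate_model(record):
    return "attack" if record["packet_rate"] > 500 else "normal"


def login_model(record):
    return "attack" if record["failed_logins"] > 5 else "normal"


def port_model(record):
    return "attack" if record["distinct_ports"] > 20 else "normal"


def majority_vote(models, record):
    """Return the label predicted by most models (use an odd number of models to avoid ties)."""
    votes = Counter(model(record) for model in models)
    return votes.most_common(1)[0][0]


ensemble = [rate_model, login_model, port_model]
record = {"packet_rate": 900, "failed_logins": 1, "distinct_ports": 40}  # made-up record
print(majority_vote(ensemble, record))
```

A single weak rule can be wrong on a given record, but the ensemble only errs when most of its members err together.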

Some recent studies report up to 99% accuracy for ensemble-based anomaly detection and find that ensembles help with imbalanced data. Because accuracy alone can be misleading when attacks are rare, precision, recall, and F1-score give a fuller picture. Robust feature selection methods, such as chi-square analysis, can further improve IDS performance by identifying the features that contribute most to accurate predictions.
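Chi-square feature selection scores each feature by how strongly its values depend on the class label. The sketch below computes the textbook chi-square statistic from a contingency table for one categorical feature; the feature names and values are made up. Libraries such as scikit-learn offer ready-made tooling (`SelectKBest` with the `chi2` scoring function, which expects non-negative feature values).

```python
from collections import Counter


def chi_square(feature, labels):
    """Chi-square statistic for independence between a categorical feature and the labels."""
    n = len(feature)
    observed = Counter(zip(feature, labels))
    feature_totals = Counter(feature)
    label_totals = Counter(labels)
    stat = 0.0
    for f, f_count in feature_totals.items():
        for c, c_count in label_totals.items():
            expected = f_count * c_count / n  # count expected if feature and label were independent
            stat += (observed[(f, c)] - expected) ** 2 / expected
    return stat


labels = [1, 1, 1, 0, 0, 0, 0, 1]  # made-up labels: 1 = attack, 0 = normal
features = {
    "syn_flag_set": [1, 1, 1, 1, 0, 0, 0, 0],  # hypothetical feature related to the label
    "random_bit": [1, 0, 1, 0, 1, 0, 1, 0],  # hypothetical feature unrelated to the label
}
scores = {name: chi_square(values, labels) for name, values in features.items()}
print(sorted(scores, key=scores.get, reverse=True))  # features ranked by score
```

Keeping only the top-scoring features reduces the input size of the IDS models and can remove noise that hurts their predictions.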

The TON-IoT dataset, which includes realistic attack scenarios alongside normal traffic, serves as a benchmark for testing our models. Evaluating on credible datasets like this gives us more confidence that our machine learning approaches will be effective in real-world applications.

As we continue to refine these ensemble techniques, we must address challenges such as long training times and computational cost, so that our IDS remain effective against evolving cyber threats. With these strategies, we can significantly strengthen IoT security and protect our interconnected environments.

Deep Learning Applications in IoT

Beyond ensemble methods, deep learning offers further potential for analyzing complex sensor data.

By leveraging neural networks, we can extract intricate patterns and insights from vast amounts of data generated by IoT devices. This helps us not only in identifying anomalies but also in predicting potential failures before they occur.

Here are some key areas where deep learning excels in IoT:

Anomaly Detection: Recognizing unusual patterns that may indicate security breaches or operational issues.

Predictive Maintenance: Anticipating equipment failures to reduce downtime and maintenance costs.

Image and Video Analysis: Enabling real-time surveillance and monitoring through advanced visual recognition techniques.

Natural Language Processing: Enhancing user interaction with IoT systems through voice commands and chatbots.

Energy Management: Optimizing energy consumption in smart homes and industrial setups, thereby improving sustainability.

Frequently Asked Questions

What Machine Learning (ML) Techniques Are Used in IoT Security?

We use various machine learning techniques for IoT security, including supervised and unsupervised learning, anomaly detection, and ensemble methods. Together, these approaches help us identify threats and improve the overall safety of interconnected devices.

What Are Advanced Machine Learning Techniques?

Advanced machine learning techniques include deep learning, ensemble methods, and anomaly detection algorithms that enhance data analysis, support pattern recognition, and improve predictive accuracy. These methods help us make better decisions and optimize applications across different industries.

How Will Machine Learning Techniques Help IoT-Based Applications?

Machine learning can transform IoT applications by enabling predictive analytics, improving decision-making, and strengthening security. It helps us predict failures and optimize maintenance, identify unusual patterns, streamline operations, and manage resources effectively across sectors. These advances boost efficiency while protecting our interconnected environments from potential threats.

Conclusion

In conclusion, by harnessing advanced machine learning techniques, we’re transforming how IoT sensors process and analyze data. These methods not only improve our ability to detect anomalies but also help us make informed decisions in real time. By combining supervised and unsupervised learning with ensemble and deep learning approaches, we’re paving the way for more efficient and secure IoT systems. Let’s embrace these innovations to unlock the full potential of our connected devices.

Sign up for free courses here.

Visit Zekatix for more information.

LangChain vs. Traditional AI Development: What You Need to Know

Artificial intelligence (AI) has rapidly evolved over the past few decades, revolutionizing industries and transforming the way businesses operate. As organizations seek to harness AI’s full potential, they face a key challenge: choosing the right tools and frameworks for AI development. Traditionally, AI systems have been developed using frameworks like TensorFlow, PyTorch, and others that require developers to build models from scratch or train them on large datasets. However, with the emergence of LangChain, a new approach to AI development has gained momentum, offering a more modular and flexible way to build AI systems.

In this blog, we will explore the differences between LangChain and traditional AI development, highlighting why businesses, including Trantor, should consider incorporating this new framework into their AI strategies.

Traditional AI Development: A Brief Overview

Before diving into LangChain, it's essential to understand the traditional approach to AI development. Historically, AI systems have been built from the ground up using various machine learning (ML) and deep learning (DL) frameworks, each offering unique features. Some of the most popular traditional frameworks include:

TensorFlow: Developed by Google, TensorFlow is one of the most widely used frameworks for building and deploying machine learning models. It offers robust tools for both research and production, supporting deep learning, neural networks, and more.

PyTorch: Developed by Facebook’s AI Research lab (now part of Meta), PyTorch is a popular alternative to TensorFlow, particularly among researchers. Its dynamic computation graphs make it easier to build and debug complex AI models and to experiment interactively.

Scikit-learn: Often used for more traditional machine learning methods, Scikit-learn offers simple and efficient tools for data mining and analysis. It’s well-suited for supervised and unsupervised learning tasks.

Traditional AI development typically involves several steps:

Data Collection and Preprocessing: The first step is gathering relevant data and preparing it for training. This often involves cleaning, normalizing, and transforming data to ensure that it’s in the correct format.

Model Selection: Once the data is prepared, developers select an appropriate model for the task at hand. This could range from simple regression models to more complex neural networks.

Training and Tuning: The selected model is trained on the dataset, and developers fine-tune hyperparameters to achieve optimal performance.

Testing and Validation: The trained model is tested on a separate validation dataset to measure its accuracy and performance.

Deployment and Maintenance: Once the model is deemed accurate, it’s deployed into a production environment, where it’s used to make predictions or automate tasks. Maintenance and updates are needed as the model encounters new data or as business needs evolve.
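The first four steps can be sketched end to end in a few lines of plain Python. This is a toy illustration under our own assumptions (a single made-up feature, a fixed train/validation split, and a least-squares line as the "model"), not how TensorFlow, PyTorch, or Scikit-learn structure their APIs. The normalization statistics are computed on the training split only and then reused, so no information leaks from the validation data.

```python
# Made-up data: one input feature and a numeric target.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [3, 5, 7, 9, 11, 13, 15, 17]

# 1. Preprocessing: hold out the last two points for validation and
#    min-max scale inputs using statistics from the training split only.
train_x, val_x = xs[:6], xs[6:]
train_y, val_y = ys[:6], ys[6:]
lo, span = min(train_x), (max(train_x) - min(train_x)) or 1


def scale(values):
    """Apply the training-set min-max scaling to any list of inputs."""
    return [(v - lo) / span for v in values]


# 2-3. Model selection and training: an ordinary least-squares line y = slope*x + intercept.
def fit_line(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sum((xi - mx) ** 2 for xi in x)
    return slope, my - slope * mx


slope, intercept = fit_line(scale(train_x), train_y)

# 4. Validation: mean squared error on the held-out points.
predictions = [slope * x + intercept for x in scale(val_x)]
mse = sum((p - t) ** 2 for p, t in zip(predictions, val_y)) / len(val_y)
print(f"validation MSE: {mse:.6f}")
```

Real projects would shuffle or stratify the split, tune hyperparameters on a separate validation set, and keep a final test set untouched.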

While traditional AI development is powerful and widely used, it can be resource-intensive and complex, particularly for businesses that lack extensive AI expertise. This is where LangChain comes into play.

What is LangChain?

LangChain is a framework designed to simplify the development of applications powered by large language models (LLMs) by letting developers chain multiple components together. It provides a modular, structured approach to building AI applications, making it easier to integrate and scale AI solutions.

Unlike traditional AI development, which requires developers to build or train models from scratch, LangChain lets developers use pre-trained language models and combine them with other components into end-to-end applications. In essence, LangChain breaks the work of building an AI application into smaller, manageable tasks that can be linked together (or "chained") to create sophisticated systems.
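To illustrate the chaining idea in plain Python, the sketch below composes three small steps (prompt construction, a model call, output parsing) into one callable. It deliberately does not use LangChain's API: `chain`, `build_prompt`, `fake_llm`, and `parse_output` are hypothetical names, and `fake_llm` is a stub that returns canned text instead of calling a real model. LangChain itself expresses this kind of composition with its own components (in its expression language, runnables are joined with the `|` operator).

```python
from functools import reduce
from typing import Any, Callable

Step = Callable[[Any], Any]


def chain(*steps: Step) -> Step:
    """Compose steps left to right: the output of each step becomes the input of the next."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


def build_prompt(question: str) -> str:
    # Hypothetical prompt template.
    return f"Answer briefly: {question}"


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a pre-trained language model; no real model is called.
    return f"[model output for: {prompt}]"


def parse_output(text: str) -> str:
    # Hypothetical parser: strip the stub's surrounding brackets.
    return text.strip("[]")


qa_chain = chain(build_prompt, fake_llm, parse_output)
print(qa_chain("What is LangChain?"))
```

Because each step only agrees on its input and output types, any step can be replaced (for example, a real model client in place of `fake_llm`) without rewriting the others.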

LangChain’s modular approach is particularly beneficial for companies like Trantor, as it reduces the development time and expertise needed to build complex AI systems. Now, let’s compare LangChain with traditional AI development and explore the advantages it offers.

LangChain vs. Traditional AI Development

1. Pre-Trained Models vs. Building from Scratch

One of the most significant differences between LangChain and traditional AI development is how they handle models. In traditional development, businesses often have to build models from scratch or, at the very least, train them on vast datasets. This process can be time-consuming and requires a deep understanding of machine learning techniques, hyperparameter tuning, and neural network architectures.

LangChain, on the other hand, allows developers to leverage pre-trained models, particularly large language models like GPT-4. These pre-trained models already have extensive knowledge, which can be customized and fine-tuned for specific use cases. Instead of spending weeks or months training a model, developers can use LangChain to assemble pre-trained components and create powerful AI applications much faster.

Advantage of LangChain: Faster development with lower complexity. Pre-trained models allow businesses to deploy AI solutions more quickly, without needing to invest in extensive training infrastructure.

2. Modularity and Flexibility

LangChain’s modular design is one of its biggest strengths. Traditional AI development is often monolithic, meaning that each component (data preprocessing, model training, etc.) is tightly coupled. Modifying one aspect of the system often requires significant changes to other components, making it harder to iterate or experiment with new ideas.

In contrast, LangChain breaks down the AI pipeline into independent modules, each responsible for a specific task, such as data transformation, language generation, or external data retrieval. These modules can be independently modified, improved, or replaced without affecting the rest of the system. This flexibility allows developers to experiment with different components and workflows, making it easier to build customized AI solutions.

Advantage of LangChain: Easier to iterate, experiment, and scale. Modularity provides greater flexibility in integrating new features or adapting to changing business needs.
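The benefit of independent modules can be sketched with a shared interface: any component that satisfies it can be swapped in without touching the rest of the pipeline. The retriever classes, the `answer` function, and the documents below are hypothetical stand-ins written for this illustration, not LangChain classes.

```python
from typing import List, Protocol


class Retriever(Protocol):
    """Interface every retrieval module must satisfy (hypothetical)."""

    def retrieve(self, query: str) -> List[str]: ...


class KeywordRetriever:
    """Returns documents that share at least one word with the query."""

    def __init__(self, docs: List[str]) -> None:
        self.docs = docs

    def retrieve(self, query: str) -> List[str]:
        words = set(query.lower().split())
        return [d for d in self.docs if words & set(d.lower().split())]


class FirstNRetriever:
    """A deliberately naive alternative: always returns the first n documents."""

    def __init__(self, docs: List[str], n: int = 1) -> None:
        self.docs, self.n = docs, n

    def retrieve(self, query: str) -> List[str]:
        return self.docs[: self.n]


def answer(query: str, retriever: Retriever) -> str:
    """Downstream step: depends only on the Retriever interface, not on a concrete class."""
    context = retriever.retrieve(query)
    return f"{len(context)} document(s) used to answer: {query}"


docs = ["Sensor firmware update guide", "Gateway network setup", "Sensor calibration steps"]
print(answer("sensor calibration", KeywordRetriever(docs)))
print(answer("sensor calibration", FirstNRetriever(docs)))
```

Swapping `KeywordRetriever` for `FirstNRetriever` changes the behavior of one module while `answer` stays untouched, which is the kind of independence the modular design aims for.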

3. Integration with External Data Sources