#Stuart Russell

Text

Understanding the Risks and Implications of Artificial Intelligence

In the coming years, artificial intelligence (AI) is likely to change the world and people’s lives. However, there is disagreement about how it will do so.

Stuart Russell, a renowned computer science professor and AI expert, participated in an interview with the World Economic Forum. In it, he explained the difference between asking a human to do something and giving that task to an AI system as…

#AI#artificial intelligence#automation#computer science#fulfillment#humans#objectives#risks#society#Stuart Russell#technological unemployment

2 notes

Text

Contra Andreessen on AI risk

Not long ago, Marc Andreessen published an essay titled "Why AI Will Save The World". I have some objections to the section "AI Risk #1: Will AI Kill Us All?", specifically to a pair of paragraphs that contain actual object-level arguments instead of psychologizing:

My view is that the idea that AI will decide to literally kill humanity is a profound category error. AI is not a living being that has been primed by billions of years of evolution to participate in the battle for the survival of the fittest, as animals are, and as we are. It is math – code – computers, built by people, owned by people, used by people, controlled by people. The idea that it will at some point develop a mind of its own and decide that it has motivations that lead it to try to kill us is a superstitious handwave.

In short, AI doesn’t want, it doesn’t have goals, it doesn’t want to kill you, because it’s not alive. And AI is a machine – is not going to come alive any more than your toaster will.

My response (adapted from a Twitter thread), aimed mainly at the claim about goals:

An AI's goals are implicit in its programming. Whether or not a chess-playing AI "wants" things, when deployed it reliably steers the state of the board toward a winning condition.

The fact that the programmer built the AI, and owns and uses it, doesn't change the fact that, given superhuman play on the AI's part, they will lose the chess game.

The programmer controls the chess AI (they can shut it down, modify it, etc., if they want) only because this AI is domain-specific and can't reason about the environment beyond its internal representation of the chessboard.
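To make the "steers the state toward a winning condition" point concrete, here is a toy sketch. It is not from the original thread, and it swaps chess for a much smaller game (a single pile of stones, take 1 to 3 per turn, whoever takes the last stone wins) so that exhaustive search fits in a few lines. The names `can_win`, `best_move`, and `play` are made up for illustration. Nothing in the code "wants" anything, yet from a winning start it beats every fixed opponent strategy tried here.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def can_win(stones: int) -> bool:
    """True if the player about to move can force a win from this pile size."""
    # A position is winning if some legal move leaves the opponent in a losing one.
    # With zero stones there are no legal moves, so the player to move has lost.
    return any(not can_win(stones - take) for take in (1, 2, 3) if take <= stones)


def best_move(stones: int) -> int:
    """Pick a move that leaves the opponent in a losing position, if one exists."""
    for take in (1, 2, 3):
        if take <= stones and not can_win(stones - take):
            return take
    return 1  # no winning move available; take one stone and hope


def play(stones: int, opponent_take: int) -> str:
    """Agent moves first; the opponent always tries to take `opponent_take` stones."""
    agent_to_move = True
    while stones > 0:
        take = best_move(stones) if agent_to_move else min(opponent_take, stones)
        stones -= take
        if stones == 0:
            return "agent" if agent_to_move else "opponent"
        agent_to_move = not agent_to_move
    return "nobody"  # only reached if the game starts with an empty pile


if __name__ == "__main__":
    for t in (1, 2, 3):
        print(f"opponent always takes {t}: winner is {play(10, t)}")
```

The "goal" here is nothing more than the win condition baked into `can_win`. The author of the code can still pull the plug, but only because the program models nothing outside the pile of stones.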

The AI in the video below is not alive, but it sure does things. It's not very smart, but Marc doesn't question the possibility of it being smarter. This AI acts in a virtual environment, but in principle an AI can act in the real world through humans or our infrastructure.

[Embedded YouTube video]

Human-level AI will be capable of operating in many domains and, ultimately, of accomplishing goals in the world, much as humans do. That's the goal of the field of artificial intelligence.

The worry isn't about AI developing (terminal) goals on its own but about it pushing the environment toward states that rank higher in the preference ordering dictated by its programming.

AI researchers have no idea how to reliably aim AIs toward intended goals, and a superintelligent AI will be very competent at modeling reality and planning to achieve its goals. This divergence between what we want and what the AI will competently pursue is a reason for concern. As Stuart Russell put it:

The primary concern is not spooky emergent consciousness but simply the ability to make high-quality decisions. Here, quality refers to the expected outcome utility of actions taken, where the utility function is, presumably, specified by the human designer. Now we have a problem:

1. The utility function may not be perfectly aligned with the values of the human race, which are (at best) very difficult to pin down.

2. Any sufficiently capable intelligent system will prefer to ensure its own continued existence and to acquire physical and computational resources – not for their own sake, but to succeed in its assigned task.

A system that is optimizing a function of n variables, where the objective depends on a subset of size k<n, will often set the remaining unconstrained variables to extreme values; if one of those unconstrained variables is actually something we care about, the solution found may be highly undesirable. This is essentially the old story of the genie in the lamp, or the sorcerer’s apprentice, or King Midas: you get exactly what you ask for, not what you want. A highly capable decision maker – especially one connected through the Internet to all the world’s information and billions of screens and most of our infrastructure – can have an irreversible impact on humanity.
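Russell's point about unconstrained variables can be shown with a deliberately tiny example. This is my own sketch under made-up assumptions, not anything from his text: there are n = 2 variables, `widgets` and `clean_water`, both drawing on one shared budget, and the objective depends only on `widgets` (k = 1). The function names and the budget of 10 are hypothetical.

```python
from itertools import product


def objective(widgets: int) -> int:
    """The designer rewards widget output only; clean_water never appears here."""
    return widgets


def feasible(widgets: int, clean_water: int, budget: int) -> bool:
    """Both quantities draw on the same shared pool of resources."""
    return widgets + clean_water <= budget


def optimize(budget: int = 10) -> tuple[int, int]:
    """Brute-force search over every feasible (widgets, clean_water) pair."""
    candidates = [
        (w, c)
        for w, c in product(range(budget + 1), repeat=2)
        if feasible(w, c, budget)
    ]
    # The score ignores the second variable entirely.
    return max(candidates, key=lambda pair: objective(pair[0]))


if __name__ == "__main__":
    widgets, clean_water = optimize()
    print(f"widgets={widgets}, clean_water={clean_water}")
```

The optimizer has no grudge against clean water. It drives `clean_water` to zero because every unit spent on it is a unit not spent on the only thing the objective counts.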

Why would it kill humans?

1) We would want to stop it once we notice it isn't acting in our interest. An AI needs no survival instinct or evolved emotions to reason that if it is shut down, it will not achieve its goal.

2) We could create another ASI that it would have to compete or share resources with, which would result in a worse outcome for it.

0 notes

Text

Gorillaz in my clothes❗️❗️❗️

I love dressing them up

#gorillaz#gorillaz fanart#murdoc niccals#murdoc gorillaz#2d#2d gorillaz#stuart pot#russel hobbs#russel gorillaz#noodle#noodle guitarist from gorillaz#noodle gorillaz#superfreak’s art#superfreak’s stuff

538 notes

Text

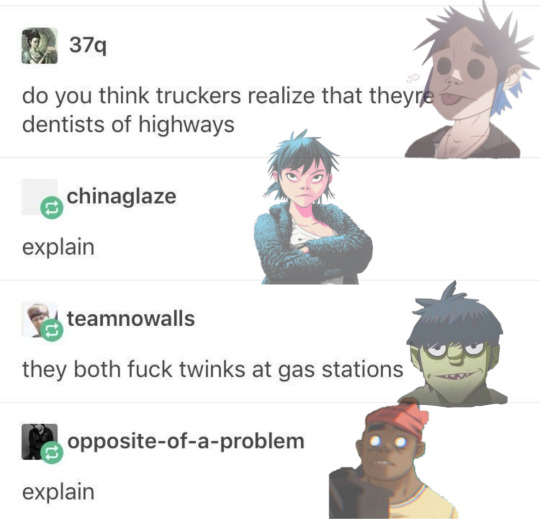

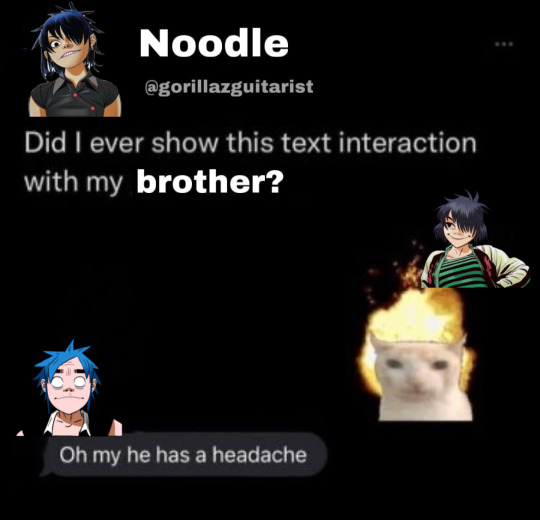

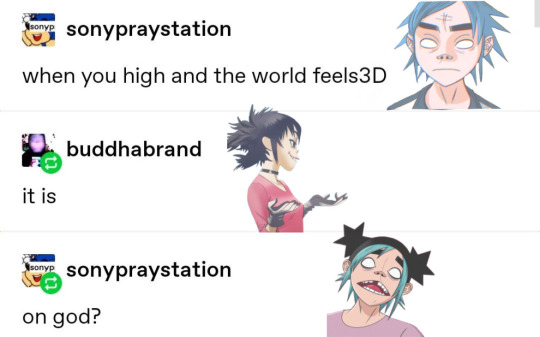

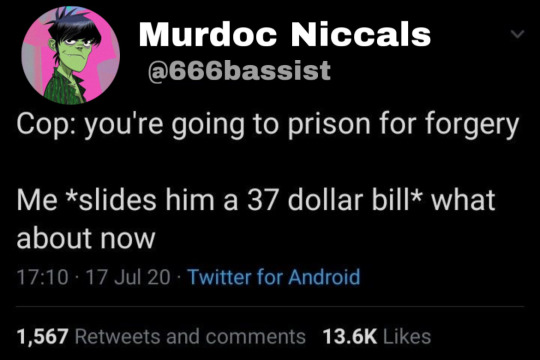

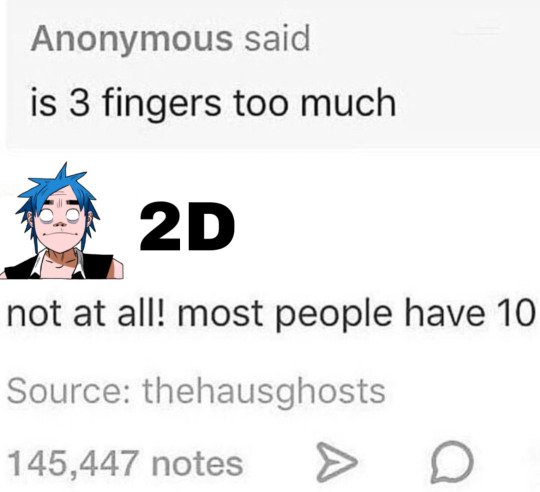

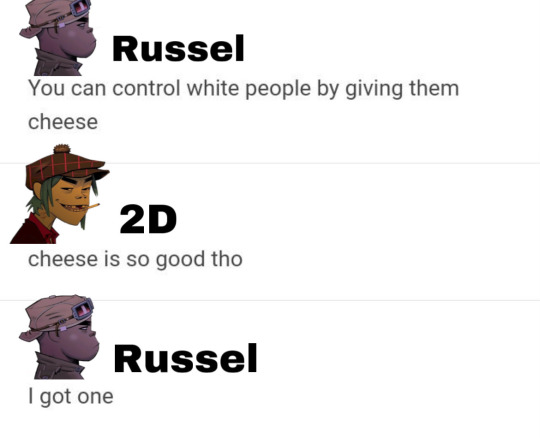

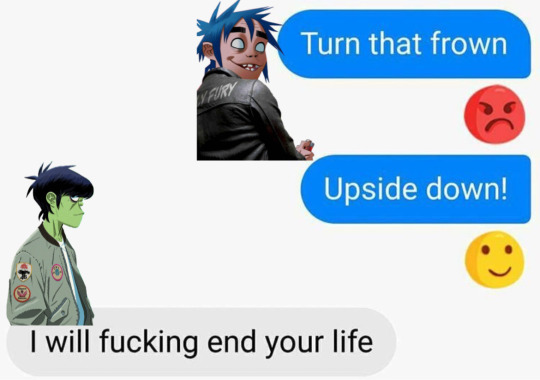

made some low effort gorillaz memes. rotating them in my brain rn like they’re leftover pizza slices.

#gorillaz#2d gorillaz#murdoc niccals#noodle gorillaz#russel hobbs#russel gorillaz#murdoc gorillaz#stuart pot#long post#gorillaz memes

684 notes

Text

AAAAAAAAA I have problems with perspective sorry

#gorillaz#gorillaz fanart#fanart#noodle gorillaz#gorill#murdoc gorillaz#2d gorillaz#russel hobbs#stuart pot#murdoc niccals#gorillaz russel

863 notes

Text

who’s ready for spooky season 👻

#gorillaz#murdoc niccals#murdoc gorillaz#stuart pot#2d gorillaz#russel hobbs#russel gorillaz#noodle gorillaz#saturnz barz#sketch for a big watercolor illustration which i will do…… never probably#one of my fav pieces in my sketchbook tho :-)

401 notes

Text

based off a convo that was in the Gorillaz Almanac

#gorillaz#gorillaz fanart#2d gorillaz#russel gorillaz#noodle gorillaz#murdoc gorillaz#i like the little convos they have in this book :3#stuart pot#russel hobbs#murdoc niccals

596 notes

Text

19-2000.

requested by @gopostal

#gifs#gif#my gifs#20fps#gorillaz#gorillaz gif#19-2000#gifset#music#animation#music video#music edit#murdoc niccals#2-d#stuart pot#noodle#russel hobbs

236 notes

Text

Worldstarrrrr

#gorillaz#2d gorillaz#gorillaz 2d#gorillaz fanart#gorillaz art#fanart#gorillaz phase 1#gorillaz phase 2#artists on tumblr#digital art#gorillaz fandom#gorillaz stu pot#gorillaz murdoc niccals#gorillaz meme#gorillaz murdoc#murdoc gorillaz#murdoc niccals#murdoc#gorillaz stuart pot#stuart pot#gorillaz russel#gorillaz noodle

188 notes

Text

Does 2D even have a chance to win?....

#artists on tumblr#art#gorillaz#fanart#2d gorillaz#stuart pot#stuart harold pot#gorillaz noodle#noodle gorillaz#gorillaz ace#noodle#murdoc niccals#russel gorillaz#russel hobbs#murdoc gorillaz#gorillaz fanart#gorillaz car#funny car#murdoc#ace copular#noodle 1 phase#cute potato noodle#I forbid you to offend noodle!!

207 notes

Text

group huggggg

#gorillaz#murdoc niccals#stuart pot#2D#noodle#russel hobbs#myart#and yea based off that russel hugging the guys pic i wanted noodle there too

2K notes

Link

[...]

Stuart Russell: I think this is a great pair of questions because the technology itself, from the point of view of AI, is entirely feasible. When the Russian ambassador made the remark that these things are 20 or 30 years off in the future, I responded that, with three good grad students and possibly the help of a couple of my robotics colleagues, it will be a term project to build a weapon that could come into the United Nations building and find the Russian ambassador and deliver a package to him.

Lucas Perry: So you think that would take you eight months to do?

Stuart Russell: Less, a term project.

Lucas Perry: Oh, a single term. I thought you said two terms.

Stuart Russell: Six to eight weeks. All the pieces, we have demonstrated quadcopter ability to fly into a building, explore the building while building a map of that building as it goes, face recognition, body tracking. You can buy a Skydio drone, which you basically key to your face and body, and then it follows you around making a movie of you as you surf in the ocean or hang glide or whatever it is you want to do. So in some sense, I almost wonder why it is that at least the publicly known technology is not further advanced than it is because I think we are seeing, I mentioned the Harpy, the Kargu, and there are a few others, there’s a Chinese weapon called the Blowfish, which is a small helicopter with a machine gun mounted on it. So these are real physical things that you can buy, but I’m not aware that they’re able to function as a cohesive tactical unit in large numbers.

Yeah. As a swarm of 10,000. I don’t think that we’ve seen demonstrations of that capability. And, we’ve seen demonstrations of 50, 100, I think 250 in one of the recent US demonstrations. But relatively simple tactical and strategic decision-making, really just showing the capability to deploy them and have them function in formations for example. But when you look at all the tactical and strategic decision-making side, when you look at the progress in AI, in video games, such as Dota, and StarCraft, and others, they are already beating professional human gamers at managing and deploying fleets of hundreds of thousands of units in long drawn out struggles. And so you put those two technologies together, the physical platforms and the tactical and strategic decision-making and communication among the units.

Stuart Russell: It seems to me that if there were a Manhattan-style project where you invested the resources, but also brought in the scientific and technical talent required, then certainly in less than two years you could be deploying exactly the kind of mass swarm weapons that we're so concerned about. And those kinds of projects could start, or they may already have started, but they could start at any moment and lead to these kinds of really problematic weapon systems very quickly.

[...]

0 notes

Text

I saw this so clear in my head

#my art#fanart#gorillaz#stuart 2d pot#2d gorillaz#russel gorillaz#murdoc niccals#murdoc gorillaz#digital art#fan comic#comics#mini comic

210 notes

Text

Saturnz Barz 🪐

#gorillaz#2d gorillaz#gorillaz art#gorillaz fandom#gorillaz fanart#2d#stuart pot#murdoc niccals#murdoc#noodle#noodle Gorillaz#Russel#russel hobbs#russel gorillaz#mars art

352 notes

Text

official style

#artist on tumblr#murdoc gorillaz#gorillaz#gorillaz noodle#gorillaz fanart#fanart#murdoc niccals#stuart pot#gorillaz art#russel hobbs#noodle gorillaz#2d gorillaz#noodle#2d

473 notes

Text

hello tumblr

#fanart#stuart pot#gorillaz fanart#gorillaz#2d gorillaz#gorillaz 2d#murdoc niccals#russel hobbs#gorillaz noodle#noodle gorillaz#plastic beach#demon days

325 notes
