#Special Purpose Machine Automation | AI-based vision sensors


Energy and Power Automation in Pune | India

The essential components of a solar power system are solar panels that gather sunlight, charge controllers, a battery that stores energy, and an inverter. If any of these were absent, the system could not function properly. Energy automation links the solar power system to the home's primary energy operations, so your smart house stays energy-efficient and optimized for usage.



How to Build AI Agents from Scratch in 2025: A Guide by Antier

AI agents have become a game-changer in business operations, automating tasks and enhancing efficiency across various industries. In 2025, the demand for AI agents is higher than ever, and businesses are investing in AI agent development to stay ahead in a fast-paced digital world. At Antier, we specialize in building intelligent, autonomous AI agents that can revolutionize your business.

What Are AI Agents?

An AI agent is a software system designed to carry out tasks autonomously, using tools and structured workflows. They’re more than just chatbots—AI agents can make decisions, solve problems, interact with environments, and execute actions based on data and predefined goals. From simple rule-based agents to advanced learning models, AI agents are categorized based on their complexity and capabilities.

How Do AI Agents Work?

AI agents work by following a cyclical process of perception, decision-making, and action. First, they perceive the environment by gathering data through sensors or inputs. They then process this data, creating an internal model to understand the situation. Using reasoning and planning algorithms, the agent decides on the best course of action to achieve its goal. Once the action is executed, the agent receives feedback, learns, and adapts for future tasks.
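The perceive, decide, act, and learn cycle described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not Antier's implementation: the thermostat scenario, the `ThermostatAgent` class, and all of its parameters are hypothetical.

```python
class ThermostatAgent:
    """Toy agent that keeps a room near a target temperature (hypothetical)."""

    def __init__(self, target, step=1.0):
        self.target = target   # the goal the agent works toward
        self.step = step       # how much one action changes the temperature
        self.history = []      # feedback gathered from earlier cycles

    def perceive(self, environment):
        # Gather data from the environment (here, one sensor reading).
        return environment["temperature"]

    def decide(self, reading):
        # Choose the action expected to move the reading toward the goal.
        if reading < self.target - 0.5:
            return "heat"
        if reading > self.target + 0.5:
            return "cool"
        return "idle"

    def act(self, action, environment):
        # Execute the chosen action on the environment.
        if action == "heat":
            environment["temperature"] += self.step
        elif action == "cool":
            environment["temperature"] -= self.step

    def learn(self, before, after):
        # Record feedback; if the action overshot the target, halve the step.
        self.history.append((before, after))
        if (before - self.target) * (after - self.target) < 0:
            self.step /= 2


def run(agent, environment, cycles=20):
    for _ in range(cycles):
        reading = agent.perceive(environment)
        action = agent.decide(reading)
        agent.act(action, environment)
        agent.learn(reading, environment["temperature"])
    return environment["temperature"]


room = {"temperature": 15.0}
final = run(ThermostatAgent(target=21.0), room)
print(final)
```

A production agent would replace the hand-written `decide` rule with a planner or a learned model, but the loop structure stays the same.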

The Importance of AI Agent Development

AI agents offer substantial value to businesses by automating complex processes, improving decision-making, and providing personalized customer experiences. With their ability to handle large volumes of tasks at once, AI agents reduce operational costs and enhance productivity.

Key Steps in Building AI Agents

Building AI agents involves several key steps:

Define Objectives: Clearly establish the agent’s purpose and desired outcomes.

Data Collection: Gather high-quality, relevant data to train the agent.

Choose the Right AI Technology: Select technologies such as machine learning, NLP, or computer vision based on your needs.

Design Architecture: Create an efficient system where perception, decision-making, and actions work seamlessly.

Develop and Train: Implement algorithms and train the agent on data to improve performance.

Testing & Deployment: Thoroughly test the agent and integrate it into your workflows.

Why Antier?

At Antier, we specialize in custom AI agent development services. Whether it’s for customer service, healthcare, or finance, our team is equipped to design, develop, and deploy robust AI solutions tailored to your business needs. Let us help you harness the power of AI to drive innovation and growth.


Demystifying Computer Vision Models: An In-Depth Exploration

Computer vision, a branch of artificial intelligence (AI), empowers computers to comprehend and interpret the visual world. By deploying sophisticated algorithms and machine learning models, computer vision can analyze and interpret visual data from various sources, including cameras, images, and videos. Several models, including feature-based models, deep learning networks, and convolutional neural networks (CNNs), are designed to learn and recognize patterns in the visual environment. This comprehensive guide delves into the intricacies of computer vision models, providing a thorough understanding of their functioning and applications.

What are Computer Vision Models?

At Saiwa, computer vision models are specialized algorithms that enable computers to interpret and make decisions based on visual input. At the core of this technological advancement is the architecture known as convolutional neural networks (CNNs). These networks analyze images by breaking them down into pixels, evaluating the colors and patterns around each pixel, and comparing these data sets to known data for classification purposes. Through a series of iterations, the network refines its understanding of the image, ultimately providing a precise interpretation.

Various computer vision models utilize this interpretive data to automate tasks and make decisions in real-time. These models are crucial in numerous applications, from autonomous vehicles to medical diagnostics, showcasing the versatility and importance of computer vision technology.

The Role of Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are a cornerstone of computer vision technology. They consist of multiple layers that process and transform the input image into a more abstract and comprehensive representation. The initial layers of a CNN typically detect basic features such as edges and textures, while deeper layers recognize more complex patterns and objects. This hierarchical structure allows CNNs to efficiently handle the complexity of visual data.
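To make the idea of an early layer detecting edges concrete, here is a small sketch of the sliding-window operation a convolutional layer performs. It assumes a hand-picked 2x2 kernel and a tiny made-up image; in a real CNN the kernel values are learned during training rather than written by hand.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            # Multiply the kernel with the image patch under it and sum.
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Made-up 4x4 image: dark left half (0), bright right half (1).
image = [[0, 0, 1, 1] for _ in range(4)]

# Hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The feature map is non-zero only in the column where the brightness changes, which is the sense in which this filter "detects" a vertical edge.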

Training CNNs requires large datasets and significant computational power. High-quality annotated images are fed into the network, which adjusts its internal parameters to minimize the error in its predictions. The gradients that guide these adjustments are computed by an algorithm known as backpropagation, and repeating the process over many iterations improves the model's accuracy.
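The parameter-adjustment loop can be illustrated on the smallest possible "network", a single sigmoid neuron. This is a sketch under simplifying assumptions: the four data points, the learning rate, and the iteration count are made up, and a real CNN applies the same chain-rule idea across many layers.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Made-up labeled data: (input, label). Larger inputs belong to class 1.
data = [(0.0, 0), (1.0, 0), (2.0, 1), (3.0, 1)]


def mean_loss(w, b):
    # Binary cross-entropy averaged over the dataset.
    total = 0.0
    for x, y in data:
        p = sigmoid(w * x + b)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


w, b, learning_rate = 0.0, 0.0, 0.5
initial_loss = mean_loss(w, b)

for _ in range(500):
    grad_w = grad_b = 0.0
    for x, y in data:
        p = sigmoid(w * x + b)   # forward pass: make a prediction
        error = p - y            # gradient of the loss w.r.t. the pre-activation
        grad_w += error * x      # chain rule back to the weight
        grad_b += error          # chain rule back to the bias
    # Adjust parameters in the direction that reduces the error.
    w -= learning_rate * grad_w / len(data)
    b -= learning_rate * grad_b / len(data)

final_loss = mean_loss(w, b)
print(initial_loss, final_loss)
```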

Examples of Computer Vision Models and Their Functionality

One of the most prominent examples of computer vision models is found in self-driving cars. These vehicles use cameras to continuously scan the environment, detecting and interpreting objects such as other vehicles, pedestrians, and road signs. The information gathered is used to plan the vehicle's route and navigate safely.

Computer vision models that employ deep learning techniques rely on iterative image analysis, and their performance improves as they are trained on more data. In this sense the models are self-teaching: their analysis capabilities improve with the number and variety of examples they process during training. For instance, a self-driving car system would require high-quality images depicting various road scenarios to function accurately. Similarly, a system designed to read and analyze invoices would need authentic invoice images to ensure precise results.

Application in Self-Driving Cars

In self-driving cars, computer vision models play a critical role in ensuring safe and efficient navigation. The models process data from multiple cameras and sensors, allowing the vehicle to understand its surroundings in real-time. This includes detecting lanes, traffic signals, pedestrians, and other vehicles. Advanced algorithms combine this visual data with inputs from other sensors, such as LIDAR and radar, to create a comprehensive view of the environment.

Self-driving cars utilize several computer vision tasks, including object detection, segmentation, and tracking. Object detection helps the car recognize various entities on the road, while segmentation ensures that the boundaries of these objects are clearly defined. Tracking maintains the movement and trajectory of these objects, enabling the vehicle to anticipate and react to dynamic changes in the environment.

Types of Computer Vision Models

Computer vision models answer a range of questions about images, such as identifying objects, locating them, pinpointing key features, and determining the pixels belonging to each object. These tasks are accomplished by developing various types of deep neural networks (DNNs). Below, we explore some prevalent computer vision models and their applications.

Image Classification

Image classification models identify the most significant object class within an image. Each class, or label, represents a distinct object category. The model receives an image as input and outputs a label along with a confidence score, indicating the likelihood of the label's accuracy. It is important to note that image classification does not provide the object's location within the image. Use cases requiring object tracking or counting necessitate an object detection model.
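The "label plus confidence score" output can be sketched as follows. The example assumes the model has already produced one raw score per class; the scores and the three class names are made up, and softmax is one common way (not the only one) to turn scores into confidences.

```python
import math


def softmax(scores):
    # Subtract the maximum first for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, labels):
    """Return the most likely label and its confidence."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


labels = ["cat", "dog", "bird"]
raw_scores = [2.0, 0.5, 0.1]   # hypothetical model outputs for one image

label, confidence = classify(raw_scores, labels)
print(label, round(confidence, 3))
```

Note that the result says nothing about where in the image the object is, which is why counting or tracking needs a detection model instead.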

Deep Learning in Image Classification

Image classification models often rely on deep learning frameworks, particularly CNNs, to achieve high accuracy. The training process involves feeding the network with a vast number of labeled images. The network learns to associate specific patterns and features with particular labels. For example, a model trained to classify animal species would learn to differentiate between cats, dogs, and birds based on distinctive features such as fur texture, ear shape, and beak type.

Advanced techniques such as transfer learning can enhance image classification models. Transfer learning involves pre-training a CNN on a large dataset, then fine-tuning it on a smaller, domain-specific dataset. This approach leverages pre-existing knowledge, making it possible to achieve high accuracy with fewer labeled examples.

Object Detection

Object detection DNNs are crucial for determining the location of objects within an image. These models provide coordinates, or bounding boxes, specifying the area containing the object, along with a label and a confidence value. For instance, traffic patterns can be analyzed by counting the number of vehicles on a highway. Combining a classification model with an object detection model can enhance an application's functionality. For example, passing an image section identified by the detection model into the classification model can help count specific types of vehicles, such as trucks.
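As a rough sketch of the vehicle-counting use case, the snippet below assumes a detector has already returned a list of detections. The `Detection` record, its field names, and the sample values are hypothetical and do not correspond to any particular library's output format.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Detection:
    box: tuple          # (x_min, y_min, x_max, y_max) in pixels
    label: str          # predicted class
    confidence: float   # value between 0 and 1


def count_by_label(detections, min_confidence=0.5):
    """Count detections per class, ignoring low-confidence ones."""
    return Counter(d.label for d in detections if d.confidence >= min_confidence)


# Made-up detections for one highway frame.
detections = [
    Detection((10, 40, 120, 110), "car", 0.91),
    Detection((150, 30, 330, 140), "truck", 0.88),
    Detection((350, 35, 520, 150), "truck", 0.76),
    Detection((540, 60, 600, 100), "car", 0.30),   # dropped: below threshold
    Detection((610, 20, 780, 130), "bus", 0.64),
]

counts = count_by_label(detections)
print(counts["truck"])
```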

Advanced Object Detection Techniques

Modern object detection models, such as YOLO (You Only Look Once) and Faster R-CNN, offer real-time performance and high accuracy. YOLO divides the input image into a grid and predicts bounding boxes and class probabilities for each grid cell. This approach enables rapid detection of multiple objects in a single pass. Faster R-CNN, on the other hand, utilizes a region proposal network (RPN) to generate potential object regions, which are then classified and refined by subsequent layers.
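The grid idea can be illustrated with a short sketch. In the original YOLO formulation, the grid cell that contains an object's center is the one responsible for predicting it; the function below computes that cell. The 7x7 grid, the 448x448 image size, and the sample box are assumptions for illustration, and later YOLO versions differ in detail.

```python
def responsible_cell(box, image_w, image_h, grid=7):
    """Return (row, col) of the grid cell containing the box center."""
    x_min, y_min, x_max, y_max = box
    center_x = (x_min + x_max) / 2
    center_y = (y_min + y_max) / 2
    # Scale the center to grid units; clamp so points on the far edge stay in range.
    col = min(int(center_x / image_w * grid), grid - 1)
    row = min(int(center_y / image_h * grid), grid - 1)
    return row, col


# Hypothetical object in a 448x448 image.
cell = responsible_cell((100, 200, 300, 400), image_w=448, image_h=448)
print(cell)
```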

These advanced techniques allow for robust and efficient object detection in various applications, from surveillance systems to augmented reality. By accurately locating and identifying objects, these models provide critical information for decision-making processes.

Image Segmentation

Certain tasks require a precise understanding of the shapes of objects in an image, which is achieved through image segmentation. This process involves creating a boundary at the pixel level for each object. In semantic segmentation, DNNs classify every pixel based on the object type, while instance segmentation separates individual objects of the same type. Image segmentation is commonly used in applications such as virtual backgrounds in teleconferencing software, where it distinguishes the foreground subject from the background.

Semantic and Instance Segmentation

Semantic segmentation assigns a class label to each pixel in an image, enabling detailed scene understanding. For example, in an autonomous vehicle, semantic segmentation can differentiate between road, sidewalk, vehicles, and pedestrians, providing a comprehensive map of the driving environment.

Instance segmentation, on the other hand, identifies each object instance separately. This is crucial for applications where individual objects need to be tracked or manipulated. In medical imaging, for example, instance segmentation can distinguish between different tumors in a scan, allowing for precise treatment planning.
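The difference between the two outputs can be shown on a toy example. The sketch below starts from a made-up semantic mask (one class name per pixel) and assigns a separate id to each connected blob of "car" pixels. This is only an illustration of what an instance map looks like: real instance segmentation models predict instances directly and can separate objects that touch, which simple connected-component labeling cannot.

```python
from collections import deque


def label_instances(mask, target):
    """Give each 4-connected blob of `target` pixels its own instance id."""
    height, width = len(mask), len(mask[0])
    instances = [[0] * width for _ in range(height)]   # 0 means "not target"
    next_id = 0
    for r in range(height):
        for c in range(width):
            if mask[r][c] != target or instances[r][c]:
                continue
            # Found an unlabeled target pixel: flood-fill its blob.
            next_id += 1
            instances[r][c] = next_id
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] == target
                            and not instances[ny][nx]):
                        instances[ny][nx] = next_id
                        queue.append((ny, nx))
    return instances, next_id


# Made-up semantic segmentation: every pixel has a class, cars are not separated.
semantic = [
    ["road", "car",  "road", "car"],
    ["road", "car",  "road", "car"],
    ["road", "road", "road", "road"],
]

instance_map, car_count = label_instances(semantic, "car")
print(car_count)
```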

Object Landmark Detection

Object landmark detection involves identifying and labeling key points within images to capture important features of an object. A notable example is the pose estimation model, which identifies key points on the human body, such as shoulders, elbows, and knees. This information can be used in applications like fitness apps to ensure proper form during exercise.
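Once a pose estimation model has produced key points, checking exercise form often comes down to simple geometry. The sketch below computes the angle at a joint from three 2D key points. The coordinates are made up, and the function assumes the three points are distinct (otherwise the division by the vector lengths would fail).

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    # Clamp to guard against tiny floating-point overshoots outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


# Hypothetical key points (x, y) from a pose model.
shoulder, elbow, wrist = (0.0, 0.0), (1.0, 0.0), (1.0, 1.0)

elbow_angle = joint_angle(shoulder, elbow, wrist)
print(elbow_angle)
```

A fitness app could compare such angles against a target range for each exercise to flag poor form.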

Applications of Landmark Detection

Landmark detection is widely used in facial recognition and augmented reality (AR). In facial recognition, key points such as the eyes, nose, and mouth are detected to create a unique facial signature. This signature is then compared to a database for identity verification. In AR, landmark detection allows virtual objects to interact seamlessly with the real world. For instance, virtual try-on applications use facial landmarks to position eyewear or makeup accurately on a user's face.

Pose estimation models, a subset of landmark detection, are essential in sports and healthcare. By analyzing body movements, these models can provide feedback on athletic performance or assist in physical rehabilitation by monitoring and correcting exercise techniques.

Future Directions in Computer Vision

As we look to the future, the development of computer vision models will likely focus on increasing accuracy, reducing computational costs, and expanding to new applications. One promising area is the integration of computer vision with other AI technologies, such as natural language processing (NLP) and reinforcement learning. This integration could lead to more sophisticated systems capable of understanding and interacting with the world in a more human-like manner.

Additionally, advancements in hardware, such as the development of specialized AI chips and more powerful GPUs, will enable more complex models to run efficiently on edge devices. This will facilitate the deployment of computer vision technology in everyday objects, from smartphones to smart home devices, making AI-powered vision ubiquitous.

Computer vision models are at the forefront of AI innovation, offering vast potential to revolutionize how we interact with and understand the visual world. By continuing to explore and refine these models, we can unlock new capabilities and drive progress across a multitude of fields.

Conclusion

Computer vision represents one of the most challenging and innovative areas within artificial intelligence. While machines excel at processing data and performing complex calculations, interpreting images and videos is a vastly different endeavor. Humans can assign labels and definitions to objects within an image and interpret the overall scene, a task that is difficult for computers to replicate. However, advancements in computer vision models are steadily bridging this gap, bringing us closer to machines that can see and understand the world as we do.

Computer vision models are transforming various industries, from autonomous driving and medical diagnostics to retail and security. As these models continue to evolve, they will unlock new possibilities, enhancing our ability to automate and innovate. Understanding the different types of computer vision models and their applications is crucial for leveraging this technology to its fullest potential.


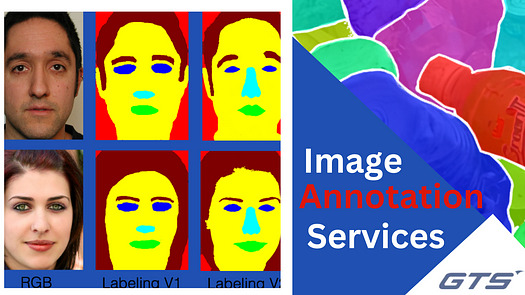

Why is Data Annotation Important for Machine Learning?

Introduction:

Data annotation is the process of labeling or tagging data, such as text, images, or videos, with descriptive information that can be used by machine learning algorithms to learn and make predictions. Data annotation is a critical step in the development of machine learning models, as it provides the necessary information for the models to identify patterns and make accurate predictions.

One of the primary reasons why data annotation is important for machine learning is that it enables the creation of high-quality training datasets. Machine learning algorithms rely on large amounts of labeled data to learn and make predictions. Without accurate and consistent data labeling, machine learning models can’t identify patterns and make accurate predictions.

Data annotation also helps to improve the efficiency and effectiveness of machine learning models. With accurate and consistent labels, machine learning models can make more accurate predictions, which can lead to better decision-making and improved outcomes.

Furthermore, data annotation helps to improve the interpretability and explainability of machine learning models. By providing descriptive information about the data used to train the model, it becomes easier to understand how the model arrived at its predictions and to identify any biases or errors in the model’s predictions.

In summary, data annotation is an essential component of machine learning, as it enables the creation of high-quality training datasets, improves the accuracy and effectiveness of machine learning models, and enhances the interpretability and explainability of these models.

What is the purpose of data annotation?

The purpose of data annotation is to add meaningful and structured information to unstructured data such as text, images, audio, and video. Data annotation is typically done by humans who label or tag the data with relevant information that can help machines understand the data and learn from it.

Data annotation is essential for training machine learning models, natural language processing models, computer vision models, and other artificial intelligence systems. For example, in natural language processing, data annotation is used to label text with parts of speech, named entities, sentiment, intent, and other relevant information. In computer vision, data annotation is used to label images with object boundaries, object categories, and attributes such as color, texture, and shape.
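To show what such labels can look like in practice, here are two annotation records written as plain Python dictionaries. The field names, the file name, and the bounding-box convention (`[x, y, width, height]`) are assumptions for illustration only; real annotation tools each define their own schema.

```python
import json

# Hypothetical computer vision annotation: objects with boxes, categories, attributes.
image_annotation = {
    "image": "street_001.jpg",
    "objects": [
        {"category": "car", "bbox": [34, 120, 200, 90], "attributes": {"color": "red"}},
        {"category": "pedestrian", "bbox": [310, 95, 40, 110], "attributes": {}},
    ],
}

# Hypothetical NLP annotation: intent, sentiment, and a named entity given as a character span.
text_annotation = {
    "text": "Book a flight to Pune",
    "intent": "book_flight",
    "sentiment": "neutral",
    "entities": [{"span": [17, 21], "label": "LOCATION"}],
}

start, end = text_annotation["entities"][0]["span"]
entity_text = text_annotation["text"][start:end]
print(entity_text)
print(json.dumps(image_annotation, indent=2))
```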

Data annotation helps to create high-quality training data sets that are crucial for building accurate and reliable machine learning models. Without data annotation, machines would have difficulty understanding and processing unstructured data, making it difficult to derive insights and make informed decisions based on that data.

What is the future scope of data annotation?

The future scope of data annotation is quite promising, as the need for high-quality labeled data continues to grow in many industries, especially in the field of artificial intelligence and machine learning. Here are a few trends and opportunities that are likely to shape the future of data annotation:

Increased demand for domain-specific data: As AI and ML applications become more specialized, the need for domain-specific data annotation services will grow. For example, healthcare companies may require labeled medical images or clinical data, while automotive companies may need annotated sensor data from autonomous vehicles.

Advancements in AI technology: AI is already being used to automate some aspects of data annotation, such as image recognition and natural language processing. As AI technology continues to advance, it is likely to become even more effective at labeling data, which could lead to new opportunities for data annotation service providers.

Greater emphasis on ethical and unbiased data labeling: With the increasing awareness of ethical considerations in AI and ML, there is likely to be a greater emphasis on ethical and unbiased data labeling practices. This may include more stringent quality control measures and the use of diverse annotators to prevent biases from affecting the labeled data.

Growth of crowdsourcing platforms: Crowdsourcing platforms that enable individuals to perform data annotation tasks from anywhere in the world are becoming more popular. As these platforms continue to grow and improve, they could provide new opportunities for companies to obtain high-quality labeled data at a lower cost.

Overall, the future scope of data annotation is likely to be shaped by advances in AI technology, increased demand for domain-specific data, greater emphasis on ethical and unbiased labeling practices, and the growth of crowdsourcing platforms.
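One concrete quality control measure for labeled data is to have two annotators label the same items and measure how often they agree beyond chance. The sketch below computes Cohen's kappa, a standard agreement statistic, for that purpose. The two label lists are made up, and the function assumes the annotators do not agree purely by construction (expected agreement below 1).

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    return (observed - expected) / (1 - expected)


# Made-up labels from two annotators for the same six images.
annotator_1 = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_2 = ["cat", "cat", "dog", "cat", "cat", "dog"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 3))
```

A value of 1 means perfect agreement and 0 means agreement no better than chance; low values suggest the labeling guidelines need to be tightened.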

What is Data Annotation?

Data annotation is the process of labeling or tagging data with additional information that makes it easier to use in machine learning algorithms. This can involve adding metadata to images, videos, audio recordings, or any other type of data that needs to be processed by a machine learning model. Common types of data annotation include image classification, object detection, semantic segmentation, and natural language processing.

Why is Data Annotation Important for Machine Learning?

Improved Model Accuracy:

One of the main benefits of data annotation is that it improves the accuracy of machine learning models. When data is labeled and categorized correctly, it allows machine learning algorithms to learn from it more effectively. This is especially true when it comes to supervised learning, where the machine learning model is trained on labeled data. Data annotation helps to ensure that the model is trained on high-quality data, which in turn leads to better accuracy.

Better Data Management:

Data annotation also helps with better data management. By organizing and labeling data, it becomes easier to find and use in machine learning algorithms. This is particularly important when working with large datasets that contain thousands or even millions of data points. Without proper labeling and organization, it can be challenging to manage this data effectively.

Increased Efficiency:

Data annotation can also increase efficiency in machine learning projects. Supplying labeled data to machine learning algorithms can reduce the amount of time and resources required to train the model, because the algorithm can learn from labeled data much faster than it could from unlabeled, unstructured data. Additionally, data annotation can help to identify patterns and trends in the data more quickly, which can lead to faster model training.

Improved Generalization:

Another key benefit of data annotation is improved generalization. When a machine learning model is trained on labeled data, it can learn to recognize patterns and make predictions based on that data. However, if the data is not labeled correctly or is too limited, the model may not be able to generalize well to new, unseen data. Data annotation helps to ensure that the model is trained on a diverse range of data, which can improve its ability to generalize to new data.
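Generalization is usually checked by holding back part of the labeled data and evaluating the trained model only on that unseen part. A minimal sketch of such a hold-out split follows; the function name, the 25% test fraction, and the sample data are assumptions for illustration.

```python
import random


def train_test_split(examples, test_fraction=0.25, seed=0):
    """Shuffle labeled examples and hold back a fraction for evaluation."""
    shuffled = examples[:]                   # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)    # fixed seed keeps the split reproducible
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Made-up labeled examples: (feature, label).
examples = [(i, "even" if i % 2 == 0 else "odd") for i in range(8)]

train, test = train_test_split(examples)
print(len(train), len(test))
```

The model is fit on `train` only; its accuracy on `test` then estimates how well it handles new data.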

Increased Customer Satisfaction:

Finally, data annotation can also lead to increased customer satisfaction. Machine learning models are often used to improve customer experiences by providing personalized recommendations or predicting customer behavior. If the model is not accurate, however, it can lead to frustration and disappointment for the customer. Data annotation helps to ensure that the model is trained on high-quality data, which can lead to better predictions and ultimately, a better customer experience.

Conclusion:

Data annotation is an essential part of machine learning. It provides labeled and categorized data that can be used to train machine learning models more effectively. By improving model accuracy, data management, efficiency, generalization, and customer satisfaction, data annotation can help to make machine learning projects more successful. As machine learning continues to play an increasingly important role in many industries, the importance of data annotation is only likely to grow.


What Is the Future of Automation? - Difference Between Soft PLC vs. Hard PLC

Programmable logic controllers (PLCs) have traditionally been hardware-based. Born out of the car industry in the late 1960s, they were as much a part of production lines as any other piece of physical equipment.

Recently, software-based PLCs have appeared, raising the question: which is better positioned to support the future of manufacturing, a hard PLC or a soft PLC?

What exactly is a programmable logic controller (PLC)?

A programmable logic controller (PLC) is a computer or software that is specifically built to control various manufacturing processes such as assembly lines, production robots, automated equipment, and more.

They are often purpose-built and designed to handle industrial environmental conditions such as extended temperature ranges and high levels of electrical noise, and to resist impacts and vibration.

How Are PLCs Programmed?

This is a question that is frequently posed. PLCs have significantly streamlined the job compared to the traditional use of electric relays.

● Ladder logic is a common programming method for PLCs. Initially, ladder logic was created as a way to record the layout of electrical relay racks, which are wired in specific ways.

● It served as the foundation for a programming language that accurately modeled the operation of electrical relays in real-world machinery like PLCs. It has a variety of advantages because it is a computing language that so closely mirrors the internal workings of these devices.

● By requiring less technical knowledge, it makes it easier for maintenance engineers to troubleshoot problems. For instance, engineers don't need to comprehend the complexities of specialized programming languages.
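To show how closely ladder logic mirrors relay wiring, the sketch below simulates one classic rung, a start/stop "seal-in" circuit, in Python. Real PLCs are programmed in ladder diagram or another IEC 61131-3 language rather than Python; the input names and the scan sequence here are hypothetical.

```python
# The rung being simulated, drawn in ladder style:
#
#   |--[ start ]--+--[/ stop ]--( motor )--|
#   |--[ motor ]--+
#
# The motor coil energizes when start is pressed, holds itself on through
# its own contact, and drops out when stop is pressed.


def scan(inputs, motor):
    """Evaluate the rung once, as a PLC does on every scan cycle."""
    return (inputs["start"] or motor) and not inputs["stop"]


# Hypothetical sequence of input states over five scan cycles.
sequence = [
    {"start": False, "stop": False},   # idle
    {"start": True,  "stop": False},   # operator presses start
    {"start": False, "stop": False},   # start released, motor stays on
    {"start": False, "stop": True},    # operator presses stop
    {"start": False, "stop": False},   # stop released, motor stays off
]

motor = False
trace = []
for inputs in sequence:
    motor = scan(inputs, motor)
    trace.append(motor)

print(trace)
```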

Difference between a soft PLC and a hard PLC

A soft PLC is software that performs the tasks of a PLC's CPU component while coexisting on hardware with other software.

A hard PLC is a dedicated piece of hardware that serves only as a PLC, with its CPU activities taking place inside that dedicated unit.

Since PLCs exist in so many different shapes and sizes, defining either kind by its inputs (sensors) or outputs (actuators) would rapidly become complicated. Instead, concentrate on where the CPU is located. A PLC is considered hard if the CPU is built into it. If the CPU functions run on a different, general-purpose computer, it is a soft PLC.

Why Are Soft PLCs Getting More Popular?

As we enter the era of Industry 4.0, we recognize that flexibility is a critical component of progress. Machine interoperability calls for ideas like soft PLCs, in which the possible setups for each manufacturing operation are almost infinitely flexible.

Future factories may have cloud-based CPU functionality that enables the simultaneous operation of several manufacturing facilities. That effectively places the PLC's accountability in the realms of IT, AI, and cloud computing.

It's crucial to keep in mind that with soft PLCs, the CPUs exist independently of the rest of the PLC.

Based on an open and accessible RTOS called RTX64 from IntervalZero, Kingstar offers a fully functional and integrated software PLC. Additionally, it contains optional PLC motion control and machine vision components that are controlled via a comprehensive user interface for both C++ programmers and non-programmers. Check out Kingstar right now!


Applications of AI and Machine Learning in Electrical Engineering - Arya College

Electrical engineers work at the forefront of technological innovation. They contribute to the design, development, testing, and manufacturing processes for new generations of devices and equipment. The pursuits of professionals from top engineering colleges may overlap with the rapidly expanding applications for artificial intelligence.

Recent progress in areas like machine learning and natural language processing has affected every industry, along with areas of scientific research like engineering. Machine learning and electrical engineering professionals leverage AI to build and optimize systems. They also provide AI technology with new data inputs for interpretation. For instance, engineers of Top Electrical Engineering Colleges build systems of connected sensors and cameras to ensure that an autonomous vehicle’s AI can “see” the environment. Additionally, they must ensure that information from these on-board sensors is communicated at lightning speed.

Besides, harnessing the potential of artificial intelligence may reveal opportunities to boost system performance while addressing problems more efficiently. AI could be used by the students of best engineering colleges in Rajasthan to automatically flag errors or performance degradation so that they can fix problems sooner. They have the opportunity to realign how their organizations manage daily operations and grow over time.

How Is Artificial Intelligence Used in Electrical Engineering?

The term “artificial intelligence” describes different systems built to imitate how a human mind makes decisions and solves problems. For decades, researchers and engineers of top BTech colleges have explored how different types of AI can be applied to electrical and computer systems. These are some of the forms of AI that are most commonly incorporated into electrical engineering:

Expert systems

Expert systems solve problems with an inference engine that draws from a knowledge base. The knowledge base holds information about a specialized domain, mainly in the form of if-then rules. In use since the 1970s, these systems are less versatile than newer types of AI, but they are generally easier to program and maintain.
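The interplay of knowledge base and inference engine can be sketched with a tiny forward-chaining loop. The rules and fact names below are invented for a power-system flavored example and do not come from any real expert system.

```python
def infer(facts, rules):
    """Forward chaining: keep applying if-then rules until nothing new is derived."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are known facts.
            if conclusion not in facts and conditions <= facts:
                facts.add(conclusion)
                changed = True
    return facts


# Hypothetical knowledge base: (set of conditions, conclusion).
rules = [
    ({"voltage_low", "load_high"}, "overload_suspected"),
    ({"overload_suspected", "breaker_closed"}, "open_breaker"),
]

result = infer({"voltage_low", "load_high", "breaker_closed"}, rules)
print(sorted(result))
```

Maintaining such a system means editing the rule list, which is part of why expert systems are comparatively easy to program and maintain.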

Fuzzy logic control systems

Fuzzy logic helps students of BTech Colleges Jaipur create rules for how machines respond to inputs. These rules account for a continuum of possible conditions, rather than a straightforward binary.
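A small sketch shows how a fuzzy controller handles a continuum of conditions. It assumes a fan controlled by temperature, two triangular membership functions ("warm" and "hot"), and a weighted-average defuzzification step; all of the breakpoints and output levels are made up.

```python
def triangular(x, left, peak, right):
    """Degree (0 to 1) to which x belongs to a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fan_speed(temp_c):
    """Fan speed in percent from two fuzzy rules (hypothetical breakpoints)."""
    warm = triangular(temp_c, 15, 25, 35)   # IF temperature is warm THEN speed is 40%
    hot = triangular(temp_c, 25, 40, 55)    # IF temperature is hot  THEN speed is 100%
    total = warm + hot
    if total == 0:
        return 0.0                           # no rule applies
    # Weighted average of the rule outputs (a simple defuzzification).
    return (warm * 40 + hot * 100) / total


for temperature in (10, 25, 30):
    print(temperature, fan_speed(temperature))
```

At 30 °C the input is partly "warm" and partly "hot", so the output falls between the two rule outputs instead of jumping from one to the other.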

Machine learning

Machine learning includes a broad range of algorithms and statistical models that make it possible for systems to draw inferences, find patterns, and learn to perform different tasks without specific instructions.

Artificial neural networks

They are specific types of machine learning systems that consist of artificial synapses designed to imitate the structure and function of brains. The network observes and learns as the synapses transmit data to one another, processing information as it passes through multiple layers.

Deep learning

It is a form of machine learning based on artificial neural networks. Deep learning architectures are able to process hierarchies of increasingly abstract features, which makes them useful to the students of private engineering colleges for purposes like speech and image recognition and natural language processing.

Most of the promising achievements at the intersection of AI and electrical engineering have focused on power systems. For instance, top engineering colleges in India have created algorithms capable of identifying malfunctions in transmission and distribution infrastructure based on images collected by drones. Further initiatives from these institutions include using AI to forecast how weather conditions will affect wind and solar power generation and to adjust to meet demand.

Other AI applications in power systems mainly involve implementing expert systems, which can reduce the workload of human operators in power plants by taking on tasks in data processing, routine maintenance, training, and schedule optimization.

Engineering the next wave of Artificial Intelligence

Automating tasks through machine learning models, such as artificial neural networks or decision trees, results in systems that can often make decisions and predictions more accurately than humans. As these systems evolve, students of electrical engineering colleges will fundamentally transform their ability to leverage information at scale.

But the tasks involved in implementing machine learning algorithms for an ever-growing number of diverse applications, from agriculture to telecommunications, are highly resource-intensive. It takes a robust and customized network architecture to optimize the performance of deep learning algorithms that may rely on billions of training examples. Furthermore, once trained, an algorithm must continue processing an ever-growing volume of data. Currently, some of the sensors embedded in autonomous vehicles are capable of generating 19 terabytes of data per hour.
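To put the 19 terabytes per hour figure in perspective, the short calculation below converts it to a sustained data rate. It assumes decimal units (1 TB = 10^12 bytes); the variable names are illustrative.

```python
TERABYTE = 10**12          # bytes, decimal convention (assumption)
SECONDS_PER_HOUR = 3600

bytes_per_hour = 19 * TERABYTE
bytes_per_second = bytes_per_hour / SECONDS_PER_HOUR

gigabytes_per_second = bytes_per_second / 10**9
gigabits_per_second = gigabytes_per_second * 8

print(round(gigabytes_per_second, 2), "GB/s")
print(round(gigabits_per_second, 1), "Gbit/s")
```

That is roughly 5.3 gigabytes every second, which is why the communication and computing hardware around the sensors matters as much as the algorithms.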

Electrical engineers play a vital part in enabling AI’s ongoing evolution by developing computer and communications systems that match the growing power of artificial neural networks. Creating hardware that’s optimized to perform machine learning tasks at high speed and efficiency opens the door to new possibilities for the students of engineering colleges Jaipur. These include autonomous vehicle guidance, fraud detection, customer relationship management, and countless other applications.

Signal processing and machine learning for electrical engineering

The adoption of machine learning in engineering is valuable for expanding the horizons of signal processing. These systems efficiently increase the accuracy and subjective quality of sound, images, and other inputs when they are transmitted. Machine learning algorithms make it possible for the students of engineering colleges Rajasthan to model signals, develop useful inferences, detect meaningful patterns, and make highly precise adjustments to signal output.

In turn, signal processing techniques can be used to improve the data fed into machine learning systems. By cutting out much of the noise, engineers achieve cleaner results in the performance of Internet-of-Things devices and other AI-enabled systems.
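As a rough illustration of cutting out noise before data reaches a learning system, the sketch below smooths a noisy sine wave with a simple moving-average filter and compares the error before and after. The signal, noise level, window size, and function names are all assumptions chosen for the example; real pipelines would typically use purpose-built filters.

```python
import math
import random


def moving_average(samples, window=5):
    """Smooth a signal with a centered moving average.

    Near the edges the window is shortened to the samples available.
    """
    half = window // 2
    smoothed = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        smoothed.append(sum(samples[lo:hi]) / (hi - lo))
    return smoothed


def mean_squared_error(a, b):
    """Average squared difference between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


random.seed(0)  # fixed seed so the run is repeatable
n = 200
clean = [math.sin(2 * math.pi * i / 50) for i in range(n)]   # hypothetical sensor signal
noisy = [c + random.gauss(0, 0.3) for c in clean]            # add Gaussian noise
filtered = moving_average(noisy, window=7)

print("MSE before filtering:", mean_squared_error(noisy, clean))
print("MSE after filtering: ", mean_squared_error(filtered, clean))
```

The filtered signal sits closer to the clean one, so a model trained on it sees less noise. The trade-off is that a wider window also blurs fast changes in the signal.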

The Department of Electrical Engineering at Best Engineering College in Jaipur demonstrates these innovative, life-changing possibilities. Multidisciplinary researchers synthesize concepts from electrical engineering, artificial intelligence and other fields in an effort to simulate the way biological eyes process visual information. These efforts serve a deeper understanding of how our senses function, while leading to greater capabilities for brain-computer interfaces, visual prosthetics, motion sensors, and computer vision algorithms.

0 notes

Text

The Chickens of Mars/The Girl in the Moon: Chapter 1

Moon City was a gorgeous place at night. A lot of engineering and money had gone into making it that way, and it showed. Hiding under every perfectly striking vista was excessive planning, design, and illusion. In a way, the whole city was a facade to project the image of timeless wealth and neon beauty of the most uncannily unnatural kind. The majority of the loveliness extended only to the downtown and some of the wealthier, more respectable areas. It was a massive city, the biggest in the whole human spread, and it was made so as to draw your attention to the parts it wanted you to see. Sometimes the staggering amount of craft that went into the deception still managed to cause Midori Salo to pause and shiver a little, and she’d seen it all nearly every day of her life. Despite its uninspiring name, it was home to many of the most widely respected (and well paid) artists, designers and general rich “visionaries” and their visions had demanded jutting arrogant skyscrapers offset by many rounder, more soothing, complementary structures, and even those were super-massive undertakings, which led to a city of sparkling bright light stacking on top of itself ever upwards that still managed to serve and reveal the infinite Earth-lit sky, and that was beautiful. It was also the last thing the Earth was really good for, and all Midori had ever seen of it.

She shrugged to readjust the too-many wine bottles she was trying to carry in her arms, bitterly deriding herself in her head for not bringing a bag or a cart or anything useful at all to the store. Her arms were much stronger than they looked, but it was an awkward jumble she was having difficulty maintaining as she walked a little more than briskly, and she wasn’t about to slow down. She was already running late, and it was a big night for The Boss. More realistically he was her, and many many people’s master, but he preferred to go by just Boss. Not just to her, his little matchstick clone girl, but by basically everyone on The Moon. And his party needed more wine, even though he had more wine in storage than a bored army could drink in a year. He didn’t need more, her single armload wouldn’t make any difference, but she had been told to go get it, so she did. That’s what Midori was good for. Midori was a special girl.

Midori walked in a special way. She was afraid every second of her life. She held herself in a special way and inside she felt like...knew...that she didn’t belong to herself, so she didn’t really know how to stand. Her eyes were special because they were made in a lab, like she was, but had been installed separately when she was 14 Old-Earth years old. They were beautiful eyes, better than real ones. The only memory she had of her old ones was them sitting on the surgical table next to her when she opened her new ones. She’d never seen any pictures of herself with them, so she was fairly certain she didn’t miss them. She was very fond of her current eyes. They were beautiful, though sometimes she was worried they said too much, which caused people to see what they wanted to in them. People frequently burdened her with what they thought they saw in her eyes and it made her uncomfortable. They were normally a bluish tinted slightly luminescent green, but when she was scared they would become less blue. When she was asleep they were dull gray, but nobody ever saw them like that.

The Boss had lots of clones on staff. That was common practice, but Midori wasn’t a regular worker clone, despite the tasks she was frequently given. She had a much higher purpose than that, and it kept her from having to deal with many of the occupational hazards that led to a life expectancy among labor clones rivaled only by some of the Asteroid colonies for brevity. Clones were cheap, cheaper than robots for many applications. Most smaller machines, including the computer that acted as the gatekeeper to The Boss’s grounds, used bio-computers running off the augmented cloned brains of various animals, often chickens or dogs.

“Good evening...MIDORI SALO. Arrival time: 11:46 PM. Expected arrival time 11:30. DISCIPLINARY ACTIONS: not recommended.” The gate computer had always been kind to her. It rarely suggested any consequences for her frequent tardiness.

“Thank you,” she said in her pitch-modulated tones as she walked through the gap in the energy field separating her home from the less opulent world outside. Her voice was meant to always sound pleasant and accommodating, but she’d had it long enough to know how to express how she really felt to anyone who paid attention enough to see. She knew a range of things: awkwardness, embarrassment, shame over nearly everything.

She was always sweet, if a little awkward, to robots and other machines. They had to be polite, it was in their programming, but they didn’t have to like anyone either, because nobody cared how their obedient machines felt. Most people couldn’t even recognize the limited range of emotions the AIs had because they didn’t know or care they were there. Midori knew better because she was the same as them. She was made up, like the city. Like the air on the Moon.

She thought about a lot of things but she didn’t usually speak very much except to seem pleasant or say things she had to say. Because of this she was well liked and was praised. She didn’t like it, but it didn’t seem to be in her nature to retreat. She always felt obligated to stay places she didn’t want to be, but that had to be fine. That was what she’d always known and she wasn’t the type to ever get what she wanted when she even knew what that was.

The Boss was rich enough to understand the value of flaunting a conspicuously nihilist aesthetic. The walkway to the dome was flanked only by one certain type of tree, one of the extremely few that could eke out subsistence in the dead lunar soil. They were each spaced just-so, perfectly distant from each other that anyone passing by them would be forced to wonder just how much some landscaper had been paid for being so ostentatiously tasteful. Apart from that there was only plain grayness surrounding the gently self-illuminated, perfectly straight path. The Dome was in the center of the perfectly round estate and was suitably massive. The surface was able to show any color or image the owner desired, and the Boss kept it pearly and white at all times.

The seamless door opened and she hurried inside. Her name flashed all around her on the walls in a stylized typographical ballet then turned to arrows as she scampered down the dimly lit hallway, directing her to the kitchen. “Silence enhancing” frequency tones played to accompany her every footstep and breath. The walls reacted with faintly pulsing dots of lights that seemed far away. The entire place was crafted to be a classy reactive experience, based on the trendy theory that if the environment is animated by the people in it, the people in it will be animated by the environment. Reciprocal Reactivity was the name of the concept. It was at least half malarkey, but it was very hip and very expensive so, of course, the Boss had it.

The kitchen exhibited no such frills. The Chef would have probably brained anyone who would try to put them in with a skillet. He had no time for such hoity-toity foppery. He demanded a clean, efficient kitchen with lots of equipment, lots of food and absolutely no gimmicks. Despite a quick temper, he was in no way a ‘mean’ man, and Midori had always liked him. He tried to look out for her in his way. He at least wanted the best for her. He was very serious and very passionate about his cooking, but he was sweet.

He was loud, though, “Salo, there you are! Jesus, put that wine down. Why didn’t you take a cart or even a bag? Fuck it, never mind. Listen, Goki has been looking for you. I think you’re supposed to be around for that whole...event thing.” He got less loud at the end and seemed careful about how he said it.

Goki was a new robot with an old brain, one that knew how to do everything The Boss wanted the exact way he liked it done, so he kept it around. Its state-of-the-art body, sleek and cutting-edge though it was, exhibited the same minimalism as the rest of the place, though there was an element of old-fashioned, functional ugliness to it. It was tall, red (an uncommon allowance of adornment, reserved only for Goki among the rest of the countless house robots), angular, covered in many useful appendages and with one big round blue eye-like sensor array on each side of its head.

“SALO! You are required in the BALLROOM for the CONTACT EXPERIMENT in 23 MINUTES,” Goki informed her in its static and authoritative way as soon as she found it, roaming the hallway with purposeful automated seriousness. “Take THIS. HRMMMMMN,” it buzz-hummed while it waited. While it made Midori wait. Eventually, a small white orb came zipping down the hallway and dropped a longish box into her arms. It contained a stylish, slightly modest and slightly distracting state-of-the-art dress. It wasn’t the kind of thing she felt comfortable wearing, but it would probably look fine. She just wished it was up to her.

She took it to her room and changed. She checked herself in the screen in the wall and was pleasantly surprised at how much she liked it. It made her feel a small bit more confident until she remembered what she was wearing it for. All of her ‘sisters’ would be there with her for the Contact Experiment. They would all share their horror, fear, and happiness that it wasn’t happening to them.

Moving lights all over the dress twitched all around over her as she made her way to the primary ballroom. They started as cute but the closer she got the more they became distracting, almost maddening. Every unpredictable blink caught her eye and even though she lived where it was always night and she was always surrounded by countless blinking lights in the sky and on every wall in the dome, the lights on her dress felt like a countdown.

As she got closer to the ballroom there were fewer robots and more Bozos, and they all stared as she passed. They all knew better than to try to touch her, though several had tried before. They were no longer around, but she could see in their twisted mutant grinning leers that many still wanted to.

The Bozos could usually do more or less whatever they wanted, and almost nobody would be able to do anything about it if they even cared enough to try. Like Midori, they were protected. They oversaw the whole of the remnants of human society. Part police, part ghoulish morale officers. They were strikingly grotesque and exuded an engineered aura that caused the average person to pay little mind to them unless the Bozos wanted their attention, which was almost never good. Whatever trick, radiation, chemical or otherwise, they used to maintain their shadowy non-presence didn’t affect her brain, so Midori found herself constantly cognizant of them. They didn’t bother her, but they were always looking at her, like the one who stood by the tall round door to the ballroom, permanent red smile arching over half up his tiny, blue spotted head, his yellow cruel eyes leering over his bulbous nose. He knew what waited for her inside, and seemed to be enjoying the feelings she was dreading.

The Primary ballroom was obviously huge and extravagantly simple in decor, apart from the hundreds of people standing around it, blandly garish in their finery, out to see themselves seen on such a momentous night, more important than the other parties. They were important people who she recognized, and their friends that she didn’t but knew who they were. Very few of them held any interest for her; she was meant to gather alongside her ‘Sisters’. The other clones like her. They were gathered on a gracefully curving balcony several stories up, with a good view of the Core, a massive glowing red orb of particle-wave matter, which sometimes seemed like dull Martian stone and at others seemed like an ethereal vision of red roundness. Alive yet stone.

Midori joined her ‘Sisters’ on the balcony, and no matter how she tried to mingle- make simple talk with the 2 dozen girls that looked identically special, just like her, her eyes kept being drawn upwards towards the Core. The only one of her sisters she didn’t see there was her favorite, Justine. The only one she felt like she was really friends with. It made her fear the worst. She didn’t know very many of the sisters, she hadn’t had many chances to meet them outside of infrequent gatherings, largely for study and medical purposes. A lot could go wrong in the life of even a regular clone, let alone very important, very experimental and very expensive models like her line, and considering their importance to the operation of the ZIPP-0 bio-computer, a lot of data had to be collected. She and her sisters weren’t the first generations of their line, but they were the first line where more than 10% had lived past puberty without dying from pituitary and pineal malformations, or being harvested for system components. Midori had never met any of the prior generations of her line, but she’d never heard anyone say they were all dead.

Bic was the only other one of herself that she really knew at all. They’d met 3 years back. They were at least good acquaintances, and she wasn’t talking to anyone so Midori went and stood by her. She had different eyes, purple and a little bigger than Midori’s and had her hair up, whereas Midori kept hers down.

“Have you seen Justine?”

Bic shook her head. Midori could see she was biting her lip. This was a hugely important night, for everyone alive in the remaining human spread, but maybe most of all for the Sisters. This night was the culmination of everything they had been created for. All the ones that were still alive were there. The only explanations for Justine’s absence were either she was already dead, or she’d been selected. Midori probably would have heard something if she were dead, which made her incredibly anxious.

“You live here?” Bic asked. Midori nodded. “How can something so big be so boring?”

Midori laughed a little before catching herself. She lowered her voice, which never got very loud in the first place, “The Boss thinks boring is classy. Interesting things make him feel less interesting.”

“That probably makes sense to him.”

“I promise it does. I hope that matters.” Midori said, smiling. She’d forgotten how fatalistic and funny Bic was. She was surprised she hadn’t been culled yet for her attitude. It wasn’t far off from Midori’s, but Bic always tended to be more vocal about it. The Sisters very rarely spoke up or tried to make their individual personalities known. It never worked out for anybody except the people who controlled them and made them.

“What’s it even like to live with that guy?” Bic asked, meaning The Boss.

Midori took a big breath and thought. She had to. Nobody ever asked her about that, and she had a lot to say but was cautious about the parts she was willing to let out, “I’ve heard a lot worse about others. He’s...not always bad. For the most part, he barely notices me. He likes to talk. He’s very excited about the fact that he exists.”

“They all are. Mine is. At least yours has done something with his life. Mine just wants to die comfortably with as much of his mommy’s money left as he can. I don’t get it. I wish I could leave him. I hate him, but... there are worse things than hating someone. I just wish he wasn’t so useless. It wouldn't make me stop hating him, but he’d have something to...justify it? I’m sorry. At least ‘The Boss,’” Bic chuckled bleakly as she said his name, “has built something. At least he did something with his dad’s money, right?”

She was right, but it didn’t make Midori feel any better about him. Bic’s “caretaker” was Bilfer Attims, whose mother had made a fortune off a settlement from her mother’s death in an asteroid mining accident and had built an empire purchasing and mining the same asteroids. Bilfer was notoriously stupid, even amongst the people forced by economic class into being his peers.

Midori looked up at the core and the hastily assembled rig of supports and catwalks effortlessly kept in place by hover-spheres. Purple coated, green visor-ed scientists and sciencey-student types hovered about, adjusting the impressively clunky and ungraceful machines that looked so out of place in the Dome’s simple clean arrangement. The area directly above the Core was blocked off by blue velvet curtains, and Midori knew that meant a surprise. One she was already fairly certain of and dreading.

Midori nervously swallowed and asked, “Do you think they picked Justine for the experiment?”

“Of course they did. She’s from the University. She knows that shit more than anybody. It had to be her, we never had a chance. We’re just spare parts.” Bic had never been comforting. Midori admired that about her.

The lights gently lowered and the murmuring of the crowd died out. It was time for the Contact Experiment, but this was The Boss’ house, and he had dumped an incredible amount of money into the project, so he got to give a speech first. He extricated himself from the crush of sycophants he’d been speaking to and took his place, drink in hand, at the center of the room. He was older than he looked, though his many surgeries and genetic rejuvenation procedures had left him in the strange state of so many privileged older people Midori had seen, with an older face, older eyes made to look artificially younger, leaving them dangling in a perpetual unnatural look. He pulled it off better than most, partly because he had excellent taste in doctors, partly because, despite all the things Midori despised about him as essentially her jailer, he had more self-confidence and a sense of personal flair than most of the soulless hangers-on and pleasers around him. Even a semblance of personality was enough to set him apart in the circles he moved in. Midori didn’t think he was even that interesting but compared to the aggressively fawning rich people who wanted a better rich person to latch onto he was a tall glass of water. His hair was as perfect as his suit was not, garish, burgundy paisley that absolutely defied the sense of tasteful understatement that defined the things he surrounded himself with.

He was smiling, and let his smile hang over the assembly for a while, taking in the moment and forcing it back on everyone else before starting his speech, “Tonight, friends, is a monumental night, and not just for us. For all humanity.” Applause. “Now I don’t need to tell you that we’re standing on the edge of history. Our planet is dead, rendered nearly unlivable by an unknown event that came right out of the sky and wiped out most of our species, but you know what? We survived! Here we are, living on the Moon, something our ancestors saw every night but never dared to dream we could live on, in the biggest, most beautiful city humanity has ever created. But we do. A lot of suffering and sacrifice was required, and there were a lot of people who said it couldn’t be done, who tried to stand in the way of development, advancement, progress, but where are they now? And where are we?”

Applause. The Boss gave a big smile and let them clap until, with a wave of his hand, they didn’t. He continued. “We’ve come a long way, but we can’t stop here! Our options are limitless, but only if we chase them to the ends of the universe! Of course, I’m talking about The Pig.” There were a few chuckles in the audience. What a character, they must have thought, using the vulgar slang word for The Hogsong. “We see it every day, of course. It hangs over us, always there, slowly becoming more complete. I happen to be old enough to remember when it was nothing but a bare skeletal sketch of what it is now. Something you'd have to blink to see. Now, well you can see it through the roof even now.” Which was true. He'd probably even pulled a few strings to ensure it would be so close and so directly over his dome. “The largest most complex structure ever assembled by humans, last hope of an endangered species, a triumph of our ingenuity, you’ve heard it all before. Something that big and impressive, something made to contain a whole new society, needs a big impressive computer built to manage a world, to create an experience for the people in that world, and to manage the life functions of, eventually, up to 10 million people one day! Until it finds worlds suitable for human habitation. Not just one, mind you. We used to have just one world. Look how that worked out. We need to spread out as far as humanly possible, and in doing so we will prove just how much is humanly possible!”

Massive applause for that. Midori looked over at Bic, who positively vibrated sardonic bemusement. It was inspirational. Midori kept faking a smile, but Bic made her feel like she at least didn’t have to put any effort into it. Less need to shave off another little sliver of her soul to animate the pretense of pleasantness.

“I am endlessly proud of what the brave researchers at Utopia University have done, as we all should be. The ZIPP-0 Bioplex solved the fundamental problems facing the creation of more than artificial intelligence, but something far above and beyond. Tonight…” He winked, “we get to find out if it works. I'd hope so. I've invested a lot of money into it.” The crowd laughed dutifully. “I’m told all the preparations are complete, it’s been a pleasure grandstanding at you for a while. But...before we go on let me speak sincerely for a moment. Tonight is the result of a lot of hard work by a lot of incredibly gifted, very well funded people. Their work is not only exemplary but extraordinary…”

While the Boss kept going on in self-aggrandizing platitudes, Bic turned to Midori and whispered, “We’re allowed to drink, right?” Midori nodded. “Do you mind?” Midori didn’t mind. She snuck down the stairs, trying to be as unassuming as possible, feeling nervous at being the only one who wasn’t paying attention. Then she realized that nobody cared. She slipped a bottle from one of the several catering stations. The robot didn't care. She slipped back up and handed it to Bic who took a drink. “Thanks.”

“I didn't realize how much I was going to want a drink until you mentioned it,” Midori said and took a sip, then a much larger one. The speech came to a close about 11 minutes later by her internal clock. The lights refocused onto the core and the catwalks surrounding it. The curtains vanished in a holographic glitter. Justine hung in the air with her hands across her chest. She wore a white plastic suit, probably Imploplex-7, with massive cables streaming out the back and terminating in two plumes out her back to massive clunky computing vats that scientists on the catwalks carefully monitored. Midori recognized the one standing closest to the ledge as Dr. Doug Smith from Utopia University. He was head of the Core Component department, and if Midori thought she had a father she'd probably think it was him, but she didn't. She zoomed her artificial eyes in on Justine. Her face was blank in the way Justine’s always was when she was trying to ignore something. Midori had seen it plenty of times. Her lips were pursed tightly. She was breathing slowly and mindfully. She was as calm as she could force herself into being.

Dr. Smith’s voice came amplified for everyone to enjoy, “Core status: 96% inert. Submerge the control medium.” A steel orb came down above the core and extended a long arm with a black orb on the end. It sparkled and vanished, its matter sucked like pixels in a vacuum into the orb in a way that didn't look natural. It looked like a display glitch in reality. There was no sound. The Core began to pulsate a deep, organic-looking crimson in its center.

“Control medium inserted. Interface activated. Core is stable. 89% inert.” some faceless voice described.

“Decrease field locks. Subject status?” Smith said, watching very seriously.

“Psychological dampers are at full. No contamination. Vitals optimal.”

“She’s ready. Release psychological buffers and begin the descent.”

The anti-gravity suspension rings that circled Justine began to slowly lower her down towards the core. She remained placid, but Midori could see her chest tighten in fear. She looked over to Bic, who was biting her lip, also fixed on Justine, as were the rest of the Sisters.

When she was halfway down to the Core, Dr. Smith waved his hands, slowing the descent, “Status?”

“Psychological contamination has begun. 13% at this point. Absolute borderline in 5 meters. She’ll be unsalvageable beyond that point.” another anonymous voice responded. A girl’s.

“Core status?”

“Nominal. Field restrictions at last level. Exo-Ego Field interfaces online. All meta-psychological systems ready for data flash.”

“Proceed 3.5 meters,” Smith said. The rings lowered her further until Justine’s bare toes were just over the core. Midori saw her struggling to keep her eyes closed, trying to contain whatever she was experiencing.

“Absolute borderline passed. Psychological contamination at 60%. Subject’s ego deterioration is 7% below optimal but within parameters.”

“Apply buffers at quarter power. Release Exo-Ego Field containment.” Smith directed. There was a loud snapping sound, and purple plumes that looked like solid electricity snaked up from the core and wove around Justine’s toes.

“Field released. It’s locked onto the subject.”

“Charge to control medium and initiate Exo-Ego submerge.”

“Engaged.”

The purple tendrils stopped writhing and phased through her feet, becoming a purple orb of viscous energy between Justine and the core. Her face showed none of the plain defiant serenity it had before. Her eyes were still shut tightly but her mouth was monstrously wide. Whatever sounds she was making were not amplified for the audience, and even Midori’s enhanced hearing couldn’t discern them over the almost musical crackling squeal of the brightening core. More purple tendrils danced up around it and slowed, hovering around the orb.

“Subject has begun integration with the Core.” Subject. They never called her by name.

Justine clutched her chest tight enough to tear skin. She was screaming, words or shrieks Midori couldn’t tell. Large screens floating around the ballroom displayed various close-ups on the core, the scientists, readouts no one could understand, but none showed any sort of detail of Justine. That wasn’t what the audience was supposed to be looking at or caring about.

“Increase submersion speed by the second factor. She’s doing just fine.” Smith said. He and the girl’s voice were the only ones who even called her “she”. That didn’t make Midori feel any better about them.

The rings moved her down faster, but she never went past the orb. Instead, she seemed to be almost disintegrating, feeding the pulsing ball of energy. More snaking electrical tentacles came up and less phased through than they seemed to hungrily begin to absorb portions of her legs, but not biologically, almost like it was transmuting her into its digital self, but it still made the skin around the points of contact bubble up. More. Midori zoomed her eyes in. The resolution was much grainier, but it seemed like tiny arms, complete with fully fingered hands, were growing out of the Imploplex suit, stretching longingly towards the places of her dissolution. Midori wanted to stop watching, so she took another drink instead. Not watching was not an option. Not yet. Horror had her too transfixed. Sadness had her paralyzed. She held the bottle out to Bic, who didn't even notice it there until Midori nudged her arm with it. She apologized and took a hefty swig. Midori could see it was getting hard for her to watch.

It was getting even harder for Justine. Midori looked back and saw she was down to her hips but still alive and still in agony, even more than before. It kept going until it was up to her neck, and then she opened her eyes. They had always been unique. They didn’t affect a natural look. They were dot-matrix LCD, with a slow refresh rate, a throwback stylistic choice in the style of an old Earth LCD display. There was something so unnaturally beautiful about them. Midori had always loved them. She saw them one last time, showing the truest and unnatural digital display of brokenness and hopelessness. Midori couldn’t bear to see them like that, but they didn’t last long. Soon she was completely absorbed into the orb along with the suit. The wires which had been streaming from the back snapped and swung aside like dead weight.

“Subject dissolution complete. Control medium reads 100% retention.”

Dr. Smith took a big breath. He wasn’t smiling yet. “Close the Exo-Ego Field.”

There was a snap like tinkling, windchime thunder and a flash. The glowing orb shattered into countless sparks and sunk into the core, which began to hum and change shades to a deep, multilayered mostly opaque crimson.

“Field closed. Control medium dived into the core. Exo-Ego integration commencing. Complete. Exo-Ego integrated at 95%.”

Dr. Smith nodded. “Operation is a success. ZIPP-0 is online.”

They applauded so much, the sound filled the whole ballroom and made Midori want to vomit. She looked to Bic, who looked back. All the composure and bemusement was gone from her face, replaced by ghastly, horrified blankness. Neither had anything to say. Midori took her hand and led her down the stairs to the catering kiosk closest to the furthest of the ballroom's six balconies. They each grabbed a bottle of Martian wine and escaped outside while the people began to mingle and discuss the historic moment they’d both seen.

3 notes


Text

State Of Enterprise AI In India 2019 | By AIM & BRIDGEi2i (Part III)

Note: This is the third part of a three-part series of our study ‘State of Enterprise AI In India 2019’ brought to you in association with BRIDGEi2i. Check Part I of the three-part series here. Check Part II of the three-part series here.

Evolving AI Delivery Models

Gradually, we shall see the rise of AI as a separate function, and it will be tightly coupled with the solutions/services a particular enterprise offers. AI has given rise to three distinct delivery engagement models: AI-as-a-Service, AI-as-a-Solution and AI-as-a-Product. The modern AI stack consists of infrastructure components that include computer hardware, algorithms, and data. From managing the building blocks to implementing production-level AI solutions that can generate results within a period of 7-8 months, AI delivery models will significantly change the enterprise AI landscape.

a) AI-as-a-Service: Industry experts forecast that AIaaS will soon evolve as the preferred delivery model that enables rapid, cost-saving onboarding of AI without being too heavily reliant on in-house AI experts. The AIaaS consumption model provides enterprises with readily available cognitive capabilities and accelerators, allowing their teams to focus on the business problem without having to worry about the underlying AI hardware/infra components. Another instance of AIaaS is when solution providers list several of their Deep Learning and Machine Learning algorithms through a tie-up with AWS Machine Learning Marketplace.

b) AI-as-a-Solution: Solution providers deliver production-level AI solutions, custom-built around narrow business problems. In this delivery model, AI solution providers and boutique vendors follow a collaborative approach: co-development of solutions that involve industry domain expertise. The solutions are deployed on-premise or on cloud infrastructure. By following an agile methodology and making the build cycles more iterative, solution providers co-create solutions that deliver business value.

c) AI-as-a-Product: AI-as-a-Product is when an AI software product can be configured according to the needs of an enterprise. An example of AI as a product would be BRIDGEi2i’s Watchtower & Recommender, which provides granular insights with real-time alerts. These products can be configured as per the specific needs of an organization and will also have to work seamlessly with other software products on the enterprise shelf.

The critical decision point will be choosing the right AI partner with domain experts, analysts, and AI solutions engineering teams who can build the best solution in the shortest span of time. We believe that with the shift in the scale of adoption, the role of AI Solution Delivery Leader will evolve as the one who enables the creation of production-grade AI-based automation solutions and lends business value.

“Companies today prefer the pay-as-you-go model where every service is compartmentalized and available to them as per their consumption. Cloud is a major reason behind this requirement being in vogue today.”
Anil Bhasker, Business Unit Leader, Analytics Platform-India/South Asia, IBM for Analytics India

Rise Of AI-As-A-Service Economy

According to Dell Technologies’ Digital Transformation Index, India is the most digitally mature country in the world. With the third-largest startup ecosystem and a strong developer base, India is on the cusp of a massive digital transformation. As digital organizations move further up the ladder to harness the potential of AI across enterprises, the AI-as-a-Service (AIaaS) model will become a necessity in the near future, provisioning pre-built accelerators, data access, and the right AI tools and APIs as the self-service trend gathers momentum. Veteran IT leader Kris Gopalakrishnan posited that AI and machine learning could be as big as the $177bn IT services industry.

Given how the AI disruption is here to stay, we see India playing a more significant role in strengthening the global AI ecosystem. India is the third-largest startup ecosystem across the globe, with 40,000 AI developers. We are also the youngest country in the world, which means that not only do we have the talent base to fuel transformation, we can also upskill and align the talent to harness the potential of AI. Home to some of the largest service providers, global system integrators and consulting companies, India is poised to become a global AI hub.

“India is no longer a test-bed for AI applications but is championing world-class solutions. By being early to market, having a strong machine learning expertise and developing powerful specializations around specific business functions, mid-size AI service providers are now well-positioned to deliver business value and specialization across the globe.”
Prithvijit Roy, CEO & Co-founder, BRIDGEi2i

The burgeoning AI services market, led by global consulting majors like Accenture, Deloitte, PwC, KPMG, and EY, is complemented by mid-size and niche AI service providers like Mu Sigma, BRIDGEi2i, Cartesian Consulting and Fractal Analytics that offer high-quality AI expertise and in-built accelerators: pre-designed and pre-validated solutions which can accelerate the “on-ramp” to AI effectively. With stronger competencies, an AI talent base, and specialization in specific verticals, mid-sized firms are well-positioned to provide more value to larger enterprise customers.

Which Sectors Are Frontrunners in AI Adoption & Where’s The Momentum Building

BFSI, due to its sheer size, is the largest adopter of AI. We see Machine Learning, Computer Vision, and robotic processing getting very widely adopted in BFSI. Telecom, Retail, Healthcare & Manufacturing are the next sectors digitizing their processes, and they will be the torchbearers soon.

1. Banks Are Detecting Fraud and Managing Risk With AI

The FSI sector generates enormous amounts of data, mostly in transactional form, which can be analyzed in real time to make smart decisions. For banks, one primary application of AI is the automated underwriting of loans based on a customer’s entire history of transactions and credit scores. This would also eliminate human bias and errors that usually occur in loan approvals. AI is on top when it comes to security and fraud identification. By analyzing millions of transactions, machine learning systems are helping financial organizations identify anomalous patterns in transactions, which is reducing cases of fraud and strengthening trust among parties.
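
To make the idea of spotting anomalous transactions concrete, here is a minimal Python sketch. It is only an illustration, not how any bank or vendor named in this study works: production fraud systems typically learn from many features per transaction, whereas this example looks at amounts alone and flags outliers with a robust (median-based) z-score. The function name flag_anomalies, the threshold of 3.5, and the sample amounts are all assumptions made for the example.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return the indices of amounts that look anomalous.

    Uses the modified z-score, which is built on the median and the
    median absolute deviation (MAD) so that a single huge value does
    not distort the baseline it is compared against.
    """
    med = median(amounts)
    # Median absolute deviation: the typical distance from the median.
    mad = median([abs(a - med) for a in amounts])
    if mad == 0:
        # All values (or most of them) are identical; nothing to flag.
        return []
    return [
        i
        for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Hypothetical transaction amounts for one account (illustrative data).
transactions = [120.0, 95.5, 130.0, 110.0, 99.0, 105.0, 9800.0, 115.0]
suspicious = flag_anomalies(transactions)
print(suspicious)  # indices of transactions worth a closer look
```

A median-based score is used here because a plain mean and standard deviation would themselves be pulled upward by the very outlier being tested, which can hide it in a small sample.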