#Scrape Popular Retailer data

Scraping data from e-commerce websites can be a complex task involving navigating through web pages, handling different types of data, and adhering to website terms of service. It's important to note that scraping websites without permission may be against their terms of service or even illegal. However, if you have permission or the website provides…

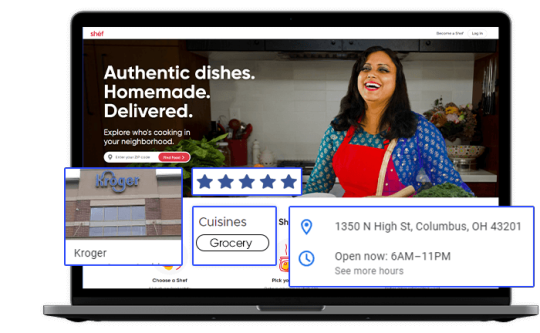

Tapping into Fresh Insights: Kroger Grocery Data Scraping

In today's data-driven world, the retail grocery industry is no exception when it comes to leveraging data for strategic decision-making. Kroger, one of the largest supermarket chains in the United States, offers a wealth of valuable data related to grocery products, pricing, customer preferences, and more. Extracting and harnessing this data through Kroger grocery data scraping can provide businesses and individuals with a competitive edge and valuable insights. This article explores the significance of grocery data extraction from Kroger, its benefits, and the methodologies involved.

The Power of Kroger Grocery Data

Kroger's extensive presence in the grocery market, both online and in physical stores, positions it as a significant source of data in the industry. This data is invaluable for a variety of stakeholders:

Kroger: The company can gain insights into customer buying patterns, product popularity, inventory management, and pricing strategies. This information empowers Kroger to optimize its product offerings and enhance the shopping experience.

Grocery Brands: Food manufacturers and brands can use Kroger's data to track product performance, assess market trends, and make informed decisions about product development and marketing strategies.

Consumers: Shoppers can benefit from Kroger's data by accessing information on product availability, pricing, and customer reviews, aiding in making informed purchasing decisions.

Benefits of Grocery Data Extraction from Kroger

Market Understanding: Extracted grocery data provides a deep understanding of the grocery retail market. Businesses can identify trends, competition, and areas for growth or diversification.

Product Optimization: Kroger and other retailers can optimize their product offerings by analyzing customer preferences, demand patterns, and pricing strategies. This data helps enhance inventory management and product selection.

Pricing Strategies: Monitoring pricing data from Kroger allows businesses to adjust their pricing strategies in response to market dynamics and competitor moves.

Inventory Management: Kroger grocery data extraction aids in managing inventory effectively, reducing waste, and improving supply chain operations.

Methodologies for Grocery Data Extraction from Kroger

To extract grocery data from Kroger, individuals and businesses can follow these methodologies:

Authorization: Ensure compliance with Kroger's terms of service and legal regulations. Authorization may be required for data extraction activities, and respecting privacy and copyright laws is essential.

Data Sources: Identify the specific data sources you wish to extract. Kroger's data encompasses product listings, pricing, customer reviews, and more.

Web Scraping Tools: Utilize web scraping tools, libraries, or custom scripts to extract data from Kroger's website. Common tools include Python libraries like BeautifulSoup and Scrapy.

Data Cleansing: Cleanse and structure the scraped data to make it usable for analysis. This may involve removing HTML tags, formatting data, and handling missing or inconsistent information.

Data Storage: Determine where and how to store the scraped data. Options include databases, spreadsheets, or cloud-based storage.

Data Analysis: Leverage data analysis tools and techniques to derive actionable insights from the scraped data. Visualization tools can help present findings effectively.

Ethical and Legal Compliance: Scrutinize ethical and legal considerations, including data privacy and copyright. Engage in responsible data extraction that aligns with ethical standards and regulations.

Scraping Frequency: Exercise caution regarding the frequency of scraping activities to prevent overloading Kroger's servers or causing disruptions.
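
The cleansing and storage steps above can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the record fields (`name`, `price`), the sample rows, and the `products` table are assumptions made for the example and do not reflect Kroger's actual page or data layout.

```python
import html
import re
import sqlite3

TAG_RE = re.compile(r"<[^>]+>")

def clean_text(raw):
    """Strip HTML tags, decode entities, and collapse whitespace."""
    if raw is None:
        return None
    text = html.unescape(TAG_RE.sub(" ", raw))
    return " ".join(text.split()) or None

def parse_price(raw):
    """Return the first number in the string as a float, or None if missing."""
    match = re.search(r"\d+(?:\.\d+)?", (raw or "").replace(",", ""))
    return float(match.group()) if match else None

def clean_record(record):
    """Normalize one scraped row (hypothetical 'name' and 'price' fields)."""
    return {
        "name": clean_text(record.get("name")),
        "price": parse_price(record.get("price")),
    }

def store(records, conn):
    """Insert rows that have a name into a hypothetical 'products' table."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL)")
    rows = [(r["name"], r["price"]) for r in records if r["name"]]
    conn.executemany("INSERT INTO products VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)

# Hypothetical scraped rows, including missing and inconsistent values.
raw_rows = [
    {"name": "<b>Mac &amp; Cheese</b> 7 oz", "price": "$3.49"},
    {"name": "  Large Eggs\n", "price": None},
    {"name": None, "price": "$1.00"},
]
cleaned = [clean_record(r) for r in raw_rows]
conn = sqlite3.connect(":memory:")
stored = store(cleaned, conn)
```

Rows without a name are dropped before storage, while a missing price is kept as `NULL` so it can be handled later during analysis.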

Conclusion

Kroger grocery data scraping opens the door to fresh insights for businesses, brands, and consumers in the grocery retail industry. By harnessing Kroger's data, retailers can optimize their product offerings and pricing strategies, while consumers can make more informed shopping decisions. However, it is crucial to prioritize ethical and legal considerations, including compliance with Kroger's terms of service and data privacy regulations. In the dynamic landscape of grocery retail, data is the key to unlocking opportunities and staying competitive. Grocery data extraction from Kroger promises to deliver fresh perspectives and strategic advantages in this ever-evolving industry.

#grocerydatascraping#restaurant data scraping#food data scraping services#food data scraping#fooddatascrapingservices#zomato api#web scraping services#grocerydatascrapingapi#restaurantdataextraction

Introduction

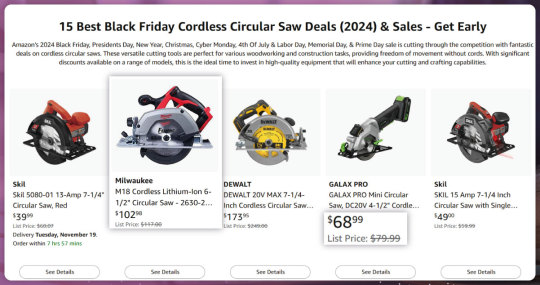

In the age of data-driven decision-making, businesses can greatly benefit from extracting data directly from mobile apps. The Amazon mobile app, rich with product listings, reviews, prices, and competitor data, provides valuable insights for sellers and marketers looking to refine their sales strategy. Using Python, a robust programming language for data scraping and analysis, businesses can automate the process of collecting and analyzing this data, making it easier to stay competitive and understand market trends. In this guide, we'll explore how scraping Amazon mobile app data with Python can enhance your sales strategy and lead to better pricing and product insights.

Why Scrape Data from Mobile Apps?

With the increase in mobile usage, e-commerce platforms like Amazon have seen a surge in traffic through mobile apps. Mobile-specific data can often differ from website data, making mobile app data scraping essential for a comprehensive understanding of consumer behavior. Data extracted from mobile apps can reveal product popularity, pricing changes, discounts, and customer sentiment, which directly impact sales strategy and customer experience. By automating mobile app data scraping, you can track competitors, evaluate customer trends, and make data-driven decisions quickly.

Benefits of Amazon Mobile App Data Scraping with Python

Using Python for mobile app data scraping offers multiple advantages:

Automation: Python allows for building automated scripts that continuously scrape Amazon's app for updated data.

Efficiency: Python libraries like BeautifulSoup and Scrapy are designed to extract data efficiently, saving time and resources.

Data Analysis: Python’s data analysis libraries (like pandas) are great for processing and analyzing scraped data for actionable insights.

Key Data Points to Extract from the Amazon Mobile App

When you scrape data from mobile apps like Amazon’s, several key data points can help you gain a competitive advantage:

Product Listings: Basic details like product names, descriptions, images, and ASINs (Amazon Standard Identification Numbers).

Pricing Information: Including prices, discounts, and historical price trends for accurate pricing intelligence.

Customer Reviews and Ratings: Valuable insights into customer satisfaction, product performance, and potential product improvements.

Competitor Listings: Information on competing products, their prices, and popularity.

Stock Levels and Availability: Helps in understanding demand, tracking product shortages, and planning inventory.

Step-by-Step Guide to Amazon Mobile App Data Scraping with Python

Step 1: Setting Up Your Python Environment

Step 2: Extract Android Apps with Python

Using Python, you can extract data from mobile applications, particularly Android apps, by reverse-engineering their APIs or by using automation tools like Selenium. This is the starting point for extracting Amazon mobile data with Python.

Step 3: Understanding Amazon’s API Structure

While Amazon’s public API may have limitations, you can explore indirect ways to access data. For example, you may simulate mobile API calls, but be cautious and ensure compliance with Amazon’s terms of service. Alternatively, use tools like Selenium to automate interactions and extract data without directly querying APIs.

Step 4: Building a Basic Python Scraper
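
As a rough illustration of this step, the sketch below parses product titles and prices out of an HTML fragment. It makes no network request and is not tied to Amazon: the `SAMPLE_HTML` markup and its `product`, `title`, and `price` class names are hypothetical. It uses the standard library's `html.parser` to stay dependency-free; a library such as BeautifulSoup would typically make the same job shorter.

```python
from html.parser import HTMLParser

# Hypothetical markup standing in for a fetched listing page.
SAMPLE_HTML = """
<div class="product"><span class="title">USB-C Cable</span><span class="price">$9.99</span></div>
<div class="product"><span class="title">Phone Stand</span><span class="price">$14.50</span></div>
"""

class ProductParser(HTMLParser):
    """Collect the text of 'title' and 'price' spans inside 'product' divs."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # name of the span currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "div" and css_class == "product":
            self.products.append({})
        elif tag == "span" and css_class in ("title", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field and self.products:
            self.products[-1][self._field] = data.strip()
            self._field = None

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

def parse_products(page_html):
    """Return a list of {'title': ..., 'price': ...} dicts from the HTML."""
    parser = ProductParser()
    parser.feed(page_html)
    parser.close()
    return parser.products

products = parse_products(SAMPLE_HTML)
```

Keeping the parsing logic separate from any fetching code makes it easy to test against saved pages before pointing it at a source you are authorized to scrape.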

Leveraging Data Insights for a Winning Sales Strategy

1. Price Comparison and Competitive Pricing Strategy

Using Python to scrape and analyze Amazon app data allows you to implement a price comparison strategy. By regularly monitoring competitor prices, you can adjust your own pricing to remain competitive. This strategy is particularly helpful for price-sensitive products or seasonal items.

Pricing Intelligence: Python’s data analysis capabilities enable you to develop a pricing intelligence system that dynamically updates pricing based on competitor trends. This intelligence can help retailers that scrape retail mobile apps with Python maximize profits and maintain competitiveness.
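
One simple way to turn monitored competitor prices into a pricing rule is sketched below. The rule itself (undercut the competitor median by a small percentage, but never drop below a floor) and the names `suggest_price`, `floor_price`, and `undercut` are assumptions chosen for illustration, not a recommended pricing policy.

```python
from statistics import median

def suggest_price(own_price, competitor_prices, floor_price, undercut=0.01):
    """Suggest a price slightly under the competitor median.

    Falls back to own_price when no competitor data is available and
    never returns less than floor_price (for example, cost plus margin).
    """
    if not competitor_prices:
        return own_price
    target = median(competitor_prices) * (1 - undercut)
    return round(max(target, floor_price), 2)

# Hypothetical scraped competitor prices for one product.
new_price = suggest_price(12.00, [10.00, 11.00, 13.00], floor_price=9.00)
```

Using the median rather than the minimum keeps a single outlier listing from dragging the suggested price down.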

2. Inventory Optimization and Demand Forecasting

With mobile app scraping, you can track stock levels, monitor availability, and predict demand patterns. Extracting mobile app data with Python makes it easier to gather information about popular products, helping you adjust your inventory to meet consumer demand effectively.

3. Customer Sentiment Analysis

Customer reviews on the Amazon app offer valuable insights into customer sentiment. Using Python, you can extract and analyze this data to identify recurring complaints or positive feedback. With Mobile App Scraping Services, you can continuously monitor reviews, which can improve product development, marketing, and customer service.

Sentiment Analysis with Python: By leveraging natural language processing (NLP) libraries such as TextBlob or Vader, you can analyze review text for positive or negative sentiments. This insight is crucial for understanding customer satisfaction levels and areas for improvement.
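
To show the general idea of scoring review text, here is a deliberately tiny lexicon-based scorer. It is a stand-in, not the TextBlob or VADER API: the `POSITIVE` and `NEGATIVE` word lists are hand-picked for the example, and a real pipeline would rely on one of those libraries for far better coverage.

```python
import re

# Hypothetical mini-lexicons; real sentiment libraries ship much larger ones.
POSITIVE = {"great", "good", "love", "excellent", "fast", "sturdy"}
NEGATIVE = {"bad", "broken", "slow", "poor", "terrible", "refund"}

def sentiment_score(review):
    """Return a score in [-1, 1]: (positive - negative) / matched words."""
    words = re.findall(r"[a-z']+", review.lower())
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total

def label(review):
    """Map the numeric score to a coarse label."""
    score = sentiment_score(review)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

reviews = [
    "Great cable, fast charging, love it",
    "Arrived broken and support was slow",
    "Good price but poor packaging",
]
labels = [label(r) for r in reviews]
```

Aggregating these labels per product over time is one way to spot recurring complaints.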

4. Product Trend Analysis

Monitoring trends on the Amazon app provides insights into which products are gaining popularity. With the extracted Amazon app data and Python’s analytics tools, you can identify trending products and adjust your inventory or marketing strategy accordingly.

Tools and Libraries for Amazon Mobile App Data Scraping with Python

Selenium: A browser automation tool with Python bindings that interacts with web elements, well suited to scraping dynamic pages. Native mobile apps are more commonly automated with a tool such as Appium.

BeautifulSoup: An essential library for parsing HTML and XML documents, useful for extracting static page elements.

Pandas: A data analysis library to organize and analyze scraped data.

Compliance and Ethical Considerations

When scraping data from mobile apps, especially the Amazon app, it's crucial to follow ethical practices. Amazon’s terms and conditions prohibit unauthorized data scraping, so always consider using their official API where possible and consult legal experts if necessary.

Key Compliance Points

Avoid Overloading Servers: Schedule scraping at intervals to prevent high traffic.

Respect Robots.txt: Adhere to the platform’s scraping policies.

Secure User Consent: Use data in compliance with GDPR and other privacy laws.
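
The first two points can be illustrated with the standard library. In this sketch the robots.txt content, the `example-bot` user agent, and the `example.com` URLs are placeholders; the `Throttle` class is a hypothetical helper that enforces a minimum gap between requests.

```python
import time
from urllib.robotparser import RobotFileParser

def build_robot_rules(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

class Throttle:
    """Block so that consecutive calls to wait() are min_interval seconds apart."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now

# Placeholder robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Crawl-delay: 2
"""

rules = build_robot_rules(ROBOTS_TXT)
allowed = rules.can_fetch("example-bot", "https://example.com/products/1")
blocked = rules.can_fetch("example-bot", "https://example.com/cart/checkout")
delay = rules.crawl_delay("example-bot")
```

A scraper would call `Throttle(delay).wait()` before each request and skip any URL for which `can_fetch` returns `False`.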

Scaling Your Data Extraction with Mobile App Scraping Services

As you expand your scraping activities, leveraging a reliable Mobile App Scraping Service like those offered by Actowiz Solutions can simplify and scale your data operations. Our services automate the complex process to scrape Android app data and provide structured, actionable insights tailored to your business needs. This scalable approach allows you to focus on analysis rather than the technical aspects of data collection.

Conclusion

Scraping Amazon mobile app data with Python offers businesses a unique advantage in understanding market dynamics and improving sales strategies. By automating data extraction, you can track competitors, analyze customer feedback, and refine pricing strategies, all essential for a successful, data-driven approach in today’s competitive market. With Actowiz Solutions, you have access to expert Mobile App Scraping Services, customized solutions for price comparison, pricing intelligence, and more.

Ready to transform your data strategy? Contact Actowiz Solutions today and start leveraging powerful insights to boost your sales performance. You can also reach us for all your mobile app scraping, data collection, web scraping, and instant data scraper service requirements.

Source: https://www.actowizsolutions.com/amazon-mobile-app-data-scraping-python-boost-sales-strategy.php

Weedmaps.com Scraping Service

Unlock the Power of Cannabis Market Insights with Weedmaps.com Scraping Service by DataScrapingServices.com. 🌿📊

The cannabis industry is booming, with businesses striving to outpace competitors and connect with consumers more effectively. At Data Scraping Services, our Weedmaps.com Scraping Service is designed to provide cannabis retailers, suppliers, and marketers with the data they need to succeed.

Introduction

Weedmaps.com is a leading platform in the cannabis sector, offering comprehensive details about dispensaries, products, delivery services, and more. Our Weedmaps.com Scraping Service extracts critical data from Weedmaps, helping businesses make informed decisions and improve their strategies. Whether you are a dispensary owner, cannabis supplier, or a market researcher, having access to well-organized data can elevate your growth and profitability.

List of Data Fields Extracted

Our service can provide:

- Dispensary Information: Names, locations, operating hours, and contact details.

- Product Listings: Strain names, THC/CBD levels, product descriptions, and prices.

- Delivery Services: Coverage areas, fees, and estimated delivery times.

- User Reviews and Ratings: Insights into customer preferences and feedback.

- Promotions and Deals: Discounts, special offers, and event details.

Benefits of Weedmaps.com Scraping

- Competitive Advantage: Understand market trends, pricing, and consumer demand to stay ahead of competitors.

- Enhanced Marketing: Develop targeted campaigns by analyzing customer preferences and popular products.

- Improved Inventory Management: Align your stock with market demand by tracking trending strains and products.

- Data-Driven Decisions: Leverage accurate insights to optimize business strategies and improve ROI.

- Save Time: Automate the process of data collection, allowing you to focus on strategy and execution.

Why Choose DataScrapingServices.com?

- Accuracy: We ensure that the data we provide is precise and up-to-date.

- Customization: Tailored scraping solutions to meet your unique business needs.

- Reliability: Trust us to deliver data securely and on time.

Best Weed Data Scraping Services Provider

Weedmaps Data Scraping

CBD Email List

Scraping Dispensaries from Leafly.com

Scraping Marijuana Dispensaries from Potguide.com

Marijuana Dispensaries Scraping from Allbud.com

Weedmaps Dispensaries Email List

Extract Leafly Cannabis Product Data

Scraping Weed Dispensaries From Weedmaps.com

Weedmaps Product Listings Scraping

Weedmaps.com Scraping Service

CBD Product Data Extraction from Leafly

Best Weedmaps.com Scraping Service in USA:

Oklahoma City, Las Vegas, Albuquerque, Sacramento, Boston, Houston, San Antonio, Long Beach, Fresno, Austin, Virginia Beach, Charlotte, Orlando, Raleigh, Indianapolis, Colorado, San Francisco, Atlanta, Seattle, Columbus, Milwaukee, Philadelphia, Bakersfield, Mesa, Washington, San Jose, Jacksonville, Chicago, Omaha, Memphis, Dallas, Wichita, Kansas City, San Diego, Tulsa, New Orleans, El Paso, Nashville, Fort Worth, Louisville, Denver, Tucson and New York.

Conclusion

The cannabis industry thrives on innovation and insights. With the Weedmaps.com Scraping Service from DataScrapingServices.com, businesses can unlock the potential of their marketing and operational strategies. Gain the competitive edge you need with reliable data tailored to your objectives.

📩 Email: [email protected]

🌐 Learn More: DataScrapingServices.com

#weedmapsdatascraping#cannabisdataservices#cannabisindustrytools#datadrivenmarketing#webscrapingservices#datascraping#weedmapsdataanalysis

How Does Retail Store Location Data Scraping Help with Competitor Analysis?

In today's competitive business landscape, understanding customer behavior, preferences, and shopping patterns has become essential for staying ahead. Location data, particularly the geographic placement of retail stores, has emerged as a valuable resource. Businesses can gather detailed information on retail store locations, competitor analysis, and potential market opportunities by employing location data scraping. This article explores how retail store location data scraping can revolutionize business insights, fueling strategies in marketing, expansion, and customer engagement.

What is Retail Store Location Data Scraping?

Retail store location data scraping is the process of extracting location-related information about retail outlets from online sources, such as websites, social media, and mapping platforms. This data might include store addresses, opening hours, customer reviews, sales information, and geographical coordinates. By aggregating this information, businesses can create a comprehensive, regularly updated dataset of retail locations and competitor positioning.

With the rise of accessible tools and platforms, companies can scrape store location data legally and ethically, provided they adhere to the terms of service and data usage policies of the sources they collect from. This data helps businesses analyze the spatial and demographic dynamics influencing their industry and adapt accordingly.

Benefits of Retail Store Location Data Scraping

There are numerous advantages to retail store location data extraction, making it a critical tool for business intelligence. Here are a few transformative benefits it offers:

a. Enhanced Competitor Analysis

By extracting retail location data, businesses can gain insights into competitor density in a particular area. Knowing where competitors are located helps identify market saturation, optimal locations for new stores, and areas with potential demand gaps. Competitor analysis through location data allows companies to make informed decisions on store placements and strategic positioning, giving them a competitive edge.
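
Competitor density around a candidate site can be estimated from scraped coordinates with the haversine formula. The sketch below is a minimal example; the store records, the `lat` and `lon` field names, and the 5 km radius are hypothetical.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two latitude/longitude points."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))

def competitors_within(site, stores, radius_km):
    """Return the stores located within radius_km of the (lat, lon) site."""
    return [
        s for s in stores
        if haversine_km(site[0], site[1], s["lat"], s["lon"]) <= radius_km
    ]

# Hypothetical competitor locations scraped from a store locator.
stores = [
    {"name": "Store A", "lat": 40.01, "lon": -75.0},
    {"name": "Store B", "lat": 41.00, "lon": -75.0},
]
nearby = competitors_within((40.0, -75.0), stores, radius_km=5)
```

Counting `nearby` for several candidate sites gives a quick, comparable measure of local saturation.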

b. Improved Customer Targeting

Retail location data scraping services enable companies to analyze store locations in relation to demographic information. By understanding where competitors operate and the customer demographics in those areas, businesses can tailor marketing campaigns to target the right customer segments. This localized approach improves customer engagement and enhances the chances of converting potential customers into loyal patrons.

c. Optimization of Supply Chain and Inventory

Knowing nearby competitors and market demand helps businesses optimize their supply chains. Companies can analyze the geographic distribution of stores and identify high-demand areas to avoid stockouts and maintain an efficient inventory. Additionally, retailers can streamline distribution routes by strategically planning warehouses or fulfillment centers based on proximity to high-density retail locations.

d. Identification of Market Expansion Opportunities

How Does Retail Location Data Influence Strategic Decision-Making?

Retail location data is critical in strategic decision-making, providing insights into customer behavior, competitor positioning, and market demand. Businesses can optimize site selection, tailor marketing efforts, and enhance operational efficiency by analyzing this data.

a. Site Selection and Real Estate Investment

Retail location data provides actionable insights into site selection, aiding in the decision-making process for real estate investments. Businesses often look for high foot traffic areas, proximity to transportation hubs, or locations within commercial centers to open new stores. Location data can indicate real estate trends, popular neighborhoods, and potential growth areas, enabling companies to make data-driven decisions in leasing or purchasing properties.

b. Regional Marketing Campaigns

With retail store location data, businesses can implement localized marketing strategies. For instance, by knowing store locations relative to customer demographics, retailers can design campaigns tailored to the preferences and needs of a specific region. Location data allows for hyper-targeted advertising and event planning, ensuring that marketing initiatives resonate with local customers and have a higher impact on engagement and sales.

c. Enhanced Understanding of Customer Foot Traffic

Analyzing location data helps retailers understand foot traffic patterns around their stores. Businesses can assess potential cross-traffic by scraping data on nearby competitors and complementary businesses (such as cafes near bookstores or gyms near health stores). Insights into customer movement can help retailers adjust business hours, staffing, or promotional strategies to capture more traffic during peak times.

Transforming Customer Experience with Location Data

Leveraging location data can transform customer experience by enabling personalized recommendations, real-time promotions, and location-based loyalty rewards. This targeted approach deepens customer engagement, enhances satisfaction, and encourages repeat visits, creating more meaningful connections between customers and brands.

a. Personalized Customer Recommendations

Businesses can offer personalized recommendations by correlating store location data with customer preferences. For instance, retail apps can send notifications about exclusive in-store events or new arrivals at the nearest outlet. Leveraging proximity data to engage customers with personalized messages enhances their shopping experience and encourages repeat visits.

b. Real-Time Promotions and Discounts

Retailers can use store location data to offer real-time discounts and promotions. When customers are near a specific location, businesses can trigger push notifications or text messages with exclusive offers, motivating them to visit the store. Retailers can also analyze the effectiveness of these real-time campaigns by examining foot traffic patterns in response to promotions.

c. Loyalty Programs and Local Events

Location data allows businesses to customize loyalty programs based on customer location. Retailers can organize events or workshops at specific stores or provide location-based rewards for frequent visits. For example, customers who frequently shop at a particular location could receive targeted loyalty incentives, fostering a stronger customer relationship and increasing the likelihood of store visits.

Leveraging Competitor Data for Market Positioning

Location data scraping can also extend beyond a company's stores to include competitor data, which offers valuable insights for positioning strategies:

a. Identifying Potential Threats and Opportunities

Understanding where competitors are located helps businesses identify potential threats and areas of opportunity. For instance, if a competitor has recently opened multiple stores in a region, this could indicate a trend or emerging demand. By staying updated on competitor expansions, businesses can act proactively by establishing their presence in the same region or targeting other untapped areas.

b. Price Comparison and Product Range Analysis

Retailers can collect location-based data on competitor pricing and product availability. By understanding the pricing and product strategies in different locations, businesses can refine their offerings and adjust prices competitively. They can also stock products that competitors lack in certain locations, attracting customers seeking specific items unavailable elsewhere.

c. Enhancing Customer Perception with Differentiation

Location data analysis helps retailers differentiate themselves by avoiding oversaturation in high-competition areas. For instance, if a particular type of store is common in an area, a business might emphasize unique products or experiences to stand out. Analyzing competitor location data enables retailers to position themselves as distinct and create a unique brand identity that resonates with local customers.

Technological Tools and Techniques for Retail Location Data Scraping

Numerous tools and techniques can assist businesses in scraping retail location data:

Web Scraping Software: Tools like BeautifulSoup and Scrapy can automate the extraction of data from competitor websites, mapping platforms, and online directories.

APIs and Mapping Platforms: Platforms like Google Maps, Foursquare, and Yelp offer APIs that provide access to location data and customer reviews. Businesses can use these APIs to gather detailed information on retail store locations and customer experiences.

GIS (Geographic Information System) Analysis: GIS software allows businesses to analyze spatial data, helping them visualize patterns and gain insights into location-based factors that impact business performance.

Data Visualization Tools: Tools like Tableau and Power BI enable businesses to visualize location data, making it easier to identify trends, patterns, and strategic insights for decision-making.

Ethical and Legal Considerations in Location Data Scraping

While location data scraping offers valuable business insights, companies must ensure they operate within ethical and legal boundaries. They should prioritize user privacy and comply with data protection laws such as GDPR. It's crucial to use data sources with clear service terms and avoid scraping personal information. By maintaining transparency in data collection, businesses can harness the power of location data while respecting privacy rights.

Conclusion

Retail store location data scraping has the potential to transform business insights by offering a detailed view of market dynamics, customer preferences, and competitive landscapes. From optimizing site selection and supply chain efficiency to creating personalized marketing campaigns, location data can fuel various strategic initiatives that drive growth. As technology evolves, retailers have more access to sophisticated tools that can help them gather, analyze, and apply location data effectively. By integrating location data into their decision-making processes, businesses can improve customer engagement, enhance operational efficiency, and stay ahead in an increasingly competitive market.

Transform your retail operations with Retail Scrape Company's data-driven solutions. Harness real-time data scraping to understand consumer behavior, fine-tune pricing strategies, and outpace competitors. Our services offer comprehensive pricing optimization and strategic decision support. Elevate your business today and unlock maximum profitability. Reach out to us now to revolutionize your retail operations!

Source: https://www.retailscrape.com/retail-store-location-data-scraping.php

Scrape Blinkit Product Data and Images for Competitive Analysis

Why Should You Scrape Blinkit Product Data and Images for Competitive Analysis?

In the rapidly evolving world of e-commerce, data scraping has emerged as a vital tool for businesses, marketers, and developers. One platform where data scraping holds immense potential is Blinkit, a leading quick commerce service in India. Blinkit (formerly Grofers) offers a wide range of products, including groceries, home essentials, and other daily necessities, catering to the fast-paced, demand-driven nature of modern consumer behavior. By extracting Blinkit product and image data, businesses can gain valuable insights into consumer preferences, track product trends, and improve their competitive edge in the market. This article delves into the importance of scraping Blinkit product data and images, its benefits, and how it can be leveraged effectively.

Why Scrape Blinkit Product Data and Images?

Blinkit operates in the quick-commerce sector, which focuses on delivering goods quickly and efficiently, often within an hour. The platform offers an extensive catalog of products, from fresh groceries to electronics and personal care items. A customized Blinkit data scraping service can provide businesses with useful information to enhance marketing strategies, inventory management, and customer experience.

1. Product Insights and Market Research

Blinkit Product Data and Image Scraping gives businesses detailed insights into product offerings, including pricing, product descriptions, specifications, and images. By analyzing this data, companies can understand market trends, compare prices with competitors, and identify gaps in their product portfolio. This information can guide businesses in deciding which products to prioritize or discontinue and optimizing inventory and pricing strategies.

Additionally, analyzing Blinkit product data helps businesses identify popular items, seasonal products, and emerging trends. Companies can leverage this information to adjust their marketing campaigns, stock more in-demand products, and align their offerings with current consumer preferences.

2. Competitive Analysis

The competitive landscape in the e-commerce space is constantly changing. By scraping Blinkit product data, businesses can monitor their competitors' offerings, pricing strategies, and promotions or discounts. This gives companies the advantage of adjusting their strategies in real time, staying ahead of the competition.

For instance, by tracking Blinkit's prices and product offerings, a retailer can analyze how their prices compare to those of Blinkit. If Blinkit introduces a new promotion, such as a discounted grocery bundle, businesses can evaluate whether similar offers could be introduced on their platforms. This dynamic analysis allows businesses to remain competitive and relevant in a fast-moving market.

3. Personalization and Targeting

Collecting Blinkit product information and images allows businesses to customize their marketing efforts more effectively. By extracting detailed information about the products a customer is purchasing or searching for on Blinkit, companies can create personalized offers, targeted ads, and customized recommendations based on a user's preferences. This can significantly enhance the customer experience, boosting engagement and conversion rates.

For example, if a particular customer consistently purchases organic food items, the business can use the scraped data to recommend other organic products or send personalized discounts and offers related to organic goods. This level of personalization helps drive customer loyalty and increase sales over time.

4. Image Scraping for Product Visualization

Product images are crucial in online shopping, influencing purchase decisions and customer satisfaction. Scraping product images enables businesses to create attractive product catalogs, enhance visual appeal, and maintain up-to-date images for their e-commerce platforms. It also allows businesses to compare image quality, product presentation, and promotional materials across various products.

Accurate and high-quality product images are essential for retailers to build customer trust. Scraped image datasets help businesses check that the visuals they offer match customer expectations. Moreover, companies can use scraped images to compare the presentation of similar products, refining their own listings for greater appeal.

5. Optimizing SEO and Content Marketing

Search engine optimization (SEO) is essential for driving traffic to online stores. Scraping Blinkit product data and images can be an excellent resource for optimizing SEO strategies. By extracting relevant keywords from product descriptions and using these to create SEO-rich content, businesses can improve their search rankings and attract more customers.

Moreover, scraping product images and associated alt text can help businesses optimize image SEO. Properly tagged images and optimized product descriptions ensure that search engines can index the content properly, increasing the chances of the products appearing in search results. This drives more organic traffic to the site and increases visibility in a crowded digital marketplace.
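As a rough illustration of the keyword step described above, the sketch below counts the most frequent words across a handful of product descriptions. The stopword list, the `top_keywords` helper, and the sample descriptions are all assumptions made for this example; a real SEO workflow would likely use a fuller stopword list and phrase-level analysis.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list for the example.
STOPWORDS = {"a", "an", "and", "the", "of", "for", "with", "in", "to"}


def top_keywords(descriptions, n=5):
    """Return the n most common non-stopword terms across product descriptions."""
    counts = Counter()
    for text in descriptions:
        # Lowercase and keep alphabetic tokens only.
        words = re.findall(r"[a-z]+", text.lower())
        counts.update(w for w in words if w not in STOPWORDS and len(w) > 2)
    return counts.most_common(n)


# Made-up descriptions standing in for scraped product text.
sample_descriptions = [
    "Organic whole milk",
    "Organic brown eggs",
    "Whole wheat bread",
]
keywords = top_keywords(sample_descriptions, n=2)
```

The resulting terms can then be reviewed by hand before they are worked into titles, descriptions, or image alt text.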

6. Inventory Management and Stock Monitoring

Inventory management is one of the most critical aspects of running an online store. By scraping Blinkit product data, businesses can monitor the stock levels of various products in real time. This information is valuable in preventing stockouts or overstocking, which can hurt a business's profitability and reputation.

With access to up-to-date product information and stock status from Blinkit, companies can plan their purchasing and restocking activities more efficiently. Additionally, businesses can anticipate demand for certain products and plan their inventory based on trends in product availability, reducing the risk of running into supply chain issues.

7. Price Comparison and Dynamic Pricing

Price optimization is a crucial aspect of any retail strategy. By scraping Blinkit product data, businesses can monitor and track prices of similar items, adjusting their pricing strategies accordingly. With real-time price comparisons, businesses can decide whether to adjust their prices, offer discounts, or bundle products to remain competitive.

Dynamic pricing, which adjusts product prices based on demand, competition, and market conditions, is becoming increasingly popular in e-commerce. Scraping Blinkit's prices enables businesses to implement dynamic pricing strategies, which can increase profitability by ensuring that prices remain competitive without sacrificing margins.
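A minimal sketch of one possible dynamic pricing rule is shown below: price slightly under a competitor, but never below a margin floor. The `PricingRule` fields, the 10% margin, and the 1% undercut are assumptions chosen for illustration, not values from any real pricing system.

```python
from dataclasses import dataclass


@dataclass
class PricingRule:
    unit_cost: float          # what the item costs the retailer
    min_margin: float = 0.10  # hypothetical floor: keep at least 10% over cost
    undercut: float = 0.01    # hypothetical: aim 1% below the competitor


def suggest_price(competitor_price: float, rule: PricingRule) -> float:
    """Return a price just under the competitor, clamped to the margin floor."""
    floor = rule.unit_cost * (1 + rule.min_margin)
    target = competitor_price * (1 - rule.undercut)
    return round(max(floor, target), 2)


# Competitor is well above cost, so the retailer can undercut slightly.
price_when_room = suggest_price(100.0, PricingRule(unit_cost=60.0))
# Competitor is below cost, so the margin floor wins.
price_at_floor = suggest_price(50.0, PricingRule(unit_cost=60.0))
```

Clamping to a floor is what keeps the rule from chasing a competitor into unprofitable territory, which is the "without sacrificing margins" part of the idea.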

Legal and Ethical Considerations of Scraping Blinkit Data

While Web Scraping Grocery Delivery App Data offers numerous advantages, it is essential to note that scraping should be conducted responsibly and within legal boundaries. Unauthorized data scraping from a website may violate terms of service and copyright laws, leading to legal repercussions.

To ensure compliance, businesses should:

1. Respect Terms of Service: Review Blinkit's terms and conditions to determine whether scraping is permitted. If it is not, businesses should seek permission or explore alternative methods for obtaining data.

2. Limit the Frequency of Requests: Scraping should be done at reasonable intervals to avoid overloading Blinkit's servers. Excessive scraping may be seen as malicious behavior and lead to IP blocking or legal action.

3. Protect Privacy and Data: Any data scraped from Blinkit should be handled responsibly, ensuring it does not violate user privacy or intellectual property rights.

4. Use Ethical Scraping Tools: Employ scraping tools that are efficient, ethical, and respectful of Blinkit's resources. The goal is to extract relevant data without impacting the platform's performance or reputation.
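The first two points can be sketched with Python's standard library: `urllib.robotparser` checks whether a path is allowed, and a small throttle spaces out requests. The robots.txt text, the `example-bot` user agent, and the `Throttle` class are placeholders invented for this example, and a robots.txt check does not replace reading the site's terms of service.

```python
import time
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt content; a real one would be fetched from the site.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
"""


def build_robot_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a rule object without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class Throttle:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval_s: float):
        self.min_interval_s = min_interval_s
        self._last = None

    def wait(self) -> float:
        """Sleep if the previous call was too recent; return seconds slept."""
        slept = 0.0
        if self._last is not None:
            remaining = self.min_interval_s - (time.monotonic() - self._last)
            if remaining > 0:
                time.sleep(remaining)
                slept = remaining
        self._last = time.monotonic()
        return slept


rules = build_robot_rules(EXAMPLE_ROBOTS_TXT)
allowed = rules.can_fetch("example-bot", "https://example.com/products/milk")
blocked = rules.can_fetch("example-bot", "https://example.com/checkout/cart")
delay = rules.crawl_delay("example-bot")  # seconds requested by the site, if any
```

In use, a scraper would call `throttle.wait()` before every request and skip any URL for which `can_fetch` returns `False`.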

Conclusion

Scraping Blinkit product data and images provides businesses with valuable insights into the market, helps improve competitive strategies, and optimizes customer engagement. By using Grocery Delivery App Data Scraper responsibly, companies can gain a deeper understanding of product trends, consumer behavior, and pricing dynamics, all essential for making informed business decisions. Additionally, it helps streamline inventory management, enhance SEO strategies, and maintain an attractive, up-to-date product catalog.

However, to avoid potential issues with Blinkit, scraping must be approached ethically and within the legal framework. By adhering to best practices and ensuring that data scraping activities do not disrupt Blinkit's operations or violate its terms of service, businesses can make the most of this powerful tool while maintaining a positive relationship with the platform.

In the fast-paced world of e-commerce, data scraping is no longer just a technical skill—it's a strategic tool that can make or break a business's success. Scraping Blinkit product data and images offers endless possibilities for innovation and growth in the quick-commerce sector.

Experience top-notch web scraping service and mobile app scraping solutions with iWeb Data Scraping. Our skilled team excels in extracting various data sets, including retail store locations and beyond. Connect with us today to learn how our customized services can address your unique project needs, delivering the highest efficiency and dependability for all your data requirements.

Source: https://www.iwebdatascraping.com/scrape-blinkit-product-data-and-images-for-competitive-analysis.php

#BlinkitProductAndImageDataExtraction#ScrapeBlinkitProductDataAndImages#BlinkitDataScrapingService#GroceryDeliveryAppDatasets#WebScrapingGroceryDeliveryAppData


Text

Popular tool to extract data from Shoe Palace

Shoe Palace is an American footwear retailer specializing in various types of shoes, including sneakers, fashion shoes, and children’s shoes. It has established a position in the footwear market through its multi-brand selection, limited releases, and collaboration series.

Introduction to the scraping tool

ScrapeStorm is a new generation of web scraping tool based on artificial intelligence technology. It is the first scraper to support Windows, Mac, and Linux operating systems.

Preview of the scraped result

1. Create a task

(2) Create a new smart mode task

You can create a new scraping task directly on the software, or you can create a task by importing rules.

How to create a smart mode task

2. Configure the scraping rules

Smart mode automatically detects the fields on the page. You can right-click a field to rename it, add or delete fields, modify data, and so on.

3. Set up and start the scraping task

(1) Run settings

Depending on your needs, you can set Schedule, IP Rotation & Delay, Automatic Export, Download Images, Speed Boost, Data Deduplication, and Developer.

4. Export and view data

(2) Choose the format to export according to your needs.

ScrapeStorm provides a variety of methods for exporting data locally, such as Excel, CSV, HTML, TXT, or a database. Professional Plan and above users can also post directly to WordPress.

How to view data and clear data


Text

Allegro is an e-commerce website based in Poland. It is operated by Allegro.pl Sp. z o.o. The site was founded in 1999 and purchased by the online auction company QXL Ricardo plc in March 2000. In 2007, QXL Ricardo plc changed its name to Tradus plc, and Naspers acquired it in 2008. Naspers sold Allegro Group to an investor group consisting of Cinven, Permira, and Mid Europa Partners in October 2016.

Allegro is well known in Poland. In January 2017, Alexa Internet ranked it 251st among the world's most popular internet sites and 5th in Poland. Allegro had more than 11 million users in 2011 and 16 million users in 2017. In 2021, Allegro acquired the online retailer Mimovrste.


Text

What is big data analysis?

Big data analysis refers to the process of examining large and complex datasets, often involving high volumes, velocities, and varieties of data, to uncover hidden patterns, unknown correlations, market trends, customer preferences, and other useful business information. The goal is to gain actionable insights that can inform decision-making and strategic planning.

Key Components of Big Data Analysis

Data Collection:

Sources: Data is collected from a wide range of sources, including social media, sensors, transaction records, log files, and more.

Techniques: Involves using technologies like web scraping, data APIs, and IoT devices to gather data.

Data Storage:

Scalable Storage Solutions: Due to the large volume of data, scalable storage solutions like Hadoop Distributed File System (HDFS), NoSQL databases (e.g., Cassandra, MongoDB), and cloud storage (e.g., Amazon S3, Google Cloud Storage) are used.

Data Processing:

Distributed Processing: Tools like Apache Hadoop and Apache Spark are used to process data in parallel across a distributed computing environment.

Real-Time Processing: For data that needs to be analyzed in real-time, tools like Apache Kafka and Apache Flink are employed.

Data Cleaning and Preparation:

Data Cleaning: Involves removing duplicates, handling missing values, and correcting errors to ensure data quality.

Data Transformation: Converting data into a format suitable for analysis, which may involve normalization, aggregation, and other preprocessing steps.
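The cleaning and transformation steps above can be sketched in a few lines of plain Python. The record layout (`sku`, `price`), the choice to fill missing prices with the mean, and the helper names are assumptions for this example; production pipelines would more often use a library such as pandas or Spark.

```python
def clean_records(records):
    """Drop exact duplicates, then fill missing prices with the mean known price."""
    seen = set()
    unique = []
    for rec in records:
        key = (rec["sku"], rec["price"])
        if key not in seen:
            seen.add(key)
            unique.append(dict(rec))  # copy so the input is not mutated
    known = [r["price"] for r in unique if r["price"] is not None]
    mean_price = sum(known) / len(known) if known else 0.0
    for r in unique:
        if r["price"] is None:
            r["price"] = mean_price
    return unique


def min_max_normalize(values):
    """Rescale values to the range [0, 1]; constant input maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Made-up sample with one duplicate row and one missing value.
raw = [
    {"sku": "A", "price": 10.0},
    {"sku": "A", "price": 10.0},
    {"sku": "B", "price": None},
    {"sku": "C", "price": 30.0},
]
cleaned = clean_records(raw)
normalized = min_max_normalize([r["price"] for r in cleaned])
```

Filling with the mean is only one option; dropping incomplete rows or using a per-category median may suit other datasets better.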

Data Analysis:

Descriptive Analytics: Summarizes historical data to understand what has happened in the past.

Diagnostic Analytics: Investigates why something happened by identifying patterns and relationships in the data.

Predictive Analytics: Uses statistical models and machine learning techniques to forecast future trends and outcomes.

Prescriptive Analytics: Recommends actions based on the analysis to optimize business processes and outcomes.
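To make the difference between descriptive and predictive analytics concrete, here is a small sketch: `describe` summarizes what happened, and a least-squares line fitted by hand forecasts the next value. The weekly sales figures are invented for the example, and a straight-line fit is only the simplest possible stand-in for the statistical and machine learning models mentioned above.

```python
from statistics import mean


def describe(values):
    """Descriptive analytics: summarize historical values."""
    return {
        "count": len(values),
        "mean": mean(values),
        "min": min(values),
        "max": max(values),
    }


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical weekly unit sales for weeks 1 to 4.
weeks = [1, 2, 3, 4]
sales = [10, 12, 14, 16]

summary = describe(sales)               # what happened
slope, intercept = fit_line(weeks, sales)
forecast_week_5 = slope * 5 + intercept  # predictive: what may happen next
```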

Data Visualization:

Tools: Tools like Tableau, Power BI, and D3.js are used to create visual representations of the data, such as charts, graphs, and dashboards.

Purpose: Helps in understanding complex data through visual context, making it easier to identify patterns and insights.

Machine Learning and AI:

Algorithms: Machine learning algorithms, such as clustering, classification, regression, and deep learning, are applied to discover patterns and make predictions.

Libraries: Popular libraries include TensorFlow, PyTorch, Scikit-learn, and MLlib (Spark’s machine learning library).

Benefits of Big Data Analysis

Improved Decision-Making: By providing data-driven insights, organizations can make more informed decisions.

Operational Efficiency: Identifying inefficiencies and optimizing processes to reduce costs and improve productivity.

Enhanced Customer Experience: Understanding customer behavior and preferences to personalize services and improve satisfaction.

Risk Management: Detecting and mitigating risks, such as fraud detection in financial services.

Innovation: Identifying new opportunities and trends to drive innovation and stay competitive.

Challenges in Big Data Analysis

Data Quality: Ensuring the accuracy, completeness, and reliability of data.

Data Integration: Combining data from diverse sources and formats.

Scalability: Managing and processing the sheer volume of data.

Privacy and Security: Protecting sensitive data and complying with regulations.

Skill Gap: The need for skilled professionals who can work with big data technologies and tools.

Examples of Big Data Analysis Applications

Healthcare: Analyzing patient data to improve diagnosis and treatment, and to predict disease outbreaks.

Retail: Personalizing marketing efforts and optimizing supply chain management.

Finance: Detecting fraudulent activities and managing risk.

Manufacturing: Predictive maintenance to reduce downtime and improve efficiency.

Telecommunications: Optimizing network performance and enhancing customer service.

In summary, big data analysis involves a comprehensive approach to collecting, storing, processing, and analyzing large datasets to extract valuable insights and drive better business outcomes.


Text

How Can You Master Web Scraping for Complex Sites with Advanced APIs?

Introduction

In today’s digital economy, data is king. The ability to extract, analyze, and leverage data from complex websites enables businesses to stay competitive, identify new market trends, and optimize pricing strategies. However, mastering data extraction with web scraping APIs requires advanced skills, especially when the target is a structured, intricate site such as an e-commerce, travel, or real estate platform. This blog dives deep into how you can master data extraction for complex sites using web scraping API solutions, which are invaluable for overcoming site complexity and scraping restrictions. With web scraping APIs, you can efficiently extract data from complex websites and leverage it to make data-driven decisions that drive success and growth.

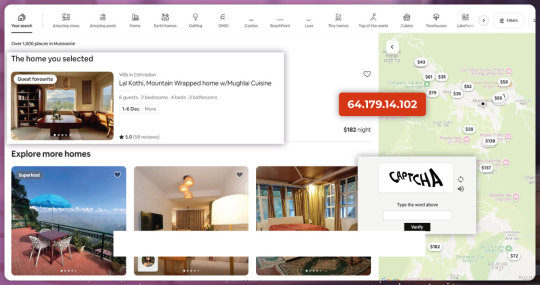

Why Focus on Data Extraction from Complex Websites?

Complex websites, such as travel aggregators, e-commerce marketplaces, and multi-layered B2B platforms, are often data-rich but difficult to scrape due to dynamic elements, CAPTCHA protection, anti-bot measures, and heavy usage of JavaScript. Yet, these sites hold valuable data for pricing intelligence, competitor analysis, trend prediction, and customer behavior tracking. Leveraging web scraping APIs for data extraction allows companies to capture this information more effectively, bypassing many common barriers.

According to a 2024 survey, the global web scraping services market is expected to reach $6.5 billion by 2032, with a projected growth rate of 14.7%. This surge is mainly due to rising demand for data extraction from complex websites in finance, retail, travel, and real estate, where companies need accurate, real-time data to stay competitive.

Challenges of Extracting Data from Complex Websites

Dynamic Content: Many websites use JavaScript frameworks, like Angular or React, which load content dynamically. This can complicate data extraction, as traditional scraping techniques may miss data that loads only when users scroll or interact.

Anti-Bot Measures: Websites implement tools such as CAPTCHA and IP blocking to deter scraping. These measures make it essential to use APIs and smart rotation techniques to avoid detection.

Rate Limits: Websites may throttle requests after a specific number, potentially resulting in blocked IPs or delayed responses.

Page Structure Changes: Complex websites often update their structure, breaking scrapers or leading to incomplete data extraction. This requires adaptable, resilient scraping methods that can handle ongoing changes.

Mastering these challenges requires advanced tools and techniques, particularly API-based web data extraction solutions.
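One common way to cope with the rate limits described above is to retry with exponential backoff, waiting longer after each throttled attempt instead of hammering the server. The sketch below assumes a caller-supplied `fetch` function that raises a hypothetical `RateLimited` exception (for instance on an HTTP 429 response); neither name comes from any particular library.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical signal that the server asked the client to slow down."""


def fetch_with_backoff(fetch, max_attempts=5, base_delay_s=1.0, sleep=time.sleep):
    """Call fetch(); on RateLimited, wait 1x, 2x, 4x... the base delay and retry."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # give up after the final attempt
            delay = base_delay_s * (2 ** attempt)
            # Add up to 10% random jitter so parallel clients do not retry in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))


# Demonstration with a fake fetch that is throttled twice before succeeding.
_calls = {"n": 0}


def flaky_fetch():
    _calls["n"] += 1
    if _calls["n"] <= 2:
        raise RateLimited()
    return "ok"


recorded_delays = []
result = fetch_with_backoff(flaky_fetch, sleep=recorded_delays.append)
```

Passing `sleep` as a parameter keeps the example fast to run and easy to test; real code would leave the default `time.sleep` in place.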

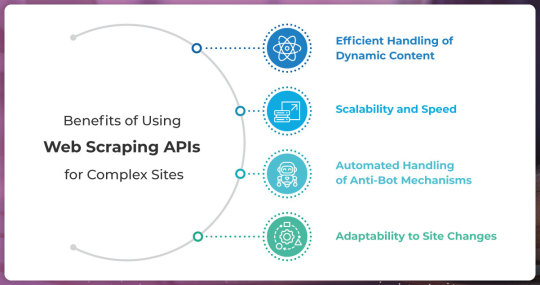

Benefits of Using Web Scraping APIs for Complex Sites

APIs tailored for web scraping offer several advantages:

Efficient Handling of Dynamic Content: Advanced APIs can render JavaScript and capture dynamically loaded content, ensuring accurate data extraction.

Scalability and Speed: APIs can handle large volumes of requests, speeding up the process for comprehensive datasets.

Automated Handling of Anti-Bot Mechanisms: Many APIs come with built-in features to bypass CAPTCHA, manage IP rotation, and adjust request headers to reduce the chance of detection.

Adaptability to Site Changes: Some APIs automatically detect and adjust to site structure changes, helping maintain continuity in data extraction workflows.

How to Master Web Scraping for Complex Sites with Advanced APIs

Selecting the right API is the first step. Some popular APIs for web scraping include:

Actowiz API: Ideal for complex websites, it offers extensive data extraction support, particularly its ability to handle dynamic content.

Real Data API: Offers a JavaScript-based solution for scraping complex websites. It is capable of handling CAPTCHAs and IP rotation.

iWeb Data API: Designed to manage complex sites with its adaptive learning feature, it’s highly effective for extracting data from complex websites like travel and e-commerce platforms.

These APIs can streamline data collection for price comparison, pricing intelligence, and customer insights.

Leverage Cloud-Based Scraping for Scalability

Cloud-based scraping solutions can handle the demands of large-scale scraping projects. For instance, companies can extract data from geographically dispersed websites without overwhelming local servers by deploying cloud servers in multiple regions, such as AWS or Google Cloud. This is especially useful for multinational enterprises needing regional pricing strategy and market analysis data.

Implement Data Quality Checks

When scraping complex sites, data accuracy is paramount. To ensure quality:

Automate Data Validation: Check for completeness, duplicates, and consistency.

Monitor for Structural Changes: Advanced APIs can often detect shifts in HTML structure, but manual checks on key data points are essential for accuracy.
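The automated validation step might look something like the sketch below, which checks scraped rows for completeness, duplicates, and basic consistency. The required fields, the use of `url` as the duplicate key, and the rule that prices must be positive are assumptions for this example and would need adapting to the actual dataset.

```python
# Hypothetical schema: every scraped row should carry these fields.
REQUIRED_FIELDS = ("name", "price", "url")


def validate(rows):
    """Return a list of (row_index, problem) tuples for rows that fail a check."""
    issues = []
    seen_urls = set()
    for i, row in enumerate(rows):
        # Completeness: required fields must be present and non-empty.
        missing = [f for f in REQUIRED_FIELDS if row.get(f) in (None, "")]
        if missing:
            issues.append((i, "missing: " + ", ".join(missing)))
        # Duplicates: the same URL should not appear twice.
        url = row.get("url")
        if url:
            if url in seen_urls:
                issues.append((i, "duplicate url"))
            seen_urls.add(url)
        # Consistency: a numeric price should be positive.
        price = row.get("price")
        if isinstance(price, (int, float)) and price <= 0:
            issues.append((i, "non-positive price"))
    return issues


# Made-up rows: one clean, one incomplete, one duplicate, one inconsistent.
sample_rows = [
    {"name": "Milk", "price": 2.5, "url": "/milk"},
    {"name": "", "price": 3.0, "url": "/bread"},
    {"name": "Milk", "price": 2.5, "url": "/milk"},
    {"name": "Eggs", "price": -1, "url": "/eggs"},
]
problems = validate(sample_rows)
```

A sudden jump in the number of "missing" issues between runs is also a cheap signal that the page structure may have changed.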

Set Up Smart IP Rotation and CAPTCHA Handling

APIs like Actowiz API and Real Data API provide smart IP rotation and CAPTCHA bypassing, which are essential for websites with strict anti-scraping measures. Some use machine learning to predict and mitigate blockages, reducing the need for manual intervention and increasing the likelihood of successful web data extraction for complex platforms.

Integrate API-Based Extraction with Analytics Tools

Businesses' ultimate goal is actionable insights. Integrating extracted data with analytics tools like Power BI, Tableau, or Google Data Studio allows you to convert raw data into valuable insights. This integration can power pricing intelligence and price comparison applications, helping you refine strategies and make data-driven decisions.
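A simple hand-off to tools like these is a CSV export, since most analytics tools can import CSV files. The sketch below uses Python's standard `csv` module; the `rows_to_csv` helper and the field names are assumptions for illustration, and direct database or API connectors are a common alternative.

```python
import csv
import io


def rows_to_csv(rows, fieldnames):
    """Serialize dict rows to CSV text, keeping only the listed columns."""
    buf = io.StringIO()
    writer = csv.DictWriter(
        buf,
        fieldnames=fieldnames,
        extrasaction="ignore",  # silently drop keys not in fieldnames
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# Made-up scraped rows; "extra" is dropped because it is not a chosen column.
scraped = [
    {"sku": "A", "price": 1.5, "extra": "x"},
    {"sku": "B", "price": 2.0},
]
csv_text = rows_to_csv(scraped, ["sku", "price"])
```

Writing `csv_text` to a file produces something an analytics tool can load as a flat table.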

Examples of Successful Data Extraction with Web Scraping APIs

A global e-commerce platform sought to master data extraction from complex sites to track competitor pricing across different regions. Using the Actowiz API integrated with a custom data pipeline, the company could extract dynamic pricing data daily, even on high-traffic days like Black Friday. This data enabled real-time pricing adjustments, increasing competitiveness by over 30% and boosting sales during key promotional periods.

An online travel agency wanted to stay competitive by offering real-time hotel price comparisons. Using Actowiz API, they could scrape major travel sites, like Booking.com, to track prices and promotions. This API handled challenges like CAPTCHAs and IP restrictions, allowing the agency to offer up-to-the-minute price comparisons. The data drove a 25% increase in customer satisfaction and helped retain users by offering better deals than competitors.

Common Use Cases for Advanced Web Scraping APIs

Pricing Intelligence: By tracking competitor pricing in real time, companies can dynamically adjust their pricing strategies.

Market Research: Web scraping APIs enable in-depth research by gathering data from multiple industry sites, such as reviews, product features, and regional availability.

Lead Generation: APIs can scrape B2B directories and social media platforms, collecting business leads at scale for sales teams.

Trend Analysis: Companies in e-commerce, social media, and travel use scraped data to identify trends, seasonal changes, and shifting consumer preferences.

Final Thoughts on Mastering Data Extraction with Web Scraping APIs

For businesses aiming to excel in the data-driven landscape, mastering web scraping for complex sites is essential. By leveraging API-based web data extraction, companies can access reliable, timely data to optimize pricing strategy, product development, and customer acquisition decision-making. With powerful APIs, such as those offered by Actowiz Solutions and Real Data API, along with strategies like IP rotation and automated data validation, even the most complex sites can become valuable data sources.

While the investment in mastering these tools might seem high, the ROI—measured in competitive advantage, customer satisfaction, and revenue growth—can be transformative. Embracing API-based solutions for complex site scraping empowers businesses to unlock insights that drive growth and innovation.

Ready to master data extraction for complex sites? Contact Actowiz Solutions to discover tailored API solutions that power your data-driven success. You can also reach us for all your mobile app scraping, data collection, web scraping, and instant data scraper service requirements.

Source: https://actowizsolutions.com/master-web-scraping-for-complex-sites-advanced-apis.php

#AdvancedWebScraping#ComplexSiteScraping#WebScrapingAPI#DataExtractionExpertise#TechForData#APIIntegration#UnlockComplexData#WebScrapingTechnology#RealTimeData


Text

Weedmaps Product Listings Scraping

Weedmaps Product Listings Scraping by DataScrapingServices.com: Enhance Your Cannabis Business Strategy

In the fast-evolving cannabis industry, staying competitive means accessing the right data to make informed decisions and develop strategic offerings. For businesses aiming to tap into the cannabis retail market, Data Scraping Services offers an invaluable service with Weedmaps Product Listings Scraping. By providing businesses with accurate, up-to-date data on products listed on Weedmaps, our service equips dispensaries, manufacturers, and marketers with essential insights into product availability, pricing, and customer preferences.

Key Data Fields Extracted

Weedmaps is a popular platform for cannabis consumers to find products, read reviews, and compare prices from various dispensaries. Our service focuses on gathering the following key data fields to give businesses a comprehensive view of the competitive landscape:

1. Product Names – Get the complete list of products, including strains, edibles, and other cannabis offerings.

2. Product Categories – Classify products into specific categories like flower, concentrates, edibles, and topicals for targeted market analysis.

3. Pricing Information – Track and analyze price points for products across different dispensaries to remain competitive.

4. THC/CBD Content – Analyze potency and composition to understand the product’s unique selling points.

5. Customer Reviews and Ratings – Leverage real consumer feedback to understand product reception and quality.

6. Dispensary Locations – Discover which dispensaries offer specific products to help with distribution strategy and market reach.
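The six fields above map naturally onto a small record type. The sketch below is one possible layout, not the service's actual output format: the `ProductListing` class, its field names, and the sample values are all assumptions made for illustration. It also shows a simple aggregate, average price per category, of the kind that supports market analysis.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProductListing:
    """Hypothetical record for one scraped product listing."""
    name: str
    category: str                        # e.g. flower, concentrates, edibles, topicals
    price_usd: float
    thc_percent: Optional[float] = None  # potency may be missing on some listings
    cbd_percent: Optional[float] = None
    rating: Optional[float] = None
    review_count: int = 0
    dispensary: str = ""
    city: str = ""


def average_price_by_category(listings):
    """Group listings by category and return the mean price for each."""
    prices = defaultdict(list)
    for item in listings:
        prices[item.category].append(item.price_usd)
    return {cat: round(sum(p) / len(p), 2) for cat, p in prices.items()}


# Invented sample listings.
listings = [
    ProductListing("Sample Strain A", "flower", 40.0, thc_percent=22.0),
    ProductListing("Sample Strain B", "flower", 50.0, thc_percent=25.5),
    ProductListing("Sample Gummies", "edibles", 20.0, cbd_percent=5.0),
]
averages = average_price_by_category(listings)
```

Keeping potency and rating optional reflects the fact that not every listing will expose every field.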

Benefits of Weedmaps Product Listings Scraping

1. Market Analysis and Competitive Intelligence

Having access to extensive Weedmaps data helps businesses understand the market trends, popular products, and pricing strategies of competitors. Dispensaries and brands can customize their products and promotions using real-time data to draw in more customers.

2. Pricing Optimization

Cannabis businesses can adjust their pricing strategy by analyzing competitor prices across different regions.

3. Product Development Insights

By observing which products are highly rated and frequently purchased, businesses can identify opportunities for new products or modify existing ones to meet consumer demands better.

4. Enhanced Customer Engagement

With insights into customer feedback, companies can identify common issues or praise points, which can guide customer service improvements and strengthen brand loyalty.

Popular Data Scraping Services

Manta.com Business Listing Extraction

Verified Canadian Physicians Email Database

DexKnows Business Listing Extraction

Europages.co.uk Business Directory Extraction

eBay.com.au Product Price Extraction

Canada411.ca People Search Data Extraction

eBay.ca Product Information Extraction

Allhomes.com.au Property Details Extraction

Insurance Agent Data Extraction from Agencyportal.irdai.gov

Amazon.ca Product Details Extraction

Insurance Agents Phone List India

Best Weedmaps Product Listings Scraping Services in USA:

Raleigh, San Francisco, Washington, Las Vegas, Denver, Fresno, Orlando, Sacramento, Wichita, Boston, Virginia Beach, Tulsa, Fort Worth, Louisville, Seattle, Columbus, Milwaukee, San Jose, Oklahoma City, Colorado, Kansas City, Nashville, Bakersfield, Mesa, Indianapolis, Jacksonville, San Diego, Omaha, El Paso, Atlanta, Memphis, Dallas, San Antonio, Philadelphia, Long Beach, New Orleans, Austin, Albuquerque, Houston, Chicago, Charlotte, Tucson, and New York.

Conclusion

DataScrapingServices.com’s Weedmaps Product Listings Scraping service provides cannabis businesses with a powerful tool to gather market intelligence, optimize pricing, and understand consumer preferences. Leveraging this data helps businesses stay competitive and grow within the cannabis industry by making well-informed decisions.

Discover how we can elevate your cannabis business at DataScrapingServices.com.

📧 Email: [email protected]

#weedmapsproductlistingsscraping#cannabismarketanalysis#productdataextractionfromweedmap#datascrapingservices#competitiveintelligence#cannabisindustry#customerinsights#pricingoptimization#cannabisbusiness


Text

How Can Amazon Fresh and Grocery Delivery Data Scraping Benefit in Trend Analysis?

In today's highly digitized world, data is the cornerstone of most successful online businesses, and few services illustrate this better than Amazon Fresh. As Amazon's grocery delivery and pick-up service, Amazon Fresh sets new standards in the online grocery retail industry, bringing the convenience of one-click shopping to perishable goods. With its data-driven approach, Amazon Fresh has redefined the grocery landscape, combining cutting-edge technology with supply chain efficiency to reach a broad audience. This approach has also fueled demand for scraping product data from Amazon Fresh and similar grocery delivery services. Scraping, or the automated extraction of information from websites, is a powerful tool in today's digital marketplace. When applied to platforms like Amazon Fresh, it opens up many possibilities for competitors, researchers, and marketers seeking valuable insights into trends, pricing, consumer behavior, and stock availability. With the help of Amazon Fresh and Grocery Delivery Product Data Scraping Services, businesses can leverage these insights to stay competitive, optimize inventory, and improve customer satisfaction in the rapidly evolving grocery industry.

The Growing Popularity of Online Grocery Shopping

Before delving into the specifics of Amazon Fresh and Grocery Delivery Product Data Extraction, it's essential to understand the broader online grocery delivery landscape. This market has seen exponential growth in recent years, primarily driven by changing consumer habits and the pandemic's impact on shopping behavior. Online grocery shopping has evolved from a niche offering to a mainstream service, with consumers now enjoying doorstep deliveries, same-day options, and subscription-based purchasing.

Amazon Fresh has played a pivotal role in this transformation. With its vast selection, competitive pricing, and logistical prowess, Amazon Fresh has rapidly scaled to become one of the dominant forces in the online grocery market. Its success has also underscored the importance of real-time data, which is vital for effective inventory management, dynamic pricing, and personalized recommendations. E-commerce Data Scraping has thus become an essential tool for businesses seeking to extract actionable insights from this data, enabling them to optimize their operations and improve their competitiveness.

As more retailers move into the grocery space, Amazon Fresh and Grocery Delivery Product Data Extraction remains at the forefront of innovations in the sector. It provides the data needed to understand customer preferences, monitor pricing trends, and adjust inventory in real-time. This ongoing evolution highlights the critical role that data scraping plays in ensuring that businesses stay ahead in an increasingly digital world.

Importance of Scraped Data in E-commerce and Grocery Delivery

In e-commerce, data is not just a byproduct; it's the product. Businesses leverage data to make critical decisions, streamline operations, enhance customer experience, and boost revenue. For online grocery services, data is especially critical, given the time-sensitive nature of perishable goods, fluctuating consumer demand, and competitive pricing strategies.

Platforms like Amazon Fresh constantly monitor stock levels, customer preferences, and seasonal trends to deliver a seamless experience. Data also enables these platforms to offer dynamic pricing, a technique where prices fluctuate based on demand, competitor pricing, and stock availability. As a result, competitors, third-party sellers, and market analysts are increasingly interested in Amazon Fresh and Grocery Delivery Product Data Collection to monitor these variables in real-time. This data can be used for various purposes, such as optimizing pricing, improving product offerings, and enhancing delivery strategies. To gain a competitive edge, many businesses turn to Pricing Intelligence Services, which use scraped data to track price changes, identify patterns, and adjust pricing strategies to match or outpace Amazon Fresh's dynamic pricing model.

What is Amazon Fresh Data Scraping?

Amazon Fresh data scraping involves the automated extraction of information from Amazon's grocery platform. Using web scraping tools and techniques, data from Amazon Fresh can be collected, organized, and analyzed to gather insights on various parameters, including product pricing, customer reviews, best-selling items, and delivery availability. This data can be used for various applications, such as competitive analysis, market research, trend forecasting, and inventory planning.

For instance, businesses can scrape product prices on Amazon Fresh to ensure their pricing remains competitive. Retailers can also gather product availability and delivery times data to monitor supply chain performance and consumer satisfaction levels. Additionally, customer reviews provide a wealth of information on consumer sentiment, enabling companies to adjust their product offerings or marketing strategies accordingly. The gathered data can be compiled into Amazon Fresh and Grocery Delivery Product Datasets, which provide actionable insights across various business functions. By utilizing this data, businesses can implement Price Optimization for Retailers, ensuring they stay competitive in an ever-changing marketplace and align with consumer expectations.

Key Data Points for Amazon Fresh and Grocery Data Scraping

Several valuable data points can be obtained through Web Scraping Amazon Fresh and Grocery Delivery Product Data, each providing unique insights into consumer behavior, market trends, and competitive positioning. Some of the most sought-after data points include:

1. Product Pricing: One of the primary drivers of consumer decision-making, product pricing data allows businesses to assess Amazon Fresh's pricing strategies and adjust their pricing to stay competitive. With real-time price scraping, businesses can identify price changes instantly, helping them respond more effectively to market fluctuations.

2. Product Availability: Monitoring product availability on Amazon Fresh provides insights into inventory levels and stock turnover rates. For companies involved in logistics or supply chain management, understanding Amazon's inventory trends can reveal valuable information about consumer demand and purchasing patterns.

3. Customer Reviews and Ratings: Reviews and ratings are a rich data source for businesses looking to improve their offerings. By analyzing reviews, companies can identify common issues, consumer preferences, and potential areas for improvement in their products or services.

4. Delivery Times and Options: Scraping data on delivery times, availability of same-day delivery, and delivery fees can offer insights into Amazon Fresh's logistical capabilities and customer expectations. This data is invaluable for companies looking to match or improve Amazon Fresh's delivery performance.

5. Best-Selling Products: Knowing which items are most popular on Amazon Fresh allows companies to identify trends in consumer demand. This information can inform product development, marketing strategies, and stocking decisions.

6. Promotional Offers and Discounts: Scraping information on discounts and promotions can reveal Amazon Fresh's pricing and marketing strategies. Competitors can use this information to create similar promotions, ensuring they stay relevant in a highly competitive market.
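As a rough illustration of how the pricing and availability data points above might be tracked over time, the sketch below compares two snapshots of scraped listings and reports price changes, stock changes, and new listings. The snapshot layout, the SKU names, and the prices are hypothetical sample values, not real Amazon Fresh data, and the function name `diff_snapshots` is an assumption made for this example.

```python
from decimal import Decimal


def diff_snapshots(previous, current):
    """Compare two {sku: {"price": Decimal, "in_stock": bool}} snapshots.

    Returns a list of (sku, change_type, old_value, new_value) tuples.
    """
    changes = []
    for sku, now in current.items():
        before = previous.get(sku)
        if before is None:
            # SKU was not present in the earlier snapshot.
            changes.append((sku, "new_listing", None, now["price"]))
            continue
        if now["price"] != before["price"]:
            changes.append((sku, "price_change", before["price"], now["price"]))
        if now["in_stock"] != before["in_stock"]:
            changes.append((sku, "stock_change", before["in_stock"], now["in_stock"]))
    return changes


# Hypothetical sample data for illustration only.
yesterday = {
    "milk-1gal": {"price": Decimal("3.49"), "in_stock": True},
    "eggs-12ct": {"price": Decimal("2.99"), "in_stock": True},
}
today = {
    "milk-1gal": {"price": Decimal("3.29"), "in_stock": True},
    "eggs-12ct": {"price": Decimal("2.99"), "in_stock": False},
    "oat-milk-64oz": {"price": Decimal("4.79"), "in_stock": True},
}

for change in diff_snapshots(yesterday, today):
    print(change)
```

Prices are held as `Decimal` rather than floats so that equality checks between snapshots are not affected by binary rounding.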

Benefits of Data Scraping for Amazon Fresh and Grocery Delivery Analysis

Scraping Amazon Fresh data provides several significant advantages to businesses in the grocery delivery sector. By extracting and analyzing data, companies can gain insights that would be difficult or impossible to obtain through manual observation. The benefits include:

1. Competitive Intelligence: By analyzing Amazon Fresh's product offerings, pricing strategies, and delivery performance, competitors can make informed decisions that enhance their operations. For example, a grocery retailer might adjust its pricing or delivery times to offer a more attractive service than Amazon Fresh. Utilizing an Amazon Fresh and Grocery Delivery Product Scraping API can help streamline this process by automating the extraction of large volumes of competitive data.

2. Market Insights and Trend Analysis: Data scraping lets companies stay updated on the latest market trends and consumer preferences. With accurate data, businesses can anticipate shifts in consumer demand, enabling them to adjust their product offerings or marketing strategies proactively. Tools like the Amazon Fresh and Grocery Delivery Product Data Scraper allow companies to track real-time changes, ensuring they are always ahead of the curve.

3. Pricing Optimization: Real-time pricing data allows businesses to optimize their pricing strategies. By tracking Amazon Fresh's prices, companies can identify patterns in price fluctuations and set their prices accordingly, maximizing revenue without compromising competitiveness. Scrape Amazon Fresh and Grocery Delivery Search Data to gather this pricing information, which can be critical for adjusting prices dynamically.

4. Improved Customer Experience: Understanding consumer sentiment and product preferences through reviews and ratings enables businesses to improve the customer experience. Companies can build a loyal customer base by addressing common pain points and meeting customer expectations. Retail Website Data Extraction enables businesses to capture customer feedback from various product pages, making it easier to refine their offerings based on real-time insights.

5. Inventory Management: Analyzing product availability and stock turnover rates can help businesses fine-tune their inventory management processes. Companies can make more accurate forecasting and replenishment decisions by knowing which items are in high demand or likely to go out of stock. Data scraping tools can help track stock levels on Amazon Fresh, providing actionable information for inventory planning.

6. Informed Product Development: Amazon Fresh data scraping insights can guide product development efforts. For example, if certain organic products are trendy, a retailer might consider expanding its range of organic offerings to meet consumer demand. With an automated scraping solution, businesses can efficiently gather data on trending products to inform these strategic decisions.
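The pricing optimization benefit above can be made concrete with a small rule of thumb. The sketch below is one possible approach, not a method described by the source: it suggests a price slightly below a competitor's, but never below a floor derived from unit cost and a minimum margin. The function name `suggest_price`, the 10% default margin, and the one-cent undercut are all assumptions chosen for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP


def suggest_price(unit_cost, competitor_price,
                  min_margin=Decimal("0.10"), undercut=Decimal("0.01")):
    """Suggest a price just below the competitor's price.

    The result never drops below unit_cost * (1 + min_margin),
    so the rule protects a minimum margin (an assumed policy).
    """
    floor = (unit_cost * (1 + min_margin)).quantize(
        Decimal("0.01"), rounding=ROUND_HALF_UP
    )
    target = competitor_price - undercut
    return max(floor, target)


# Hypothetical numbers for illustration only.
print(suggest_price(Decimal("2.00"), Decimal("2.99")))  # competitor well above the floor
print(suggest_price(Decimal("2.00"), Decimal("2.10")))  # competitor below the floor
```

In the first call the competitor's price leaves room to undercut; in the second the floor takes over, so the retailer would hold its margin rather than follow the competitor down.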

Ethical and Legal Considerations in Data Scraping

While data scraping provides many benefits, it also raises important ethical and legal considerations. Amazon's terms of service prohibit unauthorized data scraping, and violating these terms could lead to legal action. Companies must, therefore, be cautious and ensure that their data scraping practices comply with legal requirements.

Some ethical considerations include respecting user privacy, avoiding excessive server requests, and ensuring data is used responsibly. To stay compliant with legal frameworks, businesses may opt for alternatives to traditional web scraping, such as APIs, which provide a legal and structured way to access data. These alternatives, including Product Matching techniques, help businesses align their data collection efforts with Amazon's guidelines while gaining valuable insights. Additionally, Price Scraping can be carried out responsibly by using official API access, ensuring that businesses gather pricing information without overburdening servers or violating terms of service.
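To illustrate the point about avoiding excessive server requests, here is a minimal sketch that uses Python's standard `urllib.robotparser` to check whether a path may be fetched and a small throttle that enforces a minimum delay between requests. The robots.txt content, the `example.com` URLs, the `my-bot` user agent, and the `Throttle` class are hypothetical; a real crawler would read the target site's own robots.txt, and honoring robots.txt does not replace compliance with a site's terms of service.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content used only for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""


def build_policy(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


class Throttle:
    """Enforce a minimum interval (in seconds) between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self):
        # Sleep only for the part of the interval that has not yet elapsed.
        if self._last is not None:
            remaining = self.min_interval - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
        self._last = self._clock()


policy = build_policy(ROBOTS_TXT)
delay = policy.crawl_delay("my-bot") or 1  # fall back to 1 second if unspecified
throttle = Throttle(delay)

for url in ("https://example.com/products/milk", "https://example.com/checkout/cart"):
    print(url, "allowed" if policy.can_fetch("my-bot", url) else "disallowed")
```

Before each real request, a crawler built this way would call `throttle.wait()` and skip any URL for which `can_fetch` returns `False`.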

In addition to scraping and API use, companies are also exploring data partnerships, which allow them to obtain valuable data insights without infringing on Amazon's policies. This collaboration helps businesses access real-time product and pricing data while maintaining legal and ethical standards.

Future Trends and Innovations in Grocery Data Scraping

Advances in artificial intelligence and machine learning will likely shape the future of grocery data scraping. These technologies allow for more sophisticated data extraction and analysis, enabling companies to derive deeper insights from Amazon Fresh data. AI-powered tools can automatically identify patterns and trends, providing businesses with valuable intelligence for Competitive Pricing Analysis and optimizing their pricing strategies. As the online grocery market grows, data scraping tools will likely become more specialized, focusing on specific areas such as product recommendation analysis, demand forecasting, and sentiment analysis.

Another trend to watch is the integration of blockchain technology for data verification. Recording scraped data on a blockchain can make later tampering detectable, offering greater transparency and trustworthiness in data-driven decision-making, although it cannot by itself confirm that the data was accurate when it was collected. By leveraging blockchain, businesses can verify the integrity of their Online Retail Price Monitoring data, providing them with more reliable insights for pricing adjustments and product positioning.
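The core idea behind blockchain-style verification can be sketched with a simple hash chain: each stored record carries a hash that depends on the record before it, so changing an earlier record invalidates everything after it. This is a simplified, single-party illustration under assumed names (`chain_records`, `verify_chain`) and sample data. It is not a distributed ledger, and it only detects modification after recording.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(prev_hash, record):
    """Hash a record together with the previous entry's hash."""
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def chain_records(records):
    """Link each record to the previous one by hash."""
    chained, prev_hash = [], GENESIS
    for record in records:
        digest = _digest(prev_hash, record)
        chained.append({"record": record, "prev_hash": prev_hash, "hash": digest})
        prev_hash = digest
    return chained


def verify_chain(chained):
    """Return True if no entry has been altered since it was chained."""
    prev_hash = GENESIS
    for entry in chained:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical price observations for illustration only.
ledger = chain_records([
    {"sku": "milk-1gal", "price": "3.49", "date": "2024-01-01"},
    {"sku": "milk-1gal", "price": "3.29", "date": "2024-01-02"},
])
print(verify_chain(ledger))
```

Editing any stored price after the fact causes `verify_chain` to return `False`, which is the tamper-evidence property the paragraph above refers to.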

Conclusion

Amazon Fresh and grocery delivery data scraping are transforming how businesses approach competitive analysis, consumer insights, and market trends. With the right tools and practices, companies can harness the power of data to stay competitive in the rapidly evolving online grocery market. Utilizing eCommerce Scraping Services allows businesses to gain a competitive edge while remaining informed about changing market dynamics. However, it is essential to approach data scraping responsibly, balancing the desire for insights with respect for ethical and legal boundaries. By doing so, businesses can unlock the full potential of Amazon Fresh data scraping, creating a more data-driven and consumer-focused grocery delivery ecosystem.

Transform your retail operations with Retail Scrape Company's data-driven solutions. Harness real-time data scraping to understand consumer behavior, fine-tune pricing strategies, and outpace competitors. Our services offer comprehensive pricing optimization and strategic decision support. Elevate your business today and unlock maximum profitability. Reach out to us now to revolutionize your retail operations!

Source: https://www.retailscrape.com/amazon-fresh-and-grocery-delivery-data-scraping.php

Leverage Web Scraping Service for Grocery Store Location Data

Why Should Retailers Invest in a Web Scraping Service for Grocery Store Location Data?

In today's digital-first world, web scraping has become a powerful tool for businesses seeking to make data-driven decisions. The grocery industry is no exception. Retailers, competitors, and market analysts leverage web scraping to access critical data points like product listings, pricing trends, and store-specific insights. This data is crucial for optimizing operations, enhancing marketing strategies, and staying competitive. This article will explore the significance of web scraping grocery data, focusing on three critical areas: product information, pricing insights, and store-level data from major retailers.

By using Web Scraping Service for Grocery Store Location Data, businesses can also gain geographical insights, particularly valuable for expanding operations or analyzing competitor performance. Additionally, companies specializing in Grocery Store Location Data Scraping Services help retailers collect and analyze store-level data, enabling them to optimize inventory distribution, track regional pricing variations, and tailor their marketing efforts based on specific locations.

The Importance of Web Scraping in Grocery Retail

The grocery retail landscape is increasingly dynamic, influenced by evolving consumer demands, market competition, and technological innovations. Traditional methods of gathering data, such as surveys and manual research, are insufficient for providing real-time, large-scale insights. Scraping grocery store location data automates the collection and gives access to accurate, up-to-date information from multiple sources, which lets decision-makers react swiftly to changes in the market.

Moreover, grocery e-commerce platforms such as Walmart, Instacart, and Amazon Fresh host vast datasets that, when scraped and analyzed, reveal significant trends and opportunities. This benefits retailers and suppliers seeking to align their strategies with consumer preferences and competitive pricing dynamics. Extracting supermarket store location data provides insights into geographical performance, allowing businesses to better refine store-level strategies and meet local consumer demands.

Grocery Product Data Scraping: Understanding What's Available

At the heart of the grocery shopping experience is the product assortment. Grocery Delivery App Data Collection focuses on gathering detailed information about the items that retailers offer online. This data can include:

Product Names and Descriptions: Extracting Supermarket Price Data can capture product names, detailed descriptions, and specifications such as ingredients, nutritional information, and packaging sizes. This data is essential for companies involved in product comparison or competitive analysis.

Category and Subcategory Information: By scraping product categories and subcategories, businesses can better understand how a retailer structures its product offerings. This can reveal insights into the breadth of a retailer's assortment and emerging product categories that may be gaining traction with consumers, made possible through a Web Scraping Grocery Prices Dataset.

Brand Information: Scraping product listings also allows businesses to track brand presence and popularity across retailers. For example, analyzing the share of shelf space allocated to private label brands versus national brands provides insights into a retailer's pricing and promotional strategies using a Grocery Delivery App Data Scraper.

Product Availability: Monitoring which products are in or out of stock is a critical use case for grocery data scraping. Real-time product availability data can be used to optimize inventory management and anticipate potential shortages or surpluses. Furthermore, it allows retailers to gauge competitor stock levels and adjust their offerings accordingly through a Grocery Delivery App Data Scraping API.

New Product Launches: Scraping data on new product listings across multiple retailers provides insights into market trends and innovation. This is particularly useful for suppliers looking to stay ahead of the competition by identifying popular products early on or tracking how their new products are performing across various platforms.
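Two of the items above, brand information and new product launches, lend themselves to a short worked example. The sketch below estimates the private-label share of a retailer's listings and picks out SKUs that were absent from an earlier scrape. Counting listings is used here as a rough proxy for shelf space, which is an assumption. The brand names, SKUs, and function names are hypothetical.

```python
from collections import Counter


def private_label_share(listings, private_labels):
    """Fraction of listings whose brand is one of the retailer's own labels."""
    counts = Counter(
        "private" if item["brand"] in private_labels else "national"
        for item in listings
    )
    total = sum(counts.values())
    return counts["private"] / total if total else 0.0


def new_listings(previous_skus, current_listings):
    """Listings whose SKU did not appear in the previous scrape."""
    return [item for item in current_listings if item["sku"] not in previous_skus]


# Hypothetical scraped listings for illustration only.
listings = [
    {"sku": "1001", "brand": "StoreBrand", "name": "Whole Milk 1 gal"},
    {"sku": "1002", "brand": "Acme Dairy", "name": "Whole Milk 1 gal"},
    {"sku": "1003", "brand": "Acme Dairy", "name": "Greek Yogurt 32 oz"},
    {"sku": "1004", "brand": "Green Valley", "name": "Oat Milk 64 oz"},
]
seen_last_week = {"1001", "1002", "1003"}

print(private_label_share(listings, {"StoreBrand"}))
print([item["sku"] for item in new_listings(seen_last_week, listings)])
```

Running the same two functions against each retailer's listings would give a comparable view of brand mix and assortment changes across platforms.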

Scraping Grocery Data for Pricing Insights: The Competitive Advantage

Pricing is arguably the most dynamic and critical component of the grocery industry. Prices fluctuate frequently due to promotions, competitor actions, supply chain constraints, and consumer demand shifts. Web scraping enables businesses to monitor real-time pricing data from major grocery retailers, providing several key advantages:

Price Monitoring Across Retailers: Scraping pricing data from different retailers allows businesses to compare how similar products are priced in the market. This information can be used to adjust pricing strategies, ensure competitiveness, and maximize profit margins. Retailers can quickly react to competitor price changes and optimize their promotional activities to attract price-sensitive customers.

Dynamic Pricing Strategies: Businesses can implement dynamic pricing strategies with access to real-time pricing data. For instance, if a competitor lowers the price of a particular product, a retailer can respond by adjusting its prices in near real-time. This level of responsiveness helps maintain market competitiveness while protecting margins.