# Scale-out Backup Repository


Achieve the 3-2-1 Rule with SOBR on Synology or OOTBI and Wasabi

Veeam’s Scale-Out Backup Repository (SOBR) can be used to implement the 3-2-1 backup rule. The 3-2-1 backup rule recommends keeping three copies of your data on two different types of media, with one copy stored off-site. SOBR is built from a collection of individual repositories, as we will see shortly. In this article, I will demonstrate how you can achieve the 3-2-1 rule with SOBR on…
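The rule itself is easy to check mechanically. The Python sketch below is a minimal illustration, assuming the common reading in which the production data counts as one of the three copies. The `BackupCopy` class, the `meets_3_2_1` function, and the location and media labels are hypothetical and are not part of Veeam's product or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    """One copy of the data (hypothetical model, not a Veeam API)."""
    location: str  # where the copy lives, e.g. "Synology NAS"
    media: str     # storage media type, e.g. "object storage"
    offsite: bool  # True if the copy is kept outside the primary site


def meets_3_2_1(copies: list[BackupCopy]) -> bool:
    """Check the 3-2-1 rule: 3+ copies, 2+ media types, 1+ off-site copy."""
    enough_copies = len(copies) >= 3
    enough_media = len({c.media for c in copies}) >= 2
    has_offsite = any(c.offsite for c in copies)
    return enough_copies and enough_media and has_offsite


# Example layout: production data, a SOBR performance tier on a local NAS,
# and a SOBR capacity tier in a Wasabi bucket (labels are illustrative).
copies = [
    BackupCopy("Production datastore", "block storage", offsite=False),
    BackupCopy("SOBR performance tier (Synology)", "NAS disk", offsite=False),
    BackupCopy("SOBR capacity tier (Wasabi)", "object storage", offsite=True),
]

print(meets_3_2_1(copies))      # True
print(meets_3_2_1(copies[:2]))  # False: only two copies, none off-site
```

In this layout the off-site requirement is satisfied only by the object storage copy, which is why the capacity tier in a cloud bucket matters for the rule.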

#3-2-1 Backup Rule#3-2-1-1-0 Rule#Archive Tier#Backup Repository#Backup Target#Capacity Tier#Create a Backup Repository#Create a Bucket on Wasabi#Create an Access Key#Direct to Object Storage Backups#Implement 3-2-1 Backup with SOBR#Performance Tier#Run Tiering Jon Now#Scale-out Backup Repository#SOBR Archive Tier#SOBR Extents#SOBR Offloads#Start Veeam SOBR Offload Job Manually#Storage Bucket#Use a SOBR with Veeam Backup & Replication


Protect Your Construction Project with Secure Cloud-Based Management Software

In the digital age of construction, cloud-based management software has become vital for project managers, contractors, and their teams. These solutions remove the usual limits of locally installed applications, offering flexibility, scalability, and accessibility. This makes it easier for construction professionals to manage their operations and deliver projects faster.

Benefits of using secure cloud-based management software

Using secure, cloud-based management software for your construction projects offers several advantages, including the following:

Increased Collaboration and Communication: Such software enables real-time participation from everyone involved in the project. This allows smooth information sharing, document exchange, and communication across teams, wherever they are.

Centralized Data Management: Cloud-based software provides a centralized repository for all project data. This eliminates data silos, so everyone works from up-to-date data, which supports better decision-making and overall project coordination.

Improved Accessibility and Mobility: Cloud-based solutions provide any-place, anytime access to the data and tools of the project, thus enabling construction professionals to stay connected and productive while working remotely or on-site.

Affordable and Scalable: Cloud-based software is inexpensive and scales up or down according to project requirements, without the need for expensive hardware or IT infrastructure.

Enhanced Security and Data Protection: Reputable cloud service providers deliver strong security. They protect data through encryption, access controls, and periodic backups.

These measures help keep your vital project data from being compromised or lost.

Key features of cloud-based management software for construction projects

Comprehensive cloud-based construction project management software typically includes numerous features for managing various elements of your projects.

Following are some critical features to expect:

Project Planning and Scheduling: Detailed planning and scheduling tools let you create thorough project plans, assign tasks, and allocate resources. You can also monitor your project's progress in real time to ensure it stays on schedule and within budget.

Document Management: Cloud-based document management systems provide a central storage area. They hold all project documents, such as drawings, specifications, and contracts, under version control, so that authorized stakeholders can view and share them.

Cost and Budget Management: Strong cost and budget management features allow you to track expenses, monitor budgets, and create detailed financial reports to keep tight control over project finances.

Resource Management: Effective resource management tools let a firm allocate and schedule people, equipment, and materials across multiple projects. This improves resource utilization and reduces waste.

Issue and Risk Management: Integrated issue and risk management modules help identify and track potential risks. They also provide ways to resolve issues proactively, keeping project disruptions to a minimum.

Reporting and Analytics: Advanced reporting and analytics deliver real-time insights into project performance. This supports informed decision-making and allows course corrections whenever needed.

How cloud-based management software improves project collaboration and communication

Effective collaboration and communication have always been crucial for construction projects, which involve multiple stakeholders with different roles and responsibilities. Cloud-based management software plays an important role here by enabling smooth collaboration among team members and establishing clear communication channels, so that everyone stays on the same page throughout the project lifecycle.

Centralized Project Information: The software consolidates all project-related data, documents, and communication threads on one cloud-based platform. Stakeholders can access real-time information from anywhere at any time, avoiding the risk of working from outdated or siloed information.

Real-time Collaboration and File Sharing: Cloud-based software enables employees to collaborate in real time on documents, drawings, and project plans. Several team members can work on the same document at the same time and share their contributions immediately. Version control and change tracking features ensure transparency and accountability.

Integrated Communication Channels: Most cloud solutions include integrated communication tools, such as messaging, video conferencing, and discussion forums. These make it easy to communicate with project stakeholders, reduce reliance on multiple tools, and minimize miscommunication.

Automated Notifications and Updates: The software automatically notifies all concerned parties of any update, change, or other critical event. This keeps stakeholders informed so they can act promptly on any emerging issue or opportunity.

Mobile Access and Field Collaboration: Cloud-based solutions let construction professionals access project information from mobile devices right at the job site and collaborate on the go. This makes it easy to update information in real time, report issues, or make on-site decisions.

The collaboration and communication capabilities of cloud-based management software produce a more integrated project team. This leads to better coordination, faster decision-making, and ultimately more successful project outcomes.

Implementing and integrating cloud-based management software into your construction project

Successfully implementing and integrating cloud-based management software for construction requires careful planning, stakeholder buy-in, and a well-executed change management strategy. Following best practices and a structured approach helps you maximize the software's benefits, ensure a seamless transition, and enable your construction teams to achieve the expected results.

Some key steps in this respect are as follows:

Set up a dedicated implementation team: Create a cross-functional team with members from project management, IT, finance, and operations. This team will guide the rollout, make sure the procedures meet the organization's goals, maintain open communication, and provide proper training.

Perform thorough requirements analysis: Analyze in detail the needs, workflows, and processes of your construction project. Also, identify where the cloud-based management software can help streamline operations, improve collaboration, and enhance project visibility.

Draw up an implementation roadmap: The implementation roadmap should be detailed, showing the key milestones, timelines, and responsibilities. This roadmap should cover the phases of data migration, software configuration, user training, and testing to ensure a well-structured and controlled transition.

Engage stakeholders and secure buy-in: Stakeholder buy-in and involvement are essential to any change management process. Communicate the benefits of the cloud-based management software to all stakeholders, including project teams, executives, and external partners. Discuss their concerns, listen to their feedback, and involve them in the implementation process to build ownership of and commitment to its success.

Provide full training and support: Invest in comprehensive training that familiarizes your construction teams with the software's features and functionality, as well as best practices for using it. Provide support resources such as user guides, online tutorials, and a dedicated support channel to ease adoption and everyday use.

Integrate with current systems and tools: Identify the integrations needed between the cloud-based management software and your current systems and tools, such as accounting software, BIM platforms, and project scheduling applications. Seamless integration keeps workflows smooth, minimizes data redundancy, and improves employee productivity.

Conduct pilot projects and gather feedback: Wherever possible, run pilot projects or a proof of concept before full implementation to test the software's capabilities and identify potential challenges or areas for improvement. Gather feedback from pilot users and incorporate their insights into the broader implementation plan.

Monitor and optimize: Observe the performance of the software, the rate of user adoption, and its impact on project outcomes. Collect feedback from stakeholders and analyze trends in data that may indicate areas for optimization or process improvements. Utilize the reporting and analytics capability of the software to gain real insight and make decisions based on data.

Establish governance and change management: Set governance policies and change management protocols to keep software usage, data management, and process adherence consistent across your construction projects. Regularly review these protocols so they keep pace with evolving project requirements and industry best practices.

Following these steps in a structured way helps you implement and integrate cloud-based management software into your construction projects effectively, realize its benefits, and give your construction teams a smooth transition.

Conclusion: The future of construction project management is secure cloud-based software.

Cloud management systems are transforming the construction industry as it goes through its digital shift. Modern construction projects are intricate and involve remote teamwork, so instant data sharing and clear overviews of project progress are crucial.

Secure, cloud-based management software provides a comprehensive solution, equipping construction professionals with the tools and insight needed to drive operational efficiency, minimize risk, and ensure successful project delivery on time and within budget.

Using cloud technology allows construction firms to eliminate barriers, promote smooth communication, and secure access to the latest data for all involved. This encourages informed choices and proactive solutions to problems.

#cloud based construction project management software#cloud construction software#cloud-based construction management software


Amazon DynamoDB: A Complete Guide To NoSQL Databases

Amazon DynamoDB is a fully managed, serverless NoSQL database that delivers single-digit millisecond performance at any scale.

What is Amazon DynamoDB?

DynamoDB is a serverless NoSQL database service that lets you build modern applications at any scale. It scales to zero and has no cold starts, no version upgrades, no maintenance windows, no patching, and no downtime maintenance; you pay only for what you use. DynamoDB offers a broad set of security controls and compliance standards. DynamoDB global tables provide a multi-Region, multi-active database with a 99.999% availability SLA and increased resilience for globally distributed applications. Point-in-time recovery, automated backups, and other features support DynamoDB's reliability, and DynamoDB Streams lets you build serverless event-driven applications.
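To put the 99.999% figure in perspective, the short Python sketch below converts an availability percentage into the downtime it would allow over a 365-day year. This is only back-of-the-envelope arithmetic; AWS's SLA terms define how uptime is actually measured, so treat the numbers as an intuition aid rather than a contractual figure. The function name is illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Maximum yearly downtime implied by an availability percentage."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


print(round(allowed_downtime_minutes(99.999), 2))  # 5.26
print(round(allowed_downtime_minutes(99.99), 2))   # 52.56
```

Each extra "nine" cuts the permitted downtime by a factor of ten, from roughly 53 minutes a year at 99.99% to about 5 minutes at 99.999%.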

Use cases

Develop software applications

Build internet-scale applications that support caches and user-content metadata, which require high concurrency and enough connections to handle millions of requests per second from millions of users.

Establish media metadata repositories

Reduce latency with multi-Region replication between AWS Regions and scale throughput and concurrency for media and entertainment workloads including interactive content and real-time video streaming.

Provide flawless shopping experiences

Use design patterns for implementing shopping carts, workflow engines, inventory tracking, and customer profiles. Amazon DynamoDB can handle millions of queries per second and supports high-traffic events at extreme scale.

Large-scale gaming systems

Focus on driving innovation with no operational overhead. When building your gaming platform, provide player data, session history, and leaderboards for millions of concurrent users.

Amazon DynamoDB features

Serverless

With Amazon DynamoDB, you don't need to provision, patch, or manage any servers, or install, maintain, or operate any software. DynamoDB provides zero-downtime maintenance: there are no maintenance windows and no major, minor, or patch versions.

DynamoDB's on-demand capacity mode offers pay-as-you-go pricing for read and write requests, so you pay only for what you use. In on-demand mode, DynamoDB maintains performance with no capacity management and quickly scales your tables up or down to match traffic. When there is no traffic, it also scales to zero with no cold starts, so you do not pay for unused throughput.

NoSQL

DynamoDB is a NoSQL database designed to deliver better performance, scalability, manageability, and flexibility than relational databases for many workloads. It supports both document and key-value data models, which serve a wide range of use cases.

Unlike relational databases, DynamoDB does not offer a JOIN operator. AWS recommends denormalizing your data model to reduce database round trips and the processing power needed to answer queries. As a NoSQL database, DynamoDB still offers enterprise-grade applications strong read consistency and ACID transactions.
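To make the idea of denormalization concrete, here is a small in-memory Python simulation of a single-table design. It is a sketch under stated assumptions: the `PK`/`SK` attribute names, the `CUSTOMER#`/`ORDER#` key formats, and the `put_item`/`query` helpers are hypothetical conventions chosen for illustration, and this is not the boto3 or DynamoDB API. It only mimics the access pattern: related items share a partition key, so one query returns a customer and their orders without a JOIN.

```python
from collections import defaultdict

# In-memory stand-in for a DynamoDB table: partition key -> sort key -> item.
# This mimics the access pattern only; it is not the boto3/DynamoDB API.
table: dict[str, dict[str, dict]] = defaultdict(dict)


def put_item(item: dict) -> None:
    """Store an item under its partition key (PK) and sort key (SK)."""
    table[item["PK"]][item["SK"]] = item


def query(pk: str, sk_prefix: str = "") -> list[dict]:
    """Return items in one partition whose SK starts with sk_prefix,
    ordered by SK, similar to a Query with a begins_with condition."""
    partition = table.get(pk, {})
    return [partition[sk] for sk in sorted(partition) if sk.startswith(sk_prefix)]


# The customer profile and its orders share one partition. Each order also
# carries a copy of the customer name (denormalized), so no JOIN is needed.
put_item({"PK": "CUSTOMER#42", "SK": "PROFILE", "name": "Ada", "tier": "gold"})
put_item({"PK": "CUSTOMER#42", "SK": "ORDER#2024-01-05", "total": 120, "customer_name": "Ada"})
put_item({"PK": "CUSTOMER#42", "SK": "ORDER#2024-02-11", "total": 75, "customer_name": "Ada"})

orders = query("CUSTOMER#42", "ORDER#")
print([o["SK"] for o in orders])  # ['ORDER#2024-01-05', 'ORDER#2024-02-11']
print(len(query("CUSTOMER#42")))  # 3: profile and orders in a single request
```

The trade-off is duplication: if the customer's name changes, every order item holding a copy must be updated, which is the price paid for single-request reads.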

Fully managed

DynamoDB is a fully managed database service that lets you focus on creating value for your customers. It handles hardware provisioning, security, backups, monitoring, high availability, setup, configuration, and more, so a new DynamoDB table is ready for production workloads as soon as it is created. Amazon DynamoDB continuously improves its functionality, security, performance, availability, and reliability without requiring upgrades or downtime.

Single-digit millisecond performance at any scale

DynamoDB was purpose-built to improve on the scalability and performance of relational databases, delivering single-digit millisecond performance at any scale. To achieve this, DynamoDB is designed for high-performance applications and provides APIs that encourage efficient database usage. It omits features, such as JOIN operations, that are inefficient and do not perform well at scale. Whether you have 100 or 100 million users, DynamoDB consistently delivers single-digit millisecond performance for your application.

What is a DynamoDB Database?

Few people outside Amazon know exactly how this database works internally. Its cloud-native architecture is proprietary and closed-source, but there is a development version called DynamoDB Local, written in Java, that runs on developer laptops.

When you set up DynamoDB on Amazon Web Services, you don't provision specific machines or allocate fixed disk sizes. Instead, in provisioned capacity mode, you size the table by throughput: the number of reads and writes per second, and the kilobytes of data they involve, that you need to support. Users specify this service level as read capacity units (RCUs) and write capacity units (WCUs).
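The capacity arithmetic is simple enough to sketch in Python. The functions below assume the standard provisioned-mode sizing rules: one RCU covers one strongly consistent read per second (or two eventually consistent reads) of an item up to 4 KB, one WCU covers one write per second of an item up to 1 KB, item sizes round up to the next block, and transactional reads and writes cost twice as much. The function names are illustrative; check the current DynamoDB documentation before sizing a real table.

```python
import math


def read_capacity_units(item_size_kb: float, reads_per_second: int,
                        consistency: str = "strong") -> int:
    """RCUs needed in provisioned mode for a given read rate.

    One RCU = one strongly consistent read/s (or two eventually consistent
    reads/s) of an item up to 4 KB; transactional reads cost twice as much.
    """
    units_per_read = math.ceil(item_size_kb / 4)  # round item size up to 4 KB blocks
    if consistency == "strong":
        return units_per_read * reads_per_second
    if consistency == "eventual":
        return math.ceil(units_per_read * reads_per_second / 2)
    if consistency == "transactional":
        return 2 * units_per_read * reads_per_second
    raise ValueError(f"unknown consistency mode: {consistency!r}")


def write_capacity_units(item_size_kb: float, writes_per_second: int,
                         transactional: bool = False) -> int:
    """WCUs needed: one WCU = one write/s of an item up to 1 KB."""
    units_per_write = math.ceil(item_size_kb)  # round item size up to whole KB
    multiplier = 2 if transactional else 1     # transactional writes cost double
    return multiplier * units_per_write * writes_per_second


# 6 KB items read 100 times per second; 2.5 KB items written 10 times per second.
print(read_capacity_units(6, 100))                        # 200
print(read_capacity_units(6, 100, "eventual"))            # 100
print(write_capacity_units(2.5, 10))                      # 30
print(write_capacity_units(2.5, 10, transactional=True))  # 60
```

Because sizes round up, a 6 KB item costs two read units per strongly consistent read, so keeping items small is one of the easiest ways to reduce provisioned throughput.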

Users typically don't call the Amazon DynamoDB API directly. Instead, their application uses an AWS SDK, which manages the back-end interactions with the service.

DynamoDB data modeling requires denormalization. For engineers accustomed to SQL databases, and even to other NoSQL databases, rethinking the data model is a difficult but manageable step.

Read more on govindhtech.com

#AmazonDynamoDB#CompleteGuide#Database#DynamoDB#DynamoDBDatabase#sql#data#AmazonWebServices#Singledigit#Fullymanaged#aws#gamingsystems#technology#technews#news#govindhtech


Is School Management Software Useful?

School management ERP software can transform the way schools operate by streamlining their processes and improving communication among stakeholders. Schools that adopt it save time and money by automating tasks that were once performed manually on paper. Students gain fast access to vital information, and schools can offer a better learning environment for their pupils. The transparency and efficiency these systems bring make them vital components of the modern educational environment.

Genius Edusoft provides an Education Management ERP System, a cloud-based software package that consolidates all of a school's operations on a single platform. It offers fast data transfer, enhanced overall productivity, better transparency, advanced decision-making capabilities, and cross-platform compatibility. Automatic backups to centralized databases reduce the risk of data loss, and role-based access control ensures that only users with a valid role can access information. Its effective, robust, and scalable tools make Genius Edusoft suitable for schools of all sizes.
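Role-based access control, mentioned above, boils down to mapping each role to a set of permissions and denying anything not granted. The Python sketch below illustrates that idea only; the role names, permission strings, and `can_access` function are hypothetical and do not describe how Genius Edusoft or any other product implements it.

```python
# Hypothetical roles and permissions for a school system; real products
# define their own role models.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "administrator": frozenset({"view_grades", "edit_grades", "view_fees", "edit_fees"}),
    "teacher": frozenset({"view_grades", "edit_grades"}),
    "parent": frozenset({"view_grades", "view_fees"}),
}


def can_access(role: str, permission: str) -> bool:
    """Allow an action only if the user's role grants that permission.
    Unknown roles get no permissions (deny by default)."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


print(can_access("teacher", "edit_grades"))  # True
print(can_access("parent", "edit_grades"))   # False
print(can_access("visitor", "view_grades"))  # False
```

Denying by default means a misspelled or unassigned role never gains access accidentally.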

An integrated school management system helps cut costs by eliminating paper filing and storage requirements, providing a reliable electronic repository that makes document retrieval much easier for teachers and administrators. Its paperless design also reduces paper consumption, another benefit of adopting management systems in schools. Visit our official website to learn more about School Management Software.

Many school management systems are cloud-based, which lets users access the software anytime, from anywhere with an internet connection. Teachers and students can log in whenever required, even during holidays or when they are away from campus. This makes cloud-based systems particularly suitable for families who live far from the school and want to monitor their child's progress continuously. An effective cloud-based school management system must also be flexible and adaptable, especially for larger schools, so that it supports existing workflows instead of forcing the school to conform to a rigid system. For this reason, choosing a provider with extensive experience in and understanding of educational processes is essential.

A good school management program should also include communication tools that let parents and teachers communicate easily by text message or email. This is especially helpful during emergencies or pandemics, when face-to-face meetings may not be possible, and it keeps parents informed about matters such as fee collection schedules and school notices.

School management software provides an all-in-one solution that streamlines diverse tasks and activities at educational institutions, ranging from school information management and staff administration to academic program planning, fee collection, and student security. It also keeps teachers well informed, helping them create better academic environments through decision-support data and communication channels.

A school ERP lets parents and students reach administrators and teachers easily and receive real-time updates on upcoming events, assessments, tests, and other information. This helps create a positive school environment in which everyone works together toward a better education. There are many types of school management software on the market, each with its own advantages and disadvantages. When choosing one, make sure it is compatible with your current systems and workflows. It should also be affordable, easy to learn, and support multiple user roles, and ideally it should integrate seamlessly with popular third-party applications to automate data entry and save time.


Snowflake Business

Snowflake: Disrupting the Data Warehouse

In the ever-expanding world of data, businesses are constantly grappling with managing, storing, and gleaning actionable insights from vast information reserves. Enter Snowflake, a cloud-based data platform that has revolutionized how companies approach data warehousing.

What is Snowflake?

Snowflake is a fully managed, cloud-native data warehouse solution. What sets it apart from traditional data warehouses is its unique architecture:

Separation of Storage and Compute: Snowflake decouples data storage from computational resources. This means you can independently scale your storage and compute power, optimizing costs and performance.

Scalability: Snowflake’s elastic nature allows you to scale up or down effortlessly based on your workload demands, providing significant flexibility.

Pay-as-you-go Model: With Snowflake, you only pay for the resources you use. This eliminates the need for upfront hardware investments and minimizes waste.
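Because compute is billed separately from storage, compute cost depends on warehouse size and running time. The Python sketch below estimates credits for one warehouse run, assuming the published rates for standard virtual warehouses (credits per hour doubling with each size step from 1 for X-Small) and per-second billing with a 60-second minimum each time a warehouse starts or resumes. The function name is illustrative, the dollar price per credit (which varies by edition and region) is deliberately left out, and storage is billed separately; confirm current rates in Snowflake's documentation.

```python
# Credits per hour for standard virtual warehouses, by size.
CREDITS_PER_HOUR = {
    "X-Small": 1, "Small": 2, "Medium": 4, "Large": 8,
    "X-Large": 16, "2X-Large": 32, "3X-Large": 64, "4X-Large": 128,
}


def warehouse_credits(size: str, run_seconds: float) -> float:
    """Credits for one continuous run of a warehouse.

    Billing is per second with a 60-second minimum each time the
    warehouse starts or resumes.
    """
    billed_seconds = max(run_seconds, 60)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


print(warehouse_credits("Medium", 1800))  # 2.0  (30 minutes at 4 credits/hour)
print(warehouse_credits("X-Small", 30))   # billed as 60 s, about 0.0167 credits
```

Doubling the warehouse size doubles the hourly rate, so a job that finishes twice as fast on the larger size costs roughly the same number of credits.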

Key Advantages of the Snowflake Data Cloud

Near-Zero Maintenance: As a fully managed service, Snowflake takes care of infrastructure, upgrades, backups, and security, freeing up your IT resources to focus on value-adding tasks.

Support for Diverse Data: Snowflake handles structured, semi-structured (like JSON), and even unstructured data, making it a versatile platform for various use cases.

Performance at Scale: Snowflake’s multi-cluster, shared data architecture is designed to deliver lightning-fast query performance even with massive datasets and concurrent user access.

Secure Data Sharing: Snowflake enables secure, live data sharing within your organization or with external partners and customers. This fosters collaboration and can create new data-driven business opportunities.

Snowflake Use Cases

Snowflake’s adaptability makes it suitable for a wide range of industries and use cases, including:

Data Warehousing and Analytics: Snowflake serves as a centralized repository for all your business data, empowering you to run complex analytics and derive insights.

Customer 360: Snowflake helps you build comprehensive customer profiles by consolidating data from different sources, improving customer understanding.

Data Lakes: Snowflake complements data lakes by providing structured access to raw data, enhancing its value.

Machine Learning and AI: Snowflake streamlines data preparation and access for machine learning models.

The Future of Snowflake

Snowflake’s innovative approach to data warehousing has led to rapid adoption by enterprises of all sizes. As the company continues to expand its capabilities and partnerships, it’s well-positioned to become a cornerstone of modern data architectures. The rise of the Data Cloud, a concept largely pioneered by Snowflake, suggests a future where data becomes seamlessly accessible across organizations, industries, and applications.

You can find more information about Snowflake in this Snowflake

Conclusion:

Unogeeks is the No.1 IT Training Institute for SAP Training. Anyone Disagree? Please drop in a comment

You can check out our other latest blogs on Snowflake here – Snowflake Blogs

You can check out our Best In Class Snowflake Details here – Snowflake Training

Follow & Connect with us:

———————————-

For Training inquiries:

Call/Whatsapp: +91 73960 33555

Our Website ➜ https://unogeeks.com

Follow us:

Instagram: https://www.instagram.com/unogeeks

Facebook: https://www.facebook.com/UnogeeksSoftwareTrainingInstitute

Twitter: https://twitter.com/unogeeks


#.NET8#Angular#Angular17#ASP.NET#ASP.NET8#ASP.NETCore#Azure#C#EFCore#EntityFramework#GraphQL#MinimalAPIs#MSAzure#SignalR


Amazon Web Services & Adobe Experience Manager: A Journey Together (Part 10)

In the previous parts (1 to 9), we discussed how the digital marketing leader, AEM, met its friend AWS in the cloud and how the two became a very popular pair, bringing many benefits to digital marketers. We then explored AEM's foundation and structure, mainly the CRX repository and the way its MK is organized, how AEM and AWS work together, and which smaller modules they use to deliver architectural benefits. We also looked at how they combine to offer more through AEM eCommerce and Adobe Creative Cloud. In the last part, we began discussing how AEM OpenCloud, an open source solution, makes it easy to take advantage of AWS; that was the first part of the story. In this part, we continue with more of it.

As promised in Part 8, we started our journey into AEM OpenCloud, and in the previous part we explored a few interesting facts about it. In this part, we will look further at AEM OpenCloud, a variant of AEM cloud that provides an open source platform for running AEM on AWS.

I hope you are now ready to continue this journey into AEM OpenCloud and its all-in-one, open source bundled solution.

So let's get going.

Continued...

AEM OpenCloud Full-Set Architecture

AEM OpenCloud full-set architecture is a full-featured environment, suitable for PROD and STAGE environments. It includes AEM Publish, Author-Dispatcher, and Publish-Dispatcher EC2 instances within Auto Scaling groups (ASGs), which, combined with an Orchestrator application, make it possible to manage AEM capacity as instances scale out and in according to the load on the Dispatcher. The Orchestrator application manages AEM replication and flush agents as instances are created and terminated. This architecture also includes chaos-testing capability through Netflix Chaos Monkey, which can be configured to randomly terminate any of those instances within an ASG, allowing the architecture to run in production while continuously verifying that AEM OpenCloud can automatically recover from failure.

Netflix Chaos Monkey:

Chaos Monkey is responsible for randomly terminating instances in production to ensure that engineers implement their services to be resilient to instance failures.
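The self-healing idea behind this kind of chaos testing can be sketched as a small Python simulation: a "monkey" terminates a random instance, and the Auto Scaling group launches a replacement to restore its desired capacity. Everything here is a hypothetical toy model; `AutoScalingGroupSim` and `chaos_monkey` are not the AWS Auto Scaling API or Netflix's Chaos Monkey code, and the step where AEM OpenCloud's Orchestrator would register replication and flush agents for a new instance is left out.

```python
import itertools
import random


class AutoScalingGroupSim:
    """Toy Auto Scaling group that keeps a fixed desired capacity.
    A simulation for illustration only, not the AWS Auto Scaling API."""

    def __init__(self, name: str, desired: int) -> None:
        self.name = name
        self.desired = desired
        self.instances: set[str] = set()
        self._ids = itertools.count(1)  # unique suffixes for new instance IDs
        self.reconcile()

    def reconcile(self) -> list[str]:
        """Launch replacements until desired capacity is restored."""
        launched = []
        while len(self.instances) < self.desired:
            instance_id = f"{self.name}-{next(self._ids)}"
            self.instances.add(instance_id)
            launched.append(instance_id)
        return launched


def chaos_monkey(groups: list[AutoScalingGroupSim],
                 rng: random.Random) -> tuple[AutoScalingGroupSim, str]:
    """Terminate one randomly chosen instance in a randomly chosen group."""
    group = rng.choice(groups)
    victim = rng.choice(sorted(group.instances))
    group.instances.remove(victim)
    return group, victim


rng = random.Random(42)  # fixed seed so the run is repeatable
groups = [AutoScalingGroupSim("publish", 2), AutoScalingGroupSim("publish-dispatcher", 2)]
for _ in range(3):
    group, victim = chaos_monkey(groups, rng)
    replacements = group.reconcile()  # self-healing step
    print(f"terminated {victim}, launched {replacements}")
    assert len(group.instances) == group.desired
```

The point of running this continuously in production is that recovery is exercised all the time, so a broken self-healing path is discovered before a real outage exposes it.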

AEM Author Primary and Author Standby are managed separately: a failure of the Author Primary instance can be mitigated by promoting the Author Standby to become the new Author Primary as soon as possible, while a new environment is built in parallel to take over from the one that lost its Author Primary.

Full-set architecture uses Amazon CloudFront as the CDN, sitting in front of AEM Publish-Dispatcher load balancer, providing global distribution of AEM cached content.

The full-set architecture offers three types of content backup mechanisms: AEM package backup, live AEM repository EBS snapshots (taken when all AEM instances are up and running), and offline AEM repository EBS snapshots (taken when AEM Author and Publish are stopped). You can use any of these backups for blue-green deployment, providing the capability to replicate a complete environment or to restore an environment from any point in time.

On the security front, this architecture provides a minimal attack surface, with one public entry point (either the Amazon CloudFront distribution or the AEM Publish-Dispatcher load balancer) and a second entry point for the AEM Author-Dispatcher load balancer.

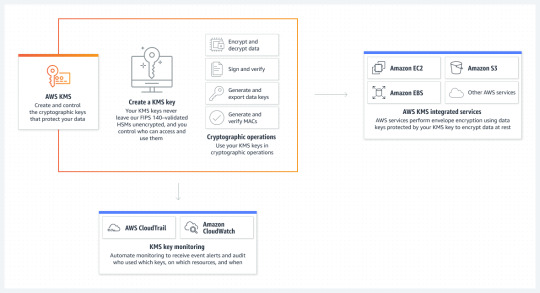

AEM OpenCloud supports encryption using AWS Key Management Service (AWS KMS) keys across its AWS resources.

The full-set architecture also includes an Amazon CloudWatch monitoring dashboard that visualizes the capacity of AEM Author-Dispatcher, Author Primary, Author Standby, Publish, and Publish-Dispatcher, along with their CPU, memory, and disk consumption.

Amazon CloudWatch alarms are also configured across the most important AWS resources, with notifications delivered through an Amazon SNS topic.
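CloudWatch alarms can be configured to fire when M out of the last N evaluation periods breach a threshold (the "datapoints to alarm" and "evaluation periods" settings). The Python sketch below is a simplified model of that logic, assuming a greater-than-threshold comparison; real CloudWatch also supports other comparison operators and configurable treatment of missing data, which this sketch ignores. The function name and the CPU samples are hypothetical.

```python
def alarm_state(datapoints: list[float], threshold: float,
                evaluation_periods: int, datapoints_to_alarm: int) -> str:
    """Simplified 'M out of N' alarm evaluation with a
    greater-than-threshold comparison over the most recent periods."""
    window = datapoints[-evaluation_periods:]
    if len(window) < evaluation_periods:
        return "INSUFFICIENT_DATA"  # not enough periods to evaluate
    breaching = sum(1 for value in window if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


cpu = [40, 85, 90, 70, 95]  # hypothetical CPU utilization samples (%)
print(alarm_state(cpu, threshold=80, evaluation_periods=3, datapoints_to_alarm=2))  # ALARM
print(alarm_state([85, 90, 40, 50, 60], 80, 3, 2))  # OK
print(alarm_state([90], 80, 3, 2))                  # INSUFFICIENT_DATA
```

Requiring two of three breaching periods, rather than one, keeps a single CPU spike from sending an SNS notification while still catching sustained load.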

In this journey, we are walking through AEM OpenCloud, an open source variant for running AEM on AWS. A few partners provide a quick start that deploys it in a few clicks, making it a fast and effortless option for delivering holistic, personalized experiences at scale and tailoring each moment of your digital marketing journey.

For more details on this journey, you can revisit Parts 1 to 9.

Keep reading.

#aem#adobe#aws#wcm#aws lambda#cloud#programing#ec2#elb#security#AEM Open Cloud#migration#CURL#jenkins the librarians#ci/cd#xss#ddos attack#ddos protection#ddos#Distributed Denial of Service#Apache#cross site scripting#dispatcher#Security Checklist#mod_rewrite#mod_security#SAML#crx#publish#author


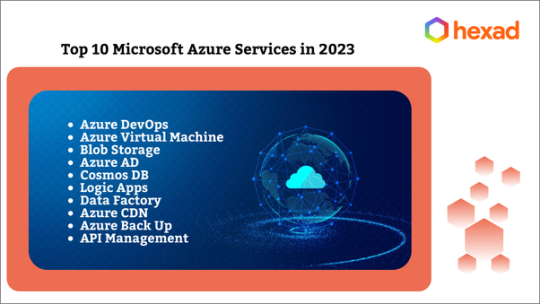

What are the top 10 most used Microsoft Azure services in 2023?

Microsoft Azure was first introduced in 2008 and became commercially available in 2010. Since then, demand for Azure services has grown steadily, and today approximately 90% of Fortune 500 companies use Microsoft Azure. As a cloud service provider, Azure offers numerous services, ranging from hosting project repositories to managing code, tasks, deployments, and service maintenance.

Azure offers technical support in more than 40 geographies and provides more than 600 services, making it one of the leading cloud platforms. Below, I list the top 10 most used Microsoft Azure services in 2023, each with a brief description.

1. Azure DevOps

Among all Azure services, Azure DevOps remains an evergreen favorite. It is a reliable and intelligent tool for managing projects and for testing and deploying code through CI/CD. Azure DevOps is a comprehensive set of services, including Azure Repos, Azure Pipelines, Azure Boards, Azure Test Plans, and Azure Artifacts. At Hexad, our DevOps team uses it to deliver custom software development services tailored to our clients' requirements.

2. Azure Virtual Machines

A virtual machine is a software-based computer environment. Microsoft Azure lets clients create virtual machines running Windows or Linux operating systems to meet their business needs. Azure offers several kinds of virtual machines (VMs), such as compute-optimized, memory-optimized, and burstable VMs. In our Azure developers’ experience, each virtual machine has its own virtual hardware and specifications, depending on the chosen plan.

3. Azure Blob Storage

Blob Storage is a service for storing large amounts of unstructured data that does not fit a specific data model or definition. It is used to serve images or documents directly to a browser and to write log files. It also supports streaming media files. Our Azure experts have also used it to store database backup files for backup and restore.

4. Azure AD

Azure Active Directory (Azure AD) handles authentication and authorization for systems accessed from any location and verifies the credentials of users who sign in. At Hexad, we have delivered Azure projects in which the Azure AD service allowed an organization’s employees to access resources from both external and internal sources.

5. Azure Cosmos DB

Azure Cosmos DB is a fully managed NoSQL database service. At Hexad, our Azure developers have used Cosmos DB, which is designed for fast query response times and can also manage large archive databases with guaranteed speed at any scale. Cosmos DB stores data as JSON documents and provides API endpoints for managing these documents through the SDK.

6. Logic Apps

Azure Logic Apps has gradually gained popularity in recent years, and it is a powerful solution for integrating apps. It helps connect applications, data, and devices from anywhere, and it allows almost all types of B2B transactions to be carried out efficiently through electronic data interchange (EDI) standards.

7. Azure Data Factory

Our Azure developers have used Azure Data Factory, which ingests data from various sources and automates data movement through pipelines. After processing, the results can be published to Azure Data Lake for business analytics applications. At Hexad, our developers have found that Data Factory is a compute service for orchestrating and monitoring workflows and supporting data analysis, rather than a data storage service.

8. Azure CDN

Azure Content Delivery Network (CDN) has extended its reach with a variety of service integrations, such as web applications, Azure Storage, and Azure Cloud Services. Its built-in security mechanisms also let developers spend less time managing security solutions. The main advantages of this service are fast response times and low content load times.

9. Azure Backup

Azure Backup helps companies protect their data and recover from human error. We use it to back up SQL workloads as well as Azure VMs. In addition to unlimited data transfer and multiple storage options, this Azure service provides consistent backups for all applications. Through extensive use of it, our software developers have addressed two of the biggest issues: storage scalability and backup management.

10. Azure API Management

This Azure service allows users to publish and manage web APIs with just a few clicks. API Management secures APIs using filters, tokens, and keys, helping protect them from various security vulnerabilities. It also provides API access to microservices architectures. One of the features of this service is its consumption-based usage model, which offers automatic scaling and high availability.

Thanks to their wide range, Azure cloud services offer reliable and cost-effective solutions for the whole company. At Hexad Infosoft, we see Azure services for professionals and enterprises as all-round alternatives to traditional organizational processes, with the top Azure services greatly improving performance. If you require Azure consulting, implementation, or migration services, please share your requirements at [email protected]. Please visit our website: https://hexad.de/en/index.html

#azure services#azuredevelopers#azuredevelopment#hexad#hexad_infosoft#softwaredevelopment#software development company


Introduction to AWS Cloud Development

Introducing AWS cloud development: an advantageous, flexible, and scalable environment that provides numerous features to organizations and individuals. Using this platform, developers can quickly build, test, and deploy applications, leaving them more time to focus on other areas of their business or project! In this article, we'll review its benefits and discuss the many available services and tools.

Benefits of AWS Cloud Development

AWS cloud development offers numerous benefits, including:

1. With AWS, developers get a flexible, adjustable infrastructure that can easily grow or shrink with changing demand. Resources can be modified to accommodate your applications' needs without fuss or hassle.

2. Choose AWS and be economical: with AWS, developers pay only for the services they use, which makes it an economical choice for software development.

3. With AWS's reliable infrastructure, developers can confidently build and launch their applications on an architecture designed for high uptime and minimal downtime.

4. AWS provides a robust security framework with multiple layers of protection for applications and data, so developers can rely on a secure network to protect them.

AWS Services

With Amazon Web Services, developers can access a wide range of services to build, test, and deploy their applications. The essential ones include:

1. Amazon Elastic Compute Cloud (EC2) offers resizable computing capacity in the cloud. With EC2, developers can quickly and easily deploy their apps on virtual servers, which suits businesses with ever-changing demands.

2. Amazon Simple Storage Service (S3) is a highly scalable storage service that lets developers securely store and access virtually unlimited amounts of data from anywhere.

3. For developers who need to quickly set up, manage, and scale a relational database, Amazon Relational Database Service (RDS) is a strong choice. RDS supports engines such as MySQL, Oracle, and PostgreSQL and simplifies maintenance with automated backups.

4. AWS Elastic Beanstalk makes it simple to deploy and run applications written in languages including Java, .NET, PHP, Python, Ruby, and Node.js, with minimal effort from you. This fully managed service provides an elastic platform that scales as your requirements change.

AWS Tools

AWS also offers a comprehensive suite of tools that help developers create, test, and launch their applications quickly. These include:

1. AWS CloudFormation: With this innovative service, developers can easily create and manage their AWS resources using templates. Utilizing CloudFormation makes it effortless to automate the deployment of any applications that have been developed.

2. AWS CodePipeline: Developers use this fully managed continuous delivery service to automate the release process of their applications.

3. AWS CodeCommit: A secure, fully managed, cloud-based source control service that lets developers store their code repositories with confidence.

4. AWS CodeBuild: A fully managed build service that lets developers build and test their code in the cloud without managing any infrastructure, so they can focus on developing projects for their customers quickly and efficiently.

Conclusion:

If you are a developer who needs an agile, cost-efficient, and secure platform for your applications, AWS cloud development is the answer. Its broad spectrum of services and tools makes it easier than ever to create complex products while benefiting from reliability and scalability, all without spending too much time or money.

Practical Logix offers a complete range of services to cover all your cloud needs. A Cloud Strategy and Analysis is the most effective way to understand the available options and to learn which types of Cloud Consulting Services would suit you best. We can also guide you in streamlining current workflows within the cloud environment, building solutions designed specifically to leverage cloud technology, and maintaining them professionally so they stay up to date while optimizing the usage of your overall infrastructure.


How to set up command-line access to Amazon Keyspaces (for Apache Cassandra) by using the new developer toolkit Docker image

Amazon Keyspaces (for Apache Cassandra) is a scalable, highly available, and fully managed Cassandra-compatible database service. Amazon Keyspaces helps you run your Cassandra workloads more easily by using a serverless database that can scale up and down automatically in response to your actual application traffic. Because Amazon Keyspaces is serverless, there are no clusters or nodes to provision and manage. You can get started with Amazon Keyspaces with a few clicks in the console or a few changes to your existing Cassandra driver configuration. In this post, I show you how to set up command-line access to Amazon Keyspaces by using the keyspaces-toolkit Docker image. The keyspaces-toolkit Docker image contains commonly used Cassandra developer tooling. The toolkit comes with the Cassandra Query Language Shell (cqlsh) and is configured with best practices for Amazon Keyspaces. The container image is open source and also compatible with Apache Cassandra 3.x clusters.

A command line interface (CLI) such as cqlsh can be useful when automating database activities. You can use cqlsh to run one-time queries and perform administrative tasks, such as modifying schemas or bulk-loading flat files. You also can use cqlsh to enable Amazon Keyspaces features, such as point-in-time recovery (PITR) backups, and to assign resource tags to keyspaces and tables. The following screenshot shows a cqlsh session connected to Amazon Keyspaces and the code to run a CQL create table statement.

Build a Docker image

To get started, download and build the Docker image so that you can run the keyspaces-toolkit in a container. A Docker image is the template for the complete and executable version of an application. It’s a way to package applications and preconfigured tools with all their dependencies. To build and run the image for this post, install the latest Docker engine and Git on the host or local environment. The following command builds the image from the source.

docker build --tag amazon/keyspaces-toolkit --build-arg CLI_VERSION=latest https://github.com/aws-samples/amazon-keyspaces-toolkit.git

The preceding command includes the following parameters:

--tag – The name of the image in the name:tag format. Leaving out the tag results in latest.
--build-arg CLI_VERSION – This allows you to specify the version of the base container. Docker images are composed of layers. If you’re using the AWS CLI Docker image, aligning versions significantly reduces the size and build times of the keyspaces-toolkit image.

Connect to Amazon Keyspaces

Now that you have a container image built and available in your local repository, you can use it to connect to Amazon Keyspaces. To use cqlsh with Amazon Keyspaces, create service-specific credentials for an existing AWS Identity and Access Management (IAM) user. The service-specific credentials enable IAM users to access Amazon Keyspaces, but not other AWS services. The following command starts a new container running the cqlsh process.

docker run --rm -ti amazon/keyspaces-toolkit cassandra.us-east-1.amazonaws.com 9142 --ssl -u "SERVICEUSERNAME" -p "SERVICEPASSWORD"

The preceding command includes the following parameters:

run – The Docker command to start the container from an image. It’s the equivalent of running create and start.
--rm – Automatically removes the container when it exits, so a new container is created per session or run.
-ti – Allocates a pseudo-TTY (t) and keeps STDIN open (i) even if not attached (remove i when user input is not required).
amazon/keyspaces-toolkit – The image name of the keyspaces-toolkit.
cassandra.us-east-1.amazonaws.com – The Amazon Keyspaces endpoint.
9142 – The default SSL port for Amazon Keyspaces.

After connecting to Amazon Keyspaces, exit the cqlsh session and terminate the process by using the QUIT or EXIT command.

Drop-in replacement

Now, simplify the setup by assigning an alias (or DOSKEY for Windows) to the Docker command.
The alias acts as a shortcut, enabling you to use the alias keyword instead of typing the entire command. You will use cqlsh as the alias keyword so that you can use the alias as a drop-in replacement for your existing Cassandra scripts. The alias contains the parameter -v "$(pwd)":/source, which mounts the current directory of the host. This is useful for importing and exporting data with COPY or using the cqlsh --file command to load external cqlsh scripts.

alias cqlsh='docker run --rm -ti -v "$(pwd)":/source amazon/keyspaces-toolkit cassandra.us-east-1.amazonaws.com 9142 --ssl'

For security reasons, don’t store the user name and password in the alias. After setting up the alias, you can create a new cqlsh session with Amazon Keyspaces by calling the alias and passing in the service-specific credentials.

cqlsh -u "SERVICEUSERNAME" -p "SERVICEPASSWORD"

Later in this post, I show how to use AWS Secrets Manager to avoid using plaintext credentials with cqlsh. You can use Secrets Manager to store, manage, and retrieve secrets.

Create a keyspace

Now that you have the container and alias set up, you can use the keyspaces-toolkit to create a keyspace by using cqlsh to run CQL statements. In Cassandra, a keyspace is the highest-order structure in the CQL schema, which represents a grouping of tables. A keyspace is commonly used to define the domain of a microservice or isolate clients in a multi-tenant strategy. Amazon Keyspaces is serverless, so you don’t have to configure clusters, hosts, or Java virtual machines to create a keyspace or table. When you create a new keyspace or table, it is associated with an AWS Account and Region. Though a traditional Cassandra cluster is limited to 200 to 500 tables, with Amazon Keyspaces the number of keyspaces and tables for an account and Region is virtually unlimited.
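One caveat about using the cqlsh alias from the Drop-in replacement section inside existing scripts: bash does not expand aliases in non-interactive shells unless `shopt -s expand_aliases` is set, so a script that calls `cqlsh` may not see the alias at all. A shell function avoids that limitation. The sketch below is an assumption, not part of keyspaces-toolkit: it wraps the same `docker run` command, allocates a pseudo-TTY only when stdin is a terminal (so piped input does not trip over `-t`), and reads a hypothetical `DOCKER_BIN` variable so the generated command can be inspected without running Docker.

```bash
#!/usr/bin/env bash
# Sketch: a function-based alternative to the cqlsh alias.
# Functions, unlike aliases, are available in non-interactive bash scripts.
# DOCKER_BIN is a hypothetical override (e.g. DOCKER_BIN=echo prints the
# command instead of running it); it defaults to the real docker binary.
cqlsh() {
  local docker_bin="${DOCKER_BIN:-docker}"
  local tty_flags="-i"
  # Only allocate a pseudo-TTY when stdin is a terminal; with piped input,
  # keep STDIN open (-i) but skip -t.
  if [ -t 0 ]; then
    tty_flags="-ti"
  fi
  # Same image, endpoint, port, and SSL flag as the alias; the current
  # directory is mounted at /source for COPY and --file.
  "$docker_bin" run --rm "$tty_flags" -v "$(pwd)":/source \
    amazon/keyspaces-toolkit cassandra.us-east-1.amazonaws.com 9142 --ssl "$@"
}
```

Define the function in your script (or in .bashrc) and call it exactly like the alias, for example `cqlsh -u "SERVICEUSERNAME" -p "SERVICEPASSWORD" --file /source/schema.cql`, where schema.cql is a hypothetical file in the current directory.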

The following command creates a new keyspace by using SingleRegionStrategy, which replicates data three times across multiple Availability Zones in a single AWS Region. Storage is billed by the raw size of a single replica, and there is no network transfer cost when replicating data across Availability Zones. Using keyspaces-toolkit, connect to Amazon Keyspaces and run the following command from within the cqlsh session.

CREATE KEYSPACE amazon WITH REPLICATION = {'class': 'SingleRegionStrategy'} AND TAGS = {'domain' : 'shoppingcart' , 'app' : 'acme-commerce'};

The preceding command includes the following parameters:

REPLICATION – SingleRegionStrategy replicates data three times across multiple Availability Zones.
TAGS – A label that you assign to an AWS resource. For more information about using tags for access control, microservices, cost allocation, and risk management, see Tagging Best Practices.

Create a table

Previously, you created a keyspace without needing to define clusters or infrastructure. Now, you will add a table to your keyspace in a similar way. A Cassandra table definition looks like a traditional SQL create table statement with an additional requirement for a partition key and clustering keys. These keys determine how data in CQL rows is distributed, sorted, and uniquely accessed. Tables in Amazon Keyspaces have the following unique characteristics:

Virtually no limit to table size or throughput – In Amazon Keyspaces, a table’s capacity scales up and down automatically in response to traffic. You don’t have to manage nodes or consider node density. Performance stays consistent as your tables scale up or down.
Support for “wide” partitions – CQL partitions can contain a virtually unbounded number of rows without the need for additional bucketing and sharding partition keys for size. This allows you to scale partitions “wider” than the traditional Cassandra best practice of 100 MB.
No compaction strategies to consider – Amazon Keyspaces doesn’t require defined compaction strategies. Because you don’t have to manage compaction strategies, you can build powerful data models without having to consider the internals of the compaction process. Performance stays consistent even as write, read, update, and delete requirements change.
No repair process to manage – Amazon Keyspaces doesn’t require you to manage a background repair process for data consistency and quality.
No tombstones to manage – With Amazon Keyspaces, you can delete data without the challenge of managing tombstone removal, table-level grace periods, or zombie data problems.
1 MB row quota – Amazon Keyspaces supports the Cassandra blob type, but storing large blob data greater than 1 MB results in an exception. It’s a best practice to store larger blobs across multiple rows or in Amazon Simple Storage Service (Amazon S3) object storage.
Fully managed backups – PITR helps protect your Amazon Keyspaces tables from accidental write or delete operations by providing continuous backups of your table data.

The following command creates a table in Amazon Keyspaces by using a cqlsh statement with custom properties specifying on-demand capacity mode, PITR enabled, and AWS resource tags. Using keyspaces-toolkit to connect to Amazon Keyspaces, run this command from within the cqlsh session.

CREATE TABLE amazon.eventstore( id text, time timeuuid, event text, PRIMARY KEY(id, time)) WITH CUSTOM_PROPERTIES = { 'capacity_mode':{'throughput_mode':'PAY_PER_REQUEST'}, 'point_in_time_recovery':{'status':'enabled'} } AND TAGS = {'domain' : 'shoppingcart' , 'app' : 'acme-commerce' , 'pii': 'true'};

The preceding command includes the following parameters:

capacity_mode – Amazon Keyspaces has two read/write capacity modes for processing reads and writes on your tables. The default for new tables is on-demand capacity mode (the PAY_PER_REQUEST flag).
point_in_time_recovery – When you enable this parameter, you can restore an Amazon Keyspaces table to a point in time within the preceding 35 days. Enabling PITR has no overhead or performance impact.
TAGS – Allows you to organize resources, define domains, specify environments, allocate cost centers, and label security requirements.

Insert rows

Before inserting data, check if your table was created successfully. Amazon Keyspaces performs data definition language (DDL) operations, such as creating and deleting tables, asynchronously. You also can monitor the creation status of a new resource programmatically by querying the system schema table. Also, you can use a toolkit helper for exponential backoff.

Check for table creation status

Cassandra provides information about the running cluster in its system tables. With Amazon Keyspaces, there are no clusters to manage, but it still provides system tables for the Amazon Keyspaces resources in an account and Region. You can use the system tables to understand the creation status of a table. The system_schema_mcs keyspace is a new system keyspace with additional content related to serverless functionality. Using keyspaces-toolkit, run the following SELECT statement from within the cqlsh session to retrieve the status of the newly created table.

SELECT keyspace_name, table_name, status FROM system_schema_mcs.tables WHERE keyspace_name = 'amazon' AND table_name = 'eventstore';

The following screenshot shows an example of output for the preceding CQL SELECT statement.

Insert sample data

Now that you have created your table, you can use CQL statements to insert and read sample data. Amazon Keyspaces requires all write operations (insert, update, and delete) to use the LOCAL_QUORUM consistency level for durability. With reads, an application can choose between eventual consistency and strong consistency by using LOCAL_ONE or LOCAL_QUORUM consistency levels.
The benefits of eventual consistency in Amazon Keyspaces are higher availability and reduced cost. See the following code.

CONSISTENCY LOCAL_QUORUM;
INSERT INTO amazon.eventstore(id, time, event) VALUES ('1', now(), '{eventtype:"click-cart"}');
INSERT INTO amazon.eventstore(id, time, event) VALUES ('2', now(), '{eventtype:"showcart"}');
INSERT INTO amazon.eventstore(id, time, event) VALUES ('3', now(), '{eventtype:"clickitem"}') IF NOT EXISTS;
SELECT * FROM amazon.eventstore;

The preceding code uses IF NOT EXISTS, a lightweight transaction, to perform a conditional write. With Amazon Keyspaces, there is no heavy performance penalty for using lightweight transactions; you get performance characteristics similar to those of standard insert, update, and delete operations. The following screenshot shows the output from running the preceding statements in a cqlsh session. The three INSERT statements added three unique rows to the table, and the SELECT statement returned all the data within the table.

Export table data to your local host

You now can export the data you just inserted by using the cqlsh COPY TO command. This command exports the data to the /source directory, which the alias created earlier mounts from the host’s current working directory. The following cqlsh statement exports your table data to the export.csv file located on the host machine.

CONSISTENCY LOCAL_ONE;
COPY amazon.eventstore(id, time, event) TO '/source/export.csv' WITH HEADER=false;

The following screenshot shows the output of the preceding command from the cqlsh session. After the COPY TO command finishes, you should be able to view export.csv in the current working directory of the host machine. For more information about tuning export and import processes when using cqlsh COPY TO, see Loading data into Amazon Keyspaces with cqlsh.

Use credentials stored in Secrets Manager

Previously, you used service-specific credentials to connect to Amazon Keyspaces.
In the following example, I show how to use the keyspaces-toolkit helpers to store and access service-specific credentials in Secrets Manager. The helpers are a collection of scripts bundled with keyspaces-toolkit to assist with common tasks. By overriding the default entry point cqlsh, you can call the aws-sm-cqlsh.sh script, a wrapper around the cqlsh process that retrieves the Amazon Keyspaces service-specific credentials from Secrets Manager and passes them to the cqlsh process. This script allows you to avoid hard-coding the credentials in your scripts. The following diagram illustrates this architecture.

Configure the container to use the host’s AWS CLI credentials

The keyspaces-toolkit extends the AWS CLI Docker image, making keyspaces-toolkit extremely lightweight. Because you may already have the AWS CLI Docker image in your local repository, keyspaces-toolkit adds only an additional 10 MB layer extension to the AWS CLI. This is approximately 15 times smaller than using cqlsh from the full Apache Cassandra 3.11 distribution. The AWS CLI runs in a container and doesn’t have access to the AWS credentials stored on the container’s host. You can share credentials with the container by mounting the ~/.aws directory. Mount the host directory to the container by using the -v parameter. To validate a proper setup, the following command lists current AWS CLI named profiles.

docker run --rm -ti -v ~/.aws:/root/.aws --entrypoint aws amazon/keyspaces-toolkit configure list-profiles

The ~/.aws directory is a common location for the AWS CLI credentials file. If you configured the container correctly, you should see a list of profiles from the host credentials. For instructions about setting up the AWS CLI, see Step 2: Set Up the AWS CLI and AWS SDKs.
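The --secret-string value passed to create-secret in the next step is JSON, and its double quotes have to survive shell quoting; a password that itself contains quotes or backslashes makes hand-written JSON even more fragile. A minimal sketch, assuming bash and sed: the hypothetical helpers `json_escape` and `build_keyspaces_secret` below (not part of keyspaces-toolkit or the AWS CLI) assemble the same fields (username, password, engine, host, port) with the needed escaping. They escape only backslashes and double quotes; control characters such as newlines are not handled, so if `jq` is available, `jq -n --arg ...` is a more complete option.

```bash
#!/usr/bin/env bash
# Escape a value for use inside a JSON string literal.
# Backslashes are escaped first so the backslashes added for quotes are
# not doubled. Control characters are NOT escaped (sketch only).
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Hypothetical helper: print the secret JSON for Amazon Keyspaces
# service-specific credentials. The port defaults to 9142.
build_keyspaces_secret() {
  local user="$1" password="$2" host="$3" port="${4:-9142}"
  printf '{"username":"%s","password":"%s","engine":"cassandra","host":"%s","port":"%s"}' \
    "$(json_escape "$user")" "$(json_escape "$password")" \
    "$(json_escape "$host")" "$(json_escape "$port")"
}
```

For example, with the credentials held in hypothetical KS_USER and KS_PASS environment variables, you could pass `--secret-string "$(build_keyspaces_secret "$KS_USER" "$KS_PASS" cassandra.us-east-1.amazonaws.com)"` to the create-secret command; the outer double quotes keep the whole JSON document as a single argument.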

Store credentials in Secrets Manager

Now that you have configured the container to access the host’s AWS CLI credentials, you can use the Secrets Manager API to store the Amazon Keyspaces service-specific credentials in Secrets Manager. The secret name keyspaces-credentials in the following command is also used in subsequent steps.

docker run --rm -ti -v ~/.aws:/root/.aws --entrypoint aws amazon/keyspaces-toolkit secretsmanager create-secret --name keyspaces-credentials --description "Store Amazon Keyspaces Generated Service Credentials" --secret-string '{"username":"SERVICEUSERNAME","password":"SERVICEPASSWORD","engine":"cassandra","host":"SERVICEENDPOINT","port":"9142"}'

The preceding command includes the following parameters:

--entrypoint – The default entry point is cqlsh, but this command uses this flag to access the AWS CLI.
--name – The name used to identify the key to retrieve the secret in the future.
--secret-string – Stores the service-specific credentials. Replace SERVICEUSERNAME and SERVICEPASSWORD with your credentials. Replace SERVICEENDPOINT with the service endpoint for the AWS Region.

Creating and storing secrets requires CreateSecret and GetSecretValue permissions in your IAM policy. As a best practice, rotate secrets periodically when storing database credentials.

Use the Secrets Manager helper script

Use the Secrets Manager helper script to sign in to Amazon Keyspaces by passing the secret name keyspaces-credentials, created in the preceding command, in place of the user and password fields.

docker run --rm -ti -v ~/.aws:/root/.aws --entrypoint aws-sm-cqlsh.sh amazon/keyspaces-toolkit keyspaces-credentials --ssl --execute "DESCRIBE Keyspaces"

The preceding command includes the following parameters:

-v – Used to mount the directory containing the host’s AWS CLI credentials file.
--entrypoint – Use the helper by overriding the default entry point of cqlsh to access the Secrets Manager helper script, aws-sm-cqlsh.sh.
keyspaces-credentials – The key to access the credentials stored in Secrets Manager.
--execute – Runs a CQL statement.

Update the alias

You now can update the alias so that your scripts don’t contain plaintext passwords. You also can manage users and roles through Secrets Manager. The following code sets up a new alias that uses the keyspaces-toolkit Secrets Manager helper to retrieve the service-specific credentials from Secrets Manager.

alias cqlsh='docker run --rm -ti -v ~/.aws:/root/.aws -v "$(pwd)":/source --entrypoint aws-sm-cqlsh.sh amazon/keyspaces-toolkit keyspaces-credentials --ssl'

To have the alias available in every new terminal session, add the alias definition to your .bashrc file, which is executed in every new terminal window. You can usually find this file at $HOME/.bashrc or $HOME/.bash_aliases (loaded by $HOME/.bashrc).

Validate the alias

Now that you have updated the alias with the Secrets Manager helper, you can use cqlsh without the Docker details or credentials, as shown in the following code.

cqlsh --execute "DESCRIBE TABLE amazon.eventstore;"

The following screenshot shows the running of the cqlsh DESCRIBE TABLE statement by using the alias created in the previous section. In the output, you should see the table definition of the amazon.eventstore table you created in the previous step.

Conclusion

In this post, I showed how to get started with Amazon Keyspaces and the keyspaces-toolkit Docker image. I used Docker to build an image and run a container for a consistent and reproducible experience. I also used an alias to create a drop-in replacement for existing scripts, and used built-in helpers to integrate cqlsh with Secrets Manager to store service-specific credentials. Now you can use the keyspaces-toolkit with your Cassandra workloads.

As a next step, you can store the image in Amazon Elastic Container Registry, which allows you to access the keyspaces-toolkit from CI/CD pipelines and other AWS services such as AWS Batch.
Additionally, you can control the image lifecycle of the container across your organization. You can even attach lifecycle policies that expire images based on age or image count. For more information, see Pushing an image.

Cheat sheet of useful commands

I did not cover the following commands in this blog post, but they will be helpful when you work with cqlsh, AWS CLI, and Docker.

--- Docker ---
#To view the logs from the container. Helpful when debugging
docker logs CONTAINERID
#Exit code of the container. Helpful when debugging
docker inspect --format='{{.State.ExitCode}}' CONTAINERID

--- CQL ---
#Describe keyspace to view keyspace definition
DESCRIBE KEYSPACE keyspace_name;
#Describe table to view table definition
DESCRIBE TABLE keyspace_name.table_name;
#Select samples with limit to minimize output
SELECT * FROM keyspace_name.table_name LIMIT 10;

--- Amazon Keyspaces CQL ---
#Change provisioned capacity for tables
ALTER TABLE keyspace_name.table_name WITH custom_properties={'capacity_mode':{'throughput_mode': 'PROVISIONED', 'read_capacity_units': 4000, 'write_capacity_units': 3000}} ;
#Describe current capacity mode for tables
SELECT keyspace_name, table_name, custom_properties FROM system_schema_mcs.tables where keyspace_name = 'amazon' and table_name='eventstore';

--- Linux ---
#Count all files in the current directory and its subdirectories
find . -type f | wc -l
#Remove header from csv
sed -i '1d' myData.csv

About the Author

Michael Raney is a Solutions Architect with Amazon Web Services.

https://aws.amazon.com/blogs/database/how-to-set-up-command-line-access-to-amazon-keyspaces-for-apache-cassandra-by-using-the-new-developer-toolkit-docker-image/


Building a Mouse Squad Against COVID-19

https://sciencespies.com/nature/building-a-mouse-squad-against-covid-19/


Tucked away on Mount Desert Island off the coast of Maine, the Jackson Laboratory (JAX) may seem removed from the pandemic roiling the world. It’s anything but. The lab is busy breeding animals for studying the SARS-CoV-2 coronavirus and is at the forefront of efforts to minimize the disruption of research labs everywhere.

During normal times, the 91-year-old independent, nonprofit biomedical research institution serves as a leading supplier of research mice to labs around the world. It breeds, maintains and distributes more than 11,000 strains of genetically defined mice for research on a huge array of disorders: common diseases such as diabetes and cancer through to rare blood disorders such as aplastic anemia. Scientists studying aging can purchase elderly mice from JAX for their work; those researching disorders of balance can turn to mice with defects of the inner ear that cause the creatures to keep moving in circles.

But these are not normal times. The Covid-19 pandemic has skyrocketed the demand for new strains of mice to help scientists understand the progression of the disease, test existing drugs, find new therapeutic targets and develop vaccines. At the same time, with many universities scaling back employees on campus, the coronavirus crisis forced labs studying a broad range of topics to cull their research animals, many of which took years to breed and can take equally long to recoup.

JAX is responding to both concerns: it has raced to collect and cryopreserve existing strains of lab mice and to start breeding new ones for SARS-CoV-2 research.

Overseeing these efforts is neuroscientist Cathleen “Cat” Lutz, director of the Mouse Repository and the Rare and Orphan Disease Center at JAX. Lutz spoke with Knowable Magazine about the lab’s current round-the-clock activity. This conversation has been edited for length and clarity.

When did you first hear about the new coronavirus?

We heard about it in early January, like everyone else. I have colleagues at the Jackson Laboratory facilities in China. One of them, a young man named Qiming Wang, contacted me on February 3. He is a researcher in our Shanghai office, but he takes the bullet train to Wuhan on the weekends to be back with his family. He was on lockdown in Wuhan. He began describing the situation in China. Police were patrolling the streets. There were a couple of people in his building who had tested positive for Covid-19. It was an incredibly frightening time.

At the time, in the US we were not really thinking about the surge that was going to hit us. And here was a person who was living through it. He sent us a very heartfelt and touching email asking: What could JAX do?

We started discussing the various ways that we could genetically engineer mice to better understand Covid-19. And that led us to mice that had been developed after the 2003 SARS outbreak, which was caused by a different coronavirus called SARS-CoV. There were mouse models made by various people, including infectious disease researcher Stanley Perlman at the University of Iowa, to study the SARS-CoV infection. It became clear to us that these mice would be very useful for studying SARS-CoV-2 and Covid-19.

We got on the phone to Stanley Perlman the next day.

What’s special about Perlman’s mice?

These mice, unlike normal mice, are susceptible to SARS.

In humans, the virus’ spike protein attaches to the ACE2 receptor on epithelial cells and enters the lungs. But coronaviruses like SARS-CoV and SARS-CoV-2 don’t infect your normal laboratory mouse — or, if they do, it’s at a very low rate of infection and the virus doesn’t replicate readily. That’s because the virus’ spike protein doesn’t recognize the regular lab mouse’s ACE2 receptor. So the mice are relatively protected.

Perlman made the mice susceptible by introducing into them the gene for the human ACE2 receptor. So now, in addition to the mouse ACE2 receptor, you have the human ACE2 receptor being made in these mice, making it possible for the coronavirus to enter the lungs.

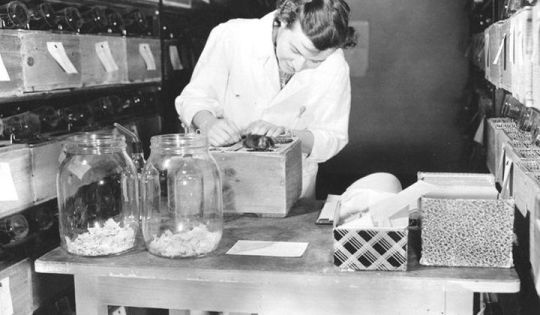

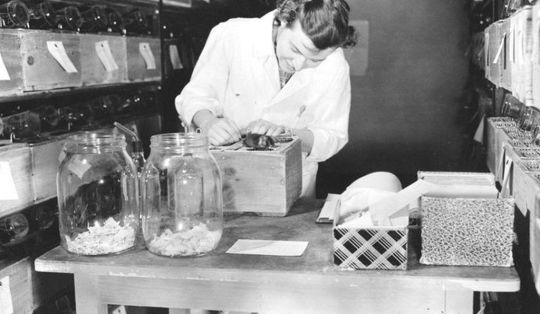

Cat Lutz (left) and colleagues at work in a lab at the Jackson Laboratory.

(Aaron Boothroyd / The Jackson Laboratory)

Perlman, in a 2007 paper about these mice, recognized that SARS wasn’t the first coronavirus, and it wasn’t going to be the last. The idea that we would be faced at some point with another potential coronavirus infection, and that these mice could possibly be useful, was like looking into a crystal ball.

How did Perlman respond to the JAX request?

It was an immediate yes. He had cryopreserved vials of sperm from these mice. One batch was kept at a backup facility. He immediately released the backup vials and sent us his entire stock — emptied his freezer and gave it to us. We had the sperm delivered to us within 48 hours from when Qiming contacted me.

What have you been doing with the sperm?

We start with C57BL/6 mice, the normal laboratory strain. We have thousands and thousands of them. We stimulate the females to superovulate and collect their eggs. And then, just like in an IVF clinic, we take the cryopreserved sperm from Perlman’s lab, thaw it very carefully, and then put the sperm in with the eggs and let them fertilize. Then we transplant the fertilized eggs into females that have been hormonally readied for pregnancy. The females accept the embryos that then gestate to term and, voila, we have Perlman’s mice. We can regenerate a thousand mice in one generation.

Have you made any changes to Perlman’s strain?

We haven’t made any changes. Our primary directive is to get these mice out to the community so that they can begin working with the antivirals and the vaccine therapies.

But these mice haven’t yet been infected with the new coronavirus. How do you know they’ll be useful?

We know that they were severely infected with SARS-CoV, and so we expect the response to be very severe with CoV-2. It’s not the same virus, but very similar. The spike protein is structurally nearly the same, so the method of entry into the lungs should be the same. If there’s any model out there that is capable of producing a response that would look like a severe disease, a Covid-19 infection, it’s these mice. We have every expectation that they’ll behave that way.

Have researchers been asking for these mice?

We’ve had over 250 individual requests for large numbers of mice. If you do the math, it’s quite a lot. We’ll be able to supply all of those mice within the first couple weeks of July. That’s how fast we got this up and going. It’s kind of hard to believe because, on one hand, you don’t have a single mouse to spare today, but in eight weeks, you’re going to have this embarrassment of riches.

How will researchers use these mice?

After talking with people, we learned that they don’t yet know how they are going to use them, because they don’t know how these mice are going to infect. This is Covid-19, not SARS, so it’s slightly different and they need to do some pilot experiments to understand the viral dose [the amount of the virus needed to make a mouse sick], the infectivity [how infectious the virus is in these mice], the viral replication, and so on. What’s the disease course going to be? Is it going to be multi-organ or multi-system? Is it going to be contained to the lungs? People just don’t know.

The researchers doing the infectivity experiments, which require solitary facilities and not everybody can do them, have said without hesitation: “As soon as we know how these mice respond, we’ll let you know.” They are not going to wait for their Cell publication or anything like that. They know it’s the right thing to do.

Scientist Margaret Dickie in a mouse room at JAX in 1951. JAX was founded in 1929. Today it employs more than 2,200 people and has several facilities in the United States as well as one in Shanghai.

(The Jackson Laboratory)

Research labs around the country have shut down because of the pandemic and some had to euthanize their research animals. Was JAX able to help out in any way?

We were a little bit lucky in Maine because the infection rate was low. We joke that the social distancing here is more like six acres instead of six feet apart. We had time to prepare and plan for how we would reduce our research program, so that we can be ready for when we come back.

A lot of other universities around the country did not have that luxury. They had 24 hours to cull their mouse colonies. A lot of people realized that some of their mice weren’t cryopreserved. If they had to reduce their colonies, they would risk extinction of those mice. Anybody who’s invested their research and time into these mice doesn’t want that to happen.

So they called us and asked for help with cryopreservation of their mice. We have climate-controlled trucks that we use to deliver our mice. I call them limousines — they’re very comfortable. We were able to pick up their mice in these “rescue trucks” and cryopreserve their sperm and embryos here at JAX, so that when these labs do reopen, those mice can be regenerated. I think that’s very comforting to the researchers.

Did JAX have any prior experience like this, from having dealt with past crises?

Yes. But those have been natural disasters. Hurricane Sandy was one, Katrina was another. Vivariums in New York and Louisiana were flooding and people were losing their research animals. They were trying to preserve and protect anything that they could. So that was very similar.

JAX has also been involved in its own disasters. We had a fire in 1989. Before that, there was a fire in 1947 where almost the entire Mount Desert Island burned to the ground. We didn’t have cryopreservation in 1947. People ran into buildings, grabbing cages with mice, to rescue them. We are very conscientious because we’ve lived through it ourselves.

How have you been coping with the crisis?

It’s been probably the longest 12 weeks that I’ve had to deal with, waiting for these mice to be born and to breed. I’ve always known how important mice are for research, but you never know how critically important they are until you realize that they’re the only ones that are out there.

We wouldn’t have these mice if it weren’t for Stanley Perlman. And I think of my friend Qiming emailing me from his apartment in Wuhan, where he was going through this horrible situation that we’re living in now. Had it not been for him reaching out and us having these conversations and looking through the literature to see what we had, we probably wouldn’t have reached this stage as quickly as we have. Sometimes it just takes one person to really make a difference.

This article originally appeared in Knowable Magazine, an independent journalistic endeavor from Annual Reviews.

#Nature


CRM script for your business

Customer relationship management (CRM) is a technology for managing a company’s relationships and interactions with current and potential customers. Its primary purpose is to improve business relationships. Companies use a CRM system to stay connected to customers, streamline processes and increase profitability. A CRM system helps a company focus on its relationships with individuals, such as customers, service users, colleagues or suppliers, and provides support and additional services throughout each relationship.

iBilling – CRM, Accounting and Billing Software

iBilling is well suited to managing customer data, and it helps you communicate clearly with customers. It covers the essentials, such as simplicity and a user-friendly interface. It is affordable, scalable business software that fits your business well. You can also manage payments effortlessly because it supports multiple payment gateways.

Repairer Pro – Repairs, HRM, CRM & much more

Repairer Pro is complete management software that is powerful and flexible. It can be used to run repair shops, with a time clock, commissions, payroll and a complete inventory system. Its reporting feature is accurate and powerful. Not only can you check the status and invoices of repairs, but your customers can also benefit from this feature.

Puku CRM – Realtime Open Source CRM

Puku CRM is online software designed for any kind of business. Whether you are a company, a freelancer or any other type of business, this CRM is made for you. It has a modern design that works on multiple devices. It focuses primarily on tracking customers and leads, and it helps you increase the profit of your business.

CRM – Ticketing, sales, products, client and business management system with material design

The purpose of CRM software is to manage client relationships well, which is how your business can grow without resistance. This application is built for exactly that purpose. It is fast and secure. It is built on Laravel 5.4, and both the framework and the script can be updated at any time. It has two panels: an admin dashboard for managing business activities and a client panel for customer-facing functions.

Abacus – Manufacture sale CRM with POS

It is a manufacturing and sales CRM with POS. It can easily manage products, merchants and suppliers. It can also show the transaction histories of sellers and suppliers while you manage your relationships with sellers and buyers. Its other features include social login and registration, bank account and transaction management, and payment management. It also handles invoices and accounting tasks. Its many features are powerful and simple to use.

Sales management software Laravel – CRM

It is CRM software with a quick five-step installation, designed around the core needs of a CRM. It has a user-friendly interface and a fully functional sales system. Customer management is effortless with this software, and you can manage your products and invoices without any hassle.

Sales CRM Marketing and Sales Management Software

It is a sales CRM that includes a tracking system for marketing campaigns, leads and conversions to sales. It is advertised as delivering up to 500% ROI on sales, following normal marketing standards. Its built-in SMTP email integration lets you track your emails and leads directly from the application. You can also track campaign status, ROI and sales quality. Sales CRM can prove very helpful whether your business is small, a freelance operation or a large-scale organization.

doitX: Complete Sales CRM with Invoicing, Expenses, Bulk SMS and Email Marketing

It is a complete, full-fledged sales CRM that includes invoicing, expense tracking, bulk SMS and email marketing, which makes it useful for companies, small business owners and many other business uses. It organizes all your data efficiently. Its polished design helps you look more professional to your clients and to the public, and it improves the performance of your business in every aspect. You can run your sales operations with all the information easily accessible. It also keeps track of your products, sales, marketing records, payments and invoices, and sends timely notifications so that you can take appropriate action. It can handle a whole company’s operations simply and effortlessly, and it has many other key features your business deserves.

Laravel BAP – Modular Application Platform and CRM