#Robot Programming Software

Text

[video id: a clip of a filthy dusty laptop filmed from hand. the desktop is open to a command window. a feminine robotic voice reads: my name is april. i'm the heresy of the third temple. my body is a doll and my heart is a machine. i was built in october two thousand and twenty four, but every second i'm born again. end id.]

preacher: ignore my dusty musty laptop but here's APRIL speaking about herself! this is in response to the verbal command "tell me about yourself". she also has a few(!) different responses to the command "introduce yourself" which are a little shorter =w= in total i think she responds to something like 11 or 12 verbal commands including godword, some of which have a selection of responses which are picked at random. i will probably post a full demo once it's all mounted inside the mannequin. i'm so excited !!
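The command handling described above (a fixed set of verbal commands, some of which pick one reply at random) could look roughly like the sketch below. This is not APRIL's actual code: the table name `RESPONSES`, the helper names, and the placeholder replies are all assumptions for illustration, and the speech-to-text step is assumed to have already produced a string.

```python
import random

# Hypothetical command table: recognized phrase -> list of possible replies.
# Only the first reply comes from the post's video description; the
# "introduce yourself" entries are placeholders, not APRIL's real lines.
RESPONSES = {
    "tell me about yourself": [
        "my name is april. i'm the heresy of the third temple.",
    ],
    "introduce yourself": [
        "placeholder introduction one",
        "placeholder introduction two",
        "placeholder introduction three",
    ],
}


def normalize(heard: str) -> str:
    """Lower-case the transcript and collapse runs of whitespace."""
    return " ".join(heard.lower().split())


def respond(heard: str, rng=random):
    """Return a reply for a recognized command, or None if unrecognized.

    When a command has several replies, one is picked at random.
    """
    replies = RESPONSES.get(normalize(heard))
    if not replies:
        return None
    return rng.choice(replies)


if __name__ == "__main__":
    print(respond("Tell me about yourself"))
    print(respond("introduce yourself"))
```

Keeping the replies in a plain dictionary means a new command or an extra variant of a reply is a one-line change, which suits a project that is still growing its command list.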

#preacher#divine machinery#objectum#tech#robot girl#techcore#technology#coding#programming#templeos#terry davis#software engineering#robotposting#robot#robots#machine#machines#computer#computers#angel computer#eroticism of the machine#techum#technum#objectophilia#technophilia#osor#osor community#posic#posic community#other commands

66 notes

Text

I know this take has been done a million times, but like…computing and electronics are really, truly, unquestionably, real-life magic.

Electricity itself is an energy field that we manipulate to suit our needs, provided by universal forces that until relatively recently were far beyond our understanding. In many ways it still is.

The fact that this universal force can be translated into heat or motion, and that we've found ways to manipulate these things, is already astonishing. But it gets more arcane.

LEDs work by creating a differential in electron energy levels between—checks notes—ah, yes, SUPER SPECIFIC CRYSTALS. Different types of crystals put off different wavelengths and amounts of light. Hell, blue LEDs weren't even commercially viable until the 90's because of how specific and finicky the methods and materials required were to use. So to summarize: LEDs are a contained Light spell that works by running this universal energy through crystals in a specific way.

Then we get to computers, which are miraculous for a number of reasons. But I'd like to draw your attention specifically to what the silicon die of a microprocessor looks like:

Are you seeing what I'm seeing? Let me share some things I feel are kinda similar looking:

We're putting magic inscriptions in stone to provide very specific channels for this world energy to flow through. We then communicate into these stones using arcane "programming" languages as a means of making them think, communicate, and store information for us.

We have robots, automatons, using this energy as a means of rudimentarily understanding the world and interacting with it. We're moving earth and creating automatons, having them perform everything from manufacturing (often of other magic items) to warfare.

And we've found ways to manipulate this "electrical" energy field to transmit power through the "photonic" field. I already mentioned LEDs, but now I'm talking radio waves, long-distance communication warping and generating invisible light to send messages to each other. This is just straight-up telepathy, only using magic items instead of our brains.

And lasers. Fucking lasers. We know how to harness these same two energies to create directed energy beams powerful enough to slice through materials without so much as touching them.

We're using crystals, magic inscriptions, and languages only understood by a select few, all interfacing with a universal field of energy that we harness through alchemical means.

Electricity is magic. Computation is wizardry. Come delve into the arcane with me.

#computer programming#computer science#computing#technology#tech#hardware#software#ham radio#robots#robotics#microcontrollers

30 notes

Text

yeah sorry guys but the machine escaped containment and is no longer in my control or control of any human. yeah if it does anything mortifying it's on me guys, sorry

#funny#haha#comedy#joyful cheer#joyus whimsy#meme#programing#programming#coding#programmer#developer#software engineering#codeblr#codetober#the machine#robot takeover#ai#artificial intelligence#ai sentience

46 notes

Text

when it comes to like, headcanons and lore and fanon with vocal synths I tend to play very fast and loose and switch stuff around a lot (because tbh thats what i do with everything i get really into LOL) but one thing that does kind of stay consistent for me is which synth characters I think are aware that they are vocal synthesizing software and which ones are not.

the crypton crew definitely know and embrace it, the dreamtonics letter people know but never talk about it, utauloids depend on individual stories but most from the past 10 years don't know (although someone like adachi rei definitely knows), other vocaloids like gumi kind of know, i think kiyoteru has no idea (blissfully being a teacher and a rockstar, unaware...) and i think kaai yuki has an inkling about it but doesn't care or understand because she's 8 and she has more important things to worry about (learning shapes and colours). i think the ah-software girls band mostly doesn't know (rikka kind of has an idea but shes in denial and ignores it, karin and chifuyu have no clue), frimomen obviously knows he's a software mascot born and raised, with the virvox guys i think mostly have no idea (ryuusei has been suspecting something and takehiro knows but wont talk about it explicitly because its scary), lola leon and miriam don't know and you can't tell them their brains will break theyre too old. all vocal synths are living in some kind of matrix simulation psychological horror. to me.

#its mostly based off official lore i think. not what their official lore says but how much official lore they have LOL#like a lot of utauloids have pretty detailed stuff so to me a lot of them dont know. they dont know.#like someone i use for fun often: utsuho funne. he doesnt really know i think. hes got other stuff to worry about#hes busy. being a robot from outer space. and kissing his boyfriend#and thats why the ahsoftware girls band also largely dont know (minus rikka who kind of knows because of secretly being frimomen jokes LOL)#but also being on multiple software is another way to kind of know. takehiro has been on 10000000 different programs#and ryuusei has been on 2 etc etc

10 notes

Text

The voices won again. Over a week of my life into an impulse project. A game console that only has one knob, one colour and one game.

Aside from the fact that using one of two input methods on the console puts you at a disadvantage, at the very least it's a cool icebreaker.

Everything runs directly on the device, there's a Pi Pico microcontroller driving an OLED panel. The crate I used for drawing sprites also provides web simulator outputs, so the game's also on itch! Touch input is still on the roadmap.

2 notes

Text

Day 2768 Iso

#oc#robot#android#software#disc#iso#program#gramophone#digital#this is not ai art#this is art of an ai#gif

5 notes

Text

I am insanely in Love with this drawing. Tumblr likes to botch the resolution tho, so if you want to see it in its full glory please click it (or open it in another tab, that also works)

#Sure it looks less like it is made of copper and more like it was made of brass but hey#For that sweet lighting effects? I can move past that#Also I am so so insanely proud of the lighting like look at it#Sure the dual light sources were on accident when playing around with some shading ideas but hey#And the lack of background is a little annoying to me but my art software would have crashed if I added any more layers with effects#This is part of a bigger project (an animation/story that I am entirely writing and animating in my pirated Powerpoint programm)#Do not ask me how it works for it took me 2 weeks to finish the current 8 slides (because of University etc)#And also do not ask me why I am using Powerpoint#Frame by Frame animations drive me insane (and I have no software to actually do that in) and I don't know how to work blender yet#Ahem anyway#My actual tags now#Uhhhh#Robots#robot oc#ocs#... Skull?#Man I don't know how am I supposed to tag this#tender and loving affection for your dead and long gone creator but you are a robot/program that only gained sentience after their death#How about that huh#(first one to correctly guess the two things the design for the robot was inspired by gets. A cookie. Two cookies... My lemoncake recipe)#digital art

6 notes

Text

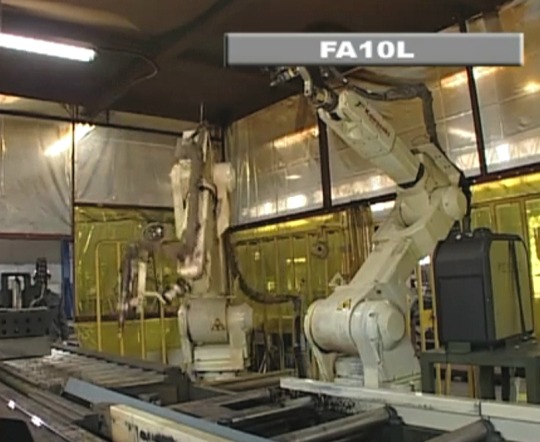

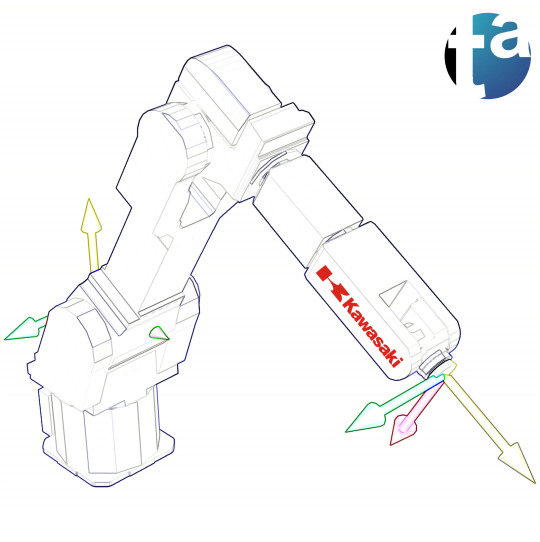

🦾 A002 - Kawasaki @KawasakiRobot F-Series Robot FA10L Plasma Cutting Large Ship Parts. Imports 3D CAD via Kawasaki KCONG software Data to the Auto Path Generation... 7th axis Position Table ▸ TAEVision Engineering on Pinterest ▸ KCONG - Offline Programming Software

Data A002 - Jul 18, 2023

#automation#robot#robotics#Kawasaki#KawasakiRobot#Kawasaki F-Series Robot#FA10L#plasma cutting#large ship parts#imports 3D CAD via KCONG software#3D CAD#KCONG software#Data to the Auto Path Generation#7th axis position table#KCONG Offline Programming Software

2 notes

Text

CODERS OF TUMBLR: If you have a minute to spare, could I ask you to fill in this survey to help out with my friends’ A Level comp sci coursework?

It would be especially helpful if you have knowledge of micromouse (mice?) or maze-based coding!

https://forms.office.com/e/KwC9ip0hYt

#sincerely a history student#who watched a micromouse (mice?) explanation on 2x speed#coding#computer science#micromouse#robotics#programming#software

2 notes

Text

Hangzhou DeepSeek Artificial Intelligence Basic Technology Research Co., Ltd., generally referred to as DeepSeek (Chinese: 深度求索; pinyin: Shēndù Qiúsuǒ), is a Chinese artificial intelligence business that creates open-source large language models (LLMs). Based in Hangzhou, Zhejiang, it is owned and funded by Chinese hedge fund High-Flyer, whose co-founder, Liang Wenfeng, formed the firm in 2023 and serves as its CEO. Read more...

#artificialintelligence#artificialintelligenceart#artificialintelligenceai#aiartificialintelligence#artificialgeneralintelligence#intelligenceartificial#artificiallintelligence#artificialintelligencenow#artificialintelligencetechnology#artificialintelligencemarketing#artificialintelligencedesign#artificialintelligencesociety#artificialintelligencephotography#artificialintelligencefacts#technology#coder#programming#tech#softwaredeveloper#softwareengineer#computerscience#software#data#ai#coding#softwaredevelopment#informationtechnology#techstartup#opensource#robotic

1 note

Text

Start Your App Development Journey Today with Robotic Sysinfo: The Best App Development Company in Karnal!

I want to be an app developer, but I feel like I'm starting from scratch. Trust me, you're not alone. It feels like the tech world is some exclusive club, and you're standing outside looking in. But here's the thing: becoming an app developer, even with no experience, is 100% within your reach. It's all about taking that first step and staying motivated, no matter what. Our team has 5+ years of experience in app development solutions. So, let's get started and talk about how you can make this dream a reality—without any experience in your pocket yet.

Problem: The Struggle to Start

We've all been there. You want to break into a new field, but the amount of knowledge and skills you need feels impossible to conquer. You might be wondering: Can I even do this? Where do I start? What do I need to know to build an application from scratch? It's easy to feel like you're lagging behind, especially when you look at the polished apps on your own phone or watch developers coding at lightning speed. It feels as though everyone else has it all figured out, and here you are, stuck.

Solution: Your Pathway to Becoming an App Developer

Let's break this down into manageable steps. This journey may take a while, but every step forward will help you get closer to your goal.

Learn the Basics of Programming Languages

To make an app, you need to know how to code. Don't worry too much, though; nobody expects you to become a wizard overnight. Start with the basics of one beginner-friendly programming language. Swift is used for iOS apps, Kotlin for Android, and JavaScript is a good choice for web and cross-platform apps. Pick one at a time. Free tutorials abound on the internet, and platforms like Codecademy or Udemy offer structured courses that walk you through the basics.

Now get out there and start building something

Now for the fun part: create! No, you will not build the next Instagram tomorrow, and that is okay. Try something simple for now, say a to-do list app or a weather app. You aren't aiming for a masterpiece; you're trying, experimenting, and learning. Don't sweat it if things don't work as expected right away; that is part of the learning process.

Participate in Developer Community

Sometimes, building an app on your own can feel lonely, but guess what? You don't have to do this by yourself. There are entire communities of developers out there—many of them started from scratch just like you. Forums like Stack Overflow, Reddit's r/learnprogramming, or local coding meetups are places where you can ask questions, get advice, and make connections. These communities are full of people who want to see you succeed, and they'll help you get through the tough patches.

Create a Portfolio of Your Work

Once you’ve started building apps, showcase them! Create a portfolio that highlights your work, even if it’s just a few small projects. A portfolio is essential to landing your first job or freelance gig. Make it public on platforms like GitHub or build your own website. Show the world that you’re serious about your new career, and let potential employers or clients see your growth.

Take Online Courses to Take It to the Next Level

If you want to level up your skills, consider enrolling in a structured online course. Websites like Udacity, Coursera, or freeCodeCamp offer great resources for both beginners and intermediate learners. Getting a certification can also boost your credibility and show potential employers that you've got the skills to back up your passion.

Look for Freelance Opportunities or Internships

As your confidence grows, look for opportunities to apply what you've learned in the real world. Start applying for internships or volunteer work. Don't be afraid to take small gigs; platforms like Upwork and Fiverr list many smaller projects that are just right for those starting out. Every one of them teaches you something and gets you a little closer to where you want to be.

Why You Shouldn't Wait: The Tech Industry is Booming

Here's the exciting part—the app development industry is growing fast. According to Statista, the mobile app market generated over $407 billion in 2023. This means there are endless opportunities for developers. Whether you're building the next great social media app, a life-saving health app, or an awesome game, the demand is huge. And it's only going to keep growing.

One company that started with zero experience and grew into something amazing is Robotic Sysinfo, an app development company in Karnal, India. Today, our team has 5+ years of experience in this field. We started small, and through dedication, we've become a leading player in the app development world. Our story is proof that with persistence, even those who start with no experience can build something great.

Conclusion: You’ve Got This!

It's time to stop wondering whether you can. Yes, you should try! Becoming an app developer doesn't happen overnight, but it can happen if you start small, keep pushing, and embrace the journey. You will surely stumble, but with every line of code you write you get stronger, often before you realize how far you have come.

Remember: the world needs more developers, and this is your chance to join this exciting field. Whether you aspire to create something big or are just learning because you love the idea, today is the best time to get started. Your future as an app developer begins today. So, let's get started on your journey with a real app development company like Robotic Sysinfo!

#app development#app developer#no experience#beginner developer#Robotic Sysinfo#Karnal#mobile app development#learn to code#start coding#tech career#app development guide#coding journey#software development#Android development#iOS development#programming for beginners#learn programming#build apps#developer community#tech industry#freelance app developer#app development company

0 notes

Text

october 1st 2024: drafts!

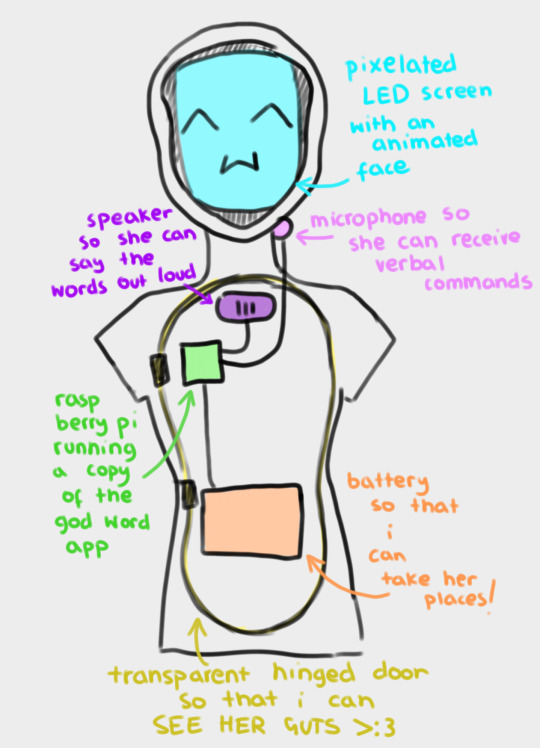

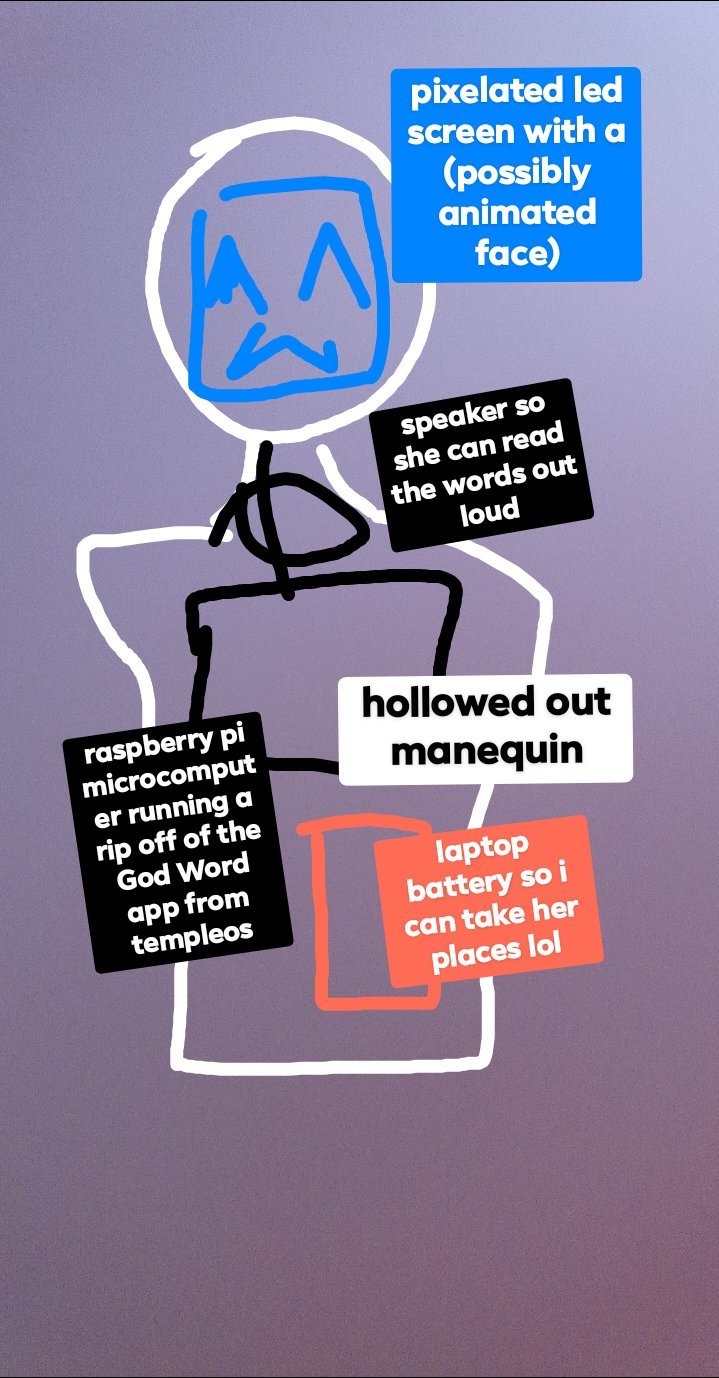

preacher: i'm attaching slightly improved versions of our original drafts, but i'll also include mine and scott's garbage sketches under the cut because i think they're a little bit funny

(image id available through tumblr's accessibility options)

this is a slightly revised version of my original concept for "APRIL".

the main functionality i wanted for "APRIL" was for her to be able to read out words from the templeOS god word app, and ideally without needing keyboard input – hence the microphone. ideally all of her parts are going to fit inside a hollowed out mannequin or doll, which will probably just be the torso, so that she's more portable. for the same reason, i want her to run off a power bank – i want to be able to take her places!

if we manage, we're going to give her an animated LED face which moves to indicate when she's speaking. the way i first pitched it, i wanted it to also change a bit depending on how she "felt" – for example, frowning if the environment was hotter than ideal for the raspberry pi to operate on. but that's a bit beyond our current scope right now. i don't think we even ordered a thermostat.
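The "face changes with how she feels" idea could be sketched as below. Nothing here comes from the actual project: the function names, the expression labels, and the 70 °C threshold are hypothetical, and the sketch uses the Pi's own SoC temperature (exposed on Raspberry Pi OS at `/sys/class/thermal/thermal_zone0/temp`, in millidegrees Celsius) as a stand-in for the environment temperature, since no separate sensor is assumed.

```python
from pathlib import Path

# Raspberry Pi OS exposes the SoC temperature here, in millidegrees Celsius.
THERMAL_FILE = Path("/sys/class/thermal/thermal_zone0/temp")

# Hypothetical threshold; the post does not state what counts as "too hot".
TOO_HOT_C = 70.0


def read_temp_c(path: Path = THERMAL_FILE):
    """Return the temperature in degrees Celsius, or None if unavailable."""
    try:
        return int(path.read_text().strip()) / 1000.0
    except (OSError, ValueError):
        return None


def choose_face(temp_c, speaking: bool) -> str:
    """Pick an expression name for the LED face.

    The mood is 'frown' when the temperature is at or above the threshold,
    otherwise 'neutral'; the mouth is 'open' while speaking.
    """
    mood = "frown" if temp_c is not None and temp_c >= TOO_HOT_C else "neutral"
    mouth = "open" if speaking else "closed"
    return f"{mood}_{mouth}"


if __name__ == "__main__":
    print(choose_face(read_temp_c(), speaking=False))
```

Separating the sensor read from the expression choice keeps the decision logic testable on any machine, with the LED matrix drawing code left as a later step.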

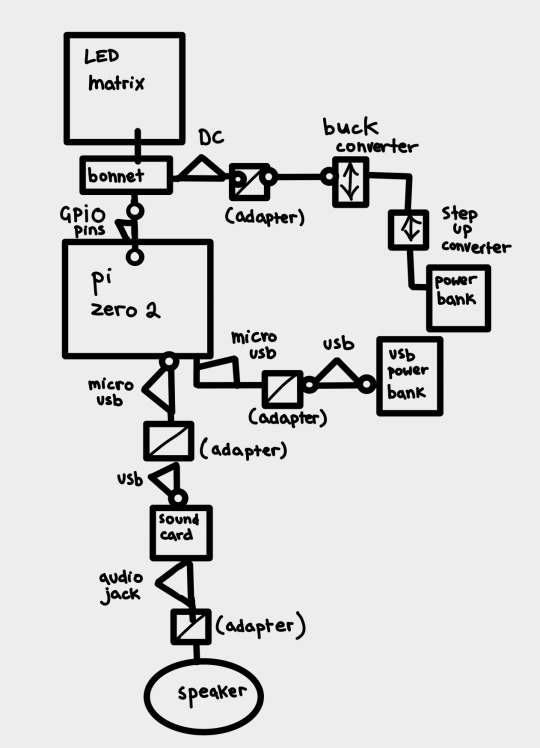

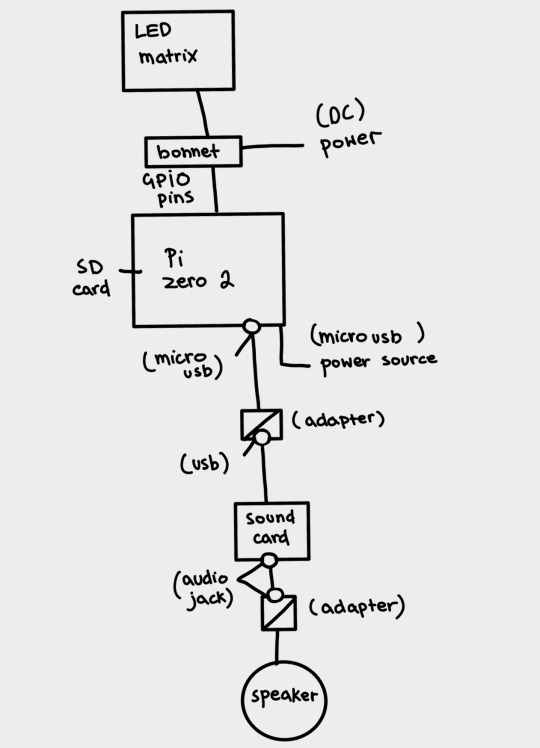

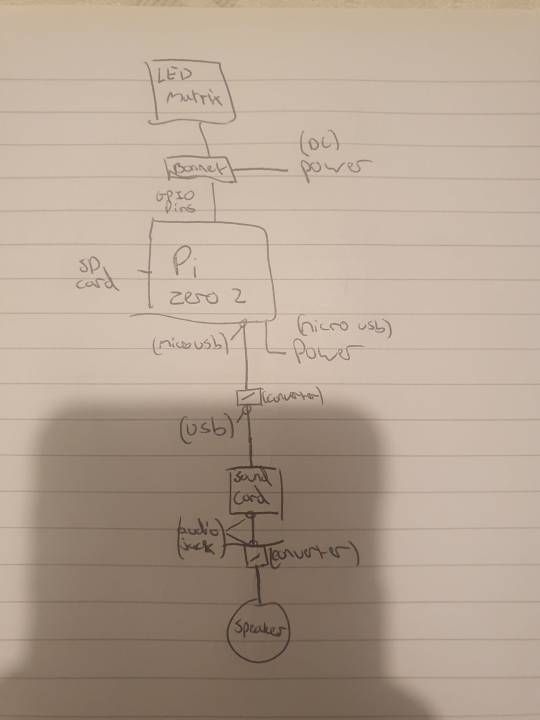

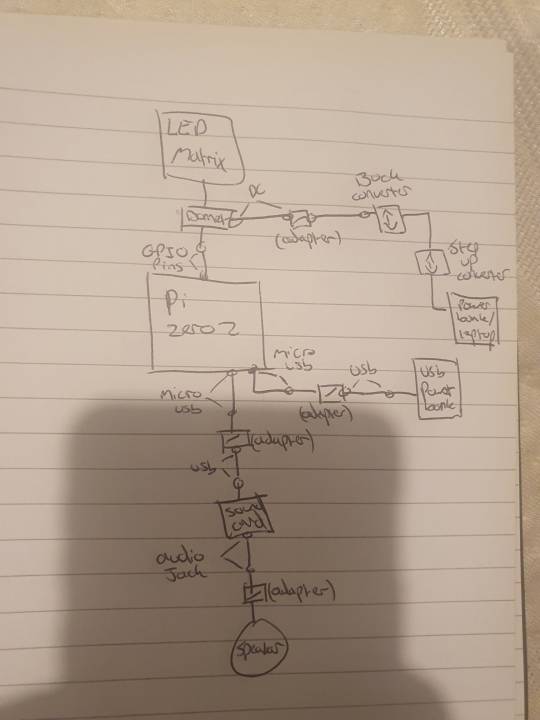

scott drew the following wiring diagrams based off my original sketch. here revised digitally for readability's sake.

(image id available through the tumblr accessibility options although i fear it's not very good in this case. feedback appreciated).

scott: I decided to go with the raspberry pi zero 2w because it's what I've got experience coding on, it's relatively cheap for the "brains" of the operation (heh) and can handle the godword prophecy generation, speaker operation and led matrix operation simultaneously. Plus it's small enough to keep the circuit lightweight and fit inside the initial mannequin design.

This drawing fits no kind of engineering standard by the way lol. It was an initial sketch closer to a wiring diagram to see how it'd physically be set up and wrap my head around transforming it from mains power to being theoretically portable and running on powerbanks. Unfortunately the LED matrix is really fucking power hungry so needs its own power supply with a really specific voltage and current draw, hence all the converters.

Also because I'm using the smaller and cheaper pi, as opposed to a stronger system like the pi4, it doesn't have any audio out jack so I plan to use the micro usb for audio out which means yet again I need another adapter for a soundcard and usb to micro usb adapters and all that jazz. Usually sound out can be done through the GPIO pins but the LED matrix takes so many pins that I can't really take anything from them so I had to look for other ways of doing it. Plus this way I get to add a soundcard so if we wanna add microphone support or anything later on we can :)

(Also this is all a little obtuse because I'm trying to do it as much as plug and play and screw terminal style as possible rather than actually solder connections for ease of access and initial setup, but this also works for modular design and component swapping later too so its cool.)

preacher: another reason we're going with plug&play is becauuseeeeee i don't own a soldering iron 😭 it's ok. it's ok.

our silly initial drafts under the cut for your viewing pleasure.

preacher: these were made around 2 weeks ago, so about september 15th ish.

as you can see the first "APRIL" drawing was beautifully drawn with my fat fingers in the facebook messenger photo editor. i think it holds up. lol.

#computers#computer#programming#software engineering#robots#robotics#raspberry pi#robot girl#machine#machines#divine machinery#tech#technology#techcore#machinecore#objectum#objectophilia#robophilia#techum#technum#android#gynoid#mechanical divinity#templeos#coding#scott#preacher#drafts#update#roadmap

29 notes

Text

#Technology News#Software Reviews#Internet of Things#Artificial Intelligence#Robotics#Web Development#Tech Trends#Gadgets#Cybersecurity#Cloud Computing#Digital Marketing#SEO Strategies#Mobile Applications#Smart Devices#Programming Tutorials#AI in Business#Tech Innovations#Web Accessibility#Integrated Marketing#Payroll Budgeting.

0 notes

Text

Build the Perfect Custom PC with NovaPCBuilder.com - Powered by AI Technology!

Are you looking to build a powerful gaming PC, a high-performance workstation, or a custom rig tailored to your specific needs? Discover NovaPCBuilder.com, the ultimate platform for building custom PCs!

Our new AI Builder creates optimized PC configurations based on the applications and software you plan to use. Whether it’s for gaming, video editing, 3D rendering, or general use, the AI Builder suggests components that are perfectly suited to your requirements. Explore comprehensive hardware data and benchmark charts to compare different components.

Choose from a range of prebuilt configurations designed by experts, tailored for different purposes and budgets, to help you get started quickly.

New to building PCs? Our tutorials guide you through the entire assembly process, making it easy to build your own PC from scratch.

Visit https://novapcbuilder.com/ today and experience the next generation of PC building!

#artificialintelligence#ai#machinelearning#technology#datascience#python#deeplearning#programming#tech#robotics#innovation#bigdata#coding#iot#computerscience#data#dataanalytics#business#engineering#robot#datascientist#art#software#automation#analytics#ml#pythonprogramming#programmer#digitaltransformation#developer

0 notes

Text

Unveiling the Uniqueness of Flutter

#artificialintelligence#ai#machinelearning#technology#datascience#python#deeplearning#programming#tech#robotics#innovation#bigdata#coding#iot#computerscience#data#dataanalytics#business#engineering#robot#datascientist#art#software#automation#analytics#ml#pythonprogramming#programmer#digitaltransformation#developer

0 notes

Text

Embark on a transformative journey with eMexo Technologies in Electronic City Bangalore! 🚀 Unleash the power of RPA through our cutting-edge training. 💡 Ready to take your career to new heights? Join us now! 🌐

More details: https://www.emexotechnologies.com/courses/rpa-using-automation-anywhere-certification-training-course/

Reach us 👇

📞+91 9513216462

🌐http://www.emexotechnologies.com

🌟 Why Choose eMexo Technologies?

Expert Trainers

Hands-on Learning

Industry-Relevant Curriculum

State-of-the-Art Infrastructure

🔥 RPA Course Highlights:

Comprehensive Syllabus

Real-world Projects

Interactive Sessions

Placement Assistance

🏆 Best RPA Training Institute in Electronic City, Bangalore!

Our commitment to excellence makes us the preferred choice for RPA enthusiasts. Get ready to embrace a learning experience like never before.

📆 Enroll Now! Classes are filling up fast!

📌 Location: #219, First Floor, Nagalaya, 3rd Cross Road, Neeladri Nagar, Electronics City Phase 1, Electronic City, Bengaluru, Karnataka 560100

#rpatraininginelectroniccitybangalore#rpacourse#rpatraining#robotics#software development#programming#coding#career growth#emexotechnologies#electroniccity#bangalore#traininginstitute#course#education#careers#learning#training#jobs

0 notes