#Pixvana

Apple’s Mysterious Fisheye Projection

If you’ve read my first post about Spatial Video, the second about Encoding Spatial Video, or if you’ve used my command-line tool, you may recall a mention of Apple’s mysterious “fisheye” projection format. Mysterious because they’ve documented a CMProjectionType.fisheye enumeration with no elaboration, they stream their immersive Apple TV+ videos in this format, yet they’ve provided no method to produce or play back third-party content using this projection type.

Additionally, the format is undocumented, they haven’t responded to an open question on the Apple Discussion Forums asking for more detail, and they didn’t cover it in their WWDC23 sessions. As someone who has experience in this area – and a relentless curiosity – I’ve spent time digging into Apple’s fisheye projection format, and this post shares what I’ve learned.

As stated in my prior post, I am not an Apple employee, and everything I’ve written here is based on my own history, experience (specifically my time at immersive video startup, Pixvana, from 2016-2020), research, and experimentation. I’m sure that some of this is incorrect, and I hope we’ll all learn more at WWDC24.

Spherical Content

Imagine sitting in a swivel chair and looking straight ahead. If you tilt your head to look straight up (at the zenith), that’s 90 degrees. Likewise, if you were looking straight ahead and tilted your head all the way down (at the nadir), that’s also 90 degrees. So, your reality has a total vertical field-of-view of 90 + 90 = 180 degrees.

Sitting in that same chair, if you swivel 90 degrees to the left or 90 degrees to the right, you’re able to view a full 90 + 90 = 180 degrees of horizontal content (your horizontal field-of-view). If you spun your chair all the way around to look at the “back half” of your environment, you would spin past a full 360 degrees of content.

When we talk about immersive video, it’s common to only refer to the horizontal field-of-view (like 180 or 360) with the assumption that the vertical field-of-view is always 180. Of course, this doesn’t have to be true, because we can capture whatever we’d like, edit whatever we’d like, and play back whatever we’d like.

But when someone says something like VR180, they really mean immersive video that has a 180-degree horizontal field-of-view and a 180-degree vertical field-of-view. Similarly, 360 video is 360-degrees horizontally by 180-degrees vertically.

Projections

When immersive video is played back in a device like the Apple Vision Pro, the Meta Quest, or others, the content is displayed as if a viewer’s eyes are at the center of a sphere watching video that is displayed on its inner surface. For 180-degree content, this is a hemisphere. For 360-degree content, this is a full sphere. But it can really be anything in between; at Pixvana, we sometimes referred to this as any-degree video.

It's here where we run into a small problem. How do we encode this immersive, spherical content? All the common video codecs (H.264, VP9, HEVC, MV-HEVC, AVC1, etc.) are designed to encode and decode data to and from a rectangular frame. So how do you take something like a spherical image of the Earth (i.e. a globe) and store it in a rectangular shape? That sounds like a map to me. And indeed, that transformation is referred to as a map projection.

Equirectangular

While there are many different projection types that each have useful properties in specific situations, spherical video and images most commonly use an equirectangular projection. This is a very simple transformation to perform (it looks more complicated than it is). Each x location on a rectangular image represents a longitude value on a sphere, and each y location represents a latitude. That’s it. Because of these relationships, this kind of projection can also be called a lat/long.
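As a minimal sketch of that x-to-longitude, y-to-latitude relationship, the Python below maps a pixel to degrees and then to a view direction. The function names, the pixel-center convention, and the axis convention (+z straight ahead, +x right, +y up) are assumptions made for illustration; they are not taken from any particular tool.

```python
import math

def equirect_pixel_to_lonlat(x, y, width, height, h_fov_deg=360.0, v_fov_deg=180.0):
    """Map a pixel (sampled at its center) to (longitude, latitude) in degrees.

    Longitude runs from -h_fov/2 at the left edge to +h_fov/2 at the right edge.
    Latitude runs from +v_fov/2 at the top edge to -v_fov/2 at the bottom edge.
    """
    u = (x + 0.5) / width    # 0..1 across the frame
    v = (y + 0.5) / height   # 0..1 down the frame
    lon = (u - 0.5) * h_fov_deg
    lat = (0.5 - v) * v_fov_deg
    return lon, lat

def lonlat_to_direction(lon_deg, lat_deg):
    """Convert longitude/latitude to a unit view vector (assumed axes: +z ahead, +x right, +y up)."""
    lon = math.radians(lon_deg)
    lat = math.radians(lat_deg)
    return (
        math.cos(lat) * math.sin(lon),
        math.sin(lat),
        math.cos(lat) * math.cos(lon),
    )
```

Passing `h_fov_deg=180.0` with a square frame gives the half-equirect case; the same linear relationship applies, only the longitude range changes.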

Imagine “peeling” thin one-degree-tall strips from a globe, starting at the equator. We start there because it’s the longest strip. To transform it to a rectangular shape, start by pasting that strip horizontally across the middle of a sheet of paper (in landscape orientation). Then, continue peeling and pasting up or down in one-degree increments. Be sure to stretch each strip to be as long as the first, meaning that the very short strips at the north and south poles are stretched a lot. Don’t break them! When you’re done, you’ll have a 360-degree equirectangular projection that looks like this.

If you did this exact same thing with half of the globe, you’d end up with a 180-degree equirectangular projection, sometimes called a half-equirect. Performed digitally, it’s common to allocate the same number of pixels to each degree of image data. So, for a full 360-degree by 180-degree equirect, the rectangular video frame would have an aspect ratio of 2:1 (the horizontal dimension is twice the vertical dimension). For 180-degree by 180-degree video, it’d be 1:1 (a square). Like many things, these aren’t hard and fast rules, and for technical reasons, sometimes frames are stretched horizontally or vertically to fit within the capabilities of an encoder or playback device.

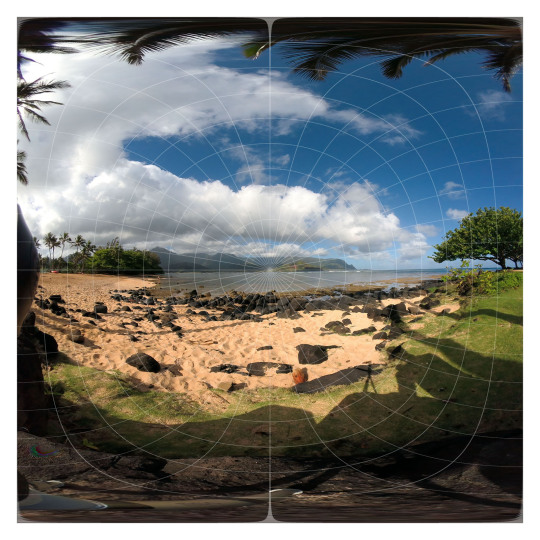

This is a 180-degree half equirectangular image overlaid with a grid to illustrate its distortions. It was created from the standard fisheye image further below. Watch an animated version of this transformation.

What we’ve described so far is equivalent to monoscopic (2D) video. For stereoscopic (3D) video, we need to pack two of these images into each frame…one for each eye. This is usually accomplished by arranging two images in a side-by-side or over/under layout. For full 360-degree stereoscopic video in an over/under layout, this makes the final video frame 1:1 (because we now have 360 degrees of image data in both dimensions). As described in my prior post on Encoding Spatial Video, though, Apple has chosen to encode stereo video using MV-HEVC, so each eye’s projection is stored in its own dedicated video layer, meaning that the reported video dimensions match that of a single eye.

Standard Fisheye

Most immersive video cameras feature one or more fisheye lenses. For 180-degree stereo (the short way of saying stereoscopic) video, this is almost always two lenses in a side-by-side configuration, separated by ~63-65mm, very much like human eyes (as on some 180 cameras).

The raw frames that are captured by these cameras are recorded as fisheye images where each circular image area represents ~180 degrees (or more) of visual content. In most workflows, these raw fisheye images are transformed into an equirectangular or half-equirectangular projection for final delivery and playback.
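The fisheye-to-equirectangular step can be sketched as a lookup: for each longitude/latitude in the output, find where to sample the fisheye image. The sketch below assumes an ideal equidistant fisheye (distance from the image center proportional to the angle off the optical axis). That lens model is an assumption; real lenses need calibration, and the function names and coordinate conventions here are illustrative only.

```python
import math

def direction_to_fisheye_uv(dx, dy, dz, fov_deg=180.0):
    """Project a unit view vector onto an ideal equidistant fisheye image.

    Returns (u, v) in [-1, 1], where (0, 0) is the image center, radius 1 is the
    edge of the image circle, and +v is up. Returns None outside the field of view.
    """
    theta = math.acos(max(-1.0, min(1.0, dz)))   # angle from the optical axis (+z)
    r = theta / math.radians(fov_deg / 2.0)      # equidistant model: r grows linearly with theta
    if r > 1.0 + 1e-9:
        return None
    phi = math.atan2(dy, dx)                     # direction around the optical axis
    return r * math.cos(phi), r * math.sin(phi)

def half_equirect_to_fisheye_uv(lon_deg, lat_deg, fov_deg=180.0):
    """For an output longitude/latitude, find the fisheye coordinate to sample."""
    lon = math.radians(lon_deg)
    lat = math.radians(lat_deg)
    dx = math.cos(lat) * math.sin(lon)
    dy = math.sin(lat)
    dz = math.cos(lat) * math.cos(lon)
    return direction_to_fisheye_uv(dx, dy, dz, fov_deg)
```

A full converter would loop over every output pixel, call a function like this, and interpolate the source image at the returned coordinate.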

This is a 180 degree standard fisheye image overlaid with a grid. This image is the source of the other images in this post.

Apple’s Fisheye

This brings us to the topic of this post. As I stated in the introduction, Apple has encoded the raw frames of their immersive videos in a “fisheye” projection format. I know this, because I’ve monitored the network traffic to my Apple Vision Pro, and I’ve seen the HLS streaming manifests that describe each of the network streams. This is how I originally discovered and reported that these streams – in their highest quality representations – are ~50Mbps, HDR10, 4320x4320 per eye, at 90fps.
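To put those stream numbers in perspective, here is a rough back-of-the-envelope calculation of the average bits available per pixel. It assumes the ~50Mbps figure covers both eyes and treats the bitrate as exactly 50,000,000 bits per second; both are simplifying assumptions rather than anything stated in the manifests.

```python
# Figures quoted above: 4320x4320 per eye, 90fps, ~50Mbps.
width = 4320
height = 4320
eyes = 2                    # assumption: the bitrate covers both eye layers
fps = 90
bitrate_bps = 50_000_000    # assumption: "~50Mbps" taken as exactly 50,000,000 bits/s

pixels_per_second = width * height * eyes * fps
bits_per_pixel = bitrate_bps / pixels_per_second

print(f"{pixels_per_second:,} pixels per second")
print(f"{bits_per_pixel:.4f} bits per pixel on average")
```

That works out to roughly 3.36 billion pixels per second and about 0.015 bits per pixel, which gives a sense of how hard the encoder has to work.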

While I can see the streaming manifests, I am unable to view the raw video frames, because all the immersive videos are protected by DRM. This makes perfect sense, and while I’m a curious engineer who would love to see a raw fisheye frame, I am unwilling to go any further. So, in an earlier post, I asked anyone who knew more about the fisheye projection type to contact me directly. Otherwise, I figured I’d just have to wait for WWDC24.

Lo and behold, not a week or two after my post, an acquaintance introduced me to Andrew Chang who said that he had also monitored his network traffic and noticed that the Apple TV+ intro clip (an immersive version of this) is streamed in-the-clear. And indeed, it is encoded in the same fisheye projection. Bingo! Thank you, Andrew!

Now, I can finally see a raw fisheye video frame. Unfortunately, the frame is mostly black and featureless, including only an Apple TV+ logo and some God rays. Not a lot to go on. Still, having a lot of experience with both practical and experimental projection types, I figured I’d see what I could figure out. And before you ask, no, I’m not including the actual logo, raw frame, or video in this post, because it’s not mine to distribute.

Immediately, just based on logo distortions, it’s clear that Apple’s fisheye projection format isn’t the same as a standard fisheye recording. This isn’t too surprising, given that it makes little sense to encode only a circular region in the center of a square frame and leave the remainder black; you typically want to use all the pixels in the frame to send as much data as possible (like the equirectangular format described earlier).

Additionally, instead of seeing the logo horizontally aligned, it’s rotated 45 degrees clockwise, aligning it with the diagonal that runs from the upper-left to the lower-right of the frame. This makes sense, because the diagonal is the longest dimension of the frame, and as a result, it can store more horizontal (post-rotation) pixels than if the frame wasn’t rotated at all.

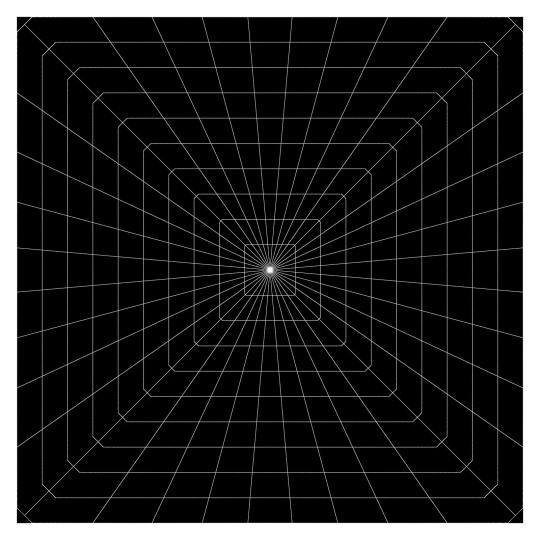

This is the same standard fisheye image from above transformed into a format that seems very similar to Apple’s fisheye format. Watch an animated version of this transformation.

Likewise, the diagonal from the lower-left to the upper-right represents the vertical dimension of playback (again, post-rotation) providing a similar increase in available pixels. This means that – during rotated playback – the now-diagonal directions should contain the least amount of image data. Correctly-tuned, this likely isn’t visible, but it’s interesting to note.
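The size of that diagonal advantage is simple geometry. As a small sketch (the function name is hypothetical, and "length in pixel units" is only a proxy for how much image detail can actually be stored along that direction):

```python
import math

def diagonal_length(side_px):
    """Return the diagonal length of a square frame, in pixel units, and its ratio to the side."""
    diagonal = side_px * math.sqrt(2.0)
    return diagonal, diagonal / side_px

diagonal, gain = diagonal_length(4320)
print(f"diagonal is about {diagonal:.0f} pixel units, {gain:.3f}x the side")
```

For a 4320-pixel square frame, the diagonal is about 6109 pixel units long, roughly 41% longer than a horizontal row.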

More Pixels

You might be asking, where do these “extra” pixels come from? I mean, if we start with a traditional raw circular fisheye image captured from a camera and just stretch it out to cover a square frame, what have we gained? Those are great questions that have many possible answers.

This is why I liken video processing to turning knobs in a 747 cockpit: if you turn one of those knobs, you more-than-likely need to change something else to balance it out. Which leads to turning more knobs, and so on. Video processing is frequently an optimization problem just like this. Some initial thoughts:

It could be that the source video is captured at a higher resolution, and when transforming the video to a lower resolution, the “extra” image data is preserved by taking advantage of the square frame.

Perhaps the camera optically transforms the circular fisheye image (using physical lenses) to fill more of the rectangular sensor during capture. This means that we have additional image data to start and storing it in this expanded fisheye format allows us to preserve more of it.

Similarly, if we record the image using more than two lenses, there may be more data to preserve during the transformation. For what it’s worth, it appears that Apple captures their immersive videos with a two-lens pair, and you can see them hiding in the speaker cabinets in the Alicia Keys video.

There are many other factors beyond the scope of this post that can influence the design of Apple’s fisheye format. Some of them include distortion handling, the size of the area that’s allocated to each pixel, where the “most important” pixels are located in the frame, how high-frequency details affect encoder performance, how the distorted motion in the transformed frame influences motion estimation efficiency, how the pixels are sampled and displayed during playback, and much more.

Blender

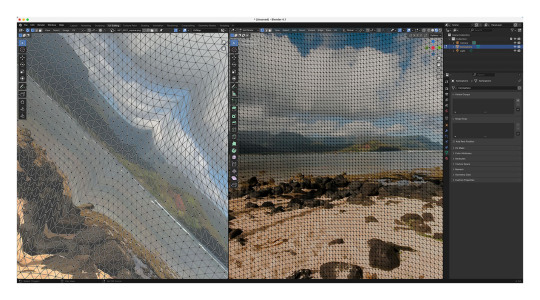

But let’s get back to that raw Apple fisheye frame. Knowing that the image represents ~180 degrees, I loaded up Blender and started to guess at a possible geometry for playback based on the visible distortions. At that point, I wasn’t sure if the frame encodes faces of the playback geometry or if the distortions are related to another kind of mathematical mapping. Some of the distortions are more severe than expected, though, and my mind couldn’t imagine what kind of mesh corrected for those distortions (so tempted to blame my aphantasia here, but my spatial senses are otherwise excellent).

One of the many meshes and UV maps that I’ve experimented with in Blender.

Radial Stretching

If you’ve ever worked with projection mappings, fisheye lenses, equirectangular images, camera calibration, cube mapping techniques, and so much more, Google has inevitably led you to one of Paul Bourke’s many fantastic articles. I’ve exchanged a few e-mails with Paul over the years, so I reached out to see if he had any insight.

After some back-and-forth discussion over a couple of weeks, we both agreed that Apple’s fisheye projection is most similar to a technique called radial stretching (with that 45-degree clockwise rotation thrown in). You can read more about this technique and others in Mappings between Sphere, Disc, and Square and Marc B. Reynolds’ interactive page on Square/Disc mappings.

Basically, though, imagine a traditional centered, circular fisheye image that touches each edge of a square frame. Now, similar to the equirectangular strip-peeling exercise I described earlier with the globe, imagine peeling one-degree wide strips radially from the center of the image and stretching those along the same angle until they touch the edge of the square frame. As the name implies, that’s radial stretching. It’s probably the technique you’d invent on your own if you had to come up with something.
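The basic radial stretch, without any of Apple's apparent refinements, can be sketched in a few lines of Python. Coordinates are normalized so the disc has radius 1 and the square spans [-1, 1]. The function names are hypothetical, and the sign and order of the 45-degree rotation in `encode` are assumptions that depend on the axis convention (here +y is up, so a negative angle is clockwise).

```python
import math

def disc_to_square(x, y):
    """Radial stretching: push each point outward along its angle so the unit circle lands on the square's edge."""
    r = math.hypot(x, y)
    m = max(abs(x), abs(y))
    if m == 0.0:
        return 0.0, 0.0
    s = r / m                      # stretch factor along this ray
    return x * s, y * s

def square_to_disc(u, v):
    """Inverse of disc_to_square: pull each point back toward the center along its angle."""
    r = math.hypot(u, v)
    if r == 0.0:
        return 0.0, 0.0
    s = max(abs(u), abs(v)) / r
    return u * s, v * s

def rotate(x, y, degrees):
    """Rotate a point counter-clockwise by the given angle (with +y up)."""
    a = math.radians(degrees)
    return x * math.cos(a) - y * math.sin(a), x * math.sin(a) + y * math.cos(a)

def encode(x, y, rotation_deg=-45.0):
    """Assumed order: rotate the fisheye disc 45 degrees clockwise, then stretch it to the square."""
    xr, yr = rotate(x, y, rotation_deg)
    return disc_to_square(xr, yr)
```

With these conventions, the right-hand edge of the fisheye disc, `(1, 0)`, lands in the lower-right corner of the square, which matches the idea of the horizontal axis running along the upper-left to lower-right diagonal.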

By performing the reverse of this operation on a raw Apple fisheye frame, you end up with a pretty good looking version of the Apple TV+ logo. But, it’s not 100% correct. It appears that there is some additional logic being used along the diagonals to reduce the amount of radial stretching and distortion (and perhaps to keep image data away from the encoded corners). I’ve experimented with many approaches, but I still can’t achieve a 100% match. My best guess so far uses simple beveled corners, and this is the same transformation I used for the earlier image.

It's also possible that this last bit of distortion could be explained by a specific projection geometry, and I’ve iterated over many permutations that get close…but not all the way there. For what it’s worth, I would be slightly surprised if Apple was encoding to a specific geometry because it adds unnecessary complexity to the toolchain and reduces overall flexibility.

While I have been able to play back the Apple TV+ logo using the techniques I’ve described, the frame lacks any real detail beyond its center. So, it’s still possible that the mapping I’ve arrived at falls apart along the periphery. Guess I’ll continue to cross my fingers and hope that we learn more at WWDC24.

Conclusion

This post covered my experimentation with the technical aspects of Apple’s fisheye projection format. Along the way, it’s been fun to collaborate with Andrew, Paul, and others to work through the details. And while we were unable to arrive at a 100% solution, we’re most definitely within range.

The remaining questions I have relate to why someone would choose this projection format over half-equirectangular. Clearly Apple believes there are worthwhile benefits, or they wouldn’t have bothered to build a toolchain to capture, process, and stream video in this format. I can imagine many possible advantages, and I’ve enumerated some of them in this post. With time, I’m sure we’ll learn more from Apple themselves and from experiments that all of us can run when their fisheye format is supported by existing tools.

It's an exciting time to be revisiting immersive video, and we have Apple to thank for it.

As always, I love hearing from you. It keeps me motivated! Thank you for reading.


This is the TechSummit Rewind, a daily recap of the top technology headlines.

Justice Department files antitrust suit to block AT&T/Time Warner merger

The US Justice Department has filed a lawsuit to block the proposed merger between AT&T and Time Warner, saying that the combination of AT&T’s distribution networks and Time Warner’s news and entertainment products would harm competition. Time Warner is the parent company of CNN, TBS, TNT, Cartoon Network, and HBO, among other properties.

“AT&T/DirecTV would hinder its rivals by forcing them to pay hundreds of millions of dollars more per year for Time Warner’s networks, and it would use its increased power to slow the industry’s transition to new and exciting video distribution models that provide greater choice for consumers. The proposed merger would result in fewer innovative offerings and higher bills for American families.”

The US Justice Department, in a complaint

In a press conference, AT&T’s executive leadership gathered in New York to rebuke the DOJ lawsuit and defend the merits and legality of the proposed $85 billion merger. According to AT&T CEO Randall Stephenson, the lawsuit “stretches the very reach of antitrust law beyond the breaking point,” but he suggested that he’s looking for a way forward.

“As we head to court we will continue to offer solutions that allow this transaction to close.”

AT&T CEO Randall Stephenson, in a press conference

However, Stephenson said that selling CNN is off the table.

High-profile media lawyer Daniel Petrocelli was also at the press conference, defending AT&T and the merger.

“The DOJ has to prove that this merger will harm competition, that this merger will harm consumers. It’s a burden that they have not met in a half a century and it’s one that they cannot and will not meet here.

“Under basic principles of law and economics, combining these two non-competing companies should pose no problem. The TV bill will not go up, and the combined company will not keep CNN, TNT, HBO, or any other network to itself. Simply put, the theories in the DOJ’s complaint filed about an hour ago simply have no proof and make no sense in the real business world. We are confident that the law and facts will prevail and that this merger will be allowed to proceed.”

Lawyer Daniel Petrocelli

Uber to buy up to 24K SUVs from Volvo equipped with autonomous technology from 2019-2021

Uber plans to buy up to 24,000 self-driving cars from Volvo. According to a statement from Volvo, it’ll provide Uber with its flagship XC90 SUVs equipped with autonomous technology as part of a non-exclusive deal from 2019 to 2021.

“Our goal was from day one to make investments into a vehicle that could be manufactured at scale.”

Uber head of automotive alliances Jeff Miller

According to Miller, a small number of cars would be purchased with equity and others would be bought with debt financing.

AWS launches Secret Region for intel agencies and their partners working with classified data

Amazon has announced that its AWS cloud computing service now offers a new region that’s specifically designed for the workloads of the U.S. Intelligence Community. The AWS Secret Region can run workloads up to the “secret” security classification level, and will complement the service’s existing $600 million contract with the CIA and other agencies for running Top Secret workloads on its cloud.

“The U.S. Intelligence Community can now execute their missions with a common set of tools, a constant flow of the latest technology, and the flexibility to rapidly scale with the mission. The AWS Top Secret Region was launched three years ago as the first air-gapped commercial cloud and customers across the U.S. Intelligence Community have made it a resounding success. Ultimately, this capability allows more agency collaboration, helps get critical information to decision makers faster, and enables an increase in our Nation’s Security.”

Amazon Web Services worldwide public sector vice president Teresa Carlson

The original, air-gapped Top Secret cloud, which AWS operates for the intelligence community, was limited to intelligence agencies. The new Secret Region is available to all government agencies and is separate from the earlier work done with the CIA and other agencies, along with the existing Amazon GovCloud.

DJI threatens legal action after researcher reports bug

An essay written by security researcher Kevin Finisterre explains that DJI’s bug bounty program isn’t off to a good start after launching in August.

Finisterre describes his interactions with DJI before and after he reported problems with the drone-maker’s security. Before getting too deep into it, he checked with DJI to see if their servers were included in the scope of the bug bounty program and DJI confirmed that they were on the table. After some digging, Finisterre put a 31-page report together that detailed what he and his colleagues found. That included the private key to DJI’s SSL certificate, which was leaked on GitHub, allowing Finisterre to see customer data stored on DJI’s servers.

Finisterre turned in the report and DJI said that the information warranted a $30,000 reward. After a series of negotiations over the terms of the deal focused mostly on what Finisterre could or could not say, he was advised by a number of lawyers that the agreement was risky at best and “likely crafted in bad faith to silence anyone that signed it.” He abandoned the deal after being sent a letter stating that he had no authority to access DJI servers and the company was reserving its right of action under the Computer Fraud and Abuse Act.

“DJI asks researchers to follow standard terms for bug bounty programs, which are designed to protect confidential data and allow time for analysis and resolution of a vulnerability before it is publicly disclosed. The hacker in question refused to agree to these terms, despite DJI’s continued attempts to negotiate with him, and threatened DJI if his terms were not met.”

DJI, in a statement

Uber adds live location sharing, expands beacon program

Uber has added a few features that should make getting a ride a tad easier, including live location sharing that’ll let your driver see your location once you tap the gray icon on the bottom right of the screen. This can be helpful when you’re walking to your pickup location so your driver isn’t left in the dark.

Beacons are also being expanded to New York, Chicago, and San Francisco to help you identify your ride in a sea of Ubers. The company’s guest hailing feature has also been updated, letting you hail rides for friends and family and communicate with the driver via text message. Tap “where to,” and you’ll be able to see a different rider at the top of the screen.

Lastly, Uber has added in-app gifting, so you can send Uber credits to your friends without leaving the app.

All of these features are available today.

Tencent becomes first Chinese tech firm valued over $500B

Tencent has become the first Chinese company to be valued at over $500 billion.

Shares of the 19-year-old company, which is listed on the Hong Kong Stock Exchange, rallied to reach HK$418.80 ($53.62) to give it a market cap of HK$3.99 trillion ($510.8 billion).

Entry into the half-a-trillion dollar club comes a week after Tencent posted a profit of $2.7 billion on revenue of $9.8 billion for Q3 2017. Overall profit was up 69 percent year-over-year and revenue rose by 61 percent thanks to the company’s games business.

Uber fined $8.9 million by Colorado for allowing drivers with felony convictions, driver’s license issues

Colorado regulators have given Uber an $8.9 million penalty for allowing 57 people with past criminal or motor vehicle offenses to drive for the company, according to the state’s Public Utilities Commission.

According to the Commission, the drivers should have been disqualified. They had issues ranging from felony convictions to driving under the influence and reckless driving. In some cases, drivers were working with revoked, suspended, or cancelled licenses. A similar investigation of Lyft found no violations.

“We have determined that Uber had background-check information that should have disqualified these drivers under the law, but they were allowed to drive anyway. These actions put the safety of passengers in extreme jeopardy.”

Colorado Public Utilities Commission director Doug Dean, in a statement

“We recently discovered a process error that was inconsistent with Colorado’s ridesharing regulations and proactively notified the Colorado Public Utilities Commission (CPUC). This error affected a small number of drivers and we immediately took corrective action. Per Uber safety policies and Colorado state regulations, drivers with access to the Uber app must undergo a nationally accredited third-party background screening. We will continue to work closely with the CPUC to enable access to safe, reliable transportation options for all Coloradans.”

Uber spokesperson Stephanie Sedlak, in a statement

Uber handed over 107 records to the PUC, and said that it removed those people from its system.

The PUC cross-checked the Uber drivers with state crime and court databases, finding that many had aliases and other violations. While 63 were found to have issues with their driver’s licenses, the PUC focused on 57 who had additional violations, because of the impact on public safety.

“What [Uber] calls proactively reaching out to us was after we had to threaten them with daily civil penalties to get them to provide us with the [records]. This is not a data processing error. This is a public safety issue.

“They said their private background checks were superior to anything out there. We can tell you their private background checks were not superior. In some cases, we could not say they even provided a background check.”

Doug Dean

The fine is a civil penalty assessment based on a citation of $2,500 per day for each disqualified driver found to have worked. Among the findings, 12 drivers had felony convictions, 17 had major moving violations, 63 had driver’s license issues, and three had interlock driver’s licenses, which are required after a recent drunken driving conviction.

Uber has 10 days to pay 50 percent of the $8.9 million penalty or request a hearing to contest the violation before an administrative law judge. Afterwards, the PUC will continue making audits to check for compliance. The penalty could rise if more violations are found.

“Uber can fix this tomorrow. The law allows them to have fingerprint background checks. We had found a number of a.k.a.’s and aliases that these drivers were using. That’s the problem with name-based background checks. We’re very concerned and we hope the company will take steps to correct this.”

Doug Dean

PayPal partners with Acorns to help you invest your money

PayPal has launched a number of integrations with microinvestment platform Acorns.

Once you connect your bank card to the app, Acorns rounds up your purchases to the nearest dollar. Users can also set up recurring investments or make a one-time payment of up to $50,000 that will be invested on their behalf.

PayPal can now be used to fund an Acorns account for round up, recurring, and one-off investments.

PayPal users can also monitor or manage their Acorns investments in the PayPal app.

The new integrations are available now for “select” PayPal customers in the U.S. and will open to everyone in the U.S. in early 2018.

FanDuel CEO and co-founder Nigel Eccles leaving to start an eSports company

FanDuel CEO and co-founder Nigel Eccles is leaving the company and stepping down from the company’s board, where he was chairman.

Eccles internally announced that he’s leaving to start an eSports company. Monday was his last day.

Matt King, who served as FanDuel’s CFO until the end of 2016 and then spent a year at insurance broker Cottingham & Butler, will replace Eccles as CEO. King is also taking a board seat.

FanDuel is also adding two more people to the board. Former Dish Network vice chairman and president Carl Vogel is joining as chairman, and former Fox Sports executive David Nathanson is also joining the board.

The Week Ahead

Today: Salesforce, Hewlett Packard Enterprise, HP report earnings

Thursday-Sunday: No TechSummit Rewinds

In other news…

Seattle-based startup Pixvana announced a $14 million Series A investment led by Paul Allen’s Vulcan Capital with participation from new investors like Raine Ventures, Cisco Investments, and Hearst Ventures.

Health IQ, a startup that collects data to let health-conscious people save an average of $1,238 a year on their life insurance premiums, has raised another $76 million in venture capital.

Bloomberg’s Twitter network will launch on Dec. 18 with six founding partners: Goldman Sachs, Infiniti, TD Ameritrade, CA Technologies, AT&T, and CME Group.

Southeast Asia-based startup aCommerce, which helps brands get into e-commerce and digital media in the region, has raised $65 million in new funding led by Emerald Media.

According to Xiaomi CEO Lei Jun, Xiaomi will invest up to $1 billion in 100 Indian startups over the next five years.

TechSummit Rewind 206

#aCommerce#Acorns#Amazon Web Services#AT&T#Bloomberg#DJI#FanDuel#featured#Health IQ#Hewlett Packard Enterprise#HP#Nigel Eccles#Paypal#Pixvana#Salesforce#Tencent#Time Warner#Twitter#Uber#Volvo#Xiaomi


Pixvana raises $14M from Vulcan, Microsoft, top VC firms for VR production platform

New Post has been published on https://takenews.net/pixvana-raises-14m-from-vulcan-microsoft-top-vc-firms-for-vr-production-platform/


Madrona Venture Group investors watch Pixvana’s pitch in virtual reality. (Photo via Pixvana)

What better way to pitch a virtual or augmented reality startup idea than to strap a bunch of headsets on the heads of venture capitalists and turn a basic presentation into an immersive experience that demonstrates the potential of your company’s technology?

That’s what Pixvana did, and it worked.

The Seattle startup today announced a $14 million Series A investment led by Paul Allen’s Vulcan Capital, with participation from new investors like Raine Ventures, Microsoft Ventures, Cisco Investments, and Hearst Ventures. Previous backer Madrona Venture Group, which led the company’s $6 million seed round, also joined.

Pixvana has developed a cloud-based end-to-end platform called SPIN Studio that helps virtual reality filmmakers edit, process, and deliver video at 8K resolution. Many storytellers are forced to use desktop tools that weren’t built with VR in mind, creating problems like long rendering times, limited editing capabilities, and less-than-ideal resolution. Pixvana’s SPIN Studio uses cloud infrastructure and VR-centric editing software designed from the ground up. Early customers range from sports teams to restaurants to media companies that are starting to film and produce VR content.

Pixvana, founded in late 2015, calls its software “the platform for XR storytelling,” which encompasses virtual reality, augmented reality, 360-video, and other next-generation video experiences.

“There’s super alternative within the XR video area and it’s clear that excellent content material and storytelling instruments will outline this new medium,” Stuart Nagae, common associate at Vulcan Capital, mentioned in a press release. “We expect that Pixvana has a rare crew that actually understands tips on how to ship cinematic, immersive experiences in XR and may construct a SaaS enterprise at scale.”

To understand Pixvana’s underlying technology, it’s best to watch content in virtual reality. Pixvana CEO Forest Key knew this, so as he went to pitch the company’s idea to more than 50 investors for the Series A round, he brought headsets with him and showed a version of the video below, essentially a pitch baked into a VR experience from Pixvana’s office.

Key told GeekWire the traditional way to pitch investors would be to show a PowerPoint presentation and then move to a VR demo.

“We found that when you do that, someone goes in and out and just tries a little,” he said in an interview at Pixvana’s HQ in Seattle’s Fremont neighborhood. “They don’t really experience it.”

Instead, Pixvana wanted investors to immerse themselves in the video, which shows clips from Seattle Sounders matches, ballet performances, restaurant tours, and more, all to demonstrate the type and quality of content that Pixvana helps create.

Key, who previously sold Seattle hotel marketing startup Buuteeq to Priceline in 2014, would ask investors what they saw; many noted the soccer or ballet.

“I’d tell them that the main thing they saw was a 15-minute corporate presentation,” Key said. “They kind of got lost in it.”

And that was the point: to show investors how Pixvana’s software enables any storyteller to produce “XR” content.

“It was a way to differentiate,” Key noted. “It worked in a way where we got to put our money where our mouth is and use our own technology.”

Key detailed the making of the pitch video in a blog post here.

While Pixvana may have a robust platform for “XR” storytelling, it remains to be seen if virtual and augmented reality will reach mainstream consumers despite the hype and investment in the industry over the past few years.

Key tells investors that Pixvana won’t single-handedly create a mass market for VR; that will be up to tech giants like Facebook, Microsoft, Google, Samsung, Sony, and others.

But the CEO likes where his 24-person company is positioned. Key said that from the beginning, he and his co-founders predicted 2020 as a breakout year for XR storytelling. The CEO pointed to the continued development of cheaper and better quality headsets.

“We love our timing,” Key added. “If the market was really big already, we’d be too late. I feel like we’re right about where we want to be for maximum possible market value creation. Of course I’d like there to be more headsets and for our technology to be more complete, but the fact is, it’s a little more of a marathon than it is a sprint.”

Since it raised its initial seed round two years ago, Pixvana has added big-name customer partners like Valve and expanded to more platforms like Windows Mixed Reality while adding features to its software.

Now the additional cash infusion will help the company go to market. Key said he gets together regularly with CEOs from other Seattle-based VR startups and they all agree that these “next two or three years is where it gets really interesting.”

“We’re barely getting going as an industry, but we’re not barely getting going as a technology play,” Key added. “Pixvana has a very interesting technology stack and we’re very well positioned as leaders in this part of the market.”

The quality of Pixvana’s Series A investors is also notable, with companies like Microsoft and Cisco getting involved. Investors are clearly still excited about the potential of VR; VentureBeat noted that investments in entertainment VR are up 79 percent over the past year.

“We’re very excited to be working with this set of investor partners; together they bring a collective passion for VR and expertise in building and scaling technologies that are fundamental to the immersive media future in VR and AR,” Key said.

Though Key’s last company was involved with online hotel booking, he spent a good chunk of the past few decades working in digital media. After studying film history at UCLA, he started his career at Lucasfilm on the visual effects team before moving on to places like Adobe and Microsoft, where he helped develop important web-based video technologies like Flash and Silverlight.

Key’s co-founders have impressive resumes, too, with expertise in software platforms, visual effects, video production, codecs, and content creation. Chief Technology Officer and Creative Director Scott Squires is a well-known Sci-Tech Academy Award winner known for his visual effects work and also a veteran of Lucasfilm. Chief Product Officer Bill Hensler was previously the senior director of engineering at Apple, where he worked on photo apps and imaging technologies, while VP of Product Management Sean Safreed is the co-founder of film and video software startup Red Giant.

Pixvana, a Seattle 10 company in 2016, is one of many new up-and-coming virtual reality startups in the Seattle area. Others include Pluto VR, HaptX, VRStudios, VREAL, Endeavor One, Nullspace VR, Against Gravity, Visual Vocal, and several others. These are in addition to larger companies like Microsoft, Valve, HTC, and Oculus, which are also developing virtual and augmented reality technologies in the region.

0 notes

Text

Valve brings 360-degree videos to Steam VR


While it’s not quite as immersive as a full virtual reality experience, 360-degree videos and photos are becoming more common (especially on Facebook and YouTube), and now Valve has released a Steam 360 Video Player. It uses adaptive streaming from a company called Pixvana, which should enable playback with just one click on your Rift or Vive headset.…


#360degreevideo#applenews#entertainment#personal computing#personalcomputing#pixvana#rift#steam#steamvr#valve#virtualreality#vive

0 notes

Text

Valve and Pixvana partner to bring 360º VR video to Steam

Pixvana, makers of SPIN, the cloud-based 360º video creation studio, today announced a partnership with Valve to integrate its software services into the Steam platform. A beta version of the SPIN software will let users publish 360º video content directly to the Steam Store, which will allow users of Steam-enabled headsets…


0 notes

Text

0 notes

Text

Global Virtual Reality Content Creation Market Size, Share Leaders, Opportunities Assessment, Development Status, Top Manufacturers, And Forecast 2021-2027

Global Virtual Reality Content Creation Market Overview:

Maximize Market Research (MMR) reports cover market dynamics, product types, applications, end-users and other market segments, regional and country-level market insights, and the competitive environment of the Global Virtual Reality Content Creation market. The MMR Report also examines the impact of COVID-19, recent industry trends, market features, sales volume, market size over the forecast period, and market share, along with market elements such as momentum, restraints, opportunities, and the difficulties that are projected to slow market growth.

The Maximize Market Research Report's market segmentation is the most complete in the industry. MMR's study includes a complete patent analysis as well as profiles of significant market competitors, all of which contribute to a competitive climate. MMR Research provides Global Virtual Reality Content Creation market data in all regions for segments like technology, services, and applications. The MMR Report presents an in-depth analysis of key industry areas and their prospects

Request for free sample: https://www.maximizemarketresearch.com/request-sample/55631

Key Players:

• Blippar • 360 Labs • Matterport • Koncept VR • SubVRsive • Panedia Pty Ltd. • WeMakeVR • VIAR (Viar360) • Pixvana Inc. • Scapic • IBM Corporation • Dell • Intel Corporation • McAfee, LLC • Trend Micro • VMware • Juniper Networks • Fortinet, Inc • Sophos Ltd. • Cisco Systems Inc.

Regional Analysis:

Europe, North America, Asia Pacific, Middle East and Africa, and Latin America are the five regions that make up the Global Virtual Reality Content Creation market. The Global Virtual Reality Content Creation market study includes important market regions, as well as notable sectors and sub segments. The present situation of regional development in terms of market size, market share, and volume is investigated in this study. This Global Virtual Reality Content Creation Market Report examines numbers, regions, revenues, business chain structures, opportunities, and news in great detail.

The goal of this report is to provide a comprehensive analysis of the Global Virtual Reality Content Creation Market, which includes all industry players. The research provides an easy-to-understand analysis of complex data, as well as historical and current industry information, as well as projected market size and trends. This Global Virtual Reality Content Creation market report examines every facet of the industry, with a particular focus on key players such as market leaders, followers, and newcomers. The study includes a PORTER, SWOT, and PESTEL analysis, as well as the influence of microeconomic market determinants. External and internal factors that are likely to have a positive or negative impact on the company have been investigated to give the reader a clear futuristic perspective of the industry.

Get more Report Details : https://www.maximizemarketresearch.com/market-report/global-virtual-reality-content-creation-market/55631/

COVID-19 Impact Analysis on Global Virtual Reality Content Creation Market:

This report looks into the impact of COVID-19 containment measures on the profitability of leaders, followers, and disruptors. Since the lockdowns began, their impact has varied by region, segment, sector, and country. The report discusses the short- and long-term implications for the market, which helps decision-makers develop short- and long-term business strategies for each region.

Key Questions answered in the Global Virtual Reality Content Creation Market Report are:

Which product segment grabbed the largest share in the Global Virtual Reality Content Creation market?

How is the competitive scenario of the Global Virtual Reality Content Creation market?

Which are the key factors aiding the Global Virtual Reality Content Creation market growth?

Which region holds the maximum share in the Global Virtual Reality Content Creation market?

What will be the CAGR of the Global Virtual Reality Content Creation market during the forecast period?

Which application segment emerged as the leading segment in the Global Virtual Reality Content Creation market?

Which are the prominent players in the Global Virtual Reality Content Creation market?

What key trends are likely to emerge in the Global Virtual Reality Content Creation market in the coming years?

What will be the Global Virtual Reality Content Creation market size by 2027?

Which company held the largest share in the Global Virtual Reality Content Creation market?

About Us:

Maximize Market Research provides B2B and B2C research on 12000 high growth emerging opportunities & technologies as well as threats to the companies across the Healthcare, Pharmaceuticals, Electronics & Communications, Internet of Things, Food and Beverages, Aerospace and Defence and other manufacturing sectors.

Contact Us:

MAXIMIZE MARKET RESEARCH PVT. LTD.

3rd Floor, Navale IT Park Phase 2,

Pune Bangalore Highway,

Narhe, Pune, Maharashtra 411041, India.

Email: [email protected]

Phone No.: +91 20 6630 3320

Website: www.maximizemarketresearch.com

0 notes

Text

Virtual Reality Content Creation Market 2021 COVID-19 Pandemic Impact, Key Players and Comprehensive Research Study till 2027

Global Virtual Reality Content Creation Market - Overview

The growing need to be physically in specific places is estimated to spur the virtual reality content creation market 2020. The global Virtual Reality Content Creation Market report by Market Research Future (MRFR) provides a clear outline on various niches and trends in VR coupled with drivers and challenges to be aware of in the period of 2019 to 2025 (forecast period). The COVID-19 outbreak and its impact on the industry are also included.

The popularity and improved accessibility to open-source platforms are anticipated to transform the virtual reality content creation market in the coming years. The escalation in demand for head-mounted displays (HMDs) like VR and AR products, especially in times like the current COVID-19 crisis is estimated to promote further growth in the virtual reality content creation market in the foreseeable future.

Get Free Sample Copy at: https://www.marketresearchfuture.com/sample_request/9552

Segmental Analysis

The virtual reality content creation market has been segmented based on component, end-user, content type, and region.

On the basis of component, the virtual reality content creation market has been divided into software and service.

Based on the content type, the virtual reality content creation market has been segmented into 360-degree photos, videos, and gaming.

Based on end-user, the virtual reality content creation market has been segmented into travel & hospitality, real estate, media & entertainment, gaming, automotive, healthcare, and others.

Based on region, the virtual reality content creation market consists of the Asia Pacific, the Middle East, North America, Europe, Africa, and South America.

Detailed Regional Analysis

The regional study of the virtual reality content creation market covers the Asia Pacific, the Middle East, North America, Europe, Africa, and South America. North America led the virtual reality content creation market in 2018 and is projected to hold the largest market share throughout the forecast period. The growth of the North American market is mainly attributed to the availability of secure IT infrastructure. Moreover, the high IT spending capacity of countries in North America also encourages the development of virtual reality content creation in the region. The virtual reality content creation market in the Asia Pacific region is anticipated to record the highest CAGR in the forecast period, due to the growing number of VR-based startups in different countries in this region.

Competitive Analysis

The instability in the forces of demand and supply is estimated to create a beneficial impact on the overall global market in the forecast period. The restoration and everyday operations are estimated to take some time, which will lead to intensive development of backlog in delivery. The financial assistance provided by the government around the world and trade bodies is estimated to salvage the situation in the coming years. The downturn effects visible in the market are estimated to stay a little longer due to the scale of impact on the global market. The need for prudent analysis of the market trends and demand projections is estimated to lead to formidable development in the market. The constraints of growth are expected to be significant and considerable support will be needed to transform the market effectively.

The crucial companies in the virtual reality content creation market are Koncept VR, SubVRsive, Matterport, Panedia Pty Ltd., Blippar, 360 Labs, Pixvana, Inc., WeMakeVR, VIAR (Viar360), and Scapic among others.

Industry Updates:

May 2020: Apple Inc. purchased the virtual reality startup NextVR Inc., which concentrates on making VR video content of events such as musical performances and sports matches. It creates VR content that can be seen on consumer headsets such as the PlayStation VR, Facebook Inc.’s Oculus, and others. The startup is also recognized for its partnerships with the NBA and Wimbledon to stream live sports from dedicated cameras set up courtside to individuals’ headsets at home.

Get Complete Report Details at: https://www.marketresearchfuture.com/reports/virtual-reality-content-creation-market-9552

About Market Research Future:

At Market Research Future (MRFR), we enable our customers to unravel the complexity of various industries through our Cooked Research Report (CRR), Half-Cooked Research Reports (HCRR), Raw Research Reports (3R), Continuous-Feed Research (CFR), and Market Research & Consulting Services.

Contact:

Market Research Future

+1 646 845 9312

Email: [email protected]

0 notes

Text

Encoding Spatial Video

As I mentioned in my prior post about Spatial Video, the launch of the Apple Vision Pro has reignited interest in spatial and immersive video formats, and it's exciting to hear from users who are experiencing this format for the first time. The release of my spatial video command-line tool and example spatial video player has inadvertently pulled me into a lot of fun discussions, and I've really enjoyed chatting with studios, content producers, camera manufacturers, streaming providers, enthusiasts, software developers, and even casual users. Many have shared test footage, and I've been impressed by a lot of what I've seen. In these interactions, I'm often asked about encoding options, playback, and streaming, and this post will focus on encoding.

To start, I'm not an Apple employee, and other than my time working at an immersive video startup (Pixvana, 2016-2020), I don't have any secret or behind-the-scenes knowledge. Everything I've written here is based on my own research and experimentation. That means that some of this will be incorrect, and it's likely that things will change, perhaps as early as WWDC24 in June (crossing my fingers). With that out of the way, let's get going.

Encoding

Apple's spatial and immersive videos are encoded using a multi-view extension of HEVC referred to as MV-HEVC (found in Annex G of the latest specification). While this format and extension were defined as a standard many years ago, as far as I can tell, MV-HEVC has not been used in practice. Because of this, there are very few encoders that support this format. As of this writing, these are the encoders that I'm aware of:

spatial - my own command-line tool for encoding MV-HEVC on an Apple silicon Mac

Spatialify - an iPhone/iPad app

SpatialGen - an online encoding solution

QooCam EGO spatial video and photo converter - for users of this Kandao camera

Dolby/Hybrik - professional online encoding

Ateme TITAN - professional encoding (note the upcoming April 16, 2024 panel discussion at NAB)

SpatialMediaKit - an open source GitHub project for Mac

MV-HEVC reference software - complex reference software mostly intended for conformance testing

Like my own spatial tool, many of these encoders rely on the MV-HEVC support that has been added to Apple's AVFoundation framework. As such, you can expect them to behave in similar ways. I'm not as familiar with the professional solutions that are provided by Dolby/Hybrik and Ateme, so I can't say much about them. Finally, the MV-HEVC reference software was put together by the standards committee, and while it is an invaluable tool for testing conformance, it was never intended to be a commercial tool, and it is extremely slow. Also, the reference software was completed well before Apple defined its vexu metadata, so that would have to be added manually (my spatial tool can do this).

Layers

As I mentioned earlier, MV-HEVC is an extension to HEVC, and the multi-view nature of that extension is intended to encode multiple views of the same content all within a single bitstream. One use might be to enable multiple camera angles of an event – like a football game – to be carried in a single stream, perhaps allowing a user to switch between them. Another use might be to encode left- and right-eye views to be played back stereoscopically (in 3D).

To carry multiple views, MV-HEVC assigns each view a different layer ID. In a normal HEVC stream, there is only one so-called primary layer that is assigned an ID of 0. When you watch standard 2D HEVC-encoded media, you're watching the only/primary layer 0 content. With Apple's spatial and immersive MV-HEVC content, a second layer (typically ID 1) is also encoded, and it represents a second view of the same content. Note that while it's common for layer 0 to represent a left-eye view, this is not a requirement.
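The layer ID is carried in the two-byte header at the start of every HEVC NAL unit, so a tool can sort units into views without decoding any picture data. Here is a minimal Python sketch, assuming the standard header layout from the HEVC specification (1-bit forbidden_zero_bit, 6-bit nal_unit_type, 6-bit nuh_layer_id, 3-bit nuh_temporal_id_plus1). The `NalHeader` and `parse_nal_header` names are made up for illustration, and the example bytes are hand-built rather than taken from a real stream.

```python
from typing import NamedTuple


class NalHeader(NamedTuple):
    nal_unit_type: int
    layer_id: int
    temporal_id: int


def parse_nal_header(data: bytes) -> NalHeader:
    """Decode the 2-byte HEVC NAL unit header found at the start of a NAL unit."""
    if len(data) < 2:
        raise ValueError("an HEVC NAL unit header is two bytes long")
    b0, b1 = data[0], data[1]
    if b0 & 0x80:
        raise ValueError("forbidden_zero_bit is set")
    nal_unit_type = (b0 >> 1) & 0x3F
    # nuh_layer_id straddles the byte boundary: 1 bit in b0, 5 bits in b1.
    layer_id = ((b0 & 0x01) << 5) | (b1 >> 3)
    # The header stores the temporal ID plus one.
    temporal_id = (b1 & 0x07) - 1
    return NalHeader(nal_unit_type, layer_id, temporal_id)


# Two hand-built headers: same NAL unit type, different layers.
base_view = parse_nal_header(bytes([0x02, 0x01]))    # layer 0
second_view = parse_nal_header(bytes([0x02, 0x09]))  # layer 1
print(base_view)
print(second_view)
```

A standard 2D decoder effectively keeps only the units whose layer ID is 0, which is why the primary layer stays playable everywhere.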

One benefit of this scheme is that you can play back MV-HEVC content on a standard 2D player, and it will only play back the primary layer 0 content, effectively ignoring anything else. But, when played back on an MV-HEVC-aware player, each layered view can be presented to the appropriate eye. This is why my spatial tool allows you to choose which eye's view is stored in the primary layer 0 for 2D-only players. Sometimes (like on iPhone 15 Pro), one camera's view looks better than the other.

All video encoders take advantage of the fact that the current video frame looks an awful lot like the prior video frame. Which looks a lot like the one before that. Most of the bandwidth savings depends on this fact. This is called temporal (changes over time) or inter-view (where a view in this sense is just another image frame) compression. As an aside, if you're more than casually interested in how this works, I highly recommend this excellent digital video introduction. But even if you don't read that article, a lot of the data in compressed video consists of one frame referencing part of another frame (or frames) along with motion vectors that describe which direction and distance an image chunk has moved.

Now, what happens when we introduce the second layer (the other eye's view) in MV-HEVC-encoded video? Well, in addition to a new set of frames that is tagged as layer 1, these layer 1 frames can also reference frames that are in layer 0. And because stereoscopic frames are remarkably similar – after all, the two captures are typically 65mm or less apart – there is a lot of efficiency when storing the layer 1 data: "looks almost exactly the same as layer 0, with these minor changes…" It isn't unreasonable to expect 50% or more savings in that second layer.

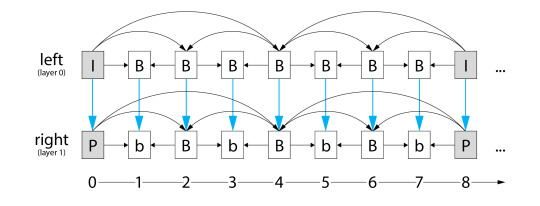

This diagram shows a set of frames encoded in MV-HEVC. Perhaps confusing at first glance, the arrows show the flow of referenced image data. Notice that layer 0 does not depend on anything in layer 1, making this primary layer playable on standard 2D HEVC video players. Layer 1, however, relies on data from layer 0 and from other adjacent layer 1 frames.

Thanks to Fraunhofer for the structure of this diagram.

Mystery

I am very familiar with MV-HEVC output that is recorded by Apple Vision Pro and iPhone 15 Pro, and it's safe to assume that these are being encoded with AVFoundation. I'm also familiar with the output of my own spatial tool and a few of the others that I mentioned above, and they too use AVFoundation. However, the streams that Apple is using for its immersive content appear to be encoded by something else. Or at least a very different (future?) version of AVFoundation. Perhaps another WWDC24 announcement?

By monitoring the network, I've already learned that Apple's immersive content is encoded in 10-bit HDR, 4320x4320 per-eye resolution, at 90 frames-per-second. Their best streaming version is around 50Mbps, and the format of the frame is (their version of) fisheye. While they've exposed a fisheye enumeration in Core Media and their files are tagged as such, they haven't shared the details of this projection type. Because they've chosen it as the projection type for their excellent Apple TV immersive content, though, it'll be interesting to hear more when they're ready to share.
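To put those numbers in perspective, here is some back-of-the-envelope arithmetic in Python. It uses only the figures above, plus one assumption that is not stated in the text: 4:2:0 chroma subsampling, which averages 15 bits per pixel at 10-bit depth.

```python
WIDTH = HEIGHT = 4320      # per-eye resolution
EYES = 2
FPS = 90
STREAM_BPS = 50_000_000    # ~50 Mbps top streaming tier

# Assumption: 10-bit 4:2:0, i.e. 10 bits of luma plus 5 bits of chroma per pixel on average.
BITS_PER_PIXEL_RAW = 10 * 1.5

pixels_per_second = WIDTH * HEIGHT * EYES * FPS
raw_bps = pixels_per_second * BITS_PER_PIXEL_RAW
encoded_bits_per_pixel = STREAM_BPS / pixels_per_second
compression_ratio = raw_bps / STREAM_BPS

print(f"{pixels_per_second / 1e9:.2f} gigapixels per second")
print(f"{raw_bps / 1e9:.1f} Gbps uncompressed (under the 4:2:0 assumption)")
print(f"{encoded_bits_per_pixel:.4f} encoded bits per pixel")
print(f"roughly {compression_ratio:.0f}:1 compression")
```

Under that assumption the encoder is squeezing the source by about three orders of magnitude, which gives a sense of how much the stream depends on efficient inter-frame and inter-layer prediction.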

So, why do I suspect that they're encoding their video with a different MV-HEVC tool? First, where I'd expect to see a FourCC codec type of hvc1 (as noted in the current Apple documentation), in some instances, I've also seen a qhvc codec type. I've never encountered that HEVC codec type, and as far as I know, AVFoundation currently tags all MV-HEVC content with hvc1. At least that's been my experience. If anyone has more information about qhvc, drop me a line.

Next, as I explained in the prior section, the second layer in MV-HEVC-encoded files is expected to achieve a bitrate savings of around 50% or more by referencing the nearly-identical frame data in layer 0. But, when I compare files that have been encoded by Apple Vision Pro, iPhone 15 Pro, and the current version of AVFoundation (including my spatial tool), both layers are nearly identical in size. On the other hand, Apple's immersive content is clearly using a more advanced encoder, and the second layer is only ~45% of the primary layer…just what you'd expect.

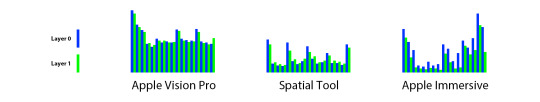

Here is a diagram that shows three subsections of three different MV-HEVC videos, each showing a layer 0 (blue), then layer 1 (green) cadence of frames. The height of each bar represents the size of that frame's payload. Because the content of each video is different, this chart is only useful to illustrate the payload difference between layers.

As we've learned, for a mature encoder, we'd expect the green bars to be noticeably smaller than the blue bars. For Apple Vision Pro and spatial tool encodings (both using the current version of AVFoundation), the bars are often similar, and in some cases, the green bars are even higher than their blue counterparts. In contrast, look closely at the Apple Immersive data; the green layer 1 frame payload is always smaller.
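The comparison behind this chart is simple to reproduce once you have per-frame payload sizes tagged by layer. A minimal Python sketch follows. The `(layer_id, payload_bytes)` input format and the sample numbers are invented for illustration and are not measurements from any of the videos above; extracting those pairs from a real file is left out.

```python
from collections import defaultdict
from typing import Iterable, Tuple


def layer_size_ratio(frames: Iterable[Tuple[int, int]]) -> float:
    """Return total layer 1 payload divided by total layer 0 payload."""
    totals = defaultdict(int)
    for layer_id, payload_bytes in frames:
        totals[layer_id] += payload_bytes
    if totals[0] == 0:
        raise ValueError("no layer 0 payload found")
    return totals[1] / totals[0]


# Hypothetical payloads in the layer 0, layer 1, layer 0, ... cadence.
independent_like = [(0, 1000), (1, 980), (0, 400), (1, 420)]
inter_layer_like = [(0, 1000), (1, 450), (0, 400), (1, 180)]

print(f"{layer_size_ratio(independent_like):.2f}")
print(f"{layer_size_ratio(inter_layer_like):.2f}")
```

A ratio near 1.0 matches the "two nearly identical layers" pattern, while a ratio well below 1.0 is what an encoder that exploits inter-layer references would be expected to produce.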

Immaturity

What does this mean? Well, it means that Apple's optimized 50Mbps stream might need closer to 70Mbps using the existing AVFoundation-based tools to achieve a similar quality. My guess is that the MV-HEVC encoder in AVFoundation is essentially encoding two separate HEVC streams, then "stitching" them together (by updating layer IDs and inter-frame references), almost as if they're completely independent of each other. That would explain the remarkable size similarity between the two layers, and as an initial release, this seems like a reasonable engineering simplification. It also aligns with Apple's statement that one minute of spatial video from iPhone 15 Pro is approximately 130MB while one minute of regular video is 65MB…exactly half.
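The 70Mbps estimate falls out of the layer ratios. As a rough worked example in Python, assume layer 1 costs about 45% of layer 0 in Apple's stream and about 100% of layer 0 with the current AVFoundation encoder, and that layer 0 needs the same bitrate either way for similar quality. That last assumption is a simplification, and the `equivalent_bitrate` helper is a hypothetical name used only here.

```python
def equivalent_bitrate(total_mbps: float, ratio_from: float, ratio_to: float) -> float:
    """Rescale a two-layer bitrate when the layer 1 / layer 0 size ratio changes."""
    layer0_mbps = total_mbps / (1.0 + ratio_from)
    return layer0_mbps * (1.0 + ratio_to)


apple_total = 50.0
avf_total = equivalent_bitrate(apple_total, ratio_from=0.45, ratio_to=1.0)
print(f"{avf_total:.1f} Mbps")  # about 69, in line with "closer to 70Mbps"

# The iPhone 15 Pro figures as average bitrates (assuming 1 MB = 10**6 bytes).
spatial_mbps = 130 * 8 / 60
regular_mbps = 65 * 8 / 60
print(f"{spatial_mbps:.1f} vs {regular_mbps:.1f} Mbps")
```

The spatial figure being exactly double the regular one is consistent with a second layer that gets no benefit from referencing the first.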

Another possibility is that it's too computationally expensive to encode inter-layer references while capturing two live camera feeds in Vision Pro or iPhone 15 Pro. This makes a lot of sense, but I'd then expect a non-real-time source to produce a more efficient bitstream, and that's not what I'm seeing.

For what it's worth, I spent a bit of time trying to validate a lack of inter-layer references, but as mentioned, there are no readily-available tools that process MV-HEVC at this deeper level (even the reference decoder was having its issues). I started to modify some existing tools (and even wrote my own), but after a bunch of work, I was still too far away from an answer. So, I'll leave it as a problem for another day.

To further improve compression efficiency, I tried to add AVFoundation's multi-pass encoding to my spatial tool. Sadly, after many attempts and an unanswered post to the Apple Developer Forums, I haven't had any luck. It appears that the current MV-HEVC encoder doesn't support multi-pass encoding. Or if it does, I haven't found the magical incantation to get it working properly.

Finally, I tried adding more data rate options to my spatial tool. The tool can currently target a quality level or an average bitrate, but it really needs finer control for better HLS streaming support. Unfortunately, I couldn't get the data rate limits feature to work either. Again, I'm either doing something wrong, or the current encoder doesn't yet support all of these features.

Closing Thoughts

I've been exploring MV-HEVC in depth since the beginning of the year. I continue to think that it's a great format for immersive media, but it's clear that the current encoders (at least those that I've encountered) are in their infancy. Because the multi-view extensions for HEVC have never really been used in the past, HEVC encoders have reached a mature state without multi-view support. It will now take some effort to revisit these codebases to add support for things like multiple input streams, the introduction of additional layers, and features like rate control.

While we wait for answers at WWDC24, we're in an awkward transition period where the tools we have to encode media will require higher bitrates and offer less control over bitstreams. We can encode rectilinear media for playback in the Files and Photos apps on Vision Pro, but Apple has provided no native player support for these more immersive formats (though you can use my example spatial player). Fortunately, Apple's HLS toolset has been updated to handle spatial and immersive media. I had intended to write about streaming MV-HEVC in this post, but it's already long enough, so I'll save that topic for another time.

As always, I hope this information is useful, and if you have any comments, feedback, suggestions, or if you just want to share some media, feel free to contact me.

3 notes


Link

vrfocus.com

By: Peter Graham

#vr#virtual reality#vrfocus.com#syfy#October 2018#2018#comic con#wundervu#syfy wire#new york comic con#author: Peter Graham#graham#Pixvana#XR#Mixed Reality#MR#NBC Universal#Rachel Lanham#lanham#Matthew Chiavelli#chiavelli#Facebook360#Google#Google Cardboard#Cardboard#YouTube360#youtube#Google Daydream#daydream

0 notes

Text

Proficient Growth in Scenario of Europe Virtual Reality Content Creator Market Outlook: Ken Research

The Virtual reality creates a digital environment to deliver real lifelike experience to the user. Rampant growth of the gaming segment is a major aspect for the growth of virtual reality content creation market. The effective growth in demand for virtual reality games on several platform such as Smartphone, PlayStation, and computer have enhanced the growth of gaming sector in the virtual reality content creation market.

According to the report analysis, ‘Europe Virtual Reality Content Creation Market 2020-2030 by Solution, Content Type, VR Medium, Application, and Country: Trend Outlook and Growth Opportunity’ states that 360 Labs, Blippar, Koncept VR, Matterport, Panedia Pty Ltd., Pixvana Inc., Scapic., SubVRsive, VIAR (Viar360)and WeMakeVR are the foremost corporate which presently operating more effectively for leading the highest market growth and dominating the great value of market share around the globe during the short span of time while delivering the better consumer satisfaction, decreasing the linked prices, employing the young prices and spreading the awareness connected to such. In addition, the virtual reality content creation tools are extensively used as an open source podium to produce immersive experience content. Such tools are developed to be user friendly; hence the acceptance of virtual reality content creation is anticipated to rise at a high rate during the review period.

Moreover, demand for VR content in the marketing sector is rising, owing to a surge in demand for high-quality customer-experience marketing strategies across a range of end users. However, limited awareness of the benefits of VR devices in developing regions is expected to curb the growth of the global market to a certain extent. Furthermore, significant growth in R&D activity aimed at widening the scope of virtual marketing in the retail segment presents an opportunity for the Europe market.

Furthermore, the virtual reality content creation market has advanced rapidly in the engineering and technology segment, where it helps e-commerce businesses and direct stores build customer-friendly interfaces through which buyers can enjoy a hassle-free way of purchasing or trading goods. It is also meeting accelerating demand in the pharmaceutical and healthcare sectors through the adoption of 3D visualization of cryosurgeries and procedures, which gives medical students better practical training and in turn helps the market grow. Smartphones are the principal target for virtual reality software businesses and are expected to play a pivotal role in establishing a user-friendly setting for the knowledge, intelligence, and presentation of virtual reality content across Europe.

Furthermore, immersive gaming is expected to gain mainstream appeal among people who use highly interactive virtual devices for an engaging experience. Virtual reality has thus transformed the gaming industry, since a player can experience and interact with a three-dimensional environment during a game. This has increased demand for games whose content is based on virtual reality, which is a major factor expected to influence the growth of the Europe market in the coming years.

For More Information, click on the link below:-

Europe Virtual Reality Content Creation Market Research Report

Related Report:-

Global Virtual Reality Content Creation Market 2020-2030 by Solution, Content Type, VR Medium, Application, and Region: Trend Outlook and Growth Opportunity

Contact Us:-

Ken Research

Ankur Gupta, Head Marketing & Communications

+91-9015378249

Text

Virtual Reality Content Creation Market Insights with Key Company Profiles – Forecast to 2028

Virtual reality (VR) is a virtual environment created by computer-generated simulations. VR devices replicate the real-time environment in a virtual one. For example, driving simulators in VR headsets simulate driving a vehicle by presenting vehicular motion and the corresponding visual, motion, and audio cues to the driver. These simulations are high-definition content, known as VR content, developed with software that creates three-dimensional environments or videos. The virtual reality content creation market is therefore expected to grow at a significant rate in the coming years, owing to the proliferation of VR devices across diverse industries.

VR content is created in two ways. First, it can be produced by capturing 360-degree immersive video with a high-definition 360-degree camera, for example at 4K resolution. Second, it can be produced by making a three-dimensional (3D) animation with advanced, interactive software applications.

Rising demand for high-quality content such as 4K, coupled with wide availability of cost-efficient VR devices, is a major factor expected to drive the growth of the global virtual reality content creation market during the forecast period. Ongoing modernization of visual display electronics such as TVs and desktops is also increasing demand for VR content, owing to its ability to adapt to the display systems of the surrounding environment and provide virtual simulations.

Click Here to Get Sample Premium Report @ https://www.trendsmarketresearch.com/report/sample/13379

Moreover, rising sales of head-mounted displays (HMDs), especially in the gaming and entertainment sector, are another factor anticipated to propel the growth of the global virtual reality content creation market. However, concerns about VR content piracy hamper the growth of the market to a certain extent. Furthermore, the diversification of VR applications across various industries is an opportunity for the players operating in the market, which in turn is expected to fuel the growth of the global market.

The virtual reality content creation market is segmented on the basis of content type, component, end-use sector, and region. Based on content type, the market is categorized into videos, 360 degree photos, and games. The videos segment is further sub-categorized into 360 degree and immersive. On the basis of component, the market is divided into software and services. Depending on end-use sector, it is categorized into real estate, travel & hospitality, media & entertainment, healthcare, retail, gaming, automotive, and others. Based on region, the market is analyzed across North America, Europe, Asia-Pacific, and LAMEA.

The market players operating in the virtual reality content creation market include Blippar, 360 Labs, Matterport, Koncept VR, SubVRsive, Panedia Pty Ltd., WeMakeVR, VIAR (Viar360), Pixvana Inc., and Scapic.

KEY BENEFITS FOR STAKEHOLDERS

• The study provides an in-depth analysis of the market trends to elucidate the imminent investment pockets.
• Information about key drivers, restraints, and opportunities and their impact analyses on the global virtual reality content creation market size is provided.
• Porter’s five forces analysis illustrates the potency of the buyers and suppliers operating in the industry.
• The quantitative analysis of the market from 2018 to 2026 is provided to determine the global virtual reality content creation market potential.

Buy Now report with Analysis of COVID-19 @ https://www.trendsmarketresearch.com/checkout/13379/Single

KEY MARKET SEGMENTS

Content Type
• Videos
  o 360 Degree
  o Immersive
• 360 Degree Photos
• Games

Component
• Software
• Services

End-use Sector
• Real Estate
• Travel & Hospitality
• Media & Entertainment
• Healthcare
• Retail
• Gaming
• Automotive
• Others

BY REGION
• North America
  o U.S.
  o Canada
• Europe
  o Germany
  o France
  o UK
  o Rest

Photo

Today's Immersive VR Buzz: Sad news, Pixvana will no longer support SPIN Studio https://buff.ly/2OkGCxU https://www.instagram.com/p/B8H9U1Jn0PN/?igshid=1ck8vw5nfnfbj

Text

Immersive Tech Startup Pixvana Completes $14m Series A Funding Round

Pixvana, the tech startup that specialises in cloud-based video content creation and distribution, has announced the completion of a Series A funding round of $14 million USD.

The round was led by Vulcan Capital with participation from new investors Raine Ventures, Microsoft Ventures, Cisco Investments and Hearst Ventures, and existing investor Madrona Venture Group.

The funding will be used to help bolster SPIN Studio, Pixvana’s cloud-based storytelling platform, which handles everything from stitching and editing to publishing and playback in one workflow. The platform offers creative tools for virtual reality (VR), augmented reality (AR) and mixed reality (MR) storytelling, including customized templates and interactive hyperports.

“We are thrilled to work with this amazing group of investors backing us, to realize our vision of XR storytelling,” said Forest Key, Pixvana CEO, in a statement. “Faster iteration and blending of video and 3D content will allow creatives, brands and media companies to create amazing XR experiences. More announcements like the recent devices for Windows MR and Oculus Go will create a rapidly expanding market for XR video experiences.”

SPIN Studio users can access Pixvana’s encoding system that renders up to 8K stereo content using its patented FOVAS (field of view adaptive streaming) technology, enabling high-quality experiences on head-mounted displays (HMDs) like Microsoft’s Windows Mixed Reality, Oculus Rift, HTC Vive, Samsung Gear VR, Google Daydream, and more.

“There is tremendous opportunity in the XR video space and it is clear that outstanding content and storytelling tools will define this new medium,” said Stuart Nagae, General Partner, Vulcan Capital. “We think that Pixvana has an extraordinary team that really understands how to deliver cinematic, immersive experiences in XR and can build a SaaS business at scale.”

For the funding pitch, Pixvana created a 360-degree video using SPIN Studio called Sofia – in homage to Sofia Coppola. The video (seen below) is a cut-down version of the full 15-minute pitch. As with any 360 video, it’s best to watch using a headset. Whilst most headsets will be able to play the YouTube video, for the best experience – and to see FOVAS in action – you’ll need to download the SPIN Play app. Currently this is available on Steam for Oculus Rift and HTC Vive, with a mobile version due next month.

For any further Pixvana updates, keep reading VRFocus.

youtube

from VRFocus http://ift.tt/2znEFLp

Photo

How Rachel Lanham, Pixvana’s chief operating officer, charted a career path at the frontiers of technology – Newstrotteur
